We humans carry around in our heads rich internal mental models that constitute our construction of the world and of that world's relation to us. These models can be expressed at multiple levels of abstraction, from beliefs about sensory stimuli and the output of our motor programs to higher-level beliefs about the self. Advancing our understanding of the brain’s internal processing states, however challenging, could lead to breakthroughs in understanding states such as dreaming, consciousness, and mental disorders (1). Computational theories propose that internal models are broadcast throughout the brain, including to sensory areas (2). Techniques to read out internal mental models will deliver insight into how our brains use beliefs or predictions to “construct” the environment. Internal models in higher brain areas are complex, however, which makes them difficult to constrain if we want to decipher how the brain codes them. But when internal models are fed back to sensory cortex, we assume that they are translated into sensory predictions that would intuitively have simpler content. In PNAS, Chong et al. (3) provide the empirical evidence needed to drive this theory forward. Using brain reading, they show that the brain constructs new, plausible predictions of expected sensory input, and that these predictions can be read out in sensory cortex.

Two information streams coincide in V1: the high-precision retinal input, which is processed with high spatial acuity, and the broad, abstract, less-precise strokes painted by cortical feedback. V1 is retinotopically organized and has small classical feed-forward receptive fields. Conversely, feedback conveys information about larger portions of the visual field, giving rise to extraclassical or contextual receptive fields. By eliminating the feed-forward input to the classical receptive field, we can investigate internal signals carried by feedback, which originates from diffuse brain areas (4). Many questions remain open, including which features are signaled in feedback. In a novel variation of the apparent motion illusion, Chong et al. (3) used inducer stimuli that allowed them to show that the motion prediction fed back to V1 contained detailed texture information. The information they read out from V1 was created by the brain's prediction of an object's rotational movement as it moved through the visual field. Yet this experience of rotation was never present in the sensory input to the brain; it was fabricated by the brain from acquired world knowledge. A previous study used objects rotating in depth to show a similar construction of an unseen (but implied) object position, but only in higher areas of the visual hierarchy (5). A number of other studies have shown that internal processing is contextual, in both higher (6) and lower visual areas (7, 8). The innovation of Chong et al. (3) lies in showing that internal processing is constructive and dynamic, and that it contains motion and feature information even in primary visual cortex (V1).

An important distinction is that, during the long-range apparent motion used by Chong et al. (3), observers do not fill the intermediate space with a perceptual illusion of the rotated object. Wertheimer (9) discussed at length the “visibility” of an object on the “Bewegungsfeld” (apparent motion trace). Although observers sense that the object is continuously crossing the field, they also know that this is an illusion and not real motion. This is an example of amodal completion: the knowledge that a figure continues to exist even when it is placed behind an occluding object (10–12), or that motion continues behind objects (13). The important point for the data of Chong et al. (3) is that, whereas only the gray surface was visible, observers’ knowledge constructed the object that was passing by (i.e., the intermediate grating). That the constructed grating can be read out of the activation pattern in V1 even though subjects do not actively perceive the “hidden object” shows that visual perception and internal constructions of visual content exist in independent neural codes. These codes might be compared with one another, as proposed in predictive coding frameworks, or they might be maintained in the system simultaneously to allow for an internal code distinct from an external one.

In the data of Chong et al. (3), knowledge about the two objects is used to construct a prediction of a new, rotated object that was never seen. However, our knowledge of the world spans several levels of complexity. For example, we recognize objects and can understand their interactions and their social meaning for us. Whereas sensory predictions are more fixed, because perception leaves little room for ambiguity, the knowledge of the world that forms more complex mental models has more flexibility. These models can guide how we interact with the world and govern our emotional responses. The significance of their flexibility is that maladaptive higher-level models can be altered by psychotherapeutic or pharmacological intervention. In depression, undesirable internal models bias attention and memory toward negative stimuli (14), leading to abnormal processing of reward and punishment and to the confirmation of maladaptive internal mental models. Deepening our understanding of how the brain internally represents the world may therefore be crucial for guiding treatment of mental disorders.

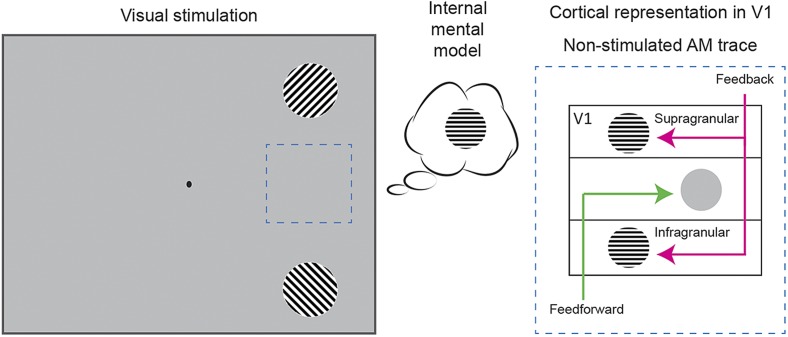

One question that arises from the data of Chong et al. (3) is this: What neural mechanism integrates model-based feedback signals with sensory-driven feed-forward signals? Rodent data of Larkum (15) and others suggest that this integration might happen within individual pyramidal neurons. Pyramidal neurons have two functionally distinct compartments that process feedback and feed-forward inputs: the apical tuft and the basal dendrites, respectively. These compartments reside in different layers of cortex, which in turn can be studied noninvasively in humans. For example, high-field, high-resolution functional brain imaging has shown that superficial layers of human V1 contain contextual feedback about natural scenes (16). We can conjecture that the predicted object representation found by Chong et al. (3) is likewise localized to the superficial layers of the V1 region that represents the nonstimulated apparent motion trace (Fig. 1, Right). It may also be present in deep layers; for example, while monkeys perform figure-ground segregation, feedback arrives in V1 in the superficial layers but also in layer 5 (17). It remains to be tested how feedback effects in specific layers (superficial and/or deep) are modulated by the complexity of the feed-forward stimulation and by how far from V1 the feedback originates.

Fig. 1.

(Left) Apparent motion illusion stimulation similar to that in the experiment of Chong et al. (3). Observers reconstructed the intermediate dynamic representation on the apparent motion trace (dashed box, thought bubble). (Right) Possible cortical layer distribution of feed-forward and feedback information in the data of Chong et al. (3).

In his novel 1Q84, author Haruki Murakami (18) writes, “What we call the present is given shape by an accumulation of the past.” Chong et al. (3) use a simple and clever paradigm to demonstrate that during the interpolation of motion, the brain reconstructs object features in V1 that were never shown to the retina. Indeed, it seems the brain can “give shape” to its environment.

Acknowledgments

This work was supported by European Research Council Grant StG 2012_311751, “Brain reading of contextual feedback and predictions.”

Footnotes

The authors declare no conflict of interest.

See companion article on page 1453.

References

- 1. Meyer K. Another remembered present. Science. 2012;335(6067):415–416. doi: 10.1126/science.1214652.

- 2. Clark A. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav Brain Sci. 2013;36(3):181–204. doi: 10.1017/S0140525X12000477.

- 3. Chong E, Familiar AM, Shim WM. Reconstructing representations of dynamic visual objects in early visual cortex. Proc Natl Acad Sci USA. 2016;113:1453–1458. doi: 10.1073/pnas.1512144113.

- 4. Muckli L, Petro LS. Network interactions: Non-geniculate input to V1. Curr Opin Neurobiol. 2013;23(2):195–201. doi: 10.1016/j.conb.2013.01.020.

- 5. Weigelt S, Kourtzi Z, Kohler A, Singer W, Muckli L. The cortical representation of objects rotating in depth. J Neurosci. 2007;27(14):3864–3874. doi: 10.1523/JNEUROSCI.0340-07.2007.

- 6. Smith ML, Gosselin F, Schyns PG. Measuring internal representations from behavioral and brain data. Curr Biol. 2012;22(3):191–196. doi: 10.1016/j.cub.2011.11.061.

- 7. Smith FW, Muckli L. Nonstimulated early visual areas carry information about surrounding context. Proc Natl Acad Sci USA. 2010;107(46):20099–20103. doi: 10.1073/pnas.1000233107.

- 8. Williams MA, et al. Feedback of visual object information to foveal retinotopic cortex. Nat Neurosci. 2008;11(12):1439–1445. doi: 10.1038/nn.2218.

- 9. Wertheimer M. Experimentelle Studien über das Sehen von Bewegung. Zeitschrift für Psychologie und Physiologie der Sinnesorgane. 1912;61:161–265.

- 10. Michotte A, Thinès G, Crabbé G. Les compléments amodaux des structures perceptives. Studia Psychologica. Publications Universitaires de Louvain; Leuven, Belgium: 1964.

- 11. Wagemans J, van Lier R, Scholl BJ. Introduction to Michotte’s heritage in perception and cognition research. Acta Psychol (Amst). 2006;123(1-2):1–19. doi: 10.1016/j.actpsy.2006.06.003.

- 12. Weigelt S, Singer W, Muckli L. Separate cortical stages in amodal completion revealed by functional magnetic resonance adaptation. BMC Neurosci. 2007;8:70. doi: 10.1186/1471-2202-8-70.

- 13. Sugita Y. Grouping of image fragments in primary visual cortex. Nature. 1999;401(6750):269–272. doi: 10.1038/45785.

- 14. Disner SG, Beevers CG, Haigh EA, Beck AT. Neural mechanisms of the cognitive model of depression. Nat Rev Neurosci. 2011;12(8):467–477. doi: 10.1038/nrn3027.

- 15. Larkum M. A cellular mechanism for cortical associations: An organizing principle for the cerebral cortex. Trends Neurosci. 2013;36(3):141–151. doi: 10.1016/j.tins.2012.11.006.

- 16. Muckli L, et al. Contextual feedback to superficial layers of V1. Curr Biol. 2015;25(20):2690–2695. doi: 10.1016/j.cub.2015.08.057.

- 17. Self MW, van Kerkoerle T, Supèr H, Roelfsema PR. Distinct roles of the cortical layers of area V1 in figure-ground segregation. Curr Biol. 2013;23(21):2121–2129. doi: 10.1016/j.cub.2013.09.013.

- 18. Murakami H. 1Q84. Knopf; New York: 2011.