Abstract

In the framework of Bayesian model-based clustering based on a finite mixture of Gaussian distributions, we present a joint approach to estimate the number of mixture components and identify cluster-relevant variables simultaneously as well as to obtain an identified model. Our approach consists in specifying sparse hierarchical priors on the mixture weights and component means. In a deliberately overfitting mixture model the sparse prior on the weights empties superfluous components during MCMC. A straightforward estimator for the true number of components is given by the most frequent number of non-empty components visited during MCMC sampling. Specifying a shrinkage prior, namely the normal gamma prior, on the component means leads to improved parameter estimates as well as identification of cluster-relevant variables. After estimating the mixture model using MCMC methods based on data augmentation and Gibbs sampling, an identified model is obtained by relabeling the MCMC output in the point process representation of the draws. This is performed using -centroids cluster analysis based on the Mahalanobis distance. We evaluate our proposed strategy in a simulation setup with artificial data and by applying it to benchmark data sets.

Keywords: Bayesian mixture model, Multivariate Gaussian distribution, Dirichlet prior, Normal gamma prior, Sparse modeling, Cluster analysis

Introduction

Finite mixture models provide a well-known probabilistic approach to model-based clustering. They assume that the data are generated by drawing from a finite set of exchangeable mixture components where each mixture component corresponds to one specific data cluster. More specifically, observations , , are assumed to be drawn from the following mixture distribution:

| 1 |

where the mixture components are in general assumed to belong to a well-known parametric distribution family with density and are the component weights, satisfying and ; see McLachlan and Peel (2000) and Frühwirth-Schnatter (2006) for a review of finite mixtures.

Since the pioneering papers by Banfield and Raftery (1993), Bensmail et al. (1997) and Dasgupta and Raftery (1998), model-based clustering based on finite mixtures has been applied successfully in many areas of applied research, such as genetics (McLachlan et al. 2002; Yeung et al. 2001), economics time series analysis (Frühwirth-Schnatter and Kaufmann 2008; Juárez and Steel 2010), social sciences (Handcock et al. 2007), and panel and longitudinal data analysis (McNicholas and Murphy 2010; Frühwirth-Schnatter 2011b), just to mention a few.

Despite this success and popularity of model-based clustering based on finite mixtures, several issues remain that deserve further investigation and are addressed in the present paper within a Bayesian framework. Above all, in applications typically little knowledge is available on the specific number of data clusters we are looking for, and, as a consequence, the unknown number of mixture components corresponding to these data clusters needs to be estimated from the data. Tremendous research effort has been devoted to this question, however, no uniquely best method has been identified. Likelihood-based inference typically relies on model choice criteria such as BIC, approximate weight of evidence, or the integrated classification likelihood criterion to select , see e.g. Biernacki et al. (2000) for a comparison of different criteria. Bayesian approaches sometimes pursue a similar strategy, often adding the DIC to the list of model choice criteria; see e.g. Celeux et al. (2006). However, methods that treat as an unknown parameter to be estimated jointly with the component-specific parameters are preferable from a Bayesian viewpoint.

Within finite mixtures, a fully Bayesian approach toward selecting is often based on reversible jump Markov chain Monte Carlo (RJMCMC), as introduced by Richardson and Green (1997). RJMCMC creates a Markov chain that moves between finite mixtures with different number of components, based on carefully selected degenerate proposal densities which are difficult to design, in particular in higher dimensional mixtures, see e.g. Dellaportas and Papageorgiou (2006). Alternatively, the choice of has been based on the marginal likelihood , which has to be combined with a suitable prior to obtain a valid posterior distribution over the number of components (Nobile 2004). However, also the computation of the marginal likelihood turns out to be a challenging numerical problem in a finite mixture model even for moderate (Frühwirth-Schnatter 2004).

A quite different approach of selecting the number of components exists outside the framework of finite mixture models and relies on a nonparametric Bayesian approach based on mixture models with countably infinite number of components. To derive a partition of the data, Molitor et al. (2010) and Liverani et al. (2013) define a Dirichlet process prior on the mixture weights and cluster the pairwise association matrix, which is obtained by aggregating over all partitions obtained during Markov chain Monte Carlo (MCMC) sampling, using partitioning around medoids (PAM; Kaufman and Rousseeuw 1990). The optimal number of clusters is determined by maximizing an associated clustering score.

A second issue to be addressed concerns the selection of cluster-relevant variables, as heterogeneity often is present only in a subset of the available variables. Since the inclusion of unnecessary variables might mask the cluster structure, statistical interest lies in identifying these cluster-relevant variables. Several papers have suggested to solve the selection of the number of components and the identification of cluster-relevant variables simultaneously. One way is to recast the choice both of and the cluster-relevant variables as a model selection problem. For instance, in the context of maximum likelihood estimation Raftery and Dean (2006), Maugis et al. (2009) and Dean and Raftery (2010) use a greedy search algorithm by comparing the various models through BIC. Penalized clustering approaches using the norm to shrink cluster means together for variable selection are considered in Pan and Shen (2007), with adaptations of the penalty term taking the group structure into account suggested in Wang and Zhu (2008) and Xie et al. (2008). Based on a model using mode association Lee and Li (2012) propose a variable selection algorithm using a forward search for maximizing an aggregated index of pairwise cluster separability.

In the Bayesian framework, Tadesse et al. (2005) propose RJMCMC techniques to move between mixture models with different numbers of components while variable selection is accomplished by stochastic search through the model space. Stingo et al. (2012) extend their approach in combination with Raftery and Dean (2006) to the discriminant analysis framework. Frühwirth-Schnatter (2011a) pursues a slightly different approach by specifying a normal gamma prior on the component means to shrink the cluster means for homogeneous components, while model selection with respect to is performed by calculating marginal likelihoods under these shrinkage priors.

Variable selection in the context of infinite mixture models has also been considered. Kim et al. (2006), for instance, combine stochastic search for cluster-relevant variables with a Dirichlet process prior on the mixture weights to estimate the number of components. In a regression setting Chung and Dunson (2009) and Kundu and Dunson (2014) also use stochastic search variable selection methods in combination with nonparametric Bayesian estimation based on a probit stick-breaking process mixture or a Dirichlet process location mixture. Similarily, Yau and Holmes (2011) define a Dirichlet process prior on the weights, however, they identify cluster-relevant variables by using a double exponential distribution as shrinkage prior on the component means. Lian (2010) uses Dirichlet process priors for simultaneous clustering and variable selection employing a base measure inducing shinkage on the cluster-specific covariate effects.

The main contribution of the present paper is to propose the use of sparse finite mixture models as an alternative to infinite mixtures in the context of model-based clustering. While remaining within the framework of finite mixtures, sparse finite mixture models provide a semi-parametric Bayesian approach insofar as neither the number of mixture components nor the cluster-relevant variables are assumed to be known in advance. The basic idea of sparse finite mixture modeling is to deliberately specify an overfitting finite mixture model with too many components and to assume heterogeneity for all available variables apriori. Sparse solutions with regard to the number of mixture components and with regard to heterogeneity of component locations are induced by specifying suitable shrinkage priors on, respectively, the mixture weights and the component means. This proposal leads to a simple Bayesian framework where a straightforward MCMC sampling procedure is applied to jointly estimate the unknown number of mixture components, to determine cluster-relevant variables, and to perform component-specific inference.

To obtain sparse solutions with regard to the number of mixture components, an appropriate prior on the weight distribution has to be selected. We stay within the common framework by choosing a Dirichlet prior on , however, the hyperparameters of this prior are selected such that superfluous components are emptied automatically during MCMC sampling. The choice of these hyperparameters is governed by the asymptotic results of Rousseau and Mengersen (2011), who show that, asymptotically, an overfitting mixture converges to the true mixture, if these hyperparameters are smaller than , where is the dimension of the component-specific parameter .

Sparse finite mixtures are related to infinite mixtures based on a Dirichlet process prior, if a symmetric Dirichlet prior is employed for and the hyperparameter is selected such that converges to the concentration parameter of the Dirichlet process as goes to infinity. For finite , sparse finite mixtures provide a two-parameter alternative to the Dirichlet process prior where, for instance, can be held fixed while increases.

Following Ishwaran et al. (2001) and Nobile (2004), we derive the posterior distribution of the number of non-empty mixture components from the MCMC output. To estimate the number of mixture components, we derive a point estimator from this distribution, typically, the posterior mode which is equal to the most frequent number of non-empty components visited during MCMC sampling. This approach constitutes a simple and automatic strategy to estimate the unknown number of mixture components, without making use of model selection criteria, RJMCMC, or marginal likelihoods.

Although sparse finite mixtures can be based on arbitrary mixture components, investigation will be confined in the present paper to sparse Gaussian mixtures where the mixture components in (1) arise from multivariate Gaussian densities with component-specific parameters consisting of the component mean and the variance-covariance matrix , i.e.

| 2 |

To identify cluster-relevant variables within the framework of sparse Gaussian mixtures, we include all variables and assess their cluster-relevance by formulating a sparsity prior on the component means , rather than excluding variables explicitly from the model as it is done by stochastic search. This strategy to identify cluster-relevant variables has been applied previously by Yau and Holmes (2011) who define a Laplace prior as a shrinkage prior on the mixture component means. To achieve more flexibility and to allow stronger shrinkage, we follow in the present paper Frühwirth-Schnatter (2011a) by using instead the normal gamma prior as a shrinkage prior on the mixture component means which is a two-parameter generalization of the Laplace prior.

Specifying a sparse prior on the component means has in addition the effect of allowing component means to be pulled together in dimensions where the data are homogeneous, yielding more precise estimates of the component means in every dimension. Moreover, the dispersion of the estimated component means in different variables can be compared. In this way, a distinction between cluster-relevant variables, which are characterized by a high dispersion of the cluster locations, and homogeneous variables, where cluster locations are identical, is possible by visual inspection. For high-dimensional data, however, this approach might be cumbersome, as pointed out by a reviewer, and automatic tools for identifying cluster-relevant variables using the posterior distributions of the shrinkage parameters might need to be considered.

Finally, in applied research it is often not only of interest to derive a partition of the data, but also to characterize the clusters by providing inference with respect to the cluster-specific parameters appearing in (1). The framework of finite mixtures allows for identification of component-specific parameters, as soon as the label switching problem in an overfitting mixture model with empty components is solved. As suggested by Frühwirth-Schnatter (2001), we ensure balanced label switching during MCMC sampling by adding a random permutation step to the MCMC scheme. For relabeling the draws in a post-processing procedure, a range of different methods has been proposed in the literature, see Sperrin et al. (2010) and Jasra et al. (2005) for an overview. However, most of these proposed relabeling methods become computationally prohibitive for multivariate data with increasing dimensionality and a reasonable number of components.

To obtain a unique labeling we follow Frühwirth-Schnatter (2011a), who suggests to cluster the draws in the point process representation after having removed the draws where the number of non-empty components does not correspond to the estimated number of non-empty components and using only component-specific draws from non-empty components. Clustering the component-specific draws in the point process representation reduces the dimensionality of the relabeling problem, making this method feasible also for multivariate data. For clustering the draws we use -centroids cluster analysis (Leisch 2006) based on the Mahalanobis distance, which allows to fit also elliptical clusters and thereby considerably improves the clustering performance. The cluster assignments for the component-specific draws can be used to obtain a unique labeling and an identified model, if in each iteration each component-specific draw is assigned to a different cluster. We suggest to use this proportion of draws with unique assignment as a qualitative indicator of the suitability of the fitted mixture model for clustering.

The article is organized as follows. Section 2 describes the proposed strategy for selecting the true number of mixture components and introduces the normal gamma prior on the mixture component means. Section 3 provides details on MCMC estimation and sheds more light on the relation between shrinkage on the prior component means and weights. In Sect. 4 the strategy for solving the label switching problem for an overfitting mixture model is presented. In Sect. 5 the performance of the proposed strategy is evaluated in a simulation study and application of the proposed method is illustrated on two benchmark data sets. Section 6 summarizes results and limitations of the proposed approach and discusses issues to be considered in future research.

Model specification

In a Bayesian approach, the model specification given in Eqs. (1) and (2) is completed by specifying priors for all model parameters. As mentioned in the introduction, sparse finite mixtures rely on specifying a prior on the mixture weights which helps in identifying the number of mixture components (see Sect. 2.1). To achieve identification of cluster-relevant variables, a shrinkage prior on the component means is chosen (see Sect. 2.2), while a standard hierarchical prior is chosen for the component variances (see Sect. 2.3).

Identifying the number of mixture components

Following the usual approach, we assume that the prior on the weight distribution is a symmetric Dirichlet prior, i.e. . However, since sparse finite mixtures are based on choosing deliberately an overfitting mixture where the number of components exceeds the true number , the hyperparameter has to be selected carefully to enable shrinkage of the number of non-empty mixture components toward .

For an overfitting mixture model, it turns out that the hyperparameter considerably influences the way the posterior distribution handles redundant mixture components. As observed by Frühwirth-Schnatter (2006, 2011a) in an exploratory manner, the posterior distribution of an overfitting mixture model with might exhibit quite an irregular shape, since the likelihood mixes two possible strategies of handling superfluous components. For an overfitting mixture model, high likelihood is assigned either to mixture components with weights close to 0 or to mixture components with nearly identical component-specific parameters. In both cases, several mixture model parameters are poorly identified, such as the component-specific parameters of a nearly empty component in the first case, while only the sum of the weights of nearly identical mixture components, but not their individual values, is identified in the second case.

Rousseau and Mengersen (2011) investigate the asymptotic behavior of the posterior distribution of an overfitting mixture model in a rigorous mathematical manner. They show that the shape of the posterior distribution is largely determined by the size of the hyperparameter of the Dirichlet prior on the weights. In more detail, if the hyperparameter , where is the dimension of the component-specific parameter , then the posterior expectation of the weights asymptotically converges to zero for superfluous components. On the other hand, if , then the posterior density handles overfitting by defining at least two identical components, each with non-negligible weight. In the second case, the posterior density is less stable than in the first case since the selection of the components that split may vary. Therefore, Rousseau and Mengersen (2011) suggest to guide the posterior towards the first more stable case and to “compute the posterior distribution in a mixture model with a rather large number of components and a Dirichlet-type prior on the weights with small parameters (...) and to check for small weights in the posterior distribution.” (p. 694). Following these suggestions, our approach consists in purposely specifying an overfitting mixture model with being a reasonable upper bound for the number of mixture components. Simultaneously, we favor apriori values of small enough to allow emptying of superfluous components.

An important issue is how to select a specific value for in an empirical application. The asymptotic results of Rousseau and Mengersen (2011) suggest that choosing has the effect of emptying all superfluous components, regardless of the specific value, as the number of observations goes to infinity. However, in the case of a finite number of observations, we found it necessary to select much smaller values for .

We choose either a very small, but fixed Dirichlet parameter , in particular in combination with the sparsity prior on the component means introduced in Sect. 2.2, as will be discussed further in Sect. 3.2. Alternatively, to learn from the data how much sparsity is needed, we consider to be an unknown parameter with a gamma hyperprior .

To define the expectation of this prior, we follow Ishwaran et al. (2001) who recommend to choose . In this case, the Dirichlet prior approximates a Dirichlet process prior with concentration parameter as becomes large, as already noted in the introduction. Since simulation studies performed in Ishwaran et al. (2001) yield good approximations for and reasonable large, we match the expectation obtained in this way:

| 3 |

The parameter has to be selected carefully since it controls the variance of . For our simulation studies and applications, we set , as we noted in simulation studies (not reported here) that values smaller than 10 allow large values for , and, as a consequence, superfluous components were not emptied during MCMC sampling.

For a sparse finite mixture, the posterior distribution will handle redundant components by assigning to them vanishing weights and, as will be discussed in Sect. 3, superfluous components are emptied during MCMC sampling. Regarding the selection of the number of components, we deviate from Rousseau and Mengersen (2011), because the strategy of separating between “true” and “superfluous” components based on posterior size of the weights of the various components might fail in cases where a threshold for separating between “large” or “small” weights is difficult to identify.

Following, instead, Nobile (2004) and Ishwaran et al. (2001) we derive the posterior distribution of the number of non-empty components from the MCMC output. I.e., for each iteration of the MCMC sampling to be discussed in Sect. 3, we consider the number of non-empty components, i.e. components to which observations have been assigned for this particular sweep of the sampler,

| 4 |

where is the number of observations allocated to component and denotes the indicator function, and estimate the posterior for each value , by the corresponding relative frequency.

To estimate the number of mixture components, we derive a point estimator from this distribution. We typically use the posterior mode estimator which maximizes the (estimated) posterior distribution and is equal to the most frequent number of non-empty components visited during MCMC sampling. The posterior mode estimator is optimal under a 0/1 loss function which is indifferent to the degree of overfitting . This appears particularly sensible in the present context where adding very small, non-empty components hardly changes the marginal likelihood. This makes the posterior distribution extremely right-skewed and other point estimators such as the posterior mean extremely sensitive to prior choices, see Nobile (2004).

Identifying cluster-relevant variables

The usual prior on the mixture component means is the independence prior,

| 5 |

where denotes the multivariate normal distribution. It is common to assume that all component means are independent a priori, given data-dependent hyperparameters and ; see e.g. Richardson and Green (1997), Stephens (1997) and Frühwirth-Schnatter (2006). Subsequently, we call this prior the standard prior and choose the median to define and the range of the data in each dimension to define , where .

Previous investigations in Yau and Holmes (2011) and Frühwirth-Schnatter (2011a) indicate that it is preferable to replace the standard prior for the component means by a shrinkage prior, if it is expected that in some dimensions no cluster structure is present because all component means are homogeneous. Shrinkage priors are well-known from variable selection in regression analysis where they are used to achieve sparse estimation of regression coefficients, see Polson and Scott (2010) and Armagan et al. (2011) for a recent review. Shrinkage priors are also very convenient from a computational point of view, because they can be represented as a scale mixture of normals which makes it easy to implement MCMC sampling under these priors.

We apply in the following the normal gamma prior, for which the mixing distribution for the scale is specified by a gamma distribution. The normal gamma prior was introduced by Griffin and Brown (2010) for variable selection in regression models and has been applied previously by Frühwirth-Schnatter (2011a) in the context of finite mixture distributions. As opposed to the standard prior (5) which is based on fixed hyperparameters and , a hierarchical prior is introduced, which places a normal prior on the prior mean and a shrinkage prior on the prior variance matrix :

| 6 |

where

In (6), a multivariate version of the normal gamma prior is employed, where it is assumed that in each dimension all component means follow a normal distribution, where the variance depends on different scaling factors drawn from a gamma distribution with parameters and . The marginal prior for can be expressed in closed form as (see Frühwirth-Schnatter 2011a):

| 7 |

where

and is the modified Bessel function of the second kind. Furthermore, if the hyperparameters and are equal, then in each dimension the marginal variance of is equal to as for the standard prior.

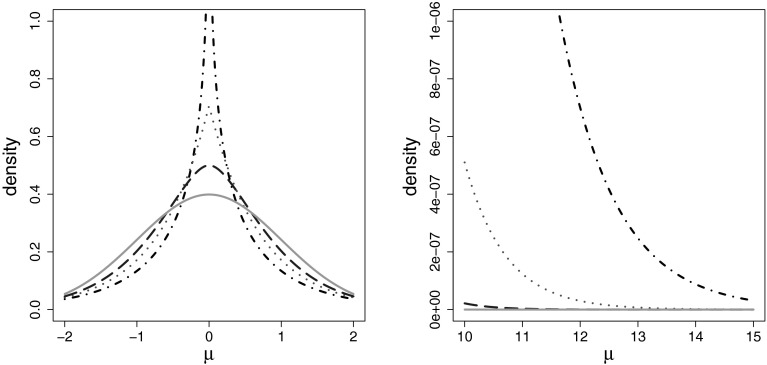

Yau and Holmes (2011) considered a closely related, special case of prior (6) where , which corresponds to the double exponential or Laplace prior, also known as the Bayesian Lasso (Park and Casella 2008). However, in the context of regression analysis, this specific prior has been shown to be suboptimal in the sense that shrinkage to 0 is too weak for insignificant coefficients, while a bias is introduced for large coefficients, see e.g. Polson and Scott (2010) and Armagan et al. (2011). The normal gamma prior introduced by Griffin and Brown (2010) is more flexible in this respect. Since the excess kurtosis is given by , the normal gamma prior has heavier tails than the Laplace distribution for , reducing the bias for large coefficients. At the same time, it is more peaked than the Laplace distribution which leads to stronger shrinkage to 0 for insignificant coefficients. This can be seen in Fig. 1 where the normal gamma prior is plotted for and compared to the standard normal distribution.

Fig. 1.

Normal gamma prior with a variance of 1 for different values of , (black dot-dashed line), (red dotted line), (blue long-dashed line), and the standard normal density (green solid line), at zero (left-hand side) and the tails (right-hand side). (Color figure online)

In the context of finite mixtures, the normal gamma prior introduces exactly the flexibility we are seeking to identify cluster-relevant variables. To achieve this goal, the normal gamma prior is employed in (6) with value . This implies that can assume values considerable smaller than 1 in dimension , which leads the prior distribution of to concentrate around the mean , pulling all the component means towards . This property becomes important in dimensions where component densities are heavily overlapping or in the case of homogeneous variables, where actually no mixture structure is present and all observations are generated by a single component only. In these cases, allowing the prior variance to pull component means together yields more precise estimates of the actually closely adjacent or even identical component means.

In this way implicit variable selection is performed and variables which are uninformative for the cluster structure are effectively fit by a single component avoiding overfitting heterogeneity and diminishing the masking effect of these variables. Thus the same benefits regarding the fitted model are obtained as if the variables were excluded through a model search procedure.

For cases where the variance of the prior for , , is shrunk to a small value, the mean of the prior becomes important. Thus, rather than assuming that is a fixed parameter as for the standard prior, we treat as an unknown hyperparameter with its own prior distribution, see (6).

While variable selection is performed only implicitly with shrinkage priors in Bayesian estimation, explicit identification of the relevant clustering variables is possible a posteriori for the hierarchical shrinkage prior based on the normal gamma distribution. In the context of multivariate finite mixtures, can be interpreted as a local shrinkage factor in dimension which allows a priori that component means are pulled together and, at the same time, is flexible enough to be overruled by the data a posteriori, if the component means are actually different in dimension . Hence, a visual inspection of the posterior distributions of the scaling factors , , e.g. through box plots as in Yau and Holmes (2011), reveals in which dimension a high dispersion of the component means is present and where, on the contrary, all component means are pulled together.

It remains to discuss the choice of the hyperparameters , , and in (6). In the following simulation studies and applications, the hyperparameters and are set to to allow considerable shrinkage of the prior variance of the component means. Furthermore, we specify an improper prior on , where and .

Prior on the variance-covariance matrices

Finally, a prior on the variance-covariance matrices has to be specified. Several papers, including Raftery and Dean (2006) and McNicholas and Murphy (2008), impose constraints on the variance-covariance matrices to reduce the number of parameters which, however, implies that the correlation structure of the data needs to be modeled explicitly.

In contrast to these papers, we do not focus on modeling sparse variance-covariance matrices, we rather model the matrices without constraints on their geometric shape. Following Stephens (1997) and Frühwirth-Schnatter (2006, p. 193), we assume the conjugate hierarchical prior , , where denotes the Wishart distribution. Regularization of variance-covariance matrices in order to avoid degenerate solutions is achieved through specification of the prior hyperparameter by choosing

see Frühwirth-Schnatter (2006, p. 192).

Bayesian estimation

To cluster observations , it is assumed that the data are drawn from the mixture distribution defined in (1) and (2), and that each observation is generated by one specific component .

The corresponding mixture likelihood derived from (1) and (2) is combined with the prior distributions introduced, respectively, for the weights in Sect. 2.1, for the component means in Sect. 2.2, and for in Sect. 2.3, assuming independence between these components. The resulting posterior distribution does not have a closed form and MCMC sampling methods have to be employed, see Sect. 3.1.

The proposed strategy of estimating the number of components relies on the correct identification of non-empty components. In Sect. 3.2 we study in more detail that prior dependence between and might be necessary to achieve this goal. In particular, we argue why stronger shrinkage of very small component weights toward 0 might be necessary for the normal gamma prior (6) than for the standard prior (5), by choosing a very small value of .

MCMC sampling

Estimation of the sparse finite mixture model is performed through MCMC sampling based on data augmentation and Gibbs sampling (Diebolt and Robert 1994; Frühwirth-Schnatter 2006, chap. 3). To indicate the component from which each observation stems, latent allocation variables taking values in are introduced such that

| 8 |

and

| 9 |

As suggested by Frühwirth-Schnatter (2001), after each iteration an additional random permutation step is added to the MCMC scheme which randomly permutes the current labeling of the components. Random permutation ensures that the sampler explores all modes of the full posterior distribution and avoids that the sampler is trapped around a single posterior mode, see also Geweke (2007). Without the random permutation step, it has to be verified for each functional of the parameters of interest, whether it is invariant to relabeling of the components. Only in this case, it does not matter whether the random permutation step is performed. The detailed sampling scheme is provided in Appendix 1 and most of the sampling steps are standard in finite mixture modeling, with two exceptions.

The first non-standard step is the full conditional distribution of the shrinkage factor . The combination of a gamma prior for with the product of normal likelihoods , where the variance depends on , yields a generalized inverted Gaussian distribution () as posterior distribution, see Frühwirth-Schnatter (2011a). Hence,

where the parameters , and are defined in (7).

Furthermore, if the hyperparameter of the Dirichlet prior is random, a random walk Metropolis-Hastings step is implemented to sample from , where

| 10 |

and is equal to the hyperprior introduced in (3).

On the relation between shrinkage in the weights and in the component means

As common for finite mixtures, MCMC sampling alternates between a classification and a parameter simulation step, see Appendix 1. During classification, observations are allocated to component according to the (non-normalized) conditional probability , whereas the component-specific parameters and the weight are simulated conditional on the current classification during parameter simulation. If no observation is allocated to component during classification, then, subsequently, all component-specific parameters of this empty component are sampled from the prior. In particular, the location of an empty component heavily depends on the prior location and prior covariance matrix .

Under the standard prior (5), where

the location of an empty component is likely to be far away from the data center , since in each dimension with 5 % probability the will be further away from than . As a consequence, is very small for any observation in the subsequent classification step and an empty component is likely to remain empty under the standard prior.

In contrast, under the normal gamma prior (6), where , the scaling factor shrinks the prior variance of considerably, in particular in dimensions, where the component means are homogeneous. However, the scaling factor adjusts the prior variance also in cluster-relevant dimensions, since is generally much larger than the spread of the non-empty component means which are typically allocated within the data range . As a consequence, the location of an empty component is either close to the data center (in the case of homogeneous variables) or close to the range spanned by the locations of the non-empty components (in the case of cluster-relevant variables). In both cases, evaluating in the subsequent classification step yields a non-negligible probability and, as a consequence, observations are more likely to be allocated to an empty component than in the standard prior case.

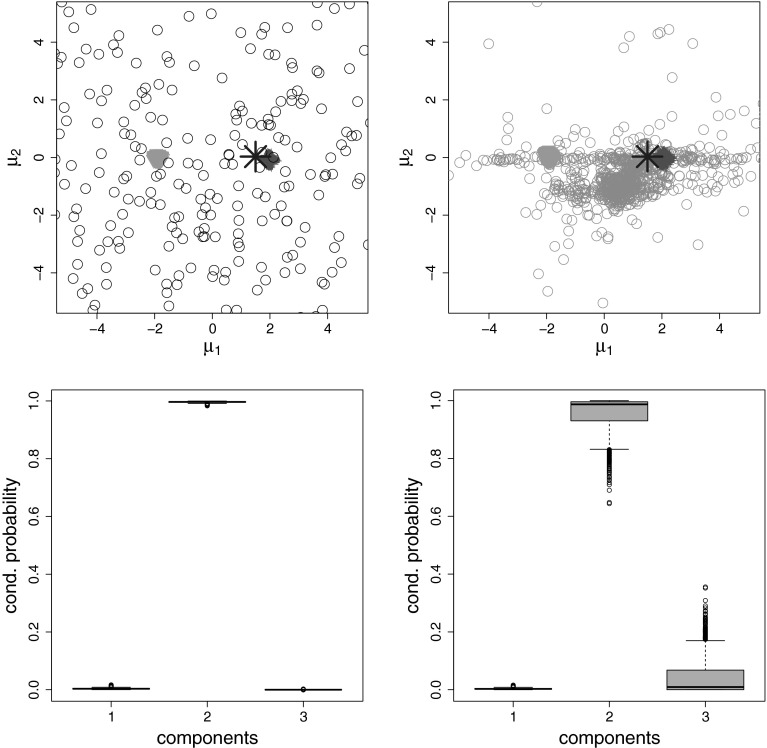

To illustrate the different allocation behavior of the standard and the normal gamma prior in the presence of a superfluous component more explicitly, we simulate = 1,000 observations from a bivariate two-component mixture model where , , , and . We fit an overfitting mixture distribution with components, assuming that . We skip the random permutation step, since the modes of the posterior distribution are well separated and the sampler is trapped in the neighborhood of a single mode, yielding implicit identification.

In the top row of Fig. 2, posterior draws of all three component means are displayed in a scatter plot both for the standard (left-hand side) and the normal gamma prior (right-hand side). Under both priors, the posterior draws of the first two component means, displayed by triangle and cross points respectively, are concentrated around the true means and . However, the posterior draws of the mean of the third (superfluous) component, shown as circle points, are quite different, displaying a large dispersion over the plane under the standard prior and being located either close to the two true component means or the data center under the normal gamma prior. To illustrate the ensuing effect on classification, we select a specific observation , which location is marked by a (blue) star in the scatter plots of the top, and determine for each MCMC sweep the probability for to be allocated, respectively, to component or . The corresponding box plots in the bottom row of Fig. 2 clearly indicate that the allocation probability for the third (superfluous) component is considerably higher under the normal gamma prior (plot on the right-hand side) than under the standard prior (plot on the left-hand side).

Fig. 2.

Fitting a 3-component normal mixture to data generated by a 2-component normal mixture. Top row Scatter plots of the draws of the posterior component means under the standard prior (left-hand side) and the normal gamma prior (right-hand side). Draws from component 1, 2, and 3 are displayed as green triangles, red crosses, and grey circles, respectively. Bottom row For a single observation which location is marked with a (blue) star in the scatter plots in the top row, box plots of the conditional probabilities during MCMC sampling to be assigned to component 1, 2 or 3 are displayed, under the standard prior (left-hand side) and normal gamma prior (right-hand side). MCMC is run for 1,000 iterations, after discarding the first 1,000 draws. (Color figure online)

Since our strategy to estimate the number of mixture components relies on the number of non-empty components during MCMC sampling, we conclude from this investigation that stronger shrinkage in might be necessary for the normal gamma prior (6) than for the standard prior (5). We compensate the tendency of the normal gamma prior to overestimate the number of non-empty components, by encouraging very small prior weights for empty components in order to keep the conditional probability of an observation to be allocated to an empty component during classification small. This is achieved by specifying a very small fixed hyperparameter in the Dirichlet prior, which is proportional to . Thus, the smaller , the smaller the weight of an empty component will be.

Identifying sparse finite mixtures

Identification of the finite mixture model requires handling the label switching problem caused by invariance of the representation (1) with respect to reordering the components:

where is an arbitrary permutation of . The resulting multimodality and perfect symmetry of the posterior distribution for symmetric priors makes it difficult to perform component-specific inference. To solve the label switching problem arising in Bayesian mixture model estimation, it is necessary to post-process the MCMC output to obtain a unique labeling. Many useful methods have been developed to force a unique labeling on draws from this posterior distribution when the number of components is known (Celeux 1998; Celeux et al. 2000; Frühwirth-Schnatter 2001; Stephens 2000; Jasra et al. 2005; Yao and Lindsay 2009; Grün and Leisch 2009; Sperrin et al. 2010). However, most of these proposed relabeling methods become computationally prohibitive for multivariate data with increasing dimensionality. For instance, as explained in (Frühwirth-Schnatter (2006), p. 96), Celeux (1998) proposes to use a -means cluster algorithm to allocate the draws of one iteration to one of clusters, which initial centers are determined by the first 100 draws. The distance of the draws to each of the reference centers is used to determine the labeling of the draws for this iteration. In general, most of the relabeling methods use the complete vector of parameters which grows as a multiple of even if they do not require all modes to be considered (see, for example Yao and Lindsay 2009).

Following Frühwirth-Schnatter (2011a), we apply -means clustering to the point process representation of the MCMC draws to identify a sparse finite mixture model, see Sect. 4.1. This allows to reduce the dimension of the problem to the dimension of the component-specific parameters. As described in Sect. 4.2, we generalize this approach by replacing -means clustering based on the squared Euclidean distance by -centroids cluster analysis based on the Mahalanobis distance (Leisch 2006).

Clustering the MCMC output in the point process representation

The point process representation of the MCMC draws introduced in (Frühwirth-Schnatter (2006), Sect. 3.7.1) allows to study the posterior distribution of the component-specific parameters regardless of potential label switching, which makes it very useful for model identification. If the number of mixture components matches the true number of components, then the posterior draws of the component-specific parameters cluster around the “true” points . To visualize the point process representation of the posterior mixture distribution, projections of the point process representation onto two-dimensional planes can be considered. These correspond to scatter plots of the MCMC draws , for two dimensions and and across .

After clustering the draws in the point process representation, a unique labeling is achieved for all those draws where the resulting classification is a permutation. By reordering each of these draws according to its classification, a (subset) of identified draws is obtained which can be used for component-specific parameter inference.

Note that to reduce dimensionality, it is possible to cluster only a subset of parameters of the component-specific parameter vector and to apply the obtained classification sequences to the entire parameter vector. In the present context of multivariate Gaussian mixtures, we only clustered the posterior component means and the obtained classification sequence was then used to reorder and identify the other component-specific parameters, namely covariance matrices and weights. This is also based on the assumption that the obtained clusters differ in the component means allowing clusters to be characterized by their means.

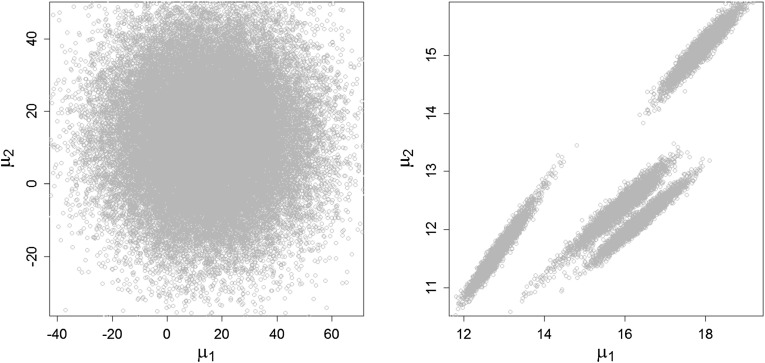

In the case of a sparse finite mixture model, where the prior on the weights favors small weights, many of the components will have small weights and no observation will be assigned to these components in the classification step. Component means of all empty components are sampled from the prior and tend to confuse the cluster fit. Therefore, Frühwirth-Schnatter (2011a) suggests to remove all draws from empty components before clustering. Additionally, after having estimated the number of non-empty components , all draws where the number of non-empty components is different from are sampled conditional on a “wrong” model and are removed as well. The remaining draws can be seen as samples from a mixture model with exactly components. In Fig. 3 an example of the point process representation of the MCMC draws is given. After having fitted a sparse finite mixture with components to the Crabs data set described in Sect. 5.2, the left-hand side shows the scatter plot of the MCMC draws ), , from all components (including draws from empty components). On the right-hand side, only draws from those iterations are plotted where and which were non-empty. In this case, the posterior distributions of the means of the four non-empty components can be clearly distinguished. These draws are now clustered into groups. The clusters naturally can be assumed to be of equal size and to have an approximate Gaussian distribution, thus suggesting the choice of -means for clustering or, in order to capture also non-spherical shapes or different volumes of the posterior distributions, the choice of -centroids cluster analysis where the distance is defined by a cluster-specific Mahalanobis distance. The algorithm is explained in the following subsection. The detailed scheme to identify a sparse finite mixture model can be found in Appendix 2.

Fig. 3.

Crabs data, , , standard prior. Point process representation of posterior mean draws, plotted against , across . Left-hand side draws of all components. Right-hand side only draws from those iterations where and the components which were non-empty

-centroids clustering based on the Mahalanobis distance

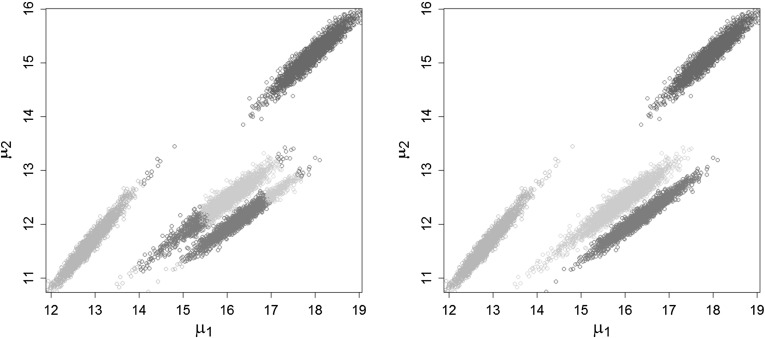

Defining the distance between a point and a centroid using the Mahalanobis distance may considerably improve the cluster fit in the point process representation. As can be seen in Fig. 4, where the clustering results for the Crabs data are displayed, if the posterior distributions have elliptical shape, clustering based on the Mahalanobis distance is able to catch the elongated, elliptical clusters whereas -means based on the squared Euclidean distance splits a single cluster into several parts and at the same time combines these parts to one new artificial cluster.

Fig. 4.

Crabs data: Clustering of the posterior mean draws of the plot on the right-hand side in Fig. 3, through -means (left-hand side) and -centroids cluster analysis based on the Mahalanobis distance (right-hand side)

For posterior draws of the component-specific parameter vector and a fixed number of clusters , the -centroids cluster problem based on the Mahalanobis distance consists of finding a “good” set of centroids and dispersion matrices

| 11 |

where are points in and are instances of the set of all positive definite matrices. “Good” means that using the assigned dispersion matrices , the sum of all distances between objects and their assigned centroids is minimized:

| 12 |

and the distance between an object and a centroid and a dispersion matrix is defined by the Mahalanobis distance:

| 13 |

Since no closed form solution exists for the -centroids cluster problem, an iterative estimation procedure is used. A popular choice is the well-known -means algorithm, its general form can be found in Leisch (2006). For the Mahalanobis distance (13), the -centroids cluster algorithm is given by:

Start with a random set of initial centroids and variance-covariance matrices .

- Assign to each the nearest centroid where the distance is defined by the Mahalanobis distance (13):

- Update the set of centroids and dispersion matrices holding the cluster membership fixed:

Repeat steps 2 and 3 until convergence.

The algorithm is guaranteed to converge in a finite number of iterations to a local optimum of the objective function (12) (Leisch 2006). As starting values of the algorithm in step 1, the MAP estimates of the hyperparameters of the prior of the component-specific means are used.

Simulations and applications

Simulation study

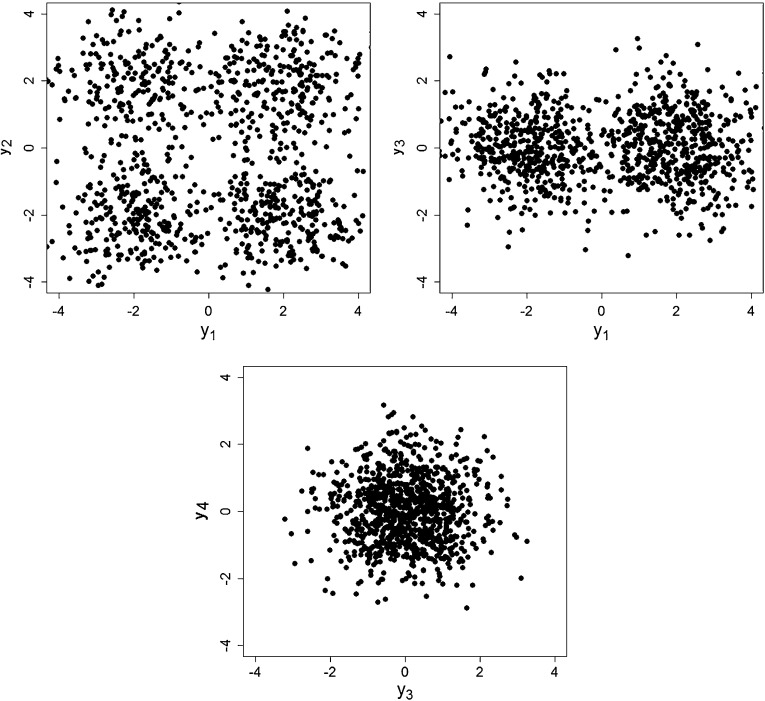

In the following simulation study, the performance of the proposed strategy for selecting the unknown number of mixture components and identifying cluster-relevant variables is illustrated for the case where the component densities are truly multivariate Gaussian. We use a mixture of four multivariate Gaussian distributions with component means , , , and and isotropic covariance matrices , , as data generating mechanism. Hence, this simulation setup consists of two cluster-generating variables in dimension 1 and 2 and two homogeneous variables in dimension 3 and 4 and is chosen in order to study, whether cluster-relevant variables and homogeneous variables can be distinguished. In Fig. 5, a randomly selected data set is shown, which was samples with equal weights. This figure indicates that, while in the scatter plot of the first two variables four clusters are still visually discernible, the clusters are slightly overlapping in these dimensions indicating that the cluster membership of some observations is difficult to estimate. The other two variables are homogenous variables and do not contain any cluster information, but render the clustering task more difficult.

Fig. 5.

Simulation setup with equal weights. Scatter plots of different variables for one randomly selected data set

As described in Sect. 2.1, we deliberately choose an overfitting mixture with components and base our estimate of the true number of mixture components on the frequency of non-empty components during MCMC sampling. We select strongly overfitting mixtures with and , to assess robustness of the proposed strategy to choosing , and investigate, if the number of non-empty components increases as increases. We simulate relatively large samples of 1,000 observations to make it more difficult to really empty all superfluous components.

In addition, we consider two different weight distributions, namely a mixture with equal weights, i.e. , and a mixture with a very small component, where , in order to study how sensitive our method is to different cluster sizes. For the second mixture, we investigate whether the small component survives or is emptied during MCMC sampling together with all superfluous components.

Prior distributions and the corresponding hyperparameters are specified as described in Sect. 2. The prior on the weight distribution defines a sparse finite mixture distribution. We either use the gamma prior on defined in (3) or choose a very small, but fixed value for as motivated by Sect. 3.2. In addition, we compare the standard prior (5) for the component means with the hierarchical shrinkage prior (6) based on the normal gamma distribution.

For each setting, ten data sets are generated, each consisting of 1,000 data points , and MCMC sampling is run for each data set for 10,000 iterations after a burn-in of 2,000 draws. The starting classification of the observations is obtained by -means. Estimation results are averaged over the ten data sets and are reported in Tables 1 and 2 where provides the posterior median of under the prior (3), whereas “ fixed” indicates that was fixed at the reported value. is the posterior mode estimator of the true number of non-empty components which is equal to 4. If the estimator did not yield the same value for all data sets, then the number of data sets where was the estimated number of non-empty components is given in parentheses. is the average number of iterations where exactly components were non-empty.

Table 1.

Simulation setup with equal weights: results for different under the standard prior (Sta), the normal gamma Prior (Ng), and when fitting an infinite mixture using the R package PReMiuM. Results are averaged over ten data sets

| Prior | fixed | |||||||

|---|---|---|---|---|---|---|---|---|

| Sta | 4 | 0.28 | 4 | 10000 | 0 | 0.049 | 0.167 | |

| 15 | 0.05 | 4 | 9802 | 0 | 0.049 | 0.167 | ||

| 30 | 0.03 | 4 | 9742 | 0 | 0.048 | 0.168 | ||

| Ng | 4 | 0.28 | 4 | 10000 | 0 | 0.049 | 0.137 | |

| 15 | 0.06 | 5(6) | 2845 | 0.85 | 0.050 | |||

| 30 | 0.03 | 5(5) | 2541 | 0.93 | 0.050 | |||

| Ng | 4 | 4 | 10000 | 0 | 0.047 | 0.136 | ||

| 15 | 4 | 7465 | 0 | 0.048 | 0.137 | |||

| 30 | 4(8) | 4971 | 0 | 0.048 | 0.136 | |||

| 30 | 4 | 9368 | 0 | 0.048 | 0.136 | |||

| 30 | 4 | 9998 | 0 | 0.047 | 0.136 | |||

| PReMiuM | ||||||||

| 0.66 | 4 | 0.047 | 0.231 |

Table 2.

Simulation setup with unequal weights: results for different under the standard prior (Sta), the normal gamma prior (Ng), and when fitting an infinite mixture using the R package PReMiuM. Results are averaged over ten data sets

| Prior | fixed | |||||||

|---|---|---|---|---|---|---|---|---|

| Sta | 4 | 0.27 | 4 | 10000 | 0.00 | 0.038 | 1.670 | |

| 15 | 0.05 | 4 | 9780 | 0.00 | 0.037 | 1.668 | ||

| 30 | 0.03 | 4 | 9728 | 0.00 | 0.038 | 1.663 | ||

| Ng | 4 | 0.01 | 4 | 10000 | 0.02 | 0.037 | 1.385 | |

| 15 | 0.01 | 4 | 7517 | 0.02 | 0.038 | 1.314 | ||

| 30 | 0.01 | 4(9) | 5221 | 0.00 | 0.037 | 1.279 | ||

| 30 | 0.001 | 4 | 9297 | 0.01 | 0.037 | 1.325 | ||

| 30 | 0.00001 | 4 | 9997 | 0.02 | 0.038 | 1.336 | ||

| PReMiuM | ||||||||

| 0.65 | 4(9) | 0.038 | 2.841 |

For each data set, these draws are identified as described in Sect. 4 using clustering in the point process representation. is the (average) rate among the iterations where the corresponding classifications assigned to the draws by the clustering procedure fail to be a permutation. Since in these cases the labels cannot be assigned uniquely to the components, these draws are discarded. For illustration, see the example in the Appendix 2. The non-permutation rate is a measure for how well-separated the posterior distributions of the component-specific parameters are in the point process representation, with a value of 0 indicating perfect separation, see Appendix 2 and Frühwirth-Schnatter (2011a) for more details. In the following component-specific inference is based on the remaining draws where a unique labeling was achieved.

Accuracy of the estimated mixture model is measured by two additional criteria. Firstly, we consider the misclassification rate () of the estimated classification. The estimated classification of the observations is obtained by assigning each observation to the component where it has been assigned to most often during MCMC sampling among the draws where components were non-empty and which could be uniquely relabeled. The misclassification rate is measured by the number of misclassified observations divided by all observations and should be as small as possible. The labeling of the estimated classification is calculated by “optimally” matching true components to the estimated mixture components obtaining in this way the minimum misclassification rate over all possible matches.

Secondly, whenever the true number of mixture components is selected for a data set, the mean squared error of the estimated mixture component means based on the Mahalanobis distance is determined as

| 14 |

where is the number of identified draws with exactly non-empty components. In Sects. 5.2 and 5.3, where the true parameters and are unknown, the Bayes estimates of the parameters are taken instead. They are calculated by running the MCMC sampler with known allocations and taking the mean of the corresponding posterior draws. Evidently, should be as small as possible.

For comparison, results are also reported for a finite mixture, where is known to be equal to the true value.

Finally, to compare our results to the clustering results obtained by fitting infinite mixtures, the R package PReMiuM (Liverani et al. 2013) is used to compute a Dirichlet process mixture model DP() with concentration parameter . The number of initial clusters is set to 30, the number of burn-in draws and number of iterations are set to 2,000 and 10,000, respectively. All other settings, such as hyperparameter specifications, calculation of the similarity matrix and the derivation of the best partition, are left at the default values of the package. After having obtained the estimated number of groups and the best partition vector , in a post-processing way identification and summarization methods are applied on the MCMC output as proposed in Molitor et al. (2010). To obtain the posterior distributions of the component means of each group, for each iteration the average value of group is computed by:

| 15 |

where denotes the number of individuals in group of and is the component to which observation was assigned in iteration . By averaging over all associated cluster means in each iteration, the posterior distribution of the component means is reconstructed in a post-processing way and represents the uncertainty associated with the final classification of the observations. Therefore, a larger MSE is expected than for our approach where the assumed model, a finite mixture of Gaussian distributions, corresponds to the true underlying model and the posterior component mean distribution is directly estimated during MCMC sampling.

The is then computed using Eq. (14). In the tables, the posterior mean of the concentration parameter is reported. Following Ishwaran et al. (2001), the estimated can be compared to using that a sparse finite mixture model with prior on the weights approximates an infinite mixture model with Dirichlet process prior DP() if , see Sect. 2.1.

Simulation setup with equal weights

Table 1 reports the results for the simulation setup with equal weights. Under the standard prior, the estimated number of non-empty mixture components matches the true number of components for both overfitting mixtures for all data sets, regardless whether or components have been used. Furthermore, exactly four components are non-empty for most of the draws, since . The non-permutation rate is zero for all overfitting mixtures, indicating that the posterior distributions of all non-empty components are well-separated in the point process representation.

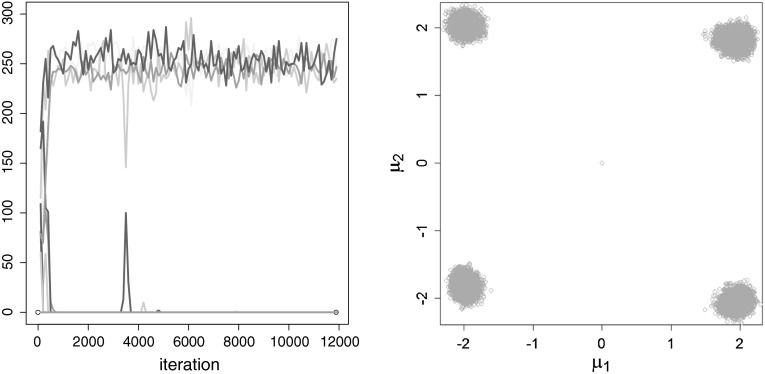

MCMC estimation for an overfitting mixture with components is explored in more detail in Fig. 6 for a randomly selected data set. The trace plot of allocations displays the number of observations allocated to the different components during MCMC sampling, also the burn-in draws are considered. Due to the starting classification through -means, the observations are assigned to all components at the beginning, however, rather quickly all but four components are emptied. In the scatter plot of the point process representation of the component mean draws of non-empty components , , sampled from exactly non-empty components, it can be seen very clearly that the draws cluster around the true parameter values and .

Fig. 6.

Simulation setup with equal weights: MCMC run of a single data set, , standard prior. Trace plot of the number of observations allocated to the different components, burn-in included (left-hand side) and point process representation of the posterior component mean draws where and which were non-empty, is plotted against (right-hand side)

If is considered to be random with prior (3), the estimated Dirichlet parameter has a very small value, much smaller than the (asymptotic) upper bound given by Rousseau and Mengersen (2011), and decreases, as the number of redundant components increases. This is true both for the standard prior and the normal gamma prior. However, under the normal gamma prior, the estimated number of non-empty components overfits the true number of mixture components for most of the data sets and increases with . For example, if , the number of non-empty components is overestimated with for 6 data sets. Also the high average non-permutation rate indicates that the selected model is overfitting. However, the is not higher than for , indicating that the fifth non-empty component is a very small one.

Given the considerations in Sect. 3.2, we combine the normal gamma prior with a sparse prior on the weight distribution where is set to a fixed very small value, e.g. to 0.01 which is smaller than the 1 % quantile of the posterior of . For this combination of priors, superfluous components are emptied also under the normal gamma prior and the estimated number of non-empty components matches the true number of mixture components in most cases. For , has to be chosen even smaller, namely equal to , to empty all superfluous components for all data sets. To investigate the effect of an even smaller value of , we also set . Again, four groups are estimated. Thus evidently as long as the cluster information is strong small values of do not lead to underestimating the number of clusters in a data set. As a consequence, for the following simulations, we generally combine the normal gamma distribution with a sparse prior on the weight distribution where is always set to fixed, very small values.

Both for the standard prior (with random) and the normal gamma prior (with fixed), the misclassification rate and the mean-squared error of the estimated models have the same size, as if we had known the number of mixture components in advance to be equal to . This oracle property of the sparse finite mixture approach is very encouraging.

While the misclassification rate is about the same for both priors, interestingly, the is considerably smaller under the normal gamma prior (0.136) than under the standard prior 0.167) for all . This gain in efficiency illustrates the merit of choosing a shrinkage prior on the component-specific means.

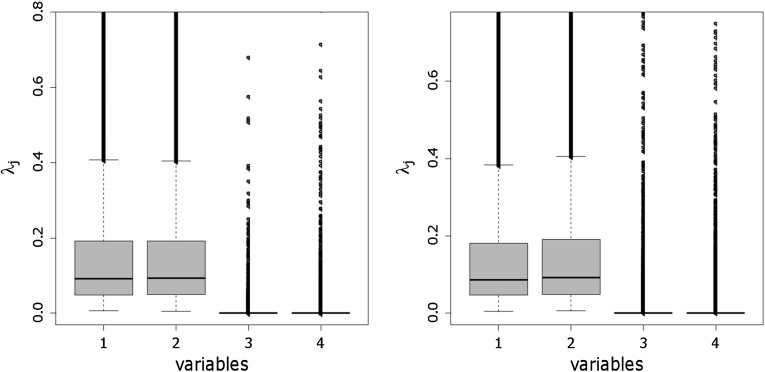

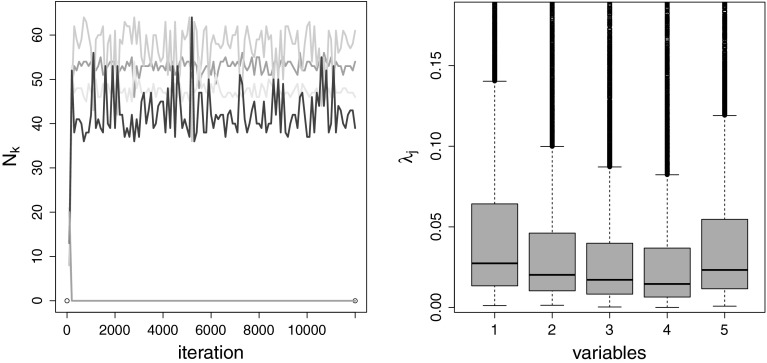

As noted in Sect. 2.2, a further advantage of specifying a normal gamma prior for the component means, is the possibility to explore the posterior distribution of the scaling factor . Therefore, visual inspection of the box plots of the posterior draws of helps to distinguish between variables, where component distributions are well separated, and variables, where component densities are strongly overlapping or even identical. The box plots of the posterior draws of displayed in Fig. 8 clearly indicate that only the first two variables show a high dispersion of the component means, whereas for the two other dimensions the posterior distribution of is strongly pulled toward zero indicating that component means are located close to each other and concentrate at the same point.

Fig. 8.

, normal gamma prior: Box plots of shrinkage factors , for the simulation setup with equal weights (left-hand side) and unequal weights (right-hand side). Posterior draws of all data sets are plotted

If the data sets are clustered by fitting an infinite mixture model with the R package PReMiuM, similar clustering results are obtained. For all ten data sets four groups are estimated. The averaged estimated concentration parameter is 0.66. This indicates, that a sparse finite mixture model with components and is a good approximation to a Dirichlet process DP as . As expected, the of the cluster means is considerable larger (0.231) than for sparse finite mixtures, whereas the misclassification rate (0.047) is as for finite mixtures.

Simulation setup with unequal weights

To study if small non-empty components can be identified under a sparse prior on the weights, the second simulation setup uses the weight distribution for data generation, where the first component generates only 2 % of the data.

The simulation results are reported in Table 2. Regardless of the number of specified components , non-empty components are identified under both priors. Again, for the normal gamma prior, the hyperparameter of the Dirichlet distribution has to be set to a very small value ( or even smaller) to empty all superfluous components in all data sets.

While our estimator is robust to the presence of a very small component, selecting the number of components by identifying ”large” weights, as has been suggested by Rousseau and Mengersen (2011), is likely to miss the fourth small component. In the left-hand side plot of Fig. 7, the (unidentified) posterior weights sorted by size in each iteration are displayed for a single data set. The forth largest weight in each iteration is very small and there might be uncertainty whether the forth component belongs to either the superfluous components or constitutes a non-empty component. However, by looking for non-empty components during MCMC sampling as our approach does, the small component can be clearly identified since it is never emptied during the whole MCMC run, as can be seen in the trace plot of allocations in Fig. 7.

Fig. 7.

Simulation setup with unequal weights, diagnostic plots of a single MCMC run, , standard prior: Box plots of the (unidentified) posterior weight draws, sorted by size in each iteration (left-hand side) and trace plot of the number of observations allocated to the different mixture components, burn-in included (right-hand side)

Again, both for the standard prior and the normal gamma prior, the misclassification rate and the mean-squared error of the estimated models have the same size, as if we had known the number of mixture components in advance to be equal to . Again, the normal gamma prior dominates the standard prior, with the being considerably smaller under the normal gamma prior ( 1.385) than under the standard prior 1.670) for all . This illustrates once more the efficiency gain of choosing a shrinkage prior on the component-specific means.

Also for this simulation setting, the posterior distribution of scaling factors , sampled for under the normal gamma prior and displayed in Fig. 8 on the right-hand side, clearly indicates that only the first two variables are cluster-generating, regardless of the small cluster size of the first component.

Again similar clustering results are obtained when fitting infinite mixtures. For almost all data sets (9 out of 10) the true number of groups is estimated. Again the is larger for infinite mixtures (2.841) than for sparse finite mixtures.

Crabs data

The Crabs data set, first published by Campbell and Mahon (1974) and included in the R package MASS (Venables and Ripley 2002), consists of 200 observations of a crabs population. It describes five morphological measurements on 200 crabs which have one of two color forms and differ in sex. Thus, four different groups are “pre-defined” and in the following we aim at recovering these four groups using the sparse finite mixture approach. Thus we would expect to find four data clusters. However, the correct number of clusters may be more than four (if some of the “pre-defined” groups are heterogeneous themselves) or less than four (if some of the “pre-defined” groups are not distinguishable), see considerations made by Hennig (2010). Among others, the data set was analyzed by Raftery and Dean (2006), Hennig (2010) and Yau and Holmes (2011). We used the original data without transformations.

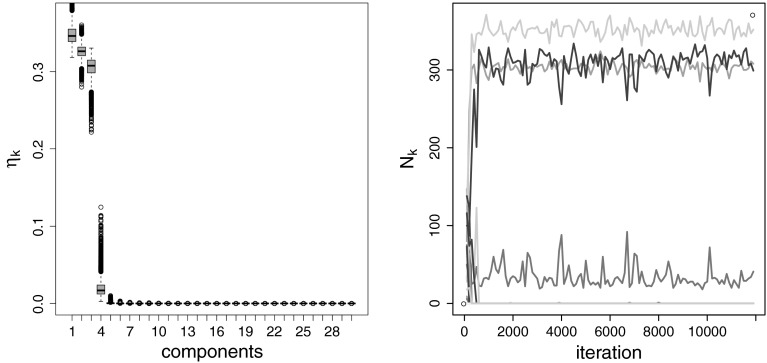

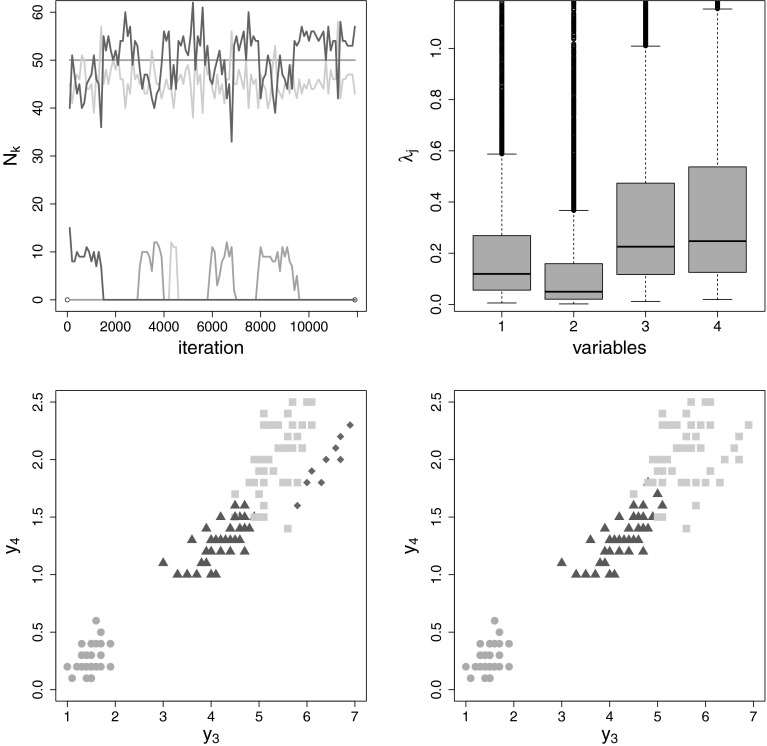

For selecting the number of mixture components, sparse finite mixture models with and mixture components are specified. As can be seen in Table 3, under both the standard and the normal gamma prior the expected number of components is selected. The posterior distribution converges rather fast to four non-empty components, as can be seen in the trace plot on the left-hand side in Fig. 9, where the number of observations allocated to the 15 components are displayed.

Table 3.

Crabs data: results for different under the standard prior (Sta) and the normal gamma prior (Ng), and when fitting an infinite mixture using the R package PReMiuM. The is calculated using the Mahalanobis distance based on Bayes estimates. , and are the results based on the clustering of the draws in the point process representation through -means instead of the -centroids cluster analysis based on the Mahalanobis distance

| Prior | fixed | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Sta | 4 | 0.27 | 4 | 10,000 | 0 | 0.08 | 0.80 | 0.27 | 0.08 | 3.67 | |

| 15 | 0.05 | 4 | 10,000 | 0 | 0.08 | 0.81 | 0.28 | 0.08 | 3.82 | ||

| 30 | 0.03 | 4 | 10,000 | 0 | 0.08 | 0.80 | 0.29 | 0.08 | 3.42 | ||

| Ng | 4 | 0.01 | 4 | 10,000 | 0 | 0.07 | 0.68 | 0.44 | 0.08 | 6.72 | |

| 15 | 0.01 | 4 | 9,938 | 0 | 0.07 | 0.67 | 0.46 | 0.08 | 8.19 | ||

| 30 | 0.01 | 4 | 9,628 | 0 | 0.07 | 0.68 | 0.46 | 0.08 | 8.10 | ||

| PReMiuM | |||||||||||

| 0.67 | 3 | 0.28 |

Fig. 9.

Crabs data, normal gamma prior, : Trace plot of the number of observations allocated to the different components, burn-in included (left-hand side). Box plots of the shrinkage factors for all five variables (right-hand side)

The misclassification rate of the identified model is 0.08 for the standard prior and 0.07 for the normal gamma prior. In Raftery and Dean (2006) the misclassification rate was 0.40 when using all variables as we do, and 0.075 when excluding one variable. Again, there is a considerable reduction in under the normal gamma prior compared to the standard prior. Box plots of the posterior draws of the shrinkage factor in Fig. 9 reveal that all five variables are cluster-relevant which might be due to the high correlation between variables.

This specific case study also demonstrates the importance of the refined procedure introduced in Sect. 4.2 to identify a mixture by clustering the MCMC draws of the component-specific means in the point process representation. Clustering using the squared Euclidean distance fails to capture the geometry of the posterior mean distribution and leads to a high non-permutation rate, denoted by in Table 3. Clustering using -centroids cluster analysis based on the Mahalanobis distance, however, allows to capture the elliptical shapes of the posterior mean distribution properly, see Fig. 4, which in turn reduces the non-permutation rate to 0. In this way, inference with respect to the component-specific parameters is considerably improved, as is evident from comparing and for both clustering methods in Table 3.

By clustering the Crabs data using an infinite mixture model with initial settings as explained in Sect. 5.1, three groups are estimated.

Iris data

The Iris data set (Anderson 1935; Fisher 1936) consists of 50 samples from each of three species of Iris, namely Iris setosa, Iris virginica and Iris versicolor. Four features are measured for each sample, the length and width of the sepals and petals, respectively. We aim at recovering the three underlying classes using the sparse finite mixture approach and thus expect to find three data clusters, although, as mentioned already for the Crabs data in Sect. 5.2, the true number of clusters for a finite mixture of Gaussian distributions may be more or less than three.

The results are reported in Table 4 by fitting sparse finite mixture models with 15 and 30 components, respectively. Values given in parentheses refer to the draws associated with the number of non-empty components given in parenthesis in column . Under both priors, the expected number of components is selected, as the majority of the draws is sampled from a mixture model with exactly three non-empty components. Under the standard prior, the misclassification rate of the identified model is 0.027, which outperforms the rate of 0.04 given in Raftery and Dean (2006).

Table 4.

Iris data: results for different , under the standard prior (Sta) and the normal gamma prior (Ng), and when fitting an infinite mixture using the R package PReMiuM. The is calculated using the Mahalanobis distance based on Bayes estimates. Values given in parentheses refer to the draws associated with the number of non-empty components given in parenthesis in column

| Prior | fixed | |||||||

|---|---|---|---|---|---|---|---|---|

| Sta | 3 | 0.34 | 3 | 10,000 | 0 | 0.027 | 0.338 | |

| 15 | 0.05 | 3 (4) | 5,900 (4086) | 0 (0.004) | 0.027 (0.093) | 0.336 | ||

| 30 | 0.03 | 3 (4) | 6889 (3111) | 0 (0.002) | 0.027 (0.093) | 0.338 | ||

| Ng | 3 | 0.01 | 3 | 10,000 | 0 | 0.027 | 0.350 | |

| 15 | 0.01 | 3 (4) | 7469 (2496) | 0 (0.043) | 0.033 (0.093) | 0.343 | ||

| 30 | 0.01 | 3 (4) | 6157 (3730) | 0 (0.147) | 0.033 (0.093) | 0.349 | ||

| PReMiuM | ||||||||

| 0.52 | 2 | 0.33 |

However, there is strong evidence for a fourth, non-empty component, actually not present in the true classification. Under both priors, a considerable number of draws is sampled from a mixture model with four non-empty components for all overfitting mixtures. We study the MCMC draws for under the standard prior in more detail. On the top row, in the left-hand side plot of Fig. 10, the number of observations allocated to the different components during MCMC sampling is displayed, indicating frequent switches between and non-empty components. This indicates that the posterior distribution does not converge clearly to a solution with three non-empty components and that a mixture model with non-empty components has also considerable posterior probability. Moreover, the non-permutation rate is small for (0.004), indicating that the component means are well separated. If further inference is based on the draws with non-empty components, the obtained four-cluster solution seems to be a reasonable solution. This can be seen in Fig. 10 where scatter plots of 2 variables (petal length and petal width) under both the resulting classification for and the true classification are displayed. Observations of the fourth estimated component, displayed in dark grey diamonds, constitute a rather isolated group.

Fig. 10.

Iris data, : Top row Trace plot of number of observations allocated to the different components under the standard prior (left-hand side), box plots of posterior shrinkage factors , for all four variables, under the normal gamma prior (right-hand side). Bottom row estimated classification for under the standard prior (left-hande side) and true classification (right-hande side)

Regarding the identification of cluster-relevant variables, box plots of the shrinkage factors displayed in Fig. 10 indicate that variable 2 (sepal width) is the most homogeneous variable which coincides with results in Yau and Holmes (2011).

If the number of groups is estimated by specifying an infinite mixture model, only the two data clusters are identified indicating that the infinite mixture approach implemented in package PReMiuM with the default settings aims at identifying clearly separated clusters with minimum overlap.

Discussion

In the framework of Bayesian model-based clustering, we propose a straightforward and simple strategy for simultaneous estimation of the unknown number of mixture components, component-specific parameters, classification of observations, and identification of cluster-relevant variables for multivariate Gaussian mixtures. Our approach relies on specifying sparse priors on the mixture parameters and involves only standard MCMC methods.

Estimation of the unknown number of mixture components is based on sparse finite mixtures where a sparse prior on the weights empties superfluous components during MCMC sampling and the number of true components can be estimated from the number of non-empty components. An advantage of this strategy is that model selection can be performed without computer-intensive calculations of marginal likelihoods or designing sophisticated proposals within RJMCMC. This approach works astonishingly well if the number of observations and the number of variables is not too large.

However, there are also limitations to the proposed strategy. First of all, we investigated our strategy under the assumption that the mixture components truly arise from multivariate Gaussian distributions. In order to catch non-symmetrical cluster shapes or handle outliers it would also be interesting to extend the approach to non-Gaussian component distributions, e.g. the -distribution and the skew-normal distribution (see Frühwirth-Schnatter and Pyne 2010; Lee and McLachlan 2014).

We may apply sparse finite Gaussian mixtures to data from such skew or fat-tailed mixture distributions, however, in this case the posterior mixture distribution tends to fit more than one Gaussian component to represent a single non-Gaussian cluster, in particular for an increasing number of observations. As a consequence, the method is fitting Gaussian mixtures in the sense of density estimation, where the number of components is of no specific interest, and the estimated number of non-empty components no longer represents the number of distinct clusters. An important issue for further investigation is therefore how to combine mixture components, i.e. how to identify adjacent located components and merge them into one larger cluster. Several recent papers have considered the problem of merging Gaussian mixture components, see e.g. Li (2005), Baudry et al. (2010), and Hennig (2010).

To identify cluster-relevant variables, the standard prior for the component means commonly applied for multivariate Gaussian mixtures is substituted by a hierarchical shrinkage prior based on the normal gamma prior. This prior tends to fill superfluous components, since it becomes informative during MCMC sampling and superfluous components are placed in reasonable locations. We found that the normal gamma prior requires specification of a very sparse prior on the mixture weights, which is achieved by setting the hyperparameter of the Dirichlet prior to a very small fixed value. Under this shrinkage prior, the true number of components is recovered from overfitting finite mixtures for simulated data. Additionally, under the normal gamma prior box plots of shrinkage factors allow visual identification of cluster-relevant and homogeneous variables. Furthermore, component locations are estimated more precisely under the normal gamma than under the standard prior.

A limitation of this strategy is that explicit identification of cluster-relevant variables is based on visual detection in a post-processing step. In the presence of a huge number of variables, this strategy might be too cumbersome and an automatic approach might possibly be preferable. This could be developed similar to the automatic approaches which are for example used for explicit variable selection in a regression setting when using the Bayesian Lasso.

Finally, we note a further limitation of the proposed strategy for high-dimensional data sets. When applying sparse finite mixtures to high-dimensional data sets, Gibbs sampling with data augmentation tends to get stuck in local modes, so that superfluous components do not become empty during sampling. An issue for further studies is therefore how to improve mixing, i.e. to design well-mixing samplers, a problem also mentioned in Yau and Holmes (2011).

Acknowledgments

This research was supported by the Austrian Science Fund (FWF): V170-N18.

Appendix 1: Scheme for estimating using MCMC sampling

The sampling scheme iterates the following steps:

- Parameter simulation conditional on the classification :

- Sample

where

and is the number of observations assigned to component . - For : sample

where - For : sample

where

and is the mean of the observations assigned by to component .

- Classification of each observation conditional on knowing :

- For : sample from

- Sample hyperparameters:

- Sample

- For a Dirichlet prior with random hyperparameter , sample via a Metropolis-Hastings step from

see Eq. (10).

-

(c)For , sample

where -

(d)Sample

where

- Random permutation of the labeling: select randomly one permutation of possible permutations of and substitute:

Appendix 2: Scheme for clustering in the point process representation

After the MCMC run, a sparse finite mixture is identified by post-processing the MCMC draws through the following steps:

For each iteration of the MCMC run, determine the number of non-empty components according to Eq. (4).

Estimate the true number of mixture components by , the value of the number of non-empty components occurring most often during MCMC sampling. Consider only the subsequence of all MCMC iterations where the number of non-empty components is exactly equal to .

For all iterations, remove the draws from empty components.

Arrange the remaining draws of the different component means in a matrix with rows and columns. Cluster all draws into clusters using -centroids cluster analysis (Leisch 2006) where the distance between a point and a cluster centroid is determined by the Mahalanobis distance. Details on the cluster algorithm are given in Sect. 4.2. The -centroids clustering algorithm results in a classification indicating to which clusters the component-specific parameters of each single draw belong.