Abstract

The tactile perception of the shape of objects critically guides our ability to interact with them. In this review, we describe how shape information is processed as it ascends the somatosensory neuraxis of primates. At the somatosensory periphery, spatial form is represented in the spatial patterns of activation evoked across populations of mechanoreceptive afferents. In the cerebral cortex, neurons respond selectively to particular spatial features, like orientation and curvature. While feature selectivity of neurons in the earlier processing stages can be understood in terms of linear receptive field models, higher order somatosensory neurons exhibit nonlinear response properties that result in tuning for more complex geometrical features. In fact, tactile shape processing bears remarkable analogies to its visual counterpart and the two may rely on shared neural circuitry. Furthermore, one of the unique aspects of primate somatosensation is that it contains a deformable sensory sheet. Because the relative positions of cutaneous mechanoreceptors depend on the conformation of the hand, the haptic perception of three-dimensional objects requires the integration of cutaneous and proprioceptive signals, an integration that is observed throughout somatosensory cortex.

Keywords: neurophysiology, touch, neural coding, shape, perception, tactile, objects

The hand is a remarkable organ that allows us to interact with objects with unmatched versatility and dexterity. Our ability to grasp and manipulate objects relies not only on sophisticated neural circuits that drive muscles to move the hand but also on neural signals from the hand that provide feedback about the consequences of those movements (Johansson and Flanagan 2009). Individuals deprived of this sensory information struggle to perform even the most basic activities of daily living, like picking up a coffee cup or using a spoon (Sainburg et al. 1995). More complex tasks, like tying shoes or buttoning a shirt, are out of the question.

Neural signals from the hand convey information about the shape, size, and texture of an object; if the object moves across the skin, information about the speed and direction of this motion is also conveyed. Discriminative touch signals from the hand are transmitted to the cerebral cortex along an ascending pathway that includes synapses in the brainstem and thalamus. Little is known about the coding properties of these two structures. Ultimately, however, touch signals project to primary somatosensory cortex, where behaviorally relevant features of the object (its shape, size, texture, and motion) are encoded in the responses of individual neurons.

In the present review, we discuss the neural basis of haptic perception with the hands in human and non-human primates. We track neural signals about objects from their genesis in the skin through their ascension up the somatosensory neuraxis, focusing on signals that convey information about the shape of objects, defined in a geometric sense. Shape information is available at multiple spatial scales. Signals from individual fingerpads convey information about local shape, for example, the presence and configuration of edges or bumps. Braille reading is a canonical example of our ability to recognize two-dimensional (2D) spatial patterns, in this case embossed dots in different configurations, with high speed and precision. Much of our understanding of tactile shape processing comes from studies of the neural coding of local shape information. Of all sensory modalities, however, somatosensation is unique in that it contains a deformable sensory sheet: mechanoreceptors move relative to one another as hand posture changes (Hsiao 2008). This flexible sensory sheet allows us to perceive the three-dimensional (3D) structure of objects that are large enough to contact the hand at multiple locations simultaneously. Less is known about the coding of 3D “global” object shape, but synthesis of 3D shape representations likely requires integrating proprioceptive signals about the configuration of the hand with cutaneous signals stemming from the different contact points with an object. We summarize the recent progress in identifying the neural interactions between cutaneous and proprioceptive signaling for shape processing. Not only are tactile signals about shape critical to our ability to interact with objects, they also allow us to identify objects by touch when vision is unavailable. It turns out that the neural mechanisms that mediate shape processing in touch are remarkably analogous to their counterparts in vision, a theme that will be developed throughout this review.

Shape Coding at the Somatosensory Periphery

Forces applied to the skin's surface propagate through the tissue to produce stresses and strains on mechanoreceptors embedded in the skin (Phillips and Johnson 1981b; Sripati et al. 2006a). In the glabrous skin, which covers the palmar surface of the hand, four classes of mechanoreceptors each respond to different aspects of skin deformations and send projections to the brain via large-diameter nerve fibers, each with its own designation (Bensmaia and Manfredi 2012): slowly adapting type 1 (SA1) afferents, which innervate Merkel cell receptors, respond to static indentations or slowly moving stimuli; Ruffini corpuscles, innervated by slowly adapting type 2 (SA2) fibers, respond to skin stretch; Meissner corpuscles, associated with rapidly adapting (RA) fibers, respond to low-frequency skin vibrations and movements across the skin; and Pacinian corpuscles (PC), associated with PC fibers, respond to high-frequency vibrations and finely textured surfaces. The designation slowly vs. rapidly adapting refers to the responses of these afferents to static indentations: SA1 and SA2 fibers respond throughout a skin indentation whereas RA and PC fibers only produce a transient response at the onset and offset of the indentation. Importantly, however, all receptor types respond during typical interactions with most objects (Saal and Bensmaia 2014), and information about the different features of an object (size, shape, texture, motion, etc.) is multiplexed in these responses.

To understand mechanosensory transduction and afferent responses to indented spatial patterns, one must take skin mechanics into account. The forces applied on the skin when spatial patterns are indented into it must therefore propagate through the tissue before they deform and excite mechanoreceptors in the skin (Dandekar et al. 2003; Phillips and Johnson 1981b; Sripati et al. 2006a). The resulting spatial pattern of mechanoreceptor activation is thus a somewhat distorted version of the spatial pattern at the skin's surface. This transformation is well approximated by models that first convert the displacement profile of a spatial pattern indented into the skin into a pattern of stresses and strains at the location of the receptor (Sripati et al. 2006a). The impact of a tactile stimulus on any individual mechanoreceptor thus depends both on the physical properties of the skin itself, such as its mass, stiffness, and resistance, and characteristics of the receptor, such as its location in the tissue and the spatial layout of its transduction sites. Ultimately, the skin tends to enhance certain stimulus features (for example, corners) and obscure others (for example, small internal features), a process that is reflected in the neural image conveyed by the somatosensory nerves.

A critical property of mechanoreceptive afferent fibers in determining their role in tactile shape perception is the size of their receptive fields (RFs), itself determined by the depth of the receptors they innervate and by the degree to which individual afferents branch out to innervate multiple receptors. Indeed, SA2 and PC fibers respond to mechanical stimulation of large swaths of skin, so activation of these afferents conveys little information about the precise location of object contact or the spatial configuration of the object. In contrast, SA1 and RA afferents only respond to mechanical stimulation applied to very restricted patches of skin. The smaller “pixels” of SA1 and RA responses allow these afferent populations to convey more acute spatial information than do the huge “pixels” of SA2 and PC responses.

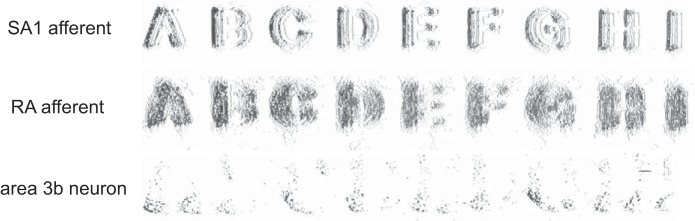

At the somatosensory periphery, then, the shape of objects is encoded in the spatial pattern of activation evoked in mechanoreceptive fibers, much like the shape of visual objects is encoded in the spatial pattern of activation evoked in photoreceptors in the retina. For example, the spatial configuration of edges (Srinivasan and LaMotte 1987), embossed letters (Phillips et al. 1988), Braille-like dot patterns (Johnson and Lamb 1981; Phillips et al. 1990, 1992), and half spheres of varying curvature (Goodwin et al. 1995) is reflected in the spatial pattern of activation evoked in mechanoreceptive afferents (Fig. 1). Furthermore, the spatial image carried by SA1 afferents is sharper than its RA counterpart. For example, the shape of embossed letters is better defined in the spatial event plots (SEPs) reconstructed from SA1 responses than in those reconstructed from RA responses (Fig. 1). SEPs have also been used to estimate the ability of peripheral afferents to signal the curvature and orientation of objects indented into the skin (LaMotte et al. 1998; LaMotte and Srinivasan 1996). Again, SA1 afferents were found to convey the most reliable information about these stimulus features (Khalsa et al. 1998). In fact, paired psychophysical and neurophysiological experiments have shown that SA1 afferents respond to the finest tangible spatial features and set the bottleneck for spatial acuity, at least for stimuli indented into the skin (Johnson and Phillips 1981; Phillips and Johnson 1981a). Spatial acuity actually improves when RA signals are reduced (Bensmaia et al. 2006).

Fig. 1.

Spatial event plots (SEPs). SEPs reconstructed from a slowly adapting type 1 (SA1) afferent (top), a rapidly adapting (RA) afferent (middle), and a neuron in area 3b (bottom). While the spatial pattern of afferent activation reflects the spatial configuration of the stimulus, cortical neurons respond to specific stimulus features (adapted from Phillips et al. 1988).

However, RA afferents also convey shape information, as evidenced by the successful use of the optical-to-tactile converter (Optacon), a sensory substitution device that consists of an array of vibrating pins (Bliss et al. 1970; Craig 1980). The idea behind the Optacon was that blind individuals would scan printed text with a camera and the text would be converted to a spatial pattern of pin activation that reflected the scanned letters. When pins configured in the shape of a letter were made to vibrate, for example, subjects were able to identify the letter. Importantly, however, the Optacon excites RA and PC but not SA1 fibers (Gardner and Palmer 1989). As PC fibers are unlikely to contribute to shape perception given their sparseness and large RFs (Johansson and Vallbo 1979; Vallbo and Johansson 1984), the ability to discern shape through the Optacon is almost certainly mediated by RA fibers.

While the shape signal carried by SA1 afferents tends to be sharper for static or slowly moving tactile stimuli, that carried by RA afferents may be more informative under some circumstances, for example, during rapid hand interactions with objects, which may drive RA afferent responses more robustly. This possibility has not been tested.

In addition to carrying a spatial image of a tactile stimulus, afferents may also begin the process of extracting behaviorally relevant features of the stimulus. Indeed, the responses of individual SA1 and RA human tactile afferents convey information about the direction of forces experienced at the fingertip and the shape (i.e., curvature) of the surfaces contacting the skin (Birznieks et al. 1999; Jenmalm et al. 2001, 2003; Johansson and Birznieks 2004). Afferents can also signal the orientation of bars and edges by producing responses whose strength and timing are dependent on orientation (Pruszynski and Johansson 2014). This selectivity for spatial features likely results from the nonuniform distribution of highly sensitive “hot spots” across each afferent's RF (Pruszynski and Johansson 2014), each of which corresponds to a receptor that is innervated by the afferent. While afferent RFs with multiple hot spots are also found in non-human primates (Phillips and Johnson 1981a), these may be more pronounced in humans due to their larger digit pads (Johansson 1978; Phillips et al. 1992). Some evidence for spatial feature selectivity is also observed in retinal ganglion cells (Gollisch and Meister 2010), suggesting that precortical geometric feature extraction may be a general operation performed by sensory systems.

Transmission of Shape Information from the Hand to the Brain

Somatosensory shape information carried by peripheral afferent populations is primarily transmitted to the cortex via the dorsal column-medial lemniscal pathway. In this pathway, axons from nerve fibers carrying somatosensory signals from the forelimb ascend the spinal cord and synapse onto neurons in the cuneate nucleus (CN) in the medulla. Second order somatosensory neurons in the CN project to the ventroposterior lateral nuclei (VPL) in the thalamus. Little is known about the tactile coding properties of neurons in the CN and VPL of primates. However, while these structures have traditionally been considered relay stations that perform limited information processing operations, recent evidence from other organisms suggests that they implement more complex processing (Jorntell et al. 2014). The implications of this processing for the neural processing of shape in primates remain to be elucidated.

Primary somatosensory cortex (S1), comprising Brodmann areas 3a, 3b, 1, and 2, is the principal recipient of somatosensory thalamic inputs. Each S1 subdivision displays a distinct pattern of thalamic and cortico-cortical connections. Neurons in area 3a receive their inputs from the shell region surrounding VPL and respond primarily to proprioceptive stimulation (Gardner 1988). Neurons in area 3b primarily receive thalamic inputs from the core regions of VPL (Jones 1983; Jones and Friedman 1982) and respond best to cutaneous stimulation (Paul et al. 1972; Sur et al. 1980, 1985). Areas 1 and 2 receive dense projections from area 3b in addition to thalamic inputs (Burton and Fabri 1995) and contain neurons whose RFs often span multiple digits (Hyvärinen and Poranen 1978b; Iwamura et al. 1993; Vierck et al. 1988). Furthermore, neurons in area 2 respond to both cutaneous and proprioceptive stimulation (Hyvärinen and Poranen 1978b; Iwamura and Tanaka 1978). After processing in S1, tactile information is routed to association areas in posterior parietal cortex (PPC) (Kalaska et al. 1983; Mountcastle et al. 1975) and to the second somatosensory cortex (S2) (Burton et al. 1995; Friedman et al. 1980, 1986). Neurons in these higher order somatosensory areas have large RFs and complex response properties with cutaneous and proprioceptive components. S2 in humans (Eickhoff et al. 2006, 2007) and non-human primates (Fitzgerald et al. 2004; Kaas and Collins 2003) can be divided into at least three subregions, each with distinct response properties (Burton et al. 2008; Fitzgerald et al. 2004). In the following section, we consider how shape information is encoded and transformed along the somatosensory processing pathway.

Shape Representations and Transformations in the Somatosensory System

As discussed above, the spatial pattern of activation across SA1 and RA afferents is largely isomorphic with the stimulus. Such isomorphic neural images can be appreciated from SEPs (Fig. 1), which are generated by registering a neuron's spiking activity with the position of a stimulus (e.g., an embossed letter) that is scanned across the neuron's RF (Phillips et al. 1988). SEPs constructed from a single neuron's responses to embossed letter stimuli (Fig. 1) approximate the neural images of the letters as they would appear distributed across a population of identically responsive neurons whose receptive fields are densely and uniformly distributed across the skin (Phillips et al. 1988). Each afferent's spiking activity simply reflects the presence or absence of any stimulus component that falls within its RF, with some spatial filtering (blurring) due to skin mechanics (Phillips and Johnson 1981b; Sripati et al. 2006a) and afferent branching (Pare et al. 2002). In contrast, cortical neurons in area 3b display a wider range of response patterns to the same stimuli, ranging from strongly isomorphic to highly structured but nonisomorphic (Fig. 1) to weakly structured (Phillips et al. 1988). The heterogeneity of 3b response types implies a shift from strict isomorphism and an emergence of feature selectivity in somatosensory cortex. Critically, strongly isomorphic cortical responses may be partially inherited from subcortical processing levels as RF size, RF complexity, and neural feature selectivity in area 3b neurons are correlated with laminar position (DiCarlo and Johnson 2000) with the most “afferent-like” responses occurring in the granular layer, which receives the bulk of the thalamocortical projections (Jones and Burton 1976).
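The registration step behind an SEP can be illustrated with a minimal sketch. It assumes a raster-style protocol in which the stimulus is scanned repeatedly across the RF at a constant speed and shifted by a fixed step between scans; the function names, parameters, and binning scheme are hypothetical and are not taken from the cited studies.

```python
def spatial_event_plot(spike_times_per_scan, scan_speed_mm_s, row_step_mm):
    """Map spike times onto stimulus positions (one dot per spike).

    spike_times_per_scan: list of lists; entry k holds the spike times (s)
        recorded during scan k, measured from the start of that scan.
    scan_speed_mm_s: assumed constant scanning speed of the stimulus.
    row_step_mm: assumed shift of the stimulus, perpendicular to the scan
        direction, between successive scans.
    """
    events = []
    for scan_index, spike_times in enumerate(spike_times_per_scan):
        y_mm = scan_index * row_step_mm
        for t in spike_times:
            # Position of the stimulus along the scan axis when the spike occurred.
            events.append((t * scan_speed_mm_s, y_mm))
    return events


def bin_events(events, bin_mm, n_cols, n_rows):
    """Count events in a regular grid to obtain an image-like SEP."""
    counts = [[0] * n_cols for _ in range(n_rows)]
    for x_mm, y_mm in events:
        col = int(x_mm // bin_mm)
        row = int(y_mm // bin_mm)
        if 0 <= col < n_cols and 0 <= row < n_rows:
            counts[row][col] += 1
    return counts
```

Plotting the returned events as dots, or displaying the binned counts as an image, yields a picture in which isomorphic responses trace out the stimulus itself.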

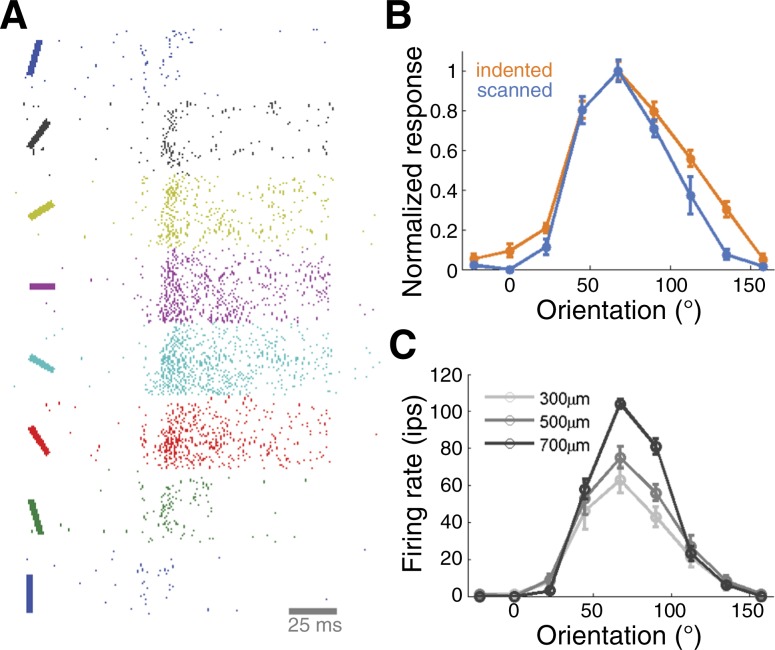

Orientation is an elementary contour feature and many S1 neurons in areas 3b and 1 respond selectively to bars and edges at particular orientations when these impinge upon their RFs (Bensmaia et al. 2008a; Pubols and LeRoy 1977; Warren et al. 1986). An orientation-selective neuron responds vigorously to a stimulus presented at its preferred orientation and weakly as the orientation of the stimulus deviates from the neuron's preferred orientation (Fig. 2A). In many neurons, orientation preferences are consistent whether the stimuli are indented or scanned over the finger (Fig. 2B) and tuning is robust to changes in stimulus amplitude (Fig. 2C) (Bensmaia et al. 2008a). The sensitivity of orientation-tuned neurons is comparable to that of human observers so orientation signals in areas 3b and 1 can in principle account for the tactile perception of oriented edges (Bensmaia et al. 2008b). The degree to which this orientation tuning in S1 is shaped by the tuning observed in afferent signals (Pruszynski and Johansson 2014) remains to be elucidated.
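One common way to summarize a tuning curve of this kind is a doubled-angle vector sum, which respects the fact that orientation repeats every 180°. The sketch below is illustrative only: the function name is hypothetical, and the cited studies may have quantified tuning differently.

```python
import math


def orientation_tuning(orientations_deg, rates):
    """Return (preferred orientation in degrees, tuning strength in [0, 1]).

    Angles are doubled before summing so that 0 and 180 degrees, which are
    the same orientation, point in the same direction.
    """
    total = sum(rates)
    if total <= 0:
        raise ValueError("rates must contain at least one positive value")
    c = sum(r * math.cos(2.0 * math.radians(th))
            for r, th in zip(rates, orientations_deg))
    s = sum(r * math.sin(2.0 * math.radians(th))
            for r, th in zip(rates, orientations_deg))
    strength = math.hypot(c, s) / total
    preferred = (math.degrees(math.atan2(s, c)) / 2.0) % 180.0
    return preferred, strength
```

A strength near 0 indicates an untuned neuron, and a strength near 1 indicates a neuron that responds at a single orientation only.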

Fig. 2.

Orientation tuning in primary somatosensory cortex. A: responses of a neuron in area 3b to bars indented into its receptive field at different orientations. B: orientation tuning curves for another neuron in area 3b reveal a consistent preferred orientation, whether the bars are indented (blue) or scanned (orange). C: orientation tuning is also consistent across changes in stimulus amplitude (same neuron as in B) (adapted from Bensmaia et al. 2008).

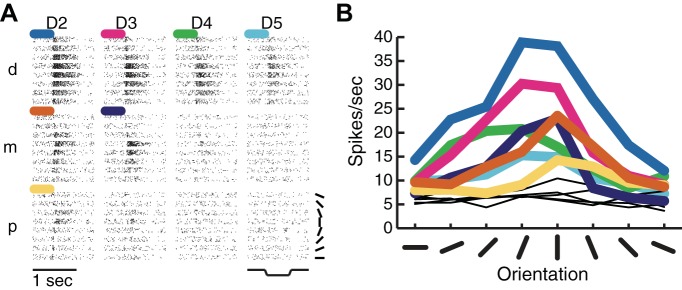

Higher order somatosensory neurons in area 2 (Hyvärinen and Poranen 1978a) and S2 (Fitzgerald et al. 2006b) also exhibit orientation selectivity, with tuning strength that is comparable to that observed in areas 3b and 1 but over much larger skin regions. Indeed, RFs in area 2 and S2 span multiple fingers and can even encompass both hands in their entirety (Fitzgerald et al. 2006a). The orientation preference of higher-order, orientation-tuned neurons tends to be consistent across their RF (Fig. 3). Thus orientation tuning becomes invariant to position in higher order neurons (Fitzgerald et al. 2006b; Thakur et al. 2006), a phenomenon that is also observed in neurons in higher order visual cortex (Ito et al. 1995).

Fig. 3.

Orientation tuning in multidigit second somatosensory cortex (S2) receptive fields. A: responses of an S2 neuron to oriented bars presented to the distal (d), middle (m), and proximal (p) finger pads on digits 2–5 (index to little finger). Colored traces indicate significant orientation tuning. B: orientation tuning curves for each finger pad depicted in A. Orientation preferences tended to be similar across tuned pads (adapted from Fitzgerald et al. 2006).

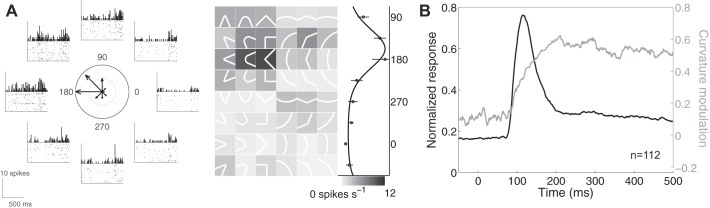

Neurons in area 2 and S2 also exhibit selectivity for the curvature of contours indented into the skin (Yau et al. 2009, 2013a). In this context, curvature denotes the change in the contour's orientation. Thus any contour or edge with nonuniform orientation is curved with levels of curvature ranging from sharp (i.e., abrupt orientation changes) to broad (i.e., gradual orientation changes). Curvature fragments can also be characterized according to their curvature direction, defined as the direction of a vector along the fragment's axis of symmetry that points away from its interior. Somatosensory cortical neurons respond preferentially to contour fragments pointing in a specific direction (Fig. 4A). These explicit neural representations of curvature, a higher order shape feature, are thought to be generated by integrating information from local orientation detectors in areas 3b and 1. Indeed, the degree to which the activity of individual neurons differentiates between curvature directions grows gradually (Fig. 4B). This dynamic suggests that curvature representations emerge from network interactions (Yau et al. 2013a), also thought to be necessary for visual curvature synthesis (Yau et al. 2013b). Because curvature direction preferences are consistent across multiple RF locations (Yau et al. 2009, 2013a), curvature tuning in area 2 and S2 neurons, like orientation tuning, is tolerant to changes in stimulus position.

Fig. 4.

Curvature direction tuning in somatosensory cortex. A, left: responses of a neuron in area 2 to curvature fragments (2D angles and arcs) sorted by curvature direction. A, right: gray scale indicates the average response rates for angles and arcs. This neuron responds best to curvature fragments pointing leftward (135–180°). B: time courses of normalized spiking activity (black) and curvature signaling (gray) in a population of neurons in area 2. Curvature modulation is computed by taking the difference between the maximum and minimum responses and normalizing it by the sum of these. Population activity peaks relatively quickly while curvature signals build gradually and peak more slowly (adapted from Yau et al. 2013a).
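The curvature modulation measure described in the caption, the difference between the maximum and minimum responses divided by their sum, can be written out as a short sketch. The function names and the layout of the input (one list of binned rates per curvature direction) are assumptions made for illustration.

```python
def curvature_modulation(rates_by_direction):
    """(max - min) / (max + min) across curvature directions."""
    r_max = max(rates_by_direction)
    r_min = min(rates_by_direction)
    if r_max + r_min == 0:
        return 0.0  # no response at all, so no modulation is defined
    return (r_max - r_min) / (r_max + r_min)


def modulation_time_course(rates):
    """Apply the index in each time bin.

    rates[d][t] is the response to curvature direction d in time bin t.
    """
    n_bins = len(rates[0])
    return [curvature_modulation([row[t] for row in rates])
            for t in range(n_bins)]
```

The index is 0 when all directions evoke the same response and approaches 1 when the least effective direction evokes almost none.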

In sum, the transformation of 2D shape representations in the somatosensory system is similar to that in the ventral visual pathway: isomorphic spatial representations in the retina give rise to increasingly complex feature tuning that is increasingly invariant to other properties of the stimulus, such as its size and position (Connor et al. 2007). These remarkable similarities between visual and tactile shape processing suggest that the two systems implement analogous neural computations, as has been shown to be the case for motion processing (Pack and Bensmaia 2015; Pei and Bensmaia 2014; Pei et al. 2010, 2011).

Dynamic Spatial Filtering as a Mechanism for Shape Feature Selectivity

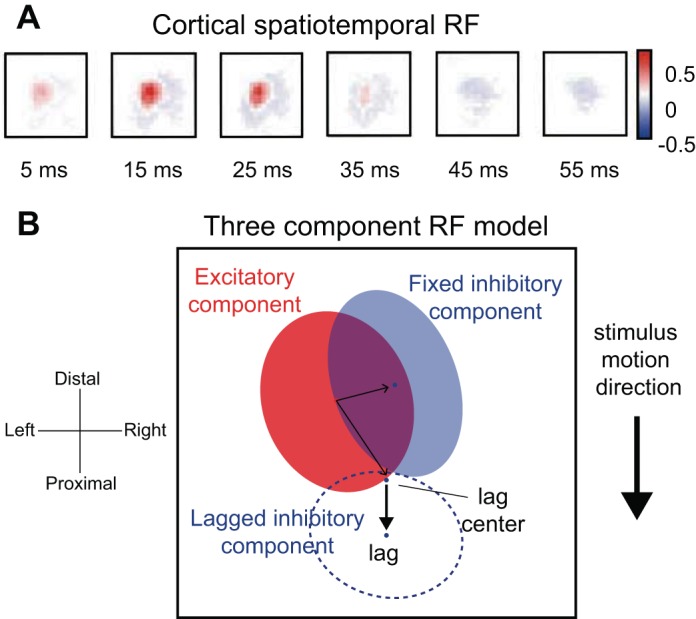

One way to describe the neuronal response properties that lead to feature selectivity is to characterize the dynamic spatial structure of the neuron's RF. The dominant feature in the RFs of mechanoreceptive afferents tends to be a small excitatory region (Sripati et al. 2006b; Vega-Bermudez and Johnson 1999). In contrast, RFs of neurons in area 3b typically comprise a central excitatory region flanked by one or more inhibitory ones (DiCarlo et al. 1998). Furthermore, this spatial structure evolves over time, on millisecond time scales (Fig. 5A); for example, the central excitatory subfield is replaced by an inhibitory field (Gardner and Costanzo 1980; Sripati et al. 2006b). The spatiotemporal RFs (STRFs) of area 3b neurons (DiCarlo and Johnson 2000) can be described with three linear components: an excitatory core, inhibitory regions that flank the excitatory core, and replacing inhibition that overlaps the excitatory core after some delay (Fig. 5B). Although linear models offer adequate characterizations of RFs, models that incorporate quadratic components provide substantially improved predictions of neuronal responses (Thakur et al. 2012). It is important to note that the RFs of SA1 afferents, mapped with random dot patterns or spatiotemporal indentations, exhibit properties that, at first glance, resemble surround inhibition. However, this so-called “surround suppression” is a product of skin mechanics: the presence of an indentation just outside of the excitatory region of the RF decreases the forces exerted by an indentation in the hot-spot, thereby decreasing its effectiveness (Sripati et al. 2006b). Additionally, the STRFs of both SA1 and RA afferents exhibit what resembles replacing inhibition (Sripati et al. 2006b) but is simply neural refractoriness. Although some of the inhibition observed in cortical STRFs is undoubtedly inherited from these inhibition-like peripheral response properties, the increased degree of inhibition with respect to excitation in cortex, the dynamics of inhibition in cortex, and the differences in the properties of inhibition in areas 3b and 1 imply that a substantial component of the inhibition in cortical STRFs is of intracortical origin (Sripati et al. 2006b).
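The three-component description can be made concrete with a toy model. The sketch below sums an excitatory Gaussian, a fixed inhibitory Gaussian, and a lagged inhibitory Gaussian whose center depends on scan direction. All weights, widths, offsets, the 40 mm/s speed, and the 30 ms lag are illustrative assumptions, as is the convention that the lagged component is displaced along the scan direction by speed times lag; isotropic Gaussians stand in for elliptical regions to keep the example short.

```python
import math


def gaussian(x, y, cx, cy, sigma):
    """Unnormalized isotropic 2D Gaussian centered on (cx, cy)."""
    return math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))


def lagged_center(scan_dir_deg, speed_mm_s, lag_s):
    """Center of the lagged component in the RF map (assumed convention)."""
    d = math.radians(scan_dir_deg)
    shift_mm = speed_mm_s * lag_s
    return shift_mm * math.cos(d), shift_mm * math.sin(d)


def three_component_rf(x, y, scan_dir_deg, speed_mm_s=40.0, lag_s=0.03):
    """RF value at (x, y) in mm, with the excitatory core at the origin."""
    excitation = 1.0 * gaussian(x, y, 0.0, 0.0, 1.0)
    # Fixed inhibition: same location for every scan direction.
    fixed_inhibition = 0.5 * gaussian(x, y, -1.5, 0.0, 1.2)
    # Lagged inhibition: location follows the scan direction.
    lx, ly = lagged_center(scan_dir_deg, speed_mm_s, lag_s)
    lagged_inhibition = 0.5 * gaussian(x, y, lx, ly, 1.0)
    return excitation - fixed_inhibition - lagged_inhibition
```

Evaluating the function on a grid for several scan directions shows the lagged inhibitory lobe moving around the excitatory core while the fixed lobe stays in place.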

Fig. 5.

Spatiotemporal receptive fields (STRFs). A: STRF estimated for a neuron in area 1 using a modified form of linear regression similar to reverse correlation. This STRF comprises a central excitatory region, fixed inhibition, and lagged inhibition on a single finger. Color bar indicates pixel intensities in spikes per second per micrometer. B: 3-component model that includes fixed excitatory (red), fixed inhibitory (solid blue), and lagged inhibitory components (dashed blue). The sum of the elliptical regions yields the full STRF. The centers of the fixed and lagged inhibitory components are indicated by the blue dots. For RFs estimated from responses to moving dot stimuli (see DiCarlo and Johnson, 2000), the location of the lagged inhibitory component, with respect to the excitatory region, depends on the direction in which the stimulus is scanned (thick black arrow). The location of the fixed inhibitory component remains unchanged over motion directions (adapted from Sripati et al. 2006b).

These RF descriptions account for several aspects of the feature selectivity and invariance that emerge along the somatosensory pathway. First, the geometry of the excitatory and inhibitory subfields accounts, at least in part, for the orientation selectivity of S1 neurons (Bensmaia et al. 2008a; DiCarlo et al. 1998), as is the case for simple cells in area V1 (Hubel and Wiesel 1968; Ringach 2002). Second, the opponency of excitatory and inhibitory subfields accounts for the observation that S1 responses are independent of the number of simultaneous contacts within their RF (Thakur et al. 2012), which stands in contrast to those of peripheral afferents, whose response rates increase logarithmically with stimulation density (Sripati et al. 2006b). Third, the presence of a lagged inhibitory field causes neural responses to the spatial features of a stimulus to be relatively tolerant to changes in scanning velocity (DiCarlo and Johnson 2002). Fourth, the position of the delayed inhibitory field relative to the excitatory core may underlie, to some extent, S1 directional selectivity (DiCarlo and Johnson 2000; Pack and Bensmaia 2015; Pei et al. 2010).

While STRFs are similar in areas 3b and 1, such linear representations of RFs capture significantly less variance in the responses of neurons in area 1 than in area 3b (Sripati et al. 2006b), consistent with the hypothesis that neurons in area 1 encode more complex and invariant spatial features. For instance, orientation tuning strength is comparable in the most selective neurons in areas 3b and 1 (Bensmaia et al. 2008a). However, orientation tuning in area 1 may be more tolerant to position changes or to movement.

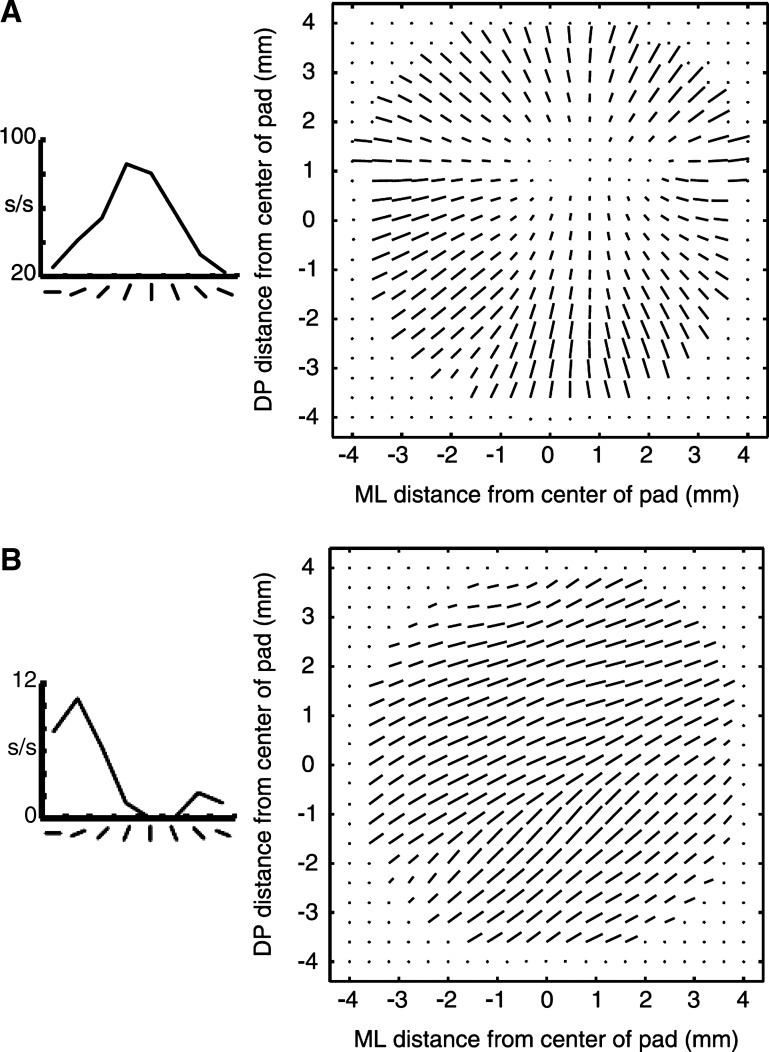

While linear models can capture some of the response properties of S1 neurons, they cannot account for the responses of many S2 neurons (Fig. 6B). Like their counterparts in S1, S2 neurons are tuned for the orientation of bars presented to the centers of finger pads (Fig. 6, orientation response plots). However, these cells differ widely in their responses to oriented bars presented at other locations within the finger pad, as demonstrated using a vector field representation (Fig. 6, vector fields). Vector fields indicate the consistency of orientation tuning across a finger pad, which can provide clues as to the underlying neural mechanisms. For example, a divergent vector field (Fig. 6A), which comprises vectors radiating from a single untuned region of the finger pad, describes the orientation preference of a neuron whose RF consists solely of a central excitatory region, i.e., a “hot spot” centered on the untuned region: a bar at any location will evoke a response if it contacts the hot spot. In contrast, vector fields with relatively uniform vector directions (Fig. 6B) reveal position-tolerant orientation tuning, which cannot be easily explained by linear mechanisms (Thakur et al. 2006).
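The divergent case can be reproduced with a toy simulation. The sketch assumes a single excitatory hot spot at the origin whose response falls off as a Gaussian of the distance between the hot spot and the nearest point on the bar; the bar length, falloff width, and function names are hypothetical and are not taken from the cited study.

```python
import math


def distance_to_bar(px, py, cx, cy, theta_deg, length_mm):
    """Shortest distance from point (px, py) to a bar centered on (cx, cy)."""
    t = math.radians(theta_deg)
    ux, uy = math.cos(t), math.sin(t)
    dx, dy = px - cx, py - cy
    half = length_mm / 2.0
    # Project the point onto the bar axis and clamp to the bar's extent.
    along = max(-half, min(half, dx * ux + dy * uy))
    return math.hypot(dx - along * ux, dy - along * uy)


def hot_spot_rate(cx, cy, theta_deg, length_mm=10.0, sigma_mm=0.5):
    """Response of a neuron with one hot spot at the origin (toy model)."""
    d = distance_to_bar(0.0, 0.0, cx, cy, theta_deg, length_mm)
    return math.exp(-d * d / (2.0 * sigma_mm ** 2))


def preference_vector(cx, cy, orientations_deg):
    """Preferred orientation (deg) and tuning strength for bars at (cx, cy)."""
    rates = [hot_spot_rate(cx, cy, th) for th in orientations_deg]
    total = sum(rates)
    if total == 0:
        return 0.0, 0.0
    c = sum(r * math.cos(2.0 * math.radians(th))
            for r, th in zip(rates, orientations_deg))
    s = sum(r * math.sin(2.0 * math.radians(th))
            for r, th in zip(rates, orientations_deg))
    preferred = (math.degrees(math.atan2(s, c)) / 2.0) % 180.0
    return preferred, math.hypot(c, s) / total
```

Under these assumptions, bars centered away from the hot spot evoke the strongest response when they point toward it, so the preferred orientations radiate from the hot spot, and bars centered on the hot spot itself evoke the same response at every orientation.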

Fig. 6.

Orientation tuning and vector fields in S2. A and B, left: response rates of two S2 neurons to bars presented to the center of a finger pad at different orientations. A and B, right: vector fields indicate estimated orientation preference (vector direction) and tuning strength (vector magnitude) across a grid of positions spanning a single finger pad. Note that the vector fields are constructed from neural responses to a large bar stimulus rotated to 1 of 8 orientations and indented into the distal fingerpad at multiple locations per orientation. Response functions and vector fields for 2 neurons are depicted. Although the neurons each exhibit clear orientation tuning for bars presented at the center of the finger pad, their vector fields differ dramatically, reflecting distinct orientation tuning mechanisms. A: divergent vector field, which can be explained by a linear RF model comprising a single excitatory field. B: invariant vector field, which cannot be accounted for with a linear RF model (adapted from Thakur et al. 2006).

Interactions Between Proprioceptive and Cutaneous Signals Support 3D Shape Processing

A unique aspect of the somatosensory system is that it contains a deformable sensory sheet. That is, the relative positions of cutaneous mechanoreceptors in 3D space depend on the conformation of the hand. Thus, while the perception of 2D shape can be achieved solely from cutaneous signals, haptic perception of 3D shape requires combining cutaneous signals with proprioceptive signals that track the hand's movements and posture (Berryman et al. 2006; Goodwin and Wheat 2004; Hsiao 2008; Klatzky et al. 1993; Pont et al. 1999). Hand proprioception relies on several types of mechanoreceptors (see Proske and Gandevia 2012 for a review), including muscle spindles (Cordo et al. 1995, 2002; Winter et al. 2005; Wise et al. 1999), Golgi tendon organs (Appenteng and Prochazka 1984; Houk and Henneman 1967; Houk and Simon 1967; Matthews and Simmonds 1974; Proske and Gregory 2002; Roll et al. 1989), joint receptors (Burgess and Clark 1969), and SA2 afferents innervating the skin of the hand (Edin 1992; Edin and Abbs 1991; Edin and Johansson 1995). Proprioceptive signals carried by these different afferent populations are transmitted to somatosensory cortex directly along the dorsal column-medial lemniscal pathway (Mountcastle 1984; Poggio and Mountcastle 1960) and possibly indirectly along the cuneocerebellar tract, passing through the external cuneate nucleus (Akintunde and Eisenman 1994; Campbell et al. 1974; Cerminara et al. 2003; Davidoff 1989; Huang et al. 2013; McCurdy et al. 1998; Quy et al. 2011), via the cerebellum (Blakemore et al. 1999).

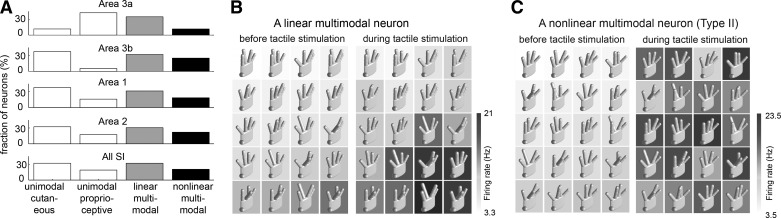

According to the traditional model of somatosensory cortex, proprioceptive signals project to area 3a and cutaneous signals to areas 3b and 1 (Iwamura et al. 1995; Kalaska 1994; Krubitzer et al. 2004; Porter and Izraeli 1993) before these are integrated in higher order areas like area 2 (Porter and Izraeli 1993) and S2 (Fitzgerald et al. 2004). However, passive limb movements and postural changes alone can activate areas 3b and 1 (Ageranioti-Belanger and Chapman 1992; Chapman and Ageranioti-Belanger 1991; Rincon-Gonzalez et al. 2011) and cutaneous stimulation alone can evoke responses in area 3a (Cohen et al. 1994; Kim et al. 2015; Krubitzer et al. 2004; Prud'homme et al. 1994). Thus all S1 areas may in principle encode both proprioceptive and cutaneous information (Fig. 7A) (Kim et al. 2015).

Fig. 7.

Cutaneous, proprioceptive, and multimodal responses in S1 (adapted from Kim et al. 2015). A: population distributions of unimodal and multimodal neurons across S1. B, left: linear multimodal neuron whose responses are modulated by hand posture before cutaneous stimulation. B, right: cutaneous stimulation caused uniform increases in response rates over all hand postures. C: multimodal nonlinear neuron whose responses are modulated by hand posture before cutaneous stimulation (left) and by cutaneous stimulation (right). The response patterns over postures differ between the baseline and cutaneous conditions.

Hand posture modulates neural activity in all S1 areas, as might be expected given that the skin is stretched or compressed during finger movements (Chapman and Ageranioti-Belanger 1991; Costanzo and Gardner 1981; Gardner and Costanzo 1981; Iwamura et al. 1993; Kalaska 1994; Kalaska et al. 1998; Krubitzer et al. 2004; Nelson et al. 1991). Not surprisingly, then, S1 neurons exhibit a wide repertoire of proprioceptive responses (Kim et al. 2015). Some neurons encode the angular position of a single joint while others encode complex hand postures. Many neurons, however, exhibit both proprioceptive and cutaneous responses (multimodal neurons in Fig. 7A). In most multimodal neurons, rate modulations related to hand conformation are superimposed on rate modulations related to cutaneous stimulation (Fig. 7B). In some multimodal neurons, cutaneous and proprioceptive signals interact in complex, nonlinear ways (Fig. 7C). For example, the response to cutaneous stimulation is modulated by hand conformation or the response to hand conformation is modulated by cutaneous stimulation (Fig. 7C).
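The distinction between linear and nonlinear multimodal neurons can be illustrated with a simple additive-model check. The Python sketch below is not the analysis of Kim et al. (2015); it assumes a hypothetical table of mean firing rates (one row per hand posture, one column for the baseline condition and one for cutaneous stimulation) and asks how much of the response variance is left unexplained when posture and stimulation effects are simply summed. The function names and example rates are invented for illustration.

```python
def additive_fit(rates):
    """Fit rate[i][j] ~ posture effect i + condition effect j (no interaction).

    rates[i][j]: mean rate for posture i under condition j
    (e.g., j = 0 baseline, j = 1 cutaneous stimulation).
    """
    n_rows, n_cols = len(rates), len(rates[0])
    grand = sum(sum(row) for row in rates) / (n_rows * n_cols)
    row_means = [sum(row) / n_cols for row in rates]
    col_means = [sum(rates[i][j] for i in range(n_rows)) / n_rows
                 for j in range(n_cols)]
    return [[row_means[i] + col_means[j] - grand for j in range(n_cols)]
            for i in range(n_rows)]

def interaction_fraction(rates):
    """Fraction of response variance not captured by the additive fit."""
    fitted = additive_fit(rates)
    values = [r for row in rates for r in row]
    grand = sum(values) / len(values)
    ss_total = sum((r - grand) ** 2 for r in values)
    ss_resid = sum((rates[i][j] - fitted[i][j]) ** 2
                   for i in range(len(rates)) for j in range(len(rates[0])))
    return ss_resid / ss_total if ss_total > 0 else 0.0

# Hypothetical neurons: four hand postures x (baseline, cutaneous) rates.
linear_neuron = [[10, 18], [15, 23], [20, 28], [12, 20]]     # uniform +8 shift
nonlinear_neuron = [[10, 30], [15, 16], [20, 22], [12, 40]]  # posture-dependent gain

linear_score = interaction_fraction(linear_neuron)        # essentially zero
nonlinear_score = interaction_fraction(nonlinear_neuron)  # clearly above zero
```

Under these assumptions, a neuron whose cutaneous response is a uniform shift across postures yields an interaction fraction near zero, whereas a neuron whose posture profile changes with stimulation yields a substantially larger value.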

The dynamics of multimodal responses may provide clues as to the underlying neural mechanisms. In some multimodal neurons, the integration of proprioceptive and cutaneous signals is evident immediately following cutaneous stimulation and/or movement (Kim et al. 2015), consistent with a processing scheme in which cutaneous and proprioceptive interactions build from feedforward computations. In other multimodal neurons, this integration emerges more gradually (Kim et al. 2015), suggesting the involvement of network interactions. The faster multimodal signal may provide an initial estimate of 3D shape to guide object manipulation, while the slower one may be involved in object perception, a process that requires time (Klatzky and Lederman 2011).

Tactile Shape Processing in the Human Brain

Consistent with the monkey neurophysiological evidence, tactile spatial form processing results in robust activations in human S1 and S2 (also referred to as the parietal operculum). Extensive and overlapping somatosensory brain regions respond to spatial dot patterns (Harada et al. 2004; Li Hegner et al. 2007), oriented gratings (Kitada et al. 2006; Van Boven et al. 2005), embossed letters (Burton et al. 2008), and abstract shapes (Stilla and Sathian 2008). These neuroimaging results collectively support a model of hierarchical processing within the somatosensory cortical system: in S1, area 2 exhibits substantially greater shape-selective responses, e.g., to surface curvature and 3D objects, compared with areas 3b and 1 (Bodegard et al. 2001). According to the hierarchical processing model, additional recruitment of S2 and PPC reflects the elaboration of shape information following S1 processing (Ostry and Romo 2001). In fact, distributed networks of parietal and frontal brain regions are also coactivated with somatosensory cortex while participants perform tasks involving tactile shape perception (Hegner et al. 2010; Zhang et al. 2005). These association areas may not support sensory processing per se, but rather may mediate memory and executive functions related to performing the perceptual tasks. PPC may also be well suited to mediate the bidirectional exchange of shape information between the somatosensory and visual systems (Deshpande et al. 2008).

Relationship Between Tactile and Visual Shape Processing

We perceive 2D and 3D shape by touch or vision alone. Multiple lines of evidence indicate that tactile and visual shape perception are not only similar but also related. First, visual discrimination performance drops to tactile levels when subjects experience visual stimuli through a limited field of view (Dopjans et al. 2012; Loomis et al. 1991). Second, the ways in which subjects categorize (Gaissert et al. 2010) and confuse (Brusco 2004) visual and tactile shapes are highly correlated. Third, spatial form illusions, like the Müller-Lyer illusion, are experienced in both vision and touch (Mancini et al. 2011). Not surprisingly, then, somatosensory and visual neurons encode shape information in similar ways. These shared neural codes may also be important for facilitating cross talk between the somatosensory and visual systems (Ghazanfar and Schroeder 2006).

The conventional view has been that visual and tactile processing of spatial patterns is comparable after taking into account factors like the number of activated receptors (Phillips et al. 1983) and the blurring caused by skin mechanics (Loomis 1982, 1990). However, a recent study found that visual pattern perception remained superior to touch after carefully equating the visual and tactile stimulus conditions (Cho et al. 2015). Because vision outperforms touch even when peripheral processing is precisely matched, differences in central processing must contribute to the superiority of vision in shape processing. Thus, while vision and touch may exploit similar neural mechanisms for shape processing, those that support vision may be more extensive and refined. Not surprisingly, we typically rely more on vision than touch when perceiving shape information in our environment.

Tactile and visual shape processing are not only based on similar neural codes but may also be supported in part by common neural circuits. Indeed, tactile perception of 2D shapes (Prather et al. 2004) and 3D objects (Amedi et al. 2001; Pietrini et al. 2004; Tal and Amedi 2009) activates regions of inferior temporal cortex and posterior parietal cortex that are typically activated during visual shape processing. Touch-related activity in visual cortex has also been observed in single-unit recordings: under some conditions, orientation-tuned neurons in area V4 respond to oriented bars presented on the skin (Haenny et al. 1988; Maunsell et al. 1991). Although tactile shape responses in visual cortex may in part reflect visual imagery (Lacey and Sathian 2014), neuropsychological evidence from stroke patients suggests that occipital and temporal lobe regions contribute critically to tactile shape perception (Feinberg et al. 1986) and haptic learning of novel objects (James et al. 2006). Similarly, noninvasive brain stimulation targeting regions in visual cortex can influence tactile shape perception (Mancini et al. 2011; Merabet et al. 2004; Yau et al. 2014; Zangaladze et al. 1999). Thus the haptic perception of shape relies on distributed brain networks that extend beyond traditionally defined somatosensory cortex (Pascual-Leone and Hamilton 2001).

Tactile and visual signals interact not only in perceiving object shape but also in guiding object manipulation. PPC is known to contain neurons with multisensory properties (Avillac et al. 2005; Murata et al. 2000; Pouget et al. 2002). Although neural responses in caudal S1 and PPC exhibit some degree of shape selectivity (Gardner et al. 2007a; Iwamura and Tanaka 1978), the activity of many neurons in areas 5 and 7 and the anterior intraparietal area appears to encode information relevant to grasping and manipulating objects (Murata et al. 1997, 2000) rather than object geometry, per se. Indeed, firing rates in PPC increase gradually as monkeys reach for objects, particularly during object approach trajectories when the fingers are preshaped for grasping (Gardner et al. 2007b). In many cells, response magnitude and dynamics are highly similar if the animals grasp at objects of different shapes using similar grasps (Chen et al. 2009). Because activity in PPC peaks at the time of object contact, PPC activity may be particularly suited to serve both sensory and motor functions aimed at providing the animal with feedback concerning the accuracy of its reach and grasp and guidance for error correction (Chen et al. 2009; Gardner et al. 2007b). At the time of contact, visual and tactile feedback concerning the hand's movements relative to the object is brought into register, providing a powerful signal to shape subsequent motor commands. The dense projections between PPC and frontal motor regions provide a clear and direct pathway to support this sensorimotor processing (Andersen and Buneo 2002).

Conclusions

The spatial features of objects that we grasp or manipulate are encoded in the spatial pattern of activation of populations of mechanoreceptive afferents. Information about behaviorally relevant features is then gradually extracted from these neural images along a hierarchical processing pathway that is closely analogous to the corresponding pathway in the visual system. Neurons at early stages of processing encode simple stimulus features, e.g., edge orientation, and their feature selectivity can be explained in terms of the spatial structure of the neurons' receptive fields. At higher processing stages, neurons encode more complex stimulus features, e.g., curvature, and these feature selectivities are increasingly invariant to the position of the stimulus on the hand and cannot be explained in terms of linear receptive fields. Cutaneous signals interact with proprioceptive signals that carry information about hand conformation, and these multimodal interactions are likely critical to haptic stereognosis, the ability to discern the three-dimensional shape of objects. While the main function of tactile shape processing is to support interactions with objects, the underlying neural circuits also enable the identification of objects when vision is unavailable.

GRANTS

S. J. Bensmaia is supported by National Science Foundation Grant IOS-1150209 and National Institute of Neurological Disorders and Stroke Grant NS-082865.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

Author contributions: J.M.Y., S.S.K., P.H.T., and S.J.B. prepared figures; J.M.Y., S.S.K., P.H.T., and S.J.B. drafted manuscript; J.M.Y., S.S.K., P.H.T., and S.J.B. edited and revised manuscript; J.M.Y., S.S.K., P.H.T., and S.J.B. approved final version of manuscript.

REFERENCES

- Ageranioti-Belanger SA, Chapman CE. Discharge properties of neurones in the hand area of primary somatosensory cortex in monkeys in relation to the performance of an active tactile discrimination task. II. Area 2 compared with areas 3b and 1. Exp Brain Res 91: 207–228, 1992.

- Akintunde A, Eisenman LM. External cuneocerebellar projection and Purkinje cell zebrin II bands: a direct comparison of parasagittal banding in the mouse cerebellum. J Chem Neuroanat 7: 75–86, 1994.

- Amedi A, Malach R, Hendler T, Peled S, Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nat Neurosci 4: 324–330, 2001.

- Andersen RA, Buneo CA. Intentional maps in posterior parietal cortex. Annu Rev Neurosci 25: 189–220, 2002.

- Appenteng K, Prochazka A. Tendon organ firing during active muscle lengthening in awake, normally behaving cats. J Physiol 353: 81–92, 1984.

- Avillac M, Deneve S, Olivier E, Pouget A, Duhamel JR. Reference frames for representing visual and tactile locations in parietal cortex. Nat Neurosci 8: 941–949, 2005.

- Bensmaia SJ, Craig JC, Johnson KO. Temporal factors in tactile spatial acuity: evidence for RA interference in fine spatial processing. J Neurophysiol 95: 1783–1791, 2006.

- Bensmaia SJ, Denchev PV, Dammann JF III, Craig JC, Hsiao SS. The representation of stimulus orientation in the early stages of somatosensory processing. J Neurosci 28: 776–786, 2008a.

- Bensmaia SJ, Hsiao SS, Denchev PV, Killebrew JH, Craig JC. The tactile perception of stimulus orientation. Somatosens Mot Res 25: 49–59, 2008b.

- Bensmaia SJ, Manfredi LR. The sense of touch. In: Encyclopedia of Human Behavior, edited by Ramachandran VS. Amsterdam, The Netherlands: Elsevier, 2012.

- Berryman LJ, Yau JM, Hsiao SS. Representation of object size in the somatosensory system. J Neurophysiol 96: 27–39, 2006.

- Birznieks I, Jenmalm P, Goodwin AW, Johansson RS. Directional encoding of fingertip force by human tactile afferents. Acta Physiol Scand 167: A24, 1999.

- Blakemore SJ, Wolpert DM, Frith CD. The cerebellum contributes to somatosensory cortical activity during self-produced tactile stimulation. Neuroimage 10: 448–459, 1999.

- Bliss JC, Katcher MH, Rogers CH, Shepard RP. Optical-to-tactile image conversion for the blind. IEEE Trans Man-Machine Sys MMS-11: 58–65, 1970.

- Bodegard A, Geyer S, Grefkes C, Zilles K, Roland PE. Hierarchical processing of tactile shape in the human brain. Neuron 31: 317–328, 2001.

- Brusco MJ. On the concordance among empirical confusion matrices for visual and tactual letter recognition. Percept Psychophys 66: 392–397, 2004.

- Burgess PR, Clark FJ. Characteristics of knee joint receptors in the cat. J Physiol 203: 317–335, 1969.

- Burton H, Fabri M. Ipsilateral intracortical connections of physiologically defined cutaneous representations in areas 3b and 1 of macaque monkeys: projections in the vicinity of the central sulcus. J Comp Neurol 355: 508–538, 1995.

- Burton H, Fabri M, Alloway KD. Cortical areas within the lateral sulcus connected to cutaneous representations in areas 3b and 1: A revised interpretation of the second somatosensory area in macaque monkeys. J Comp Neurol 355: 539–562, 1995.

- Burton H, Sinclair RJ, Wingert JR, Dierker DL. Multiple parietal operculum subdivisions in humans: tactile activation maps. Somatosens Mot Res 25: 149–162, 2008.

- Campbell SK, Parker TD, Welker W. Somatotopic organization of the external cuneate nucleus in albino rats. Brain Res 77: 1–23, 1974.

- Cerminara NL, Makarabhirom K, Rawson JA. Somatosensory properties of cuneocerebellar neurones in the main cuneate nucleus of the rat. Cerebellum 2: 131–145, 2003.

- Chapman CE, Ageranioti-Belanger SA. Discharge properties of neurones in the hand area of primary somatosensory cortex in monkeys in relation to the performance of an active tactile discrimination task. I. Areas 3b and 1. Exp Brain Res 87: 319–339, 1991.

- Chen J, Reitzen SD, Kohlenstein JB, Gardner EP. Neural representation of hand kinematics during prehension in posterior parietal cortex of the macaque monkey. J Neurophysiol 102: 3310–3328, 2009.

- Cho YJ, Craig JC, Bensmaia SJ. Vision superior to touch in shape perception even with equivalent peripheral input. J Neurophysiol. First published October 28, 2015; doi: 10.1152/jn.00654.2015.

- Cohen DA, Prud'homme MJ, Kalaska JF. Tactile activity in primate primary somatosensory cortex during active arm movements: correlation with receptive field properties. J Neurophysiol 71: 161–172, 1994.

- Connor CE, Brincat SL, Pasupathy A. Transformation of shape information in the ventral pathway. Curr Opin Neurobiol 17: 140–147, 2007.

- Cordo P, Gurfinkel VS, Bevan L, Kerr GK. Proprioceptive consequences of tendon vibration during movement. J Neurophysiol 74: 1675–1688, 1995.

- Cordo PJ, Flores-Vieira C, Verschueren SM, Inglis JT, Gurfinkel V. Position sensitivity of human muscle spindles: single afferent and population representations. J Neurophysiol 87: 1186–1195, 2002.

- Costanzo RM, Gardner EP. Multiple-joint neurons in somatosensory cortex of awake monkeys. Brain Res 214: 321–333, 1981.

- Craig JC. Modes of vibrotactile pattern generation. J Exp Psychol Hum Percept Perform 6: 151–166, 1980.

- Dandekar K, Raju BI, Srinivasan MA. 3-D finite-element models of human and monkey fingertips to investigate the mechanics of tactile sense. J Biomech Eng 125: 682–691, 2003.

- Davidoff RA. The dorsal columns. Neurology 39: 1377–1385, 1989.

- Deshpande G, Hu X, Stilla R, Sathian K. Effective connectivity during haptic perception: a study using Granger causality analysis of functional magnetic resonance imaging data. Neuroimage 40: 1807–1814, 2008.

- DiCarlo JJ, Johnson KO. Receptive field structure in cortical area 3b of the alert monkey. Behav Brain Res 135: 167–178, 2002.

- DiCarlo JJ, Johnson KO. Spatial and temporal structure of receptive fields in primate somatosensory area 3b: effects of stimulus scanning direction and orientation. J Neurosci 20: 495–510, 2000.

- DiCarlo JJ, Johnson KO, Hsiao SS. Structure of receptive fields in area 3b of primary somatosensory cortex in the alert monkey. J Neurosci 18: 2626–2645, 1998.

- Dopjans L, Bulthoff HH, Wallraven C. Serial exploration of faces: comparing vision and touch. J Vis 12: 2012.

- Edin BB. Quantitative analysis of static strain sensitivity in human mechanoreceptors from hairy skin. J Neurophysiol 67: 1105–1113, 1992.

- Edin BB, Abbs JH. Finger movement responses of cutaneous mechanoreceptors in the dorsal skin of the human hand. J Neurophysiol 65: 657–670, 1991.

- Edin BB, Johansson N. Skin strain patterns provide kinaesthetic information to the human central nervous system. J Physiol 487: 243–251, 1995.

- Eickhoff SB, Grefkes C, Zilles K, Fink GR. The somatotopic organization of cytoarchitectonic areas on the human parietal operculum. Cereb Cortex 17: 1800–1811, 2007.

- Eickhoff SB, Schleicher A, Zilles K, Amunts K. The human parietal operculum. I. Cytoarchitectonic mapping of subdivisions. Cereb Cortex 16: 254–267, 2006.

- Feinberg TE, Rothi LJ, Heilman KM. Multimodal agnosia after unilateral left hemisphere lesion. Neurology 36: 864–867, 1986.

- Fitzgerald PJ, Lane JW, Thakur PH, Hsiao SS. Receptive field (RF) properties of the macaque second somatosensory cortex: RF size, shape, and somatotopic organization. J Neurosci 26: 6485–6495, 2006a.

- Fitzgerald PJ, Lane JW, Thakur PH, Hsiao SS. Receptive field properties of the macaque second somatosensory cortex: evidence for multiple functional representations. J Neurosci 24: 11193–11204, 2004.

- Fitzgerald PJ, Lane JW, Thakur PH, Hsiao SS. Receptive field properties of the macaque second somatosensory cortex: representation of orientation on different finger pads. J Neurosci 26: 6473–6484, 2006b.

- Friedman DP, Jones EG, Burton H. Representation pattern in the second somatic sensory area of the monkey cerebral cortex. J Comp Neurol 192: 21–41, 1980.

- Friedman DP, Murray EA, O'Neill JB, Mishkin M. Cortical connections of the somatosensory fields of the lateral sulcus of macaques: Evidence for a corticolimbic pathway for touch. J Comp Neurol 252: 323–347, 1986.

- Gaissert N, Wallraven C, Bulthoff HH. Visual and haptic perceptual spaces show high similarity in humans. J Vis 10: 2, 2010.

- Gardner EP. Somatosensory cortical mechanisms of feature detection in tactile and kinesthetic discrimination. Can J Physiol Pharmacol 66: 439–454, 1988.

- Gardner EP, Babu KS, Ghosh S, Sherwood A, Chen J. Neurophysiology of prehension. III. Representation of object features in posterior parietal cortex of the macaque monkey. J Neurophysiol 98: 3708–3730, 2007a.

- Gardner EP, Babu KS, Reitzen SD, Ghosh S, Brown AS, Chen J, Hall AL, Herzlinger MD, Kohlenstein JB, Ro JY. Neurophysiology of prehension. I. Posterior parietal cortex and object-oriented hand behaviors. J Neurophysiol 97: 387–406, 2007b.

- Gardner EP, Costanzo RM. Properties of kinesthetic neurons in somatosensory cortex of awake monkeys. Brain Res 214: 301–319, 1981.

- Gardner EP, Costanzo RM. Temporal integration of multiple-point stimuli in primary somatosensory cortical receptive fields of alert monkeys. J Neurophysiol 43: 444–468, 1980.

- Gardner EP, Palmer CI. Simulation of motion on the skin. I. Receptive fields and temporal frequency coding by cutaneous mechanoreceptors of OPTACON pulses delivered to the hand. J Neurophysiol 62: 1410–1436, 1989.

- Ghazanfar AA, Schroeder CE. Is neocortex essentially multisensory? Trends Cogn Sci 10: 278–285, 2006.

- Gollisch T, Meister M. Eye smarter than scientists believed: neural computations in circuits of the retina. Neuron 65: 150–164, 2010.

- Goodwin AW, Browning AS, Wheat HE. Representation of curved surfaces in responses of mechanoreceptive afferent fibers innervating the monkey's fingerpad. J Neurosci 15: 798–810, 1995.

- Goodwin AW, Wheat HE. Sensory signals in neural populations underlying tactile perception and manipulation. Annu Rev Neurosci 27: 53–77, 2004.

- Haenny PE, Maunsell JH, Schiller PH. State dependent activity in monkey visual cortex II. Retinal and extraretinal factors in V4. Exp Brain Res 69: 245–259, 1988.

- Harada T, Saito DN, Kashikura K, Sato T, Yonekura Y, Honda M, Sadato N. Asymmetrical neural substrates of tactile discrimination in humans: a functional magnetic resonance imaging study. J Neurosci 24: 7524–7530, 2004.

- Hegner YL, Lee Y, Grodd W, Braun C. Comparing tactile pattern and vibrotactile frequency discrimination: a human fMRI study. J Neurophysiol 103: 3115–3122, 2010.

- Houk J, Henneman E. Responses of Golgi tendon organs to active contractions of the soleus muscle of the cat. J Neurophysiol 30: 466–481, 1967.

- Houk J, Simon W. Responses of Golgi tendon organs to forces applied to muscle tendon. J Neurophysiol 30: 1466–1481, 1967.

- Hsiao S. Central mechanisms of tactile shape perception. Curr Opin Neurobiol 18: 418–424, 2008.

- Huang CC, Sugino K, Shima Y, Guo C, Bai S, Mensh BD, Nelson SB, Hantman AW. Convergence of pontine and proprioceptive streams onto multimodal cerebellar granule cells. Elife 2: e00400, 2013.

- Hubel DH, Wiesel TN. Receptive fields and functional architecture of monkey striate cortex. J Physiol 195: 215–243, 1968.

- Hyvärinen J, Poranen A. Movement-sensitive and direction and orientation-selective cutaneous receptive fields in the hand area of the post-central gyrus in monkeys. J Physiol 283: 523–537, 1978a.

- Hyvärinen J, Poranen A. Receptive field integration and submodality convergence in the hand area of the post-central gyrus of the alert monkey. J Physiol 283: 539–556, 1978b.

- Ito M, Tamura H, Fujita I, Tanaka K. Size and position invariance of neuronal responses in monkey inferotemporal cortex. J Neurophysiol 73: 218–226, 1995.

- Iwamura Y, Tanaka M. Postcentral neurons in hand region of area 2: their possible role in the form discrimination of tactile objects. Brain Res 150: 662–666, 1978.

- Iwamura Y, Tanaka M, Hikosaka O, Sakamoto M. Postcentral neurons of alert monkeys activated by the contact of the hand with objects other than the monkey's own body. Neurosci Lett 186: 127–130, 1995.

- Iwamura Y, Tanaka M, Sakamoto M, Hikosaka O. Rostrocaudal gradients in the neuronal receptive field complexity in the finger region of the alert monkey's postcentral gyrus. Exp Brain Res 92: 360–368, 1993.

- James TW, James KH, Humphrey GK, Goodale MA. Do Visual and Tactile Object Representations Share the Same Neural Substrate? Mahwah, NJ: Lawrence Erlbaum, 2006.

- Jenmalm P, Birznieks I, Goodwin AW, Johansson RS. Influence of object shape on responses of human tactile afferents under conditions characteristic of manipulation. Eur J Neurosci 18: 164–176, 2003.

- Jenmalm P, Birznieks I, Johansson RS, Goodwin AW. Encoding of object curvature by human fingertip tactile afferents. J Neurosci 21: 8222–8237, 2001.

- Johansson RS. Tactile sensibility in the human hand: receptive field characteristics of mechanoreceptive units in the glabrous skin area. J Physiol 281: 101–123, 1978.

- Johansson RS, Birznieks I. First spikes in ensembles of human tactile afferents code complex spatial fingertip events. Nat Neurosci 7: 170–177, 2004.

- Johansson RS, Flanagan JR. Coding and use of tactile signals from the fingertips in object manipulation tasks. Nat Rev Neurosci 10: 345–359, 2009.

- Johansson RS, Vallbo AB. Tactile sensibility in the human hand: relative and absolute densities of four types of mechanoreceptive units in glabrous skin. J Physiol 286: 283–300, 1979.

- Johnson KO, Lamb GD. Neural mechanisms of spatial tactile discrimination: neural patterns evoked by braille-like dot patterns in the monkey. J Physiol 310: 117–144, 1981.

- Johnson KO, Phillips JR. Tactile spatial resolution. I. Two-point discrimination, gap detection, grating resolution, and letter recognition. J Neurophysiol 46: 1177–1192, 1981.

- Jones EG. Lack of collateral thalamocortical projections to fields of the first somatic sensory cortex in monkeys. Exp Brain Res 52: 375–384, 1983.

- Jones EG, Burton H. Areal differences in the laminar distribution of thalamic afferents in cortical fields of the insular, parietal and temporal regions of primates. J Comp Neurol 168: 197–248, 1976.

- Jones EG, Friedman DP. Projection pattern of functional components of thalamic ventrobasal complex upon monkey somatic sensory cortex. J Neurophysiol 48: 521–544, 1982.

- Jorntell H, Bengtsson F, Geborek P, Spanne A, Terekhov AV, Hayward V. Segregation of tactile input features in neurons of the cuneate nucleus. Neuron 83: 1444–1452, 2014.

- Kaas JH, Collins CE. The organization of somatosensory cortex in anthropoid primates. Adv Neurol 93: 57–67, 2003.

- Kalaska JF. Central neural mechanisms of touch and proprioception. Can J Physiol Pharmacol 72: 542–545, 1994.

- Kalaska JF, Caminiti R, Georgopoulos AP. Cortical mechanisms related to the direction of two-dimensional arm movements: Relations in parietal area 5 and comparison with motor cortex. Exp Brain Res 51: 247–260, 1983.

- Kalaska JF, Sergio LE, Cisek P. Cortical control of whole-arm motor tasks. Novartis Found Symp 218: 176–190; discussion 190–201, 1998.

- Khalsa PS, Friedman RM, Srinivasan MA, LaMotte RH. Encoding of shape and orientation of objects indented into the monkey fingerpad by populations of slowly and rapidly adapting mechanoreceptors. J Neurophysiol 79: 3238–3251, 1998.

- Kim SS, Gomez-Ramirez M, Thakur PH, Hsiao SS. Multimodal interactions between proprioceptive and cutaneous signals in primary somatosensory cortex. Neuron 86: 555–566, 2015.

- Kitada R, Kito T, Saito DN, Kochiyama T, Matsumura M, Sadato N, Lederman SJ. Multisensory activation of the intraparietal area when classifying grating orientation: a functional magnetic resonance imaging study. J Neurosci 26: 7491–7501, 2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Klatzky RL, Lederman SJ. Haptic object perception: spatial dimensionality and relation to vision. Philos Trans R Soc Lond B Biol Sci 366: 3097–3105, 2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Klatzky RL, Loomis JM, Lederman SJ, Wake H, Fujita N. Haptic identification of objects and their depictions. Percept Psychophys 54: 170–178, 1993. [DOI] [PubMed] [Google Scholar]

- Krubitzer L, Huffman KJ, Disbrow E, Recanzone G. Organization of area 3a in macaque monkeys: contributions to the cortical phenotype. J Comp Neurol 471: 97–111, 2004. [DOI] [PubMed] [Google Scholar]

- Lacey S, Sathian K. Visuo-haptic multisensory object recognition, categorization, and representation. Front Psychol 5: 730, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- LaMotte RH, Friedman RM, Lu C, Khalsa PS, Srinivasan MA. Raised object on a planar surface stroked across the fingerpad: Responses of cutaneous mechanoreceptors to shape and orientation. J Neurophysiol 80: 2446–2466, 1998. [DOI] [PubMed] [Google Scholar]

- LaMotte RH, Srinivasan MA. Neural encoding of shape: responses of cutaneous mechanoreceptors to a wavy surface stroked across the monkey fingerpad. J Neurophysiol 76: 3787–3797, 1996. [DOI] [PubMed] [Google Scholar]

- Li Hegner Y, Lutzenberger W, Leiberg S, Braun C. The involvement of ipsilateral temporoparietal cortex in tactile pattern working memory as reflected in beta event-related desynchronization. Neuroimage 37: 1362–1370, 2007. [DOI] [PubMed] [Google Scholar]

- Loomis JM. Analysis of tactile and visual confusion matrices. Percept Psychophys 31: 41–52, 1982. [DOI] [PubMed] [Google Scholar]

- Loomis JM. A model of character recognition and legibility. J Exp Psychol Hum Percept Perform 16: 106–120, 1990. [DOI] [PubMed] [Google Scholar]

- Loomis JM, Klatzky RL, Lederman SJ. Similarity of tactual and visual picture recognition with limited field of view. Perception 20: 167–177, 1991. [DOI] [PubMed] [Google Scholar]

- Mancini F, Bolognini N, Bricolo E, Vallar G. Cross-modal processing in the occipito-temporal cortex: a TMS study of the Müller-Lyer illusion. J Cogn Neurosci 23: 1987–1997, 2011. [DOI] [PubMed] [Google Scholar]

- Matthews PB, Simmonds A. Sensations of finger movement elicited by pulling upon flexor tendons in man. J Physiol 239: 27P–28P, 1974. [PubMed] [Google Scholar]

- Maunsell JH, Sclar G, Nealey TA, DePriest DD. Extraretinal representations in area V4 in the macaque monkey. Vis Neurosci 7: 561–573, 1991. [DOI] [PubMed] [Google Scholar]

- McCurdy ML, Houk JC, Gibson AR. Organization of ascending pathways to the forelimb area of the dorsal accessory olive in the cat. J Comp Neurol 392: 115–133, 1998. [PubMed] [Google Scholar]

- Merabet L, Thut G, Murray B, Andrews J, Hsiao S, Pascual-Leone A. Feeling by sight or seeing by touch? Neuron 42: 173–179, 2004. [DOI] [PubMed] [Google Scholar]

- Mountcastle VB. Central nervous system mechanisms in mechanoreceptive sensibility. In: Handbook of Physiology. The Nervous System. Sensory Processes, edited by Geiger SR, Darian-Smith I, Brookhart JM, Mountcastle VB. Bethesda, MD: Am. Physiol. Soc., 1984, sect. 1, vol. 3, p. 789–878. [Google Scholar]

- Mountcastle VB, Lynch JC, Georgopoulos AP, Sakata H, Acuna C. Posterior parietal association cortex of the monkey: command functions for operations within extrapersonal space. J Neurophysiol 38: 871–908, 1975. [DOI] [PubMed] [Google Scholar]

- Murata A, Fadiga L, Fogassi L, Gallese V, Raos V, Rizzolatti G. Object representation in the ventral premotor cortex (area F5) of the monkey. J Neurophysiol 78: 2226–2230, 1997. [DOI] [PubMed] [Google Scholar]

- Murata A, Gallese V, Luppino G, Kaseda M, Sakata H. Selectivity for the shape, size, and orientation of objects for grasping in neurons of monkey parietal area AIP. J Neurophysiol 83: 2580–2601, 2000. [DOI] [PubMed] [Google Scholar]

- Nelson RJ, Li B, Douglas VD. Sensory response enhancement and suppression of monkey primary somatosensory cortical neurons. Brain Res Bull 27: 751–757, 1991. [DOI] [PubMed] [Google Scholar]

- Ostry DJ, Romo R. Tactile shape processing. Neuron 31: 173–174, 2001. [DOI] [PubMed] [Google Scholar]

- Pack CC, Bensmaia SJ. Seeing and feeling motion: canonical computations in motion processing. PLoS Biol 13: e1002271, 2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Paré M, Smith AM, Rice FL. Distribution and terminal arborizations of cutaneous mechanoreceptors in the glabrous finger pads of the monkey. J Comp Neurol 445: 347–359, 2002. [DOI] [PubMed] [Google Scholar]

- Pascual-Leone A, Hamilton R. The metamodal organization of the brain. Prog Brain Res 134: 427–445, 2001. [DOI] [PubMed] [Google Scholar]

- Paul RL, Merzenich MM, Goodman H. Representation of slowly and rapidly adapting cutaneous mechanoreceptors of the hand in Brodmann's areas 3 and 1 of Macaca mulatta. Brain Res 36: 229–249, 1972. [DOI] [PubMed] [Google Scholar]

- Pei YC, Bensmaia SJ. The neural basis of tactile motion perception. J Neurophysiol 112: 3023–3032, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pei YC, Hsiao SS, Craig JC, Bensmaia SJ. Neural mechanisms of tactile motion integration in somatosensory cortex. Neuron 69: 536–547, 2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pei YC, Hsiao SS, Craig JC, Bensmaia SJ. Shape invariant coding of motion direction in somatosensory cortex. PLoS Biol 8: e1000305, 2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Phillips JR, Johansson RS, Johnson KO. Representation of braille characters in human nerve fibres. Exp Brain Res 81: 589–592, 1990. [DOI] [PubMed] [Google Scholar]

- Phillips JR, Johansson RS, Johnson KO. Responses of human mechanoreceptive afferents to embossed dot arrays scanned across fingerpad skin. J Neurosci 12: 827–839, 1992. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Phillips JR, Johnson KO. Tactile spatial resolution. II. Neural representation of bars, edges, and gratings in monkey primary afferents. J Neurophysiol 46: 1192–1203, 1981a. [DOI] [PubMed] [Google Scholar]

- Phillips JR, Johnson KO. Tactile spatial resolution. III. A continuum mechanics model of skin predicting mechanoreceptor responses to bars, edges, and gratings. J Neurophysiol 46: 1204–1225, 1981b. [DOI] [PubMed] [Google Scholar]

- Phillips JR, Johnson KO, Browne HM. A comparison of visual and two modes of tactual letter resolution. Percept Psychophys 34: 243–249, 1983. [DOI] [PubMed] [Google Scholar]

- Phillips JR, Johnson KO, Hsiao SS. Spatial pattern representation and transformation in monkey somatosensory cortex. Proc Natl Acad Sci USA 85: 1317–1321, 1988. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pietrini P, Furey ML, Ricciardi E, Gobbini MI, Wu WH, Cohen L, Guazzelli M, Haxby JV. Beyond sensory images: object-based representation in the human ventral pathway. Proc Natl Acad Sci USA 101: 5658–5663, 2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Poggio GF, Mountcastle VB. A study of the functional contributions of the lemniscal and spinothalamic systems to somatic sensibility. Central nervous mechanisms in pain. Bull Johns Hopkins Hosp 106: 266–316, 1960. [PubMed] [Google Scholar]

- Pont SC, Kappers AM, Koenderink JJ. Similar mechanisms underlie curvature comparison by static and dynamic touch. Percept Psychophys 61: 874–894, 1999. [DOI] [PubMed] [Google Scholar]

- Porter LL, Izraeli R. The effects of localized inactivation of somatosensory cortex, area 3a, on area 2 in cats. Somatosens Mot Res 10: 399–413, 1993. [DOI] [PubMed] [Google Scholar]

- Pouget A, Deneve S, Duhamel JR. A computational perspective on the neural basis of multisensory spatial representations. Nat Rev Neurosci 3: 741–747, 2002. [DOI] [PubMed] [Google Scholar]

- Prather SC, Votaw JR, Sathian K. Task-specific recruitment of dorsal and ventral visual areas during tactile perception. Neuropsychologia 42: 1079–1087, 2004. [DOI] [PubMed] [Google Scholar]

- Proske U, Gandevia SC. The proprioceptive senses: their roles in signaling body shape, body position and movement, and muscle force. Physiol Rev 92: 1651–1697, 2012. [DOI] [PubMed] [Google Scholar]

- Proske U, Gregory JE. Signalling properties of muscle spindles and tendon organs. Adv Exp Med Biol 508: 5–12, 2002. [DOI] [PubMed] [Google Scholar]

- Prud'homme MJ, Cohen DA, Kalaska JF. Tactile activity in primate primary somatosensory cortex during active arm movements: cytoarchitectonic distribution. J Neurophysiol 71: 173–181, 1994. [DOI] [PubMed] [Google Scholar]

- Pruszynski JA, Johansson RS. Edge-orientation processing in first-order tactile neurons. Nat Neurosci 17: 1404–1409, 2014. [DOI] [PubMed] [Google Scholar]

- Pubols LM, LeRoy RF. Orientation detectors in the primary somatosensory neocortex of the raccoon. Brain Res 129: 61–74, 1977. [DOI] [PubMed] [Google Scholar]

- Quy PN, Fujita H, Sakamoto Y, Na J, Sugihara I. Projection patterns of single mossy fiber axons originating from the dorsal column nuclei mapped on the aldolase C compartments in the rat cerebellar cortex. J Comp Neurol 519: 874–899, 2011. [DOI] [PubMed] [Google Scholar]

- Rincon-Gonzalez L, Warren JP, Meller DM, Tillery SH. Haptic interaction of touch and proprioception: implications for neuroprosthetics. IEEE Trans Neural Syst Rehabil Eng 19: 490–500, 2011. [DOI] [PubMed] [Google Scholar]

- Ringach DL. Spatial structure and symmetry of simple-cell receptive fields in macaque primary visual cortex. J Neurophysiol 88: 455–463, 2002. [DOI] [PubMed] [Google Scholar]

- Roll JP, Vedel JP, Ribot E. Alteration of proprioceptive messages induced by tendon vibration in man: a microneurographic study. Exp Brain Res 76: 213–222, 1989. [DOI] [PubMed] [Google Scholar]

- Saal HP, Bensmaia SJ. Touch is a team effort: interplay of submodalities in cutaneous sensibility. Trends Neurosci 37: 689–697, 2014. [DOI] [PubMed] [Google Scholar]

- Sainburg RL, Ghilardi MF, Poizner H, Ghez C. Control of limb dynamics in normal subjects and patients without proprioception. J Neurophysiol 73: 820–835, 1995. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Srinivasan MA, LaMotte RH. Tactile discrimination of shape: responses of slowly and rapidly adapting mechanoreceptive afferents to a step indented into the monkey fingerpad. J Neurosci 7: 1682–1697, 1987. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sripati AP, Bensmaia SJ, Johnson KO. A continuum mechanical model of mechanoreceptive afferent responses to indented spatial patterns. J Neurophysiol 95: 3852–3864, 2006a. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sripati AP, Yoshioka T, Denchev P, Hsiao SS, Johnson KO. Spatiotemporal receptive fields of peripheral afferents and cortical area 3b and 1 neurons in the primate somatosensory system. J Neurosci 26: 2101–2114, 2006b. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Stilla R, Sathian K. Selective visuo-haptic processing of shape and texture. Hum Brain Mapp 29: 1123–1138, 2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sur M, Garraghty PE, Bruce CJ. Somatosensory cortex in macaque monkeys: laminar differences in receptive field size in areas 3b and 1. Brain Res 342: 391–395, 1985. [DOI] [PubMed] [Google Scholar]

- Sur M, Merzenich MM, Kaas JH. Magnification, receptive-field area, and hypercolumn size in areas 3b and 1 of somatosensory cortex in owl monkeys. J Neurophysiol 44: 295–311, 1980. [DOI] [PubMed] [Google Scholar]

- Tal N, Amedi A. Multisensory visual-tactile object related network in humans: insights gained using a novel crossmodal adaptation approach. Exp Brain Res 198: 165–182, 2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Thakur PH, Fitzgerald PJ, Hsiao SS. Second-order receptive fields reveal multidigit interactions in area 3b of the macaque monkey. J Neurophysiol 108: 243–262, 2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Thakur PH, Fitzgerald PJ, Lane JW, Hsiao SS. Receptive field properties of the macaque second somatosensory cortex: nonlinear mechanisms underlying the representation of orientation within a finger pad. J Neurosci 26: 13567–13575, 2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Vallbo AB, Johansson RS. Properties of cutaneous mechanoreceptors in the human hand related to touch sensation. Hum Neurobiol 3: 3–14, 1984. [PubMed] [Google Scholar]

- Van Boven RW, Ingeholm JE, Beauchamp MS, Bikle PC, Ungerleider LG. Tactile form and location processing in the human brain. Proc Natl Acad Sci USA 102: 12601–12605, 2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Vega-Bermudez F, Johnson KO. SA1 and RA receptive fields, response variability, and population responses mapped with a probe array. J Neurophysiol 81: 2701–2710, 1999. [DOI] [PubMed] [Google Scholar]

- Vierck CJ, Favorov OV, Whitsel BL. Neural mechanisms of absolute tactile localization in monkeys. Somatosens Mot Res 6: 41–61, 1988. [DOI] [PubMed] [Google Scholar]

- Warren S, Hämäläinen HA, Gardner EP. Coding of the spatial period of gratings rolled across the receptive fields of somatosensory cortical neurons in awake monkeys. J Neurophysiol 56: 623–639, 1986. [DOI] [PubMed] [Google Scholar]

- Winter JA, Allen TJ, Proske U. Muscle spindle signals combine with the sense of effort to indicate limb position. J Physiol 568: 1035–1046, 2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wise AK, Gregory JE, Proske U. The responses of muscle spindles to small, slow movements in passive muscle and during fusimotor activity. Brain Res 821: 87–94, 1999. [DOI] [PubMed] [Google Scholar]

- Yau JM, Celnik P, Hsiao SS, Desmond JE. Feeling better: separate pathways for targeted enhancement of spatial and temporal touch. Psychol Sci 25: 555–565, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Yau JM, Connor CE, Hsiao SS. Representation of tactile curvature in macaque somatosensory area 2. J Neurophysiol 109: 2999–3012, 2013a. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Yau JM, Pasupathy A, Brincat SL, Connor CE. Curvature processing dynamics in macaque area V4. Cereb Cortex 23: 198–209, 2013b. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Yau JM, Pasupathy A, Fitzgerald PJ, Hsiao SS, Connor CE. Analogous intermediate shape coding in vision and touch. Proc Natl Acad Sci USA 106: 16457–16462, 2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zangaladze A, Epstein CM, Grafton ST, Sathian K. Involvement of visual cortex in tactile discrimination of orientation. Nature 401: 587–590, 1999. [DOI] [PubMed] [Google Scholar]

- Zhang M, Mariola E, Stilla R, Stoesz M, Mao H, Hu X, Sathian K. Tactile discrimination of grating orientation: fMRI activation patterns. Hum Brain Mapp 25: 370–377, 2005. [DOI] [PMC free article] [PubMed] [Google Scholar]