Abstract

Under‐recruitment into clinical trials is a common and costly problem that undermines medical research. To better understand barriers to recruitment into clinical trials in our region, we conducted a multimethod descriptive study. We first surveyed investigators who had conducted or were conducting studies that used an adult or pediatric clinical research center (n = 92). We then conducted focus groups and key informant interviews with investigators, coordinators, and other stakeholders in clinical and translational research (n = 32 individuals). Only 41% of respondents reported that they had met or were meeting their recruitment goals, and only 24% of the closed studies actually met their targeted recruitment goals. Varied reasons were identified for poor recruitment, but no single investigator or study “phenotype” predicted enrollment outcome. Investigators commonly recruited from their own practice or clinic, and 29% used a manual electronic medical record search. The majority of investigators would use a service that provides recruitment advice, including feasibility assessment and consultation, easier access to the electronic health record, and assistance with institutional review board and other regulatory requirements. Our findings suggest potential benefits of providing assistance across a range of services that can be individualized to the varied needs of clinical and translational investigators.

Keywords: registries, regulatory science, participant recruitment

Introduction

Despite an abundance of recommendations to improve recruitment of participants into clinical trials,1, 2, 3, 4 poor recruitment has remained a costly and common barrier to advancing medical knowledge and improving health. Recently, Kitterman et al.5 reported that 31% of studies (range 10%–60% across academic departments) conducted at one academic medical center in the Northwest region were low‐enrollment studies, defined as studies with one or fewer participants at termination, with an estimated overall impact of $1 million for 2009. Indeed, as reported in numerous reviews, low recruitment rates occur globally and across disease states and age groups.6, 7, 8, 9

Although there is consensus that under‐recruitment into clinical trials is a universal problem that dramatically reduces study efficiency while increasing the cost of research,5 investigators have only recently begun to more precisely measure the extent of poor or under‐recruitment and to examine root causes and predictors.

In 2006, the National Institutes of Health began the Clinical and Translational Science Awards (CTSA) program to catalyze clinical research in the United States by encouraging collaborations and team science across disciplines, departments, and institutions. CTSAs have recognized the importance of improving participant recruitment and of advancing regulatory science by evaluating such efforts.10 In a survey of pediatric clinical trials conducted by the Child Health Outcomes Committee of the Clinical and Translational Science Awards, 3 of 7 projects closed without meeting accrual targets. Recommendations from this report include flexible recruitment plans for multisite studies that are incorporated prospectively and can be customized to each site, as well as targeted media outreach.11 Furthermore, in response to a recent Institute of Medicine review, the aims of the CTSA program have been revised. Two of the current aims are (1) improving patient recruitment into studies and (2) reducing regulatory hurdles for multisite studies. The Institute of Translational Health Sciences (ITHS) at the University of Washington (UW) is one of 62 CTSA programs and is dedicated to translating research into clinical practice for the benefit of patients and communities throughout Washington, Wyoming, Alaska, Montana, and Idaho.

As an initial step in designing an intervention to improve study recruitment in our region, we used a multimethod approach to identify common barriers to and accelerators of clinical research, obtaining both quantitative and qualitative data on closed and open studies conducted in ITHS‐supported clinical research centers at UW and Seattle Children's Hospital (SCH) and from researchers affiliated with our programs. We sought to identify and describe investigator/coordinator‐, study‐, and system‐level characteristics related to recruitment into clinical studies, to examine the relationship of investigator and study factors with perceived successful versus unsuccessful recruitment, and to assess the acceptability of various recruitment services to investigators.

Methods

Participant selection and survey development

Initially, a PubMed literature search was conducted to identify barriers to and accelerators of recruitment using the key words/topics: (barriers/clinical trial enrollment/retention/minority recruitment/predicting or modeling recruitment/interventions/strategies clinical trial recruitment). Based on this review of the literature and discussion by our multidisciplinary team of pediatric and adult clinicians and researchers associated with ITHS, a survey was developed to assess study, investigator, and systems characteristics related to recruitment (available upon request). In Phase I, the survey was administered online using REDCap;12 the survey link was emailed to investigators and/or coordinators conducting studies at the UW and SCH clinical research centers (CRCs). Studies were included if they were open for study activities at the time of the survey or if they had closed in the previous 2 years (March 2012 to March 2014). The surveys for closed and open studies differed slightly so that each was applicable to the study's status. Studies were excluded if they involved only questionnaires without other participant procedures. Investigators or coordinators who participated received a $5.00 beverage coupon and were asked to indicate their interest in attending the follow‐up focus groups. Additionally, study characteristics and recruitment metrics were obtained from the CRC and Pediatric CRC (PCRC) study databases.

Focus groups

Focus groups were conducted to gather qualitative data on the experiences and perceptions of investigators and study staff currently engaged in clinical trial recruitment. Recruitment for the focus groups used a targeted email invitation sent to Phase I participants who had indicated interest in participating. Additional participants were identified by the project team and were sent individual email invitations. For participants unable to attend a focus group, a key informant interview was offered by a member of the project team. All key informants and focus group participants provided written informed consent and received a gift card incentive of $20 per focus group or interview.

Using a structured guide, focus group participants were asked to identify successes and challenges in clinical trial recruitment. They were also given a list of possible interventions and asked to rank their interest. Four 1‐hour groups were conducted with participants representing investigators and research study staff from the University of Washington, Seattle Children's, and other research partner institutions. Additionally, key informant interviews were conducted using the structured guide with investigators unable to attend a focus group.

All focus group participants were currently engaged in conducting a clinical investigation as either an investigator or research study staff. Focus groups’ discussions were audio‐recorded and subsequently deidentified and transcribed. The facilitators took field notes during each focus group. Key informant interviews lasted approximately 30 minutes, and interviewers took detailed notes and wrote a synopsis of each interview.

We analyzed transcripts and field notes by using open coding and constant comparative methods based on Glaser and Strauss’ grounded theory approach to qualitative data analysis.13, 14 A sample size of greater than 30 was chosen as it has been reported that 30 participants is a sufficient size for analyzing qualitative data for main themes.15 The transcripts were analyzed and tagged with codes, which were then grouped into two categories, barriers and successes to clinical trial enrollment. Data were coded using Dedoose Version 5.0.11.16 All materials and data interpretation were shared with the team for review and input. Analyses in process were discussed as a team on a bimonthly basis.
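The final tallying step of this coding can be illustrated with a short sketch. Assuming each coded excerpt is exported as a (category, theme) pair, the hypothetical `theme_percentages` function below counts excerpts and reports each theme's share within its category, the kind of summary displayed in Figure 1. The excerpt list and theme labels are invented for illustration, and this is not how Dedoose itself computes or exports results.

```python
from collections import Counter, defaultdict


def theme_percentages(coded_excerpts):
    """Return {category: {theme: percent}} from (category, theme) pairs.

    Each pair stands for one coded excerpt. Percentages are computed
    within each category, so each category's values sum to 100.
    """
    counts = Counter(coded_excerpts)
    totals = Counter(category for category, _ in coded_excerpts)
    shares = defaultdict(dict)
    for (category, theme), n in counts.items():
        shares[category][theme] = 100.0 * n / totals[category]
    return dict(shares)


# Hypothetical coded excerpts for illustration only (not study data).
excerpts = [
    ("barrier", "clinical support"),
    ("barrier", "IRB/regulatory"),
    ("barrier", "IRB/regulatory"),
    ("barrier", "feasibility"),
    ("facilitator", "EHR/registries"),
    ("facilitator", "IRB relationship"),
]

for category, themes in theme_percentages(excerpts).items():
    for theme, pct in sorted(themes.items()):
        print(f"{category:12s} {theme:18s} {pct:5.1f}%")
```

Computing shares within each category, rather than over all excerpts, matches a figure that reports barriers and facilitators against separate denominators.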

The study was approved by the Institutional Review Boards at University of Washington and Seattle Children's Hospital.

Statistical analysis

Descriptive statistics were prepared for all study characteristics obtained from clinical research center records as well as the study surveys, including frequencies and percentages for categorical variables and means, standard deviations, quartiles, and ranges for continuous variables. Additional descriptive statistics were examined stratifying the sample by status of study (open or closed) and location of the study (UW Clinical Research Center or SCH Pediatric Clinical Research Center). Chi‐square tests and logistic regression were used to examine the association between the outcome of whether or not a study was meeting (if open) or had met (if closed) its recruitment goals and categorical and continuous study characteristics, respectively. Additionally, heat maps of study characteristics were constructed separately for successful and unsuccessful studies to determine if there were apparent phenotypes of successful studies. All analyses were conducted using SAS Versions 9.2 and 9.4 (SAS Institute Inc., Cary, NC, USA).
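As a sketch of the chi-square comparison for a binary characteristic, the code below computes an uncorrected Pearson chi-square statistic for a 2 × 2 table using the feasibility-assessment counts from Table 3. The function name is hypothetical, and the methods do not state whether a continuity correction was applied, so this need not match the SAS output exactly. It uses only the Python standard library: with 1 degree of freedom, the upper-tail p-value equals erfc(√(χ²/2)).

```python
import math


def pearson_chi2_2x2(a, b, c, d):
    """Uncorrected Pearson chi-square test for the 2 x 2 table

                     yes   no
        successful    a     b
        unsuccessful  c     d

    Returns (statistic, p_value) on 1 degree of freedom.
    """
    n = a + b + c + d
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    if denominator == 0:
        raise ValueError("a row or column total is zero")
    chi2 = n * (a * d - b * c) ** 2 / denominator
    # With 1 df, P(X >= chi2) = P(|Z| >= sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Feasibility assessment by outcome (Table 3): successful 22 yes / 16 no,
# unsuccessful 29 yes / 25 no.
chi2, p = pearson_chi2_2x2(22, 16, 29, 25)
print(f"chi-square = {chi2:.3f}, p = {p:.2f}")
```

With these counts the statistic is roughly 0.16 (p ≈ 0.69), in line with the absence of significant differences reported in Results. A continuous characteristic would instead enter a logistic regression model, which this sketch does not cover.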

Results

Study descriptors

A total of 225 studies met the inclusion/exclusion criteria. REDCap surveys were completed for 92 (41%) studies (41 closed studies, 51 open studies). (We also obtained limited information for an additional 25 pediatric Phase I hematology/oncology studies which were not included in the quantitative analysis as they represent a distinct subgroup with very small targeted enrollment.)

The majority of the studies (79%) were pediatric trials that used the PCRC. Fifty‐five (60%) were investigational new drug (IND) or investigational device exemption (IDE) studies, of which 29 (53%) were Phase I–II clinical trials. The studies were largely multicenter (70%), and over half (59%) were investigator‐initiated. The investigators and staff had substantial prior experience; 60% of investigators had conducted more than 10 previous trials. The median time from study opening for enrollment to first participant enrolled was 68 days (interquartile range 22–186). Descriptive information on study type and personnel is displayed in Table 1.

Table 1.

Description of studies (N = 92)

| Characteristic | n (%) or Median [25th–75th percentile] |
|---|---|
| Location | |
| Adult CRC | 19 (21%) |
| Pediatric CRC | 73 (79%) |
| Trial type | |
| Phase I–II | 29 (32%) |
| Phase III | 23 (25%) |
| IND/IDE | 55 (60%) |
| Multicenter | 64 (70%) |
| Rare/orphan disease | 18 (20%) |
| Investigator initiated | 54 (59%) |
| Funding | |
| NIH | 30 (33%) |
| Industry | 42 (46%) |
| Investigator experience | |
| <6 Previous studies | 25 (27%) |
| >10 Previous studies | 55 (60%) |
| Study coordinator experience | |
| Five or fewer years | 16 (17%) |
| >10 Years | 41 (45%) |
| Currently open for study activities | 51 (55%) |
| Local target for enrollment | 11 [5–40] |
| Days between study open and first patient enrolled | 68 [22–186] |

Pretrial recruitment‐related activities

About half of the investigators conducted a pretrial feasibility assessment (Table 2), but few of these, 11/48 (22%), used a formal feasibility tool. Although only a minority (n = 15, 16%) specifically budgeted resources for recruitment activities, most (n = 62, 67%) dedicated specific staff time to recruitment. The majority of investigators (n = 73, 79%) used knowledge of their own clinic population to determine the availability of their targeted potential study participants; only 19 (21%) used the electronic health record (EHR) for this purpose. Only one study specifically targeted minority recruitment.

Table 2.

Recruitment activities

| Activity | n (%) Using/conducting |
|---|---|
| Feasibility assessment | 51 (55%) |
| Recruited from a patient registry | 24 (26%) |
| Used automated or manual EHR search | 44 (48%) |
| Recruited from PI/co‐PI clinic | 57 (62%) |
| Flyer/poster/print media | 33 (36%) |
| Radio/TV/Internet Ads | 8 (9%) |
| Direct community outreach | 14 (15%) |
| Specifically targeted minority populations | 1 (1%) |
| Specifically budgeted resources for recruitment | 15 (16%) |
| Allocated staff time for recruitment | 62 (67%) |

Recruitment activities

The most common strategy was to recruit directly from the principal investigator's (PI's) or co‐PI's clinic during a clinic visit (62%), followed by print media such as flyers/posters (36%) (Table 2). An EHR search was used to identify potential study participants in less than half of the studies; a manual EHR search was conducted for 29% of the studies and an automated search for 23%. Direct mailing was used in 20%, and radio or television advertising in 5%. Only four studies used Internet ads. About a quarter of studies (26%) recruited via patient registries.

Successful versus unsuccessful recruitment

To assess investigator/coordinator perception of study recruitment, informants were asked, “were you/are you successfully meeting your recruitment goals?” Among open studies (still enrolling), 45% reported that they were meeting their recruitment goals. In contrast, 37% of closed studies were viewed as successful in recruitment, for an overall rate of 41% across open and closed studies.

We also examined the percentage of closed studies that met their recruitment goals, as determined by the targeted recruitment goal provided in response to the survey. Only 10/41 (24%) closed studies actually met their targeted recruitment goal. Thus, only a minority of studies were successful in meeting their recruitment goal, based on either a prespecified target or subjective report.
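The overall 41% is a pooled proportion over all 92 surveyed studies, and the sketch below makes that arithmetic explicit. The success counts for open and closed studies are not reported directly; they are inferred as the only whole numbers consistent with the reported percentages, and they sum to the 38 successful studies in Table 3. The helper name `pct` is hypothetical.

```python
# Inferred counts (not reported directly): 23 is the only count that rounds
# to 45% of 51 open studies, 15 the only one that rounds to 37% of 41 closed
# studies, and 23 + 15 = 38 matches the "Successful" column of Table 3.
open_success, open_total = 23, 51
closed_perceived, closed_total = 15, 41
closed_met_target = 10  # reported directly in the text


def pct(numerator, denominator):
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


pooled = pct(open_success + closed_perceived, open_total + closed_total)

print("open, perceived success:  ", pct(open_success, open_total), "%")
print("closed, perceived success:", pct(closed_perceived, closed_total), "%")
print("all studies, perceived:   ", pooled, "%")
print("closed, met numeric target:", pct(closed_met_target, closed_total), "%")
```

The closed-study lines show the gap between perceived success (37%) and meeting the numeric target (24%). Here the simple mean of the open and closed percentages happens to round to the same 41%, but the pooled figure is the one that weights each study equally.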

We then compared “successful” versus “unsuccessful” trials to see if there were study or recruitment factors which discriminated between the two groups and could be used to identify studies or investigators at high risk for poor accrual (See Table 3). There were no significant differences in study or investigator characteristics between successful and unsuccessful studies.

Table 3.

Successful versus unsuccessful trials

| Characteristic | Successful (N = 38) | Unsuccessful (N = 54) |
|---|---|---|
| Length of time from study open for recruitment to first patient enrolled, median [25th–75th percentile] | 63 [22–143] | 81 [25–236] |
| Feasibility assessment prior to trial initiation, n (%) yes | 22 (58%) | 29 (54%) |
| Restrictiveness of inclusion/exclusion criteria was a barrier to recruitment, n (%) yes | 17 (45%) | 29 (54%) |
| Assessment of burden on participants, n (%) extensive | 21 (55%) | 25 (48%) |
| Direct contact with PI/Co‐PI clinic population, n (%) yes | 25 (66%) | 32 (59%) |
| NIH funding vs. other, n (%) NIH funded | 14 (37%) | 16 (30%) |
| Used automated EMR search/notification, n (%) yes | 7 (18%) | 14 (26%) |

Focus groups

Twenty‐nine informants participated in the four focus groups and three provided key informant interviews, yielding 127 pages (48,124 words) of transcripts and notes. Participants included junior and senior investigators, study coordinators, research scientists, and other support staff.

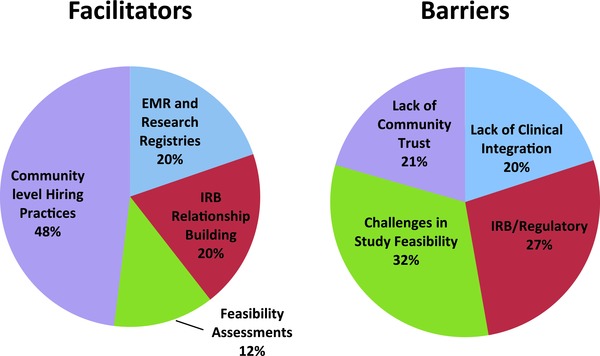

Participants described clinical trial recruitment as fraught with barriers at the institutional and community levels. These barriers included lack of clinical support, IRB/regulatory issues, study feasibility, and lack of community trust and buy‐in. Participants also identified resources that allowed them to overcome these barriers, such as accessing electronic health records and registries, establishing relationships with the IRB, conducting feasibility assessments, and hiring research staff from the target community. In general, participants expressed a strong desire for additional institutional resources for recruitment, particularly for the recruitment and retention of underrepresented minorities. There were 180 different barriers and 152 facilitators of success mentioned in the transcripts.

Several common themes emerged from the focus group/key informant data (Figure 1).

Figure 1.

Factors identified by focus group attendees as facilitators of and barriers to clinical trial recruitment. Percentages of different facilitators (n = 125) and barriers (n = 180).

Clinical practices are often the primary location for patient recruitment in clinical trials. However, the ability to recruit often relies on the cooperation of clinicians and support staff. Focus group participants identified lack of support from clinical staff as a barrier to recruitment. They felt their studies were often seen as “extra work” or “intrusive” to the clinic workflow. For studies not located in the investigators’ own clinical practice, participants articulated the need to engage the clinicians but noted that they often were not trained or funded to do so.

“It was kind of like a hidden recruitment element, we were trained to recruit participants who are patients at a clinic, but the doctors weren't supportive. We realized we had to work with the doctors to get them on board before we could recruit their patients, but nobody had written that into anything, so it's like there was this extra step.”

Investigators were challenged to integrate research and clinical care even when they were recruiting from their own clinic population.

“I wear two hats, both researcher and clinical side. When I'm doing the clinical side, you're doing 15 things in 30 minutes. You just don't have time to think about it. I'll be quite frank. It's a shame because I know that I should be trying to encourage these folks. There's just not enough time.” “I don't think it [that a clinic patient qualified for the study] ever crossed my mind, and I was the PI. Because I was there wearing my clinician hat, and I was not wearing a researcher hat at all.”

Several participants discussed successful methods for engaging clinicians and support staff: monthly study updates from the investigator at clinic staff meetings, opt‐in paperwork included with the general patient intake forms, and use of the electronic medical record to identify patients who fit the study criteria.

IRB/Regulatory: Regulatory systems are an integral part of ensuring that research is conducted ethically. While participants consistently articulated their understanding of this requirement, they also described the barriers these regulatory processes placed on recruitment. In particular, many of the participants work for multiple institutions, or their studies include more than one institution. One participant expressed frustration with the varying standards and processes across affiliated institutions.

“I work for three different institutions, and they all have different IRB standards.”

Additionally, many described a lack of communication about, and understanding of, the requirements of the different IRB committees.

“An area of improvement would really be around a way for all of these partnered institutions to really be partners and to really have a common language, and to have a way of asking for what the other institution has or what they need in a way that I feel like I can—I'm a translator between institutions whenever I'm trying to facilitate a study from one site to another or with one IRB at another site can be very complicating.”

The participants felt this lack of understanding translates into delayed approval processes and expressed frustration with the length of time it takes to make modifications. A participant stated these long delays do not allow them to be “nimble” and respond quickly to recruitment opportunities not stated in their original IRB applications.

Several participants shared successes in working with their institutions’ regulatory systems. These comments focused on building a relationship with the most commonly used IRB committee, consulting with the IRB on the initial grant application to identify potential problems before submission to a funder, and consulting with colleagues and other research staff.

“It helps to have colleagues who've worked with that same committee, or even find someone who has, even if you don't know someone, cuz they can help walk you through it. You have to answer all of their questions, but it doesn't have to be an antagonistic relationship.”

Feasibility: A common topic of conversation was the feasibility of the study design and recruitment goals. Participants shared their experiences working on studies in which the recruitment goals were unrealistic and/or the target population was overestimated. A senior investigator stated:

“I think we start by setting studies up for struggles and barriers by having inflated recruiting goals. There's the balance between what we need to say to a study section to convince them that this research will yield valuable results and what we actually think is possible, which are not always the same things.”

In particular, junior investigators, who have limited experience in recruiting, were deemed more likely to create protocols that were not feasible.

“Sometimes you have PIs write grants and they get funded, but they have never done any recruiting and they have no idea [of recruitment barriers], making recruitment almost impossible.”

Several participants expressed frustration at being unable to find mentors or departmental support to help them assess the feasibility of their proposed protocol before submission. However, one participant shared a positive experience working in a department that conducts a feasibility assessment of all protocols. The participant attributed their study's lack of recruitment problems directly to a consultation, held before grant submission, with a senior investigator, an IRB expert, a community liaison, and a research coordinator with more than 10 years of experience. The investigator used this assessment to create a protocol and recruitment strategy that has proven successful. Other participants expressed interest in sharing information and using a similar process, and felt it would greatly assist successful recruitment.

Community trust: Participants identified community distrust of research as a barrier, particularly in minority communities that may have negative perceptions due to past experiences. Participants stated the need to acknowledge the perception that research does not benefit the community and to work with the community to overcome this barrier. However, they emphasized that this type of engagement takes time and funding that are often unavailable. A participant who works predominantly with minority communities stated:

“Research takes time. So does engagement.”

Building community trust is not a quick process, but focus group participants engaged in community‐based participatory research emphasized its importance and its benefits for study recruitment.

“Preplanning with the community to find out best ways to recruit people and [it allows us to identify] the most realistic number of people we're gonna recruit.”

One participant shared their experience working with a community liaison; the liaison had already established the community trust and worked successfully with the investigator to engage the community in the study. Participants indicated high interest in similar community liaison services.

Use of the EHR and patient registries: Use of EHR data and patient registries was identified as a successful method of recruiting participants into clinical trials. Several focus group participants had worked with population‐ or disease‐specific patient registries and felt these facilitated successful trial recruitment. One participant stated:

“Recruitment…has not been a challenge, because we have an IRB‐approved registry, in which we register all our new families or ask them to register.”

However, they did express concerns regarding the perceived prohibitive cost of using these registries.

Coaching/mentoring/training: Participants identified lack of support and isolation as a barrier for investigators and staff to identify additional resources and solutions to low recruitment. Participants strongly advocated for a formal coaching/mentoring/training service that could link them to other investigators and staff who have been engaged in clinical trial recruitment. One participant stated:

“I think that's one of our biggest strengths, talking to a colleague.”

Additionally, they felt formal training in marketing, minority recruitment, and IRB processes would be valuable.

Acceptability and ranking of intervention strategies: Focus group attendees were given a list of potential interventions and asked to indicate, on a five‐point scale, how likely they would be to use each intervention. The top‐rated potential interventions are listed in Table 4. Most participants stated that they would use a service providing practical help with poorly recruiting studies, as well as one providing assistance with accessing the EHR for recruitment. Also rated highly was working with the IRB to develop creative yet acceptable recruitment strategies.

Table 4.

Intervention acceptability data from the focus groups

| How likely would you be to use this intervention to improve your recruitment process? | Likely/most likely, n/N (%) |
|---|---|
| Assistance in identifying recruitment barriers and providing practical solutions while the study is enrolling | 28/29 (97) |
| Work with IRB to develop creative yet acceptable ways to recruit and consent | 24/29 (83) |
| Addressing issues which lead to delays at grants and contracts and IRB approval | 22/28 (78) |
| Development of improved methods for participant identification using the EMR | 21/28 (75) |
| Study staff training in recruitment best practices | 20/29 (69) |
| Prestudy consult for feasibility assessment | 19/28 (68) |

Discussion

Under‐recruitment was common in our sample of both closed and open studies, with only 41% reporting that they were successfully meeting local recruitment goals. Because we did not collect data on overall trial enrollment for multicenter studies, these enrollment data may not reflect overall trial success. However, despite increased awareness of the impact of under‐recruitment, our findings are consistent with previous reports indicating that up to 80% of studies experience slow enrollment and 40%–60% fail to meet their targeted enrollment.5, 7, 8, 9, 17

Two methodological issues contribute to the lack of progress: defining successful enrollment and gathering recruitment and enrollment data. Indeed, direct comparison with other reports is difficult because there is no standard metric for assessing enrollment success and benchmarking against other programs. In our cohort of studies, median time to first patient enrolled was over 2 months, with marked variability between studies. Time to first accrual has previously been shown to predict accrual into cancer studies.9 Although this metric has not been systematically validated as a predictor of ultimate accrual, time to first accrual may be a useful marker of studies at risk for poor accrual that would benefit from early intervention. Both the number of studies in our cohort that were unsuccessful in enrolling participants and the lag between study initiation and first enrollment suggest the need for intervention early in the course of a study. Systematically monitoring study enrollment and intervening early in studies that are accruing slowly may provide an opportunity to modify the enrollment trajectory. Time to first accrual can also serve as an outcome measure for interventions designed to improve recruitment, as demonstrated by Kost et al.,18 who evaluated the impact of a recruitment service and reported a median of 10 days from initiation of recruitment efforts to first participant enrolled. Whether this translated into improved overall recruitment was not addressed.
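The early-monitoring idea can be sketched as a simple rule: flag any open study that has not enrolled its first participant within a chosen number of days. In the hypothetical sketch below, the `StudyStart` record, the function names, and the example studies are all illustrative assumptions, and the 68-day cutoff is simply this cohort's median time to first enrollment, not a validated threshold.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class StudyStart:
    """Hypothetical minimal record of when a study opened and first enrolled."""
    study_id: str
    opened: date                    # date the study opened for enrollment
    first_enrolled: Optional[date]  # None if nobody has enrolled yet


def days_to_first_enrollment(study: StudyStart) -> Optional[int]:
    """Observed time to first accrual in days, or None if not yet reached."""
    if study.first_enrolled is None:
        return None
    return (study.first_enrolled - study.opened).days


def flag_slow_starters(studies: List[StudyStart], as_of: date,
                       threshold_days: int) -> List[str]:
    """IDs of studies still without a first participant after threshold_days."""
    return [
        s.study_id
        for s in studies
        if s.first_enrolled is None and (as_of - s.opened).days > threshold_days
    ]


# Hypothetical studies; the 68-day cutoff is the cohort median, used only
# as an illustrative threshold.
studies = [
    StudyStart("A", date(2014, 1, 6), date(2014, 1, 20)),  # enrolled on day 14
    StudyStart("B", date(2014, 1, 6), None),               # 85 days, none yet
    StudyStart("C", date(2014, 3, 3), None),               # 29 days, none yet
]
print(flag_slow_starters(studies, as_of=date(2014, 4, 1), threshold_days=68))
```

The same records also yield time to first accrual, which could serve as an outcome measure when evaluating a recruitment intervention.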

A consistent theme in the survey and focus groups was that regulatory barriers impede participant recruitment because of the time required to obtain all regulatory and contract approvals. Coordinators felt that developing relationships with the IRB and learning to navigate between different IRB offices were important to expedite the approval process. They identified assistance and training for investigators and coordinators in working with the IRB on recruitment barriers as an important component of any recruitment assistance. Monitoring the time required for IRB approval and contracting could provide benchmarks for institutions and highlight the need for additional resources or alternative processes to reduce these barriers.19

Difficulty in obtaining study accrual data was one lesson learned from this project. No central office within the institutions uniformly tracked the relevant study metrics, and obtaining data from the institutional offices that do collect this information was problematic. For example, although IRB applications include the number of proposed study participants, and each annual renewal reports the number enrolled to date, these data are not entered into a database in a form that can easily be retrieved. We obtained some of the data from the CRC databases, but most had to be requested from the investigators themselves. Thus, we were limited by investigators' willingness to complete a survey as well as by institutional and administrative barriers. We hope that CTSAs will develop reliable metrics and a tracking process that can be embraced and replicated across institutions as interventions are developed to improve recruitment across the translational continuum.
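One possible shape for such tracking is sketched below: a per-study record that pairs the proposed enrollment from the initial IRB application with the enrolled-to-date counts reported at each renewal. The `AccrualRecord` class, the `below_target` helper, their fields, and the example values are hypothetical and do not describe any existing institutional system.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Tuple


@dataclass
class AccrualRecord:
    """Hypothetical tracking record for one study."""
    study_id: str
    proposed_enrollment: int  # from the initial IRB application
    # (renewal date, enrolled to date) pairs from annual renewals
    renewals: List[Tuple[date, int]] = field(default_factory=list)

    def add_renewal(self, when: date, enrolled_to_date: int) -> None:
        """Store a renewal report, keeping renewals in date order."""
        self.renewals.append((when, enrolled_to_date))
        self.renewals.sort()

    def percent_of_target(self) -> float:
        """Latest enrolled-to-date count as a percentage of the target."""
        if not self.renewals:
            return 0.0
        _, enrolled = self.renewals[-1]
        return 100.0 * enrolled / self.proposed_enrollment


def below_target(records: List[AccrualRecord], cutoff_percent: float) -> List[str]:
    """IDs of studies whose latest accrual is under cutoff_percent of target."""
    return [r.study_id for r in records if r.percent_of_target() < cutoff_percent]


# Hypothetical example values, not study data.
rec = AccrualRecord("study-001", proposed_enrollment=40)
rec.add_renewal(date(2014, 3, 1), 14)
rec.add_renewal(date(2013, 3, 1), 6)  # out-of-order entry is re-sorted
other = AccrualRecord("study-002", proposed_enrollment=20)
other.add_renewal(date(2014, 3, 1), 18)

print(rec.percent_of_target())  # 35.0
print(below_target([rec, other], cutoff_percent=50))
```

Keeping the renewal history, rather than only the latest count, would also allow accrual pace to be examined over time.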

In our heterogeneous sample of studies and investigators, we did not identify a consistent investigator or study “phenotype” that predicted recruitment success or failure. Although we had hoped to find a key barrier or accelerator variable that would lend itself to a targeted intervention, it is not surprising that we did not, given the wide range of studies and investigators surveyed. This suggests that multiple barriers to recruitment would need to be addressed to benefit the wide range of investigations conducted at an academic institution. There were, however, several key themes that emerged from the focus groups and study surveys related to the studies, the research environment, and the community.

In our cohort, although a pretrial feasibility assessment occurred for about half of the studies, few investigators used a formal tool or process for feasibility assessment, so it is not clear on what basis feasibility was determined. It has been estimated that investigators must approach 10–40 times the number of participants needed in order to achieve sufficient accrual.20 Therefore, understanding the drop‐off at each stage of recruitment (what has been termed the “leaky pipe”21), and estimating through an accurate feasibility assessment the number needed to screen to reach the required accrual for a particular trial, would better inform planning for recruitment efforts.22
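The “leaky pipe” arithmetic can be made concrete: if each recruitment stage passes on a fraction of the people who reach it, the number to approach is the target divided by the product of those fractions. The sketch below assumes the stage yields are known and independent. The yield values are hypothetical, chosen so that the overall yield is 1/10 or 1/40 to match the 10–40 times range cited above, and the target of 11 is the median local enrollment target in Table 1. The function name `number_to_approach` is likewise illustrative.

```python
from fractions import Fraction
from math import ceil


def number_to_approach(target, stage_yields):
    """Number of people to approach so that expected enrollment reaches
    `target`, given the fraction retained at each recruitment stage."""
    overall = Fraction(1)
    for stage_yield in stage_yields:
        overall *= Fraction(stage_yield)
    if overall == 0:
        raise ValueError("at least one stage retains nobody")
    return ceil(target / overall)


# Hypothetical funnels: approached -> eligible -> consented -> enrolled.
optimistic = [Fraction(1, 2), Fraction(2, 5), Fraction(1, 2)]   # overall 1/10
pessimistic = [Fraction(1, 2), Fraction(1, 4), Fraction(1, 5)]  # overall 1/40
target = 11  # median local enrollment target (Table 1)

print(number_to_approach(target, optimistic))   # 110
print(number_to_approach(target, pessimistic))  # 440
```

Exact fractions are used so that rounding up with `ceil` is not thrown off by floating-point error in the product of the yields.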

Focus group participants emphasized the benefit of feasibility assessments, particularly for junior investigators, in identifying factors that may accelerate recruitment. Furthermore, research coordinators also noted that they would like to have been involved earlier in study development to influence the study design and/or recruitment plan to improve the feasibility of recruitment. Participant burden and restrictiveness of inclusion/exclusion criteria were identified as factors that affect feasibility. This suggests that an accurate pretrial feasibility assessment process easily available to investigators may be helpful, especially if assessing feasibility is linked with potential solutions.

A variety of recruitment techniques were used in the studies, and opinions varied on their utility. The electronic health record (EHR) was used to identify and recruit participants less than half the time, and most investigators/staff who used it searched the EHR manually. We do not know whether this reflects a lack of need, since investigators were largely recruiting from their own clinics, limited access to automated EHR searches, or other hurdles. Similarly, few investigators recruited from patient registries. However, focus group participants who had used electronic medical records and/or registries felt that these underutilized methods were conducive to meeting recruitment goals. Improving access to the EHR for recruitment activities and expanding research registries could therefore have a significant effect on recruitment efficiency. This approach has been used successfully by several CTSAs to aid investigators in recruitment.18, 23

The focus groups identified the difficulty of integrating clinical care and research as a key barrier to recruitment. This is not surprising: the increasing push for efficiency in clinical care leaves little room for research activities when resources for research in clinical settings are limited. Although many studies recruited from the PI's or co‐PI's clinic, both investigators and staff described difficulty finding time and space to conduct study activities within the clinics. Lack of time to integrate research into clinical activities has been identified as a barrier to recruitment in other study populations.24 Existing relationships between clinicians and researchers, as well as increased support for research activities within the clinical setting, were identified as helpful to recruitment efforts in general practice clinics in the United Kingdom.8, 25

Because no consistent investigator or study factors predicted recruitment success or failure, an effective recruitment service should provide expertise and a range of services that are not specific to one type of study or geared to particular investigator characteristics. In addition, assistance should span the full spectrum of recruitment activities, from initial study development and the identification of IRB and regulatory hurdles for specific types of studies to the identification of local resources for increasing public awareness and acceptance of clinical trials. Given the economic and personal costs of under‐recruitment to individual institutions and to public health, institutional support for recruitment services would seem to be a reasonable investment.

Although the studies in this sample were largely disease‐specific rather than population‐specific, it is telling that only one study had a specific plan for targeting minority recruitment. Minority populations are currently underrepresented in clinical trial research despite a National Institutes of Health (NIH) mandate to ensure minority participation in trials.26 We did not gather data on whether the closed studies in the sample met the NIH standard for minority recruitment; however, research shows that minority participation in clinical trials remains limited,27, 28 and the lack of targeted minority recruitment plans may help explain why minority populations are underrepresented in research.27, 29, 30 Community trust and engagement emerged as a theme from the focus groups; these are particularly important for recruitment from minority communities, in which the level of distrust may be high.30, 31, 32 Participants engaged in community‐based research stated that it was essential to devote time and resources to developing relationships within the targeted community, yet few studies or investigators devoted resources to this goal.

This study had several limitations that should be considered. First, it was an observational study conducted with a limited number of studies, with a preponderance of pediatric studies and of studies conducted in a clinical research center. Caution should therefore be used in generalizing to other types of research, such as health services or practice‐based research. However, our focus groups did include a broad group of investigators, including health services researchers, who reported similar concerns, and several investigators/coordinators who work with rural populations. Nonetheless, most studies were conducted at a large urban medical center in the Northwest, and the findings may not generalize to rural populations or to other geographic areas. Because all of the studies included in this investigation used the clinical research centers at an academic institution, it is not surprising that the majority were clinical trials, and over half were investigational new drug studies. As a result, they were largely disease‐specific treatment trials, which would explain why investigators most commonly recruited from their own clinic or clinical population. Nonetheless, investigator‐ and study‐specific interventions to improve recruitment, such as assessing feasibility, improving access to the electronic medical record, and providing individualized study review by a recruitment specialist prior to IRB submission, would likely be helpful regardless of the type of study or where it is conducted.

In summary, our findings are consistent with previous literature on recruitment and confirm the pervasiveness of poorly recruiting trials. We were unable to identify a single investigator or study “phenotype” that predicted poor study recruitment, suggesting that recruitment success is highly specific to the study or research team. Nevertheless, both our quantitative and qualitative data suggest common barriers at multiple levels: trial designs that may have feasibility issues in the local setting, IRB/regulatory issues that delay trial start, difficulties identifying and accessing trial participants, and lack of participant and community support for trials. The investigators and research staff also identified accelerators of participant recruitment, including optimized community‐level recruitment practices; IRB relationship building, including systematic and frequent dialogue and education of both the IRB and coordinators; and facilitated use of the EMR and research registries. Notably, at the time of this study there was broad support among investigators and coordinators for a recruitment service that could identify recruitment barriers and tailor solutions while also working to address system issues, such as factors contributing to IRB delays, and providing assistance with and access to the EMR. Furthermore, our acceptability data suggest that educational and consultative services would be used by investigators and coordinators if available. However, effectiveness studies of interventions directed at improving study recruitment are hindered by difficulties in gathering data on study accrual during a trial and by definitional issues, and as yet there are few empirical studies demonstrating improvements in recruitment that are not study‐ or disease‐specific.

Acknowledgments

This publication was supported by the National Center for Advancing Translational Sciences of the National Institutes of Health under Award Number UL1TR000423. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

- 1. McDonald AM, Treweek S, Shakur H, Free C, Knight R, Speed C, Campbell MK. Using a business model approach and marketing techniques for recruitment to clinical trials. Trials. 2011; 12: 74–85.

- 2. Treweek S, Lockhart P, Pitkethly M, Cook JA, Kjeldstrøm M, Johansen M, Taskila TK, Sullivan FM, Wilson S, Jackson C, et al. Methods to improve recruitment to randomised controlled trials: Cochrane systematic review and meta‐analysis. BMJ Open. 2013; 3.

- 3. Caldwell PH, Hamilton S, Tan A, Craig JC. Strategies for increasing recruitment to randomised controlled trials: systematic review. PLoS Med. 2010; 7: e1000368.

- 4. Fletcher B, Gheorghe A, Moore D, Wilson S, Damery S. Improving the recruitment activity of clinicians in randomised controlled trials: a systematic review. BMJ Open. 2012; 2: e000496.

- 5. Kitterman DR, Cheng SK, Dilts DM, Orwoll ES. The prevalence and economic impact of low‐enrolling clinical studies at an academic medical center. Acad Med. 2011; 86: 1360–1366.

- 6. Schroen AT, Petroni GR, Wang H, Gray R, Wang XF, Cronin W, Sargent DJ, Benedetti J, Wickerham DL, Djulbegovic B, et al. Preliminary evaluation of factors associated with premature trial closure and feasibility of accrual benchmarks in phase III oncology trials. Clin Trials. 2010; 7: 312–321.

- 7. McDonald AM, Knight RC, Campbell MK, Entwistle VA, Grant AM, Cook JA, Elbourne DR, Francis D, Garcia J, Roberts I, et al. What influences recruitment to randomised controlled trials? A review of trials funded by two UK funding agencies. Trials. 2006; 7: 9–16.

- 8. Campbell MK, Snowdon C, Francis D, Elbourne D, McDonald AM, Knight R, Entwistle V, Garcia J, Roberts I, Grant A, et al.; STEPS group. Recruitment to randomised trials: strategies for trial enrollment and participation study. The STEPS study. Health Technol Assess. 2007; 11: iii, ix–105.

- 9. Cheng SK, Dietrich MS, Dilts DM. Predicting accrual achievement: monitoring accrual milestones of NCI‐CTEP‐sponsored clinical trials. Clin Cancer Res. 2011; 17: 1947–1955.

- 10. Kost RG, Mervin‐Blake S, Hallarn R, Rathmann C, Kolb HR, Himmelfarb CD, D'Agostino T, Rubinstein EP, Dozier AM, Schuff KG. Accrual and recruitment practices at Clinical and Translational Science Award (CTSA) institutions: a call for expectations, expertise, and evaluation. Acad Med. 2014; 89: 1180–1189.

- 11. Tuchman M, Bosson‐Heenan J, Gipson D, Hirschfelld K, Lakes K, MacHardy J, Pemberton M, Parucker M, Johnson T, VanHuysen C. Enhancing subject accrual in multi‐site trials. Presentation on behalf of the CTSA Child Health Oversight Committee. Clinical and Translational Science Award Principal Investigators Annual Meeting. Bethesda, Maryland; 2011.

- 12. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—a metadata‐driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009; 42: 377–381.

- 13. Glaser BG, Strauss AL. The Discovery of Grounded Theory: Strategies for Qualitative Research. Chicago: Aldine Publishing Company; 1967.

- 14. Starks H, Brown Trinidad S. Choose your method: a comparison of phenomenology, discourse analysis, and grounded theory. Qual Health Res. 2007; 17: 1372–1380.

- 15. Sandelowski M. Sample size in qualitative research. Res Nurs Health. 1995; 18: 179–183.

- 16. Dedoose Version 5.0.11, web application for managing, analyzing, and presenting qualitative and mixed method research data. SocioCultural Research Consultants, LLC; 2014. www.dedoose.com. Accessed November 20, 2014.

- 17. Cheng SK, Dietrich MS, Dilts DM. A sense of urgency: evaluating the link between clinical trial development time and the accrual performance of cancer therapy evaluation program (NCI‐CTEP) sponsored studies. Clin Cancer Res. 2010; 16: 5557–5563.

- 18. Kost RG, Corregano LM, Rainer TL, Melendez C, Coller BS. A data‐rich recruitment core to support translational clinical research. Clin Transl Sci. 2015; 8: 91–99.

- 19. Strasser JE, Cola PA, Rosenblum D. Evaluating various areas of process improvement in an effort to improve clinical research: discussions from the 2012 Clinical Translational Science Award (CTSA) Clinical Research Management workshop. Clin Transl Sci. 2013; 6: 317–320.

- 20. McGill JB. Recruiting research participants. In: Schuster DP, Powers WJ, eds. Translational and Experimental Clinical Research. Philadelphia, PA: Lippincott Williams & Wilkins; 2005: 54–66.

- 21. Understanding the Process of Subject Participation. CPP Inc. http://www.drugdevelopment-technology.com/contractors/consulting/cpp/cpp2.html. Accessed June 2, 2015.

- 22. Hagen NA, Wu JS, Stiles CR. A proposed taxonomy of terms to guide the clinical trial recruitment process. J Pain Symptom Manage. 2010; 40: 102–110.

- 23. Ferranti JM, Gilbert W, McCall J, Shang H, Barros T, Horvath MM. The design and implementation of an open‐source, data‐driven cohort recruitment system: the Duke Integrated Subject Cohort and Enrollment Research Network (DISCERN). J Am Med Inform Assoc. 2012; 19: e68–e75.

- 24. Spaar A, Frey M, Turk A, Karrer W, Puhan MA. Recruitment barriers in a randomized controlled trial from the physicians’ perspective: a postal survey. BMC Med Res Methodol. 2009; 9: 14–21.

- 25. Patterson S, Mairs H, Borschmann R. Successful recruitment to trials: a phased approach to opening gates and building bridges. BMC Med Res Methodol. 2011; 11: 73–78.

- 26. NIH Policy and Guidelines on the Inclusion of Women and Minorities as Subjects in Clinical Research—Amended, October 2001. http://grants.nih.gov/grants/funding/women_min/guidelines_amended_10_2001.htm. Accessed August 24, 2015.

- 27. Anwuri VV, Hall LE, Mathews K, Springer BC, Tappenden JR, Farria DM, Jackson S, Goodman MS, Eberlein TJ, Colditz GA. An institutional strategy to increase minority recruitment to therapeutic trials. Cancer Causes Control. 2013; 24: 1797–1809.

- 28. Castillo‐Mancilla JR, Cohn SE, Krishnan S, Cespedes M, Floris‐Moore M, Schulte G, Pavlov G, Mildvan D, Smith KY; ACTG Underrepresented Populations Survey Group. Minorities remain underrepresented in HIV/AIDS research despite access to clinical trials. HIV Clin Trials. 2014; 15: 14–26.

- 29. Durant RW, Wenzel JA, Scarinci IC, Paterniti DA, Fouad MN, Hurd TC, Martin MY. Perspectives on barriers and facilitators to minority recruitment for clinical trials among cancer center leaders, investigators, research staff, and referring clinicians: enhancing minority participation in clinical trials (EMPaCT). Cancer. 2014; 120(Suppl 7): 1097–1105.

- 30. Lang R, Kelkar VA, Byrd JR, Edwards CL, Pericak‐Vance M, Byrd GS. African American participation in health‐related research studies: indicators for effective recruitment. J Public Health Manag Pract. 2013; 19: 110–118.

- 31. Braunstein JB, Sherber NS, Schulman SP, Ding EL, Powe NR. Race, medical researcher distrust, perceived harm, and willingness to participate in cardiovascular prevention trials. Medicine (Baltimore). 2008; 87: 1–9.

- 32. Shavers VL, Lynch CF, Burmeister LF. Racial differences in factors that influence the willingness to participate in medical research studies. Ann Epidemiol. 2002; 12: 248–256.