Abstract

In spoken word perception, voice specificity effects are well-documented: When people hear repeated words in some task, performance is generally better when repeated items are presented in their originally heard voices, relative to changed voices. A key theoretical question about voice specificity effects concerns their time-course: Some studies suggest that episodic traces exert their influence late in lexical processing (the time-course hypothesis; McLennan & Luce, 2005), whereas others suggest that episodic traces influence immediate, online processing. We report two eye-tracking studies investigating the time-course of voice-specific priming within and across cognitive tasks. In Experiment 1, participants performed modified lexical decision or semantic classification to words spoken by four speakers. The tasks required participants to click a red “×” or a blue “+” located randomly within separate visual half-fields, necessitating trial-by-trial visual search with consistent half-field response mapping. After a break, participants completed a second block with new and repeated items, half spoken in changed voices. Voice effects were robust very early, appearing in saccade initiation times. Experiment 2 replicated this pattern while changing tasks across blocks, ruling out a response priming account. In the General Discussion, we address the time-course hypothesis, focusing on the challenge it presents for empirical disconfirmation, and highlighting the broad importance of indexical effects, beyond studies of priming.

Spoken word recognition is a complex process, involving the appreciation of highly variable speech signals as discrete, meaningful units. Despite superficial variations, including differences in talker identity, amplitude, speaking rate, pitch, and other idiosyncratic details (all commonly referred to as indexical variations; Abercrombie, 1967; Pisoni, 1993), speech recognition is typically robust. Listeners fluently recognize words and discourse, with no apparent hindrance from surface variation. But what happens to the idiosyncratic details that accompany spoken words? Are they stored in memory as integral components of those words, do they fade away, or are they stored as “separate,” non-phonetic information? The answers to such questions are central for evaluating theories of lexical representation and access. In fact, beyond spoken word perception, many theories in perception, memory, and categorization rely critically on the general premise of “content-addressable memory.” In broad terms, there are two ways that perceptual stimuli may contact stored information in the brain. One is based on pointers, or addresses: When hearing a spoken word, seeing a face, etc., “address addressable” systems use that input to help locate the appropriate stored, abstract representation. In contrast, in content-addressable memory (as in connectionist theories; Bechtel & Abrahamsen, 2002; Hinton & Anderson, 1981), information is retrieved “directly,” by using stimulus features as retrieval cues to activate stored knowledge. Thus, partial information can activate perception or recall of entire stimulus assemblies. A key advantage of content-addressable systems concerns noise: Whereas “address addressable” systems function by resolving noise to isolate stable cues, content-addressable systems can use “noise” (e.g., voice-specific features) to directly access prior memories.
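The contrast between the two retrieval schemes can be made concrete with a toy example. The minimal sketch below assumes a small Hopfield-style autoassociative network as one classic illustration of content-addressable memory; the network, the 16-feature patterns, and all function names are our own illustrative choices, not a model proposed in this article. A plain dictionary stands in for an address-based system: a partial key retrieves nothing, whereas the network completes a stored pattern from half of its features.

```python
def train_hopfield(patterns):
    """Hebbian weights for a Hopfield-style autoassociator (no self-connections)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j] / n
    return weights


def recall(weights, cue, max_sweeps=10):
    """Update units one at a time until the state stops changing.

    Known cue features are coded +1/-1; unknown features are coded 0.
    """
    state = list(cue)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            net = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if net >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Two orthogonal stored "stimulus assemblies" of 16 binary features each.
pattern_a = [1] * 8 + [-1] * 8
pattern_b = [1, -1] * 8
weights = train_hopfield([pattern_a, pattern_b])

# Content-addressable retrieval: only the first half of pattern_a is given.
partial_cue = pattern_a[:8] + [0] * 8
completed = recall(weights, partial_cue)

# Address-based retrieval: an incomplete "address" finds nothing.
address_memory = {"waffle": pattern_a}
lookup = address_memory.get("waff")
```

Here `completed` equals `pattern_a`, while `lookup` is `None`: the stimulus features themselves serve as the retrieval cue in the first case, but only an exact address works in the second.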

There are certain behavioral domains that naturally encourage content-addressable accounts. For example, in face perception, we are able to simultaneously classify faces into categories such as “man” or “woman,” estimate ages, appreciate ethnic variations, etc. But we also fluently recognize friends and family, despite variations in appearances or contexts. Therefore, theories of face perception are often geared toward explaining how variable inputs activate specific stored knowledge (e.g., Lewis, 2004; Valentine, 1991), allowing us to recognize personal acquaintances. Other domains, however, encourage more abstract accounts. For example, reading is naturally characterized as a combinatorial process, wherein (in English) 26 letters are recombined into thousands of words. As such, theoretical accounts tend to treat reading as a constructive process, such that small units are rapidly combined to “unlock” stored knowledge. The foregoing contrast, between pointer systems and content-addressable memory, has long characterized a theoretical debate in speech perception. Indeed, the central theoretical question in speech perception is how listeners cope with multiple, overlapping cues to segment and word identity (e.g., Apfelbaum, Bullock-Rest, Rhone, Jongman & McMurray, 2014). The idiosyncratic signal changes introduced by different speakers can be viewed either as adding yet more noise and complexity to speech processing (e.g., Nearey, 1997), or as beneficial information that constrains phonetic interpretations (e.g., McMurray & Jongman, 2011; Smits, 2001). Adjudicating between these divergent views has long been one of the main goals of theories of speech (and spoken word) perception.

By necessity, all theories of word perception posit some form of abstraction, suggesting that speech input activates sublexical units, codes that allow content-addressable access to lexical knowledge (Luce & Pisoni, 1998; Norris, 1994; Stevens, 2002). By most accounts, although indexical variations are clearly noticed (and used) by the listener, once those details have been exploited for speech decoding, they have no linguistic role and are likely forgotten (e.g., Lahiri & Marslen-Wilson, 1991; Marslen-Wilson & Warren, 1994). Abundant evidence (and common intuition) supports this view: After a conversation, people typically remember the messages that were shared, with relatively little memory for precise wording or sound patterns. By its very nature, language requires a person to fluently recombine small sets of speech units (e.g., segments, syllables, words) into new, meaningful strings – such behavior requires abstract representations at multiple levels (e.g., Chomsky, 1995). At the same time, however, people learn the vocal habits of their friends, speech imitation is common, and perceptual learning occurs for accented or idiosyncratic speech.

Intuitively, both linguistic and indexical information affect the experience of language. We hear messages, and we hear people. Clearly, the linguistic dimension is primary: It carries far more information (in bits per second), varies far more quickly over time, and constitutes the reason for speaking in the first place. The indexical dimension is inherently less important, and provides relatively little novel information from moment to moment in normal speech (although emotional tones may change relatively quickly). Nevertheless, despite their asymmetric psychological importance, both dimensions of speech are interwoven into the signal, and neither can be attended in the absence of the other. For this reason, some theories suggest that words and voices (broadly speaking) are encoded in memory as holistic exemplar representations, with the potential to facilitate later processing of perceptually similar words (e.g., Goldinger, 1996). Although such exemplar views are fairly extreme, other models propose that voice-specific information helps constrain immediate phonetic processing. For example, in the C-CuRE (Computing Cues Relative to Expectations) model, speech perception entails the simultaneous coding of multiple, overlapping spectral cues (Apfelbaum et al., 2014; McMurray & Jongman, 2011). Although some raw cues are reliable, C-CuRE optimizes processing by computing cue values in relative terms, such as appreciating that an F0 cue is high for one speaker, but low for another, and changing the evidence for voicing accordingly. Thus, voice-specific information is critically involved in “abstract” speech classification, from the earliest moments of perception. At the level of general principles, a theory such as C-CuRE offers an approach to content-addressable memory, and could (for example) be used to explain how a familiar melody can be recognized when played on a strange instrument.
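The core idea of relative cue coding can be illustrated with a toy example. The sketch below is a deliberate simplification, assuming that a cue is recoded by subtracting the current talker's mean value; as we understand it, C-CuRE itself uses regression to partial out talker and other known sources of variance (Apfelbaum et al., 2014; McMurray & Jongman, 2011). The F0 values, talker labels, and zero-threshold decision rule are hypothetical. The only point is that the same rule gives opposite answers for raw and talker-relative F0.

```python
from statistics import mean

# Hypothetical onset-F0 values (Hz) previously heard from two talkers.
EXPOSURE = [
    ("male", 100.0), ("male", 120.0),
    ("female", 200.0), ("female", 220.0),
]


def talker_expectations(tokens):
    """Mean cue value per talker: the 'expectation' against which cues are coded."""
    by_talker = {}
    for talker, f0 in tokens:
        by_talker.setdefault(talker, []).append(f0)
    return {talker: mean(values) for talker, values in by_talker.items()}


def relative_cue(talker, f0, expectations):
    """Cue value relative to what this talker usually produces."""
    return f0 - expectations[talker]


def classify_relative(talker, f0, expectations):
    # Higher-than-expected onset F0 is treated as evidence for voicelessness.
    return "voiceless" if relative_cue(talker, f0, expectations) > 0 else "voiced"


def classify_raw(f0, pooled_mean):
    # The same rule applied to raw F0, ignoring who is talking.
    return "voiceless" if f0 > pooled_mean else "voiced"


expectations = talker_expectations(EXPOSURE)
pooled = mean(f0 for _, f0 in EXPOSURE)

# 120 Hz is high for the male talker; 200 Hz is low for the female talker.
print(classify_relative("male", 120.0, expectations), classify_raw(120.0, pooled))
# prints: voiceless voiced
print(classify_relative("female", 200.0, expectations), classify_raw(200.0, pooled))
# prints: voiced voiceless
```

Because the pooled raw threshold (160 Hz) ignores talker identity, it reverses both decisions relative to the talker-relative code, which is the sense in which voice information constrains an "abstract" phonetic classification.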

Empirically, it is well-known that speaker variability across words creates processing “costs” for listeners. These are observed in perception1 in young adults (Mullennix, Pisoni, & Martin, 1989; Magnuson & Nusbaum, 2007; Nusbaum & Morin, 1992), hearing-impaired adults (Kirk, Pisoni, & Miyamoto, 1997), elderly adults (Sommers, 1996), and preschool children (Ryalls & Pisoni, 1997). Similar costs are observed in word learning: Explicit memory is reduced for word lists presented in multiple voices (Goldinger, Pisoni, & Logan, 1991; Martin, Mullennix, Pisoni, & Summers, 1989). The evidence suggests that attention is captured by abrupt speaker changes, perhaps because they force the listener to “recalibrate” their internal models for deriving the segmental content of words (Apfelbaum et al., 2014; Mullennix et al., 1989). At the same time, word-to-word speaker variations are also encoded into memory. Word perception typically improves with repeated presentations (the repetition effect; Jacoby & Brooks, 1984; Jacoby & Dallas, 1981; Jacoby & Hayman, 1987). When idiosyncratic surface details such as voice are preserved, this facilitation is enhanced (e.g., Church & Schacter, 1994; Schacter & Church, 1992).2

Across many studies, different-voice repetitions yield performance decrements, relative to same-speaker repetitions (Bradlow, Nygaard, & Pisoni, 1999; Craik & Kirsner, 1974; Fujimoto, 2003; Goh, 2005; Goldinger, 1996, 1998; Palmeri et al., 1993; Sheffert, 1998a, 1998b; Yonan & Sommers, 2000). Such voice specificity effects arise in both perceptual and memorial tasks. For example, Goldinger (1996) found long-lasting voice effects in explicit and implicit memory. And, by measuring perceptual similarity among the voices (using multidimensional scaling, MDS), Goldinger found that priming was highly specific: As the similarity between the study and test voices increased, so did recognition accuracy (see also Goh, 2005).

Beyond “off-line” memory measures, voice effects are occasionally (but not always) observed in perceptual RTs (e.g., Meehan & Pilotti, 1996), suggesting that episodic traces of encoded words affect the online perception of test words. For example, Goldinger (1998) found that shadowing RTs were shorter for same-voice (SV) word repetitions, relative to different-voice (DV) repetitions. Moreover, while shadowing, participants spontaneously imitated words they had previously encountered, even if the last encounter was several days earlier (Goldinger & Azuma, 2004), a finding that suggests great fidelity in voice-specific memory for prior tokens. These results were modeled with MINERVA 2 (Hintzman, 1986, 1988), an exemplar model in which each encountered word creates a new memory trace. The model successfully predicted both shadowing RTs and degrees of imitation, and was sensitive to word frequency and recency, as were participants. Episodic (or exemplar) theories of word perception have proliferated (e.g., Johnson, 2006; Pierrehumbert, 2001; Walsh, Möbius, Wade, & Schütze, 2010) because, like exemplar models of perceptual classification, they are able to explain “abstract” behavior while retaining sensitivity to specific experiences. The same dual benefits arise in relative coding models, such as C-CuRE (McMurray & Jongman, 2011), which derive abstract codes by using specific, voice-level cues. (Although C-CuRE has not been tested as a model for specificity effects, extending it to them appears relatively straightforward.) Although assumptions differ across models, they generally assume that segmental, semantic, and indexical information are encoded together. Presenting that same stimulus complex later will (potentially) activate its prior memory trace, affecting performance (although, as noted by Orfanidou, Davis, Ford & Marslen-Wilson, 2011, the locus of such effects may be response-based, rather than perceptual).
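The retrieval step of MINERVA 2 (Hintzman, 1986) is compact enough to sketch. Each trace is a vector of features coded +1, -1, or 0; a probe's similarity to a trace is their dot product divided by the number of features that are nonzero in either vector; a trace's activation is its similarity cubed; and echo intensity, a familiarity signal, is the summed activation across traces. In the minimal sketch below, the word and voice feature vectors are hypothetical and far smaller than in any published simulation; the point is only that a probe matching a stored trace in both word and voice features yields a stronger echo than the same word in a new voice.

```python
def similarity(probe, trace):
    """MINERVA 2 similarity: dot product over features relevant to either vector."""
    relevant = sum(1 for p, t in zip(probe, trace) if p != 0 or t != 0)
    if relevant == 0:
        return 0.0
    return sum(p * t for p, t in zip(probe, trace)) / relevant


def echo_intensity(probe, memory):
    """Sum of cubed similarities; cubing (which keeps the sign) favors close matches."""
    return sum(similarity(probe, trace) ** 3 for trace in memory)


# Hypothetical feature vectors: 6 "word" features followed by 4 "voice" features.
WORD = [1, -1, 1, 1, -1, 1]
VOICE_A = [1, 1, -1, -1]
VOICE_B = [-1, 1, 1, -1]

memory = [WORD + VOICE_A]  # one study episode: the word spoken in voice A

same_voice = echo_intensity(WORD + VOICE_A, memory)       # similarity 1.0 -> 1.0
different_voice = echo_intensity(WORD + VOICE_B, memory)  # similarity 0.6 -> about 0.216
```

Cubing is what makes the echo sensitive to exact matches: here a 40% drop in similarity produces a drop of nearly 80% in intensity.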

The Time-Course Hypothesis

Based on voice specificity effects, many theories of word perception now assume that both abstract and episodic information can affect processing. A theoretically critical question, however, regards the time-course of specificity effects in the flow of lexical processing. The question is whether episodic memory traces affect early perceptual stages, or whether abstract representations truly “drive” perceptual processing. (In this context, it is important to note that all models of word perception – whether episodic or abstract – can easily incorporate structures such as phonemes, which could theoretically dominate early processing; e.g., Wade et al., 2010.) McLennan, Luce, and colleagues suggest that voice specificity effects arise late in processing, after abstract sublexical and lexical units have already been perceptually resolved, or nearly resolved (Luce & Lyons, 1998; Krestar & McLennan, 2013; McLennan & Luce, 2005), a prediction called the time-course hypothesis. Conversely, others have suggested that episodes influence the earliest moments of perception (e.g., Creel, Aslin, & Tanenhaus, 2008; Goldinger, 1998).

To examine the time-course hypothesis, McLennan and Luce (2005) manipulated the relative ease of differentiation in a lexical decision task (by manipulating the phonotactic probabilities of the nonwords), and response delays in a shadowing task. They predicted that difficult lexical decisions and delayed shadowing would yield stronger specificity effects, owing to extended processing times. Indeed, when lexical decisions were difficult, voice specificity effects emerged. When the task was easier, only word repetition (priming) effects were observed. Additionally, voice effects only emerged in delayed shadowing RTs. This is the opposite of the pattern observed by Goldinger (1998), although the two studies are difficult to compare for several methodological reasons: They involved different experimental tasks, and included different numbers of words and voices. Further, one indexical variation used by McLennan and Luce (2005) was a manipulation of speaking rate, which may interact with voice-specific priming (see General Discussion). Given these differences, the existing studies leave the time-course of voice specificity effects unresolved.

A strength of the time-course hypothesis is its basis in a well-defined speech processing model, adaptive resonance theory (ART; Grossberg, 1980, 1999, 2003). According to ART (specifically, variants called ARTPHONE and ARTWORD; Grossberg, Boardman & Cohen, 1997; Grossberg & Myers, 2000), conscious speech perception is an emergent property of resonant feed-forward/feedback loops, acting upon speech units of all grain sizes. In brief, processing in ART spreads upward, as smaller perceptual units activate larger units, with feedback loops between levels. Initially, feature input activates items in working memory, which then activate list chunks from long-term memory. List chunks reflect prior learning, and correspond to any number of feature combinations (e.g., phonemes, syllables, words). Once list chunks are activated, items continue to feed activation upward via synaptic connections, and input-consistent chunks return activation in a self-perpetuating feedback loop (a resonance). This resonance binds sensory input into a coherent gestalt, allowing attention to be directed to the percept and an episodic memory trace to be formed (for reviews of ART, as it pertains to speech perception, see Goldinger & Azuma, 2003; Grossberg, 2003).

Of particular relevance, according to ART, high-frequency items in memory establish resonance quickly and efficiently when activated, relative to lower-frequency items. McLennan and Luce (2005) cited this ART principle to predict late-arriving specificity effects. By their account, frequently encountered items (e.g., phonemes, biphones, syllables, common words) are functionally abstract, whereas combinations of those items and indexical features are not. By definition, abstract features are far more common than any idiosyncratic variations (e.g., you hear the word “waffle” more often than you hear “waffle” in any particular voice). Therefore, sublexical and lexical information will achieve resonance sooner than voice-specific information. If voice specificity effects arise, it should be when performance is relatively slow, consistent with McLennan and Luce’s (2005) findings.

Although ART predicts that high-frequency elements will resonate more quickly than rare elements, voice specificity effects typically arise after recent presentation. (This is true for specificity effects that arise in reading, music perception, category learning, and other domains.) In many models, frequency and recency are nearly isomorphic, and repetition effects often overpower frequency effects (e.g., Scarborough, Cortese, & Scarborough, 1977). Indeed, low-frequency words elicit more repetition priming, relative to high-frequency words, and also larger voice specificity effects (Goldinger, 1998). In order for ART to explain priming, word perception must create memory traces, capable of enhancing resonance upon repetition. Thus, it is not entirely clear whether ART actually predicts the time-course hypothesis for recently encountered tokens: The recency of the whole stimulus (voice included) may overpower the frequency of its component parts, depending upon parameter settings in the model. As it happens, the empirical literature is somewhat mixed as well: McLennan (2007, p. 68) noted that “few studies offer support for the involvement of episodic representations during the immediate on-line perception of spoken language (e.g., by reporting reaction times).” Shortly thereafter, Creel, Aslin, and Tanenhaus (2008) observed early voice-specificity effects using the visual world paradigm.

Eye movements are an excellent medium for examining the time course of lexical access, and many studies show a tight correspondence between speech processing and eye movements. For example, Allopenna, Magnuson, and Tanenhaus (1998) found that eye fixations reveal phonological competition during spoken word comprehension. Similarly, Dahan et al. (2001) observed word frequency effects within the first moments of perception; when high- and low-frequency cohort competitors compete with a target, eye movements are preferentially drawn to the high-frequency competitor (see also Dahan & Tanenhaus, 2004; Magnuson, Tanenhaus, Aslin, & Dahan, 2003, for related findings). Creel et al. (2008) observed voice specificity effects within a few hundred milliseconds of target word onset. In their first experiment, participants viewed four pictures on a computer screen, two of which were onset competitors (e.g., cows and couch). Competitors were repeated 20 times, and were either produced by the same speaker (e.g., female-cows and female-couch) or by different speakers (e.g., female-cows and male-couch). After repeated exposures, participants’ initial eye movements to the competitor object decreased in different-voice pairs, relative to same-voice pairs, suggesting that participants encoded the voices and words, facilitating later perception. This effect emerged early in processing, before word offset. In a second experiment, Creel et al. replicated this pattern for novel words, although with a slightly longer time-course.

The Present Study

Although the results from Creel et al. (2008) appear to contradict the time-course hypothesis, there are methodological factors that complicate interpretation. Perhaps most saliently, the sheer number of word-voice repetitions used by Creel et al. may have “forced” their observed effect. In the visual world paradigm, icons are presented approximately 500 ms prior to speech onset: Given such intense training, participants may have learned to anticipate voices for certain images, allowing eye-movements to be triggered by raw acoustic cues, such as pitch or timbre, irrespective of phonetic processing. (Goldinger, 1998, also observed stronger voice specificity effects when word-voice pairs had been encoded multiple times.) From a theoretical perspective, we must emphasize the “stakes” of the current question. According to numerous accounts, whether they are pure exemplar theories (e.g., Goldinger, 1998; Wade et al., 2010) or relative coding theories (e.g., Apfelbaum et al., 2014; McMurray & Jongman, 2011), the essential function of indexical information is to provide early constraints on speech processing. That is, voice information (whether activated from stored exemplars, or coded directly from the signal) shapes ongoing perception, leading to criterion shifts, faster processing, spontaneous imitation, etc. In contrast, the time-course hypothesis makes the opposite claim: Almost all speech processing is accomplished by activating stable codes in memory, and voice-specific information is involved only as a late-arriving constraint of last resort. The evidence in favor of this latter view comes from repeated findings that voice-specific priming effects occur mainly when people respond relatively slowly (e.g., in a lexical decision task). Given its theoretically incisive nature, the time-course hypothesis requires further study.

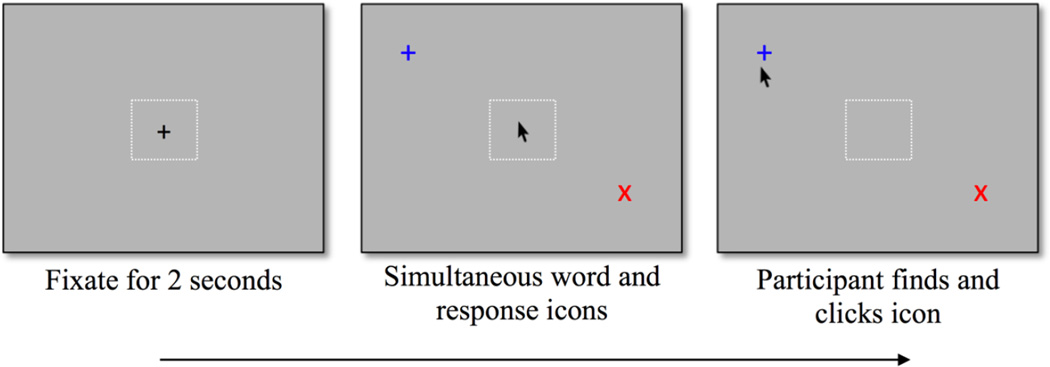

In the present study, we examined eye-movements to assess the time-course of voice specificity effects, while narrowing the methodological gap between the studies by McLennan and Luce (2005) and Creel et al. (2008). We developed a novel two-alternative classification method, which we applied to both lexical decision and semantic classification. The method combines spoken word classification (such as word/nonword) with simple visual search (a schematic trial is shown in Figure 1). In each trial, the participant first gazed at a central fixation cross. Once gaze was maintained for two seconds, the cross vanished and two things happened simultaneously: A spoken word (or nonword) was played over headphones, and two target objects (a red “×” and a blue “+”) appeared on the screen. Depending upon condition, these symbols represented different response options, such as word versus nonword. The symbols appeared at random locations across trials, but were constrained to always appear in the same visual half-field (e.g., the red “×” would always appear on the left side of the screen), at least 3.5 degrees (in visual angle) from central fixation. In this manner, the task was similar to standard classification with responses mapped to left- and right-hand buttons. The participant’s task was to quickly make a decision (e.g., word/nonword), and indicate that decision by finding and mouse-clicking the corresponding symbol. The task was conducted in two blocks per experiment, a “study” block that introduced the word-voice pairings and a “test” block that intermixed same- and different-voice repetitions (along with new words, used to estimate priming).

Figure 1.

Schematic trial outline. Each trial began with a gaze-contingent 2000-ms fixation cross, followed by the onset of a spoken word for either lexical decision (LD) or semantic classification (SC). ‘Word’ (‘larger than a toaster’) decisions were made by locating and clicking a blue ‘+’; ‘nonword’ (‘smaller than a toaster’) decisions were made by clicking a red ‘×’. Response options were randomly located within the same visual half-field throughout the experiment, but changed locations in every trial. The dashed box in the center did not appear in the procedure, but is shown to illustrate a buffer zone where response icons could not appear.

One advantage of the current method is that it afforded analysis of voice specificity effects at multiple points in time, including saccade initiation, target location with the eyes, and the eventual mouse-click. Testing saccade initiation, in particular, allowed us to assess whether voice specificity affects early word perception. In keeping with the spirit of McLennan and Luce (2005), we used low- and high-frequency words, allowing a natural division of faster and slower trials. (For reasons we address in the General Discussion, we did not manipulate task difficulty by changing the nonwords.) Manipulating word frequency also introduced a variable that we expected to “work,” allowing us to validate the method, which was important given its novelty. (We also conducted two small-scale experiments, using keyboards as the response mechanism, reported in Appendix A. These experiments verify that our new procedure produces similar behavioral patterns, but with faster RTs for the time-to-initiate measure, as described below.) We report two experiments on the time-course and generality of voice-specificity effects: In Experiment 1, participants completed consecutive blocks of the same task (either lexical decision or semantic classification), allowing assessment of within-task priming. Because such within-task priming may be response-based, rather than perceptual (Orfanidou et al., 2011), participants in Experiment 2 completed both tasks (in counterbalanced order), allowing assessment of cross-task, perceptual priming.

Experiment 1

Experiment 1 assessed the time-course of voice specificity effects in two tasks, lexical decision (LD) and semantic classification (SC). To examine the time-course, we used three dependent measures, taken at successive moments in each eye-tracking trial: the initial saccade off central fixation (indicating a “word/nonword” decision), fixation of the target icon, and the final mouse-click. There were several questions of interest. First was whether we could observe voice effects in the earliest measure, when participants first moved their eyes toward the left or right. Second was whether voice effects would become larger as the dependent measures were extended in time. Third was whether (at each tested interval) the observed voice effects would be related to overall RTs. The time-course hypothesis predicts that slower trials will show larger voice effects. If voice effects are mainly observed in the later-arriving dependent measures, or if they are positively correlated with RTs within measures, the time-course hypothesis would appear to be supported.3 Alternatively, if episodic traces affect perception from its earliest moments, we should observe voice effects in the faster dependent measures, and they should not necessarily require slower processing.

Method

Participants

Ninety-three Arizona State University students participated for partial course credit; all were native English speakers with no known hearing deficits and normal, or corrected-to-normal, vision. Four participants were dropped for having excessive error rates (as described below), leaving 48 (31 female, mean age 19.6 years) participants randomly assigned to lexical decision (LD) and 41 (27 female, mean age 20.1 years) assigned to semantic classification (SC).

Stimuli

Four speakers (two male, two female) recorded a list of 220 words (110 high-frequency, HF; 110 low-frequency, LF) and 80 nonwords (NW; see Table 1 for stimulus characteristics). Among the words, 140 were selected for use in the SC condition; 70 represented items larger than a toaster, and 70 represented items smaller than a toaster (with 35 HF and 35 LF words in each set). The remaining 80 words (and the nonwords) were used in the LD condition. For stimulus recording, words were spoken at a comfortable speaking rate, and nonword pronunciation was standardized by having the speakers shadow a recording of the experimenter pronouncing the items. The average word duration was 616 ms, with no difference based on lexical variables: HF and LF words were 611 and 621 ms, respectively, t(219) = 0.43, ns. Nonwords were longer (708 ms), but were not compared to words in any analyses. Among the four speakers, there were small differences in average item duration: Collapsing across words and nonwords, the male speakers averaged 633 and 651 ms per item, and the female speakers averaged 599 and 618 ms. In all experiments, every recorded token was used equally often in same-voice and different-voice (“switch”) trials. Thus, differences in speaking rate were controlled and would not systematically affect RTs.

Table 1.

Summary Statistics for the Stimulus Items

| Item Type | n | Letters | Syllables | Length (ms) | KF† | Subtitle Freq.‡ |
|---|---|---|---|---|---|---|
| Exp 1: LD | | | | | | |
| HF | 40 | 4.28 | 1.20 | 676 | 414.35 | 518.86 |
| LF | 40 | 4.82 | 1.20 | 717 | 7.57 | 11.23 |
| NW | 80 | 5.33 | 1.52 | 772 | n/a | n/a |
| Exp 1: SC | | | | | | |
| HF | 70 | 4.47 | 1.21 | 681 | 87.57 | 125.09 |
| LF | 70 | 4.78 | 1.32 | 693 | 10.35 | 9.81 |
| Exp 2 | | | | | | |
| HF | 56 | 4.48 | 1.20 | 680 | 84.10 | 115.21 |
| LF | 56 | 4.91 | 1.35 | 697 | 11.35 | 10.42 |
| NW | 80 | 5.33 | 1.52 | 772 | n/a | n/a |

Note. All values except n are means.

Procedure

Participants were tested individually in a quiet, dimly-lit room. The experiment was conducted using an EyeLink 1000 eye-tracker, with a table-mounted camera, recording at 1000 Hz. A chin-rest was used to maintain constant distance (55 cm) to the screen. Participants were first calibrated using a 9-dot calibration routine; all were successfully calibrated within two attempts. Following calibration, the task was explained and participants completed two practice trials, hearing a voice not used in the experiment proper. Each trial began with a central fixation cross: This was a + sign, in 18-point font, enclosed in an invisible interest area. The interest area was 100 × 100 pixels (with screen resolution 1024 × 768), subtending 3.3 × 3.1 degrees of visual angle. The participant had to maintain gaze in this interest area for 2000 ms before a trial would continue.

After the fixation cross disappeared, participants heard a spoken item over headphones (in one of four voices, randomly selected per trial). At the same time, two target symbols appeared in randomly selected locations on the left and right sides of the screen. Based on condition, participants quickly made a semantic classification or lexical decision by locating and clicking the appropriate response option (see Figure 1). To respond “word” (or “larger than a toaster”), participants located and clicked a blue ‘+.’ To respond “nonword” (or “smaller than a toaster”), participants located and clicked a red ‘×.’ These symbols were each shown in 22-point font, and were enclosed in interest areas that were 2.7 × 2.2 degrees of visual angle. These interest areas were used to record both target fixations and clicks. The response icons were moved randomly on each trial, but were constrained to always appear in the same visual half-field (e.g., the red ×, for a “nonword” response, would always appear on the right). This regularity allowed participants to begin executing lateral eye movements as the word unfolded in time (i.e., once they had sufficient information to make a decision), but they still had to find the response option on the screen. An invisible region surrounding fixation ensured that no response icon appeared closer than 3.5 degrees from fixation; the average distance of the response icon from fixation was 6.5 degrees.
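The placement constraint can be expressed as simple rejection sampling. This is a minimal sketch under stated assumptions, not the software used in the experiment: the conversion of roughly 30 pixels per degree is approximated from the 100-pixel fixation interest area spanning about 3.3 degrees, the exclusion zone is treated as a circle of 3.5 degrees (Figure 1 depicts it as a box), and the 40-pixel screen-edge margin, the response-to-side mapping, and all names are hypothetical.

```python
import math
import random

SCREEN_W, SCREEN_H = 1024, 768
CENTER_X, CENTER_Y = SCREEN_W / 2, SCREEN_H / 2
PX_PER_DEG = 100 / 3.3  # approximation from the ~3.3-degree, 100-px fixation area
MIN_ECC_DEG = 3.5       # no icon closer than this to central fixation
EDGE_MARGIN_PX = 40     # hypothetical margin keeping icons fully on screen

# Hypothetical mapping, fixed for a participant: nonword left, word right.
RESPONSE_SIDE = {"nonword": "left", "word": "right"}


def eccentricity_deg(x, y):
    """Approximate distance from central fixation, in degrees of visual angle."""
    return math.hypot(x - CENTER_X, y - CENTER_Y) / PX_PER_DEG


def place_icon(side, rng):
    """Sample a random point in the given half-field, outside the buffer zone."""
    if side == "left":
        x_lo, x_hi = EDGE_MARGIN_PX, CENTER_X
    else:
        x_lo, x_hi = CENTER_X, SCREEN_W - EDGE_MARGIN_PX
    while True:
        x = rng.uniform(x_lo, x_hi)
        y = rng.uniform(EDGE_MARGIN_PX, SCREEN_H - EDGE_MARGIN_PX)
        if eccentricity_deg(x, y) >= MIN_ECC_DEG:
            return x, y


def new_trial_layout(rng):
    """New icon positions on every trial; each response keeps its half-field."""
    return {response: place_icon(side, rng) for response, side in RESPONSE_SIDE.items()}


rng = random.Random(1)
layout = new_trial_layout(rng)
```

Because only the half-field is fixed, the lateral direction of the first saccade can be programmed as soon as a decision is reached, while the exact landing point still requires search.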

Following the practice trials, participants received clarifying instructions (as needed) before starting the experimental trials. In LD, participants completed 120 study trials; in SC, they completed 112 study trials. In either task, all four voices were used equally often. After the study trials and a 5-minute break, LD participants completed a test block of 160 trials (120 were repeated from Block 1), and SC participants completed a test block of 142 trials (112 repeated from Block 1). Response mapping was maintained from Block 1. Of the repeated trials in Block 2, half were same-voice (SV) repetitions, 25% were spoken by a new speaker of the opposite sex from the original speaker (across-gender), and 25% were spoken by a new speaker of the same sex (within-gender). Because this gender manipulation ultimately did not contribute any findings of interest, we report findings classified simply as SV and DV. In sum, the second block of each task contained new items, which were compared with repeated items to estimate priming, and the repeated items were split evenly between SV and DV trials, allowing calculation of voice effects. Results from the initial encoding block were not examined. The experiment took approximately 60 minutes.
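One way to realize this 50/25/25 split, while letting every recorded token serve equally often in same- and different-voice trials (as described under Stimuli), is to rotate repeated items through condition slots across counterbalancing lists. The article does not specify its list construction, so the sketch below is an illustration under assumptions: four lists, hypothetical speaker labels (M1, M2, F1, F2), and eight placeholder items.

```python
from collections import Counter

# Hypothetical speaker labels; the article reports two male and two female speakers.
SPEAKER_SEX = {"M1": "male", "M2": "male", "F1": "female", "F2": "female"}
# Half same-voice, a quarter within-gender change, a quarter across-gender change.
SLOTS = ["SV", "SV", "DV-within", "DV-across"]


def choose_block2_voice(study_voice, condition, cycle):
    """Choose the Block 2 speaker for a repeated item."""
    if condition == "SV":
        return study_voice
    sex = SPEAKER_SEX[study_voice]
    if condition == "DV-within":
        # The one other speaker of the same sex.
        return next(s for s, g in SPEAKER_SEX.items() if g == sex and s != study_voice)
    # Alternate between the two opposite-sex speakers across rotation cycles.
    opposite = sorted(s for s, g in SPEAKER_SEX.items() if g != sex)
    return opposite[cycle % len(opposite)]


def build_block2_list(study_items, list_index):
    """Assign each repeated (item, study_voice) pair a condition and a Block 2 voice.

    Shifting list_index by one moves every item to the next condition slot, so
    across four lists each item fills every slot once (SV twice, DV twice).
    """
    rows = []
    for i, (item, voice) in enumerate(study_items):
        position = i + list_index
        condition = SLOTS[position % len(SLOTS)]
        cycle = position // len(SLOTS)
        rows.append((item, voice, condition, choose_block2_voice(voice, condition, cycle)))
    return rows


# Eight placeholder study items, with each voice used equally often.
speakers = list(SPEAKER_SEX)
items = [(f"item{i}", speakers[i % len(speakers)]) for i in range(8)]
lists = [build_block2_list(items, k) for k in range(4)]
condition_counts = Counter(row[2] for row in lists[0])  # 4 SV, 2 within, 2 across
```

Rotating slots across lists, rather than drawing conditions at random, guarantees that any token-level differences (such as the small speaking-rate differences among speakers) are balanced across SV and DV conditions over participants.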

Results

Throughout this article, we focus on RTs from correct classification trials. Because our key question concerned the timing of initial saccades away from central fixation, we examined only trials in which the initial saccade was in the correct lateral direction. Four participants (two each in the LD and SC conditions) were removed for excessive directional bias, making rightward initial saccades on more than 80% of trials. Among the remaining participants, 94.7% of all trials were retained for RT analyses, and we do not consider accuracy further. (There were too few errors to allow statistical analysis, with too many design cells containing zero observations per participant. At a general level, however, no experiment showed evidence of speed-accuracy trade-offs: errors were more frequent in conditions with slower RTs.) Alpha was maintained at .05. Voice effects were tested as planned pairwise comparisons (separately for nonwords, high-frequency words, and low-frequency words); these were treated as multiple comparisons with Bonferroni correction. All analyses were conducted with both participants and items as random factors. For clarity, results are presented separately for LD and SC. Within each condition, we present analyses moving from the slowest dependent measure (mouse RTs) to the fastest (saccade initiation).
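The exclusion and correction rules above can be expressed as three small filters. The record fields (`correct`, `first_saccade_side`, `target_side`) are hypothetical names; the 80% bias cutoff and the .05 alpha come from the text. As an illustration, the by-participant p values for the LD TTF voice effects reported below (.03, .009, and .003 for HF words, LF words, and nonwords) are checked against the Bonferroni threshold of .05 / 3 ≈ .0167.

```python
def biased(trials, cutoff=0.80):
    """True if more than `cutoff` of a participant's initial saccades went rightward."""
    rightward = sum(t["first_saccade_side"] == "right" for t in trials)
    return rightward / len(trials) > cutoff


def retained(trials):
    """Keep correct trials whose initial saccade went toward the correct half-field."""
    return [t for t in trials
            if t["correct"] and t["first_saccade_side"] == t["target_side"]]


def bonferroni_reliable(p_values, alpha=0.05):
    """Flag each planned comparison as reliable at alpha / m (m = number of comparisons)."""
    threshold = alpha / len(p_values)
    return [p < threshold for p in p_values]


# By-participant voice-effect p values for HF, LF, and nonwords in LD TTF.
print(bonferroni_reliable([0.03, 0.009, 0.003]))  # [False, True, True]
```

The HF result falls between .0167 and .05, matching its description as marginal in the text.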

Lexical Decision (LD)

Validation Measures

Given the nature of our questions and the novelty of our method, we first conducted several analyses to ensure the validity of the data. In particular, our goal was to assess voice specificity effects at three points in time, measured by time to initiate saccades (TTI), time to fixate the target (TTF), and time to click with the mouse (RT). Analytically, this involved separate ANOVAs for each dependent measure, an approach that is conservative but cannot reveal whether the measures relate to one another: Does faster saccade initiation reliably predict faster target location or clicking? To check that the results were self-consistent, we first computed within-trial correlations, finding high positive values for all pairs of measures: TTI was correlated with TTF (r = .78) and with RT (r = .63), and TTF was correlated with RT (r = .87; all ps < .0001). These correlations verify that the results were orderly and, in particular, that the TTI measure truly relates to overall performance. Similar results were obtained for semantic classification and in Experiment 2 (all rs > .65) but are not reported in detail.

Mouse RTs

The “slowest” index of processing was RT, operationalized as the time participants took to click the response option. Table 2 shows all the LD results from Experiment 1; its upper section shows mouse RTs for HF words, LF words, and nonwords, each as a function of old/new status and repetition voice (for repeated items). Derived priming effects (new minus repeated) and voice effects (DV minus SV) are shown for each measure; asterisks indicate reliable effects. Mouse RTs were first analyzed in a 3 (Item Type: HF/LF/NW) × 3 (Voice: New, SV, DV) within-subjects, repeated-measures (RM) ANOVA. A parallel item-based ANOVA had the same factors, but Item Type was a between-items factor. In each ANOVA, planned comparisons (with Bonferroni adjustments) were used to assess priming and voice effects.
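The derived effects in Table 2 are simple differences of cell means: priming is new minus repeated, and the voice effect is DV minus SV. As a check, the sketch below recomputes them from the TTI means in Table 2 (the variable and function names are ours). Because the table reports rounded means, recomputing other rows can differ from the printed effects by about 1 ms.

```python
# TTI cell means (ms) from the lower rows of Table 2 (Experiment 1, LD).
TTI = {
    "HF": {"new": 662, "sv": 591, "dv": 637},
    "LF": {"new": 724, "sv": 610, "dv": 672},
    "NW": {"new": 768, "sv": 657, "dv": 717},
}


def derived_effects(cell):
    """Priming = new minus repeated; voice effect = DV minus SV."""
    return {
        "priming_sv": cell["new"] - cell["sv"],
        "priming_dv": cell["new"] - cell["dv"],
        "voice": cell["dv"] - cell["sv"],
    }


for item_type, cell in TTI.items():
    print(item_type, derived_effects(cell))
# HF {'priming_sv': 71, 'priming_dv': 25, 'voice': 46}
# LF {'priming_sv': 114, 'priming_dv': 52, 'voice': 62}
# NW {'priming_sv': 111, 'priming_dv': 51, 'voice': 60}
```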

Table 2.

RTs to new and repeated items (with resultant priming measures) in LDT, as a function of stimulus type and voice, Experiment 1.

| Condition | HF Words | Priming | Voice Effect | LF Words | Priming | Voice Effect | Nonwords | Priming | Voice Effect |
|---|---|---|---|---|---|---|---|---|---|
| Mouse-Click RTs | | | | | | | | | |
| New Item | 1621 (31) | | | 1763 (40) | | | 1848 (33) | | |
| Same Voice | 1565 (28) | 56** | | 1626 (31) | 137*** | | 1785 (29) | 63** | |
| Different Voice | 1605 (44) | 17, ns | 40, ns | 1678 (68) | 85** | 52, ns | 1804 (37) | 44* | 19, ns |
| Time-to-Fixate | | | | | | | | | |
| New Item | 893 (26) | | | 958 (35) | | | 1121 (31) | | |
| Same Voice | 845 (21) | 48* | | 870 (21) | 88** | | 1051 (22) | 70*** | |
| Different Voice | 877 (30) | 17, ns | 41* | 911 (30) | 48* | 41** | 1105 (33) | 17, ns | 53** |
| Time-to-Initiate | | | | | | | | | |
| New Item | 662 (23) | | | 724 (25) | | | 768 (31) | | |
| Same Voice | 591 (23) | 71*** | | 610 (21) | 114*** | | 657 (29) | 111*** | |
| Different Voice | 637 (23) | 25, ns | 46*** | 672 (26) | 52*** | 62*** | 717 (28) | 51*** | 60*** |

Notes: Standard errors shown in parentheses. "Priming" denotes the contrast of repeated items versus new items; "Voice Effect" denotes the comparison of same- and different-voice repetitions (DV minus SV). * p < .05; ** p < .01; *** p < .001; ns = not significant.

There was a main effect of Item Type, FS(2, 46) = 55.1, p < .001, ηp² = .71; FI(2, 157) = 38.9, p < .001, ηp² = .43: RTs were fastest to HF words, followed by LF words and nonwords, respectively. The main effect of Voice was also reliable, FS(2, 46) = 19.9, p < .001, ηp² = .46; FI(2, 156) = 22.0, p < .001, ηp² = .39, with the fastest RTs to same-voice repetitions, followed by different-voice repetitions, then new items. The Item Type × Voice interaction was not reliable, FS(4, 44) = 2.2, p = .09; FI(4, 314) = 1.9, p = .13, suggesting that voice effects were similar for all item types. Nevertheless, because of their central role in the experiment, we still evaluated voice effects for each stimulus type. Considering first HF words, reliable priming was observed for SV repetitions (pS = .009; pI < .001) but not for DV repetitions (pS and pI both > .35). The 40-ms voice effect was not reliable (pS and pI both > .11). Among the LF words, reliable priming was again observed for SV words (pS < .001; pI < .001) and also for DV words (pS = .003; pI < .001). Despite the numerical trend, the 52-ms voice effect was not reliable (pS = .09; pI = .12). Finally, among the nonwords, reliable priming was observed for SV items (pS < .001; pI = .006) and was marginal for DV items (pS = .05; pI < .002). The 19-ms voice effect was not reliable (pS and pI both > .39).

Time-to-fixate (TTF)

The “intermediate” processing index was participants’ average time to fixate the correct response icon, operationalized by a fixation of 100 ms or longer within an interest area surrounding either icon. As with the mouse RTs, these results (shown in the central rows of Table 2) were first analyzed in two 3 × 3 ANOVAs (by participants and items), including planned comparisons with Bonferroni-corrected alpha. We observed a main effect of Item Type, FS(2, 46) = 30.6, p < .001, ηp² = .51; FI(2, 157) = 27.0, p < .001, ηp² = .55: TTFs were fastest to HF words, followed in order by LF words and nonwords. The main effect of Voice was also reliable, FS(2, 46) = 16.7, p < .001, ηp² = .42; FI(2, 157) = 21.6, p < .001, ηp² = .45, with the fastest TTFs to SV repetitions, followed by DV repetitions, then new items. The Item Type × Voice interaction was not reliable, FS(4, 44) = 1.6, p = .43; FI(4, 314) = 1.5, p = .51, suggesting that voice effects were similar for all item types.

As with the mouse RTs, planned comparisons were used to evaluate priming and voice effects. For HF words, reliable priming was observed for SV repetitions (pS < .001; pI < .001) but not for DV repetitions (pS and pI both > .61). The 41-ms voice effect was marginal (pS = .03; pI < .06). Among the LF words, reliable priming was again observed for SV words (pS = .008; pI < .001) and also for DV words (pS = .002; pI = .003). The 41-ms voice effect was also reliable (pS = .009; pI = .002). Finally, among the nonwords, reliable priming was observed for SV items (pS < .001; pI < .001) but not for DV items (pS and pI both > .36). The 53-ms voice effect was reliable (pS = .003; pI < .001).
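The TTF operationalization (a fixation of at least 100 ms inside an icon's interest area) can be sketched as a scan over a trial's fixations. This assumes fixation events have already been parsed from the eye tracker's output, that times are measured from word onset, and that positions are in degrees from screen center; the `Fixation` record and the function names are hypothetical, and the sketch scores only the correct icon's interest area.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Fixation:
    start_ms: float  # assumed to be measured from word onset
    end_ms: float
    x: float         # degrees from screen center
    y: float


def in_interest_area(fix, center, width=2.7, height=2.2):
    """True if the fixation lies within the rectangular interest area around `center`."""
    cx, cy = center
    return abs(fix.x - cx) <= width / 2 and abs(fix.y - cy) <= height / 2


def time_to_fixate(fixations, target_center, min_dur_ms=100.0) -> Optional[float]:
    """Onset of the first fixation lasting at least `min_dur_ms` on the target icon, else None."""
    for fix in sorted(fixations, key=lambda f: f.start_ms):
        if fix.end_ms - fix.start_ms >= min_dur_ms and in_interest_area(fix, target_center):
            return fix.start_ms
    return None


fixations = [
    Fixation(0, 480, 0.0, 0.0),    # central fixation cross
    Fixation(510, 560, 6.0, 1.0),  # brief (50 ms) landing: too short to count
    Fixation(600, 900, 6.2, 0.8),  # qualifying fixation on the target
]
print(time_to_fixate(fixations, target_center=(6.3, 0.9)))  # 600
```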

Time-to-initiate (TTI)

On each trial, the earliest index of lexical decision was the latency to initiate a saccadic eye movement (in the correct direction) off the fixation cross. As the word unfolded in time, participants could initiate a leftward or rightward saccade at any moment. As with the preceding measures, these results (lower rows of Table 2) were first analyzed in two 3 × 3 ANOVAs (by participants and items), followed by planned comparisons. We observed a main effect of Item Type, FS(2, 46) = 35.3, p < .001, ηp² = .43; FI(2, 157) = 38.4, p < .001, ηp² = .41: TTIs were fastest to HF words, followed in order by LF words and nonwords. The main effect of Voice was also reliable, FS(2, 46) = 71.9, p < .001, ηp² = .60; FI(2, 157) = 93.1, p < .001, ηp² = .58, with the fastest TTIs to SV repetitions, followed by DV repetitions, then new items. The Item Type × Voice interaction was not reliable, FS(4, 44) = 1.8, p = .13; FI(4, 314) = 0.9, p = .39, suggesting that voice effects were similar for all item types. Among the HF words, reliable priming was observed for SV repetitions (pS < .001; pI < .001) but not for DV repetitions (pS and pI both > .14). The 46-ms voice effect was reliable (pS < .001; pI < .001). Among the LF words, reliable priming was again observed for SV words (pS < .001; pI < .001) and also for DV words (pS < .001; pI < .001). The 62-ms voice effect was also reliable (pS < .001; pI < .001). Finally, among the nonwords, reliable priming was observed for SV items (pS < .001; pI < .001) and for DV items (pS < .001; pI < .001). The 60-ms voice effect was reliable (pS < .001; pI < .001).
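Likewise, TTI and its direction check can be sketched as finding the first saccade with a meaningful horizontal displacement. The `Saccade` record, the word-onset time base, and the 1-degree `min_amplitude_deg` threshold (used here to skip small fixational movements) are all assumptions; the article does not report how saccades were detected. A trial whose first lateral saccade goes the wrong way returns `False` and would be dropped under the inclusion rule described earlier.

```python
from dataclasses import dataclass


@dataclass
class Saccade:
    onset_ms: float  # assumed to be measured from word onset
    start_x: float   # horizontal position, degrees from screen center
    end_x: float


def time_to_initiate(saccades, target_side, min_amplitude_deg=1.0):
    """Return (onset, correct_direction) for the first lateral saccade off fixation.

    Saccades whose horizontal amplitude is below `min_amplitude_deg` (a hypothetical
    threshold) are skipped; (None, False) means no lateral saccade was found.
    """
    for sac in sorted(saccades, key=lambda item: item.onset_ms):
        dx = sac.end_x - sac.start_x
        if abs(dx) >= min_amplitude_deg:
            side = "right" if dx > 0 else "left"
            return sac.onset_ms, side == target_side
    return None, False


saccades = [Saccade(300, 0.1, 0.4), Saccade(640, 0.2, 5.9)]
tti, correct = time_to_initiate(saccades, target_side="right")
print(tti, correct)  # 640 True
```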

Semantic Classification (SC)

Mouse RTs

The SC results are shown in Table 3; these were analyzed in the same manner as the LD data, with Frequency (HF, LF) in place of Item Type. The main effect of Frequency was not reliable, FS(1, 40) = 2.9, p = .09; FI(1, 140) = 1.8, p = .25; mean RTs to HF and LF words were 1671 and 1647 ms, respectively. The main effect of Voice was reliable, FS(2, 39) = 37.7, p < .001, ηp² = .66; FI(2, 139) = 49.0, p < .001, ηp² = .71, with the fastest RTs to SV repetitions, followed by DV repetitions, then new items. The Frequency × Voice interaction was also reliable, FS(2, 39) = 4.9, p < .02, ηp² = .18; FI(2, 139) = 6.6, p < .01, ηp² = .23. This interaction was driven by the new words, which showed a large (and backwards) frequency effect. In planned comparisons for the HF words, reliable priming was observed for SV repetitions (pS < .001; pI < .001) and for DV repetitions (pS < .001; pI < .001). The 39-ms voice effect was also reliable (pS = .005; pI = .003). Among the LF words, reliable priming was again observed for SV (pS < .001; pI < .001) and DV repetitions (pS < .001; pI < .001). The 36-ms voice effect was reliable by participants but only marginal by items (pS = .03; pI = .07).

Table 3.

RTs to new and repeated items (with resultant priming measures) in Semantic Classification, as a function of stimulus type and voice, Experiment 1.

| Condition | HF Words | Priming | Voice Effect | LF Words | Priming | Voice Effect |
|---|---|---|---|---|---|---|
| Mouse-Click RTs | | | | | | |
| New Item | 1803 (47) | | | 1721 (43) | | |
| Same Voice | 1586 (35) | 217*** | | 1593 (35) | 128*** | |
| Different Voice | 1625 (36) | 178*** | 39* | 1629 (38) | 92*** | 36* |
| Time-to-Fixate | | | | | | |
| New Item | 1083 (31) | | | 1136 (39) | | |
| Same Voice | 956 (22) | 127*** | | 974 (31) | 162*** | |
| Different Voice | 1014 (22) | 69** | 58** | 1044 (28) | 92** | 70** |
| Time-to-Initiate | | | | | | |
| New Item | 813 (27) | | | 837 (27) | | |
| Same Voice | 667 (25) | 96*** | | 662 (25) | 125*** | |
| Different Voice | 704 (25) | 59*** | 37** | 701 (26) | 86*** | 39** |

Notes: Standard errors shown in parentheses. "Priming" denotes the contrast of repeated items versus new items; "Voice Effect" denotes the comparison of same- and different-voice repetitions (DV minus SV). * p < .05; ** p < .01; *** p < .001.

Time-to-fixate (TTF)

The TTF results (central rows of Table 3) were analyzed in the same manner as the mouse RTs. In the overall ANOVA, there was a main effect of Frequency, FS(1, 40) = 5.6, p = .028, ηp² = .12; FI(1, 140) = 8.1, p < .01, ηp² = .35, with faster fixations to HF words (818 ms) than to LF words (852 ms). The main effect of Voice was also reliable, FS(2, 39) = 26.6, p < .001, ηp² = .40; FI(2, 139) = 23.9, p < .001, ηp² = .51, with the fastest TTFs to SV repetitions, followed by DV repetitions, then new items. The Frequency × Voice interaction was not reliable, FS(2, 39) = 0.6, p = .54; FI(2, 139) = 1.8, p = .28. In planned comparisons for HF words, reliable priming was observed for SV (pS < .001; pI < .001) and DV repetitions (pS < .001; pI < .001). The 58-ms voice effect was also reliable (pS = .004; pI = .006). Among LF words, reliable priming was again observed for SV (pS < .001; pI < .001) and DV trials (pS < .001; pI < .001). The 70-ms voice effect was also reliable (pS = .007; pI < .001).

Time-to-initiate (TTI)

The TTI results (lower rows of Table 3) were analyzed in the same manner as the preceding measures. In the overall ANOVA, there was no Frequency effect, FS(1, 40) = 0.3, p = .57; FI(2, 139) = 0.4, p = .65, with equivalent initiation times to HF (517 ms) and LF (522 ms) words. The main effect of Voice was reliable, FS(2, 39) = 83.4, p < .001, ηp² = .77; FI(2, 139) = 79.0, p < .001, ηp² = .61, with the fastest TTIs to SV repetitions, followed by DV repetitions, then new items. The Frequency × Voice interaction was not reliable, FS(2, 39) = 1.7, p = .19; FI(2, 139) = 0.9, p = .58. In planned comparisons for HF words, reliable priming was observed for SV (pS < .001; pI < .001) and DV repetitions (pS < .001; pI < .001). The 37-ms voice effect was also reliable (pS = .002; pI < .001). Among the LF words, reliable priming was again observed for SV (pS < .001; pI < .001) and DV trials (pS < .001; pI < .001). The 39-ms voice effect was also reliable (pS = .008; pI = .004).

Correlations

As noted earlier, a key prediction from the time-course hypothesis is that voice-specificity effects should grow stronger as responses become slower. We tested this prediction for all dependent measures, in both LD and SC, analyzing words only (excluding nonwords from LD), with data collapsed across participants. Before turning to the results, we note two important points. First, although this prediction is inherent to the time-course hypothesis, prior studies (e.g., McLennan & Luce, 2005; Krestar & McLennan, 2013) have divided items into “easier” and “harder” groups and examined results categorically rather than continuously. We include these analyses to illustrate a point elaborated in the General Discussion: the central prediction of the time-course hypothesis (larger specificity effects given slower RTs) may reflect statistical properties of RTs rather than any underlying psychological principle. Second, Tables 2 and 3 show that robust frequency effects were generally observed and that priming effects (old versus new) were generally stronger for LF words than for HF words. (The sole exception to this pattern was mouse-click RTs in SC.) Nevertheless, voice-specificity effects were nearly equivalent for LF and HF words, differing by only 6.5 ms on average. Thus, although the category-level data did not follow the prediction of the time-course hypothesis, it remained possible that item-level data would show such an effect.
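The 6.5-ms figure can be reproduced from the voice effects printed in Tables 2 and 3 by taking the signed LF minus HF difference for each of the six task-by-measure combinations and averaging. The dictionary layout is ours; the values are copied from the tables.

```python
# Voice effects (DV minus SV, ms) from Tables 2 and 3, stored as (HF, LF) pairs.
VOICE_EFFECTS = {
    ("LD", "mouse"): (40, 52),
    ("LD", "TTF"): (41, 41),
    ("LD", "TTI"): (46, 62),
    ("SC", "mouse"): (39, 36),
    ("SC", "TTF"): (58, 70),
    ("SC", "TTI"): (37, 39),
}

diffs = [lf - hf for hf, lf in VOICE_EFFECTS.values()]
mean_diff = sum(diffs) / len(diffs)
print(diffs, mean_diff)  # [12, 0, 16, -3, 12, 2] 6.5
```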

To test whether voice-specificity effects were indeed larger for more challenging items, we calculated mean RTs for each repeated word, separately for its SV and DV repetitions.⁴ The overall RT for each word was computed by averaging its SV and DV trials, and its voice effect was calculated as the difference score, DV minus SV. In every case, the correlations between mean RTs and voice effects were positive and reliable: In LD, the correlations for mouse RTs, TTF, and TTI were r = .19 (p < .05), r = .32 (p < .001), and r = .37 (p < .001), respectively. In SC, the same correlations were r = .16 (p < .05), r = .19 (p < .05), and r = .30 (p < .001). Thus, although the pattern is not apparent when words are grouped into LF and HF categories, there were reliable associations between item RTs and voice-specificity effects.
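The item-level analysis can be sketched as follows. We assume each word is summarized by two numbers, its mean SV RT and its mean DV RT (each already averaged over participants); the word's overall RT is the average of the two, and its voice effect is their difference. The function names and the three toy items are hypothetical, and significance testing is omitted.

```python
import math
from statistics import mean


def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def item_level_r(item_means):
    """item_means maps word -> (mean SV RT, mean DV RT); returns r(overall RT, DV - SV)."""
    overall = [(sv + dv) / 2 for sv, dv in item_means.values()]
    voice = [dv - sv for sv, dv in item_means.values()]
    return pearson_r(overall, voice)


toy = {"word_a": (600, 620), "word_b": (650, 690), "word_c": (700, 760)}
print(item_level_r(toy))  # 1.0 (the toy voice effects grow linearly with RT)
```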

Discussion

In Experiment 1, we created a modified 2AFC method and applied it to both lexical decision and semantic classification. The method uses lateral eye movements to provide an early measure of classification, with later measures of target location and selection. At a broad level, the results validated the method. Across all three dependent measures, the results were orderly and expected: Repetition priming and voice specificity effects were observed at all time points in both LD and SC (although voice effects were not reliable in mouse-click RTs in LD, there were consistent numerical trends), and word frequency effects were generally observed.

With respect to the time-course hypothesis, Experiment 1 provided mixed evidence. Applied to the present method, the hypothesis most naturally predicts that voice effects should be stronger in later-arriving measures of lexical processing, such as mouse-click RTs. Nevertheless, we observed robust voice effects in saccade initiation times, and these effects were also evident in the later measures. Indeed, for repeated words in LD, people initiated saccades in the correct direction an average of 12 ms after word offset; in SC, this value was 68 ms. (These results are reminiscent of those of Creel et al., 2008, who observed voice-specific effects on eye movements prior to word offset.) On the other hand, for all dependent measures, we also observed positive associations: As response times grew slower, voice-specificity effects grew larger, as the time-course hypothesis would predict.

Taken together, the results from Experiment 1 show that voice-specificity effects can arise early in lexical processing, although those effects were positively correlated with overall RTs to different words. A key question, however, is the degree to which the effects in Experiment 1 reflected response priming rather than perceptual priming. In SV trials, participants made the same response (e.g., “word”) to the same recorded token they had heard in Block 1. Strong repetition effects were observed, but they cannot be clearly interpreted as perceptual effects (see Orfanidou et al., 2011). Instead, repetitions could serve as episodic memory probes, reactivating recent responses made to those same items. Although such an interpretation may be interesting in its own right, our present goal was to assess perceptual specificity effects. In Experiment 2, we therefore crossed the study and test procedures from Experiment 1, such that half the participants completed LD first, followed by SC, and the other half completed both tasks in the opposite order. This crossed procedure made response-driven priming unlikely, allowing greater focus on perceptual processes.

Experiment 2

The goal of Experiment 2 was to determine whether voice-specific priming would occur across changes in task. Participants again completed an initial block of trials, either LD or SC, and then completed the complementary task in a second block.

Method

Participants

Fifty native English speakers (31 female, mean age 19.9 years) with no self-reported hearing deficits and with normal or corrected-to-normal vision participated in exchange for partial course credit. Half the participants were randomly assigned to complete LD first, followed by SC; the other half completed the tasks in the reverse order. Two participants were dropped from analysis for excessive errors arising from directional bias (as in Experiment 1, both made rightward initial saccades on more than 85% of trials), leaving 48 in the final analysis.

Stimuli

The materials from Experiment 1 were used in Experiment 2.

Procedure

The procedures were identical to those of Experiment 1, except that all participants completed both tasks in counterbalanced order. Half of the participants first completed 160 LD trials (80 words and 80 nonwords). After a short break, they completed 112 SC trials, including 80 words that had been presented in Block 1. The remaining participants first completed a block of 64 SC trials, followed by 160 LD trials in Block 2, with 64 words repeated from Block 1. In the second block of either condition, half the repeated words retained their original presentation voices, and half were changed to one of the three other voices.

Results

After removing errors, 91% of trials were retained for RT analyses; we do not consider accuracy further. Because the LD and SC blocks were presented in counterbalanced order (to avoid task-order effects), all analyses were conducted on Block 2 data averaged across the two task orders. (For interested readers, Appendix B shows results from the different task orders. Perhaps not surprisingly, prior SC produced greater priming in LD than prior LD produced in SC. For purposes of drawing conclusions, we limit analyses to the full design.) The results (for words only) are shown in Table 4, which is arranged like Tables 2 and 3. As in Experiment 1, we first conducted omnibus ANOVAs (by both participants and items), with planned comparisons for priming and voice effects.

Table 4.

RTs to new and repeated items (with resultant priming measures) as a function of stimulus type and voice, Experiment 2.

| Condition | HF Words | Priming | Voice Effect | LF Words | Priming | Voice Effect |
|---|---|---|---|---|---|---|
| Mouse-Click RTs | | | | | | |
| New Item | 1865 (48) | | | 1843 (37) | | |
| Same Voice | 1775 (46) | 90*** | | 1716 (49) | 127*** | |
| Different Voice | 1786 (50) | 79*** | 11, ns | 1790 (40) | 53* | 74* |
| Time-to-Fixate | | | | | | |
| New Item | 1026 (39) | | | 999 (24) | | |
| Same Voice | 936 (30) | 90** | | 928 (29) | 71** | |
| Different Voice | 969 (32) | 57* | 33, ns | 972 (30) | 27, ns | 44* |
| Time-to-Initiate | | | | | | |
| New Item | 786 (22) | | | 850 (24) | | |
| Same Voice | 734 (20) | 52** | | 773 (26) | 77** | |
| Different Voice | 780 (22) | 6, ns | 46* | 811 (23) | 39* | 38* |

Notes: Results are collapsed over the counterbalanced order of tasks. Standard errors shown in parentheses. "Priming" denotes the contrast of repeated items versus new words. "Voice Effect" denotes the comparison of same- and different-voice repetitions (DV minus SV). * p < .05; ** p < .01; *** p < .001; ns = not significant.

Mouse RTs

The upper rows of Table 4 show mouse-click RTs for high- and low-frequency words, each as a function of old/new status and voice (for repeated items). Derived priming and voice effects are shown, as before. Mouse RTs were first analyzed in a 2 (Frequency: HF/LF) × 3 (Voice: New, SV, DV) RM ANOVA. There was no main effect of Frequency, FS(1, 47) = 0.61, p = .71; FI(1, 79) = 1.31, p = .60; the numerical trend was for a “backwards” frequency effect, with RTs 26 ms faster to LF words than to HF words. The main effect of Voice was reliable, FS(2, 46) = 36.8, p < .001, ηp² = .62; FI(2, 77) = 28.0, p < .001, ηp² = .39, with the fastest RTs to SV repetitions, followed by DV repetitions, then new items. The Frequency × Voice interaction was not reliable, FS(1, 47) = 1.20, p = .49; FI(2, 77) = 0.99, p = .58. Among the HF words, reliable priming was observed for SV (pS < .001; pI < .001) and DV repetitions (pS = .006; pI = .002), but the 11-ms voice effect was not reliable. Among the LF words, reliable priming was again observed for SV (pS < .001; pI < .001) and DV trials (pS = .03; pI = .009), and the 74-ms voice effect was also reliable (pS = .008; pI = .008).

Time-to-fixate (TTF)

The TTF results (central rows of Table 4) were analyzed in the same manner as the mouse RTs. The main effect of Frequency was again numerically backwards (by 11 ms) and unreliable. The main effect of Voice was reliable, FS(2, 46) = 10.9, p < .001, ηp² = .32; FI(2, 77) = 15.5, p < .001, ηp² = .35, with the fastest TTFs to SV repetitions, followed by DV repetitions, then new items. The Frequency × Voice interaction was not reliable. For HF words, reliable priming was observed for SV (pS = .005; pI = .001) and DV repetitions (pS = .004; pI = .002), but the 33-ms voice effect was not reliable (pS and pI both > .12). Among the LF words, reliable priming was again observed for SV trials (pS = .003; pI < .001) but not for DV trials (pS and pI both > .09), and the 44-ms voice effect was reliable (pS = .03; pI = .007).

Time-to-initiate (TTI)

The TTI results (lower rows of Table 4) were analyzed in the same manner as the preceding measures. The main effect of Frequency was reliable, with a 45-ms effect in the expected direction, FS(1, 47) = 16.0, p < .001, ηp² = .36; FI(1, 79) = 21.8, p < .001, ηp² = .43. The main effect of Voice was also reliable, FS(2, 46) = 7.4, p < .01, ηp² = .24; FI(2, 77) = 9.1, p < .01, ηp² = .30, with the fastest TTIs to SV repetitions, followed by DV repetitions, then new items. The Frequency × Voice interaction was not reliable. Among the HF words, reliable priming was observed for SV repetitions (pS = .007; pI = .008) but not for DV repetitions (pS and pI both > .29), and the 46-ms voice effect was reliable (pS = .02; pI = .006). Among the LF words, reliable priming was again observed for SV (pS = .003; pI < .001) and DV trials (pS = .005; pI = .002), and the 38-ms voice effect was reliable (pS = .009; pI = .004).

Correlations

As in Experiment 1, the group-level data in Table 4 provide little support for the time-course hypothesis: The overall voice specificity effect was 22 ms larger for LF words than for HF words, but that difference mainly reflected the mouse RTs. At the level of individual items, however, we again found positive correlations between overall RTs (averaging SV and DV repetitions) and voice effects (DV minus SV). Combining all words, the observed correlations for mouse RTs, TTF, and TTI were r = .22 (p < .05), r = .29 (p < .01), and r = .28 (p < .01), respectively. Thus, although the predicted pattern was not evident at the categorical level, there were reliable associations between item RTs and voice-specificity effects.

Discussion

Experiment 2 conceptually replicated Experiment 1 while reducing the likelihood of response priming. Notably, the response times shown in Table 4 were slower overall (by roughly 150 ms, on average) than those in Tables 2 and 3, suggesting that response priming likely did affect Experiment 1. Nevertheless, in both experiments, reliable priming occurred and voice-specificity effects showed the same general pattern: They were numerically evident in all dependent measures and were robust in the earliest measure, TTI. Indeed, among HF words, voice effects were reliable only in the TTI data (among the LF words, they were reliable in all measures). Finally, as in Experiment 1, although we found little evidence for the time-course hypothesis (McLennan & Luce, 2005) at the broad level of LF versus HF words, we found reliable correlations between item RTs and voice effects. These results confirm that specificity effects can arise early in perception: In Experiment 2, laterally correct eye movements were initiated approximately 76 ms after the offsets of repeated words. There remained, however, some evidence consistent with the time-course hypothesis, which we consider further below.

General Discussion

The present study was motivated by a theoretical discrepancy in the literature regarding the time-course of voice specificity effects in spoken word recognition. Although this may seem a fairly nuanced topic, it carries considerable theoretical weight: Whereas episodic models (e.g., Goldinger, 1996; 1998) and relative-coding models (McMurray & Jongman, 2011) predict that indexical information mediates early perceptual processes, the time-course hypothesis (McLennan & Luce, 2005), derived from ART (Grossberg & Myers, 2000), predicts that abstract sublexical elements will dominate early perception, with indexical effects arising only when processing is slow. Prior studies have lent support to both views, with evidence for early voice effects (e.g., Creel et al., 2008; Goldinger, 1998) but also evidence that such effects “arrive late” in processing (e.g., Krestar & McLennan, 2013; Luce & Lyons, 1998; McLennan & Luce, 2005). Reconciling these prior studies is challenging, however, as their methods differed in numerous respects. In this study, we examined the time-course hypothesis using a method that allowed detection of early voice effects while retaining a familiar 2AFC methodology.

The present method gave us several “moments” at which voice-specific priming could be observed (time to initiate the first saccade, time to fixate the target, and time to click). Although it was theoretically possible that voice effects would be absent in initial saccades, arising only in “later” measures, we did not consider this likely: On any given trial, the sequence of RTs was derived from a coherent underlying event, the recognition and classification of a spoken word. We therefore treated saccade-initiation times as the critical dependent measure; the later-arriving measures served to validate that those eye movements were meaningful.

In the visual world paradigm (e.g., Dahan et al., 2008; Magnuson et al., 2003), eye movements are linked to fixated objects, each with its own name, so the “meanings” of eye movements can be directly inferred. In our case, quick left or right saccades had several layers of validation: First, they were in the correct direction more than 90% of the time. Second, they showed effects of classic variables (word frequency and repetition). Third, initial saccades typically predicted equivalent psychological effects later in time, such as correct mouse clicks. We are therefore confident that initial saccades reflected meaningful perceptual processes, and their implications were clear: From the earliest moments of word perception, effects such as frequency are evident (Dahan et al., 2001). For repeated words, effects of indexical repetition were observed very early, often before the spoken word was complete. This was seen in Experiment 1, which could have involved response priming (Orfanidou et al., 2011), and also in Experiment 2, in which response priming was likely minimized. In the remainder of this article, we address three further points: the interpretive challenge posed by the time-course hypothesis, the status of voice-specificity effects in the literature, and their implications for psycholinguistic theory.

The time-course hypothesis

In a series of articles, McLennan and colleagues have advanced the time-course hypothesis for voice-specificity effects in word perception. In summarizing their findings, McLennan and Luce (2005, p. 306) wrote, “… indexical variability affects participants’ perception of spoken words only when processing is relatively slow and effortful.” This hypothesis can be stated in two ways, one more restrictive than the other. The more restrictive interpretation is that “voice-specific priming will not be observed when lexical processing is fluent.” This strong version has been implicitly adopted in various studies: In addition to McLennan and Luce (2005), other reports have been framed in terms of null voice-specificity effects in “easy” conditions, with effects emerging only in “hard” conditions (e.g., Krestar & McLennan, 2013; McLennan & González, 2012; Vitevitch & Donoso, 2011). The present results, along with other findings of early-arriving voice effects (e.g., Creel et al., 2008; Creel & Tumlin, 2011), appear to refute the strong hypothesis: We presented relatively easy and hard words (based on frequency) for classification and observed robust voice-specificity effects within 100 ms of word offset, equivalently for LF and HF words. In a recent article, Maibauer, Markis, Newell and McLennan (2014) also found early voice-specificity effects when famous voices were used.

Given such results, a strong version of the time-course hypothesis appears untenable. Moreover, in a scientific sense, it is difficult to defend a theoretical position that “certain conditions will create null results, and others will create positive results,” because null results can arise for many unimportant reasons. The less restrictive version of the hypothesis focuses on the implied interaction, or the continuous relationship between variables: Voice-specificity effects are predicted to increase when lexical processing takes more time. This weaker version is easier to support empirically, appears to have support in the prior literature, and is more scientifically tractable. Indeed, in the present data, although we observed early voice effects, we also consistently found significant, positive correlations between word RTs and derived voice effects. Such findings, however, raise a question: How convincing is the evidence for the time-course hypothesis? We suggest that the evidence is surprisingly weak, for three key reasons. First, prior studies supporting the time-course hypothesis are typically underpowered. Second, many prior studies have a critical design flaw (in our view) that allows an alternative account of their results. Third, we suggest that the key finding (larger voice effects with slower lexical processing) may be an inevitable outcome, given the nature of RT distributions. We briefly address the first and second points, then focus on the third.
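One way the third point could arise is illustrated by a small simulation, offered under our own assumptions rather than as a definitive account. Every simulated item has the same true voice effect (40 ms), so there is no psychological link between difficulty and specificity. Even so, because Cov((SV + DV)/2, DV − SV) = (Var(DV) − Var(SV))/2, a positive item-level correlation appears whenever DV item means are more variable than SV item means, as would be expected if RT variability grows with the mean. All parameter values (item RT scale, noise SDs, item count, seed) are hypothetical.

```python
import math
import random
from statistics import mean


def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def simulate_item_r(sd_sv, sd_dv, n_items=5000, voice_ms=40.0, sd_item=80.0, seed=7):
    """Correlate item mean RT with DV - SV when every item has the SAME true voice effect.

    Cov((SV + DV) / 2, DV - SV) = (Var(DV) - Var(SV)) / 2, so extra noise in the
    DV item means produces a positive correlation on its own.
    """
    rng = random.Random(seed)
    overall, voice = [], []
    for _ in range(n_items):
        item_rt = rng.gauss(700.0, sd_item)              # item difficulty (hypothetical scale)
        sv = item_rt + rng.gauss(0.0, sd_sv)
        dv = item_rt + voice_ms + rng.gauss(0.0, sd_dv)  # constant true voice effect
        overall.append((sv + dv) / 2)
        voice.append(dv - sv)
    return pearson_r(overall, voice)


print(round(simulate_item_r(sd_sv=20.0, sd_dv=20.0), 2))  # near 0: equal noise
print(round(simulate_item_r(sd_sv=20.0, sd_dv=40.0), 2))  # positive: noisier DV means
```

The sketch shows only that a positive correlation does not by itself require larger true voice effects for slower items; it says nothing about which mechanism operated in any particular data set.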

Experimental power

The time-course hypothesis is theoretically well motivated (from ART) and could be correct. Its prior empirical support, however, is not compelling. McLennan and Luce (2005) first articulated the time-course hypothesis and provided its initial support. In their Experiment 2 (which most closely resembles the current study), people classified words and nonwords spoken in two voices (one male, one female) in two consecutive blocks: The first block exposed listeners to a set of words and voices; the second block had new and old words, with some old words presented in new voices. Reliable priming (studied versus new) was observed whether processing was easy (Experiment 2A) or hard (2B). However, no reliable voice effect was observed in the easy condition (an 8-ms trend), whereas one was observed in the hard condition (35 ms). This result established the time-course pattern and has been widely cited, but the experimental details are not compelling. In particular, the experiment included only 24 stimuli, of which only 12 words constituted the critical trials. Four words were control items; the remaining eight were repeated from Block 1, with four SV and four DV trials. Although many participants were tested (72 each in Experiments 2A and 2B), this design yields a very limited data set, both mathematically and linguistically. Mathematically, each participant contributed only eight relevant data points per experiment, divided into two distributions of four RTs each. Linguistically, the stimuli were also limited: 12 monosyllabic words (bear, bee, book, bowl, car, cat, deer, fly, key, leg, nail, nut) featuring six phonological onsets. In our view, even though the results were reliable by participants and by items, such a narrow data set is difficult to interpret.

Limited sampling in a single study is not necessarily cause for great concern. However, McLennan and González (2012) later used the same stimulus set and reported more statistical details, allowing us to estimate their power to detect voice-specificity effects at .5; that is, a .5 probability of detecting a true effect, should one be present. (In the present study, observed power ranged from .87 to .99 for all tests of voice-specific priming.) As noted by Francis (2012, 2013), low-power experiments are more likely to produce both false-positive and false-negative outcomes. McLennan and González (2012) also included 72 participants per test, suggesting that the low power derived from using few items per condition. Krestar and McLennan (2013) used the same stimulus items, with a similar subdivision into conditions.
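Why so few items per condition can cap power, even with 72 participants, can be illustrated with a small Monte Carlo sketch. It is not a reconstruction of the power analysis reported above: the true voice effect, trial-level SD, and between-participant SD are hypothetical values, and the two-tailed test compares the t statistic to a normal critical value, which only approximates the t distribution when the number of participants is large.

```python
import math
import random
import statistics


def simulated_power(n_participants, items_per_condition, true_effect_ms=25.0,
                    trial_sd_ms=150.0, subject_sd_ms=20.0, n_sims=2000,
                    crit=1.96, seed=1):
    """Monte Carlo power for a within-subject DV-minus-SV comparison.

    All default values are hypothetical illustrations, not estimates from
    any published study. |t| is compared to a normal critical value (crit),
    an approximation that is reasonable when n_participants is large.
    """
    rng = random.Random(seed)
    # Each participant's SV and DV means average `items_per_condition`
    # trials, so trial noise in their difference shrinks as sqrt(2 / k).
    diff_noise_sd = trial_sd_ms * math.sqrt(2.0 / items_per_condition)
    hits = 0
    for _ in range(n_sims):
        # Per-participant voice effect = true effect + individual variation
        # + measurement noise from the limited number of items.
        diffs = [true_effect_ms
                 + rng.gauss(0.0, subject_sd_ms)
                 + rng.gauss(0.0, diff_noise_sd)
                 for _ in range(n_participants)]
        mean = statistics.fmean(diffs)
        se = statistics.stdev(diffs) / math.sqrt(n_participants)
        if abs(mean / se) > crit:
            hits += 1
    return hits / n_sims


# Same hypothetical effect and 72 participants, varying items per condition.
for k in (4, 10, 40):
    print(k, simulated_power(72, k))
```

Holding participants constant, the printed power rises steeply as items per condition increase, which is the pattern the argument above relies on; the specific numbers depend entirely on the assumed parameters.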

In a different example, Vitevitch and Donoso (2011) examined the time-course hypothesis using a “change deafness” paradigm. In their first experiment, listeners made lexical decisions, which were made relatively easy or hard by manipulating the nonwords. Harder nonwords slowed “word” decisions by 82 ms. Halfway through the experiment, the voice producing the items changed from one male speaker to another. Afterward, participants were asked whether they had noticed (1) “anything unusual” and (2) a speaker change. Of 22 people in the “easy” condition, 14 (64%) detected the change in speaker, compared to 19 of 22 (86%) in the “hard” condition. There was clearly a difference: Five more people detected the voice change when the task was more challenging. However, nearly two-thirds of participants in the easy condition also noticed the change, and the difference between conditions was small and tested with low power, as each person provided a single data point. In a second experiment, using a confidence-scale method, the observed power for a similar effect was approximately .5, which is again quite low.
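With one binary response per person, the detection counts above form a 2 × 2 table, and the power of comparing them can be explored by simulation. The sketch below is illustrative only: it uses a two-sided Fisher exact test, which is our choice for small counts and not necessarily the test the original authors reported, and it assumes, hypothetically, that the observed detection rates are the true rates.

```python
import random
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p for the 2x2 table [[a, b], [c, d]].

    Rows are conditions; columns are "detected" / "not detected". Tables at
    least as extreme as the observed one are those whose hypergeometric
    probability does not exceed the observed table's probability. Integer
    arithmetic keeps that comparison exact.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    lo, hi = max(0, col1 - (n - row1)), min(row1, col1)

    def ways(x):
        # Number of ways to place x of the col1 "detected" cases in row 1.
        return comb(col1, x) * comb(n - col1, row1 - x)

    observed = ways(a)
    extreme = sum(ways(x) for x in range(lo, hi + 1) if ways(x) <= observed)
    return extreme / comb(n, row1)


def simulated_power(p_easy, p_hard, n_per_group, alpha=0.05, n_sims=2000, seed=1):
    """Share of simulated experiments in which the exact test reaches alpha,
    assuming (hypothetically) that p_easy and p_hard are the true rates."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        k_easy = sum(rng.random() < p_easy for _ in range(n_per_group))
        k_hard = sum(rng.random() < p_hard for _ in range(n_per_group))
        p = fisher_exact_two_sided(k_easy, n_per_group - k_easy,
                                   k_hard, n_per_group - k_hard)
        if p < alpha:
            hits += 1
    return hits / n_sims


easy, hard, n = 14, 19, 22  # detection counts reported above
print(f"easy: {easy / n:.0%}, hard: {hard / n:.0%}")
print("Fisher exact p:", fisher_exact_two_sided(easy, n - easy, hard, n - hard))
print("simulated power at n = 22 per group:", simulated_power(easy / n, hard / n, n))
```

Raising `n_per_group` in the last call shows how many participants a single-response design of this kind would need before a difference of this size is detected reliably.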

An alternative explanation

As noted earlier, we opted not to manipulate lexical decision difficulty in the current experiments by changing the nonword foils. This decision was partly motivated by a desire to maintain parallel structure between the LD and SC tasks. Our deeper motivation, however, was to avoid the approach typically taken in prior experiments (e.g., Krestar & McLennan, 2013; McLennan & González, 2012; McLennan & Luce, 2005; Vitevitch & Donoso, 2011). The standard approach (making nonwords more or less word-like) invites an alternative explanation of the results. We focus on McLennan and Luce (2005) as a relevant example: As noted, listeners were assigned to groups for either easy or hard LD. Words were constant across conditions, while nonword foils were changed, allowing comparison of the same words under more or less fluent conditions. In the easy condition, the nonwords were very low-probability phonotactic sequences; for example, people had to discriminate BACON from THUSHTHUDGE. By contrast, in the hard condition, the nonwords differed from target words by one phoneme in final position, such as BACON versus BACOV. Another experiment was similar, but used monosyllables. By making nonwords more word-like in the hard condition, McLennan and Luce (2005, p. 312, Table 4) increased “word” RTs by an average of 33 ms, and also observed voice-specific priming. This finding motivated the time-course hypothesis: Slower lexical processing gives voice-specific features greater opportunity to affect the resonant dynamics of lexical access.

Although the time-course account is consistent with the data, does it provide the most likely interpretation? Do 33 extra milliseconds of processing truly change lexical dynamics so much that voice effects emerge? We suggest a more parsimonious interpretation. Specifically, changing the nonword foils does not merely increase average RTs; it also dramatically changes how attention must be allocated to the bottom-up speech signal. Given unusual nonwords such as THUSHTHUDGE, people can likely perform lexical decision with minimal attention to the signal, because the words should essentially “pop out.” In the hard condition, however, words and nonwords can only be discriminated by listening carefully for the final segment, and the listener cannot predict when that segment will arrive as the signal unfolds in time. The manipulation therefore forces participants to attend to fine-grained details in the speech signal. In previous studies, voice-specific priming has been most robust when listeners focus on “surface” features of words, such as judging how clearly words are enunciated (Goldinger, 1996; Schacter & Church, 1992).

According to the time-course hypothesis, the challenging lexical decision task slows processing, and that extra processing time allows voice effects to emerge. We suggest instead that changing the nonwords shifts the listener’s focus of attention, forcing careful bottom-up monitoring. This monitoring, in turn, creates episodic memory traces that more strongly represent superficial (e.g., voice) details of the signal. Note that this account is also entirely consistent with classic exemplar models (e.g., the attention hypothesis in Logan, 1988).

What is the correct null hypothesis?

Setting prior studies aside, the present experiments did produce the pattern predicted by the time-course hypothesis. In all measures, as mean RTs for repeated words increased, the magnitudes of voice effects also increased. This follows directly from the less restrictive version of the hypothesis, as noted above, and the present experiments had high observed power (always > .85). Nevertheless, a difficult question arises: What relationship between the variables should be expected under the null hypothesis? RTs have a natural lower “boundary” and are typically distributed in a roughly ex-Gaussian fashion (Balota & Spieler, 1999; Ratcliff & Rouder, 1998). As a result, making RTs faster (for example, by repetition priming) is inherently nonlinear: Words that typically elicit fast RTs can be improved only slightly by priming, whereas words that typically elicit slow RTs can show large benefits. By extension, in the current study any effect (such as voice-specific priming) that is compared across relatively fast and slow items will typically be larger at the slower end of the spectrum. This has long been recognized as the problem of response time scaling: As a general rule, effects tend to grow as RTs increase.
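The lower-boundary argument can be made concrete with a deliberately simple sketch. It assumes, hypothetically, that baseline RTs are ex-Gaussian, that no RT (primed or not) can fall below a fixed floor, and that priming subtracts the same constant from every item. These parameter values are illustrative and are not a model of any experiment reported here.

```python
import random
import statistics


def exgauss(rng, mu, sigma, tau):
    # Ex-Gaussian draw: Gaussian component plus an exponential tail (mean tau).
    return rng.gauss(mu, sigma) + rng.expovariate(1.0 / tau)


def priming_by_speed(n_items=10000, floor_ms=450.0, benefit_ms=100.0, seed=1):
    """Mean priming effect for the fastest and slowest quarters of items.

    Hypothetical assumptions: baseline RTs are ex-Gaussian and cannot fall
    below floor_ms; priming subtracts a constant benefit_ms from every item,
    but a primed RT is also bounded below by floor_ms.
    """
    rng = random.Random(seed)
    base = sorted(max(floor_ms, exgauss(rng, 550.0, 60.0, 150.0))
                  for _ in range(n_items))
    # Items near the floor have little room to speed up.
    effects = [rt - max(floor_ms, rt - benefit_ms) for rt in base]
    quarter = n_items // 4
    fastest = statistics.fmean(effects[:quarter])
    slowest = statistics.fmean(effects[-quarter:])
    return fastest, slowest


fast, slow = priming_by_speed()
print(f"fastest quarter: {fast:.1f} ms, slowest quarter: {slow:.1f} ms")
```

Even though the nominal benefit is identical for every item, the floor truncates it for fast items, so the measured effect is smaller at the fast end of the distribution.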

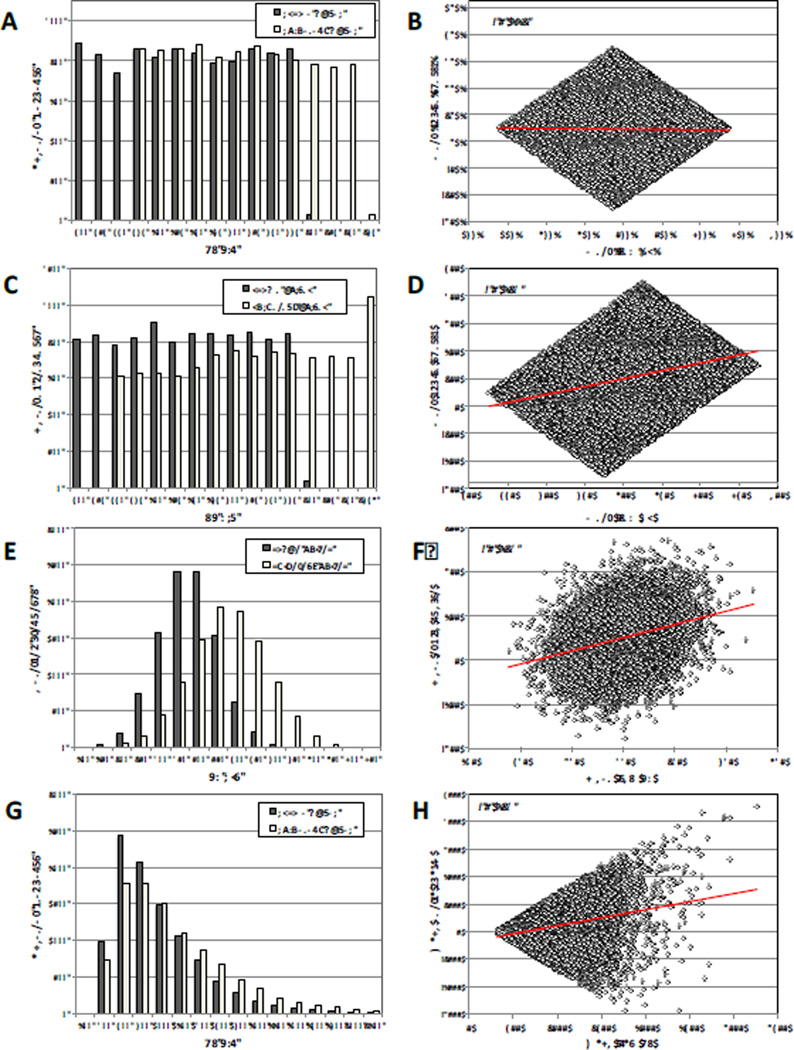

To better understand the correct null hypothesis for these experiments, we conducted a series of simulations, beginning with simple scenarios and moving toward more faithful representations of real RT experiments. In the first four simulations (see Figure 2), we generated 10,000 artificial RTs for “same-voice” and “different-voice” repetitions of words, using different distributional assumptions. For each simulation, we then calculated mean item RTs and “voice effects” (DV minus SV). The upper row of Figure 2 (panels A and B) shows the results from shifted, flat distributions, with no relation between the mean and variance: SV word RTs were randomly selected values between 500 and 800 ms; DV word RTs were randomly selected values between 575 and 875 ms. Panel A shows the frequency distributions for observed RTs, and panel B shows no relationship between mean RT and voice effect. Of course, natural RT distributions are not flat, and their standard deviations typically increase (approximately linearly) with the mean (Wagenmakers & Brown, 2007). To better approximate natural RTs, we next changed the means and standard deviations simultaneously and tested different underlying distributions.

Figure 2.

Simulated sampling of “same-voice” and “different-voice” RTs, showing the relationship that emerges between item RT and voice effects. Panels A, C, E, and G show RT frequency distributions for hypothetical SV and DV words, sampled from flat distributions with equal variance, flat distributions with unequal variance, Gaussian distributions with unequal variance, and Weibull distributions with unequal variance, respectively. Panels B, D, F, and H show the associations that emerge between mean item RTs ((SV+DV)/2) and the size of voice effects (DV-SV).
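The panel A and B scenario is easy to reproduce in outline. The sketch below draws independent SV and DV values from the equal-width flat distributions described above and correlates each item's mean RT with its voice effect; the helper names and seed are ours, for illustration only.

```python
import math
import random


def pearson(xs, ys):
    # Plain Pearson correlation (avoids requiring Python 3.10+).
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def item_rt_vs_voice_effect(sample_sv, sample_dv, n=10000, seed=1):
    """Correlation between item mean RT, (SV + DV) / 2, and voice effect,
    DV - SV, when SV and DV values are drawn independently."""
    rng = random.Random(seed)
    sv = [sample_sv(rng) for _ in range(n)]
    dv = [sample_dv(rng) for _ in range(n)]
    means = [(s + d) / 2 for s, d in zip(sv, dv)]
    effects = [d - s for s, d in zip(sv, dv)]
    return pearson(means, effects)


# Panels A-B: equal-width flat distributions, DV shifted up by 75 ms.
r_flat = item_rt_vs_voice_effect(lambda rng: rng.uniform(500, 800),
                                 lambda rng: rng.uniform(575, 875))
print(f"r = {r_flat:.3f}")
```

The near-zero result follows from a general identity: Cov((SV + DV)/2, DV - SV) = (Var DV - Var SV)/2, which vanishes whenever the two distributions have equal variance, regardless of their means.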

In the second row of Figure 2 (panels C and D), we again sampled from flat distributions, but SV words ranged from 500 to 800 ms, whereas DV words ranged from 550 to 950 ms. As shown in panel D, a positive relationship emerged between item RT and voice effects (across simulations, the correlation ranged from .25 to .31). In the third row (panels E and F), we sampled from Gaussian distributions (SV words: M = 500, SD = 75; DV words: M = 600, SD = 100), and the same relationship emerged. Finally, the bottom row of Figure 2 (panels G and H) shows RTs sampled from Weibull distributions (SV mean = 850, DV mean = 1000, with a 100-ms increase in standard deviation). Weibull distributions have the same general shape as ex-Gaussian distributions and therefore resemble typical RTs (Balota & Spieler, 1999). As shown, the same relationship between item mean and voice effect emerged again.
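For independent draws, the expected correlation between item mean and voice effect has a closed form, r = (Var DV - Var SV) / (Var DV + Var SV), so the simulations above can be checked against it. The sketch below repeats the panel C through H scenarios under that independence assumption. The Weibull shape parameter (1.5) is our hypothetical choice: with a fixed shape, the SD is proportional to the mean, and this value makes the SD rise by roughly 100 ms from SV to DV.

```python
import math
import random


def pearson(xs, ys):
    # Plain Pearson correlation (avoids requiring Python 3.10+).
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def item_rt_vs_voice_effect(sample_sv, sample_dv, n=10000, seed=1):
    # Correlate (SV + DV) / 2 with DV - SV for independent SV and DV draws.
    rng = random.Random(seed)
    sv = [sample_sv(rng) for _ in range(n)]
    dv = [sample_dv(rng) for _ in range(n)]
    return pearson([(s + d) / 2 for s, d in zip(sv, dv)],
                   [d - s for s, d in zip(sv, dv)])


def expected_r(var_sv, var_dv):
    # Cov((SV+DV)/2, DV-SV) = (var_dv - var_sv) / 2 for independent draws,
    # which gives r = (var_dv - var_sv) / (var_dv + var_sv).
    return (var_dv - var_sv) / (var_dv + var_sv)


def weibull_sampler(mean, shape):
    # Scale chosen so the Weibull mean equals `mean` (random.weibullvariate
    # takes scale first, then shape).
    scale = mean / math.gamma(1.0 + 1.0 / shape)
    return lambda rng: rng.weibullvariate(scale, shape)


SHAPE = 1.5  # hypothetical; SD then rises by roughly 100 ms from SV to DV

results = {
    "flat, unequal variance (C-D)": (
        item_rt_vs_voice_effect(lambda rng: rng.uniform(500, 800),
                                lambda rng: rng.uniform(550, 950)),
        expected_r(300 ** 2 / 12, 400 ** 2 / 12)),
    "Gaussian (E-F)": (
        item_rt_vs_voice_effect(lambda rng: rng.gauss(500, 75),
                                lambda rng: rng.gauss(600, 100)),
        expected_r(75 ** 2, 100 ** 2)),
    "Weibull (G-H)": (
        item_rt_vs_voice_effect(weibull_sampler(850, SHAPE),
                                weibull_sampler(1000, SHAPE)),
        # With a fixed shape, variance is proportional to mean squared.
        expected_r(850 ** 2, 1000 ** 2)),
}
for label, (r_sim, r_theory) in results.items():
    print(f"{label}: simulated r = {r_sim:.3f}, expected r = {r_theory:.3f}")
```

For both the flat and Gaussian cases the closed form gives .28, inside the .25 to .31 range reported above, which shows that unequal variance alone is enough to produce the positive relationship.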

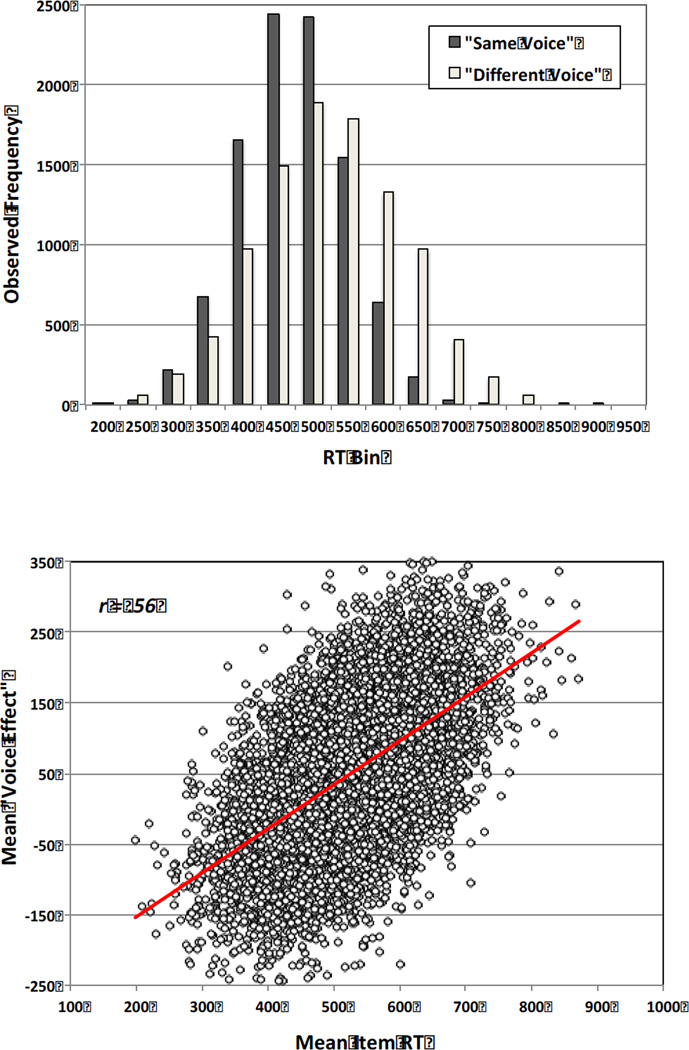

We next conducted tests with more realistic core assumptions. In the simulations shown in Figure 2, there was no relationship between the SV and DV values generated for each word; they were independent samples. Presumably, real words have some “intrinsic RT” that can be adjusted upward or downward by manipulations such as repetition priming. To test the observed relationship under this assumption, we generated 10,000 normally distributed RTs for SV words (M = 500, SD = 75) and created DV versions by adding normally distributed values (M = 50, SD = 100). In this manner, DV and SV values were tethered to each other but adjusted by random sampling, which could produce occasional “backwards” priming effects. Not surprisingly, the final SV and DV values were highly correlated (around r = .75). More surprisingly, item RTs were also highly correlated with voice effects, with typical values around r = .55 (see Figure 3). When this simulation approach was repeated using Weibull distributions, the correlation was approximately r = .35. This relationship is not affected by “normalizing” transformations, such as log- or z-transforming the data. As expected, the relationship can be reduced by procedures that compress the slow tail of the distribution, such as replacing all scores more than two standard deviations above the mean with the cutoff score.5 Such procedures selectively suppress the slowest RTs, which reduces the observed correlation.

Figure 3.

Simulated sampling of “same-voice” and “different-voice” RTs, with the extra assumption that both versions of each word are loosely related to each other. The upper panel shows RT frequency distributions for hypothetical SV and DV words: SV words were sampled from a Gaussian distribution, and DV versions were created by adding normally distributed changes to those base RTs. The lower panel shows the association that emerges between mean item RTs ((SV+DV)/2) and the size of voice effects (DV-SV).
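The tethered simulation can be sketched in the same way. Each DV value below is its SV value plus a normally distributed change (M = 50, SD = 100), as described above. Under these assumptions the expected correlation between item mean and voice effect is 5000 / sqrt(8125 × 10000), or about .55. The upper-tail cutoff step replaces scores more than two SDs above the sample mean with the cutoff; the sketch prints the correlation before and after so that the size of any reduction can be inspected, and it does not assume in advance how large that reduction will be for Gaussian data.

```python
import math
import random


def pearson(xs, ys):
    # Plain Pearson correlation (avoids requiring Python 3.10+).
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def cap_upper_tail(values, n_sd=2.0):
    # Replace scores more than n_sd SDs above the mean with the cutoff itself.
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    cutoff = mean + n_sd * sd
    return [min(v, cutoff) for v in values], cutoff


def tethered_simulation(n=10000, seed=1):
    rng = random.Random(seed)
    sv = [rng.gauss(500, 75) for _ in range(n)]
    # DV = SV plus a normally distributed change (M = 50, SD = 100); some
    # changes are negative, giving occasional "backwards" voice effects.
    dv = [s + rng.gauss(50, 100) for s in sv]
    return sv, dv


def mean_vs_effect(sv, dv):
    # Correlate item mean RT, (SV + DV) / 2, with voice effect, DV - SV.
    means = [(s + d) / 2 for s, d in zip(sv, dv)]
    effects = [d - s for s, d in zip(sv, dv)]
    return pearson(means, effects)


sv, dv = tethered_simulation()
sv_capped, sv_cut = cap_upper_tail(sv)
dv_capped, dv_cut = cap_upper_tail(dv)
print(f"raw r = {mean_vs_effect(sv, dv):.3f}")
print(f"after upper-tail cutoff: r = {mean_vs_effect(sv_capped, dv_capped):.3f}")
```

Swapping `rng.gauss` for a skewed sampler (for example, `rng.weibullvariate`) lets the same code check how the result changes with RT-like distributions.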