Highlights

- Simultaneous MEG recordings from two persons during live interaction.
- Left-lateralized involvement of the sensorimotor cortex during natural conversation.
- Phasic modulation of the sensorimotor rhythm indexing preparation for one's own speaking turn.

Abbreviations: MEG, magnetoencephalography; TFR, time–frequency representation

Keywords: Sensorimotor activation, Mu rhythm, Conversation, Magnetoencephalography

Abstract

Although the main function of speech is communication, the brain bases of speaking and listening are typically studied in single subjects, leaving unsettled how brain function supports interactive vocal exchange. Here we used whole-scalp magnetoencephalography (MEG) to monitor modulation of sensorimotor brain rhythms related to the speaker vs. listener roles during natural conversation.

Nine dyads of healthy adults were recruited. The partners of a dyad were engaged in live conversations via an audio link while their brain activity was measured simultaneously in two separate MEG laboratories.

The levels of the ∼10-Hz and ∼20-Hz rolandic oscillations depended on the speaker vs. listener role. In the left rolandic cortex, these oscillations were consistently (by ∼20%) weaker during speaking than during listening. At turn changes in the conversation, the level of the ∼10-Hz oscillations increased transiently about 1.0 or 2.3 s before the start of one's own turn, while the partner was still speaking.

Our findings indicate left-hemisphere-dominant involvement of the sensorimotor cortex during own speech in natural conversation. The ∼10-Hz modulations could be related to preparation for starting one’s own turn, already before the partner’s turn has finished.

1. Introduction

Although speech is an interpersonal communication tool, the brain basis of speech production and perception is typically studied in single isolated subjects, and often even with isolated speech segments, such as phonemes, syllables, and words. The main reasons for this experimental bias are certainly methodological: brain processes are more difficult to study during connected speech, and even more so during natural conversation, where the same experimental condition cannot be repeated to improve the signal-to-noise ratio of the measured brain activity.

Still, the interaction likely affects the brain activity that we observe in relation to both speaking and listening. In other words, dissecting one part of the interaction mechanism and studying it in isolation, out of context, may hinder unraveling the brain basis of smooth conversational interaction.

According to Pickering and Garrod [1], dialog is the most natural form of language use because everyone who understands language and is able to speak is able to hold a dialog. In contrast, monolog is considered to require learning. During conversation, people mutually adjust their linguistic style [2], as well as their speech rhythms and the movements of their head, trunk, and hands [3]. Such alignment occurs even when the verbal exchanges are only one word at a time [4].

This strong alignment between conversation partners is also reflected in turn-taking: across different languages, turn changes typically occur within ±250 ms of the end of the previous speaker's turn [5]. This gap is likely too short for the listener to react to the end of the speech and only then start his/her own turn. The conversation partners therefore have to be aligned at several perceptual and cognitive levels to predict the end of the partner's speech.

We were interested in finding out how cortical brain rhythms are modulated while two people are engaged in a free conversation. Previous studies have shown that the sensorimotor mu rhythm, comprising ∼10- and ∼20-Hz frequency components [6], [7], is dampened before and during brisk movements. The mu rhythm is modulated by articulatory movements as well, but bilaterally in contrast to the contralaterally dominant modulations associated with hand and leg movements [8], in agreement with the bilateral innervation of the lower face. However, the results on speech-related brain-response lateralization are still quite scattered, and they may depend on the kind of “speech” used in each experiment: segments of speech (such as isolated words), connected speech [9], or real conversation with alternating speaker and listener roles.

In the present study we used a new experimental setup, recently developed in our laboratory [10], [11], to measure MEG signals simultaneously from two participants engaged in a dialog. We then quantified how the speaker vs. listener role during natural conversation affects the dynamics of the sensorimotor oscillations.

2. Methods

2.1. Participants

Eighteen healthy volunteers (mean ± SEM age 27.6 ± 2.1 years, range 21–49; 6 female, 12 male; all right-handed: Edinburgh Handedness Inventory mean 92.6, range 71–100) participated in the experiment. The subjects were arranged into pairs (two mixed-gender pairs, two female pairs, and five male pairs); four pairs knew each other before the experiment. The study was approved in advance by the Ethics Committee of the Helsinki and Uusimaa Hospital District. All subjects gave written informed consent before participation.

2.2. Task

Each pair had a conversation of about 7 min on a given topic (4 pairs about hobbies, 5 pairs about holiday activities); no other instructions were given about the nature of the conversation.

2.3. Data collection

The MEG recordings were conducted simultaneously at the Brain Research Unit of Aalto University and at the BioMag Laboratory of the Helsinki University Hospital; these laboratories are located 5 km apart. We used a custom-made dual-MEG setup with an Internet-based audio link; the system enables simultaneous recording of brain and behavioral data from two measurement sites with a one-way audio delay of 50 ± 2 ms [11]. MEG was recorded at both sites with similar 306-channel neuromagnetometers (Elekta Oy, Helsinki, Finland; Elekta Neuromag at the Brain Research Unit and Neuromag Vectorview at the BioMag Laboratory). The subjects conversed via the audio link, using headphones and microphones.

The 306-channel neuromagnetometer comprises 102 pairs of orthogonal planar gradiometers and 102 magnetometers. The MEG data were bandpass-filtered to 0.1–300 Hz and digitized at 1000 Hz.

2.4. Analysis

2.4.1. Audio recordings

We monitored both subjects' speech by recording the audio signals (sampled at 48 kHz), which we bandpass-filtered to 300–3400 Hz. We then computed the envelopes (absolute values of the Hilbert transforms of the signals), lowpass-filtered them at 400 Hz to avoid aliasing, and downsampled them to the MEG sampling frequency (1000 Hz). The downsampled envelopes were then synchronized with the MEG data with 1-ms accuracy [11].
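The envelope extraction described above can be sketched in Python with NumPy alone. This is an illustration under stated assumptions, not the pipeline actually used: the text does not specify the filter types, so ideal (brick-wall) FFT-domain filters stand in for them, the envelope is taken from the FFT-based analytic signal, and downsampling from 48 kHz to 1 kHz keeps every 48th sample after the 400-Hz low-pass. The function names are hypothetical.

```python
import numpy as np


def fft_filter(x, fs, lo=None, hi=None):
    """Zero-phase brick-wall band-pass/low-pass filter (illustrative stand-in)."""
    spec = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    keep = np.ones(freqs.shape, dtype=bool)
    if lo is not None:
        keep &= freqs >= lo
    if hi is not None:
        keep &= freqs <= hi
    return np.fft.irfft(spec * keep, n=len(x))


def hilbert_envelope(x):
    """Absolute value of the analytic signal built in the frequency domain."""
    n = len(x)
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(x) * h))


def speech_envelope(audio, fs_audio=48000, fs_meg=1000):
    """Band-pass 300-3400 Hz, Hilbert envelope, 400-Hz low-pass, downsample to fs_meg."""
    assert fs_audio % fs_meg == 0, "sketch assumes an integer downsampling factor"
    band = fft_filter(audio, fs_audio, lo=300.0, hi=3400.0)
    env = hilbert_envelope(band)
    env = fft_filter(env, fs_audio, hi=400.0)  # anti-aliasing before decimation
    return env[:: fs_audio // fs_meg]          # 48 kHz -> 1 kHz keeps every 48th sample
```

For a 1-kHz tone amplitude-modulated at 4 Hz, the returned 1-kHz series follows the 4-Hz modulation. In practice a causal or zero-phase IIR/FIR design (or a dedicated decimation routine) would replace the brick-wall filters, which ring on non-periodic signals.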

2.4.2. MEG data

We used the temporal extension of signal space separation (tSSS) with a segment length of 300 s and a correlation limit of 98% [12], [13] to suppress interference in the MEG signals and to transform them to each subject's mean head position (MaxFilter™ v. 2.2; Elekta Oy, Helsinki, Finland). Further data analyses were performed with the FieldTrip toolbox [14] running under MATLAB (v. 2014a, MathWorks, Natick, MA, USA).

We used independent component analysis (ICA) to remove artifacts caused by eye blinks, eye movements, magnetocardiograms, and speaking-related muscular activity [15]. For that purpose, the MEG data were decomposed into 60 independent components, and the components' time courses and spatial distributions were visually examined. The number of removed components varied between subjects (on average 6 components, reflecting e.g. eye blinks, horizontal eye movements, cardiac and muscular activity, and external sources). Note, however, that this procedure did not completely remove the speech-related muscular artifacts at the lowest row of MEG sensors.

2.4.3. Comparison of speaking and listening periods

We manually divided the MEG data into speaking and listening epochs by determining the start of each person's speech from the envelope of the audio recording. Each speaker's turn was considered to end when the conversation partner started her/his turn. If the utterances of the two persons overlapped, or if a speaker's turn lasted less than 1 s, the data (30% of the total) were discarded.
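The epoch rules above (turn onset from the speech envelope, turn end at the partner's onset, rejection of overlapping or sub-1-s turns) can be expressed as a short Python sketch. It assumes that turn onsets have already been marked (the text states this was done manually), that onsets alternate between speakers, and that boolean voice-activity masks at the MEG sampling rate exist for both speakers; the function name and inputs are hypothetical. A retained turn is a speaking epoch for its speaker and, at the same time, a listening epoch for the partner.

```python
import numpy as np


def turn_epochs(onsets, vad_a, vad_b, fs=1000, min_dur=1.0):
    """Split a conversation into retained speaking turns.

    onsets       : list of (time_s, speaker) tuples sorted by time, speaker "A" or "B"
    vad_a, vad_b : hypothetical boolean voice-activity masks sampled at fs
    Returns a list of (start_s, end_s, speaker) for the turns that are kept.
    """
    n = len(vad_a)
    overlap = np.asarray(vad_a, dtype=bool) & np.asarray(vad_b, dtype=bool)
    turns = []
    for i, (start, spk) in enumerate(onsets):
        # A turn ends when the partner starts; the last turn ends with the recording.
        end = onsets[i + 1][0] if i + 1 < len(onsets) else n / fs
        if end - start < min_dur:
            continue  # shorter than 1 s: discarded
        i0, i1 = int(round(start * fs)), int(round(end * fs))
        if overlap[i0:i1].any():
            continue  # both voices present within the turn: discarded
        turns.append((start, end, spk))
    return turns
```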

Thereafter, we computed the power spectra (0–50 Hz; FFT with a 1-s Hanning window, giving a frequency resolution of 1 Hz) separately for the speaking and listening epochs of the conversation.
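A minimal NumPy sketch of this spectral estimate for one channel follows. It assumes non-overlapping 1-s segments whose power spectra are averaged within a condition (the text specifies only the window type and length); with a 1000-sample window at 1000 Hz the bin spacing is fs/N = 1 Hz. The function name is hypothetical.

```python
import numpy as np


def epoch_power_spectrum(epochs, fs=1000, fmax=50):
    """Average power spectrum over 1-s Hanning-windowed segments of one channel.

    epochs : list of 1-D arrays, e.g. all speaking epochs (or all listening epochs)
    """
    nwin = fs                      # 1-s window -> resolution fs / nwin = 1 Hz
    win = np.hanning(nwin)
    spectra = []
    for x in epochs:
        for k in range(len(x) // nwin):       # non-overlapping segments (assumption)
            seg = x[k * nwin:(k + 1) * nwin]
            spectra.append(np.abs(np.fft.rfft(seg * win)) ** 2)
    freqs = np.fft.rfftfreq(nwin, d=1.0 / fs)
    keep = freqs <= fmax                      # 0-50 Hz
    return freqs[keep], np.mean(spectra, axis=0)[keep]
```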

We analyzed signals from the 102 pairs of planar gradiometers (average spectral power from the two orthogonal gradiometers of each pair) in the 7–13-Hz (referred to as ∼10 Hz) and 15–25-Hz (∼20 Hz) frequency bands. For each of these frequency bands of interest separately, we normalized the MEG power values by the maximum power during listening periods across eight pre-selected sensors over the left and right rolandic cortices (four sensors in each hemisphere).
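The band averaging and normalization can be sketched as follows. Assumptions: band power is taken as the mean over the 1-Hz bins inside each band (the text does not say whether bins were averaged or summed), the spectra of each gradiometer pair are stored in an array of shape (pairs, 2, frequencies), and the indices of the eight pre-selected rolandic sensors are supplied by the user. Names are hypothetical.

```python
import numpy as np

BANDS = {"~10 Hz": (7, 13), "~20 Hz": (15, 25)}


def band_power(freqs, psd_pairs, band):
    """Band power per sensor unit from gradiometer-pair spectra (pairs x 2 x freqs)."""
    lo, hi = band
    sel = (freqs >= lo) & (freqs <= hi)
    pair_mean = psd_pairs.mean(axis=1)     # average the two orthogonal gradiometers
    return pair_mean[:, sel].mean(axis=1)  # one value per sensor unit


def normalize(speak, listen, rolandic_idx):
    """Divide both conditions by the maximum listening power over the selected sensors."""
    ref = listen[rolandic_idx].max()
    return speak / ref, listen / ref
```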

We then compared the group-level topographic maps of MEG power during speaking vs. listening periods, separately for the ∼10- and ∼20-Hz bands. For the statistical comparison, we used a dependent-samples t-test, yielding t-value maps. A cluster-based permutation test (Monte Carlo method, 1000 randomizations) was then used to identify clusters of statistically significant t values, i.e. significant differences between speaking and listening epochs (for further information, see Ref. [16]). The sensors of the lowest row of the MEG helmet (n = 23) were excluded from the analysis because they were most affected by speaking-related artifacts. The statistical analysis thus included 79 of the 102 sensor units in the MEG helmet.
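The logic of the cluster-based permutation test [16] can be illustrated with a simplified NumPy sketch. It is not FieldTrip's implementation. Its assumptions are a user-supplied boolean sensor adjacency matrix, a fixed cluster-forming threshold on |t| (for 16 subjects, df = 15, the two-tailed 5% critical value is about 2.13), cluster mass defined as the summed t, and a Monte Carlo p-value obtained by randomly swapping condition labels within subjects, which flips the sign of that subject's speak-minus-listen difference. All names are hypothetical.

```python
import numpy as np


def paired_t(diff):
    """Dependent-samples t value per sensor; diff is (subjects x sensors)."""
    n = diff.shape[0]
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))


def find_clusters(mask, adjacency):
    """Groups of supra-threshold sensors connected through the adjacency matrix."""
    seen, clusters = set(), []
    for start in np.flatnonzero(mask):
        if start in seen:
            continue
        seen.add(start)
        stack, members = [start], []
        while stack:
            u = stack.pop()
            members.append(u)
            for v in np.flatnonzero(adjacency[u] & mask):
                if v not in seen:
                    seen.add(v)
                    stack.append(v)
        clusters.append(sorted(members))
    return clusters


def cluster_masses(t, thresh, adjacency):
    """(members, summed t) for positive and negative clusters separately."""
    out = []
    for sign in (1.0, -1.0):
        for members in find_clusters(sign * t > thresh, adjacency):
            out.append((members, float(t[members].sum())))
    return out


def cluster_permutation_test(speak, listen, adjacency, thresh, n_perm=1000, seed=0):
    """Monte Carlo cluster test of speak vs. listen (both subjects x sensors)."""
    diff = speak - listen
    observed = cluster_masses(paired_t(diff), thresh, adjacency)
    rng = np.random.default_rng(seed)
    null_max = np.empty(n_perm)
    for i in range(n_perm):
        # Swapping the condition labels of a subject flips the sign of its difference.
        flips = rng.choice([-1.0, 1.0], size=(diff.shape[0], 1))
        masses = [abs(m) for _, m in cluster_masses(paired_t(diff * flips), thresh, adjacency)]
        null_max[i] = max(masses, default=0.0)
    # p-value: share of permutations whose largest cluster is at least as extreme.
    return [(members, mass, float(np.mean(null_max >= abs(mass)))) for members, mass in observed]
```

Using the maximum cluster mass of each permutation as the null statistic is what controls the family-wise error rate across sensors.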

2.4.4. Modulation of sensorimotor rhythms during turn-taking

We also followed the time courses of the sensorimotor rhythms with respect to turn changes during the conversation (i.e. when one person ended and the other person started speaking). We manually selected all turn changes where the two speakers’ voices did not overlap. We then calculated the time–frequency representations (TFRs) of MEG signals with respect to the start of one’s own turn. The TFRs were calculated from −5 to 5 s from the turn start in steps of 20 ms, and for frequencies from 1 to 40 Hz in steps of 1 Hz (7-cycle wavelets).
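A NumPy sketch of such a TFR for one channel and one turn start follows. It assumes complex Morlet wavelets whose width is 7 cycles (temporal SD σ_t = 7/(2πf)), truncated at ±3.5 σ_t and normalized to unit energy. These choices are illustrative, not necessarily FieldTrip's exact settings. The function name is hypothetical. The input signal must extend well beyond the ±5-s window so that the longest (1-Hz) wavelet fits.

```python
import numpy as np


def morlet_tfr(x, fs, onset, freqs, tmin=-5.0, tmax=5.0, step=0.02, n_cycles=7):
    """Power around sample index `onset` from convolution with complex Morlet wavelets."""
    times = np.arange(tmin, tmax + step / 2, step)          # -5..5 s in 20-ms steps
    idx = onset + np.round(times * fs).astype(int)          # sample indices in x
    assert idx[0] >= 0 and idx[-1] < len(x), "signal too short for the window"
    power = np.empty((len(freqs), len(times)))
    for i, f in enumerate(freqs):
        sigma_t = n_cycles / (2 * np.pi * f)                # Gaussian SD in seconds
        half = int(np.ceil(3.5 * sigma_t * fs))
        assert 2 * half + 1 <= len(x), "wavelet longer than the signal"
        t = np.arange(-half, half + 1) / fs                 # odd length keeps "same" centred
        w = np.exp(2j * np.pi * f * t) * np.exp(-t ** 2 / (2 * sigma_t ** 2))
        w /= np.sqrt(np.sum(np.abs(w) ** 2))                # unit-energy normalization
        power[i] = np.abs(np.convolve(x, w, mode="same")[idx]) ** 2
    return times, power
```

With `freqs = range(1, 41)` this yields a 40 × 501 power matrix per turn, which can then be averaged over a subject's turns.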

Thereafter, we extracted the ∼10- and ∼20-Hz bands from the TFRs and, for each subject and band separately, selected the MEG sensor unit over the left rolandic cortex that showed the clearest modulation of that band. These power time series were then standardized (mean subtracted and divided by the standard deviation of the time series) to factor out inter-individual variability in mu power and relative reactivity. Each subject's series was then time-shifted so that the salient power increases occurring before the turn transition were aligned across subjects. We also computed the corresponding group-level TFRs from individual TFRs that had first been normalized by dividing them by their highest power value between 3 and 40 Hz.
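The standardization, alignment, and TFR normalization steps can be sketched as below. Assumptions, none of which are stated in the text: the pre-turn peak is searched within a hypothetical window from −3.5 to −0.3 s, each series is shifted so that its peak lands at the mean peak latency of the subjects being aligned (in the Results, this would be done within each latency group), and samples shifted in from outside the window are filled with NaN. Names are hypothetical.

```python
import numpy as np


def zscore(p):
    """Standardize one subject's power time series (mean 0, SD 1)."""
    return (p - p.mean()) / p.std()


def shift(p, n):
    """Delay a series by n samples (advance if n < 0), padding with NaN."""
    out = np.full(len(p), np.nan)
    if n >= 0:
        out[n:] = p[:len(p) - n]
    else:
        out[:n] = p[-n:]
    return out


def align_peaks(series, times, search=(-3.5, -0.3)):
    """Shift each series so its largest pre-turn peak falls at the mean peak latency."""
    dt = times[1] - times[0]
    win = (times >= search[0]) & (times <= search[1])
    peak_t = np.array([times[win][np.argmax(s[win])] for s in series])
    target = peak_t.mean()
    shifts = np.round((target - peak_t) / dt).astype(int)
    return [shift(s, k) for s, k in zip(series, shifts)], target


def normalize_tfr(tfr, freqs, fmin=3.0, fmax=40.0):
    """Divide a (freqs x times) TFR by its maximum between fmin and fmax."""
    sel = (freqs >= fmin) & (freqs <= fmax)
    return tfr / tfr[sel].max()
```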

3. Results

The conversations of the nine dyads lasted on average (mean ± SEM) 6.9 ± 0.5 min. Only turns that were longer than 2 s and that did not overlap with the partner's speech at the turn changes were included in the MEG analysis. The final analyses were based on 2.4 ± 0.2 min of MEG data recorded during the subject's own speech and the same amount of data recorded while the subject listened to the partner's speech.

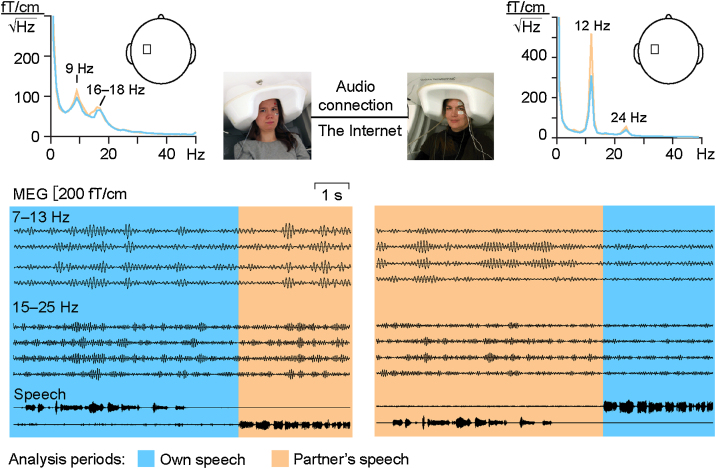

3.1. Modulations of brain oscillations during natural conversation

Fig. 1 depicts the experimental setup. During the recording, the subjects had to stay otherwise immobile but were able to engage in a natural conversation, hearing their partner via the Internet-based audio link. Fig. 1 also shows a representative sample of the MEG and speech signals and of the MEG spectra. The MEG signals, filtered to 7–13 Hz (top traces) and 15–25 Hz (bottom traces), are shown for one speaking (blue background) and one listening (orange background) period.

Fig. 1.

Dual-MEG setup for measuring brain activity simultaneously from two subjects engaged in a conversation via an Internet-based audio connection. Above: Amplitude spectra from one MEG planar gradiometer channel over the left rolandic cortex; blue lines show the activity during the participant's own speech and orange lines during the partner's speech. Below: MEG data from 4 planar gradiometer channels over the left rolandic cortex, filtered to 7–13 and 15–25 Hz, respectively. The two lowermost traces show the speech waveform of the participant in question (above) and the speech of the partner (below).

The spectra from one MEG channel over the left rolandic cortex display clear peaks at 9 and 16–18 Hz for the subject on the left side and at 12 and 24 Hz for the subject on the right. Both ∼10- and ∼20-Hz peaks were stronger when the subject was listening (orange traces) than speaking (blue traces).

Thus, in the following group-level analysis we concentrated on differences of the sensorimotor rhythms at ∼10 Hz and ∼20 Hz during speaking vs. listening epochs of the conversation.

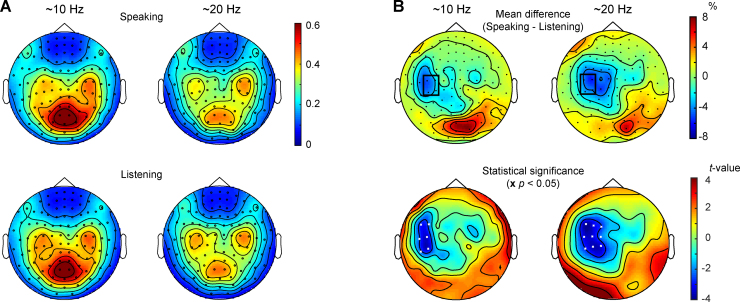

3.2. Suppression of rolandic rhythms during speaking vs. listening

We omitted from the analysis two subjects who lacked clear ∼10-Hz and ∼20-Hz oscillations and one subject with strong artifacts in the 20-Hz band. Thus the final analysis of ∼10-Hz oscillations was based on 16 subjects, and the analysis of ∼20-Hz oscillations on 15 subjects.

Fig. 2A shows group-average topographic maps of ∼10-Hz and ∼20-Hz power during speaking and listening. In addition to clear peaks over the left and right sensorimotor cortices, strong occipital alpha is evident at ∼10 Hz and, to a lesser extent, at ∼20 Hz. In the left hemisphere, the rolandic rhythms were weaker during speaking than during listening (see detailed results below), whereas the level of the occipital ∼10-Hz alpha did not differ between the conditions.

Fig. 2.

(A) Topographic maps of the MEG signals in the ∼10-Hz (left column) and ∼20-Hz (right column) frequency bands. The spectra were calculated separately for the speaking (top) and listening (bottom) epochs of the conversation. The warmer the color, the stronger the activity in a particular area. (B) Top row: Mean difference (group average) in 7–13-Hz (left) and 15–25-Hz (right) activity between speaking and listening periods in the conversation; warm colors mark an increase, and cold colors a decrease, in activation during speaking compared with listening periods. The black rectangle surrounds the four MEG sensors that were used to calculate the individual suppression strengths. Bottom row: statistical significance map (t values) between speaking and listening conditions. White crosses mark the sensors where the difference was statistically significant (p < 0.05).

Fig. 2B (left column) shows that the ∼10-Hz power was statistically significantly (p < 0.05) suppressed during speaking vs. listening at the left rolandic sensors; such suppression was evident in 15 out of 16 subjects. The maximum ∼10-Hz suppression across the four left-hemisphere sensors (selected manually on the basis of significant modulations during speaking vs. listening at both ∼10 and ∼20 Hz; marked in Fig. 2B with a black rectangle) was on average 18 ± 4% (mean ± SEM).

Fig. 2B (right column) shows that the corresponding ∼20-Hz power was statistically significantly suppressed (p < 0.05; Fig. 2B, lower right plot) during speaking at the left rolandic sensors. All 15 subjects showed this suppression (mean ± SEM 17 ± 3%).
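The per-subject suppression values and their group summary can be computed along the following lines. The sketch assumes that suppression is defined as the relative drop from listening to speaking, 100 × (listen − speak) / listen, evaluated for each of the four selected sensors, and that the maximum across those sensors is kept for each subject; the text does not give the exact formula. Names are hypothetical.

```python
import numpy as np


def max_suppression(speak, listen):
    """Per-subject maximum suppression (%) across the selected sensors.

    speak, listen : arrays (n_subjects, n_sensors) of band power
    """
    supp = 100.0 * (listen - speak) / listen   # positive = weaker during speaking
    return supp.max(axis=1)


def mean_sem(x):
    """Group mean and standard error of the mean."""
    x = np.asarray(x, dtype=float)
    return x.mean(), x.std(ddof=1) / np.sqrt(len(x))
```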

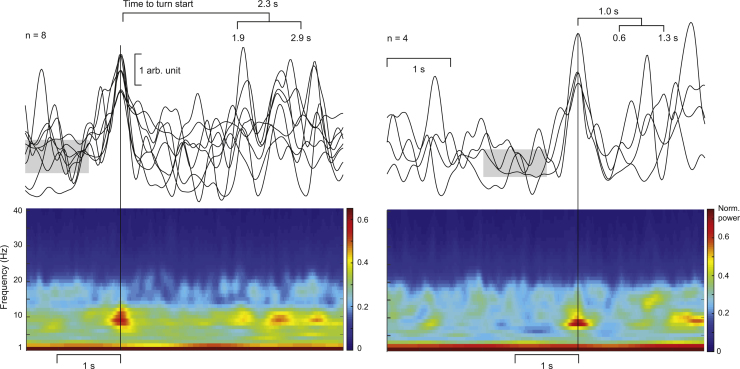

3.3. Turn-taking-related modulations of rolandic rhythms

We omitted from this analysis one subject who had only two turn starts without overlap with the other speaker's voice and another subject with extremely large fluctuations in the power envelope. We were thus left with 13 subjects, who had on average 17 ± 1.7 turns over which we averaged the ∼10- and ∼20-Hz envelopes. The pauses between turns lasted 567 ± 32 ms.

Fig. 3 shows the temporal evolution of MEG power in one left-hemisphere sensor; the bottom panels show the averaged time–frequency representations for 1–40 Hz, and the upper panels show the envelopes of ∼10-Hz power. In 8 subjects, a salient transient increase occurred on average 2.3 s (range 1.9–2.9 s; Fig. 3, top left traces) before the start of their own turn, and in 4 subjects on average 1.0 s (range 0.6–1.3 s; Fig. 3, top right traces) before their own turn. The enhancements lasted about 0.6 ± 0.1 s (full width at half maximum). The ∼10-Hz envelope of one of the 13 subjects did not show any clear peak and is not depicted in Fig. 3. The ∼20-Hz rhythm did not show any systematic modulation in relation to turn changes in the conversation.
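The duration measure (full width at half maximum) can be computed as in this sketch. It assumes that the half maximum is taken relative to zero on the envelope as given (a baseline could be subtracted first; the text does not specify this) and that the crossings are located by linear interpolation between samples. The function name is hypothetical.

```python
import numpy as np


def fwhm(p, times):
    """Full width at half maximum (s) of the largest peak in a power envelope."""
    k = int(np.argmax(p))
    half = p[k] / 2.0
    i = k
    while i > 0 and p[i - 1] >= half:       # walk left while above half maximum
        i -= 1
    if i > 0:                               # interpolate the left crossing
        t_left = np.interp(half, [p[i - 1], p[i]], [times[i - 1], times[i]])
    else:
        t_left = times[0]
    j = k
    while j < len(p) - 1 and p[j + 1] >= half:  # walk right while above half maximum
        j += 1
    if j < len(p) - 1:                      # interpolate the right crossing
        t_right = np.interp(half, [p[j + 1], p[j]], [times[j + 1], times[j]])
    else:
        t_right = times[-1]
    return t_right - t_left
```

For a Gaussian-shaped transient with SD σ, the result approaches 2√(2 ln 2) σ ≈ 2.355 σ.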

Fig. 3.

Top panels: Time courses of power envelopes of the ∼10-Hz rhythm around the start of the subject’s next turn in conversation; signals are displayed from one left-hemisphere sensor unit for each single individual. The waveforms are grouped and aligned according to the latency of the strongest peak in the ∼10-Hz power, with one group (left) with the mean peak latency of about 2.3 s and the other (right) with the mean peak latency about 1 s before the turn start. The brackets above the traces indicate the mean and range of the latency. The gray horizontal shadings indicate the group-mean RMS values, calculated from 0.5–1.5 s before the transient peak. Bottom panels: Time–frequency representations of the same data (group means) from 1 to 40 Hz.

To quantify the prominence of the selected power-envelope transients, we compared the group-mean ∼10-Hz peak power with the RMS values computed across 0.5–1.5 s before the peak. For the groups of 8 and 4 subjects, the mean ± SEM peak values were 3.2 ± 0.5 and 2.8 ± 0.4 times larger than the RMS values, respectively.
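The prominence ratio can be written as a short NumPy function. The sketch assumes a single envelope sampled at `times`, a known peak time, and a baseline window from 1.5 to 0.5 s before the peak, as stated above. The function name is hypothetical.

```python
import numpy as np


def peak_to_baseline_rms(p, times, t_peak, win=(0.5, 1.5)):
    """Ratio of the peak value to the RMS over win[1]..win[0] s before the peak."""
    k = int(np.argmin(np.abs(times - t_peak)))                 # sample nearest the peak
    base = (times >= t_peak - win[1]) & (times <= t_peak - win[0])
    rms = np.sqrt(np.mean(p[base] ** 2))
    return p[k] / rms
```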

4. Discussion

In the current experiment, dyads of subjects conversed on a given topic while we recorded their brain activity with a dual-MEG setup. We found that the sensorimotor ∼10- and ∼20-Hz oscillations were ∼20% weaker during speaking than during listening, but only in the left hemisphere. The observed suppression, as such, is in line with previous findings that the rolandic mu rhythm is dampened during motor activity (for reviews, see Refs. [17], [18]). Still, it has been unclear whether activation of the sensorimotor cortex shows left-hemisphere dominance during speech production. Several studies have reported bilateral activation of the sensorimotor cortices while subjects were repeating single vowels [19], words they had heard [20], or a phrase [21]. On the other hand, left-hemisphere-dominant activation has been found when subjects were reading single nouns aloud [22] or reciting the names of the months [23].

It has been claimed that the hemispheric lateralization during speech processing depends on the linguistic content of speech: whereas comprehension of “unconnected speech” (single phonemes, syllables, and words) relies on bilateral processing in temporal cortex, comprehension of “connected speech” (meaningful sentences and longer phrases) is associated with left-hemisphere-dominant activation of frontotemporal brain regions [9].

In a previous MEG study on speech production, the sensorimotor 20-Hz suppression did not differ between the hemispheres, but the post-movement 20-Hz rebound was statistically significantly left-hemisphere dominant when the subjects uttered the same self-selected word in response to tone pips, and bilateral when they silently articulated the vowel /o/ at each tone pip. The rebound also tended to be left-hemisphere dominant, without reaching statistical significance, when the subjects made a kissing posture with the lips or pronounced a new word at each tone pip [24]. Thus, it remained unclear to what extent the hemispheric lateralization of sensorimotor-cortex activation depends on the linguistic content of the produced speech sounds. Our finding of left-lateralized suppression of rolandic ∼10-Hz and ∼20-Hz oscillations during speaking supports a stronger involvement of the left than the right sensorimotor cortex in the production of connected speech during natural conversation.

As a novel finding, we observed that the level of the sensorimotor ∼10-Hz oscillations was transiently enhanced (for about 0.6 s) before the turn changes; in 8 subjects, the transient increases peaked about 2.3 s, and in 4 subjects about 1 s, before the start of the subject's next turn, i.e., while the subjects were still listening to their partner.

At present we can only speculate about the neuronal basis of these transient 10-Hz increases in the sensorimotor cortex, but we propose that they may be related to preparation, and specifically to respiratory preparation, for the subject's next turn when the partner is predicted to end his/her turn soon.

The rhythm of speaking is closely related to the rhythm of breathing. Speaking occurs during exhalation and is typically preceded by prephonatory inspirations that have to account for the timing, prosody and loudness of the forthcoming utterances [25]. In contrast to respiration during rest when the inhalation and exhalation phases are of rather similar duration, during speaking the breathing is highly asymmetric, with short (about 0.5 s) inhalations followed by long exhalations lasting several seconds depending on the duration of contiguous speech [26].

During conversation, the listener's exhalation phase lengthens already before turn-taking [27], making the breathing pattern resemble that during speaking. Most turns are taken just after an inhalation, and the partners' breathing rhythms are coordinated around turn changes: listeners tend to inhale during the last part of the partner's exhalation phase [26]. However, no overall correlation has been found between the breathing rhythms of the partners in a dyadic conversation [26], [28], indicating that breathing coordination during a dialog is specific to turn-taking.

In rats, brief inspirations (sniffing) are related to phasically increased gamma oscillations in the olfactory bulb [29] and in respiratory regions of the ventral medulla that provide input to facial motoneurons [30]. Although respiration affects the excitability of the human cortex, we cannot at present determine whether the transient pre-turn enhancements of the sensorimotor 10-Hz rhythm reflect prephonatory inhalations in a person preparing for his/her own turn, because we did not monitor respiration. Further studies are therefore needed to address this hypothesis.

Taken together, we have shown that during natural conversation the speaker’s sensorimotor cortex is activated in a left-hemisphere dominant manner, possibly reflecting the linguistic demands of the natural speech production. We also observed transient changes in sensorimotor activity a few seconds before the turn-takings, likely reflecting the listeners’ prediction of the turn end and (respiratory) preparation for their own turn.

Acknowledgments

This study was supported by the European Research Council (Advanced Grant #232946 to RH), the Academy of Finland (grants #131483 and #263800), the European Union Seventh Framework Programme (FP7/2007–2013) under grant agreement no. 604102 (Human Brain Project), the Finnish Graduate School of Neuroscience, the Doctoral Program Brain and Mind (Finland), and the Emil Aaltonen Foundation (Finland). We thank Pamela Baess, Tommi Himberg, Lotta Hirvenkari, Mia Illman, Veikko Jousmäki, Jyrki Mäkelä, Jussi Nurminen, Petteri Räisänen, Ronny Schreiber, and Andrey Zhdanov for contributing to the realization of the two-person MEG setup. The recordings were made in collaboration with the MEG Core, Aalto NeuroImaging and the BioMag Laboratory, Helsinki University Hospital.

References

1. Pickering M.J., Garrod S. Toward a mechanistic psychology of dialogue. Behav. Brain Sci. 2004;27:220–225. doi: 10.1017/s0140525x04000056.

2. Gonzales A.L., Hancock J.T., Pennebaker J.W. Language style matching as a predictor of social dynamics in small groups. Commun. Res. 2010;37:3–19.

3. Kendon A. Movement coordination in social interaction: some examples described. Acta Psychol. (Amst.) 1970;32:100–125.

4. Himberg T., Hirvenkari L., Mandel A., Hari R. Word-by-word entrainment of speech rhythm during joint story building. Front. Psychol. 2015;6:1–6. doi: 10.3389/fpsyg.2015.00797.

5. Stivers T., Enfield N.J., Brown P., Englert C., Hayashi M., Heinemann T. Universals and cultural variation in turn-taking in conversation. Proc. Natl. Acad. Sci. U. S. A. 2009;106:10587–10592. doi: 10.1073/pnas.0903616106.

6. Chatrian G.E., Petersen M.C., Lazarte J.A. The blocking of the rolandic wicket rhythm and some central changes related to movement. Electroencephalogr. Clin. Neurophysiol. 1959;11:497–510. doi: 10.1016/0013-4694(59)90048-3.

7. Tiihonen J., Kajola M., Hari R. Magnetic mu rhythm in man. Neuroscience. 1989;32:793–800. doi: 10.1016/0306-4522(89)90299-6.

8. Salmelin R., Hämäläinen M., Kajola M., Hari R. Functional segregation of movement-related rhythmic activity in the human brain. Neuroimage. 1995;2:237–243. doi: 10.1006/nimg.1995.1031.

9. Peelle J.E. The hemispheric lateralization of speech processing depends on what speech is: a hierarchical perspective. Front. Hum. Neurosci. 2012;6:309. doi: 10.3389/fnhum.2012.00309.

10. Baess P., Zhdanov A., Mandel A., Parkkonen L., Hirvenkari L., Mäkelä J.P. MEG dual scanning: a procedure to study real-time auditory interaction between two persons. Front. Hum. Neurosci. 2012;6:83. doi: 10.3389/fnhum.2012.00083.

11. Zhdanov A., Nurminen J., Baess P., Hirvenkari L., Jousmäki V., Mäkelä J.P. An Internet-based real-time audiovisual link for dual MEG recordings. PLoS One. 2015;10:e0128485. doi: 10.1371/journal.pone.0128485.

12. Taulu S., Simola J. Spatiotemporal signal space separation method for rejecting nearby interference in MEG measurements. Phys. Med. Biol. 2006;51:1–10. doi: 10.1088/0031-9155/51/7/008.

13. Taulu S., Hari R. Removal of magnetoencephalographic artifacts with temporal signal-space separation: demonstration with single-trial auditory-evoked responses. Hum. Brain Mapp. 2009;30:1524–1534. doi: 10.1002/hbm.20627.

14. Oostenveld R., Fries P., Maris E., Schoffelen J.M. FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intell. Neurosci. 2011;2011:156869. doi: 10.1155/2011/156869.

15. Vigário R., Särelä J., Jousmäki V., Hämäläinen M., Oja E. Independent component approach to the analysis of EEG and MEG recordings. IEEE Trans. Biomed. Eng. 2000;47:589–593. doi: 10.1109/10.841330.

16. Maris E., Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. J. Neurosci. Methods. 2007;164:177–190. doi: 10.1016/j.jneumeth.2007.03.024.

17. Hari R., Salmelin R. Human cortical oscillations: a neuromagnetic view through the skull. Trends Neurosci. 1997;20:44–49. doi: 10.1016/S0166-2236(96)10065-5.

18. Cheyne D.O. MEG studies of sensorimotor rhythms: a review. Exp. Neurol. 2013;245:27–39. doi: 10.1016/j.expneurol.2012.08.030.

19. Tarkka I.M. Cerebral sources of electrical potentials related to human vocalization and mouth movement. Neurosci. Lett. 2001;298:203–206. doi: 10.1016/s0304-3940(00)01764-x.

20. Wise R.J.S., Greene J., Buchel C., Scott S.K. Brain regions involved in articulation. Lancet. 1999;353:1057–1061. doi: 10.1016/s0140-6736(98)07491-1.

21. Murphy K., Corfield D.R., Guz A., Fink G.R., Wise R.J., Harrison J. Cerebral areas associated with motor control of speech in humans. J. Appl. Physiol. 1997;83:1438–1447. doi: 10.1152/jappl.1997.83.5.1438.

22. Salmelin R., Schnitzler A., Schmitz F., Freund H.J. Single word reading in developmental stutterers and fluent speakers. Brain. 2000;123:1184–1202. doi: 10.1093/brain/123.6.1184.

23. Riecker A., Ackermann H., Wildgruber D., Dogil G., Grodd W. Opposite hemispheric lateralization effects during speaking and singing at motor cortex, insula and cerebellum. NeuroReport. 2000;11:1997–2000. doi: 10.1097/00001756-200006260-00038.

24. Salmelin R., Sams M. Motor cortex involvement during verbal versus non-verbal lip and tongue movements. Hum. Brain Mapp. 2002;16:81–91. doi: 10.1002/hbm.10031.

25. Tremoureux L., Raux M., Ranohavimparany A., Morélot-Panzini C., Pouget P., Similowski T. Electroencephalographic evidence for a respiratory-related cortical activity specific of the preparation of prephonatory breaths. Respir. Physiol. Neurobiol. 2014;204:64–70. doi: 10.1016/j.resp.2014.06.018.

26. Rochet-Capellan A., Fuchs S. Take a breath and take the turn: how breathing meets turns in spontaneous dialogue. Philos. Trans. R. Soc. B: Biol. Sci. 2014;369:20130399. doi: 10.1098/rstb.2013.0399.

27. McFarland D.H. Respiratory markers of conversational interaction. J. Speech Lang. Hear. Res. 2001;44:128–143. doi: 10.1044/1092-4388(2001/012).

28. Warner R.M., Waggener T.B., Kronauer R.E. Synchronized cycles in ventilation and vocal activity during spontaneous conversational speech. J. Appl. Physiol. 1983;54:1324–1334. doi: 10.1152/jappl.1983.54.5.1324.

29. Manabe H., Mori K. Sniff rhythm-paced fast and slow gamma-oscillations in the olfactory bulb: relation to tufted and mitral cells and behavioral states. J. Neurophysiol. 2013:1593–1599. doi: 10.1152/jn.00379.2013.

30. Moore J.D., Deschênes M., Furuta T., Huber D., Smear M.C., Demers M. Hierarchy of orofacial rhythms revealed through whisking and breathing. Nature. 2013;497:205–210. doi: 10.1038/nature12076.