Abstract

Recent evidence from blind participants suggests that visual areas are task-oriented and independent of sensory input modality rather than sensory-specific to vision. Specifically, visual areas are thought to retain their functional selectivity when using non-visual inputs (touch or sound) even without having any visual experience. However, this theory is still controversial since it is not clear whether this also characterizes the sighted brain, and whether the reported results in the sighted reflect basic fundamental a-modal processes or are an epiphenomenon to a large extent. In the current study, we addressed these questions using a series of fMRI experiments aimed to explore visual cortex responses to passive touch on various body parts and the coupling between the parietal and visual cortices as manifested by functional connectivity. We show that passive touch robustly activated the object selective parts of the lateral occipital (LO) cortex while deactivating almost all other occipital retinotopic areas. Furthermore, passive touch responses in the visual cortex were specific to hand and upper trunk stimulations. Psychophysiological interaction (PPI) analysis suggests that LO is functionally connected to the hand area in the primary somatosensory homunculus (S1) during hand and shoulder stimulations but not to any of the other body parts. We suggest that LO is a fundamental hub that serves as a node between visual-object selective areas and S1 hand representation, probably due to the critical evolutionary role of touch in object recognition and manipulation. These results might also point to a more general principle suggesting that recruitment or deactivation of the visual cortex by other sensory input depends on the ecological relevance of the information conveyed by this input to the task/computations carried out by each area or network. This is likely to rely on the unique and differential pattern of connectivity for each visual area with the rest of the brain.

Keywords: fMRI, Somatosensory, Visual, Cross-modal, Connectivity

Highlights

- We studied cross-modal effects of passive somatosensory inputs on the visual cortex.
- Passive touch on the body evoked massive deactivation in the visual cortex.
- Passive hand stimulation evoked unique activation in visual object area LO.
- This area was also uniquely connected to the hand area in Penfield's homunculus (S1).

Introduction

Recent studies in blind subjects suggest that the visual cortex of the blind can be taken over by other sensory modalities in a very specific manner, namely cross-modal recycling of each visual area in a task-specific manner, even in the absence of any visual experience (Amedi et al., 2007, Merabet and Pascual-Leone, 2010, Ptito et al., 2012, Reich et al., 2011, Renier et al., 2010, Striem-Amit and Amedi, 2014, Striem-Amit et al., 2012a). For instance, it was shown that Braille reading activates the visual word form area (VWFA, Reich et al., 2011), and that haptic object recognition by touch or using visual-to-auditory substitution recruits the object related lateral occipital complex (LOC, Amedi et al., 2007, Amedi et al., 2010), leading to the suggestion that this area is dedicated to deciphering the 3D shape of objects regardless of sensory modality input (Amedi et al., 2001). This has led to various complementary theories suggesting that the entire visual system (or even the entire brain) might be a metamodal/supramodal sensory independent task machine even under normal sensory experience during brain development (Amedi et al., 2001, Pascual-Leone and Hamilton, 2001). In other words, the driving force for the recruitment of a specific cortical area is not the sensory modality input but rather the task and computations carried out by each area or network. For example, it was suggested that the main task of the (‘visual’) word form area is assigning a phonological value to different shape symbols (i.e. letters) which can be delivered by any sensory modality (Reich et al., 2011). This role might be supported by the maintenance of functional connectivity between shape selective areas in the visual cortex and phonological areas in both blind and sighted subjects (Striem-Amit and Amedi, 2014, Striem-Amit et al., 2012a). Similarly, the role of LOC might be to decipher the geometric 3D shape of objects regardless of sensory input modality (Amedi et al., 2001). Under normal conditions, such information is delivered through vision and touch, but recent evidence has revealed that even sound can recruit LOC if it contains specific geometric information, for example when the blind or sighted are using sensory substitution devices (SSDs, Amedi et al., 2007) or echolocation (Arnott et al., 2013, James et al., 2011).

However, this intriguing theory raises a set of fundamental questions that are highly controversial within the neuroscience community at large, especially in the context of contradictory results:

(1) It is not clear whether and to what extent such cross-modal task selective activations also characterize the sighted brain or whether they are specific to cross-modal plasticity following visual deprivation. Several studies have reported a-modal multisensory activation in the traditional visual cortical areas of sighted individuals (Amedi et al., 2001, Beauchamp et al., 2007, Costantini et al., 2011, James et al., 2002, Mahon et al., 2009; see Beauchamp, 2005 for review). Other studies have hinted at the reverse phenomenon by showing that cross-modal stimuli (i.e. tactile, auditory or cognitive tasks) deactivate visual areas in normally sighted subjects while activating these areas in blind individuals (Azulay et al., 2009, Kawashima et al., 1995, Sadato et al., 1996). Therefore, it is not clear whether the lack (or reduction) of visual experience leads to the creation of new functional and anatomical connections or whether these results represent a more general pattern that is evident in the sighted as well.

(2) There is still a vigorous debate regarding the interpretation and the source of such cross-modal and task-selective responses, since it is not clear whether the reported results in the sighted reflect basic a-modal processing or could be attributed to different confounding factors such as visual mental imagery (Peltier et al., 2007, Sathian and Zangaladze, 2001, Sathian et al., 1997).

For example, in all the studies reporting on tactile object selectivity in the LOC, the tactile activations emerged from active object manipulation which in all cases is confounded to a certain extent with motor movements, semantic input, object recognition and mental visual imagery (Amedi et al., 2001, Amedi et al., 2002, James et al., 2002, Reed et al., 2004, Zhang et al., 2004, but see Prather et al., 2004 for results on passive touch).

(3) Another set of basic questions relates to the functional networks which might support the creation and maintenance of such a-modal effects. Specifically, little is known about the role of functional and anatomical connectivity patterns between visual areas and somatosensory or auditory areas in the creation of these task-selective responses.

(4) Beyond the issue of whether visual areas process tactile or auditory stimuli to a similar extent, it is also worth inquiring whether there are any topographical biases in the somatosensory evoked responses in visual areas. All visual retinotopic and even several non-retinotopic areas such as the VWFA and PPA (parahippocampal place area, Epstein and Kanwisher, 1998) show clear topographic selectivity to visual eccentricity. Similarly, Penfield's somatosensory homunculi and other high order somatosensory areas show topographic biases to body parts (Huang et al., 2012). However, it is not clear whether body-specific somatosensory biases also characterize cross-modal responses in the visual cortex (and vice versa). Most of the previous literature has focused on one part of the body surface (hands during active touch of objects) to study interactions between the somatosensory and the visual cortices. However, the pattern of visual cortex responses following tactile stimulation of various body parts has never been tested. Similarly, previous studies have suggested that the extent of multisensory processing and connectivity of low-level visual areas is modulated by their topographic organization (Borra and Rockland, 2011, Falchier et al., 2002, Gleiss and Kayser, 2013, Rockland and Ojima, 2003). Thus there is potentially much to learn from studying whether such topographical biases, such as eccentricity (fovea–periphery organization), also characterize visual responses to passive somatosensory stimuli.

In order to study this set of fundamental questions we focused on the interactions between touch and vision in the normally sighted brain, using the following extensive and complementary approaches: (1) we used a passive rather than active paradigm in the tested somatosensory modality; (2) we compared passive somatosensory input from various body parts; (3) we used various visual localizers to separate low-level vs. high order and center vs. peripheral areas in the visual cortex, with (4) special attention to object selective areas which might have a higher likelihood of being functionally connected and driven by the somatosensory system; and (5) finally using PPI (psychophysiological interaction) analysis we tested body-specific changes in the functional connectivity between LO and the somatosensory cortex.

Material and methods

Subjects

A total of 16 healthy right-handed subjects (6 females) aged 24–37 (mean age 29) with no neurological deficits were scanned in the current study. All the participants were scanned in the somatosensory and visual experiments, with different subsets of subjects participating in two additional experiments (see below). The Tel Aviv Sourasky Medical Center ethics committee approved the experimental procedure and written informed consent was obtained from each subject before the scanning procedure.

Experiments and stimuli

Our goal was to explore the cross-modal effects of passive somatosensory stimuli in the visual cortex. Four experiments were conducted: a passive somatosensory experiment, an auditory control experiment which served as a control for the somatosensory experiment and two visual experiments mapping low-level retinotopic and higher functional areas in the visual cortex. Except for the visual experiments, the subjects were blindfolded throughout all scans.

1. Somatosensory experiment (n = 16): This experiment was made up of two scanning sessions, with two conditions in each session: tactile perception and tactile imagery. In the tactile perception condition, in each block, the body surface was stimulated sequentially by brushing the right side of the subjects' skin surface from the lips, and then continuously from the fingers and palm through the shoulder, waist, knee, and down to the foot and toes. This stimulation order was reversed in the second scanning session. The stimulus was passive and the subjects were just asked to concentrate on the tactile sensation. We chose this paradigm of continuous stimulation since it has been shown to be an optimal method for topographical mapping (Engel, 2012, Engel et al., 1997, Sereno et al., 1995) with relatively short scanning sessions. The natural tactile stimuli were delivered using a four-centimeter-wide paint brush by the same experimenter, who was well-trained prior to the scans to maintain a constant pace and pressure during the sessions. In the imagery condition, the subjects were instructed to imagine the same tactile sensation as was experienced during the tactile perception condition. Precise timing of stimuli (tactile or imagery) was achieved by auditory cues (the name of the body part to be stroked) delivered to the experimenter and the subjects through fMRI-compatible electrodynamic headphones (MR-Confon, Germany). The length of each stimulation cycle was 15 s (from lips to toes or vice versa), which was followed by a 12 s rest baseline. Each scanning session included 8 blocks of tactile perception and 8 blocks of tactile imagery, which were presented pseudorandomly. 30 s of silence was added before and after the 16 cycles of tactile stimulation for baseline.

2. Visual localizer experiment (n = 16): This experiment was aimed at localizing functional visual areas in the extrastriate cortex and was designed in a block paradigm, including 5 visual categories: objects, body parts, faces, houses and textures. Visual stimuli were generated on a PC and presented via an LCD projector (Epson MP 7200) onto a translucent screen. Subjects viewed the gray scale stimuli through a tilted (~45°) mirror positioned above each subject's forehead. 9 images from the same visual category were presented in each epoch; each image was presented for 800 ms and was followed by a 200 ms blank screen. Each epoch lasted 9 s followed by a 6 s blank screen. A central red fixation point was present throughout the experiment. Each experimental condition was repeated 7 times, in pseudorandom order. During the experiment, one or two consecutive repetitions of the same image occurred in each epoch. The subjects' task was to covertly report whether the presented stimulus was identical to the previous stimulus or not.

3. Auditory control (n = 15): This experiment served as a control experiment for the somatosensory experiment and was composed of the same auditory stimuli as in the somatosensory experiment. The subjects passively listened to a list of the names of the body parts in the same setup as in the somatosensory experiment. Each stimulation block, lasting 15 s, included one presentation of the list and was followed by a 12 s rest. 30 s of silence was added before and after the 8 cycles of auditory stimulation for baseline. In half of the scanning sessions the order of the auditory stimuli was reversed, starting from the foot to the lips.

4. Visual eccentricity experiment (n = 13): The visual stimulus was adapted from standard retinotopy mapping (Engel et al., 1994, Engel et al., 1997, Sereno et al., 1995). An annulus was presented, expanding from 0° to 34° of the subject's visual field in 30 s. This cycle was repeated 10 times. 30 s of silence was added before and after the 8 cycles of tactile stimulation for baseline.

Functional and anatomical MRI acquisition

The BOLD fMRI measurements were obtained in a whole-body, 3-T Magnetom Trio scanner (Siemens, Germany). The scanning session included anatomical and functional imaging. The functional protocols were based on multi-slice gradient echoplanar imaging (EPI) using a Siemens 12-channel Head Matrix Coil. The functional data were collected under the following timing parameters: TR = 1.5 s (TR = 2 s in the visual localizer experiment), TE = 30 ms, FA = 70°, imaging matrix = 80 × 80, and FOV = 24 × 24 cm (i.e., in-plane resolution of 3 mm). 27–35 slices with slice thickness = 4.1–4.3 mm and 0.4–0.5 mm gap (46 slices with slice thickness = 3 mm and 0.3 mm gap in the visual localizer experiment) were oriented in the axial position, for complete coverage of the whole cortex. The first ten images (during the first baseline rest condition) were excluded from the analysis because of non-steady state magnetization. High resolution three-dimensional anatomical volumes were collected using a 3D-turbo field echo (TFE) T1-weighted sequence (equivalent to MP-RAGE). Typical parameters were: field of view (FOV) 23 cm (RL) × 23 cm (VD) × 17 cm (AP); foldover-axis: RL, data matrix: 160 × 160 × 144 zero-filled to 256 in all directions (approx. 1 mm isovoxel native data), TR/TE = 9 ms/6 ms, and flip angle = 8°. Cortical reconstruction included the segmentation of the white matter using a grow-region function embedded in the BrainVoyager QX 2.0.8 software package (Brain Innovation, Maastricht, Netherlands). The cortical surface was then inflated. Group results were superimposed on a 3D cortical reconstruction of a Talairach normalized brain (Talairach and Tournoux, 1988).
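As a quick consistency check on the acquisition and timing parameters quoted above, the arithmetic can be sketched in Python. The function names are illustrative only, and the sketch assumes that every stimulation block of the somatosensory experiment, including the last one, is followed by its 12 s rest period; that detail is not stated explicitly in the text.

```python
def in_plane_resolution_mm(fov_cm, matrix_size):
    """In-plane voxel size: field of view divided by the imaging matrix."""
    return fov_cm * 10.0 / matrix_size


def session_length(n_blocks, block_s, rest_s, pad_s, tr_s):
    """Total session duration in seconds and the matching number of volumes.

    Assumes (hypothetically) that each block is followed by a rest period
    and that the silent padding is added once before and once after.
    """
    total_s = 2 * pad_s + n_blocks * (block_s + rest_s)
    return total_s, total_s / tr_s


# FOV = 24 cm, matrix = 80 x 80
resolution_mm = in_plane_resolution_mm(24, 80)

# Somatosensory session: 16 blocks of 15 s, 12 s rest, 30 s padding, TR = 1.5 s
total_s, n_volumes = session_length(16, 15, 12, 30, 1.5)

print(resolution_mm, total_s, n_volumes)
```

Under these assumptions one somatosensory session lasts 492 s, or 328 volumes at TR = 1.5 s, before discarding the first ten images.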

Preprocessing of fMRI data

Data analysis was initially performed using the BrainVoyager QX 2.0.8 software package (Brain Innovation, Maastricht, Netherlands). fMRI data went through several preprocessing steps which included head motion correction, slice scan time correction, and high-pass filtering (cutoff frequency: 2 cycles/scan). No data included in the study showed translational motion exceeding 2 mm in any given axis, or had spike-like motion of > 1 mm in any direction. The time courses were de-trended to remove linear drifts, and in the experiments in which stimulation was periodic (somatosensory, auditory control and visual eccentricity), a temporal smoothing (4 s) in the frequency domain was also applied, in order to remove drifts and to improve the signal-to-noise ratio. For group analysis functional data also underwent spatial smoothing (spatial Gaussian smoothing, full width at half maximum = 6 mm) in order to overcome inter-subject anatomical variability within and across experiments. Functional and anatomical datasets for each subject were aligned and fit to the standardized Talairach space (Talairach and Tournoux, 1988).

Data analysis

GLM analysis

To compute statistical parametric maps we applied a general linear model (GLM) using predictors convolved with a typical hemodynamic response function. Cross-subject statistical parametric maps were calculated using hierarchical random-effects model analysis (Friston et al., 1999). A statistical threshold criterion of p < 0.01 was set for all results unless otherwise reported. This threshold was corrected for multiple comparisons using a cluster-size threshold adjustment, based on a Monte Carlo simulation approach extended to 3D datasets, using the threshold size plug-in in BrainVoyager QX (Forman et al., 1995).
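The idea of a GLM predictor convolved with a hemodynamic response function can be illustrated with a minimal single-voxel sketch. It is not the BrainVoyager implementation: the double-gamma shape parameters (6, 16, ratio 1/6) are an assumed, commonly used form, the model has one predictor plus a constant instead of one predictor per condition and body segment, and the voxel time course is synthetic.

```python
import math


def double_gamma_hrf(tr_s, duration_s=30.0):
    """Double-gamma HRF sampled once per TR (assumed shape parameters)."""
    def gamma_pdf(t, shape):
        return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)
    n = int(round(duration_s / tr_s))
    return [gamma_pdf(i * tr_s, 6.0) - gamma_pdf(i * tr_s, 16.0) / 6.0
            for i in range(n)]


def convolve(signal, kernel):
    """Causal discrete convolution, truncated to the length of the signal."""
    out = [0.0] * len(signal)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            if i + j < len(out):
                out[i + j] += s * k
    return out


def boxcar(tr_s, pad_s, n_blocks, on_s, off_s):
    """Block design: padding, then n_blocks of (on, off), then padding."""
    pad = [0.0] * int(round(pad_s / tr_s))
    block = [1.0] * int(round(on_s / tr_s)) + [0.0] * int(round(off_s / tr_s))
    return pad + block * n_blocks + pad


def fit_ols(y, x):
    """Least-squares fit of y = baseline + beta * x; returns (baseline, beta)."""
    n = len(y)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    beta = sxy / sxx
    return mean_y - beta * mean_x, beta


TR_S = 1.5
predictor = convolve(boxcar(TR_S, 30, 16, 15, 12), double_gamma_hrf(TR_S))

# Synthetic, noise-free voxel with a known effect size of 2.5
voxel = [100.0 + 2.5 * p for p in predictor]
baseline, beta = fit_ols(voxel, predictor)
```

In a random-effects analysis, the per-subject `beta` estimates would then be carried to a second-level test across subjects.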

In the somatosensory experiment, the raw time course of each cycle of response to the continuous full body tactile stimuli was divided into five brushing segments corresponding to stimulation of cortically adjacent body parts according to Penfield's classic homunculus (lip, hand, shoulder and upper trunk, hip to knee and knee to toes). This division of the continuous stimulus into five segments roughly reflected the dermatomal arrangement of the skin surface: lips (trigeminal cranial nerve), arm and trunk (cervical dermatomes) and hip and leg (thoracic and lumbar dermatomes). The responses to brushing the five segments were averaged from the two sessions with lip-to-toes and toes-to-lip strokes to avoid order and attention effects. Error bars display the standard error across subjects and sessions.

Regions of interest analysis

In order to test the somatotopic preference of the tactile-evoked activations in the visual cortex, we defined two regions of interest (ROIs) in the left and right LO, identified from the group contrast between visual objects and all other visual categories (in conjunction with visual-object > rest baseline). We then used these ROIs as an external localizer to test the body-part selectivity for passive perception and tactile imagery, and for the auditory signal from the control experiment. The time course of activation from these group-defined ROIs was sampled from the somatosensory experiment, and an ROI-based random-effects GLM analysis was conducted for the two scanning sessions, across all subjects. The time course of activation was also sampled from the auditory control experiment and a similar random-effects GLM analysis was conducted to test for auditory activation within the LO area.

Visual eccentricity analysis

In order to delineate retinotopic visual areas and their center-periphery preference, we applied a cross-correlation analysis. A boxcar function 2 TRs (repetition times) long was convolved with a two-gamma hemodynamic response function (HRF) to derive predictors for the analysis. This predictor and the time course of each voxel were cross-correlated, allowing for 20 lags at one-TR intervals to account for the stimulation duration of each cycle. For each voxel we obtained the lag value giving the highest correlation coefficient between the time course and one of the 20 predictors, and the value of this correlation coefficient itself. On the group level, to run the random-effects analysis, we used GLM parameter estimators derived from a complementary analysis as follows. First, a GLM analysis was carried out at the single-subject level using the predictor model described above. The resulting GLM parameter estimator values were then used in a second-level analysis for the group random effect. Finally, the random-effects results were corrected for multiple comparisons using the Monte Carlo method (1000 iterations, α < 0.05) with an a priori threshold of p < 0.05. The cross-correlation maps of the individual subjects were averaged to create a mean lag values map. The lag maps were thresholded by both the averaged correlation coefficient and the random-effects map corrected for multiple comparisons.
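The lag-based cross-correlation described above can be sketched for a single voxel as follows. This is an illustration under stated assumptions, not the analysis code used in the study: the double-gamma HRF parameters are assumed, the 20 lags are read as covering one 30 s cycle at TR = 1.5 s, and the voxel time course is synthetic with a known lag.

```python
import math

TR_S = 1.5
N_LAGS = 20      # one 30 s cycle sampled every 1.5 s
N_CYCLES = 10


def double_gamma_hrf(tr_s, duration_s=30.0):
    """Double-gamma HRF sampled once per TR (assumed shape parameters)."""
    def gamma_pdf(t, shape):
        return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)
    n = int(round(duration_s / tr_s))
    return [gamma_pdf(i * tr_s, 6.0) - gamma_pdf(i * tr_s, 16.0) / 6.0
            for i in range(n)]


def convolve(signal, kernel):
    """Causal discrete convolution, truncated to the length of the signal."""
    out = [0.0] * len(signal)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            if i + j < len(out):
                out[i + j] += s * k
    return out


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def lagged_predictors():
    """One HRF-convolved predictor per lag: a 2-TR boxcar repeated every cycle."""
    hrf = double_gamma_hrf(TR_S)
    n = N_LAGS * N_CYCLES
    predictors = []
    for lag in range(N_LAGS):
        box = [1.0 if (i - lag) % N_LAGS < 2 else 0.0 for i in range(n)]
        predictors.append(convolve(box, hrf))
    return predictors


def best_lag(voxel, predictors):
    """Lag with the highest correlation, and that correlation coefficient."""
    r = [pearson(voxel, p) for p in predictors]
    lag = max(range(len(r)), key=r.__getitem__)
    return lag, r[lag]


predictors = lagged_predictors()
# Synthetic voxel that responds at lag 7 (a scaled, offset copy of that predictor)
voxel = [100.0 + 3.0 * v for v in predictors[7]]
lag, r_max = best_lag(voxel, predictors)
```

For an expanding annulus, the preferred lag of a voxel maps onto the eccentricity it responds to: early lags correspond to the center of the visual field and late lags to the periphery.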

Psychophysiological interaction (PPI) analysis

In order to test for body-dependent functional connectivity patterns we applied the generalized psychophysiological interaction (gPPI) method. PPI analysis was originally proposed by Friston and colleagues (Friston et al., 1997) and was recently extended to a generalized form of context-dependent PPI (McLaren et al., 2012), which spans the space of all conditions and makes it possible to explore all potential interactions between the psychological conditions. We were interested in exploring which areas of the brain show task-specific correlations with Tactile-LO, and thus in testing whether Tactile-LO is functionally connected to the somatosensory cortex and specifically to the hand and shoulder areas within S1. For that, we defined a spherical ROI (9 mm radius) from the group peak Talairach coordinates of Tactile-LO. Using the Prepare PPI Plug-in implemented in BrainVoyager, a PPI model was created for each participant, for the two scanning sessions of the somatosensory experiment. Briefly, in this analysis, the mean activity of the seed ROI is extracted. This time course is z-transformed and then multiplied TR by TR with the task time course (a set of protocol-based predictors convolved with a typical hemodynamic response function) to create a PPI predictor. The resulting GLM design matrix files were then used for a hierarchical random-effects model analysis (Friston et al., 1999). The statistical threshold criterion was set to p < 0.05 for all presented contrasts, and the resulting map was corrected for multiple comparisons using the Monte Carlo simulation approach (Forman et al., 1995).
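The construction of the PPI predictors described above (z-transform the seed time course, then multiply it TR by TR with each task predictor) can be sketched as follows. The function names, the toy time courses and the dictionary layout of the design are hypothetical; the sketch does not reproduce the BrainVoyager plug-in, and the task predictors are assumed to be already convolved with the HRF.

```python
import math


def zscore(x):
    """Z-transform a time course (sample standard deviation)."""
    n = len(x)
    mean = sum(x) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in x) / (n - 1))
    return [(v - mean) / sd for v in x]


def ppi_predictor(seed, task):
    """Pointwise (TR by TR) product of the z-scored seed and a task predictor."""
    return [z * t for z, t in zip(zscore(seed), task)]


def gppi_design(seed, task_predictors):
    """Generalized PPI design: the seed, every task predictor, and one
    interaction term per task condition."""
    design = {"seed": zscore(seed)}
    for name, task in task_predictors.items():
        design[name] = list(task)
        design["ppi_" + name] = ppi_predictor(seed, task)
    return design


# Toy example: six time points, two conditions (hypothetical values)
seed = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
tasks = {"hand": [0, 0, 1, 1, 0, 0], "foot": [0, 0, 0, 0, 1, 1]}
design = gppi_design(seed, tasks)
```

Including one interaction term per condition is what distinguishes the generalized form from the original single-contrast PPI; a contrast between, for example, the `ppi_hand` and `ppi_foot` estimates then tests for body-part-specific coupling with the seed.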

Results

In this study we explored the cross-modal effects of passive somatosensory inputs from the body on the visual cortex. The study included a set of fMRI experiments on the same group of subjects: (1) a somatosensory experiment designed to search for somatosensory evoked responses in visual areas, and to further assess their topographical biases (this experiment included two conditions: perception of passive tactile stimulation of the subjects' bodies, and mental imagery of the same stimuli); (2) a visual experiment presenting stimuli from several visual categories, which served as a localizer to define functional areas in the visual cortex and specifically, object selective areas. We also conducted two additional experiments: (3) visual eccentricity mapping to localize foveal and peripheral visual retinotopic areas and (4) an auditory control experiment of passive listening to the names of body parts which served as a control for the somatosensory experiment.

We analyzed the data on several levels and with a series of approaches, both at the group and single subject level. First, in order to discover the large-scale pattern of activation and deactivation in the visual cortex we performed a whole-brain analysis of the passive touch somatosensory responses. Next, to define the somatosensory activated and deactivated areas in the visual cortex we analyzed the visual experiment mapping of the low-level retinotopic and higher functional visual areas. We then tested whether the tactile evoked areas in the visual cortex showed somatotopic preferences for specific body parts using regions-of-interest (ROI) analysis. Finally, we compared the body-specific functional connectivity patterns of tactile-activated areas in the visual cortex following various body part stimulations.

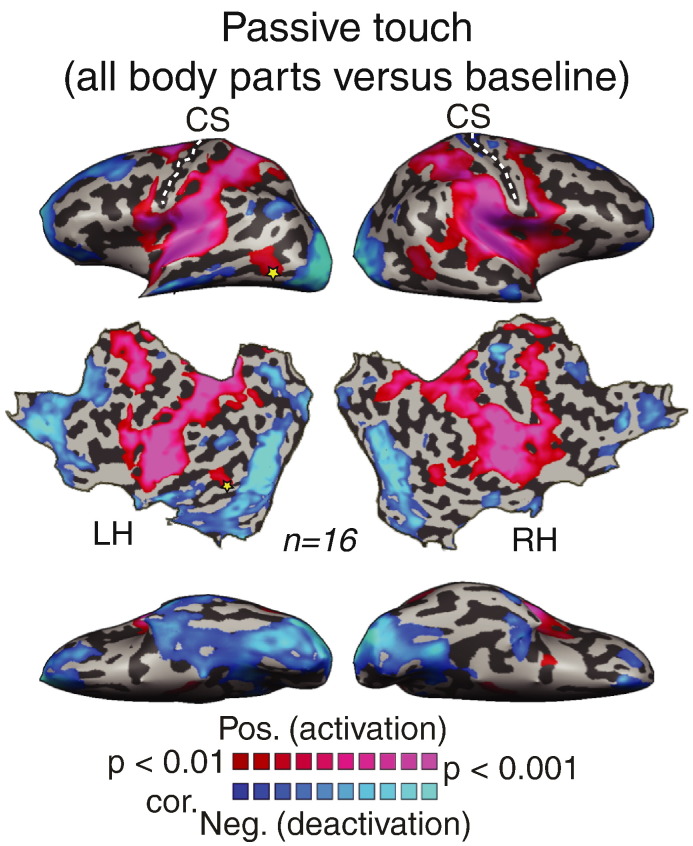

Fig. 1 presents the statistical parametric map of the whole-brain GLM analysis in response to tactile stimulation of the right side of the body (all body parts compared to baseline, t (15) = 3, p < 0.01, random effect analysis for all shown group results and corrected for multiple comparisons). As predicted, tactile stimulation of the body resulted in extensive bilateral activation in the parietal somatosensory areas, the insular cortex, and the motor cortex including the premotor and supplementary motor areas. However, an interesting pattern of responses was observed in the visual cortex. We found that in addition to the negative BOLD responses in the posterior and prefrontal areas, which comprise the default mode network (Greicius and Menon, 2004, Raichle et al., 2001), passive touch on the body resulted in a massive and overall deactivation of the visual cortex. Additional foci of deactivation included the dorsal part of the central sulcus in the right hemisphere. Comparing the deactivated areas in the visual system with a visual eccentricity map delineating retinotopic areas (Figs. S1, A–C) showed that the deactivated areas included (i.e. massively overlapped) both the retinotopic and non-retinotopic areas, in both the ventral and dorsal visual streams. This massive deactivation in response to passive touch was also evident and overlapped retinotopic eccentricity mapping at the single subject level (Fig. S1D). Interestingly, we did not find any positive BOLD in the peripheral primary visual (V1) or other peripheral visual areas as might have been expected based on some of the literature (see Introduction and Discussion sections).

Fig. 1.

Passive touch evokes massive deactivation in the visual cortex with a unique activation in the lateral occipital cortex. Statistical parametric map for whole-body passive touch (all body parts versus baseline) is presented on inflated and flattened brain reconstructions. Following the tactile stimulation, most of the visual cortex was deactivated except a specific activation in the LOC.

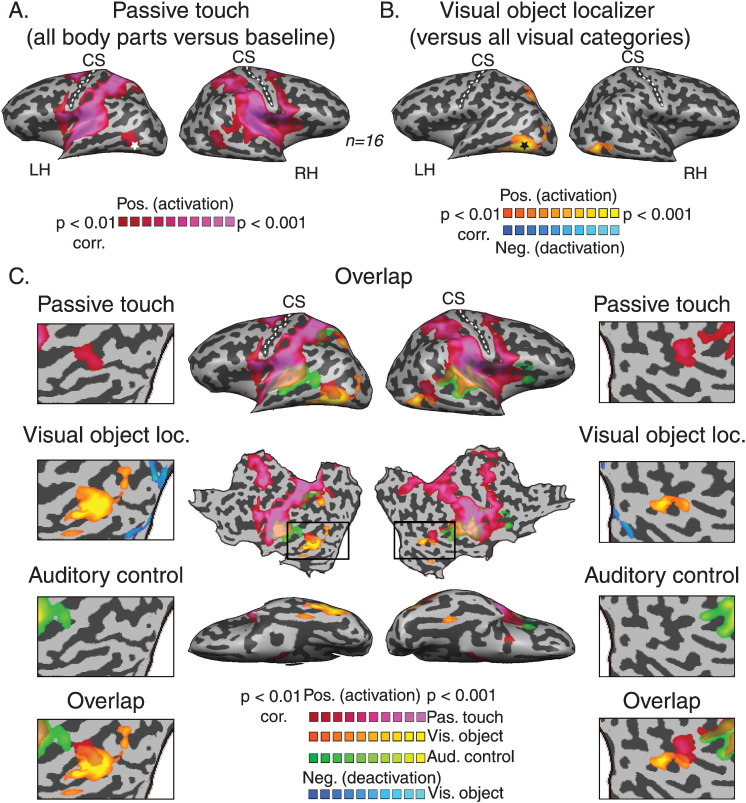

The only exception to this robust deactivation was observed in the lateral occipital cortex, which showed positive responses to passive touch stimulation. This tactile activated area overlapped the previously reported area that was activated by haptic active exploration of objects (Amedi et al., 2001; the lateral occipital tactile visual area, LOtv, denoted by an asterisk). In order to test the hypothesis that the unique passive touch response in the visual system is related to visual object selectivity, and hence to the specific computation or task performed by this area, we analyzed the visual localizer experiment to map object selective areas (Fig. 2). The results showed that visual object selective areas overlapped with the passive touch responses. Fig. 2A presents the positive BOLD response to passive touch on the whole body (the same map as presented in Fig. 1). Fig. 2B presents the statistical parametric map of the contrast between visual objects and other visual categories (see Material and methods section; t (15) = 3, p < 0.01, corrected for multiple comparisons). Visual object selective areas were observed in two bilateral clusters, the LO and a more dorsal cluster at the precuneus/posterior intraparietal sulcus (IPS). The overlay of the somatosensory and visual (Fig. 2C, pink and orange clusters, respectively) maps clearly shows that the response to tactile stimulation of the body overlapped with the dorsal part of the object selective area within LO. We termed this tactile-evoked activation Tactile-LO (peak Talairach coordinates [x, y, z]: −47, −61, −3; very similar to the previously reported LOtv: −45, −62, −9). Note, however, that in contrast to the previous experiments showing LOtv activation to haptic object exploration, the current study did not include hand movement and, more importantly, did not include any object recognition component.

Fig. 2.

Passive touch on the body activates the visual object selective area in LOC. A. Statistical parametric map for whole-body passive touch. B. Statistical parametric map for the visual object localizer shows bilateral LO activation. C. Overlap of the activations in response to passive touch (pink), visual-object (orange) and auditory control (green) stimuli. The results show that tactile but not auditory activated areas overlap bilaterally with the dorsal part of visual object selectivity in LOC. This tactile activated area overlapped the previously reported (Amedi et al., 2001) tactile object recognition area, the LOtv (denoted by an asterisk).

We also tested whether simply listening to the names of body parts would result in similar LO activations due to auditory input or automatic mental imagery processes. For this purpose we conducted a separate auditory control experiment (that preceded the somatosensory experiment) in which the subjects passively listened to the names of the different body parts. The green clusters in Fig. 2C represent the activated areas in response to these auditory stimuli, and show that the responses to the names of body parts were confined to the auditory cortex with an additional cluster in the parietal lobe (further investigation of this effect is beyond the scope of the current paper). This result thus shows that the LO activation observed in the somatosensory experiment could not be attributed to the auditory stimulation and suggests that passive somatosensory input without any object recognition component can activate the visual cortex selectively. Moreover, the responses to the auditory stimulation do not explain the massive visual cortex deactivation observed in the somatosensory condition, as most of the auditory evoked deactivations were localized to the medial prefrontal cortex and posterior cingulate gyrus, which are part of the default mode network (Fig. S2). Thus, passive touch on the body produces much more dramatic deactivation responses than listening to the names of these body parts (the full foci of the peak activated clusters for the somatosensory, visual and auditory experiments are presented in Table 1).

Table 1.

Peak Talairach coordinates of the activated areas for the somatosensory, visual and auditory experiments.

| Passive touch | | LH | | | | | RH | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Area | BA | x | y | z | t val. | p val. | x | y | z | t val. | p val. |
| Inferior temporal gyrus | 19 | −47 | −61 | −3 | 4.2 | 0.00085 | 47 | −54 | 1 | 4.7 | 0.00029 |
| Transverse temporal gyrus | 41 | −51 | −22 | 12 | 17.4 | 2.6 × 10⁻¹¹ | 50 | −20 | 10 | 16.4 | 1.9 × 10⁻¹¹ |
| Inferior parietal lobule | 40 | −32 | −43 | 46 | 12.2 | 4.0 × 10⁻⁹ | 34 | −47 | 45 | 7.4 | 0.000002 |
| Superior parietal lobule | 7 | −23 | −50 | 55 | 11.2 | 1.3 × 10⁻⁸ | 17 | −54 | 57 | 6.6 | 0.000012 |
| Precentral sulcus | 6 | −57 | −2 | 35 | 6.1 | 0.000027 | 49 | −6 | 7 | 8.8 | 2.7 × 10⁻⁷ |
| Middle frontal gyrus | 6 | −21 | −17 | 59 | 9.2 | 1.7 × 10⁻⁷ | 21 | −12 | 55 | 5.8 | 0.000038 |
| Medial frontal gyrus | 6 | −2 | −11 | 55 | 7.5 | 0.000002 | | | | | |
| Insula | 13 | −37 | −26 | 16 | 13.5 | 1.0 × 10⁻⁹ | | | | | |
| Thalamus | | −12 | −19 | 3 | 6.5 | 0.000013 | 11 | −22 | 13 | 6.3 | 0.000022 |
| Basal ganglia | | −24 | −2 | 10 | 4.8 | 0.00024 | 23 | 2 | 9 | 5.3 | 0.0001 |
| Cerebellum | | −37 | −37 | −32 | 3.9 | 0.0016 | 27 | −43 | −27 | 5.6 | 0.00073 |
| Visual object localizer | | LH | | | | | RH | | | | |
| Area | BA | x | y | z | t val. | p val. | x | y | z | t val. | p val. |
| Fusiform gyrus | 37 | −47 | −62 | −10 | 8.7 | 3.9 × 10⁻⁷ | 46 | −63 | −10 | 5.5 | 0.000074 |
| Precuneus | 19 | −26 | −74 | 39 | 5.1 | 0.000183 | | | | | |
| Cerebellum | | | | | | | 27 | −40 | −17 | 4 | 0.0014 |
| Middle occipital gyrus | 19 | −36 | −81 | 3 | 4.3 | 0.00067 | | | | | |
| Fusiform gyrus | 20 | −31 | −38 | −18 | 4.3 | 0.0018 | | | | | |
| Auditory control | | LH | | | | | RH | | | | |
| Area | BA | x | y | z | t val. | p val. | x | y | z | t val. | p val. |
| Superior temporal gyrus | 22/41 | −46 | −22 | 5 | 13 | 4.2 × 10⁻⁹ | 47 | −24 | 6 | 11 | 3.2 × 10⁻⁸ |
| Inferior parietal lobule | 40 | −36 | −54 | 40 | 3.9 | 0.0018 | | | | | |
| Insula | 13 | | | | | | 32 | 19 | 11 | 4.5 | 0.0006 |

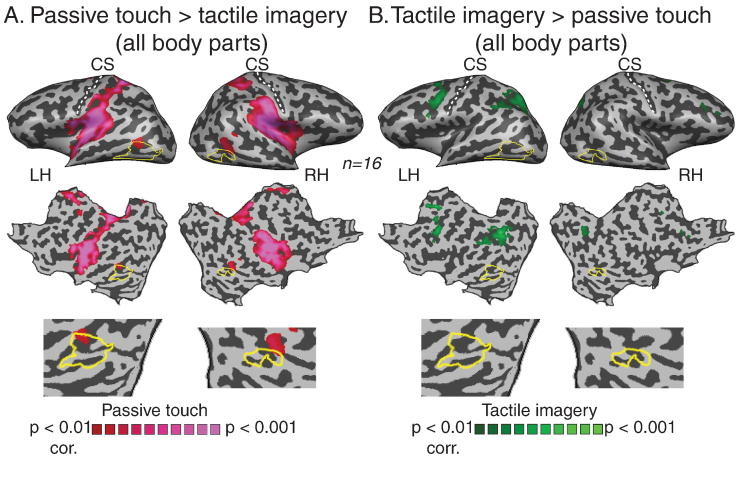

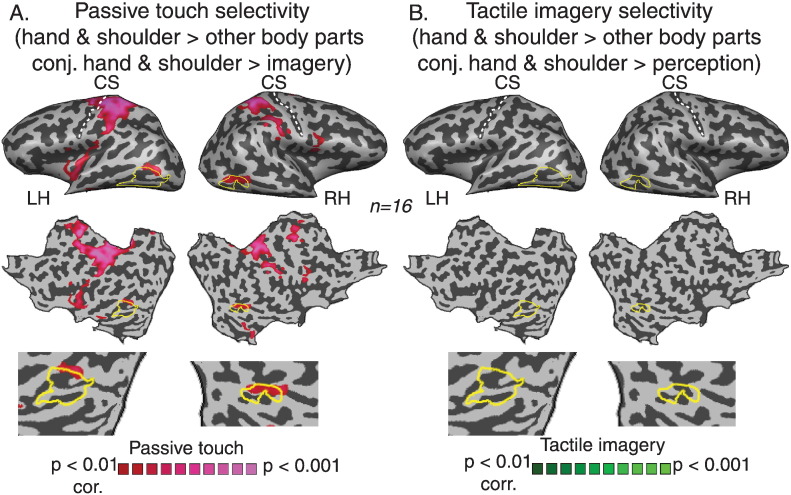

Some studies have suggested that tactile evoked responses in visual areas during tactile object recognition do not emerge from specific cross-modal input, but rather are dominated by higher cognitive functions, such as naming or mental imagery (Sathian and Zangaladze, 2001, Sathian et al., 1997, Zhang et al., 2004). To test this hypothesis directly, we compared the perception and mental imagery responses from the somatosensory experiment. First, when performing whole-brain analysis of tactile mental imagery of all body parts versus baseline (Fig. S3), we found one cluster of activation in the left visual cortex, overlapping area LO, and only deactivations in the right hemisphere. Next, in order to explore the specific contributions of passive touch and mental imagery to the evoked responses in the visual cortex, we contrasted these conditions directly (Fig. 3). The results show that while the responses in bilateral LO show a preference for passive touch over mental imagery (Fig. 3A, t (15) = 3, p < 0.01, corrected for multiple comparisons), there is no significant activation for mental imagery over perception in any area of the visual cortex (Fig. 3B, t (15) = 3, p < 0.01, corrected for multiple comparisons). Instead, a preference for mental imagery was found in two main clusters in the frontal and parietal cortices. Thus, although we cannot rule out some modulatory effect of tactile mental imagery in the left LO, the results suggest that it could not be the dominant factor responsible for the pattern of activation in bilateral LO.
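As a worked check of the thresholds quoted above, the following sketch computes the uncorrected p value that corresponds to a given t value with 15 degrees of freedom (sixteen subjects minus one). It assumes a two-tailed test, which the text does not state, and it does not model the correction for multiple comparisons, which is a separate step. The function names `t_pdf` and `two_tailed_p` are illustrative, and Simpson's rule is used only so that the example needs nothing beyond the Python standard library.

```python
from math import gamma, pi, sqrt


def t_pdf(x, df):
    """Probability density of Student's t distribution with df degrees of freedom."""
    norm = gamma((df + 1) / 2) / (sqrt(df * pi) * gamma(df / 2))
    return norm * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t_value, df, steps=2000):
    """Two-tailed p value, integrating the density on [0, |t|] with Simpson's rule.

    `steps` must be even. A two-tailed test is an assumption of this sketch.
    """
    t_abs = abs(t_value)
    h = t_abs / steps
    total = t_pdf(0.0, df) + t_pdf(t_abs, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3  # probability mass between 0 and |t|
    return 1 - 2 * central_half


p_contrast = two_tailed_p(3.0, 15)      # threshold used for the contrast maps
p_connectivity = two_tailed_p(2.2, 15)  # threshold used for the PPI maps
```

Under these assumptions, t (15) = 3 falls just below the 0.01 level and t (15) = 2.2 just below the 0.05 level, which is consistent with the thresholds reported for the group maps.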

Fig. 3.

Visual cortex activations show a preference for passive touch over tactile imagery. Statistical parametric maps for the contrasts between whole-body passive touch and tactile imagery. While passive-touch and mental imagery selective responses were both found in the parietal and prefrontal areas, visual cortex activations showed a preference for passive touch over mental imagery, and these activations overlapped the dorsal parts of LO (A). In contrast, mental imagery selective activation was found in the parietal and prefrontal cortices (B).

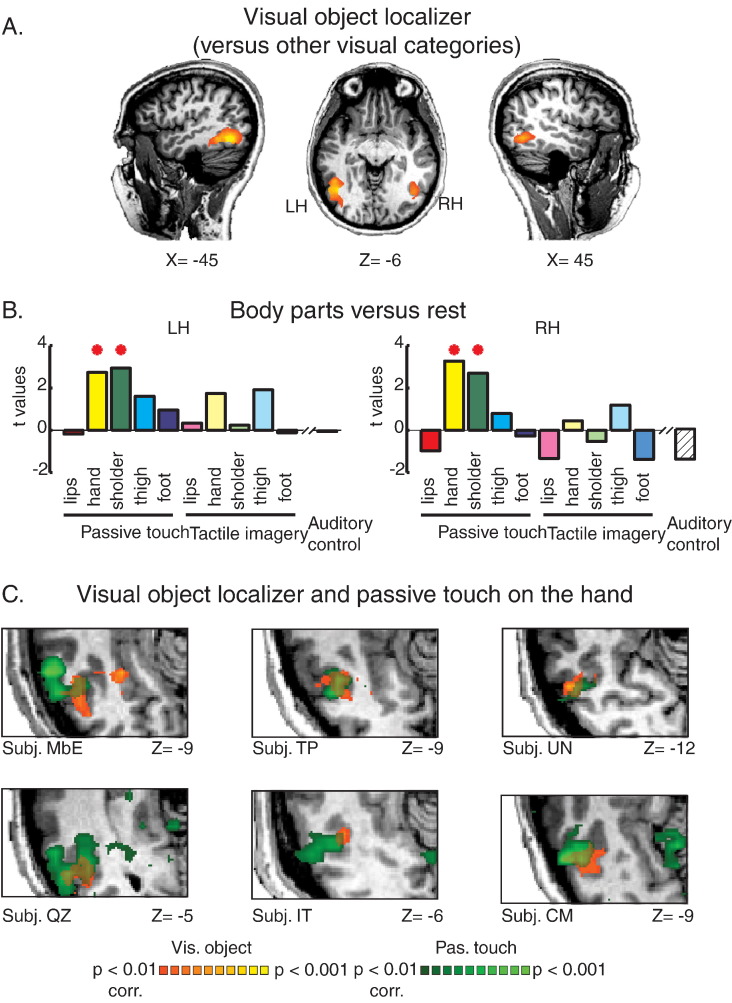

Next, we characterized the somatotopic preferences of the tactile activated area in LO and assessed whether this general somatosensory activation was driven by specific parts of the body or whether it should be considered a general response to passive touch on any body part. We used the visual localizer experiment to define visual object selective regions of interest (ROIs) within the ventral visual stream using the contrast between visual objects and all other categories (Fig. 4A). We then tested the responses to the somatosensory and mental imagery stimuli of the different body parts using ROI-GLM analysis. Fig. 4B presents the estimated statistics (compared to baseline) for each of the five body segments following passive touch or mental imagery, as well as the estimated statistics for the auditory control stimuli. The results show a clear bilateral and specific activation to passive touch on the hand and upper trunk. In contrast to passive touch, there was no significant activation to mental imagery of any of the body parts or to the auditory stimuli. Furthermore, the contrast between each body segment and all the other body parts independently (Fig. S4) supports the somatotopic preference and suggests that there is a specific tuning of the responses to the hand and upper trunk. In other words, the responses to passive touch in the visual object selective areas were specific to the hand and upper trunk stimulations. An alternative explanation for the somatotopic preference for hand and upper trunk in the visual cortex might be a general bias towards an enhanced representation of those body parts following the passive tactile stimulation. In order to rule out this potential confounding factor, we assessed the classical somatotopic organization of the primary sensory homunculus. Using a spectral analysis method we were able to demonstrate a full somatotopic gradient in S1 from lip to toe (see Supplementary material and Fig. S5 for details). Applying the same analysis to the tactile activated area in LO resulted in a partial somatotopic gradient which was mostly confined to the hand and shoulder segments. This further supports the GLM and ROI level analyses presented in Figs. 4 and 5.
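The logic of an ROI-GLM of the kind described above can be illustrated with a minimal sketch: the ROI-averaged time course is regressed on one predictor per body segment plus a constant, and each beta estimates that segment's response relative to baseline. Everything in the example is an assumption made for illustration: the condition labels, the effect sizes, the block timing, the noise-free signal and the use of plain boxcar predictors without hemodynamic convolution. It is not the analysis pipeline used in the study.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        rest = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - rest) / m[i][i]
    return x


def roi_glm(y, design):
    """Ordinary least squares betas obtained from the normal equations."""
    p = len(design[0])
    xtx = [[sum(r[i] * r[j] for r in design) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * v for r, v in zip(design, y)) for i in range(p)]
    return solve_linear(xtx, xty)


# Hypothetical labels and effect sizes, chosen only for this toy example.
conditions = ["hand", "shoulder", "segment_3", "segment_4", "segment_5"]
true_effect = [2.0, 1.0, 0.0, 0.0, 0.0]
block_len, rest_len = 4, 4

design, y = [], []
for k in range(len(conditions)):
    for t in range(block_len + rest_len):
        row = [0.0] * len(conditions) + [1.0]  # last column models the baseline
        if t < block_len:
            row[k] = 1.0  # boxcar predictor for condition k
        design.append(row)
        # Noise-free toy signal: baseline of 100 plus the condition effect.
        y.append(100.0 + sum(e * x for e, x in zip(true_effect, row)))

betas = dict(zip(conditions + ["baseline"], roi_glm(y, design)))
```

In this toy setting the estimated betas recover the simulated effects, so only the two "active" conditions differ from baseline. With real data the betas would be tested against zero across subjects.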

Fig. 4.

LO activation shows a high level of selectivity for the hand and upper trunk. A. Regions of interest (ROIs) were defined from Visual-LO using the contrast between visual objects and all other visual categories. B. ROI GLM analysis in Visual-LO for the five body segments from the somatosensory and tactile imagery conditions versus baseline, as well as for the auditory control. This analysis showed a clear bilateral somatotopic preference for the hand and upper trunk only during passive touch. * denotes p < 0.05, for significant activation. C. Single subject analysis. Overlay of the maps of passive touch to the hand and visual object selectivity is presented for six representative single subjects.

Fig. 5.

Hand and shoulder selectivity within the visual cortex. A. Statistical parametric map presenting the conjunction analysis of two contrasts: passive touch to the hand and shoulder > other body parts and passive touch to the hand and shoulder > tactile imagery of hand and shoulder. Hand and shoulder responses in LO show selectivity both over the other body parts and over tactile imagery. B. Applying the same analysis to tactile imagery (tactile imagery of the hand and shoulder > other body parts in conjunction with tactile imagery of the hand and shoulder > passive touch of hand and shoulder) did not yield significant activations.

In order to further assess this somatotopic preference for passive touch on the hand in LO, we also analyzed the responses at the single subject level. For this, the responses to tactile hand stimulation and visual object selectivity were mapped for each subject individually, based on the unsmoothed BOLD signal (i.e. no spatial smoothing). Next, we calculated the extent of overlap between these two maps for each subject individually using probabilistic analysis (see Material and methods section). Out of the sixteen subjects who participated in the somatosensory and visual experiments, fourteen had a significant overlap between passive touch on the hand and visual objects (one subject did not exhibit significant passive touch responses and one had passive touch responses which did not overlap with the visual responses). In other words, 87.5% of the subjects (14 of 16) showed significant passive touch responses that overlapped with visual object selectivity in LO. Table 2 presents the peak Talairach coordinates of the overlap between these maps for all the subjects. Fig. 4C further shows this activation at the single subject level. These results clearly show that the overlap between the tactile and visual activations is not simply an artifact due to smoothing effects or data pooling over the group, but rather supports a mechanism of low-level inputs from the hand to very specific object-sensitive areas in the visual cortex.
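One way to ask whether the overlap between two thresholded maps exceeds chance is sketched below. The exact probabilistic procedure is given in the Material and methods section and is not reproduced here; the hypergeometric test used in this sketch is a stand-in assumption, and the voxel counts and the function name `overlap_p_value` are hypothetical.

```python
from math import comb


def overlap_p_value(n_total, n_a, n_b, n_overlap):
    """Chance probability of an overlap at least as large as the one observed.

    Assumes map B's n_b voxels are drawn at random, without replacement,
    from n_total voxels of which n_a belong to map A (hypergeometric tail).
    """
    upper = min(n_a, n_b)
    tail = sum(
        comb(n_a, k) * comb(n_total - n_a, n_b - k)
        for k in range(n_overlap, upper + 1)
    )
    return tail / comb(n_total, n_b)


# Hypothetical voxel indices within a search volume of 1000 voxels.
n_voxels = 1000
tactile_hand = set(range(0, 50))     # voxels responding to touch on the hand
visual_objects = set(range(30, 90))  # voxels selective for visual objects

overlap = len(tactile_hand & visual_objects)
p_value = overlap_p_value(n_voxels, len(tactile_hand), len(visual_objects), overlap)
```

With these made-up maps, chance alone would predict an overlap of about three voxels (50 × 60 / 1000), so the observed overlap of twenty voxels is very unlikely under the random-placement assumption. Real activation maps are spatially correlated, which makes this simple test too liberal; it is shown only to make the idea of a per-subject overlap test concrete.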

Table 2.

Peak Talairach coordinates of the overlap between tactile hand stimulation and visual object selectivity.

| Subject | X | Y | Z | # of voxels (mm³) |
|---|---|---|---|---|
| 1. BL | – | – | – | – |
| 2. CM | −47 | −63 | −6 | 2493 |
| 3. IT | −42 | −59 | −5 | 426 |
| 4. MbE | −51 | −64 | −9 | 1032 |
| 5. MvE | −46 | −62 | 3 | 110 |
| 6. NE | −50 | −58 | −8 | 399 |
| 7. PS | −41 | −66 | 0 | 116 |
| 8. QZ | −48 | −73 | −6 | 2163 |
| 9. ThO | −46 | −59 | −7 | 202 |
| 10. TH | −52 | −67 | −12 | 638 |
| 11. TN | −45 | −64 | −12 | 112 |
| 12. TP | −42 | −61 | −8 | 1127 |
| 13. TZ | −48 | −66 | −12 | 2074 |
| 14. UH | −46 | −57 | −9 | 138 |
| 15. UN | −46 | −59 | −15 | 1066 |
| 16. XB | – | – | – | – |

Finally, we tested body part selectivity using conjunction analysis of two contrasts: (1) hand and shoulder versus other body parts within the same modality (perception or imagery) and (2) hand and shoulder stimulations across the modalities (perception versus imagery and vice versa). Fig. 5 presents these conjunction maps for passive touch (A, t (15) = 3, p < 0.01, corrected for multiple comparisons) and tactile imagery selectivity (B, t (15) = 3, p < 0.01, corrected for multiple comparisons) and shows that while passive touch selectivity to hand and shoulder stimulations was evident in somatosensory areas as well as in bilateral LO, similar selectivity for tactile imagery was not found in the visual cortex or in any other brain areas. Thus, LO activation was specific to hand and shoulder over other body parts, but only in perception and not for the mental imagery condition.
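The idea behind a conjunction of two contrasts can be sketched as follows. The text does not state which conjunction method was used, so the minimum-statistic approach shown here (a voxel survives only if both contrasts exceed the threshold) is an assumption, as are the toy t values and the function name `conjunction_map`.

```python
def conjunction_map(t_map_a, t_map_b, threshold):
    """Minimum-statistic conjunction of two voxelwise t maps.

    A voxel keeps the smaller of its two t values if that value exceeds
    the threshold; otherwise it is set to 0.0 (not significant).
    """
    result = []
    for t_a, t_b in zip(t_map_a, t_map_b):
        t_min = min(t_a, t_b)
        result.append(t_min if t_min > threshold else 0.0)
    return result


# Toy t values for four voxels (hypothetical numbers).
hand_shoulder_vs_other_parts = [4.1, 3.5, 1.0, 5.0]  # contrast 1
touch_vs_imagery = [3.2, 2.0, 4.0, 6.1]              # contrast 2

conj = conjunction_map(hand_shoulder_vs_other_parts, touch_vs_imagery, threshold=3.0)
```

Only the first and last voxels survive, because they are the only ones that pass the threshold in both contrasts. This is the sense in which a conjunction map identifies voxels that are selective for the hand and shoulder both over other body parts and over imagery.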

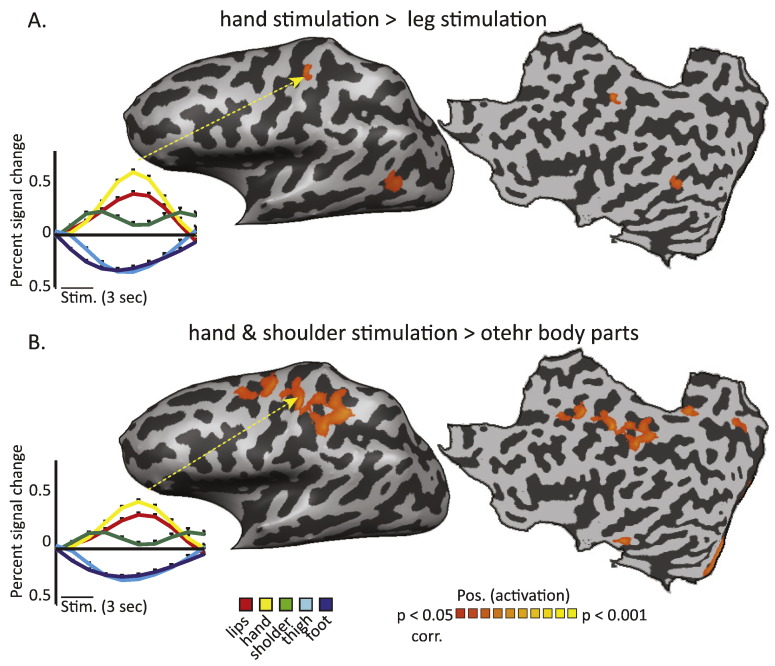

Our results so far have shown that, on top of the visual–haptic object selectivity in LO, passive low-level somatosensory information reaches this area from the hand and upper trunk, whereas the rest of the visual cortex is deactivated by passive touch. Next we explored whether this pattern was supported by functional connectivity as manifested by PPI analysis. PPI analysis, which aims to explore task-specific functional connectivity, tests which voxels in the brain increase their connectivity with a seed region of interest under specific conditions. We therefore tested which areas in the brain show high functional connectivity with Tactile-LO during hand and shoulder stimulations, compared to other body parts. To this end, we defined a seed region of interest from Tactile-LO, which served to define a set of PPI predictors from the somatosensory experiment (see Material and methods section for details). The individual PPI models were then used for a group random effect analysis of functional connectivity. Fig. 6 presents the results of this analysis for two main contrasts. First, we tested the PPI contrast between hand and leg stimulation, searching for voxels that increase their connectivity to Tactile-LO during hand stimulation compared to leg stimulation (Fig. 6A, t (15) = 2.2, p < 0.05, corrected for multiple comparisons). The results show one cluster in the parietal lobe, located in the postcentral gyrus (PCG). The averaged group time course sampled from this cluster clearly localizes it to the hand representation in S1. Next, we defined the PPI contrast of hand and shoulder stimulations compared to all other body parts (Fig. 6B, t (15) = 2.2, p < 0.05, corrected for multiple comparisons). This contrast revealed several clusters within the frontal and occipital cortices, as well as parietal activation stretching from the central sulcus to the postcentral sulcus and superior parietal lobule. Again, sampling the averaged group time course from the PCG cluster pointed to a body-specific increase in functional connectivity between Tactile-LO and the hand area in S1, which was specific to passive touch on the hand and shoulder. This suggests that Tactile-LO serves as an important node between object-related areas in the visual cortex and the somatosensory cortex through the hand representation.
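The core of a PPI model can be illustrated with a short sketch: the interaction predictor is the product of the mean-centered seed time course and a psychological contrast vector, and it enters the model alongside both main effects. The numbers and the function name `ppi_design` are hypothetical, and the sketch deliberately omits steps that many implementations add (such as forming the interaction at the neural level after deconvolution and then convolving it with a hemodynamic response function). The predictors actually used in the study are described in the Material and methods section.

```python
def ppi_design(seed, psych):
    """Build design rows [psych, seed, psych * seed, constant].

    seed:  time course sampled from the seed region (here, a toy Tactile-LO signal)
    psych: contrast code per time point, e.g. +1 hand, -1 leg, 0 otherwise
    """
    mean_seed = sum(seed) / len(seed)
    centered = [s - mean_seed for s in seed]  # mean-center before multiplying
    return [[p, s, p * s, 1.0] for p, s in zip(psych, centered)]


# Toy data for six time points (hypothetical values).
seed_signal = [1.0, 3.0, 2.0, 2.0, 0.0, 4.0]
hand_vs_leg = [1.0, 1.0, 0.0, 0.0, -1.0, -1.0]

design = ppi_design(seed_signal, hand_vs_leg)
interaction = [row[2] for row in design]
```

A voxel whose coupling with the seed is stronger during hand than during leg stimulation will load positively on the interaction column once the two main effects are accounted for. That interaction beta, taken to the group level, is what a PPI contrast map displays.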

Fig. 6.

Tactile-LO is functionally connected to S1 during hand and shoulder stimulations. A. Statistical parametric map presenting the PPI contrast of hand versus leg stimulation. B. PPI contrast of hand and shoulder versus other body parts. The results show that in both contrasts, there is significant connectivity between Tactile-LO and the primary somatosensory cortex. Extracting the time course of activations from these clusters (left inset panels) shows that the connectivity is selective to the hand area within S1.

Discussion

Summary of the results and general conclusions

In this work we studied the cross-modal effects of passive somatosensory inputs from the body on the visual cortex. Our results revealed a unique combination of positive and negative BOLD responses in the visual cortex of normally sighted individuals. We showed that passive touch on the body triggers an island of activation in the visual object selective area (area LO) of the lateral occipital complex (LOC). This activation was surrounded by massive negative BOLD, occupying retinotopic and non-retinotopic as well as ventral and dorsal visual areas (Fig. 1, Fig. 2 and S1, Table 1). Specifically, the deactivation characterized both foveal and peripheral areas, as we did not observe any significant activation in the delineated retinotopic areas. This was shown at the group and single subject levels (Figs. 1 and S1). This is the first demonstration of LO activation following passive tactile stimulation of body parts in which the subjects performed no task, in contrast to studies on haptic or visual-to-auditory shape conserving object recognition. We further showed that the responses in the visual cortex were due to the passive somatosensory stimulation per se, and did not emerge from auditory stimuli (Fig. 2, Fig. 4 and S2). Moreover, although there is a small effect of tactile mental imagery (in the left hemisphere, Fig. S3), our results show that mental imagery could not be the dominant factor explaining the tactile evoked activations in the left LO and does not seem to play any role in our experiment in the right LO (Fig. 3, Fig. 4, Fig. 5 and S4). Our results show that traditional visual cortical areas can be recruited (or deactivated) by passive cross-modal inputs and suggest that such metamodal processing is a fundamental principle even in the sighted brain. We further showed that the activation of LO by passive touch is specific to stimulation of the hand and upper trunk, compared to all other body parts and to mental imagery (Fig. 4, Fig. 5, S4 and Table 2). 
This specific activation of LO was further supported by PPI functional connectivity analysis which showed increased connectivity of Tactile-LO with the hand representation in the primary somatosensory cortex, but only during hand (and upper trunk) stimulation (Fig. 6). We suggest that Tactile-LO is a fundamental hub which serves as a node between object-related areas in the visual cortex and the somatosensory hand (and shoulder) representation, probably due to the critical evolutionary role of touch in object recognition and manipulation.

Thus, taken together, we suggest a general framework with specific predictions and potential mechanisms to study interactions within and between the senses. We suggest that even passive low-level sensory inputs from one modality can significantly and robustly influence most of the cortical areas of a different sensory modality. The nature and the specificity of these responses are determined by the functional relevance of the cross-modal inputs: if the information relayed by these inputs (passive touch on the hand in our case) is relevant to the task or computation carried out by a specific area (i.e. object selectivity in LO) it will be activated, while the same inputs will widely deactivate all the rest of the cortical areas that are not pertinent to this function. This prediction needs to be tested and validated across modalities (sound, touch and vision) in different cortical areas (temporal, occipital and parietal) and across functional domains and topographical maps.

Even passive touch stimulation without any task can drive the visual system: a putative framework/model

Increasing lines of evidence have challenged the classic view that the brain is organized according to parallel streams of discrete and modality-specific areas. This evidence has emerged, for example, from studies of blind participants which show that various visual areas can be recruited in a task-specific manner by non-visual inputs (Amedi et al., 2007, Matteau et al., 2010, Merabet and Pascual-Leone, 2010, Ptito et al., 2012, Reich et al., 2011, Renier et al., 2010, Striem-Amit and Amedi, 2014, Striem-Amit et al., 2012a). These findings have led to several complementary theories suggesting that the brain operates as a sensory-independent task machine and that many (if not all) areas which were considered unisensory can be driven by any sensory modality (Amedi et al., 2001, Pascual-Leone and Hamilton, 2001). However, these theories are still controversial, mainly since it is not clear whether such task-oriented functional specialization characterizes the sighted brain as well, and whether the cross-modal responses reported in the sighted reflect basic a-modal/multisensory processes or are epiphenomena.

Here, we addressed this array of questions in one of the most well studied cases of cross-modal recruitment of a visual area, the lateral occipital (LO) area, but under a novel context which enabled us to explore some of these questions directly. LO was one of the first full examples of a brain area that is clearly task-specific and sensory independent. It was shown to be activated by tactile and visual object recognition in the sighted (Amedi et al., 2001). In particular, the LO was activated by visual and tactile shape recognition tasks but not by auditory object recognition using sound associations (Amedi et al., 2002). Further research into the multisensory nature of LO revealed that vision and touch share shape information within the LO (James et al., 2002, Kim et al., 2012), that LO is involved in the recognition of familiar tactile objects (Lacey et al., 2010) and that it is activated more by tactile shape than by texture (Reiner et al., 2011, Stilla and Sathian, 2008). This strengthens the notion that LO deciphers geometric shape information, irrespective of the sensory modality used to acquire it. Other behavioral experiments have shown that general category knowledge about complex shape changes is readily shared across modalities, indicating that visual and haptic forms of shape information are integrated into a shared multisensory representation (Wallraven et al., 2014). However, it has been suggested that activation of visual areas during tactile tasks such as object recognition or tactile orientation discrimination does not reflect a-modal properties but is rather mediated through top-down processing arising from mental imagery (Sathian and Zangaladze, 2001, Sathian et al., 1997, Zhang et al., 2004) or visualization (Reiner, 2008) during haptic exploration.

One way to test this alternative explanation directly is to study cross-modal activation of visual areas in the congenitally blind. Recent evidence has shown that in some cases the same functional specialization emerges even without any visual experience or memories (Amedi et al., 2007, Collignon et al., 2011, Fiehler et al., 2009, Mahon et al., 2009, Matteau et al., 2010, Ptito et al., 2012, Reich et al., 2011, Striem-Amit et al., 2012a), thus ruling out visual mental imagery as an exclusive driving mechanism and suggesting that cortical functional specialization can be attributed at least partially to innately determined constraints (Striem-Amit et al., 2012a, Striem-Amit et al., 2012b). Another intriguing addition to this body of research is the recently reported preference for visual numerals (over letters or false fonts) in the right inferior temporal gyrus (rITG), a region therefore labeled the visual number-form area (VNFA, Shum et al., 2013). However, some symbols may represent both letters and numerals, such as the symbol for zero and the letter ‘O’ in English, since only a cultural convention makes them different. In fact, some cultures use the exact same symbol as a number with an assigned quantity and as a letter with an assigned phoneme, for example the symbols ‘V’, ‘I’ and ‘X’ in Roman script. Thus, the emergence of an area with a preference for the task of deciphering visual number symbols which is distinct from the area with a preference for the task of deciphering visual letters cannot be explained by the visual features of such symbols. In a recent study by Abboud et al. (2015) blind subjects were presented with such Roman script shapes encoded to sounds by a novel visual-to-music sensory-substitution device developed in our lab (EyeMusic, Abboud et al., 2014). The results show higher activation in the rITG when these symbols were processed as numbers compared to other control tasks on the same stimuli. 
Using resting-state fMRI in the blind and sighted, the same group further showed that different areas in the ventral visual cortex, including the numeral, letter and body image areas (rITG, VWFA and EBA, respectively) exhibited distinct patterns of functional connectivity (Abboud et al., 2015, Striem-Amit and Amedi, 2014). These findings suggest that specificity in the ventral ‘visual’ stream can emerge independently of sensory modality and visual experience, and is under the influence of unique connectivity patterns.

Here, we chose another approach to control for some potential confounding factors in the sighted. In all of the aforementioned studies of tactile object recognition in LO, the tactile activations emerged from active object manipulation which in all cases is confounded (in addition to visual mental imagery) to a certain extent with motor movements, semantic input and object recognition. Therefore, it is still unclear whether this reflects pure tactile, low level bottom-up processing (e.g. passive tactile stimulation), high level somatosensory processing, or top-down effects stemming from attentional demands of the task. Our results show for the first time that passive somatosensory stimulation of the body activates the visual cortex of sighted individuals, and overlaps the visual object selective area in LO. Moreover, our experimental paradigm allowed us to test the somatotopic preference of these passive tactile responses and showed that they are specific to the hand and upper trunk over all other body part stimulations and mental imagery. We showed that passive touch without any task evokes significant activation in LO, whereas mental imagery of the same stimuli did not produce the same effect. This suggests that at least in our case, low level and bottom-up cross-modal stimuli can drive the visual cortex even more strongly than high level cognitive and top-down processes such as mental imagery. Finally, the PPI functional connectivity analysis enabled us to further decipher the potential source of tactile evoked activation of the visual cortex. Our results on the task-specific functional connectivity between LO and the somatosensory cortex concur with previous effective connectivity studies (Deshpande et al., 2008, Peltier et al., 2007) which showed that the LOC is connected to somatosensory areas in the PCG and posterior insula. 
Here, we showed that these connections are specific both to the task (stimulation of the hand versus other body parts) and to the localization of the hand area in S1.

The framework we suggest here might help resolve some conflicting results regarding the effects of cross-modal stimuli on the visual cortex. For example, there are inconsistencies in the literature regarding whether visual areas in the normally sighted are recruited or deactivated by cross-modal stimuli (see, for example, Burton et al., 2004, Laurienti et al., 2002, Sadato et al., 1996, Weisser et al., 2005) and thus whether these areas might be considered multisensory. We argue that the main question here is not whether these areas should be considered purely multisensory. Rather, the crucial factor determining the cross-modal responses is the ecological relevance of the information conveyed by these inputs. We propose that even in the absence of a specific task (i.e. passive stimulation) an area can show a-modal characteristics if the cross-modal stimulus contains information pertinent to the task or computation performed by that area. When the input is not relevant to the ecological function or the computation carried out by a given area, the result is a lack of activation or, as we show here, the emergence of cross-modal deactivation. At least in the case of passive touch described here, this essentially characterizes most of the interactions between the somatosensory and visual cortices.

Similarly, converging evidence suggests that task-oriented and modality-independent processing might also characterize the language domain. Classical theories of speech perception and production have localized language processing to a left-lateralized network which includes portions of the superior temporal gyrus (Wernicke's area) as well as the inferior frontal cortex (Broca's area). These areas were linked to the processing of the auditory and motor aspects of spoken language. However, studies with deaf people using sign language have shown that linguistic processing in those areas is not determined exclusively by the sensory-motor mechanisms for hearing sound and producing speech. For example, although spoken and sign language are mediated through different modalities, a similar pattern of activation was observed when deaf and hearing people processed specific linguistic functions (e.g. MacSweeney et al., 2002, Petitto et al., 2000, see MacSweeney et al., 2008 for review). Furthermore, lesion studies have shown that left-hemisphere lesions in deaf signers produce similar aphasic symptoms to those seen in Broca's and Wernicke's aphasia in hearing patients (Bellugi et al., 1989, Hickok et al., 1998). Thus, such findings support a polymodal and task-specific function of cortical areas involved in language processing.

Negative BOLD and cross-modal deactivations

In recent years there has been growing interest in the phenomenon of negative BOLD responses or deactivations. Negative BOLD responses, which have been shown to reflect decreased neural activity and/or inhibition (Boorman et al., 2010, Devor et al., 2007, Shmuel et al., 2002, Shmuel et al., 2006) have been studied mainly in the context of the default mode network (DMN, Greicius and Menon, 2004, Raichle and Snyder, 2007, Raichle et al., 2001). In contrast to goal-directed and externally oriented cognition, the DMN was suggested to be involved in self-referential processing, and to be relatively sensory modality and task-independent (Buckner et al., 2008). Beyond the DMN, negative BOLD is usually associated with task- or stimulus-specific responses, and related to processes directing attentional resources away from the deactivated brain area (Amedi et al., 2005, Azulay et al., 2009, Mozolic et al., 2008).

Several studies have reported negative BOLD responses in visual areas following cross-modal stimuli; however, these results were generally found in the context of active tactile tasks (Kawashima et al., 1995, Merabet and Swisher, 2007, Sadato et al., 1996, Weisser et al., 2005) or focused on top-down effects such as attentional shift or task difficulty in cognitive performance (Hairston et al., 2008, Johnson and Zatorre, 2005, Laurienti et al., 2002, Mozolic et al., 2008). Here we provide another example of deactivation outside the DMN, which is unique in its extent, encompassing major parts of ventral and dorsal, retinotopic and non-retinotopic areas, particularly given that the stimulation was passive. This suggests that cross-modal effects are much more dramatic in contexts that have been less well explored; namely, the passive stream of input. Previous works reporting on cross-modal effects in the visual system could also be interpreted in light of this framework. Merabet and Swisher (2007) explored the involvement of low-level visual cortical areas in tactile discrimination tasks. They found that perceiving roughness or inter-dot spacing of tactile patterns resulted in significant activation in the primary visual cortex and deactivation of extrastriate cortical regions, including part of the LOC. Critically, this result shows that the context and information carried by the tactile inputs determine which areas will be recruited or deactivated. This framework could also apply to higher cognitive tasks influencing the visual cortex such as verbal memory. Azulay and colleagues (2009) studied verbal memory processing in a group of sighted and blind individuals and showed that cross-modal deactivation triggered by top-down processes is task-related. During memory retrieval, both auditory and visual areas were robustly deactivated in the sighted. 
In contrast, in the blind participants, only the auditory cortex was deactivated whereas visual areas, shown previously to be relevant to this task (Amedi et al., 2003, Raz et al., 2005), presented a positive BOLD signal. Thus, there is a task-dependent balance of activation and deactivation that might allow maximization of resources and the filtering out of non-relevant information to enable allocation of attention to the required task.

Taken together, we suggest that the balance between positive and negative BOLD might be crucial to our understanding of a large variety of intrinsic and extrinsic tasks including high-level cognitive functions, sensory processing and multisensory integration, and might reflect a fundamental interaction between the senses.

Concluding remarks

In this work we showed that passive touch on the hand and shoulder triggers a specific activation in the object and tool selective part of the lateral occipital complex within the visual cortex. How did visual object and tool selectivity develop in the huge cortical portion that is devoted to vision? According to the neural recycling hypothesis (Dehaene and Cohen, 2007), a novel cultural ability encroaches onto a pre-existing brain system, resulting in an extension of existing cortical networks to address the novel functionality. This hypothesis was used to explain the cortical network dedicated to reading, and to show how novel cultural objects rely on older brain circuits used for object and face recognition (Dehaene et al., 2005). In the context of this framework, once tools were introduced during evolution, brain machinery had to be developed to support tool recognition and tool use abilities. One cortical region which has been associated with knowledge and use of tools is a subarea of the left anterior parietal cortex (Culham and Valyear, 2006). A recent study implementing a monkey model of tool use (Iriki, 2005) showed that the presumably homologous area in the monkey has the capacity to be recycled for tool use. We speculate that such recycling processes could also support the specialization of the LO in object and tool recognition among the different categories within the ventral visual stream. Compared to other visual object categories (such as houses, faces, visual script) tools are unique as they are haptically manipulated during their everyday use. Thus, functional specialization for tool recognition would potentially involve cortical areas that process touch on (and motor movement of) body parts that are mainly used to manipulate tools, as well as high-level visual object recognition centers and the connections between them. The unique pattern of positive BOLD for only the hand and shoulder and only in visual object and tool-selective areas is in line with these assumptions. The functional connectivity reported here either already existed or was developed/strengthened by the growing need to use tools during evolution (even today most people use tools extensively during a large part of their waking hours). Our work is the first to highlight and provide concrete evidence to support this missing link between passive touch on the hand and shoulder and visual object and tool selectivity, including the pathway supporting it. Further research is needed to test this framework under different contextual circumstances and in different functionally selective areas in the visual system and the entire brain.

Acknowledgments

This work was supported by a European Research Council grant (grant number 310809 to AA); the James S. McDonnell Foundation scholar award (grant number 220020284 to AA); the Edmond and Lily Safra Center for Brain Sciences (ELSC) Vision center grant; the Maratier family; the Gatsby Charitable Foundation; the Levzion Program for PhD students (to Z.T.) and by the Hebrew University Hoffman Leadership and Responsibility Fellowship Program (to R.G.).

Footnotes

Supplementary data to this article can be found online at http://dx.doi.org/10.1016/j.neuroimage.2015.11.058.

Appendix A. Supplementary data

Supplementary material.

References

- Abboud S., Hanassy S., Levy-Tzedek S., Maidenbaum S., Amedi A. EyeMusic: introducing a “visual” colorful experience for the blind using auditory sensory substitution. Restor. Neurol. Neurosci. 2014;32:247–257. doi: 10.3233/RNN-130338.

- Abboud S., Maidenbaum S., Dehaene S., Amedi A. A number-form area in the blind. Nat. Commun. 2015;6:6026. doi: 10.1038/ncomms7026.

- Amedi A., Malach R., Hendler T., Peled S., Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nat. Neurosci. 2001;4:324–330. doi: 10.1038/85201.

- Amedi A., Jacobson G., Hendler T., Malach R., Zohary E. Convergence of visual and tactile shape processing in the human lateral occipital complex. Cereb. Cortex. 2002;12:1202–1212. doi: 10.1093/cercor/12.11.1202.

- Amedi A., Raz N., Pianka P., Malach R., Zohary E. Early “visual” cortex activation correlates with superior verbal memory performance in the blind. Nat. Neurosci. 2003;6:758–766. doi: 10.1038/nn1072.

- Amedi A., Malach R., Pascual-Leone A. Negative BOLD differentiates visual imagery and perception. Neuron. 2005;48:859–872. doi: 10.1016/j.neuron.2005.10.032.

- Amedi A., Stern W.M., Camprodon J.A., Bermpohl F., Merabet L., Rotman S., Hemond C., Meijer P., Pascual-Leone A. Shape conveyed by visual-to-auditory sensory substitution activates the lateral occipital complex. Nat. Neurosci. 2007;10:687–689. doi: 10.1038/nn1912.

- Amedi A., Raz N., Azulay H., Malach R., Zohary E. Cortical activity during tactile exploration of objects in blind and sighted humans. Restor. Neurol. Neurosci. 2010;28:143–156. doi: 10.3233/RNN-2010-0503.

- Arnott S.R., Thaler L., Milne J.L., Kish D., Goodale M.A. Shape-specific activation of occipital cortex in an early blind echolocation expert. Neuropsychologia. 2013;51:938–949. doi: 10.1016/j.neuropsychologia.2013.01.024.

- Azulay H., Striem E., Amedi A. Negative BOLD in sensory cortices during verbal memory: a component in generating internal representations? Brain Topogr. 2009;21:221–231. doi: 10.1007/s10548-009-0089-2.

- Beauchamp M.S. See me, hear me, touch me: multisensory integration in lateral occipital–temporal cortex. Curr. Opin. Neurobiol. 2005;15:145–153. doi: 10.1016/j.conb.2005.03.011.

- Beauchamp M.S., Yasar N.E., Kishan N., Ro T. Human MST but not MT responds to tactile stimulation. J. Neurosci. 2007;27:8261–8267. doi: 10.1523/JNEUROSCI.0754-07.2007.

- Bellugi U., Poizner H., Klima E.S. Language, modality and the brain. Trends Neurosci. 1989;12:380–388. doi: 10.1016/0166-2236(89)90076-3.

- Boorman L., Kennerley A.J., Johnston D., Jones M., Zheng Y., Redgrave P., Berwick J. Negative blood oxygen level dependence in the rat: a model for investigating the role of suppression in neurovascular coupling. J. Neurosci. 2010;30:4285–4294. doi: 10.1523/JNEUROSCI.6063-09.2010.

- Borra E., Rockland K.S. Projections to early visual areas v1 and v2 in the calcarine fissure from parietal association areas in the macaque. Front. Neuroanat. 2011;5:35. doi: 10.3389/fnana.2011.00035.

- Buckner R.L., Andrews-Hanna J.R., Schacter D.L. The brain's default network: anatomy, function, and relevance to disease. Ann. N. Y. Acad. Sci. 2008;1124:1–38. doi: 10.1196/annals.1440.011.

- Burton H., Sinclair R.J., McLaren D.G. Cortical activity to vibrotactile stimulation: an fMRI study in blind and sighted individuals. Hum. Brain Mapp. 2004;23:210–228. doi: 10.1002/hbm.20064.

- Collignon O., Vandewalle G., Voss P., Albouy G., Charbonneau G., Lassonde M., Lepore F. Functional specialization for auditory-spatial processing in the occipital cortex of congenitally blind humans. Proc. Natl. Acad. Sci. U. S. A. 2011;108:4435–4440. doi: 10.1073/pnas.1013928108.

- Costantini M., Urgesi C., Galati G., Romani G.L., Aglioti S.M. Haptic perception and body representation in lateral and medial occipito-temporal cortices. Neuropsychologia. 2011;49:821–829. doi: 10.1016/j.neuropsychologia.2011.01.034.

- Culham J.C., Valyear K.F. Human parietal cortex in action. Curr. Opin. Neurobiol. 2006;16:205–212. doi: 10.1016/j.conb.2006.03.005.

- Dehaene S., Cohen L. Cultural recycling of cortical maps. Neuron. 2007;56:384–398. doi: 10.1016/j.neuron.2007.10.004.

- Dehaene S., Cohen L., Sigman M., Vinckier F. The neural code for written words: a proposal. Trends Cogn. Sci. 2005;9:335–341. doi: 10.1016/j.tics.2005.05.004.

- Deshpande G., Hu X., Stilla R., Sathian K. Effective connectivity during haptic perception: a study using Granger causality analysis of functional magnetic resonance imaging data. NeuroImage. 2008;40:1807–1814. doi: 10.1016/j.neuroimage.2008.01.044.

- Devor A., Tian P., Nishimura N., Teng I.C., Hillman E.M.C., Narayanan S.N., Ulbert I., Boas D.A., Kleinfeld D., Dale A.M. Suppressed neuronal activity and concurrent arteriolar vasoconstriction may explain negative blood oxygenation level-dependent signal. J. Neurosci. 2007;27:4452–4459. doi: 10.1523/JNEUROSCI.0134-07.2007.

- Engel S.A. The development and use of phase-encoded functional MRI designs. NeuroImage. 2012;62:1195–1200. doi: 10.1016/j.neuroimage.2011.09.059.

- Engel S.A., Rumelhart D.E., Wandell B.A., Lee A.T., Glover G.H., Chichilnisky E.J., Shadlen M.N. fMRI of human visual cortex. Nature. 1994;369:525. doi: 10.1038/369525a0.

- Engel S.A., Glover G.H., Wandell B.A. Retinotopic organization in human visual cortex and the spatial precision of functional MRI. Cereb. Cortex. 1997;7:181–192. doi: 10.1093/cercor/7.2.181.

- Epstein R., Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392:598–601. doi: 10.1038/33402.

- Falchier A., Clavagnier S., Barone P., Kennedy H. Anatomical evidence of multimodal integration in primate striate cortex. J. Neurosci. 2002;22:5749–5759. doi: 10.1523/JNEUROSCI.22-13-05749.2002.

- Fiehler K., Burke M., Bien S., Röder B., Rösler F. The human dorsal action control system develops in the absence of vision. Cereb. Cortex. 2009;19:1–12. doi: 10.1093/cercor/bhn067.

- Forman S.D., Cohen J.D., Fitzgerald M., Eddy W.F., Mintun M.A., Noll D.C. Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): use of a cluster-size threshold. Magn. Reson. Med. 1995;33:636–647. doi: 10.1002/mrm.1910330508.

- Friston K.J., Buechel C., Fink G.R., Morris J., Rolls E., Dolan R.J. Psychophysiological and modulatory interactions in neuroimaging. NeuroImage. 1997;6:218–229. doi: 10.1006/nimg.1997.0291.

- Friston K.J., Holmes A.P., Price C.J., Buchel C., Worsley K.J. Multisubject fMRI studies and conjunction analyses. NeuroImage. 1999;10:385–396. doi: 10.1006/nimg.1999.0484.

- Gleiss S., Kayser C. Eccentricity dependent auditory enhancement of visual stimulus detection but not discrimination. Front. Integr. Neurosci. 2013;7:52. doi: 10.3389/fnint.2013.00052.

- Greicius M.D., Menon V. Default-mode activity during a passive sensory task: uncoupled from deactivation but impacting activation. J. Cogn. Neurosci. 2004;16:1484–1492. doi: 10.1162/0898929042568532.

- Hairston W.D., Hodges D.A., Casanova R., Hayasaka S., Kraft R., Maldjian J.A., Burdette J.H. Closing the mind's eye: deactivation of visual cortex related to auditory task difficulty. Neuroreport. 2008;19:151–154. doi: 10.1097/WNR.0b013e3282f42509.

- Hickok G., Bellugi U., Klima E.S. The neural organization of language: evidence from sign language aphasia. Trends Cogn. Sci. 1998;2:129–136. doi: 10.1016/s1364-6613(98)01154-1.

- Huang R.-S., Chen C., Tran A.T., Holstein K.L., Sereno M.I. Mapping multisensory parietal face and body areas in humans. Proc. Natl. Acad. Sci. U. S. A. 2012;109:18114–18119. doi: 10.1073/pnas.1207946109.

- Iriki A. A prototype of Homo-Faber: a silent precursor of human intelligence in the tool-using monkey brain. In: Dehaene S., Duhamel J.R., Hauser M., Rizzolatti G., editors. From Monkey Brain to Human Brain: A Fyssen Foundation Symposium. MIT Press; Cambridge, MA: 2005. pp. 133–157.

- James T.W., Humphrey G.K., Gati J.S., Servos P., Menon R.S., Goodale M.A. Haptic study of three-dimensional objects activates extrastriate visual areas. Neuropsychologia. 2002;40:1706–1714. doi: 10.1016/s0028-3932(02)00017-9.

- James T.W., Stevenson R.A., Kim S., Vanderklok R.M., James K.H. Shape from sound: evidence for a shape operator in the lateral occipital cortex. Neuropsychologia. 2011;49:1807–1815. doi: 10.1016/j.neuropsychologia.2011.03.004.

- Johnson J.A., Zatorre R.J. Attention to simultaneous unrelated auditory and visual events: behavioral and neural correlates. Cereb. Cortex. 2005;15:1609–1620. doi: 10.1093/cercor/bhi039.

- Kawashima R., O'Sullivan B.T., Roland P.E. Positron-emission tomography studies of cross-modality inhibition in selective attentional tasks: closing the “mind's eye”. Proc. Natl. Acad. Sci. U. S. A. 1995;92:5969–5972. doi: 10.1073/pnas.92.13.5969.

- Kim S., Stevenson R.A., James T.W. Visuo-haptic neuronal convergence demonstrated with an inversely effective pattern of BOLD activation. J. Cogn. Neurosci. 2012;24:830–842. doi: 10.1162/jocn_a_00176.

- Lacey S., Flueckiger P., Stilla R., Lava M., Sathian K. Object familiarity modulates the relationship between visual object imagery and haptic shape perception. NeuroImage. 2010;49:1977–1990. doi: 10.1016/j.neuroimage.2009.10.081.

- Laurienti P.J., Burdette J.H., Wallace M.T., Yen Y.-F., Field A.S., Stein B.E. Deactivation of sensory-specific cortex by cross-modal stimuli. J. Cogn. Neurosci. 2002;14:420–429. doi: 10.1162/089892902317361930.

- MacSweeney M., Woll B., Campbell R., McGuire P.K., David A.S., Williams S.C.R., Suckling J., Calvert G.A., Brammer M.J. Neural systems underlying British Sign Language and audio–visual English processing in native users. Brain. 2002;125:1583–1593. doi: 10.1093/brain/awf153.

- MacSweeney M., Capek C.M., Campbell R., Woll B. The signing brain: the neurobiology of sign language. Trends Cogn. Sci. 2008;12:432–440. doi: 10.1016/j.tics.2008.07.010.

- Mahon B.Z., Anzellotti S., Schwarzbach J., Zampini M., Caramazza A. Category-specific organization in the human brain does not require visual experience. Neuron. 2009;63:397–405. doi: 10.1016/j.neuron.2009.07.012.

- Matteau I., Kupers R., Ricciardi E., Pietrini P., Ptito M. Beyond visual, aural and haptic movement perception: hMT+ is activated by electrotactile motion stimulation of the tongue in sighted and in congenitally blind individuals. Brain Res. Bull. 2010;82:264–270. doi: 10.1016/j.brainresbull.2010.05.001.

- McLaren D.G., Ries M.L., Xu G., Johnson S.C. A generalized form of context-dependent psychophysiological interactions (gPPI): a comparison to standard approaches. NeuroImage. 2012;61:1277–1286. doi: 10.1016/j.neuroimage.2012.03.068.

- Merabet L.B., Pascual-Leone A. Neural reorganization following sensory loss: the opportunity of change. Nat. Rev. Neurosci. 2010;11:44–52. doi: 10.1038/nrn2758.

- Merabet L., Swisher J. Combined activation and deactivation of visual cortex during tactile sensory processing. J. Neurophysiol. 2007:1633–1641. doi: 10.1152/jn.00806.2006.

- Mozolic J.L., Joyner D., Hugenschmidt C.E., Peiffer A.M., Kraft R.A., Maldjian J.A., Laurienti P.J. Cross-modal deactivations during modality-specific selective attention. BMC Neurol. 2008;8:35. doi: 10.1186/1471-2377-8-35.

- Pascual-Leone A., Hamilton R. The metamodal organization of the brain. Prog. Brain Res. 2001;134:427–445. doi: 10.1016/s0079-6123(01)34028-1.