Abstract

The long-term goal of the Virtual Physiological Human and Digital Patient projects is to run 'simulations' of health and disease processes on the virtual or 'digital' patient, and use the results to make predictions about real health and determine the best treatment specifically for an individual. This is termed 'personalized medicine', and is intended to be the future of healthcare. How will people interact and engage with their virtual selves, and how can virtual models be used to motivate people to actively participate in their own healthcare? We discuss these questions, and describe our current efforts to integrate and realistically embody psychobiological models of face-to-face interaction to enliven and increase engagement of virtual humans in healthcare. Overall, this paper highlights the need for attention to the design of human–machine interfaces to address patient engagement in healthcare.

Keywords: computer graphics, biological psychology, virtual humans, healthcare

1. Introduction

In this paper, we present our perspective on the need for more than just a disembodied avatar to engage individuals in healthcare. We argue that giving an avatar a simplified nervous system and the ability to convey realistic emotions creates greater patient engagement and motivation. The paper is set out in several sections. First, we present a scenario of what a futuristic healthcare interaction might look like between a patient, a virtual physiological model of the patient and a virtual healthcare assistant, followed by discussion of how this system could educate patients about illnesses and motivate behaviour change. We then present our work to date on a biologically based lifelike model, with details of how the nervous system is linked to the facial motor systems using brain language (BL), a modular simulation framework. We then present two examples, BabyX and the Auckland Face Simulator (AFS), to illustrate these biologically based models. This is a perspective paper and does not present new results.

Imagine Peter sees his digital self in a virtual mirror. His digital self is a computer-generated simulation of the current state of his health, updated by real-time information from sensors. His digital self represents his ‘Health Avatar’ which embodies all of his health information and predictive models. Peter can see himself as if looking in a mirror and then can switch the view to see the underlying tissues and organs functioning in real time. Also displayed is a virtual healthcare assistant, Stig, who asks Peter to describe how he feels, and asks Peter to perform certain exercises. The virtual healthcare assistant notes that Peter's head is tilted downwards, his eyelids are heavy, and he is speaking more slowly and negatively than usual. Stig appears concerned and expresses to Peter that he seems a bit low, and it is showing in his mood and physical health signs. He notes from sensors in Peter's watch that he hasn't been doing much exercise lately and his blood pressure is elevated. Stig knows that Peter has had previous issues with high cholesterol and hypertension and is prescribed cholesterol-lowering medication. The avatar shows Peter on his own virtual self a visualization of restricted blood flow in the arteries of his heart and the predicted change in LDL cholesterol due to the lack of exercise. It also shows Peter the effects of endorphins released by exercise on his mood. ‘What do you think you could do to improve this?’ the assistant asks. ‘Well, I have not been taking my medication lately because I have been feeling well. I should start taking it again. Also I have been too busy to exercise. I should prioritise that,’ Peter responds. The healthcare assistant smiles with an emphatic ‘yes’, and reminds him that engaging in regular exercise and taking medication will improve his long-term prognosis.
Peter then sees himself virtually taking medication and performing exercises, and also sees the predicted lowering of cholesterol in his arteries and improvements in behavioural manifestations of mood. The next day when Peter sees his digital mirror, his healthcare assistant notices his brighter voice and the higher tilt of his head, and says ‘I am already noticing positive changes in your physiological signs—if you keep this up in one week you will begin to see these further improvements', and Peter's virtual self smiles, flushed with virtual endorphins.

This futuristic scenario illustrates how individuals may be engaged and empowered to participate in their own healthcare on a daily basis, by interacting on a personal level with the information and predictive models in the Virtual Physiological Human (VPH) vision of a Digital Patient (DP) and ‘health avatar’ [1]. The scenario includes the following stages: (i) appraisal of current health state, (ii) integration of new information into existing model of health, (iii) demonstration of what is going on, (iv) diagnosis of issue and possible causes, (v) ownership, (vi) identification of strategies to improve the current state, (vii) motivation to implement behaviour, and (viii) visualization of improved health after behavioural change.

Engaging with the user on both cognitive and affective levels is critical in this scenario. To create initial engagement and draw his attention, Peter sees his model mirror his movements, but in digital form. The virtual healthcare assistant is highly lifelike, responsive and friendly, activating a social response. During the appraisal process, the virtual assistant senses Peter's affective state from non-verbal cues and language choice, and responds empathetically, to meet Peter at his level during the appraisal interview. To demonstrate at a personal level, Peter's richly visualized virtual self makes use of integrated simulation models to visualize the physical (and potentially mental) diagnosis produced by the VPH models. Peter's virtual self looks exactly like Peter and moves as his reflection, to maximize identification with the diagnosis.

In this article, we discuss some existing technologies which can form such a vision and focus on the use of integrated models to create engaging human-like interfaces to embody virtual healthcare assistants and virtual selves. We focus on our work in creating an interactive simulation framework to create lifelike engaging virtual humans incorporating multi-scale computational models, advanced graphics (anatomy/physiology), behaviours, sensing and a high degree of responsiveness, which aims to appeal to a user on a more humanistic and social level.

2. The Virtual Physiological Human

The Physiome and VPH [1] aim to deliver computational modelling frameworks for integrating every level in human biology—one that links genes, proteins, cells and organs to the whole body. Ultimately, the goal of the VPH/Physiome Project is to piece together the complete VPH: a personalized, three-dimensional model of an individual's unique physiological make-up. It is a way to share observations, to derive predictive hypotheses from them, and to integrate them into a constantly improving understanding of human physiology and pathology, by regarding it as a single system. The VPH vision highlights the need for the holistic treatment of the patient, and proposes the use of integrated functional simulation—creating a customized computer model of the patient's condition across multiple organ systems and length scales, and across time and environment.

As part of this vision, the DP is an envisaged super-sophisticated computer program that will be capable of generating a virtual living version of yourself. When this is achieved, it will be possible to run ‘simulations’ of health and disease processes on the virtual or ‘digital’ you, and use the results to make predictions about your real health. It will also be possible to determine the best treatment specifically for you. This is termed ‘personalized medicine’, and is intended to be the future of healthcare. An initial step in this process is the myhealthavatar (http://www.myhealthavatar.eu) which aims to provide an interface to support the collection of, and access to, the complete medical information relating to individual citizen's health status, gathered from different sources, including medical data, documents, lifestyle and other personal information, represented along a timeline.

A DP may be used by doctors to model disease and treatment processes in an individual. Virtual physiological humans can also be used to represent patients in the training of medical students, for example to detect cranial nerve abnormalities [2]. There is even an application for virtual avatars in training healthcare professionals to be more empathetic, with promising initial results [3]. Medical students are asked to interview a virtual patient and the student's ability to respond empathetically at certain points in the interview can be evaluated in a systematic way.

A key goal of the project is to encourage self-engagement and empowerment of citizens in their own health. How can the DP and health avatar vision be most effectively used to achieve this?

3. Using the digital patient to engage and motivate people in their own healthcare

In order to understand how to engage and motivate people in their own health, it is important to first understand how people naturally make sense of symptoms and respond to health threats. Leventhal's common sense model is a good starting point to illustrate this process [4]. A critical point is that people are active problem solvers, not just passive recipients of healthcare. They will only engage in the healthcare behaviours that make sense to them based on their understanding of their illness. For example, they will only quit smoking if they believe that smoking caused their illness, or take medication if they perceive it as helpful. People's lay understandings are formed when they perceive sensations, such as a headache or rapid heartbeat, and they automatically attribute these to some cause, monitor whether the symptoms are getting worse over time, and make a tentative diagnosis based on past and current experiences, including conversations and observations of others. If people interpret symptoms as a possible sign of illness, this can trigger an emotional fear response, and a concurrent appraisal of what they can do about the threat, and the subsequent adoption of behaviours to reduce the threat. The results of the coping behaviour are then appraised and fed back into this self-regulation model [5].

Interestingly, research has shown that these mental models of illness are not just composed of the identity, cause, timeline, consequences, and the ability to control the illness but also incorporate visual imagery of the body itself [6]. When asked to draw what they thought had happened to their heart after a heart attack, patients drew damage on the heart and blockages in the arteries to various degrees. Furthermore, the more damage patients drew at the time of the infarct, the worse they rated their recovery at 3 months, with lower perceptions of their own ability to control the illness and a slower return to work, independent of the actual severity of the heart attack quantified by troponin T. This suggests that these lay visual models can form the basis for patients' healthcare decisions and behaviour. A number of subsequent studies have shown that patients with other illnesses also have visual models of the effects of the illness on the body (reviewed by van Leeuwen et al. [7]).

This opens an opportunity to educate the patient to change their mental models and, therefore, change their health behaviours. Interventions to date have mainly been based on tailored psychoeducational sessions with psychologists to discuss personal beliefs and promote more accurate models with the aid of diagrams, and plastic or physical models [8–10]. More recently, computer animations have been shown to patients with acute coronary syndrome to demonstrate the formation of a clot in a coronary artery causing a heart attack, subsequent damage to the heart and its ability to function, and the beneficial effects of treatment and behaviour change on reduced cholesterol levels in the blood and less obstructed blood flow [11]. This study found that the visual animations promoted more positive beliefs about the illness, and motivated behavioural change including enhanced exercise and quicker return to normal activities. Another computer simulation of HIV virus infection and the protection provided by medication resulted in improved patient adherence to prescribed medication [12].

Patients often have poor knowledge about their anatomy. When doctors overestimate patient knowledge, miscommunication between doctors and patients can occur. For example, research has shown that on average only 50% of people can correctly identify where major organs are, such as the kidneys and stomach, and that patients with conditions that affect these organs are not more accurate than the general public [13].

The VPH embodied as a virtual human is the ultimate tool to demonstrate the physiology and anatomy of disease and treatment processes to the patient. A real advantage is that the virtual human can be personalized to the individual patient, so the patient's own physical characteristics can be shown and integrated with their medical histories. For example, if a patient has diabetes and then has a heart attack, this combination can be visually modelled inside their own virtual self, making the information extremely personally relevant and clear.

Once a patient is given information that their body function is compromised, this generates a fear response. In order to translate this fear response into health-promoting adaptive behaviours, a plan of action must be provided that the patient feels he/she is capable of implementing. One way to encourage people to pursue a particular behavioural change is to show through modelling how the person will be rewarded by adopting the behaviour [14]. Research has shown that the similarity of the model to the person is very important: the more someone identifies with a model, the more they may change their behaviour [15].

The advantage of a virtual self is that behaviour can be modelled by the patient him/herself, so he/she can see a realistic version of him/herself doing the desired behaviour and see the modelled consequences. The patients can also watch themselves transform from anxious and uncertain to being calm and in control after performing desired behaviours. Initial research suggests that behaviour can be learned just as well from a model in a video as a model in real life [16].

4. Using virtual healthcare assistants to engage and motivate people in their own healthcare

In most current Internet healthcare applications, avatars are used to represent the healthcare provider rather than the patient themselves. The avatar is used to ask questions and deliver therapies or advice. One advantage of using a computer avatar to deliver healthcare interventions is the ability for it to be widely adopted across large populations anywhere, at any time, at low costs.

Avatars are already being used to deliver computerized cognitive behaviour therapy (cCBT). In adults, cCBT has been shown to be an effective treatment for a number of emotional disorders such as anxiety and depression [17–20], panic disorder [21], agoraphobia [22], obsessive–compulsive disorder [23,24], and post-traumatic stress disorder [25]. Consequently, cCBT is now recommended by the National Institute for Health and Clinical Excellence for the treatment of mild to moderate depression and anxiety in adults [26]. Other health behaviour change theories are being incorporated into avatar-based computerized interventions, including initial attempts to have avatars use motivational interviewing techniques to promote behavioural change [27].

Researchers at the University of Southern California have developed the SimCoach project [28], which is motivated by the challenge of empowering troops and their significant others in regard to their healthcare, especially with respect to issues related to the psychological toll of military deployment. SimCoach virtual humans are not designed to act as therapists, but rather to encourage users to explore available options and seek treatment when needed by fostering comfort and confidence in a safe and anonymous environment where users can express their concerns to an artificial conversational partner without fear of judgement or possible repercussions. The related SimSensei project seeks to enable a new generation of clinical decision support tools and interactive virtual agent-based healthcare dissemination/delivery systems that are able to recognize and identify psychological distress from multi-modal signals [29]. These tools aim to provide military personnel and their families better awareness and access to care while reducing the stigma of seeking help. For example, the system's early identification of a patient's high or low distress state could generate the appropriate information to help a clinician diagnose a potential stress disorder. User-state sensing can also be used to create long-term patient profiles that help assess change over time.

Another key advantage of virtual healthcare assistants is that the designer can make the agent possess the optimum personality and behaviour required in a healthcare assistant, possibly even better than your average human healthcare worker who may be distracted, impatient or not have good communication skills. The embodied nature of the agent is important for creating better patient engagement. Research has shown that people talk and smile more towards a robot than a computer tablet delivering the same exercise and relaxation instructions [30]. Furthermore, people are more likely to do what the robot says, trust the robot and want to interact with it again more than the tablet. Other research shows the more animated the agent, the greater the therapeutic alliance formed with it [31].

The rules for developing good avatars can be based on what works in good doctor–patient relationships. Just like human healthcare professionals, the appearance and behaviour of the virtual agent healthcare assistant plays a critical role in engaging the patient and forming a therapeutic alliance. Verbal behaviours (such as expressing empathy), and non-verbal behaviours (such as direct eye gaze) and following appropriate social rules for conversations are all important aspects in forming a therapeutic alliance [32]. The appropriateness of realism and rendering of the character have been shown to differ by application domain with more realistic avatars more suitable for the healthcare domain [33]. Other evidence in support of a realistic avatar comes from an experiment showing a realistic face is more acceptable on a healthcare robot than a metallic face, or no face [34].

These two applications—the DP and the virtual healthcare assistant—therefore both require realistic human representations and behaviour. The next section describes the development of a biologically inspired lifelike virtual human that we can use for these purposes.

5. Creating lifelike virtual humans using biologically based models

Integrated computational models of the body can be used not only to simulate multi-scale processes, but can also be embodied and used as an engaging interaction medium. We discuss our work in creating an interactive simulation framework to explore the creation of lifelike virtual humans incorporating multi-scale models, advanced graphics (anatomy/physiology), behaviours, sensing and a high degree of responsiveness, which aim to appeal to a user on a more humanistic and social level. These models are a possible step in representing a ‘virtual self’ but also can be used to enliven virtual healthcare assistants. We focus on the interactive simulation of the face here as a holistic example of how models can be integrated to engage the user, as a component to enable the future healthcare scenario described in the introduction.

Of all the experiences we have in life, face to face interactions fill many of our most meaningful moments. The complex interplay of facial expressions, eye gaze, head movements and vocalizations, in quickly evolving ‘social interaction loops' has enormous influence on how a situation will unfold. Each partner is affected by how the interaction unfolds which creates the emotive connection and investment—and thus the engagement.

While an impressive body of work has been done in the development of interactive virtual humans (e.g. [35]), there is unfortunately still often quite a robotic ‘feel’ to the results which can lead to disengagement. This is particularly evident in facial interaction. The way in which a digital face moves and appears can cause unwanted effects. Rather than aiding the appearance of life, a partially realistic solution can elicit a negative response (the Uncanny Valley effect). This response has been argued to be triggered by any number of non-expected responses which alarm the viewer's perceptual system [36]. To avoid triggering a negative perception many factors must be taken into account: the physical appearance of the face, the gaze of the eyes, the coherent movement of the skin and many smaller details, any of which can trigger a form of dissonance that interferes with communication and affinity with the perceived face.

5.1. Building a holistic model

The face mirrors both the brain and body, revealing mental state (e.g. mental attention in eye direction and affect or cognition through expressions), and physiological state (e.g. eye motion, position of eyelids and colour of the skin). The dynamic behaviour of the face emerges from many systems interacting on multiple levels, from high level social interaction to low-level biology.

In essence, to drive a biologically based lifelike autonomous person we hypothesize that we need to model several fundamental aspects of a nervous system. Depending on the level of granularity and functionality, a non-exhaustive list of what we need to model might include models of the sensory and motor systems, reflexes, perception, emotion and modulatory systems, attention, learning and memory, rewards, decision and goals. And we need an architecture to interconnect these. The face is so intimately tied to the nervous system that for an interactive virtual human to withstand a close-up view, it likely needs at least a basic associated nervous system model sufficient to drive facial behaviour. We are currently developing such a model.

5.2. Brain language

Our approach to building live interactive virtual agents uses realistic computer graphics to embody biologically based models of behaviour within a real-time graphics and sensing framework, and grounds experience through real world–virtual world interaction. We aim to create a system which can be reducible to more biological detail, as well as expandable to incorporate more complex systems. As there are many current competing theories on the biological basis of behaviour, our choice was to opt for flexibility, and to develop a ‘system to build systems’ in a lego-like manner. The goal is to construct a flexible architecture, which can be modified to include different models and to represent the neural pathways and interconnected neural systems that drive the model.

The BL [37] is a modular simulation framework we have been developing to integrate neural networks and neural system models with real-time computer graphics and sensing. It is designed for maximum flexibility, and can interconnect with other architectures through very simple API layers. BL consists of a library of time-stepping modules and connectors. It is designed to support implementation of computational neuroscience models (e.g. [38]), from manual construction of specific neural circuits to large randomly connected ensembles. Neuron models supported by BL range from simple leaky integrators to spiking neurons to mean field models to self-organizing maps. These can be interconnected to form arbitrary neural networks—from hand-crafted networks representing specific biological circuits, to convolutional networks and recurrent neural networks. The networks are able to represent and learn online from both spatial and temporal data. A key strength of BL is the connection with computer graphics as a visualization tool. Complex dynamic networks can be visually investigated in multiple ways either through three-dimensional computer graphics (figures 1 and 2) or through a two-dimensional schematic interface (figures 3 and 4). The simulation can be directly modified—for example, changing neural model parameters while viewing the downstream effect on eye movement (figure 1).

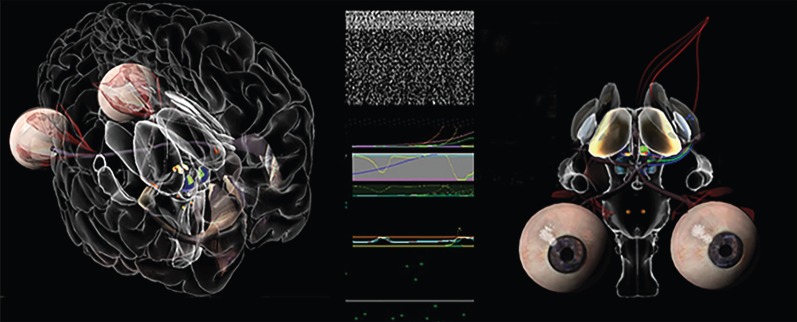

Figure 1.

BabyX's interactive brain. (left) Superior colliculus activity driving visual attention is visible (green) in the brainstem; (middle) BL raster plot of neural activity, and scrolling display of modulatory activity and (right) basal ganglia circuit. Interactive dopamine level modification (green) affecting cortico-thalamic feedback and eye movement.

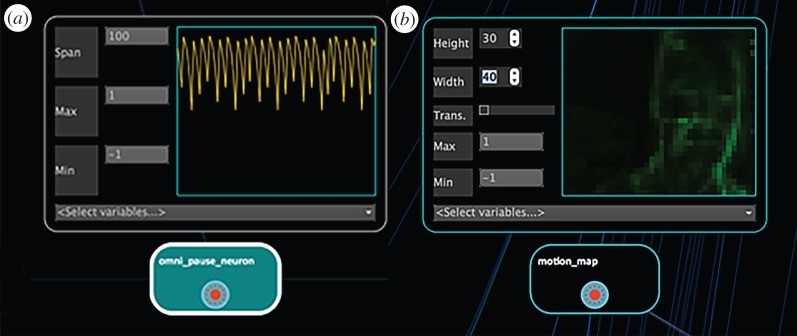

Figure 2.

BabyX (v. 3). Sensorimotor online learning session screenshot in which multiple inputs and outputs of the model can be viewed simultaneously including: scrolling displays, spike rasters, plasticity, activity of specific neurons, camera input, animated output.

Figure 3.

BL scopes viewing activity of single neuron (a) or array of retinotopic neurons (b) during a live interaction.

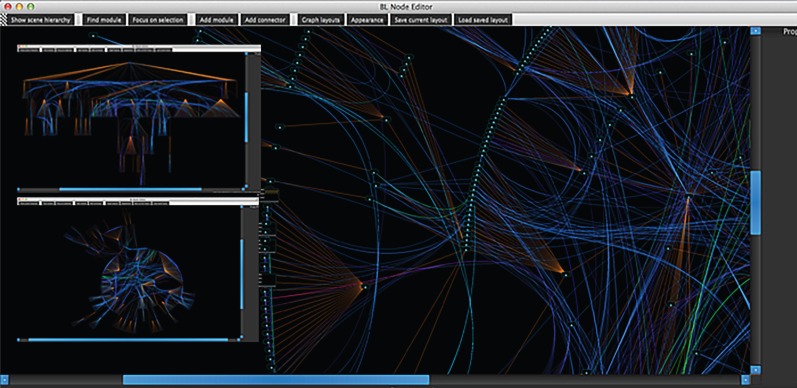

Figure 4.

Different BL windows showing different visual layouts of virtual connectome which can be interactively explored. Connections light up (green or red) when activated.

Supported sensory inputs include camera, microphone and keyboard with support for computer vision and audition, but data can be input from any arbitrary sensor or output to an effector through the API. Computer graphics output is through OpenGL and GLSL. A key feature of BL is that any variable in the neural network system can be shared and can drive any aspect of a sophisticated three-dimensional animation system. For more details on BL see [37].
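To make the idea of a library of time-stepping modules and connectors concrete, a minimal sketch is given here. It is not BL code: the class names `LeakyIntegrator` and `Connector`, the `run` function, the forward Euler update and all parameter values are assumptions chosen for illustration only. The leaky integrator stands in for the simplest neuron model mentioned above.

```python
# Hypothetical sketch of time-stepping modules joined by connectors.
# None of these names or parameters come from BL itself.

class LeakyIntegrator:
    """Single leaky-integrator unit obeying tau * dv/dt = -v + input."""

    def __init__(self, tau):
        self.tau = tau      # time constant in seconds
        self.v = 0.0        # current activation
        self.drive = 0.0    # input accumulated for the next step

    def step(self, dt):
        # Forward Euler update of the leaky-integrator equation.
        self.v += dt * (-self.v + self.drive) / self.tau
        self.drive = 0.0    # inputs must be supplied again every step


class Connector:
    """Adds a weighted copy of the source activation to the target input."""

    def __init__(self, source, target, weight):
        self.source = source
        self.target = target
        self.weight = weight

    def propagate(self):
        self.target.drive += self.weight * self.source.v


def run(modules, connectors, external, steps, dt=0.001):
    """Advance the network; `external` maps a module to a constant input."""
    for _ in range(steps):
        for module, value in external.items():
            module.drive += value
        for connector in connectors:
            connector.propagate()
        for module in modules:
            module.step(dt)


# A toy two-unit chain: a 'sensory' unit driving a 'motor' unit.
sensory = LeakyIntegrator(tau=0.02)
motor = LeakyIntegrator(tau=0.05)
link = Connector(sensory, motor, weight=0.5)
run([sensory, motor], [link], {sensory: 1.0}, steps=2000)
```

With a constant input of 1.0, the sensory unit settles near 1.0 and the motor unit near the connection weight of 0.5. In a framework of this kind, either unit could be swapped for a more detailed model without changing the stepping loop, which is the property the modular design is meant to provide.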

5.3. The BabyX project

To illustrate how these concepts come together, we now describe an experimental psychobiological simulation of an infant, ‘BabyX’, we are currently developing [39–41]. We aim to combine models of the facial and upper body motor systems with models of basic neural systems involved in early interactive behaviour and social learning, to create a virtual infant that can be naturally interacted with. This has a range of potential applications, for example, in paediatric simulation for clinical training, and also serves as a ‘baby step’ in creating biologically motivated interactive virtual human models.

At a conceptual level, BabyX's computer graphic face model is driven by muscle activations generated from motor neuron activity. The facial expressions are created by modelling the effect of individual muscle activations and non-linear combined activations to form an expression space [42]. The modelling procedures involve biomechanical simulation, scanning and geometric modelling. Fine details of visually important elements such as the mouth, eyes, eyelashes and eyelid geometry are painstakingly constructed for lifelikeness.

5.4. Biomechanics and the facial motor system

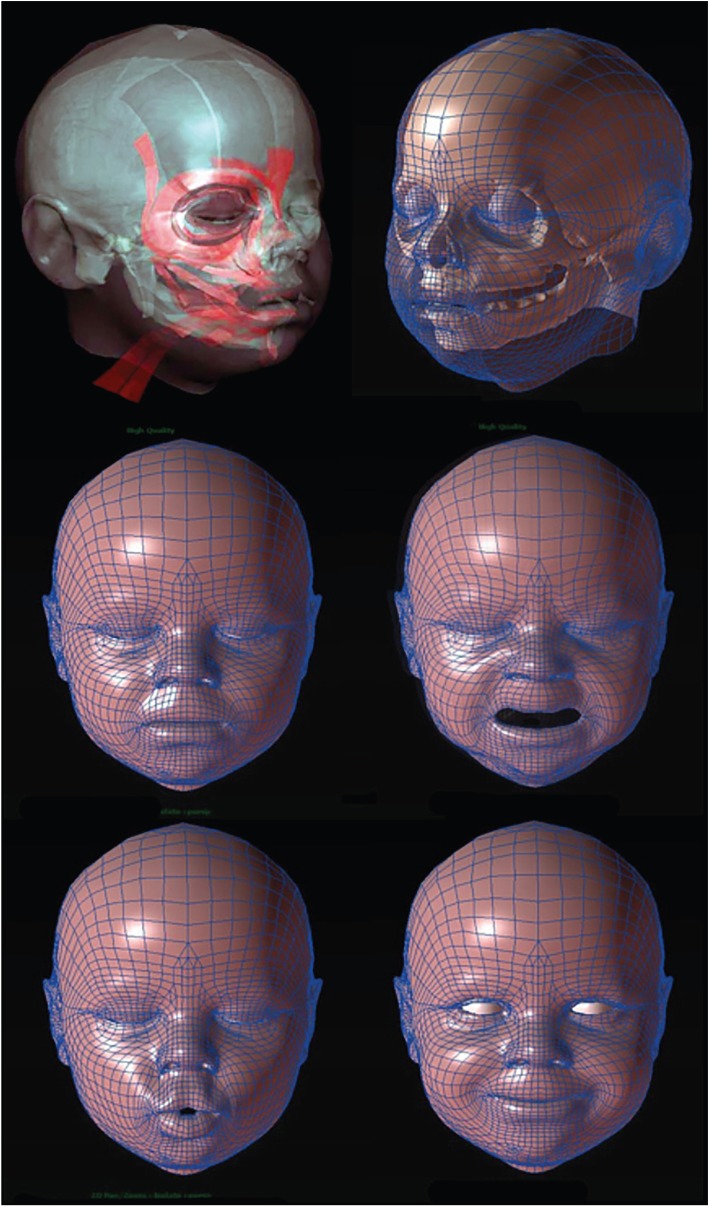

A highly detailed biomechanical face model (figure 5) has been constructed from MRI scans and anatomic reference (akin to Wu [43]). We model deep and superficial fat layers, deep and superficial muscle, fascia, connective tissue with different material properties.

Figure 5.

BabyX (v. 1). Detailed Biomechanical Face Model simulating expressions generated from muscle activations. This shows how a biomechanical model can form the basis for an interactive model.

Large deformation finite-element elasticity [44] was used to deform the face from rest position through simulated muscle activation. The action of each muscle, either in isolation or in combination with commonly co-activated muscles, was simulated to create a piecewise linear expression manifold. This pre-computed facial geometry is combined on the fly in the simulation framework BL to create real-time facial animation, so that in response to muscle activation driven by the neural system models there is realistic skin deformation and motion.
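The on-the-fly combination of pre-computed geometry can be illustrated with a small sketch. The function `blend_face`, the data layout, the muscle names used as keys and the use of `min()` to weight co-activation corrections are all assumptions made for illustration; the text does not specify how the expression manifold is actually evaluated.

```python
# Hypothetical sketch: combine pre-computed per-muscle displacements
# into a deformed face. Not the authors' implementation.

def blend_face(rest, muscle_deltas, pair_deltas, activations):
    """Return deformed vertices for the given muscle activations.

    rest: list of (x, y, z) rest positions.
    muscle_deltas: {muscle: [(dx, dy, dz), ...]} at full activation.
    pair_deltas: {(muscle_a, muscle_b): [(dx, dy, dz), ...]} corrections
        for co-activated muscles at full joint activation.
    activations: {muscle: value in [0, 1]}.
    """
    out = [list(p) for p in rest]

    def add(deltas, weight):
        for vertex, delta in zip(out, deltas):
            for axis in range(3):
                vertex[axis] += weight * delta[axis]

    for muscle, deltas in muscle_deltas.items():
        add(deltas, activations.get(muscle, 0.0))
    for (a, b), deltas in pair_deltas.items():
        # min() keeps the combined term piecewise linear in the activations.
        add(deltas, min(activations.get(a, 0.0), activations.get(b, 0.0)))
    return [tuple(v) for v in out]


# Toy two-vertex 'face' with made-up displacement data.
rest = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
muscle_deltas = {
    "zygomaticus": [(0.0, 0.2, 0.0), (0.0, 0.4, 0.0)],
    "orbicularis": [(0.1, 0.0, 0.0), (0.0, 0.0, 0.0)],
}
pair_deltas = {
    ("zygomaticus", "orbicularis"): [(0.0, 0.0, 0.1), (0.0, 0.0, 0.0)],
}
smile = blend_face(rest, muscle_deltas, pair_deltas,
                   {"zygomaticus": 0.5, "orbicularis": 1.0})
```

Because the expensive finite-element solve happens offline, the per-frame cost in a scheme like this is only a weighted sum over stored displacements.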

The facial muscles of expression are primarily driven by the facial nerve extending from the facial nucleus in the brainstem. The facial nucleus receives its main inputs from both subcortical and cortical areas, through different pathways. Expression dynamics are generated by neural pattern generation circuits, both in subcortical and cortical regions. Subcortical circuits include brainstem circuits for laughing and crying that are integrated with respiration. Evidence suggests certain basic emotional expressions do not have to be learned. Studies show that typical emotional expressions occur in congenitally blind people [45], smiles have been observed in infants born without a cortex [46] and basic expression development has been observed in the womb using ultrasound [47].

However, voluntary facial movements such as those involved in speech and culture-specific expressions are learned through experience and predominantly rely on cortical motor control. At least five areas representing the face have been identified in the cortex. The incoming pathways have different laterality and levels of influence on upper and lower regions of the face. Stroke patients with damage to the upper motor pathway to the primary motor and premotor areas cannot produce a symmetrical, voluntary smile, yet can smile normally in response to jokes [48].

So facial expression can be considered to consist of both innate and learned elements, driven by quite independent brain regions converging upon, and modulated by, the facial nucleus. In a generative model of innate and learned facial behaviour, we need to consider this architecture, and—of course—the upstream behavioural drivers that generate the incoming activity.

5.5. The nervous system

The BabyX project aims to encompass affective and cognitive neural systems to tie together emotion and cognition in an interactive system—so that it induces a dyadic behavioural loop with a user through sensing and synthesis. BabyX's neural system models implemented so far are sparse, yet span the neuroaxis and create muscle activation-based animation as motor output from continuously integrated neural models. Because of the lego-like nature of BL we can have a closed-loop functioning system which allows components to be experimentally modified or interchanged exploring different theoretical models. In total, the models aim to form a ‘large functioning sketch’ of interconnected mechanistic systems theoretically contributing to behaviour.

To date, BabyX's neural networks and circuits cover basic elements of motor control, behaviour selection, reflex actions, visual attention, learning, salience, emotion and motivation using theoretical models. A characteristic of the modelling approach is the representation of subcortical structures such as the basal ganglia, thalamus, hippocampus, hypothalamus, amygdala, superior colliculus, facial nucleus, oculomotor and other brainstem nuclei which form core components of the brain's operating system. Although highly simplified, these models serve as placeholders for future work, in which synthetic lesions can be introduced to simulate pathologies.

The structures are implemented as functional computational elements (e.g. the amygdala as a Hebbian associator, following ‘neurons that fire together wire together’ [49]), and are based on current and practical theoretical models in the cognitive science and computational neuroscience literature. Because of the lego-like nature of BL, simple models can be replaced by more sophisticated models as they become available. One of the goals of BabyX is to visually represent functional neural circuit models in their appropriate anatomical positions. For example, the basal ganglia model (based on [50]), which controls motor actions and is involved in behaviour switching, has an appropriate three-dimensional location and geometry, and the shader is activated by activity of the specific neurons, allowing the user to see the circuit in action.
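The Hebbian principle mentioned above can be written as a weight change proportional to the product of pre- and postsynaptic activity. The sketch below shows that textbook rule only; the function name `hebbian_update`, the learning rate and the optional decay term are illustrative assumptions and are not taken from the BabyX amygdala model.

```python
# Hypothetical sketch of a plain Hebbian update with optional weight decay.

def hebbian_update(weights, pre, post, rate=0.1, decay=0.0):
    """Return new weights after one Hebbian step.

    weights[i][j] connects presynaptic unit j to postsynaptic unit i.
    Each weight changes by rate * post[i] * pre[j] - decay * weight.
    """
    return [
        [w + rate * post_i * pre_j - decay * w for w, pre_j in zip(row, pre)]
        for row, post_i in zip(weights, post)
    ]


# One output unit, two inputs: only the first input is active with the output.
weights = [[0.0, 0.0]]
for _ in range(5):
    weights = hebbian_update(weights, pre=[1.0, 0.0], post=[1.0])
```

After five pairings the weight from the co-active input has grown to about 0.5, while the weight from the silent input is unchanged. A pure Hebbian rule grows without bound, which is why a decay or normalization term is usually added in practice.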

Neurotransmitters and neuromodulators play many key roles in BabyX's learning and affective systems [51]. Dopamine is one physiological variable that affects both the internal and external state of BabyX, and it illustrates how modelling at a low level interlinks various phenomena. In BabyX, dopamine plays a key role in motor activity and reinforcement learning, and its phasic activity provides a prediction error signal. It can modulate plasticity in the neural networks and also has subtle behavioural effects such as pupil dilation and blink rate. The use of such low-level models means that the user can adjust BabyX's behavioural dynamics, sensitivities and even temperament by adjusting neurotransmitter and neuromodulator levels.
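The role of a phasic prediction error signal can be sketched with a simple delta-rule learner. This is a generic textbook-style illustration, not the BabyX model: the `dopamine_gain` parameter, the learning rate and the reward sequence are hypothetical, and stand in for the idea that a neuromodulator level scales how strongly each error changes the learned expectation.

```python
def prediction_error(reward, expected):
    """Difference between the reward received and the reward expected."""
    return reward - expected


def learn_value(rewards, learning_rate=0.2, dopamine_gain=1.0):
    """Update an expected-reward estimate from a sequence of rewards.

    dopamine_gain is a hypothetical neuromodulator level that scales the
    error signal, and therefore the size of each plasticity step.
    Returns the final estimate and the error signal on every trial.
    """
    value = 0.0
    errors = []
    for reward in rewards:
        delta = dopamine_gain * prediction_error(reward, value)
        value += learning_rate * delta
        errors.append(delta)
    return value, errors


# The same stimulus is rewarded on every trial.
value, errors = learn_value([1.0] * 20)
# A higher gain makes the estimate change faster over a few trials.
fast_value, _ = learn_value([1.0] * 5, dopamine_gain=2.0)
slow_value, _ = learn_value([1.0] * 5, dopamine_gain=1.0)
```

In this sketch the error is large when the reward is unexpected and shrinks as the reward becomes predicted, and raising the gain speeds up learning, which loosely mirrors how adjusting a neuromodulator level could change behavioural dynamics.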

Muscle activities associated with specific behaviours are generated by recurrent neural networks which act as pattern generators. These circuits can be activated and modulated by different brain regions. For example, the amygdala responding to a sensory context can trigger activity in facial muscles directly via connections to the facial nucleus and also indirectly via the hypothalamus, by modulating a model of the stress system (hypothalamic–pituitary–adrenal (HPA) axis). The HPA model determines the production of parametric virtual hormones, which form a physiological correlate of stress in the model and modulate behavioural circuits.
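As a rough illustration of a recurrent network acting as a pattern generator, the sketch below uses two linear units whose recurrent weights form a rotation, so that their state cycles with a fixed period. This is an assumption made for clarity, not the network used in BabyX; the `drive` parameter is a hypothetical stand-in for modulation from another brain region, and the output is rectified because a muscle activation cannot be negative.

```python
import math


def make_oscillator_weights(period_steps):
    """Recurrent weights (a 2x2 rotation) that cycle once every period_steps."""
    theta = 2.0 * math.pi / period_steps
    return [
        [math.cos(theta), -math.sin(theta)],
        [math.sin(theta), math.cos(theta)],
    ]


def run_pattern_generator(weights, steps, drive=1.0):
    """Iterate the two-unit recurrent network and return muscle activations.

    drive sets the initial state and therefore the amplitude of the rhythm.
    """
    state = [drive, 0.0]
    activations = []
    for _ in range(steps):
        state = [
            weights[0][0] * state[0] + weights[0][1] * state[1],
            weights[1][0] * state[0] + weights[1][1] * state[1],
        ]
        # Rectify: only the positive half of the cycle activates the muscle.
        activations.append(max(0.0, state[0]))
    return activations


weights = make_oscillator_weights(period_steps=20)
strong = run_pattern_generator(weights, steps=40, drive=1.0)
weak = run_pattern_generator(weights, steps=40, drive=0.5)
```

The same circuit produces the same rhythm at a lower amplitude when the drive is reduced, which is one simple way a modulating region could scale a behaviour without changing its timing.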

Although these computational models are theoretical and represent only a sparse set of components in simplified form, they provide an initial basis for developing more holistic and complex models that interconnect behaviour and physiology, for use with future interactive DP simulations.

5.6. The Auckland Face Simulator

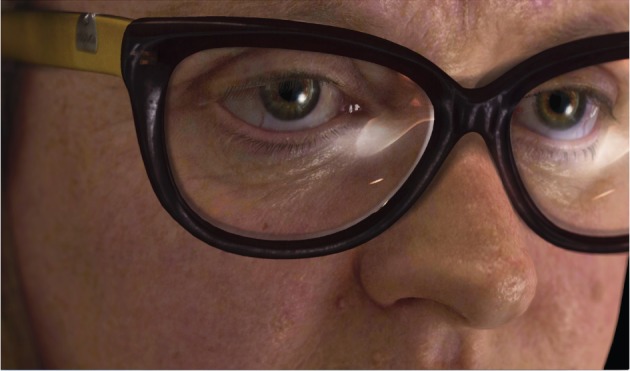

Using the same framework as BabyX, we have also been developing the AFS [40], which aims to cost-effectively create highly realistic and precisely controllable virtual faces (figures 5–7) that can function as virtual healthcare assistants and virtual ‘selves’. The AFS has been used to construct detailed interactive models of specific individuals by fitting generic animatable face simulation models to high-quality photogrammetric scans. The faces may be animated using individual muscle activation parameters to form a full range of lifelike expressions. By tracking facial motion using computer vision techniques, we are able to solve for muscle activation and re-animate the virtual face as a virtual mirror. However, the facial behaviour can also be controlled by computational models, so users can see their virtual selves reflect their motions but in a different mood (figure 7), for motivational effect.
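The step of solving for muscle activation from tracked facial motion can be illustrated with a small fitting problem. The sketch below assumes, purely for illustration, that each muscle displaces the tracked points linearly and that activations lie between 0 and 1; it then recovers the activations by projected gradient descent on the squared tracking error. The basis values, step size and function names are hypothetical and are not taken from the AFS.

```python
def predict(basis, activations):
    """Displacements produced by the given activations under a linear model."""
    n_coords = len(basis[0])
    return [
        sum(a * row[k] for a, row in zip(activations, basis))
        for k in range(n_coords)
    ]


def solve_activations(basis, observed, step=0.5, iterations=200):
    """Fit activations in [0, 1] that best reproduce the observed displacements.

    basis[j] is the displacement pattern of muscle j at full activation.
    The step size must be small enough for the chosen basis to converge.
    """
    activations = [0.0] * len(basis)
    for _ in range(iterations):
        predicted = predict(basis, activations)
        residual = [p - o for p, o in zip(predicted, observed)]
        for j, row in enumerate(basis):
            gradient = sum(r * b for r, b in zip(residual, row))
            # Gradient step, then clamp to the valid activation range.
            activations[j] = min(1.0, max(0.0, activations[j] - step * gradient))
    return activations


# Hypothetical basis: two muscles, four tracked displacement coordinates.
basis = [
    [1.0, 0.0, 0.5, 0.0],
    [0.0, 1.0, 0.0, 0.5],
]
tracked = predict(basis, [0.8, 0.3])  # synthetic 'tracked' motion
recovered = solve_activations(basis, tracked)
```

Once activations have been recovered they can drive the same face model directly (the virtual mirror), or be altered by another model before re-animation, for example to present the user's own movements in a different mood.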

Figure 6.

Image showing the degree of realism which is currently possible in a real-time simulation. The Auckland Face Simulator is being developed to cost-effectively create realistic and precisely controllable real-time models of the human face and its expressive dynamics for psychology research and real-time human–computer interaction applications.

Figure 7.

Computer-generated face created with the Auckland Face Simulator showing different expressions, to help engage the user.

5.7. Link to physiological models

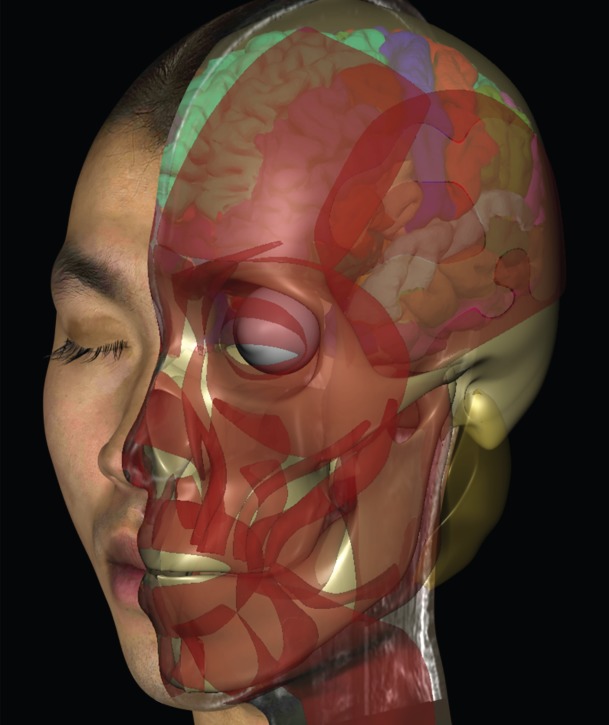

BL was designed primarily as a real-time integrative framework, exploring system level models designed to replicate integrative behaviour. However, as modelling depth and computational power increase, the anatomically defined modules can be further linked to more detailed physiology-based models. To this end, we are starting to explore the interfacing of BL with Physiome-associated mark-up languages such as CellML [52] and NeuroML [53], which will help link interactive computational neuroscience and physiological simulations. Figure 8 shows an example of visualizing internal anatomical features combined with the facial simulation.

Figure 8.

The same face as in figure 7 but visualizing internal anatomical features combined with the facial simulation. This shows how current techniques enable realistic interactive faces to be combined with anatomic visualization, as part of a Digital Patient model.

6. Discussion

Animated models of the body are starting to be used to educate patients about their illness and how treatment works inside the body. These can change people's perceptions of the underlying cause and pathogenesis of the illness. Watching animated models of how medical treatment, exercise and diet can help reduce disease can motivate people to adhere to treatment recommendations and healthy behaviours.

Currently, virtual healthcare assistants are being used in various healthcare applications, including cCBT. These human-like avatars often ask patients questions about their health, to first form diagnoses and then direct them to appropriate therapies or strategies to motivate behaviour change and improve health. A realistic appearance, as well as appropriate verbal and non-verbal behaviours, is important in creating trust and acceptance. Current models have limited realism and naturalistic behaviour.

Pertinent both to building engaging avatars and to visualizing physiology, biologically based models can be used holistically to create not only realistic structures but also realistic movements that express appropriate emotions, including empathy. To achieve this, the nervous system must be modelled to include sensory and motor systems, as well as perception, learning, memory and emotions. We illustrated an implementation of a framework that integrates biomechanics, anatomical modelling, neural system models, computer vision, audio and interactive real-time computer graphics for autonomous facial animation, as one possible example of how a holistic multi-scale modelling approach may be embodied and used for digital interaction.

The vision of the VPH provides the ultimate basis both for creating health assistant avatars that are engaging and motivating and for creating personalized physiological models. These models can be used for multiple applications: teaching medical students, modelling the effects of various treatments for specific patients in the virtual world, and changing patients' perceptions of their illness and its treatment to motivate healthy behaviours.

Acknowledgements

The Laboratory for Animate Technologies team: David Bullivant, Paul Robertson, Khurram Jawed, Ratheesh Kalarot, Tim Wu, Oleg Efimov, Werner Ollewagen and Kieran Brennan developed the BL simulation framework and computer graphics models referenced in this article. Thanks also to Annette Henderson, Paul Corballis, and John Reynolds.

Competing interests

We declare we have no competing interests.

Funding

The technology development described in this article was supported in part by the University of Auckland, VCSDF, CFRIF, SRIF funds and MBIE ‘Smart Ideas’.

References

- 1. Hunter P, et al. 2013. A vision and strategy for the virtual physiological human. 2012 update. Interface Focus 3, 20130004 (10.1098/rsfs.2013.0004)

- 2. Lyons R, Johnson TR, Khalil MK, Cendán JC. 2014. The impact of social context on learning and cognitive demands for interactive virtual human simulations. PeerJ 2, e372 (10.7717/peerj.372)

- 3. Kleinsmith A, Rivera-Gutierrez D, Finney G, Cendan J, Lok B. 2015. Understanding empathy training with virtual patients. Comput. Hum. Behav. 52, 151–158. (10.1016/j.chb.2015.05.033)

- 4. Leventhal H, Meyer D, Nerenz DR. 1980. The common sense representations of illness danger. In Medical psychology, vol. 2 (ed Rachman S.), pp. 7–30. New York, NY: Pergamon.

- 5. Leventhal H, Benyamini Y, Diefenbach M, Patrick-Miller L, Robitaille C. 1997. Illness representations: theoretical foundations. In Perceptions of health and illness: current research and applications (eds Petrie KJ, Weinman JA), pp. 1–19. Amsterdam, The Netherlands: Harwood Academic Publishers.

- 6. Broadbent E, Petrie KJ, Ellis CJ, Ying J, Gamble G. 2004. A picture of health—myocardial infarction patients’ drawings of their hearts and subsequent disability: a longitudinal study. J. Psychosom. Res. 57, 583–587. (10.1016/j.jpsychores.2004.03.014)

- 7. van Leeuwen BM, Herruer JM, Putter H, van der Mey AG, Kaptein AA. 2015. The art of perception: patients drawing their vestibular schwannoma. Laryngoscope 125, 2660–2667. (10.1002/lary.25386)

- 8. Broadbent E, Ellis CJ, Thomas J, Gamble G, Petrie KJ. 2009. Further development of an illness perception intervention for myocardial infarction patients: a randomised controlled trial. J. Psychosom. Res. 67, 17–23. (10.1016/j.jpsychores.2008.12.001)

- 9. Petrie KJ, Cameron LD, Ellis CJ, Buick D, Weinman J. 2002. Changing illness perceptions after myocardial infarction: an early intervention randomized controlled trial. Psychosom. Med. 64, 580–586. (10.1097/00006842-200207000-00007)

- 10. Karamanidou C, Weinman J, Horne R. 2008. Improving haemodialysis patients’ understanding of phosphate-binding medication: a pilot study of a psycho-educational intervention designed to change patients’ perceptions of the problem and treatment. Br. J. Health Psychol. 13, 205–214. (10.1348/135910708X288792)

- 11. Jones ASK, Ellis CJ, Nash M, Stanfield B, Broadbent E. 2015. Using animation to improve recovery from acute coronary syndrome: a randomized trial. Ann. Behav. Med. 1–11. (10.1007/s12160-015-9736-x)

- 12. Perera A, Thomas MG, Moore JO, Faasse K, Petrie KJ. 2014. Effect of a smartphone application incorporating personalized health-related imagery on adherence to antiretroviral therapy: a randomized clinical trial. Aids Patient Care STDs 28, 579–586. (10.1089/apc.2014.0156)

- 13. Weinman J, Yusuf G, Berks R, Rayner S, Petrie KJ. 2009. How accurate is patients’ anatomical knowledge: a cross-sectional, questionnaire study of six patient groups and a general public sample. BMC Fam. Pract. 10, 43 (10.1186/1471-2296-10-43)

- 14. Bandura A. 1969. Principles of behavior modification. New York, NY: Holt, Rinehart & Winston.

- 15. Gibbons FX, Gerrard M. 1995. Predicting young adults' health risk behavior. J. Pers. Soc. Psychol. 69, 505–517. (10.1037/0022-3514.69.3.505)

- 16. Charlop-Christy MH, Le L, Freeman KA. 2000. A comparison of video modeling with in vivo modeling for teaching children with autism. J. Autism Dev. Disord. 30, 537–552. (10.1023/A:1005635326276)

- 17. Andersson G, Bergström J, Holländare F, Carlbring P, Kaldo V, Ekselius L. 2005. Internet-based self-help for depression: randomised controlled trial. Br. J. Psychiat. 187, 456–461. (10.1192/bjp.187.5.456)

- 18. Learmonth D, Trosh J, Rai S, Sewell J, Cavanagh K. 2008. The role of computer-aided psychotherapy within an NHS CBT specialist service. Couns. Psychother. Res. 8, 117–123. (10.1080/14733140801976290)

- 19. Mackinnon A, Griffiths KM, Christensen H. 2008. Comparative randomised trial of online cognitive–behavioural therapy and an information website for depression: 12-month outcomes. Br. J. Psychiat. 192, 130–134. (10.1192/bjp.bp.106.032078)

- 20. Proudfoot J, Ryden C, Everitt B, Shapiro DA, Goldberg D, Mann A, Tylee A, Marks I, Gray JA. 2004. Clinical efficacy of computerised cognitive–behavioural therapy for anxiety and depression in primary care: randomised controlled trial. Br. J. Psychiat. 185, 46–54. (10.1192/bjp.185.1.46)

- 21. Bergström J, Andersson G, Ljótsson B, Rück C, Andréewitch S, Karlsson A, Carlbring P, Andersson E, Lindefors N. 2010. Internet-versus group-administered cognitive behaviour therapy for panic disorder in a psychiatric setting: a randomised trial. BMC Psychiat. 10, 54 (10.1186/1471-244X-10-54)

- 22. Kiropoulos LA, Klein B, Austin DW, Gilson K, Pier C, Mitchell J, Ciechomski L. 2008. Is internet-based CBT for panic disorder and agoraphobia as effective as face-to-face CBT? J. Anxiety. Disord. 22, 1273–1284. (10.1016/j.janxdis.2008.01.008)

- 23. Kenwright M, et al. 2005. Brief scheduled phone support from a clinician to enhance computer-aided self-help for obsessive-compulsive disorder: randomized controlled trial. J. Clin. Psychol. 61, 1499–1508. (10.1002/jclp.20204)

- 24. Marks IM, Mataix-Cols D, Kenwright M, Cameron R, Hirsch S, Gega L. 2003. Pragmatic evaluation of computer-aided self-help for anxiety and depression. Br. J. Psychiat. 183, 57–65. (10.1192/bjp.183.1.57)

- 25. Litz BT, Engel CC, Bryant RA, Papa A. 2007. A randomized, controlled proof-of-concept trial of an Internet-based, therapist-assisted self-management treatment for posttraumatic stress disorder. Am. J. Psychiat. 164, 1676–1684. (10.1176/appi.ajp.2007.06122057)

- 26. NICE. 2006. Computerised cognitive behaviour therapy for depression and anxiety. Review of Technology Appraisal 51. National Institute for Health and Clinical Excellence Technology Appraisal 97. (www.nice.org.uk/nicemedia/pdf/TA097guidance.pdf) (accessed 1 March 2007).

- 27. Schulman D, Bickmore TW, Sidner CL. 2011. An intelligent conversational agent for promoting long-term health behavior change using motivational interviewing. In AAAI Spring Symp.: AI and Health Communication, Stanford, CA, 21–23 March.

- 28. Rizzo A, et al. 2011. SimCoach: an intelligent virtual human system for providing healthcare information and support. Int. J. Disabil. Hum. Dev. 10, 277–281.

- 29. DeVault D, et al. 2014. SimSensei Kiosk: a virtual human interviewer for healthcare decision support. In Proc. of the 2014 Int. Conf. on Autonomous agents and multi-agent systems. International Foundation for Autonomous Agents and Multiagent Systems, Paris, France, pp. 1061–1068. New York, NY: IFAAMAS.

- 30. Mann JA, MacDonald BA, Kuo I, Li X, Broadbent E. 2015. People respond better to robots than computer tablets delivering healthcare instructions. Comput. Hum. Behav. 43, 112–117. (10.1016/j.chb.2014.10.029)

- 31. Bickmore T, Mauer D. 2006. Modalities for building relationships with handheld computer agents. Montreal, Canada: ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

- 32. Bickmore T, Gruber A. 2010. Relational agents in clinical psychiatry. Harv. Rev. Psychiatry 18.2, 119–130. (10.3109/10673221003707538)

- 33. Ring L, Utami D, Bickmore T. 2014. The right agent for the job? In Intelligent virtual agents, pp. 374–384. Cham, Switzerland: Springer International Publishing.

- 34. Broadbent E, Kumar V, Li X, Sollers J, Stafford R, MacDonald BA, Wegner D. 2013. Robots with display screens: a robot with a more humanlike face display is perceived to have more mind and a better personality. PLoS ONE 8, e72589 (10.1371/journal.pone.0072589)

- 35. Brinkman WP, Broekens J, Heylen D. 2015. Proc. intelligent virtual agents: 15th Int. Conf., IVA 2015, vol. 9238. Delft, The Netherlands: Springer.

- 36. MacDorman K, Entezari S. 2015. Individual differences predict sensitivity to the uncanny valley. Interact Stud. 16, 141–172. (10.1075/is.16.2.01mac)

- 37. Sagar M, Robertson P, Bullivant D, Efimov O, Jawed K, Kalarot R, Wu T. 2015. BL: a visual computing framework for interactive neural system models of embodied cognition and face to face social learning. In Unconventional computation and natural computation, pp. 71–88. Berlin, Germany: Springer International Publishing.

- 38. Trappenberg T. 2010. Fundamentals of computational neuroscience. New York, NY: Oxford University Press.

- 39. Sagar M, Bullivant D, Robertson P, Efimov O, Jawed K, Kalarot R, Wu T. 2014. A neurobehavioural framework for autonomous animation of virtual human faces. In SIGGRAPH Asia 2014 autonomous virtual humans and social robots for telepresence. New York, NY: ACM.

- 40. Sagar M. 2015. Auckland Face Simulator. In ACM SIGGRAPH 2015 Computer Animation Festival, p. 183. New York, NY: ACM.

- 41. Sagar M. 2015. BabyX. In ACM SIGGRAPH 2015 Computer Animation Festival, p. 184. New York, NY: ACM.

- 42. Sagar M. 2006. Facial performance capture and expressive translation for King Kong. In ACM SIGGRAPH 2006 Sketches, p. 26. New York, NY: ACM.

- 43. Wu T. 2014. A computational framework for modeling the biomechanics of human facial expressions, PhD Thesis, The University of Auckland, New Zealand.

- 44. Bathe K, Ramm E, Wilson EL. 1975. Finite element formulations for large deformation dynamic analysis. Int. J. Numer. Methods Eng. 9.2, 353–386. (10.1002/nme.1620090207)

- 45. Matsumoto D, Willingham B. 2009. Spontaneous facial expressions of emotion of congenitally and noncongenitally blind individuals. J. Pers. Social Psychol. 96, 1 (10.1037/a0014037)

- 46. Luyendijk W, Treffers PDA. 1992. The smile in anencephalic infants. Clin. Neurol. Neurosurg. 94, 113–117. (10.1016/0303-8467(92)90042-2)

- 47. Reissland N, Francis B, Mason J, Lincoln K. 2011. Do facial expressions develop before birth? PLoS ONE 6, e24081 (10.1371/journal.pone.0024081)

- 48. Gothard K. 2014. The amygdalo-motor pathways and the control of facial expressions. Front. Neurosci. 8 (10.3389/fnins.2014.00043)

- 49. Hebb DO. 1949. The organization of behavior. New York, NY: Wiley & Sons.

- 50. Redgrave P, Gurney K, Reynolds J. 2008. What is reinforced by phasic dopamine signals? Brain Res. Rev. 58, 322–339. (10.1016/j.brainresrev.2007.10.007)

- 51. Panksepp J. 1998. Affective neuroscience: the foundations of human and animal emotions. Oxford, UK: Oxford University Press.

- 52. Lloyd CM, Halstead MD, Nielsen PF. 2004. CellML: its future, present and past. Prog. Biophys. Mol. Biol. 85, 433–450. (10.1016/j.pbiomolbio.2004.01.004)

- 53. Gleeson P, Steuber V, Silver RA, Crook S. 2012. NeuroML. In Computational systems neurobiology, pp. 489–517. Delft, The Netherlands: Springer.