Abstract

Clinical competency and the assessment of core skills are crucial elements of any programme leading to an award with a clinical skills component. This has become a more prominent feature of current reports on quality health care provision. This project aimed to determine ultrasound practitioners’ opinions about how best to assess clinical competency. An on-line questionnaire was sent to contacts from the Consortium for the Accreditation of Sonographic Education and details distributed at the British Medical Ultrasound Society conference in 2011. One hundred and sixteen responses were received from a range of clinical staff with an interest in ultrasound assessment. The majority of respondents suggested that competency assessments should take place in the clinical departments with or without an element of assessment at the education centre. Moderation was an important area highlighted by respondents, with 84% of respondents suggesting that two assessors were required and 66% of those stating some element of external moderation should be included. The findings suggest that respondents’ preference is for some clinical competency assessments to take place on routine lists within the clinical department, assessed by two people, one of whom would be an external assessor. In view of recent reports relating to training and assessment of health care professionals, the ultrasound profession needs to begin the debate about how best to assess clinical competence and ensure appropriate first post-competency of anyone undertaking ultrasound examinations.

Keywords: Clinical competency, ultrasound, practice, assessment

Introduction

Clinical competency assessment is an essential part of any ultrasound programme, to ensure ultrasound practitioners are safe, competent and aware of their limitations. The Consortium for the Accreditation of Sonographic Education (CASE) accredit ultrasound programmes and short courses within the United Kingdom (UK). CASE stipulate that ultrasound practitioners completing a CASE accredited course are ‘clinically competent to undertake ultrasound examinations and are professionally responsible for their own case load’ and as such, all programmes must have clinical competency assessment within them.1 The providers of ultrasound education are able to interpret the guidelines to meet the needs of their programme and local clinical training sites, as long as these can be justified to the CASE accreditors and CASE council. This has ultimately led to a variety of assessment methods being used across the UK. In 2010, CASE commissioned a lead to put together a team to develop clinical competency guidelines, to ensure a minimum standard in relation to competency assessments, for all CASE accredited courses and programmes. The draft guidelines were informed by an on-line questionnaire sent to ultrasound professionals, which covered a range of topics relating to progress monitoring, assessment and preceptorship. This first article will discuss the results relating to the final clinical competency assessment.

Background

At the time of CASE commissioning the competency guidelines project, the Department of Health had concerns about standards of ultrasound practice in the UK, and work was in progress to produce a competency framework for non-obstetric ultrasound examinations to ensure fitness to practise both prior to qualification and throughout the ultrasound practitioner’s career.2

Ultrasound is a highly operator-dependent modality3,4 with practitioners taking responsibility for the examination, communication, interpretation, diagnosis, report writing and in many cases providing advice on further imaging and/or management. The need for formal assessment of competency is accepted by those within the profession,2,3,5 although the optimal method of assessing ultrasound clinical competence is an on-going topic for debate.

Assessment of competence

The British Medical Ultrasound Society (BMUS), in their guidelines for developing a business case for non-radiologists to undertake ultrasound examinations, suggest ‘formal independent assessment’ as one of the training requirements.5 CASE recommend that ‘rigorous’ assessment methods are used to ensure first post-competent practitioners, but do not specify what constitutes ‘rigorous’.1 A CASE newsletter in 20126 suggested that courses were developing novel methods of assessing ultrasound competency, although no further explanation of these ‘novel approaches’ was provided. Haptic simulation is increasingly being used in both the teaching and assessment of ultrasound skills,7,8 although there are very few published studies relating to the validity of these new simulators. Norcini and McKinley9 review a range of assessment methods for medical education, including the use of observation, simulation using devices and simulation using standardised patients, although it has to be questioned whether using a range of methods, without direct observation of practice, could lead to task-based learning.

Watson et al.10 carried out a systematic review of the literature to determine how clinical assessments were used within nursing. Their study found that the term ‘competence’ had different meanings to different practitioners and much of the evidence within the nursing literature was of poor quality in relation to measuring clinical competence.10

Standardisation of assessment

There is no standardised clinical assessment for ultrasound practitioners undertaking CASE accredited programmes or short courses. In practice, ultrasound programmes that provide clinical competency assessment use a variety of methods. Some programmes have an element of external assessment, to ensure consistency across the cohort, whilst others enable internal assessors to undertake the final competency assessments. The question of whether there should be standardisation is one for on-going debate and discussion. Current literature and reports highlight potential concerns when there is a lack of standardisation; for example, the Francis11 report suggests that there should be ‘sufficient practical elements’ within nurse training to provide reassurance that a ‘consistent standard is achieved’. He went further to suggest that national standards are developed to assess that nurses are competent in their role. It is important to recognise that the previous national standard for ultrasound practice was the Diploma in Medical Ultrasound, which did not have any clinical competency assessment attached to it.

In the 2013 budget report, it was suggested that university funding would be cut.12 This, in addition to changes within National Health Service education funding,13 could impact on the funding available for post-graduate ultrasound programmes.7 Higher education institutions are reviewing the way programmes are delivered to ensure they remain cost effective.14 Fairhead also commented in the review from the CASE annual programme monitoring report: ‘A general point to emerge from this year’s monitoring was the constant pressure for the programmes to become more efficient’.15

Method

A voluntary, anonymous on-line survey was carried out using SurveyMonkey™, with convenience sampling, via CASE and programme director contacts in the UK and a flyer at the BMUS conference in September 2011. A small pilot study was initially carried out amongst clinical sonographers, to ensure the questions were appropriate. Minor amendments were made to the wording of questions for clarity, and a glossary of terms was provided to ensure understanding of the terminology used within the questionnaire. The questionnaire included closed questions, Likert scale answers and free text sections, to allow respondents to provide additional information and opinion. Questions covered a wide range of issues relating to summative competency assessment, in addition to formative monitoring, who should mentor and assess trainees and the location of assessments.

The chair of the School of Health Sciences Ethics Committee at City University did not feel that the dissemination of the findings of this project required full ethics approval, due to the professional nature of the work and self-selecting sample.

The project lead also held verbal or e-mail discussion with a range of clinical colleagues, including representatives from the Royal College of Radiologists, the Royal College of Obstetricians and Gynaecologists, the British Society for Gynaecology Imaging, the Fetal Anomaly Screening Programme (FASP) and National Screening Committee, including the Abdominal Aortic Aneurysm programme. These discussions were to determine current practice in the UK for a range of practitioners, rather than formal evaluation.

Results

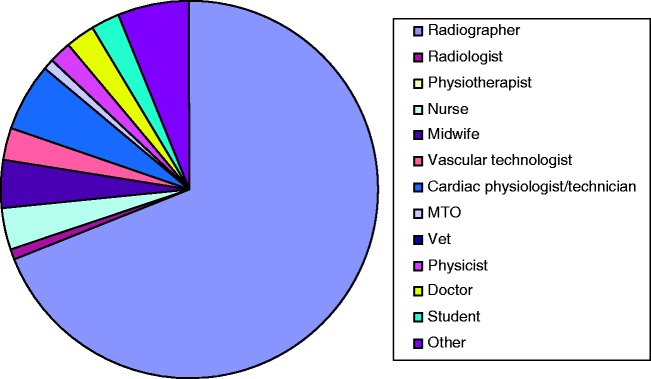

There were 116 responses to the on-line questionnaire; however, a response rate cannot be calculated because of the convenience sampling method used. Some questions generated multiple responses; in these cases the results are displayed as percentages. Of the 116 respondents, the majority were radiographers (64%) by original profession, followed by cardiac physiologist/technician and ‘other’ (both 5.6%) (Figure 1). In relation to clinical education, some respondents undertake multiple roles; the majority were mentors and/or assessors and 29 (25%) were educationalists.

Figure 1. Original professional background

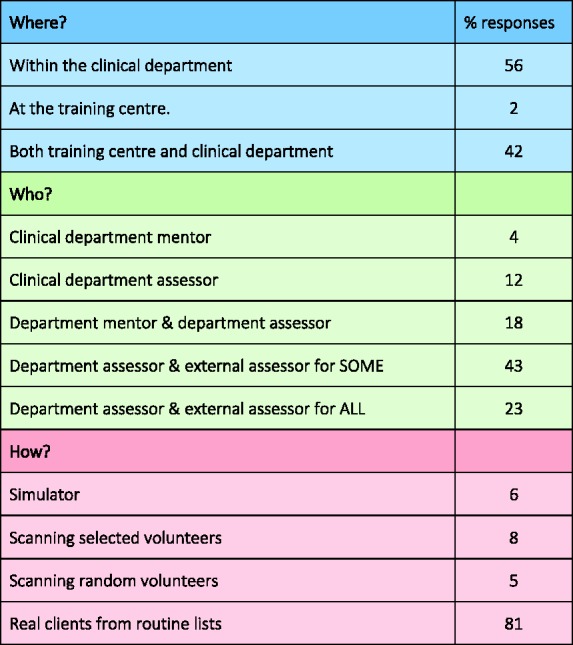

The majority of respondents said that summative, final competency assessments should take place within the clinical department or within the clinical department and training centre and 81% wanted the assessments to be on real patients, rather than on simulated or standardised patients (Figure 2). Only 2% of respondents suggested the assessment should be in the training centre only (Figure 2). Of respondents, 84% wanted two people to undertake the assessment; 66% of those suggested an element of external moderation should be included (Figures 2 and 3).
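The 66% figure above is a percentage of the 84% who wanted two assessors, not of all respondents. As a rough illustration only, the short sketch below converts that nested percentage into a share of the whole sample. It assumes that all 116 respondents answered this item, which the study does not confirm, so the count is approximate; the function and variable names are hypothetical.

```python
def nested_share(outer_pct: float, inner_pct: float) -> float:
    """Return the share of all respondents implied by a percentage of a percentage."""
    return outer_pct * inner_pct / 100.0


# Reported total; ASSUMPTION: every respondent answered this question.
TOTAL_RESPONDENTS = 116

two_assessors_pct = 84.0       # wanted two people to undertake the assessment
external_of_those_pct = 66.0   # of those, wanted some external moderation

overall_pct = nested_share(two_assessors_pct, external_of_those_pct)
approx_count = round(TOTAL_RESPONDENTS * overall_pct / 100.0)

print(f"{overall_pct:.1f}% of all respondents "
      f"(about {approx_count} of {TOTAL_RESPONDENTS})")
```

Under that assumption, a little over half of the whole sample favoured two assessors with an element of external moderation.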

Figure 2. Where and how should summative assessments be undertaken? (percentage)

Figure 3. Who should undertake the summative clinical assessments? (percentage)

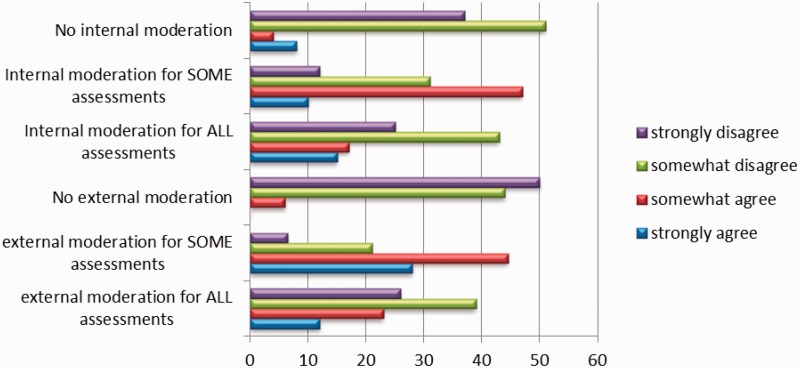

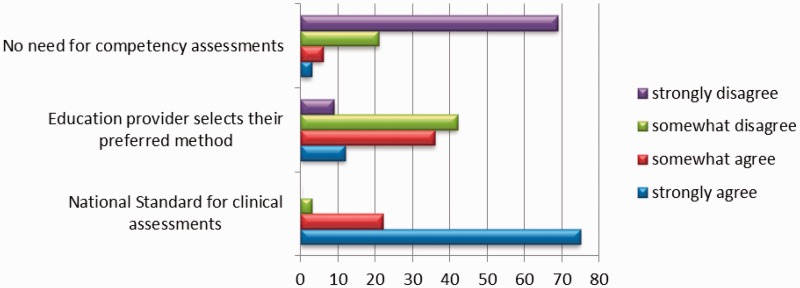

Respondents were asked for their opinions on whether there should be a national standard for clinical competency assessment or whether it should be left to individual providers to determine the most appropriate method of assessment. Ninety-seven percent strongly agreed or agreed that there should be a national standard for competency assessment, whereas there was a more mixed response to the question asking if the education provider should select their preferred method of summative assessment, with 51.5% disagreeing or strongly disagreeing and 48.5% agreeing to some extent (Figure 4). The question was asked in two ways to determine whether there was consistency in responses. The mixed responses might suggest either that the question was unclearly worded or that people felt strongly that there should be a national minimum standard, but with some flexibility for local providers to adapt the assessments within the national standard.

Figure 4. Standardisation of clinical competency assessments

A range of questions was asked to elicit further information about opinions relating to competency assessments. The majority of respondents (99%) felt there should be specified assessments for each area of practice, with 85% suggesting that a pass/fail assessment would be appropriate. Contradicting this, 60% suggested that a mark should be awarded.

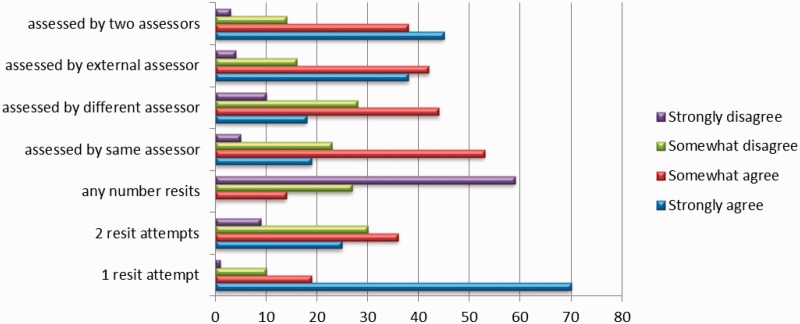

In cases where the trainee has failed a clinical competency assessment, 89% of respondents agreed or somewhat agreed that just one resit attempt should be allowed, 61% agreed with two resit attempts and only 14% somewhat agreed that any number of resit attempts should be available to the trainee (Figure 5). There was limited agreement on whether it should be the same or a different assessor who undertakes resit assessments, although there was a high level of agreement when respondents were asked if there should be an external assessor or two assessors present for resits.

Figure 5. Resit assessment arrangements (percentage)

Discussion

The demographics in this study were unsurprising, as 49% of BMUS members are radiographers.16 As respondents to the questionnaire were self-selecting, it is likely that they had an interest in competency assessment and quality, so it was hypothesised that many would be mentors and/or assessors. Generally, in practice, most ultrasound practitioners undertake teaching to some extent.

The 98% response in favour of clinical assessments being undertaken in the clinical department, or in both the training centre and the clinical department, presumes that the training centre is separate from the clinical department. However, some training centres are integrated within hospital sites and provide hands-on clinical experience with a range of patients as part of the course. Trainees would learn the clinical skills and the theory at the training centre, so in these situations it may be more appropriate that the trainee be assessed in a familiar environment. Of respondents asked about how clinical competency should be assessed, 81% wanted the assessment to be on real patients. McKinley et al.17 recommend that direct observation of practice is an appropriate form of clinical competency assessment, whilst Watson et al.10 comment on the potential use of a range of methods for assessment, including observation, simulation and academic assessments such as objective structured exams. Simulation could include simulated patients, where volunteers are selected with known pathology, or the use of a simulator for assessment. Six percent of respondents to the questionnaire suggested that a simulator would be appropriate for assessment and 8% thought that selected volunteers would be suitable (Figure 2).

The results for questions about how clinical competence should be marked were contradictory, with 85% of respondents suggesting a pass/fail assessment, but 60% recommended that a mark should be given. CASE recommends that assessments are pass/fail, rather than awarded a mark.1 A pass/fail assessment suggests that the trainee is either competent or not competent at the time of the assessment. Awarding a mark for clinical competency assessment could lead to inconsistencies, dependent on who is undertaking the assessment. To ensure consistency for trainees, if marks were awarded, very strict marking criteria would be needed and in line with the Quality Assurance Agency recommendations,18 moderation would be essential. Stuart19 suggests that a trainee is either able to perform to the required standard or they are not and it is essential to ensure that a fair assessment has taken place and in cases where a trainee is not competent, ‘failure to fail’ should be avoided. A failure at the resit clinical assessment can lead to a fail for the whole programme, which could impact significantly on the trainee’s career prospects. This knowledge and the relationship built up with the trainee can impact on the assessors’ decision making during a clinical assessment, in addition to concerns about external scrutiny or complaints from the trainee.20 It is recognised that failing a trainee can be stressful for all parties, so Duffy20 recommends, in her study looking at nursing competency, that ‘lecturers should have a role in clinical assessment’ (p. 82).

Responses in the free text boxes related to clinical issues and competency assessment, suggesting the need for departmental decisions to be made prior to final assessment of competency being arranged. The key theme related to the need to reduce bias and/or to ensure consistency by having some form of moderation and, more importantly, the need for an external assessor to be present during resit assessments for students failing their first attempt at competency assessment. If an internal assessor, who is familiar with the trainee’s work, were to assess with an external assessor, some of the factors highlighted in the work by Watson et al.10 could be overcome to some extent, to provide a less biased final competency assessment.

Respondents included comments relating to varying standards across hospitals and the need for standardisation and moderation, for example:

There should be a national standard with the same minimum number and range for every ultrasound student regardless of university / institution offering the award. Leaving it to staff within the department to “sign off” the learner as competent will result in a lack of standardisation of the outcome competencies.

One of the challenges of clinical competency assessment is judging what is acceptable ‘competence’. Whilst there are national guidelines for minimum standards of practice e.g. Royal Colleges, FASP, Occupational Standards, professional body guidelines and standards for ultrasound practice, interpretation of these can vary between practitioners and departments. An element of either external assessment/moderation or standardised patients/simulation could help to overcome this. One respondent highlighted this by suggesting that in addition to external assessment of the student ‘departmental assessors should be assessed in their assessor role to ensure consistency’ and this is supported by Norcini21 who recommends a number of different people assess doctors to provide a more valid and reliable assessment.

Another key factor to be considered, when determining the optimal method of assessment and how best to ensure competency, is that of cost. Staff time and travel are costly if representatives from training centres are to attend every assessment for each trainee, which is an important factor to consider in times of austerity. Anecdotal evidence would suggest that programme teams for ultrasound consist of one or two members of staff, who often have additional responsibilities within wider programme or faculty teams. Shumway and Harden22 suggest that costs need to be factored into any decisions made relating to assessments.

Limitations of the study

There are a number of limitations with this study, the first of which is related to the methods used for recruiting respondents. The respondents were self-selecting, suggesting that they had some interest in clinical education, ultrasound training and assessment. As the questionnaire was designed to inform the development of guidelines, it seems appropriate that people with an interest in training and assessment respond; however, this could introduce bias into the results. The number of responses (116) is quite low, as all ultrasound practitioners were eligible to participate. The results of this study should be viewed with caution.

In some instances, respondents were asked to give their opinion on specific questions, using a Likert scale format. It would be expected that the response rate for each statement would be 100%; however, this was not always the case: some questions were left unanswered and others had multiple responses.

As the questionnaire was distributed in 2011 and contained a number of questions relating to simulation, there may be issues with currency of responses. Since the questionnaire was designed and completed, there have been rapid technological advances in ultrasound simulation. Respondents may have a different opinion if the questionnaire was repeated in view of these developments and the more widespread use of simulation in ultrasound education and research.7,8

Conclusion

Clinical competency is essential to ensuring high-quality service provision for patients and maintaining professional standards. Regulatory bodies, such as CASE, are the ‘gatekeepers for patients’ and have a duty of care to service users to ensure that assessments are rigorous and fit for purpose (p. 208).23 To ensure on-going quality standards, clinical competency assessments need to be valid, reliable and consistent. Recommendations from the Francis11 report suggest that consistency is required in competency assessment of nurses, and that national standards are required. Should there also be consistency in standards of assessment for other areas of health care practice, including ultrasound? Generally, the ultrasound community responding to this survey was in favour of standardised clinical competency assessments, with internal progress monitoring and some element of external moderation of final assessments to ensure independence and consistency. The findings suggest that respondents’ preference is for trainees to have some element of assessment during routine lists within the clinical department, using unplanned cases, to simulate the environment in which they will be working after they are deemed competent.

Considerations for future practice

The draft guidelines were completed and returned to CASE council for consideration, after the final consultation period. Since the work on this project was completed, the health service has been reorganised and funding for training has been reduced.24 With the introduction of new simulators and training budget cuts, there are questions raised as to whether external moderation of each trainee in their clinical placement department is a sustainable, cost-effective method of assessment.

The issue of simulator use as part of the assessment process has already been introduced,7 although there are potential issues relating to this as a method of assessment. There will need to be a substantial number of different cases available, to prevent the sharing of information between trainees, but also of a similar standard of difficulty to ensure consistency in experience. More generic skills such as communication skills, report writing, ergonomics and managing situations as they arise still need to be assessed, particularly as ultrasound is highly operator dependent and involves complex communication and decision-making skills.

There is currently debate amongst many health care professionals about the optimal method of assessment, to ensure validity, reliability and consistency and it seems that multiple methods of assessment may be required. One important area to begin the debate is related to simulation as a way of assessing clinical competence in ultrasound. Would the use of simulator assessment negate the issues relating to independence of assessors, for example assessors that are too strict, have different opinions about the level of skills required for first post competency, or assessors that ‘fail to fail’? If simulator assessment was used for competency assessment to ensure some form of consistency, how many cases should be undertaken to ensure validity of assessment and is there a reliable way of assessing the other core skills required of a competent sonographer?

Acknowledgements

The author would like to thank Peter Cantin, Fiona Maddox, Sophie Bale, Sue Halson-Brown, Julie Walton and Shaun Ricks for their work on the project team; Allison Harris, Jennifer Edie, Rosemary Lee and Vivien Gibbs for their support and advice during the project; Christoph Lees, Ian Francis, and Bob Jarman for sharing their professions’ practices; CASE Council and all respondents to the original questionnaire and those who provided further feedback from the consultation on the draft document.

Declarations

Competing interests: The author has no conflicts of interest to declare.

Funding: This work received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Ethical approval: Discussed with the chair of the School of Health Sciences ethics committee at City University London. No ethics approval required.

Guarantor: GH.

Contributorship: GH researched literature, was involved in protocol development, recruitment, data analysis and writing the manuscript.

References

- 1. The Consortium for the Accreditation of Sonographic Education. Validation and Accreditation Handbook, York: CASE, 2009. See http://www.case-uk.org/resources/CASE+handbook+060614.pdf (last checked 13 August 2014).

- 2. Walton J. National Clinical Standards to inform NHS and non NHS service delivery. Proceedings of 21st Euroson Conference integrated with 41st Annual Scientific Meeting of British Medical Ultrasound Society, Edinburgh. 2009.

- 3. Bates J, Deane C, Lindsell D. Extending the Provision of Ultrasound Services in the UK, London: British Medical Ultrasound Society, 2003. See http://www.bmus.org/policies-guides/pg-protocol01.asp (last checked 17 August 2013).

- 4. Ross M, Brown M, McLaughlin K, et al. Emergency physician-performed ultrasound to diagnose cholelithiasis: A systematic review. Acad Emerg Med 2012; 18: 227–35.

- 5. British Medical Ultrasound Society. Business Case for Practical Training in Medical Ultrasound for Non-radiologist, London: BMUS; See http://www.bmus.org/policies-guides/businesscase1.pdf (last checked 23 April 2014).

- 6. Oxborough D. Report from the Chair. The Consortium for the Accreditation of Sonographic Education Newsletter, York: CASE, 2012. See http://www.case-uk.org/resources/CASE+Newsletter+December+2012.pdf (last checked 17 August 2013).

- 7. Gibbs V. A proposed new clinical assessment framework for diagnostic medical ultrasound students. Ultrasound 2014; 22: 113–7.

- 8. Williams C, Edie J, Mulloy B, et al. Transvaginal ultrasound simulation and its effect on trainee confidence levels. Ultrasound 2013; 21: 50–6.

- 9. Norcini J, McKinley D. Assessment methods in medical education. Teach Teach Educ 2007; 23: 239–50.

- 10. Watson R, Stimpson A, Topping A, et al. Clinical competence assessment in nursing: A systematic review of the literature. J Adv Nurs 2002; 39: 421–31.

- 11. Francis R. The Mid Staffordshire NHS Foundation Trust Public Inquiry, London: The Stationery Office, 2013. See www.midstaffspublicinquiry.com/report (last checked 25 April 2014).

- 12. Universities UK. Parliamentary Briefing: Budget 2013: What Does it Mean for Universities? London: Universities UK, 2013. See http://www.universitiesuk.ac.uk/highereducation/Documents/2013/Budget2013.pdf (last checked 25 April 2014).

- 13. Department of Health. Liberating the NHS: Developing the Healthcare Workforce from Design to Delivery, London: DoH, 2012.

- 14. Gibbs V, Griffiths S. Funding and commissioning issues for undergraduate and postgraduate healthcare education from 2013. Imaging Oncol 2013, pp. 56–61.

- 15. Fairhead A. Report of APMR for Academic Year 2011–12, CASE Newsletter, York: CASE, 2013. See http://www.case-uk.org/resources/CASE+Newsletter+May+2013.pdf (last checked 23 April 2014).

- 16. BMUS. Membership of BMUS. See http://www.bmus.org/about-bmus/ab-home.asp (last checked 23 April 2014).

- 17. McKinley R, Fraser R, Baker R. Primary care model for directly assessing and improving clinical competence and performance in revalidation of clinicians. BMJ 2001; 322: 712–5.

- 18. Quality Assurance Agency for Higher Education. Assuring and Enhancing Academic Quality, Gloucester: QAA, 2013. See http://www.qaa.ac.uk/en/Publications/Documents/quality-code-B6.pdf (last checked 14 August 2014).

- 19. Stuart C. Mentoring, Learning and Assessment in Clinical Practice: A Guide for Nurses, 3rd edn. London: Churchill Livingstone, 2013.

- 20. Duffy K. Failing Students: A Qualitative Study of Factors that Influence the Decisions Regarding Assessment of Students’ Competence in Practice, Glasgow: Glasgow Caledonian University, 2003. See http://www.nmc-uk.org/documents/Archived%20Publications/1Research%20papers/Kathleen_Duffy_Failing_Students2003.pdf (last checked 29 April 2014).

- 21. Norcini J. Current perspectives in assessment: The assessment of performance at work. Med Educ 2005; 39: 880–9.

- 22. Shumway J, Harden R. AMEE Guide No. 25: The assessment of learning outcomes for the competent and reflective physician. Med Teach 2003; 25: 569–84.

- 23. Norcini J, Anderson B, Bollela V, et al. Criteria for good assessment: Consensus statement and recommendations from the Ottawa 2010 Conference. Med Teach 2011; 33: 206–14.

- 24. Lintern S. Exclusive: Last minute reduction to expected education budgets. HSJ, 2013. See http://www.hsj.co.uk/news/exclusive-last-minute-reduction-to-expected-education-budgets/5059280.article (last checked 13 August 2014).