Abstract

Background

There is an urgent need for efficient, equitable interventions across the disease spectrum, from prevention to palliative care. To identify and prioritize such interventions, evidence of effectiveness is needed on outcomes that are relevant to potential constituents.

Methods

To inform practice and policy, evidence is needed on actionable, harmonized outcomes that are feasible to collect in most settings and relevant to citizens, practitioners, and decision makers. We propose that increased priority be given to certain outcomes, across multiple domains, that are infrequently collected.

Results

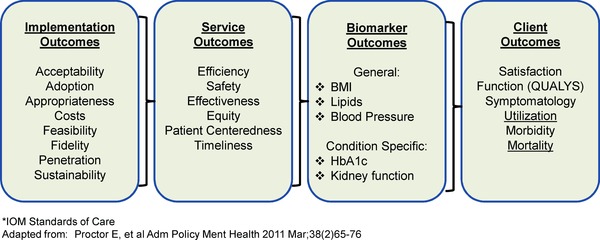

A modification of the logic model of health outcomes by Proctor et al. is used to propose key domains and measures of implementation, service delivery, biomarkers, and health and functioning outcomes. We recommend giving increased priority to implementation outcomes (especially reach, resource requirements/costs, and fidelity/adaptation); to the Institute of Medicine service delivery categories of equity and safety; and to patient‐reported health and functioning outcomes.

Conclusions

Implications of this outcomes framework include the following: biomarkers are not always the most important or relevant outcomes; harmonized, pragmatic, and actionable measures are needed for each of these types of outcomes; and significant changes are needed in training and in the review of grants and publications.

Keywords: evaluation, outcomes, measures, implementation, biomarkers

Introduction

This is an exciting time in healthcare and public health. The evolution of the Affordable Care Act (ACA), the Patient Centered Outcomes Research Institute (PCORI), the Center for Medicare and Medicaid Innovation within the Centers for Medicare and Medicaid Services (CMS), the Community Transformation Grants (CTGs) of the Centers for Disease Control and Prevention (CDC), and the pressing need to achieve the CMS triple aim of improving quality, reducing costs, and improving health together provide unprecedented opportunities. These developments, along with the need to reduce health inequities, shift our thinking, research, and measurement priorities. There have been great advances in the development of evidence‐based interventions and guidelines.1 However, such advances have generally not been translated into practice, and those that are translated often take a very long time to do so.2, 3 Kessler has recently observed that our efficacy evidence base is both brilliant and largely irrelevant to many real‐world settings.4 Part of the reason for this complex problem may be the huge divide between the “gold standard” evidence demanded by funding agencies, study sections, and journal reviewers and the types of information most needed by policymakers, practitioners, patients, and families. Although there is an increasing focus on the science of dissemination and implementation research, which in part seeks to repair the leaking translation pipeline,5, 6 this process will remain incomplete without better alignment on the key outcomes on which evidence is needed. This paper addresses the types of outcomes and measures that we think should receive priority, discusses the implications of these recommendations, and proposes initial steps toward a substantially altered paradigm.

The specific purposes of this paper are to (1) describe a framework and propose a modification of it to classify types of health‐related outcomes; (2) apply this framework to the outcome criteria most often reported in health research and rewarded by the biomedical review and publication systems; (3) recommend several domains from the model that deserve greater attention in order to translate research into practice; and (4) discuss the implications of these recommendations for changes to health research, review, and publication.

Background and Model

Figure 1, adapted from Proctor et al.1 and the IOM criteria for characteristics of effective healthcare systems,7 provides a useful framework for conceptualizing four domains of outcomes: implementation, service/delivery quality, biomarkers, and finally the health and functioning of the individuals or citizens who are the ultimate targets of the intervention, policy, or program. This framework has several important advantages over alternative frameworks in outcomes or etiologic research. First, its domain of implementation outcomes (described below) recognizes the growing field of implementation science (e.g., the journal Implementation Science).5 In particular, the elements related to implementation include numerous contextual factors that are highly relevant to “real‐world” application of interventions: How much will it cost? Will this work in settings like ours? How do I sustain the intervention after initial funding ends? Second, we propose a modification of the Proctor et al. model1 that treats biomarkers as an intermediate step between service or healthcare delivery and ultimate health and functioning outcomes. We suggest that implementation and functional outcomes have both been neglected and are the outcomes most wanted by stakeholders.8

Figure 1.

Working model of measurement categories in health research.

Priorities resulting from application of the shift in framework

Among the many possible types of outcomes, we agree with Proctor et al.1 that implementation needs increased attention. Without an implementation focus, time and resources are wasted on programs that will probably never be implemented. Failure to implement with quality is likely the most common reason that research‐based interventions are unsuccessful when attempted in real‐world settings.9, 10, 11

Implementation outcomes

Implementation is the process of putting to use or integrating evidence‐based interventions within a setting [National Institutes of Health. PA‐13–055: Dissemination and Implementation Research in Health (R01), 2013]. As shown in Figure 1, there are several categories of implementation measures.

Acceptability relates to a specific intervention and describes whether the potential implementers, based on their knowledge of or direct experience with the intervention, perceive it as agreeable, palatable, and/or satisfactory.1 Adoption is the decision of an individual leader, organization, or community to commit to and initiate an evidence‐based intervention.12 Too often, data on the adoption of evidence‐based interventions are lacking, even though adoption is the foundation for improving patient and population health. McGlynn et al.2 estimated that, across a wide range of content areas, adoption of evidence‐based healthcare practices among U.S. clinicians was about 55%. Appropriateness is related to the concept of compatibility and is defined as the perceived fit and relevance of the intervention for a given context (i.e., setting, user group) and/or its perceived ability to address a particular issue. Organizational culture and organizational climate might explain whether a potential group of implementers perceives an intervention as appropriate.13

Implementation often depends on the costs of the particular intervention, the implementation strategy used, and the characteristics of the setting(s) where the intervention is being implemented. For decision makers and policymakers, cost is often one of the key implementation outcomes. Ideally, more sophisticated cost analyses (e.g., cost‐effectiveness) are also available to assess relative value.
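To make “cost‐effectiveness” concrete, the sketch below computes an incremental cost‐effectiveness ratio (ICER): the additional cost of a new intervention divided by the additional effect it produces compared with an alternative such as usual care. It is a minimal illustration that assumes costs and effects (e.g., QALYs) have already been estimated for both options; the function name and the numbers in the example are hypothetical, not results from any study.

```python
def icer(cost_new: float, cost_comparator: float,
         effect_new: float, effect_comparator: float) -> float:
    """Incremental cost-effectiveness ratio (extra cost per extra unit of effect).

    Effects can be any common unit, e.g. QALYs gained or cases detected.
    The ratio is undefined when both options are equally effective.
    """
    delta_cost = cost_new - cost_comparator
    delta_effect = effect_new - effect_comparator
    if delta_effect == 0:
        raise ValueError("equal effectiveness: ICER is undefined")
    return delta_cost / delta_effect


# Hypothetical example: a program costing 120,000 that yields 30 QALYs,
# compared with usual care costing 80,000 that yields 25 QALYs.
print(icer(120_000, 80_000, 30, 25))  # 8000.0 per QALY gained
```

A negative ratio needs interpretation rather than ranking: it can mean the new option is cheaper and more effective, or costlier and less effective, which is one reason decision makers look at the cost and effect differences alongside the ratio itself.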

Feasibility refers to the actual fit, suitability, or practicability of an intervention in a specific setting. Consistent with diffusion theory, perceived feasibility plays a key role in the early adoption process.1, 14 Fidelity is defined as the degree to which an intervention is implemented as prescribed in the original protocol; more comprehensive discussions of fidelity measurement for complex interventions are available elsewhere.15, 16
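One simple way to operationalize fidelity, sketched below under the assumption that a protocol can be broken into discrete, checkable components, is the proportion of prescribed components delivered as specified in a given session or site. The component names are hypothetical; fuller fidelity instruments typically also rate quality of delivery and record adaptations, which a yes/no checklist does not capture.

```python
def fidelity_score(checklist: dict[str, bool]) -> float:
    """Proportion (0 to 1) of prescribed protocol components delivered as specified.

    checklist maps each prescribed component to True if it was delivered
    as the protocol describes, False otherwise.
    """
    if not checklist:
        raise ValueError("checklist must list at least one component")
    return sum(checklist.values()) / len(checklist)


# Hypothetical observation of one session of a self-management program.
session = {
    "goal_setting": True,
    "action_plan": True,
    "barrier_review": True,
    "follow_up_call": False,
}
print(fidelity_score(session))  # 0.75
```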

Penetration (e.g., niche saturation) is the extent to which an evidence‐based intervention is integrated into all subsystems of an organization (e.g., from front‐line workers to managers). This element relates closely to several others in this domain, especially adoption and appropriateness.

Sustainability is the extent to which an evidence‐based intervention continues to deliver its benefits over an extended period of time after external support from the donor agency is terminated.17 Sustainability is most often measured through the continued use of intervention components; however, it can also be viewed more broadly to include maintained community‐ or organizational‐level partnerships, sustained organizational or community attention to the issue that the intervention addresses, a long‐term commitment to evaluation, and diffusion or replication in other sites.18

Together, these categories of implementation outcomes address many issues that determine whether programs will be adopted and, if adopted, how successful they will be. Collectively, they are the types of information that clinicians and decision makers consider relevant and useful.8 We suggest that, for research to be meaningful to implementation, such measures (or a clearly identified process for including them) must become regular elements of research design; their absence decreases the utility of the research.

Service outcomes

Like Proctor et al.,1 we conceptualize service outcomes (or delivery quality) as intermediate outcomes. These service outcomes, drawn from the IOM report Crossing the Quality Chasm,7 are strongly influenced by implementation processes and strategies and lie upstream in the framework from both biomarker and client outcomes.

Efficiency is critically important because it is a key criterion for service settings and likely a major factor in whether an intervention will be sustained. Efficiency is related to the implementation outcome of costs, but it goes beyond the resources required to ask whether the intervention is a good value and an efficient way to deliver the service.

Safety is at the center of all health interventions, and both researchers and practitioners need to remember to “first, do no harm.” The complication with complex interventions19, 20 is that while their immediate beneficial effects are often obvious, some iatrogenic or unintended negative consequences can be more subtle or take time to develop, such as the cardiotoxic effects of some cancer treatments. Prevention researchers often point to the safety and lack of side effects of their programs as an important reason they should be given greater priority,21 and in general this is true. However, one safety‐related unintended consequence that should be addressed is that focusing on one prevention topic (e.g., cancer screening, or a certain type of cancer screening) may draw attention away from other important topics such as tobacco cessation, heart disease, or diabetes screening.22

Effectiveness is usually the most extensively assessed service outcome; examples include delivery of guideline‐based interventions and improvements in health behaviors. A corollary issue addressed much less frequently23 is that comparing effectiveness across topic areas requires also including broader, less disease‐specific outcomes.24

Equity might be the most important service outcome, as it rests on fair and just implementation and delivery processes. Given the complex determinants of health disparities,25, 26 reducing them is challenging.27 However, it is incumbent on all researchers to assess the impact of their interventions on health inequities and to demonstrate, as with safety outcomes, that at minimum the program or policy in question does not exacerbate inequities.
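Checking that a program “does not exacerbate inequities” can start with something as simple as comparing the absolute gap in an outcome between two groups before and after an intervention. The sketch below assumes group‐level rates are already available; the group labels and rates are hypothetical, and a fuller equity analysis would also consider relative gaps and more than two groups.

```python
def gap_change(pre: dict[str, float], post: dict[str, float],
               group_a: str, group_b: str) -> float:
    """Change in the absolute gap between two groups' outcome rates.

    Positive values mean the gap widened after the intervention;
    negative values mean it narrowed.
    """
    gap_before = abs(pre[group_a] - pre[group_b])
    gap_after = abs(post[group_a] - post[group_b])
    return gap_after - gap_before


# Hypothetical screening rates: both groups improve, yet the gap widens.
pre = {"higher_income": 0.60, "lower_income": 0.40}
post = {"higher_income": 0.75, "lower_income": 0.50}
print(round(gap_change(pre, post, "higher_income", "lower_income"), 2))  # 0.05
```

The example illustrates why equity has to be measured directly: an intervention can improve average outcomes in every group while still widening the disparity between them.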

Patient‐centeredness has received considerable recent attention, especially with the establishment of PCORI28 and the patient‐centered medical home movement and its criteria.29 At minimum, if one claims to produce patient‐centered outcomes, patient‐reported measures need to be a key part of the reported outcomes. This is one of several reasons to reexamine biomarkers as the unquestioned primary outcome in most medical and health research studies. Despite the recent emphasis on electronic health records (EHRs), patient‐reported measures are the type of data most often missing from otherwise comprehensive electronic records, even though the capacity to collect such data exists.30, 31

In many areas, client preference is a potent mediator of care; for example, it mediates response to antidepressant medication.32 While this is an important finding, clients generally remain receivers of care rather than partners in care. Engaging clients in key health decisions is both an important process and a research question: How do we do it? What promotes such engagement? If patient or citizen preferences mediate outcomes, then we need to ask them regularly about their experience and use those reports both to adapt planning and delivery and to evaluate the effectiveness of the plan and its execution. The ultimate outcomes, then, are patients engaging in self‐identified areas of care in an effective fashion that improves their functioning.

The public health or population‐level parallel to patient‐centeredness is community engagement. Like patient‐centeredness, community engagement and community‐based participatory research (CBPR) are now in vogue, and many programs claim to use CBPR principles. Stronger measures are needed of the extent to which community and agency members are equal partners and participate meaningfully throughout programs.

The final service outcome in Figure 1 is timeliness. Timeliness is related to, but distinct from, efficiency: it concerns the length of time between when a need is identified and when appropriate services are delivered. Timeliness is especially critical in progressive conditions such as cancer and diabetes, where earlier detection can help prevent disease consequences.
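Because timeliness is defined by the interval between when a need is identified and when appropriate services are delivered, it lends itself to a simple summary such as the median wait, reported together with the number of needs still unmet. The sketch below assumes each episode records those two dates (with no delivery date while services are pending); the function name and dates are hypothetical.

```python
from datetime import date
from statistics import median
from typing import Optional


def timeliness(episodes: list[tuple[date, Optional[date]]]) -> tuple[Optional[float], int]:
    """Return (median days from need identified to service delivered, number pending).

    Episodes without a delivery date are counted as pending and excluded
    from the median; the median is None if nothing has been delivered yet.
    """
    waits = [(delivered - identified).days
             for identified, delivered in episodes if delivered is not None]
    pending = sum(1 for _, delivered in episodes if delivered is None)
    return (median(waits) if waits else None), pending


# Hypothetical referrals from abnormal screening results to diagnostic follow-up.
episodes = [
    (date(2013, 1, 2), date(2013, 1, 16)),   # 14 days
    (date(2013, 1, 5), date(2013, 2, 4)),    # 30 days
    (date(2013, 1, 9), None),                # still pending
]
print(timeliness(episodes))  # (22.0, 1)
```

Reporting the pending count alongside the median matters: silently excluding people who never receive services would make a system look more timely than it is.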

Overall, researchers, policymakers, and patients/citizens seem to view service outcomes as important, but these outcomes are inconsistently measured, and there are few agreed‐upon, standardized, practical measures of them.33 Increased attention to these outcomes would do a great deal to make research more relevant.8

Biomarkers

We conceptualize biomarkers as the third category in our adapted logic model of health outcomes. Proctor et al.1 did not include biomarkers explicitly in their model, likely considering them to be either implicit or a subset of effectiveness under service outcomes. Biomarkers have become the preferred primary outcome of the vast majority of research on health outcomes.34, 35 We do not have the space to review the conceptual, historic, and methodological reasons for this, but many feel that it is almost impossible to get a grant funded or study published without a primary biomarker outcome.

Biomarkers have a number of advantages (Table 1), including that they can be measured objectively, some are relatively inexpensive to collect, and at least some biomarkers have mechanistic roles in the disease process. In many areas, there has been unquestioned progress in the relevance and usefulness of biomarkers such as the advance from urinary glucose to glycosylated hemoglobin for assessing diabetes outcomes.36

Table 1.

Strengths and limitations of different types of outcomes

| Outcome | Strengths | Limitations |
|---|---|---|
| Implementation | Necessary for action; informs how to improve; reflects real‐world delivery | Few standard measures; requires multiple methods; may be labor intensive to collect |
| Service delivery | Related to IOM* framework; related to HEDIS reporting | Not always related to endpoints; reliable/valid measures may be lacking; metrics can be gamed |
| Biomarkers | Relatively easy and commonly collected; considered objective | Not endpoints themselves; not always actionable; can result in overtreatment |
| Health and functioning | QALYs are ultimate goal; incorporates patient/population perspective | Long lead time to outcome; often need huge sample sizes; existing surveillance systems often lack intermediate indicators |

*IOM: Institute of Medicine.

Nevertheless, biomarkers are not themselves health outcomes, a point that sometimes seems forgotten. The link between biomarkers and client or health outcomes, which form the final category in the Proctor et al. model1 and in Figure 1, is variable and often uncertain.34 Numerous other factors combine with biomarkers to produce outcomes such as morbidity, mortality, and healthcare utilization.37, 38, 39 In addition, it is possible to produce strong biomarker improvements without positively affecting disease conditions, outcomes, or morbidity, as demonstrated in the ACCORD trial.40, 41 Improvements in biomarkers can be produced by interventions that are not at all efficient, timely, equitable, safe, or patient centered. In this time of healthcare budget crisis, the expense of producing some biomarker improvements can be considerable and should be quantified and considered carefully.

We posit that biomarkers, themselves in large part a reaction to earlier, less precise, and more subjective outcomes, have almost become reified as the most critical and sometimes the only important outcome. As has happened at various times in scientific history,42 an innovation that helped to advance the field substantially has now become almost an impediment to broader, more integrative, and more thoughtful conceptualizations of health outcomes. Biomarkers should no longer be treated as the ultimate outcome.

Patient, population, or ultimate health outcomes

Health, function, and intervention cost and effectiveness in relation to function over time are the ultimate patient and population outcomes.34 The changes generated by healthcare reform all focus on Triple Aim outcomes. If healthcare redesign focuses on improved patient experience (health and satisfaction), improved outcomes (function), and elimination of resource utilization that does not contribute to improved patient outcomes at a cost that society deems acceptable (cost and function over time), then research going forward should focus on these ultimate outcomes. Similarly, new initiatives involving health policies, community transformation grants, and increased use of community health workers have the potential to improve public and population health.
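Function over time is often summarized as quality‐adjusted life years (QALYs), listed in Table 1 as the ultimate goal: time spent in each health state is weighted by a utility between 0 (death) and 1 (full health), and the weighted times are summed. The sketch below is undiscounted and, as a simplifying assumption, restricts weights to the 0 to 1 range (some valuation instruments allow states valued below zero); the function and inputs are hypothetical.

```python
def qalys(periods: list[tuple[float, float]]) -> float:
    """Undiscounted QALYs from (years in state, utility weight) pairs.

    Utility weights are assumed to lie between 0 (death) and 1 (full health).
    """
    total = 0.0
    for years, weight in periods:
        if years < 0:
            raise ValueError("duration cannot be negative")
        if not 0.0 <= weight <= 1.0:
            raise ValueError("utility weight must be between 0 and 1")
        total += years * weight
    return total


# Hypothetical patient: 2 years at utility 0.8, then 3 years at utility 0.6.
print(round(qalys([(2, 0.8), (3, 0.6)]), 2))  # 3.4
```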

The ultimate question then becomes how programs and policies should be designed and implemented so that an optimal set of resources delivers access to optimal services and care for engaged and activated consumers, generating the best achievable function over time. Research addressing that question serves the goals of the Triple Aim, responds to crucial questions that have been marginalized, and can be evaluated using the outcome measures and metrics discussed above. There are at least three reasons for such marginalization. First, measurement of health and function in a way that yields comparable outcomes is still evolving; such measures must be collected within the practice flow and have clinical, public health, and research relevance. Second, such measures are largely patient reported. Third, methods for collecting such data and integrating them into EHRs and other databases are still in development.

Implications

How do we collect data?

Even if we can generate a consensus on priority outcomes, we still need to identify data collection methods that neither interfere with practice nor, through the act of collection, make an organization so different that generalization is limited. If data cannot be collected within the everyday flow of practice, they will not be collected in everyday care.

The EHR and large extracted databases as primary research data collection

Not long ago, proposals to use the EHR for research data collection met resistance and objections that such data were not generalizable. Electronic and automated data collection methods now seem to have become the preferred modalities for data collection.

For example, CMS recently began reimbursing providers for annual wellness visits for Medicare beneficiaries. To be reimbursable, the visit must include a documented health risk appraisal that contains elements of a functional assessment. Practice therefore now regularly includes the collection of patient‐generated data on the psychosocial dimensions of functioning, including behavioral risk assessment and the patient's report of health and functioning. To be usable as part of care, the appraisal needs to be built into the EHR and recorded in searchable fields. If such data are collected routinely on a population basis, they can serve not only as an individual patient tool but also as data for quality improvement and population research.

Recommended priorities

Based on the model above and our discussion of the current status of the field, we make the following recommendations for near term priorities that should jump‐start and help to refocus the integration of research into practice and policy.

First, as discussed in detail elsewhere,30, 33 there is a pressing need for consensus on and collection of more standardized or harmonized measures. We recommend priority be given to identification of harmonized, practical measures within each of three outcome categories (Table 2). Our recommendations for increased priority are:

Within implementation measures, priority should be given to harmonized assessments of reach (patient or citizen participation rates and representativeness of participants), adoption (the same issues at the setting and staff levels), resources required (including both monetary and time/burden costs from the perspectives of systems, citizens, and society), fidelity/adaptation of programs and policies as they are implemented in diverse settings, and sustainability;

Within service delivery measures, we recommend priority be given to harmonized measures of equity impacts (diversity indicators) as well as safety and unintended consequences (especially given the increasing availability of big data and EHR data on large patient populations);

Within health or ultimate outcome measures, we recommend priority be given to practical, patient‐reported measures of functioning, effectiveness over time, and cost‐effectiveness.
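The reach recommendation combines two quantities: the participation rate among those eligible, and representativeness, that is, how participants compare with those who decline. The sketch below assumes one record per eligible person with an enrollment flag and a yes/no characteristic of interest; the field names and data are hypothetical.

```python
def reach_summary(eligible: list[dict], trait: str) -> dict[str, float]:
    """Participation rate plus the share with `trait` among participants vs. decliners.

    Each record needs a boolean "enrolled" field and a boolean field named by `trait`.
    A large difference between the two shares signals limited representativeness.
    """
    if not eligible:
        raise ValueError("no eligible individuals")
    enrolled = [p for p in eligible if p["enrolled"]]
    declined = [p for p in eligible if not p["enrolled"]]

    def share(group: list[dict]) -> float:
        # NaN rather than an error when a group is empty (e.g., everyone enrolled).
        return sum(p[trait] for p in group) / len(group) if group else float("nan")

    return {
        "participation_rate": len(enrolled) / len(eligible),
        "trait_share_participants": share(enrolled),
        "trait_share_decliners": share(declined),
    }


# Hypothetical eligible population of five patients; trait = age 65 or older.
people = [
    {"enrolled": True, "age_65_plus": False},
    {"enrolled": True, "age_65_plus": True},
    {"enrolled": False, "age_65_plus": True},
    {"enrolled": False, "age_65_plus": True},
    {"enrolled": False, "age_65_plus": False},
]
print(reach_summary(people, "age_65_plus"))
```

In this toy population, 40% of eligible patients participate, and older adults make up 50% of participants but about 67% of decliners, so the participants under‐represent the older group.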

Table 2.

Recommended priorities for outcomes measurement

| Outcome category and recommended priority (1–4)* | Specific issues within category to recommend |
|---|---|
| Implementation outcomes (1) | Reach (participation and characteristics of participants vs. those who decline), cost, adaptation, sustainability over time |
| Service/delivery (2) | Equity, safety (including unintended consequences) |
| Biomarkers (4) | Epigenetic changes |
| Health and functioning outcomes (3) | Quality of life |

*1 = highest or top priority.

In general, for the near term, the priorities that have been placed on the four types of outcome measures need to be reversed. Specifically, the biomarker outcomes that have dominated the field do not need to be collected in every grant or report, and by themselves they can be misleading. That they are ubiquitous does not mean they are always important. In general, health research has done a credible job of assessing health, client, or ultimate outcomes.1 The main limitation is that these outcomes are often measured by themselves rather than as part of a multimethod, multioutcome package of measures.43

This leaves the categories of implementation and service/delivery quality outcomes, both of which would benefit from harmonization and more frequent collection. Above, we have summarized what we think are compelling reasons why these categories deserve higher priority. These measures may have been collected infrequently in part because of the general lack of widely available, previously validated, or harmonized measures of these outcomes. For example, how one study measures reach may differ considerably from how another does, and very few training programs provide instruction in how to measure these outcomes. We think that efforts to develop and achieve harmonization of implementation and service/delivery outcomes should be a research priority.30, 31, 33

Changes in health policy and care delivery generate changes in the need for data and in the measures generating such data. Our healthcare evolution now implies increased priority for some measurement categories that have been less frequently reported, and less emphasis on some formerly held “gold standard” measures, such as biomarkers, which were frequently (and often solely) considered the necessary endpoints of major trials. Such a change in focus would greatly enhance relevance and transparency for potential adopters, produce more actionable findings, potentially speed up the pace of research, and inform science about the links (and the absence of links) between different categories of outcomes.

We anticipate potential objections. One is that this shift would require rapid development of, and consensus on, more standardized measures within key domains. There is some indication from NIH/AHRQ projects promoting routine collection of patient‐reported measures in primary care that this can be done31, 44 (http://www.scribd.com/doc/14427729/Observations‐of‐Daily‐Living‐primer‐from‐Robert‐Wood‐Johnson‐Foundation).

Such a shift would also challenge accepted training models and would require different training and infrastructure. We are admittedly proposing a shift in the culture of science, one that will require thinking through and justifying the outcomes for any given study rather than assuming that biomarkers are the key outcomes for all. For many practice and policy decisions (especially for chronic diseases), the changes in biological endpoints resulting from an intervention are years or decades away, necessitating a stronger set of intermediate outcomes; and for many types of implementation and dissemination studies, biomarkers are not the appropriate endpoints when an evidence‐based intervention has already been shown to be effective.

The infrastructure changes needed are not trivial. The expertise required of study sections and journal reviewers would need to change. Funding decisions would shift toward supporting high‐probability, “on the ground” implementation that generates results with rapid and direct relevance to practice and policy. Similarly, journals and reviewers would be asked to adapt. Journals that publish data relevant to policy and practice would gain value, and reviewing and publishing in those journals would become important and rewarded elements of professional development.

Ours is certainly not the final word; rather, this is the beginning of a conversation, one that is timely and overdue. We encourage others to engage in this discussion. Whatever the reaction to our ideas, the central point is the need to reconsider the purpose of health research in general and of outcomes in particular, and how, as individuals, organizations, and fields, we can shift from a paradigm of limited relevance and utility to research that can best advance health and be translated most rapidly and productively into policy and practice.

Acknowledgments

We express our gratitude to Dr. Enola Proctor for her seminal work on this measurement model and to Dr. Borsika Rabin for her work on the definitions of many of the implementation constructs discussed. This work was supported in part by Cooperative Agreement Number U48/DP001903 from the Centers for Disease Control and Prevention (the Prevention Research Centers Program). The opinions expressed are those of the authors and not necessarily those of the National Cancer Institute or any funder.

References

- 1. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, Hensley M. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health 2011; 38(2): 65–76.

- 2. McGlynn EA, Asch SM, Adams J, Keesey J, Hicks J, DeCristofaro A, Kerr EA. The quality of health care delivered to adults in the United States. N Engl J Med 2003; 348(26): 2635–2645.

- 3. Balas EA, Boren SA. Managing Clinical Knowledge for Health Care Improvement. Stuttgart: Schattauer; 2000.

- 4. Kessler R. The patient centered medical home: an opportunity to move past brilliant and irrelevant research and practice. Transl Behav Med 2012; 2: 311–312. doi:10.1007/s13142‐012‐0151‐6.

- 5. Brownson RC, Colditz GA, Proctor EK, eds. Dissemination and Implementation Research in Health: Translating Science to Practice. 1st edn. New York: Oxford University Press; 2012.

- 6. Glasgow RE, Vinson C, Chambers D, Khoury MJ, Kaplan RM, Hunter C. National Institutes of Health approaches to dissemination and implementation science: current and future directions. Am J Public Health 2012; 102(7): 1274–1281.

- 7. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: Committee on Quality Health Care in America; National Academies Press; 2003.

- 8. Rothwell PM. External validity of randomised controlled trials: to whom do the results of this trial apply? Lancet 2005; 365: 82–93.

- 9. Allen JD, Linnan LA, Emmons KM. Fidelity and its relationship to implementation effectiveness, adaptation, and dissemination. In: Brownson RC, Colditz G, Proctor E, eds. Dissemination and Implementation Research in Health: Translating Science to Practice. New York: Oxford University Press; 2012. pp 281–304.

- 10. Carroll C, Patterson M, Wood S, Booth A, Rick J, Balain S. A conceptual framework for implementation fidelity. Implement Sci 2007; 2: 40.

- 11. Breitenstein SM, Gross D, Garvey CA, Hill C, Fogg L, Resnick B. Implementation fidelity in community‐based interventions. Res Nurs Health 2010; 33(2): 164–173.

- 12. Rabin BA, Brownson RC. Developing the terminology for dissemination and implementation research in health. In: Brownson RC, Colditz GA, Proctor EK, eds. Dissemination and Implementation Research in Health: Translating Science to Practice. New York: Oxford University Press; 2012. pp 23–54.

- 13. Aarons GA, Horowitz JD, Dlugosz LR, Ehrhart MG. Role of organizational processes in dissemination and implementation research. In: Brownson RC, Colditz G, Proctor E, eds. Dissemination and Implementation Research in Health: Translating Science to Practice. New York: Oxford University Press; 2012. pp 128–153.

- 14. Rogers EM. Diffusion of Innovations. 5th edn. New York: Free Press; 2003.

- 15. Sussman S, Valente TW, Rohrbach LA, Skara S, Pentz MA. Translation in the health professions: converting science to action. Eval Health Prof 2006; 29: 7–22.

- 16. Hawe P, Shiell A, Riley T, Gold L. Methods for exploring implementation variation and local context within a cluster randomised community intervention trial. J Epidemiol Community Health 2004; 58: 788–793.

- 17. Shediac‐Rizkallah MC, Bone LR. Planning for the sustainability of community‐based health programs: conceptual frameworks and future directions for research, practice and policy. Health Educ Res 1998; 13(1): 87–108.

- 18. Scheirer MA, Dearing JW. An agenda for research on the sustainability of public health programs. Am J Public Health 2011; 101(11): 2059–2067.

- 19. Craig P, Dieppe P, Macintyre S, Michie S, Nazareth I, Petticrew M. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ 2008; 337: a1655.

- 20. Faes MC, Reelick MF, Esselink RA, Rikkert MG. Developing and evaluating complex healthcare interventions in geriatrics: the use of the medical research council framework exemplified on a complex fall prevention intervention. J Am Geriatr Soc 2010; 58(11): 2212–2221. [DOI] [PubMed] [Google Scholar]

- 21. Woolf SH. Potential health and economic consequences of misplaced priorities. JAMA 2007; 297(5): 523–526. [DOI] [PubMed] [Google Scholar]

- 22. Kessler RS, Purcell EP, Glasgow RE, Klesges LM, Benkeser RM, Peek CJ. What does it mean to “employ” the RE‐AIM model? Eval Health Prof 2012; 36(1): 44–46. [DOI] [PubMed] [Google Scholar]

- 23. Glasgow RE, Emmons KM. How can we increase translation of research into practice? Types of evidence needed. Annu Rev Public Health 2007; 28: 413–433.

- 24. Kaplan RM, Ganiats TG. QALYs: their ethical implications. JAMA 1990; 264(19): 2502–2503.

- 25. Mackenbach JP. The persistence of health inequalities in modern welfare states: the explanation of a paradox. Soc Sci Med 2012; 75(4): 761–769.

- 26. Marmot M, Allen J, Bell R, Bloomer E, Goldblatt P. WHO European review of social determinants of health and the health divide. Lancet 2012; 380(9846): 1011–1029.

- 27. Chin MH, Clarke AR, Nocon RS, Casey AA, Goddu AP, Keesecker NM, Cook SC. A roadmap and best practices for organizations to reduce racial and ethnic disparities in health care. J Gen Intern Med 2012; 27(8): 992–1000.

- 28. Selby JV, Beal AC, Frank L. The Patient‐Centered Outcomes Research Institute (PCORI) national priorities for research and initial research agenda. JAMA 2012; 307(15): 1583–1584.

- 29. Crabtree BF, Nutting PA, Miller WL, Stange KC, Stewart EE, Jaén CR. Summary of the National Demonstration Project and recommendations for the patient‐centered medical home. Ann Fam Med 2010; 8(Suppl 1): S80–S90.

- 30. Glasgow RE, Kaplan RM, Ockene JK, Fisher EB, Emmons KM. Patient‐reported measures of psychosocial issues and health behavior should be added to electronic health records. Health Aff (Millwood) 2012; 31(3): 497–504.

- 31. Estabrooks PA, Boyle M, Emmons KM, Glasgow RE, Hesse BW, Kaplan RM, Krist AH, Moser RP, Taylor MV. Harmonized patient‐reported data elements in the electronic health record: supporting meaningful use by primary care action on health behaviors and key psychosocial factors. J Am Med Inform Assoc 2012; 19(4): 575–582.

- 32. Raue PJ, Schulberg HC, Heo M, Klimstra S, Bruce ML. Patients’ depression treatment preferences and initiation, adherence, and outcome: a randomized primary care study. Psychiatr Serv 2009; 60(3): 337–343.

- 33. Rabin BA, Purcell P, Naveed S, Moser RP, Henton MD, Proctor EK, Brownson RC, Glasgow RE. Advancing the application, quality, and harmonization of implementation science measures. Implement Sci 2012; 7: 119.

- 34. Kaplan RM. Two pathways to prevention. Am Psychol 2000; 55(4): 382–396.

- 35. Shy CM. The failure of academic epidemiology: witness for the prosecution. Am J Epidemiol 1997; 145(6): 479–484.

- 36. Nathan DM, Singer DE, Hurxthal K, Goodson JD. The clinical information value of the glycosylated hemoglobin assay. N Engl J Med 1984; 310(6): 341–346.

- 37. Rose G. Sick individuals and sick populations. Int J Epidemiol 1985; 14: 32–38.

- 38. Rose G. The Strategy of Preventive Medicine. New York: Oxford University Press; 1992.

- 39. McKinlay JB, Marceau LD. To boldly go… Am J Public Health 2000; 90(1): 25–33.

- 40. Bloomgarden ZT. Diabetes treatment: the coming paradigm shift. J Diabetes 2012; 4(4): 315–317. doi: 10.1111/1753-0407.12005.

- 41. Genuth S, Ismail‐Beigi F. Clinical implications of the ACCORD trial. J Clin Endocrinol Metab 2012; 97(1): 41–48.

- 42. Kuhn TS. The Structure of Scientific Revolutions. 3rd edn. Chicago: University of Chicago Press; 1996.

- 43. Mayo NE, Scott S. Evaluating a complex intervention with a single outcome may not be a good idea: an example from a randomised trial of stroke case management. Age Ageing 2011; 40(6): 718–724.

- 44. Carle AC, Cella D, Cai L, Choi SW, Crane PK, Curtis SM, Gruhl J, Lai JS, Mukherjee S, Reise SP, Teresi JA, Thissen D, Wu EJ, Hays RD. Advancing PROMIS's methodology: results of the Third Patient‐Reported Outcomes Measurement Information System (PROMIS) Psychometric Summit. Expert Rev Pharmacoecon Outcomes Res 2011; 11(6): 677–684.