Abstract

Implementing behavioral health interventions is a complicated process. It has been suggested that implementation strategies should be selected and tailored to address the contextual needs of a given change effort; however, there is limited guidance as to how to do this. This article proposes four methods (concept mapping, group model building, conjoint analysis, and intervention mapping) that could be used to match implementation strategies to identified barriers and facilitators for a particular evidence-based practice or process change being implemented in a given setting. Each method is reviewed, examples of their use are provided, and their strengths and weaknesses are discussed. The discussion includes suggestions for future research pertaining to implementation strategies and highlights these methods' relevance to behavioral health services and research.

Keywords: Implementation Strategies, Implementation Research, Tailoring

Introduction

Evidence-based practices (EBPs) are underutilized in community settings. Implementation science, the study of methods to promote the integration of research into routine practice,1 has advanced knowledge of ways to increase the use of EBPs. In the United States, national agencies have prioritized the development and testing of implementation strategies,2 which are “methods or techniques used to enhance the adoption, implementation, and sustainability of a clinical program or practice.”3(p2) Implementation strategies can be single component or “discrete” strategies (e.g., disseminating educational materials, reminders, and audit and feedback); however, most are multifaceted and multilevel, involving more than one discrete component.4,5 Multifaceted strategies can be built by combining the over 70 discrete implementation strategies that have been described in published taxonomies.5–7 However, selecting the most appropriate implementation strategies is a complex task – one for which the literature has provided limited guidance.8 This article outlines challenges associated with selecting implementation strategies, presents an argument for selecting and tailoring implementation strategies to address the unique needs of implementation efforts, and fills a gap in the literature by suggesting four potential methods that may be used to guide that process.

Challenges associated with selecting implementation strategies

It is challenging to select from the many implementation strategies that may be relevant to a particular change effort given: limitations of the empirical literature; the underutilization of conceptual models and theories in the literature; and variations related to the EBPs and the contexts in which they are implemented.8 The evidence-base for implementation strategies has advanced considerably in recent years and numerous randomized trials have been conducted.9 However, reviews and syntheses of that literature provide limited guidance regarding the types of strategies that may be effective in particular circumstances. This is particularly true in behavioral health settings, as there are far fewer randomized controlled trials and head-to-head comparisons of implementation strategies than in other medical and health service settings.10–12 Thus, while it is well established that simply training clinicians to deliver EBPs is insufficient,13 it is less clear which strategies are needed at the client, clinician, team, organizational, system, or policy levels to facilitate implementation and sustainment.

Conceptual frameworks14 can guide research and practice by suggesting factors that influence implementation outcomes15,16 and providing some direction for the selection and tailoring of strategies.17,18 But researchers often fail to explicitly refer to guiding conceptual frameworks,12,19 and when they do, they often simply borrow a subset of constructs or outcomes without framing their study within the broader context of the framework.20 It is not always clear how to translate frameworks into implementation strategy design,21 as many are primarily heuristic and do not indicate the direction or nature of causal mechanisms. Thus, while conceptual frameworks and theories can inform all aspects of implementation research,22 they provide a necessary but not sufficient guide for selecting and tailoring implementation strategies.

The considerable variation in EBPs and other process changes has implications for selecting strategies. Scheirer,23 for example, has proposed a framework of six different intervention types that vary in complexity and scope, from interventions implemented by individual providers (e.g., cognitive behavioral therapy) to those requiring coordination across staff and community agencies (e.g., multisystemic therapy, assertive community treatment) to those embracing broad-scale system change (e.g., Philadelphia's recovery transformation).24 These intervention types may require the use of unique constellations of implementation strategies to ensure that they are integrated and sustained.25 Contextual variation also has implications for selecting strategies,8 as settings are likely to vary with regard to patient-level (e.g., fit between patient's cultural values and EBP,26 patient “buy in” to EBP);27 provider-level (e.g., attitudes toward EBPs28 and specific behavior change mechanisms7); organizational-level (e.g., culture and climate,29 implementation climate30); and system-level characteristics (e.g., policies and funding structures31,32).

Selecting and tailoring discrete strategies to address contextual needs

The complexities associated with selecting implementation strategies and the considerable variation inherent to different EBPs and contexts have led scholars to suggest that implementation strategies should be selected and tailored to address the unique needs of a given change effort.4,33–35 The first step in selecting and tailoring implementation strategies is to conduct an assessment of factors that influence implementation processes and outcomes,34 such as the characteristics of the innovation,23,36 characteristics of the setting in which it will be implemented, the characteristics and preferences of involved stakeholders, and other potential barriers and facilitators.37 Numerous resources can guide the assessment of these factors.37,38 The second step of the process involves selecting and, when necessary, tailoring strategies that can potentially address the context-specific factors identified in the pre-implementation assessment.34 An example of selecting strategies to address an identified barrier would be preventing “therapist drift” by selecting fidelity monitoring, audit and feedback, or ongoing consultation. Discrete strategies may also need to be tailored to address a particular barrier. For example, in-person trainings may be difficult to scale up in community settings because they require substantial expenditures of time and money; thus, the training may need to be delivered as a web-based module.

The need for systematic methods for selecting and tailoring implementation strategies

Selecting and tailoring strategies to address contextual needs “has considerable face validity and is a feature of key frameworks and published guidance for implementation in health care.”4 While the empirical evidence supporting the approach is not yet robust, a Cochrane Review found that strategies tailored to address identified barriers to change were more likely to improve professional practice than no intervention or the dissemination of guidelines.33 Yet many implementation studies have failed to use strategies that are appropriately matched to contextual factors.39 Implementers have too often become attached to single strategies (e.g., educational workshops) and uncritically applied them to all situations.39 Furthermore, in a review of 20 studies, Bosch and colleagues40 found that when researchers have attempted to match implementation strategies to identified barriers, there has often been a theoretical mismatch (e.g., clinician-focused strategies are used to address barriers that exist at the organizational level). Baker et al. note, “although tailored interventions [implementation strategies] appear to be effective, we do not yet know the most effective ways to identify barriers, to pick out from amongst all the barriers those that are most important to address, or how to select interventions [implementation strategies] likely to overcome them.”33(p20) Linking strategies to barriers, facilitators, and contextual features remains a creative, emergent, and non-systematic process that occurs during implementation efforts.35 It is clear that “systematic and rigorous methods are needed to enhance the linkage between identified barriers and change strategies.”39(p169) These methods should take relevant theory and evidence into account, elicit stakeholder feedback and participation, and be specified clearly enough to be replicated in science and practice. To date, few studies have advanced candidate methods capable of addressing that need.

Methods for Selecting and Tailoring Implementation Strategies

This article fills a gap in the implementation science and behavioral health literatures by suggesting four methods that can be used to improve the process by which strategies are linked to the unique needs of implementation efforts: concept mapping,41,42 group model building,43–45 conjoint analysis,46 and intervention mapping.47 These methods were selected based upon the authors' expertise and a narrative search of the literature in multiple disciplines (e.g., implementation science, public health, engineering, and marketing). They were also chosen because they have been used to develop interventions in other contexts, have substantial bodies of literature that could guide their use, and are not proprietary. The evidence for their effectiveness in improving implementation and clinical outcomes is yet to be determined; however, their use in other contexts suggests that they may be an important step in a research agenda aiming to integrate the perspectives of implementation stakeholders, make implementation planning more systematic, and improve the linkage between implementation barriers and strategies. Below, each method is reviewed and examples of its use are provided. Table 1 contains a brief description of each method and lists some advantages and disadvantages of using it to select and tailor implementation strategies.

Table 1. Overview of methods for selecting and tailoring implementation strategies.

| Method | Brief Description | Advantages and Disadvantages |
|---|---|---|
| Concept Mapping | A mixed methods approach that involves generating, structuring, and analyzing ideas to create a visual map of concepts that are rated on specified dimensions (e.g., importance and feasibility) | Advantages:<br>Disadvantages: |
| Group Model Building | A system dynamics-based method that involves engaging stakeholders in the collaborative development of causal loop diagrams that model complex problems to identify opportunities and strategies for improvement | Advantages:<br>Disadvantages: |
| Conjoint Analysis | A quantitative method that requires participants to select different “product” profiles, which allows for the determination of how they value different attributes of products, services, interventions, implementation strategies, etc. | Advantages:<br>Disadvantages: |
| Intervention Mapping | A systematic, multi-step method for developing interventions (or implementation strategies) that is inherently ecological and incorporates theory, evidence, and stakeholder perspectives | Advantages:<br>Disadvantages: |

Concept mapping

Overview

Concept mapping is a mixed methods approach that organizes the ideas of a group to form a common framework.42 Data collection involves engaging stakeholders in brainstorming, sorting, and rating tasks. The brainstorming task is structured through a “focus prompt” (e.g., “a potential barrier to implementing this EBP is____” or “one strategy for implementing this EBP in our organization [service system, state, etc.] is ____”). Stakeholders are encouraged to generate as many items as possible. These items are then compiled and culled to a list of no more than 100 statements. Stakeholders then sort the statements into conceptually consistent piles. Stakeholders are also asked to rate each statement on a number of dimensions (e.g., importance, feasibility, changeability) using a Likert scale. These data are then analyzed using multidimensional scaling and hierarchical cluster analysis. The end product is a map that contains different shapes representing distinct concepts that can also be depicted in a cluster rating map to reflect varying ratings on specified dimensions such as importance and feasibility.42 Kane and Trochim's text42 provides an accessible introduction to concept mapping. Concept mapping software, training, and consultation are available through Concept Systems Global, Inc.48
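The sorting and rating analysis described above can be illustrated with a minimal sketch. It rests on several assumptions: the statements, sort piles, and importance ratings are invented for illustration; the function names (`cooccurrence`, `average_linkage`, `cluster_means`) are hypothetical; and, for brevity, the sketch skips the multidimensional scaling step and applies a simple average-linkage clustering directly to the co-sorting matrix, which is a simplification of the procedure used by dedicated concept mapping software.

```python
from itertools import combinations

def cooccurrence(sorts, n_items):
    """Count how many participants placed each pair of statements in the same pile."""
    sim = [[0] * n_items for _ in range(n_items)]
    for piles in sorts:
        for pile in piles:
            for i in pile:
                sim[i][i] += 1
            for i, j in combinations(pile, 2):
                sim[i][j] += 1
                sim[j][i] += 1
    return sim

def average_linkage(sim, n_sorters, n_clusters):
    """Agglomerative clustering on distance = 1 - proportion of sorters who co-sorted a pair.
    Assumption: stands in for the MDS + hierarchical clustering used in practice."""
    n = len(sim)
    dist = [[1 - sim[i][j] / n_sorters for j in range(n)] for i in range(n)]
    clusters = [[i] for i in range(n)]
    while len(clusters) > n_clusters:
        best = None
        for a, b in combinations(range(len(clusters)), 2):
            total = sum(dist[i][j] for i in clusters[a] for j in clusters[b])
            d = total / (len(clusters[a]) * len(clusters[b]))
            if best is None or d < best[0]:
                best = (d, a, b)
        _, a, b = best
        clusters[a] = sorted(clusters[a] + clusters[b])
        del clusters[b]
    return sorted(clusters)

def cluster_means(clusters, ratings):
    """Average a per-statement rating (e.g., importance) within each cluster."""
    return [sum(ratings[i] for i in c) / len(c) for c in clusters]

# Hypothetical data: three stakeholders sort six statements (indexed 0-5) into piles.
sorts = [
    [[0, 1, 2], [3, 4, 5]],
    [[0, 1], [2, 3], [4, 5]],
    [[0, 1, 2], [3, 4], [5]],
]
importance = [4.5, 4.0, 3.5, 2.0, 2.5, 3.0]  # hypothetical mean Likert ratings

sim = cooccurrence(sorts, n_items=6)
clusters = average_linkage(sim, n_sorters=len(sorts), n_clusters=2)
means = cluster_means(clusters, importance)
print(clusters, means)
```

In a cluster rating map, the cluster means computed in the last step would correspond to the layers that show how highly each cluster was rated on a given dimension.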

How it has been used

Concept mapping has been used in strategic planning, community building, curriculum development, the development of conceptual models, and evaluation.49 Increasingly, it has been successfully used to address implementation-related objectives such as identifying barriers and facilitators,15,50 assessing program fidelity,51 and creating a conceptual model to translate cancer research into practice.52

Examples from behavioral health

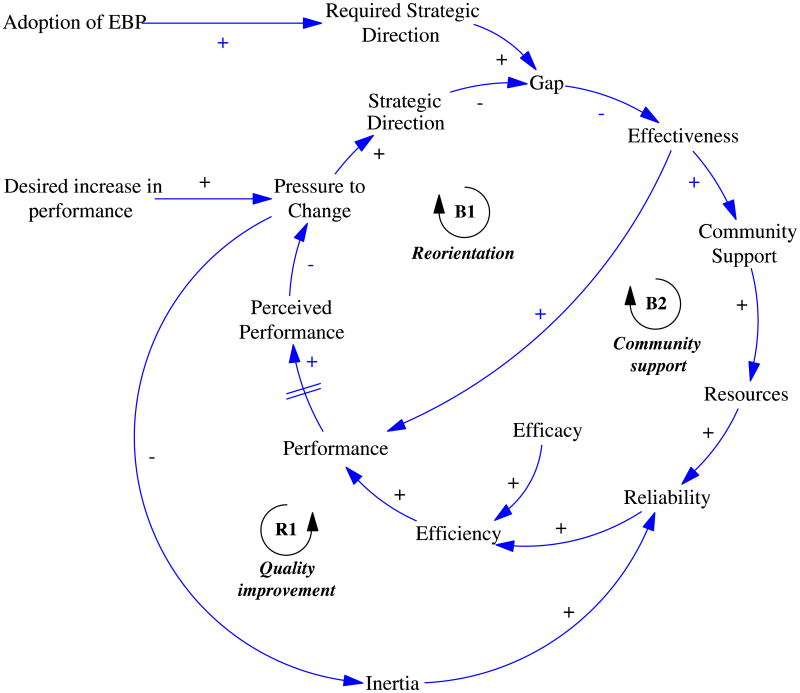

Concept mapping can improve the selection and tailoring of strategies in a number of ways. First, it can be used to identify factors that may affect the implementation of a specific EBP in a specific setting, and to determine which factors are most important and actionable from the perspectives of a wide range of stakeholders.15,50,53 For example, Green and Aarons53 compared the perspectives of policy and direct practice stakeholders regarding factors that influence the implementation of EBPs. Figure 1 shows an example of a cluster rating map taken from their study. Each point on the map represents a specific barrier or facilitator of EBP implementation that policy stakeholders identified. The 14 conceptually distinct clusters were derived from the sorting process and subsequent analysis (i.e., multidimensional scaling and hierarchical cluster analysis). The layers on the map depict the policy stakeholders' “importance” ratings. Thus, the cluster of barriers and facilitators labeled “funding” was seen as more important than the cluster labeled “agency compatibility.” Green and colleagues provide additional guidance and examples of the use of concept mapping in relation to implementation.41

Figure 1. Policy stakeholder cluster rating map53.

Second, concept mapping can be used to ensure that a full range of implementation strategies is considered by supplementing strategies generated through stakeholder brainstorming sessions with those identified through relevant literature,5,6 and generating conceptually sound categories of strategies from which to select. Waltz et al.8 have recently used concept mapping to create conceptually distinct categories of strategies and determine their importance and feasibility according to experts in implementation science and clinical practice.

Finally, concept mapping could be used to generate consensus about the strategies (and categories of strategies) that can most effectively, efficiently, and feasibly address a specific barrier or set of barriers (e.g., a focus prompt could be, “In order to more effectively engage patients in treatment, we need to____”).

Group model building

Overview

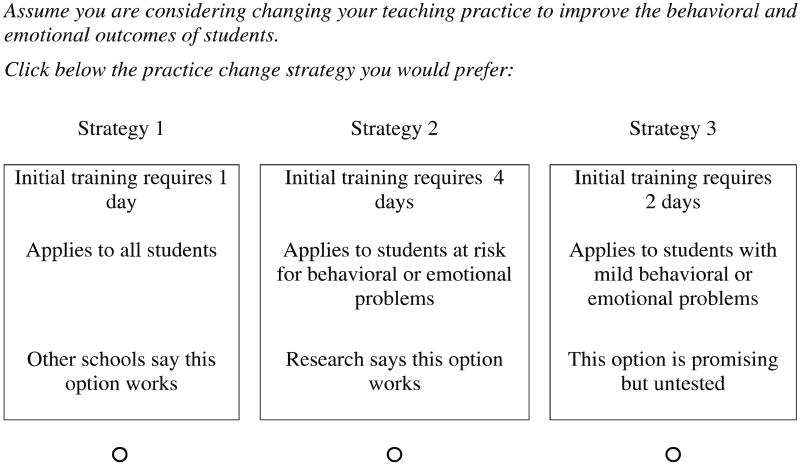

Group model building comes from the field of system dynamics and involves the “client” in identifying and implementing solutions to “messy” problems.43 System dynamics is characterized by the use of social systems to obtain stakeholder feedback that leads to models for studying causal loops (including variables, relationships, and feedback) to better understand potential consequences of the system's structure prior to the actual implementation. Group model building has evolved to include numerous structures and formats (see Rouwette and Vennix54 for a review), with guidelines for choosing the optimal approach.44 The reference group approach is perhaps the most commonly used. In this approach, a reference group of 8 to 10 “clients,” who could be directors, managers, supervisors, providers, and consumer advocates involved in implementation, serves as critics to the modeling process in a series of iterative discussions and meetings. The group meetings require at least two guides, though others suggest a minimum of five, including: a “gatekeeper,” “process coach,” “modeling coach,” “facilitator” (who has the most important role), and a “recorder.”55 The facilitator must be a neutral party (ideally a group model building expert) who can elicit client knowledge, translate the knowledge into system dynamics terms, and create a group atmosphere in which open communication can occur with the goal of achieving consensus and commitment. The recorder keeps track of all model elements throughout the discussion series. Once the structure and roles are established, group model building proceeds through the following three steps. First, a problem statement is articulated by the reference group.43 For example, the problem statement might be, “Clinicians are not integrating the EBP into their practice.” Second, the model building is initiated.
This requires careful delineation of the relevant variables by the reference group and is termed the “conceptualization stage.” For instance, the reference group might identify training needs, resources, clinician confidence, time constraints, and supervision as relevant variables to be included in the model. The facilitator then generates a causal loop or stock and flow diagram based on this information. Figure 2 provides an example of a causal loop diagram related to implementation.56 These models can be drawn freehand or created with the aid of model building and simulation software. Third, a mathematical formula is generated to quantify specified parameters. This model building stage requires the expertise of the group model building team; the reference group is consulted only as needed, given the time-consuming and complicated nature of this stage. The model is then ready for simulation of the proposed solution. More information about group model building can be found in Hovmand's text,57 and training and consultation opportunities can be sought through the System Dynamics Society.58

Figure 2. Main feedback loops in an implementation model56.
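To make the third step (quantifying a model and simulating a proposed solution) more concrete, the following is a minimal sketch of a stock and flow simulation. Everything in it is an assumption made for illustration: the single stock (clinicians using the EBP), the reinforcing peer-influence loop, the balancing drift loop, the parameter values, and the function name `simulate` are hypothetical and are not taken from Figure 2 or from any cited study. It uses simple Euler integration rather than dedicated system dynamics software.

```python
def simulate(total=100.0, adopters=5.0, training_rate=0.05, peer_effect=0.3,
             drift_rate=0.10, supervision=0.0, months=36, dt=0.25):
    """Euler simulation of one stock (clinicians using the EBP) with two flows.

    All parameters are hypothetical monthly rates; `supervision` (0 to 1) is the
    assumed proportion by which ongoing supervision reduces drift away from the EBP.
    """
    history = [adopters]
    for _ in range(int(months / dt)):
        non_adopters = total - adopters
        # Inflow: training plus a reinforcing loop (more adopters -> more peer influence).
        adoption = (training_rate + peer_effect * adopters / total) * non_adopters
        # Outflow: a balancing loop in which adopters drift back to usual practice.
        drift = drift_rate * (1.0 - supervision) * adopters
        adopters += dt * (adoption - drift)
        history.append(adopters)
    return history

baseline = simulate()                  # no supervision
supported = simulate(supervision=0.8)  # hypothetical "proposed solution"
print(round(baseline[-1], 1), round(supported[-1], 1))
```

Comparing the two runs is the kind of "what if" exercise that a reference group might use to discuss whether adding supervision is likely to be worth the investment before committing resources.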

How it has been used

Group model building has been used to collaboratively solve problems among stakeholders with the aid of system dynamics experts and implement solutions across for-profit (e.g., oil, electronics, software, insurance, and finance industries), non-profit (e.g., universities, defense research), and governmental (from the city to national level) sectors, as well as between organizations.45 The Office of Behavioral and Social Sciences Research and other leaders in the field have prioritized the development and utilization of systems science methodologies to capture the complexity of threats to public health and to address the science to practice gap.59,60

Example from behavioral health

Huz and colleagues61 used a group model building approach to address gaps in the continuity of vocational rehabilitation services for individuals with severe mental illness. The process consisted of four meetings that occurred over a six-month period. After an initial introductory meeting, the system dynamics simulation model was developed during a two-day conference involving 12 to 18 managers of mental health and rehabilitation services from the involved county, state, and non-profit agencies, as well as one or more client advocates. This model was used to guide discussion and develop an action plan for moving toward a more integrated vocational rehabilitation system. The third and fourth sessions involved the continued exploration of insights from the simulation model, but moved toward the group's strategies for change and their progress toward achieving their action plan. Huz and colleagues61 found that the group model building process was well received by both the modeling team and the target group. More importantly, the process resulted in significant shifts in their approach to integrating care and reduced the group's reliance on “silver bullet” solutions that are not effective in improving care. Participants also became more aligned in their perceptions of systems goals and the strategies that they will use for integrating care.61

Conjoint analysis

Overview

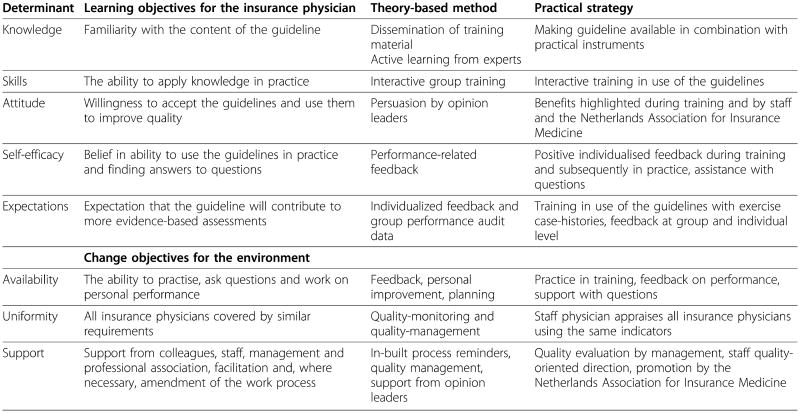

Conjoint analysis is a method used to measure stakeholders' preferences for product (or service, program, implementation strategy, etc.) attributes, to learn how changes in those attributes influence demand, and to forecast the likely acceptance of products that are brought to market.62,63 It is particularly useful in addressing situations in which there are inherent tradeoffs,62 for instance, when implementation strategy X has more support than strategy Y, but strategy Y is less costly than strategy X. Though there are many different types of conjoint analysis,62–65 all of them ask respondents to consider multiple conjoined product features and to rank or select the products that they would be likely to purchase or use.63 In “full-profile” techniques, different products with varying attributes are shown one at a time.62,63 For example, respondents might be presented with a single “card” that has five discrete strategies (e.g., educational materials, training, supervision, fidelity monitoring, and reminders) comprising a single multifaceted strategy, and be asked to rate the strategy on a scale from 0 to 100, where 100 would mean “definitely would use to implement” a given EBP in a given context. In choice-based conjoint or discrete choice experiments,62,66 products are displayed in pairs or sets and respondents are asked to choose the one they are most likely to use or purchase (see Figure 3). Respondents generally complete 12-30 of these questions, which are designed using experimental design principles of independence and balance of the attributes.
Independently varying the attributes shown to respondents and recording their responses to the product profiles affords the opportunity to statistically deduce the product attributes that are most desired and have the most influence on choice.63 Orme67 provides a useful introduction to conjoint analysis for beginners, and Sawtooth Software provides a variety of software packages and resources that users will find helpful and accessible.68

Figure 3. Example item from a study using conjoint analysis69.
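As a rough illustration of how choice data can reveal which attribute levels drive preferences, the sketch below computes simple choice shares: for each attribute level, the proportion of times a profile containing that level was chosen out of the times it was shown. This is only a descriptive first pass, offered under stated assumptions; published studies typically rely on statistical models (e.g., logit-based or hierarchical Bayes estimation) rather than raw counts. The attributes, levels, tasks, and the function name `choice_shares` are hypothetical.

```python
from collections import defaultdict

def choice_shares(tasks):
    """For each (attribute, level), return times chosen / times shown.

    `tasks` is a list of (profiles, pick) pairs, where `profiles` is a list of
    dicts mapping attribute -> level and `pick` is the index of the chosen profile.
    """
    shown = defaultdict(int)
    chosen = defaultdict(int)
    for profiles, pick in tasks:
        for idx, profile in enumerate(profiles):
            for attribute, level in profile.items():
                shown[(attribute, level)] += 1
                if idx == pick:
                    chosen[(attribute, level)] += 1
    return {key: chosen[key] / shown[key] for key in shown}

# Hypothetical paired-choice tasks from one respondent (balanced across levels).
tasks = [
    ([{"workshop_size": "small", "coaching": "all"},
      {"workshop_size": "large", "coaching": "discretionary"}], 0),
    ([{"workshop_size": "small", "coaching": "discretionary"},
      {"workshop_size": "large", "coaching": "all"}], 0),
    ([{"workshop_size": "small", "coaching": "all"},
      {"workshop_size": "large", "coaching": "discretionary"}], 0),
    ([{"workshop_size": "large", "coaching": "all"},
      {"workshop_size": "small", "coaching": "discretionary"}], 0),
]

shares = choice_shares(tasks)
for key in sorted(shares):
    print(key, shares[key])
```

Because each level appears equally often in this toy design, the shares can be compared directly; with unbalanced or correlated attributes, counts of this kind can be misleading, which is one reason experimental design principles matter.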

How it has been used

Conjoint analysis has primarily been used in marketing research to determine the products and features that consumers value most;62 however, as Bridges et al.66 note, it has also been applied to a range of health topics (e.g., cancer, HIV prevention, diabetes). While it is just beginning to be applied in implementation research,8,46,69 conjoint analysis can enhance the rigor of implementation strategy selection and tailoring processes. The examples provided below demonstrate the utility of two types of conjoint analysis: menu-based choice70 and discrete choice experiments.62,66

Examples from behavioral health

Menu-based choice methods are particularly relevant for obtaining stakeholders' insights regarding the types of strategies that should be considered among the many that could potentially be applied.5,8 Menu-based choice methods are useful for generating data that can inform the mass customization of products and services such as automobiles, employee benefit packages, restaurant menus, and banking options.70 Waltz and colleagues8 are using a menu-based choice approach to develop expert recommendations for the strategies that are most appropriate for implementing clinical practice changes within the Department of Veterans Affairs (VA). Expert panelists were given a set of 73 discrete strategies and were tasked with building multifaceted implementation strategies for three different clinical practice changes (measurement-based care for depression, prolonged exposure for posttraumatic stress disorder, and metabolic monitoring for individuals taking antipsychotics). For each practice change, panelists were provided with three different narratives that described relatively weak, moderate, and strong contexts. Each panelist indicated how essential (e.g., absolutely essential, likely essential, likely inessential, absolutely inessential) each discrete strategy would be to successfully implementing the clinical practice at three different phases of implementation (pre-implementation, implementation, and sustainment). 
While the results of this study are forthcoming, the analysis of these data will involve calculating Relative Essentialness Estimates70,71 for each discrete strategy (where 1 represents the highest degree of endorsement and 0 represents the lowest) and generating count-based analyses that will be used to characterize the most commonly selected combinations of essential strategies for each scenario.8 These results will then be provided to the expert panelists to develop final consensus statements about the strategies most appropriate for implementing each practice change in each of the three implementation phases and contexts.

Menu-based choice methods may be most appropriate for selecting strategies, but discrete choice experiments66 may be more helpful in tailoring strategies to specific populations and contexts. Each strategy has a number of modifiable elements; for example, the ICEBeRG Group72 pointed out that what might be seen as a relatively straightforward implementation strategy, audit and feedback, actually has at least five modifiable elements (content, intensity, method of delivery, duration, and context) that may influence its effectiveness. It may be helpful if modifications to these elements are informed by clinicians' preferences. Cunningham and colleagues69 used a discrete choice experiment to determine the relative influence of 16 different attributes of practice changes and implementation strategies on the preferences of 1,010 educators (see Figure 3 for a sample survey question). The attributes included contextual and social attributes (e.g., presenter's qualities, colleague support, compatibility with practice), content attributes (e.g., supporting evidence, focus on knowledge vs. skills, universal vs. targeted), and practice change process attributes (coaching to improve skills, workshop size, training time demands, internet options). Their findings suggested ways in which stakeholders' preferences converged, namely their desire for small-group workshops conducted by engaging experts who would teach skills applicable to all students. Through the use of latent class analysis, the investigators found two different segments of educators (the change ready segment [77%] and the demand sensitive segment [23%]) who expressed different preferences for the design of implementation strategies. The change ready segment was willing to adopt new practice changes and preferred that schools have autonomy in making practice change decisions, that coaching be provided to all participants, and that participants receive post-training follow-up sessions. 
The demand sensitive segment was less intent on practice change, thought that practice changes should be at the discretion of individual teachers, recommended discretionary coaching, and preferred no post-training follow-up support.

Intervention mapping

Overview

Intervention mapping draws upon mixed-methods research, theory, community stakeholder input, and a systematic process for intervention development.73 There are five steps in intervention mapping.47,73,74 First, a needs assessment is conducted to identify determinants (barriers and facilitators) and guide the selection of intervention components. Second, proximal program objectives are specified to produce matrices with multiple cells that contain behavioral or environmental performance objectives, and the determinants of these objectives as identified in the needs assessment. Third, a list of intervention methods that are matched to the proximal program objectives identified in step two is generated. To achieve this, one must brainstorm and delineate methods, and then translate those methods into strategies.74 Fourth, the implementation strategy is designed. To design the implementation strategy, the strategies listed in step three are operationalized in a way that clearly delineates what they entail, as well as how they will be delivered. Program materials such as an implementation intervention manual are designed. Fifth, progress is monitored and evaluated.

The content produced throughout the stages of intervention mapping comprises both the implementation strategy and the tools needed to evaluate the effectiveness of the implementation strategy. The evaluation incorporates mixed methods measurement of both the processes and outcomes of the implementation strategy. There is an additional step of intervention mapping dedicated to adoption and implementation, but this step is not directly applicable here given that this is already a central consideration of implementation research. Bartholomew et al.47 provide a useful text on intervention mapping, and further resources are available through the developers' website.47
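One way to picture the matrices produced in steps two through four is as a small data structure in which each cell pairs a performance objective with a determinant and then accumulates matched methods and operationalized strategies. The sketch below is illustrative only: the class name `Cell`, the helper `unmatched`, and all cell contents are hypothetical and are not drawn from any published intervention mapping project.

```python
from dataclasses import dataclass, field

@dataclass
class Cell:
    """One matrix cell: a performance objective crossed with a determinant."""
    performance_objective: str
    determinant: str
    methods: list = field(default_factory=list)      # theory-based methods (step 3)
    strategies: list = field(default_factory=list)   # operationalized strategies (step 4)

def unmatched(matrix):
    """Return cells that still lack a method or an operationalized strategy."""
    return [cell for cell in matrix if not cell.methods or not cell.strategies]

# Hypothetical matrix for a hypothetical EBP implementation effort.
matrix = [
    Cell("Clinicians deliver the EBP with fidelity", "skills",
         methods=["modeling", "guided practice"],
         strategies=["ongoing consultation", "fidelity monitoring"]),
    Cell("Clinicians deliver the EBP with fidelity", "time constraints",
         methods=["environmental restructuring"],
         strategies=[]),  # no strategy selected yet
    Cell("Supervisors review EBP use in supervision", "knowledge",
         methods=["active learning"],
         strategies=["supervisor training"]),
]

gaps = unmatched(matrix)
for cell in gaps:
    print("Needs a strategy:", cell.performance_objective, "/", cell.determinant)
```

A check of this kind simply makes explicit which determinants identified in the needs assessment have not yet been linked to a strategy, which is the linkage that intervention mapping is intended to make systematic.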

How it has been used

Intervention mapping has been used to develop a number of health-related programs, including those addressing sex education,75 obesity prevention,76 breast and cervical cancer prevention,77 and cardiovascular health.78 While intervention mapping has been proposed as a method for tailoring implementation strategies to identified barriers,22,39 it has not been widely used, with a few notable exceptions.79,80

Example from behavioral health

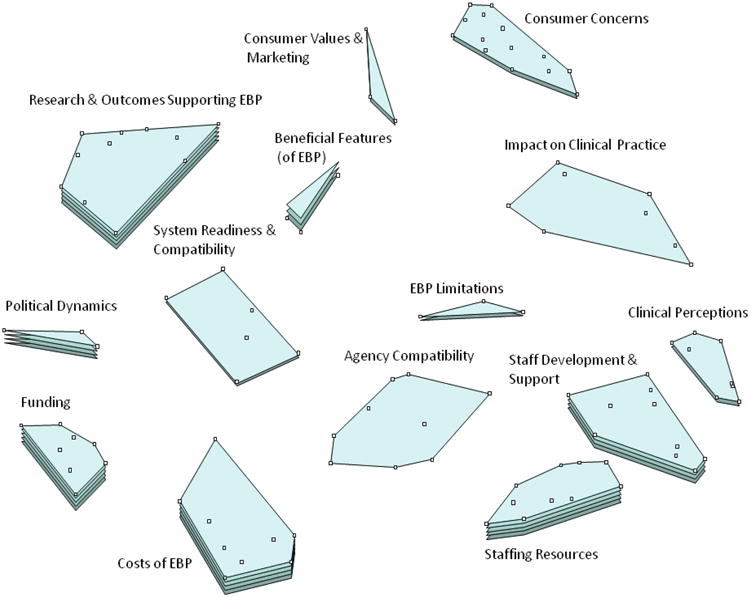

Intervention mapping was recently used in an attempt to increase physicians' adherence to guidelines for depression in the Netherlands.80 The purpose of the guidelines was to ensure that assessments of depression symptoms by physicians were transparent and uniform. Zwerver and colleagues80 provide a detailed account of the intervention mapping process, which resulted in the development of a multifaceted implementation strategy. Figure 4 provides a succinct summary of the associated determinants (barriers and facilitators), learning objectives, theory-based methods, and discrete implementation strategies that were generated. The multifaceted implementation strategy was subsequently tested in a randomized controlled trial, and was found to increase adherence to the guidelines in comparison to implementation as usual.81

Figure 4. Example matrix of determinants, learning objectives, theory-based methods, and implementation strategies80.

Discussion

This article provides an overview of four methods that can be used to select and tailor implementation strategies to local contextual needs, an underdeveloped but critical area in implementation science.4 The discussion focuses on these methods' common strengths and weaknesses, recommendations for comparative effectiveness research on the selection and tailoring of strategies, and suggestions for reporting this type of research. This represents a first step in a research agenda aiming to improve behavioral health services by more systematically and rigorously selecting and tailoring strategies for implementing effective practices.

The methods share a number of strengths. First, all are inherently participatory, engaging diverse stakeholders and, importantly, providing concrete steps or processes for facilitating communication. All four methods, particularly concept mapping and group model building, have the potential to galvanize stakeholders around common goals and create consensus regarding the implementation approach. This emphasis on stakeholder participation is critical.82 Second, these methods all comprise systematic, transparent, replicable processes, which is a step forward for selecting and tailoring implementation strategies, an area previously described as a black box.40 Third, all can represent and respond to the complexity of implementation efforts,83 in that they provide the structure for considering factors at different levels and facilitate the selection and tailoring of strategies to address them.

The methods share a few primary weaknesses. If not informed by theory and evidence, they may be helpful in eliciting preferences but may not provide the supports needed to implement EBPs effectively. For example, in the discrete choice experiment cited above, there was a segment of teachers who believed that practice changes should be at the discretion of individual teachers, that coaching should be discretionary, and that no post-training follow-up support was necessary. This conflicts with evidence that coaching and post-training follow-up are important.84,85 Thus, it would likely be less effective to give teachers the choice to opt out of coaching or follow-up training. Clearly there is a balance to be struck between a paternalistic overemphasis on the research literature and a disregard of evidence. One way of striking this balance is to “seed” the methods with theoretically or empirically justified strategies and attributes, and allow stakeholders to supplement them based upon their expertise and experience. This would bolster the likelihood that selection and tailoring methods are guided by the best available theory and evidence, while preserving the benefits of stakeholder engagement and preference. Another weakness shared by all of the methods is that they require consultation or specific training. Finally, with some notable exceptions,45 there is a dearth of empirical evidence supporting their effectiveness and cost-effectiveness in obtaining improved implementation and clinical outcomes. This last weakness is not surprising given that implementation science is a relatively new field that is gradually moving toward more precise applications of implementation strategies.4,86

Each method holds promise for increasing the rigor of implementation research; however, which method should be used is ultimately an empirical question. It is also possible that some of these methods could be usefully combined. For example, conjoint analysis might be particularly useful for tailoring implementation strategies after strategy selection is completed via one of the other methods (concept mapping, group model building, or intervention mapping). Three types of comparative effectiveness research87 are warranted. First, there is a need for comparisons between implementation strategies that have been selected and tailored to address contextual factors and more generic multifaceted implementation strategies. Although preliminary studies suggest the effectiveness of systematic selection of implementation strategies,88 the evidence is not robust.4,33 Second, there is a need to comparatively test different methods for selecting and tailoring strategies. It will be important to determine which methods are most acceptable and feasible for stakeholders, whether or not they result in similar constellations of implementation strategies, and whether some are more efficient and effective in identifying key contextual factors and matching discrete strategies to address them. Finally, it will be essential to assess the cost-effectiveness of these approaches.89,90 Several ongoing studies will contribute to the evidence base for selecting and tailoring implementation strategies to context-specific factors, including the Tailored Implementation for Chronic Disease research program34 and a study recently funded by the National Institute of Mental Health that will test tailored versus standard approaches to implementing measurement-based care91 for depression (R01MH103310; Lewis, PI).

Publications reporting efforts to select and tailor strategies to address contextual factors should be clear about the methods used for selection and tailoring, the specific discrete strategies selected, and the links between strategies and barriers and other contextual factors. First, researchers should carefully report how they selected and tailored the strategies, and provide descriptions of any systematic methodology (e.g., concept mapping, group model building, etc.) that they may have used. Second, to move the field forward, there is a need for researchers to clearly describe the implementation strategies used in their studies, as the description of implementation strategies in published research has been noted to be very poor.3,92 Proctor and colleagues3 have suggested reporting guidelines for implementation strategies that require researchers to carefully name, conceptually define, and operationalize strategies, and specify them according to features such as: (a) the actor; (b) the action; (c) the action target; (d) temporality; (e) dose; (f) the implementation outcome affected;89 and (g) the empirical, theoretical, or pragmatic justification for use of the strategy.3 Authors may benefit from consulting those guidelines, or others that have recently been set forth,93,94 when reporting the results of implementation studies. Finally, in order to make the links between strategies and the contextual factors that they are intended to address explicit, it may be helpful if researchers use logic models, which could clarify how and why their carefully selected and tailored implementation strategy is expected to work.95,96 These suggestions should lead to more consistent and transparent reporting, and improve the ability to understand how strategies exert their effects.
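
As one illustration of how the reporting elements suggested by Proctor and colleagues3 might be captured in a structured, checkable form, the following is a minimal Python sketch. The class name StrategySpec, its field names, the helper missing_elements, and all example values are hypothetical and chosen only for illustration; they are not part of the published guidelines or of any cited study.

```python
from dataclasses import dataclass, fields
from typing import List, Tuple


@dataclass
class StrategySpec:
    """One discrete implementation strategy, specified for reporting."""
    name: str
    definition: str
    actor: str
    action: str
    action_target: str
    temporality: str
    dose: str
    implementation_outcome: str
    justification: str
    # Barriers or facilitators the strategy is meant to address (hypothetical field).
    determinants_addressed: Tuple[str, ...] = ()


def missing_elements(spec: StrategySpec) -> List[str]:
    """Return the names of reporting elements that were left empty."""
    return [f.name for f in fields(spec) if not getattr(spec, f.name)]


# Hypothetical example values, not drawn from any cited study.
example = StrategySpec(
    name="audit and feedback",
    definition="Summarize clinical performance data and return it to clinicians",
    actor="quality improvement coordinator",
    action="deliver written performance reports",
    action_target="clinicians' awareness of their own guideline adherence",
    temporality="monthly, starting after initial training",
    dose="",  # deliberately left blank to show the completeness check
    implementation_outcome="fidelity",
    justification="empirical",
    determinants_addressed=("limited awareness of current performance",),
)

print(missing_elements(example))  # ['dose']
```

A record of this kind could accompany a logic model, since the determinants_addressed field makes the intended link between each strategy and the contextual factors it targets explicit.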

Implications for Behavioral Health

Improving the quality of behavioral health services has proven to be a challenging task, with barriers and facilitators to change emerging at all levels of the service system.97–110 Both conceptual111 and empirical literature25,112 suggest that these barriers and facilitators must be taken into account and that different strategies may be required to overcome challenges associated with various interventions, implementation contexts, and stages of implementation. Moving toward more contextually sensitive implementation strategies is an exciting step forward for implementation science that holds potential for improving the quality of behavioral health care. The methods presented in this article have the potential to increase the rigor of the selection and tailoring process. While they may not make implementation easier at the outset, these methods provide a step-by-step process for selecting and tailoring implementation strategies and for engaging stakeholders that may be very attractive to researchers and other implementation leaders. These initial investments are also likely to pay off in the long run as implementation failures due to overlooked and unaddressed barriers to improvement are avoided.

These methods are likely not the only ones that could be used to guide selection and tailoring of strategies. There is a need for ongoing dialogue that might lead to the identification of additional avenues for systematically selecting and tailoring strategies. The move toward the more thoughtful and systematic application of implementation strategies will increase the legitimacy of the field by enhancing the scientific rigor of proposed studies, strengthening the evidence base for implementation strategies, and providing support for causal mechanisms and theory. Ultimately, there is reason to hope that the use and evaluation of systematic methods for selecting and tailoring implementation strategies to contextual needs will propel the field toward a greater understanding of how, when, where, and why implementation strategies are effective in integrating evidence-based care and improving clinical outcomes in behavioral health service settings.4

Acknowledgments

This work was supported in part by the National Institutes of Health (F31MH098478 to BJP; K23MH099179 to RSB; R01MH103310 and R01MH106510 to CCL; R01MH092950 and R01MH072961 to GAA; R25MH080916, P30DK092950, U54CA155496, and UL1RR024992 to EKP; and R01MH106175 to DSM) and the Doris Duke Charitable Foundation (through a Fellowship for the Advancement of Child Well-Being to BJP). Additionally, the preparation of this article was supported in part by the Implementation Research Institute (IRI; NIMH R25MH080916). Drs. Aarons and Proctor are IRI faculty; Dr. Beidas was an IRI fellow from 2012 to 2014.

Footnotes

Conflict of Interest: The authors have no conflicts of interest to declare.

Contributor Information

Byron J. Powell, Department of Health Policy and Management, Gillings School of Global Public Health, University of North Carolina at Chapel Hill.

Rinad S. Beidas, Department of Psychiatry, Perelman School of Medicine, University of Pennsylvania.

Cara C. Lewis, Department of Psychological and Brain Sciences, Indiana University, Bloomington.

Gregory A. Aarons, University of California-San Diego.

J. Curtis McMillen, School of Social Service Administration, University of Chicago.

Shanti K. Khinduka, Brown School, Washington University in St. Louis.

David S. Mandell, Department of Psychiatry, Perelman School of Medicine, University of Pennsylvania.

References

- 1. Eccles MP, Mittman BS. Welcome to Implementation Science. Implementation Science. 2006;1(1):1–3. doi: 10.1186/1748-5908-1-1.

- 2. National Institutes of Health. Dissemination and implementation research in health (R01). 2013. Available online at: http://grants.nih.gov/grants/guide/pa-files/PAR-13-055.html. Accessed January 30, 2013.

- 3. Proctor EK, Powell BJ, McMillen JC. Implementation strategies: Recommendations for specifying and reporting. Implementation Science. 2013;8(139):1–11. doi: 10.1186/1748-5908-8-139.

- 4. Mittman BS. Implementation science in health care. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and Implementation Research in Health: Translating Science to Practice. New York: Oxford University Press; 2012. pp. 400–418.

- 5. Powell BJ, McMillen JC, Proctor EK, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Medical Care Research and Review. 2012;69(2):123–157. doi: 10.1177/1077558711430690.

- 6. Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: Results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science. 2015;10(21):1–14. doi: 10.1186/s13012-015-0209-1.

- 7. Michie S, Richardson M, Johnston M, et al. The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: Building an international consensus for the reporting of behavior change interventions. Annals of Behavioral Medicine. 2013;46(1):81–95. doi: 10.1007/s12160-013-9486-6.

- 8. Waltz TJ, Powell BJ, Chinman MJ, et al. Expert recommendations for implementing change (ERIC): Protocol for a mixed methods study. Implementation Science. 2014;9(39):1–12. doi: 10.1186/1748-5908-9-39.

- 9. Cochrane Collaboration. Cochrane Effective Practice and Organisation of Care Group. Available online at: http://epoc.cochrane.org. Accessed April 15, 2013.

- 10. Landsverk J, Brown CH, Rolls Reutz J, et al. Design elements in implementation research: A structured review of child welfare and child mental health studies. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38(1):54–63. doi: 10.1007/s10488-010-0315-y.

- 11. Novins DK, Green AE, Legha RK, et al. Dissemination and implementation of evidence-based practices for child and adolescent mental health: A systematic review. Journal of the American Academy of Child and Adolescent Psychiatry. 2013;52(10):1009–1025. doi: 10.1016/j.jaac.2013.07.012.

- 12. Powell BJ, Proctor EK, Glass JE. A systematic review of strategies for implementing empirically supported mental health interventions. Research on Social Work Practice. 2014;24(2):192–212. doi: 10.1177/1049731513505778.

- 13. Beidas RS, Kendall PC. Training therapists in evidence-based practice: A critical review of studies from a systems-contextual perspective. Clinical Psychology: Science and Practice. 2010;17(1):1–30. doi: 10.1111/j.1468-2850.2009.01187.x.

- 14. Tabak RG, Khoong EC, Chambers DA, et al. Bridging research and practice: Models for dissemination and implementation research. American Journal of Preventive Medicine. 2012;43(3):337–350. doi: 10.1016/j.amepre.2012.05.024.

- 15. Aarons GA, Wells RS, Zagursky K, et al. Implementing evidence-based practice in community mental health agencies: A multiple stakeholder analysis. American Journal of Public Health. 2009;99(11):2087–2095. doi: 10.2105/AJPH.2009.161711.

- 16. Damschroder LJ, Lowery JC. Evaluation of a large-scale weight management program using the consolidated framework for implementation research (CFIR). Implementation Science. 2013;8(51):1–17. doi: 10.1186/1748-5908-8-51.

- 17. Couineau AL, Forbes D. Using predictive models of behavior change to promote evidence based treatment for PTSD. Psychological Trauma: Theory, Research, Practice, and Policy. 2013;3(3):266–275. doi: 10.1037/a0024980.

- 18. French SD, Green SE, O'Connor DA, et al. Developing theory-informed behaviour change interventions to implement evidence into practice: A systematic approach using the Theoretical Domains Framework. Implementation Science. 2012;7(38):1–8. doi: 10.1186/1748-5908-7-38.

- 19. Davies P, Walker AE, Grimshaw JM. A systematic review of the use of theory in the design of guideline dissemination and implementation strategies and interpretation of the results of rigorous evaluations. Implementation Science. 2010;5(14):1–6. doi: 10.1186/1748-5908-5-14.

- 20. Kessler RS, Purcell EP, Glasgow RE, et al. What does it mean to “employ” the RE-AIM model? Evaluation & the Health Professions. 2012;36(1):44–66. doi: 10.1177/0163278712446066.

- 21. Bhattacharyya O, Reeves S, Garfinkel S, et al. Designing theoretically informed implementation interventions: Fine in theory, but evidence of effectiveness in practice is needed. Implementation Science. 2006;1(5):1–3. doi: 10.1186/1748-5908-1-5.

- 22. Proctor EK, Powell BJ, Baumann AA, et al. Writing implementation research grant proposals: Ten key ingredients. Implementation Science. 2012;7(96):1–13. doi: 10.1186/1748-5908-7-96.

- 23. Scheirer MA. Linking sustainability research to intervention types. American Journal of Public Health. 2013;103(4):e73–e80. doi: 10.2105/AJPH.2012.300976.

- 24. Abrahams IA, Ali O, Davidson L, et al. Philadelphia Behavioral Health Services Transformation: Practice Guidelines for Recovery and Resilience Oriented Treatment. I.I. Bloomington, Indiana: AuthorHouse Publishers; 2013. Available online at: http://www.dbhids.org/assets/Forms--Documents/transformation/PracticeGuidelines2013.pdf.

- 25. Isett KR, Burnam MA, Coleman-Beattie B, et al. The state policy context of implementation issues for evidence-based practices in mental health. Psychiatric Services. 2007;58(7):914–921. doi: 10.1176/appi.ps.58.7.914.

- 26. Cabassa LJ, Baumann AA. A two-way street: Bridging implementation science and cultural adaptations of mental health treatments. Implementation Science. 2013;8(90):1–14. doi: 10.1186/1748-5908-8-90.

- 27. Damschroder LJ, Aron DC, Keith RE, et al. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science. 2009;4(50):1–15. doi: 10.1186/1748-5908-4-50.

- 28. Aarons GA, Cafri G, Lugo L, et al. Expanding the domains of attitudes towards evidence-based practice: The Evidence Based Attitudes Scale-50. Administration and Policy in Mental Health and Mental Health Services Research. 2012;39(5):331–340. doi: 10.1007/s10488-010-0302-3.

- 29. Glisson C, Landsverk J, Schoenwald S, et al. Assessing the organizational social context (OSC) of mental health services: Implications for research and practice. Administration and Policy in Mental Health and Mental Health Services Research. 2008;35(1-2):98–113. doi: 10.1007/s10488-007-0148-5.

- 30. Jacobs SR, Weiner BJ, Bunger AC. Context matters: Measuring implementation climate among individuals and groups. Implementation Science. 2014;9(46):1–14. doi: 10.1186/1748-5908-9-46.

- 31. Ganju V. Implementation of evidence-based practices in state mental health systems: Implications for research and effectiveness studies. Schizophrenia Bulletin. 2003;29(1):125–131. doi: 10.1093/oxfordjournals.schbul.a006982.

- 32. Raghavan R, Bright CL, Shadoin AL. Toward a policy ecology of implementation of evidence-based practices in public mental health settings. Implementation Science. 2008;3(26):1–9. doi: 10.1186/1748-5908-3-26.

- 33. Baker R, Cammosso-Stefinovic J, Gillies C, et al. Tailored interventions to overcome identified barriers to change: Effects on professional practice and health care outcomes. Cochrane Database of Systematic Reviews. 2010;(3): Art. No.: CD005470:1–77.

- 34. Wensing M, Oxman A, Baker R, et al. Tailored implementation for chronic diseases (TICD): A project protocol. Implementation Science. 2011;6(103):1–8. doi: 10.1186/1748-5908-6-103.

- 35. Wensing M, Bosch M, Grol R. Selecting, tailoring, and implementing knowledge translation interventions. In: Straus S, Tetroe J, Graham ID, editors. Knowledge Translation in Health Care: Moving from Evidence to Practice. Oxford, UK: Wiley-Blackwell; 2009. pp. 94–113.

- 36. Grol R, Bosch MC, Hulscher MEJ, et al. Planning and studying improvement in patient care: The use of theoretical perspectives. Milbank Quarterly. 2007;85(1):93–138. doi: 10.1111/j.1468-0009.2007.00478.x.

- 37. Flottorp SA, Oxman AD, Krause J, et al. A checklist for identifying determinants of practice: A systematic review and synthesis of frameworks and taxonomies of factors that prevent or enable improvements in healthcare professional practice. Implementation Science. 2013;8(35):1–11. doi: 10.1186/1748-5908-8-35.

- 38. Wensing M, Grol R. Methods to identify implementation problems. In: Grol R, Wensing M, Eccles M, editors. Improving Patient Care: The Implementation of Change in Clinical Practice. Edinburgh, Scotland: Elsevier; 2005. pp. 109–120.

- 39. Grol R, Bosch M, Wensing M. Development and selection of strategies for improving patient care. In: Grol R, Wensing M, Eccles M, Davis D, editors. Improving Patient Care: The Implementation of Change in Health Care. 2nd ed. Chichester: John Wiley & Sons, Inc.; 2013. pp. 165–184.

- 40. Bosch M, van der Weijden T, Wensing M, et al. Tailoring quality improvement interventions to identified barriers: A multiple case analysis. Journal of Evaluation in Clinical Practice. 2007;13:161–168. doi: 10.1111/j.1365-2753.2006.00660.x.

- 41. Green AE, Fettes DL, Aarons GA. A concept mapping approach to guide and understand dissemination and implementation. Journal of Behavioral Health Services & Research. 2012;39(4):362–373. doi: 10.1007/s11414-012-9291-1.

- 42. Kane M, Trochim WMK. Concept Mapping for Planning and Evaluation. Thousand Oaks, CA: Sage; 2007.

- 43. Vennix JAM. Group model-building: Tackling messy problems. System Dynamics Review. 1999;15(4):379–401.

- 44. Vennix JAM. Group Model Building: Facilitating Team Learning. Chichester: Wiley; 1996.

- 45. Rouwette EAJA, Vennix JAM, van Mullekom T. Group model building effectiveness: A review of assessment studies. System Dynamics Review. 2002;18(1):4–45. doi: 10.1002/sdr.229.

- 46. Farley K, Thompson C, Hanbury A, et al. Exploring the feasibility of conjoint analysis as a tool for prioritizing innovations for implementation. Implementation Science. 2013;8(56):1–9. doi: 10.1186/1748-5908-8-56.

- 47. Bartholomew LK, Parcel GS, Kok G, et al. Planning Health Promotion Programs: An Intervention Mapping Approach. San Francisco, CA: Jossey-Bass, Inc.; 2011.

- 48. Concept Systems Global, Inc. Concept Systems Global, Inc. 2015. Available online at: http://www.conceptsystems.com. Accessed March 9, 2015.

- 49. Concept Systems, Inc. Publications in concept mapping methodology. Concept Systems Inc.; 2013. Available online at: http://www.conceptsystems.com/content/view/publications.html. Accessed July 15, 2013.

- 50. Lobb R, Pinto AD, Lofters A. Using concept mapping in the knowledge-to-action process to compare stakeholder opinions on barriers to use of cancer screening among South Asians. Implementation Science. 2013;8(37):1–12. doi: 10.1186/1748-5908-8-37.

- 51. Shern D, Trochim W, Christina L. The use of concept mapping for assessing fidelity of model transfer: An example from psychiatric rehabilitation. Evaluation and Program Planning. 1995;18(2):143–153. doi: 10.1016/0149-7189(95)00005-V.

- 52. Vinson CA. Using concept mapping to develop a conceptual framework for creating virtual communities of practice to translate cancer research into practice. Preventing Chronic Disease: Public Health Research, Practice and Policy. 2014;11:e68. doi: 10.5888/pcd11.130280.

- 53. Green AE, Aarons GA. A comparison of policy and direct practice stakeholder perceptions of factors affecting evidence-based practice implementation using concept mapping. Implementation Science. 2011;6(104):1–12. doi: 10.1186/1748-5908-6-104.

- 54. Rouwette EAJA, Vennix JAM. Group model building. In: Meyers RA, editor. Complex Systems in Finance and Econometrics. New York: Springer; 2011. pp. 484–496.

- 55. Richardson GP, Anderson DF. Teamwork in group model-building. System Dynamics Review. 1995;11(2):113–137.

- 56. Hovmand PS, Gillespie DF. Implementation of evidence-based practice and organizational performance. Journal of Behavioral Health Services & Research. 2010;37(1):79–94. doi: 10.1007/s11414-008-9154-y.

- 57. Hovmand PS. Community Based System Dynamics. New York: Springer; 2014.

- 58. System Dynamics Society. System Dynamics Society. 2015. Available online at: http://www.systemdynamics.org. Accessed March 8, 2015.

- 59. Holmes BJ, Finegood DT, Riley BL, et al. Systems thinking in dissemination and implementation research. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and Implementation Research in Health: Translating Science to Practice. New York: Oxford University Press; 2012. pp. 175–191.

- 60. Office of Behavioral and Social Science Research. Systems Science. 2014. Available online at: http://obssr.od.nih.gov/scientific_areas/methodology/systems_science/. Accessed October 12, 2014.

- 61. Huz S, Anderson DF, Richardson GP, et al. A framework for evaluating systems thinking interventions: An experimental approach to mental health system change. System Dynamics Review. 1997;13(2):149–169.

- 62. Green PE, Krieger AM, Wind Y. Thirty years of conjoint analysis: Reflections and prospects. Interfaces. 2001;31(3):S56–S73. doi: 10.1287/inte.31.3s.56.9676.

- 63. Sawtooth Software. What is conjoint analysis? Sawtooth Software; 2014. Available online at: http://www.sawtoothsoftware.com/products/conjoint-choice-analysis/conjoint-analysissoftware. Accessed October 9, 2014.

- 64. Orme BK. Which Conjoint Method Should I Use? Sequim, Washington: Sawtooth Software, Inc.; 2009. pp. 1–7.

- 65. Qualtrics. A brief explanation of the types of conjoint analysis. 2014. Available online at: http://www.qualtrics.com/wp-content/uploads/2012/09/ConjointAnalysisExp.pdf. Accessed October 10, 2014.

- 66. Bridges JFP, Hauber AB, Marshall D, et al. Conjoint analysis applications in health-a checklist: A report of the ISPOR good research practices for conjoint analysis task force. Value in Health. 2011;14(4):403–413. doi: 10.1016/j.jval.2010.11.013.

- 67. Orme BK. Getting Started with Conjoint Analysis: Strategies for Product Design and Pricing Research. Madison, WI: Research Publishers; 2010.

- 68. Sawtooth Software. Sawtooth Software. 2015. Available online at: http://www.sawtoothsoftware.com. Accessed March 9, 2015.

- 69. Cunningham CE, Barwick M, Short K, et al. Modeling the mental health practice change preferences of educators: A discrete-choice conjoint experiment. School Mental Health. 2014;6(1):1–14. doi: 10.1007/s12310-013-9110-8.

- 70. Orme BK. Menu-Based Choice (MBC) for Multi-Check Choice Experiments. Orem, UT: Sawtooth Software, Inc.; 2012. Available online at: http://www.sawtoothsoftware.com/download/mbcbooklet.pdf. Accessed June 17, 2015.

- 71. Johnson RB, Orme B, Pinnell J. Simulating market preference with “build your own” data. Sawtooth Software Conference Proceedings; Delray Beach, FL. 2006; pp. 239–253.

- 72. The Improved Clinical Effectiveness through Behavioural Research Group (ICEBeRG). Designing theoretically-informed implementation interventions. Implementation Science. 2006;1(4):1–8. doi: 10.1186/1748-5908-1-4.

- 73. Bartholomew LK, Parcel GS, Gottlieb NH. Intervention Mapping: Designing Theory and Evidence-Based Health Promotion Programs. Mountain View, CA: Mayfield; 2001.

- 74. Bartholomew LK, Parcel GS, Kok G. Intervention mapping: A process for developing theory and evidence-based health education programs. Health Education & Behavior. 1998;25(5):545–563. doi: 10.1177/109019819802500502.

- 75. Schaafsma D, Stoffelen JMT, Kok G, et al. Exploring the development of existing sex education programmes for people with intellectual disabilities: An intervention mapping approach. Journal of Applied Research in Intellectual Disabilities. 2013;26(2):157–166. doi: 10.1111/jar.12017.

- 76. Belansky ES, Cutforth N, Chavez R, et al. Adapted intervention mapping: A strategic planning process for increasing physical activity and healthy eating opportunities in schools via environment and policy change. Journal of School Health. 2013;83(3):194–205. doi: 10.1111/josh.12015.

- 77. Fernández ME, Gonzales A, Tortolero-Luna G, et al. Using intervention mapping to develop a breast and cervical cancer screening program for Hispanic farmworkers: Cultivando la salud. Health Promotion Practice. 2005;6(4):394–404. doi: 10.1177/1524839905278810.

- 78. Mani H, Daly H, Barnett J, et al. The development of a structured education programme to improve cardiovascular risk in women with polycystic ovary syndrome (SUCCESS Study). Endocrine Abstracts. 2013;31:228. doi: 10.1530/endoabs.31.P228.

- 79. Jabbour M, Curran J, Scott SD, et al. Best strategies to implement clinical pathways in an emergency department setting: Study protocol for a cluster randomized controlled trial. Implementation Science. 2013;8(55):1–11. doi: 10.1186/1748-5908-8-55.

- 80. Zwerver F, Schellart AJM, Anema JR, et al. Intervention mapping for the development of a strategy to implement the insurance medicine guidelines for depression. BMC Health Services Research. 2011;11(9):1–12. doi: 10.1186/1471-2458-11-9.

- 81. Zwerver F, Schellart AJM, Knol DL, et al. An implementation strategy to improve the guideline adherence of insurance physicians: An experiment in a controlled setting. Implementation Science. 2011;6(131):1–10. doi: 10.1186/1748-5908-6-131.

- 82. Chambers DA, Azrin ST. Partnership: A fundamental component of dissemination and implementation research. Psychiatric Services. 2013;64(16):509–511. doi: 10.1176/appi.ps.201300032.

- 83. Institute of Medicine. The State of Quality Improvement and Implementation Research: Workshop Summary. Washington, DC: The National Academies Press; 2007.

- 84. Beidas RS, Edmunds JM, Marcus SC, et al. Training and consultation to promote implementation of an empirically supported treatment: A randomized trial. Psychiatric Services. 2012;63(7):660–665. doi: 10.1176/appi.ps.201100401.

- 85. Herschell AD, Kolko DJ, Baumann BL, et al. The role of therapist training in the implementation of psychosocial treatments: A review and critique with recommendations. Clinical Psychology Review. 2010;30(4):448–466. doi: 10.1016/j.cpr.2010.02.005.

- 86. Proctor EK, Landsverk J, Aarons GA, et al. Implementation research in mental health services: An emerging science with conceptual, methodological, and training challenges. Administration and Policy in Mental Health and Mental Health Services Research. 2009;36(1):24–34. doi: 10.1007/s10488-008-0197-4.

- 87. Institute of Medicine. Initial National Priorities for Comparative Effectiveness Research. Washington, DC: The National Academies Press; 2009.

- 88. Waxmonsky J, Kilbourne AM, Goodrich DE, et al. Enhanced fidelity to treatment for bipolar disorder: Results from a randomized controlled implementation trial. Psychiatric Services. 2014;65(1):81–90. doi: 10.1176/appi.ps.201300039.

- 89. Proctor EK, Silmere H, Raghavan R, et al. Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38(2):65–76. doi: 10.1007/s10488-010-0319-7.

- 90. Raghavan R. The role of economic evaluation in dissemination and implementation research. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and Implementation Research in Health: Translating Science to Practice. New York: Oxford University Press; 2012. pp. 94–113.

- 91. Scott K, Lewis CC. Using measurement-based care to enhance any treatment. Cognitive and Behavioral Practice. 2015;22(1):49–59. doi: 10.1016/j.cbpra.2014.01.010.

- 92. Michie S, Fixsen DL, Grimshaw JM, et al. Specifying and reporting complex behaviour change interventions: The need for a scientific method. Implementation Science. 2009;4(40):1–6. doi: 10.1186/1748-5908-4-40.

- 93. Albrecht L, Archibald M, Arseneau D, et al. Development of a checklist to assess the quality of reporting of knowledge translation interventions using the Workgroup for Intervention Development and Evaluation Research (WIDER) recommendations. Implementation Science. 2013;8(52):1–5. doi: 10.1186/1748-5908-8-52.

- 94. Davidoff F, Batalden P, Stevens D, et al. Publication guidelines for quality improvement in health care: Evolution of the SQUIRE project. Quality & Safety in Health Care. 2008;17(Supplement 1):i3–i9. doi: 10.1136/qshc.2008.029066.

- 95. Goeschel CA, Weiss WM, Pronovost PJ. Using a logic model to design and evaluate quality and patient safety improvement programs. International Journal for Quality in Health Care. 2012;24(4):330–337. doi: 10.1093/intqhc/mzs029.

- 96. W. K. Kellogg Foundation. Logic Model Development Guide: Using Logic Models to Bring Together Planning, Evaluation, and Action. Battle Creek, Michigan: W. K. Kellogg Foundation; 2004.

- 97. Baker-Ericzen MJ, Jenkins MM, Haine-Schlagel R. Therapist, parent, and youth perspectives of treatment barriers to family-focused community outpatient mental health services. Journal of Child & Family Studies. 2013;22(6):854–868. doi: 10.1007/s10826-012-9644-7.

- 98. Bartholomew NG, Joe GW, Rowan-Szal GA, et al. Counselor assessments of training and adoption barriers. Journal of Substance Abuse Treatment. 2007;33(2):193–199. doi: 10.1016/j.jsat.2007.01.005.

- 99. Brunette MF, Asher D, Whitley R, et al. Implementation of integrated dual disorders treatment: A qualitative analysis of facilitators and barriers. Psychiatric Services. 2008;59(9):989–995. doi: 10.1176/ps.2008.59.9.989.

- 100. Cook JM, Biyanova T, Coyne JC. Barriers to adoption of new treatments: An internet study of practicing community psychotherapists. Administration and Policy in Mental Health and Mental Health Services Research. 2009;36(2):83–90. doi: 10.1007/s10488-008-0198-3.

- 101. Forman SG, Olin SS, Hoagwood KE, et al. Evidence-based interventions in schools: Developers' views of implementation barriers and facilitators. School Mental Health. 2009;1(1):26–36. doi: 10.1007/s12310-008-9002-5.

- 102. Langley AK, Nadeem E, Kataoka SH, et al. Evidence-based mental health programs in schools: Barriers and facilitators of successful implementation. School Mental Health. 2010;2(3):105–113. doi: 10.1007/s12310-010-9038-1.

- 103. Pagoto SL, Spring B, Coups EJ, et al. Barriers and facilitators of evidence-based practice perceived by behavioral science health professionals. Journal of Clinical Psychology. 2007;63(7):695–705. doi: 10.1002/jclp.20376.

- 104. Powell BJ, Hausmann-Stabile C, McMillen JC. Mental health clinicians' experiences of implementing evidence-based treatments. Journal of Evidence-Based Social Work. 2013;10(5):396–409. doi: 10.1080/15433714.2012.664062.

- 105. Powell BJ, McMillen JC, Hawley KM, et al. Mental health clinicians' motivation to invest in training: Results from a practice-based research network survey. Psychiatric Services. 2013;64(8):816–818. doi: 10.1176/appi.ps.003602012.

- 106. Raghavan R. Administrative barriers to the adoption of high-quality mental health services for children in foster care: A national study. Administration and Policy in Mental Health and Mental Health Services Research. 2007;34(3):191–201. doi: 10.1007/s10488-006-0095-6.

- 107. Rapp CA, Etzel-Wise D, Marty D, et al. Barriers to evidence-based practice implementation: Results of a qualitative study. Community Mental Health Journal. 2010;46(2):112–118. doi: 10.1007/s10597-009-9238-z.

- 108. Shapiro CJ, Prinz RJ, Sanders MR. Facilitators and barriers to implementation of an evidence-based parenting intervention to prevent child maltreatment: The Triple P-Positive Parenting Program. Child Maltreatment. 2012;17(1):86–95. doi: 10.1177/1077559511424774.

- 109. Stein BD, Celedonia KL, Kogan JN, et al. Facilitators and barriers associated with implementation of evidence-based psychotherapy in community settings. Psychiatric Services. 2013;64(12):1263–1266. doi: 10.1176/appi.ps.201200508.

- 110. Whitley R, Gingerich S, Lutz WJ, et al. Implementing the illness management and recovery program in community mental health settings: Facilitators and barriers. Psychiatric Services. 2009;60(2):202–209. doi: 10.1176/ps.2009.60.2.202.

- 111. Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38(1):4–23. doi: 10.1007/s10488-010-0327-7.

- 112. Magnabosco JL. Innovations in mental health services implementation: A report on state level data from the U.S. evidence-based practices project. Implementation Science. 2006;1(13):1–11. doi: 10.1186/1748-5908-1-13.