Abstract

Autism spectrum disorders (ASD) are characterized by difficulties in social cognition, but are also associated with atypicalities in sensory and perceptual processing. Several groups have reported that autistic individuals show reduced integration of socially relevant audiovisual signals, which may contribute to the higher-order social and cognitive difficulties observed in autism. Here we use a newly devised technique to study instantaneous adaptation to audiovisual asynchrony in autism. Autistic and typical participants were presented with sequences of brief visual and auditory stimuli, varying in asynchrony over a wide range, from 512 ms auditory-lead to 512 ms auditory-lag, and judged whether they seemed to be synchronous. Typical adults showed strong adaptation effects, with trials preceded by an auditory-lead needing more auditory-lead to seem simultaneous, and vice versa. However, autistic observers showed little or no adaptation, although their simultaneity curves were as narrow as those of the typical adults. This result supports recent Bayesian models that predict reduced adaptation effects in autism. As rapid audiovisual recalibration may be fundamental for the optimisation of speech comprehension, recalibration problems could render language processing more difficult in autistic individuals, hindering social communication.

Autism is a heritable, lifelong neurodevelopmental condition with striking effects on social communication. The condition is also associated with a range of non-social features, including both hypersensitivity and hyposensitivity to perceptual stimuli, and sensory seeking behaviours such as attraction to light, intense looking at objects and fascination with brightly coloured objects. These sensory atypicalities, which now form part of the diagnostic criteria for autism1, can have debilitating effects on the lives of autistic people and their families2,3.

To create a unified percept of the world, the brain has to combine multiple sources of sensory information into one coherent multisensory percept. This is particularly important for speech, which in noisy environments is easier to understand when the speaker’s lip movements can be observed4. Combining visual and auditory signals is not a trivial task, given both the difference in the transmission speed of light and sound and variable neural delays5. To keep the auditory and visual information temporally aligned, the brain must continually recalibrate. There is a good deal of evidence for active recalibration: after repeated exposure to audiovisual asynchrony, synchronous signals appear asynchronous in the other direction6,7,8,9. Although most adaptation studies use prolonged exposure to asynchronous audiovisual signals, Van der Burg et al.10 have introduced a new technique showing that adaptation to asynchronous audiovisual events occurs more rapidly than was previously thought, even with audiovisual speech11. They presented participants with a stream of audiovisual events with variable asynchrony and asked them to judge whether each appeared synchronous. Perceived synchrony depended on the temporal order of the preceding stimulus, demonstrating instantaneous adaptation after a single presentation (see also12).

Difficulties in multisensory processing, particularly early in development, might lead to problems in social and adaptive behaviour and interpersonal interactions that occur in autism. Some evidence for such difficulties comes from studies on multisensory integration of speech and emotions, perceived from the face and the voice13,14,15,16,17,18,19. These studies suggest that autistic people have specific problems with audiovisual integration of social and emotional stimuli, which could contribute to their social difficulties. In general, these studies show that autistic people have poorer multisensory temporal acuity, a finding that typically manifests as a broadening of their multisensory temporal binding window13,20,21,22,23. However, it appears that multisensory binding is affected primarily for linguistic stimuli, with relative sparing of low-level integration16; and significant group differences in audiovisual ERPs occur in the time window when spoken words begin to be processed for meaning17. Only one study has shown differences with simple, non-linguistic stimuli: autistic participants use audiovisual synchrony to a lesser extent to aid in “pop-out” visual search tasks24. Given the importance of multisensory interaction for communication, it is possible that atypical multisensory integration could underlie some of the perceptual and cognitive differences associated with autism.

We have recently proposed a Bayesian account of autism25 to explain such differences, suggesting that it is not sensory processing itself that is disrupted in autism, but the interpretation of the sensory input. The Bayesian class of theories – including predictive coding and other generative models26,27,28 – assumes that perception is an optimized combination of external sensory data (the likelihood) and an internal model (the prior). We suggested that this process may be atypical in autism, in that the internal priors are under-weighted, that is, less utilized than in typical individuals. Our theory has been followed by several others along similar lines29,30,31,32,33.
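The combination of likelihood and prior can be illustrated with a minimal sketch. It assumes that both are Gaussian, a common textbook simplification rather than a model specified in the text, and all numbers are hypothetical. Under that assumption each source is weighted by its reliability (inverse variance), so a broader, under-weighted prior leaves the estimate closer to the sensory data.

```python
def posterior_mean(sensory, sensory_sd, prior_mean, prior_sd):
    """Mean of the posterior for a Gaussian likelihood and a Gaussian prior.

    Each source is weighted by its reliability (inverse variance).
    """
    w_sensory = 1.0 / sensory_sd ** 2
    w_prior = 1.0 / prior_sd ** 2
    return (w_sensory * sensory + w_prior * prior_mean) / (w_sensory + w_prior)


# Hypothetical numbers: a measurement of 100 (arbitrary units), prior centred on 0.
typical = posterior_mean(100.0, sensory_sd=10.0, prior_mean=0.0, prior_sd=10.0)
weak_prior = posterior_mean(100.0, sensory_sd=10.0, prior_mean=0.0, prior_sd=30.0)

print(typical)     # equal reliabilities: the estimate lies halfway, at about 50
print(weak_prior)  # broader prior: the estimate stays near the data, at about 90
```

In this toy case, tripling the standard deviation of the prior moves the estimate from the midpoint to within 10% of the raw measurement.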

The suggestion of under-utilization of priors leads to several specific predictions. One strong prediction is that autistic individuals should show reduced adaptation aftereffects; and much data shows reduced adaptation in autism for high-level features, both social (e.g., faces34) and non-social (e.g., numerosity35). Adaptation helps to improve neuronal efficiency by dynamically tuning its responses to match the distribution of stimuli to make maximal use out of the limited working range of the system36,37,38. Failure to continuously adapt to the current environment should lead to inefficiencies, including the transmission of redundant information, which could have profound effects for how an individual might perceive and interpret incoming sensory information.

In this study we examine whether autism is also associated with reduced adaptation for multisensory stimuli. Specifically, we test whether adaptation to simple, non-linguistic audiovisual asynchrony is reduced in autistic people, using the robust and rapid technique of Van der Burg et al.10. We find robust recalibration in typical adults, very similar to published results10, but our autistic participants showed no trial-wise recalibration.

Results

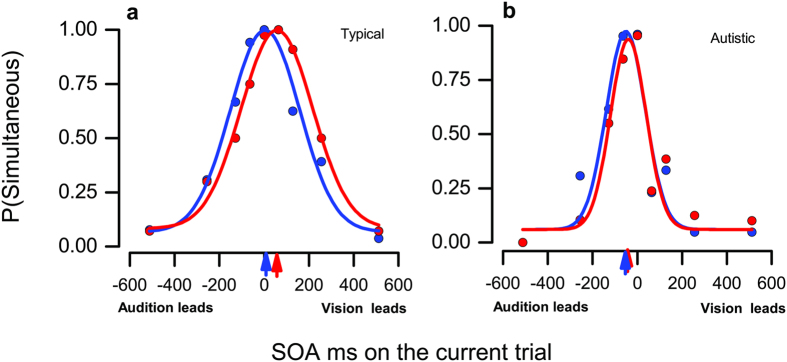

We measured dynamic adaptation to audiovisual stimuli in 16 autistic adults and 16 typical adults, asking them to judge whether a briefly flashed white ring appeared perceptually simultaneous with an auditory tone. The ring and tone were presented with variable asynchrony between ±512 ms (see Methods). For each participant, we separated the data into trials where the sound led in the previous trial (blue symbols, Fig. 1), and those where it lagged (red symbols), ignoring trials preceded by zero delay. The proportion of trials judged simultaneous was plotted as a function of stimulus onset asynchrony (SOA), and fit by a Gaussian free to vary in mean and standard deviation. Figure 1 shows the procedure for representative typical (a) and autistic (b) participants. The typical adult shows the adaptation effect, with the simultaneity judgments of the audio-lead trials (blue) being fit by a Gaussian centred about 53 ms more negative than that of the auditory-lag trials (red). That is, if preceded by an audio-lead stimulus, the audio in the current stimulus had to lead vision by more than on the other trials to be perceived as simultaneous, implying a negative adaptation aftereffect. However, for the autistic adult, the curves were superimposed, with no measurable displacement.

Figure 1. Effect of previous trial on simultaneity judgments.

Proportion of trials judged to be simultaneous as a function of Stimulus Onset Asynchrony (SOA) for two representative participants, one typical adult (a), one autistic adult (b). Negative SOAs indicate that the tone preceded the luminance onset, positive SOAs that the tone followed the luminance onset. The data were divided into those where audition leads in the previous trial (blue symbols) and those where it lags (red symbols), ignoring trials preceded by simultaneous audiovisual stimuli. Colour-coded curves are best-fitting Gaussian distributions, free to vary in mean (giving an estimate of PSS) and standard deviation (estimating simultaneity bandwidth).
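The analysis described above (split trials by the sign of the previous SOA, compute the proportion judged simultaneous at each SOA, fit a Gaussian) can be sketched as follows. This is an illustrative sketch, not the authors' code. It assumes a Gaussian with its peak fixed at 1, since the text specifies only that mean and standard deviation were free. It replaces a proper optimiser with an exhaustive grid search to stay dependency-free. The simulated observer and all function names are hypothetical.

```python
import math
import random

SOAS = [-512, -256, -128, -64, 0, 64, 128, 256, 512]  # ms, negative = sound first


def gaussian(soa, mean, sd):
    """Gaussian with peak fixed at 1 (an assumption; only mean and SD are free)."""
    return math.exp(-((soa - mean) ** 2) / (2.0 * sd ** 2))


def split_by_previous(trials):
    """Split (soa, judged_simultaneous) trials by the sign of the previous SOA.

    The first trial and trials preceded by zero delay are ignored.
    """
    after_lead, after_lag = [], []
    for (prev_soa, _), current in zip(trials, trials[1:]):
        if prev_soa < 0:
            after_lead.append(current)
        elif prev_soa > 0:
            after_lag.append(current)
    return after_lead, after_lag


def proportions(trials):
    """Proportion of 'simultaneous' responses at each SOA that was presented."""
    counts = {}
    for soa, simultaneous in trials:
        n, k = counts.get(soa, (0, 0))
        counts[soa] = (n + 1, k + int(simultaneous))
    return {soa: k / n for soa, (n, k) in sorted(counts.items())}


def fit_gaussian(props):
    """Least-squares fit by grid search over mean (2 ms steps) and SD (5 ms steps)."""
    best = None
    for mean in range(-200, 201, 2):
        for sd in range(40, 401, 5):
            sse = sum((p - gaussian(soa, mean, sd)) ** 2 for soa, p in props.items())
            if best is None or sse < best[0]:
                best = (sse, mean, sd)
    return best[1], best[2]  # (PSS estimate, bandwidth estimate)


# Simulated observer (hypothetical parameters): the PSS depends on the previous trial.
rng = random.Random(1)
trials, prev = [], 0
for _ in range(4000):
    soa = rng.choice(SOAS)
    true_pss = -50 if prev < 0 else (0 if prev > 0 else -25)
    trials.append((soa, rng.random() < gaussian(soa, true_pss, 150)))
    prev = soa

lead, lag = split_by_previous(trials)
pss_lead, _ = fit_gaussian(proportions(lead))
pss_lag, _ = fit_gaussian(proportions(lag))
# Sign convention (lag minus lead) is an assumption chosen so the effect is positive.
print("adaptation effect (ms):", pss_lag - pss_lead)
```

With real data, a continuous optimiser would normally replace the grid search; the grid is used here only to keep the sketch self-contained.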

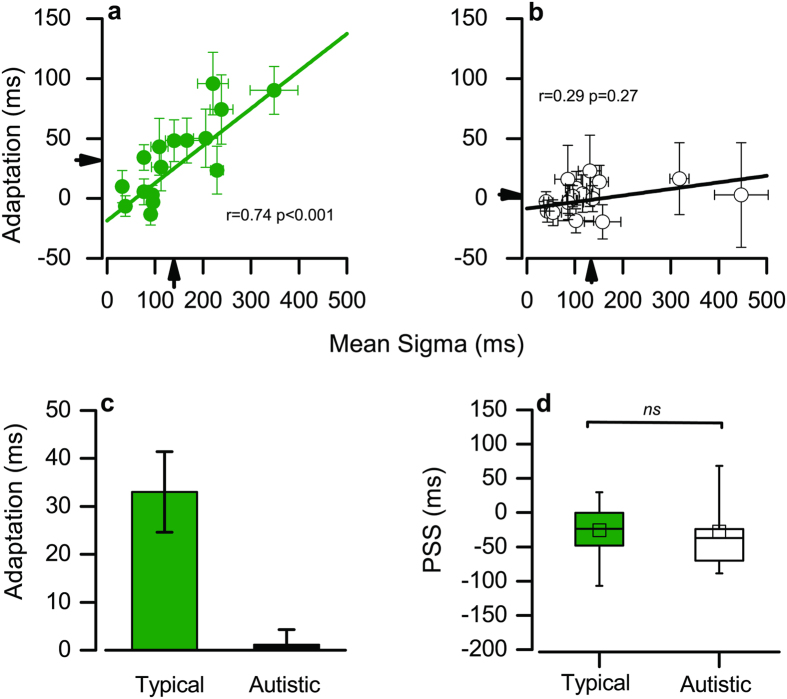

Figure 2 shows the adaptation effect (difference between the means of the fitted Gaussians for auditory-lead and auditory-lag) for each individual, plotted against their simultaneity bandwidths (standard deviation of the Gaussian fitted to all trials). While both groups have a similar range of simultaneity bandwidths (arrows indicate means; t(30) = 0.21, p = 0.83, two-tailed), only the typical adults show strong adaptation effects (see arrows on ordinate, and bar graphs of Fig. 2c; t(30) = 3.54, p = 0.001, two-tailed). When we remove the two autistic participants with especially high bandwidths (see Fig. 2b), there is still no significant group difference in participants’ bandwidths (t(28) = 1.71, p = 0.10, two-tailed).

Figure 2. Adaptation effect, point of perceived simultaneity and simultaneity bandwidths.

(a,b) The adaptation effect (the difference in PSS for trials preceded by audio-lead and those by audio-lag) as a function of the simultaneity bandwidth (standard deviation of Gaussian fitted to all data), for typical (a) and autistic (b) adults. Data were bootstrapped to estimate standard errors, indicated by the bars. (c) Mean adaptation effect for the two groups. (d) PSSs for each group, given by the mean of the Gaussian functions fitted to all data of each participant. The box refers to the 25th and 75th percentiles, the whiskers to the 5th and 95th percentiles.
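The bootstrapped standard errors mentioned in the caption can be sketched as below. The text does not give the resampling details, so this assumes that individual trials are resampled with replacement. To keep the example short it also swaps the Gaussian fit for a simpler stand-in PSS estimator (the mean SOA of trials judged simultaneous). The records and function names are hypothetical.

```python
import random
import statistics


def bootstrap_se(data, statistic, n_boot=500, seed=0):
    """Standard error of `statistic`, estimated by resampling `data` with replacement."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_boot):
        resample = rng.choices(data, k=len(data))
        estimates.append(statistic(resample))
    return statistics.stdev(estimates)


def adaptation_effect(records):
    """PSS after audio-lag minus PSS after audio-lead.

    Stand-in PSS estimator (not the Gaussian fit used in the paper): the mean
    SOA of the trials judged simultaneous within each subset.
    """
    lead = [soa for prev, soa, simultaneous in records if prev < 0 and simultaneous]
    lag = [soa for prev, soa, simultaneous in records if prev > 0 and simultaneous]
    return statistics.fmean(lag) - statistics.fmean(lead)


# Hypothetical records: (previous SOA, current SOA, judged simultaneous?)
records = (
    [(-128, soa, True) for soa in (-128, -64, 0)] * 20
    + [(128, soa, True) for soa in (-64, 0, 64)] * 20
    + [(-128, 256, False), (128, -256, False)] * 20
)

effect = adaptation_effect(records)
se = bootstrap_se(records, adaptation_effect)
print("effect (ms):", effect, "bootstrap SE (ms):", se)
```

Any estimator can be passed as `statistic`, so the same helper would work with a Gaussian-fit PSS in place of the stand-in.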

As Van der Burg et al.10 observed, for the typical adults, there is a robust correlation between strength of adaptation and simultaneity bandwidth, with participants with broader simultaneity bandwidth showing greater adaptation effects (r = 0.74, p < 0.001). However, for the autistic adults, there was no measurable relationship (r = 0.29, p = 0.27).
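For reference, the correlation coefficient used here can be computed as in the sketch below, assuming a standard Pearson correlation (the text does not name the variant). The t statistic with n − 2 degrees of freedom is the usual test of r against zero. The data values are hypothetical and are not taken from the study.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t value with n - 2 degrees of freedom for testing r against zero."""
    return r * math.sqrt((n - 2) / (1.0 - r ** 2))


# Hypothetical per-participant values: bandwidth (ms) and adaptation effect (ms).
bandwidth = [120, 150, 180, 210, 240]
adaptation = [10, 25, 30, 50, 55]

r = pearson_r(bandwidth, adaptation)
print("r =", round(r, 3), "t =", round(t_statistic(r, len(bandwidth)), 2))
```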

Figure 2d shows the PSSs (points of subjective simultaneity) for typical and autistic participants, given by the means of Gaussians fitted to all data from each participant. The mean for both groups is about −25 ms, implying that the auditory stimulus had to lead by 25 ms for the two to appear simultaneous. There is considerable spread in PSS within both groups, covering a similar range (see box and whisker plot), but no significant difference between the groups (t(30) = 0.1, p = 0.92, two-tailed).

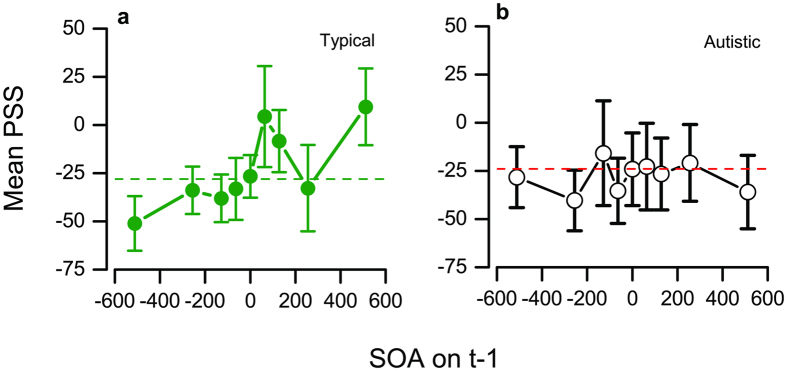

Figure 3 examines how PSS varies as a function of the SOA on trial t−1 (again calculated separately for each participant, then averaged). For the typical group, there was a clear dependency on SOA, F(8,120) = 2.38, p = 0.02, but none for the autistic group, F(8,120) = 0.60, p = 0.77. It is evident that the dependency on previous trials occurs principally when the previous trial had a very large lead or lag, at ±512 ms.

Figure 3. Effect of SOA of previous trial on point of subjective simultaneity.

Mean PSS (calculated separately for each participant, then averaged) as a function of SOA of the previous trial, for the typical group (a) and autistic group (b).

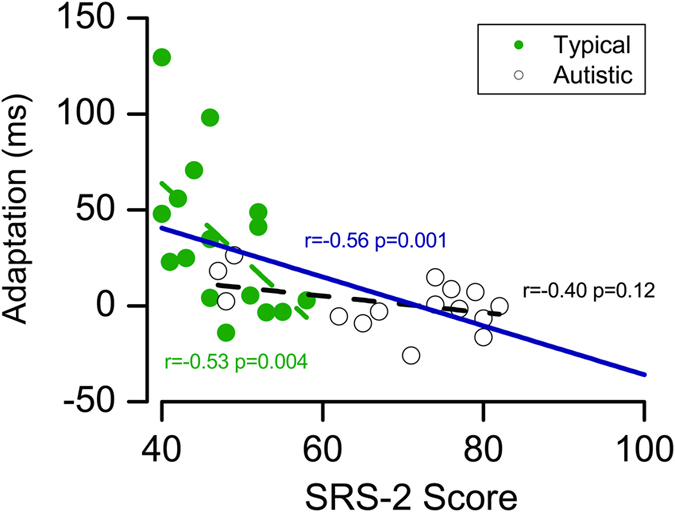

Figure 4 plots the magnitude of the adaptation effect against SRS-2 scores. Considering all participants, the correlation was strong and significant (r = −0.56, p = 0.001). Interestingly, the correlation was also significant within the typical group (r = −0.53, p = 0.04), showing that reduced adaptation co-varies with autistic traits, even for individuals not diagnosed with autism. Within the autistic group, there was a non-significant trend (r = −0.40, p = 0.12). Given that the adaptation for all the autistic participants was close to zero, the lack of significance most likely reflects a floor effect.

Figure 4. Relationship between adaptation and Social Responsiveness Scale.

Adaptation effect (the difference in PSS for trials preceded by audio-lead and those by audio-lag) as a function of Social Responsiveness Scale (SRS-2) score for all individuals (typical: green; autistic: black). The colour-coded broken lines show the correlations within each group, the continuous navy line the correlation between all participants. Full (blue dashed line) and partial correlation between recalibration effect and autistic traits measured by SRS-2 in the typical (green dashed line) and autistic (black dashed line) group.

Discussion

The results clearly show that autistic adults do not rapidly adapt to audiovisual asynchrony, although matched typical adults showed robust adaptation. Furthermore, while the strength of adaptation in typical adults correlated strongly with the bandwidth of the perceived audiovisual synchrony window, there was no such correlation in the autistic group.

These results fit well with recent Bayesian models of autism25,29,32,33 that predict that individuals with autism should give less weighting to prior or predictive information and have reduced adaptation effects. While the mechanisms of adaptation may not be fully understood, adaptation is one of the clearest examples of transient neural plasticity, where the output of perceptual processes depends not only on the current stimuli, but on the immediate history. Many models link adaptation effects to Bayesian prediction39,40, suggesting that priors may serve as standards for self-calibration, which is the function of adaptation. Atypicalities in the prior in autistic individuals – either in its construction or use as a calibration standard – should impact on the magnitude of adaptation.

Previous research has demonstrated reduced adaptation in autism to faces34 and to number35, but not to more basic properties such as motion and shape aftereffects41,42. Similarly, in audition, autistic participants show reduced “simple loudness adaptation”, thought to be mediated by the central auditory system, but normal “induced loudness adaptation”, thought to be mediated by the peripheral auditory system43. It seems that reduced adaptation for high-level attributes is a general property of autistic perception. Both face and number perception are complex but important perceptual processes, where adaptation is thought to serve an important recalibration function44,45. Audiovisual synchrony must also involve higher-level processes, after the separate processing of auditory and visual signals.

There is considerable evidence that integration of audiovisual linguistic stimuli is atypical in autism13,14,15,16,17,18,19, but the underlying neural mechanisms for the atypicalities are not understood. Observing lip movement helps speech understanding, but only if the two are synchronized4. Detecting audiovisual synchrony is therefore fundamental for integration of audiovisual linguistic stimuli. This is a complex task for the nervous system. As both signals are subject to variable delays, it is not sufficient simply to judge which stimulus arrived first at a given neural station; constant recalibration is necessary to deal with the variability in timing. There is much evidence for audiovisual recalibration in typical adults, both after lengthy presentations of asynchronous stimuli6,7,8,9, and even after a single, brief exposure10,11,12,46.

Here we show that autistic adults do not show the rapid adaptation effects that we reliably reproduce in typical adults. There was no measurable adaptation in our group of autistic participants, despite using a procedure identical to that used for the typical participants and in previous studies10. Furthermore, there was a strong correlation between adaptation strength and scores on the Social Responsiveness Scale (SRS-2). This correlation was driven mainly by the typical adults, suggesting that an association between lack of rapid adaptation and autistic traits exists, even for individuals below the diagnosis threshold. The lack of correlation within the autism group probably results from saturation of the effect: adaptation was near zero for the entire group.

Importantly, apart from the lack of rapid adaptation, there were no obvious differences between the two groups in the perceived synchrony task. The mean point of subjective synchrony was very similar for the two groups, both about −25 ms (audio-lead), with comparable spread of PSSs between individuals. The widths of the simultaneity window were also similar for the two groups, both the mean and the variability: the only difference was that in the group of autistic adults, the widths did not correlate with adaptation strength. All of this suggests that, despite differences in rapid adaptation, autistic people face no gross problems in perceiving synchrony of non-linguistic audiovisual signals. Our results are in line with studies reporting no significant difference in the width of the simultaneity window in autistic people using non-linguistic stimuli13,18,23. Perhaps they have other mechanisms involved in the conscious perception of audiovisual synchrony; or perhaps other calibration mechanisms are involved, operating over a longer timescale. It would be interesting to measure perceived synchrony under variable conditions, such as distance and luminance, both of which should affect neural synchrony. Interestingly, the only other evidence of autism-related differences in audiovisual integration with non-linguistic stimuli is the reduced audiovisual “pop-out” in visual search24, where temporal coincidence in sounds and visual targets makes them more detectable. Perhaps this task relies on rapid calibration mechanisms.

Although the lack of rapid recalibration seems to have no gross consequences for audiovisual synchrony judgements of simple, non-linguistic stimuli in autistic adults, it may have more subtle consequences, which could be important, for example, for speech perception. There are indications that this may be the case. While most studies have found no problems in audiovisual integration of simple stimuli13,18, there is both psychophysical and electrophysiological evidence that autism is associated with reduced audiovisual integration in speech perception16,17. It may be that one factor contributing to the reduced audiovisual speech integration and lip-reading abilities19 is the failure to keep audiovisual synchrony calibrated. As the integration of lip movements and speech is greatest when the inputs are synchronized, rapidly aligning temporally offset auditory and visual streams after brief asynchrony would be extremely beneficial. For example, rapid recalibration would ensure that an audiovisual speech stream received from a source at any distance could be rapidly realigned to maximize speech comprehension.

In the case of reduced adaptation in autism, as shown here, the efficiency that would be gained from processing multiple sensory signals as a single percept driven by recalibration would be lost, resulting in less efficient sensory processing overall. Further investigation is needed to better understand the neural substrates for this mechanism and their relationship with audiovisual integration in speech perception.

Methods

Participants

We tested 16 autistic adults and 16 typical adults (with no current or past medical or psychiatric diagnosis), matched for age (t(30) = 1.38, p = 0.17, two-tailed), gender and intellectual functioning (t(30) = −0.44, p = 0.65, two-tailed) (see Table 1). All autistic adults had received a clinical diagnosis of autism or Asperger’s disorder according to Diagnostic and Statistical Manual of Mental Disorders, Fourth Edition (DSM-IV) criteria1 from an independent clinician, and also met criteria for an autism spectrum disorder (ASD) on the Autism Diagnostic Observation Schedule – 2nd edition (ADOS-2)47. No participant had a medical or developmental disorder (other than ASD) or was on medication, and all reported normal visual acuity and hearing. All participants completed the Social Responsiveness Scale – 2nd Edition (SRS-2)48, a quantitative measure of autistic traits. Participants were seen individually in a quiet room at the university. All procedures were approved by the regional ethics committee at the Azienda Ospedaliero-Universitaria Meyer. The participants gave their informed consent prior to their participation in accordance with the institutionally approved guidelines.

Table 1. Descriptive statistics for developmental variables for autistic and typical adults.

| | Autistic adults | Neurotypical adults |
|---|---|---|
| N | 16 | 16 |
| Gender (male: female) | 12: 4 | 13: 3 |
| Age (years) | | |
| Mean (SD) | 29.2 (5.2) | 27.1 (2.83) |
| Range | 17–34 | 18–31 |
| Full-Scale IQa | | |
| Mean (SD) | 112.0 (10.32) | 112.7 (11.44) |
| Range | 89–129 | 94–139 |
| ADOS-2b | | |
| Mean (SD) | 8.56 (2.03) | – |
| Range | 7–13 | – |
| SRS-2c | | |
| Mean (SD) | 69.37 (12.07) | 47.31 (5.61) |
| Range | 47–74 | 40–58 |

Stimuli and Procedure

Stimuli were generated with the Psychophysics Toolbox49 and presented at a viewing distance of 57 cm on a 23” LCD Acer monitor (resolution = 1920 × 1080 pixels; refresh rate = 60 Hz; mean luminance = 60 cd/m2), driven by a Macintosh laptop. The visual stimulus was a white ring (radius 2.6°; width 0.4°), presented around a white fixation cross on a black background (60 cd/m2) for a duration of 50 ms. The auditory stimulus was a 500 Hz tone of 50 ms duration, presented via headphones (Sennheiser HD201). Each trial started with the white fixation cross on a black background for 1 s, followed by an audiovisual stimulus of variable delay, randomly 0, ±64, ±128, ±256 or ±512 ms, where negative means the auditory stimulus was presented first. Participants did not judge temporal order; rather, they were instructed to judge (by corresponding key press) whether the sound and visual display appeared to be synchronous or not. Participants performed 40 trials for each temporal condition in four sessions, giving a total of 400 trials. Fixation was monitored by the experimenters (two were present for all testing sessions).
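The stimulus sizes above are given in degrees of visual angle. A rough conversion to screen units at the 57 cm viewing distance is sketched below. It assumes square pixels and that the quoted 23 inches is the diagonal of the visible display area; neither is stated in the text. The function names are hypothetical.

```python
import math

VIEWING_DISTANCE_CM = 57.0
DIAGONAL_INCH = 23.0         # assumed to be the visible diagonal
RES_X, RES_Y = 1920, 1080    # pixels


def eccentricity_to_cm(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """Distance from fixation (cm on the screen) of a point at `angle_deg` eccentricity."""
    return distance_cm * math.tan(math.radians(angle_deg))


def pixels_per_cm():
    """Pixel density, assuming square pixels across the whole visible diagonal."""
    diagonal_px = math.hypot(RES_X, RES_Y)
    return diagonal_px / (DIAGONAL_INCH * 2.54)


ring_radius_cm = eccentricity_to_cm(2.6)
ring_radius_px = ring_radius_cm * pixels_per_cm()
print(round(ring_radius_cm, 2), "cm, about", round(ring_radius_px), "pixels")
```

At 57 cm, one degree of visual angle corresponds to almost exactly 1 cm on the screen, which is why this viewing distance is a common choice.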

Additional Information

How to cite this article: Turi, M. et al. No rapid audiovisual recalibration in adults on the autism spectrum. Sci. Rep. 6, 21756; doi: 10.1038/srep21756 (2016).

Acknowledgments

We thank all the participants who kindly gave up their time to take part in this study, and Anna Remington, Jake Fairnie and Angelica Brasacchio for their help in recruiting and testing. This work was supported by a grant from the United Kingdom’s Medical Research Council (MR/J013145/1) and European Union Grants Framework 7 - European Research Council (FP7- ERC) “Space Time and Number in The Brain (STANIB)” and “Early cortical sensory plasticity and adaptability in human adults (ESCPLAIN).” Research at the Centre for Research in Autism and Education is also supported by The Clothworkers’ Foundation and Pears Foundation.

Footnotes

Author Contributions M.T., D.C.B. and E.P. designed research; M.T. and T.K. performed research; M.T., D.C.B., T.K. and E.P. analyzed data; and M.T., D.C.B. and E.P. wrote the paper.

References

- American Psychiatric Association. Diagnostic and statistical manual of mental disorders (5th ed.) (American Psychiatric Publishing, Arlington, VA, 2013).

- Bagby M. S., Dickie V. A. & Baranek G. T. How Sensory Experiences of Children With and Without Autism Affect Family Occupations. American Journal of Occupational Therapy 66, 78–86, doi: 10.5014/Ajot.2012.000604 (2012).

- Williams D. Somebody somewhere: breaking free from the world of autism. 1st edn, (Times Book, 1994).

- Sumby W. H. & Pollack I. Visual Contribution to Speech Intelligibility in Noise. The Journal of the Acoustical Society of America 26, 212–215, doi: 10.1121/1.1907309 (1954).

- King A. J. & Palmer A. R. Integration of visual and auditory information in bimodal neurones in the guinea-pig superior colliculus. Exp Brain Res 60, 492–500 (1985).

- Fujisaki W., Shimojo S., Kashino M. & Nishida S. Recalibration of audiovisual simultaneity. Nat Neurosci 7, 773–778, doi: 10.1038/nn1268 (2004).

- Navarra J., Hartcher-O’Brien J., Piazza E. & Spence C. Adaptation to audiovisual asynchrony modulates the speeded detection of sound. Proc Natl Acad Sci USA 106, 9169–9173, doi: 10.1073/pnas.0810486106 (2009).

- Roseboom W. & Arnold D. H. Twice upon a time: multiple concurrent temporal recalibrations of audiovisual speech. Psychol Sci 22, 872–877, doi: 10.1177/0956797611413293 (2011).

- Vroomen J., Keetels M., de Gelder B. & Bertelson P. Recalibration of temporal order perception by exposure to audio-visual asynchrony. Brain Res Cogn Brain Res 22, 32–35, doi: 10.1016/j.cogbrainres.2004.07.003 (2004).

- Van der Burg E., Alais D. & Cass J. Rapid recalibration to audiovisual asynchrony. J Neurosci 33, 14633–14637, doi: 10.1523/JNEUROSCI.1182-13.2013 (2013).

- Van der Burg E. & Goodbourn P. T. Rapid, generalized adaptation to asynchronous audiovisual speech. Proc Biol Sci 282, 20143083, doi: 10.1098/rspb.2014.3083 (2015).

- Wozny D. R. & Shams L. Recalibration of auditory space following milliseconds of cross-modal discrepancy. J Neurosci 31, 4607–4612, doi: 10.1523/JNEUROSCI.6079-10.2011 (2011).

- Bebko J. M., Weiss J. A., Demark J. L. & Gomez P. Discrimination of temporal synchrony in intermodal events by children with autism and children with developmental disabilities without autism. J Child Psychol Psychiatry 47, 88–98, doi: 10.1111/j.1469-7610.2005.01443.x (2006).

- Charbonneau G. et al. Multilevel alterations in the processing of audio–visual emotion expressions in autism spectrum disorders. Neuropsychologia 51, 1002–1010, doi: 10.1016/j.neuropsychologia.2013.02.009 (2013).

- de Gelder B., Vroomen J. & van der Heide L. Face recognition and lip-reading in autism. European Journal of Cognitive Psychology 3, 69–86, doi: 10.1080/09541449108406220 (1991).

- Magnée M. J. C. M., De Gelder B., Van Engeland H. & Kemner C. Audiovisual speech integration in pervasive developmental disorder: evidence from event-related potentials. Journal of Child Psychology and Psychiatry 49, 995–1000, doi: 10.1111/j.1469-7610.2008.01902.x (2008).

- Megnin O. et al. Audiovisual speech integration in autism spectrum disorders: ERP evidence for atypicalities in lexical-semantic processing. Autism Res 5, 39–48, doi: 10.1002/aur.231 (2012).

- Mongillo E. A. et al. Audiovisual processing in children with and without autism spectrum disorders. J Autism Dev Disord 38, 1349–1358, doi: 10.1007/s10803-007-0521-y (2008).

- Smith E. G. & Bennetto L. Audiovisual speech integration and lipreading in autism. Journal of Child Psychology and Psychiatry 48, 813–821, doi: 10.1111/j.1469-7610.2007.01766.x (2007).

- de Boer-Schellekens L., Eussen M. & Vroomen J. Diminished sensitivity of audiovisual temporal order in autism spectrum disorder. Frontiers in Integrative Neuroscience 7, 8, doi: 10.3389/fnint.2013.00008 (2013).

- Foss-Feig J. H. et al. An extended multisensory temporal binding window in autism spectrum disorders. Exp Brain Res 203, 381–389, doi: 10.1007/s00221-010-2240-4 (2010).

- Kwakye L. D., Foss-Feig J. H., Cascio C. J., Stone W. L. & Wallace M. T. Altered auditory and multisensory temporal processing in autism spectrum disorders. Front Integr Neurosci 4, 129, doi: 10.3389/fnint.2010.00129 (2011).

- Stevenson R. A. et al. Multisensory temporal integration in autism spectrum disorders. J Neurosci 34, 691–697, doi: 10.1523/JNEUROSCI.3615-13.2014 (2014).

- Collignon O. et al. Reduced multisensory facilitation in persons with autism. Cortex 49, 1704–1710, doi: 10.1016/j.cortex.2012.06.001 (2013).

- Pellicano E. & Burr D. When the world becomes ‘too real’: a Bayesian explanation of autistic perception. Trends Cogn Sci 16, 504–510, doi: 10.1016/j.tics.2012.08.009 (2012).

- Kersten D., Mamassian P. & Yuille A. Object perception as Bayesian inference. Annual review of psychology 55, 271–304, doi: 10.1146/annurev.psych.55.090902.142005 (2004).

- Knill D. C. & Pouget A. The Bayesian brain: the role of uncertainty in neural coding and computation. Trends in neurosciences 27, 712–719, doi: 10.1016/j.tins.2004.10.007 (2004).

- Mamassian P., Landy M. & Maloney L. In Probabilistic Models of the Brain: Perception and Neural Function (eds Rao R., Olshausen B., & Lewicki M.) (Bradford Books, 2002).

- Friston K. J., Lawson R. & Frith C. D. On hyperpriors and hypopriors: comment on Pellicano and Burr. Trends Cogn Sci 17, 1, doi: 10.1016/j.tics.2012.11.003 (2013).

- Lawson R. P., Rees G. & Friston K. J. An aberrant precision account of autism. Frontiers in Human Neuroscience 8, doi: 10.3389/fnhum.2014.00302 (2014).

- Van de Cruys S., de-Wit L., Evers K., Boets B. & Wagemans J. Weak priors versus overfitting of predictions in autism: Reply to Pellicano and Burr (TICS, 2012). Iperception 4, 95–97, doi: 10.1068/i0580ic (2013).

- Sinha P. et al. Autism as a disorder of prediction. Proceedings of the National Academy of Sciences 111, 15220–15225, doi: 10.1073/pnas.1416797111 (2014).

- Rosenberg A., Patterson J. S. & Angelaki D. E. A computational perspective on autism. Proceedings of the National Academy of Sciences 112, 9158–9165, doi: 10.1073/pnas.1510583112 (2015).

- Pellicano E., Jeffery L., Burr D. & Rhodes G. Abnormal adaptive face-coding mechanisms in children with autism spectrum disorder. Curr Biol 17, 1508–1512, doi: 10.1016/j.cub.2007.07.065 (2007).

- Turi M. et al. Children with autism spectrum disorder show reduced adaptation to number. Proc Natl Acad Sci U S A 112, 7868–7872, doi: 10.1073/pnas.1504099112 (2015).

- Barlow H. Conditions for versatile learning, Helmholtz’s unconscious inference and the task of perception. Vision Res 30, 1561–1571 (1990).

- Clifford C. W. et al. Visual adaptation: neural, psychological and computational aspects. Vision Res 47, 3125–3131, doi: 10.1016/j.visres.2007.08.023 (2007).

- Webster M. A., Werner J. S. & Field D. J. In Advances in Cognition Series: Vol. 2. Fitting the Mind to the World: Adaptation and Aftereffects in High Level Vision (eds Clifford C. & Rhodes G.) 241–277 (Oxford University Press, 2005).

- Chopin A. & Mamassian P. Predictive properties of visual adaptation. Curr Biol 22, 622–626, doi: 10.1016/j.cub.2012.02.021 (2012).

- Stocker A. A. & Simoncelli E. P. Sensory adaptation within a Bayesian framework for perception. In Advances in Neural Information Processing Systems (eds Weiss Y., Schoelkopf B. & Platt J.) (2006).

- Pellicano E., Rhodes G., Jeffery L. & Burr D. Children with autism spectrum disorder show typical adaptation to motion. Manuscript in preparation (2015).

- Karaminis T. et al. Atypicalities in Perceptual Adaptation in Autism Do Not Extend to Perceptual Causality. PLoS ONE 10, e0120439, doi: 10.1371/journal.pone.0120439 (2015).

- Lawson R. P., Aylward J., White S. & Rees G. A striking reduction of simple loudness adaptation in autism. Sci Rep 5, 16157, doi: 10.1038/srep16157 (2015).

- Clifford C. W. G. & Rhodes G. Fitting the mind to the world: adaptation and after-effects in high-level vision. 1st edn, (Oxford University Press, 2005).

- Rhodes G. & Jeffery L. Adaptive norm-based coding of facial identity. Vision Res 46, 2977–2987, doi: 10.1016/j.visres.2006.03.002 (2006).

- Van der Burg E., Orchard-Mills E. & Alais D. Rapid temporal recalibration is unique to audiovisual stimuli. Exp Brain Res 233, 53–59, doi: 10.1007/s00221-014-4085-8 (2015).

- Lord C. et al. Autism Diagnostic Observation Schedule, Second Edition (ADOS-2). (Western Psychological Services, 2012).

- Constantino J. N. & Gruber C. P. Social Responsiveness Scale, Second Edition. (Western Psychological Services, 2012).

- Brainard D. H. The Psychophysics Toolbox. Spat Vis 10, 433–436 (1997).

- Wechsler D. Wechsler Abbreviated Scale of Intelligence. (The Psychological Corporation: Harcourt Brace & Company, 1999).