Significance

Our study introduces a conceptual framework and empirical approach to explore how knowledge impacts decision-making. We illustrate this approach with knowledge about ecosystem services (ES), but the approach itself can be applied broadly. Our results indicate that the legitimacy of knowledge (i.e., perceived as unbiased and representative of multiple points of view) is of paramount importance for impact. More surprisingly, we found that credibility of knowledge is not a significant predictor of impact. To enhance legitimacy, ES researchers must engage meaningfully with decision-makers and stakeholders in processes of knowledge coproduction that incorporate diverse perspectives transparently. Our results indicate how research can be designed and carried out to maximize the potential impact on real-world decisions.

Keywords: ecosystem services, science policy interface, conservation, knowledge systems, boundary organizations

Abstract

Research about ecosystem services (ES) often aims to generate knowledge that influences policies and institutions for conservation and human development. However, we have limited understanding of how decision-makers use ES knowledge or what factors facilitate use. Here we address this gap and report on, to our knowledge, the first quantitative analysis of the factors and conditions that explain the policy impact of ES knowledge. We analyze a global sample of cases where similar ES knowledge was generated and applied to decision-making. We first test whether attributes of ES knowledge themselves predict different measures of impact on decisions. We find that legitimacy of knowledge is more often associated with impact than either the credibility or salience of the knowledge. We also examine whether predictor variables related to the science-to-policy process and the contextual conditions of a case are significant in predicting impact. Our findings indicate that, although many factors are important, attributes of the knowledge and aspects of the science-to-policy process that enhance legitimacy best explain the impact of ES science on decision-making. Our results are consistent with both theory and previous qualitative assessments in suggesting that the attributes and perceptions of scientific knowledge and process within which knowledge is coproduced are important determinants of whether that knowledge leads to action.

The ongoing loss of biological diversity and persistence of poverty have sparked interest in policies that protect, restore, and enhance ecosystem services (ES). In response, there has been a growth in ES research that aims to inform policies, incentives, and institutions on a large scale (1–3). Despite this goal, scientific knowledge about ES continues to have limited impact on policy and decisions (1, 4–7).

The fact that most land- and resource-use policy decisions still do not take ES into account stems in part from an ineffective interface between ES science and policy, a need for scientists to better understand decision-making processes, and challenges in clarifying conflicting stakeholder values (8–11). In some cases, the science–policy interface is an important aspect of decision-making, but often the ES research and policy communities are disconnected from one another, with limited interactions, infrequent exchanges of information, and different objectives that hinder coordinated science and policy processes (12). Many scientists conduct ES research without fully considering how the knowledge they are producing might be used (5). If we want ES information to be incorporated into decisions, then we need to understand how and why decision-makers use certain kinds of information. We define “information” as a tangible, factual output of scientific research produced through specific ES analyses; “knowledge” as a body of information learned and conveyed through scientific and policy processes; and “knowledge systems” as knowledge itself, as well as the individuals, groups (including boundary organizations), and processes involved in producing, distributing, and using knowledge.

Much of the evidence for how and why ES knowledge influences policy decisions is anecdotal. A few recent studies have focused on this issue with qualitative, in-depth case studies (13–15). To more generally understand this issue, however, we also need quantitative, empirical research into how and why ES knowledge has an impact on decisions (5, 7, 13, 14). This topic is an understudied area of research, not least because empirical data on impacts from replicate cases are difficult to compile.

Here we report on a quantitative approach to understanding the factors and conditions that affect the impact of ES knowledge on decision-making. More carefully examining the relationships between impacts and enabling conditions helps us better understand why an ES approach may generate impacts on decisions and why it may not.

Scientific understanding of the factors that explain impact will benefit those who produce ES knowledge (i.e., by illuminating effective strategies for enhancing knowledge use) as well as decision-makers (i.e., by increasing the likelihood that they will receive useful ES knowledge). Cash et al. (16) identify salience, credibility, and legitimacy of knowledge as important enabling conditions for linking sustainability knowledge to action. “Salience” refers to the relevance of scientific knowledge to the needs of decision-makers; “credibility” comes from scientific and technical arguments being trustworthy and expert-based; and “legitimacy” refers to knowledge that is produced in an unbiased way and that fairly considers stakeholders’ different points of view. Their framework has inspired researchers to investigate these three attributes and how they affect decision-makers using knowledge (17–21).

Others focus on process rather than content of environmental management and policy (22–25). They describe the importance of joint fact-finding and iterative engagement among scientists and policymakers. These processes can affect the attributes of the knowledge—for example, a process of meaningful consultation and coproduction with all relevant stakeholders is necessary to create knowledge that is perceived as legitimate.

Yet another branch of research focuses on contextual conditions: the institutions, governance, and culture of places where environmental policy is successful. Haas et al. (26) and Wunder et al. (27) note institutional capacity to monitor environmental conditions and enforce rules as critical to effective science-based policies.

We organize these theoretical perspectives into three categories of enabling conditions for ES knowledge to lead to action (Table 1). The first category contains variables related to attributes of the scientific knowledge produced. These variables describe the relationships that people have with the ES knowledge. The second category includes variables that focus on characteristics of the process through which science informs decisions and policy. The third category contains variables that reflect contextual conditions of the project or place in which it is located. These categories are interrelated—for example, the scientific process helps to determine how the resulting knowledge is perceived. This association is particularly true in our study for legitimacy—an attribute of knowledge that depends strongly on the process of knowledge production.

Table 1.

Enabling conditions that facilitate the success of ES projects, as suggested by qualitative reviews of projects

| Attributes of knowledge | Process | Contextual conditions | Reference |
|---|---|---|---|
| Clear science about ES, interactions between services, and how proposed actions may affect services | A confined system with clearly identified stewards, perpetrators of negative impacts, and service beneficiaries | Good governance in terms of clearly defined ownership or tenure, a legal system, capacity to enforce laws and monitor impacts, and a functioning infrastructure to support projects | 25 |
| A clear policy question; A clear presentation of methods, assumptions, and limitations | Strong stakeholder engagement; Effective communications and access to decision-makers | Good governance; Local demand for valuation; Economic dependence on resources; High levels of threats to coastal resources | 51 |
| Policy question; Pertinent data; Integration of local and traditional knowledge | Meaningful participation and engagement with diverse groups; Joint knowledge production; Iterative process; Scenario development | Capacity to measure ES; Established planning process; Policy window | 13, 24, and 28 |

Drawing from these theoretical frameworks about linking knowledge with action, we test quantitatively which enabling conditions can explain the impact of ES science across a global sample of 15 cases. We focus primarily on the attributes of the knowledge produced, but also consider aspects of the science-to-policy process and contextual conditions. In so doing, we address the question, “What explains the impact of ES knowledge on decisions?” We hypothesize the following:

- H1: Higher levels of salience, credibility, and legitimacy of ES knowledge are associated with higher measures of impact; and

- H2: Attributes of the knowledge produced are more significant in explaining impact than aspects of the science-to-policy process or contextual conditions.

Methods

To examine whether certain factors and conditions predict impact, we sought a sample of cases in which similar scientific tools and approaches were used, but different levels of impact were achieved. We used a global sample of case studies from the Natural Capital Project, in which a standardized scientific tool, InVEST (Integrated Valuation of Environmental Services and Trade-offs), was applied to decisions with the aim of improving conservation, human development, and environmental planning outcomes (Table S1). The Natural Capital Project was formed in 2006 by the World Wildlife Fund (WWF), the Nature Conservancy, Stanford University, and the University of Minnesota, under the premise that information on biodiversity and ES can be used to inform decisions that improve human well-being and the condition of ecosystems (28). InVEST is a suite of software models that can be used to map, quantify, and value ES (29–31).

Table S1.

The sample of 15 global cases in which InVEST was used in a policy decision context

| No. | Location | Decision context | Organizations of survey respondents |
|---|---|---|---|
| 1 | Belize | Spatial planning | Coastal Zone Management Authority and Institute (national government) |
| 2 | Canada - West Coast Vancouver Island | Spatial planning | West Coast Aquatic (board with representation from local and provincial government, nine First Nations, conservation NGOs, and businesses) |
| 3 | Colombia - Cauca Valley Water Fund | Water funds | Cauca Valley Water Fund, The Nature Conservancy |
| 4 | Colombia - Cesar Department | Permitting & mitigation | The Nature Conservancy |
| 5 | China - Baoxing County, Hainan Island, Upper Yangtze River Basin | Spatial planning for Ecosystem Function Conservation Areas | Chinese Academy of Sciences, The Natural Capital Project |
| 6 | Himalayas (Bhutan, Nepal, India) | Spatial planning | WWF Eastern Himalayas Program |
| 7 | Latin America Water Funds Platform | Water funds | The Nature Conservancy |
| 8 | Indonesia - Borneo | Spatial planning, policy advocacy | WWF Indonesia |
| 9 | Indonesia - Sumatra | Spatial planning, Strategic Environmental Assessment | WWF Indonesia |
| 10 | Tanzania - Eastern Arc Mountains | PES & REDD planning, policy advocacy | Valuing the Arc project at Cambridge University and WWF |
| 11 | United States - Ft. Lewis-McChord and Ft. Pickett | Spatial planning for military installation activities | US Army and Air Force (Department of Defense) |
| 12 | United States - Galveston Bay in Texas | Hazard management, spatial planning, climate adaptation | SSPEED (Severe Storm Prediction, Education and Evacuation from Disasters) Center led by Rice University, The Nature Conservancy marine science |
| 13 | United States - Monterey Bay in California | Climate adaptation | Santa Cruz County, Moss Landing Marine Labs of California State Universities |
| 14 | United States - North Shore of Oʻahu in Hawaiʻi | Spatial planning | Kamehameha Schools (private land-owner) |
| 15 | Virungas Landscape - DRC, Uganda, Rwanda | Permitting & mitigation, Strategic Environmental Assessment | Institute of Tropical Forest Conservation |

Each case represents a data point in the analysis. Ruckelshaus et al. (28) discuss the cases in more detail.

To measure the predictor variables such as salience, credibility, and legitimacy, we sent an electronic survey to decision-makers and boundary organization contacts in 25 of the demonstration sites presented in ref. 28. Boundary organizations were nongovernmental organizations (NGOs) that aimed to create more effective policymaking by spanning/bridging the science and policy communities (32). We received survey responses from 15 cases (Table S1). The survey collected self-reported, ordinal scale data on the variables that we identified in the literature and from practitioner experiences as important elements of an effective science policy interface (Table S2). This survey is not an exhaustive list of all potential enabling conditions, but includes variables that multiple sources identify as significant. Specific survey questions are included in SI Text. The survey was reviewed and approved by the University of Vermont Research Protections Office. We followed ethical principles for survey procedures, informed respondents that their participation was voluntary, and ensured participant anonymity.

Table S2.

Summary of predictor variables in three broad categories of enabling conditions

| Variable | Description | Survey question | References |
|---|---|---|---|
| Attributes of knowledge | | | |
| Salience | The relevance and timeliness of scientific knowledge to the needs of decision-makers | Q1 | Cash et al. (16); Hirsch and Luzadis (52) |
| Credibility | The degree to which scientific and technical arguments are trustworthy and expert-based | Q2 | Cash et al. (16) |
| Legitimacy | Whether knowledge considers stakeholders’ different points of view, as evidenced by the representation of diverse views in decision-making processes | Q3 | Cash et al. (16) |
| Local knowledge | Whether local knowledge and experience was included in ES assessment and planning | Q4 | Watson (53) |
| Aspects of the process | | | |
| Coproduction | Scientists, stakeholders, and decision-makers working together to produce ES information | Q5 | Cash et al. (16); Karl et al. (23); Rowe and Lee (19) |
| Interactions PC* | Amount of interaction between decision-makers and scientists by phone/email, or in person | Q6 and Q7 | Karl et al. (23); Rowe and Lee (19) |
| Represent PC** | Proportion of stakeholders represented; concentrated or shared distribution of decision-making power | Q8 and Q14 | Beierle and Konisky (54); Young et al. (46); Lee (55); Reed (43); The Natural Capital Project |
| Dissent | Level of conflict or disagreement among stakeholders | Q9 | Karl et al. (23) |
| Trust | Amount that trust between stakeholders increased during project | Q10 | Pretty and Smith (56); Pretty and Ward (57) |
| Length | Length of project in years | | The Natural Capital Project |
| Contextual conditions | | | |
| Inst. capacity PC*** | Capacity before the project to: measure baseline ES and human activities; monitor changes to ES and human activities; implement policy | Q11, Q12, and Q13 | Ferraro et al. (58); Smit and Wandel (59); Haas et al. (26) |
| Year | Year project began | | The Natural Capital Project |

See SI Text for survey questions. Asterisks mark the variables that entered multimodel inference as the first principal component of the survey variables listed in that row: *Interactions PC; **Represent PC; ***Inst. capacity PC.

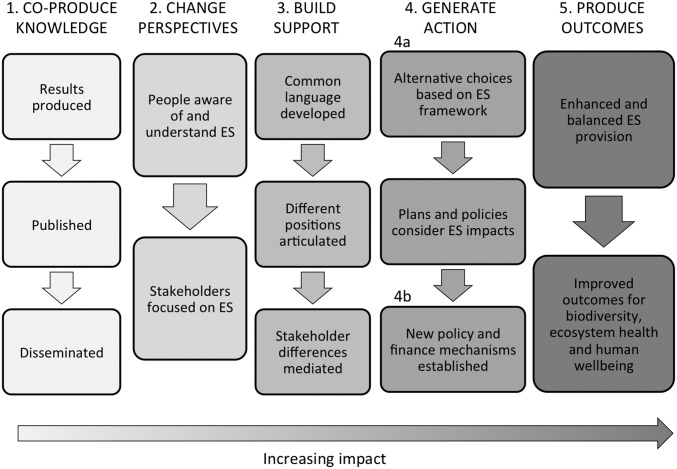

To measure the outcome variable of impact, we used expert review and the evaluative framework for impact described by Ruckelshaus et al. (28). We modified the framework to include five pathways through which impact is achieved in ES projects (Fig. 1): coproduction of knowledge (pathway 1), conceptual use (pathway 2), strategic use (pathway 3), instrumental use (pathway 4), and outcomes for human well-being, biodiversity, and ecosystems (pathway 5). We added pathway 3 to reflect insights into the impact of ES knowledge (13).

Fig. 1.

Evaluative framework for how ES knowledge leads to impact. Each column represents a pathway to impacting decisions, with deeper impact going down each pathway and from left to right between pathways. Impacts 2, 3, 4a, and 4b were the basis for our measurement of impact in each of the cases. Impact 3 refers to how ES knowledge can be used to build support for considering ES in decisions.

Three reviewers used a rubric with a five-point scale based on this evaluative framework to provide initial impact scores for each case. The reviewers (one of whom is a coauthor) provided scores by analyzing a qualitative review of impacts from Ruckelshaus et al. (28), written documentation of the cases (including project reports, management plans, or case study summaries), and online resources pertaining to the cases (such as project websites or presentations to decision-makers). Through a Delphi process, the reviewers then gathered and discussed results before independently revising their scores. We averaged the three reviewer scores to obtain, for each case, an estimate of impact 3 (build support), impact 4a (generate action: proposed plans and policies based on ES), and impact 4b (generate action: new policy or finance mechanisms for ES established). Similar methods have been described by Sutherland (33) and used by Sutherland et al. (34) and Kenward et al. (35) to evaluate the impact of science and governance strategies.

A fourth measure of impact included in our analysis, impact 2 (awareness and understanding of ES), was based on the survey rather than the expert scores (Table 2). We omitted columns 1 and 5 of the evaluative framework from this study because all cases involved coproducing and publishing research results, and it is difficult to show whether biodiversity, wellbeing, or ES outcomes were enhanced over the timescale of these projects (Fig. 1). Given the post facto nature of this research, the design of the study is limited by the inability to use rigorous impact evaluation methods, such as comparing before–after change with counterfactual cases that did not undergo ES projects (36–38).

Table 2.

Questions to assess impact (the response variable) according to the evaluative framework

| Impact pathway | Measured with | Questions for survey respondents (for impact 2) or expert reviewers (for impact 3, 4a, and 4b) |
|---|---|---|
| 2: Change perspectives | Survey | What proportion of stakeholders was aware of and understood ES before the ES project? After the project?* |
| 3: Build support | Expert scoring | How much were the science and InVEST results used to build support among stakeholders for considering ES? Did the ES knowledge help develop a common language among stakeholders, articulate different positions, or mediate differences? |
| 4a: Generate action | Expert scoring | How much did draft plans or policies emerge that consider ES? Did proposed plans or policies consider ES? |
| 4b: Generate action | Expert scoring | Did a plan, policy, or finance mechanism to enhance, conserve, or restore ES become established? |

*In the survey, we primed respondents to think about how much stakeholders were focused on and paying attention to ES, and defined “stakeholders” as “people or groups with an interest or concern in policy decisions that affect ES (for example, individual landowners, conservation NGOs, private businesses).”

We used interrater reliability analysis to compare scores among the three expert reviewers who measured impact in the cases. We calculated Krippendorff’s alpha to measure agreement for ordinal data among the three reviewers. From our sample, α = −0.0544 for impact 3, α = 0.655 for impact 4a, and α = 0.619 for impact 4b. For conclusions based on the impact data, we took α ≥ 0.6 as acceptable for this study (39). The low level of agreement among reviewers for impact 3 indicates that only tentative conclusions should be drawn from these data.
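To make the agreement statistic concrete, the following is a minimal sketch of Krippendorff's alpha with the ordinal distance metric, written with the Python standard library only. The function name and the input layout (one list of ratings per case, `None` for a missing rating) are assumptions made for illustration; this is not the code used in the study, and it does not guard against the degenerate case in which every rating is identical.

```python
from collections import Counter
from itertools import product


def krippendorff_alpha_ordinal(units):
    """Krippendorff's alpha with the ordinal distance metric.

    `units` holds one list of ratings per case; None marks a missing rating.
    Hypothetical helper for illustration, not the authors' implementation.
    """
    # Keep only pairable units (at least two ratings).
    units = [[v for v in u if v is not None] for u in units]
    units = [u for u in units if len(u) >= 2]

    # Coincidence matrix: ordered pairs of ratings within a unit,
    # each unit weighted by 1 / (number of ratings - 1).
    coincidence = Counter()
    for u in units:
        counts = Counter(u)
        weight = 1.0 / (len(u) - 1)
        for c, k in product(counts, repeat=2):
            pairs = counts[c] * (counts[k] - (1 if c == k else 0))
            coincidence[(c, k)] += pairs * weight

    values = sorted({c for c, _ in coincidence})
    totals = {c: sum(coincidence[(c, k)] for k in values) for c in values}
    n = sum(totals.values())

    def delta_sq(c, k):
        # Ordinal distance: mass of ranks between c and k, squared.
        lo, hi = min(c, k), max(c, k)
        between = sum(totals[g] for g in values if lo <= g <= hi)
        return (between - (totals[lo] + totals[hi]) / 2.0) ** 2

    observed = sum(coincidence[(c, k)] * delta_sq(c, k)
                   for c in values for k in values) / n
    expected = sum(totals[c] * totals[k] * delta_sq(c, k)
                   for c in values for k in values) / (n * (n - 1))
    return 1.0 - observed / expected
```

Values near 1 indicate strong agreement, values near 0 indicate agreement no better than chance, and negative values indicate systematic disagreement.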

We dealt with positive survey response bias by comparing cases to each other. So long as we can assume the positive response biases in our survey responses are equivalent across cases, then comparative analyses such as regressions remain informative. We dealt with bias in who responded to the survey by targeting specific decision-makers or representatives of boundary organizations who were involved in the ES projects (Table S1).

We treated the 15 cases as independent data points in the analysis, each with four outcome variables (i.e., measures of impact; Table 2) and 16 predictor variables (i.e., enabling conditions; Table S2). To test H1, we used the 5-point scale to group cases into broader categories (low, medium, and high) for salience, credibility, and legitimacy. For example, the lowest two levels of credibility of ES knowledge were labeled as “low,” the middle two levels were labeled “medium,” and the highest level assigned to the cases by survey respondents was labeled “high.” We then used analysis of variance (ANOVA) to test whether higher levels of salience, credibility, and legitimacy were associated with higher impact for all four impact measures (ANOVA type III test for the unequal number of cases in each of the groups). We used Tukey–Kramer tests in a post hoc analysis to confirm the ANOVA results and test whether means in each category were significantly different from each other.
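The grouping and comparison described above can be sketched as follows. This is an illustration only: the cut points in `to_category` are one reading of the low/medium/high rule, the case data are invented, and the code computes a plain one-way F statistic rather than reproducing the type III ANOVA or the Tukey–Kramer post hoc tests. The closed-form P value holds only when the numerator degrees of freedom equal 2, which is the case for three groups.

```python
def to_category(score):
    """Collapse a 1-5 survey score into low / medium / high (assumed cut points)."""
    if score <= 2:
        return "low"
    if score <= 4:
        return "medium"
    return "high"


def one_way_f(groups):
    """Return (F, df_between, df_within) for a dict of group -> list of values."""
    samples = [v for v in groups.values() if v]
    all_values = [x for g in samples for x in g]
    grand_mean = sum(all_values) / len(all_values)
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in samples)
    ss_within = sum((x - sum(g) / len(g)) ** 2 for g in samples for x in g)
    df_between = len(samples) - 1
    df_within = len(all_values) - len(samples)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def p_value_two_numerator_df(f_stat, df_within):
    """Upper-tail P for an F statistic when the numerator df is exactly 2."""
    return (1.0 + 2.0 * f_stat / df_within) ** (-df_within / 2.0)


# Hypothetical (attribute score, impact score) pairs, for illustration only.
cases = [(1, 1.0), (2, 2.0), (3, 2.0), (4, 3.0), (5, 4.0), (5, 5.0)]
groups = {}
for attribute, impact in cases:
    groups.setdefault(to_category(attribute), []).append(impact)

f_stat, df1, df2 = one_way_f(groups)
p = p_value_two_numerator_df(f_stat, df2)
```

In practice a statistics package would be the safer choice, particularly for unbalanced groups and post hoc comparisons.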

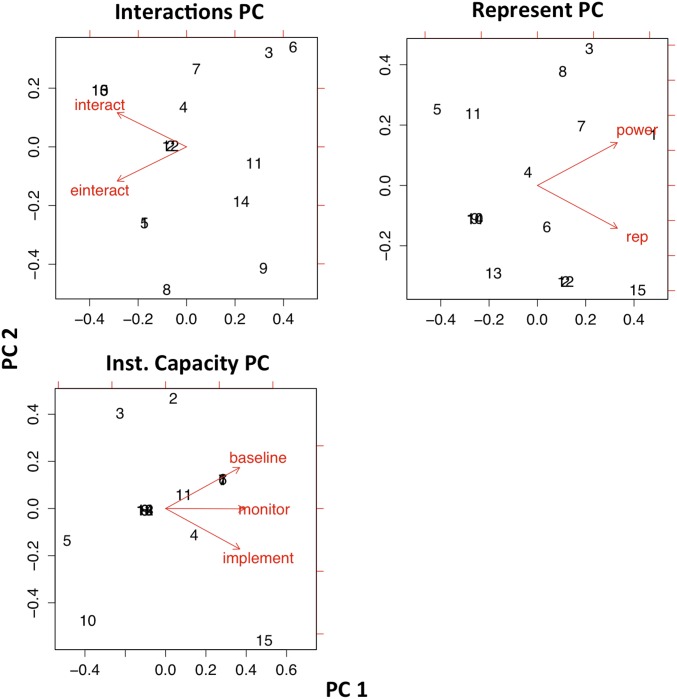

To test H2 about which enabling conditions could best explain impact, we used an information theoretic approach (35). We first reduced the dataset by using principal components analysis (PCA) on predictor variables with a Spearman rank correlation coefficient ≥ 0.70 (Fig. S1) (40). Using the first principal component for groups of highly correlated variables allowed us to focus on 12 predictor variables. We used the R package MuMIn to conduct multimodel inference (41). We tested all possible linear models with these 12 variables, for each of the four measures of impact. To determine which predictors best explain variability in impact, we ranked the top models by AICc values and calculated model average coefficients with 95% confidence intervals for each of the predictors. Model average coefficients represent the average coefficient for each predictor variable across all models, weighted for goodness of fit of the models (42).
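As a rough illustration of the model-ranking step, the sketch below enumerates every subset of 12 predictors and defines a small-sample corrected AIC for a least-squares fit. It is not the MuMIn implementation: the function names and placeholder predictor names are hypothetical, the AICc is given only up to an additive constant under an assumed Gaussian error model, and the parameter count `k` is assumed to include the intercept and the residual variance.

```python
import math
from itertools import combinations


def aicc_least_squares(rss, n, n_predictors):
    """AICc for a Gaussian linear model, up to an additive constant.

    k counts every estimated parameter: slopes, intercept, residual variance.
    """
    k = n_predictors + 2
    if n - k - 1 <= 0:
        raise ValueError("AICc is undefined when n - k - 1 <= 0")
    aic = n * math.log(rss / n) + 2 * k
    return aic + (2 * k * (k + 1)) / (n - k - 1)


def all_subsets(predictors):
    """Every subset of predictors, including the intercept-only model."""
    return [subset
            for size in range(len(predictors) + 1)
            for subset in combinations(predictors, size)]


predictors = [f"x{i}" for i in range(1, 13)]   # 12 placeholder names
candidate_models = all_subsets(predictors)      # 2**12 = 4096 subsets
```

Each subset would then be fitted, scored with AICc, and ranked from lowest to highest. With only 15 cases, the correction term grows quickly as predictors are added, so the largest subsets are heavily penalized.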

Fig. S1.

PCA biplots. We reduced the dataset by combining the following highly correlated variables. Represent PC, power and representation (correlation coefficient = 0.74); Interactions PC, in-person interactions and electronic interactions (correlation coefficient = 0.72); Inst. Capacity PC, institutional capacity to measure baseline conditions, monitor changes, and implement policies (paired correlation coefficients of 0.70, 0.79, and 0.80). In subsequent analyses, we used PC1 for predictor variables (or the inverse of Interactions PC1, so that increases in the principal component correlated to increases in the underlying variables).
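The rank correlations reported in this caption can be computed along the following lines. This is a minimal standard-library sketch with hypothetical function names; it treats tied ordinal responses by assigning them their mean rank and then takes the Pearson correlation of the ranks.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for position in range(i, j + 1):
            ranks[order[position]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5
```

Pairs or groups of predictors whose coefficients meet the chosen cutoff would then be replaced by their first principal component.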

Results

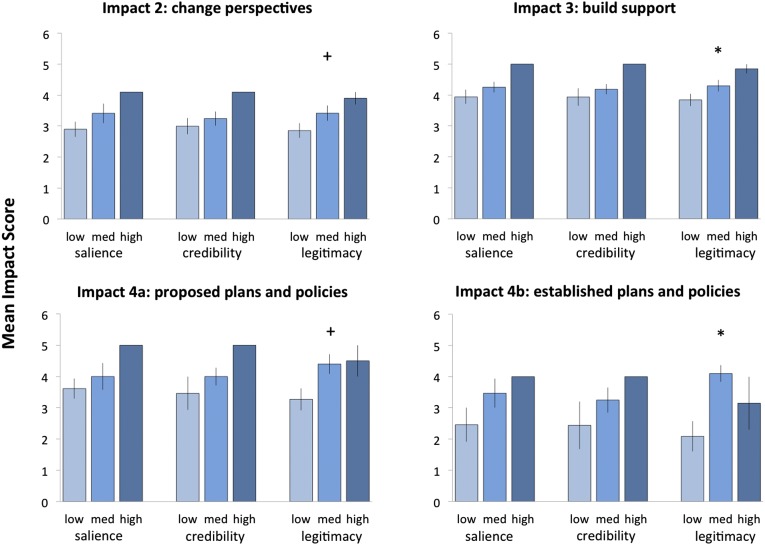

For almost all impact measures, we found that impact tends to increase with higher levels of salience, credibility, and legitimacy (Fig. 2). These results support our first hypothesis. With legitimacy, the effect is significant (P < 0.05) for impacts 3 and 4b and marginally significant (P < 0.1) for impacts 2 and 4a; with salience and with credibility, the ANOVA results are not significant (Fig. 2 and Table 3).

Fig. 2.

Effects of ES knowledge attributes on policy impact. Bars depict mean (±SE) levels of impact based on five-point expert assessment. For each attribute of knowledge (i.e., salience, credibility, and legitimacy), the 15 cases are divided into low, medium, and high categories for comparison, based on survey scores. No SE bar indicates one case in that category. **P < 0.01; *P < 0.05; +P < 0.1.

Table 3.

Relationships between attributes of knowledge and policy impact

| Attribute | Impact 2 | Impact 3 | Impact 4a | Impact 4b |
|---|---|---|---|---|
| Salience | 2.31 (0.142) | 1.94 (0.187) | 0.92 (0.424) | 1.27 (0.317) |
| Credibility | 1.16 (0.347) | 1.61 (0.240) | 1.17 (0.343) | 1.57 (0.248) |
| Legitimacy | 2.94 (0.092)+ | 3.88 (0.050)* | 3.37 (0.069)+ | 5.94 (0.016)* |

Each element reports the F2,12 value (and corresponding P value) for the ANOVA results. *P < 0.05; +P < 0.1.

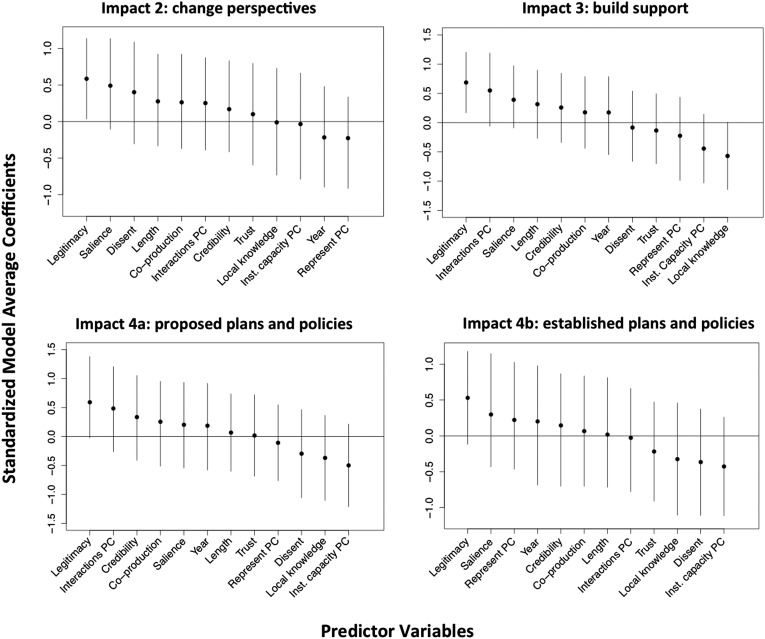

We also find partial support for our second hypothesis: Certain attributes of the ES knowledge explain impact better than process or contextual conditions (Fig. 3). Again, legitimacy emerges as a strong predictor of impact; averaging coefficients across all possible models, we find that legitimacy of the ES knowledge is more strongly related to impact than any other included variable. For all measures of impact, the top models include legitimacy as the strongest variable for explaining impact (Table S3). Other variables included in best models [ranked by lowest Akaike’s information criterion corrected for small sample size (AICc) value] are the number of interactions between scientists and decision-makers, the institutional capacities in the cases, and the degree to which local knowledge was incorporated into decisions.

Fig. 3.

Effects of multiple attributes on decision impact. Points and whiskers represent model average coefficients with 95% confidence intervals. Predictor variables are described in Table S2. The first principal components were used for three groups of highly correlated predictors (Spearman rank correlation coefficients ≥ 0.70). The resulting variables have a “PC” suffix: interactions PC (interactions in person and by phone/email), represent PC (stakeholder representation and decision-making power), and inst. capacity PC (institutional capacities to measure baseline ES and human activities, monitor changes to ES and human activities, and implement policy).

Table S3.

Model selection results with top 5 models and AICc values

| Model | Variables | AICc | |||||||

| Legitimacy | Length | Local knowledge | Represent PC | Interactions PC | Dissent | Salience | Inst. capacity PC | ||

| Impact 2 | |||||||||

| 1 | X | 30.0 | |||||||

| 2 | X | X | 31.5 | ||||||

| 3 | X | X | X | 32.0 | |||||

| 4 | X | 32.1 | |||||||

| 5 | X | 32.1 | |||||||

| Impact 3 | |||||||||

| 1 | X | X | 22.3 | ||||||

| 2 | X | X | 22.7 | ||||||

| 3 | X | X | X | 22.9 | |||||

| 4 | X | X | 23.5 | ||||||

| 5 | X | 24.0 | |||||||

| Impact 4a | |||||||||

| 1 | X | 45.0 | |||||||

| 2 | X | X | 45.1 | ||||||

| 3 | X | X | 45.2 | ||||||

| 4 | X | X | 47.2 | ||||||

| 5 | X | 47.4 | |||||||

| Impact 4b | |||||||||

| 1 | X | X | 56.1 | ||||||

| 2 | X | 56.3 | |||||||

| 3 | X | X | 57.0 | ||||||

| 4 | X | 57.5 | |||||||

| 5 | X | 57.8 | |||||||

An “X” indicates variables included in each model.
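To show how AICc differences translate into relative support, the sketch below computes Akaike weights for the five impact 2 models listed in Table S3 and uses them to average a coefficient. Two caveats apply: the weights here are normalized over these five models only, whereas the analysis averaged over all candidate models, and the coefficients are invented for illustration. Entering 0.0 where a predictor is absent from a model is an assumed convention, not one stated in the text.

```python
import math


def akaike_weights(aicc_values):
    """Relative support for each model within the supplied set."""
    best = min(aicc_values)
    relative_likelihoods = [math.exp(-(a - best) / 2.0) for a in aicc_values]
    total = sum(relative_likelihoods)
    return [r / total for r in relative_likelihoods]


def model_average(coefficients, weights):
    """Weighted mean of one predictor's coefficient; use 0.0 where it is absent."""
    return sum(c * w for c, w in zip(coefficients, weights))


# AICc values of the five top-ranked impact 2 models in Table S3.
impact2_aicc = [30.0, 31.5, 32.0, 32.1, 32.1]
weights = akaike_weights(impact2_aicc)

# Hypothetical coefficients for one predictor across those five models.
example_coefficients = [0.8, 0.7, 0.9, 0.0, 0.0]
averaged = model_average(example_coefficients, weights)
```

Models within about two AICc units of the best model receive comparable weight, which is why several of the top models in each panel of the table carry similar support.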

Discussion

We develop a quantitative approach to examine the conditions under which scientific knowledge about ES most influences policies and decisions. Using four measures of impact in a global sample of ES cases, we find that legitimacy of scientific knowledge explains impact more than any of the other predictor variables we tested. Interestingly, higher levels of credibility are not significantly associated with higher levels of impact, however measured. Credibility, or the trustworthiness and expert base of the scientific arguments, is the factor that scientists are most responsible for, whereas salience and legitimacy are established by both scientists and decision-makers through complex science–policy processes (19). The scientific adequacy of ES knowledge is undoubtedly important, perhaps as a necessary precondition to policy processes, but this study finds that it is not significantly associated with higher levels of impact.

The finding that legitimacy appears to matter more than credibility puts great responsibility on researchers to engage with stakeholders directly or in collaboration with decision-makers. Appropriate process is key for producing knowledge that is seen as legitimate, as well as for building trust, enhancing communication, and ensuring salience (24, 43–45). Transparently incorporating key diverse perspectives surrounding an issue can build trust and improve decision-makers’ acceptance of knowledge as legitimate (46). Researchers need to pay attention to elements of the science–policy process that enhance legitimacy, and research institutions need to put in place the incentives and time required for researchers to do this (47). In fact, because the process of knowledge generation is so important to its perception as legitimate, we measured legitimacy with a question about the process (SI Text). To maintain a conceptual distinction between knowledge and process, we define our process variables in more logistical terms, such as number of meetings, length of project, etc. (Table S2). Given this, our findings suggest that aspects of the science–policy process are also important for impact in so far as they create perceptions of knowledge as legitimate.

We also find that as a knowledge system evolves, different factors are important for different pathways of impact (Fig. 3). Practitioners in the field and qualitative studies suggest that salience, credibility, and legitimacy are important to generate policy action, even while recognizing that tradeoffs may be necessary among these attributes (16–18, 20). Our results indicate that these attributes are not equally important for each stage of impact we considered.

Salience emerges as important at early stages of the policy process, in shaping people’s ideas and discussions about ES (Fig. 2). Knowledge perceived to be salient—relevant to the needs of decision-makers—is more likely to increase awareness. Greater perceived legitimacy of ES knowledge is significantly associated with greater impact for three measures of impact, including changing awareness, building support, and drafting plans and policies that consider ES (Fig. 2). This result reinforces the idea that it is important for decision-makers to view ES knowledge as unbiased and based on a fair consideration of different stakeholder values at all stages of decision-making.

Regular interactions between scientists and decision-makers are important for building support. Building support is a political process of aligning shared interests behind particular positions, and interactions among decision-makers and stakeholders also take place at many of those same events/meetings. Decision-makers are likely to perceive the resulting scientific knowledge as salient when relevant policy questions, which they help frame, inspire science. Scenarios of future conditions and collaborative processes among scientists and decision-makers can also ensure the salience and legitimacy in a knowledge system (24).

Interestingly, local knowledge and institutional capacities are also important for building support, but with negative coefficients, indicating an inverse relationship with this measure of impact. This result is due to a few cases where low impact was achieved despite local knowledge being included and where high impact was achieved despite low institutional capacities. Scientist and decision-maker interactions are also important for explaining impact in terms of draft plans and policies that consider ES.

Viewed as a whole, these results indicate that early on in a science-to-policy cycle, the perception of ES knowledge as legitimate and salient is important to help shape conversations and raise awareness. Later on in the science-to-policy cycle, the contextual conditions that are outside of scientists’ control gain importance. According to these findings, the factors that best predict the final stages of impact (when a draft or established policy considers ES) are the degree to which decision-makers perceive ES knowledge as legitimate, the amount of interaction between scientists and decision-makers, the institutional capacities in the place where the project occurs, and the use of local knowledge (Fig. 3 and Table S3). However, there could be interactions among these variables or effects that our analysis did not uncover because of our sample size and sufficiency of data across all cases (for example, legitimacy only matters when credibility is high). In addition, in the final stages of a policy process when a new policy or finance mechanism actually becomes established, there are a multitude of variables at play, including many not measured here. Details of the individual decision-making processes, such as whether a specific decision or policy opportunity was driving knowledge creation, account for some of these unobserved explanatory factors.

Although our study illustrates a potentially powerful empirical approach to these issues, several limitations should be kept in mind. First, in exploring these relationships, it is difficult to link a policy change to any specific causal factor, because so many competing variables influence policy development (36). Studies with multiple case study comparisons complement our results by taking into account many of the subtle issues at play within the context of each unique case (13, 48, 49). Second, a sample of only 15 cases limits our statistical power and ability to infer general relationships. In interpreting our results generally, we also must consider that these cases were selected to undertake ES projects in part because they were believed to have some enabling conditions for success. Nevertheless, consistent trends observed across several impact measures (e.g., Fig. 2) instill some confidence in our overarching results. Assembling larger datasets and measuring impact with a standard framework across researchers will allow future studies to strengthen confidence in general findings. Third, expert opinion carries inherent potential for bias and error, but is increasingly well understood and supported as an empirical approach for research at the intersection of science and policy (50). Although observer bias remains an issue, the relative differences observed among cases are more robust.

Despite these limitations, our study advances our understanding of enabling conditions and use of ES knowledge and the elements that lead to an effective science–policy interface (11, 35, 51). Understanding the factors that tend to enhance the impact of ES knowledge is critical. Unless we consider the relationships that decision-makers have with the products and process of science, the impact of ES knowledge will be haphazard and will not prevent the continued declines in ecosystems and biodiversity and the benefits they provide to people.

SI Text

The following are survey questions given to decision-makers and/or representatives of boundary organizations in each case.

All targeted survey respondents were involved in the actual ES projects. Their assessments were based on their involvement and their experiences working with ES issues in the cases. We asked them to report their own individual assessments and experiences, and let them know their responses would be anonymous. We triangulated information with interviews with Natural Capital Project staff.

We provided the following definitions in the survey:

- Stakeholders: “people and groups with an interest or concern in policy decisions that affect ecosystem services (for example, individual landowners, conservation NGOs, private businesses).”

- Scientists: “researchers, analysts, or modelers with the Natural Capital Project, including those from WWF, The Nature Conservancy, or partner academic institutions.”

- Decision-makers: “people who use knowledge to evaluate and make decisions about management activities, plans, or policies.”

- Local knowledge: “knowledge provided by stakeholders within the community rather than by individuals or organizations with limited experience in the community.”

Q1. How relevant and timely was the ecosystem service information?

Not at all, only a little, somewhat, very, a great deal

Q2. Was the ecosystem service information scientifically credible? (“scientifically credible” refers to whether the information was reliable and based on scientific expertise)

Not at all, only a little, somewhat, very, a great deal

Q3. Did the decision-making process represent many diverse views on the management or policy issues?

Not at all, only a little, somewhat, a lot, a great deal

(Note: To reflect the connections among our variable categories, this question asks about the process, which determines whether decision-makers have the impression of the knowledge being legitimate.)

Q4. How much was local knowledge and experience included… a) in the ecosystem service assessment? b) in management or planning decisions?

Not at all, only a little, somewhat, a lot, a great deal

(Responses to a and b were averaged together.)

Q5. How much did scientists, stakeholders, and decision-makers work together to coproduce ecosystem service information?

Not at all, only a little, somewhat, a lot, a great deal

Q6. How many times did you interact with scientists by phone or email?

0, 1–5, 6–10, 11–15, 16–20, more than 20 times

Q7. How many times did you interact with scientists in person?

0, 1–5, 6–10, 11–15, 16–20, more than 20 times

Q8. About what proportion of stakeholders were represented in the process of… a) producing ecosystem service information? b) using ecosystem service information in decisions?

None at all, some, about half, most, all

(Responses to a and b were averaged together.)

Q9. How much conflict or disagreement was there among stakeholders during the process of… a) producing ecosystem service information? b) using ecosystem service information in decisions?

None at all, only a little, some, a lot, a great deal

(Responses to a and b were averaged together.)

Q10. How much did trust between stakeholders increase during the process of… a) producing ecosystem service information? b) using ecosystem service information in decisions?

None at all, only a little, somewhat, a lot, a great deal

(Responses to a and b were averaged together.)

Q11. Was there institutional capacity to measure baseline ecosystem services and human activities before the project?

None at all, only a little, somewhat, a lot, a great deal

Q12. Was there institutional capacity to monitor changes to ecosystem services and human activities before the project?

None at all, only a little, somewhat, a lot, a great deal

Q13. Was there institutional capacity to implement policies that impact ecosystem services and human activities before the project?

None at all, only a little, somewhat, a lot, a great deal

Q14. How was decision-making power distributed among stakeholders?

Decision-making power very concentrated, somewhat concentrated, evenly concentrated and shared, somewhat shared, decision-making power very shared

Q15. How long was the ecosystem service project?

Less than 1 y, 1 y, 2 y, 3 or more years

Q16. What year was the project completed?
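
The response scales above are ordinal, and the two-part questions (Q4 and Q8–Q10) were averaged across parts a and b. A minimal sketch of how such responses could be coded is shown below. It assumes each five-point scale is coded 1 to 5 in the order listed; this numeric coding, the constant `SCALE_AMOUNT`, and the function names are illustrative assumptions, not details stated in the survey text.

```python
# Five-point scale used for Q3-Q5, listed from lowest to highest.
# Coding the options 1-5 in this order is an assumption for illustration.
SCALE_AMOUNT = ["not at all", "only a little", "somewhat", "a lot", "a great deal"]


def score(response, scale):
    """Return the 1-based position of a response on an ordered scale."""
    return scale.index(response.strip().lower()) + 1


def score_two_part(response_a, response_b, scale):
    """Average parts a and b of a two-part question, as described for Q4."""
    return (score(response_a, scale) + score(response_b, scale)) / 2


# Example: Q4a answered "somewhat" (3) and Q4b answered "a great deal" (5).
q4 = score_two_part("somewhat", "a great deal", SCALE_AMOUNT)
print(q4)  # 4.0
```

Questions with other wording (for example, the "None at all, some, about half, most, all" scale of Q8) would need their own ordered list passed as `scale`.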

Acknowledgments

We thank the many government representatives, nongovernmental organizations, and individuals who provided data. We thank Becky Chaplin-Kramer, Steve Polasky, and Duncan Russell for helpful comments on an earlier version of this manuscript; and Insu Koh, Alicia Ellis, Eduardo Rodriguez, Matthew Burke, Rebecca Traldi, and Amy Rosenthal. S.M.P. and T.H.R. were supported by a World Wildlife Fund Valuing Nature Fellowship.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1502452113/-/DCSupplemental.

References

- 1. Daily GC, Matson PA. Ecosystem services: From theory to implementation. Proc Natl Acad Sci USA. 2008;105(28):9455–9456. doi: 10.1073/pnas.0804960105.

- 2. Daily GC, et al. Ecosystem services in decision making: Time to deliver. Front Ecol Environ. 2009;7(1):21–28.

- 3. Hogan D, et al. Developing an Institutional Framework to Incorporate Ecosystem Services into Decision Making: Proceedings of a Workshop. U.S. Geological Survey; Reston, VA: 2011.

- 4. de Groot RS, Alkemade R, Braat L, Hein L, Willemen L. Challenges in integrating the concept of ecosystem services and values in landscape planning, management and decision making. Ecol Complex. 2010;7(3):260–272.

- 5. Laurans Y, Rankovic A, Billé R, Pirard R, Mermet L. Use of ecosystem services economic valuation for decision making: Questioning a literature blindspot. J Environ Manage. 2013;119:208–219. doi: 10.1016/j.jenvman.2013.01.008.

- 6. Spilsbury MJ, Nasi R. The interface of policy research and the policy development process: Challenges posed to the forestry community. For Policy Econ. 2006;8(2):193–205.

- 7. Liu S, Costanza R, Farber S, Troy A. Valuing ecosystem services: Theory, practice, and the need for a transdisciplinary synthesis. Ann N Y Acad Sci. 2010;1185:54–78. doi: 10.1111/j.1749-6632.2009.05167.x.

- 8. Nesshover C, et al. Improving the science-policy interface of biodiversity research projects. Gaia. 2013;22(2):99–103.

- 9. Knight AT, et al. Knowing but not doing: Selecting priority conservation areas and the research-implementation gap. Conserv Biol. 2008;22(3):610–617. doi: 10.1111/j.1523-1739.2008.00914.x.

- 10. Eppink FV, Werntze A, Mas S, Popp A, Seppelt R. Land management and ecosystem services: How collaborative research programmes can support better policies. Gaia. 2012;21(1):55–63.

- 11. Mermet L, Laurans Y, Lemenager T. Tools for What Trade? Analysing the Utilization of Economic Instruments and Valuations in Biodiversity Management. Agence Francaise de Developpement; Paris: 2014.

- 12. Weichselgartner J, Kasperson R. Barriers in the science-policy-practice interface: Toward a knowledge-action-system in global environmental change research. Glob Environ Change. 2010;20(2):266–277.

- 13. McKenzie E, et al. Understanding the use of ecosystem service knowledge in decision making: Lessons from international experiences of spatial planning. Environ Plann C Gov Policy. 2014;32(2):320–340.

- 14. Laurans Y, Mermet L. Ecosystem services economic valuation, decision-support system or advocacy? Ecosystem Services. 2014;7:98–105.

- 15. MacDonald DH, Bark RH, Coggan A. Is ecosystem service research used by decision-makers? A case study of the Murray-Darling Basin, Australia. Landscape Ecol. 2014;29(8):1447–1460.

- 16. Cash DW, et al. Knowledge systems for sustainable development. Proc Natl Acad Sci USA. 2003;100(14):8086–8091. doi: 10.1073/pnas.1231332100.

- 17. Keller AC. Credibility and relevance in environmental policy: Measuring strategies and performance among science assessment organizations. J Public Adm Res Theory. 2010;20(2):357–386.

- 18. Sarkki S, et al. Balancing credibility, relevance and legitimacy: A critical assessment of trade-offs in science-policy interfaces. Sci Public Policy. 2013;2013:1–13.

- 19. Rowe A, Lee K. Linking Knowledge with Action: An Approach to Philanthropic Funding of Science for Conservation. David & Lucile Packard Foundation; Palo Alto, CA: 2012.

- 20. Reid RS, et al. Knowledge Systems for Sustainable Development Special Feature Sackler Colloquium: Evolution of models to support community and policy action with science: Balancing pastoral livelihoods and wildlife conservation in savannas of East Africa. Proc Natl Acad Sci USA. November 3, 2009 doi: 10.1073/pnas.0900313106.

- 21. Cook CN, Mascia MB, Schwartz MW, Possingham HP, Fuller RA. Achieving conservation science that bridges the knowledge-action boundary. Conserv Biol. 2013;27(4):669–678. doi: 10.1111/cobi.12050.

- 22. Andrews CJ. Humble Analysis: The Practice of Joint Fact-Finding. Praeger; Westport, CT: 2002. p. xiv.

- 23. Karl HA, Susskind LE, Wallace KH. A dialogue not a diatribe—Effective integration of science and policy through joint fact finding. Environment. 2007;49(1):20–34.

- 24. Rosenthal A, et al. Process matters: A framework for conducting decision-relevant assessments of ecosystem services. International Journal of Biodiversity Science, Ecosystem Services and Management. 2015;11(3):190–204.

- 25. Cox B, Searle B. The State of Ecosystem Services. Bridgespan Group; Boston: 2009.

- 26. Haas PM, Keohane RO, Levy MA. Institutions for the Earth: Sources of Effective International Environmental Protection. MIT Press; Cambridge, MA: 1993. p. xi.

- 27. Wunder S, Engel S, Pagiola S. Taking stock: A comparative analysis of payments for environmental services programs in developed and developing countries. Ecol Econ. 2008;65(4):834–852.

- 28. Ruckelshaus M, et al. Note from the field: Lessons learned from using ecosystem service approaches to inform real-world decisions. Ecol Econ. 2015;115:11–21.

- 29. Nelson E, et al. Modeling multiple ecosystem services, biodiversity conservation, commodity production, and tradeoffs at landscape scales. Front Ecol Environ. 2009;7(1):4–11.

- 30. Bhagabati NK, et al. Ecosystem services reinforce Sumatran tiger conservation in land use plans. Biol Conserv. 2014;169:147–156.

- 31. Arkema KK, et al. Embedding ecosystem services in coastal planning leads to better outcomes for people and nature. Proc Natl Acad Sci USA. 2015;112(24):7390–7395. doi: 10.1073/pnas.1406483112.

- 32. Guston DH. Boundary organizations in environmental policy and science: An introduction. Sci Technol Human Values. 2001;26(4):399–408.

- 33. Sutherland WJ. Predicting the ecological consequences of environmental change: A review of the methods. J Appl Ecol. 2003;43:599–616.

- 34. Sutherland WJ, Goulson D, Potts SG, Dicks LV. Quantifying the impact and relevance of scientific research. PLoS One. 2011;6(11):e27537. doi: 10.1371/journal.pone.0027537.

- 35. Kenward RE, et al. Identifying governance strategies that effectively support ecosystem services, resource sustainability, and biodiversity. Proc Natl Acad Sci USA. 2011;108(13):5308–5312. doi: 10.1073/pnas.1007933108.

- 36. Ferraro PJ, Lawlor K, Mullan KL, Pattanayak SK. Forest figures: Ecosystem services valuation and policy evaluation in developing countries. Rev Environ Econ Policy. 2012;6(1):20–44.

- 37. Gertler PJ, Martinez S, Premand P, Rawlings LB, Vermeersch CMJ. Impact Evaluation in Practice. The World Bank; Washington, DC: 2010.

- 38. Margoluis R, Stem C, Salafsky N, Brown M. Design alternatives for evaluating the impact of conservation projects. New Directions for Evaluation. 2009;2009(122):85–96.

- 39. Krippendorff K. Content Analysis: An Introduction to its Methodology. 2nd Ed. Sage; Thousand Oaks, CA: 2004.

- 40. Dormann CF, et al. Collinearity: A review of methods to deal with it and a simulation study evaluating their performance. Ecography. 2013;36(1):27–46.

- 41. Barton K. 2014 MuMIn: R package for multi-model inference. The Comprehensive R Archive Network (CRAN) Project. Available at https://cran.r-project.org. Accessed October 13, 2014.

- 42. Burnham KP, Anderson DR. Model Selection and Multimodel Inference: A Practical Information-Theoretic Approach. 2nd Ed. Springer; New York: 2002.

- 43. Reed MS. Stakeholder participation for environmental management: A literature review. Biol Conserv. 2008;141(10):2417–2431.

- 44. Smith PD, Mcdonough MH. Beyond public participation: Fairness in natural resource decision making. Soc Nat Resour. 2001;14(3):239–249.

- 45. Irvin RA, Stansbury J. Citizen participation in decision making: Is it worth the effort? Public Adm Rev. 2004;64(1):55–65.

- 46. Young JC, et al. Does stakeholder involvement really benefit biodiversity conservation? Biol Conserv. 2013;158:359–370.

- 47. Lynam T, Jong Wd, Sheil D, Kusumanto T, Evans K. A review of tools for incorporating community knowledge, preferences, and values into decision making in natural resources management. Ecol Soc. 2007;12(1):5.

- 48. Creswell JW. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. 3rd Ed. Sage; Thousand Oaks, CA: 2009. p. xxix.

- 49. Yin RK. Case Study Research: Design and Methods. 4th Ed. Sage; Los Angeles: 2009. p. xiv.

- 50. Manos B, Papathanasiou J. GEM-CON-BIO Governance and Ecosystem Management for the Conservation of Biology. Aristotle University of Thessaloniki; Thessaloniki, Greece: 2008.

- 51. Waite R, Kushner B, Jungwiwwattanaporn M, Gray E, Burke L. Use of coastal economic valuation in decision-making in the Caribbean: Enabling conditions and lessons learned. Ecosystem Services. 2014;11:45–55.

- 52. Hirsch PD, Luzadis VA. Scientific concepts and their policy affordances: How a focus on compatibility can improve science-policy interaction and outcomes. Nat Cult. 2013;8(1):97–118.

- 53. Watson RT. Turning science into policy: Challenges and experiences from the science-policy interface. Philos Trans R Soc Lond B Biol Sci. 2005;360(1454):471–477. doi: 10.1098/rstb.2004.1601.

- 54. Beierle TC, Konisky DM. What are we gaining from stakeholder involvement? Observations from environmental planning in the Great Lakes. Environ Plann C Gov Policy. 2001;19:515–527.

- 55. Lee KN. Compass and Gyroscope: Integrating Science and Politics for the Environment. Island; Washington, DC: 1993.

- 56. Pretty J, Smith D. Social capital in biodiversity conservation and management. Conserv Biol. 2004;18(3):631–638.

- 57. Pretty J, Ward H. Social capital and the environment. World Dev. 2001;29(2):209–227.

- 58. Ferraro PJ, Hanauer MM, Sims KRE. Conditions associated with protected area success in conservation and poverty reduction. Proc Natl Acad Sci USA. 2011;108(34):13913–13918. doi: 10.1073/pnas.1011529108.

- 59. Smit B, Wandel J. Adaptation, adaptive capacity and vulnerability. Glob Environ Change. 2006;16(3):282–292.