Significance

Although the involvement of the prefrontal cortex in the retrieval of verbal information has been established, there are no studies examining the role of the frontal cortex in the retrieval of auditory nonverbal information. The current experiment shows that a particular region within the large and heterogeneous prefrontal cortex, the midventrolateral prefrontal cortex, is involved in the retrieval of specific features of an auditory memory, such as the specific melody or its location. This selective retrieval is achieved via interactions with particular posterior cortical areas in the right hemisphere. Earlier research had demonstrated the importance of this region in the selective retrieval from memory of specific aspects of verbal, tactile, and visual information.

Keywords: fMRI, prefrontal cortex, memory retrieval, auditory, control processes

Abstract

There is evidence from the visual, verbal, and tactile memory domains that the midventrolateral prefrontal cortex plays a critical role in the top–down modulation of activity within posterior cortical areas for the selective retrieval of specific aspects of a memorized experience, a functional process often referred to as active controlled retrieval. In the present functional neuroimaging study, we explore the neural bases of active retrieval for auditory nonverbal information, about which almost nothing is known. Human participants were scanned with functional magnetic resonance imaging (fMRI) in a task in which they were presented with short melodies from different locations in a simulated virtual acoustic environment within the scanner and were then instructed to retrieve selectively either the particular melody presented or its location. There were significant activity increases specifically within the midventrolateral prefrontal region during the selective retrieval of nonverbal auditory information. During the selective retrieval of information from auditory memory, the right midventrolateral prefrontal region increased its interaction with the auditory temporal region and the inferior parietal lobule in the right hemisphere. These findings provide evidence that the midventrolateral prefrontal cortical region interacts with specific posterior cortical areas in the human cerebral cortex for the selective retrieval of object and location features of an auditory memory experience.

Functional neuroimaging studies have established a relationship between the ventrolateral prefrontal cortex and certain aspects of memory retrieval. For instance, there is evidence for the involvement of the left ventrolateral prefrontal region in selective verbal retrieval, such as the free recall of words that appear within particular contexts (lists) (1), verbal fluency (a form of selective verbal retrieval from semantic memory) (2, 3), and various other forms of verbal semantic retrieval (4–6). More precisely, it has been proposed that the two midventrolateral prefrontal cortical areas 45 and 47/12 are critical for the active selection and retrieval of information from memory when stimuli are related to other stimuli/contexts in multiple and more-or-less equiprobable ways, so that memory retrieval cannot be a matter of mere recognition of the stimuli or supported by strong and unique stimulus-to-stimulus or stimulus-to-context associations (7). In previous functional neuroimaging studies, we were able to show activity increases that were specific to the midventrolateral prefrontal cortex for the active retrieval of visual and tactile stimuli (8, 9). A more recent study has shown that patients with lesions to the ventrolateral prefrontal region, but not those with lesions involving the dorsolateral prefrontal region, show impairments in the active controlled retrieval of the visual contexts within which words had appeared (10). This impairment was not accompanied by general memory loss of the type observed after limbic medial temporal lobe lesions (11–13). 
Patients with ventrolateral prefrontal lesions performed as well as normal control subjects on recognition memory of the presented stimuli, but were impaired when they were asked to retrieve selectively specific aspects of the memorized information in a task in which words and their context entered into multiple relations with each other across trials and, therefore, retrieval could not be supported by simple recognition memory (10). Taken together, these results suggest that the midventrolateral prefrontal cortex plays a critical role in the top–down modulation of activity for the retrieval of specific features of mnemonic information when simple familiarity and/or unambiguous stimulus-to-stimulus relations are not sufficient for memory retrieval.

Although there is considerable evidence of direct anatomical connections between auditory cortical regions in the temporal lobe and the ventrolateral prefrontal cortex (14–20) and the presence of auditory responsive neurons for complex sounds in the ventrolateral prefrontal cortex (21, 22), there is no study examining the potential involvement of the ventrolateral prefrontal region in the controlled selective retrieval of auditory nonverbal information in the human brain. Most studies investigating the role of the prefrontal cortex in the auditory domain have focused on verbal phonological and semantic memory (23–25). The aim of the present study was to examine the hypothesis that the midventrolateral prefrontal cortex is involved in the active controlled retrieval of nonverbal auditory information from memory, in a manner analogous to its previously demonstrated role in the retrieval of verbal and nonverbal visual and tactile information (8, 9, 26).

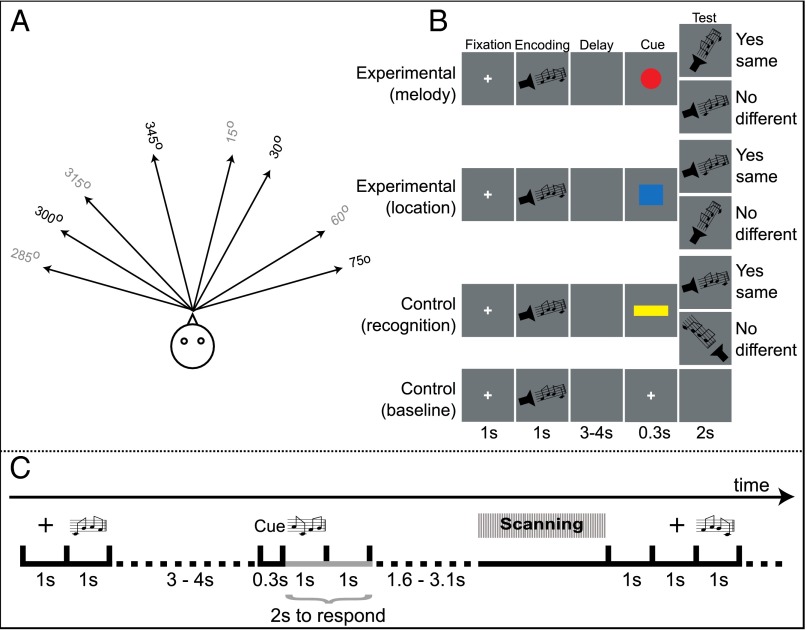

In the present functional magnetic resonance imaging (fMRI) study, on each trial, one of four different melodies was presented from one of four locations in a virtual acoustic environment (Fig. 1A) and, after a short delay, the subjects were instructed via a cue to retrieve either the specific melody (regardless of its location) or the location (regardless of the melody that was presented there) (Fig. 1B). The subjects were thus required to isolate the instructed component of the previously memorized auditory stimulus complex, namely the melody or the location. The experimental design prevented the establishment of strong and unique relations between the melodies and the locations because, across trials, all four melodies were presented from each one of the four locations with equal probability. Intermixed with these “active retrieval” trials requiring the isolation of a specific feature of the stimulus complex were “recognition” control trials that were identical in terms of the initial stimulus presentation period but, following the delay, the subjects were instructed simply to recognize (based on familiarity) the previously encoded stimulus complex of the melody and its location. No isolation of a specific aspect of the memory (melody or location) was required in these recognition control trials. There were also “baseline” control trials in which no auditory stimulus was presented during the retrieval period. The hypothesis to be tested predicted selective activity increases in the midventrolateral prefrontal cortex during the active retrieval of specific aspects of the encoded auditory stimuli in comparison with the simple recognition of that stimulus, that is, during the test period of the active retrieval trials versus the same period in the recognition control trials.

Fig. 1.

Schematic diagram of the experimental paradigm and scanning protocol. (A) Locations used to present the melodies. Gray indicates the four locations that were used only during the test period of the recognition control trials. (B) Illustration of the testing procedure. All trials started with the presentation of a cross in the middle of the screen followed by the encoding period, during which the auditory stimulus was presented. After a variable delay, a visual instructional cue was presented and the test period followed after the disappearance of the visual cue. A second auditory stimulus was presented during the test period for the experimental and recognition control trials but not during the baseline control trials. The subjects’ responses were recorded during the test period. The duration of the various periods was the same for the experimental and control trials. (C) Illustration of the sparse-sampling fMRI scanning used in this experiment.

Results

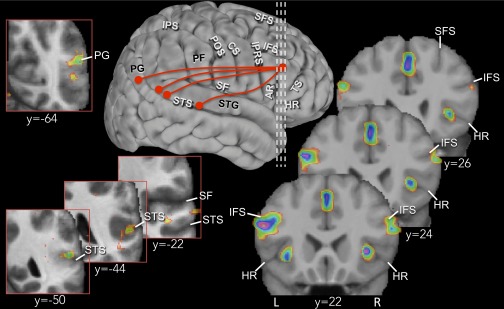

The comparison of the blood oxygenation level-dependent (BOLD) signal during the test period of the active retrieval trials with that during the test period of the recognition control trials revealed selective activity increases within the midventrolateral prefrontal cortex, as hypothesized (Table 1). There were peaks of increased functional activity located bilaterally below the inferior frontal sulcus within the pars triangularis of the inferior frontal gyrus where area 45 lies [MNI (Montreal Neurological Institute) coordinates (x, y, z) 54, 20, 18, t = 4.38, right hemisphere; −52, 22, 22, t = 5.00, left hemisphere] (Fig. 2). In addition, there were bilateral peaks within the complex formed by the horizontal ramus of the Sylvian fissure where cytoarchitectonic analysis shows that caudal area 47/12 lies and continues into the connectionally and phylogenetically related cortex of the anterior insula (27, 28) [MNI coordinates (x, y, z) 34, 22, 4, t = 4.65, right hemisphere; −32, 20, −6, t = 4.48, left hemisphere] (Fig. 2). The criteria for distinguishing area 47/12 from the anterior insula are discussed in Supporting Information. A third peak that did not reach significance was situated in the right hemisphere anterior to the rostral tip of the horizontal ramus at the border of area 45 with area 47/12 [(x, y, z) 52, 38, −6, t = 3.17]. In summary, there was clear activity increase in the midventrolateral prefrontal region lying below the inferior frontal sulcus (i.e., areas 45 and 47/12), but no significant activity increase was observed in the dorsolateral prefrontal cortex (areas 46, 9/46, 9, and 8B) or in the frontal pole (area 10). For information on the location, cytoarchitectonic features, and connections of midventrolateral prefrontal areas 45 and 47/12, see refs. 2, 19, and 27. 
Activity increases were also observed bilaterally in the inferior frontal junction (IFJ) where the inferior frontal sulcus joins the inferior branch of the precentral sulcus, in left premotor area 6, and in the paracingulate cortex (Table 1). Additional imaging results are included in Supporting Information together with the behavioral results.

Table 1.

Peak maxima from the comparison of the experimental active retrieval with the recognition control test period

| Brain area | x | y | z | t value | Cluster volume, mm³ |
|---|---|---|---|---|---|
| Right hemisphere | | | | | |
| MVLPFC (area 45) | 54 | 20 | 18 | 4.38 | 1,248 |
| MVLPFC (area 47/12) | 34 | 22 | 4 | 4.65 | 1,536 |
| MVLPFC (rostral)* | 52 | 38 | −6 | 3.17 | 12 |
| IFJ | 50 | 6 | 30 | 5.63 | 4,408 |
| Left hemisphere | | | | | |
| MVLPFC (area 45) | −52 | 22 | 22 | 5.00 | 2,096 |
| MVLPFC (area 47/12) | −32 | 20 | −6 | 4.48 | 1,088 |
| IFJ | −44 | 16 | 28 | 5.54 | 3,696 |
| Premotor (area 6) | −50 | 0 | 40 | 4.50 | 752 |
| Premotor (area 6) | −40 | −4 | 54 | 4.28 | 1,064 |
| Paracingulate region | 0 | 18 | 48 | 4.84 | 4,936 |

Coordinates (x, y, z) are stereotaxic coordinates in MNI space. IFJ, inferior frontal junction; MVLPFC, midventrolateral prefrontal cortex.

*This peak did not reach significance.

Fig. 2.

Results from the comparison of the experimental active retrieval and recognition control trials. The vertical interrupted white lines projected onto a three-dimensional cortical surface rendering of the right hemisphere indicate the rostrocaudal levels (y 22, 24, 26) in the MNI standard stereotaxic space of the three coronal sections that illustrate the bilateral activity increases observed in ventrolateral areas 45 and 47/12. The red circles on the surface rendering along the superior temporal gyrus and sulcus as well as in area PG indicate the loci of peaks that interacted with right area 45 during active retrieval. These peaks (y −22, −44, −50, −64) are illustrated within the boxes outlined in red. AR, ascending ramus of SF; CS, central sulcus; HR, horizontal ramus of SF; IFS, inferior frontal sulcus; IPRS, inferior precentral sulcus; IPS, intraparietal sulcus; L, left hemisphere; PF, area PF of Economo; PG, area PG of Economo; POS, postcentral sulcus; R, right hemisphere; SF, Sylvian fissure; SFS, superior frontal sulcus; STG, superior temporal gyrus; STS, superior temporal sulcus; TS, triangular sulcus.

Because it has been argued that the dorsolateral prefrontal cortex is specifically involved in the memory of spatial stimuli and the ventrolateral prefrontal cortex in the memory of nonspatial stimuli (29–31), we examined separately the active retrieval trials in which the subjects retrieved the melody of the encoded auditory stimulus and the trials in which they retrieved the location. The separate comparisons of the test periods of each one of these two types of active retrieval trial with the test period of the recognition control trials provided very similar results, namely significant activity increases within the midventrolateral prefrontal cortex (areas 45 and 47/12) but no significant activity increase in the middorsolateral prefrontal cortex (areas 46 and 9/46), further emphasizing the selectivity of the midventrolateral prefrontal region in the selective retrieval from memory of both object (melody) and spatial aspects of nonverbal auditory stimuli (Supporting Information). Furthermore, when we compared directly the two active retrieval conditions, namely the active retrieval of the melody versus the active retrieval of the location, no activity differences were observed in the prefrontal cortex, indicating that active retrieval of the object (melody) and active retrieval of the location recruit the same prefrontal regions. Additional results are included in Supporting Information.

The analyses presented above demonstrate that the midventrolateral prefrontal cortical region is involved in the active retrieval of auditory information from memory. We next proceeded to test the hypothesis that this ventrolateral prefrontal region may be interacting functionally with the auditory temporal region that is known to play a role in the encoding and retention of auditory stimuli (32, 33) and the parietal region that processes spatial information (34–36). We therefore examined whether there was a significant change in functional connectivity between the midventrolateral prefrontal cortex and the secondary auditory temporal and posterior parietal regions during the active retrieval compared with the recognition control trials. We tested each one of the peaks within the midventrolateral prefrontal cortex, and area 45 in the right hemisphere was found to interact functionally during active retrieval with the secondary auditory cortex in the upper bank of the superior temporal sulcus (Fig. 2) [MNI coordinates (x, y, z) 50, −22, 0, t = 3.70, P = 0.00018 uncorrected; MNI coordinates (x, y, z) 56, −50, 18, t = 3.46, P = 0.00045 uncorrected] and the inferior parietal lobule, namely area PG of the angular gyrus (Fig. 2) [MNI coordinates (x, y, z) 44, −64, 20, t = 3.78, P = 0.00015 uncorrected; MNI coordinates (x, y, z) 54, −64, 24, t = 2.69, P = 0.05 uncorrected]. Anatomical studies have shown that area 45 in the midventrolateral prefrontal cortex is strongly interconnected with the auditory cortical areas of the superior temporal gyrus and sulcus via the extreme capsule fasciculus and the arcuate fasciculus and also with the posterior part of the inferior parietal lobule via the second branch of the superior longitudinal fasciculus (14, 20, 37). Separate analyses for the retrieval of the melody and location are presented in Supporting Information.

Discussion

During the test phase of the active retrieval trials, the subjects had to retrieve selectively either the specific melody or the specific location of the memorized auditory stimulus complex that was presented during the encoding phase of the trial. In other words, the subjects were required to isolate a specific feature from a previously encoded stimulus complex and, importantly, correct performance could not rely on the simple recognition/familiarity of the stimulus. The results clearly demonstrate involvement of the midventrolateral prefrontal cortex in selective auditory memory retrieval: Increased activity within the midventrolateral prefrontal region was observed both when the subjects were retrieving the specific melody presented and also when retrieving the specific location. There was no significant activity increase in the middorsolateral prefrontal cortex or the frontopolar region during these retrieval periods. These findings are consistent with the hypothesis tested, as well as earlier research that had shown selective increases of activity within the midventrolateral prefrontal cortex during the selective retrieval from memory of visual and tactile information (8, 9).

The next major finding was the demonstration of interaction between the midventrolateral prefrontal region and distinct posterior cortical areas for active retrieval. During the active retrieval period, there was an increase in the connectivity of ventrolateral prefrontal area 45 in the right hemisphere with the parabelt auditory region along the superior temporal gyrus and sulcus and the inferior parietal region (area PG) (Fig. 2). In other words, isolation from memory of the relevant feature of the encoded auditory information (i.e., melody or location) increased interaction between area 45 and auditory and spatial processing cortical regions. There are considerable anatomical data that the midventrolateral prefrontal cortex (and particularly area 45) is extensively connected with the superior temporal gyrus and the nearby dorsal bank of the superior temporal sulcus (through the extreme capsule and the arcuate fasciculus) and also with the inferior parietal lobule (area PG) (via the second branch of the superior longitudinal fasciculus) (14, 16–18, 20, 37). The superior temporal region of the primate brain is critical for the encoding and short-term maintenance of auditory information (32, 33, 38), whereas the caudal superior temporal gyrus and the adjacent parabelt region in the caudal superior temporal sulcus entering the inferior parietal lobule are involved in the spatial aspects of auditory coding (34, 36). Indeed, a recent study using transcranial magnetic stimulation has shown that the caudal part of the superior temporal gyrus, close to the inferior parietal lobule, is involved in auditory localization to a greater extent than the more rostral temporal region (39).

The present demonstration of functional interaction of the midventrolateral prefrontal region with posterior auditory cortical areas during active retrieval from recent auditory memory, together with the anatomical studies cited above, suggests a cortical circuit critical for the active controlled retrieval of auditory information. These interactions occurred in the right hemisphere, which is known to play a greater role in the nonverbal processing of auditory information, such as melodies (40). It is interesting to note here also the demonstration of a right hemisphere asymmetry in the monkey auditory superior temporal gyrus for the processing of auditory nonvocal sounds (41, 42), suggesting a general primate asymmetry in favor of the right hemisphere for the processing of noncommunicative auditory stimulation. We expect that retrieval of other types of nonverbal auditory information will again involve the midventrolateral prefrontal cortex in interaction with auditory temporal and parietal areas, primarily in the right hemisphere.

What might be the neurophysiological role of the midventrolateral prefrontal cortex in memory retrieval? We and others have argued that the self-initiated or instructed isolation of a specific feature from a memory experience (active retrieval) involves top–down modulation by the ventrolateral prefrontal cortex within specific posterior association cortical areas involved in the perceptual and mnemonic processing of various types of information and that the increased interactions observed during the retrieval of particular aspects of the mnemonic experience reflect this top–down modulation (4, 7, 9, 43). Recordings in nonhuman primates in the ventrolateral prefrontal cortex have provided evidence at the neuronal level for this active retrieval process. It has been shown that a class of neurons exhibits differential instruction-related activity according to the type of retrieval that must be performed by the monkey, thus signaling the initiation or noninitiation of the retrieval of specific information (44). A second class of neurons increase their firing rate during the delay after the instruction cue and before the test phase and, importantly, their firing is not modulated in the control trials or during the precue interval when the encoded event is simply stored in memory (44). Thus, the firing of these neurons is not related to encoding, maintenance, or simple recognition of an event in memory but rather to the active retrieval of specific features. We conceptualize active retrieval as a top–down modulation of information within specific posterior cortical areas, that is, a type of attentional orienting toward a specific feature within a memory representation that leads to enhancement of the relevant feature and inhibition of the irrelevant features, resulting in successful retrieval of the required specific information (7, 44). 
Importantly, there is evidence from studies of patients that the prefrontal cortex has a modulatory control role in activity in posterior auditory areas (45, 46).

Given the evidence for specialized areas involved in auditory processing in the superior temporal region (47) and the anatomical connections of the midventrolateral prefrontal region to the auditory temporal region and adjacent inferior parietal lobule (14–20), it can be hypothesized that the midventrolateral prefrontal cortex plays a key role in the isolation of particular aspects of memorized auditory stimuli in interaction with specific posterior temporoparietal cortical areas by enhancing relevant and inhibiting irrelevant features. This interaction was reflected in the increased connectivity of the midventrolateral prefrontal cortex with these specific posterior association cortical regions.

In addition to the ventrolateral prefrontal cortex, there was increased activity in the paracingulate region of the dorsomedial frontal cortex during the active retrieval period (Table 1). Anatomical studies have shown that the midventrolateral prefrontal cortex is linked with this dorsomedial frontal region in the monkey (19), and there is resting-state connectivity evidence for this linkage in the human brain (48). It should also be pointed out that this dorsomedial frontal region was coactivated with the ventrolateral prefrontal region in our previous functional neuroimaging studies of active memory retrieval (9, 26). We have recently provided evidence in a behavioral-lesion study in the human brain that the dorsomedial frontal region is indeed involved in memory retrieval (10). In a visual–verbal paradigm it was shown that, whereas the ventrolateral prefrontal cortex is specifically involved in the isolation of particular information from a previously encoded event, the dorsomedial frontal region is more generally involved in memory retrieval. Thus, it can be argued that the specific contribution of the ventrolateral prefrontal cortex may be to interact with the posterior parietal and temporal association cortex for the isolation and hence retrieval of particular features of a memorized event, whereas the paracingulate medial frontal cortex, which has direct access to the hippocampal episodic memory system via the cingulate fasciculus (49, 50), plays a more general role in memory retrieval.

The present findings also address the question of whether functional processing in the prefrontal cortex can be segregated along the spatial vs. nonspatial dimension of the stimuli to be retrieved. One major theoretical position of lateral prefrontal function has been that the dorsal aspect of the midlateral prefrontal cortex (areas 46 and 9/46) is involved in spatial mnemonic processing, whereas the ventral aspect of the midlateral prefrontal cortex (areas 45 and 47/12) is involved in object processing (e.g., 30, 51, 52). Recent findings from recording studies in nonhuman primates, however, indicate that prefrontal neurons in both the dorsal and ventral prefrontal cortex respond to sound cues during a nonspatial auditory memory task (53). In the present auditory memory retrieval study, there were trials in which the location of the stimulus had to be retrieved and trials in which the object (i.e., the melody) had to be retrieved. Active retrieval of both the location and the melody led to activity increases within the midventrolateral prefrontal cortex and not in the middorsolateral prefrontal region (areas 46 and 9/46). These findings are consistent with results obtained using visual and tactile stimuli with the same experimental paradigm. The activity foci observed in the present study for the selective retrieval of the location or the melody fall within the same midventrolateral prefrontal cortical region where activity peaks were observed in relation to the active retrieval of visual or tactile information (8, 9). This evidence is consistent with the interpretation that the midventrolateral prefrontal region in interaction with posterior cortical areas is involved in active memory retrieval, whereas the middorsolateral prefrontal region in interaction with the parietal region is involved in the manipulation of the information in working memory (54, 55). 
Although activity increases during location retrieval were not observed within area 46 or area 9/46, there was an activity increase in the dorsal premotor region close to the frontal eye field (56). Activity in this region often increases in relation to explicit or implicit eye movement activity and also in many spatial working memory tasks (57), as would be expected in a region that is either explicitly (e.g., turn eyes to the left side) or implicitly (e.g., prepare an eye movement to the left side) involved in eye movement processing.

Note that, in the present experiment, we ensured that the difficulty of the two types of retrieval, namely the retrieval of the location and the retrieval of the melody, was equal across subjects and that the attentional demands and depth of encoding were identical for both conditions, because the subjects did not know what type of trial they were performing until the presentation of the retrieval cue. This is important, given that previous research had indicated modulations of activity with increasing specificity or difficulty of the task and attentional demands (4, 58).

As discussed above, the role of the midventrolateral prefrontal cortex in active retrieval is not unique to auditory stimuli. Active retrieval of other types of sensory information also depends on the midventrolateral prefrontal cortex, although in interaction with different posterior cortical association areas (8, 9). In previous studies, increased interaction was seen between the midventrolateral prefrontal cortex and the fusiform gyrus for the active retrieval of faces (8), the rostral inferior parietal region for the active retrieval of vibrotactile information (9), and the left hemisphere language areas for the active retrieval of verbal information (26).

Materials and Methods

Experimental Design and Testing Procedure.

Twelve healthy volunteers (five males, seven females) with normal hearing participated in the study after providing informed, written consent according to the institutional guidelines established by the Research Ethics Board of the Montreal Neurological Hospital and Institute. All of the subjects were right-handed as assessed with a handedness questionnaire (based on ref. 59), and their age ranged from 20 to 34 y (mean 24.1, SD 3.82). The subjects had no formal musical or voice training.

A set of auditory stimuli that lasted 1 s and consisted of melodies presented from different locations was created for this study. These auditory stimuli were combinations of four 0.25-s pulse trains at C4, F4, G4, and A4 that had different temporal envelopes and were presented at a comfortable sound intensity. A head-related transfer function was used to create a simulated virtual acoustic environment within the scanner. To aid in the localization of the sounds, the pulse trains were composed of 40 harmonics. The melodies were presented from different spatial locations 45° apart in a half-circle at 0° azimuth. The distance between the different locations was chosen on the basis of a pilot study that showed that the subjects were able to discriminate with accuracy melodies that were delivered at a 45° distance from one another (Fig. 1A). The same stimuli were used across all trials.
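The stimulus description above can be illustrated with a minimal sketch of how one 1-s melody might be synthesized from four 0.25-s tones of 40 harmonics each. Several details are assumptions that the text does not specify: equal-tempered pitch frequencies (A4 = 440 Hz), a 44.1-kHz sample rate, equal-amplitude harmonics, and a simple linear onset/offset ramp standing in for the temporal envelopes. The function names and the note order are hypothetical, and the head-related transfer function step is omitted.

```python
import math

SAMPLE_RATE = 44_100      # Hz; assumed, not stated in the text
NOTE_DURATION = 0.25      # s, from the text
N_HARMONICS = 40          # from the text

# Equal-tempered frequencies with A4 = 440 Hz; the tuning is an assumption.
NOTE_FREQS = {"C4": 261.63, "F4": 349.23, "G4": 392.00, "A4": 440.00}


def harmonic_tone(f0, duration=NOTE_DURATION, n_harmonics=N_HARMONICS,
                  sample_rate=SAMPLE_RATE, ramp=0.01):
    """Return one tone: the sum of the first n_harmonics of f0,
    shaped by a linear onset/offset ramp and scaled to a peak of 1."""
    n_samples = round(duration * sample_rate)
    n_ramp = round(ramp * sample_rate)
    samples = []
    for i in range(n_samples):
        t = i / sample_rate
        value = sum(math.sin(2 * math.pi * k * f0 * t)
                    for k in range(1, n_harmonics + 1))
        # Gain rises linearly from 0 at onset and falls to 0 at offset.
        gain = min(1.0, i / n_ramp, (n_samples - 1 - i) / n_ramp)
        samples.append(value * gain)
    peak = max(abs(s) for s in samples)
    return [s / peak for s in samples]


def make_melody(note_names):
    """Concatenate the tones for the given notes into one melody."""
    melody = []
    for name in note_names:
        melody.extend(harmonic_tone(NOTE_FREQS[name]))
    return melody


melody = make_melody(["C4", "G4", "F4", "A4"])  # hypothetical note order
```

With these assumed values the highest component (the 40th harmonic of A4, 17.6 kHz) stays below the Nyquist frequency of 22.05 kHz, so the sketch does not alias.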

There were three types of trial: experimental active retrieval, recognition control, and baseline control trials. During scanning, these three types of trial were pseudorandomly intermixed (Fig. 1B). All trials had the same sequence of events. The trial started with a fixation point appearing in the center of the screen for 1 s. The offset of the fixation point was followed by the encoding period of the trial, during which the screen was blank and an auditory stimulus was presented for 1 s: one of the four melodies presented from one of the four locations. Any one of the four melodies could be presented from any one of the four locations and, across trials, the same four melodies were presented from the same four locations in all possible combinations with the same probability of occurrence. The subjects had been instructed to encode and maintain the auditory stimulus (i.e., the specific melody and its location) until the test period. Following a delay of 3–4 s, an instructional visual cue appeared on the screen for 0.3 s providing an instruction about the test auditory stimulus, which appeared at the offset of the visual cue. The test auditory stimulus was presented for 1 s. In the active retrieval trials, the visual instructional cue indicated retrieval of either the particular melody or the particular location of the auditory stimulus presented during the encoding phase of the trial to compare it with the stimulus presented during the test period. 
A red circle instructed the subjects to retrieve the melody of the encoded stimulus, to decide whether the melody of the test stimulus was the same as that of the encoded stimulus, and to press the appropriate one of the two response keys to indicate the choice. A blue square instructed the subjects to retrieve the location of the encoded stimulus, to decide whether the location of the test stimulus was the same as that of the encoded stimulus, and to press the appropriate response key to indicate the decision. The subjects were required to press the left response key if the test stimulus matched the encoded stimulus along the required dimension and to press the right response key if it did not. In other words, during the test phase, the subjects had to isolate from the memorized encoded stimulus either the melody or the location to compare it with the test stimulus and press one of two response keys to indicate the decision. These trials constituted the experimental active retrieval condition.

If the visual cue was a yellow rectangle, the subjects would simply have to recognize the test stimulus, that is, to decide whether it was the same as the encoded stimulus and press the appropriate one of two response keys. In other words, in this case there was no need to isolate selectively one aspect of the encoded stimulus but simply to make a same/different memory decision. These recognition trials constituted the recognition control condition. In these trials, there was no need to retrieve specific aspects of the auditory stimulus (i.e., the melody or the location). In the test period of the recognition control trials, the subjects were presented either with the exact same stimulus encoded at the beginning of the trial or one of four other familiar melodies presented from one of four other familiar locations. These familiar melodies and locations were never presented during the encoding period. The melodies were composed of the same pulse trains as the melodies presented in the encoding period (i.e., C4, F4, G4, and A4) but in different combinations and with different temporal envelopes. The locations were different from the locations of the encoded stimulus and were always in the opposite quarter of the virtual acoustic environment compared with the encoded stimulus (Fig. 1A).

Finally, if the visual cue was a white cross, the subjects did not have to retrieve any information from the encoded stimulus because no test stimulus was presented, and they simply had to press any one of the two response keys as soon as the visual cue disappeared to control for motor output. These trials constituted the baseline control condition.

Because all trials shared a common encoding period and the different trials were presented in a pseudorandom order, it was not possible for the subjects to know what type of trial they were performing during the encoding phase, that is, until the presentation of the instruction by the visual cue. Thus, the strength of attention and encoding of the auditory stimulus (melody and location) was the same across all types of trial. Scanning commenced 3.9–5.4 s after the onset of the visual instruction cue to capture the hemodynamic response function related to the memory retrieval the subjects were making during the test period (Fig. 1C). In this manner, we also ensured that the scanner noise did not interfere with the perception of the auditory stimulus.

Data Acquisition and Analysis.

Scanning was performed with a 1.5-T Siemens Sonata MRI Scanner. After a high-resolution T1-weighted anatomical scan (whole-head, 1-mm³ isotropic resolution), six runs of 46 images each [35 oblique T2* gradient echo planar imaging (EPI) images, voxel size 3.7 × 3.7 × 3.7 mm, time of repetition (TR) 13.98 and 14.48 s, time of acquisition (TA) 2.975 s, echo time (TE) 45 ms, flip angle 90°] sensitive to the BOLD signal were acquired. Each run consisted of 46 trials and lasted between 10 and 11 min. A single-trial sparse-sampling design was used to ensure that the subjects were able to hear the stimuli without the scanner noise and that there would be no contamination of the BOLD response to the auditory stimuli from the BOLD response to the scanner acquisition noise (60, 61) (Fig. 1C). The scan acquisition occurred after the test period of each trial.

We excluded from the analysis the first two frames of each run and the trials in which the subjects made an error. Images were realigned by using AFNI image registration software (62), blurred, and nonlinearly registered in the Montreal Neurological Institute standardized stereotaxic space (63, 64). Statistical analyses of the functional data were performed with FMRISTAT (65). A detailed description of the methods is provided in SI Materials and Methods.

SI Behavioral Results

The mean success rate of the subjects on the experimental active retrieval and recognition control trials was above 95% [96.0% (±3.2%, SD) for active retrieval and 98.6% (±2.6%, SD) for recognition control trials]. The mean reaction time (on the correct trials) was 1.169 s (±0.238 s, SD) for the active retrieval trials and 1.058 s (±0.207 s, SD) for the recognition control trials. There was a difference in accuracy [t(11) = 2.92, P = 0.013] as well as reaction times [t(11) = 4.46, P = 0.001] between the active retrieval and recognition control trials, which was expected and reflects the additional requirement in executive control processing of the experimental trials. Note that error trials were excluded from the fMRI BOLD signal analysis and, therefore, the activity differences reported in this study were based only on trials in which the subjects responded correctly. For the baseline control trials during which no test stimulus was presented, all subjects responded within the allocated time period of 1 s after the presentation of the white cross to indicate that they had perceived the visual cue (mean reaction time 0.428 s, ±0.119 s, SD). Importantly, in the experimental active retrieval trials, there was no significant difference in reaction times when the subjects were retrieving the melody compared with when they were retrieving the location of the encoded stimulus [1.168 s (±0.246 s, SD) and 1.171 s (±0.234 s, SD), respectively; paired t test; t(11) = 0.17, P = 0.87]. During training, the subjects performed equally well on the two types of active retrieval trial, but during the scanning they made a few more errors in the trials in which they had to retrieve the location of the encoded stimulus compared with the retrieval of the melody [mean success rate 94.1% (±5.0%, SD) and 98.0% (±2.4%, SD), respectively; paired t test; t(11) = 2.95, P = 0.01]. 
This difference was driven by two subjects who made more mistakes on the active retrieval trials for location than the active retrieval trials for melody, all other subjects having essentially the same scores on the two types of active retrieval trial. We can, therefore, conclude that the selective isolation of the location and the melody were comparable in terms of difficulty for the majority of the subjects.
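As a reading aid for the statistics above, the following minimal sketch shows how a two-tailed P value follows from a reported t value and its degrees of freedom (here df = 11). It is not the authors' analysis code: the function names `t_pdf` and `two_tailed_p` are hypothetical, and the P value is obtained by numerically integrating the Student's t density with Simpson's rule rather than by a statistics package.

```python
import math

def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    norm = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return norm * (1 + x * x / df) ** (-(df + 1) / 2)

def two_tailed_p(t_value: float, df: int, steps: int = 2000) -> float:
    """Two-tailed P value from integrating the density over [0, |t|] (Simpson's rule)."""
    upper = abs(t_value)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = 2 * total * h / 3  # probability mass between -|t| and +|t|
    return 1 - central_area

# t values as reported in the text; the labels are descriptive only.
for label, t_value in [("accuracy", 2.92), ("reaction time", 4.46), ("melody vs. location RT", 0.17)]:
    print(f"{label}: t(11) = {t_value}, two-tailed P = {two_tailed_p(t_value, 11):.3f}")
```

Small discrepancies between the printed values and those reported in the text are to be expected, because the reported t values are rounded.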

In a separate analysis, we found no differences in either accuracy or reaction times across all types of trial when we separated the subjects into two groups on the basis of the scanning protocol, namely the time of repetition (TR) used (14.48 and 13.98 s, respectively).

SI Additional Imaging Results

Although no behavioral differences were observed between the two groups of subjects scanned with the two different TRs, we examined whether the different scanning procedures led to differences in the activity patterns between the two groups. There were no differences in activity patterns obtained from the comparisons of the test period of the experimental active retrieval and the test period of the recognition control trials for each of the two groups, separately. In addition, the direct comparison of the test period of the experimental active retrieval trials between the two groups did not yield any significant activity differences. The subjects were thus grouped together and the imaging results were analyzed from the group as a whole.

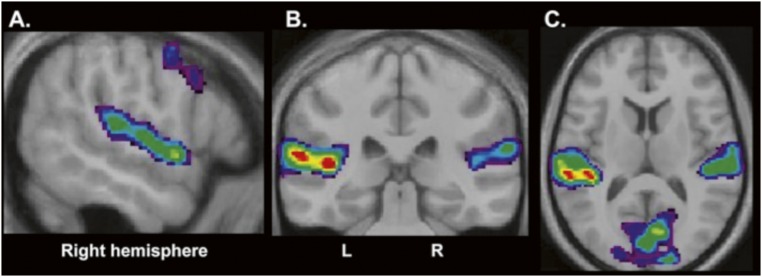

We also compared the test periods of the active retrieval and the recognition control trials in which a sound was presented with the test period of the baseline trials in which no sound was presented to examine the BOLD signal changes that accompanied the presentation of auditory stimuli per se. As expected, this comparison revealed activity within the primary and secondary auditory cortical areas, bilaterally: within both Heschl’s gyri and the planum temporale, as well as along the anterior superior temporal gyrus in the right hemisphere (Fig. S1 and Table S2).

Fig. S1.

Results from the comparison of the test period of all trials in which a sound was presented (i.e., active retrieval and recognition control trials) with the baseline control trials in which no sound was presented. (A) Sagittal section at x 56 to illustrate the activity increases along the right superior temporal gyrus in the primary and secondary auditory areas. (B and C) Coronal section at y −32 (B) and horizontal section at z 10 (C) to illustrate bilateral increases in activity in the planum temporale and Heschl’s gyri.

Although the primary aim of the present study was to evaluate the role of the midventrolateral prefrontal cortex in memory retrieval, the data are relevant to an argument that has existed in the literature regarding the role of the midlateral prefrontal cortex in memory. One theoretical position on lateral prefrontal function has been that the dorsal midlateral prefrontal cortex (areas 46 and 9/46) is involved in spatial working memory, whereas the ventral midlateral prefrontal cortex (areas 45 and 47/12) is involved in nonspatial working memory (29–31, 51). Recent findings, however, from single-neuron recording studies in nonhuman primates (44) and functional neuroimaging studies in human subjects have not supported such a dissociation in the prefrontal cortex (8, 9, 26); they are consistent with the theoretical position that the dorsal and ventral parts of the midlateral prefrontal cortex participate in different types of processing within working memory, regardless of the spatial or nonspatial nature of the stimuli (54). In the present fMRI study, we compared, separately, the test period of the trials in which the location of the encoded stimulus had to be retrieved and the trials in which the melody (nonspatial aspect of the stimulus) had to be retrieved with the test period of the recognition control trials. Both comparisons yielded significant activity increases within the midventrolateral prefrontal cortex but not within the middorsolateral prefrontal cortex (Table S1), indicating that the midventrolateral prefrontal cortex is involved in the selective retrieval of both the spatial and nonspatial aspects of a stimulus. For the retrieval of the location, there were also bilateral activity increases in the dorsal premotor cortex [MNI coordinates (x, y, z) 26, −2, 50, t = 4.068, right hemisphere; −42, 2, 50, t = 4.571, left hemisphere] close to the frontal eye field region (56).

Table S1.

Peak maxima within the midventrolateral prefrontal cortex from the separate comparison of the (i) melody and (ii) location active retrieval test periods with the recognition control test period

Stereotaxic coordinates are in MNI space. Melody columns: active retrieval melody minus recognition. Location columns: active retrieval location minus recognition.

| Brain area | Melody x | Melody y | Melody z | Melody t value | Location x | Location y | Location z | Location t value |
|---|---|---|---|---|---|---|---|---|
| Right hemisphere | | | | | | | | |
| MVLPFC (area 45) | 50 | 20 | 18 | 3.5 | 54 | 20 | 18 | 4.17 |
| MVLPFC (area 47/12) | 34 | 22 | 2 | 4.54 | 36 | 26 | −2 | 3.65 |
| MVLPFC (rostral) | 56 | 40 | 0 | 3.17 | | | | |
| Left hemisphere | | | | | | | | |
| MVLPFC (area 45) | −56 | 22 | 14 | 4.13 | −52 | 22 | 24 | 4.5 |
| MVLPFC (area 47/12) | −36 | 24 | 0 | 4.05 | −32 | 20 | 6 | 5.25 |

MVLPFC, midventrolateral prefrontal cortex.

Table S2.

Peak maxima within the auditory regions from the comparison of all test periods in which a sound was presented with the baseline control test period in which no sound was presented

Stereotaxic coordinates are in MNI space.

| Brain area | x | y | z | t value |
|---|---|---|---|---|
| Right hemisphere | | | | |
| Anterior superior temporal gyrus | 56 | 4 | −4 | 5.31 |
| Planum temporale | 60 | −10 | 6 | 5.43 |
| Heschl’s gyrus | 44 | −26 | 10 | 5.07 |
| Left hemisphere | | | | |
| Planum temporale | −62 | −32 | 16 | 6.21 |
| Heschl’s gyrus | −42 | −32 | 10 | 6.27 |

To examine further the dissociation between the dorsal and ventral parts of the midlateral prefrontal cortex, we calculated the mean BOLD signal change between the active retrieval and recognition control trials during the test period within the middorsolateral and midventrolateral prefrontal regions. For the ventrolateral prefrontal cortex, we calculated the mean BOLD signal change during the test period within a 10-mm3 radius centered on all of the peaks of activity within the midventrolateral prefrontal cortex (both within area 45 and area 47/12) for each subject. We analyzed the results separately for each peak and also computed an average for the midventrolateral prefrontal cortex. Because no peaks of activity were observed within the middorsolateral prefrontal cortex, we calculated the mean BOLD signal change within a 10-mm3 radius centered around the following coordinates in the right hemisphere: x 21, y 40, z 26, selected on the basis of an earlier study that focused on the role of the middorsolateral prefrontal cortex in the monitoring of information in working memory (55). We transposed this peak to the left hemisphere to calculate the mean BOLD signal change in the left hemisphere dorsolateral prefrontal cortex and calculated an average mean BOLD signal change for the middorsolateral prefrontal cortex of each subject. The average BOLD signal in the midventrolateral prefrontal cortex was significantly greater than the average signal in the middorsolateral prefrontal cortex both for the active retrieval of the spatial (location) [t(11) = 2.8, P < 0.05] and the active retrieval of the nonspatial (melody) [t(11) = 3.3, P < 0.05] features of the encoded stimulus. The signal from each one of the midventrolateral prefrontal peaks was greater than the signal from the middorsolateral prefrontal peaks (P < 0.05). 
These findings provide further support for the argument that the midventrolateral prefrontal cortex is the key region for the retrieval of both the spatial and the nonspatial features of auditory information in memory.
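The region-of-interest procedure described above (averaging the signal around a peak and transposing a right-hemisphere peak to the left hemisphere) can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the region is treated as a sphere of 10-mm radius around the peak, the helper names `mirror_to_left` and `mean_signal_in_sphere` are hypothetical, and the signal values in `toy_map` are invented placeholders rather than study data.

```python
import math

def mirror_to_left(peak):
    """Transpose a right-hemisphere MNI peak to the left hemisphere by negating x."""
    x, y, z = peak
    return (-x, y, z)

def mean_signal_in_sphere(signal_by_voxel, center, radius_mm):
    """Average the values of all voxels whose MNI coordinates lie within radius_mm of center."""
    values = [
        value
        for coord, value in signal_by_voxel.items()
        if math.dist(coord, center) <= radius_mm
    ]
    if not values:
        raise ValueError("no voxels fall inside the sphere")
    return sum(values) / len(values)

right_peak = (21, 40, 26)               # middorsolateral coordinates given in the text
left_peak = mirror_to_left(right_peak)  # same y and z, opposite sign of x

# Hypothetical signal-change values keyed by MNI coordinate (placeholders, not study data).
toy_map = {(21, 40, 26): 0.10, (25, 40, 26): 0.20, (40, 40, 26): 0.90, (-21, 40, 26): 0.30}
right_mean = mean_signal_in_sphere(toy_map, right_peak, radius_mm=10)
left_mean = mean_signal_in_sphere(toy_map, left_peak, radius_mm=10)
print(left_peak, right_mean, left_mean)
```

In a full analysis the per-subject means from the two hemispheres would then be averaged and compared across regions with a paired test.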

The present results demonstrated activity increases within both areas 45 and 47/12 of the midventrolateral prefrontal cortex. Because ventrolateral prefrontal cortical area 47/12 folds within the horizontal ramus and the depth of the sulcus is considerable, the caudal extent of area 47/12 spreads as far as the anterior insula. Cytoarchitectonic analyses have shown that the cortex of area 47/12 is a granular prefrontal cortex that can be distinguished from the dysgranular limbic cortex of the anterior insula (27, 28). In successive sections of MRI images, the anterior end of the insula defined by the rostral-most extension of the circular sulcus of the insula can clearly be differentiated from the caudal-most extension of the horizontal ramus of the Sylvian fissure. In the present study, we confirmed that the activation focus reported as caudal area 47/12 was indeed within the horizontal ramus of the Sylvian fissure and not in the anterior insula, although the activity spreads into the anterior insular cortex that is related to the ventrolateral prefrontal cortex because (i) area 47/12 is thought to have developed out of a differentiation from the anterior insular cortex (28) and (ii) these two areas are directly connected to each other. In addition, the anteroposterior extent of the insula is known to be marked by the constant presence of the claustrum, which is continually adjacent to layer VI of the insular cortex. It is this characteristic that has led to the term “claustro-insular region.” We use the presence of the claustrum as an additional criterion to define the anterior-most extension of the insular cortex. As can be seen in sections y 22, y 24, and y 26 in Fig. 2 in the main text, no claustrum (or putamen, which lies next to the claustrum) can be visualized at these sections, indicating that the activity reported as area 47/12 lies anterior to the most anterior part of the insula.

The interaction analysis examined whether there was a significant change in functional connectivity between the midventrolateral prefrontal cortex and the auditory superior temporal region and adjacent inferior parietal region. A separate analysis was carried out for the active retrieval of each of the two types of auditory information, namely melody and location, compared with the recognition control condition. When retrieval of the specific melody of the auditory stimulus was required, area 45 in the right hemisphere interacted with the auditory cortical region on the dorsal bank of the superior temporal sulcus (Fig. 2) [MNI coordinates (x, y, z) 50, −22, 0, t = 3.47, P = 0.0005 uncorrected; 60, −44, 8, t = 3.43, P = 0.0005 uncorrected; 56, −50, 18, t = 4.18, P = 0.0004 uncorrected], as well as with the adjacent part of the inferior parietal lobule, namely area PG [MNI coordinates (x, y, z) 44, −64, 20, t = 3.67, P = 0.0002 uncorrected]. When the retrieval of the location of the presented auditory stimulus was required, area 45 in the right hemisphere interacted with the same area of the inferior parietal lobule (area PG) in the right hemisphere (Fig. 2) [MNI coordinates (x, y, z) 54, −64, 20, t = 4.38, P = 0.00003 uncorrected].

SI Materials and Methods

The auditory stimuli were presented through insert earphones (E-A-RTONE) and the visual stimuli through a liquid crystal display (LCD) projector with a mirror system, and the subjects responded by pressing on an MR-compatible optical computer mouse. The stimulus presentation and the recording of the subjects’ responses were controlled by E-Prime (Psychology Software Tools). The subjects became familiar with the auditory stimuli during training sessions that preceded the scanning session.

The stimuli used in the experiment were selected on the basis of a pilot study to ensure that the difficulty level of discriminating one melody from another was comparable to that of discriminating one location from another. To eliminate between-subject variability due to the different anatomies of the subjects’ ears, we used insert earphones, and a high-pass filter was applied to compensate for the attenuation of the high frequencies by these insert earphones. In addition, all stimuli were designed so that the subjects could not easily verbalize them. All melodies shared the same four-pulse trains in different combinations for the same duration, whereas the locations from where the melodies were presented were all at 0° azimuth and were selected so that no verbal labels, such as right, left, or center, could be easily applied to them. There were two locations in the right quarter and two in the left quarter of the virtual acoustic environment. As can be seen in Fig. 1A of the main text, none of the stimulus locations were in the far right, far left, or center of the virtual acoustic environment, where they could easily be verbalized.

Note that, across trials, all four melodies had been presented in all four possible locations in the virtual acoustic environment and, therefore, there was no strong or stable association between any one of the melodies and any specific location. Thus, retrieval of the required information on each trial could not be based on strong stimulus-to-location associations. For instance, if melody A was presented in location X during the encoding period and melody A was presented in location Y during the test period, the subjects would provide a different answer depending on the feature of the encoded stimulus that they had been instructed to retrieve. If the visual cue indicated the retrieval of the melody, the subjects would have to press the left response key because the melody of the test stimulus would be the same as that of the encoded melody. By contrast, if the visual cue indicated the retrieval of the location of the encoded stimulus, the subjects would have to press the right response key because the location of the test stimulus would be different from that of the encoded stimulus.
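The response rule in this example can be written out as a short sketch. The class `Stimulus`, the function `expected_key`, and the labels "A", "X", and "Y" are hypothetical names introduced only for illustration; the key mapping (left for a match on the cued dimension, right for a mismatch) follows the task description.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Stimulus:
    melody: str    # hypothetical label, e.g. "A"
    location: str  # hypothetical label, e.g. "X"

def expected_key(cue: str, encoded: Stimulus, test: Stimulus) -> str:
    """Return "left" if the test stimulus matches on the cued dimension, else "right"."""
    if cue == "melody":
        match = encoded.melody == test.melody
    elif cue == "location":
        match = encoded.location == test.location
    else:
        raise ValueError(f"unknown cue: {cue}")
    return "left" if match else "right"

encoded = Stimulus(melody="A", location="X")
test = Stimulus(melody="A", location="Y")
print(expected_key("melody", encoded, test))    # left: same melody
print(expected_key("location", encoded, test))  # right: different location
```

The same encoded and test stimuli thus call for opposite responses depending on the cue, which is why the decision cannot rest on a fixed melody-to-location association.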

Half of the subjects were scanned with a TR of 14.48 s and the other half were scanned with a TR of 13.98 s. The time of acquisition was the same across the two groups (TA 2.975 s). In the first group, we acquired the images 4.4, 4.9, and 5.4 s after the cue onset, whereas in the second group we acquired the images 3.9, 4.4, and 4.9 s after the cue onset. We used this acquisition protocol to ensure that we sampled adequately across the hemodynamic response function. There were no differences between the two groups in terms of their performance (errors and reaction times) and functional results, and we are therefore presenting the combined results from the two groups in Results. Trials in which the subjects made an error were excluded from the fMRI analysis.

Images from each functional run were realigned with AFNI image registration software using the third frame of the first run as a reference (62) and smoothed using a 6-mm full-width half-maximum (FWHM) isotropic Gaussian kernel. Images from each subject were then linearly registered to Montreal Neurological Institute (MNI) stereotaxic space using in-house dedicated software (63). As a second step, we performed a nonlinear registration for the anatomical images that was estimated on MRI data blurred with an 8-mm FWHM Gaussian kernel and a 3D lattice grid with 4-mm spacing between nodes. This transform corrects for overall brain shape and aligns major cortical structures (e.g., the central sulcus and the Sylvian fissure) but does not necessarily align secondary and tertiary sulci and gyri (64).

The data analysis was performed using the software FMRISTAT, created in-house and implemented in MATLAB (65) (available at www.math.mcgill.ca/keith/fmristat). The data were first converted to a percentage of the whole volume. The statistical analysis of the percentages was based on a linear model with correlated errors. In the design matrix, we defined the events of interest (i.e., the test periods of the melody active retrieval trials, the location active retrieval trials, and the recognition control trials) and the baseline event (i.e., the test period of the baseline control trials). Each column of the design matrix contained an event variable, and each row represented an acquisition. The design matrix was set up in this manner due to the sparse-sampling acquisition used during the scanning because each test period was followed by one acquisition. Temporal drift was removed by adding a cubic spline in the design matrix, and spatial drift was removed by adding a covariate in the whole-volume average. This produced estimates of effects and their SEs. The effects of interest were specified by a vector of contrast weights that gave a weighted sum or compound of parameter estimates referred to as a contrast. We were interested mainly in the contrast between the experimental active retrieval and the recognition test periods and between all test periods in which an auditory test stimulus was presented and the control baseline test period in which no auditory stimulus was presented. Additional contrasts of interest were between the melody active retrieval test period and the recognition test period and between the location active retrieval test period and the control recognition test period. From these contrasts, t statistics were produced for each contrast and each functional run (65).
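The structure of the design matrix and of a contrast can be illustrated with a simplified sketch. It is not FMRISTAT and omits parts of the model described above: errors are assumed independent (the actual model used correlated errors), and the drift terms and whole-volume covariate are left out. The names `CONDITIONS`, `design_matrix`, and `fit_and_contrast` are hypothetical, and the signal values are invented placeholders, not study data.

```python
import math

CONDITIONS = ["melody", "location", "recognition", "baseline"]

def design_matrix(labels):
    """One row per acquisition, one indicator column per event type."""
    return [[1.0 if label == cond else 0.0 for cond in CONDITIONS] for label in labels]

def fit_and_contrast(labels, signal, weights):
    """Least-squares effects for an indicator design, then effect, SE, and t for one contrast."""
    X = design_matrix(labels)
    columns = list(zip(*X))
    counts = [sum(col) for col in columns]
    # With disjoint indicator columns, each least-squares effect is the condition mean.
    betas = [sum(x * y for x, y in zip(col, signal)) / n for col, n in zip(columns, counts)]
    fitted = [sum(b * x for b, x in zip(betas, row)) for row in X]
    df = len(signal) - len(CONDITIONS)
    sigma2 = sum((y - f) ** 2 for y, f in zip(signal, fitted)) / df
    effect = sum(w * b for w, b in zip(weights, betas))
    se = math.sqrt(sigma2 * sum(w * w / n for w, n in zip(weights, counts)))
    return effect, se, effect / se

# Placeholder acquisitions (one per test period), in percent of the whole-volume signal.
labels = ["melody", "location", "recognition", "baseline"] * 3
signal = [1.2, 1.0, 0.5, 0.0, 1.0, 1.2, 0.7, 0.1, 1.1, 1.1, 0.6, -0.1]

# Active retrieval (melody and location averaged) minus recognition.
effect, se, t_stat = fit_and_contrast(labels, signal, weights=[0.5, 0.5, -1.0, 0.0])
print(effect, se, t_stat)
```

Changing the weight vector to, for example, `[1.0, 0.0, -1.0, 0.0]` would give the melody active retrieval minus recognition contrast in the same way.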

As a second step, functional runs within each subject were combined using a fixed effects analysis that involved estimating the ratio of the random effects variance to the fixed effects variance and then smoothing this ratio with an infinitely large FWHM Gaussian filter, yielding a global ratio of 1. To generate the group average, the results across subjects were combined using a mixed effects analysis by first estimating the ratio of the random effects variance to the fixed effects variance and then regularizing this ratio by spatial smoothing with an FWHM Gaussian filter to achieve 100 effective degrees of freedom (65).

The resulting t-statistic images were thresholded using the minimum given by a Bonferroni correction, random field theory, and discrete local maxima taking into account the nonisotropic spatial correlation of the errors (66). For a single voxel in an exploratory search involving all peaks within an estimated gray matter of 600 cm³ covered by the slices, the threshold for reporting a peak as significant (P < 0.05) was t = 4.7 (67). A cluster volume extent >530 mm³ with t > 3 was significant (P < 0.05) corrected for multiple comparisons (68). For the midventrolateral prefrontal cortex, we used a mask that contained all voxels that belonged to this region in both the left and right hemispheres and calculated the volume covered by the slices. The threshold for reporting a peak as significant within that volume (P < 0.05) was t = 4.2, whereas a cluster volume extent >169 mm³ with t > 3 was significant (P < 0.05) corrected for multiple comparisons.
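To give a sense of scale for the Bonferroni component of this thresholding, the sketch below estimates the number of voxels in the search volume and the corresponding per-voxel significance level. It assumes that the search volume is counted in voxels of the acquired 3.7-mm isotropic size, which the text does not state; the function name `bonferroni_alpha` is hypothetical. The reported thresholds were the minimum across three methods, so this arithmetic covers only one of them.

```python
def bonferroni_alpha(search_volume_cm3: float, voxel_size_mm: float, alpha: float = 0.05):
    """Return (approximate voxel count, per-voxel alpha) for a Bonferroni correction."""
    voxel_volume_mm3 = voxel_size_mm ** 3
    n_voxels = search_volume_cm3 * 1000 / voxel_volume_mm3  # 1 cm^3 = 1000 mm^3
    return n_voxels, alpha / n_voxels

# 600 cm^3 of gray matter and 3.7-mm voxels are the values given in the text.
n_voxels, per_voxel_alpha = bonferroni_alpha(600, 3.7)
print(f"about {n_voxels:.0f} voxels, per-voxel alpha = {per_voxel_alpha:.2e}")
```

The per-voxel level would then be converted into a t threshold using the effective degrees of freedom of the analysis.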

For the interaction analysis, for each subject, we identified, bilaterally, the reference voxels in the midventrolateral prefrontal cortex in which the contrast between the active retrieval and recognition test periods revealed activity increases [i.e., the caudal and rostral parts of area 47/12 and area 45 (27)]. We used the general linear model with regressors for the events of interest and the drift, so as to account for their effect, and then added a regressor for the time course at the reference voxel. Finally, we added as a regressor variable an interaction (product) between the task events and the reference voxel time course. The voxel values were extracted for each subject from native space after having applied slice time correction. Finally, we estimated the effect, SE, and t statistic for the interaction in the same manner as described above. Increased functional connectivity for the test period of the experimental active retrieval trials compared with the same period of the recognition control trials between the reference voxels and other voxels in the brain is represented by positive t values. Given that we had an a priori hypothesis that the midventrolateral prefrontal cortex would demonstrate increased functional connectivity with the posterior auditory areas during the active retrieval test period, we are reporting interaction results from these areas at P < 0.005 uncorrected.
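The interaction regressor described above, the product of the task events and the reference voxel time course, can be sketched as follows. The coding of the task regressor (+1 for active retrieval, −1 for recognition, 0 for baseline) is an assumption made for illustration, as are the function name `interaction_regressor` and the placeholder seed values; the text does not specify these details.

```python
def interaction_regressor(task, seed):
    """Element-wise product of a task regressor and a reference-voxel time course."""
    if len(task) != len(seed):
        raise ValueError("task and seed must have one value per acquisition")
    return [t * s for t, s in zip(task, seed)]

# One value per acquisition. Assumed coding: +1 active retrieval, -1 recognition, 0 baseline.
task = [1, -1, 1, -1, 0]
seed = [0.5, 0.2, -0.1, 0.4, 0.3]  # placeholder reference-voxel values, not study data

ppi = interaction_regressor(task, seed)
print(ppi)  # [0.5, -0.2, -0.1, -0.4, 0.0]
```

In the full model this column sits alongside the task, drift, and reference-voxel regressors, so that a positive coefficient on it indicates stronger coupling with the reference voxel during active retrieval than during recognition.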

Acknowledgments

We thank P. Ahad for help with stimulus construction, Dr. P. Bermudez for help with the implementation of the task, and Drs. R. Zatorre, B. Pike, K. Worsley, J.-K. Chen, and R. Amsel for advice. Supported by Canadian Institutes of Health Research Grant FDN-143212 (to M.P.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1520432113/-/DCSupplemental.

References

- 1.Petrides M, Alivisatos B, Evans AC. Functional activation of the human ventrolateral frontal cortex during mnemonic retrieval of verbal information. Proc Natl Acad Sci USA. 1995;92(13):5803–5807. doi: 10.1073/pnas.92.13.5803. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Amunts K, et al. Analysis of neural mechanisms underlying verbal fluency in cytoarchitectonically defined stereotaxic space—The roles of Brodmann areas 44 and 45. Neuroimage. 2004;22(1):42–56. doi: 10.1016/j.neuroimage.2003.12.031. [DOI] [PubMed] [Google Scholar]

- 3.Wagner S, Sebastian A, Lieb K, Tüscher O, Tadić A. A coordinate-based ALE functional MRI meta-analysis of brain activation during verbal fluency tasks in healthy control subjects. BMC Neurosci. 2014;15(19):19. doi: 10.1186/1471-2202-15-19. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Badre D, Poldrack RA, Paré-Blagoev EJ, Insler RZ, Wagner AD. Dissociable controlled retrieval and generalized selection mechanisms in ventrolateral prefrontal cortex. Neuron. 2005;47(6):907–918. doi: 10.1016/j.neuron.2005.07.023. [DOI] [PubMed] [Google Scholar]

- 5.Thompson-Schill SL, D’Esposito M, Aguirre GK, Farah MJ. Role of left inferior prefrontal cortex in retrieval of semantic knowledge: A reevaluation. Proc Natl Acad Sci USA. 1997;94(26):14792–14797. doi: 10.1073/pnas.94.26.14792. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Badre D, Wagner AD. Left ventrolateral prefrontal cortex and the cognitive control of memory. Neuropsychologia. 2007;45(13):2883–2901. doi: 10.1016/j.neuropsychologia.2007.06.015. [DOI] [PubMed] [Google Scholar]

- 7.Petrides M. The mid-ventrolateral prefrontal cortex and active mnemonic retrieval. Neurobiol Learn Mem. 2002;78(3):528–538. doi: 10.1006/nlme.2002.4107. [DOI] [PubMed] [Google Scholar]

- 8.Kostopoulos P, Petrides M. The mid-ventrolateral prefrontal cortex: Insights into its role in memory retrieval. Eur J Neurosci. 2003;17(7):1489–1497. doi: 10.1046/j.1460-9568.2003.02574.x. [DOI] [PubMed] [Google Scholar]

- 9.Kostopoulos P, Albanese MC, Petrides M. Ventrolateral prefrontal cortex and tactile memory disambiguation in the human brain. Proc Natl Acad Sci USA. 2007;104(24):10223–10228. doi: 10.1073/pnas.0700253104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Chapados C, Petrides M. Ventrolateral and dorsomedial frontal cortex lesions impair mnemonic context retrieval. Proc R Soc B. 2015;282(1801):20142555. doi: 10.1098/rspb.2014.2555. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Squire LR, Zola-Morgan S. The medial temporal lobe memory system. Science. 1991;253(5026):1380–1386. doi: 10.1126/science.1896849. [DOI] [PubMed] [Google Scholar]

- 12.Scoville WB, Milner B. Loss of recent memory after bilateral hippocampal lesions. J Neurol Neurosurg Psychiatry. 1957;20(1):11–21. doi: 10.1136/jnnp.20.1.11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Mishkin M. A memory system in the monkey. Philos Trans R Soc Lond B Biol Sci. 1982;298(1089):83–95. doi: 10.1098/rstb.1982.0074. [DOI] [PubMed] [Google Scholar]

- 14.Petrides M, Pandya DN. Association fiber pathways to the frontal cortex from the superior temporal region in the rhesus monkey. J Comp Neurol. 1988;273(1):52–66. doi: 10.1002/cne.902730106. [DOI] [PubMed] [Google Scholar]

- 15.Barbas H. Architecture and cortical connections of the prefrontal cortex in the rhesus monkey. Adv Neurol. 1992;57:91–115. [PubMed] [Google Scholar]

- 16.Romanski LM, Bates JF, Goldman-Rakic PS. Auditory belt and parabelt projections to the prefrontal cortex in the rhesus monkey. J Comp Neurol. 1999;403(2):141–157. doi: 10.1002/(sici)1096-9861(19990111)403:2<141::aid-cne1>3.0.co;2-v. [DOI] [PubMed] [Google Scholar]

- 17.Kaas JH, Hackett TA. Subdivisions of auditory cortex and processing streams in primates. Proc Natl Acad Sci USA. 2000;97(22):11793–11799. doi: 10.1073/pnas.97.22.11793. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Romanski LM, et al. Dual streams of auditory afferents target multiple domains in the primate prefrontal cortex. Nat Neurosci. 1999;2(12):1131–1136. doi: 10.1038/16056. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Petrides M, Pandya DN. Comparative cytoarchitectonic analysis of the human and the macaque ventrolateral prefrontal cortex and corticocortical connection patterns in the monkey. Eur J Neurosci. 2002;16(2):291–310. doi: 10.1046/j.1460-9568.2001.02090.x. [DOI] [PubMed] [Google Scholar]

- 20.Petrides M, Pandya DN. Distinct parietal and temporal pathways to the homologues of Broca’s area in the monkey. PLoS Biol. 2009;7(8):e1000170. doi: 10.1371/journal.pbio.1000170. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Romanski LM, Averbeck BB, Diltz M. Neural representation of vocalizations in the primate ventrolateral prefrontal cortex. J Neurophysiol. 2005;93(2):734–747. doi: 10.1152/jn.00675.2004. [DOI] [PubMed] [Google Scholar]

- 22.Romanski LM, Goldman-Rakic PS. An auditory domain in primate prefrontal cortex. Nat Neurosci. 2002;5(1):15–16. doi: 10.1038/nn781. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Snyder HR, Banich MT, Munakata Y. Choosing our words: Retrieval and selection processes recruit shared neural substrates in left ventrolateral prefrontal cortex. J Cogn Neurosci. 2011;23(11):3470–3482. doi: 10.1162/jocn_a_00023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Crottaz-Herbette S, Anagnoson RT, Menon V. Modality effects in verbal working memory: Differential prefrontal and parietal responses to auditory and visual stimuli. Neuroimage. 2004;21(1):340–351. doi: 10.1016/j.neuroimage.2003.09.019. [DOI] [PubMed] [Google Scholar]

- 25.Newman SD, Twieg D. Differences in auditory processing of words and pseudowords: An fMRI study. Hum Brain Mapp. 2001;14(1):39–47. doi: 10.1002/hbm.1040. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Kostopoulos P, Petrides M. Left mid-ventrolateral prefrontal cortex: Underlying principles of function. Eur J Neurosci. 2008;27(4):1037–1049. doi: 10.1111/j.1460-9568.2008.06066.x. [DOI] [PubMed] [Google Scholar]

- 27.Petrides M, Pandya DN. Comparative architectonic analysis of the human and the macaque frontal cortex. In: Boller F, Grafman J, editors. Handbook of Neuropsychology. Vol 9. Elsevier; Amsterdam: 1994. pp. 17–58. [Google Scholar]

- 28.Pandya D, Seltzer B, Petrides M, Cipolloni PB. Cerebral Cortex: Architecture, Connections, and the Dual Origin Concept. Oxford Univ Press; New York: 2015. [Google Scholar]

- 29.Goldman-Rakic PS. Circuitry of primate prefrontal cortex and regulation of behavior by representational memory. In: Plum F, Mountcastle U, editors. Handbook of Physiology. Vol 5. Am Physiol Soc; Washington, DC: 1987. pp. 373–417. [Google Scholar]

- 30.Goldman-Rakic PS. Regional and cellular fractionation of working memory. Proc Natl Acad Sci USA. 1996;93(24):13473–13480. doi: 10.1073/pnas.93.24.13473.

- 31.Levy R, Goldman-Rakic PS. Segregation of working memory functions within the dorsolateral prefrontal cortex. Exp Brain Res. 2000;133(1):23–32. doi: 10.1007/s002210000397.

- 32.Colombo M, Rodman HR, Gross CG. The effects of superior temporal cortex lesions on the processing and retention of auditory information in monkeys (Cebus apella). J Neurosci. 1996;16(14):4501–4517. doi: 10.1523/JNEUROSCI.16-14-04501.1996.

- 33.Zatorre RJ, Evans AC, Meyer E. Neural mechanisms underlying melodic perception and memory for pitch. J Neurosci. 1994;14(4):1908–1919. doi: 10.1523/JNEUROSCI.14-04-01908.1994.

- 34.Rauschecker JP, Tian B. Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proc Natl Acad Sci USA. 2000;97(22):11800–11806. doi: 10.1073/pnas.97.22.11800.

- 35.Ungerleider LG, Mishkin M. Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW, editors. Analysis of Visual Behaviour. MIT Press; Cambridge, MA: 1982. pp. 549–586.

- 36.Leinonen L, Hyvärinen J, Sovijärvi AR. Functional properties of neurons in the temporo-parietal association cortex of awake monkey. Exp Brain Res. 1980;39(2):203–215. doi: 10.1007/BF00237551.

- 37.Seltzer B, Pandya DN. Frontal lobe connections of the superior temporal sulcus in the rhesus monkey. J Comp Neurol. 1989;281(1):97–113. doi: 10.1002/cne.902810108.

- 38.Fritz J, Mishkin M, Saunders RC. In search of an auditory engram. Proc Natl Acad Sci USA. 2005;102(26):9359–9364. doi: 10.1073/pnas.0503998102.

- 39.Ahveninen J, et al. Evidence for distinct human auditory cortex regions for sound location versus identity processing. Nat Commun. 2013;4:2585. doi: 10.1038/ncomms3585.

- 40.Zatorre RJ. Discrimination and recognition of tonal melodies after unilateral cerebral excisions. Neuropsychologia. 1985;23(1):31–41. doi: 10.1016/0028-3932(85)90041-7.

- 41.Poremba A, et al. Species-specific calls evoke asymmetric activity in the monkey’s temporal poles. Nature. 2004;427(6973):448–451. doi: 10.1038/nature02268.

- 42.Poremba A, Mishkin M. Exploring the extent and function of higher-order auditory cortex in rhesus monkeys. Hear Res. 2007;229(1-2):14–23. doi: 10.1016/j.heares.2007.01.003.

- 43.Miyashita Y. Cognitive memory: Cellular and network machineries and their top-down control. Science. 2004;306(5695):435–440. doi: 10.1126/science.1101864.

- 44.Cadoret G, Petrides M. Ventrolateral prefrontal neuronal activity related to active controlled memory retrieval in nonhuman primates. Cereb Cortex. 2007;17(Suppl 1):i27–i40. doi: 10.1093/cercor/bhm086.

- 45.Knight RT, Scabini D, Woods DL. Prefrontal cortex gating of auditory transmission in humans. Brain Res. 1989;504(2):338–342. doi: 10.1016/0006-8993(89)91381-4.

- 46.Chao LL, Knight RT. Contribution of human prefrontal cortex to delay performance. J Cogn Neurosci. 1998;10(2):167–177. doi: 10.1162/089892998562636.

- 47.Rauschecker JP, Scott SK. Maps and streams in the auditory cortex: Nonhuman primates illuminate human speech processing. Nat Neurosci. 2009;12(6):718–724. doi: 10.1038/nn.2331.

- 48.Margulies DS, Petrides M. Distinct parietal and temporal connectivity profiles of ventrolateral frontal areas involved in language production. J Neurosci. 2013;33(42):16846–16852. doi: 10.1523/JNEUROSCI.2259-13.2013.

- 49.Mufson EJ, Pandya DN. Some observations on the course and composition of the cingulum bundle in the rhesus monkey. J Comp Neurol. 1984;225(1):31–43. doi: 10.1002/cne.902250105.

- 50.Jones DK, Christiansen KF, Chapman RJ, Aggleton JP. Distinct subdivisions of the cingulum bundle revealed by diffusion MRI fibre tracking: Implications for neuropsychological investigations. Neuropsychologia. 2013;51(1):67–78. doi: 10.1016/j.neuropsychologia.2012.11.018.

- 51.Wilson FA, Scalaidhe SP, Goldman-Rakic PS. Dissociation of object and spatial processing domains in primate prefrontal cortex. Science. 1993;260(5116):1955–1958. doi: 10.1126/science.8316836.

- 52.Plakke B, Romanski LM. Auditory connections and functions of prefrontal cortex. Front Neurosci. 2014;8:199. doi: 10.3389/fnins.2014.00199.

- 53.Plakke B, Ng CW, Poremba A. Neural correlates of auditory recognition memory in primate lateral prefrontal cortex. Neuroscience. 2013;244:62–76. doi: 10.1016/j.neuroscience.2013.04.002.

- 54.Petrides M. Lateral prefrontal cortex: Architectonic and functional organization. Philos Trans R Soc Lond B Biol Sci. 2005;360(1456):781–795. doi: 10.1098/rstb.2005.1631.

- 55.Champod AS, Petrides M. Dissociable roles of the posterior parietal and the prefrontal cortex in manipulation and monitoring processes. Proc Natl Acad Sci USA. 2007;104(37):14837–14842. doi: 10.1073/pnas.0607101104.

- 56.Amiez C, Kostopoulos P, Champod AS, Petrides M. Local morphology predicts functional organization of the dorsal premotor region in the human brain. J Neurosci. 2006;26(10):2724–2731. doi: 10.1523/JNEUROSCI.4739-05.2006.

- 57.Courtney SM, Ungerleider LG, Keil K, Haxby JV. Object and spatial visual working memory activate separate neural systems in human cortex. Cereb Cortex. 1996;6(1):39–49. doi: 10.1093/cercor/6.1.39.

- 58.Bunge SA, Kahn I, Wallis JD, Miller EK, Wagner AD. Neural circuits subserving the retrieval and maintenance of abstract rules. J Neurophysiol. 2003;90(5):3419–3428. doi: 10.1152/jn.00910.2002.

- 59.Crovitz HF, Zener K. A group-test for assessing hand- and eye-dominance. Am J Psychol. 1962;75:271–276.

- 60.Belin P, Zatorre RJ, Hoge R, Evans AC, Pike B. Event-related fMRI of the auditory cortex. Neuroimage. 1999;10(4):417–429. doi: 10.1006/nimg.1999.0480.

- 61.Hall DA, et al. “Sparse” temporal sampling in auditory fMRI. Hum Brain Mapp. 1999;7(3):213–223. doi: 10.1002/(SICI)1097-0193(1999)7:3<213::AID-HBM5>3.0.CO;2-N.

- 62.Cox RW, Jesmanowicz A. Real-time 3D image registration for functional MRI. Magn Reson Med. 1999;42(6):1014–1018. doi: 10.1002/(sici)1522-2594(199912)42:6<1014::aid-mrm4>3.0.co;2-f.

- 63.Collins DL, Neelin P, Peters TM, Evans AC. Automatic 3D intersubject registration of MR volumetric data in standardized Talairach space. J Comput Assist Tomogr. 1994;18(2):192–205.

- 64.Collins DL, Holmes CJ, Peters TM, Evans AC. Automatic 3-D model-based neuroanatomical segmentation. Hum Brain Mapp. 1995;3(3):190–208.

- 65.Worsley KJ, et al. A general statistical analysis for fMRI data. Neuroimage. 2002;15(1):1–15. doi: 10.1006/nimg.2001.0933.

- 66.Worsley KJ. An improved theoretical P value for SPMs based on discrete local maxima. Neuroimage. 2005;28(4):1056–1062. doi: 10.1016/j.neuroimage.2005.06.053.

- 67.Worsley KJ, et al. A unified statistical approach for determining significant signals in images of cerebral activation. Hum Brain Mapp. 1996;4(1):58–73. doi: 10.1002/(SICI)1097-0193(1996)4:1<58::AID-HBM4>3.0.CO;2-O.

- 68.Friston KJ, et al. Analysis of fMRI time-series revisited. Neuroimage. 1995;2(1):45–53. doi: 10.1006/nimg.1995.1007.