Abstract

Purpose

Grammatical encoding (GE) is impaired in agrammatic aphasia; however, the nature of such deficits remains unclear. We examined grammatical planning units during real-time sentence production in speakers with agrammatic aphasia and control speakers, testing two competing models of GE. We queried whether speakers with agrammatic aphasia produce sentences word by word without advanced planning or whether hierarchical syntactic structure (i.e., verb argument structure; VAS) is encoded as part of the advanced planning unit.

Method

Experiment 1 examined production of sentences with a predefined structure (i.e., “The A and the B are above the C”) using eye tracking. Experiment 2 tested production of transitive and unaccusative sentences without a predefined sentence structure in a verb-priming study.

Results

In Experiment 1, speakers with agrammatic aphasia, like young and age-matched control speakers, used a word-by-word strategy, selecting only the first lemma (noun A) prior to speech onset. In Experiment 2, however, speakers with agrammatic aphasia, unlike controls, preplanned transitive and unaccusative sentences, encoding VAS before speech onset.

Conclusions

Speakers with agrammatic aphasia show incremental, word-by-word production for structurally simple sentences that require retrieval of multiple noun lemmas. However, when sentences involve functional (thematic to grammatical) structure building, they use advanced planning strategies (i.e., VAS encoding). This early use of hierarchical syntactic information may provide a scaffold for impaired GE in agrammatism.

Transforming a thought into a grammatical sentence requires retrieving a set of lexical entries, together with their semantic and syntactic properties (referred to as lemmas), and coordinating these items into a syntactic structure (functional structure building; Bock & Levelt, 1994). Together, these processes are referred to as grammatical encoding (GE). Most theories of sentence production posit that sentences are not completely planned prior to speaking. Instead, GE occurs in an incremental (i.e., piecemeal) fashion from left to right, resulting in simultaneous speaking and planning (Bock & Levelt, 1994; De Smedt, 1990; Kempen & Hoenkamp, 1987; Levelt, 1989). Such incrementality allows a speaker to begin speech faster, supports fluent speech, and promotes efficient use of cognitive resources by reducing memory buffer demands (De Smedt, 1990; Ferreira & Slevc, 2007). One question pertaining to incrementality concerns the unit of advanced planning. That is, how much sentence structure is planned before speaking begins? Is this done word by word, or are larger planning units engaged?

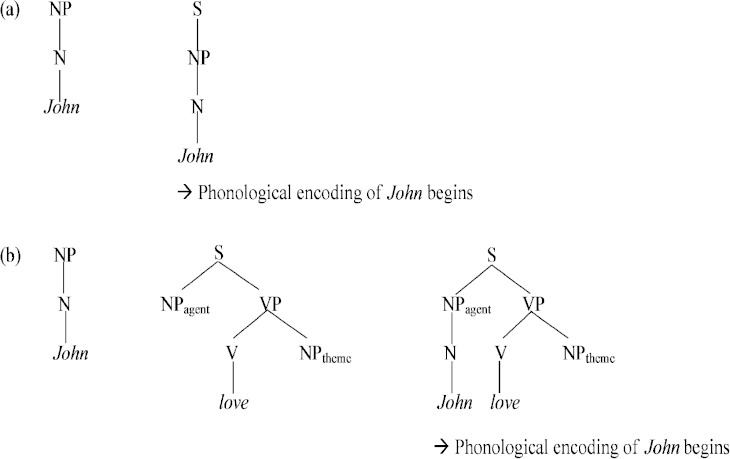

The word-by-word incremental model proposes that sentence structure is a mere reflection of the order in which individual lexical items are activated and that speakers begin sentences without advanced planning or knowledge of the upcoming structure of the sentence (De Smedt, 1990; Kempen & Hoenkamp, 1987; Levelt, 1989; “rapid incrementality” in Ferreira & Swets, 2002; “elemental view” in Bock, Irwin, & Davidson, 2004). Thus, as soon as the first lemma is accessed, it is encoded as the subject of the sentence, and the rest of the sentence structure is prepared “on the fly” to accommodate the next most available lexical item. As shown in Figure 1a, in producing the sentence “John loves Mary,” once the lemma John is selected, projecting its NP (noun phrase) node, it is assigned to the subject position automatically, and phonological encoding of the subject begins. The rest of the sentence structure, including the verb, is encoded during speech.

Figure 1.

Grammatical encoding processes for (a) word-by-word and (b) structural incremental models. NP = noun phrase; N = noun; S = sentence; VP = verb phrase.

Much experimental evidence from young healthy speakers shows that language production is highly incremental (e.g., Bock & Warren, 1985; Griffin, 2001; Griffin & Mouton, 2004; Schriefers, Teruel, & Meinshausen, 1998). For example, Griffin (2001) found that, in a multiword sentence production task (e.g., “The clock and the sofa are above the toaster”), young speakers planned both the lemma and its phonological form (i.e., the lexeme) for only the first word before speech onset. Using an eye-tracking sentence production paradigm, she manipulated the codability (i.e., the number of lemma candidates) of the second and third nouns in the sentence, contrasting low-codability items with several plausible names (e.g., sofa/couch) with high-codability items with a single dominant name (e.g., fork). Participants' gaze durations to the second and third objects were longer when codability was lower than when it was higher. However, these codability-induced differences in gaze duration occurred during speech rather than before speech onset, suggesting that speakers prepared only the first object's lemma, not the lemmas of the second and third objects, before they began speaking. Manipulating the objects' word frequencies (which affects lexeme retrieval during phonological encoding) yielded the same results. These findings suggest that sentence production proceeds in a word-by-word incremental manner.

The structural incremental model proposes that GE is guided by a larger linguistic unit, which provides hierarchical (or relational) syntactic information to the speaker, such as verb argument structure (VAS; Ferreira, 2000; Kempen & Huijbers, 1983; Lindsley, 1975, 1976). VAS is represented in lemmas for lexical verbs, specifying the number of arguments required and their thematic–structural relation to the verb in the sentence. For example, when a speaker retrieves the transitive verb kick, two associated arguments (i.e., agent and theme) are retrieved and their functional (thematic to grammatical) roles such as “agent to subject” or “theme to object” are assigned. Selected constituents are then concatenated to form a sentence (Bock & Levelt, 1994; Levelt, 1989). On this account, speakers plan at least up to the verb before speech because encoding the verb lemma projects its argument structure configuration specifying the verb and its thematic–structural relation to the arguments (resulting in a so-called elementary tree in Ferreira, 2000). As shown in Figure 1b, in addition to the first lemma John, the verb lemma with its VAS configuration is retrieved. Because the sentence structure becomes apparent only after the verb lemma is encoded, only then can the lemma John be grammatically encoded as the subject of the sentence and assigned the agent role. Thus, speakers grammatically encode at least the subject and the verb lemma before production of the sentence.

A few studies in the literature suggest that at least some verb information needs to be accessed prior to production of the subject noun in constrained sentence production tasks (Kempen & Huijbers, 1983; Lindsley, 1975, 1976). For example, Lindsley (1975) compared speech onset latencies when speakers produced different structures (i.e., subject noun, subject–verb, subject–verb–object) to describe the same set of transitive action pictures. Speakers showed shorter speech onset latencies when they produced the subject noun only (“the man”) than when they produced subject–verb utterances (“the man greets”). However, speech onset latencies did not differ between subject–verb and subject–verb–object utterances. These findings suggested that some verb-related, but not object-related, GE took place before speech onset.

Many individuals with agrammatic aphasia show impaired sentence comprehension and production, particularly for syntactically complex structures. Results of research in the comprehension domain suggest that such deficits are associated with impaired language processing (Linebarger, Schwartz, & Saffran, 1983; Mack, Ji, & Thompson, 2013; Shapiro & Levine, 1990; Shapiro, Nagel, & Levine, 1993; Thompson & Choy, 2009; Wulfeck, Bates, & Capasso, 1991) rather than impaired linguistic knowledge, as proposed by Grodzinsky (1990). In the production domain, which has been less studied, agrammatic sentence deficits are also considered to result from impaired processing of linguistic knowledge during GE (e.g., Bastiaanse & van Zonneveld, 2004, 2005; Caramazza & Miceli, 1991; Lee & Thompson, 2004, 2011a, 2011b; Thompson, Lange, Schneider, & Shapiro, 1997). For instance, as the argument structure of a verb becomes more complex (i.e., as the number of arguments associated with the verb increases), speakers with agrammatic aphasia have greater difficulty producing the verb in isolation as well as in sentences, despite their intact knowledge of different argument structures (e.g., De Bleser & Kauschke, 2003; Kim & Thompson, 2000; Kiss, 2000; Luzzatti et al., 2002; Thompson et al., 1997). Notably, sentences involving noncanonical mapping between the arguments' thematic roles and surface word order are particularly challenging for these speakers (Bastiaanse & van Zonneveld, 2004, 2005; Kegl, 1995; Lee & Thompson, 2004). For example, sentences with unaccusative verbs such as break (item 1 in the list below) involve derivational processes in which the argument bearing the theme role (glass) is promoted to the subject position from the object position (Burzio, 1986; Perlmutter, 1978).
These sentences often are impaired in speakers with agrammatic aphasia in the face of retained ability to produce sentences with unergative verbs such as swim (item 2 in the list below) in which the agent argument (in this example, man), typically linked to the subject, appears in the subject position (Kegl, 1995; Lee & Thompson, 2004).

1. The glass_i broke t_i.
2. The man swam.

Although previous studies suggest that the operations at the level of GE are faulty in individuals with agrammatic aphasia, the precise nature of the GE deficits is still unclear. In particular, little research has focused on (a) the linguistic units used during GE in speakers with agrammatic aphasia and (b) whether and how the two subprocesses of GE (i.e., lexicalization and functional structure building) unfold differently in agrammatic aphasia. Related to the first question, research by Kolk and colleagues (e.g., de Roo, Kolk, & Hofstede, 2003; Haarmann & Kolk, 1991; Kolk, 1995; Kolk & Heeschen, 1990; cf. Martin & Freedman, 2001) proposed that speakers with agrammatic aphasia plan their speech in a smaller linguistic unit compared with healthy speakers as an adaptation to limited cognitive resources. They propose that speakers with agrammatic aphasia have a reduced memory buffer (or temporal window) for holding and computing linguistic information. Therefore, they make strategic adaptations to capacity overload by simplifying the amount of linguistic information to be processed at a time, resulting in more incremental speech production compared with healthy speakers (Kolk, 1995). This hypothesis has been tested based on computational modeling of agrammatic speech and by eliciting agrammatic-like speech in healthy speakers under increased cognitive loads (de Roo et al., 2003; Haarmann & Kolk, 1991). However, it remains to be tested whether speakers with agrammatic aphasia indeed use different linguistic units during GE compared with healthy speakers.

In our initial studies of GE (Lee & Thompson, 2011a, 2011b), we used eye tracking during speaking to examine production of sentences containing different types of verbs. In Lee and Thompson (2011a), participants used sets of written words (e.g., mother, is applying, lotion, baby) to produce sentences in which the third noun was an argument (e.g., “The mother is applying the lotion to the baby”) and sentences in which it was an adjunct (e.g., “The mother is choosing the lotion for the baby”). In sentences with the dative verb apply, the third noun, baby, is an argument of the verb, specifying the person to whom the lotion is applied. In sentences with the transitive verb choose, by contrast, baby is not an argument of the verb; rather, it is an adjunct (an optional modifier) not encoded within the VAS of the verb. Participants' gaze shifts between the verb and the third noun were measured during sentence production. Both speakers with agrammatic aphasia and healthy speakers showed more frequent gaze shifts in the adjunct condition than in the argument condition, suggesting that both groups experienced greater difficulty producing sentences when the number of entities specified by the verb's argument structure did not match the number of nouns to be produced. It is important to note, however, that healthy speakers showed this difference after speech onset, suggesting that they used the verb information during speech, whereas speakers with agrammatic aphasia showed the difference before speech onset, suggesting that they used the verb information from the earliest stage of sentence production to preplan utterances. This early use of verb information by speakers with agrammatic aphasia but not by healthy speakers was also found in Lee and Thompson (2011b), which examined production of sentences with unaccusative and unergative verbs.
These novel findings suggested that GE proceeds abnormally in speakers with agrammatic aphasia: They use verb information prior to speech onset to preplan sentences in compensation for impaired syntactic processing. That is, use of structural planning may be necessary for grammatical sentence production in speakers with agrammatic aphasia, whereas healthy speakers do not require this.

The present study sought to further test this account in two experiments that focused on lexicalization (lemma selection) and functional structure generation, respectively. Because we used written stimuli in the aforementioned studies, lexicalization processes were not explicitly examined. Thus, in Experiment 1, following Griffin (2001), we examined the production of multiword sentences with a predefined sentence structure (“The A and the B are above the C”), focusing on lexicalization. By varying the codability of each object noun (A, B, and C) to be either high or low and measuring participants' codability-induced gaze differences to each pictured object, we examined the number of lemmas speakers prepared before speech onset. In Experiment 2, using a verb-priming paradigm, we examined the production of action-describing sentences without a predefined sentence structure, hence focusing on functional structure generation as well as lexicalization. By examining the effects of VAS priming on speech onset latencies, we evaluated whether speakers encode VAS as part of advanced planning. We predicted that, if structural planning in speakers with agrammatic aphasia is specific to functional structure generation, these speakers would show normal word-by-word incremental planning in Experiment 1, in which the sentence structure was given and did not need to be decided, but advanced planning in Experiment 2, in which it did.

Experiment 1

Participants

Twelve individuals with stroke-induced agrammatic aphasia (age: M = 57 years, SD = 12; education: M = 17 years, SD = 2; time after onset of stroke: M = 7.1 years, SD = 3.1; three women, nine men; A01–A12 in Table 1) were tested. The eye data of two participants (A02 and A10) were excluded because not enough fixation points were recorded (>500 fixations). Sixteen young (age: M = 21 years, SD = 2; nine women, seven men) and 16 age-matched (age: M = 52 years, SD = 9; education: M = 16 years, SD = 2; seven women, nine men) control speakers were tested. Age-matched control speakers were matched with speakers with agrammatic aphasia in terms of age and education (ps > .05, independent t tests). All participants were native speakers of English with normal or corrected-to-normal hearing and vision. No participant reported a history of neurological or speech-language disorders prior to the study or, for participants with aphasia, prior to the stroke. This study was approved by the Institutional Review Board at Northwestern University, and all participants in Experiments 1 and 2 provided informed consent prior to the study.

Table 1.

Language testing scores for speakers with agrammatic aphasia for Experiment 1 (A01–A12) and Experiment 2 (A01–A14).

| Test | A01 | A02 | A03 | A04 | A05 | A06 | A07 | A08 | A09 | A10 | A11 | A12 | A13 | A14 | M | SD |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Western Aphasia Battery–Revised | | | | | | | | | | | | | | | | |
| Aphasia Quotient | 74.4 | 87.6 | 81.2 | 83.2 | 85.4 | 77.6 | 85.0 | 69.9 | 82.8 | 85.0 | 73.5 | 80.8 | 76.7 | 75.2 | 79.7 | 5.4 |
| Fluency | 4.0 | 5.0 | 4.0 | 5.0 | 5.0 | 5.0 | 5.0 | 4.0 | 5.0 | 5.0 | 5.0 | 5.0 | 4.0 | 5.0 | 4.6 | 0.5 |
| AC | 7.9 | 10.0 | 10.0 | 10.0 | 10.0 | 7.8 | 10.0 | 9.5 | 10.0 | 9.8 | 8.6 | 9.0 | 9.5 | 8.0 | 9.3 | 0.9 |
| Repetition | 8.1 | 10.0 | 8.8 | 9.0 | 9.5 | 9.4 | 9.5 | 5.0 | 8.3 | 9.7 | 6.4 | 8.4 | 8.8 | 7.0 | 8.6 | 1.3 |
| Naming | 8.2 | 9.8 | 8.8 | 9.6 | 9.2 | 7.6 | 9.0 | 8.5 | 9.1 | 9.0 | 7.8 | 9.0 | 7.1 | 8.6 | 8.6 | 0.8 |
| Northwestern Assessment of Verbs and Sentences | | | | | | | | | | | | | | | | |
| VNT | 86 | 100 | 90 | 97 | 100 | 80 | 100 | 100 | 92 | 92 | 81 | 76 | 85 | 93 | 91.6 | 6.8 |
| VCT | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100.0 | 0.0 |
| SPPT-C | 100 | 100 | 87 | 100 | 100 | 53 | 93 | 100 | 100 | 100 | 93 | 100 | 100 | 87 | 93.0 | 13.0 |
| SPPT-NC | 60 | 66 | 53 | 93 | 53 | 20 | 86 | 0 | 46 | 66 | 60 | 27 | 40 | 0 | 50.0 | 30.0 |
| SCT-C | 80 | 100 | 93 | 100 | 100 | 53 | 100 | 86 | 93 | 86 | 40 | 87 | 100 | 73 | 88.0 | 15.0 |
| SCT-NC | 80 | 100 | 80 | 93 | 93 | 66 | 93 | 80 | 53 | 66 | 73 | 53 | 80 | 20 | 72.0 | 23.0 |
| Northwestern Assessment of Verb Inflection | | | | | | | | | | | | | | | | |
| Nonfinite | 65 | 100 | 100 | 100 | 91 | 100 | 100 | 100 | 100 | 97 | 93 | 100 | 100 | 95 | 98.0 | 4.0 |
| Finite | 53 | 77 | 95 | 85 | 40 | 38 | 38 | 30 | 88 | 92 | 65 | 0 | 33 | 18 | 52.0 | 31.0 |

Note. AC = Auditory Comprehension; VNT = Verb Naming Test; VCT = Verb Comprehension Test; SPPT-C = Sentence Production Priming Test, Canonical Sentences; SPPT-NC = Sentence Production Priming Test, Noncanonical Sentences; SCT-C = Sentence Comprehension Test, Canonical; SCT-NC = Sentence Comprehension Test, Noncanonical.

Agrammatic aphasia was diagnosed based on the speakers' performance on a set of language tests (see Table 1). Aphasia quotients derived from the Western Aphasia Battery–Revised (WAB-R; Kertesz, 2006) indicated mild to moderate nonfluent aphasia, with relatively preserved auditory comprehension in the face of compromised verbal output. All speakers with aphasia demonstrated reduced utterance length, slow rate of speech with decreased prosody, reduced syntactic complexity, and errors with grammatical morphemes in the picture description section of the WAB-R, as well as mild to moderate word-finding difficulty in spontaneous speech and confrontation naming. On the Verb Comprehension and Verb Naming Tests of the Northwestern Assessment of Verbs and Sentences (NAVS; Thompson, 2011), speakers with aphasia in general showed good comprehension of single verbs and preserved or mildly impaired naming of single verbs. Scores from the Sentence Production Priming and Sentence Comprehension Tests of the NAVS indicated greater overall difficulty with noncanonical sentences (i.e., passives, object wh-questions, object relative clauses) than with canonical sentences (i.e., actives, subject wh-questions, subject relative clauses). On the Northwestern Assessment of Verb Inflection (NAVI; Lee & Thompson, in preparation), all participants showed greater difficulty with finite forms (present and past tenses) than with nonfinite forms (present progressive, infinitive).

Design

A 3 (group) × 2 (codability) × 3 (position) design was used, with codability (high vs. low) and each object position in the sentence (A, B, C) as within-subject variables. The position of the critical noun with low codability varied across the three experimental conditions (A-low, B-low, and C-low). For the high condition, which served as the control condition, all three pictures had high codability, and their gaze durations were compared with those of the corresponding positions in each experimental condition for gaze analysis.

The two models of GE make different predictions about when codability effects (increased gaze duration to the object when it has low codability compared with high codability) will be shown for each object position. The word-by-word model predicts that only the first noun (noun A) will show the codability effect before speech onset because speakers start producing the sentence upon retrieval of the first lemma. However, codability effects for nouns B and C would be seen after speech onset because the lemmas for nouns B and C are prepared incrementally after speech onset. As an alternative, the structural model predicts that speakers plan at least up to the verb predicate (e.g., “are above”); thus, codability effects will appear for both nouns A and B before speech onset.
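The comparison logic of this design, and the contrasting predictions of the two models, can be made concrete in a short sketch. The Python below is a minimal illustration under stated assumptions, not the analysis code used in the study: the nested-dictionary layout, the function names (`codability_effects`, `consistent_models`), and the numbers in the usage lines are hypothetical. For each position P, the low-codability gaze comes from condition "P-low" and the baseline from position P in the all-high condition; each model is then represented as the set of positions it predicts to show a codability effect before speech onset.

```python
from statistics import mean

POSITIONS = ("A", "B", "C")

# Hypothetical layout: gaze[condition][position] -> per-trial gaze durations
# (ms) for one participant in one time window (e.g., before speech onset).
# Condition names follow the text: "high", "A-low", "B-low", "C-low".


def codability_effects(gaze):
    """Return {position: mean low-codability gaze - mean high-codability gaze}.

    The low-codability value for position P comes from condition "P-low";
    the baseline is the same position P in the all-high control condition.
    """
    return {
        p: mean(gaze[f"{p}-low"][p]) - mean(gaze["high"][p])
        for p in POSITIONS
    }


# Positions each model predicts to show a codability effect BEFORE onset.
PREDICTED_PREONSET = {
    "word-by-word": frozenset({"A"}),
    "structural": frozenset({"A", "B"}),
}


def consistent_models(positions_with_preonset_effect):
    """Return the models whose predicted pattern matches the observed one."""
    observed = frozenset(positions_with_preonset_effect)
    return [m for m, pred in PREDICTED_PREONSET.items() if pred == observed]


# Usage with made-up numbers (not data from the study):
toy = {
    "high": {"A": [450, 470], "B": [120, 130], "C": [80, 90]},
    "A-low": {"A": [520, 540]},
    "B-low": {"B": [125, 135]},
    "C-low": {"C": [85, 85]},
}
print(codability_effects(toy))    # {'A': 70, 'B': 5, 'C': 0}
print(consistent_models({"A"}))   # ['word-by-word']
```

Whether a difference counts as an effect is decided by the statistical tests, not by the raw difference; `consistent_models` only encodes which pattern each model predicts.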

Stimuli

A total of 64 animate and inanimate nouns were selected from Snodgrass and Vanderwart (1980). The corresponding black-and-white line drawings were adapted from Rossion and Pourtois (2004). One set of 16 nouns with low codability (name agreement < 80%) and three sets of nouns with high codability (n = 16 per set; name agreement between 90% and 100%) were selected (see online supplemental materials, Supplemental Table 1). Codability was significantly lower for the low-codable than for the high-codable nouns (ps < .05, independent t tests). Other variables that could affect lexical retrieval, including log lemma frequency (Center for Lexical Information [CELEX]; Baayen, Piepenbrock, & van Rijn, 1993), image agreement and visual complexity of the pictures (Snodgrass & Vanderwart, 1980), word length, semantic category, and animacy, were matched across the four word sets (ps > .05, independent t tests). Each set of words was repeated three times across the different conditions, with no list appearing twice in the same position. The order of nouns within each word list was randomized, yielding 64 trials in which each noun appeared in different positions. The presentation order of trials was randomized across conditions and participants.

Apparatus

The stimuli were presented on a 19-in. personal computer monitor using Superlab 4.0 (Abboud, Schultz, & Zeitlin, 2008). A remote, video-based pupil and corneal reflection system (D6 remote eye tracking camera, Applied Science Laboratories, Bedford, MA) was placed in front of the stimulus presentation computer and was used to record participants' eye movements. The position and direction of the participants' gaze were sampled at the temporal resolution of 6 ms. Only one eye was recorded.

Procedure

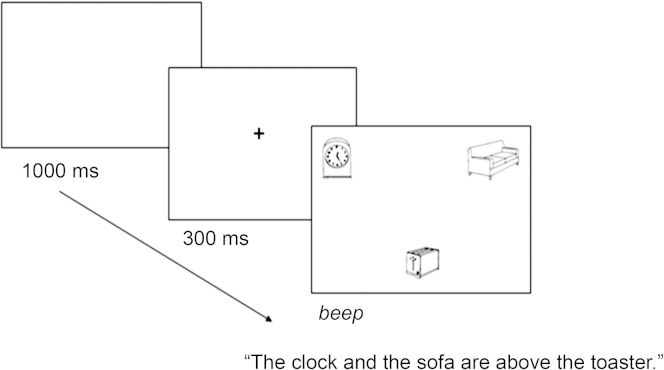

Participants were presented with a set of three pictured objects and were instructed to describe the pictures from top to bottom and left to right, using the sentence frame “The A and the B are above the C,” as quickly and accurately as possible. Each trial began with a blank white screen for 1000 ms, followed by a black fixation cross for 300 ms (see Figure 2). A 100-ms beep was presented simultaneously with the stimulus panel. Three practice trials preceded the experimental trials. Participants with agrammatic aphasia were given the same three practice trials both offline and online to ensure that they understood the task. At the beginning of the experiment, each participant's eye was calibrated using a set of nine calibration points distributed across the computer screen. Calibration was repeated as needed every eight experimental trials throughout the task. Participants' speech and eye movements were recorded during the task. Only neutral feedback (e.g., “You are doing fine”) was provided.

Figure 2.

A sample trial for Experiment 1. Clock, sofa, and toaster stimuli adapted from Rossion and Pourtois (2004); Copyright © B. Rossion and G. Pourtois. Reprinted with permission of the authors.

Data Analyses

Production accuracy was measured for each participant. Only the first attempt (consisting of at least a noun phrase and a verb; e.g., “The clock and the sofa are …”) was scored. Utterances in which all three nouns were produced correctly, in the right order, and in the target sentence structure were considered correct. For speakers with agrammatic aphasia, occasional substitutions of the copula (is for are) and the preposition (beyond for above) were also accepted because the purpose of this experiment was to examine the time course of lemma selection. Phonological paraphasias were accepted if at least 60% of the phonemes were produced correctly. Incorrect responses included word substitutions, incorrect word order or sentence structure, abandoned utterances, “I don't know” responses, disfluent utterances, and others. Gaze duration analyses were conducted only on correctly produced sentences. For each object position in each trial, an area of interest was defined with a margin of two degrees of visual angle. All fixations falling within an area of interest were summed into a gaze. Each object's gaze duration was then aligned with the speech onset of the sentence to determine whether the gaze occurred before or after speech onset. Speech onset latency for each sentence was measured manually in Praat from the onset of the beep to the onset of noun A.
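The gaze-duration computation described above can be sketched as follows. This is a hypothetical illustration, not the study's analysis pipeline: the `Fixation` and `AOI` record layouts, the viewing distance and pixel density passed to `deg_to_px`, and the choice to split a fixation that straddles speech onset between the two windows (rather than assigning it wholly to one) are all assumptions. Times are in milliseconds from beep onset, which coincides with stimulus onset, so the measured speech onset latency can serve directly as the split point.

```python
import math
from dataclasses import dataclass


@dataclass
class Fixation:
    start_ms: float  # fixation start, ms from beep/stimulus onset
    end_ms: float    # fixation end, ms from beep/stimulus onset
    x: float         # gaze position in screen pixels
    y: float


@dataclass
class AOI:
    name: str        # object position: "A", "B", or "C"
    left: float      # bounding box in pixels, already padded by the margin
    top: float
    right: float
    bottom: float

    def contains(self, f: Fixation) -> bool:
        return self.left <= f.x <= self.right and self.top <= f.y <= self.bottom


def deg_to_px(deg, viewing_distance_cm, px_per_cm):
    """Convert a visual angle in degrees to on-screen pixels.

    Viewing distance and pixel density are assumed inputs; the text does
    not report them.
    """
    return 2 * viewing_distance_cm * math.tan(math.radians(deg) / 2) * px_per_cm


def gaze_durations(fixations, aois, speech_onset_ms):
    """Sum fixation time per AOI, separately before and after speech onset.

    Assumption: a fixation that straddles speech onset is split at onset.
    """
    out = {a.name: {"before": 0.0, "after": 0.0} for a in aois}
    for f in fixations:
        for a in aois:
            if a.contains(f):
                out[a.name]["before"] += max(0.0, min(f.end_ms, speech_onset_ms) - f.start_ms)
                out[a.name]["after"] += max(0.0, f.end_ms - max(f.start_ms, speech_onset_ms))
                break  # AOIs are assumed not to overlap
    return out


# Made-up trial: three AOIs and three fixations, speech onset at 500 ms.
aois = [AOI("A", 0, 0, 100, 100), AOI("B", 200, 0, 300, 100), AOI("C", 100, 200, 200, 300)]
fixations = [Fixation(0, 400, 50, 50), Fixation(400, 700, 250, 50), Fixation(700, 900, 150, 250)]
print(gaze_durations(fixations, aois, speech_onset_ms=500))
# A: 400 ms before onset; B: 100 ms before and 200 ms after; C: 200 ms after
```

Splitting straddling fixations keeps the pre-onset and post-onset totals additive; a study could instead classify each gaze by its start time, which would change how boundary fixations are counted.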

Results

Production Accuracy

To examine whether the position of the low-codable noun in a sentence affected overall production accuracy, a 3 (group) × 4 (condition: high, A-low, B-low, and C-low) mixed analysis of variance (ANOVA) was conducted. Only the main effect of group was significant, F(2, 41) = 3892.52, p < .001. Speakers with agrammatic aphasia produced fewer correct sentences across all conditions (high: M = 69, SD = 18; A-low: M = 76, SD = 17; B-low: M = 71, SD = 19; C-low: M = 76, SD = 19) than young (high: M = 94, SD = 8; A-low: M = 96, SD = 7; B-low: M = 94, SD = 9; C-low: M = 98, SD = 4) and age-matched (high: M = 95, SD = 6; A-low: M = 97, SD = 3; B-low: M = 96, SD = 4; C-low: M = 95, SD = 8) control speakers. Neither the main effect of condition nor the group × condition interaction was significant.

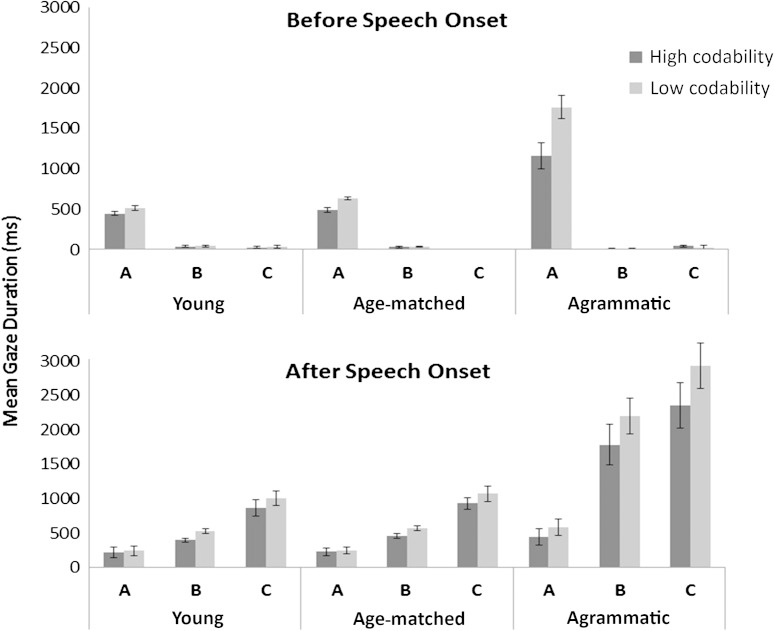

Gaze Duration Data

Figure 3 shows each group's mean gaze durations (in milliseconds) to each object before and after speech onset. For the gaze durations before speech onset, a 3 (group) × 3 (position: A, B, C) × 2 (codability) ANOVA revealed a main effect of group, indicating overall longer gaze durations in speakers with agrammatic aphasia than in young and age-matched controls, F(2, 39) = 46.40, p < .001. There were main effects of position and codability as well as significant two- and three-way interactions, Fs > 6.87, ps < .01. A 3 (position) × 2 (codability) repeated-measures ANOVA conducted for each group revealed that all three groups showed significantly longer gaze durations to noun A than to nouns B and C (young: F(2, 30) = 246.40, p < .001; age-matched: F(2, 30) = 788.68, p < .001; agrammatic: F(2, 22) = 101.35, p < .001). There were also main effects of codability for all three groups (young: F(1, 15) = 6.80, p < .05; age-matched: F(1, 15) = 17.61, p < .01; agrammatic: F(1, 9) = 10.38, p < .01) and significant to marginally significant interactions between codability and position (young: F(2, 30) = 3.03, p < .10; age-matched: F(2, 30) = 14.89, p < .001; agrammatic: F(2, 18) = 11.35, p < .01). Paired t tests revealed that all groups showed significantly longer gaze durations for low-codable noun A than for high-codable noun A (young: M = 514 ms, SD = 133 vs. M = 447 ms, SD = 103; age-matched: M = 633 ms, SD = 84 vs. M = 494 ms, SD = 117; agrammatic: M = 1764 ms, SD = 496 vs. M = 1162 ms, SD = 434; ps < .01). However, none of the groups showed significant codability effects in gaze durations to nouns B and C.

Figure 3.

Mean gaze durations to each object position (with standard errors) for each group before speech onset (top) and after speech onset (bottom) of the sentence.

For the gaze durations after speech onset, a 3 × 3 × 2 ANOVA revealed a significant main effect of group, indicating overall longer gaze durations in speakers with agrammatic aphasia than in the young and age-matched groups, F(2, 29) = 52.60, p < .001. There were main effects of position and codability as well as significant two- and three-way interactions, Fs > 4.60, ps < .05. A 3 × 2 ANOVA conducted for each group showed that gaze durations to noun A were reliably shorter than those to nouns B and C in all three groups (young: F(2, 30) = 25.78, p < .001; age-matched: F(2, 30) = 33.37, p < .001; agrammatic: F(2, 18) = 30.83, p < .001). All three groups showed significant codability effects (young: F(1, 15) = 12.60, p < .01; age-matched: F(1, 15) = 15.52, p < .01; agrammatic: F(1, 9) = 34.83, p < .001) and, importantly, significant interactions between codability and position (young: F(2, 30) = 15.38, p < .001; age-matched: F(2, 30) = 4.62, p < .01; agrammatic: F(2, 18) = 4.31, p < .05). The codability-induced differences in gaze durations for noun B were significant in all three groups (young: M = 527 ms, SD = 156 vs. M = 396 ms, SD = 118; age-matched: M = 571 ms, SD = 131 vs. M = 456 ms, SD = 148; agrammatic: M = 2201 ms, SD = 895 vs. M = 1783 ms, SD = 790; all ps < .01). The codability effects for noun C approached significance in young speakers (M = 1005 ms, SD = 426 vs. M = 867 ms, SD = 473; p < .10) and were significant in age-matched speakers (M = 1072 ms, SD = 450 vs. M = 932 ms, SD = 331; p < .05) and in speakers with agrammatic aphasia (M = 2932 ms, SD = 991 vs. M = 2359 ms, SD = 1007; p < .01). However, none of the groups showed codability effects for noun A after speech onset.
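The codability comparisons for individual nouns are within-participant contrasts between each participant's mean gaze duration to a noun position in its low- and high-codability conditions. A minimal sketch of the paired t statistic, assuming one mean per participant per condition (the input values below are made up and are not data from the study), is:

```python
import math
from statistics import mean, stdev


def paired_t(low, high):
    """Paired-samples t statistic and degrees of freedom.

    low[i] and high[i] are one participant's mean gaze durations (ms) in the
    low- and high-codability conditions for the same noun position. The
    statistic is undefined (division by zero) if all differences are equal.
    """
    if len(low) != len(high) or len(low) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [a - b for a, b in zip(low, high)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Made-up per-participant means (ms), not data from the study.
low_A = [510, 620, 730]
high_A = [500, 600, 700]
t, df = paired_t(low_A, high_A)
print(f"t({df}) = {t:.2f}")  # t(2) = 3.46
```

If SciPy is available, `scipy.stats.ttest_rel(low_A, high_A)` returns the same statistic together with a two-sided p value.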

Interim Discussion

Results of Experiment 1 showed that the speakers with agrammatic aphasia performed similarly to young and age-matched controls: all groups showed significant codability effects (longer gaze durations for low-codable than for high-codable pictures) before speech onset for noun A only. These findings are consistent with the word-by-word model of sentence production (Griffin, 2001; Schriefers et al., 1998) and indicate that lexicalization processes are spared in agrammatic sentence production, although selection of individual lemmas was delayed, as indicated by longer overall gaze durations to the pictures.

Experiment 2

Participants

Fourteen individuals with agrammatic aphasia (age: M = 57 years, SD = 13; education: M = 17 years, SD = 2; three women, 11 men, A01–A14 in Table 1), 25 young controls (age: M = 20 years, SD = 2; 10 women, 15 men), and 20 age-matched controls (age: M = 53 years, SD = 10; education: M = 16 years, SD = 2; 10 women, 10 men) were tested. The age-matched control speakers were matched with speakers with aphasia in terms of age and education (ps > .05, independent t tests).

Design

A 3 (group) × 2 (target sentence: transitive vs. unaccusative) × 2 (prime type: consistent vs. inconsistent) design was used, with target sentence structures and prime type as within-subject variables. The target structures were sentences with verbs of alternating transitivity (e.g., roll), which can be used in either a transitive (e.g., “The man is rolling the tire”) or an unaccusative (e.g., “The tire is rolling”) sentence. Alternating verbs were used to match imageability between the transitive and unaccusative targets because many nonalternating unaccusatives are difficult to picture. The prime verbs included nonalternating transitive (e.g., choose) and unaccusative (e.g., bloom) verbs. A transitive prime verb allows only a transitive argument structure, consistent with transitive targets but inconsistent with unaccusative target sentences. In contrast, the argument structure of the unaccusative prime verbs is consistent with that of the unaccusative target sentences but not that of transitive targets. Varying the combination of prime type and target sentence type resulted in four different types of prime–target pairs, as shown in Table 2.

Table 2.

Experimental conditions and sample stimuli for Experiment 2. NP = noun phrase; V = verb.

| Condition (prime–target pair) | Prime verb | Prime VAS | Target sentence | Target VAS |
|---|---|---|---|---|
| Transitive prime–transitive target | choose | [NP<sub>agent</sub> [V NP<sub>theme</sub>]] | The man is rolling a tire. | [NP<sub>agent</sub> [V NP<sub>theme</sub>]] |
| Unaccusative prime–transitive target | bloom | [NP<sub>theme</sub> [V]] | The man is rolling a tire. | [NP<sub>agent</sub> [V NP<sub>theme</sub>]] |
| Unaccusative prime–unaccusative target | bloom | [NP<sub>theme</sub> [V]] | The tire is rolling. | [NP<sub>theme</sub> [V]] |
| Transitive prime–unaccusative target | choose | [NP<sub>agent</sub> [V NP<sub>theme</sub>]] | The tire is rolling. | [NP<sub>theme</sub> [V]] |

The rationale for this manipulation was as follows: Seeing a prime verb automatically activates the verb's argument structure (e.g., Friedmann, Taranto, Shapiro, & Swinney, 2008; Shapiro & Levine, 1990; Shapiro et al., 1993). Suppose speakers encode the target verb lemma, and thus its VAS, before speech onset. The preactivated VAS of the prime should then affect advanced planning, yielding shorter speech onset latencies (facilitation) when the two VASs are consistent than when they are inconsistent. Speech onset latency of the target sentences was therefore the primary dependent measure. The word-by-word incremental model predicts no priming effects on speech onset latencies, because speakers plan only the first lemma (noun) before speech onset. In the structural model, speakers encode VAS before speech onset; therefore, VAS priming effects are expected.
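The two models' predictions can be restated as an inconsistent-minus-consistent contrast for each target type. The notation below is introduced here for illustration and is not the authors'. $\overline{RT}$ denotes mean speech onset latency in a given prime–target condition.

```latex
\begin{aligned}
\Delta_{\text{trans}} &= \overline{RT}_{\text{unacc prime, trans target}} - \overline{RT}_{\text{trans prime, trans target}} \\
\Delta_{\text{unacc}} &= \overline{RT}_{\text{trans prime, unacc target}} - \overline{RT}_{\text{unacc prime, unacc target}} \\
\text{Word-by-word model:}\quad & \Delta_{\text{trans}} \approx 0, \quad \Delta_{\text{unacc}} \approx 0 \\
\text{Structural model:}\quad & \Delta_{\text{trans}} > 0, \quad \Delta_{\text{unacc}} > 0
\end{aligned}
```

A positive $\Delta$ indicates facilitation by a VAS-consistent prime.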

Stimuli

Three sets of verbs were prepared: 11 target verbs with alternating transitivity, used in the target sentences, and 20 nonalternating transitive and 20 nonalternating unaccusative verbs, used as primes. The alternating and unaccusative verbs were selected on the basis of previous studies (Bastiaanse & van Zonneveld, 2004; Friedmann et al., 2008; Haegeman, 1994; Lee & Thompson, 2004; Levin, 1993; Perlmutter, 1978). All transitive verbs were obligatory two-place verbs. Verb classifications were verified by two linguists. The three verb sets were matched for CELEX log frequency (Baayen et al., 1993): alternating target verbs = 1.89, unaccusative primes = 1.85, transitive primes = 1.82, F(2, 48) = 0.045, p > .95. All target verbs were monosyllabic, and the prime verbs had one to three syllables. The two prime verb sets were matched for syllable length, unaccusative = 1.65, transitive = 1.63, t(38) = 0.632, p > .05, and for number of written letters, unaccusative = 5.25, transitive = 5.47, t(38) = 0.131, p > .05.
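The degrees of freedom for these matching tests follow directly from the item counts in the paragraph above. The sketch assumes a standard one-way ANOVA across the three verb sets and a pooled-variance independent-samples t test for the two prime sets. The helper names are hypothetical.

```python
def one_way_anova_df(group_sizes):
    """Between- and within-group degrees of freedom for a one-way ANOVA."""
    k = len(group_sizes)
    n_total = sum(group_sizes)
    return k - 1, n_total - k

def independent_t_df(n1, n2):
    """Degrees of freedom for a pooled-variance independent-samples t test."""
    return n1 + n2 - 2

# Verb sets from the Stimuli paragraph: 11 alternating targets,
# 20 unaccusative primes, 20 transitive primes.
freq_df = one_way_anova_df([11, 20, 20])
prime_df = independent_t_df(20, 20)
print(f"log-frequency ANOVA: F{freq_df}")   # F(2, 48)
print(f"prime-set t tests: t({prime_df})")  # t(38)
```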

Each target verb was used twice in both the transitive and the unaccusative target conditions, with the exception of bounce and play. This yielded 20 items in each of the transitive and unaccusative target conditions (see online supplemental materials, Supplemental Table 2). Semantic (e.g., roll–push) and phonological (e.g., break–bite) relatedness between target and prime verbs was avoided. In constructing the target sentences, each verb was combined with different imageable nouns across sentences when possible. Across the transitive and unaccusative target conditions, the nouns were matched for word length (subjects of transitive targets = 1.33 syllables, objects of transitive targets = 1.33 syllables, subjects of unaccusative targets = 1.43 syllables; ps > .05, t tests) and log lemma frequency (subjects of transitive targets = 1.84, objects of transitive targets = 1.68, subjects of unaccusative targets = 1.72; CELEX, Baayen et al., 1993; ps > .05, t tests). Black-and-white line drawings of the target sentences were prepared, and only pictures that elicited at least 80% target responses during norming (10 native English speakers) were included.

Procedure

Familiarization Task

Participants were familiarized with the target nouns and verbs in isolation. This was intended to increase consistency in the words produced in the sentence production experiment and to minimize confounds arising from word-retrieval difficulties in participants with aphasia. For nouns, participants saw the experimental pictures with a red square drawn around the target actor or object and were asked to name what or who was in the box (e.g., bell, nurse). For verbs, an auditory word–picture matching task was used: Participants saw four pictures and pointed to the picture that matched the verb spoken by the examiner. In addition, an action-naming task was administered, in which participants named the pictured action with a single word. For both nouns and verbs, feedback was provided when a participant gave a nontarget response (e.g., “You can also say roll for this picture”). However, participants were not instructed to remember or use the target words during the sentence production task. All participants performed at or above 70% accuracy on the familiarization tasks.

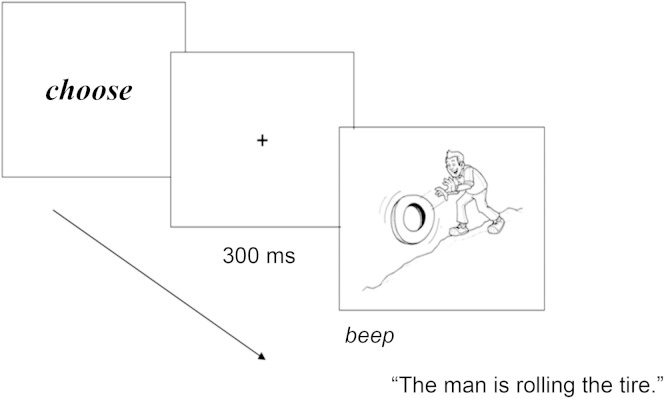

Online Sentence Production Task

A picture description task was combined with oral reading of a lexical prime (see Figure 4). Participants were instructed to read the word aloud when they saw a written word and to produce a sentence when they saw a picture, speaking as quickly and as accurately as possible. After reading the prime verb, participants pressed the space bar to advance to a 300-ms fixation cross, after which the target picture appeared together with a beep. Participants' speech was recorded using Praat software.

Figure 4.

A sample trial for Experiment 2.

Data Analyses

Production accuracy was scored for each participant on the basis of correctly and fluently produced sentences. Correct responses were sentences in which the target nouns and verb were produced in the correct order. The following were accepted: semantically related nouns referring to the target referent (e.g., woman, girl, lady), different verb inflections (e.g., broke, is breaking, breaks), and modifiers (e.g., “the young boy” for “the boy”). Phonological paraphasias containing at least 60% of the target phonemes and mild dysarthric distortions were also accepted.

The following responses were scored as errors: use of nontarget verbs; production of nontarget sentence structures (e.g., “The stick is broken by the man” for “The man is breaking the stick” or “The pot is boiling water” for “The water is boiling”); omission of an obligatory argument (e.g., “The man is rolling ____”); and semantic paraphasias (e.g., woman for man). Unintelligible or abandoned utterances, no responses, and “I don't know” responses were also excluded. Some responses contained disfluencies (interjections, revisions, or repetitions of a partial or whole word; e.g., “Uh, uh, the man is, uh, breaking . . .”) during production of the subject and verb. These were also excluded from correct responses, because disfluent utterances may involve different planning processes than fluent utterances. In addition, trials in which participants with aphasia misread the prime verb (e.g., verbal, semantic, or phonological paraphasias) were excluded from correct responses.

Speech onset latency was defined as the time from picture onset (marked by the beep, which co-occurred with the picture) to the onset of the sentential subject. It was measured manually in Praat. Responses with latencies longer than 2000 ms (controls) or 7000 ms (participants with aphasia) were excluded from further analysis.
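The scoring and exclusion rules above amount to a per-trial filter. The minimal sketch below rests on several assumptions. The `Trial` record, its field names, and the sample trials are hypothetical. Latency is taken as subject onset minus picture/beep onset. A trial is kept only if it is correct, fluent, preceded by a correctly read prime, and no slower than its group's cutoff. The prime-reading criterion applies to participants with aphasia in the text; for controls that field would simply be `True`.

```python
from dataclasses import dataclass

# Group-specific maximum onset latencies (ms) from the Data Analyses section.
LATENCY_CUTOFF_MS = {"control": 2000, "aphasia": 7000}

@dataclass
class Trial:
    group: str                  # "control" or "aphasia" (hypothetical labels)
    correct: bool               # target nouns and verb in the correct order
    fluent: bool                # no disfluency during the subject and verb
    prime_read_correctly: bool  # prime verb read aloud without error
    picture_onset_ms: float     # beep/picture onset
    subject_onset_ms: float     # onset of the sentential subject

    @property
    def onset_latency_ms(self) -> float:
        return self.subject_onset_ms - self.picture_onset_ms

def analyzable(trial: Trial) -> bool:
    """True if the trial is scored correct and its onset latency does not
    exceed the group-specific cutoff."""
    return (trial.correct and trial.fluent and trial.prime_read_correctly
            and trial.onset_latency_ms <= LATENCY_CUTOFF_MS[trial.group])

# Hypothetical trials for illustration.
trials = [
    Trial("control", True, True, True, 0.0, 1150.0),   # kept
    Trial("control", True, True, True, 0.0, 2300.0),   # exceeds control cutoff
    Trial("aphasia", True, True, True, 0.0, 3200.0),   # kept
    Trial("aphasia", False, True, True, 0.0, 3000.0),  # scored as an error
]
kept = [t for t in trials if analyzable(t)]
```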

Results

Production Accuracy

Table 3 shows the production accuracy and speech onset latency results. A 3 (group) × 2 (target sentence) × 2 (prime type) ANOVA on accuracy revealed a main effect of group, F(2, 56) = 75.31, p < .001, indicating that speakers with agrammatic aphasia performed more poorly than young and age-matched controls. There was a main effect of target sentence type, F(1, 56) = 20.04, p < .001, indicating higher accuracy for transitive than for unaccusative sentences. The main effect of prime type was also significant, F(1, 56) = 14.14, p < .001. None of the two- or three-way interactions was significant.

Table 3.

Production accuracy and speech onset latency data (SD in parentheses) from Experiment 2.

| Variable | Trans–trans | Unacc–trans | Unacc–unacc | Trans–unacc |
|---|---|---|---|---|
| Production accuracy (%) | | | | |
| Young | 92 (8) | 94 (7) | 88 (10) | 88 (9) |
| Age-matched | 90 (10) | 92 (7) | 81 (13) | 80 (11) |
| Agrammatic | 60 (19) | 61 (17) | 45 (18) | 53 (19) |
| Speech onset latency (ms) | | | | |
| Young | 1110 (116) | 1169 (104) | 1044 (122) | 1062 (105) |
| Age-matched | 1244 (158) | 1318 (175) | 1220 (188) | 1424 (175) |
| Agrammatic | 3099 (1120) | 3334 (1024) | 3330 (1339) | 3756 (1616) |

Note. Trans–trans = transitive prime–transitive target; Unacc–trans = unaccusative prime–transitive target; Unacc–unacc = unaccusative prime–unaccusative target; Trans–unacc = transitive prime–unaccusative target.
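Descriptive priming effects (inconsistent minus consistent) can be read off the group means in Table 3. The sketch below does that arithmetic. It is descriptive only. The reported significance tests depend on participant-level variability, which cell means alone do not capture.

```python
# Mean speech onset latencies (ms) for correct responses, from Table 3.
# Keys: (prime type, target type).
LATENCY = {
    "Young":       {("trans", "trans"): 1110, ("unacc", "trans"): 1169,
                    ("unacc", "unacc"): 1044, ("trans", "unacc"): 1062},
    "Age-matched": {("trans", "trans"): 1244, ("unacc", "trans"): 1318,
                    ("unacc", "unacc"): 1220, ("trans", "unacc"): 1424},
    "Agrammatic":  {("trans", "trans"): 3099, ("unacc", "trans"): 3334,
                    ("unacc", "unacc"): 3330, ("trans", "unacc"): 3756},
}

def priming_effect(cells, target):
    """Inconsistent-minus-consistent mean latency for one target type (ms).
    Positive values mean faster onsets after a VAS-consistent prime."""
    consistent = cells[(target, target)]
    inconsistent_prime = "unacc" if target == "trans" else "trans"
    return cells[(inconsistent_prime, target)] - consistent

effects = {group: {t: priming_effect(cells, t) for t in ("trans", "unacc")}
           for group, cells in LATENCY.items()}

for group, eff in effects.items():
    print(f"{group:<12} transitive: {eff['trans']:>4} ms  unaccusative: {eff['unacc']:>4} ms")
```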

Speech Onset Latency for Correct Responses

A 3 × 2 × 2 ANOVA on speech onset latencies revealed a main effect of group, F(2, 56) = 68.82, p < .001: Speakers with agrammatic aphasia showed significantly longer speech onset latencies than young and age-matched speakers. There were also main effects of target sentence type, F(1, 56) = 6.80, p < .05, and prime verb type, F(1, 56) = 18.77, p < .001. The interaction between target sentence type and group was significant, F(2, 56) = 3.89, p < .05, as was the interaction between prime type and group, F(2, 56) = 57.76, p < .001. The interaction between target sentence and prime type was not significant, F(1, 56) = 0.26, p > .05. Importantly, the target sentence × prime type × group interaction was significant, F(2, 56) = 8.29, p < .01.

A 2 × 2 repeated-measures ANOVA was then conducted for each group. Both young and age-matched speakers showed main effects of target sentence, with significantly longer speech onset times for transitive than for unaccusative sentences (young: F(1, 24) = 51.17, p < .001; age-matched: F(1, 19) = 4.47, p < .05). Both groups also showed overall shorter onset times after consistent than after inconsistent primes, although this effect was not reliable for age-matched speakers (young: F(1, 24) = 18.50, p < .001; age-matched: F(1, 19) = 3.40, p < .10). Importantly, both groups showed significant prime type × target sentence type interactions (young: F(1, 24) = 10.38, p < .01; age-matched: F(1, 19) = 14.89, p < .01). For transitive targets, both young and age-matched speakers showed significantly shorter speech onset latencies after a transitive prime verb than after an unaccusative prime verb (ps < .001, paired t tests). However, neither group showed a reliable priming effect for unaccusative sentences.

For speakers with agrammatic aphasia, the main effect of target sentence type was not significant, F(1, 13) = 2.86, p = .11, but there was a main effect of prime type, F(1, 13) = 37.67, p < .001. Unlike controls, speakers with agrammatic aphasia showed no significant prime × target sentence type interaction, F(1, 13) = 3.06, p = .104. They showed significant priming effects for both transitive, t(13) = 3.73, p < .01, and unaccusative, t(13) = 4.73, p < .001, target sentences.

Exploratory Analyses

A set of exploratory analyses was also conducted. First, because speakers with agrammatic aphasia produced more errors than controls, a 2 × 2 ANOVA was conducted on their speech onset latencies for incorrectly produced sentences. To limit variability in speech onset latencies, only fluently produced nontarget sentences (i.e., sentences with incorrect functional structures; n = 235; 47% of total errors) were entered into the analysis. In contrast with the findings for correct responses, there were no main effects of target sentence or prime type and no interaction between them. The condition means were as follows:

- Transitive prime–transitive target: M = 3871 ms, SD = 2019.
- Unaccusative prime–transitive target: M = 3536 ms, SD = 1296.
- Unaccusative prime–unaccusative target: M = 3712 ms, SD = 1452.
- Transitive prime–unaccusative target: M = 3718 ms, SD = 1487.

Second, Pearson correlations were computed between the magnitude of VAS priming effects and the performance of speakers with agrammatic aphasia on each subsection of the WAB-R, NAVS, and NAVI (see Table 1). Priming magnitude was defined as the difference in speech onset latencies between the consistent and inconsistent prime conditions. The purpose of this analysis was to explore whether participants' impairments in different linguistic domains (e.g., single-word processing, auditory comprehension, syntactic production) are meaningfully related to VAS priming effects. The WAB-R aphasia quotient, a composite score of overall aphasia severity, was therefore excluded from the analysis.
For speakers with agrammatic aphasia, VAS priming effects were greater in the unaccusative condition, but not in the transitive condition, for those with greater impairments on three measures:

- Production of noncanonical sentences (Sentence Production Priming Test, Noncanonical Sentences [SPPT-NC] of the NAVS; r = −.593, p < .05).
- Fluency and syntactic complexity in connected speech (Fluency subtest of the WAB-R; r = −.571, p < .05).
- Repetition of utterances of increasing length and complexity (Repetition subtest of the WAB-R; r = −.551, p < .05).

No other measures correlated significantly with priming effects, including noun and verb naming, auditory comprehension of words and of simple and complex sentences, and production of finite and nonfinite verb inflections.
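The correlation analysis pairs each participant's priming magnitude with a test score. The minimal sketch below assumes two things: priming magnitude is a simple difference of mean latencies (inconsistent minus consistent), and the analysis uses the textbook Pearson product-moment formula. The function names and the example numbers are hypothetical and are not participant data. On Python 3.10+, `statistics.correlation` computes the same coefficient.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def vas_priming(consistent_ms, inconsistent_ms):
    """Per-participant VAS priming magnitude: mean latency after inconsistent
    primes minus mean latency after consistent primes (ms)."""
    return (sum(inconsistent_ms) / len(inconsistent_ms)
            - sum(consistent_ms) / len(consistent_ms))

# Hypothetical illustration: lower test scores paired with larger priming
# effects yield a negative r, the direction of the correlations reported above.
scores = [20, 35, 50, 65]
priming = [600, 450, 300, 150]
print(round(pearson_r(scores, priming), 3))
```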

Discussion

The time course of GE in speakers with agrammatic aphasia and young and age-matched control speakers was examined, focusing on lexicalization (Experiment 1) and functional structure building (Experiment 2) processes. In Experiment 1, our speakers with agrammatic aphasia, like young and age-matched controls, showed significant codability effects (longer gaze durations for low-codable than for high-codable pictures) for noun A only before speech onset. This pattern is consistent with the word-by-word model (Griffin, 2001; Schriefers et al., 1998). In Experiment 2, young and age-matched speakers showed significant VAS priming effects in transitive sentences but not in unaccusative sentences. In contrast, our speakers with agrammatic aphasia showed VAS priming effects in both transitive and unaccusative sentences, consistent with the structural model (Ferreira, 2000; Kempen & Huijbers, 1983; Lindsley, 1975, 1976).

The findings from our young and age-matched control speakers suggest that normal sentence production is highly incremental, with some strategic flexibility (Ferreira & Swets, 2002; Wagner & Jescheniak, 2010). The data from Experiment 1 suggest that speakers are eager to start speaking as soon as they have formulated the smallest unit of linguistic structure, a single lemma (e.g., Bock & Warren, 1985; De Smedt, 1990; McDonald, Bock, & Kelly, 1993; Schriefers et al., 1998; Spieler & Griffin, 2006). In Experiment 2, our young and age-matched control speakers strategically adapted their planning to different sentence types. Transitive sentences offer two candidates (agent and theme) for the subject position, and speakers appear to use verb information to decide which one to produce as the sentential subject. For unaccusatives, they produce the only candidate (theme) in subject position by default upon retrieving its lemma and encode the verb during speech (see Lee & Thompson, 2011b, for a parallel pattern of eye movement data for unaccusative sentences). These findings are in line with previous studies showing that normal speakers choose different degrees of advanced planning in different contexts (Ferreira & Swets, 2002; Konopka, 2012; Wagner & Jescheniak, 2010).

As predicted, our speakers with agrammatic aphasia showed clearly different production patterns in the two experiments. They showed word-by-word planning when sentences mainly involved lexicalization, similar to controls, although selection of individual lemmas was delayed, as indicated by longer overall gaze durations to the pictures. In contrast, when required to generate sentence structure, they consistently used structural planning, encoding VAS before speech onset. This pattern persisted even for unaccusative sentences. The early encoding of VAS seen in speakers with agrammatic aphasia (see also Lee & Thompson, 2011a, 2011b) therefore does not appear to be a strategic adaptation to the number of entities depicted in the picture stimulus. Previous eye-tracking studies compared production of sentences with arguments and adjuncts (Lee & Thompson, 2011a) and of sentences with unaccusative and unergative verbs (Lee & Thompson, 2011b). Taken together with those studies, the present findings suggest that encoding VAS at the earliest stage of sentence planning is a persistent pattern in agrammatic speech whenever sentence production involves functional structure generation.

These findings shed light on previous accounts of agrammatic sentence production. Our speakers with agrammatic aphasia used different planning units from control speakers, depending on the GE processes required for sentence production. This suggests that the time courses of lexicalization and functional structure generation are affected differentially in agrammatic production, refining the impaired GE account (e.g., Bastiaanse & van Zonneveld, 2004, 2005; Caramazza & Miceli, 1991; Kim & Thompson, 2000; Lee & Thompson, 2004). Our findings also suggest that agrammatic production cannot simply be viewed as a strategic adaptation to a limited memory buffer or limited cognitive resources (e.g., de Roo et al., 2003; Haarmann & Kolk, 1991; Kolk, 1995; Kolk & Heeschen, 1990; Rossi et al., 2003). Contrary to the predictions of Kolk and colleagues' account, our speakers with agrammatic aphasia did not plan sentences in smaller linguistic units than control speakers did. Rather, they engaged a larger planning unit as task complexity increased from Experiment 1 to Experiment 2. One might argue that their longer speech onset latencies relative to control speakers support an account based on slowed processing or slowed activation of information. However, this argument also fails, because our speakers with agrammatic aphasia were not just slower; they engaged in different planning processes than did control speakers.

Findings from this study support previous studies indicating that word-by-word incrementality is used by speakers without impairment, leading to efficient use of cognitive resources and minimal memory buffer demands (De Smedt, 1990; Ferreira & Slevc, 2007). Structural GE is also used by speakers without impairment, particularly when multiple candidates are available for the subject position. It provides a look-ahead to upcoming structure despite increased memory load (Bock et al., 2004). This strategy reduces utterance repairs and minimizes the risk of reaching a “syntactic dead end” (De Smedt, 1990, p. 13). For speakers with impairment, correct sentence production may rely on developing a schematic relational structure for functional structure building, afforded by structural GE (Lee & Thompson, 2011a, 2011b). Although quite preliminary, this idea is supported by the absence of VAS priming effects when our speakers with agrammatic aphasia produced incorrect responses. This suggests that structural GE is a successful strategy that may help these speakers overcome sentence production deficits. It remains unclear whether speakers with agrammatic aphasia retrieve all lemmas for an entire clause before the functional role of the subject is decided (Ford, 1982; Ford & Holmes, 1978). Nevertheless, the current findings indicate that, by retrieving a VAS configuration, speakers with agrammatic aphasia can merge the first retrieved lemma into its appropriate sentential position, which in turn results in better sentence production. In terms of cognitive resource allocation, loading the prespeech memory buffer with a larger linguistic unit may reduce demands or conflicts arising from concurrent grammatical, phonological, and articulatory planning during speech.

The findings also have implications for aphasia rehabilitation: Different planning strategies may suit individuals presenting with lexicalization deficits versus functional structure generation deficits. For deficits in lexicalization and linear ordering of words, a treatment regimen using a smaller planning unit, such as a single word or phrase, may lead to improved production. For deficits in functional structure generation, structural linking on the basis of VAS may be helpful, as shown in previous treatment studies (Rochon, Laird, Bose, & Scofield, 2005; Thompson, 2007; Thompson, Riley, den Ouden, Meltzer-Asscher, & Lukic, 2013).

This study sheds light on the relation between a speaker's linguistic capacity and the mechanisms of language production. It shows that impaired GE relies on hierarchical syntactic structure (i.e., VAS) to drive the serial ordering of words in sentences, whereas normal sentence production does not require it. An open question for future investigation is whether the use of a structural GE strategy is specific to impaired syntactic production or is engaged more generally whenever speakers' language abilities are compromised across the board. The results of our exploratory correlation analysis appear to point to the former. Our speakers with agrammatic aphasia who performed more poorly on measures reflecting syntactic complexity in production showed greater VAS priming effects. These measures were the Sentence Production Priming Test, Noncanonical Sentences of the NAVS and the Fluency and Repetition subtests of the WAB-R. If structural planning were a strategy adopted by any speaker with linguistic challenges, other measures should also have correlated with VAS priming effects in our participants with agrammatic aphasia. These include measures of lexical retrieval, auditory comprehension of simple and/or complex sentences, and production of morphology. This was not borne out in our data. Further investigation is needed to elucidate how different cognitive–linguistic capacities support incremental production.

In conclusion, two online sentence production experiments examined GE units in speakers with agrammatic aphasia and control speakers. Results from young and age-matched control speakers show that sentence production is largely incremental, with some strategic flexibility in the degree of incrementality. Word-by-word incrementality is preferred. However, when speakers are uncertain about the starting point, the human language production system also uses the abstract syntactic configuration of VAS from the earliest stage of sentence generation (Bock et al., 2004). Word-by-word strategies are also accessible to individuals with impaired production systems, who plan word by word when sentences mainly require lexicalization, similar to young and age-matched controls. However, when sentences involve functional structure generation, speakers with impairment consistently encode VAS before speech onset, in keeping with the structural model. These findings suggest that structural incrementality is used more by speakers with impairment than by speakers without impairment. Speakers with agrammatic aphasia thus appear to compensate for impaired sentence production processes by using a larger syntactic planning unit before speech onset.

Supplementary Material

Acknowledgments

This study was supported by a School of Communication Graduate Research Ignition Grant from Northwestern University (J. Lee), NIH R01-DC01948 (C. K. Thompson), and NSF BCS-1323245 (M. Yoshida). The authors thank the individuals with aphasia for their participation.


References

- Abboud H., Schultz H., & Zeitlin V. (2008). SuperLab 4.0 [Computer software]. San Pedro, CA: Cedrus Corporation.

- Baayen R. H., Piepenbrock R., & van Rijn H. (1993). The CELEX Lexical Database (Release 1) [Database]. Philadelphia, PA: Linguistic Data Consortium, University of Pennsylvania.

- Bastiaanse R., & van Zonneveld R. (2004). Broca's aphasia, verbs and mental lexicon. Brain and Language, 90, 198–202.

- Bastiaanse R., & van Zonneveld R. (2005). Sentence production with verbs of alternating transitivity in agrammatic Broca's aphasia. Journal of Neurolinguistics, 18, 57–66.

- Bock J. K., & Levelt W. J. M. (1994). Language production: Grammatical encoding (Vol. 29). New York, NY: Academic Press.

- Bock K., Irwin D. E., & Davidson D. J. (2004). Putting first things first. In Henderson J. M. & Ferreira F. (Eds.), The integration of language, vision, and action: Eye movements and the visual world (pp. 249–277). New York, NY: Psychology Press.

- Bock K., & Warren R. K. (1985). Conceptual accessibility and syntactic structure in sentence formulation. Cognition, 21, 47–67.

- Burzio L. (1986). Italian syntax. Dordrecht, The Netherlands: Reidel.

- Caramazza A., & Miceli G. (1991). Selective impairment of thematic role assignment in sentence processing. Brain and Language, 41, 402–436.

- De Bleser R., & Kauschke C. (2003). Acquisition and loss of nouns and verbs: Parallel or divergent patterns? Journal of Neurolinguistics, 16, 213–229.

- de Roo E., Kolk H., & Hofstede B. (2003). Structural properties of syntactically reduced speech: A comparison of healthy speakers and Broca's aphasics. Brain and Language, 86, 99–115.

- De Smedt K. (Ed.). (1990). IPF: An incremental parallel formulator. London, United Kingdom: Academic Press.

- Ferreira F. (2000). Syntax in language production: An approach using tree-adjoining grammars. In Wheeldon L. (Ed.), Aspects of language production (pp. 291–330). Cambridge, MA: MIT Press.

- Ferreira F., & Swets B. (2002). How incremental is language production? Evidence from the production of utterances requiring the computation of arithmetic sums. Journal of Memory and Language, 46, 57–84.

- Ferreira V. S., & Slevc L. R. (2007). Grammatical encoding. In Gaskell M. G. (Ed.), The Oxford handbook of psycholinguistics (pp. 453–470). Oxford, United Kingdom: Oxford University Press.

- Ford M. (1982). Sentence planning units: Implications for the speaker's representation of meaningful relations underlying sentences. Cambridge, MA: MIT Press.

- Ford M., & Holmes V. M. (1978). Planning units and syntax in sentence production. Cognition, 6, 35–53.

- Friedmann N., Taranto G., Shapiro L. P., & Swinney D. (2008). The leaf fell (the leaf): The online processing of unaccusatives. Linguistic Inquiry, 39, 355–377.

- Griffin Z. M. (2001). Gaze durations during speech reflect word selection and phonological encoding. Cognition, 82, B1–B14.

- Griffin Z. M., & Mouton S. S. (2004, March). Can speakers order a sentence's arguments while saying it? Poster presented at the 17th Annual CUNY Conference on Human Sentence Processing, College Park, MD.

- Grodzinsky Y. (1990). Theoretical perspectives on language deficits. Cambridge, MA: MIT Press.

- Haarmann H. J., & Kolk H. H. J. (1991). A computer model of the temporal course of agrammatic sentence understanding: The effects of variation in severity and sentence complexity. Cognitive Science, 15, 49–87.

- Haegeman L. (1994). Introduction to government and binding theory. Oxford, United Kingdom: Blackwell.

- Kegl J. (1995). Levels of representation and units of access relevant to agrammatism. Brain and Language, 50, 151–200.

- Kempen G., & Huijbers P. (1983). The lexicalization process in sentence production and naming: Indirect election of words. Cognition, 14, 185–209.

- Kempen G. K., & Hoenkamp E. (1987). An incremental procedural grammar for sentence formulation. Cognitive Science, 11, 201–258.

- Kertesz A. (2006). The Western Aphasia Battery–Revised. San Antonio, TX: Psychological Corporation.

- Kim M., & Thompson C. K. (2000). Patterns of comprehension and production of nouns and verbs in agrammatism: Implications for lexical organization. Brain and Language, 74(1), 1–25.

- Kiss K. (2000). Effects of verb complexity on agrammatic aphasics' sentence production. In Bastiaanse R. & Grodzinsky Y. (Eds.), Grammatical disorders in aphasia (pp. 123–151). London, United Kingdom: Whurr.

- Kolk H. H. J. (1995). A time-based approach to agrammatic production. Brain and Language, 50, 282–303.

- Kolk H. H. J., & Heeschen C. (1990). Adaptation symptoms and impairment symptoms in Broca's aphasia. Aphasiology, 4, 221–231.

- Konopka A. E. (2012). Planning ahead: How recent experience with structures and words changes the scope of linguistic planning. Journal of Memory and Language, 66, 143–162.

- Lee J., & Thompson C. K. (2011a). Real-time production of arguments and adjuncts in normal and agrammatic speakers. Language and Cognitive Processes, 26, 985–1021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lee J., & Thompson C. K. (2011b). Real-time production of unergative and unaccusative sentences in normal and agrammatic speakers: An eyetracking study. Aphasiology, 25, 813–825. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lee J., & Thompson C. K. (in preparation). Northwestern Assessment of Verb Inflection.

- Lee M., & Thompson C. K. (2004). Agrammatic aphasic production and comprehension of unaccusative verbs in sentence contexts. Journal of Neurolinguistics, 17, 315–330. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Levelt W. J. M. (1989). Speaking: From intention to articulation. Cambridge, MA: MIT Press. [Google Scholar]

- Levin B. (Ed.). (1993). English verb classes and alternations: A preliminary investigation. Chicago, IL: University of Chicago Press. [Google Scholar]

- Lindsley J. R. (1975). Producing simple utterances: How far ahead do we plan? Cognitive Psychology, 7(1), 1–19.

- Lindsley J. R. (1976). Producing simple utterances: Details of the planning process. Journal of Psycholinguistic Research, 5, 331–354.

- Linebarger M. C., Schwartz M. F., & Saffran E. M. (1983). Sensitivity to grammatical structure in so-called agrammatic aphasics. Cognition, 13, 361–392.

- Luzzatti C., Raggi R., Zonca G., Pistarini C., Contardi A., & Pinna G. D. (2002). Verb-noun double dissociation in aphasic lexical impairments: The role of word frequency and imageability. Brain and Language, 81, 432–444.

- Mack J. E., Ji W., & Thompson C. K. (2013). Effects of verb meaning on lexical integration in agrammatic aphasia: Evidence from eyetracking. Journal of Neurolinguistics, 26, 619–636.

- Martin R. C., & Freedman M. L. (2001). Short-term retention of lexical-semantic representations: Implications for speech production. Memory, 9, 261–280.

- McDonald J. L., Bock K., & Kelly M. H. (1993). Word and world order: Semantic, phonological, and metrical determinants of serial position. Cognitive Psychology, 25, 188–230.

- Perlmutter D. (1978). Impersonal passives and the unaccusative hypothesis. In Jaeger J. (Ed.), Proceedings of the 4th Annual Meeting of the Berkeley Linguistics Society (pp. 157–190). Oakland, CA: University of California Press.

- Rochon E., Laird L., Bose A., & Scofield J. (2005). Mapping therapy for sentence production impairments in nonfluent aphasia. Neuropsychological Rehabilitation, 15, 1–36.

- Rossi H., Borgo F., Semenza C., Zuodar S., de Roo E., & Kolk H. (2003). Syntactically reduced speech in Italian Broca's aphasics and normal speakers. Brain and Language, 87, 75–76.

- Rossion B., & Pourtois G. (2004). Revisiting Snodgrass and Vanderwart's object pictorial set: The role of surface detail in basic-level object recognition. Perception, 33(2), 217–236.

- Schriefers H., Teruel E., & Meinshausen R. M. (1998). Producing simple sentences: Results from picture-word interference experiments. Journal of Memory and Language, 39, 609–632.

- Shapiro L. P., & Levine B. A. (1990). Verb processing during sentence comprehension in aphasia. Brain and Language, 38, 21–47.

- Shapiro L. P., Nagel N., & Levine B. A. (1993). Preferences for a verb's complements and their use in sentence processing. Journal of Memory and Language, 32, 96–114.

- Snodgrass J. G., & Vanderwart M. (1980). A standardized set of 260 pictures: Norms for name agreement, image agreement, familiarity, and visual complexity. Journal of Experimental Psychology: Human Learning and Memory, 6, 174–215.

- Spieler D. H., & Griffin Z. M. (2006). The influence of age on the time course of word preparation in multiword utterances. Language and Cognitive Processes, 21, 291–321.

- Thompson C. K., Lange K. L., Schneider S. L., & Shapiro L. P. (1997). Agrammatic and non-brain-damaged subjects' verb and verb argument structure production. Aphasiology, 11, 473–490.

- Thompson C. K. (2007). Complexity in language learning and treatment. American Journal of Speech-Language Pathology, 16, 3–5.

- Thompson C. K. (2011). Northwestern Assessment of Verbs and Sentences. Evanston, IL: Northwestern University.

- Thompson C. K., & Choy J. J. (2009). Pronominal resolution and gap filling in agrammatic aphasia: Evidence from eye movements. Journal of Psycholinguistic Research, 38, 255–283.

- Thompson C. K., Riley E., den Ouden D., Meltzer-Asscher A., & Lukic S. (2013). Training verb argument structure production in agrammatic aphasia: Behavioral and neural recovery patterns. Cortex, 48, 2358–2376.

- Wagner V., & Jescheniak J. D. (2010). On the flexibility of grammatical advance planning during sentence production: Effects of cognitive load on multiple lexical access. Journal of Experimental Psychology: Learning, Memory, and Cognition, 36, 423–440.

- Wulfeck B., Bates E., & Capasso R. (1991). A cross-linguistic study of grammaticality judgments in aphasia. Brain and Language, 41, 311–336.
