Abstract

Despite federal incentives for adoption of electronic health records (EHRs), surveys have shown that EHR use is less common among specialty physicians than generalists. Concerns have been raised that current-generation EHR systems are inadequate to meet the unique information gathering needs of specialists. This study sought to identify whether information gathering needs and EHR usage patterns are different between specialists and generalists, and if so, to characterize their precise nature. We found that specialists and generalists have significantly different perceptions of which elements of the EHR are most important and how well these systems are suited to displaying clinical information. Resolution of these disparities could have implications for clinical productivity and efficiency, patient and physician satisfaction, and the ability of clinical practices to achieve Meaningful Use incentives.

Introduction

Electronic health records (EHRs) have become an increasingly critical component of modern health care delivery and are used in all clinical disciplines.1 Currently, 59% of hospitals and 48% of office-based providers use EHRs.2,3 However, despite the growing ubiquity of these systems, adoption still varies substantially between clinical disciplines.1,3 Specifically, adoption rates within surgical and medical specialties are approximately one half those of primary care physicians.1,4,5

In addition to these disparities in adoption rate, concerns exist that different medical fields may have varying levels of compatibility with current-generation EHRs. Numerous medical specialty societies have expressed the need for specialty-specific systems to meet the unique needs of their respective fields, including ophthalmology, orthopedic surgery, dermatology, oncology, obstetrics/gynecology, pediatrics, and pathology.6–12 These unique needs include differing workflow, information gathering, and clinical documentation requirements along with variations in baseline clinical volume, billing and compliance requirements, and specialty-specific terminology. Nonetheless, the widespread adoption of EHRs in the United States continues to increase, driven largely by federal incentives through the Centers for Medicare and Medicaid Services (CMS) Meaningful Use program.2,13 In 2015, penalties will begin to be levied against health care organizations that fail to meet several key EHR implementation requirements,14 further incentivizing EHR adoption and making the avoidance of EHRs less practical for physicians, regardless of how suitable such systems are to their specialty-specific needs.

There are numerous potential implications if current-generation EHRs do not function adequately across all medical disciplines. These include decreased physician and patient satisfaction, impaired productivity and efficiency, and difficulty meeting Meaningful Use requirements.15–18 However, to date there is no empirical evidence as to whether such a disparity in functionality exists among clinical disciplines, or what the nature of any differences in information-gathering needs might be. While various disciplines have expressed differing ideas of how the EHR should function and what it should provide, there is no evidence that these groups use current-generation EHRs differently from each other in clinical practice.19,20 To better meet the health information technology needs of all clinical disciplines, it is imperative to determine whether such interdisciplinary differences exist and to identify their precise nature. To accomplish this, we developed a survey to characterize three parameters of physicians’ methods of clinical information gathering using EHRs when evaluating a new patient: 1) how the EHR is incorporated into typical clinical workflow, 2) which elements of the chart are most important and useful to the clinician, and 3) the strengths and weaknesses of the electronic chart in displaying relevant clinical information. These parameters were then compared between specialty and primary care physicians to identify any differences.

Methods

This study was approved by the Institutional Review Board at Oregon Health & Science University (Portland, OR). Acknowledgement of an information sheet by survey participants was used in lieu of informed consent.

Survey Development

The authors developed an 18-question survey for data collection (Appendix). When answering the survey questions, respondents were asked to envision evaluating a new patient rather than performing a follow-up visit, because the former is a situation that physicians of all specialties have experience with, and because it provides the greatest insight into the strengths and weaknesses of the interaction between physician and EHR. Respondents were also instructed to envision using their own, most commonly used EHR rather than a generic EHR system. Demographic characteristics were also collected, including primary clinical specialty, gender, clinical experience (years since graduation from medical school), level of computer experience, and primary practice setting (ambulatory vs. inpatient). The survey also included an optional free-text field for any additional thoughts or comments. Survey reliability was confirmed using test-retest and alternate-form methods.21 Specifically, the survey was administered twice to fourth-year medical students, with the two administrations separated by one week; there was 91% agreement between pre- and post-test responses. Survey content and construct validity were established iteratively through expert interviews and feedback.22
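The 91% figure is a percent agreement between the two administrations. The article does not say how agreement was aggregated, so the Python sketch below assumes the simplest reading: the share of paired item responses that were identical across the two administrations. The function name `percent_agreement` and the sample answers are hypothetical.

```python
from typing import Sequence


def percent_agreement(first: Sequence[str], second: Sequence[str]) -> float:
    """Share (0-100) of paired items answered identically in two administrations."""
    if len(first) != len(second):
        raise ValueError("both administrations must cover the same items")
    if not first:
        raise ValueError("no paired responses to compare")
    matches = sum(a == b for a, b in zip(first, second))
    return 100.0 * matches / len(first)


# Hypothetical answers from one respondent, one week apart (not study data).
week_0 = ["chart", "before room", "2-5 min", "yes", "no"]
week_1 = ["chart", "before room", "5-10 min", "yes", "no"]
print(percent_agreement(week_0, week_1))
```

Here four of five items match, so the sketch reports 80.0; pooling the paired items of all students before dividing would give a single study-level figure.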

Survey Administration

An email containing a link to the questionnaire was distributed to all practicing physicians at three health care organizations in Oregon (Oregon Health & Science University/Portland VA Medical Center, PeaceHealth Medical System, and Legacy Emanuel Medical Center) and one in Pennsylvania (Children’s Hospital of Philadelphia). These institutions were selected because of the wide variety of primary care and specialty disciplines represented at each site, and because they represented a mix of academic and community-based practices. The email was resent to all recipients one month later. The survey was administered using REDCap electronic data capture tools hosted at Oregon Health & Science University.23

Statistical Analysis

The primary purpose of this study was to compare several aspects of physicians’ information gathering methods using the EHR across different clinical disciplines. In order to perform this comparison, individual disciplines were combined into two groups: the Specialty group and the Primary Care group. Primary Care was considered to include General Internal Medicine, General Pediatrics, Family Medicine, and Geriatrics, in accordance with the definition of the term provided by Medicare.24 The Specialty group was defined as any clinical discipline other than these four Primary Care disciplines, and in this case included respondents from Obstetrics & Gynecology, Ophthalmology, Orthopedics, General Surgery, Surgical Sub-Specialties, Emergency Medicine, Internal Medicine Sub-Specialties, and Pediatric Sub-Specialties.
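The grouping rule amounts to a two-level mapping in which anything outside the four Medicare primary care disciplines counts as Specialty. A minimal Python sketch follows; the `study_group` name and the exact discipline strings are illustrative assumptions, and respondents without a relevant specialty are assumed to have been excluded beforehand, as described in the Results.

```python
# The four Primary Care disciplines named in the text (Medicare definition).
PRIMARY_CARE = frozenset({
    "General Internal Medicine",
    "General Pediatrics",
    "Family Medicine",
    "Geriatrics",
})


def study_group(discipline: str) -> str:
    """Map a self-reported discipline to the study's two-level predictor.

    Any discipline not in PRIMARY_CARE is treated as Specialty.
    """
    return "Primary Care" if discipline in PRIMARY_CARE else "Specialty"


for d in ["Family Medicine", "Pediatrics Sub-Specialty", "Ophthalmology"]:
    print(d, "->", study_group(d))
```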

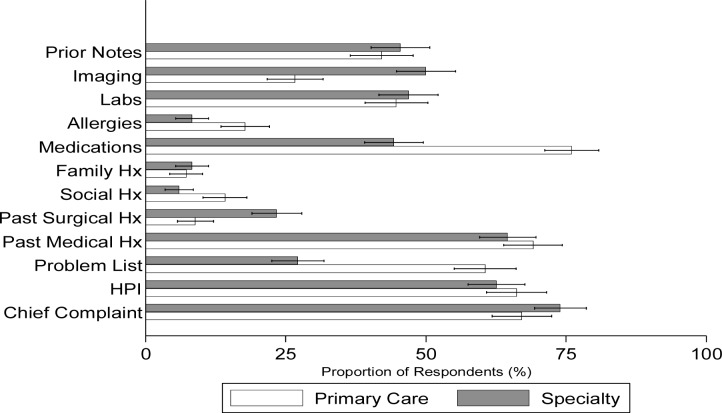

Three primary outcomes were compared between the Specialty and Primary Care groups: Outcome 1) how the EHR is incorporated into typical clinical workflow (Table 3); Outcome 2) which elements of the chart are most important and useful to the clinician (Question 15; Figure 1); and Outcome 3) the strengths and weaknesses of the electronic chart in displaying relevant clinical information (Questions 17 and 18; Tables 4 and 5). Categorical responses were assessed using the Pearson Chi2 test followed by multinomial logistic regression adjusting for the covariates listed previously. Binary outcomes were assessed using the Pearson Chi2 test followed by multivariable logistic regression. Ordinal outcomes were assessed using the Cochran-Armitage test for trend. One question (Question 15) allowed multiple categorical responses per respondent; in this case, proportions and 95% confidence intervals were compared between the Specialty and Primary Care groups for each potential response. Likert-type scale responses followed a nearly normal distribution and were treated as continuous variables. For each Likert-type question, a composite score was calculated for each respondent as the mean of that respondent's ratings across all sub-items, and these composite scores were compared between the two main predictor groups using multivariable linear regression. Thus, overall scores for the ability of the EHR to display needed clinical information (Question 17) and for the severity of barriers to accessing needed information in the EHR (Question 18) were obtained from each respondent and compared between the Specialty and Primary Care groups. All analyses were performed using Stata SE 12 (StataCorp, College Station, TX).
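The composite score for a Likert-type question can be sketched as a per-respondent mean across its sub-items, followed by a group average. The helper names are hypothetical, the answers are invented, and ignoring skipped sub-items is an assumption; the adjusted group comparison itself used multivariable linear regression, which this sketch does not attempt.

```python
from __future__ import annotations

from typing import Optional


def composite_score(ratings: list[Optional[int]]) -> Optional[float]:
    """Mean of one respondent's 1-5 Likert ratings across a question's sub-items.

    Skipped sub-items (None) are ignored; this missing-data rule is an
    assumption, not something the study specifies.
    """
    answered = [r for r in ratings if r is not None]
    if not answered:
        return None
    return sum(answered) / len(answered)


def group_mean(composites: list[Optional[float]]) -> float:
    """Average composite over respondents who answered at least one sub-item."""
    valid = [c for c in composites if c is not None]
    return sum(valid) / len(valid)


# Hypothetical Question 18 answers (six barrier items each); not study data.
specialty = [[2, 3, 4, 3, 2, 4], [3, 3, None, 4, 3, 3]]
primary_care = [[2, 2, 3, 3, 3, 3], [None] * 6]

sp_scores = [composite_score(r) for r in specialty]     # 3.0 and 3.2
pc_scores = [composite_score(r) for r in primary_care]  # about 2.67, then None
print(group_mean(sp_scores), group_mean(pc_scores))
```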

Table 3:

Comparison of EHR Use Practices Between Specialty and Primary Care Physicians

| | SPECIALTY, n (%) | PRIMARY CARE, n (%) | P |
|---|---|---|---|
| Initial source of information on a new patient | | | 0.02ᵃ |
| Other physician (referring provider) | 53 (16) | 23 (8) | |
| Patient chart | 167 (50) | 153 (52) | |
| The patient | 98 (30) | 109 (37) | |
| Technician/ancillary staff | 4 (1) | 4 (1) | |
| Other | 9 (3) | 7 (2) | |
| Timing of initial chart review | | | <0.01ᵇ |
| Before entering patient room | 296 (89) | 244 (83) | |
| In room with patient or after exiting the room | 36 (11) | 51 (17) | |
| Duration of initial chart review | | | 0.91ᶜ |
| 0–2 minutes | 57 (17) | 63 (21) | |
| >2–5 minutes | 124 (37) | 97 (33) | |
| >5–10 minutes | 90 (27) | 72 (24) | |
| >10 minutes | 61 (18) | 63 (21) | |

ᵃ Pearson Chi2 test; association confirmed by multinomial logistic regression adjusting for level of training, clinical experience, and inpatient vs. outpatient practice setting.

ᵇ Multivariable logistic regression adjusting for clinical experience and inpatient vs. outpatient practice setting.

ᶜ Cochran-Armitage test for trend.

Figure 1:

Relative Importance of Various EHR Elements among Primary Care and Specialty Physicians. Proportion of respondents ranking the indicated section among the top 5 “most important” EHR elements. Hx=history; HPI=history of present illness.

Table 4:

Ease of Accessing Different Types of Information in the EHR, Ranked on a Likert-type scale from 1 (very good) to 5 (very bad)

| EHR ELEMENT | SPECIALTY, Mean ± SD | PRIMARY CARE, Mean ± SD |
|---|---|---|
| Laboratory Results | 2.04 ± 1.05 | 2.04 ± 1.11 |
| Imaging | 2.25 ± 1.24 | 2.25 ± 1.18 |
| Vital Signs | 2.27 ± 1.05 | 1.94 ± 0.96 |
| Medication List | 2.35 ± 1.18 | 2.23 ± 1.16 |
| Procedure Notes | 2.37 ± 1.04 | 2.62 ± 1.10 |
| Operative Reports | 2.37 ± 1.04 | 2.70 ± 1.10 |
| History & Physical Documentation | 2.37 ± 1.34 | 2.80 ± 1.74 |
| Outpatient Clinical Documentation | 2.42 ± 1.14 | 2.24 ± 1.08 |
| Discharge Summary | 2.43 ± 1.08 | 2.28 ± 1.06 |
| Problem List | 2.50 ± 1.17 | 2.40 ± 1.27 |
| Inpatient Progress Notes | 2.51 ± 1.20 | 2.44 ± 1.22 |
| ICU Bedside Data | 2.65 ± 1.20 | 2.61 ± 1.22 |

SD=standard deviation

ICU=intensive care unit

Table 5:

Severity of Six Potential Barriers to Accessing Information in the EHR Ranked on a Likert-type scale from 1 (not a barrier) to 5 (severe barrier)

| POTENTIAL BARRIER | SPECIALTY, Mean ± SD | PRIMARY CARE, Mean ± SD |
|---|---|---|
| “Information in the chart is inaccurate” | 2.94 ± 1.29 | 2.76 ± 1.24 |
| “Information I need is not in the chart” | 3.11 ± 1.24 | 2.98 ± 1.18 |
| “I can’t find it in the chart” | 3.23 ± 1.23 | 2.88 ± 1.28 |
| “Too much information” | 3.27 ± 1.31 | 3.20 ± 1.31 |
| “Information is poorly displayed/difficult to interpret” | 3.29 ± 1.20 | 3.00 ± 1.25 |
| “Others don’t record information consistently” | 3.38 ± 1.09 | 3.26 ± 1.13 |

SD=standard deviation

Results

Participant Demographics

Of the 3,649 physicians who received the survey link, 744 completed the questionnaire. This yielded a response rate of 20.4%. Of these 744 respondents, 90 were excluded either because they were not actively practicing medicine, did not use an EHR on a regular basis, or did not identify with a relevant clinical specialty, resulting in 654 responses being included in the final analysis. Three hundred fifty respondents (54%) identified with a clinical discipline in the “Specialty” group, and 304 (46%) with a discipline in the “Primary Care” group (Table 1). Subjectively, there were minimal differences between these groups with respect to clinical experience (number of years in practice), baseline computer experience, level of training, and primary practice environment (ambulatory vs. inpatient) (Table 2). However, there was a slightly higher proportion of males in the Specialty group (57%) compared to Primary Care (51%). A total of 13 EHR vendors were utilized by study participants; the most common of these were Epic (Verona, WI; 71%), Centricity (GE Healthcare, UK; 5%), CPRS/Vista (US Department of Veterans Affairs; 5%), Cerner (Kansas City, MO; 3%), and Allscripts (Chicago, IL; 3%).

Table 1:

Clinical Disciplines Represented Within a Survey of 654 Practicing Physicians in the US

| CLINICAL DISCIPLINE | n (%) |
|---|---|
| Specialty | 350 (54) |
| Pediatrics Sub-Specialty | 157 (24) |
| Internal Medicine Sub-Specialty | 65 (10) |
| Ophthalmology | 46 (7) |
| Surgical Sub-Specialty | 26 (4) |
| Emergency Medicine | 20 (3) |
| Obstetrics & Gynecology | 17 (3) |
| Orthopedics | 10 (2) |
| General Surgery | 9 (1) |
| Primary Care | 304 (46) |
| General Pediatrics | 169 (26) |
| General Internal Medicine | 101 (15) |
| Family Medicine | 34 (5) |

Table 2:

Demographic Characteristics of the 654 Physicians Participating in the Survey

| CHARACTERISTIC | SPECIALTY, n (%) | PRIMARY CARE, n (%) |
|---|---|---|
| Gender | | |
| Male | 200 (57) | 156 (51) |
| Female | 150 (43) | 148 (49) |
| Baseline computer experience | | |
| Basic | 22 (7) | 35 (12) |
| Somewhat experienced | 262 (78) | 193 (64) |
| Very experienced | 54 (16) | 73 (24) |
| Level of training | | |
| Resident | 20 (6) | 56 (19) |
| Fellow | 42 (12) | 3 (1) |
| Attending Physician | 276 (82) | 242 (80) |
| Years in practiceᵃ | | |
| 1–10 | 108 (32) | 104 (35) |
| 11–20 | 98 (29) | 89 (30) |
| 21–30 | 66 (20) | 56 (19) |
| 31–40 | 51 (15) | 36 (12) |
| >40 | 14 (4) | 15 (5) |
| Primary practice environment | | |
| Ambulatory | 154 (52) | 158 (52) |
| Inpatient | 140 (48) | 143 (48) |

ᵃ Self-reported years since graduation from medical school.

Incorporation of the EHR Into Clinical Workflow (Outcome 1)

Approximately one half of physicians in both the Specialty and Primary Care groups used the EHR as the primary source of initial information when evaluating a new patient (Table 3). However, there were significant differences between the two groups with regard to the other sources of information utilized (Pearson Chi2 test; p=0.02). Multinomial logistic regression confirmed this association even after adjusting for differences in level of training, amount of clinical experience, and practice setting. Specifically, Specialty physicians were significantly more likely to utilize another physician as their initial source of information on a new patient (OR=2.09, p<0.01). Primary Care physicians were significantly more likely to utilize the patient as their initial source of information than their Specialty counterparts (OR=1.47, p=0.05). There were no significant differences between the two groups regarding the likelihood of using the patient chart or a technician/ancillary staff as the primary source of initial information on a patient (Table 3).
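As a check on the unadjusted comparison, the Pearson Chi2 statistic for the initial-source counts in Table 3 can be recomputed from the raw counts. The standard-library Python sketch below computes the p-value from the series for the regularized incomplete gamma function and applies no continuity correction (an assumption about the original analysis); the function names are hypothetical, and the adjusted multinomial logistic regression is not reproduced.

```python
from __future__ import annotations

import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square(df) variable.

    Uses the series for the regularized lower incomplete gamma P(df/2, x/2).
    """
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    term = 1.0 / a  # n = 0 term of sum z**n / (a (a+1) ... (a+n))
    total = term
    n = 1
    while term > total * 1e-16:
        term *= z / (a + n)
        total += term
        n += 1
    lower = math.exp(a * math.log(z) - z - math.lgamma(a)) * total
    return max(0.0, 1.0 - lower)


def pearson_chi_square(table: list[list[int]]) -> tuple[float, int, float]:
    """Pearson test of independence for an r x c table of counts (no correction).

    Returns (statistic, degrees of freedom, p-value).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(col_totals) - 1)
    return stat, df, chi2_sf(stat, df)


# Table 3, "Initial source of information on a new patient". Columns: other
# physician, patient chart, the patient, technician/ancillary staff, other.
initial_source = [
    [53, 167, 98, 4, 9],   # Specialty
    [23, 153, 109, 4, 7],  # Primary Care
]
stat, df, p = pearson_chi_square(initial_source)
print(f"Chi2 = {stat:.2f}, df = {df}, p = {p:.3f}")
```

On these counts the sketch gives Chi2 ≈ 11.37 with 4 degrees of freedom and p ≈ 0.023, consistent with the reported p=0.02. The technician/ancillary staff cells have expected counts below 5, so the chi-square approximation is less reliable there and an exact test would be a reasonable cross-check.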

Of the Specialty physicians surveyed, 296/332 (89%) reviewed the chart prior to entering the room with the patient, compared to 244/295 (83%) of Primary Care physicians (p=0.02). This relationship was not confounded by gender, amount of computer experience, or level of training. After adjusting for the amount of clinical experience and primary practice setting (ambulatory or inpatient), Primary Care physicians were still significantly more likely to delay chart review until during or after the patient encounter than Specialty physicians (OR=2.15, p<0.01). The duration of this initial chart review session was quite variable in both groups, with the majority of respondents indicating a time frame of 2–10 minutes (64% in the Specialty group and 57% in Primary Care). There was no significant difference between the two groups with respect to duration of chart review (p=0.91).
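The Cochran-Armitage test for trend treats the four duration categories in Table 3 as ordered and asks whether the proportion of Specialty respondents shifts steadily across them. A standard-library Python sketch follows. Equally spaced scores 0–3 for the four duration bins are an assumption (the study does not report its scores), the function name is hypothetical, and some implementations scale the variance by N/(N−1), which changes the result only slightly here.

```python
from __future__ import annotations

import math


def cochran_armitage(cases: list[int], totals: list[int],
                     scores: list[float]) -> tuple[float, float]:
    """Cochran-Armitage test for trend in proportions across ordered categories.

    cases[i]  - count of the index group (here, Specialty) in category i
    totals[i] - count of all respondents in category i
    scores[i] - ordinal score assigned to category i
    Returns (z, two-sided p) from the normal approximation.
    """
    n = sum(totals)
    p_bar = sum(cases) / n
    # Score-weighted observed-minus-expected count under a common proportion.
    t = sum(s * (c - p_bar * m) for s, c, m in zip(scores, cases, totals))
    sum_s = sum(s * m for s, m in zip(scores, totals))
    sum_s2 = sum(s * s * m for s, m in zip(scores, totals))
    var = p_bar * (1 - p_bar) * (sum_s2 - sum_s ** 2 / n)
    z = t / math.sqrt(var)
    return z, math.erfc(abs(z) / math.sqrt(2))


# Duration of initial chart review (Table 3): 0-2, >2-5, >5-10, >10 minutes.
specialty = [57, 124, 90, 61]
primary_care = [63, 97, 72, 63]
totals = [s + pc for s, pc in zip(specialty, primary_care)]
z, p = cochran_armitage(specialty, totals, scores=[0, 1, 2, 3])
print(f"z = {z:.2f}, p = {p:.2f}")
```

With these counts the statistic is z ≈ 0.11, giving a two-sided p of about 0.91, in line with the value reported above.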

Relative Importance of EHR Elements (Outcome 2)

Participants ranked several elements of the EHR to identify the top 5 “most important” when evaluating a new patient. Specialty physicians ranked these sections as (in descending order of importance): 1: Chief Complaint, 2: Past Medical History, 3: History of Present Illness, 4: Imaging, and 5: Lab Values. Among Primary Care physicians, these sections were: 1: Medications, 2: Past Medical History, 3: Chief Complaint, 4: History of Present Illness, and 5: Problem List (Figure 1). Two individual elements of the EHR were perceived as significantly more important by the Primary Care group compared to the Specialty group; the first was the Problem List, ranked among the top 5 most important sections of the EHR by 61% of Primary Care physicians (95% confidence interval [CI]: 55–66%) compared to only 27% of Specialty physicians (95% CI: 23–32%). Secondly, the Medications section was ranked in the top 5 by 76% of Primary Care physicians (95% CI: 71–81%) compared to 44% of Specialty physicians (95% CI: 39–50%). One element of the EHR was significantly more important to Specialty physicians; this was the Imaging section, ranked in the top 5 by 50% (95% CI: 45–55%) compared to only 27% of Primary Care physicians (95% CI: 22–32%). There were also small but statistically significant differences in the rankings of the Allergies, Social History, and Past Surgical History sections (Figure 1).
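The method used for the Question 15 confidence intervals is not stated. Assuming a normal-approximation (Wald) interval, the Problem List comparison can be sketched as below; the denominators of 304 and 350 assume that every included respondent answered Question 15, and the helper names are hypothetical.

```python
import math


def wald_ci(p_hat: float, n: int, z: float = 1.96) -> tuple:
    """Normal-approximation (Wald) 95% confidence interval for a proportion."""
    se = math.sqrt(p_hat * (1 - p_hat) / n)
    return max(0.0, p_hat - z * se), min(1.0, p_hat + z * se)


def intervals_overlap(a: tuple, b: tuple) -> bool:
    """True if two (low, high) intervals share any point."""
    return a[0] <= b[1] and b[0] <= a[1]


# Problem List ranked in the top 5: 61% of Primary Care vs 27% of Specialty.
pc = wald_ci(0.61, 304)
sp = wald_ci(0.27, 350)
print(f"Primary Care {pc[0]:.1%}-{pc[1]:.1%}, Specialty {sp[0]:.1%}-{sp[1]:.1%}")
print("overlap:", intervals_overlap(pc, sp))
```

This gives roughly 55.5–66.5% for Primary Care and 22.3–31.7% for Specialty, close to the reported intervals; the small differences presumably reflect rounded proportions and the actual per-question denominators. Non-overlapping 95% intervals imply a significant difference, but overlapping intervals do not by themselves rule one out, so a direct two-proportion test would be a stricter check.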

EHR Utility and Ease-of-Use (Outcome 3)

Two Likert-type scale questions assessed this parameter. The first (Question 17) asked respondents to rank how well information was displayed in various sections of the EHR on a scale from 1 to 5 (1 indicating the display was “Very good”, 3 indicating “neutral”, and 5 indicating “Very bad”). Average ratings of these sections ranged from a mean ± standard deviation (SD) of 2.04 ± 1.08 for “Laboratory Results” to 2.64 ± 1.21 for “ICU Bedside Data” (Table 4). The composite score representing the overall ability of the EHR to display relevant clinical information had a mean ± SD of 2.40 ± 0.75 (range: 1–5). Multivariable linear regression showed no difference in this composite score between the Specialty and Primary Care groups (p=0.90). However, there was a significant association with practice setting: ambulatory physicians rated the composite score significantly worse than inpatient physicians (2.48 vs. 2.29, respectively; p<0.01).

The second Likert-type scale question (Question 18) asked respondents to rank the severity of six potential barriers to accessing needed information in the EHR on a scale from 1 to 5 (1 indicating “Not a barrier”, 3 indicating “Moderately strong barrier”, and 5 indicating “Severe barrier”). Average ratings of these barriers ranged from a mean ± SD of 2.86 ± 1.27 for “Information in the chart is inaccurate” to 3.32 ± 1.11 for “Others don’t record information consistently” (Table 5). The composite score of these six potential barriers had a mean ± SD of 3.11 ± 0.86 (range: 1–5). Multivariable linear regression showed a small but statistically significant difference in this composite score between the Specialty (3.20) and Primary Care (3.01) groups (p<0.01). This association was not confounded by gender, amount of computer experience, level of training, clinical experience, or practice setting.
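The adjusted comparison of composite scores is a multivariable linear regression with a group indicator plus covariates. The study adjusted for gender, computer experience, level of training, clinical experience, and practice setting; the pure-Python sketch below keeps only practice setting as a single covariate, uses invented noise-free data, and solves the normal equations directly. The `ols` helper is hypothetical; for real data a QR- or SVD-based solver such as `numpy.linalg.lstsq` is numerically safer.

```python
from __future__ import annotations


def ols(X: list[list[float]], y: list[float]) -> list[float]:
    """Ordinary least squares via the normal equations (X'X) b = X'y,
    solved by Gauss-Jordan elimination with partial pivoting."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    a = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        if abs(a[col][col]) < 1e-12:
            raise ValueError("design matrix is rank deficient")
        for r in range(k):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [x - factor * p for x, p in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


# Columns: intercept, Specialty (1) vs Primary Care (0), inpatient (1) vs ambulatory (0).
# Hypothetical composites built as 3.0 + 0.2*specialty - 0.15*inpatient.
X = [[1, 0, 0], [1, 0, 1], [1, 1, 0], [1, 1, 1], [1, 1, 0], [1, 0, 1]]
y = [3.0 + 0.2 * s - 0.15 * i for _, s, i in X]
intercept, specialty_effect, inpatient_effect = ols(X, y)
print(round(specialty_effect, 3), round(inpatient_effect, 3))
```

The coefficient on the Specialty indicator is the setting-adjusted group difference in composite score, analogous to the Specialty vs. Primary Care difference tested above; with these constructed data the sketch recovers 0.2 and −0.15.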

Discussion

This study assessed potential differences in EHR requirements among different clinical disciplines. Key findings were: 1) Both specialty and primary care physicians relied on the EHR as the most common initial source of clinical information; 2) There were significant differences between primary care and specialty physicians regarding which sections of the EHR were considered most important; 3) Specialists identified stronger barriers than primary care physicians with regard to ability to access clinical information in the EHR.

The first key finding was that both specialists and primary care physicians identified the chart as the most common initial source of patient information. This emphasizes the critical role of EHRs in modern health care, and the potential impact of using systems that do not adequately meet all providers’ needs. Interestingly, while reliance on the EHR was uniform between both groups, its method of use and incorporation into clinical workflow were not. Specifically, primary care physicians were significantly more likely to delay initial chart review until during or after the patient encounter. Correspondingly, they were more likely to use the patient as their initial source of information, whereas specialists were more likely to obtain information from other (referring) providers. These differences in workflow provide additional opportunities to optimize EHRs for the varying needs of different disciplines.

The second key finding identified several elements of the chart that were considered important by one group but not the other. Specifically, primary care physicians showed significantly greater interest in the Problem List and Medications sections than their specialty counterparts. As one respondent stated, “I’m a surgeon…I write 2 or 3 prescriptions a month, but the patient’s pharmacy is thrust before me in almost every screen.” Conversely, specialty physicians considered the Imaging section much more important than primary care physicians. This sentiment was also echoed in the respondent comments; said one physician, “In image driven specialties, like neurosurgery, it is crucial to get actual outside imaging and not just reports. The difficulty in doing this often leads to unnecessary CT/MRI scans and better communication/transmission of these data would be valuable.”

The third key finding was that specialists face slightly stronger barriers than primary care physicians in accessing needed information from the EHR. This difference was small but statistically significant, and is consistent with the complaints raised by numerous specialty societies. One respondent summarized this by saying, “I think that most of the major systems that try to serve multiple specialties are full of an unbelievable amount of bloat. My system is specialty specific and is tailored to do exactly what I need it to do.” Said another, “The electronic medical record is very poorly organized for a pediatric ICU patient. We have to create workarounds to get the information displayed in a meaningful manner.”

These results clearly demonstrate several differences between primary care and specialty fields with respect to which elements of the EHR are considered most important when gathering clinical information, as well as perceptions of how well these systems provide such information. These differences have several important implications. The first is impaired satisfaction among physicians using systems ill-suited to their practice; one recent survey suggested that 31% of all surgical and medical specialists were “very dissatisfied” with their EHR systems, compared to only 8% of primary care providers.25 In addition to physician satisfaction, inefficiencies introduced by poorly integrated EHRs could impair clinical productivity and in turn affect patient satisfaction.26 Another potential sequela is greater difficulty in achieving Meaningful Use criteria, with large potential impacts on reimbursement.15 This is important because EHR selection has been shown to be heavily influenced by financial and organizational factors independent of clinical demands.27 In response to this concern, several medical specialty societies have successfully advocated for the inclusion of rules, exemptions, and options in stage 2 of Meaningful Use to better suit the practices of specialists.15 However, prior to this study there were no data to guide these modifications, making their adequacy uncertain. Importantly, CMS does permit Meaningful Use exclusions for providers who do not collect core measures that fall outside their scope of practice; however, these exclusions must be applied for on an individual-provider basis.9,28 This places the burden of appropriately collecting these measures on the end user rather than the system, and does not provide a large-scale solution to the problem.

The results of this study inform several potential interventions to address these concerns. First, EHRs must be targeted to meet the unique documentation needs of individual specialties. Several such “specialty-specific” systems already exist, but further assessment of the precise information-gathering requirements of each specialty is required to optimize these systems.29 Second, the method of implementation of EHRs across health care organizations must be carefully considered. The vast majority of EHR-using physicians in the United States practice in health systems employing a single EHR system incorporated across multiple clinical departments (the so-called “Enterprise” or “Single Vendor” EHR solution).30,31 This has benefits for interdepartmental communication and for logistical processes such as billing and scheduling, but as the results of this study suggest, it may be difficult for a single EHR to meet the needs of all specialties simultaneously. Alternatively, a “Best of Breed” approach involving a network of specialty-specific systems can be employed.29 However, establishing this network of multiple products from a variety of vendors is extremely challenging from logistical and interoperability perspectives, and can result in a fragmented and ineffectual hospital information system.32,33 More recently, a third strategy has emerged: the so-called “Best of Suite” approach.32,34,35 This strategy involves a point-by-point assessment of the relative merits of integration vs. differentiation at each node of the information system (e.g., individual clinical departments, billing, and scheduling), resulting in a framework falling somewhere between the “Single Vendor” and “Best of Breed” models. This approach may provide a more balanced solution, improving hospital efficiency36 while simultaneously meeting the varying needs of different clinical disciplines as identified in this study.

This study has several limitations. First, the response rate is at the low end of the normal range for surveys of this nature.37 Thus, our respondent pool may not be representative of the population as a whole, and may be weighted toward physicians with the most strongly held beliefs on this topic. However, the wide ranges and standard deviations of responses to Likert-type scale questions indicate adequate variability of opinion among respondents. Second, our grouping of clinical disciplines was fairly coarse because some disciplines were overrepresented relative to others; for example, there were many more pediatricians than surgeons in our respondent pool. This imbalance left inadequate power to identify differences between individual specialties, requiring the grouping of disciplines into “Specialty” and “Primary Care” categories. Subjectively, there were minimal differences between individual specialties within each group, suggesting that this categorization scheme was appropriate. Nevertheless, our results provide a broad assessment of differences between clinical disciplines, and future studies are needed to identify differences between individual disciplines. Third, not all EHR systems were represented in our study. However, our sampling scheme did capture several of the most heavily used products nationwide, and a supplementary analysis revealed that the identified trends were present among both Epic and non-Epic users, indicating that the results are not unique to this system alone. Finally, Likert-type scale responses were analyzed parametrically, which assumes that the intervals between ordinal categories are of equal size. For example, we assume that the difference between “Not a barrier” (1 out of 5) and “Moderately strong barrier” (3 out of 5) is the same as that between “Moderately strong barrier” and “Severe barrier” (5 out of 5). However, this assumption was supported by the fact that responses to these questions followed approximately normal distributions.

Conclusions

This study demonstrates several differences between specialty and primary care physicians in their methods of using EHRs for clinical information gathering and perceptions of the most important elements of these systems. This has important implications for clinical workflow and efficiency, patient satisfaction, physician satisfaction, and financial reimbursement. Future studies must continue to delineate the unique requirements of individual specialty fields to facilitate informed modification of EHR design, implementation, and governmental oversight.

Acknowledgments

Research reported in this publication was supported by Oregon Clinical and Translational Research Institute (1 UL1 RR024140 01), National Library of Medicine of the National Institutes of Health (T15LM007088), Agency for Healthcare Research and Quality (1 K12 HS022981 01), and unrestricted departmental funding from Research to Prevent Blindness.

Appendix: Selected Survey Questions

Survey Question 15:

Survey Question 17:

Survey Question 18:

References

- 1.Grinspan ZM, Banerjee S, Kaushal R, Kern LM. Physician specialty and variations in adoption of electronic health records. Appl Clin Inform. 2013 May 22;4(2):225–240. doi: 10.4338/ACI-2013-02-RA-0015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Charles D, King J, Patel V, Furukawa MF. Adoption of electronic health record systems among U.S. non-federal acute care hospitals: 2008–2012. 2013 2013 Mar; ONC Data Brief 9. [Google Scholar]

- 3.Hsiao C, Hing E. Use and characteristics of electronic health record systems among office-based physician practices: United States, 2001–2013. 2014 NCHS Data Brief 143. [PubMed] [Google Scholar]

- 4.Patel V, Jamoom E, Hsiao CJ, et al. Variation in electronic health record adoption and readiness for meaningful use: 2008–2011. Journal of General Internal Medicine. 2013;28(7):957–64. doi: 10.1007/s11606-012-2324-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Wright A, Henkin S, Feblowitz J, et al. Early results of the meaningful use program for electronic health records. New England Journal of Medicine. 2013;368:779–80. doi: 10.1056/NEJMc1213481. [DOI] [PubMed] [Google Scholar]

- 6.Chiang MF, Boland MV, Brewer A, et al. Special requirements for electronic health record systems in ophthalmology. Ophthalmology. 2011;118(8):1681–7. doi: 10.1016/j.ophtha.2011.04.015. [DOI] [PubMed] [Google Scholar]

- 7.Goradia VK. Electronic medical records for the arthroscopic surgeon. Arthroscopy: The Journal of Arthroscopic & Related Surgery. 2006;22(2):219–24. doi: 10.1016/j.arthro.2005.11.014. [DOI] [PubMed] [Google Scholar]

- 8.Corrao N. Importance of testing for usability when selecting and implementing an electronic health or medical record system. Journal of Oncology Practice. 2010;6(3):120–4. doi: 10.1200/JOP.200017. [DOI] [PMC free article] [PubMed] [Google Scholar]

9. Dharmaratnam A, Kaliyadan F, Manoj J. Electronic medical records in dermatology: Practical implications. Indian Journal of Dermatology, Venereology and Leprology. 2009;75(2):157–61. doi: 10.4103/0378-6323.48661.

10. Lehmann CU, O’Connor KG, Shorte VA, Johnson TD. Use of Electronic Health Record Systems by Office-Based Pediatricians. Pediatrics. 2014 Dec 29; doi: 10.1542/peds.2014-1115.

11. Henricks WH, Wilkerson ML, Castellani WJ, Whitsitt MS, Sinard JH. Pathologists’ place in the electronic health record landscape. Arch Pathol Lab Med. 2015 Mar;139(3):307–310. doi: 10.5858/arpa.2013-0709-SO.

12. McCoy MJ, Diamond AM, Strunk AL. Special requirements of electronic medical record systems in obstetrics and gynecology. Obstet Gynecol. 2010;116(1):140–3. doi: 10.1097/AOG.0b013e3181e1328c.

13. Tagalicod R, Reider J. Progress on adoption of electronic health records. 2013. Available at: http://www.cms.gov/eHealth/ListServ_Stage3Implementation.html.

14. EHR Incentive Programs. 2015. [Accessed February 25, 2015]. Available at: http://www.cms.gov/Regulations-and-Guidance/Legislation/EHRIncentivePrograms/index.html?redirect=/EHRIncentivePrograms.

15. Lim MC, Chiang MF, Boland MV, McCannel CA, Wedemeyer L, Epley KD, et al. Meaningful use: how did we do, where are we now, where do we go from here? Ophthalmology. 2014 Sep;121(9):1667–1669. doi: 10.1016/j.ophtha.2014.06.048.

16. Chiang MF, Read-Brown S, Tu DC, Choi D, Sanders DS, Hwang TS, et al. Evaluation of electronic health record implementation in ophthalmology at an academic medical center (an American Ophthalmological Society thesis). Trans Am Ophthalmol Soc. 2013;111:34–56.

17. Redd TK, Read-Brown S, Choi D, et al. Electronic health record impact on productivity and efficiency in an academic pediatric ophthalmology practice. J AAPOS. 2014 Dec;18(6):584–9. doi: 10.1016/j.jaapos.2014.08.002.

18. Poissant L, Pereira J, Tamblyn R, Kawasumi Y. The impact of electronic health records on time efficiency of physicians and nurses: a systematic review. J Am Med Inform Assoc. 2005;12(5):505–516. doi: 10.1197/jamia.M1700.

19. Clarke MA, Steege LM, Moore JL, Koopman RJ, Belden JL, Kim MS. Determining primary care physician information needs to inform ambulatory visit note display. Appl Clin Inform. 2014 Feb 26;5(1):169–190. doi: 10.4338/ACI-2013-08-RA-0064.

20. Clinician Perspectives on Electronic Health Information Sharing for Transitions of Care. [Accessed February 2, 2015]. Available at: http://bipartisanpolicy.org/wp-content/uploads/sites/default/files/Clinician%20Survery_format%20(2).pdf.

21. Litwin M. How to measure survey reliability and validity. 1st ed. Thousand Oaks, California: Sage Publications; 1995. p. 96.

22. Polikandroiti M, Goudevenos I, Lampros M, et al. Validation and reliability analysis of the questionnaire “needs of hospitalized patients with coronary artery disease”. Health Science Journal. 2011;5(2):137–48.

23. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap): a metadata-driven methodology and workflow process for providing translational informatics research support. Journal of Biomedical Informatics. 2009;42(2):377–81. doi: 10.1016/j.jbi.2008.08.010.

24. Medicare Physician Compare: Specialty Definitions. [Accessed March 11, 2015]. Available at: http://www.medicare.gov/physiciancompare/staticpages/resources/specialtydefinitions.html.

25. Top primary care electronic health records honors announced by 2014 Black Book Satisfaction Survey, EHR vendor loyalty makes distinct surge upward. 2015. [Accessed February 25, 2015]. Available at: http://www.prweb.com/releases/2014/03/prweb11655078.htm.

26. Hayrinen K, Saranto K, Nykanen P. Definition, structure, content, use and impacts of electronic health records: a review of the research literature. International Journal of Medical Informatics. 2008;77:291–304. doi: 10.1016/j.ijmedinf.2007.09.001.

27. Wang BB, Wan TT, Burke DE, Bazzoli GJ, Lin BY. Factors influencing health information system adoption in American hospitals. Health Care Manage Rev. 2005 Jan-Mar;30(1):44–51. doi: 10.1097/00004010-200501000-00007.

28. Meaningful use for specialists tipsheet (Stage 1 2014 definition). 2014. [Accessed March 2, 2015]. Available at: http://www.cms.gov/Regulations-and-Guidance/Legislation/EHRIncentivePrograms/Downloads/Meaningful_Use_Specialists_Tipsheet_1_7_2013.pdf.

29. Bentley T, Rizer M, McAlearney AS, et al. The journey from precontemplation to action: transitioning between electronic medical record systems. Health Care Manage Rev. 2014 Oct; doi: 10.1097/HMR.0000000000000041. Epub ahead of print.

30. US Department of Health and Human Services. Doctors and hospitals’ use of health IT more than doubles since 2012. 2013. [Accessed June 26, 2014]. Available at: http://www.hhs.gov/news/press/2013pres/05/20130522a.html.

31. Burke D, Yu F, Au D, Menachemi N. Best of breed strategies-hospital characteristics associated with organizational HIT strategy. J Healthc Inf Manag. 2009;23(2):46–51.

32. Ford E, Menachemi N, Huerta T, Yu F. Hospital IT adoption strategies associated with implementation success: Implications for achieving meaningful use. Journal of Healthcare Management. 2010;55:175–189.

33. Hermann S. Best-of-breed versus integrated systems. American Journal of Health-System Pharmacy. 2010;67:1406–1410. doi: 10.2146/ajhp100061.

34. Hoehn B. Integration issues: What is the best clinical information systems strategy? Journal of Health Information Management. 2010;24:10–12.

35. Thouin M, Hoffman J, Ford E. The effect of information technology (IT) investments on firm-level performance in the healthcare industry. Health Care Manage Rev. 2008;33:60–69. doi: 10.1097/01.HMR.0000304491.03147.06.

36. Fareed N, Ozcan YA, DeShazo JP. Hospital electronic medical record enterprise application strategies: Do they matter? Health Care Manage Rev. 2012;37(1):4–13. doi: 10.1097/HMR.0b013e318239f2ff.

37. Kaplowitz M, Hadlock T, Levine R. A comparison of web and mail survey response rates. Public Opin Q. 2004;68(1):94–101.