Abstract

Objective

Cochlear implant patients have difficulty in noisy environments in part because of channel interaction. Interleaving the signal by sending every other channel to the opposite ear has the potential to reduce channel interaction by increasing the spacing between channels within each ear, while still providing the same amount of spectral information when the two ears are combined. Although this method has been successful in other populations, such as hearing aid users, interleaving has not yielded consistent benefits for cochlear implant patients. This may be because the perceptual misalignment between the two ears and the spacing between stimulation locations must be taken into account before interleaving.

Design

Eight bilateral cochlear implant users were tested. After the two ears were perceptually aligned, twelve-channel maps were created that spanned the entire aligned portion of the arrays. Interleaved maps were created by removing every other channel from each ear. Participants' spectral resolution and localization abilities were measured with perceptually aligned processing strategies, both with and without interleaving.

Results

There was a significant improvement in spectral resolution with interleaving. However, there was no significant effect of interleaving on localization abilities.

Conclusions

The results indicate that interleaving can improve cochlear implant users' spectral resolution. However, it may be necessary to perceptually align the two ears and/or use relatively large spacing between stimulation locations.

Introduction

Cochlear implant (CI) users have considerable difficulty with various tasks, including understanding speech in noisy environments (Muller et al. 2002; van Hoesel et al. 2008; van Hoesel and Tyler 2003). This can result from a number of factors, such as the lack of temporal fine structure information in current speech processing strategies (e.g., Lorenzi et al. 2006) and channel interaction, which occurs when adjacent channels stimulate overlapping neural populations (e.g., Fu and Nogaki 2005). For example, CI users are rarely able to benefit from more than eight channels (Friesen et al. 2001), likely because of channel interaction. Although eight channels are sufficient for understanding speech in quiet (Shannon et al. 1995), they are often insufficient for understanding speech in noisy environments (Fishman et al. 1997; Friesen et al. 2001; Loizou et al. 1999; Shannon et al. 2004), which requires better spectral resolution than eight channels can provide.

One method that has been used with a number of populations to reduce within-ear masking either from channel interaction or from the spread of masking is to interleave the spectral information across ears (Aronoff et al. 2014; Kulkarni et al. 2012; Loizou et al. 2003; Lunner et al. 1993; Siciliano et al. 2010; Takagi et al. 2010; Tyler et al. 2010; Zhou and Pfingst 2012; Zhou and Xu 2011). This means that the spectrum is divided into two interleaved groups. Half of the frequency bands are presented to one ear and the other half are presented to the other ear. For diotic signals, if the left and right ear signals are simply combined centrally, this technique will provide essentially the same acoustic information as sending the output of all frequency bands to both ears (diotic signal processing). However, because this technique results in greater spacing between stimulation sites for each ear, there is less within-ear masking.

Interleaving the signal between the ears has been found to consistently improve performance with diotic signals for hearing impaired listeners with moderate to moderately severe bilateral sensorineural hearing loss (Kulkarni et al. 2012; Lunner et al. 1993). This improvement occurs whether the stimuli are amplified and presented over headphones (Kulkarni et al. 2012) or presented via modified hearing aids (Lunner et al. 1993). Similarly, improvements with interleaving have been found for normal hearing (NH) listeners when hearing loss is simulated by adding noise in proportion to the short-time amplitude of the signal (Kulkarni et al. 2012).

In contrast, studies with CI patients have shown either no consistent benefit of interleaving (Tyler et al. 2010) or a significantly detrimental effect (Mani et al. 2004). One reason this technique may have yielded poor or inconsistent effects for CI users is that, although the analysis filters were interleaved, the relative pitch places of the corresponding electrodes were not controlled, because stimulation locations were chosen based only on electrode number.

Previous research (Aronoff et al. 2015; Lin et al. 2013) suggests that bilateral CI users experience place-dependent contralateral masking (e.g., an electrode on the left array may preferentially mask a specific electrode on the right array). As a result, interleaving channels based on electrode number may cause one ear to partially mask or interfere with the other. It may also spectrally distort the percept when the signals from the left and right ears are combined centrally. Simulations with NH listeners suggest that mismatched arrays can prevent any benefit of interleaving. Siciliano et al. (2010) used six-channel vocoders with alternating channels presented to opposite ears. They compared performance on IEEE sentences and vowel identification in this interleaved condition to performance with six interleaved channels with one ear spectrally shifted (resulting in a mismatch), or with only the three shifted or unshifted channels presented to one ear. The best performance was found with the interleaved (but unshifted) condition. Not only was the interleaved, unilaterally shifted condition worse, but performance in that condition was comparable to or worse than performance in the three-unshifted-channel condition, even after ten hours of training.

An alternative explanation for the lack of a consistent benefit with interleaving is that, given the broad current spread in cochlear implant patients, disabling every other electrode in a given ear may not produce enough spacing between stimulation sites to yield a benefit. Channel interaction between nearby but non-adjacent electrodes may still be too large. In both the Tyler et al. (2010) and Mani et al. (2004) studies, stimulation sites in the interleaved condition were separated by only one electrode.

Optimal benefits for interleaved signal processing with CI listeners likely require that the interleaved stimulation sites on the left and right arrays lead to non-overlapping pitch percepts and are sufficiently spaced. The goal of this study was to determine whether implementing interleaved processors with pitch-matched stimulation sites separated by more than one electrode would yield a substantial and consistent benefit for CI patients.

Although interleaved processors may improve patients' abilities to listen in noisy environments, they may also distort the binaural cues that patients rely on for tasks such as localizing sounds and understanding speech that is spatially separated from the background noise. Interleaving has been shown to reduce localization performance for NH listeners (Aronoff et al. 2014). Although this detrimental effect was largest when localization was based on interaural time differences (ITDs), CI listeners are generally not able to make use of ITDs when using clinical processors (Aronoff et al. 2012; Aronoff et al. 2010). However, in Aronoff et al. (2014) there was also a significant decrease in interleaved performance when localizing based on interaural level difference (ILD) cues. It is likely that this decrease in performance results in part from ILD cues being distributed across unmatched frequency regions in the two ears (Francart and Wouters 2007). However, cochlear implant patients' ILD sensitivity may be less affected by perceptually misaligned arrays given that current spread spectrally smears the signal, functionally reducing the perceptual misalignment (Kan et al. 2013). As such, CI patients' localization abilities with and without interleaving were also investigated.

Methods

Subjects

Eight bilateral CI subjects participated in this study. All participants had Advanced Bionics CII or HiRes 90K implants in each ear. Subject details are provided in Table 1.

Table 1. Participant characteristics.

| Subject | Age (years) | Gender | Hearing Loss Onset | Cause | Implant Experience |
|---|---|---|---|---|---|
| C3 | 57 | Female | 29 years old | Hereditary | 7 years (L), 4 years (R) |
| C14 | 48 | Male | 4.5 months old | Maternal rubella | 4 years (L), 8 years (R) |
| C20 | 75 | Female | 7 years old | Red measles, high fever | 12 years (L), 4 years (R) |
| C21 | 59 | Male | 18 years old | Ear infection, noise exposure | 1.5 years (L), 1 year (R) |
| I01 | 62 | Female | At birth | Unknown | 8 years (L), 5 years (R) |
| I02 | 60 | Female | 2 years old | Meningitis | 2 years (L), 5 years (R) |
| I03 | 70 | Female | At birth | Unknown | 12 years (L), 7 years (R) |
| I05 | 56 | Male | 5 years old | Unknown (injury or genetic) | 12 years (L), 12 years (R) |

Apparatus

Loudness balancing and pitch matching were conducted using either the Bionic Ear Data Collection System (BEDCS version 1.17) for both ears or BEDCS for one ear and HRStream (version 1.0.2) for the other ear. Both systems allow equivalent control over stimulation parameters. BEDCS and HRStream were controlled with custom MATLAB-based software. Interleaved and non-interleaved maps were created using the Bionic Ear Programming System (BEPS+ version 1.6) and research Harmony processors.

Electric stimulation

Stimulation consisted of biphasic monopolar pulses. These pulses had a phase duration of approximately 32 μs and a pulse rate of approximately 976 pulses per second.

Current steering

Current steering was used to obtain more precise pitch matches between the two ears. In current steering, current is presented in phase on two adjacent electrodes so that the electric fields from the two electrodes interact and create a peak of stimulation between them. The position of this peak is determined by the relative current amplitudes on the two electrodes. The coefficient α describes the proportion of the total current presented to the more basal of the two electrodes. The resulting current-steered stimulation pattern is known as a “virtual channel”. Previous research has demonstrated that subjects with Advanced Bionics implants can distinguish places of stimulation differing by 20% of the distance between electrodes (i.e., α differences of 0.2; e.g., Landsberger and Srinivasan 2009). The pitch of a virtual channel is perceived to be between the pitches typically provided by its two component electrodes, and it can effectively be “steered” anywhere between them by adjusting their relative amplitudes (e.g., Donaldson et al. 2005; Firszt et al. 2007). Steering a virtual channel between two electrodes produces a perceived continuous change in pitch (Luo et al. 2010; Luo et al. 2012). Furthermore, the spread of excitation of a virtual channel is the same as that of a single physical electrode (Busby et al. 2008; Saoji et al. 2009), and steering between two adjacent electrodes produces no change in loudness (Donaldson et al. 2005). Therefore, with virtual channels, the place of stimulation is not limited to the physical electrodes; stimulation can be provided as if there were a physical electrode at any location between the most apical and most basal electrodes on the array.
In the present experiment, current steering was used to increase the spectral resolution of the pitch matching task.
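The relationship between α, the two electrode currents, and the resulting place of stimulation can be sketched in a few lines of Python. This is an illustrative sketch only, not the BEDCS or HRStream software used in the study: it assumes the Advanced Bionics convention that electrode 1 is the most apical contact (so a virtual channel between electrodes n and n + 1 nominally sits at place n + α), and it does not model loudness. The function names are hypothetical.

```python
import math
from typing import Tuple

N_ELECTRODES = 16  # Advanced Bionics arrays have 16 contacts


def steer(total_current: float, alpha: float) -> Tuple[float, float]:
    """Split a total current between an adjacent electrode pair.

    alpha is the proportion of the total current delivered to the more basal
    electrode; the remainder goes to the more apical electrode.
    Returns (apical_current, basal_current).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return (1.0 - alpha) * total_current, alpha * total_current


def position_to_pair(position: float, step: float = 0.1) -> Tuple[int, float]:
    """Map a fractional place (e.g., 5.3) to (apical electrode, alpha).

    Assumption: electrode numbers increase from apex to base, so the virtual
    channel sits at apical_electrode + alpha. The place is snapped to the
    nearest `step` (0.1 electrode, as in the pitch-matching task).
    """
    position = round(round(position / step) * step, 6)
    if not 1.0 <= position <= N_ELECTRODES:
        raise ValueError("position must lie on the array")
    # The most basal place (16.0) is expressed as pair 15-16 with alpha = 1.
    apical = min(math.floor(position), N_ELECTRODES - 1)
    alpha = round(position - apical, 6)
    return apical, alpha
```

For example, `steer(100.0, 0.3)` splits the current 70/30 between the apical and basal electrodes, and `position_to_pair(5.3)` returns `(5, 0.3)`, i.e., electrode pair 5–6 with α = 0.3.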

Loudness Balancing

For each array, electrodes were loudness balanced by stimulating four adjacent electrodes in sequence, presenting each for 500 ms with a 1-s interstimulus interval. Electrode 1 was the reference electrode for the first group and was stimulated at the most comfortable level. The stimulation level was adjusted for any electrode that was louder or softer than the first electrode of the group. After all electrodes in a group were loudness balanced, a new group of four adjacent electrodes was chosen, with the first electrode of the new group being the same as the last electrode of the previous group (i.e., group 1: electrodes 1–4; group 2: electrodes 4–7).
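The chained grouping described above can be written as a short helper. This is an illustrative sketch (the function name is hypothetical), assuming a 16-electrode array numbered from 1 and groups of four.

```python
from typing import List


def balancing_groups(n_electrodes: int = 16, group_size: int = 4) -> List[List[int]]:
    """Overlapping groups for within-array loudness balancing.

    Each new group starts on the last electrode of the previous group, so
    every group is anchored to an electrode that has already been balanced.
    """
    groups = []
    start = 1
    while start < n_electrodes:
        end = min(start + group_size - 1, n_electrodes)
        groups.append(list(range(start, end + 1)))
        start = end  # the last electrode becomes the next group's reference
    return groups
```

With the defaults, `balancing_groups()` returns `[[1, 2, 3, 4], [4, 5, 6, 7], [7, 8, 9, 10], [10, 11, 12, 13], [13, 14, 15, 16]]`, so a 16-electrode array is covered in five chained groups.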

When loudness balancing across arrays, stimulation consisted of 500-ms pulse trains with an interstimulus interval of approximately 500 ms, alternating between the left and right ears. Subjects either used a mouse to increase or decrease the stimulation level of the target by 0.5, 1, or 1.5 dB, or the experimenter adjusted the target level based on the subject's report of the target's loudness relative to the reference. The reference stimulus was set at the most comfortable level. Loudness balancing across arrays was conducted with electrode 10 as the reference, and the stimulation levels for all electrodes were then globally adjusted by shifting the stimulation level by the same percentage of the dynamic range for all electrodes.
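The two level adjustments can be sketched as follows. The study does not give these formulas, so the sketch makes labeled assumptions: levels are in linear current units (e.g., clinical units), a dB step scales current by 10^(dB/20), and "percentage of the dynamic range" is applied to each electrode's own M − T range while T levels stay fixed. The function names are hypothetical.

```python
from typing import List, Sequence


def apply_db_step(level: float, step_db: float) -> float:
    """Scale a current level by a step in dB (assumed 20*log10 convention for current)."""
    return level * 10.0 ** (step_db / 20.0)


def global_shift(t_levels: Sequence[float], m_levels: Sequence[float],
                 percent: float) -> List[float]:
    """Shift every M level by the same percentage of that electrode's dynamic range.

    Assumption: dynamic range = M - T, and T levels are left unchanged.
    """
    return [m + (percent / 100.0) * (m - t) for t, m in zip(t_levels, m_levels)]
```

For two hypothetical electrodes with T levels of 100 and 120 and M levels of 200 and 260, a 10% shift raises the M levels to 210 and 274; a +1 dB step multiplies a level by about 1.12.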

Pitch matching

The reference and target stimuli were 500-ms pulse trains, with stimulation alternating between the left and right ears and an interstimulus interval of approximately 500 ms. Stimuli were presented at the most comfortable level with 0.5-dB level roving to minimize potential loudness cues. The reference ear was usually the left ear. The place of stimulation in the target ear could be adjusted in 0.1-electrode steps (using current steering) by turning a knob (PowerMate, Griffin Technology) or clicking with the mouse. This cycle of stimulus presentation followed by a subject-directed change in the place of stimulation continued until the left- and right-ear stimulation was perceived as having the same pitch. Pitch matches were obtained for at least 22 unique reference locations; only a small subset of reference locations (six or fewer) was used more than once for each subject. The pitch-matching data were fit with a least trimmed squares regression, and the slope was significantly greater than zero for all participants.
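The paper does not describe which least trimmed squares (LTS) implementation was used. As a hedged illustration, the sketch below fits a line by random two-point starts followed by "concentration steps" (refit ordinary least squares on the h best-fitting points, then repeat), which is the idea behind the FAST-LTS algorithm. The coverage h = ⌊(n + 3)/2⌋ and all names are assumptions; for real analyses a vetted implementation (e.g., `ltsReg` in the R package robustbase) would be preferable. Here x would hold reference-ear locations and y the matched target-ear locations, in electrode units.

```python
import random
from typing import Optional, Sequence, Tuple


def _ols(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares line; returns (intercept, slope)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


def lts_line(x: Sequence[float], y: Sequence[float], n_starts: int = 200,
             max_steps: int = 50, seed: int = 0) -> Tuple[float, float]:
    """Least trimmed squares line fit via random starts + concentration steps.

    Minimizes the sum of the h smallest squared residuals, with
    h = (n + 3) // 2 for a two-parameter line. Returns (intercept, slope).
    """
    n = len(x)
    h = (n + 3) // 2
    rng = random.Random(seed)
    best: Optional[Tuple[float, float, float]] = None  # (objective, intercept, slope)
    for _ in range(n_starts):
        i, j = rng.sample(range(n), 2)
        if x[i] == x[j]:
            continue  # a vertical line cannot start the search
        slope = (y[j] - y[i]) / (x[j] - x[i])
        intercept = y[i] - slope * x[i]
        prev_obj = float("inf")
        for _ in range(max_steps):
            sq = [(y[k] - intercept - slope * x[k]) ** 2 for k in range(n)]
            subset = sorted(range(n), key=sq.__getitem__)[:h]
            obj = sum(sq[k] for k in subset)
            if best is None or obj < best[0]:
                best = (obj, intercept, slope)
            if obj >= prev_obj:
                break  # concentration steps have converged
            prev_obj = obj
            xs = [x[k] for k in subset]
            if len(set(xs)) < 2:
                break
            intercept, slope = _ols(xs, [y[k] for k in subset])
    if best is None:
        raise ValueError("need at least two distinct x values")
    return best[1], best[2]
```

Because only the h best-fitting points determine the fit, a few grossly inconsistent pitch matches have little influence on the estimated slope and intercept, unlike an ordinary least-squares fit.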

Interleaved and non-interleaved maps

Twelve-channel interleaved and non-interleaved maps were created using BEPS+. For both map types, the pitch-matching data were first used to select twelve bilaterally pitch-matched pairs of stimulation sites. This was done by selecting twelve equally spaced stimulation sites across the left array and their pitch-matched pairs on the right array, based on a linear fit of the pitch-matching data. For participant I02, the twelve stimulation sites were limited to electrodes 1–10 in the right ear and their pitch-matched pairs, because the perceived pitch increased from electrode 1 to electrode 10 (as is typical) but then changed non-monotonically from electrode 10 to electrode 16. All other participants used the full range of pitch-matched pairs. The resulting twelve bilateral pairs of pitch-matched stimulation sites were used to generate twelve-channel maps in which the left and right electrodes in each pair were assigned the same frequency band, with the twelve bands spanning 374–4996 Hz (see Table 2). Typically, the upper frequency band extends to approximately 8 kHz, which is normally implemented by having the highest frequency band span nearly 4 kHz. Because such a disproportionately large allocation to one frequency band would likely be detrimental with interleaving, and given the small amount of speech information above 5 kHz, a relatively modest upper frequency limit was used in this study.
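The site-selection step can be sketched as follows, assuming the fitted line predicts the matched right-array place from the left-array place (right = intercept + slope × left, in electrode units), 16-electrode arrays, and rounding to the 0.1-electrode resolution of current steering. These are assumptions for illustration; the function name and range parameters are hypothetical. The `right_range` argument shows how a restriction like the one for I02 (right electrodes 1–10) could be expressed.

```python
from typing import List, Tuple


def select_pitch_matched_sites(
    intercept: float, slope: float, n_sites: int = 12,
    left_range: Tuple[float, float] = (1.0, 16.0),
    right_range: Tuple[float, float] = (1.0, 16.0),
) -> List[Tuple[float, float]]:
    """Pick equally spaced left-array sites and their fitted right-array matches.

    Assumes right = intercept + slope * left with slope > 0, and restricts the
    left sites to the aligned portion, i.e., where the predicted right-array
    match also falls inside right_range. Sites are rounded to 0.1 electrode.
    """
    if slope <= 0:
        raise ValueError("expected a positive slope")
    left_lo = max(left_range[0], (right_range[0] - intercept) / slope)
    left_hi = min(left_range[1], (right_range[1] - intercept) / slope)
    if left_hi <= left_lo:
        raise ValueError("no aligned region between the two arrays")
    step = (left_hi - left_lo) / (n_sites - 1)
    pairs = []
    for i in range(n_sites):
        left = left_lo + i * step
        pairs.append((round(left, 1), round(intercept + slope * left, 1)))
    return pairs
```

For example, with a hypothetical fit of intercept 2.0 and slope 1.0, the aligned portion of the left array is electrodes 1–14, and the first and last pairs are (1.0, 3.0) and (14.0, 16.0).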

Table 2. Frequency allocation table used for the study.

| Channel | Lower bound (Hz) | Upper bound (Hz) |
|---|---|---|
| 1 | 374 | 510 |
| 2 | 510 | 646 |
| 3 | 646 | 714 |
| 4 | 714 | 918 |
| 5 | 918 | 1121 |
| 6 | 1121 | 1393 |
| 7 | 1393 | 1733 |
| 8 | 1733 | 2141 |
| 9 | 2141 | 2617 |
| 10 | 2617 | 3229 |
| 11 | 3229 | 4044 |
| 12 | 4044 | 4996 |

The interleaved maps were derived from the pitch-matched, non-interleaved maps by setting M levels to 0 (so that the frequency allocation did not change) for even-numbered channels in one ear and odd-numbered channels in the other ear. Because the channels were based on pitch-matched pairs, only one member of each pitch-matched pair was used.
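The derivation of the interleaved maps can be sketched as a transformation on per-channel M levels, using the frequency allocation in Table 2. In this hedged sketch, which ear keeps the odd-numbered channels is an assumption (the text specifies only "one ear" and "the other ear"), the M-level values are hypothetical, and the function names are illustrative rather than part of BEPS+.

```python
from typing import List, Tuple

# Frequency allocation from Table 2 (Hz), channels 1-12.
BANDS_HZ: List[Tuple[int, int]] = [
    (374, 510), (510, 646), (646, 714), (714, 918), (918, 1121), (1121, 1393),
    (1393, 1733), (1733, 2141), (2141, 2617), (2617, 3229), (3229, 4044),
    (4044, 4996),
]


def interleave_m_levels(m_left: List[float], m_right: List[float],
                        ) -> Tuple[List[float], List[float]]:
    """Derive interleaved maps by zeroing M levels; frequency bands are untouched.

    Assumption: the left ear keeps odd-numbered channels and the right ear
    keeps even-numbered channels.
    """
    left = [m if ch % 2 == 1 else 0.0 for ch, m in enumerate(m_left, start=1)]
    right = [m if ch % 2 == 0 else 0.0 for ch, m in enumerate(m_right, start=1)]
    return left, right


def active_bands(m_levels: List[float]) -> List[Tuple[int, int]]:
    """Frequency bands that are still stimulated (M level above zero)."""
    return [band for band, m in zip(BANDS_HZ, m_levels) if m > 0]
```

Applied to two hypothetical 12-channel maps with all M levels set to 200, the left ear keeps channels 1, 3, 5, 7, 9, and 11 and the right ear keeps channels 2, 4, 6, 8, 10, and 12, so each Table 2 band is stimulated in exactly one ear while both maps retain the same frequency allocation.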

Training and adaptation

Some participants indicated that the maps initially sounded unnatural and that they had difficulty understanding speech. To provide listening experience for acute adaptation to the new maps, participants received structured vowel and consonant training with each map using Angel Sound (angelsound.tigerspeech.com). This consisted of vowel recognition training (two blocks of 25 stimuli) and consonant recognition training (also two blocks of 25 stimuli). Participants were presented with a word (or, for one block of consonant recognition training, a non-word) and asked to select between two choices that differed by one phoneme. If the participant responded incorrectly, both choices were presented along with an indication of the correct choice. Because the goal of this training was to provide structured experience, no accuracy criterion was used for completing training, although most participants performed well, correctly identifying the majority of the stimuli. Training was completed either in the sound field or by sending the signal directly to the auxiliary input of the processor. Training was self-paced and took approximately 10 to 20 minutes per map. Pilot data indicated that this was sufficient for the maps to sound relatively natural and for speech to be relatively understandable, although speech performance would likely improve greatly with extended experience.

Spectral resolution

Spectral resolution was measured with a modified version of the spectral ripple test (Henry and Turner 2003; Won et al. 2007) referred to as the Spectral-temporally Modulated Ripple Test (SMRT; Aronoff and Landsberger 2013). In a three-interval forced-choice task, subjects were presented with three stimuli with amplitude modulation in the frequency domain (i.e., ripples) that varied as a function of time. Each of the two reference stimuli had a ripple density of 20 ripples per octave (RPO). The two reference stimuli were generated independently and thus were not spectrally identical. The ripple density of the target stimulus was varied using a 1-up/1-down adaptive procedure, with a starting point of 0.5 RPO and a step size of 0.2 RPO. Subjects were asked to choose the stimulus that differed from the other two. The test ended after 10 reversals, and the threshold was calculated as the average of the last six reversals. The stimuli were presented at a comfortable loudness via direct connection to the processor. This task is sensitive to changes in spectral resolution (see Aronoff and Landsberger 2013), and because it does not rely on speech stimuli, which require a learned relationship between a spectral pattern and meaning, it can provide an acute measure of spectral resolution. Most participants completed three SMRT tests with each map; the exceptions were C20, who completed two, and C21, who completed four. Test-retest reliability (i.e., one standard deviation) was 0.7 RPO.
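The adaptive track described above can be sketched as a 1-up/1-down staircase on ripple density. This is a hedged illustration, not the SMRT software: stimulus generation is omitted, the listener is a stand-in callback, and the lower bound on ripple density and the trial cap are hypothetical safeguards that the study does not specify. A correct response raises the target's ripple density (making it harder to distinguish from the 20-RPO references), and an incorrect response lowers it.

```python
from statistics import mean
from typing import Callable, List


def run_staircase(
    respond: Callable[[float], bool],
    start: float = 0.5,
    step: float = 0.2,
    n_reversals: int = 10,
    n_average: int = 6,
    floor: float = 0.2,     # hypothetical lower bound, not from the study
    max_trials: int = 500,  # hypothetical safety cap, not from the study
) -> float:
    """1-up/1-down staircase on target ripple density (RPO).

    `respond(rpo)` returns True for a correct trial. Returns the mean RPO at
    the last `n_average` reversals.
    """
    rpo = start
    direction = 0  # +1 = moving up, -1 = moving down, 0 = no move yet
    reversals: List[float] = []
    for _ in range(max_trials):
        new_direction = 1 if respond(rpo) else -1
        if direction != 0 and new_direction != direction:
            reversals.append(rpo)  # level at which the track turned around
            if len(reversals) == n_reversals:
                break
        direction = new_direction
        rpo = max(floor, rpo + new_direction * step)
    else:
        raise RuntimeError("staircase did not reach the required reversals")
    return mean(reversals[-n_average:])
```

With a hypothetical listener who responds correctly below 3 RPO and incorrectly above it (`run_staircase(lambda rpo: rpo < 3.0)`), the track oscillates between 2.9 and 3.1 RPO, and the threshold is the mean of the last six reversals, 3.0 RPO up to floating-point rounding. A 1-up/1-down rule tracks the ripple density at which the listener is correct about half the time.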

Localization

Testing followed the same procedure as in Aronoff et al. (2012). Virtual locations were created using a head-related transfer function specific to the Harmony processor's microphones (Aronoff et al. 2011). Subjects were asked to locate the stimulus presented from one of twelve virtual locations in the rear field, where the sensitivity to auditory spatial cues is most critical. The locations were spaced 15° apart, ranging from 97.5° to 262.5°. All locations were one meter away from the subject. The locations were numbered from 1 to 12, with number 1 located at 97.5° (right) and number 12 located at 262.5° (left). Subjects were provided with a sheet that showed each location and its corresponding number. The subject's task was to identify the location from which the stimulus originated, verbally indicating the number corresponding to the perceived location.

Prior to testing in each condition, subjects were familiarized with the stimulus locations by listening to the stimulus presented at each of the twelve locations, once in ascending and once in descending order. The location of each stimulus was indicated to the subject. A reference stimulus was presented immediately prior to each target stimulus. This reference was located at 90° when familiarizing in the ascending order and at 270° when familiarizing in the descending order. After familiarization, subjects were presented with a practice test that included each location presented in a random order. After completing the practice session, subjects proceeded to the test session. Neither the practice nor the test sessions contained reference stimuli, and no feedback was provided. For the practice and test sessions, the target was presented twice at a given location before the subject indicated the perceived location of the stimulus. Subjects were allowed to repeat a stimulus before responding, although this rarely occurred.

For each test, participants were presented with a block of 24 stimuli. The final test score was the RMS localization error, in degrees, calculated across all responses. Test-retest reliability (i.e., one standard deviation) was four degrees. Most participants completed two localization tests for each map; C21 completed three localization tests with the non-interleaved map.
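The mapping from response numbers to azimuths and the RMS error score can be sketched as follows. The assumption here is that each response is scored as the angular difference between the response location and the target location, with locations numbered 1–12 from 97.5° to 262.5° in 15° steps; the function names are hypothetical.

```python
import math
from typing import Sequence


def location_angle(number: int) -> float:
    """Azimuth in degrees for location 1 (97.5 deg, right) to 12 (262.5 deg, left)."""
    if not 1 <= number <= 12:
        raise ValueError("location numbers run from 1 to 12")
    return 97.5 + 15.0 * (number - 1)


def rms_error(targets: Sequence[int], responses: Sequence[int]) -> float:
    """Root-mean-square localization error in degrees across all trials."""
    if len(targets) != len(responses) or not targets:
        raise ValueError("need equal, non-empty lists of targets and responses")
    errors = [location_angle(r) - location_angle(t) for t, r in zip(targets, responses)]
    return math.sqrt(sum(e * e for e in errors) / len(errors))
```

For instance, if half of the responses are one location off (15°) and the other half are correct, `rms_error([1, 3], [2, 3])` is √(15²/2) ≈ 10.6°. Squaring before averaging weights large errors more heavily than a mean absolute error would.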

The procedures were approved by the St. Vincent Medical Center institutional review board (affiliated with the House Ear Institute) and the institutional review board for the University of Illinois at Urbana-Champaign.

Results

Robust statistical techniques were adopted to minimize the potential effects of outliers and non-normality (see supplemental appendix). These included bootstrap analyses, which avoid assumptions of normality by using distributions derived from the original data rather than an assumed normal distribution. They also included trimmed means, which are intermediate between means and medians.

To determine whether interleaved processors improved spectral resolution, a percentile bootstrap pairwise comparison with 20% trimmed means was conducted on the SMRT scores for the two strategies. Performance on the SMRT was significantly better with the interleaved processors (p < .05; 20% trimmed mean of the difference between the two processors: 1 ripple per octave, which exceeds the test-retest reliability of 0.7 ripples per octave; see Figure 1 and Table 3).
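The percentile bootstrap test on 20% trimmed means can be sketched with the Python standard library, using the difference scores from Table 3. This is a minimal sketch of the general technique (resample the paired differences, compute the trimmed mean of each resample, and derive a two-sided p-value from the proportion of bootstrap estimates above zero). The number of resamples and the seed are arbitrary choices, the function names are hypothetical, and the p-values it returns are not guaranteed to match those reported here. The trimmed means themselves are deterministic: about 1.08 ripples per octave for the SMRT differences and −2.25° for the localization differences.

```python
import random
from typing import Sequence, Tuple

# Difference scores from Table 3 (positive = benefit with interleaving).
SMRT_DIFF = [0.9, 2.9, -1.3, 0.3, 3.9, 1.4, 0.7, 0.3]
LOC_DIFF = [-7, -6, 3, 1, 6, -6, -1, -4.5]


def trimmed_mean(x: Sequence[float], prop: float = 0.2) -> float:
    """Mean after dropping floor(prop * n) values from each end of the sorted data."""
    xs = sorted(x)
    g = int(prop * len(xs))
    kept = xs[g:len(xs) - g]
    return sum(kept) / len(kept)


def percentile_bootstrap(diffs: Sequence[float], prop: float = 0.2,
                         n_boot: int = 2000, seed: int = 1) -> Tuple[float, float]:
    """Percentile bootstrap test of H0: trimmed mean of paired differences = 0.

    Returns (observed trimmed mean, two-sided p-value).
    """
    rng = random.Random(seed)
    n = len(diffs)
    boot = [trimmed_mean([diffs[rng.randrange(n)] for _ in range(n)], prop)
            for _ in range(n_boot)]
    # Proportion of bootstrap estimates above zero, counting ties as half.
    above = sum(b > 0 for b in boot) + 0.5 * sum(b == 0 for b in boot)
    p_star = above / n_boot
    return trimmed_mean(diffs, prop), 2 * min(p_star, 1 - p_star)
```

With eight participants, a 20% trim drops one score from each end, so `percentile_bootstrap(SMRT_DIFF)` and `percentile_bootstrap(LOC_DIFF)` each summarize the middle six difference scores and return a bootstrap p-value for that estimate.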

Figure 1.

Spectral resolution was significantly better with interleaved processors. Each data point indicates results for one subject, labeled by subject ID. Points above the diagonal line indicate better performance with the interleaved processors; points below the diagonal line indicate better performance with the non-interleaved processors.

Table 3.

Difference between interleaved and non-interleaved conditions (positive scores indicate a benefit with interleaving).

| Subject ID | SMRT (ripples per octave) | Localization RMS error (degrees) |
|---|---|---|
| C3 | 0.9 | -7 |
| C14 | 2.9 | -6 |
| C20 | -1.3 | 3 |
| C21 | 0.3 | 1 |
| I01 | 3.9 | 6 |
| I02 | 1.4 | -6 |
| I03 | 0.7 | -1 |
| I05 | 0.3 | -4.5 |

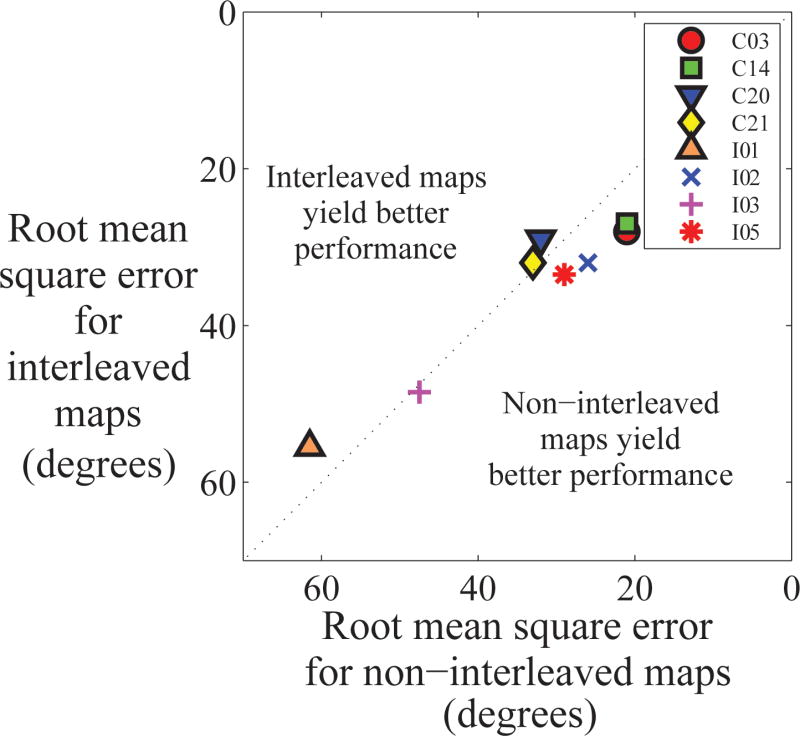

To determine if using interleaved processors detrimentally affected localization performance, a percentile bootstrap pairwise comparison with 20% trimmed means was conducted comparing localization performance with the two strategies. The results indicated that there was no significant difference between localizing with the interleaved and non-interleaved strategies (p = 0.36; 20% trimmed mean of the difference between the two processors: 2 degrees; see Figure 2 and Table 3).

Figure 2.

There was no significant difference between localization performance with interleaved and non-interleaved processors. Each data point indicates results for one subject, labeled by subject ID. Points above the diagonal line indicate better performance with the interleaved processors; points below the diagonal line indicate better performance with the non-interleaved processors.

Discussion

Interleaved processors improved the spectral resolution of cochlear implant patients by one ripple per octave, which vocoder simulations suggest corresponds to the addition of four channels (Aronoff and Landsberger 2013). Although interleaving may distort binaural cues, there was no significant effect of interleaving on localization abilities. This may have resulted from the limited frequency range used in the current study.

All but one participant (C20) had better spectral resolution with the interleaved processors. A previous study (Aronoff et al. 2015) showed that C20 also had greater masking with a contralateral masker than with an ipsilateral masker for a portion of their array. This increased contralateral masking may indicate that interleaved maps create more, rather than less, binaural interference for this participant.

These results suggest that interleaving may be clinically beneficial for CI patients, although further studies are needed to determine the generalizability of the results. These results also parallel the observed benefits of interleaving for other hearing impaired populations (e.g., Kulkarni et al. 2012; Lunner et al. 1993). However, these results are in direct contrast to the results found by Tyler et al. (2010) and Mani et al. (2004), who found no consistent effect or a negative effect of interleaved processors for CI users. The difference between the results from Tyler et al. (2010) and Mani et al. (2004) and the results from the current study may reflect the necessity of first using pitch matching to align the two arrays. Given that stimulation in the left and right ear is likely perceptually misaligned, this may be a critical prerequisite for interleaving with this patient population. The importance of pitch matching the arrays is consistent with results from simulations of interleaved processors with NH listeners, where the benefits of interleaved processors depended on starting with perceptually aligned channels (Siciliano et al. 2010). Although interleaved processors aligned based on electrode number have been shown to yield improvements when combined with the removal of sub-optimal channels based on modulation detection (Zhou and Pfingst 2012), that improvement may reflect the removal of the sub-optimal channels rather than the use of interleaving.

It may also be the case that the spacing between stimulation locations plays a key role in yielding a benefit with interleaving. In Tyler et al. (2010) and Mani et al. (2004), stimulation sites were separated by one electrode, typically on the more closely spaced Cochlear arrays, whereas in the current study, stimulation sites on the Advanced Bionics arrays were typically separated by a greater amount. Given the large current spread typical of cochlear implants, using every other electrode may not sufficiently reduce channel interaction.
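A back-of-the-envelope calculation in electrode units illustrates the spacing argument. Assuming twelve equally spaced sites spanning the full 16-electrode Advanced Bionics array (the aligned portion was narrower for some participants, which would shrink these values), interleaving places active sites about 2.7 electrodes apart in each ear, compared with 2 electrodes when every other electrode is used. Electrode units are not directly comparable across manufacturers because contact spacing differs, and this sketch does not model physical distance; the function name is hypothetical.

```python
def within_ear_spacing(first_site: float, last_site: float,
                       n_channels: int = 12, interleaved: bool = False) -> float:
    """Spacing (in electrode units) between adjacent active sites in one ear.

    Assumes n_channels equally spaced sites from first_site to last_site;
    interleaving keeps every other site, doubling the within-ear spacing.
    """
    if n_channels < 2:
        raise ValueError("need at least two channels")
    spacing = (last_site - first_site) / (n_channels - 1)
    return 2 * spacing if interleaved else spacing
```

For electrodes 1–16, `within_ear_spacing(1, 16)` is 15/11 ≈ 1.36 electrodes and `within_ear_spacing(1, 16, interleaved=True)` is 30/11 ≈ 2.73 electrodes.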

The absence of a significant detrimental effect on localization performance also contrasts with the results of Tyler et al. (2010) and Aronoff et al. (2014), in which participants' localization performance was consistently impaired by interleaved processors. One possible explanation for this discrepancy is the relatively low upper limit of the frequency allocation table used in the current study. The magnitude of the head shadow effect that creates ILDs increases with frequency. With interleaving, one ear will be missing input from the highest frequency region (i.e., the region with the largest ILDs). This artificially increases the ILD for sounds coming from sources near the ear that receives the highest frequency region and decreases the ILD for sounds coming from sources near the opposite ear, distorting the spatial map. The higher the frequency range, the larger this distortion of ILDs. As such, a low upper limit on the frequency range may have reduced the potentially detrimental effects of interleaving on ILD-based localization.

In addition to improving spectral resolution, interleaved processors have the potential to dramatically improve battery life. Because interleaving requires only half of the stimulation sites in each ear, it has the potential to nearly double the battery life of the processors. This may also allow for the use of more power-demanding stimulation modes such as tripolar and quadrupolar stimulation, which may further improve spectral resolution (e.g., Srinivasan et al. 2013; Srinivasan et al. 2012).

The results from this study demonstrate that, as with hearing aid users, cochlear implant patients can benefit from interleaved processors, although it may be necessary to first perceptually align the two ears and/or increase the spacing between stimulation locations. This study suggests that it may be clinically beneficial to implement interleaved processors for bilateral cochlear implant patients.

Supplementary Material

Acknowledgments

We thank our participants for their time and effort. We also thank Advanced Bionics for providing equipment and expertise for this study. This work was supported by the National Organization for Hearing Research (NOHR) and by NIH grants T32DC009975, R01-DC12152, R01-DC001526, R01-DC004993, R03-DC010064, and R03-DC013380.

Contributor Information

Justin M. Aronoff, Email: jaronoff@illinois.edu.

Julia Stelmach, Email: stelmac2@illinois.edu.

Monica Padilla, Email: Monica.PadillaVelez@nyumc.org.

David M. Landsberger, Email: David.Landsberger@nyumc.org.

References

- Aronoff JM, Amano-Kusumoto A, Itoh M, et al. The effect of interleaved filters on normal hearing listeners' perception of binaural cues. Ear Hear. 2014;35:708–710. doi: 10.1097/AUD.0000000000000060.

- Aronoff JM, Freed DJ, Fisher LM, et al. The effect of different cochlear implant microphones on acoustic hearing individuals' binaural benefits for speech perception in noise. Ear Hear. 2011;32:468–484. doi: 10.1097/AUD.0b013e31820dd3f0.

- Aronoff JM, Freed DJ, Fisher LM, et al. Cochlear implant patients' localization using interaural level differences exceeds that of untrained normal hearing listeners. J Acoust Soc Am. 2012;131:EL382–EL387. doi: 10.1121/1.3699017.

- Aronoff JM, Landsberger DM. The development of a modified spectral ripple test. J Acoust Soc Am. 2013;134:EL217–EL222. doi: 10.1121/1.4813802.

- Aronoff JM, Padilla M, Fu QJ, et al. Contralateral masking in bilateral cochlear implant patients: a model of medial olivocochlear function loss. PLoS One. 2015;10:e0121591. doi: 10.1371/journal.pone.0121591.

- Aronoff JM, Yoon YS, Freed DJ, et al. The use of interaural time and level difference cues by bilateral cochlear implant users. J Acoust Soc Am. 2010;127:EL87–EL92. doi: 10.1121/1.3298451.

- Busby PA, Battmer RD, Pesch J. Electrophysiological spread of excitation and pitch perception for dual and single electrodes using the Nucleus Freedom cochlear implant. Ear Hear. 2008;29:853–864. doi: 10.1097/AUD.0b013e318181a878.

- Donaldson GS, Kreft HA, Litvak L. Place-pitch discrimination of single- versus dual-electrode stimuli by cochlear implant users (L). J Acoust Soc Am. 2005;118:623–626. doi: 10.1121/1.1937362.

- Firszt JB, Koch DB, Downing M, et al. Current steering creates additional pitch percepts in adult cochlear implant recipients. Otol Neurotol. 2007;28:629–636. doi: 10.1097/01.mao.0000281803.36574.bc.

- Fishman KE, Shannon RV, Slattery WH. Speech recognition as a function of the number of electrodes used in the SPEAK cochlear implant speech processor. J Speech Lang Hear Res. 1997;40:1201–1215. doi: 10.1044/jslhr.4005.1201.

- Francart T, Wouters J. Perception of across-frequency interaural level differences. J Acoust Soc Am. 2007;122:2826–2831. doi: 10.1121/1.2783130.

- Friesen LM, Shannon RV, Baskent D, et al. Speech recognition in noise as a function of the number of spectral channels: comparison of acoustic hearing and cochlear implants. J Acoust Soc Am. 2001;110:1150–1163. doi: 10.1121/1.1381538.

- Fu QJ, Nogaki G. Noise susceptibility of cochlear implant users: the role of spectral resolution and smearing. J Assoc Res Otolaryngol. 2005;6:19–27. doi: 10.1007/s10162-004-5024-3.

- Henry BA, Turner CW. The resolution of complex spectral patterns by cochlear implant and normal-hearing listeners. J Acoust Soc Am. 2003;113:2861–2873. doi: 10.1121/1.1561900.

- Kan A, Stoelb C, Litovsky RY, et al. Effect of mismatched place-of-stimulation on binaural fusion and lateralization in bilateral cochlear-implant users. J Acoust Soc Am. 2013;134:2923–2936. doi: 10.1121/1.4820889.

- Kulkarni PN, Pandey PC, Jangamashetti DS. Binaural dichotic presentation to reduce the effects of spectral masking in moderate bilateral sensorineural hearing loss. Int J Audiol. 2012;51:334–344. doi: 10.3109/14992027.2011.642012.

- Landsberger DM, Srinivasan AG. Virtual channel discrimination is improved by current focusing in cochlear implant recipients. Hear Res. 2009;254:34–41. doi: 10.1016/j.heares.2009.04.007.

- Lin P, Lu T, Zeng FG. Central masking with bilateral cochlear implants. J Acoust Soc Am. 2013;133:962–969. doi: 10.1121/1.4773262.

- Loizou PC, Dorman M, Tu Z. On the number of channels needed to understand speech. J Acoust Soc Am. 1999;106:2097–2103. doi: 10.1121/1.427954.

- Loizou PC, Mani A, Dorman MF. Dichotic speech recognition in noise using reduced spectral cues. J Acoust Soc Am. 2003;114:475–483. doi: 10.1121/1.1582861.

- Lorenzi C, Gilbert G, Carn H, et al. Speech perception problems of the hearing impaired reflect inability to use temporal fine structure. Proc Natl Acad Sci U S A. 2006;103:18866–18869. doi: 10.1073/pnas.0607364103.

- Lunner T, Arlinger S, Hellgren J. 8-channel digital filter bank for hearing aid use: preliminary results in monaural, diotic and dichotic modes. Scand Audiol Suppl. 1993;38:75–81.

- Luo X, Landsberger DM, Padilla M, et al. Encoding pitch contours using current steering. J Acoust Soc Am. 2010;128:1215–1223. doi: 10.1121/1.3474237.

- Luo X, Padilla M, Landsberger DM. Pitch contour identification with combined place and temporal cues using cochlear implants. J Acoust Soc Am. 2012;131:1325–1336. doi: 10.1121/1.3672708.

- Mani A, Loizou PC, Shoup A, et al. Dichotic speech recognition with bilateral cochlear implant users. International Congress Series. 2004;1273:466–469.

- Muller J, Schon F, Helms J. Speech understanding in quiet and noise in bilateral users of the MED-EL COMBI 40/40+ cochlear implant system. Ear Hear. 2002;23:198–206. doi: 10.1097/00003446-200206000-00004.

- Saoji AA, Litvak LM, Hughes ML. Excitation patterns of simultaneous and sequential dual-electrode stimulation in cochlear implant recipients. Ear Hear. 2009;30:559–567. doi: 10.1097/AUD.0b013e3181ab2b6f.

- Shannon RV, Fu QJ, Galvin J 3rd. The number of spectral channels required for speech recognition depends on the difficulty of the listening situation. Acta Otolaryngol Suppl. 2004:50–54. doi: 10.1080/03655230410017562.

- Shannon RV, Zeng FG, Kamath V, et al. Speech recognition with primarily temporal cues. Science. 1995;270:303–304. doi: 10.1126/science.270.5234.303.

- Siciliano CM, Faulkner A, Rosen S, et al. Resistance to learning binaurally mismatched frequency-to-place maps: implications for bilateral stimulation with cochlear implants. J Acoust Soc Am. 2010;127:1645–1660. doi: 10.1121/1.3293002.

- Srinivasan AG, Padilla M, Shannon RV, et al. Improving speech perception in noise with current focusing in cochlear implant users. Hear Res. 2013;299:29–36. doi: 10.1016/j.heares.2013.02.004.

- Srinivasan AG, Shannon RV, Landsberger DM. Improving virtual channel discrimination in a multi-channel context. Hear Res. 2012;286:19–29. doi: 10.1016/j.heares.2012.02.011.

- Takagi Y, Itoh M, Noguchi E, et al. A study on the real-time dichotic-listening hearing aids considering spectral masking for improving speech intelligibility. Proceedings of the Acoustical Society of Japan. 2010:1521–1522.

- Tyler RS, Witt SA, Dunn CC, et al. An attempt to improve bilateral cochlear implants by increasing the distance between electrodes and providing complementary information to the two ears. J Am Acad Audiol. 2010;21:52–65. doi: 10.3766/jaaa.21.1.7.

- van Hoesel R, Bohm M, Pesch J, et al. Binaural speech unmasking and localization in noise with bilateral cochlear implants using envelope and fine-timing based strategies. J Acoust Soc Am. 2008;123:2249–2263. doi: 10.1121/1.2875229.

- van Hoesel RJ, Tyler RS. Speech perception, localization, and lateralization with bilateral cochlear implants. J Acoust Soc Am. 2003;113:1617–1630. doi: 10.1121/1.1539520.

- Won JH, Drennan WR, Rubinstein JT. Spectral-ripple resolution correlates with speech reception in noise in cochlear implant users. J Assoc Res Otolaryngol. 2007;8:384–392. doi: 10.1007/s10162-007-0085-8.

- Zhou N, Pfingst BE. Psychophysically based site selection coupled with dichotic stimulation improves speech recognition in noise with bilateral cochlear implants. J Acoust Soc Am. 2012;132:994–1008. doi: 10.1121/1.4730907.

- Zhou N, Xu L. Dichotic stimulation with cochlear implants: maximizing the two-ear advantage. Poster presented at the Association for Research in Otolaryngology 34th Annual Midwinter Research Meeting; 2011.
