Abstract

Purpose of review

Randomized, population-representative trials of clinical interventions are rare. Quasi-experiments have been used successfully to generate causal evidence on the cascade of HIV care in a broad range of real-world settings.

Recent findings

Quasi-experiments exploit exogenous, or quasi-random, variation occurring naturally in the world or because of an administrative rule or policy change to estimate causal effects. Well-designed quasi-experiments have greater internal validity than typical observational research designs. At the same time, quasi-experiments may have greater external validity than experiments and can be implemented when randomized clinical trials are infeasible or unethical. Quasi-experimental studies have established the causal effects of HIV testing and initiation of antiretroviral therapy on health, economic outcomes and sexual behaviors, as well as indirect effects on other community members. Recent quasi-experiments have evaluated specific interventions to improve patient progression through the cascade of care, providing causal evidence to optimize clinical management of HIV.

Summary

Quasi-experiments have generated important data on the real-world impacts of HIV testing and treatment and on interventions to improve the cascade of care. With the growth in large-scale clinical and administrative data, quasi-experiments enable rigorous evaluation of policies implemented in real-world settings.

Keywords: cascade of care, causal inference, HIV/AIDS, natural experiment, quasi-experiment

INTRODUCTION

The second decade of mass HIV treatment provision has brought with it new hopes and challenges. On the one hand, the large potential for reduced transmission with early antiretroviral therapy (ART) has led to optimism about further expansions of HIV testing and treatment. On the other hand, despite the widespread availability and efficacy of ART, HIV is still the leading cause of death in southern Africa [1] and losses along the ‘cascade’ from HIV testing to initiating and sustaining ART remain high [2]. Consequently, there is strong interest in improving the effectiveness and sustainability of interventions through ‘implementation science’ research [3]. Fortunately, we have a decade of history to learn from. Although such an approach would have been infeasible during the initial ‘emergency’ phase of ART scale-up, we can now look back and learn from existing programmes, policies and interventions implemented in real-world settings and at scale. In doing so, researchers have at their disposal an important tool that provides powerful opportunities to grow the evidence base on the real-world effectiveness of interventions along the HIV treatment cascade: quasi-experiments.

In this article, we first define quasi-experiments and characterize specific study designs as examples of the approach. We define the term narrowly, noting that a range of study designs sometimes included under the banner of quasi-experiments would not meet the criteria we set out. Second, we describe the benefits and limitations of quasi-experiments vis-à-vis the traditional stalwarts of empirical clinical and epidemiological research: the randomized clinical trial and the nonexperimental observational study. (We note the importance of modeling and projection studies to inform policy decision-making, but limit the discussion here to empirical studies of causal effects.) Third, we review a recent wave of studies that have used quasi-experimental designs either to evaluate components of the HIV cascade of care or to evaluate interventions to improve patient progression through the cascade of care. In our review, we draw out specific aspects of these studies that highlight the strengths and capabilities of quasi-experimental designs.

We note that a substantial portion of this literature comes from economics, and not all of these studies are indexed in PubMed. (Publishing timelines in economics are much longer than those in public health and medicine, and research results are often available as working papers or conference papers long before they are formally published.) All studies that we review that are not indexed in PubMed are freely available online and were accessed via Google Scholar.

Box 1.


QUASI-EXPERIMENTS: A DEFINITION

Quasi-experiments are studies in which a treatment or exposure is assigned by exogenous (or quasi-random) variation occurring naturally in the world, or resulting from an administrative rule, policy change, or intervention [4,5]. Exogeneity implies that treatment assignment is not influenced by factors associated with the outcome of interest (i.e. confounders). In randomized-controlled trials, exogeneity is achieved through the investigator's randomization of subjects to different exposures. Although quasi-experiments are typically observational studies (studies in which exposure status is not assigned by the investigator), they have more in common with randomized clinical trials than with nonexperimental observational studies in their approach to causal inference. Nonexperimental methods, such as multiple regression, matching, reweighting and stratification, adjust for measured confounders and rely for causal inference on the ubiquitous but often strong assumption that there are no unobserved confounders. In contrast, and similar to randomized trials, quasi-experiments identify causal effects from exogenous variation in treatment assignment that is plausibly independent of unobserved confounders.
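The role of unobserved confounding can be illustrated with a small simulation. In this minimal Python sketch, the effect sizes, the binary confounder `u` and the assignment probabilities are all hypothetical: the same naive treated-versus-untreated comparison is biased when treatment depends on an unmeasured factor, but not when treatment is assigned at random.

```python
import random

TRUE_EFFECT = 1.0  # hypothetical causal effect of treatment on the outcome


def simulate(n, confounded, seed=0):
    """Return (treatment, outcome) lists for n hypothetical subjects.

    u is an unobserved binary confounder that raises the outcome by 2.0.
    If confounded is True, subjects with u == 1 are more likely to be treated;
    otherwise treatment is assigned by a fair coin flip (randomization).
    """
    rng = random.Random(seed)
    treatment, outcome = [], []
    for _ in range(n):
        u = rng.random() < 0.5
        p_treat = (0.8 if u else 0.2) if confounded else 0.5
        t = rng.random() < p_treat
        y = TRUE_EFFECT * t + 2.0 * u + rng.gauss(0.0, 1.0)
        treatment.append(t)
        outcome.append(y)
    return treatment, outcome


def naive_difference(treatment, outcome):
    """Difference in mean outcome between treated and untreated subjects."""
    treated = [y for t, y in zip(treatment, outcome) if t]
    control = [y for t, y in zip(treatment, outcome) if not t]
    return sum(treated) / len(treated) - sum(control) / len(control)


confounded_est = naive_difference(*simulate(20_000, confounded=True))
randomized_est = naive_difference(*simulate(20_000, confounded=False))
print(f"confounded: {confounded_est:.2f}, randomized: {randomized_est:.2f}")
```

Under these assumptions the confounded estimate should be close to 2.2 (the true effect of 1.0 plus a bias of 2.0 × (0.8 − 0.2)), while the randomized estimate should be close to 1.0. No amount of adjustment for measured covariates would remove the bias here, because `u` is never observed.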

Several study designs fit this definition of a quasi-experiment [6,7]. Regression discontinuity designs can be implemented when an exposure is assigned by a threshold rule on a continuous pretreatment covariate (e.g. a clinical risk score), exploiting the similarity of subjects just above and below the threshold [8,9,10▪▪]. Interrupted time series designs, which can be viewed as a special case of regression discontinuity in which the threshold is a point in time, identify causal effects of rapid temporal changes in exposure status within the same group of study subjects. Difference-in-differences designs can be used to identify the causal effect of a plausibly exogenous change in exposure across differentially exposed groups, for example a policy change or environmental exposure that affected some regions but not others [4]. More generally, exogenous variation in an exposure may be identified whenever there is a valid instrumental variable: a variable that is correlated with the exposure but not independently related to the outcome [11,12]. In fact, instrumental variable methods can be used across the full range of quasi-experimental designs to obtain the causal effect of treatment itself within the subpopulation induced to take up treatment (rather than the intent-to-treat effect of treatment assignment), analogous to corrections for noncompliance in randomized trials [11]. In rare but opportune quasi-experiments, treatment assignment is actually randomized, just not by the investigator, as in the Vietnam draft lottery [13,14] and Mendelian randomization of genetic traits [15].
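The instrumental variable correction for noncompliance can be sketched with the Wald estimator for a binary instrument: the intent-to-treat effect on the outcome divided by the effect of the instrument on treatment uptake. This is a minimal illustration, assuming the standard conditions of instrument relevance, exclusion (the instrument affects the outcome only through treatment) and monotonicity [11]; under these conditions the ratio estimates the effect among subjects induced to take up treatment. The function name `wald_estimate` and the data are hypothetical.

```python
def mean(values):
    return sum(values) / len(values)


def wald_estimate(z, d, y):
    """Instrumental-variable (Wald) estimate with a binary instrument.

    z: binary instrument (e.g. assignment or eligibility), d: treatment actually
    received (0/1), y: outcome. Returns the intent-to-treat effect on y divided
    by the intent-to-treat effect on d (the "first stage").
    """
    y1 = [yi for zi, yi in zip(z, y) if zi == 1]
    y0 = [yi for zi, yi in zip(z, y) if zi == 0]
    d1 = [di for zi, di in zip(z, d) if zi == 1]
    d0 = [di for zi, di in zip(z, d) if zi == 0]
    itt_outcome = mean(y1) - mean(y0)  # reduced form / intent-to-treat effect
    first_stage = mean(d1) - mean(d0)  # effect of the instrument on uptake
    if first_stage == 0:
        raise ValueError("instrument does not shift treatment uptake")
    return itt_outcome / first_stage


# Hypothetical data: 8 subjects, one of four assigned subjects does not comply
z = [1, 1, 1, 1, 0, 0, 0, 0]
d = [1, 1, 1, 0, 0, 0, 0, 0]
y = [5, 5, 5, 1, 1, 1, 1, 1]
print(wald_estimate(z, d, y))  # ITT 3.0 / first stage 0.75 -> 4.0
```

Scaling the intent-to-treat effect (3.0) by the share of subjects whose treatment was actually changed by the instrument (0.75) gives the larger per-complier effect (4.0).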

Common to all of these quasi-experimental designs is that the researcher identifies a source of exogenous, quasi-random (or random) variation occurring in the world and designs a study to analyze it. We note that our definition differs from some others in the literature, which define a quasi-experiment as a prospective study in which the investigator assigns treatment in a controlled environment, but does so nonrandomly [16]. Under our definition, the ‘experiment’ in the quasi-experiment arises not because the investigator has intervened, but because treatment was assigned, at least in part, by some exogenous factors that can either be observed or isolated by the researcher. Sometimes, researchers are not able to measure a specific source of exogenous variation (e.g. an instrument), but are nevertheless able to control for broad classes of unobserved confounders, for example all time-invariant characteristics of individuals or places, through the use of fixed effects [17]. These studies have been termed ‘weak quasi-experiments’ [18▪▪].
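As a minimal sketch of how fixed effects absorb unobserved confounders, the hypothetical Python example below compares a pooled regression slope with the within (fixed-effects) estimator, which subtracts each unit's own mean from its observations. It assumes the unobserved confounder is constant over time within a unit; time-varying confounders are not removed by this transformation. The unit labels, data and function names are illustrative only.

```python
from collections import defaultdict


def slope(x, y):
    """OLS slope of y on x (with an intercept)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def within_slope(unit, x, y):
    """Fixed-effects (within) estimator: demean x and y inside each unit,
    which removes any time-invariant unit-level confounder, then fit OLS."""
    groups = defaultdict(list)
    for u, a, b in zip(unit, x, y):
        groups[u].append((a, b))
    x_dm, y_dm = [], []
    for obs in groups.values():
        mx = sum(a for a, _ in obs) / len(obs)
        my = sum(b for _, b in obs) / len(obs)
        x_dm.extend(a - mx for a, _ in obs)
        y_dm.extend(b - my for _, b in obs)
    return slope(x_dm, y_dm)


# Hypothetical panel: y = alpha_unit + 2 * x, where unit "A" has both a larger
# unobserved alpha (10 vs 0) and higher exposure x than unit "B".
unit = ["A", "A", "A", "B", "B", "B"]
x = [1, 2, 3, 0, 1, 2]
y = [12, 14, 16, 0, 2, 4]
print(slope(x, y))               # pooled OLS: about 4.73, biased by alpha
print(within_slope(unit, x, y))  # within estimator: 2.0
```

The pooled slope mixes the effect of exposure with the unit-level confounder, whereas the within estimator compares each unit only with itself and recovers the true slope of 2.0.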

STRENGTHS AND LIMITATIONS OF QUASI-EXPERIMENTAL DESIGNS

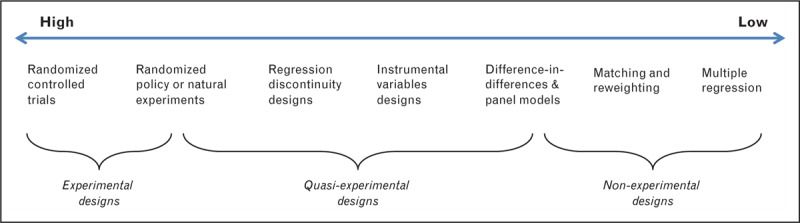

The key strength of quasi-experimental designs vis-à-vis observational studies that rely on adjustment for measured covariates is the plausibility of the assumptions required for valid causal inference. Although there is substantial heterogeneity across quasi-experimental designs, all seek to reduce reliance on the assumption that there are no unobserved confounders. Figure 1 provides a schematic ranking of different study designs in order of their reliance on the assumption of no unobserved confounding. The ordering of study designs in Figure 1 is an approximation: internal validity in quasi-experiments depends on the source of exogenous variation and whether it can credibly be considered as-good-as-random. For example, an instrumental variable that is itself confounded, or that affects the outcome through pathways other than the exposure, will lead to inferences no better than most regression analyses. The figure also reflects that the boundaries between design categories (experiment, quasi-experiment and nonexperiment) are blurred. Under some definitions, truly randomized exposures are considered experiments, even if the investigator is not involved in treatment assignment. Similarly, under some definitions, difference-in-differences models are considered quasi-experiments even if no specific source of exogenous variation is identified [18▪▪].

FIGURE 1.

Likelihood that the assumption of no unobserved confounding is satisfied.

Quasi-experiments have several important advantages over randomized-controlled trials. They offer opportunities to study the effectiveness of interventions implemented at scale in real-world settings, and to study the effectiveness across different populations and points in time. Quasi-experiments can evaluate interventions that randomized trials cannot ethically test. And they are typically much cheaper and may be more politically feasible to conduct than randomized trials. Quasi-experiments can often be implemented using existing data, and opportunities to use such designs are expanding with the proliferation of ‘big data’.

The key challenge in quasi-experimental designs vis-à-vis randomized-controlled trials is that the investigator does not control treatment assignment. Therefore, the investigator cannot be 100% certain that treatment assignment was actually random (or quasi-random). Rather, the investigator posits the random or quasi-random process that gave rise to treatment assignment, and then gathers evidence – both qualitative and quantitative – to support this assumption. The quality of a quasi-experimental study depends on the strength of evidence supporting this interpretation, which typically relies on the rigor and transparency with which supporting assumptions are reported and tested (where possible).
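One common quantitative check, sketched below in Python for a regression discontinuity design, follows from the as-good-as-random assumption: pretreatment covariates should not jump at the threshold. The function name, the bandwidth of 20, and the CD4 and age values are hypothetical; a full analysis would also quantify uncertainty and, for example, check for bunching of the running variable at the threshold [9].

```python
def covariate_gap(running, covariate, cutoff, bandwidth):
    """Difference in the mean of a pretreatment covariate between subjects
    just below and just above a threshold. Under a valid regression
    discontinuity design this gap should be close to zero."""
    below = [c for r, c in zip(running, covariate)
             if cutoff - bandwidth <= r < cutoff]
    above = [c for r, c in zip(running, covariate)
             if cutoff <= r <= cutoff + bandwidth]
    if not below or not above:
        raise ValueError("no observations on one side of the cutoff")
    return sum(below) / len(below) - sum(above) / len(above)


# Hypothetical CD4 counts (cells/uL) and ages at first presentation;
# the subjects at 150 and 260 fall outside the bandwidth and are ignored.
cd4 = [150, 185, 190, 195, 200, 205, 210, 260]
age = [50, 34, 36, 35, 35, 34, 36, 20]
print(covariate_gap(cd4, age, cutoff=200, bandwidth=20))  # 0.0: ages balance
```

A large gap would suggest that subjects on either side of the threshold differ systematically, undermining the claim that assignment near the threshold is quasi-random.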

Quasi-experimental designs offer opportunities to learn about the causal impacts of HIV care and treatment as implemented in real-world settings and to evaluate interventions to improve the cascade of HIV care. In the rest of the article, we review the extant quasi-experimental literature on the HIV cascade of care, elucidating key ways that quasi-experiments can generate data that randomized trials cannot.

QUASI-EXPERIMENTS TO EVALUATE THE IMPACT OF HIV CARE AND TREATMENT

Real-world health impacts of programmes as implemented

Quasi-experimental designs can identify the impacts of programmes, policies and interventions as implemented, at scale, in real-world settings. Programmes face myriad challenges, such as poor provider training, drug stock-outs, absenteeism and low patient motivation: contextual factors that efficacy trials attempt to control. Critically, behavioral responses of providers and patients may mitigate (or reinforce) direct health effects. For example, the scale-up of ART may lead to the (false) perception that HIV is less prevalent because fewer people are dying [19], and thus to lower rates of HIV testing and enrolment in care. Conversely, the scale-up of ART could reduce fears about learning one's status, leading to increased HIV testing and enrolment in care. The causal effect of ART scale-up on HIV testing (and downstream outcomes) is thus an empirical question, and because ART scale-up cannot be randomized, it can only be answered using observational data. Wilson [20] evaluates the staged geographic scale-up of ART in Zambia and finds that while scale-up increased HIV testing among women and older men, it did not increase testing among working-age men, possibly because of low ART uptake in this group.

Another strength of quasi-experiments is the ability to quantify the magnitude of effects where the direction of effect is already known. In many cases equipoise cannot be demonstrated (A is known to be better than B), and yet the magnitude of benefits, and thus the extent to which scarce resources should be allocated to this intervention vis-à-vis other life-saving interventions, remains uncertain. Randomized controlled trials (RCTs) cannot provide evidence in such cases, because without equipoise a trial of intervention effects would be considered unethical. Because the investigator does not (typically) control treatment assignment in a quasi-experiment, quasi-experiments are considered ethical even if equipoise cannot (or can no longer) be established. Good examples in the HIV literature relate to the scale-up of ART and health outcomes. Bendavid et al. [21] use a difference-in-differences strategy to estimate the effect of funding from the US President's Emergency Plan for AIDS Relief (PEPFAR) on adult mortality, exploiting the fact that the selection of countries receiving PEPFAR funding was somewhat arbitrary. Bor et al. [22] use an interrupted time series strategy with a modeled counterfactual to assess the effect of ART scale-up on population adult life expectancy in rural South Africa, finding gains of 11.3 years following the introduction of mass treatment. Clearly, ART could not be randomly assigned to some communities and not others; and yet, causal evidence on the aggregate population health impact of ART is important to guide policy-making and resource allocation.
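The arithmetic behind the simplest (2×2) difference-in-differences design can be sketched as follows. This is not the specification used by Bendavid et al. [21]; it is a hypothetical illustration with made-up mortality figures and an illustrative function name. The estimate subtracts the change in the comparison group from the change in the exposed group, and it has a causal interpretation only under the assumption that both groups would have followed parallel trends in the absence of the exposure.

```python
from collections import defaultdict


def diff_in_diff(records):
    """2x2 difference-in-differences estimate from (group, period, outcome) records.

    group is "treated" or "control"; period is "pre" or "post".
    Returns (treated post - treated pre) - (control post - control pre).
    """
    cells = defaultdict(list)
    for group, period, outcome in records:
        cells[(group, period)].append(outcome)
    m = {key: sum(vals) / len(vals) for key, vals in cells.items()}
    treated_change = m[("treated", "post")] - m[("treated", "pre")]
    control_change = m[("control", "post")] - m[("control", "pre")]
    return treated_change - control_change


# Hypothetical adult mortality rates (deaths per 1,000 adults), two countries per group
records = [
    ("treated", "pre", 19.0), ("treated", "pre", 21.0),
    ("treated", "post", 14.0), ("treated", "post", 16.0),
    ("control", "pre", 17.0), ("control", "pre", 19.0),
    ("control", "post", 16.0), ("control", "post", 18.0),
]
print(diff_in_diff(records))  # (15 - 20) - (17 - 18) = -4.0
```

The comparison group's decline of 1 death per 1,000 stands in for what would have happened to the exposed group anyway, so only the remaining decline of 4 is attributed to the exposure.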

Quasi-experimental studies have also looked at the health impacts of treatment for the patients targeted. Bor et al. [10▪▪,23▪] estimate the causal effect of immediate versus deferred ART eligibility on mortality in a regression discontinuity design, finding large gains in survival among patients presenting with CD4 counts near the 200 cells/μl eligibility threshold who did not have other serious clinical symptoms (stage IV HIV illness). Exploiting the differential scale-up of ART in Zambia, Lucas and Wilson [24] identify population-level increases in weight among Zambian women likely to be HIV-positive. Using sharp changes over time in the availability of ART in the USA, Papageorge [25] documents decreases in domestic violence and illicit drug use among low-income, HIV-positive women.

Quasi-experimental study designs have also been used to assess ART regimen choice. Nelson et al. [26] use physician prescribing preference as an instrument for whether a patient started a protease inhibitor-based or a nonnucleoside reverse transcriptase inhibitor (NNRTI)-based regimen, and find substantially higher adherence among patients started on an NNRTI. Several quasi-experimental and experimental studies have evaluated the impact of HIV testing on sexual behaviors and the incidence of sexually transmitted infections [27–30].

Spillover effects of interventions beyond the health of patients in care

Cost–benefit calculations should capture the full social costs and benefits of an intervention, including nonhealth outcomes and spillover effects beyond those persons directly targeted. Because of financial and ethical constraints, however, randomized trials are often stopped when the primary health outcome is reached. Several difference-in-differences studies have evaluated the economic impacts of HIV treatment scale-up on patients’ productivity and labor supply, documenting substantial though incomplete recovery [31–35]. Other studies have compared households where a member initiated ART to those where no household member initiated ART, and evaluated household spillover effects on labor supply [31,36,37], time-use [38], assets [33], health [36,39] and sexual behaviors [40].

Still other studies have exploited differential changes in exposure to ART over space and time to identify aggregate effects of ART scale-up on economic outcomes [41,42] and sexual behavior [43,44,45▪] at the community level, and spillover effects on the labor supply [19,46], education investments [47▪] and sexual behaviors of HIV-uninfected community members in particular [42]. For example, Friedman [45▪] exploits the differential rollout of ART across regions of Kenya to measure the effect of community ART availability on sexual behavior and pregnancy. In a recent study, Wirth et al. [48] use an instrumental variables approach to assess the impact of local-area ART coverage on HIV incidence, replicating with a quasi-experimental design the results of an influential nonexperimental study [49].

External validity across a range of settings

Effects may be context-specific. One key strength of quasi-experiments is the ability to replicate analyses across settings even after a clinical benefit has been established. For example, the effects of ART on labor market outcomes will depend on local labor market conditions. Much faster recovery of labor supply was observed in an agricultural setting in western Kenya [31] and among employees of companies in Kenya and Botswana [31–34] than in a general patient population in rural South Africa, where work opportunities were scarce [35]. Quasi-experiments can contribute to systematic knowledge of how effects vary across contexts.

In sum, these quasi-experimental studies have demonstrated very large population health impacts of ART scale-up [21,22,24], with substantial health [10▪▪,23▪] and economic [31–35] benefits accruing to patients on ART. Further, the impacts of ART extend beyond patients themselves and include economic and health benefits to members of households [37,38,50,51] and the broader community [19,23▪,47▪]. The threat of sexual disinhibition is real [43,44,45▪], but is very likely outweighed by the reduction in transmission from persons on ART [45▪,49].

QUASI-EXPERIMENTS TO IMPROVE PROGRESSION THROUGH THE CASCADE OF HIV CARE

Although quasi-experiments have generated substantial evidence about the real-world clinical and population impacts of HIV care and treatment, fewer studies have evaluated the impacts of specific interventions to improve patient progression through the cascade of HIV care. Nevertheless, progress has been made and this is a growing literature.

Three studies used difference-in-differences approaches to establish the impact of interventions on HIV testing: De Walque et al. [52▪] found that ‘pay-for-performance’ provider incentives modestly increased HIV testing and counseling services in Rwanda; Goetz et al. [53] found that provider education and feedback improved rates of HIV testing in Veterans Administration facilities; and McGovern et al. [54] showed that a food voucher given to heads of households in a community in rural KwaZulu-Natal, South Africa, increased consent to home-based HIV testing among all household members. Klein et al. [55] used an interrupted time series design to assess the rollout of routine HIV testing in North Carolina and found close to zero impact on case detection.
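A minimal segmented-regression sketch of an interrupted time series is shown below. The data are hypothetical and do not reproduce any study reviewed here; the function names are illustrative. The sketch returns point estimates only: a real analysis would need standard errors that allow for autocorrelation, and a controlled interrupted time series would add a comparison series.

```python
def ols_line(x, y):
    """Return (intercept, slope) of the OLS line of y on x."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((a - mx) ** 2 for a in x)
    b = sum((a - mx) * (c - my) for a, c in zip(x, y)) / sxx
    return my - b * mx, b


def interrupted_time_series(t, y, t0):
    """Point estimates of the level and slope change at an interruption at t0.

    Fitting separate OLS lines before and after t0 gives the same point
    estimates as one segmented regression of y on t, post and (t - t0) * post.
    Standard errors (which should account for autocorrelation) are not computed.
    """
    pre = [(ti, yi) for ti, yi in zip(t, y) if ti < t0]
    post = [(ti, yi) for ti, yi in zip(t, y) if ti >= t0]
    a_pre, b_pre = ols_line(*zip(*pre))
    a_post, b_post = ols_line(*zip(*post))
    level_change = (a_post + b_post * t0) - (a_pre + b_pre * t0)
    return level_change, b_post - b_pre


# Hypothetical monthly HIV testing rate: trend of 1 per month before month 6,
# then a jump of 3 and a trend of 1.5 per month afterwards.
t = list(range(12))
y = [10 + ti if ti < 6 else 13 + ti + 0.5 * (ti - 6) for ti in t]
print(interrupted_time_series(t, y, t0=6))  # (3.0, 0.5)
```

The pre-period trend, projected past the interruption, serves as the counterfactual; the level change and slope change are measured against that projection.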

Once patients test positive, how can they best be linked into long-run care and treatment? Bor et al. [10▪▪,23▪] assessed the causal impacts of immediate versus deferred ART eligibility for patients presenting for care in rural South Africa, using a regression discontinuity design. Patients presenting just above the 200-cell CD4 count eligibility threshold had higher mortality, lower retention in care and lower CD4 counts at follow-up than patients presenting with CD4 counts just below the threshold. Further down the cascade, Boruett et al. [56] used a controlled interrupted time series design to evaluate a facility-level ART adherence intervention in Kenya, and found small but significant improvements in patient adherence.
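The regression discontinuity comparison at the 200-cell threshold can be sketched as local linear regressions fitted separately on either side of the cutoff. This is a minimal illustration: the data, the uniform kernel and the fixed bandwidth are assumptions for the example, the function names are hypothetical, and it is not the estimation procedure reported in [10▪▪,23▪].

```python
def ols_line(x, y):
    """Return (intercept, slope) of the OLS line of y on x."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((a - mx) ** 2 for a in x)
    b = sum((a - mx) * (c - my) for a, c in zip(x, y)) / sxx
    return my - b * mx, b


def rd_estimate(running, outcome, cutoff, bandwidth):
    """Sharp RD estimate of the effect of eligibility, defined as running < cutoff.

    Fits separate local linear regressions (uniform kernel) to observations
    within `bandwidth` of the cutoff on each side, centred at the cutoff, and
    returns the fitted value just below minus the fitted value just above.
    """
    below = [(r - cutoff, y) for r, y in zip(running, outcome)
             if cutoff - bandwidth <= r < cutoff]
    above = [(r - cutoff, y) for r, y in zip(running, outcome)
             if cutoff <= r <= cutoff + bandwidth]
    intercept_below, _ = ols_line(*zip(*below))
    intercept_above, _ = ols_line(*zip(*above))
    return intercept_below - intercept_above


# Hypothetical data: CD4 count (cells/uL) at presentation and a continuous
# outcome; eligible patients (CD4 < 200) are 2 units higher at the threshold.
# The points at 150 and 300 lie outside the bandwidth and are ignored.
cd4 = [150, 180, 185, 190, 195, 200, 205, 210, 215, 220, 300]
outcome = [0.0, 2.0, 3.25, 4.5, 5.75, 5.0, 6.25, 7.5, 8.75, 10.0, 100.0]
print(rd_estimate(cd4, outcome, cutoff=200, bandwidth=20))  # 2.0
```

Because only patients close to the threshold inform the estimate, the result applies to patients presenting with CD4 counts near 200, which mirrors the local nature of the regression discontinuity findings described above.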

CONCLUSION

Quasi-experimental methods have been used extensively to evaluate the impact of HIV care and treatment scale-up, although this literature is largely in economics and is not widely cited in the mainstream HIV literature. Recent quasi-experiments have generated valuable evidence on specific interventions to improve progression across the HIV treatment cascade, for example better understanding the causal effect of offering patients immediate access to ART rather than referring them to pre-ART care, in real-world settings. Quasi-experiments have also been used effectively in HIV research beyond the ‘cascade of care’, for example to evaluate the effect of interventions in preventing new HIV infections [57▪,58] and reducing stigma [59], and in the estimation of HIV prevalence in the context of selective nonresponse [60,61▪]. With the increasing collection of large administrative, surveillance and clinical datasets, and the ability to link these sources, the opportunities for rigorous quasi-experiments will only grow.

Current guidelines for evidence synthesis (for example the GRADE criteria used by WHO, http://www.gradeworkinggroup.org) and for reporting of studies (for example STROBE guidelines) do not make separate recommendations for quasi-experimental studies. Quasi-experiments are typically graded as observational, nonexperimental studies, but this assessment does not allow for the fact that some quasi-experiments, for example regression discontinuity designs and randomized natural or policy experiments, yield inferences nearly as strong as those of randomized clinical trials. Furthermore, the features of a rigorously presented quasi-experimental study differ from those of a rigorously presented cohort or case–control study.

Should there be a third GRADE category for quasi-experiments? Devising checklists for the assessment of quasi-experiments is difficult because of the wide range of data-generating processes that give rise to quasi-experiments, and the wide range of methods used to analyze them. Often, specific subject-area knowledge is required to determine the plausibility of assumptions invoked in a quasi-experiment. And yet, the potential of these designs to produce rigorous, actionable evidence to improve patient care, policy design and resource allocation is too great to be ignored. Quasi-experimental studies have been widely accepted in the social sciences as valid methods of inquiry [4,5]. Quasi-experiments are gaining increasing appreciation and use in clinical and population health research [10▪▪,12,62▪]. Future research should focus on approaches to better integrate quasi-experiments into methods for evidence synthesis [18▪▪,63].

Acknowledgements

None.

Financial support and sponsorship

This work was supported in part by National Institutes of Health grant 1K01MH105320-01A1 (J.B.).

Conflicts of interest

There are no conflicts of interest.

REFERENCES AND RECOMMENDED READING

Papers of particular interest, published within the annual period of review, have been highlighted as:

▪ of special interest

▪▪ of outstanding interest

REFERENCES

- 1. Lozano R, Naghavi M, Foreman K, et al. Global and regional mortality from 235 causes of death for 20 age groups in 1990 and 2010: a systematic analysis for the Global Burden of Disease Study. Lancet 2012; 380:2095–2128.

- 2. Rosen S, Fox MP. Retention in HIV care between testing and treatment in sub-Saharan Africa: a systematic review. PLoS Med 2011; 8:e1001056. doi:10.1371/journal.pmed.1001056.

- 3. Fauci AS. AIDS: let science inform policy. Science 2011; 333:13. doi:10.1126/science.1209751.

- 4. Meyer BD. Natural and quasi-experiments in economics. J Bus Econ Stat 1995; 13:151–161.

- 5. King G, Keohane RO, Verba S. The importance of research design. In: Rethinking social inquiry: diverse tools, shared standards. 2004; 181–192.

- 6. Angrist JD, Pischke JS. Mastering ’metrics: the path from cause to effect. Princeton University Press; 2014.

- 7. Angrist JD, Pischke JS. Mostly harmless econometrics: an empiricist's companion. Princeton University Press; 2009.

- 8. Thistlethwaite DL, Campbell DT. Regression-discontinuity analysis: an alternative to the ex post facto experiment. J Educ Psychol 1960; 51:309–317.

- 9. Lee DS, Lemieux T. Regression discontinuity designs in economics. J Econ Lit 2010; 48:281–355.

- 10▪▪. Bor J, Moscoe E, Mutevedzi P, et al. Regression discontinuity designs in epidemiology: causal inference without randomized trials. Epidemiology 2014; 25:729–737. This article provides a primer on regression discontinuity designs for a clinical and public health audience; demonstrates the wide applicability of regression discontinuity to nonlinear and survival models often used by epidemiologists; and presents an empirical regression discontinuity analysis of the mortality impact of immediate vs. deferred ART eligibility for patients at the 200-cell CD4 count threshold in rural South Africa.

- 11. Imbens GW, Angrist JD. Identification and estimation of local average treatment effects. Econometrica 1994; 62:467–475.

- 12. Hernán MA, Robins JM. Instruments for causal inference: an epidemiologist's dream? Epidemiology 2006; 17:360–372.

- 13. Hearst N, Newman TB, Hulley SB. Delayed effects of the military draft on mortality. A randomized natural experiment. N Engl J Med 1986; 314:620–624.

- 14. Hearst N, Buehler JW, Newman TB, Rutherford GW. The draft lottery and AIDS: evidence against increased intravenous drug use by Vietnam-era veterans. Am J Epidemiol 1991; 134:522–525.

- 15. Lawlor DA, Harbord RM, Sterne JAC, et al. Mendelian randomization: using genes as instruments for making causal inferences in epidemiology. Stat Med 2008; 27:1133–1163.

- 16. Shadish WR, Cook TD, Campbell DT. Experimental and quasi-experimental designs for generalized causal inference. Boston, MA: Houghton Mifflin; 2002.

- 17. Gunasekara FI, Richardson K, Carter K, Blakely T. Fixed effects analysis of repeated measures data. Int J Epidemiol 2014; 43:264–269.

- 18▪▪.Rockers PC, Røttingen J-A, Shemilt I, et al. Inclusion of quasi-experimental studies in systematic reviews of health systems research. Health Policy (New York) 2015; 119:511–521. [DOI] [PubMed] [Google Scholar]; This article presents a rationale for the inclusion of quasi-experimental studies in systematic reviews.

- 19.Baranov V, Bennett D, Kohler H-P. The Indirect Impact of Antiretroviral Therapy [Internet]. 2012. Report No.: 12–08. http://repository.upenn.edu/cgi/viewcontent.cgi?article=1038&context=psc_working_papers. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Wilson N. Antiretroviral Therapy and Demand for HIV Testing: Evidence from Zambia. 2011. Available at SSRN: http://ssrn.com/abstract=1982185 or http://dx.doi.org/10.2139/ssrn.1982185. [DOI] [PubMed] [Google Scholar]

- 21.Bendavid E, Holmes CB, Bhattacharya J, Miller G. HIV development assistance and adult mortality in Africa. JAMA Am Med Assoc 2012; 307:2060–2067.doi:10.1001/jama.2012.2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Bor J, Herbst A, Newell M, Bärnighausen T. Increases in adult life expectancy in rural South Africa: valuing the scale-up of HIV treatment. Science 2013; 339:961–965. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23▪.Bor J, Moscoe E, Bärnighausen T. When to start HIV treatment: evidence from a regression discontinuity study in South Africa. 2015; San Diego, CA: Population Association of America Annual Meeting, http://paa2015.princeton.edu/abstracts/151012. [Google Scholar]; This study presents a detailed application of a regression discontinuity design to the question of when to start ART, expanding substantially on [10].

- 24.Lucas AM, Wilson NL. Can Antiretroviral Therapy at Scale Improve the Health of the Targeted in Sub-Saharan Africa? Boston, MA: Population Association of America Annual Meeting; 2014; http://paa2014.princeton.edu/abstracts/140465 2014. [Google Scholar]

- 25.Papageorge N. Health, human capital, and domestic violence. Working Paper, 2015: https://nicholaswpapageorge.files.wordpress.com/2011/06/dv2.pdf. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Nelson RE, Nebeker JR, Hayden C, et al. Comparing adherence to two different HIV antiretroviral regimens: an instrumental variable analysis. AIDS Behav 2013; 17:160–167.doi:10.1007/s10461-012-0266-2. [DOI] [PubMed] [Google Scholar]

- 27.Baird S, Gong E, McIntosh C, Ozler B. The heterogeneous effects of HIV testing. J Health Econ Elsevier B V 2014; 37C:98–112.doi:10.1016/j.jhealeco.2014.06.003. [DOI] [PubMed] [Google Scholar]

- 28.Gong E. HIV testing & risky sexual behaviour. Econ J 2014; 125:32–60.doi:10.1111/ecoj.12125. [Google Scholar]

- 29.Thornton RL. The Demand for, and impact of, learning HIV status. Am Econ Rev 2008; 98:1829–1863.doi:10.1257/aer.98.5.1829. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Delavande A, Kohler H-P. The impact of HIV testing on subjective expectations and risky behavior in Malawi. Demography 2012; 49:1011–1036.doi:10.1007/s13524-012-0119-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Thirumurthy H, Zivin JG, Goldstein M. The economic impact of AIDS treatment: labor supply in western Kenya. J Hum Resour 2008; 43:511–552. [PMC free article] [PubMed] [Google Scholar]

- 32.Larson BA, Fox MP, Rosen S, et al. Early effects of antiretroviral therapy on work performance: preliminary results from a cohort study of Kenyan agricultural workers. Aids 2008; 22:421. [DOI] [PubMed] [Google Scholar]

- 33.Larson Ba, Fox MP, Rosen S, et al. Do the socioeconomic impacts of antiretroviral therapy vary by gender? A longitudinal study of Kenyan agricultural worker employment outcomes. BMC Public Health 2009; 9:240.doi:10.1186/1471-2458-9-240. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Habyarimana J, Mbakile B, Pop-Eleches C. The impact of HIV/AIDS and ARV treatment on worker absenteeism: implications for African firms. J Hum Resour 2010; 45:809–839.doi:10.1353/jhr.2010.0032. [Google Scholar]

- 35.Bor J, Tanser F, Newell M-L, Bärnighausen T. In a study of a population cohort in South Africa, HIV patients on antiretrovirals had nearly full recovery of employment. Health Aff 2012; 31:1459–1469.doi:10.1377/hlthaff.2012.0407. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Zivin JG, Thirumurthy H, Goldstein M. AIDS treatment and intrahousehold resource allocation: children's nutrition and schooling in Kenya. J Public Econ 2009; 93:1008–1015.doi:10.1016/j.jpubeco.2009.03.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Bor J, Tanser F, Newell M-L, et al. Economic spillover effects of ART on rural South African households. Washington DC, USA: IAEN 2012 (International AIDS and Economics Network Conference); 2012. [Google Scholar]

- 38.D’Adda G, Goldstein M, Zivin JG, et al. ARV treatment and time allocation to household tasks: evidence from Kenya. African Dev Rev 2009; 21:180–208.doi:10.1111/j.1467-8268.2009.00207.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Lucas AM, Wilson NL. Adult antiretroviral therapy and child health: evidence from scale-up in Zambia. Am Econ Rev 2013; 103:456–461.doi:10.1257/aer.103.3.456. [DOI] [PubMed] [Google Scholar]

- 40. Bechange S, Bunnell R, Awor A, et al. Two-year follow-up of sexual behavior among HIV-uninfected household members of adults taking antiretroviral therapy in Uganda: no evidence of disinhibition. AIDS Behav 2010; 14:816–823.

- 41. McLaren Z. The effect of access to AIDS treatment on employment outcomes in South Africa. In: Essays on labor market outcomes in South Africa [dissertation]. University of Michigan; 2010. Chapter 1, pp. 4–44.

- 42. Barofsky J, Sood N, Wagner Z. HIV treatment and economic outcomes in Malawi. Boston, MA: Population Association of America Annual Meeting; 2014.

- 43. Lakdawalla D, Sood N, Goldman D. HIV breakthroughs and risky sexual behavior. Q J Econ 2006; 121:1063–1102.

- 44. De Walque D, Kazianga H, Over M. Antiretroviral therapy perceived efficacy and risky sexual behaviors: evidence from Mozambique. Econ Dev Cult Change 2012; 61:97–126.

- 45▪. Friedman W. Antiretroviral drug access and behavior change. 2014. http://willafriedman.com/documents/ARVaccessandbehaviorchange.pdf. This article exploits the phased roll-out of ART in Kenya to assess community spillover effects on sexual disinhibition and pregnancy.

- 46. Bor J, McLaren Z, Tanser F, et al. HIV treatment as economic stimulus: community spillover effects of ART provision in rural South Africa. San Diego, CA: Population Association of America Annual Meeting; 2015.

- 47▪. Baranov V, Kohler H-P. The impact of AIDS treatment on savings and human capital investment in Malawi [Internet]. 2014. Report No.: 14–3. http://repository.upenn.edu/cgi/viewcontent.cgi?article=1054&context=psc_working_papers. This article exploits differential exposure to ART during its roll-out in Malawi and examines the impact of community exposure on savings and investment in education.

- 48. Wirth K, Bärnighausen T, Tanser F, Tchetgen E. Community-level antiretroviral therapy coverage and HIV acquisition in rural KwaZulu-Natal, South Africa: an instrumental variable analysis. Society for Epidemiologic Research, Denver, 16–19 June 2015.

- 49. Tanser F, Bärnighausen T, Grapsa E, et al. High coverage of ART associated with decline in risk of HIV acquisition in rural KwaZulu-Natal, South Africa. Science 2013; 339:966–971. doi:10.1126/science.1228160.

- 50. Zivin JG, Thirumurthy H, Goldstein M. AIDS treatment and intrahousehold resource allocation: children's nutrition and schooling in Kenya. J Public Econ 2009; 93:1008–1015.

- 51. Thirumurthy H, Zivin JG, Goldstein M. The economic impact of AIDS treatment. J Hum Resour 2008; 43:511.

- 52▪. De Walque D, Gertler PJ, Bautista-Arredondo S, et al. Using provider performance incentives to increase HIV testing and counseling services in Rwanda. J Health Econ 2014; 40C:1–9. This article uses a difference-in-differences strategy to evaluate a cluster-level intervention to improve HIV counseling and testing.

- 53. Goetz MB, Hoang T, Knapp H, et al. Central implementation strategies outperform local ones in improving HIV testing in Veterans Healthcare Administration facilities. J Gen Intern Med 2013; 28:1311–1317. doi:10.1007/s11606-013-2420-6.

- 54. McGovern M, Canning D, Tanser F, et al. A household food voucher increases consent to home-based HIV testing in rural KwaZulu-Natal. Conference on Retroviruses and Opportunistic Infections (CROI), Seattle, USA; 2015. http://www.croiconference.org/sessions/household-food-voucher-increases-consent-home-based-hiv-testing-rural-kwazulu-natal.

- 55. Klein PW, Messer LC, Myers ER, et al. Impact of a routine, opt-out HIV testing program on HIV testing and case detection in North Carolina sexually transmitted disease clinics. Sex Transm Dis 2014; 41:395–402. doi:10.1097/OLQ.0000000000000141.

- 56. Boruett P, Kagai D, Njogo S, et al. Facility-level intervention to improve attendance and adherence among patients on antiretroviral treatment in Kenya: a quasi-experimental study using time series analysis. BMC Health Serv Res 2013; 13:242. doi:10.1186/1472-6963-13-242.

- 57▪. De Neve J-W, Fink G, Subramanian SV, et al. Length of secondary schooling and risk of HIV infection in Botswana: evidence from a natural experiment. Lancet Glob Health 2015; 3:e470–e477. doi:10.1016/S2214-109X(15)00087-X. This article uses a quasi-experimental approach to assess the causal effect of education on HIV infection risk.

- 58. Burke M, Gong E, Jones K. Income shocks and HIV in Africa. Econ J 2014; 125:1157–1189.

- 59. Tsai A, Venkataramani A. The causal effect of education on HIV stigma in Uganda: evidence from a natural experiment. Soc Sci Med 2015; 142:37–46.

- 60. Bärnighausen T, Bor J, Wandira-Kazibwe S, Canning D. Correcting HIV prevalence estimates for survey nonparticipation using Heckman-type selection models. Epidemiology 2011; 22:27–35. doi:10.1097/EDE.0b013e3181ffa201.

- 61▪. McGovern ME, Bärnighausen T, Salomon JA, Canning D. Using interviewer random effects to remove selection bias from HIV prevalence estimates. BMC Med Res Methodol 2015; 15:8. doi:10.1186/1471-2288-15-8. This article presents a quasi-experimental approach to correcting selection bias in HIV prevalence estimates.

- 62▪. Moscoe E, Bor J, Bärnighausen T. Regression discontinuity designs are underutilized in medicine, epidemiology, and public health: a review of current and best practice. J Clin Epidemiol 2015; 68:122–133. doi:10.1016/j.jclinepi.2014.06.021. This article reviews the nascent regression discontinuity literature in clinical medicine, epidemiology and public health.

- 63. Langlois EV, Ranson MK, Bärnighausen T, et al. Advancing the field of health systems research synthesis. Syst Rev 2015; 4:90. doi:10.1186/s13643-015-0080-9.