Abstract

In this work, we explore finite-dimensional linear representations of nonlinear dynamical systems by restricting the Koopman operator to an invariant subspace spanned by specially chosen observable functions. The Koopman operator is an infinite-dimensional linear operator that evolves functions of the state of a dynamical system. Dominant terms in the Koopman expansion are typically computed using dynamic mode decomposition (DMD). DMD uses linear measurements of the state variables, and it has recently been shown that this may be too restrictive for nonlinear systems. Choosing the right nonlinear observable functions to form an invariant subspace where it is possible to obtain linear reduced-order models, especially those that are useful for control, is an open challenge. Here, we investigate the choice of observable functions for Koopman analysis that enable the use of optimal linear control techniques on nonlinear problems. First, to include a cost on the state of the system, as in linear quadratic regulator (LQR) control, it is helpful to include these states in the observable subspace, as in DMD. However, we find that this is only possible when there is a single isolated fixed point, as systems with multiple fixed points or more complicated attractors are not globally topologically conjugate to a finite-dimensional linear system, and cannot be represented by a finite-dimensional linear Koopman subspace that includes the state. We then present a data-driven strategy to identify relevant observable functions for Koopman analysis by leveraging a new algorithm to determine relevant terms in a dynamical system by ℓ1-regularized regression of the data in a nonlinear function space; we also show how this algorithm is related to DMD. Finally, we demonstrate the usefulness of nonlinear observable subspaces in the design of Koopman operator optimal control laws for fully nonlinear systems using techniques from linear optimal control.

Introduction

Koopman spectral analysis provides an operator-theoretic perspective to dynamical systems, which complements the more standard geometric [1] and probabilistic perspectives. In the early 1930s [2, 3], B. O. Koopman showed that nonlinear dynamical systems associated with Hamiltonian flows could be analyzed with an infinite dimensional linear operator on the Hilbert space of observable functions. For Hamiltonian fluids, the Koopman operator is unitary, meaning that the inner product of any two observable functions remains unchanged by the operator. Unitarity is a familiar concept, as the discrete Fourier transform (DFT) and the proper orthogonal decomposition (POD) [4] both provide unitary coordinate transformations. In the original paper [2], Koopman drew connections between the Koopman eigenvalue spectrum and conserved quantities, integrability, and ergodicity. Recently, it was shown that level sets of the Koopman eigenfunctions form invariant partitions of the state-space of a dynamical system [5]; in particular, eigenfunctions of the Koopman operator may be used to analyze the ergodic partition [6, 7]. Koopman analysis has also been recently shown to generalize the Hartman-Grobman theorem to the entire basin of attraction of a stable or unstable equilibrium point or periodic orbit [8]. For more information there are a number of excellent in-depth reviews on Koopman analysis by Mezić et al. [9, 10].

Koopman analysis has been at the focus of recent data-driven efforts to characterize complex systems, since the work of Mezić and Banaszuk [11] and Mezić [12]. There is considerable interest in obtaining finite-rank approximations to the linear Koopman operator that propagate the original nonlinear dynamics. This is especially promising for the potential control of nonlinear systems [13]. However, by introducing the Koopman operator, we trade nonlinear dynamics for infinite-dimensional linear dynamics, introducing new challenges. Finite-dimensional linear approximations of the Koopman operator may be useful to model the dynamics on an attractor, and those that explicitly advance the state may also be useful for control. Any set of Koopman eigenfunctions will form a Koopman-invariant subspace, resulting in an exact finite-dimensional linear model. Unfortunately, many dynamical systems do not admit a finite-dimensional Koopman-invariant subspace that also spans the state; in fact, this is only possible for systems with an isolated fixed point. It may be possible to recover the state from the Koopman eigenfunctions, but determining the eigenfunctions and inverting for the state may both be challenging.

Dynamic mode decomposition (DMD), introduced in the fluid dynamics community [14–17], provides a practical numerical framework for Koopman mode decomposition. DMD has been applied to a wide range of domains, including fluid dynamics [10, 18–21], neuroscience [22], robotics [23], epidemiology [24], and video processing [25–27]. In each of these domains, DMD has been useful for extracting spatial-temporal coherent structures from data; these structures, often called modes, oscillate at a fixed frequency and/or grow or decay at a fixed rate. By decomposing the state space into these spatial-temporal coherent modes, it is possible to infer physical mechanisms underlying observed high-dimensional data. Much of the success of DMD rests on its foundations in linear algebra, as the procedure is easy to implement [28], the results are highly interpretable, and the method lends itself naturally to extensions [17], such as compressed sensing [27, 29–31], disambiguating the effect of actuation [13], multi-resolution analysis [32], de-noising [33, 34], and streaming variants [35].

DMD implicitly uses linear observable functions, such as direct velocity field measurements from particle image velocimetry (PIV). In other words, the observable function is an identity map on the fluid flow state. This set of linear observables is too limited to describe the rich dynamics observed in fluids or other nonlinear systems. Recently, DMD has been extended to include a richer set of nonlinear observable functions, providing the ability to effectively analyze nonlinear systems [36]. Because of the extreme cost associated with this extended DMD for high-dimensional systems, a variation using the kernel trick from machine learning has been implemented to make the cost of extended DMD equivalent to traditional DMD, but retaining the benefit of nonlinear observables [37]. However, choosing the correct nonlinear observable functions to use for a given system, and how they will impact the performance of Koopman mode decomposition and reduction, is still an open problem. Presently, these observable functions are either determined using information about the right-hand side of the dynamics (i.e., knowing that the Navier-Stokes equations have quadratic nonlinearities, etc.) or by brute-force trial and error in a particular basis for Hilbert space (i.e., trying many different polynomial functions).

In this work, we explore the identification of observable functions that span a finite-dimensional subspace of Hilbert space which remains invariant under the Koopman operator (i.e., a Koopman-invariant subspace spanned by eigenfunctions of the Koopman operator). When this subspace includes the original states, we obtain a finite-dimensional linear dynamical system on this subspace that also advances the original state directly. We utilize a new algorithm, the sparse identification of nonlinear dynamics (SINDy) [38], to first identify the right-hand side dynamics of the nonlinear system. Next, we choose observable functions such that these dynamics are in the span. Finally, for certain dynamical systems with an isolated fixed point, we construct a finite-dimensional Koopman operator that also advances the state directly. For the examples presented, this procedure is closely related to the Carleman linearization [39–41], which has extensions to nonlinear control [42–44]. Afterward, it is possible to develop a nonlinear Koopman operator optimal control (KOOC) law, even for nonlinear fixed points, using techniques from linear optimal control theory.

Background on Koopman analysis

Consider a continuous-time dynamical system, given by:

$$\frac{d}{dt}x = f(x) \tag{1}$$

where x ∈ M is an n-dimensional state on a smooth manifold M. The vector field f is an element of the tangent bundle $TM$ of M, such that $f(x) \in T_x M$. Note that in many cases we dispense with manifolds and choose $M = \mathbb{R}^n$ with f a Lipschitz continuous function.

For a given time t, we may consider the flow map Ft: M → M, which maps the state x(t0) forward time t into the future to x(t0+t), according to:

$$F_t(x(t_0)) = x(t_0 + t) = x(t_0) + \int_{t_0}^{t_0 + t} f(x(\tau))\, d\tau \tag{2}$$

In particular, this induces a discrete-time dynamical system:

$$x_{k+1} = F_t(x_k) \tag{3}$$

where xk = x(kt). In general, discrete-time dynamical systems are more general than continuous time systems, but we choose to start with continuous time for illustrative purposes.

We also define a real-valued observable function $g: M \to \mathbb{R}$, which is an element of an infinite-dimensional Hilbert space. Typically, the Hilbert space is given by the Lebesgue square-integrable functions on M; other choices of a measure space are valid.

The Koopman operator $\mathcal{K}_t$ is an infinite-dimensional linear operator that acts on observable functions g as:

$$\mathcal{K}_t g = g \circ F_t \tag{4}$$

where ∘ is the composition operator, so that:

$$\mathcal{K}_t g(x_k) = g(F_t(x_k)) = g(x_{k+1}) \tag{5}$$

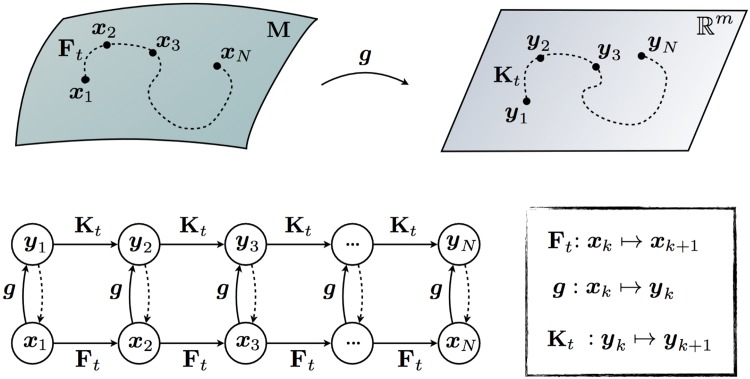

In other words, the Koopman operator defines an infinite-dimensional linear dynamical system that advances the observation of the state gk = g(xk) to the next timestep, as illustrated in Fig 1:

$$g(x_{k+1}) = \mathcal{K}_t g(x_k) \tag{6}$$

Note that this is true for any observable function g and for any point xk ∈ M.
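The linearity of the Koopman operator can be checked numerically even when the underlying map is nonlinear. The following is a minimal sketch, assuming a hypothetical two-dimensional map `flow_map` and two arbitrary observables `g1` and `g2`; none of these names or values come from the original work.

```python
import numpy as np

def flow_map(x):
    """Hypothetical nonlinear discrete-time map F_t, chosen only for illustration."""
    x1, x2 = x
    return np.array([0.9 * x1, 0.5 * x2 + x1**2])

def koopman(g):
    """Return the observable K_t g = g o F_t (composition with the flow map)."""
    return lambda x: g(flow_map(x))

# Two arbitrary (assumed) nonlinear observables and a linear combination of them
g1 = lambda x: x[0] * x[1]
g2 = lambda x: np.sin(x[1])
a, b = 2.0, -3.0
combo = lambda x: a * g1(x) + b * g2(x)

x_k = np.array([0.7, -1.2])
x_next = flow_map(x_k)

# Linearity: K(a*g1 + b*g2) = a*K(g1) + b*K(g2), although flow_map is nonlinear
lhs = koopman(combo)(x_k)
rhs = a * koopman(g1)(x_k) + b * koopman(g2)(x_k)

# Advancing the observation: (K g)(x_k) = g(x_{k+1})
advanced = koopman(g1)(x_k)
direct = g1(x_next)
print(lhs, rhs, advanced, direct)
```

The operator acts on functions rather than on states, which is why it remains linear regardless of the form of `flow_map`.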

Fig 1. Schematic illustrating the Koopman operator for nonlinear dynamical systems.

The dashed lines from yk → xk indicate that we would like to be able to recover the original state.

In the original paper by Koopman, Hamiltonian fluid systems with a positive density were investigated. In this case, the Koopman operator is unitary, and forms a one-parameter family of unitary transformations in Hilbert space. The Koopman operator is also known as the composition operator, which is formally the pull-back operator on the space of scalar observable functions [45]. The Koopman operator is the dual, or left-adjoint, of the Perron-Frobenius operator, or transfer operator, which is the push-forward operator on the space of probability density functions.

We may also describe the continuous-time version of the Koopman dynamical system in Eq (6) with the infinitesimal generator of the one-parameter family of transformations [45]:

$$\frac{d}{dt} g = \mathcal{K} g \tag{7}$$

The linear dynamical systems in Eqs (7) and (6) are analogous to the dynamical systems in Eqs (1) and (3), respectively. It is important to note that the original state x may be the observable, and the infinite-dimensional operator will advance this observable function. Again, for Hamiltonian systems, the infinitesimal generator is self-adjoint.

Koopman invariant subspaces and exact finite-dimensional models

As with any vector space, we may choose a basis for Hilbert space and represent our observable function g in this basis. For simplicity, let us consider basis observable functions y1(x), y2(x), etc., and let a given function g(x) be written in these coordinates as:

$$g(x) = \sum_{k=1}^{\infty} \alpha_k y_k(x) \tag{8}$$

A Koopman-invariant subspace is given by span{ys1, ys2, ⋯, ysm} if all functions g in this subspace,

$$g = \alpha_1 y_{s_1} + \alpha_2 y_{s_2} + \cdots + \alpha_m y_{s_m} \tag{9}$$

remain in this subspace after being acted on by the Koopman operator $\mathcal{K}$:

$$\mathcal{K} g = \beta_1 y_{s_1} + \beta_2 y_{s_2} + \cdots + \beta_m y_{s_m} \tag{10}$$

For functions in these invariant subspaces, it is possible to restrict the Koopman operator to this subspace, yielding a finite-dimensional linear operator K. K acts on a vector space $\mathbb{R}^m$, with the coordinates given by the values of $y_{s_k}(x)$. This induces a finite-dimensional linear system, as in Eqs (6) and (7). Koopman eigenfunctions φ, such that $\mathcal{K}\varphi = \lambda\varphi$, generate invariant subspaces; however, it may or may not be possible to invert these functions to recover the original state x.

For control, we may seek Koopman-invariant subspaces that include the original state variables x1, x2, ⋯, xn. The Koopman operator restricted to this subspace is finite-dimensional, linear, and it advances the original state dynamics, as well as the other observables in the subspace, as shown in Fig 1. These Koopman-invariant subspaces may be identified using data-driven methods, as discussed below. In the following sections, we will show that including the state in our observable subspace is rather restrictive, and it is not possible for the vast majority of nonlinear systems. In fact, it is impossible to determine a finite-dimensional Koopman-invariant subspace that includes the original state variables for any system with multiple fixed points or any more general attractors. This is because all finite-dimensional linear systems have a single fixed point, and cannot be topologically conjugate to a system with multiple fixed points. This does not, however, preclude the identification of Koopman-invariant subspaces spanned by Koopman eigenfunctions φ, which may provide useful intrinsic coordinates [46]. In fact, it is possible to establish topological conjugacy of the entire basin of attraction of a stable or unstable fixed point or periodic orbit with an associated linear system through the Koopman operator, as shown in [8, 10]. It may be possible to invert these coordinates to recover the states, although determining eigenfunctions and inverting them to obtain the state may both be challenging.

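For the original state variables x1, x2, ⋯, xn to be included in the Koopman-invariant subspace, the nonlinear right-hand side function f must also be in this subspace:

$$\frac{d}{dt}\begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix} = \begin{bmatrix} f_1(x_1, x_2, \ldots, x_n) \\ f_2(x_1, x_2, \ldots, x_n) \\ \vdots \\ f_n(x_1, x_2, \ldots, x_n) \end{bmatrix} \tag{11}$$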

The state variables x form the first n observable functions ys1 = x1, ys2 = x2, ⋯, ysn = xn, and the remaining m − n observables are nonlinear functions required to represent the terms in f. If it is possible to represent each term fk as a combination of observable functions in the subspace,

$$f_k(x) = c_{k,1} y_{s_1} + c_{k,2} y_{s_2} + \cdots + c_{k,m} y_{s_m} \tag{12}$$

then we may write the first n rows of the Koopman-induced dynamical system as:

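$$\frac{d}{dt}\begin{bmatrix} x_1 \\ \vdots \\ x_n \end{bmatrix} = \begin{bmatrix} c_{1,1} & \cdots & c_{1,m} \\ \vdots & \ddots & \vdots \\ c_{n,1} & \cdots & c_{n,m} \end{bmatrix} \begin{bmatrix} y_{s_1} \\ \vdots \\ y_{s_m} \end{bmatrix} \tag{13}$$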

In practice, the last m − n rows may be determined analytically, by successively computing $\frac{d}{dt} y_{s_k}$ for k > n and representing these derivatives in terms of other subspace observables. Knowing the dynamics f is essential to choose a relevant observable subspace. If the dynamics are known, observables may be derived analytically. Alternatively, a least-squares regression may be performed using data, as in the extended DMD [36].

Data-driven sparse identification of nonlinear observable functions

It is clear from Eqs (11)–(13) that the choice of relevant Koopman observable functions is closely related to the form of the nonlinearity in the dynamics. In the case that governing equations are unknown, data-driven strategies must be employed to determine useful observable functions. A recently developed technique allows for the identification of the nonlinear dynamics in Eq (11), purely from measurements of the system [38]. The so-called sparse identification of nonlinear dynamics (SINDy) algorithm uses sparse regression [47] in a nonlinear function space to determine the relevant terms in the dynamics. This may be thought of as a generalization of earlier methods that employ symbolic regression (i.e., genetic programming [48]) to identify dynamics [49, 50]; a similar method has been used to predict catastrophes in dynamical systems [51]. Thus, the SINDy algorithm is an equation-free method [52] to identify a dynamical system from data. This follows a growing trend to exploit sparsity in dynamics [53–55] and dynamical systems [56–58].

For simplicity in connecting the SINDy algorithm with dynamic mode decomposition (DMD), we consider discrete-time systems as in Eq (3), although the algorithm applies equally well to continuous-time systems. In the SINDy algorithm, measurements of the state x of a dynamical system are collected, and these measurements are augmented into a larger vector Θ(x) which contains candidate functions yck(x) for the right-hand side dynamics Ft(x) in Eq (3):

$$\Theta(x) = \begin{bmatrix} y_{c_1}(x) & y_{c_2}(x) & \cdots & y_{c_p}(x) \end{bmatrix}^T \tag{14}$$

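Often, we will choose the first n functions to be the original state variables, yck(x) = xk, so that the state x is contained in Θ(x). Then, for a sequence of snapshots $x_1, x_2, \ldots, x_{q+1}$, we write the following matrix system of equations:

$$\begin{bmatrix} x_2 & x_3 & \cdots & x_{q+1} \end{bmatrix} = \begin{bmatrix} \xi_1^T \\ \xi_2^T \\ \vdots \\ \xi_n^T \end{bmatrix} \begin{bmatrix} \Theta(x_1) & \Theta(x_2) & \cdots & \Theta(x_q) \end{bmatrix} \tag{15}$$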

This may be written in matrix short-hand as:

$$X' = \Xi^T \Theta(X) \tag{16}$$

The functions in Θ(x) are candidate terms in the right-hand side dynamics Ft, and they will also be candidate observable functions. The row vectors $\xi_k^T$ determine which nonlinear terms in Θ(x) are active in the k-th row of Ft; typically, ξk will be a sparse vector, since only a few terms are active in the right-hand side of many dynamical systems of interest. In this case, we may use sparse regression, such as the LASSO [47], to solve for each sparse row $\xi_k^T$. In this regression, there is an additional term that penalizes the ℓ1 norm (‖⋅‖1) of the regression parameters so that as many parameters are set to zero as possible. Afterward, the sparse matrix ΞT yields a nonlinear discrete-time model for Eq (3), obtained purely from data:

$$x_{k+1} = \Xi^T \Theta(x_k) \tag{17}$$

With the active terms in the nonlinear dynamics identified as the nonzero entries in the rows of ΞT, it is possible to include these functions in the Koopman subspace. Note that in the original SINDy algorithm, the transpose of Eq (15) was used so that the rows of Ξ become sparse column vectors, establishing a closer resemblance to sparse regression and compressed sensing formulations. Again, either discrete-time or continuous-time formulations may be used. After a reduced observable subspace has been identified, we may re-apply the SINDy algorithm:

$$\Theta_{\text{ref}}(X') = \Xi_{\text{aug}}^T \Theta_{\text{ref}}(X) \tag{18}$$

where Θref is a refined set of candidate observable functions that are active in Eq (17). The additional rows of Ξaug determine how these observable functions advance as a linear combination of other observable functions. This procedure may be iterated until the subspace converges. Also, the ℓ1 sparse regularization may be omitted in these regressions.
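The regression described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it uses sequentially thresholded least squares as a simple stand-in for the LASSO, a hypothetical quadratic candidate library, and a hypothetical test map with made-up coefficients. Snapshots are drawn at random rather than from a trajectory, purely to keep the regression well conditioned.

```python
import numpy as np

def library(X):
    """Assumed candidate library Theta(x) = [x1, x2, x1^2, x1*x2, x2^2]; one column per snapshot."""
    x1, x2 = X
    return np.vstack([x1, x2, x1**2, x1 * x2, x2**2])

def sparse_regression(Theta, X_next, threshold=0.05, n_iter=10):
    """Sequentially thresholded least squares for X_next ~ Xi^T Theta (stand-in for LASSO)."""
    Xi = np.linalg.lstsq(Theta.T, X_next.T, rcond=None)[0]  # shape (p, n)
    for _ in range(n_iter):
        small = np.abs(Xi) < threshold
        Xi[small] = 0.0                       # prune small coefficients
        for k in range(X_next.shape[0]):      # refit each state on its active terms
            active = ~small[:, k]
            if active.any():
                Xi[active, k] = np.linalg.lstsq(
                    Theta[active].T, X_next[k], rcond=None)[0]
    return Xi

def step(X):
    """Hypothetical discrete-time map used to generate data (coefficients are assumptions)."""
    x1, x2 = X
    return np.vstack([0.9 * x1, 0.5 * x2 + 0.3 * x1**2])

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(2, 200))   # snapshots x_k as columns
X_next = step(X)                            # snapshots x_{k+1}

Xi = sparse_regression(library(X), X_next)
print(Xi.T)  # rows are the sparse vectors xi_k^T
```

The nonzero entries in each row of `Xi.T` indicate which candidate functions are active, and these are the functions that would be added to the observable subspace.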

Connections to dynamic mode decomposition (DMD)

In the case that Θ(x) = x, the problem in Eq (16) reduces to the standard DMD problem:

$$X' = \Xi^T X \tag{19}$$

In the standard DMD algorithm, a solution Ξ is obtained that minimizes the sum-square error:

$$\| X' - \Xi^T X \|_F \tag{20}$$

where ‖⋅‖F is the Frobenius norm. This is generally obtained by computing the pseudo-inverse of X using the singular value decomposition (SVD).
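As a minimal sketch of this least-squares step, the snippet below recovers a linear map from snapshot pairs using the SVD-based pseudo-inverse. The matrix `A_true` and the random snapshot data are assumptions made only for illustration; `A_dmd` plays the role of ΞT.

```python
import numpy as np

# Hypothetical linear dynamics x_{k+1} = A_true x_k used to generate snapshot pairs
A_true = np.array([[0.9, 0.2],
                   [0.0, 0.5]])

rng = np.random.default_rng(1)
X = rng.standard_normal((2, 50))   # snapshots x_k as columns
X_next = A_true @ X                # snapshots x_{k+1} as columns

# Least-squares solution minimizing ||X' - A X||_F; np.linalg.pinv uses the SVD
A_dmd = X_next @ np.linalg.pinv(X)

# DMD eigenvalues are the eigenvalues of the fitted operator
eigvals = np.sort(np.linalg.eigvals(A_dmd).real)
print(A_dmd)
print(eigvals)
```

For high-dimensional data, the pseudo-inverse is typically computed from a truncated SVD rather than formed explicitly; the small example above skips that step.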

Systems with Koopman-invariant subspaces containing the state

Here, we construct a family of nonlinear dynamical systems where it is possible to find a Koopman-invariant subspace that also includes the original state variables as observable functions. These systems necessarily only have a single isolated fixed point, as there is no finite-dimensional linear system that can represent multiple fixed points or more general attractors. It is, however, possible to obtain linear representations of entire basins of attractions of certain fixed points using eigenfunction coordinates [8, 36], where it may be possible to invert to find the state. However, these are still not global descriptions, and finding these eigenfunctions and inverting to recover the state remains an open challenge for most systems. All of the examples below exhibit polynomial nonlinearities that give rise to polynomial slow or fast manifolds.

Continuous-time formulation

Consider a continuous-time dynamical system with a polynomial slow manifold, given by

$$\begin{aligned} \dot{x}_1 &= \mu x_1 \\ \dot{x}_2 &= \lambda\left(x_2 - P(x_1)\right) \end{aligned} \tag{21}$$

where P(x) is a polynomial function. If λ ≪ μ < 0, then the λ dynamics rapidly drive x2 − P(x1) to zero, so that x2 = P(x1). Once on this manifold, the slower μ dynamics become dominant. The manifold x2 = P(x1) is referred to as an asymptotically attracting slow manifold. This system has a single fixed point at the origin x1 = x2 = 0. We will show that there always exists a finite-dimensional linear system that is given by the closure of the Koopman operator on an observable subspace spanned by the states x1, x2 and the active polynomial terms in P(x1).

First, consider a single monomial term given by $P(x) = x^N$. For the dynamics in the right-hand side of Eq (21) to be in the span of our Koopman-invariant subspace, we must include the observable function $x_1^N$. Thus, we must augment the state with an observable function $x_1^N$, so that:

$$y = \begin{bmatrix} y_1 \\ y_2 \\ y_3 \end{bmatrix} = \begin{bmatrix} x_1 \\ x_2 \\ x_1^N \end{bmatrix} \tag{22}$$

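Now, the first two terms for $\dot{y}_1$ and $\dot{y}_2$ are linearly related to the entries of y. Finally, to determine $\dot{y}_3$, we need only apply the chain rule:

$$\frac{d}{dt} y_3 = \frac{d}{dt} x_1^N = N x_1^{N-1} \dot{x}_1 = N \mu x_1^N = N \mu y_3 \tag{23}$$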

This is closely related to Carleman linearization [39–41]. Thus, the system simplifies as:

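$$\frac{d}{dt}\begin{bmatrix} y_1 \\ y_2 \\ y_3 \end{bmatrix} = \begin{bmatrix} \mu & 0 & 0 \\ 0 & \lambda & -\lambda \\ 0 & 0 & N\mu \end{bmatrix} \begin{bmatrix} y_1 \\ y_2 \\ y_3 \end{bmatrix} \tag{24}$$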

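For more general polynomials, given by $P(x) = \sum_{k=1}^{N} a_k x^k$ (with no constant term, so that the origin remains a fixed point), we must include each of the monomial terms with nonzero coefficient. Taking $y = (x_1, x_2, x_1^2, \ldots, x_1^N)$ and applying Eq (23) to each monomial results in:

$$\frac{d}{dt}\begin{bmatrix} y_1 \\ y_2 \\ y_3 \\ \vdots \\ y_{N+1} \end{bmatrix} = \begin{bmatrix} \mu & 0 & 0 & \cdots & 0 \\ -\lambda a_1 & \lambda & -\lambda a_2 & \cdots & -\lambda a_N \\ 0 & 0 & 2\mu & \cdots & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & N\mu \end{bmatrix} \begin{bmatrix} y_1 \\ y_2 \\ y_3 \\ \vdots \\ y_{N+1} \end{bmatrix}$$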

This expression is finite-dimensional and linear, and it advances the original state x forward exactly, even though the governing dynamics are nonlinear.
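The exactness of the linear representation can be checked numerically. The sketch below, written under stated assumptions, takes the quadratic case P(x) = x² with μ = −0.05 and λ = −1, integrates the nonlinear system with a fourth-order Runge-Kutta scheme, and compares the result with the closed-form linear flow exp(Kt) y(0). The initial condition, step size, and helper names are hypothetical.

```python
import numpy as np

mu, lam = -0.05, -1.0

def f(x):
    """Nonlinear dynamics of Eq (21) with P(x) = x^2."""
    return np.array([mu * x[0], lam * (x[1] - x[0]**2)])

def rk4_step(x, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = f(x)
    k2 = f(x + 0.5 * dt * k1)
    k3 = f(x + 0.5 * dt * k2)
    k4 = f(x + dt * k3)
    return x + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6

# Koopman matrix on the observables y = (x1, x2, x1^2), i.e. Eq (24) with N = 2
K = np.array([[mu, 0.0, 0.0],
              [0.0, lam, -lam],
              [0.0, 0.0, 2 * mu]])

def linear_flow(y0, t):
    """exp(K t) y0 via eigendecomposition (valid here because K has distinct eigenvalues)."""
    w, V = np.linalg.eig(K)
    return (V @ np.diag(np.exp(w * t)) @ np.linalg.solve(V, y0)).real

x0 = np.array([1.5, -1.0])   # assumed initial condition
dt, T = 0.01, 10.0

x = x0.copy()
for _ in range(int(round(T / dt))):
    x = rk4_step(x, dt)

y0 = np.array([x0[0], x0[1], x0[0]**2])
y_T = linear_flow(y0, T)

err = np.max(np.abs(y_T[:2] - x))   # mismatch between linear and nonlinear states
print(err)
```

The remaining discrepancy is due to the time-stepping error of the nonlinear integration; the linear model itself introduces no approximation for this system.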

Continuous-time examples

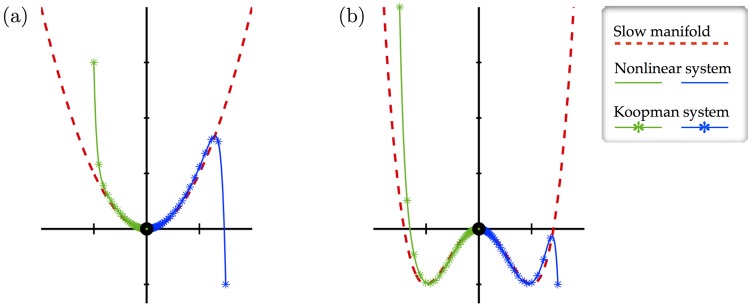

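Here, we consider two examples with slow manifolds, which are illustrated in Fig 2. The first system, with quadratic attracting manifold $x_2 = x_1^2$, is given by:

$$\frac{d}{dt}\begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} \mu x_1 \\ \lambda(x_2 - x_1^2) \end{bmatrix} \quad \Longrightarrow \quad \frac{d}{dt}\begin{bmatrix} y_1 \\ y_2 \\ y_3 \end{bmatrix} = \begin{bmatrix} \mu & 0 & 0 \\ 0 & \lambda & -\lambda \\ 0 & 0 & 2\mu \end{bmatrix} \begin{bmatrix} y_1 \\ y_2 \\ y_3 \end{bmatrix} \quad \text{for} \quad \begin{bmatrix} y_1 \\ y_2 \\ y_3 \end{bmatrix} = \begin{bmatrix} x_1 \\ x_2 \\ x_1^2 \end{bmatrix} \tag{25}$$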

and the second system, with quartic attracting manifold , is given by:

Fig 2. Illustration of two examples with a slow manifold.

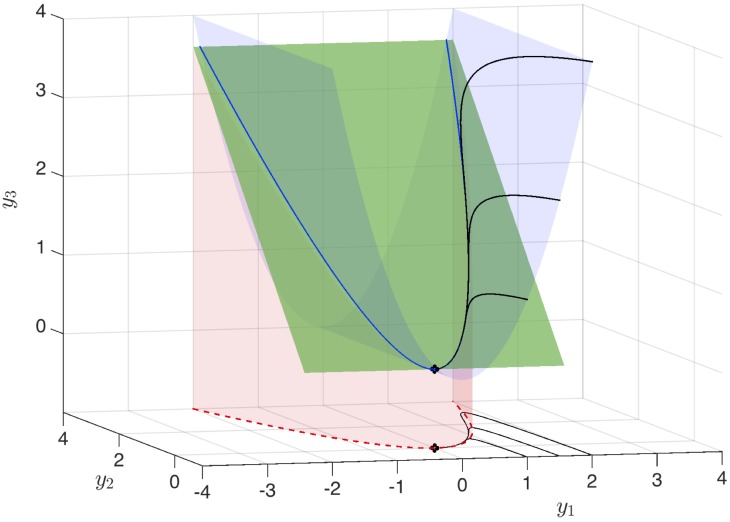

In both cases, μ = −0.05 and λ = −1.

To understand the embedding of a nonlinear dynamical system in a higher-dimensional observable subspace, in which the dynamics are linear, consider the system with quadratic attracting manifold from Eq (25). The full three-dimensional Koopman observable vector space is visualized in Fig 3. Trajectories that start on the invariant manifold $y_3 = y_1^2$, visualized by the blue surface, are constrained to stay on this manifold. There is a slow subspace, spanned by the eigenvectors corresponding to the slow eigenvalues μ and 2μ; this subspace is visualized by the green surface. Finally, there is the original asymptotically attracting manifold of the original system, $y_2 = y_1^2$, which is visualized as the red surface. The blue and red parabolic surfaces always intersect in a parabola that is inclined at a 45° angle in the y2-y3 direction. The green surface approaches this 45° inclination as the ratio of fast to slow dynamics becomes increasingly large. In the full three-dimensional Koopman observable space, the dynamics are given by a stable node, with trajectories rapidly attracting onto the green subspace and then slowly approaching the fixed point.

Fig 3. Visualization of three-dimensional linear Koopman system from Eq (25) along with projection of dynamics onto the x1-x2 plane.

The attracting slow manifold is shown in red, the constraint $y_3 = y_1^2$ is shown in blue, and the slow unstable subspace of Eq (25) is shown in green. Black trajectories of the linear Koopman system in y project onto trajectories of the full nonlinear system in x in the y1-y2 plane. Here, μ = −0.05 and λ = 1. Figure is reproduced with Code 1 in S1 Appendix.

Intrinsic coordinates defined by eigen-observables of the Koopman operator

The left eigenvectors of the Koopman operator yield Koopman eigenfunctions (i.e., eigenobservables). The Koopman eigenfunctions of Eq (25) corresponding to eigenvalues μ and λ are:

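$$\varphi_\mu(x) = x_1, \qquad \varphi_\lambda(x) = x_2 - b x_1^2 \quad \text{with} \quad b = \frac{\lambda}{\lambda - 2\mu} \tag{26}$$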

The constant b in φλ captures the fact that for a finite ratio λ/μ, the dynamics only shadow the asymptotically attracting slow manifold $x_2 = x_1^2$, but in fact follow neighboring parabolic trajectories. This is illustrated more clearly by the various surfaces in Fig 3 for different ratios λ/μ.
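The eigenfunction associated with λ can be recovered numerically from the left eigenvectors of the 3 × 3 Koopman matrix of Eq (25). The following is a small sketch assuming μ = −0.05 and λ = −1; the variable names and the normalization (unit coefficient on x2) are choices made for this illustration.

```python
import numpy as np

mu, lam = -0.05, -1.0

# Koopman matrix of Eq (25) on the observables y = (x1, x2, x1^2)
K = np.array([[mu, 0.0, 0.0],
              [0.0, lam, -lam],
              [0.0, 0.0, 2 * mu]])

# Left eigenvectors of K are the (right) eigenvectors of K^T
w, V = np.linalg.eig(K.T)
i = np.argmin(np.abs(w - lam))      # pick the eigenvalue lambda
xi_lam = (V[:, i] / V[1, i]).real   # scale so the coefficient of y2 = x2 equals one

# Constant appearing in phi_lambda(x) = x2 - b * x1^2
b = lam / (lam - 2 * mu)

def phi_lam(x):
    """Eigen-observable obtained by projecting y(x) onto the left eigenvector."""
    y = np.array([x[0], x[1], x[0]**2])
    return xi_lam @ y

print(xi_lam, b)
```

With this scaling, `xi_lam` has entries (0, 1, −b), so `phi_lam` reproduces the expression x2 − b x1² for the eigenfunction associated with λ.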

In this way, a set of intrinsic coordinates may be determined from the observable functions defined by the left eigenvectors of the Koopman operator on an invariant subspace. Explicitly,

$$\varphi_\alpha(x) = \xi_\alpha y(x), \quad \text{where} \quad \xi_\alpha K = \alpha \xi_\alpha \tag{27}$$

These eigen-observables define observable subspaces that remain invariant under the Koopman operator, even after coordinate transformations. As such, they may be regarded as intrinsic coordinates [46] on the Koopman-invariant subspace. As an example, consider the system from Eq (25), but written in a coordinate system that is rotated by 45°:

| (28) |

The original eigenfunctions, written in the new coordinate system, are:

It is easy to verify that these remain eigenfunctions:

In fact, in this new coordinate system, it is possible to write the Koopman subspace system:

| (29) |

Discrete-time formulation

A related formulation for discrete-time systems is given by:

| (30) |

This system will also converge asymptotically to a slow manifold given by x2 = P(x1) when |λ| ≪ |μ| and |λ| < 1. A similar argument can be made to that given in Eqs (23) and (25), but with $\mu^N$ replacing $N\mu$, since:

$$y_{3,k+1} = x_{1,k+1}^N = \left(\mu x_{1,k}\right)^N = \mu^N x_{1,k}^N = \mu^N y_{3,k} \tag{31}$$

Thus, for discrete-time systems, the update is given by:

| (32) |

Discrete-time example

The case of a polynomial slow manifold is inspired by a simple illustrative example from Tu et al. [17]:

| (33) |

In this case, there is a polynomial stable manifold . Thus, they suggest the following observable variables, which are intrinsic coordinates for the dynamics:

| (34) |

In our framework above, if the correct intrinsic variables were unknown, they could be discovered by writing the system as:

| (35) |

Finally, in this observable function coordinate system, the left eigenvectors are:

corresponding to the eigenvalues $\lambda_1 = \lambda$, $\lambda_2 = \lambda^2$, and $\lambda_3 = \mu$. These eigenvectors diagonalize the system and define the intrinsic coordinates.

Koopman operator optimal control

A long-held hope of Koopman operator theory is that it would provide insights into the control of nonlinear systems. Here, we present results of designing control laws using linear control theory on the truncated Koopman operator; these Koopman operator optimal controllers (KOOCs) then induce a nonlinear controller on the state-space that dramatically outperforms optimal control on the linearized fixed point.

This is only a brief introduction to the theory of Koopman optimal control, and there are numerous extensions that must be developed and explored. There are existing connections between DMD and control systems [13], and there are ongoing efforts to extend this to the Koopman operator framework. There are a number of systems where it is not clear how to use the Koopman linear operator for control, and these will be briefly outlined below. In addition, there are alternative nonlinear control methods related to Carleman linearization [42–44] that may be connected to Koopman operator control. Moreover, we have not yet proven the nonlinear optimality of these new controllers, but the numerical performance is striking.

Simple motivating example

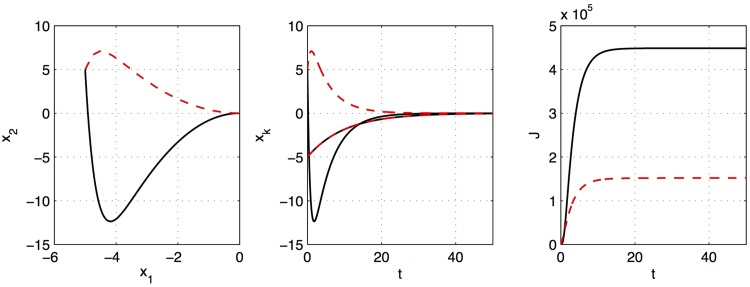

As a motivating example, consider the nonlinear system in Eq (25), but with the stability of the x2 direction reversed (i.e., λ = 1 instead of λ = −1), and modified to include actuation on the second state:

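$$\frac{d}{dt}\begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} \mu x_1 \\ \lambda(x_2 - x_1^2) \end{bmatrix} + \begin{bmatrix} 0 \\ 1 \end{bmatrix} u \tag{36}$$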

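with μ = −0.1 and λ = 1. Again, this may be put into a Koopman formalism as:

$$\frac{d}{dt}\begin{bmatrix} y_1 \\ y_2 \\ y_3 \end{bmatrix} = \begin{bmatrix} \mu & 0 & 0 \\ 0 & \lambda & -\lambda \\ 0 & 0 & 2\mu \end{bmatrix} \begin{bmatrix} y_1 \\ y_2 \\ y_3 \end{bmatrix} + \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix} u \quad \text{for} \quad \begin{bmatrix} y_1 \\ y_2 \\ y_3 \end{bmatrix} = \begin{bmatrix} x_1 \\ x_2 \\ x_1^2 \end{bmatrix} \tag{37}$$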

Now, let us assume that we have a quadratic cost function, as in the linear-quadratic-regulator (LQR) control framework:

$$J = \int_0^\infty \left( x^T Q x + u^T R u \right) dt \tag{38}$$

where Q weighs the cost of deviations of the state x from the origin and R weighs the cost of control expenditure. For now, we will consider the following Q and R for simplicity:

$$Q = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}, \qquad R = 1 \tag{39}$$

In this way, all state deviations and control expenditures are weighed equally.

For linear systems, such as the linearization of Eq (36), it is possible to derive the matrix C that results in the optimal control law u = −Cx; this control law is optimal in the sense that it achieves the minimal attainable cost function J. However, this controller will only be optimal for a small vicinity of the fixed point where linearization is valid. Outside this vicinity, when nonlinear terms become large, all guarantees of optimality are lost.

Instead of linearizing near the fixed point and computing the optimal LQR controller, here we use the Koopman linear system in Eq (37). We still have the same cost on the state x, so we use a modified weight matrix given by $\hat{Q} = \begin{bmatrix} Q & 0 \\ 0 & 0 \end{bmatrix}$ and $\hat{R} = R$, which places no weight on $y_3$. In this way, we may develop an optimal linear controller for the Koopman representation of our nonlinear system. In this case, the Koopman linear control law, given by $u = -\hat{C} y$, may be interpreted as a nonlinear control law on the original state x:

$$u = -\hat{C}_1 x_1 - \hat{C}_2 x_2 - \hat{C}_3 x_1^2 \tag{40}$$

The results of the standard LQR compared with this Koopman operator optimal controller are shown in Fig 4, and the Matlab code is provided in Code 2 in S1 Appendix. In this example, the KOOC achieves a cost of approximately 1/3 the cost of standard LQR.
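The comparison can be sketched in Python as follows. This is not the Matlab code from S1 Appendix; it is an independent, minimal illustration under stated assumptions: the initial condition (−5, 5), the horizon, and the step size are hypothetical, the Riccati equation is solved through the stable invariant subspace of the Hamiltonian matrix using NumPy only, and the helper names `lqr` and `simulate` are introduced here for illustration.

```python
import numpy as np

def lqr(A, B, Q, R):
    """Continuous-time LQR gain from the stable invariant subspace of the Hamiltonian matrix."""
    n = A.shape[0]
    Rinv = np.linalg.inv(R)
    H = np.block([[A, -B @ Rinv @ B.T], [-Q, -A.T]])
    w, V = np.linalg.eig(H)
    stable = V[:, w.real < 0]                       # n stable eigenvectors
    P = np.real(stable[n:] @ np.linalg.inv(stable[:n]))
    return Rinv @ B.T @ P

mu, lam = -0.1, 1.0
R = np.array([[1.0]])

# Standard LQR on the linearization of Eq (36) about the origin
A_lin = np.array([[mu, 0.0], [0.0, lam]])
B_lin = np.array([[0.0], [1.0]])
c_lin = lqr(A_lin, B_lin, np.eye(2), R)[0]

# LQR on the Koopman system of Eq (37), with zero weight on y3 = x1^2
A_koop = np.array([[mu, 0.0, 0.0], [0.0, lam, -lam], [0.0, 0.0, 2 * mu]])
B_koop = np.array([[0.0], [1.0], [0.0]])
Q_hat = np.diag([1.0, 1.0, 0.0])
c_koop = lqr(A_koop, B_koop, Q_hat, R)[0]

def simulate(control, x0, dt=0.005, T=50.0):
    """Integrate the nonlinear system of Eq (36) with RK4 and accumulate the cost J."""
    def rhs(x):
        return np.array([mu * x[0], lam * (x[1] - x[0]**2) + control(x)])
    x, J = np.array(x0, dtype=float), 0.0
    for _ in range(int(round(T / dt))):
        u = control(x)
        J += (x @ x + u**2) * dt                    # Q = I, R = 1
        k1 = rhs(x)
        k2 = rhs(x + 0.5 * dt * k1)
        k3 = rhs(x + 0.5 * dt * k2)
        k4 = rhs(x + dt * k3)
        x = x + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return J

x0 = [-5.0, 5.0]  # assumed initial condition
J_lqr = simulate(lambda x: -(c_lin @ x), x0)
J_koop = simulate(lambda x: -(c_koop @ np.array([x[0], x[1], x[0]**2])), x0)
print(J_lqr, J_koop)
```

The third entry of `c_koop` multiplies x1², which is what turns the linear Koopman feedback into a nonlinear control law on the original state. The cost ratio obtained from this sketch depends on the chosen initial condition.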

Fig 4. Illustration of LQR control around a nonlinear fixed point using standard linearization (black) and truncated Koopman (red).

The Koopman optimal controller achieves a much smaller overall cost, J, approximately 1/3 of the cost of the standard LQR solution.

Limitations of Koopman operator optimal control

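In the current framework, there are a number of limitations to the approach advocated above. We will illustrate this on a simple variation on the example above, in which μ is unstable instead of λ and the control input affects the first state x1 instead of x2:

$$\frac{d}{dt}\begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} \mu x_1 \\ \lambda(x_2 - x_1^2) \end{bmatrix} + \begin{bmatrix} 1 \\ 0 \end{bmatrix} u \tag{41}$$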

with μ = 0.1 and λ = −1. In this example, it is necessary to move the actuation to the first state x1; otherwise this state will be unstable and uncontrollable. What is more troubling is that the subspace spanned by x1, x2, and $x_1^2$ is no longer Koopman-invariant, since the expression for the time derivative of $y_3 = x_1^2$ is more complicated now:

$$\frac{d}{dt} y_3 = 2 x_1 \dot{x}_1 = 2\mu x_1^2 + 2 x_1 u = 2\mu y_3 + 2 x_1 u \tag{42}$$

Thus, there is a troublesome extra nonlinear term x1 u in the expression for $\dot{y}_3$. However, this may not be too large of a problem, considering that we do not weight excursions of y3 in the cost function. A larger problem is that the state y3 has a positive eigenvalue 2μ, which is uncontrollable. Many off-the-shelf packages, such as Matlab, will fail to return an LQR controller for such uncontrollable unstable systems.
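The loss of controllability can be made concrete with a rank test. The sketch below assumes that the bilinear term 2 x1 u is simply dropped, so that the input enters the truncated Koopman system only through the first state; the helper `pbh_rank` is a name introduced here for the Popov-Belevitch-Hautus test.

```python
import numpy as np

mu, lam = 0.1, -1.0

# Truncated Koopman system for Eq (41); the bilinear term 2*x1*u is neglected (assumption)
A = np.array([[mu, 0.0, 0.0],
              [0.0, lam, -lam],
              [0.0, 0.0, 2 * mu]])
B = np.array([[1.0], [0.0], [0.0]])

# Kalman controllability matrix [B, AB, A^2 B]
ctrb = np.hstack([B, A @ B, A @ A @ B])
rank_ctrb = np.linalg.matrix_rank(ctrb)

def pbh_rank(eigenvalue):
    """Rank of [A - s I, B]; a rank below 3 means the mode s is uncontrollable."""
    return np.linalg.matrix_rank(np.hstack([A - eigenvalue * np.eye(3), B]))

print(rank_ctrb)          # rank of the controllability matrix
print(pbh_rank(mu))       # mode associated with x1
print(pbh_rank(2 * mu))   # mode associated with y3 = x1^2
```

The unstable mode 2μ fails the rank test, which is why a standard LQR solver cannot return a stabilizing gain for this truncated linear model.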

Discussion

In this paper, we have investigated a special choice of Koopman observable functions that form a finite-dimensional subspace of Hilbert space that contains the state in its span and remains invariant under the Koopman operator. Any finite collection of Koopman eigenfunctions (i.e., eigen-observables) forms such a Koopman-invariant subspace. These Koopman eigenfunctions may be extremely useful, providing intrinsic coordinates for a given nonlinear dynamical system. In addition, given such a Koopman-invariant subspace, the Koopman operator restricted to this subspace yields a finite-dimensional linear dynamical system to evolve these observables forward in time. However, it is not always clear how to identify relevant Koopman eigenfunctions, either from data or governing equations, or how to invert these coordinates to obtain information about the progression of the underlying state variables. Moreover, in many cases with control, the control objectives are defined directly on the state; this is the case in linear quadratic regulator (LQR) control, for example. Thus, there is still interest in defining a Koopman invariant subspace that includes the original state variables as observable functions.

We demonstrate that for a large class of nonlinear systems with a single isolated fixed point, it is possible to obtain such a Koopman-invariant subspace that includes the original state variables. We show that the eigen-observables that define this Koopman-invariant subspace may be solved for as left-eigenvectors of the Koopman operator restricted to the subspace in the chosen coordinate system. Finally, we demonstrate that the finite-dimensional linear Koopman operator defined on this Koopman-invariant subspace may be used to develop Koopman operator optimal control (KOOC) laws using techniques from linear control theory. In particular, we develop an LQR controller using the Koopman linear system, but retaining the cost function defined on the original state. The resulting control law may be thought of as inducing a nonlinear control law on the state variable, and it dramatically outperforms standard LQR computed on a linearization, reducing the cost expended by a factor of three. This is extremely promising and may result in significantly improved control laws for systems with normal form expansions near fixed points [1]. These expansions are commonly used in astrophysical problems to compute orbits around fixed points [59]; for example, the James Webb Space Telescope will orbit the Sun-Earth L2 Lagrange point [60].

We also present a data-driven technique to identify the relevant Koopman observable functions, leveraging a recent technique that identifies nonlinear dynamical systems in a nonlinear function space using sparse regression; this algorithm is known as the sparse identification of nonlinear dynamics (SINDy). Since the SINDy algorithm employs regression to determine an approximate dynamical system from data, it is closely related to the DMD algorithm. Such nonlinear dynamic regression algorithms have significant potential to add further understanding in a broad range of applications, including fluid dynamics, neuroscience, robotics, epidemiology, and video processing, where DMD has already been successfully demonstrated.
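The regression idea can be sketched in a few lines. The example below is an assumption-laden illustration rather than the algorithm as published: it uses sequentially thresholded least squares as a simple stand-in for sparse regression, a small hypothetical polynomial library, and synthetic derivative data from the illustrative system $\dot{x}_1 = \mu x_1$, $\dot{x}_2 = \lambda(x_2 - x_1^2)$ with a small deterministic perturbation in place of measurement noise. If the library were restricted to the linear terms and the thresholding step were skipped, the fit would reduce to an ordinary linear regression of the kind that underlies DMD.

```python
import math

MU, LAM = -0.1, -1.0  # illustrative parameter values (assumed)


def library(x1, x2):
    """Candidate functions: [x1, x2, x1^2, x1*x2, x2^2]."""
    return [x1, x2, x1 * x1, x1 * x2, x2 * x2]


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def least_squares(Theta, dx, active):
    """Least-squares fit on the active columns via the normal equations."""
    A = [[sum(row[i] * row[j] for row in Theta) for j in active]
         for i in active]
    b = [sum(row[i] * d for row, d in zip(Theta, dx)) for i in active]
    full = [0.0] * len(Theta[0])
    for i, c in zip(active, solve(A, b)):
        full[i] = c
    return full


def stls(Theta, dx, threshold=0.05, iterations=5):
    """Sequentially thresholded least squares: fit, prune, refit."""
    active = list(range(len(Theta[0])))
    xi = least_squares(Theta, dx, active)
    for _ in range(iterations):
        active = [i for i in active if abs(xi[i]) >= threshold]
        if not active:
            return [0.0] * len(Theta[0])
        xi = least_squares(Theta, dx, active)
    return xi


# Synthetic data: states on a grid, derivatives from the model plus a small
# deterministic perturbation standing in for noise.
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
samples = [(a, b) for a in grid for b in grid]
Theta = [library(a, b) for a, b in samples]
noise = [1e-3 * math.sin(7.0 * k) for k in range(len(samples))]
dx1 = [MU * a + n for (a, b), n in zip(samples, noise)]
dx2 = [LAM * (b - a * a) + n for (a, b), n in zip(samples, noise)]

xi_x1 = stls(Theta, dx1)  # expected support: x1 only
xi_x2 = stls(Theta, dx2)  # expected support: x2 and x1^2
print(xi_x1)
print(xi_x2)
```

The nonzero entries of `xi_x2` single out $x_2$ and $x_1^2$, which suggests $x_1^2$ as the nonlinear observable to add to the state when building a candidate Koopman-invariant subspace for this example.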

As is often the case with interesting problems in mathematics, a deeper understanding of one problem opens up a host of other open questions. For example, a complete classification of nonlinear systems that admit Koopman-invariant subspaces including the state variables as observables remains an open and interesting problem. It is, however, clear that no system with multiple fixed points, periodic orbits, or more complex attractors can admit such a finite-dimensional Koopman-invariant subspace containing the state variables explicitly as observables. In these cases, another open problem is how to choose observable coordinates so that a finite-rank truncation of the linear Koopman dynamics yields useful results, not just for reconstruction of existing data, but for future state prediction and control. Finally, more effort must go into understanding whether or not Koopman operator optimal control laws are optimal in the sense that they minimize the cost function across all possible nonlinear control laws.

Much of the interest surrounding Koopman analysis and DMD has been centered around the promise of obtaining finite-dimensional linear expressions for nonlinear dynamics. In fact, any set of Koopman eigenfunctions spans an invariant subspace, where it is possible to obtain an exact and closed finite-dimensional truncation, although finding these nonlinear Koopman eigen-observable functions is challenging. Moreover, Koopman-invariant subspaces may or may not provide enough information to propagate the underlying state, which is useful for evaluating cost functions in optimal control laws. Koopman eigenfunctions provide a wealth of information about the original system, including a characterization of invariant sets such as stable and unstable manifolds. However, the eigenfunctions may not have simple closed-form representations and may instead need to be approximated from data. There are methods that identify almost-invariant sets and coherent structures [61, 62] using set-oriented methods [63]. Related Ulam-Galerkin methods have been used to approximate eigenvalues and eigenfunctions of the Perron-Frobenius operator [64].

To address these challenges, finite-dimensional linear approximations of the Koopman operator from data have been widely explored, and they are valuable in many instances, especially for extracting dynamics on modal coherent structures. However, we have shown that it is quite rare for a dynamical system to admit a finite-dimensional Koopman-invariant subspace that includes the state variables explicitly, so that exact linear models to propagate the state dynamics exist only for systems with a single isolated fixed point. This implies that approximate truncation of linear Koopman models for nonlinear phenomena with multiple fixed points or more general attractors should be used with care for future-state prediction, especially for off-attractor transients, as well as for the design of control laws. There is no free lunch with Koopman analysis of nonlinear systems, as we trade finite-dimensional nonlinear dynamics for infinite-dimensional linear dynamics, with an entirely new host of challenges.

Supporting Information

(PDF)

Acknowledgments

The authors would like to thank Clancy Rowley for insightful and constructive comments on an early draft of this paper, especially his observation that the state can often be recovered from the intrinsic eigenfunction coordinates. We also thank Igor Mezić for making us aware of Carleman linearization and the extremely interesting literature on operator theoretic control of nonlinear systems. SLB acknowledges support from the AFOSR Center of Excellence in Nature Inspired Flight Technologies and Ideas (FA9550-14-1-0398). BWB acknowledges support from the Washington Research Foundation. BWB and SLB acknowledge support from the U.S. Air Force Research Labs (FA8651-16-1-0003). JLP thanks Bill and Melinda Gates for their active support of the Institute of Disease Modeling and their sponsorship through the Global Good Fund. JNK acknowledges support from the U.S. Air Force Office of Scientific Research (FA9550-09-0174).

Data Availability

All relevant data are within the paper, and may be generated using the Matlab codes in the appendix.

Funding Statement

SLB and BWB received funding from University of Washington Start-Up Grants. JLP received support through the Institute of Disease Modeling, which is supported by Bill and Melinda Gates, along with the Global Good Fund. JNK received support from the US Air Force Office of Scientific Research (FA9550-09-0174). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Holmes P, Guckenheimer J. Nonlinear oscillations, dynamical systems, and bifurcations of vector fields. vol. 42 of Applied Mathematical Sciences. Berlin, Heidelberg: Springer-Verlag; 1983.

- 2. Koopman BO. Hamiltonian Systems and Transformation in Hilbert Space. Proceedings of the National Academy of Sciences. 1931;17(5):315–318. doi:10.1073/pnas.17.5.315

- 3. Koopman B, Neumann Jv. Dynamical systems of continuous spectra. Proceedings of the National Academy of Sciences. 1932;18(3):255. doi:10.1073/pnas.18.3.255

- 4. Holmes PJ, Lumley JL, Berkooz G, Rowley CW. Turbulence, coherent structures, dynamical systems and symmetry. 2nd ed. Cambridge Monographs in Mechanics. Cambridge, England: Cambridge University Press; 2012.

- 5. Budišić M, Mezić I. Geometry of the ergodic quotient reveals coherent structures in flows. Physica D: Nonlinear Phenomena. 2012;241(15):1255–1269. doi:10.1016/j.physd.2012.04.006

- 6. Mezić I, Wiggins S. A method for visualization of invariant sets of dynamical systems based on the ergodic partition. Chaos: An Interdisciplinary Journal of Nonlinear Science. 1999;9(1):213–218. doi:10.1063/1.166399

- 7. Budišić M, Mezić I. An approximate parametrization of the ergodic partition using time averaged observables. In: Decision and Control, 2009 held jointly with the 2009 28th Chinese Control Conference. CDC/CCC 2009. Proceedings of the 48th IEEE Conference on. IEEE; 2009. p. 3162–3168.

- 8. Lan Y, Mezić I. Linearization in the large of nonlinear systems and Koopman operator spectrum. Physica D: Nonlinear Phenomena. 2013;242(1):42–53. doi:10.1016/j.physd.2012.08.017

- 9. Budišić M, Mohr R, Mezić I. Applied Koopmanism. Chaos: An Interdisciplinary Journal of Nonlinear Science. 2012;22(4):047510. doi:10.1063/1.4772195

- 10. Mezić I. Analysis of fluid flows via spectral properties of the Koopman operator. Annual Review of Fluid Mechanics. 2013;45:357–378. doi:10.1146/annurev-fluid-011212-140652

- 11. Mezić I, Banaszuk A. Comparison of systems with complex behavior. Physica D: Nonlinear Phenomena. 2004;197(1):101–133.

- 12. Mezić I. Spectral properties of dynamical systems, model reduction and decompositions. Nonlinear Dynamics. 2005;41(1–3):309–325.

- 13. Proctor JL, Brunton SL, Kutz JN. Dynamic mode decomposition with control; 2015. To appear in the SIAM Journal on Applied Dynamical Systems. Available: arXiv:1409.6358.

- 14. Schmid PJ, Sesterhenn J. Dynamic mode decomposition of numerical and experimental data. In: 61st Annual Meeting of the APS Division of Fluid Dynamics. American Physical Society; 2008.

- 15. Rowley CW, Mezić I, Bagheri S, Schlatter P, Henningson DS. Spectral analysis of nonlinear flows. Journal of Fluid Mechanics. 2009;645:115–127. doi:10.1017/S0022112009992059

- 16. Schmid PJ. Dynamic Mode Decomposition of Numerical and Experimental Data. Journal of Fluid Mechanics. 2010;656:5–28. doi:10.1017/S0022112010001217

- 17. Tu JH, Rowley CW, Luchtenburg DM, Brunton SL, Kutz JN. On dynamic mode decomposition: theory and applications. Journal of Computational Dynamics. 2014;1(2):391–421. doi:10.3934/jcd.2014.1.391

- 18. Schmid PJ. Application of the dynamic mode decomposition to experimental data. Experiments in Fluids. 2011;50:1123–1130. doi:10.1007/s00348-010-0911-3

- 19. Grilli M, Schmid PJ, Hickel S, Adams NA. Analysis of Unsteady Behaviour in Shockwave Turbulent Boundary Layer Interaction. Journal of Fluid Mechanics. 2012;700:16–28. doi:10.1017/jfm.2012.37

- 20. Bagheri S. Koopman-mode decomposition of the cylinder wake. Journal of Fluid Mechanics. 2013;726:596–623. doi:10.1017/jfm.2013.249

- 21. Brunton SL, Noack BR. Closed-loop turbulence control: Progress and challenges. Applied Mechanics Reviews. 2015;67(050801):1–48.

- 22. Brunton BW, Johnson LA, Ojemann JG, Kutz JN. Extracting spatial-temporal coherent patterns in large-scale neural recordings using dynamic mode decomposition. Journal of Neuroscience Methods. 2016;258:1–15. doi:10.1016/j.jneumeth.2015.10.010

- 23. Berger E, Sastuba M, Vogt D, Jung B, Amor HB. Estimation of perturbations in robotic behavior using dynamic mode decomposition. Journal of Advanced Robotics. 2015;29(5):331–343. doi:10.1080/01691864.2014.981292

- 24. Proctor J, Eckhoff P. Discovering dynamic patterns from infectious disease data using dynamic mode decomposition. International Health. 2015;7:139–145. doi:10.1093/inthealth/ihv009

- 25. Grosek J, Kutz JN. Dynamic mode decomposition for real-time background/foreground separation in video; 2014. Preprint. Available: arXiv:1404.7592.

- 26. Kutz JN, Grosek J, Brunton SL. Dynamic Mode Decomposition for Robust PCA with Applications to Foreground/Background Subtraction in Video Streams and Multi-Resolution Analysis. In: Bouwmans T, editor. CRC Handbook on Robust Low-Rank and Sparse Matrix Decomposition: Applications in Image and Video Processing; 2015.

- 27. Erichson NB, Brunton SL, Kutz JN. Compressed Dynamic Mode Decomposition for Real-Time Object Detection; 2015. Preprint. Available: arXiv:1512.04205.

- 28. Belson BA, Tu JH, Rowley CW. Algorithm 945: modred—A Parallelized Model Reduction Library. ACM Transactions on Mathematical Software. 2014;40(4):30:1–30:23. doi:10.1145/2616912

- 29. Jovanović MR, Schmid PJ, Nichols JW. Sparsity-promoting dynamic mode decomposition. Physics of Fluids. 2014;26(2):024103. doi:10.1063/1.4863670

- 30. Brunton SL, Proctor JL, Tu JH, Kutz JN. Compressive sampling and dynamic mode decomposition; 2015. To appear in the Journal of Computational Dynamics. Available: arXiv:1312.5186.

- 31. Gueniat F, Mathelin L, Pastur L. A dynamic mode decomposition approach for large and arbitrarily sampled systems. Physics of Fluids. 2015;27(2):025113. doi:10.1063/1.4908073

- 32. Kutz JN, Fu X, Brunton SL. Multi-Resolution Dynamic Mode Decomposition; 2016. Preprint. Available: arXiv:1506.00564.

- 33. Dawson STM, Hemati MS, Williams MO, Rowley CW. Characterizing and correcting for the effect of sensor noise in the dynamic mode decomposition; 2015. Preprint. Available: arXiv:1507.02264.

- 34. Hemati MS, Rowley CW. De-Biasing the Dynamic Mode Decomposition for Applied Koopman Spectral Analysis; 2015. Preprint. Available: arXiv:1502.03854.

- 35. Hemati MS, Williams MO, Rowley CW. Dynamic mode decomposition for large and streaming datasets. Physics of Fluids. 2014;26(11):111701. doi:10.1063/1.4901016

- 36. Williams MO, Kevrekidis IG, Rowley CW. A data-driven approximation of the Koopman operator: extending dynamic mode decomposition. Journal of Nonlinear Science. 2015;25(6):1307–1346. doi:10.1007/s00332-015-9258-5

- 37. Williams MO, Rowley CW, Kevrekidis IG. A Kernel Approach to Data-Driven Koopman Spectral Analysis; 2014. Preprint. Available: arXiv:1411.2260.

- 38. Brunton SL, Proctor JL, Kutz JN. Discovering governing equations from data: Sparse identification of nonlinear dynamical systems; 2015. Preprint. Available: arXiv:1509.03580.

- 39. Carleman T. Application de la théorie des équations intégrales linéaires aux systèmes d’équations différentielles non linéaires. Acta Mathematica. 1932;59(1):63–87. doi:10.1007/BF02546499

- 40. Steeb WH, Wilhelm F. Non-linear autonomous systems of differential equations and Carleman linearization procedure. Journal of Mathematical Analysis and Applications. 1980;77(2):601–611. doi:10.1016/0022-247X(80)90250-4

- 41. Kowalski K, Steeb WH. Nonlinear dynamical systems and Carleman linearization. World Scientific; 1991.

- 42. Brockett RW. Volterra series and geometric control theory. Automatica. 1976;12(2):167–176. doi:10.1016/0005-1098(76)90080-7

- 43. Banks S. Infinite-dimensional Carleman linearization, the Lie series and optimal control of non-linear partial differential equations. International Journal of Systems Science. 1992;23(5):663–675. doi:10.1080/00207729208949241

- 44. Svoronos S, Papageorgiou D, Tsiligiannis C. Discretization of nonlinear control systems via the Carleman linearization. Chemical Engineering Science. 1994;49(19):3263–3267. doi:10.1016/0009-2509(94)00141-3

- 45. Abraham R, Marsden JE, Ratiu T. Manifolds, Tensor Analysis, and Applications. vol. 75 of Applied Mathematical Sciences. Springer-Verlag; 1988.

- 46. Williams MO, Rowley CW, Mezić I, Kevrekidis IG. Data fusion via intrinsic dynamic variables: An application of data-driven Koopman spectral analysis. Europhysics Letters. 2015;109(4):40007. doi:10.1209/0295-5075/109/40007

- 47. Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society B. 1996;58(1):267–288.

- 48. Koza JR, Bennett FH III, Stiffelman O. Genetic programming as a Darwinian invention machine. In: Genetic Programming. Springer; 1999. p. 93–108.

- 49. Bongard J, Lipson H. Automated reverse engineering of nonlinear dynamical systems. Proceedings of the National Academy of Sciences. 2007;104(24):9943–9948. doi:10.1073/pnas.0609476104

- 50. Schmidt M, Lipson H. Distilling free-form natural laws from experimental data. Science. 2009;324(5923):81–85. doi:10.1126/science.1165893

- 51. Wang WX, Yang R, Lai YC, Kovanis V, Grebogi C. Predicting Catastrophes in Nonlinear Dynamical Systems by Compressive Sensing. Physical Review Letters. 2011;106:154101–1–154101–4. doi:10.1103/PhysRevLett.106.154101

- 52. Kevrekidis IG, Gear CW, Hyman JM, Kevrekidis PG, Runborg O, Theodoropoulos C. Equation-Free, Coarse-Grained Multiscale Computation: Enabling Microscopic Simulators to Perform System-Level Analysis. Communications in Mathematical Sciences. 2003;1(4):715–762. doi:10.4310/CMS.2003.v1.n4.a5

- 53. Ozoliņš V, Lai R, Caflisch R, Osher S. Compressed modes for variational problems in mathematics and physics. Proceedings of the National Academy of Sciences. 2013;110(46):18368–18373. doi:10.1073/pnas.1318679110

- 54. Schaeffer H, Caflisch R, Hauck CD, Osher S. Sparse dynamics for partial differential equations. Proceedings of the National Academy of Sciences. 2013;110(17):6634–6639. doi:10.1073/pnas.1302752110

- 55. Mackey A, Schaeffer H, Osher S. On the Compressive Spectral Method. Multiscale Modeling & Simulation. 2014;12(4):1800–1827. doi:10.1137/140965909

- 56. Bai Z, Wimalajeewa T, Berger Z, Wang G, Glauser M, Varshney PK. Low-Dimensional Approach for Reconstruction of Airfoil Data via Compressive Sensing. AIAA Journal. 2014;53(4):920–933. doi:10.2514/1.J053287

- 57. Proctor JL, Brunton SL, Brunton BW, Kutz JN. Exploiting Sparsity and Equation-Free Architectures in Complex Systems. The European Physical Journal Special Topics. 2014;223(13):2665–2684. doi:10.1140/epjst/e2014-02285-8

- 58. Brunton SL, Tu JH, Bright I, Kutz JN. Compressive sensing and low-rank libraries for classification of bifurcation regimes in nonlinear dynamical systems. SIAM Journal on Applied Dynamical Systems. 2014;13(4):1716–1732. doi:10.1137/130949282

- 59. Koon WS, Lo MW, Marsden JE, Ross SD. Dynamical systems, the three-body problem and space mission design. In: International Conference on Differential Equations. World Scientific; 2000. p. 1167–1181.

- 60. Gardner JP, Mather JC, Clampin M, Doyon R, Greenhouse MA, Hammel HB, et al. The James Webb space telescope. Space Science Reviews. 2006;123(4):485–606. doi:10.1007/s11214-006-8315-7

- 61. Froyland G. Statistically optimal almost-invariant sets. Physica D: Nonlinear Phenomena. 2005;200(3):205–219. doi:10.1016/j.physd.2004.11.008

- 62. Froyland G, Padberg K. Almost-invariant sets and invariant manifolds—Connecting probabilistic and geometric descriptions of coherent structures in flows. Physica D. 2009;238:1507–1523. doi:10.1016/j.physd.2009.03.002

- 63. Dellnitz M, Froyland G, Junge O. The algorithms behind GAIO—Set oriented numerical methods for dynamical systems. In: Ergodic theory, analysis, and efficient simulation of dynamical systems. Springer; 2001. p. 145–174.

- 64. Froyland G, Gottwald GA, Hammerlindl A. A computational method to extract macroscopic variables and their dynamics in multiscale systems. SIAM Journal on Applied Dynamical Systems. 2014;13(4):1816–1846. doi:10.1137/130943637
