Abstract

Coreference resolution is a fundamental and challenging task in natural language processing. Resolving coreference successfully can have a significant positive effect on downstream natural language processing tasks, such as information extraction and question answering. The importance of coreference resolution for biomedical text analysis applications has increasingly been acknowledged. One of the difficulties in coreference resolution stems from the fact that distinct types of coreference (e.g., anaphora, appositive) are expressed with a variety of lexical and syntactic means (e.g., personal pronouns, definite noun phrases), and that resolution of each combination often requires a different approach. In the biomedical domain, it is common for coreference annotation and resolution efforts to focus on specific subcategories of coreference deemed important for the downstream task. In the current work, we aim to address some of these concerns regarding coreference resolution in biomedical text. We propose a general, modular framework underpinned by a smorgasbord architecture (Bio-SCoRes), which incorporates a variety of coreference types and their mentions, and allows fine-grained specification of resolution strategies for distinct coreference type-mention type pairs. For development and evaluation, we used a corpus of structured drug labels annotated with fine-grained coreference information. In addition, we evaluated our approach on two other corpora (i2b2/VA discharge summaries and the GENIA protein coreference dataset) to investigate its generality and ease of adaptation to other biomedical text types. Our results demonstrate the usefulness of our novel smorgasbord architecture. The specific pipelines based on the architecture successfully link coreferential mention pairs, although recognizing full mention clusters proves more challenging.
The corpus of structured drug labels (SPL) as well as the components of Bio-SCoRes and some of the pipelines based on it are publicly available at https://github.com/kilicogluh/Bio-SCoRes. We believe that Bio-SCoRes can serve as a strong and extensible baseline system for coreference resolution of biomedical text.

Introduction

Coreference is defined as the relation between linguistic expressions that are referring to the same real-world entity [1]. Coreference resolution is the task of recognizing these expressions in text and linking or chaining them. It is one of the fundamental tasks in natural language processing and can be critical for many downstream tasks, such as relation extraction, automatic summarization, textual entailment, and question answering. Coreference is generally trivial for humans to resolve and it occurs frequently in all types of text. For instance, consider the simple example below [2]:

(1) [John]X is [a linguist]X. [People]Y are nervous around [John]X, because [he]X always corrects [their]Y grammar.

The mentions with the same subscripts corefer. Two major types of coreference relations are often distinguished: identity and appositive. The coreference relations between John and he and between People and their are identity relations; in other words, the expressions refer to the same entities in the real world. Both relations are signaled with pronouns (personal and possessive, respectively) and are instances of anaphora, since the coreferential mentions (anaphors) point back to previously mentioned items (antecedents) in text. On the other hand, the relation between John and a linguist is an attribute relation (appositive). The attribute relation in this instance is indicated by an indefinite noun phrase (a linguist) in a copular construction. This type of copular coreference is sometimes referred to as predicate nominative [3]. In appositive relations, the coreferential mention is called the attribute and the referent the head [2]. Coreference can be represented as a set of pairwise relations or as chains. In the example above, three pairwise relations ({John, a linguist}, {John, he}, {People, their}) and two chains ({John, a linguist, he} and {People, their}) can be distinguished.

Coreference is common in all types of biomedical text, as well. Consider the example below, taken from a drug label in DailyMed [4]:

(2) Since amiodarone is a substrate for [CYP3A and CYP2C8]X, drugs/substances that inhibit [these isoenzymes]X may decrease the metabolism ….

In this example, the anaphor is a demonstrative noun phrase (these isoenzymes) and its antecedents are CYP3A and CYP2C8, constituting an instance of anaphora. This anaphora instance also exemplifies a set-membership relation, since CYP3A and CYP2C8 are members of the isoenzyme set. Resolving this coreference instance would allow us to capture the following drug interactions mentioned in the sentence:

Inhibitors of CYP3A-POTENTIATE-Amiodarone

Inhibitors of CYP2C8-POTENTIATE-Amiodarone
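As a minimal illustration of why resolving the set-membership anaphor matters, the Python sketch below substitutes each member antecedent for the anaphor, producing one interaction per member. The helper name expand_relation, the string template for the subject, and the dictionary representation of resolved coreference are all hypothetical; this is not the relation extraction component used in this work.

```python
from typing import Dict, List


def expand_relation(subject_template: str, mention: str, predicate: str,
                    obj: str, coreference: Dict[str, List[str]]) -> List[str]:
    """Produce one SUBJECT-PREDICATE-OBJECT string per referent of `mention`.

    `coreference` (hypothetical format) maps a resolved mention to its referents;
    for a set-membership anaphor this is the list of member antecedents.
    A mention with no entry is kept unchanged.
    """
    referents = coreference.get(mention, [mention])
    return [f"{subject_template.format(r)}-{predicate}-{obj}" for r in referents]


# Example (2): "these isoenzymes" resolves to both CYP3A and CYP2C8.
coreference = {"these isoenzymes": ["CYP3A", "CYP2C8"]}
for relation in expand_relation("Inhibitors of {}", "these isoenzymes",
                                "POTENTIATE", "Amiodarone", coreference):
    print(relation)
```

Without the coreference entry, the same call would yield only the uninformative "Inhibitors of these isoenzymes-POTENTIATE-Amiodarone".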

Although research on coreference resolution in computational linguistics dates back to at least the 1970s (e.g., Hobbs [5]), it remains a difficult problem, far from being solved. This can be attributed to several factors:

Coreference is a complex, discourse-level phenomenon, and its resolution needs to take into account the entire text.

There are several distinct subtypes of coreference, such as anaphora, cataphora, and bridging. Each can be expressed using a variety of lexical/syntactic means, as the examples above show, and each combination may require significantly different processing.

All levels of linguistic information, from morphosyntactic to semantic and discourse-level, contribute to coreference resolution and this requires high-quality natural language processing tools that can address these levels of information accurately.

Corpus annotation for coreference has been notoriously difficult, partly due to the cognitive burden it places on annotators and partly due to terminological confusion [6], often leading to low annotation consistency [2]. As a consequence, several annotation schemes have been developed that address some aspects of coreference but not others. For example, the pioneering MUC7 dataset [7] considered only identity relations. In the more recent OntoNotes corpus [8], predicate nominatives are not annotated, while explicit appositive constructions, which have similar semantic consequences, are (e.g., John, a linguist is annotated, while John is a linguist is not).

The evaluation of coreference resolution systems has been controversial. Several evaluation metrics have been proposed [9–12], their results sometimes varying significantly. Pradhan et al. [2] have argued that, in the absence of a specific application, an objective measure of coreference resolution performance is difficult to establish.

Despite these challenges, recent years have seen important advances in this area of research. In the general domain, OntoNotes [8] has emerged as the standard corpus and annotation scheme, and the CoNLL shared tasks based on this corpus have provided the platform for evaluation [2, 13]. New evaluation metrics have also been proposed [14].

In biomedical natural language processing (bioNLP), research on coreference resolution is relatively recent. BioNLP is an applied discipline, and this is reflected in the largely pragmatic manner in which the community approaches coreference resolution. It is generally considered a supporting task for more critical applications, such as event extraction or clinical text processing. Most of the early work focused on coreference resolution of specific entity types in biomedical literature, such as bio-entities (chemicals, genes, cells, etc.) [15] or proteins [16, 17]. Corpora have been made available, such as the GENIA protein coreference dataset, which was used for the BioNLP 2011 supporting shared task on coreference resolution [18]. Coreference in clinical narratives has also been studied [1], and an i2b2/VA shared task on coreference resolution has been organized [19]. There have been attempts to apply existing general domain coreference resolution tools (e.g., [20]) to clinical text and biomedical literature; however, the reported results have been generally poor [21, 22]. This suggests that biomedical coreference resolution may be a more challenging problem, and that semantic constraints, which are less utilized in the general domain, can provide a useful basis for it.

In this paper, we focus on biomedical coreference resolution. Our goal has been to develop a modular, flexible coreference resolution framework that can accommodate a wide range of coreference types and expressions while maintaining generality and extensibility. Towards this goal, we propose a smorgasbord architecture based on the notion of resolution strategies. Each strategy addresses a coreference type/mention type pair (e.g., anaphora expressed with definite noun phrases) and attempts to resolve instances of this specific combination. A strategy consists of a set of mechanisms for filtering mentions and their candidate referents, for measuring compatibility between a mention and a candidate referent, and for determining the best referent among a set of compatible candidates. The system incorporates a variety of methods for each of these steps, and we formulate coreference resolution as the task of selecting the appropriate methods for each step and combining them to reach the desired performance on a given corpus or for a downstream task, analogous to selecting dishes from a smorgasbord. The core of the framework is designed to be corpus-agnostic: additional methods can be plugged in to address corpus-specific concerns, and pre- and post-processing steps can be used to tailor input and output for specific tasks.
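The following Python sketch shows one way the four mechanisms of a strategy (mention filter, candidate filter, compatibility measures, and referent selection) could be composed. It is a simplified illustration, not the Bio-SCoRes implementation: the Mention fields, the particular filters and scorers, the one-sentence window, and the example mentions are all hypothetical choices made for the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Mention:
    text: str
    mention_type: str  # e.g. "definite_np", "personal_pronoun"; "np" = plain noun phrase
    start: int         # character offset in the document
    sentence: int      # sentence index
    number: str        # "sg" or "pl"
    sem_type: str      # e.g. "drug", "drug_class"


Scorer = Callable[[Mention, Mention], float]
Selector = Callable[[List[Tuple[float, Mention]]], Optional[Mention]]


@dataclass
class Strategy:
    """Hypothetical strategy for one coreference type / mention type pair."""
    coref_type: str
    mention_filter: Callable[[Mention], bool]
    candidate_filter: Callable[[Mention, Mention], bool]
    scorers: List[Scorer]
    selector: Selector

    def resolve(self, mention: Mention, candidates: List[Mention]) -> Optional[Mention]:
        if not self.mention_filter(mention):
            return None  # this strategy does not handle this mention type
        compatible = []
        for cand in candidates:
            if not self.candidate_filter(mention, cand):
                continue
            scores = [score(mention, cand) for score in self.scorers]
            if all(s > 0 for s in scores):  # compatible on every measure
                compatible.append((sum(scores), cand))
        return self.selector(compatible)


def preceding_within(window: int) -> Callable[[Mention, Mention], bool]:
    """Keep candidates that precede the mention by at most `window` sentences."""
    def keep(m: Mention, c: Mention) -> bool:
        return c.start < m.start and m.sentence - c.sentence <= window
    return keep


def number_agreement(m: Mention, c: Mention) -> float:
    return 1.0 if m.number == c.number else 0.0


def semantic_match(m: Mention, c: Mention) -> float:
    return 1.0 if m.sem_type == c.sem_type else 0.0


def best_then_closest(pairs: List[Tuple[float, Mention]]) -> Optional[Mention]:
    """Highest total score wins; ties go to the closest (latest-starting) candidate."""
    if not pairs:
        return None
    return max(pairs, key=lambda p: (p[0], p[1].start))[1]


definite_np_anaphora = Strategy(
    coref_type="anaphora",
    mention_filter=lambda m: m.mention_type == "definite_np",
    candidate_filter=preceding_within(window=1),
    scorers=[number_agreement, semantic_match],
    selector=best_then_closest,
)

anaphor = Mention("the drug", "definite_np", 120, 1, "sg", "drug")
candidates = [
    Mention("Lopressor", "np", 10, 0, "sg", "drug"),
    Mention("antacids", "np", 60, 0, "pl", "drug_class"),
]
print(definite_np_anaphora.resolve(anaphor, candidates).text)  # Lopressor
```

Swapping any one component (for example, a wider window for cataphora, or a different selector) yields a different strategy without touching the others, which is the sense in which methods are picked from a smorgasbord.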

The work reported here extends and consolidates our previous work on coreference resolution in consumer health questions [23] and drug labels [24]. To aid and inform system development, we annotated a corpus of structured drug labels (SPLs) using a fine-grained coreference annotation scheme and a pairwise relation representation. The corpus focuses on drug/substance coreference, due to our interest in drug information extraction, specifically drug-drug interactions. To show the generality of our approach, we evaluated the system on two other biomedical corpora annotated for coreference: the GENIA protein coreference dataset [17] and the i2b2/VA clinical coreference dataset [19], the former using pairwise relations and the latter using coreference chains (clusters). Our results show that our framework, in its current state, can address coreference in different types of biomedical text to varying degrees. The smorgasbord architecture, with its incorporated resolution mechanisms, provides the means for a strong, robust baseline, while also allowing new mechanisms to be added. While the system outperforms the state of the art on biomedical literature, we find that there is room for improvement for coreference resolution in clinical reports. On SPLs, for which no comparable system exists, the system performs significantly better than the baseline.

To avoid terminological confusion, it should be noted that coreferring expressions (e.g., anaphor and antecedent) are sometimes collectively called markables [7], while other work distinguishes between the referring mention and the referent; for example, in the ACE corpus [25], the referring mentions are simply called mentions, and the referents are called entities. In this work, we generally make the distinction between the referring mention and the referent, and use specific terms, such as anaphor or antecedent, when possible. When this is not relevant, we use the terms mention and referent. We also refer to the specific coreference type under discussion (e.g., anaphora), when relevant, rather than using the generic term, coreference.

Related Work

In the general English domain, early coreference resolution systems often focused on pronominal coreference using rule-based approaches [5, 26, 27]. These approaches relied on syntactic structure as well as discourse constraints, such as those predicted by Centering Theory [28], which investigates the interaction of local coherence with the choice of linguistic expressions. With the availability of annotated corpora, such as MUC7 [7] and ACE [25], these approaches were mostly supplanted by supervised learning approaches [29–31]. Joint coreference resolution of all mentions in a document has also been explored [3]. Somewhat surprisingly, in recent years, systems based on deterministic rules have been shown to provide superior performance in coreference resolution tasks. Haghighi and Klein [32] proposed an algorithm that modularized syntactic, semantic, and discourse constraints on coreference and learned syntactic and semantic compatibility rules from a large, unlabeled corpus. Syntactic constraints included the recognition of structures such as appositive constructions, as well as the i-within-i filter, which prevents a parent noun phrase in a parse tree from being coreferent with any of its children. Semantic compatibility of head words and proper names was mined from Wikipedia and newswire texts. In a similar vein, Lee et al. [20] combined global information with simple but precise features in a sieve architecture. In the first stage of the architecture, mention detection is performed with high recall. The second stage applies precision-ranked coreference sieves (essentially deterministic models for exact string match, proper head noun match, etc.) and uses global information through an entity-centric clustering model. They achieved the best performance in the CoNLL 2011 shared task on unrestricted coreference resolution [13], a task based on the coreference annotations in the OntoNotes corpus [8].
Context-dependent semantics/discourse, coordinate noun phrases, and enumerations are cited as shortcomings of the system. The sieve architecture was also adopted by some of the most successful systems in the CoNLL 2012 shared task on multilingual coreference resolution [2], and it has been made part of the Stanford CoreNLP toolkit [33]. In addition to these studies that focus on end-to-end coreference resolution, there is also a rich body of research on more specific aspects of coreference resolution, such as recognizing non-referential mentions (e.g., pleonastic it) [34], anaphoricity detection (i.e., determining whether a mention is anaphoric or not) [30, 35], distinguishing coreferential vs. singleton mentions [36], and resolution of discourse-deictic pronouns [37].
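To make the sieve idea concrete, here is a deliberately simplified Python sketch of multi-pass, entity-centric merging: every mention starts as a singleton cluster, and sieves are applied in a fixed order from most to least precise, each comparing whole clusters rather than individual mentions. The two sieves (case-insensitive exact match and a last-token head match) and the example mentions are crude, hypothetical stand-ins chosen for illustration; this is neither the Stanford CoreNLP implementation nor its API.

```python
from typing import Callable, List

Cluster = List[str]
Sieve = Callable[[Cluster, Cluster], bool]


def exact_match(a: Cluster, b: Cluster) -> bool:
    """Merge if any mention strings match exactly (ignoring case)."""
    return any(x.lower() == y.lower() for x in a for y in b)


def head_match(a: Cluster, b: Cluster) -> bool:
    """Merge if any heads match; the head is crudely taken as the last token."""
    heads_a = {x.split()[-1].lower() for x in a}
    return any(y.split()[-1].lower() in heads_a for y in b)


def run_sieves(mentions: List[str], sieves: List[Sieve]) -> List[Cluster]:
    clusters = [[m] for m in mentions]      # every mention starts as a singleton
    for sieve in sieves:                    # most precise sieve first
        merged = True
        while merged:                       # repeat until this sieve finds no merge
            merged = False
            for i in range(len(clusters)):
                for j in range(i + 1, len(clusters)):
                    if sieve(clusters[i], clusters[j]):
                        clusters[i].extend(clusters.pop(j))  # j > i, so i stays valid
                        merged = True
                        break
                if merged:
                    break
    return clusters


mentions = ["Lopressor tablets", "lopressor tablets", "these tablets", "clonidine"]
print(run_sieves(mentions, [exact_match, head_match]))
# [['Lopressor tablets', 'lopressor tablets', 'these tablets'], ['clonidine']]
```

Because later sieves see the clusters built by earlier ones, a low-precision sieve can draw on every mention already gathered into a cluster by a high-precision one.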

In the biomedical domain, early work focused on coreference resolution for biomedical literature. Castaño and Pustejovsky [15] described a rule-based system for resolution of pronominal and sortal (nominal) anaphora involving bio-entities (e.g., amino acids, proteins, cells). Their system makes heavy use of semantic type information from UMLS [38]. A resolution context is defined for each anaphor (e.g., the context for antecedents of reflexive pronouns is taken to be the current sentence), and all antecedent candidates in the context are scored based on their compatibility (e.g., number and person agreement, semantic preference) with the anaphor. The candidate with the highest cumulative salience score above a threshold is taken to be the antecedent. They annotated a corpus of 46 MEDLINE citations with anaphora information, on which their system achieved an F1 score of 73.8%. Kim et al. [16] augmented a system that extracts general biological interaction information with pronominal and nominal anaphora resolution. For pronominal anaphora, they adopted Centering Theory and exploited syntactic parallelism of the anaphor and its antecedent. Their nominal anaphora resolution is similar to Castaño and Pustejovsky's [15] salience measure-based approach; however, Kim et al. pay special attention to certain syntactic structures, such as coordinate noun phrases and appositive constructions. They reported precision of 75% and recall of 54% on a small MEDLINE dataset, yielding an F1 score of 63%. In contrast to these studies, Gasperin and Briscoe [39] focused on coreference in full-text biological articles and provided an annotated corpus of five articles on the molecular biology of Drosophila melanogaster. They excluded pronominal anaphora relations from the annotation due to their sparsity in biomedical literature, a fact confirmed by other studies [40].
The Drosophila melanogaster corpus is also unique in that three types of associative coreference are annotated: homology, related biotype (e.g., a gene and its product, a protein), and set-membership relations. The first two can be considered specific to the biological domain. Their resolution approach uses a Bayesian probabilistic model and achieves an F1 score of 57% for coreference relations, but it is less successful in identifying biotype and set-membership relations. In the BioNLP 2011 shared task on event extraction [18], a supporting task on protein coreference resolution was proposed, based on the observation that coreference resolution is one of the major difficulties in event extraction. A set of MEDLINE abstracts on transcription factors from the GENIA corpus [41] was annotated for protein coreference; both pronominal and nominal anaphora were considered. Six systems participated in the task, and the best system, an adaptation of a coreference resolution system developed for newswire text, achieved an F1 score of 34.05% [42], indicating a significant degradation in performance due to the change of domain. A similar trend was noted recently by Choi et al. [22], who applied the state-of-the-art Stanford CoreNLP deterministic coreference resolution system [20] to this dataset and reported extremely poor results, partly attributed to the lack of semantic knowledge. Nguyen et al. [17] achieved an F1 score of 62.4% on the same dataset by integrating domain-specific semantic information, confirming the more prominent role of semantic knowledge in biomedical coreference. Coreference information has also been integrated into event/relation extraction pipelines, and varying degrees of improvement due to coreference resolution have been reported [43–46].
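A salience-based scheme of the kind described above for Castaño and Pustejovsky [15] can be sketched as follows: each candidate in the resolution context accumulates points from several compatibility factors, and the top-scoring candidate is kept only if its total exceeds a threshold. The factor functions, their weights, the semantic type labels, and the threshold below are hypothetical values chosen for illustration, not those of the original system.

```python
from typing import Callable, Dict, List, Optional

Mention = Dict[str, str]
Factor = Callable[[Mention, Mention], int]


def resolve_by_salience(anaphor: Mention, candidates: List[Mention],
                        factors: List[Factor], threshold: int) -> Optional[Mention]:
    """Return the candidate with the highest cumulative score above `threshold`."""
    best, best_score = None, threshold
    for cand in candidates:
        score = sum(factor(anaphor, cand) for factor in factors)
        if score > best_score:  # must beat the threshold and every earlier candidate
            best, best_score = cand, score
    return best


def number_agreement(a: Mention, c: Mention) -> int:
    return 1 if a["number"] == c["number"] else -1   # hypothetical weights


def semantic_preference(a: Mention, c: Mention) -> int:
    return 2 if a["sem_type"] == c["sem_type"] else 0  # hypothetical weights


anaphor = {"text": "these isoenzymes", "number": "pl", "sem_type": "enzyme"}
candidates = [
    {"text": "amiodarone", "number": "sg", "sem_type": "drug"},
    {"text": "CYP3A and CYP2C8", "number": "pl", "sem_type": "enzyme"},
]
best = resolve_by_salience(anaphor, candidates,
                           [number_agreement, semantic_preference], threshold=2)
print(best["text"] if best else None)  # CYP3A and CYP2C8
```

Raising the threshold to 3 in this example leaves the anaphor unresolved, which is how such a threshold trades recall for precision.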

Coreference annotation in biomedical literature has mostly focused on annotating the mention pairs that corefer, rather than mention chains, which are the norm in the general domain. This is mostly driven by pragmatic concerns: often, coreference resolution is conceived as a task that supports other, more salient tasks, such as event extraction. In such cases, it is less important to identify the full mention cluster accurately than to identify one of the referents or, in the case of set-membership coreference, the member referents belonging to the set. Once the referents are identified, the mentions can mostly be substituted with them, owing to the transitive closure of the pairwise decisions, and the more salient tasks can be tackled in the usual manner. In addition, tasks that depend on coreference resolution often involve semantic components and perform concept recognition/normalization, using tools such as MetaMap [47]. By addressing synonymy, such tools can aid in forming mention clusters, if needed. One exception to this pragmatic approach in studies focusing on literature is the coreference annotation in the CRAFT corpus [48], in which all mentions in full-text articles are annotated to form coreference chains. In addition, in contrast to other corpora, all semantic types are considered. The CRAFT annotation guidelines mostly follow those of the OntoNotes corpus, although there are differences, such as CRAFT's annotation of generics (such as bare plurals) as mentions. We are not aware of any coreference resolution study using this corpus. Another annotation effort (HANAPIN) focused on the biochemistry literature [49]. This corpus consists of 20 full-text, open-access articles. In addition to the nominal and pronominal anaphora generally taken into account, abbreviations/acronyms as well as numerical coreference were annotated. A wider range of entity types, including drug effects and diseases, was considered.

Research on coreference resolution for clinical narratives began in earnest once coreference annotations became available in the i2b2/VA corpus [19] and the ODIE corpus [50], both of which were used for training and evaluation in the 2011 i2b2/VA shared task [19]. Coreference annotation in these corpora is similar to that in the OntoNotes corpus, in that mention clusters are annotated. The annotated entity types include problem, person, test, treatment, and anatomical site. Supervised learning, rule-based, and hybrid approaches were explored by the systems participating in the shared task; the best performance on the i2b2/VA corpus was reported by a supervised learning method incorporating document structure and world knowledge [51]. On the other hand, a rule-based system performed best on the ODIE corpus [52], while hybrid approaches using a sieve architecture performed well overall [53, 54]. Attempts to use existing general domain coreference tools for the task yielded poor results [21].

Coreference resolution has also been attempted in the so-called gray literature genre. For example, Segura-Bedmar et al. [55] presented a corpus of 49 drug interaction documents annotated with pronominal and nominal anaphora relations (DrugNerAR). Their resolution approach exploits Centering Theory for pronominal anaphora. For nominal anaphora, semantic information extracted from UMLS as well as information about drug classes is used. They report an F1 score of 0.76, a significant improvement over the baseline method (0.44), which maps each anaphor to the closest nominal phrase. Kilicoglu and Demner-Fushman [24] reported experiments on the DrugNerAR corpus as well as on another similar corpus extracted from DailyMed structured drug labels. Their results illustrated the importance of appositive constructions and coordinate noun phrases in resolving anaphora in drug-related texts. In another study, Kilicoglu et al. [23] investigated the role of anaphora and ellipsis in understanding consumer health questions and reported an 18-point improvement in F1 score in question frame extraction due to resolving pronominal and nominal anaphora.

Materials and Methods

In this section, we first describe in detail our fine-grained coreference annotation of structured drug labels from DailyMed. Next, we discuss the smorgasbord architecture and individual methods currently used by resolution strategies, providing examples from this annotated dataset. We end the section with a brief discussion of evaluation metrics.

Coreference Annotation of Structured Drug Labels

We annotated a set of structured drug labels (SPLs) extracted from DailyMed with several types of coreference relations. The annotated corpus consists of 181 labels for drugs that are used in the treatment of cardiovascular disorders, containing 348,513 tokens (approximately 1,925 tokens per label). This set was initially extracted from DailyMed for drug-drug interaction (DDI) extraction studies and was annotated with several entity and relation types [56]. In this earlier annotation study, the annotated entity types were drug, drug_class, and substance. Over the course of that annotation study, it was observed that coreference resolution would assist in the DDI extraction task and, therefore, some coreference relations were also annotated. However, the annotators were instructed to annotate coreference only when its resolution would be beneficial to the DDI task; therefore, coreference annotation in this corpus is sparse. For example, no coreference was annotated for the sentence below, even though there is a clear anaphoric relation between the product and its antecedent Plavix.

(3) [Plavix]Antecedent is contraindicated in patients with hypersensitivity (e.g., anaphylaxis) to clopidogrel or any component of [the product]Anaphor.

In the current work, we annotated coreference in this corpus regardless of whether it was useful for the DDI task, so that the dataset could be used to train and evaluate coreference resolution systems focusing on drug-related information. To make the task feasible while ensuring that the annotations would still be useful for learning algorithms, we limited coreference annotations to those that involve drugs and substances, ignoring coreference of other semantic types, such as disorders and procedures. While we constrained ourselves to this specific semantic group, we aimed to be general with respect to the coreference types that could be annotated: we considered not only anaphora relations, which are typically annotated in such corpora, but also cataphora, appositive, and predicate nominative relations, thus taking into account both identity and appositive relations. Cataphora refers to coreference relations in which the referent (consequent) appears in the context following the coreferential mention (cataphor). We also annotated set-membership coreference, which is very prevalent in SPLs and whose resolution is critical for downstream tasks. Furthermore, unlike the OntoNotes coreference annotation [2], we did not ignore generics (e.g., bare plurals), agreeing with the rationale presented by Cohen et al. [48] with respect to the lack of generics in biomedical text. On the other hand, indirect coreference relations [57], such as part/whole relations (meronymy) and bridging inference, as well as abbreviations/acronyms, were left out of the scope of this project.

To facilitate the annotation task, we leveraged the entity annotations already present in the DDI corpus. In a pre-annotation step, we extracted all annotated entity strings in the corpus and, ignoring case differences, automatically annotated all unannotated occurrences of these strings with the entity type most frequently associated with each string. For example, all mentions of ACE inhibitors were annotated as drug_class. This allowed the annotators to focus on the coreference annotation task. The annotators were instructed to annotate any entity mentions missed by this pre-annotation procedure. They were also instructed to correct or delete coreference annotations from the previous study when necessary. Several documents were removed from the collection, since they overlapped significantly with other drug label documents. In pre-annotation, we also automatically removed coreference-related annotations (mention and coreference) that did not involve drugs or substances. For example, we deleted annotations associated with mentions such as this process and such an effect. This resulted in a reduction in the number of initial coreference-related annotations, in contrast to an increase in the number of entity-related annotations, as seen in Table 1:

Table 1. Number of annotations before and after pre-annotation.

| Annotation Type | Original | With pre-annotations |
|---|---|---|
| drug | 4621 | 13144 |
| drug_class | 2808 | 5635 |
| substance | 153 | 589 |
| mention | 262 | 198 |
| coreference | 431 | 352 |
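The dictionary-based pre-annotation step described before Table 1 could be sketched in Python as below. The function names, the (string, type) input format, and the longest-string-first handling of overlapping matches are assumptions; the text does not specify how overlaps were resolved.

```python
import re
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple


def build_lexicon(annotations: Iterable[Tuple[str, str]]) -> Dict[str, str]:
    """Map each case-normalized entity string to its most frequent entity type."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for text, etype in annotations:
        counts[text.lower()][etype] += 1
    return {text: c.most_common(1)[0][0] for text, c in counts.items()}


def pre_annotate(document: str, lexicon: Dict[str, str],
                 existing_spans: List[Tuple[int, int]]) -> List[Tuple[int, int, str]]:
    """Return (start, end, type) for unannotated occurrences of lexicon strings.

    Assumes lowercasing preserves character offsets (true for ASCII text).
    """
    occupied = list(existing_spans)  # do not mutate the caller's list
    lowered = document.lower()
    added = []
    # Longer strings first, so "ace inhibitors" is preferred over any shorter overlapping entry.
    for text in sorted(lexicon, key=len, reverse=True):
        for match in re.finditer(r"\b" + re.escape(text) + r"\b", lowered):
            start, end = match.span()
            if any(s < end and start < e for s, e in occupied):
                continue  # overlaps an existing or newly added annotation
            occupied.append((start, end))
            added.append((start, end, lexicon[text]))
    return sorted(added)


lexicon = build_lexicon([("ACE inhibitors", "drug_class"), ("ACE inhibitors", "drug_class"),
                         ("ace inhibitors", "drug"), ("warfarin", "drug")])
doc = "Warfarin and ACE inhibitors may interact. Monitor warfarin levels."
for start, end, etype in pre_annotate(doc, lexicon, existing_spans=[(0, 8)]):
    print(doc[start:end], etype)
```

Here the first Warfarin is skipped because it is already annotated, while the later occurrence and ACE inhibitors receive their majority types.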

In the current study, we view coreference annotation as a pairwise relation annotation task, rather than a coreference chain annotation task. This formulation is similar to that adopted in most biomedical corpora (e.g., [15, 18, 39]) and differs from most coreference corpora in the general domain (e.g., [8]). Like others [39], we take the view that coreference chains can largely be inferred from pairwise annotations by merging the links between mentions of the same entity, with the aid of a concept normalization tool such as MetaMap [47]. This formulation also simplifies the annotation task significantly, since all the annotator needs to do is link a mention to its closest referent.
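Inferring chains from pairwise links amounts to computing connected components, for which a union-find structure is a standard choice. The minimal Python sketch below reuses example (1) from the Introduction; the mention IDs (John#1, John#2) and the concept ID JOHN are hypothetical, standing in for the kind of identifiers a normalization tool could supply, and no call to MetaMap is made.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Optional, Tuple


class UnionFind:
    def __init__(self) -> None:
        self.parent: Dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: str, b: str) -> None:
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra


def chains_from_pairs(pairs: Iterable[Tuple[str, str]],
                      concept_of: Optional[Dict[str, str]] = None) -> List[List[str]]:
    """Merge pairwise links (and, optionally, mentions sharing a concept ID) into chains."""
    uf = UnionFind()
    for a, b in pairs:
        uf.union(a, b)
    seen: Dict[str, str] = {}  # concept ID -> first mention seen with it
    for mention, concept in (concept_of or {}).items():
        if concept in seen:
            uf.union(seen[concept], mention)
        else:
            seen[concept] = mention
            uf.find(mention)  # register the mention even if it has no links
    groups: Dict[str, List[str]] = defaultdict(list)
    for x in list(uf.parent):
        groups[uf.find(x)].append(x)
    return sorted((sorted(g) for g in groups.values()), key=lambda g: g[0])


pairs = [("John#1", "a linguist"), ("John#2", "he"), ("People", "their")]
print(chains_from_pairs(pairs))
# [['John#1', 'a linguist'], ['John#2', 'he'], ['People', 'their']]
print(chains_from_pairs(pairs, {"John#1": "JOHN", "John#2": "JOHN"}))
# [['John#1', 'John#2', 'a linguist', 'he'], ['People', 'their']]
```

Without the concept mapping, the two occurrences of John end up in separate chains because no pairwise link connects them; the normalization step is what joins them into the single chain {John, a linguist, he}.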

The annotation was carried out by two annotators, a medical librarian and a physician with a degree in biomedical informatics. Both had also been involved in the previous DDI annotation study and were familiar with the topics covered in the corpus. The brat annotation tool [58] was used for annotation. The entire corpus was double-annotated, and the annotations were later discussed and reconciled by both annotators. Disagreements were resolved by the authors of this paper. The annotation was completed in approximately 3 months, with the annotators splitting their time between this task and their other work-related activities. The basic instructions provided to the annotators are given in the Appendix.

The annotation study proceeded in several steps:

Practice phase: Annotators were presented with basic annotation guidelines that provided definitions of the relevant phenomena and examples. Each annotator then annotated five drug label documents. Annotation differences were identified and the primary author (HK) and the annotators discussed these differences to further refine and clarify the guidelines.

Main annotation phase: Annotators annotated in batches of approximately 30 labels at a time. The annotators could discuss complex cases or ask for clarification. Once the annotation of a batch was completed by both annotators, inter-annotator agreement was calculated to assess the progress. The annotators then reconciled their annotations, consulting with the authors in the cases of disagreement. The guidelines were updated, if necessary.

Semi-automated fine-grained annotation phase: The primary author conducted this phase. All mention and coreference annotations were semi-automatically subcategorized into fine-grained classes, and each element of a coreference relation was labeled with the appropriate role (antecedent, cataphor, attribute, etc.). The motivation for this step is that it is generally accepted that different approaches are needed to detect and resolve different types of coreference (e.g., anaphora vs. appositive) indicated by different means (pronouns vs. noun phrases). These fine-grained annotations can then serve to train and evaluate such type-specific approaches. We also performed quality control of the annotations in this step. In this phase, the mention annotations were subcategorized into the following classes:

- personal_pronoun (personal pronoun), possessive_pronoun, demonstrative_pronoun, relative_pronoun, indefinite_pronoun, distributive_pronoun, reciprocal_pronoun

- definite_np (definite noun phrase), demonstrative_np, indefinite_np, distributive_np, zero_article_np

The coreference annotations were subcategorized as follows, with the corresponding role labels given in parentheses. An example of each type is also given:

- anaphora (Anaphor, Antecedent)
  - [Plavix]Antecedent is contraindicated in patients with hypersensitivity (e.g., anaphylaxis) to clopidogrel or any component of [the product]Anaphor.
- cataphora (Cataphor, Consequent)
  - Because of [its]Cataphor relative beta1-selectivity, however, [Lopressor]Consequent may be used with caution in patients with bronchospastic disease.
- appositive (Attribute, Head)
  - the pharmacokinetics of [S-warfarin]Head ([a CYP2C9 substrate]Attribute).
- predicate_nominative (Attribute, Head)
  - [Clopidogrel]Head is [a prodrug]Attribute.

The total number of resulting annotations in the corpus is given in Table 2. The fact that the number of coreference annotations is approximately one and a half times that of mention annotations is an indication of the prevalence of set-membership coreference in the corpus.

Table 2. Coarse-grained annotation counts.

| Annotation Type | Number of annotations |
|---|---|
| drug | 13124 |
| drug_class | 5628 |
| substance | 713 |
| mention | 1976 |
| coreference | 3006 |

The distribution of mention annotations after semi-automated fine-grained annotation is given in Table 3. The distribution shows the predominance of nominal mentions, even though personal and possessive pronouns also appear in more substantial quantities than they do in biomedical literature [39].

Table 3. Fine-grained coreferential mention counts.

| Type | Number of annotations | % |
|---|---|---|
| personal_pronoun | 196 | 9.9 |
| possessive_pronoun | 230 | 11.6 |
| demonstrative_pronoun | 8 | 0.4 |
| relative_pronoun | 32 | 1.6 |
| indefinite_pronoun | 3 | 0.2 |
| distributive_pronoun | 14 | 0.7 |
| reciprocal_pronoun | 6 | 0.3 |
| definite_np | 587 | 29.7 |
| demonstrative_np | 367 | 18.6 |
| indefinite_np | 328 | 16.6 |
| distributive_np | 61 | 3.1 |
| zero_article_np | 144 | 7.3 |

The distribution of coreference annotations after semi-automated fine-grained annotation is given in Table 4.

Table 4. Coreference annotation counts after fine-grained annotation.

| Coreference Type | Total | % | By Mention Type |
|---|---|---|---|
| anaphora | 2021 | 67.2 | definite NP (595), demonstrative NP (571), possessive pronoun (239), personal pronoun (205), distributive NP (131), zero article NP (116), indefinite NP (63), relative pronoun (49), distributive pronoun (28), reciprocal pronoun (12), demonstrative pronoun (9), indefinite pronoun (3) |
| cataphora | 488 | 16.2 | definite NP (419), demonstrative NP (34), indefinite NP (20), possessive pronoun (12), distributive NP (2), personal pronoun (1) |
| appositive | 312 | 10.4 | indefinite NP (146), zero article NP (106), definite NP (60) |
| predicate_nominative | 185 | 6.2 | indefinite NP (147), zero article NP (30), definite NP (8) |

We calculated inter-annotator agreement after each batch of approximately 30 files was fully annotated. As the agreement measure, we used the F1 score obtained when one annotator's set of annotations is taken as the gold standard, a measure often used for relation annotation agreement in the absence of negative cases [59]. We considered agreement on both mentions and coreference relations, under both exact and approximate matching. In approximate matching, two mentions were considered a match if their textual spans overlapped. Two coreference relations were considered a match if both of their mentions matched. Inter-annotator agreement calculations were based on coarse-grained annotations. The agreement results are given in Table 5. They show a general improvement in inter-annotator agreement over the course of the study, despite small dips on some measures in batches 3 and 5. The high agreement on the final batch is particularly encouraging, indicating that coreference can be reliably annotated by non-linguists provided with some training and guidelines.
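The agreement computation described above can be sketched as follows. This is a minimal illustration, not the script used in the study: it assumes that mentions are (start, end) character spans with exclusive ends and that a coreference relation is a pair of such spans; the function names are hypothetical. Because the match test is symmetric, swapping which annotator is treated as the gold standard swaps precision and recall but leaves F1 unchanged.

```python
from typing import Callable, Sequence, Tuple, TypeVar

Span = Tuple[int, int]          # (start, end) character offsets, end exclusive
Relation = Tuple[Span, Span]    # the two mentions of a coreference relation
T = TypeVar("T")


def spans_match(a: Span, b: Span, approximate: bool) -> bool:
    """Exact matching requires identical offsets; approximate matching requires overlap."""
    if approximate:
        return a[0] < b[1] and b[0] < a[1]
    return a == b


def relations_match(r1: Relation, r2: Relation, approximate: bool) -> bool:
    """Two coreference relations match if both of their mentions match."""
    return spans_match(r1[0], r2[0], approximate) and spans_match(r1[1], r2[1], approximate)


def agreement_f1(gold: Sequence[T], other: Sequence[T],
                 match: Callable[[T, T], bool]) -> float:
    """F1 of `other` when `gold` (one annotator's set) is taken as the gold standard."""
    if not gold or not other:
        return 0.0
    recall = sum(any(match(g, o) for o in other) for g in gold) / len(gold)
    precision = sum(any(match(o, g) for g in gold) for o in other) / len(other)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Usage: annotator B shifts one span and adds an extra mention.
a = [(0, 7), (20, 31)]
b = [(0, 7), (22, 31), (40, 45)]
exact = agreement_f1(a, b, lambda x, y: spans_match(x, y, False))       # about 0.4
approximate = agreement_f1(a, b, lambda x, y: spans_match(x, y, True))  # about 0.8
```

As the usage lines show, approximate matching rewards annotations that disagree only on span boundaries, which is why the Approximate columns in Table 5 are consistently higher than the Exact columns.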

Table 5. Inter-annotator agreement.

| Batch | Mention (Exact) | Mention (Approximate) | Coreference (Exact) | Coreference (Approximate) |
|---|---|---|---|---|
| 1 | 0.6078 | 0.6976 | 0.4888 | 0.6485 |
| 2 | 0.7781 | 0.8138 | 0.6382 | 0.7083 |
| 3 | 0.7514 | 0.8171 | 0.5970 | 0.7164 |
| 4 | 0.8218 | 0.8764 | 0.7399 | 0.8074 |
| 5 | 0.8315 | 0.8853 | 0.7255 | 0.8309 |
| 6 | 0.9485 | 0.9708 | 0.8651 | 0.8921 |

Bio-SCoRes Framework

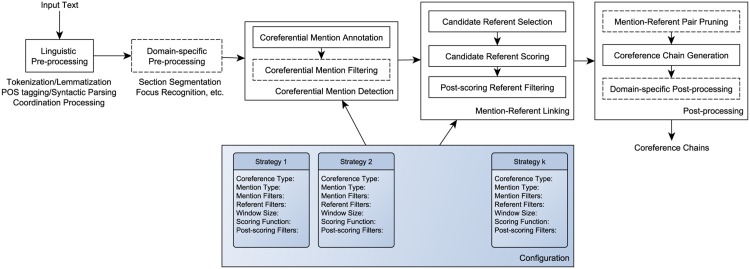

Our coreference resolution framework follows a pipeline architecture consisting of several mandatory and optional steps, illustrated in Fig 1: mandatory steps are shown as solid boxes and optional steps as dotted boxes. The methodology is driven by resolution strategies, which collectively form a configuration. Each resolution strategy addresses a specific coreference type and mention type, and declares a combination of methods, each of which addresses a particular aspect of the coreference resolution process. These strategies are intended to be modular, so that new methods can be defined and then mixed and matched for different text types and domains in a smorgasbord style. In this subsection, in addition to discussing the components of the framework, we describe the particular methods we implemented to address coreference in SPLs, some of which were also used for other text types. In later sections, we describe the additions and modifications we made to this core pipeline to address coreference in biomedical literature and discharge summaries.

Fig 1. The high-level view of the Bio-SCoRes framework.

As can be seen from Fig 1, the mandatory components of the framework are linguistic pre-processing, coreferential mention detection, mention-referent linking and, largely corpus-specific, post-processing. Mention detection and mention-referent linking are at the core of the framework. On the other hand, optional components can be used for specific tasks that can aid in resolution, such as section segmentation, or in post-processing to prune unwanted coreference relations.

Linguistic Pre-processing

The framework presupposes linguistic processing of the input documents. It requires lemmas, part-of-speech tags, and character offsets for individual tokens, as well as character offsets, syntactic parse trees, and dependency relations for sentences. For the experiments reported in this paper, we used the Stanford CoreNLP toolkit [33] for this lexical and syntactic analysis. The framework does not perform named entity recognition; however, it is designed to accept named entity annotations extracted by an external system or assigned manually. Named entities are expected to have semantic type information (e.g., drug, substance). The system can also use an additional, coarser level of semantic typing called semantic groups, each of which can contain multiple more fine-grained semantic types. Even though it is possible to create a coreference resolution pipeline that does not use semantic information at all, such a pipeline is likely to perform considerably worse.

Once the lexical, syntactic, and semantic information for a document is obtained, we perform a series of syntactic dependency transformations, a subset of those specified in the Embedding Framework [60]. These syntactic transformations serve several purposes, such as a) integrating semantic information with the syntactic structure, b) addressing syntactic phenomena such as coordination, and c) correcting potential dependency parsing errors. We sequentially apply the following transformations in our pipeline:

Semantic Enrichment merges named entity annotations into the tokens and the dependency graph, resulting in a simplified dependency graph in which intra-entity dependencies are deleted.

PP-Attachment Correction attempts to correct prepositional phrase attachment errors, generated frequently by syntactic parsers, using deterministic rules discussed in Schuman and Bergler [61].

Modifier Coordination Transformation rearranges dependency relations involving named entities that appear in pre-modifier position of noun phrases. This is mostly a corrective transformation.

Term Coordination Transformation attempts to address complex, serial coordination cases involving named entities by exploiting information about punctuation and parentheticals.

Coordination Transformation identifies the arguments of a conjunction and modifies the dependency graph to better reflect the semantic dependencies between the conjunction and its arguments.

NP-Internal Transformation performs a kind of NP-chunking using dependency relations and semantic information, such as named entities.

Using the transformed dependencies from this step, we next recognize and explicitly represent coordinated named entities. In addition to syntactic coordination, we require that the named entities be semantically compatible. Syntactic coordination information is derived directly from the modified dependency graph. Two syntactically coordinated named entities are taken to be compatible if they share a semantic type or semantic group. These coordination-specific steps are expected to aid in the resolution of set-membership coreference, a common occurrence in biomedical text.
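The compatibility test for coordinated named entities can be sketched as below. This is an illustrative sketch only: the `Entity` type and the semantic group names used in the usage lines (`chemical`, `disorder`) are hypothetical, and requiring every pair of conjuncts to be compatible is our assumption about how the pairwise criterion extends to coordinations with more than two conjuncts.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import FrozenSet, Sequence


@dataclass(frozen=True)
class Entity:
    text: str
    semantic_types: FrozenSet[str]
    semantic_groups: FrozenSet[str]


def compatible(a: Entity, b: Entity) -> bool:
    """Two coordinated entities are compatible if they share a semantic type or group."""
    return bool(a.semantic_types & b.semantic_types) or bool(a.semantic_groups & b.semantic_groups)


def is_entity_collection(conjuncts: Sequence[Entity]) -> bool:
    """Assumption: keep a coordination as one collection only if all conjunct pairs are compatible."""
    return len(conjuncts) >= 2 and all(compatible(a, b) for a, b in combinations(conjuncts, 2))


# Usage with hypothetical type and group labels.
aliskiren = Entity("aliskiren", frozenset({"drug"}), frozenset({"chemical"}))
valsartan = Entity("valsartan", frozenset({"drug"}), frozenset({"chemical"}))
print(is_entity_collection([aliskiren, valsartan]))
```

A collection recognized this way (e.g., aliskiren and valsartan) can later serve as a single plural candidate referent for a mention such as the monotherapies.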

Domain-specific Pre-processing

While certain types of pre-processing (such as coordination processing) can be useful for coreference resolution in general, others can aid the task in specific text types or domains. We refer to such optional steps as domain-specific pre-processing. For example, it is widely accepted that discourse structure and coreference are closely linked; in other words, discourse structure may constrain where the referents of a mention can be found [62]. For SPLs and clinical reports, which can be lengthy and are generally composed of multiple sections, it can be useful to segment the text into sections to constrain the search space. Another example of domain-specific pre-processing is recognition of the document topic (focus): drug labels describe a single drug and its ingredients, and recognizing these terms could help the resolution process.

For our experiments on SPLs, we performed section segmentation. To accomplish this, we simply compiled a list of section headers used in the labels, including DRUG INTERACTIONS, PRECAUTIONS, and WARNINGS, and used simple regular expressions to recognize them.
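A header-list segmenter of this kind can be sketched as follows. This is a minimal sketch, not the actual implementation: the header list contains only the three headers named above (the compiled list is longer), and we assume that a header occupies its own line, optionally followed by a colon. `segment_sections` is a hypothetical name.

```python
import re
from typing import List, Tuple

# Illustrative subset of the section headers compiled from the labels.
SECTION_HEADERS = ["DRUG INTERACTIONS", "PRECAUTIONS", "WARNINGS"]

# Assumption: a header occupies its own line, optionally followed by a colon.
HEADER_RE = re.compile(
    r"^[ \t]*(" + "|".join(re.escape(h) for h in SECTION_HEADERS) + r")[ \t]*:?[ \t]*$",
    re.MULTILINE,
)


def segment_sections(text: str) -> List[Tuple[str, int, int]]:
    """Split a label into (header, start, end) character spans.

    Text preceding the first recognized header is returned as 'PREAMBLE'.
    """
    matches = list(HEADER_RE.finditer(text))
    sections = []
    first_start = matches[0].start() if matches else len(text)
    if first_start > 0:
        sections.append(("PREAMBLE", 0, first_start))
    for i, m in enumerate(matches):
        # Each section runs until the next recognized header (or the end of the text).
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        sections.append((m.group(1), m.start(), end))
    return sections
```

The resulting spans can then back a Section-sized search window, so that candidate referents are only sought within the section of the mention.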

Another pre-processing step involved recognition of discourse-level coordination and was performed mainly to improve cataphora resolution. An example of discourse-level coordination is given below.

(4) When administered concurrently, [the following drugs]Cataphor may interact with thiazide diuretics.

- [Antidiabetic drugs (oral agents]Consequent and [insulin]Consequent): Dosage adjustment of the antidiabetic drug may be required.

- [Lithium]Consequent: Diuretic agents increase the risk of lithium toxicity. …

- [Nonsteroidal anti-inflammatory drugs]Consequent (NSAIDs) and [COX-2 selective agents]Consequent: When Amturnide and nonsteroidal anti-inflammatory agents are used concomitantly, …

To resolve such instances, we need to recognize that the consequents are part of an itemized list and they should be treated as conjuncts. The list recognition step presupposes that the label is segmented into sections. We group all the terms in a given section by their semantic group. Iterating through each term group, we check whether a term in the group is the first element on its line and, if so, add it to the discourse-level conjunct list, with respect to its semantic group. If the term itself is coordinated with others on the same line, they are added to the conjunct list, as well.
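The list recognition procedure above can be sketched as follows for a single, already segmented section. This is an illustrative sketch under stated assumptions: the `Term` type, its `coord_id` field (a hypothetical identifier shared by syntactically coordinated terms), the set of bullet characters allowed before a list item, and the rule that a single line-initial term does not form a list are all ours.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional

BULLET_CHARS = " \t-*\u2022"  # characters that may precede a list item (assumption)


@dataclass(frozen=True)
class Term:
    text: str
    semantic_group: str
    line: int                       # line index within the section
    col: int                        # character offset of the term within its line
    coord_id: Optional[int] = None  # hypothetical id shared by coordinated terms


def discourse_conjuncts(lines: List[str], terms: List[Term]) -> Dict[str, List[Term]]:
    """Collect line-initial terms (and their same-line coordinates) per semantic group."""
    by_group: Dict[str, List[Term]] = defaultdict(list)
    for t in terms:
        by_group[t.semantic_group].append(t)
    conjuncts: Dict[str, List[Term]] = {}
    for group, group_terms in by_group.items():
        selected: List[Term] = []
        for t in group_terms:
            # The term is the first element on its line if only whitespace/bullets precede it.
            if lines[t.line][:t.col].strip(BULLET_CHARS):
                continue
            if t not in selected:
                selected.append(t)
            if t.coord_id is None:
                continue
            # Terms coordinated with it on the same line join the conjunct list as well.
            for u in group_terms:
                same_coordination = u.coord_id == t.coord_id and u.line == t.line
                if same_coordination and u not in selected:
                    selected.append(u)
        # Assumption: a single line-initial term does not constitute a list.
        if len(selected) > 1:
            conjuncts[group] = selected
    return conjuncts
```

Applied to example (4), Antidiabetic drugs, insulin, and Lithium end up in one conjunct list, which the cataphor the following drugs can then be linked to as a set.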

Configuring Resolution Strategies

The core coreference resolution steps, mention detection and mention-referent linking, are driven by a configuration: a set of resolution strategies chosen according to the specific needs of the corpus or task. Taken together, resolution strategies determine the coreference types to be addressed by the framework and the mention types to consider for each coreference type. For example, our coreference resolution pipeline for SPLs recognizes the following mention types: pronouns (personal, possessive, distributive, and reciprocal) and noun phrases (definite, demonstrative, distributive, indefinite, and zero article). Though annotated, demonstrative and indefinite pronouns are not considered, since they are rarely used to indicate drug coreference. The pipeline addresses the four types of coreference relations annotated in the SPLs: anaphora, cataphora, appositive, and predicate nominative.

Resolution strategies are declarative and each consists of the following elements:

Coreference type indicates the type of coreference relation the strategy addresses (e.g., anaphora, cataphora).

Mention type indicates the syntactic class of the mentions the strategy addresses (e.g., personal pronoun, definite noun phrase).

Coreferential mention filters indicate the methods to eliminate unwanted mention annotations from coreference consideration (e.g., elimination of rigid designators [15], such as the p38 MAP kinase).

Candidate referent filters indicate the filtering methods to eliminate candidate referents incompatible with the mention on some constraint (e.g., elimination of candidates that occur after the mention for anaphora cases, elimination of candidates outside a predefined window).

Scoring function is used to calculate a cumulative score for the compatibility between a coreferential mention and a candidate referent. Compatibility is measured using a selection of agreement methods, each associated with a reward for agreement and a penalty for disagreement. Given a mention $m$ and a candidate referent $c$, the compatibility score of $c$ with respect to $m$, $SC(m,c)$, computed using a set of $k$ agreement methods $AM_1, \ldots, AM_k$, can be given recursively as:

$$
SC_k(m,c) =
\begin{cases}
SC_{k-1}(m,c) + \mathrm{Rew}(AM_k) & \text{if } AM_k(m,c) \text{ holds} \\
SC_{k-1}(m,c) - \mathrm{Pen}(AM_k) & \text{otherwise}
\end{cases}
$$

where $\mathrm{Rew}(AM_k)$ indicates the reward for agreement on $AM_k$, and $\mathrm{Pen}(AM_k)$ the penalty for disagreement. Initially, $SC_0(m,c) = 0$, and the final score is $SC(m,c) = SC_k(m,c)$.

The scoring function is inspired by the salience measures used by Lappin and Leass [27] as well as Castaño and Pustejovsky [15]. Reward and penalty values can be used to encode hard and soft constraints.

Post-scoring referent filters indicate the methods used to identify the best referent among a set of scored candidate referents. Often, a filtering method based on scores is combined with another salience measure to break ties (e.g., a threshold-based filter followed by a proximity filter).
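The scoring function described above is a running sum over the agreement methods of a strategy: starting from 0, each method adds its reward when the mention and candidate agree and subtracts its penalty otherwise. A minimal sketch follows; the feature dictionaries, the feature names (`number`, `head`, `type`), and the small hypernym list are hypothetical, while the reward/penalty pairs Number(1,1) and HypernymList(3,0) are those used for definite NP anaphora in SPLs.

```python
from typing import Callable, Dict, List, Tuple

Features = Dict[str, object]
# (name, agreement predicate, reward, penalty)
AgreementMethod = Tuple[str, Callable[[Features, Features], bool], float, float]


def compatibility_score(mention: Features, candidate: Features,
                        methods: List[AgreementMethod]) -> float:
    score = 0.0  # the score starts at 0 before any agreement method is applied
    for _name, agrees, reward, penalty in methods:
        if agrees(mention, candidate):
            score += reward   # agreement: add the method's reward
        else:
            score -= penalty  # disagreement: subtract the method's penalty
    return score


# Hypothetical features; Number(1,1) and HypernymList(3,0) as configured for definite NPs.
HYPERNYMS = {"drug": {"drug", "medication", "agent", "product"}}
NUMBER: AgreementMethod = ("Number", lambda m, c: m["number"] == c["number"], 1, 1)
HYPERNYM_LIST: AgreementMethod = (
    "HypernymList", lambda m, c: m["head"] in HYPERNYMS.get(c["type"], set()), 3, 0)

mention = {"number": "singular", "head": "medication"}
candidate = {"number": "singular", "type": "drug"}
score = compatibility_score(mention, candidate, [NUMBER, HYPERNYM_LIST])  # 1 + 3 = 4
```

With these values, a singular drug candidate reaches 4, while a plural one drops to 2 because Number(1,1) penalizes disagreement; only the former would survive a Threshold(4) filter.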

As an example, the strategy used in anaphora resolution of personal pronouns in SPLs is given in Table 6. This strategy indicates the following:

It is applicable for anaphora resolution of personal pronouns (Coreference Type and Mention Type).

Only third person personal pronouns (e.g., it, they, them) are considered and pleonastic it pronouns (as in it is accepted that …) are ignored (Mention Filters).

Candidate antecedents are selected by running five filters sequentially over the input text (Candidate Filters). For instance, one of these, the PriorDiscourse filter, removes the candidates that do not precede the anaphor, and another, WindowSize(2), removes candidates that are not within two sentences of the mention.

Each candidate antecedent is scored on its compatibility with the anaphor on four agreement constraints (Scoring Function), including number agreement. If the antecedent and the anaphor agree on number (both plural or both singular), the score is incremented by 1. No penalty is incurred when they do not agree on number (0).

After the candidate referents are scored, those with a score less than 4 are eliminated (Threshold(4)), and only the highest-scoring candidates are kept (TopScore). Since each of the four agreement methods rewards agreement with 1, this threshold in effect requires agreement on all four. If multiple candidates remain at this point, the one closest to the anaphor over the parse tree (Salience(Parse)) is selected as the best candidate. If no candidate has a score of at least 4, no coreference link is generated.

Table 6. Resolution strategy for personal pronominal anaphora.

| Strategy Element | Value |
|---|---|
| Coreference Type | Anaphora |
| Mention Type | PersonalPronoun |
| Mention Filters | ThirdPerson, PleonasticIt |
| Candidate Referent Filters | PriorDiscourse, WindowSize(2), SyntacticConfig, Default, Exemplification |
| Scoring Function | Person(1,0) + Gender(1,0) + Animacy(1,0) + Number(1,0) |
| Post-scoring Filters | Threshold(4), TopScore, Salience(Parse) |
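Because resolution strategies are declarative, a strategy can be represented as plain data. The sketch below encodes the strategy in Table 6 in a hypothetical `ResolutionStrategy` record; it is not the framework's own configuration format, and method names are kept as strings rather than bound to implementations.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ResolutionStrategy:
    coreference_type: str
    mention_types: Tuple[str, ...]
    mention_filters: Tuple[str, ...]
    candidate_filters: Tuple[str, ...]            # applied in order
    agreements: Tuple[Tuple[str, int, int], ...]  # (method, reward, penalty)
    post_scoring_filters: Tuple[str, ...]         # applied in order

    def max_score(self) -> int:
        """Highest attainable compatibility score: every agreement method is satisfied."""
        return sum(reward for _, reward, _ in self.agreements)


# The strategy of Table 6 expressed as data.
PERSONAL_PRONOUN_ANAPHORA = ResolutionStrategy(
    coreference_type="Anaphora",
    mention_types=("PersonalPronoun",),
    mention_filters=("ThirdPerson", "PleonasticIt"),
    candidate_filters=("PriorDiscourse", "WindowSize(2)", "SyntacticConfig",
                       "Default", "Exemplification"),
    agreements=(("Person", 1, 0), ("Gender", 1, 0), ("Animacy", 1, 0), ("Number", 1, 0)),
    post_scoring_filters=("Threshold(4)", "TopScore", "Salience(Parse)"),
)
```

Here `max_score()` returns 4, the same value as the Threshold(4) filter, which makes explicit that this strategy links a pronoun only to a candidate satisfying every agreement method.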

In the scope of this study, we defined a number of methods for mention filtering, candidate referent filtering, agreement, and post-scoring filtering to use in coreference pipelines. The framework allows defining new filters and constraints, as well. We will describe these methods, when appropriate, in the following sections. The full configuration for coreference resolution of SPLs is given in Table 7.

Table 7. Configuration for structured drug label coreference resolution.

| Coreference Type | Mention Type | Mention Filters | Referent Filters | Agreement Methods | Post-Scoring Filters |
|---|---|---|---|---|---|
| Anaphora | PersonalPronoun | ThirdPerson, PleonasticIt | PriorDiscourse, WindowSize(2), SyntacticConfig, Default, Exemplification | Person(1,0), Gender(1,0), Animacy(1,0), Number(1,0) | Threshold(4), TopScore, Salience(Parse) |
| Anaphora | PossessivePronoun | ThirdPerson | PriorDiscourse, WindowSize(2), Default, Exemplification | Person(1,0), Gender(1,0), Animacy(1,0), Number(1,0) | Threshold(4), TopScore, Salience(Parse) |
| Anaphora | DistributivePronoun, ReciprocalPronoun | None | PriorDiscourse, WindowSize(2), SyntacticConfig, Default, Exemplification | Person(1,0), Gender(1,0), Animacy(1,0), Number(1,0) | Threshold(4), TopScore, Salience(Default) |
| Anaphora | DefiniteNP, DemonstrativeNP, DistributiveNP | Anaphoricity | PriorDiscourse, SyntacticConfig, Default, Exemplification | Number(1,1), HypernymList(3,0) | Threshold(4), TopScore, Salience(Default) |
| Cataphora | PersonalPronoun | ThirdPerson, PleonasticIt | SubsequentDiscourse, WindowSize(Sentence), SyntacticConfig, Default | Person(1,0), Gender(1,0), Animacy(1,0), Number(1,0) | Threshold(4), TopScore, Salience(Default) |
| Cataphora | PossessivePronoun | ThirdPerson | SubsequentDiscourse, WindowSize(Sentence), Default | Person(1,0), Gender(1,0), Animacy(1,0), Number(1,0), DiscourseConnective(1,2) | Threshold(4), TopScore, Salience(Default) |
| Cataphora | DefiniteNP | Cataphoricity | SubsequentDiscourse, SyntacticConfig, WindowSize(2), Default | Number(1,1), HypernymList(3,0) | Threshold(4), TopScore, Salience(Default) |
| Appositive | DefiniteNP, IndefiniteNP, ZeroArticleNP | None | WindowSize(Sentence), Default | Number(1,1), SyntacticAppositive(3,2), HypernymList(1,1) | Threshold(4), TopScore, Salience(Default) |
| Predicate nominative | IndefiniteNP, ZeroArticleNP | None | PriorDiscourse, WindowSize(Sentence), Default | Number(1,1), SyntacticPredicateNominative(3,2), HypernymList(1,1) | Threshold(4), TopScore, Salience(Default) |

Coreferential Mention Detection

The first phase of coreference resolution is concerned with annotating and filtering the coreferential mentions included in the resolution strategies (illustrated as Coreferential Mention Annotation and Coreferential Mention Filtering in Fig 1). The annotation is rule-based and relies on lexical and syntactic cues. We examine all tokens in the text and annotate them with the appropriate mention type if they satisfy the corresponding constraints, as described below:

Personal and possessive pronouns: Personal and possessive pronouns are detected based simply on their part-of-speech (PRP and PRP$, respectively). Reflexive pronouns are also marked as such, based on their token (itself, themselves).

Demonstrative pronouns: Demonstrative pronouns (this, that, these, those) are recognized based on their tokens. We further require that the demonstrative pronoun that is not used as a complementizer (as in The results show that …) and that these pronouns are not in the determiner position of a noun phrase. These rules are implemented using dependency relations.

Distributive pronouns: Distributive pronouns (both, either, neither, each) are also determined based on the dependency relations they occur in. We require that the pronoun under consideration is neither in the dependent position in a preconj (preconjunct) dependency nor in the head position of a pobj (object of preposition) relation, to exclude phrases like both INDOCIN I.V. and furosemide from consideration.

Reciprocal pronouns: We determine reciprocal pronouns (each other, one another) based on simple string match, ignoring case.

Indefinite pronouns: Indefinite pronouns are also determined based on their tokens (another, some, all) and their part-of-speech (DT).

Relative pronouns: Relative pronouns (e.g., who, which, whose, that) are recognized based on their part-of-speech (WDT, WRB, WP, or WP$, and IN for that). We further require that that is not used as a complementizer.

Definite noun phrases: One of the syntactic transformations (NP-Internal Transformation) chunks noun phrases in a sentence. We mark as definite noun phrases those that begin with the definite article, the.

Demonstrative noun phrases: Similarly to definite noun phrases, we mark as demonstrative noun phrases those that begin with the demonstrative determiners, this, that, these, those, or with the demonstrative adjective, such.

Distributive noun phrases: We mark those that begin with the distributive determiners either, neither, both, each.

Indefinite noun phrases: We mark those that begin with the indefinite articles a, an, indefinite determiners any, some, all, another, and with the indefinite adjective, other.

Zero article noun phrases: These are distinguished by the absence of any triggers for other noun phrase types. For precision, we also require that the head of the noun phrase be a hypernym for the semantic type/group it belongs to (e.g., medication is a hypernym for the drug semantic type). The system currently provides a small list of such hypernyms for several semantic groups, which can be extended/redefined.
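The noun phrase rules above reduce to a lookup on the first token of each chunked noun phrase, with the zero article case as a fallback. The sketch below assumes that NP chunks, head lemmas, and semantic types are already available; the function name is hypothetical and the hypernym list is a small illustrative subset.

```python
from typing import Dict, List, Optional, Set

# Trigger words for each noun phrase mention type, as listed in the rules above.
NP_TRIGGERS: Dict[str, Set[str]] = {
    "definite_np": {"the"},
    "demonstrative_np": {"this", "that", "these", "those", "such"},
    "distributive_np": {"either", "neither", "both", "each"},
    "indefinite_np": {"a", "an", "any", "some", "all", "another", "other"},
}

# Illustrative, extensible hypernym list per semantic type.
HYPERNYMS: Dict[str, Set[str]] = {"drug": {"drug", "medication", "agent", "product"}}


def np_mention_type(tokens: List[str], head_lemma: str,
                    semantic_type: Optional[str]) -> Optional[str]:
    """Classify a chunked noun phrase by its first token; None if it is not a mention."""
    if not tokens:
        return None
    first = tokens[0].lower()
    for mention_type, triggers in NP_TRIGGERS.items():
        if first in triggers:
            return mention_type
    # Zero article NP: no trigger, and the head must be a listed hypernym of its semantic type.
    if semantic_type is not None and head_lemma.lower() in HYPERNYMS.get(semantic_type, set()):
        return "zero_article_np"
    return None
```

For instance, such agents is labeled demonstrative_np, medications (with head lemma medication) is labeled zero_article_np, and a bare drug name such as aspirin is not treated as a coreferential mention.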

After mention types are annotated, mention filters are applied to eliminate from further consideration mentions that are not coreferential or not in the scope of resolution. Four mention filters were used for SPLs: ThirdPerson, PleonasticIt, Anaphoricity, and Cataphoricity. The first filter (ThirdPerson) applies to personal and possessive pronouns and eliminates first and second person pronouns (such as I, me, you, yourself) from consideration, since the corpus is concerned with drug/substance coreference only. The second (PleonasticIt) applies to personal pronouns only and removes it mentions that are pleonastic, and therefore not coreferential. An example of pleonastic it is given below.

(5) It is not certain that these events were attributable to DEMADEX.

Pleonastic it is recognized using a simple dependency-based rule that mimics patterns in Segura-Bedmar et al. [55], given below.

nsubj*(X,it) ∧ DEP(X,Y) ⇒ PLEONASTIC(it)

where nsubj* refers to nsubj or nsubjpass dependencies and DEP is any one of the clausal dependencies infmod, ccomp, xcomp, or vmod (DEP ∈ {infmod, ccomp, xcomp, vmod}).
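The pleonastic it rule can be sketched over a list of dependency triples. This is a minimal sketch under two assumptions: dependencies are given as (relation, governor index, dependent index) triples in the Stanford dependency scheme, and DEP ranges over the set {infmod, ccomp, xcomp, vmod}, the clausal complements that typically accompany an extraposed it (as in example (5)). The function name is hypothetical.

```python
from typing import List, Tuple

Dep = Tuple[str, int, int]  # (relation, governor index, dependent index)

SUBJECT_RELS = {"nsubj", "nsubjpass"}                  # nsubj*
LICENSING_RELS = {"infmod", "ccomp", "xcomp", "vmod"}  # assumed DEP set


def is_pleonastic_it(tokens: List[str], deps: List[Dep], i: int) -> bool:
    """Apply nsubj*(X, it) AND DEP(X, Y) => PLEONASTIC(it) to the token at index i."""
    if tokens[i].lower() != "it":
        return False
    for rel, gov, dep in deps:
        if rel in SUBJECT_RELS and dep == i:
            x = gov  # the predicate X that takes "it" as its subject
            if any(r in LICENSING_RELS and g == x for r, g, _ in deps):
                return True
    return False


# Example (5): "It is not certain that these events were attributable to DEMADEX"
tokens = "It is not certain that these events were attributable to DEMADEX".split()
deps = [("nsubj", 3, 0), ("cop", 3, 1), ("neg", 3, 2), ("ccomp", 3, 8), ("mark", 8, 4),
        ("det", 6, 5), ("nsubj", 8, 6), ("cop", 8, 7), ("prep_to", 8, 10)]
pleonastic = is_pleonastic_it(tokens, deps, 0)
```

In example (5), it is the subject of certain, which also governs the clausal complement headed by attributable, so the rule fires. A referential it, as in It is contraindicated in patients, has no such complement and is kept as a mention.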

Anaphoricity and Cataphoricity mention filters are largely similar. They both eliminate noun phrases that correspond to rigid designators [15] and noun phrases that are in appositive constructions. For example, the definite noun phrase the nitric oxide/cGMP pathway is eliminated since it is a rigid designator, while the monotherapies in the following sentence is not considered for anaphora, since it is in an appositive construction with the coordinate noun phrase aliskiren and valsartan.

(6) the incidence of hyperkalemia … was about 1%-2% higher in the combination treatment group compared with the monotherapies aliskiren and valsartan, or with placebo

In addition to these two constraints, the Anaphoricity filter eliminates noun phrases containing the word following (e.g., the following drugs) and the noun phrase that begins a document, since these can only be cataphoric. The Cataphoricity filter, on the other hand, keeps only the noun phrases eliminated by this constraint and discards the rest.

Mention-Referent Linking

After the relevant coreferential mentions are selected, we attempt to link them to their referents. The system proceeds from left to right and as coreferential mentions are encountered, it attempts to resolve their referents based on their mention types, the kinds of coreference the system aims to resolve, and the parameters of the appropriate resolution strategies. This phase consists of the following steps: Candidate Referent Selection, Candidate Referent Scoring, and Post-scoring Referent Filtering.

The Candidate Referent Selection step identifies all the candidate referents for a given mention and is driven by the candidate referent filters parameter of the strategy being applied. Filters are applied sequentially. The following referent filters are used for selection in structured drug labels:

PriorDiscourse: This filter includes as candidates those mentions that precede the coreferential mention. It is applicable for anaphora resolution.

WindowSize: This filter includes as candidates those mentions that are within a predefined number of sentences of the mention. For example, if the window size parameter is 2, candidates are the mentions within two sentences of the coreferential mention. If this filter is not used, the entire document is used for candidate selection. Sentence and Section can also be specified as the window.


SyntacticConfiguration: This filter removes candidates that are in a particular syntactic configuration with the coreferential mention that would render them impossible to corefer. This filter is generally not applicable to appositive and predicate nominative types as well as to possessive and relative pronouns, since in these cases, the candidates may be linked to the mention via syntactic means. This filter works by identifying the syntactic dependency path between the coreferential mention and the candidate referent. If a path is not found, the candidate is included. If a path is found, the candidate is excluded if:

- There is a verbal path, and the candidate and the mention are in the subject and object positions of a verb.

- There is a nominal path, and the candidate and the mention are in the subject and object positions of a nominal predicate.

- The path length is at most 2, and one of the dependencies indicates an appositive construction.

- The path length is at most 2, and, ignoring dependencies that indicate an appositive construction, all dependencies on the path indicate subject, object, prepositional phrase, or coordination constructions.

This filter is a somewhat modified version of the i-within-i filter used in [32], among others.

NounPhrase: This filter includes only noun phrases, identified in linguistic pre-processing, as candidate referents.

VerbPhrase: This filter includes heads of verb phrases as candidates.

SemanticClass: This filter includes semantic objects of specific, predefined classes as candidates (e.g., named entities, events).

Default: This filter combines the previous three filters: it includes candidate referents that pass the NounPhrase and SemanticClass filters and excludes those that pass the VerbPhrase filter. For SPLs, the SemanticClass filter allows named entities, coreferential mentions, and conjunctions indicating a collection of named entities.

Exemplification: This filter is concerned with set-membership relations and removes from consideration candidates that are specific instantiations of a larger class also mentioned in the text. In the following example, the drugs and drug classes in parentheses are not considered as candidates for the personal pronoun they, whereas the class that they belong to (i.e., Inhibitors of this isoenzyme) is.

(7) [Inhibitors of this isoenzyme]Antecedent (e.g., macrolide antibiotics, azole antifungal agents, protease inhibitors, serotonin reuptake inhibitors, amiodarone, cannabinoids, diltiazem, grapefruit juice, nefazadone, norfloxacin, quinine, zafirlukast) should be cautiously co-administered with TIKOSYN as [they]Anaphor can potentially increase dofetilide levels.

SubsequentDiscourse: This filter is similar to PriorDiscourse filter, but it includes the candidates occurring subsequent to the mention, instead. This filter is appropriate for cataphora resolution.
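The sequential application of the positional referent filters can be sketched as below. This minimal sketch covers only PriorDiscourse, SubsequentDiscourse, and WindowSize; the `Mention` type is hypothetical, and we assume that "within n sentences" means an absolute sentence-index difference of at most n, so that WindowSize(Sentence) corresponds to n = 0.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Mention:
    text: str
    sentence: int  # sentence index in the document
    start: int     # character offset in the document


CandidateFilter = Callable[[Mention, List[Mention]], List[Mention]]


def prior_discourse(m: Mention, cands: List[Mention]) -> List[Mention]:
    """PriorDiscourse: keep candidates preceding the mention (anaphora)."""
    return [c for c in cands if c.start < m.start]


def subsequent_discourse(m: Mention, cands: List[Mention]) -> List[Mention]:
    """SubsequentDiscourse: keep candidates following the mention (cataphora)."""
    return [c for c in cands if c.start > m.start]


def window_size(n: int) -> CandidateFilter:
    """WindowSize(n); n = 0 corresponds to WindowSize(Sentence)."""
    def keep_nearby(m: Mention, cands: List[Mention]) -> List[Mention]:
        return [c for c in cands if abs(m.sentence - c.sentence) <= n]
    return keep_nearby


def select_candidates(m: Mention, mentions: List[Mention],
                      filters: List[CandidateFilter]) -> List[Mention]:
    cands = [c for c in mentions if c != m]
    for f in filters:  # filters are applied sequentially
        cands = f(m, cands)
    return cands
```

Because each filter only narrows the list it receives, the order in a strategy matters only for efficiency here; filters that depend on earlier output, such as Exemplification, would be appended to the same list.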

Once a final candidate list is formed by applying the filters above, agreement methods are used to measure the compatibility between the mention and each candidate, and each candidate is assigned a score. This step (Candidate Referent Scoring) is also driven by the appropriate resolution strategy. If a candidate and the mention are compatible according to a specific agreement method, the candidate score is incremented by the reward value of that method. Otherwise, the specified penalty, if any, is subtracted, lowering the overall compatibility score. Below, we provide more details regarding the agreement methods used for SPLs.

Number: This agreement indicates whether the candidate and the mention agree on number, which we calculate as either plural or singular. The number is taken as plural for conjunction candidates, reciprocal and distributive pronouns, plural pronouns (e.g., ours, they), and collective nouns (e.g., family, population). Excluding these special cases, if the mention is a noun, its part-of-speech tag is used to determine the number feature; otherwise, the number feature is taken as singular. For reciprocal and distributive pronouns, or mentions with the word two (e.g., these two enzymes), we stipulate that the candidate be a coordinate noun phrase with two named entities for agreement to hold.
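The number feature and the corresponding agreement check can be sketched as follows. This is a sketch under stated assumptions: the `Mention` type is hypothetical, the last token is taken as the head, the pronoun and collective noun lists are illustrative subsets, and the coordination of a conjunction candidate is represented by its list of conjoined named entities.

```python
from dataclasses import dataclass, field
from typing import List

PLURAL_PRONOUNS = {"they", "them", "their", "theirs", "themselves",
                   "we", "us", "our", "ours", "ourselves"}
COLLECTIVE_NOUNS = {"family", "population"}  # illustrative subset
PLURAL_MENTION_TYPES = {"reciprocal_pronoun", "distributive_pronoun"}


@dataclass
class Mention:
    tokens: List[str]
    head_pos: str                                       # Penn Treebank tag of the head
    mention_type: str = "np"
    conjuncts: List[str] = field(default_factory=list)  # named entities, if a coordination


def number_of(m: Mention) -> str:
    head = m.tokens[-1].lower()  # assumption: the last token is the head
    if m.conjuncts or m.mention_type in PLURAL_MENTION_TYPES:
        return "plural"
    if head in PLURAL_PRONOUNS or head in COLLECTIVE_NOUNS:
        return "plural"
    if m.head_pos.startswith("NN"):
        return "plural" if m.head_pos in ("NNS", "NNPS") else "singular"
    return "singular"


def number_agrees(mention: Mention, candidate: Mention) -> bool:
    words = {t.lower() for t in mention.tokens}
    if mention.mention_type in PLURAL_MENTION_TYPES or "two" in words:
        # The candidate must be a coordination of exactly two named entities.
        return len(candidate.conjuncts) == 2
    return number_of(mention) == number_of(candidate)
```

Under these rules, they agrees with the coordination aliskiren and valsartan but not with the medication, and these two enzymes agrees only with a coordination of exactly two named entities.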

Animacy: We calculate three animacy values: Animate, NotAnimate, and MaybeAnimate. For example, personal pronouns he and she and related forms are assigned the value Animate, while it and related forms are assigned NotAnimate. On the other hand, they and related forms are assigned the value MaybeAnimate. If a population semantic group is defined and the mention in consideration belongs to this semantic group, it is taken as Animate, as well.

Gender: We calculate three gender values: Female, Male and Unknown. Personal pronoun he and related forms are assigned the value Male, whereas she and related forms are assigned Female and it and related forms are assigned Unknown. A small list of Female and Male words (such as mother, father, son) is also consulted. Gender agreement is predicted when the genders of the candidate and the mention match or they are both Unknown.

Person: This agreement indicates whether the candidate and the mention agree on grammatical person, which takes the values First, Second, or Third. We determine the values First and Second by simple regular expressions and otherwise assign the value Third.
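The gender and person agreement methods can be sketched on head words as below. This is illustrative only: the male and female word lists and the first- and second-person regular expressions are our own small examples, not the system's lists, and the function names are hypothetical. Gender agreement is simple equality of the computed values, so two Unknown values agree.

```python
import re

# Illustrative word lists; the system consults its own small lists.
MALE_WORDS = {"he", "him", "his", "himself", "father", "son"}
FEMALE_WORDS = {"she", "her", "hers", "herself", "mother", "daughter"}

FIRST_PERSON = re.compile(r"^(i|me|my|mine|myself|we|us|our|ours|ourselves)$", re.IGNORECASE)
SECOND_PERSON = re.compile(r"^(you|your|yours|yourself|yourselves)$", re.IGNORECASE)


def gender(word: str) -> str:
    w = word.lower()
    if w in MALE_WORDS:
        return "Male"
    if w in FEMALE_WORDS:
        return "Female"
    return "Unknown"


def person(word: str) -> str:
    """First and Second are detected by regular expressions; everything else is Third."""
    if FIRST_PERSON.match(word):
        return "First"
    if SECOND_PERSON.match(word):
        return "Second"
    return "Third"


def gender_agrees(mention: str, candidate: str) -> bool:
    """Genders match, which includes the case where both are Unknown."""
    return gender(mention) == gender(candidate)


def person_agrees(mention: str, candidate: str) -> bool:
    return person(mention) == person(candidate)
```

For drug coreference, almost all candidates are Unknown for gender and Third for person, so these two methods mainly serve to rule out pronouns such as she or you.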

HypernymList: This agreement is meaningful when the mention is nominal. It predicts that a candidate with a certain semantic type is in agreement with a mention if the headword of the mention is one of the explicitly defined hypernym words for that semantic type. For example, clopidogrel and the medication are compatible according to this agreement method, because medication is explicitly defined as a high-level term for the drug semantic type. Other hypernyms for this type include drug, agent, compound, solution, product, among others, and have been mined from the training data.

DiscourseConnective: This agreement is concerned with cataphoric uses of personal and possessive pronouns licensed by sentence-initial discourse connectives. It allows a personal or possessive pronoun to be cataphoric if the sentence it appears in begins with one of the specified discourse connectives (because, although, since). In the following example, the discourse connective because of licenses the possessive pronoun its to be cataphoric.

(8) Because of [its]Cataphor beta1-selectivity, this is less likely with [ZEBETA]Consequent.

SyntacticAppositive: This agreement is satisfied if the mention and the candidate are in a syntactic appositive construction. If the candidate referent is a conjunction, it is sufficient for one of its conjuncts to be in the appositive construction with the mention. Appositive constructions are determined by the presence of a dependency relation of type appos (appositive) or abbrev (abbreviation) between the mention and the referent. To increase recall and compensate for frequently erroneous dependency relations, we also treat contiguous noun phrases as appositives. For example, in the fragment the ACE inhibitor enalapril, the noun phrases the ACE inhibitor and enalapril are taken to be appositives.

SyntacticPredicateNominative: This agreement is satisfied if the mention and the candidate are in a copular construction. To determine such constructions, we search for the dependency relation cop (copula) and ignore the cases in which the object of the copular construction is negated (neg dependency). If a copular construction exists between the mention and the candidate, we stipulate that the candidate be in the subject position.

After each candidate is scored, the next step, Post-scoring Referent Filtering, selects the best candidate as the referent. As with the previous steps, this is driven by the appropriate resolution strategy, specifically its post-scoring filters parameter. The methods specified in this parameter are applied sequentially to the candidate list. If no candidate is left after these filters are applied, no coreference link is generated. The post-scoring filtering methods used in coreference resolution of SPLs are given below.

Threshold: This filter discards all candidates with a score less than the given threshold.

TopScore: This filter eliminates all candidates, except those with the highest score.

Salience: This filter finds the candidates that are most salient to the mention according to a specified measure and eliminates the rest. The Default salience measure is proximity to the mention in number of intervening textual units, and it is often used to break ties between compatible candidates. We also use the Parse measure, which is the path distance from the mention to the referent in the syntactic parse tree. If the candidate and the mention are in different sentences, the sentence distance, multiplied by a factor of 2, is also added to this distance. Using parse tree distance to calculate salience has been shown to be useful especially for pronominal anaphora [5, 26, 32, 55] and reflects the intuition of Centering Theory [28]. If the Parse measure results in a tie, we use the Default salience measure to select the best candidate.
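Post-scoring filtering can be viewed as a left-to-right composition of list-to-list functions. The sketch below assumes hypothetical `Candidate` records that already carry their score and the distances the two salience measures need; it is an illustration of the filter chain, not the framework's code, and the threshold value used in the test is arbitrary.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Candidate:
    name: str
    score: float
    token_distance: int     # intervening textual units (Default measure)
    parse_distance: int     # path length in the parse tree (Parse measure)
    sentence_distance: int  # 0 if in the mention's sentence


Filter = Callable[[List[Candidate]], List[Candidate]]


def threshold(t: float) -> Filter:
    # Discard candidates scoring below t.
    return lambda cands: [c for c in cands if c.score >= t]


def top_score(cands: List[Candidate]) -> List[Candidate]:
    # Keep only the highest-scoring candidates (ties survive).
    if not cands:
        return []
    best = max(c.score for c in cands)
    return [c for c in cands if c.score == best]


def salience(measure: str = "default") -> Filter:
    def distance(c: Candidate) -> int:
        if measure == "parse":
            # Parse-tree distance plus sentence distance weighted by 2.
            return c.parse_distance + 2 * c.sentence_distance
        return c.token_distance

    def keep_most_salient(cands: List[Candidate]) -> List[Candidate]:
        if not cands:
            return []
        best = min(distance(c) for c in cands)
        return [c for c in cands if distance(c) == best]

    return keep_most_salient


def select_referent(cands: List[Candidate],
                    filters: List[Filter]) -> Optional[Candidate]:
    # Apply filters in order; no link is produced if nothing survives.
    for f in filters:
        cands = f(cands)
    return cands[0] if cands else None
```

A chain such as `[threshold(2.0), top_score, salience("parse"), salience("default")]` mirrors the Parse-then-Default tie-breaking described above; any candidates still tied after the Default measure are resolved in this sketch by simply taking the first.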

Post-processing

The Mention-Referent Linking phase yields a list of mention-best referent pairs. The framework makes no assumptions about how coreference links are generated from these pairs; in general, however, we expect some pruning to eliminate pairs that are not of interest or are inconsistent (Mention-Referent Pruning), followed by the generation of a new coreference chain or merging with an existing chain (Coreference Chain Generation), and other domain-specific post-processing. We describe these steps for SPLs below. Note that each coreference resolution pipeline based on the Bio-SCoRes framework is likely to implement this phase differently.

In Mention-Referent Pruning for SPLs, we address personal and possessive pronouns that are involved in both anaphora and cataphora relations, which can arise from the DiscourseConnective agreement measure described earlier. In the example below, an anaphoric pair {its, insulin} and a cataphoric pair {its, ZEBETA} have been identified for the same pronoun.

(9) Nonselective beta-blockers may potentiate insulin-induced hypoglycemia and delay recovery of serum glucose levels. Because of [its]Cataphor beta1-selectivity, this is less likely with [ZEBETA]Consequent.

In this step, we eliminate the anaphoric pair, since we find such pairs less likely to be correct than the cataphoric pairs in these instances.
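The pruning rule can be expressed as a single pass over the mention-referent pairs. This is a sketch under stated assumptions: links are hypothetical `Link` tuples, and a mention is identified by a made-up span identifier such as `its@52`.

```python
from typing import List, NamedTuple

PRONOUNS = {"PersonalPronoun", "PossessivePronoun"}


class Link(NamedTuple):
    mention_id: str    # hypothetical identifier of the mention span
    mention_type: str  # e.g. "PossessivePronoun"
    referent: str
    coref_type: str    # "Anaphora" or "Cataphora"


def prune_pronoun_conflicts(links: List[Link]) -> List[Link]:
    # Pronoun mentions that received a cataphoric link.
    cataphoric = {link.mention_id for link in links
                  if link.coref_type == "Cataphora" and link.mention_type in PRONOUNS}
    # Drop anaphoric links on those same pronouns; keep everything else.
    return [link for link in links
            if not (link.coref_type == "Anaphora" and link.mention_id in cataphoric)]
```

Applied to example (9), the pair {its, insulin} is removed while {its, ZEBETA} survives; anaphoric links on other pronouns are untouched.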

In the SPL corpus, coreference is annotated as a pairwise binary relation between two terms (mention and referent). For set-membership coreference (i.e., when the referent is a coordinate noun phrase with multiple named entity terms), a separate relation is annotated between the mention and each conjunct. In the Coreference Chain Generation step, we split such referents into multiple mention-referent pairs and simply generate a binary relation between the elements of each pair.
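Splitting set-membership referents into binary relations is straightforward. The sketch assumes, hypothetically, that a coordinate referent is represented as a list of its conjunct terms and any other referent as a plain string.

```python
from typing import List, Sequence, Tuple, Union

Referent = Union[str, Sequence[str]]  # a single term, or the conjuncts of a coordinate NP


def to_binary_relations(pairs: List[Tuple[str, Referent]]) -> List[Tuple[str, str]]:
    relations = []
    for mention, referent in pairs:
        conjuncts = [referent] if isinstance(referent, str) else list(referent)
        for term in conjuncts:  # one relation per conjunct (set-membership coreference)
            relations.append((mention, term))
    return relations
```

For example, a hypothetical pair ("these drugs", ["warfarin", "aspirin"]) becomes two relations, one per conjunct.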

In the final, domain-specific post-processing step, we prune coreference relations that do not involve drugs/substances, since such relations were not annotated in the corpus. In the example below, the coreference relation shown is pruned even though it is accurate, because it falls outside the scope of the annotation.

(10) Discuss with [patients]Antecedent the appropriate action to take in the event that [they]Anaphor experience anginal chest pain requiring nitroglycerin following intake of ADCIRCA.

It is conceivable to perform pruning and some other aspects of domain-specific post-processing using various types of filters described earlier. For example, patients in the example above can be filtered out as a candidate using a candidate filtering method that relies on semantic type information. However, this has the drawback of potentially identifying another compatible mention, appearing before patients in text, as the referent. Therefore, it generally makes more sense to address coreference of all semantic types in mention-referent linking step, if possible, and perform pruning as a post-processing step. However, as we will show later, we have also used such filtering methods (based on semantic type/group) in other experiments, when appropriate. The smorgasbord architecture allows mixing and matching various filters for optimal results.
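The drawback of early candidate filtering can be seen on a toy case. The sketch below uses an invented variant of example (10) in which a plural drug-class mention, nitrates, precedes patients. Candidates are compared only by number agreement and proximity, a deliberate simplification of the framework's scoring, and the `person` type label is hypothetical.

```python
from typing import List, NamedTuple, Optional

SCOPE = {"drug", "drug_class", "substance"}  # semantic types annotated in the SPL corpus


class Cand(NamedTuple):
    text: str
    semtype: str
    plural: bool


def nearest_compatible(cands: List[Cand], plural: bool) -> Optional[Cand]:
    # Candidates are in textual order; take the closest one agreeing in number.
    for c in reversed(cands):
        if c.plural == plural:
            return c
    return None


def filter_then_link(cands: List[Cand], plural: bool) -> Optional[Cand]:
    # Candidate filtering: out-of-scope types never compete.
    return nearest_compatible([c for c in cands if c.semtype in SCOPE], plural)


def link_then_prune(cands: List[Cand], plural: bool) -> Optional[Cand]:
    # Resolve over all types first, then prune out-of-scope links in post-processing.
    best = nearest_compatible(cands, plural)
    return best if best is not None and best.semtype in SCOPE else None
```

With candidates `[Cand("nitrates", "drug_class", True), Cand("patients", "person", True)]` and the plural pronoun they, filtering first produces a spurious link to nitrates. Linking over all types and pruning afterwards leaves no in-scope link, which matches the annotation.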

So far, we have described the framework as it was applied to coreference resolution in SPLs using gold entity mentions. We have performed various other experiments on this corpus, as well as experiments on two other coreference corpora, a corpus of i2b2/VA discharge summaries and a corpus of MEDLINE abstracts. Instead of describing the adaptation of the framework to these tasks in this section, we will discuss them in the Results section, when appropriate, for readability.

Evaluation

We evaluated the results of our approach on structured drug labels using the standard metrics of precision, recall, and F1 score. We followed the evaluation method adopted for the BioNLP-ST 2011 task on coreference resolution [18], since our annotation methodology was similar to theirs, and we evaluated our results on MEDLINE abstracts in the same way. To evaluate the results on the i2b2/VA discharge summaries, we used the unweighted average of the F1 scores over the B-CUBED [10], MUC [9], and CEAF [11] metrics, as adopted for the i2b2 challenge on coreference resolution [19], which was based on the same corpus. Briefly, B-CUBED measures, for each mention, the overlap between its predicted coreference chain and its gold standard chain, while MUC measures the minimum number of pair additions and removals required for the predicted chains to match the gold chains. CEAF, on the other hand, computes an optimal alignment between the predicted and gold standard chains based on a similarity score.
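To make these metrics concrete, the sketch below computes relation-level precision, recall, and F1, plus MUC and B-CUBED over chains represented as sets of mention identifiers. Two assumptions should be flagged. First, approximate span matching is approximated here by simple character-offset overlap, which may differ from the BioNLP-ST 2011 definition. Second, B-CUBED mentions missing from the other side are treated as singletons, one of several conventions in use. CEAF is omitted because it requires an optimal one-to-one alignment of chains (e.g., via the Hungarian algorithm).

```python
from typing import Dict, Hashable, List, Set, Tuple

Span = Tuple[int, int]          # character offsets [start, end)
Rel = Tuple[str, Span, Span]    # (coreference type, mention span, referent span)
Chain = Set[Hashable]


def pairwise_prf(pred: List[Rel], gold: List[Rel]) -> Tuple[float, float, float]:
    """Relation-level P/R/F1; spans match if they overlap (an assumption)."""
    def overlap(a: Span, b: Span) -> bool:
        return a[0] < b[1] and b[0] < a[1]

    unmatched, tp = list(gold), 0
    for p in pred:
        for g in unmatched:
            if p[0] == g[0] and overlap(p[1], g[1]) and overlap(p[2], g[2]):
                unmatched.remove(g)  # each gold relation can be matched only once
                tp += 1
                break
    prec = tp / len(pred) if pred else 0.0
    rec = tp / len(gold) if gold else 0.0
    return prec, rec, (2 * prec * rec / (prec + rec) if tp else 0.0)


def muc(key: List[Chain], response: List[Chain]) -> Tuple[float, float]:
    """Link-based MUC (precision, recall)."""
    def recall(k: List[Chain], r: List[Chain]) -> float:
        owner = {m: i for i, chain in enumerate(r) for m in chain}
        num = den = 0
        for chain in k:
            # Partitions of this chain induced by r; unaligned mentions are singletons.
            parts = {owner.get(m, ("singleton", m)) for m in chain}
            num += len(chain) - len(parts)
            den += len(chain) - 1
        return num / den if den else 0.0

    return recall(response, key), recall(key, response)


def b_cubed(key: List[Chain], response: List[Chain]) -> Tuple[float, float]:
    """Mention-based B-CUBED (precision, recall)."""
    k_of = {m: chain for chain in key for m in chain}
    r_of = {m: chain for chain in response for m in chain}

    def score(a: Dict, b: Dict) -> float:
        if not a:
            return 0.0
        return sum(len(a[m] & b.get(m, {m})) / len(a[m]) for m in a) / len(a)

    return score(r_of, k_of), score(k_of, r_of)
```

For a gold chain `{1, 2, 3}` split by the system into `{1, 2}` and `{3}`, MUC gives precision 1.0 and recall 0.5, and B-CUBED gives precision 1.0 and recall 5/9.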

Results and Discussion

In this section, we first discuss the results we obtained on SPLs, using the methodology described in the previous section and analyze some of the errors that the system makes. Next, we describe the adaptation of this methodology to discharge summaries and biomedical literature and discuss the results for these adaptations.

Coreference Resolution on Structured Drug Labels

We split the SPL corpus into training and test subsets and developed the resolution configuration discussed earlier (Table 7) on the training set. The training set consists of 109 labels and the test set of 72 labels. In the training set, 1358 of the coreference relations are anaphora instances, 312 are cataphora, 203 are appositive, and 113 are predicate nominatives. The corresponding numbers of coreference instances in the test set are 663, 176, 109, and 72, respectively.

In the first set of experiments, we presuppose that gold named entities (drug, drug_class, and substance types) are known. In another set of experiments, we presuppose that both gold named entities and gold coreferential mentions are known, to assess the mention-referent linking step only. Finally, we experiment with a named entity recognition/concept normalization system for end-to-end coreference resolution on this dataset.

The evaluation results concerning mention detection are shown in Table 8. No results are shown for demonstrative, relative, and indefinite pronouns, since the system did not attempt to detect them, and for reciprocal pronouns, since they did not appear in the test set.

Table 8. Evaluation results for mention detection on the test portion of SPL coreference dataset.

| Mention type | Precision | Recall | F1 |
|---|---|---|---|
| PersonalPronoun | 96.8 | 100.0 | 98.4 |
| PossessivePronoun | 95.7 | 63.8 | 76.5 |
| DistributivePronoun | 33.3 | 100.0 | 50.0 |
| DefiniteNP | 85.0 | 94.6 | 89.6 |
| DemonstrativeNP | 93.8 | 96.0 | 94.9 |
| DistributiveNP | 81.3 | 59.1 | 68.4 |
| IndefiniteNP | 79.1 | 72.0 | 75.4 |
| ZeroArticleNP | 41.2 | 59.2 | 48.6 |
| Overall | 80.1 | 82.3 | 81.2 |

The results show that while we achieve good precision and recall for certain mention types (e.g., personal pronouns), there is clearly room for improvement for others, particularly distributive pronouns/noun phrases and zero article noun phrases. Most errors in the mention detection step were found to be due to erroneous or non-specific dependency relations; for example, some distributive noun phrases were recognized as distributive pronouns because required dependency relations were absent, leading to low precision for distributive pronouns and low recall for distributive noun phrases. If we ignore demonstrative, relative, and indefinite pronouns, which we did not attempt to identify, recall increases from 82.3 to 83.7 and the F1 score from 81.2 to 81.9.

As a baseline for coreference resolution, we implemented a simple mechanism that, after noun phrase chunking, identifies coreferential mentions by the presence of certain determiners/pronouns and takes the closest mention to the left with a drug semantic type as the referent. This is roughly the baseline used by Segura-Bedmar et al. [55]. We also tried a baseline using proximity on either side to account for cataphoric/appositive instances, but it yields poorer results, since anaphora instances are much more prevalent.
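The baseline can be sketched in a few lines over pre-chunked noun phrases. The trigger list below is hypothetical, since the exact determiners and pronouns are not enumerated here. Chunks are assumed to arrive in textual order, each carrying a semantic type only when it is a recognized entity.

```python
from typing import List, Optional, Tuple

# Hypothetical trigger list; the baseline's actual determiners/pronouns are not specified.
TRIGGERS = {"it", "its", "they", "their", "them", "this", "these",
            "that", "those", "the", "such"}

Chunk = Tuple[str, Optional[str]]  # (chunk text, semantic type or None)


def baseline(chunks: List[Chunk]) -> List[Tuple[str, str]]:
    links = []
    for i, (text, _) in enumerate(chunks):
        tokens = text.split()
        # A chunk is a mention only if it starts with a trigger word.
        if not tokens or tokens[0].lower() not in TRIGGERS:
            continue
        # Referent: the closest preceding chunk with a drug semantic type.
        for prev_text, prev_type in reversed(chunks[:i]):
            if prev_type == "drug":
                links.append((text, prev_text))
                break
    return links
```

Because every chunk beginning with a trigger such as the is treated as a mention, this kind of baseline produces many spurious links, which plausibly accounts for its very low precision.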

Using the configuration given above, we obtain the results in Table 9 on the test set using gold entity mentions. The number of instances for each coreference/mention type pair is given in parentheses. Evaluation is based on type and approximate span matching of the coreference elements.

Table 9. Evaluation results for coreference resolution on the test portion of SPL coreference dataset.

| System / type | Precision | Recall | F1 |
|---|---|---|---|
| Baseline | 6.0 | 35.6 | 10.3 |
| Bio-SCoRes | 65.5 | 45.2 | 53.5 |
| Anaphora | 64.8 | 45.3 | 53.3 |
| Anaphora: PersonalPronoun (60) | 82.9 | 48.3 | 61.1 |
| Anaphora: PossessivePronoun (74) | 76.1 | 47.3 | 58.3 |
| Anaphora: DistributivePronoun (12) | 45.4 | 83.3 | 58.8 |
| Anaphora: DefiniteNP (219) | 55.1 | 44.3 | 49.1 |
| Anaphora: DemonstrativeNP (190) | 74.4 | 62.6 | 68.0 |
| Anaphora: DistributiveNP (40) | 41.7 | 25.0 | 31.3 |
| Cataphora | 61.1 | 37.5 | 46.5 |
| Cataphora: PersonalPronoun (1) | 100.0 | 100.0 | 100.0 |
| Cataphora: PossessivePronoun (6) | 100.0 | 100.0 | 100.0 |
| Cataphora: DefiniteNP (135) | 58.4 | 43.7 | 50.0 |
| Appositive | 61.1 | 50.5 | 55.3 |
| Appositive: DefiniteNP (24) | 86.7 | 54.2 | 66.7 |
| Appositive: IndefiniteNP (40) | 91.7 | 55.0 | 68.8 |
| Appositive: ZeroArticleNP (45) | 39.2 | 44.4 | 41.7 |
| PredicateNominative | 93.0 | 55.6 | 69.6 |
| PredicateNominative: IndefiniteNP (47) | 96.8 | 63.9 | 76.9 |
| PredicateNominative: ZeroArticleNP (24) | 83.3 | 41.7 | 55.6 |

The system performs substantially better than the baseline in this setting. As might be expected from a deterministic, rule-based approach, the system achieves higher precision than recall. Predicate nominative instances are considerably easier to resolve, while cataphora and anaphora instances are more difficult. Even though appositive instances behave much like predicate nominatives, the system does not perform as well on them. Some coreference/mention type combinations are especially challenging and/or rare, and we did not include them in the final configuration. For example, we did not attempt to resolve demonstrative pronouns, since they are often discourse-deictic [63] and rarely refer to drugs in the corpus. Nor did we attempt to resolve indefinite pronouns, which are rarely coreferential in text, or relative pronouns, which were sparsely annotated in the corpus. We attempted to resolve indefinite and zero article noun phrases only in appositive and predicate nominative constructions, since in such cases they are syntactically more constrained. When the evaluation is limited to the coreference type/mention combinations that we actually addressed, overall recall increases from 45.2 to 50.3 and F1 from 53.5 to 56.9 (56.7 for anaphora, 52.8 for cataphora, and 70.2 for predicate nominative; recall and F1 score for the appositive class remain unchanged). The largest increase is for the cataphora class, owing to a single cataphora instance in which 34 consequent drug names were linked to a single demonstrative noun phrase; this instance also illustrates the importance of coordination recognition for this task. Indeed, turning off coordination recognition entirely in pre-processing degrades performance considerably: the overall F1 score drops to 41.7, with the most dramatic effect on the anaphora and cataphora classes (F1 scores of 43.1 and 13.8, respectively).
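The restricted-evaluation figures can be checked with the F1 formula. The check assumes that precision stays at 65.5. Restricting the gold standard to the addressed type/mention combinations should remove only instances the system never attempted, which leaves its predictions unchanged. Under that assumption, both reported F1 scores follow from the two recall values.

```python
def f1(precision: float, recall: float) -> float:
    # Harmonic mean of precision and recall.
    return 2 * precision * recall / (precision + recall)


precision = 65.5  # assumed unchanged: the system's predictions are the same
print(round(f1(precision, 45.2), 1))  # all test instances -> 53.5
print(round(f1(precision, 50.3), 1))  # addressed combinations only -> 56.9
```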

To assess mention-referent linking alone, we also evaluated the system using gold coreferential mentions and obtained the results in Table 10. In this setting, the baseline performs much better. This indicates that simple keyword search for mentions (the method used by the baseline) is inadequate, and that coreference links can to some extent be recovered without sophisticated techniques if coreferential mentions are identified accurately. The system also performs better with gold coreferential mentions, although its improvement is smaller than the baseline's. Precision improves across all coreference types, while recall increases only for the predicate nominative and anaphora types. The recall drop for the appositive type appears to be due to the interaction between syntactic dependency transformation and coreferential mentions.

Table 10. Evaluation results on the test portion of SPL coreference dataset using gold coreferential mentions.

| System / type | Precision | Recall | F1 |
|---|---|---|---|