Abstract

The original neural field model of Wilson and Cowan is often interpreted as the averaged behaviour of a network of switch like neural elements with a distribution of switch thresholds, giving rise to the classic sigmoidal population firing-rate function so prevalent in large scale neuronal modelling. In this paper we explore the effects of such threshold noise without recourse to averaging and show that spatial correlations can have a strong effect on the behaviour of waves and patterns in continuum models. Moreover, for a prescribed spatial covariance function we explore the differences in behaviour that can emerge when the underlying stationary distribution is changed from Gaussian to non-Gaussian. For travelling front solutions, in a system with exponentially decaying spatial interactions, we make use of an interface approach to calculate the instantaneous wave speed analytically as a series expansion in the noise strength. From this we find that, for weak noise, the spatially averaged speed depends only on the choice of covariance function and not on the shape of the stationary distribution. For a system with a Mexican-hat spatial connectivity we further find that noise can induce localised bump solutions, and using an interface stability argument show that there can be multiple stable solution branches.

Keywords: Stochastic neural field, Interface dynamics, Fronts, Bumps, Non-Gaussian quenched disorder

Introduction

The study of waves, bumps and patterns in models of Wilson–Cowan type [1] is now a very mature branch of mathematical neuroscience, as discussed in the review by Bressloff [2], with many practical applications to topics including working memory, visual processing, and attention. For a recent and comprehensive description of neural fields and their applications we refer the reader to the book [3]. It is only relatively recently that stochastic effects in neural fields have begun to be considered, with important applications to problems such as binocular rivalry waves [4] and perceptual switching [5]. These stochastic models are often obtained by considering the addition of noisy currents (notionally a “Gaussian random noise”) to standard (deterministic) neural fields, and the resulting models are cast as stochastic nonlinear integro-differential equations driven by a Wiener process, such as in [6–14]. A rigorous probabilistic framework in which to study these equations has recently been provided by Faugeras and Inglis [15]. The analysis of patterns, waves and bumps in such models has been possible utilising tools from stochastic centre manifold theory (especially tools for weak noise analysis), Fokker–Planck reductions, and other techniques from stochastic calculus developed previously for PDEs. For a recent perspective on this approach the book by Bressloff is a highly valuable resource [16], as well as the paper by Inglis and MacLaurin [17], which presents a general framework in which to rigorously study the effect of spatio-temporal noise on travelling wave fronts. Indeed there is now a quite elegant body of rigorous theory growing up around neural field models with multiplicative stochastic forcing, as exemplified in the paper by Krüger and Stannat [18] using multiscale analysis, which moves beyond formal perturbation methods, to understand front propagation in particular. However, the original work of Wilson and Cowan suggests that another, perhaps more natural, way to introduce stochasticity into neural field models is by treating some of the system parameters as random variables. Indeed, threshold noise in a linear integrate-and-fire model is able to fit real firing patterns observed in the sensory periphery [19]. The simplicity of such models is also appealing from a theoretical perspective, and for a threshold described by an Ornstein–Uhlenbeck process it has recently been shown that analytical (and non-perturbative) expressions for the first-passage time distribution can be obtained [20].

To appreciate the original idea of Wilson and Cowan that threshold noise in switching networks can give rise to a probabilistic interpretation of network dynamics in terms of a smooth firing-rate function it is enough to consider a simple discrete time model for the evolution of neural activity , , in a network with connections :

| 1 |

Here H is a Heaviside step function with threshold h. If we now associate a threshold with each individual node and treat it as a random variable drawn from a normalised stationary probability distribution at each time step then we can take the ensemble average of the above and find

| 2 |

where . Thus we obtain a smooth nonlinear deterministic model describing the average behaviour of a set of switch like elements with random thresholds, with the link between the two determined by the relationship . Since ϕ is a probability distribution, this relationship immediately implies a monotonically increasing firing-rate function. Given a realisation of the thresholds at some time t, it is of interest to ask how the spatial covariance structure of these random thresholds affects network dynamics. This is precisely the question we wish to address in this paper for continuum models of Wilson–Cowan type, in which the random firing threshold is now described as spatially continuous quenched disorder. Although we will restrict our attention to a Gaussian covariance function, we shall consider a broad class of stationary distributions, and present practical techniques from applied mathematics and statistics for working with non-Gaussian distributions. Moreover, by working with the Heaviside choice, as in (1), we will be able to build on the interface approach of Amari [21] to obtain explicit results for travelling fronts and bumps, and their dependence on the threshold noise structure.

In Sect. 2 we introduce our neural field model of choice, as well as the form of the stochastic threshold, namely its steady state distribution and spatial covariance structure. In Sect. 3 we show that, for a given realisation of the threshold, we may use the Amari interface approach to determine the instantaneous speed of a travelling front. We further show how to calculate the effects of the quenched spatial disorder arising from the noisy threshold using a perturbative approach, valid for small deviations of the threshold from its average value. We extend the approach for fronts to tackle stationary bumps in Sect. 4, where we also show how to determine the linear stability of localised solutions. This leads to a prediction that noise can induce multiple stable bumps, which we confirm numerically. Indeed throughout the paper we use direct numerical simulations to illustrate the accuracy of all theoretical predictions. Finally in Sect. 5 we discuss natural extensions of the work in this paper.

The Model

For mathematical convenience it is often easier to work with spatially continuous models rather than lattice models of the type described by (1). We consider a neural field , , , whose dynamics is given by

| 3 |

The kernel w represents the anatomical connectivity, and we have chosen to include the nonlinearity within the spatial integration, though activity based models with the nonlinearity outside the spatial integration may also be considered with the techniques described below (and are qualitatively similar in their behaviour). As it stands the model given by (3) is a standard Amari neural field model for the choice that h, the firing threshold, is a constant function. In this case the model is well known to support travelling waves, including fronts [22] and localised bump states in systems with a mixture of excitation and inhibition. For a review of such behaviour see [23], and for a recent overview of neural field modelling in general see [3].

In this paper we shall consider the case that h is a spatially random function. Given the wealth of mathematical knowledge for Gaussian disorder it would be highly convenient to make this choice for the threshold. However, this is a non-physiological convenience that we would prefer to avoid. Indeed it is very natural to expect threshold noise to be bounded and unlikely to be best described by a symmetric distribution. As such we will consider both Gaussian and non-Gaussian disorder and in particular skewed exponential distributions and distributions with compact support. We shall explicitly model the random firing threshold as

| 4 |

where corresponds to the mean of the threshold, and denotes the quenched disorder with symmetric, bounded and non-negative spatial covariance function . We shall fix this to be a Gaussian shape such that , with

| 5 |

Here κ is the correlation length of the quenched disorder. Note that the variance of the threshold is given by . There exists a sequence of non-negative real numbers, , , which are eigenvalues of the covariance operator, associated with a sequence of eigenfunctions, , , according to

| 6 |

that form a complete orthonormal basis so that we may represent by its Karhunen–Loève decomposition [24–26]

| 7 |

Here the are uncorrelated random variables with zero mean and unit variance, i.e. and . The properties of the ensure that the Karhunen–Loève representation captures the first and second moment of exactly. The latter result follows from the fact that

| 8 |

so that has the expected spectral representation. When the correlation length κ is much smaller than the domain size L, the Karhunen–Loève decomposition of for the Gaussian covariance function (5) with periodic boundary conditions can be very well approximated by [25]

| 9 |

Here we have split the eigenfunctions in (7) into two sets and , which read

| 10 |

with , and . Note that we have

| 11 |

for any n, m. To complete the model setup we need to specify the random coefficients and . They are determined by the local distribution of the quenched disorder . If is Gaussian, it suffices to choose the and as uncorrelated univariate Gaussian random variables, namely and . Indeed there is a large variety of methods to simulate Gaussian disorder including autoregressive-moving-averages [27], circular embedding [28] spectral representations [29] or the Karhunen–Loève decomposition [24–26]. However, if is non-Gaussian, then and are not described by a scaled version of . Thus we require suitable techniques to generate non-Gaussian disorder.

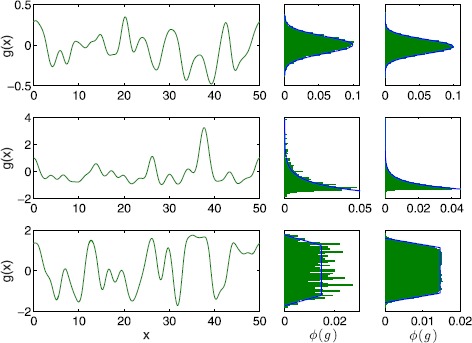

Compared to Gaussian statistics, there are only a few methods for simulating non-Gaussian disorder. Amongst them, translation processes, in which a suitably chosen Gaussian model is non-linearly mapped to the desired non-Gaussian disorder, and a Karhunen–Loève decomposition feature most prominently [30, 31]. We have chosen to employ a Karhunen–Loève decomposition as it is more robust [32], which means that we may use the same technique for both Gaussian and non-Gaussian disorder. We implement the method developed in [30] and provide details of the algorithm in Appendix A, though the main idea is as follows. As a starting point choose uncorrelated and from the probability distribution and generate a large number of samples of the quenched disorder according to (7). Since the and are uncorrelated possesses the prescribed covariance function . However, the probability distribution of differs from . This can be corrected by determining a new set of the and , but these and are now correlated. It is then possible to decorrelate the and without changing their statistics, which in turn ensures that the statistics of still comply with . Overall, the method in [30] provides an iterative scheme such that the probability distribution of converges towards the prescribed distribution , while keeping the chosen covariance function exact in every iteration step. In Fig. 1 we show the three types of distribution that we use to realise threshold values. These are (i) a Gaussian distribution, (ii) a highly skewed shifted exponential distribution, and (iii) a piecewise linear distribution with compact support. The precise mathematical form for each of these is given in Appendix B.

Fig. 1.

Random thresholds. Realisations of the random threshold (left) for a Gaussian (top), shifted exponential (middle) and bump (bottom) distribution. The middle column shows the probability distribution of the threshold on the left. The right column depicts the probability distribution of the threshold obtained from 1000 realisations. Equation (9) was truncated after 50 (top), 32 (middle) and 64 (bottom) terms per sum, respectively. Parameter values are , and (top), , (middle) and , , (bottom)

Travelling Fronts

As mentioned in Sect. 1 much is now known about the effects of random forcing on neural field models. As regards travelling fronts the work of Bressloff and Webber [9] has shown that this can result in ‘fast’ perturbations of the front shape as well as a ‘slow’ horizontal displacement of the wave profile from its uniformly translating position. A separation of time-scales method is thus ideally suited to analysing this phenomenon, though we also note that more numerical techniques based upon stochastic freezing [33] could also be utilised. In this section we will explore the effects of quenched or ‘frozen’ threshold noise on the properties of a travelling wave, and in particular its speed.

For a symmetric choice of synaptic kernel , which decays exponentially, the one-dimensional model (3) with a constant threshold is known to support a travelling front solution [22, 23] that connects a high activity state to a low activity state. In this case it is natural to define a pattern boundary as the interface between these two states. One way to distinguish between the high and the low activity state is by determining whether u is above or below the firing threshold. When denoting the position of the moving interface by , the above notion leads us to the defining equation

| 12 |

Here, we are assuming that there is only one point on the interface as illustrated in Fig. 2, though in principle we could consider a set of points. For the choice (5) we see that is differentiable at , which means that the random threshold is differentiable in the mean square sense. The differentiation of (12) gives an exact expression for the velocity of the interface c in the form

| 13 |

which modestly extends the original approach of Amari [21] with the inclusion of the term for . We can now describe the properties of a front solution solely in terms of the behaviour at the front edge that separates high activity from low, as described in [34, 35]. To see this, let us consider a right moving front for which for and for . Then we solve (3), dropping transients, to obtain

| 14 |

Hence,

| 15 |

For simplicity we make the choice so that from (14) we find by differentiation with respect to x that for a right moving wave (for large t)

| 16 |

By noting that

| 17 |

and inserting (16) and (17) into (13), we find the wave speed of a right moving wave

| 18 |

When we repeat the above derivation for a left moving wave, the wave speed is given by

| 19 |

where we used

| 20 |

Note that in the case of a constant threshold with we obtain , for , and , for , which recovers a previous result, as discussed in [16]. If the front is moving to the right we have an exact expression for the speed (see also [36]):

| 21 |

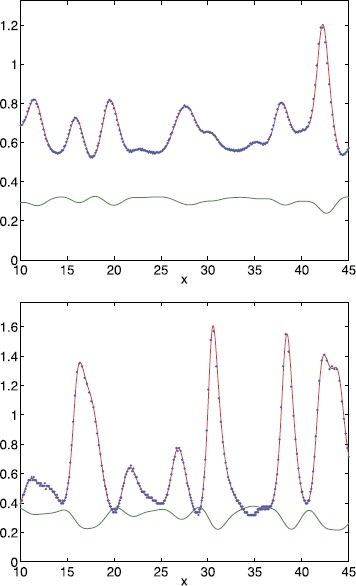

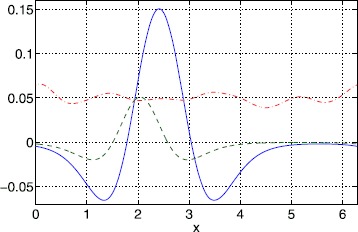

Examples of this relationship are shown in Figs. 3 and 4 where we plot both and the instantaneous front velocity extracted from a numerical simulation of (3). Figure 3 depicts results when the local probability distribution is a Gaussian for two different values of the correlation length κ, while Fig. 4 illustrates travelling fronts for thresholds that are locally distributed as a skewed exponential and a bump. We see excellent agreement between the numerical values and the expression (21).

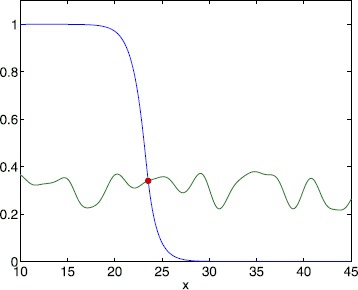

Fig. 2.

Travelling front. Instance of a travelling front (blue) for the bump distribution. The threshold is shown in green. The red dot indicates the position where . Equation (9) was truncated after 64 terms per sum. Parameter values are , , , , , and

Fig. 3.

Instantaneous speed of a travelling front for a Gaussian threshold distribution. Measured front speed for a Gaussian threshold distribution, extracted from a simulation of (3) (blue); theoretical value from (21) (red); and the threshold (4) (green), for (top) and (bottom). Equation (9) was truncated after 50 terms per sum. Other parameter values are , , ,

Fig. 4.

Instantaneous speed of a travelling front for non-Gaussian threshold distributions. Measured front speed, extracted from a simulation of (3) (blue); theoretical value from (21) (red); and the threshold (4) (green) for a shifted exponential distribution (top) and a bump distribution (bottom) for , and . Equation (9) was truncated after 32 (top) and 64 (bottom) terms per sum, respectively. Other parameter values are , , (top) and , , , (bottom)

Perturbative Calculation of Wave Speed

We can also perturbatively calculate the effects of threshold disorder on the speed of a travelling front. Substituting (4) into (21) and taking we find that

| 22 |

where the prime denotes differentiation. We will now take the spatial and ensemble average of (22). It is convenient to introduce an angle bracket notation to denote spatial averaging according to . We find from (9) that (since only the constant eigenfunction contributes to the integral and all other terms in (9) integrate to zero because of periodicity) and (because of periodicity). Hence, the spatial average of c takes the compact form

| 23 |

where and . Now taking expectations over the and we obtain , and . Hence, we may construct as

| 24 |

This expression gives the lowest order correction term to the expression for speed (for a right moving wave) when the threshold is constant and takes the value . The term in square brackets in (24) is positive, and thus spatial disorder will always increase the average speed. This term also increases as the correlation length decreases since the decay more slowly for smaller correlation lengths. Note, however, that the correction term remains uniformly small since for all m when .

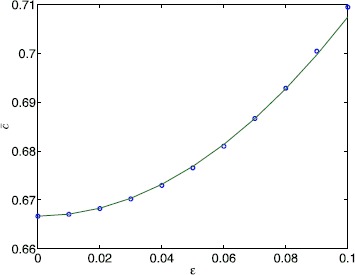

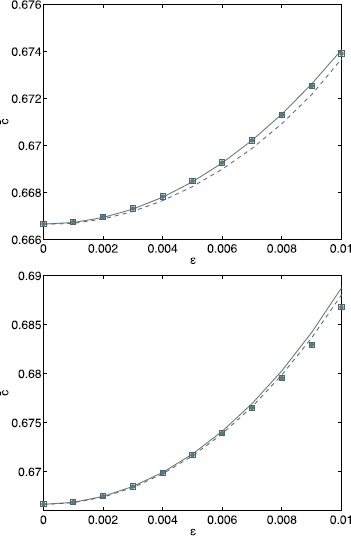

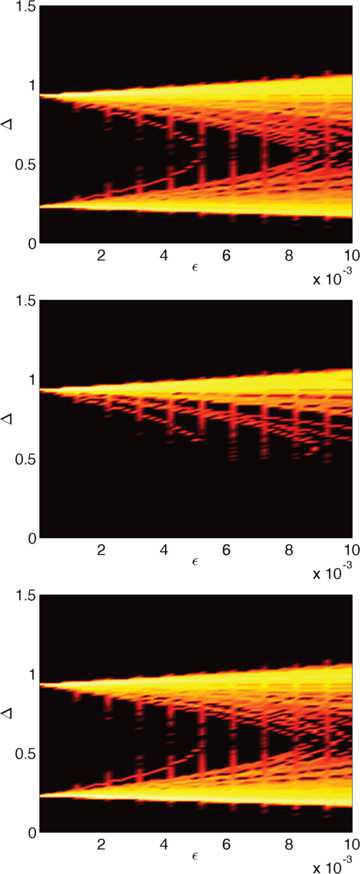

Figure 5 shows c̅ as a function of ϵ for a Gaussian distribution of threshold values, as well as results from directly averaging realisations of (21). Here the and are randomly chosen from the unit normal distribution. We obtain identical results when these are uniformly distributed on (i.e. with mean zero and variance 1). The difference between using bounded distributions for the versus unbounded is that the maximum value of h is then bounded/unbounded. The results based on (24) are almost identical to those obtained from (21) for small values of ϵ, while minor deviations appear as we increase ϵ. In Fig. 6 we plot c̅ as a function of ϵ for the shifted exponential and bump distribution. In addition, we show results for a Gaussian distribution with the same variance. We again observe very good agreement between the small noise expansion (24) and averaging (21) for small values of ϵ. In addition, the curves for the Gaussian threshold and for the non-Gaussian thresholds obtained from averaging (21) almost agree, while the expansion (24) yields identical results for both kinds of threshold noise. The latter is a direct consequence of the bi-orthogonality of the Karhunen–Loève expansion. Equation (24) only depends on the eigenvalues of the covariance function and not on the properties of the local distributions.

Fig. 5.

Mean speed versus ϵ for a Gaussian threshold distribution. c̅ as a function of ϵ for . Solid curve: (24); circles: from averaging (21) over 1000 realisations per point. Equation (9) was truncated after 50 terms per sum. Other parameter values are , ,

Fig. 6.

Mean speed versus ϵ for non-Gaussian threshold distributions. c̅ as a function of ϵ for , , for the exponential distribution (top) and the bump distribution (bottom). In each panel results for the non-Gaussian distribution (dashed blue) are compared to those for a Gaussian distribution (solid green) with the same variance. Solid/dashed curves: (24); blue squares (non-Gaussian)/green circles (Gaussian): from averaging (21) over 1000 realisations per point. Equation (9) was truncated after 250 terms per sum. Other parameter values are , (top) and , , (bottom)

Stationary Bumps

Neural fields of Amari type are known to support spatially localised stationary bump patterns when the anatomical connectivity function has a Mexican-hat shape. In a one dimensional spatial model, and in the absence of noise, it is known that pairs of bumps exist for some sufficiently low value of a constant threshold and that only the wider of the two is stable [21]. For random forcing it is possible to observe noise-induced drifting activity of bump attractors, which can be described by an effective diffusion coefficient (using a small noise expansion) [11] or by an anomalous sub-diffusive process in the presence of long-range spatio-temporal correlations [37]. However, it is also known that spatial disorder can act to pin states to certain network locations by breaking the (continuous) translation symmetry of the system, as described in [38] for neural field models with heterogeneous anatomical connectivity patterns. It is the latter phenomenon that we are interested in here, especially since for a disordered threshold that breaks translational symmetry, it is not a priori obvious how many bump solutions exist and what their stability properties are.

A one-bump solution is characterised by a width Δ, such that for two values and with we have for . Using (3) a one-bump solution therefore satisfies

| 25 |

Note that with given by

| 26 |

We can determine the linear stability of bumps by studying the (linearised) evolution of a perturbation around the stationary bump . We hence find from (3)

| 27 |

| 28 |

where . Demanding that the perturbations at be non-trivial yields the spectral equation , where is the identity matrix in and

| 29 |

The eigenvalues are then given by :

| 30 |

Note further that for we have and therefore as expected from translation invariance.

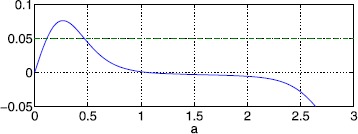

Simple Heterogeneity

We first consider a simple form of heterogeneity to present the ideas, and then move to more general heterogeneity. Suppose and , and the domain is . Then we have bumps which have their maximum at either 0 or π. Suppose the maximum is at 0 and for . Since we need and the threshold is symmetric around 0, we immediately arrive at . To determine a we require i.e.

| 31 |

Choosing , , , and we have two bump widths, as shown in Fig. 7 (). We find that the larger root is stable and the other is unstable, and both have a zero eigenvalue as expected. Increasing ϵ from zero breaks the translational invariance of the system and we obtain 4 bumps for as shown in Fig. 8: the two that exist for the homogeneous case (), now centred around , and similarly two centred around . (We no longer restrict to .)

Fig. 7.

Widths of stationary bumps. The left hand side of (31) (solid) and the right hand side (dashed), with , , , and

Fig. 8.

Bump widths and profiles for a spatially heterogeneous threshold. Top: solutions of (32)–(33), where , for . Only the red solution is stable. Bottom: bump profiles for the solutions in the upper panel, and threshold (dash-dotted). The solid bump is stable, all others (dashed) are unstable. Parameter values are , and

General Heterogeneity

We keep and now consider a general without symmetries. The problem of the existence of a bump is this: given and a value of , find a value of such that , and , where is given by (26). This will not happen generically but only at isolated points in the plane. Thus we need to solve the simultaneous equations

| 32 |

| 33 |

and we only search for solutions which satisfy

| 34 |

We now apply this general concept to the stochastic threshold (9). For each realisation and set of parameter values we use Newton’s method with 1000 randomly chosen initial values which satisfy (34). Out of these 1000 initial values, the number of distinct solutions of (32)–(33) that satisfy (34) is recorded, and their stability is determined as described above. We then check these solutions to verify that only for . Any that do not satisfy this inequality are discarded.

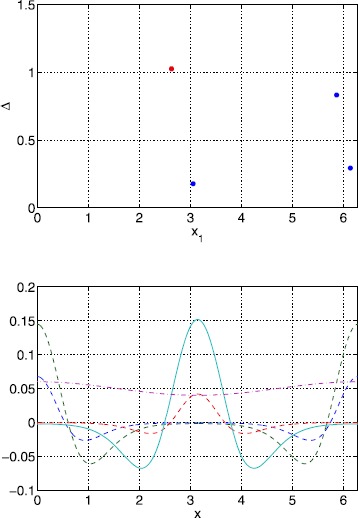

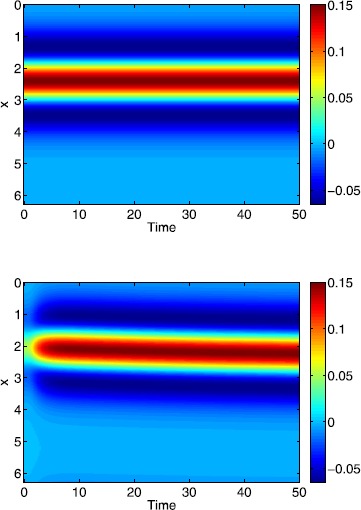

Typical results for a Gaussian threshold are depicted in Fig. 9 for and . We see that width values () are clustered around two values (those corresponding to the homogeneous system) and that the only stable ones are those with large widths (inherited from the homogeneous case). Two example bumps, one stable and the other unstable, from Fig. 9 are shown in Fig. 10. Space-time simulations of (3) using these two bumps as initial conditions are shown in Fig. 11. The stable bump is stationary, as expected, while the solution starting close to (but not exactly at) the unstable bump rapidly increases in size and then slowly moves towards a stable solution.

Fig. 9.

Bump widths for a Gaussian threshold distribution. Typical sets of solutions of (32)–(33) for a Gaussian threshold distribution with , and . Only those with red dots are stable. Other parameter values are , , , and

Fig. 10.

Bumps for a Gaussian threshold distribution. Stable (solid) and unstable (dashed) solutions corresponding to the two points in Fig. 9 with slightly less than 2. The threshold is shown dash-dotted. Parameter values are , , , , , and

Fig. 11.

Space-time plots of bumps for a Gaussian threshold distribution. Simulations of (3) using as initial conditions the two different solutions shown in Fig. 10. Top: stable; bottom: unstable. Parameter values are , , , , , and

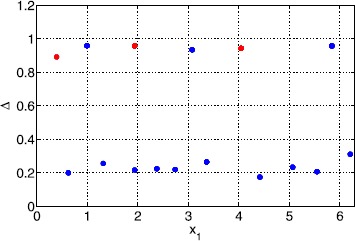

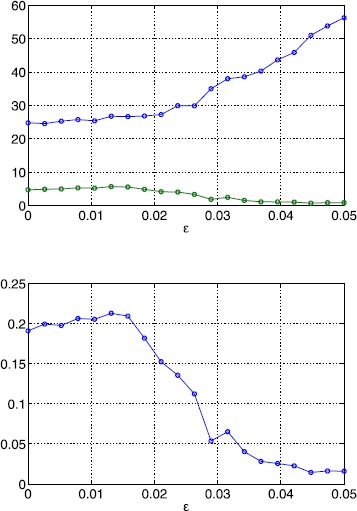

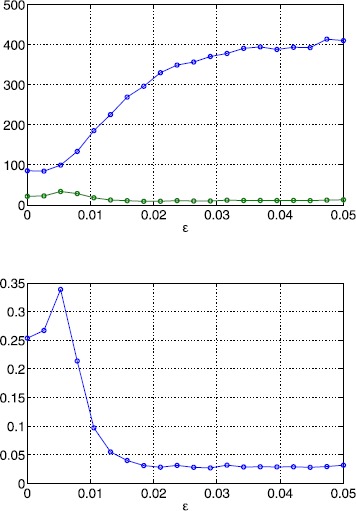

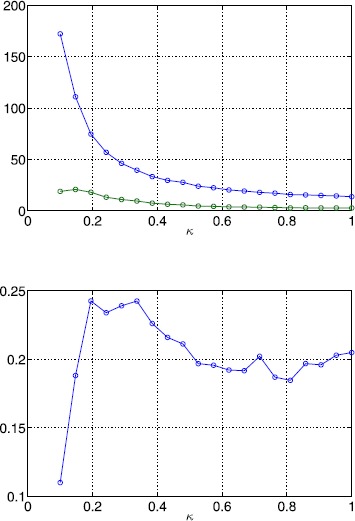

In Fig. 12 we show the number of solutions of (32)–(33) as well as the number and fraction of stable solutions as a function of ϵ. While the number of solutions exhibits an increasing trend, the number of stable solutions decreases, leading to an overall decrease in the fraction of stable solutions. When lowering the correlation length five times (Fig. 13), we observe a similar behaviour. Note, however, that the number of solutions has increased significantly. When we fix ϵ and vary κ the number of solutions decays quickly as shown in Fig. 14. At the same time, the number of stable solutions remains almost constant, resulting in a strong increase of the fraction of stable solutions. Overall, we find that varying the amplitude of the threshold heterogeneity by changing ϵ more strongly affects solution numbers when κ is small, and that the value of κ has a significant effect on how many fixed points exist (and a lesser effect on the number of those which are stable).

Fig. 12.

Bump solutions as a function of ϵ for a Gaussian threshold distribution. Top: average number of total solutions (blue) and stable solutions (green) of (32)–(33) for a Gaussian threshold distribution. Bottom: fraction of solutions which are stable. Parameter values are , , , , and

Fig. 13.

Bump solutions as a function of ϵ for a Gaussian threshold distribution. Top: average number of total solutions (blue) and stable solutions (green) of (32)–(33) for a Gaussian threshold distribution. Bottom: fraction of solutions which are stable. Parameter values are , , , , and

Fig. 14.

Bump solutions as a function of κ for a Gaussian threshold distribution Top: average number of total solutions (blue) and stable solutions (green) of (32)–(33) for a Gaussian threshold distribution. Bottom: fraction of solutions which are stable. Parameter values are , , , , and

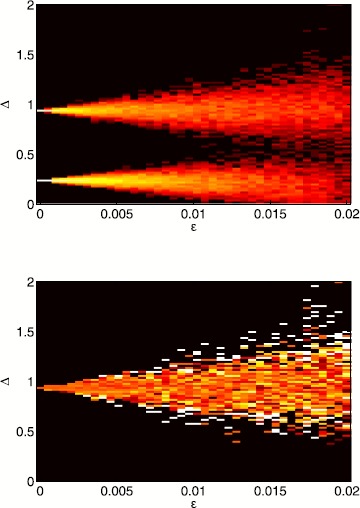

A more detailed view on bump solutions with a Gaussian threshold is presented in Fig. 15, where we show the distribution of Δ values as a function of ϵ and the proportion which are stable. For the probability distribution consists of two delta functions at the stable and unstable bump, respectively. As ϵ increases two branches of solutions emerge from the solutions in the homogeneous case, which widen for larger values of ϵ. Note that as in the homogeneous case, only large-width bumps are stable.

Fig. 15.

Probability distributions of bump widths for a Gaussian threshold distribution. Top: log of the probability density of Δ values for a Gaussian threshold distribution. (White is high, black low.) Bottom: fraction of solutions which are stable. (, .) Parameter values are , , , , and

When bumps only exist below a critical value of , and a branch of stable bumps coalesces with a branch of unstable bumps at this critical value when is varied. Figure 16 shows results for a Gaussian threshold when we change for . We again observe two solution branches that only exist below a critical value of . Each solution branch is smeared out compared to the homogeneous limit, indicating a probability distribution that has a finite and non-zero width.

Fig. 16.

Probability distributions of bump widths for a Gaussian threshold distribution. Top: probability density of Δ values for a Gaussian threshold distribution. (White is high, black low.) The deterministic solution is superimposed in blue. Bottom: fraction of solutions which are stable. (, .) Parameter values are , , , , and

Using the shifted exponential distribution for the threshold we obtain the results plotted in Fig. 17. We again observe two solution branches that emerge from the solutions in the homogeneous case as ϵ increases, with the stable solutions confined to the upper branch. In contrast to the Gaussian case in Fig. 15 the two solution branches do not grow symmetrically around the values of the homogeneous case. This is a manifestation of the highly skewed character of the exponential distribution compared to the symmetric Gaussian distribution. The probability distribution of the widths also exhibits much more structure compared to the Gaussian case.

Fig. 17.

Probability distributions of bump widths for a non-Gaussian threshold distribution. Logarithm of the probability of the bump width Δ when the local probability distribution is given by a shifted exponential and the covariance function is Gaussian: all bumps (top), stable bumps (middle), unstable bumps (bottom). Parameter values are , , , , , and

We know that for a Heaviside firing rate, Mexican-hat connectivity, and a constant firing threshold, multibump solutions cannot exist [39]. However, breaking the translational invariance by using a heterogeneous threshold does allow such solutions to exist. We show an example of this behaviour in Fig. 18, where the norms of stable steady states of (3) are shown as a function of . Each point corresponds to a different realisation of and a different initial condition. We clearly see different “bands”, each identified with an integer number of bumps in a solution, and the norm of these solutions increases as the number of bumps increases, in the same way as seen for systems with a smooth firing-rate function and homogeneous threshold [40–42], or with an oscillatory coupling function [39].

Fig. 18.

Multibumps. Top: norm of stable steady states of (3) for a Gaussian threshold distribution, with many different initial conditions, different realisations of , and various . Solutions indicated by blue stars are shown in the bottom panel. Bottom: typical stable steady states solutions of (3) with 1, 2 and 3 bumps, for . Their norms increase as the number of bumps increases (blue dots in the top panel). These three solutions correspond to three different realisations of . Parameter values are , , , , and

Conclusion

In this paper we have explored the role of threshold noise on travelling fronts and bumps in a simple neural field model with a Heaviside nonlinearity. For a frozen form of disorder and a given realisation of a spatial threshold the standard rules of calculus apply and we have exploited the interface approach of Amari to obtain exact results about solution properties for both existence and stability. The theory that we have developed is not restricted to any special choice of distribution for describing the threshold noise, apart from that sample trajectories be differentiable in the mean square sense. It is worth noting that the stochastic threshold model presented here is formally equivalent to already published stochastic nonlinear integro-differential equations with constant threshold [6–14] when we employ the transformation . However, our approach permits the analysis of strong noise (see e.g. [20]) and hence will allow us to move beyond perturbative expansions.

Theoretical predictions have been shown to be in excellent agreement with numerical simulations of both Gaussian and non-Gaussian threshold models. As such we have a viable mathematically tractable model of a noisy neural tissue that would be of interest to explore in a variety of more neurobiologically relevant scenarios. For example, temporal correlations in excitability of neural tissue would be expected to strongly affect wave propagation and could be easily modelled with an appropriate choice for . To investigate the consequences for wave speed all that would be required would be a minimal extension of the formula for interface dynamics (13) with the replacement of the numerator according to . Moreover, the inclusion of a linear adaptation current, as is common for describing spike frequency adaptation, would allow the study of travelling pulses as well as fronts, building on the deterministic approach in [43] and more recently in [44] for understanding cortical waves in epilepsy. The work here can also be extended to planar systems, allowing the study of spiral waves [45] and an investigation of how noise levels could be used to control the scale and size of a spiral, as suggested in [46]. These are topics of current investigation and will be reported upon elsewhere.

Acknowledgements

SC was supported by the European Commission through the FP7 Marie Curie Initial Training Network 289146, NETT: Neural Engineering Transformative Technologies. We would like to thank Daniele Avitabile for useful feedback provided on a first draft of this manuscript.

Appendix A: Non-Gaussian Quenched Disorder

In the following, we will describe the iterative approach that we have used to generate non-Gaussian quenched disorder. The algorithm is based on [30]. We will use the more general form of the Karhunen–Loève decomposition in (7). To translate our findings for the to the and in (9) we note that this can be achieved by relabelling, i.e.

| 35 |

In the following it is convenient to introduce the notation , which denotes the mth expansion coefficient at the kth iteration for the lth realisation of the quenched disorder. To initialise the scheme, we generate M sets of uncorrelated , , drawn from the prescribed non-Gaussian distribution shifted to mean zero and scaled to unit variance. A convenient way for doing this is to use inverse transform sampling based on the cumulative distribution function of . Strictly speaking the could be generated from any mean zero and unit variance distribution, but starting with the prescribed distribution might speed up convergence. For numerical purposes, the sum in (7) will only contain N terms. One method to determine N is to impose the condition

| 36 |

i.e. that the maximal norm of the difference between the given covariance function and its approximation is smaller than some . Once N is fixed, each iteration step proceeds as follows:

- Generate M samples of the quenched disorder

where denotes the lth realisation of the quenched disorder at the kth iteration. Note that because the are uncorrelated with unit variance, the numerical error in determining the covariance function only depends on N (to satisfy (8)) and on M (for high-fidelity averaging).37 - Numerically determine the cumulative distribution function

where represents the indicator function, i.e. if A is true, otherwise . The cumulative distribution function generally does not agree with the prescribed function . The next two steps alleviate this problem.38 - Map the simulated values to follow :

39 - Compute new values as

The mean of is zero by construction, but the variance is unequal from one due to (39). Is therefore necessary to scale the to have unit variance.40 -

While the now give rise to the prescribed probability distribution , they are correlated due to the mapping in (39). To decorrelate them, we use an iterative product-moment orthogonalisation technique.

- Arrange the values of into a matrix A, i.e. the mth column contains the realisations of . The goal is to re-arrange each column such that correlations between columns are minimised. This is equivalent to having minimal correlations between draws of the .

- Compute the covariance matrix of A.

- Because is positive definite, we can employ a Cholesky decomposition, i.e. . Therefore, the new matrix is uncorrelated.

- Reorder the entries in A to follow the rankings in H.

Repeating the steps (a)–(d) will decrease the correlations of the as required.

Appendix B: Local Probability Distributions

We here provide details of the three zero-mean local probability distributions used in this study. We consider a Gaussian distribution

| 41 |

a highly skewed shifted exponential distribution

| 42 |

and a piecewise linear distribution with compact support

| 43 |

We refer to the last distribution as bump distribution. By demanding that it is normalised, we find . The variances of the exponential and bump distribution are given, respectively, by

| 44 |

Footnotes

Competing Interests

The authors declare that they have no competing interests.

Authors’ Contributions

All authors contributed equally to the paper.

Contributor Information

Rüdiger Thul, Email: ruediger.thul@nottingham.ac.uk.

Stephen Coombes, Email: stephen.coombes@nottingham.ac.uk.

Carlo R. Laing, Email: c.r.laing@massey.ac.nz

References

- 1.Wilson HR, Cowan JD. Excitatory and inhibitory interactions in localized populations of model neurons. Biophys J. 1972;12:1–24. doi: 10.1016/S0006-3495(72)86068-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Bressloff PC. Spatiotemporal dynamics of continuum neural fields. J Phys A. 2012;45 doi: 10.1088/1751-8113/45/3/033001. [DOI] [Google Scholar]

- 3.Coombes S, beim Graben P, Potthast R, Wright JJ, editors. Neural fields: theory and applications. Berlin: Springer; 2014. [Google Scholar]

- 4.Webber MA, Bressloff PC. The effects of noise on binocular rivalry waves: a stochastic neural field model. J Stat Mech. 2013;3 [Google Scholar]

- 5.Rankin J, Meso AI, Masson GS, Faugeras O, Kornprobst P. Bifurcation study of a neural field competition model with an application to perceptual switching in motion integration. J Comput Neurosci. 2014;36:193–213. doi: 10.1007/s10827-013-0465-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Hutt A, Longtin A, Schimansky-Geier L. Additive noise-induced Turing transitions in spatial systems with application to neural fields and the Swift–Hohenberg equation. Physica D. 2008;237:755–773. doi: 10.1016/j.physd.2007.10.013. [DOI] [Google Scholar]

- 7.Touboul J, Hermann G, Faugeras O. Noise-induced behaviors in neural mean field dynamics. SIAM J Appl Dyn Syst. 2012;11:49–81. doi: 10.1137/110832392. [DOI] [Google Scholar]

- 8.Touboul J. Mean-field equations for stochastic firing-rate neural fields with delays: derivation and noise-induced transitions. Physica D. 2012;241:1223–1244. doi: 10.1016/j.physd.2012.03.010. [DOI] [Google Scholar]

- 9.Bressloff PC, Webber MA. Front propagation in stochastic neural fields. SIAM J Appl Dyn Syst. 2012;11:708–740. doi: 10.1137/110851031. [DOI] [Google Scholar]

- 10.Bressloff PC. From invasion to extinction in heterogeneous neural fields. J Math Neurosci. 2012;2 doi: 10.1186/2190-8567-2-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Kilpatrick ZP, Ermentrout B. Wandering bumps in stochastic neural fields. SIAM J Appl Dyn Syst. 2013;12:61–94. doi: 10.1137/120877106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Kilpatrick ZP, Faye G. Pulse bifurcations in stochastic neural field. SIAM J Appl Dyn Syst. 2014;13:830–860. doi: 10.1137/140951369. [DOI] [Google Scholar]

- 13.Kuehn C, Riedler MG. Large deviations for nonlocal stochastic neural fields. J Math Neurosci. 2014;4 doi: 10.1186/2190-8567-4-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Poll DB, Kilpatrick ZP. Stochastic motion of bumps in planar neural fields. SIAM J Appl Math. 2015;75:1553–1577. doi: 10.1137/140999505. [DOI] [Google Scholar]

- 15.Faugeras O, Inglis J. Stochastic neural field equations: a rigorous footing. J Math Biol. 2015;71:259–300. doi: 10.1007/s00285-014-0807-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Bressloff PC. Waves in neural media: from single cells to neural fields. New York: Springer; 2014. [Google Scholar]

- 17.Inglis J, MacLaurin J. A general framework for stochastic traveling waves and patterns, with application to neural field equations. SIAM J Appl Dyn Syst. 2016;15:195–234. doi: 10.1137/15M102856X. [DOI] [Google Scholar]

- 18.Krüger J, Stannat W. Front propagation in stochastic neural fields: a rigorous mathematical framework. SIAM J Appl Dyn Syst. 2014;13:1293–1310. doi: 10.1137/13095094X. [DOI] [Google Scholar]

- 19.Coombes S, Thul R, Laudanski J, Palmer AR, Sumner CJ. Neuronal spike-train responses in the presence of threshold noise. Front Life Sci. 2011;5:91–105. doi: 10.1080/21553769.2011.556016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Braun W, Matthews PC, Thul R. First-passage times in integrate-and-fire neurons with stochastic thresholds. Phys Rev E. 2015;91 doi: 10.1103/PhysRevE.91.052701. [DOI] [PubMed] [Google Scholar]

- 21.Amari S. Dynamics of pattern formation in lateral-inhibition type neural fields. Biol Cybern. 1977;27:77–87. doi: 10.1007/BF00337259. [DOI] [PubMed] [Google Scholar]

- 22.Ermentrout GB, McLeod JB. Existence and uniqueness of travelling waves for a neural network. Proc R Soc Edinb. 1993;123A:461–478. doi: 10.1017/S030821050002583X. [DOI] [Google Scholar]

- 23.Coombes S. Waves, bumps and patterns in neural field theories. Biol Cybern. 2005;93:91–108. doi: 10.1007/s00422-005-0574-y. [DOI] [PubMed] [Google Scholar]

- 24.Laing CR. Waves in spatially-disordered neural fields: a case study in uncertainty quantification. In: Gomez D, Geris L, editors. Uncertainty in biology: a computational modeling approach. Berlin: Springer; 2014. [Google Scholar]

- 25.Shardlow T. Numerical simulation of stochastic PDEs for excitable media. J Comput Appl Math. 2005;175:429–446. doi: 10.1016/j.cam.2004.06.020. [DOI] [Google Scholar]

- 26.Le Maître OP, Knio OM. Spectral methods for uncertainty quantification: with applications to computational fluid dynamics. Dordrecht: Springer; 2010. [Google Scholar]

- 27.Papoulis A, Pillai SU. Probability, random variables and stochastic processes. 4. Boston: McGraw-Hill; 2002. [Google Scholar]

- 28.Dietrich CR, Newsam GN. Fast and exact simulation of stationary Gaussian processes through circulant embedding of the covariance matrix. SIAM J Sci Comput. 1997;18:1088–1107. doi: 10.1137/S1064827592240555. [DOI] [Google Scholar]

- 29.Shinozuka M, Deodatis G. Simulation of stochastic processes by spectral representation. Appl Mech Rev. 1991;44:191–204. doi: 10.1115/1.3119501. [DOI] [Google Scholar]

- 30.Phoon KK, Huang HW, Quek ST. Simulation of strongly non-Gaussian processes using Karhunen–Loeve expansion. Probab Eng Mech. 2005;20:188–198. doi: 10.1016/j.probengmech.2005.05.007. [DOI] [Google Scholar]

- 31.Yamazaki F, Shinozuka M. Digital generation of non-Gaussian stochastic fields. J Eng Mech. 1988;114:1183–1197. doi: 10.1061/(ASCE)0733-9399(1988)114:7(1183). [DOI] [Google Scholar]

- 32.Li LB, Phoon KK, Quek ST. Comparison between Karhunen–Loeve expansion and translation-based simulation of non-Gaussian processes. Comput Struct. 2007;85:264–276. doi: 10.1016/j.compstruc.2006.10.010. [DOI] [Google Scholar]

- 33.Lord GJ, Thümmler V. Computing stochastic traveling waves. SIAM J Sci Comput. 2012;34:B24–B43. doi: 10.1137/100784734. [DOI] [Google Scholar]

- 34.Coombes S, Schmidt H, Bojak I. Interface dynamics in planar neural field models. J Math Neurosci. 2012;2 doi: 10.1186/2190-8567-2-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Bressloff PC, Coombes S. Neural ‘bubble’ dynamics revisited. Cogn Comput. 2013;5:281–294. doi: 10.1007/s12559-013-9214-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Coombes S, Laing CR, Schmidt H, Svanstedt N, Wyller JA. Waves in random neural media. Discrete Contin Dyn Syst, Ser A. 2012;32:2951–2970. doi: 10.3934/dcds.2012.32.2951. [DOI] [Google Scholar]

- 37.Qi Y, Breakspear M, Gong P. Subdiffusive dynamics of bump attractors: mechanisms and functional roles. Neural Comput. 2015;27:255–280. doi: 10.1162/NECO_a_00698. [DOI] [PubMed] [Google Scholar]

- 38.Coombes S, Laing CR. Pulsating fronts in periodically modulated neural field models. Phys Rev E. 2011;83 doi: 10.1103/PhysRevE.83.011912. [DOI] [PubMed] [Google Scholar]

- 39.Laing CR, Troy WC, Gutkin B, Ermentrout GB. Multiple bumps in a neuronal model of working memory. SIAM J Appl Math. 2002;63:62–97. doi: 10.1137/S0036139901389495. [DOI] [Google Scholar]

- 40.Coombes S, Lord GJ, Owen MR. Waves and bumps in neuronal networks with axo-dendritic synaptic interactions. Phys D, Nonlinear Phenom. 2003;178:219–241. doi: 10.1016/S0167-2789(03)00002-2. [DOI] [Google Scholar]

- 41.Rankin J, Avitabile D, Baladron J, Faye G, Lloyd DJ. Continuation of localized coherent structures in nonlocal neural field equations. SIAM J Sci Comput. 2014;36:B70–B93. doi: 10.1137/130918721. [DOI] [Google Scholar]

- 42.Laing CR. PDE methods for two-dimensional neural fields. In: Coombes S, beim Graben P, Potthast R, Wright JJ, editors. Neural fields: theory and applications. Berlin: Springer; 2014. [Google Scholar]

- 43.Pinto DJ, Ermentrout GB. Spatially structured activity in synaptically coupled neuronal networks: I. Travelling fronts and pulses. SIAM J Appl Math. 2001;62:206–225. doi: 10.1137/S0036139900346453. [DOI] [Google Scholar]

- 44.González-Ramírez LR, Ahmed OJ, Cash SS, Wayne CE, Kramer MA. A biologically constrained, mathematical model of cortical wave propagation preceding seizure termination. PLoS Comput Biol. 2015;11 doi: 10.1371/journal.pcbi.1004065. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Huang X, Troy WC, Yang Q, Ma H, Laing CR, Schiff SJ, Wu J. Spiral waves in disinhibited mammalian neocortex. J Neurosci. 2004;24:9897–9902. doi: 10.1523/JNEUROSCI.2705-04.2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Jung P, Mayer-Kress G. Spatiotemporal stochastic resonance in excitable media. Phys Rev Lett. 1995;74:2130–2133. doi: 10.1103/PhysRevLett.74.2130. [DOI] [PubMed] [Google Scholar]