Abstract

Objective. To assess first-year (P1) pharmacy students’ studying behaviors and perceptions after implementation of a new computerized “composite examination” (CE) testing procedure.

Methods. Student surveys were conducted to assess studying behavior and perceptions about the CE before and after its implementation.

Results. Surveys were completed by 149 P1 students (92% response rate). Significant changes between survey results before and after the CE included an increase in students’ concerns about the limited number of questions per course on each examination and decreased concerns about the time allotted and the inability to write on the CEs. Significant changes in study habits included a decrease in cramming (studying shortly before the test) and an increase in priority studying (spending more time on one course than another).

Conclusion. The CE positively changed assessment practice at the college. It helped overcome logistic challenges in computerized testing and drove positive changes in study habits.

Keywords: assessment, testing, study habits

INTRODUCTION

Cumulative tests such as progress examinations that integrate material across courses are used primarily in medical education, but are also present in pharmacy education.1 Typically, such examinations are administered at infrequent intervals throughout programs and are usually given at the end of a semester or academic year. While some evidence suggests that students perform better when they are tested more often, results have been mixed.2 Additionally, while it may seem logical that studying consistently would have a better effect on academic performance than “cramming” shortly before an examination, some studies suggest that “crammers” may actually perform better on examinations.3,4

However, cramming does not ensure long-term retention.5 Frequent testing in itself has a positive effect on retention.6 Given these relationships between testing process, study habits, and retention, testing content of required courses in an examination that occurs frequently may compel students to study consistently rather than cram, which theoretically should promote long-term retention.

This theoretical framework and the desire to enhance student learning and retention provide the rationale for the development and implementation of a “composite examination” (CE) process by the University of Tennessee Health Science Center’s (UTHSC) College of Pharmacy. These CEs are single examinations administered approximately every two weeks and cover most material presented during that time period.

A literature search for peer-reviewed work in four databases (Academic Search Premier, ERIC, PsycINFO, and MEDLINE), using various combinations of the terms “composite examination,” “composite test,” “integrated testing,” “integrated examination,” “integrated content,” “multiple domain,” “combined subjects,” “combined topics,” “compiled subjects,” “compiled courses,” and “compiled topics,” did not identify any studies about the use of this type of composite examination. Although the UTHSC Colleges of Medicine and Dentistry have used the CE format for several years, the CE testing process implemented at our institution has not been described previously.

The objective of our research was to assess first-year (P1) student pharmacists’ perceptions of the CE and to determine whether or not this testing format influenced their study behaviors. We hypothesized that perceptions about the CE would change as students gained experience with the format and that behaviors would migrate toward less cramming and more consistent studying.

METHODS

In 2010, faculty members at the college voted to use computerized testing in required courses. Despite the availability of the 77-seat computer laboratory at the college, implementation of computerized testing was associated with numerous logistical problems. These problems included scheduling limitations that precluded course directors from accessing the computer laboratory as needed, the inability to accommodate a large class in one testing session, and logistical problems coordinating scheduling of other campus testing facilities as a result of six other colleges on campus competing for testing resources.

A task force of faculty members, staff, and students concluded that these logistic factors precluded the administration of computerized examinations using the conventional approach of multiple examinations in each course throughout the semester. For example, once implemented across the first five semesters, computerized testing would be needed for 16 required courses in the fall and 10 required courses in the spring semester. Thus, the task force recommended that the college move to a CE and that implementation begin with the class entering in fall 2011 and continue as that group progressed.

During orientation, P1s are trained on ExamSoft software (ExamSoft Worldwide, Inc., Dallas, TX), including a mock examination, and are scheduled for additional practice sessions over the first two weeks of the semester as needed. Composite examinations for the first professional year are generally given on Tuesday mornings, but because of laboratory availability, some may be scheduled in the afternoon. Students take one 3-hour examination approximately every two weeks for a total of seven CEs across the semester.

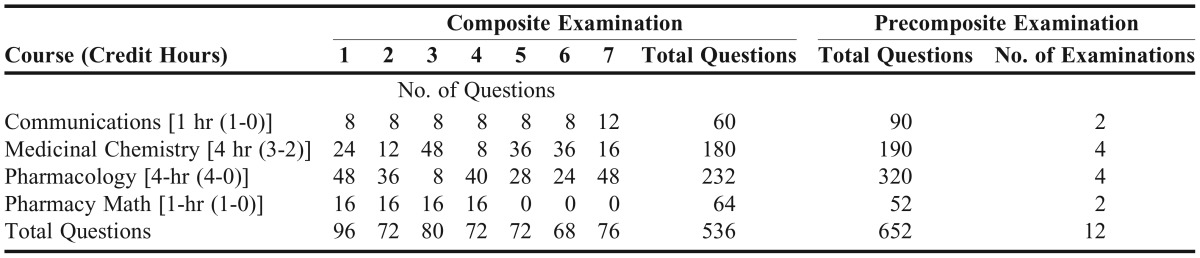

The examinations consist of multiple-choice, fill-in-the-blank, and some case-based questions, as well as questions with embedded images (eg, chemical structures). Courses are allotted 3-4 questions per contact hour of lecture (ie, 50 minutes); thus, courses with the most contact time have the most questions on each CE. Table 1 shows the number of questions each course had on the seven examinations and highlights the difference in examination structure before and after the CE was implemented.
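The allotment rule of 3-4 questions per contact hour can be illustrated with a short calculation. The sketch below is illustrative only: the course labels and contact hours are hypothetical (they are not the values in Table 1), and the function name `question_range` is ours.

```python
def question_range(contact_hours, per_hour=(3, 4)):
    """Return the (min, max) number of questions for one course on one CE,
    assuming 3-4 questions per 50-minute contact hour of lecture."""
    low, high = per_hour
    return contact_hours * low, contact_hours * high


# Hypothetical contact hours accumulated in one two-week testing window.
hypothetical_hours = {"Course A": 8, "Course B": 4, "Course C": 2}

for course, hours in hypothetical_hours.items():
    low, high = question_range(hours)
    print(f"{course}: {hours} contact hours -> {low}-{high} questions")
```

Under this rule, a course's share of each CE scales directly with its lecture time, which is why courses with few contact hours contribute only a handful of questions to any one examination.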

Table 1.

Composite Examination Structure and Previous Examination Structure

Questions for each course are seen in sequence together on the examination and are not randomized across the CE. A student does not know which faculty member wrote a specific question but may be able to discern this by the topic. The day before the examination, students are told the number of questions per course, the order of course appearance on the examination, and the question numbers that correspond to each course. This information enables students to test on each course in the order that they prefer.

Students do not receive an overall score or grade for each CE; instead, a score is provided for each course independently. This approach enables the course to maintain its identity on the student’s transcript and in the academic catalog. Moreover, this practice ensures that students ultimately demonstrate proficiency within each course. At the discretion of course directors, individual courses may include other assessments (eg, quizzes, projects) that are considered in the final course grade.

In fall 2013, survey data were collected from P1s at the beginning and end of their first semester. During initial orientation to the testing software (ExamSoft), the presurvey was electronically administered in a computer laboratory using the same software. The postsurvey also was administered with ExamSoft in a computer laboratory before the final test of the semester. The postsurvey included an opportunity to submit comments about the CE. The surveys were designed to assess study behavior, concerns about the CE, and perceptions about the effect CEs may have on academic performance and knowledge retention. The survey was piloted the previous fall semester (2012) with the P1 class and revised for greater clarity according to student feedback.

A matched-pairs design was used to examine differences between the presurvey and postsurvey results. The Wilcoxon signed-rank test was used for ordinal data, and McNemar’s chi-square test was used for nominal data. An alpha level of 0.05 was set as the criterion for significance. Effect sizes were calculated for significant results using the correlation coefficient r for the Wilcoxon tests and odds ratios for the McNemar tests. Qualitative data were analyzed independently by two of the authors and coded into categories to identify recurrent themes. This study was approved by the university’s institutional review board, and analyses were conducted using SPSS, v20.0 (SPSS, Inc., Chicago, IL).
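For readers unfamiliar with these effect sizes, the following is a minimal sketch of how they are commonly computed. It is not the SPSS procedure used in the study. It assumes the conventions r = |Z| / sqrt(N), with N taken as the number of matched pairs, and an odds ratio equal to the ratio of discordant pairs, and it uses the uncorrected McNemar chi-square. All input values are hypothetical.

```python
import math


def wilcoxon_effect_size_r(z, n_pairs):
    """Effect size r = |Z| / sqrt(N) for a Wilcoxon signed-rank test.
    Assumption: N is the number of matched pairs."""
    return abs(z) / math.sqrt(n_pairs)


def mcnemar(b, c):
    """McNemar chi-square (no continuity correction), p value, and odds ratio.

    b = pairs that changed from 'not selected' (pre) to 'selected' (post)
    c = pairs that changed from 'selected' (pre) to 'not selected' (post)
    """
    chi2 = (b - c) ** 2 / (b + c)
    # Upper tail of a chi-square distribution with 1 df: erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    odds_ratio = b / c
    return chi2, p_value, odds_ratio


# Hypothetical inputs, not taken from the study data.
r = wilcoxon_effect_size_r(z=-2.5, n_pairs=100)
chi2, p_value, odds_ratio = mcnemar(b=10, c=30)
print(f"r = {r:.2f}")
print(f"chi2 = {chi2:.1f}, p = {p_value:.4f}, OR = {odds_ratio:.2f}")
```

Only the discordant pairs enter the McNemar statistic and its odds ratio; students who gave the same answer on both surveys do not affect either quantity.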

RESULTS

One hundred forty-nine P1s completed both surveys, resulting in a 92% response rate. Approximately 32% (n=47) submitted comments on the postsurvey. The majority (60%, n=90) were female, and ages ranged from 20 to 47 years, with a mean of 23 years. English was the first language for 90% (n=134), and 75% (n=112) had completed at least a bachelor’s degree before starting pharmacy school.

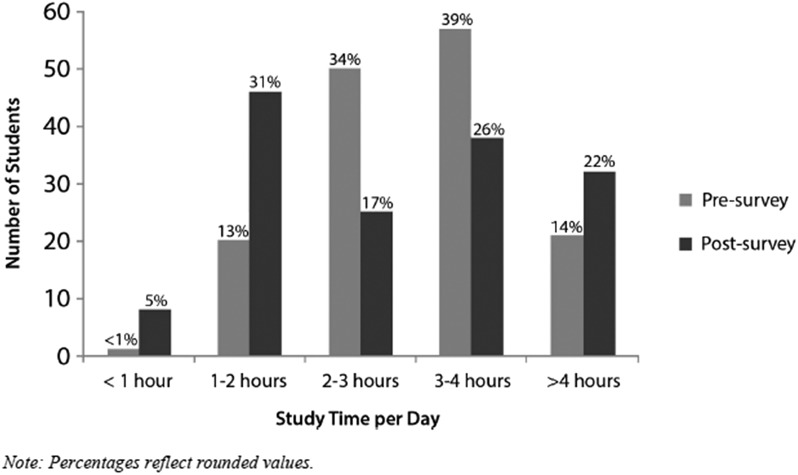

Participants were asked in the presurvey how much time they anticipated studying each day, excluding weekends, and were asked in the postsurvey how much time they actually studied during the semester. Weekend study time was excluded from the questionnaire to focus on assessing how consistently students studied throughout the week. The distribution of responses changed significantly from presurvey to postsurvey (p=0.02, r=0.19). The majority (72%) anticipated studying 2-4 hours per day, while the actual reported study time varied more widely, with 31% studying 1-2 hours, 25% studying 3-4 hours, and 21% studying more than 4 hours (Figure 1).
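As a rough consistency check, the reported effect size can be related to the reported p value. The sketch below assumes a normal approximation for the Wilcoxon statistic and the convention r = Z / sqrt(N), with N equal to the 149 matched pairs. Both are our assumptions, and the p value is used as rounded in the text.

```python
import math
from statistics import NormalDist

n_pairs = 149         # students who completed both surveys
p_two_sided = 0.02    # reported p value (rounded)

# Z statistic implied by a two-sided p value under the normal approximation.
z = NormalDist().inv_cdf(1 - p_two_sided / 2)

# Effect size under the assumed convention r = Z / sqrt(N).
r = z / math.sqrt(n_pairs)
print(f"z = {z:.2f}, r = {r:.2f}")
```

Under these assumptions the implied effect size rounds to 0.19, in line with the reported value, which by common benchmarks would be read as a small effect.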

Figure 1.

Student Anticipated vs Actual Amount of Time Spent Studying.

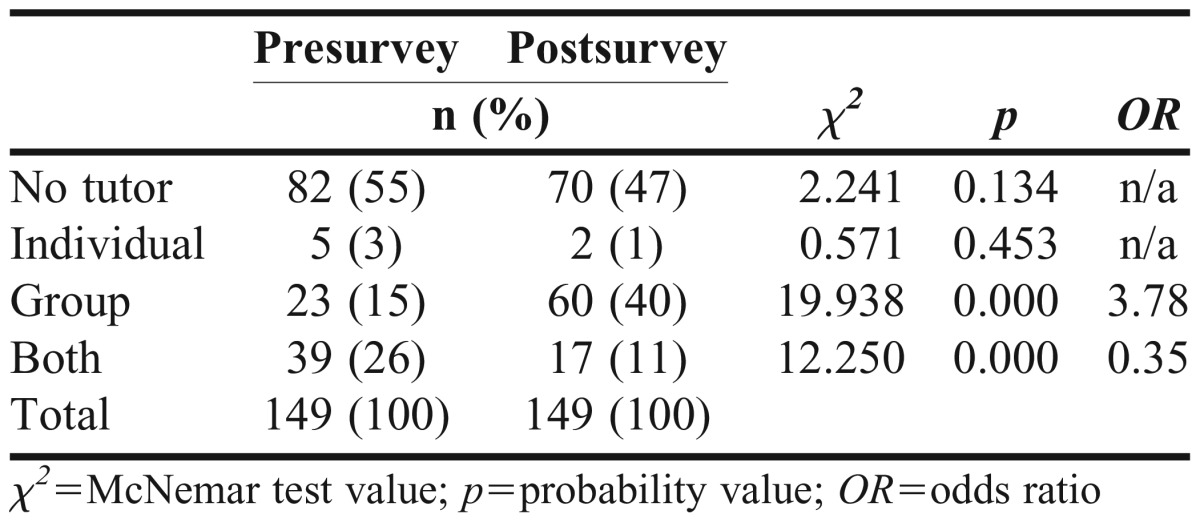

The surveys asked about expectations and actual use of an individual tutor, group tutor, both, or no tutor at all. Actual use of tutors differed significantly from what students anticipated: use of group tutors was anticipated by 15% of students but reported by 40% (p<0.001), whereas use of both individual and group tutors was anticipated by 26% of students but reported by only 11% (p<0.001). Overall, the largest percentage of students expected not to use a tutor at all (55%), and the smallest percentage expected to use an individual tutor only (5%). Actual reported use at the end of the semester did not change significantly in either of these categories, with 47% reporting that they did not use a tutor and 1% reporting the sole use of an individual tutor (Table 2).

Table 2.

Student Anticipated vs Actual Use of Tutors

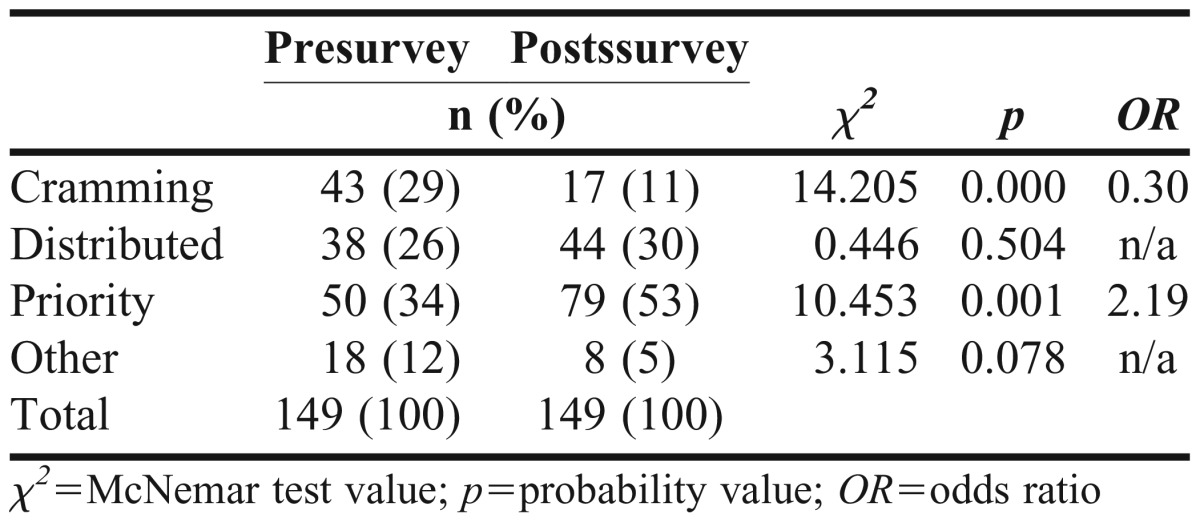

Students were asked to select the one answer that best described their study habits from four choices: cramming (studying only a day or two before the examination), distributed (studying a little each day), priority studying (spending more time on one course than another), or other. In the presurvey, 29% anticipated cramming, 26% anticipated studying some each day, and 34% anticipated they would priority study. In the postsurvey, fewer students reported cramming (11%), and more reported priority studying (53%). These differences were significant (p<0.001 and p=0.001, respectively; Table 3).

Table 3.

Student Description of Study Habits Before and After the First Semester

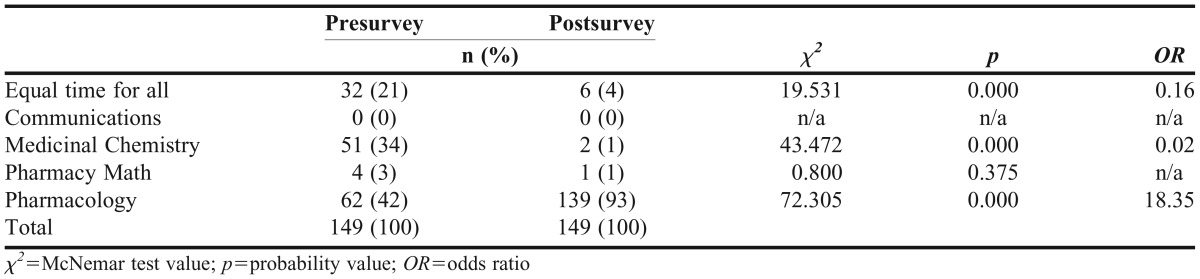

The courses for which students anticipated studying the most differed from those for which they reported actually studying the most. Significant differences were found in three areas (Table 4). In the presurvey, 21% expected to spend equal time on all courses, while only 4% reported actually doing so (p<0.001). Studying for Medicinal Chemistry was expected to be the top priority for 34%, but only 1% reported it as their actual top priority (p<0.001). Finally, 42% expected to study the most for Pharmacology, while 93% reported actually studying the most for that course (p<0.001).

Table 4.

Student Anticipated vs Actual Study Effort by Course

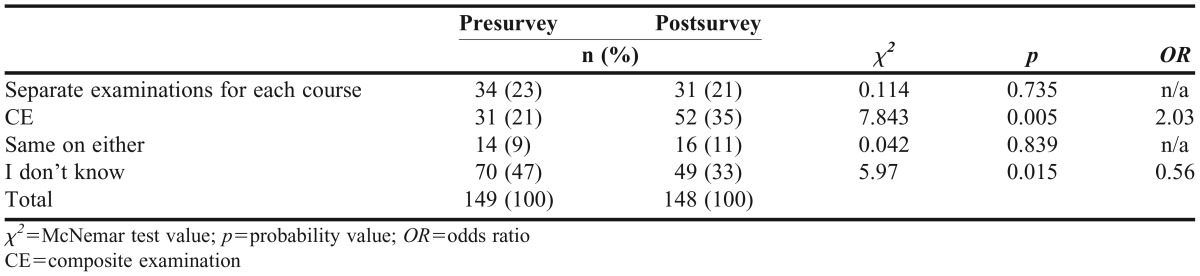

Participants were asked about their perceptions of the effect of the testing format (CE or separate examinations for each course) on their examination performance. The percentage who thought they would perform better on the CE format increased significantly, from 21% presurvey to 35% postsurvey (p=0.005). The percentage who did not know decreased significantly, from 47% to 33% (p=0.015, Table 5).

Table 5.

Student Perceptions Regarding Examination Format Leading to Highest Performance

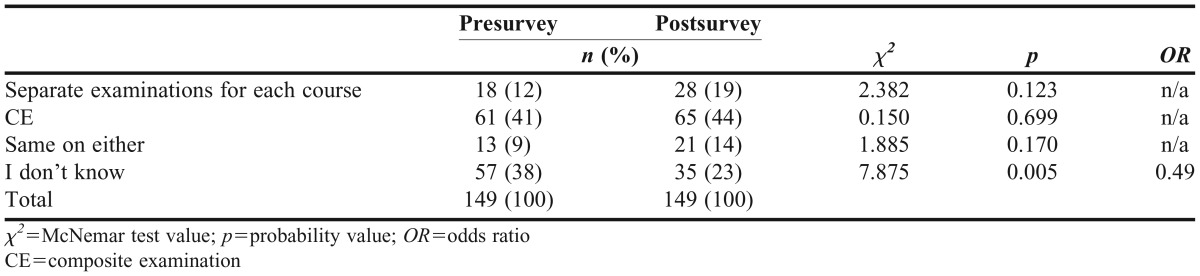

When asked which testing format led to greater knowledge retention, the largest number of students on both surveys (41% pre, 44% post) responded that it was the CE format. The percentage responding, “I don’t know,” decreased significantly, from 38% in the presurvey to 23% postsurvey (p=0.005, Table 6).

Table 6.

Student Perceptions Regarding Examination Format Leading to Greatest Retention

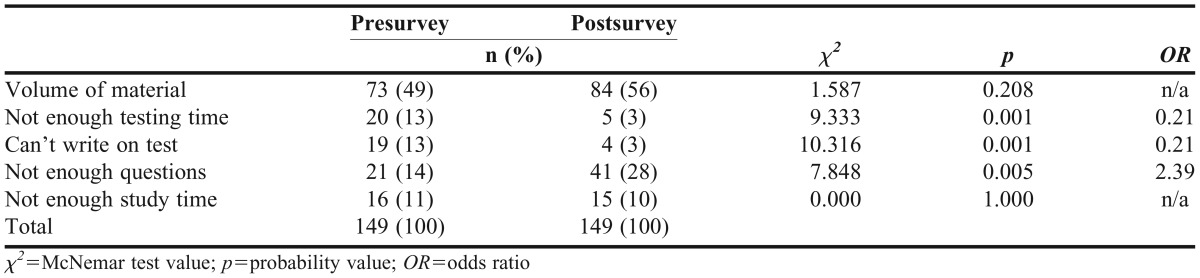

Major concerns noted in the presurvey were the volume of material (49%), limited number of questions per course on each examination (14%), time allotted for examination (13%), and inability to write on the examination (13%). No significant changes were noted in students’ concerns about the volume of material in the postsurvey. Concerns about the limited number of questions per course on each examination significantly increased to 28% in the postsurvey (p=0.005). Concerns about time allotted and the inability to write on the examination both decreased to 3%, resulting in significant differences (p=0.001, Table 7).

Table 7.

Student Top Concerns about Composite Examination Before and After the First Semester

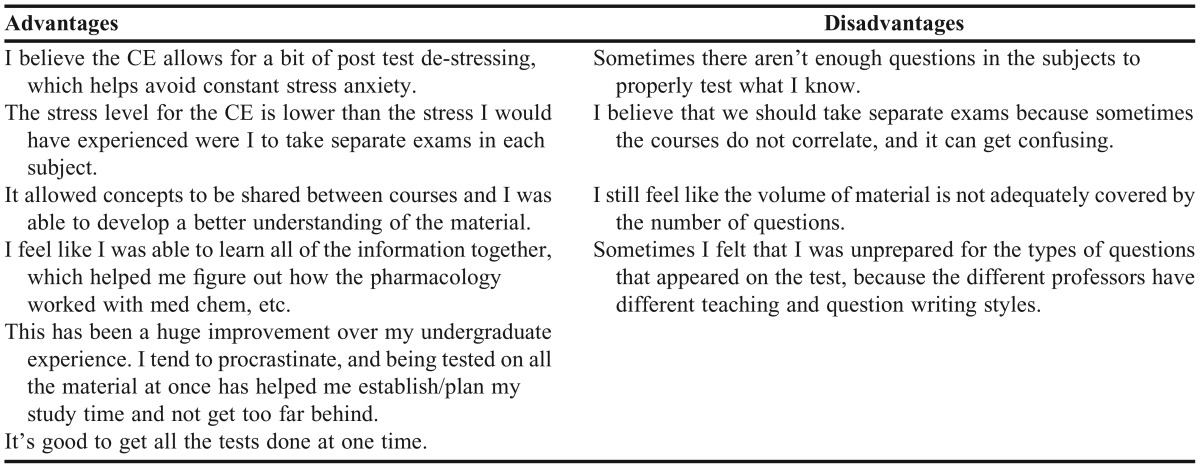

Forty-seven comments were submitted on the postsurvey in response to the final open-ended question asking for general comments about the CE. The data fell into three categories of perspectives toward the examination: positive, negative, and mixed/ambivalent. Overall, feedback was positive, with 18 students explicitly stating that they liked the CE format of testing; five said they either did not like the CE or would prefer to have separate course material tested on separate examinations. Comments included descriptions of advantages and disadvantages as well as suggestions for improving the CE. Some examples of advantages and disadvantages appear in Table 8. Suggestions included increasing the number of items per course and increasing the time allotted to complete the examination. A few students indicated that the testing format decreased their anxiety level.

Table 8.

Illustrative Examples of Student Comments on Perceived Advantages and Disadvantages of Composite Examination

DISCUSSION

The goal of this study was to assess the extent to which study behavior and perceptions of the CE changed for students after implementing a new testing method during their first semester in pharmacy school. The results show significant differences between the presurvey and postsurvey in reported study habits, study priorities by course, use of tutors, and perceptions of and concerns about the CE.

Given that part of the rationale for the use of the CE was to decrease students’ cramming before an examination, it is noteworthy that the percentage of students who reported cramming decreased significantly, from 29% to 11%. The effect size (OR=0.303) reflects a change of substantial magnitude: the odds that students described their study habits as cramming were more than three times lower on the postsurvey than on the presurvey. Also of note was the shift in priority studying, which increased from 34% to 53%. In this case, the odds ratio of 2.19 indicates that the odds of students characterizing their study habits as priority studying were more than twice as high on the postsurvey as on the presurvey. The latter is not surprising, considering that students perceive some courses as more difficult and/or deem them more important than others; thus, students will inevitably focus more effort on such courses. One concern with priority studying is that students may neglect to study sufficiently for other courses; hence, their grades and retention may suffer in those topics.
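The two odds ratios above can be put on a common footing by expressing each as a fold change of at least 1. The following is a minimal sketch using the two reported values; the helper name `fold_change` is ours.

```python
def fold_change(odds_ratio):
    """Return (direction, factor) with factor >= 1.
    An odds ratio below 1 is inverted and labeled 'lower'."""
    if odds_ratio >= 1:
        return "higher", odds_ratio
    return "lower", 1 / odds_ratio


for label, odds_ratio in [("cramming", 0.303), ("priority studying", 2.19)]:
    direction, factor = fold_change(odds_ratio)
    print(f"{label}: odds about {factor:.1f} times {direction} on the postsurvey")
```

Note that an odds ratio describes a change in odds rather than in probability, so a phrase such as "three times less likely" should be read as an approximation.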

Most students who initially planned to allocate equal study time to all courses ended up priority studying. Initially, 34% and 42% of students expected to study the most for Medicinal Chemistry and Pharmacology, respectively. By the end of the semester, 93% made Pharmacology their top priority for study effort. Historically, Pharmacology has been the most challenging course for P1s. Prerequisite chemistry courses may have facilitated their comfort with the content in Medicinal Chemistry. Although the shift toward Pharmacology might be attributable to students’ perception that the course content is more relevant to pharmacy practice, it is more likely that the Pharmacology course was more rigorous, given the number of students who do not progress on time because of this course. Perhaps students eventually came to view Pharmacology as the course most relevant to pharmacy practice and/or most difficult, while many of them initially expected Medicinal Chemistry to have that distinction.

Although there were significant changes in the findings for the use of tutors, those results are somewhat difficult to interpret in relation to the CE. The campus office of student academic support restricted its individual tutoring services to students performing below a certain academic level, so students who initially anticipated using an individual tutor may have found that the service was not available to them because they were not struggling academically, and/or they may have chosen not to spend the money to hire their own individual tutors. However, group tutoring was made available to all students through the campus as well as through student organizations, and actual use of group tutors increased significantly from what was anticipated. Whether or not this phenomenon was related to the CE is unknown.

At the end of the semester, students’ perceptions about the effect of the CE on their academic performance significantly changed in favor of the CE. One possible explanation for why perceptions changed could be that fear of the unknown produced anxiety at the beginning of the semester, but with frequent testing, students grew more familiar with the examination format and established study strategies they felt were effective and manageable. These results, as well as the comments submitted, speak positively to how students viewed the CE.

Finally, the top concerns shifted before and after students’ initial CE experiences. Apprehension that there would not be enough time allotted to complete the examinations and that it would not be possible to write on the examination was apparently alleviated. The volume of material per examination remained the most frequently cited top concern, while the concern that there were not enough questions per course on each examination was noted by a larger percentage of students by the end of the semester. These are important issues for faculty members to consider, especially as they relate to the quality of examination items and assurances that questions assess the most important content areas and student learning outcomes.

Reflection on the development and implementation of the CE brings to light both advantages and challenges of the process. One important advantage is that the CE is an efficient method to increase the number of examinations per course in a given semester, and frequent testing is associated with greater retention.6 Additionally, having more examinations lowers the stakes associated with each examination, which should decrease students’ anxiety, a point supported by student comments on the survey. In light of student concerns that the CE does not provide enough questions to adequately test their knowledge, it is noteworthy that when comparing the CE with the previous testing process, one course (pharmacy math) increased the total number of examination questions asked over the semester, while others decreased the total question count by widely varying amounts. All courses had an increase in the number of examinations in the semester, which lowered the stakes for each examination. Moreover, because each CE includes content from multiple courses, the overall number of examination sittings throughout the semester decreased, allowing students more “down time” to de-stress between examinations.

The CE process also benefited course directors and their staff. Staff members from academic affairs built and administered the examinations, calculated grades, and performed item analysis, so course directors did not have to spend any of their time on these tasks.

Another important advantage to the CE is that it enabled the college to conduct embedded outcomes assessment over a large portion of the curriculum in a centralized way that was easy to track. As examination items were entered, they were tagged to programmatic learning outcomes so that outcomes reports could be generated and student achievement of these tracked longitudinally throughout the five semesters. If faculty members were to do this with separate examinations, it would be more difficult to standardize the process, gather all of the data, and examine student performance across the curriculum. The CE process also made it easier for academic affairs staff to monitor progress of students performing poorly in a course.

As students indicated, the CE process changed their approach to studying. It discouraged procrastination and cramming and helped students stay on track. Students were forced to take a less compartmentalized approach to studying, and as comments reflected, the process may have helped them make connections across courses. It also may have changed faculty members’ approach to testing, as each course was no longer as isolated in the assessment process. The CE compelled faculty members to think about the rest of the curriculum rather than just their own course.

While the CE had many advantages, the process brought challenges with it as well, most of them logistical and some stemming from the fact that it required a concerted effort of many faculty members and staff working together to accomplish it. For example, it was a challenge to get all faculty members to submit their examination questions with enough time for peer review and modification. The staff members building the examination needed faculty cooperation in meeting these deadlines in order for the review process to work as intended. Faculty members were accustomed to writing examinations on their own timelines, which allowed them more freedom to make their own last-minute changes. The CE process required more forethought and earlier commitment regarding what would be tested. Although the transition to a different process was a challenge for some, the end result was positive in that it required more focus on the link between teaching and assessment.

Although we wanted to accommodate student concerns as much as possible, allowing P1, P2, and P3 classes to test on their preferred day was a challenge we were not able to overcome. We wanted to schedule the examination at the optimal time for each class, but it was not logistically feasible. Additionally, examination security concerns drove us to sequester groups of students until all were finished with the examination. Obviously, this was not a popular practice with students, as they wanted to leave after they submitted their examination. Moreover, testing in two different time zones, as is necessary beyond the P1 year when students are no longer on one campus, presented further complications, sometimes requiring staff members proctoring the examinations to stay late. We are evaluating the feasibility of wireless testing to resolve this problem.

Finally, estimating the amount of time it takes to complete the CE was challenging, especially early on in the process. For example, although multiple courses were allotted the same number of examination questions, the time it took to answer four questions from one course did not necessarily equal the time it took to answer four questions from another course. A useful function of the software was the capability of tracking the amount of time students spent on each question as well as how often they returned to a question. Reports with this information helped sharpen our ability to estimate the rate at which students work through the examination, allowing us to set reasonable amounts of time for testing. These reports also helped faculty members understand how long it would take students to answer certain types of questions and to assess and modify questions for subsequent years as needed.

There are some limitations to this study. Self-reporting methods carry with them some threat to validity, given that respondents may submit inaccurate data, whether intentionally or unintentionally. Additionally, the study did not control for variables other than the testing method (eg, making the transition from an undergraduate to a professional curriculum) that may have influenced study behavior. Finally, the survey was limited to one class of first-year students, and results cannot be extrapolated to advanced years in the pharmacy curriculum.

CONCLUSION

This study assessed first-year pharmacy students’ study behaviors and perceptions after implementation of a new computerized testing procedure. The CE was a positive change in assessment practice at the college. The process helped overcome logistic challenges in testing and drove positive changes in study habits. This study contributes to pharmacy education literature by describing the development and implementation of an innovative examination process and examining the effects of this process on studying behavior and student perceptions. Future studies could directly examine performance and retention to build on the student perceptions in these areas.

REFERENCES

- 1. Plaza CM. Progress examinations in pharmacy education. Am J Pharm Educ. 2007;71(4):Article 66. doi: 10.5688/aj710466.

- 2. Bangert-Drowns R, Kulik J, Kulik CL. Effects of frequent classroom testing. J Educ Res. 1991;85:89–99.

- 3. Brinthaupt T, Shin C. The relationship of academic cramming to flow experience. College Student J. 2001;35:457–471.

- 4. Pychyl T, Morin R, Salmon B. Procrastination and the planning fallacy: an examination of the study habits of university students. J Soc Behavior Personality. 2001;16(1):135–150.

- 5. Brown PC, Roediger HL, McDaniel MA. Make It Stick: The Science of Successful Learning. Belknap Press of Harvard University Press; Cambridge: 2014.

- 6. Roediger H, Karpicke J. The power of testing memory: basic research and implications for educational practice. Perspect Psychol Sci. 2006;1(3):181–210. doi: 10.1111/j.1745-6916.2006.00012.x.