Abstract

Objective. To assess content and criterion validity, as well as reliability of an internally developed, case-based, cumulative, high-stakes third-year Annual Student Assessment and Progression Examination (P3 ASAP Exam).

Methods. Content validity was assessed through the writing-reviewing process. Criterion validity was assessed by comparing student scores on the P3 ASAP Exam with the nationally validated Pharmacy Curriculum Outcomes Assessment (PCOA). Reliability was assessed with psychometric analysis comparing student performance over four years.

Results. The P3 ASAP Exam showed content validity through representation of didactic courses and professional outcomes. A strong positive correlation between scores on the P3 ASAP Exam and the PCOA, measured with the Pearson correlation coefficient, supported criterion validity. Consistent Kuder-Richardson coefficient (KR-20) values above 0.80 since 2012 reflected reliability of the examination.

Conclusion. Pharmacy schools can implement internally developed, high-stakes, cumulative progression examinations that are valid and reliable using a robust writing-reviewing process and psychometric analyses.

Keywords: curricular assessment, progressions examination, criterion validity, content validity, reliability

INTRODUCTION

The goal of pharmacy education is to develop competent pharmacists who contribute to the care of patients in collaboration with other health care providers.1 Formative and summative assessments of student learning evaluate goal attainment.2-5 The Accreditation Council for Pharmacy Education (ACPE) recently revised its standards, which take effect in July 2016. The 2016 Accreditation Standards and Key Elements for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree detail in Standard 24 that evaluation of educational outcomes should include formative and summative assessments that are systematic, reliable, and valid, have results that can be compared nationally across peer schools, and show that students are considered “APPE-ready,” “practice-ready,” and “team-ready.”1 To achieve this standard, ACPE instructs schools of pharmacy to administer a reliable, valid, nationally standardized examination, the Pharmacy Curriculum Outcomes Assessment (PCOA), developed by the National Association of Boards of Pharmacy (NABP).1,6 The previous ACPE Standards allowed schools of pharmacy to use the PCOA or an internally developed assessment tool.

Appendix 3 of the new standards describes the required documentation for the new standards and key elements. Within Appendix 3, Standard 1 (foundational knowledge) stipulates that students who have nearly or fully completed the didactic curriculum should have their knowledge assessed with the PCOA, as it provides an assessment of the essential content domains identified in Appendix 1 of the Standards. Appendix 1 details subjects covered in four broad content domains: biomedical sciences, pharmaceutical sciences, social/administrative/behavioral sciences, and clinical sciences.1 Standard 24 indicates how to assess Standards 1-4, and is further explained in the ACPE guidance document. Within the guidance for Standard 24, instructions 24g and 24i indicate that the PCOA provides valid and reliable assessment of student performance in several areas. The guidance document suggests that schools can benchmark student knowledge retention within their program, compare student performance with other pharmacy programs, and use PCOA score reports to aid in curricular revision.7

The benefits of administering a nationally standardized examination are that it is validated, minimal faculty time is required, and results can be compared with those of other schools. Currently, there is no cost for third-year (P3) students entering their Advanced Pharmacy Practice Experiences. However, the exam comes with a financial cost to students in their first (P1) and second (P2) years. Benefits of administering an internally developed examination include minimal financial cost, an examination tailored to the curricular outcomes of the school, and easy item analysis that identifies specific topics with poor student performance, which in turn allows for prompt remediation of learning deficiencies. However, creating, validating, and measuring the reliability of internally developed examinations requires time and expertise, and results cannot be compared with those of other schools.

Assessing validity and reliability can include several analyses that, reviewed together, support the conclusion that students’ performance on an examination accurately represents their understanding of the material. Validation encompasses several areas, namely content, criterion, and construct validity. Content validity is internal and describes how well the examination questions represent the subject of the assessment. Criterion validity assesses whether students’ performance on an examination correlates with their performance on a similar, well-validated examination. Construct validity addresses whether an inference drawn from an examination is appropriate for an indirect measure. For example, construct validity could measure whether an examination predicts success in the pharmacy program.8 Reliability measures the reproducibility of scores from one testing group to another.9

A review of the literature on internally developed progress examinations to assess students’ foundational knowledge shows that such examinations can measure retention of student knowledge, assess weaknesses in the curriculum, determine if a student should progress through the curriculum, help identify students requiring remediation, and assess readiness of students for advanced pharmacy practice experiences (APPEs).4,5,10-14 One such examination is the University of Houston’s MileMarker Assessment, a comprehensive and cumulative case-based examination designed to assess student learning and retention of information.5,15 Validation efforts are also reported by several schools. Alston and Love analyzed reliability, as well as content, criterion, and construct validity of the Annual Skills Mastery Assessment at their institution.10 Latif and Stull reported the annual examination at their institution correlated significantly with final grade point average and Pharmacy College Admission Test (PCAT) scores.11 Meszaros et al reported the triple jump examination students take before progressing into APPEs correlated with preceptor grades in the first year of APPEs.14 Although several schools performed various validation methods, evaluating the criterion validity of an internally developed examination using a nationally standardized assessment is lacking.

This paper describes the development and evaluation of the third-year pharmacy Annual Student Assessment and Progression examination (P3 ASAP Exam). The P3 ASAP Exam is a high-stakes assessment that integrates basic, pharmaceutical, clinical, and social sciences in a case-based format. Third-year students must pass the examination to advance to the fourth (P4) year. This emphasizes the need to ensure a valid and reliable examination, as progression decisions are based on the results. The goal of this study was to determine the validity and reliability of this examination. The examination was validated by assessing content and criterion validity. In addition, reliability was assessed with the Kuder-Richardson coefficient (KR-20).16 To our knowledge, this is the first report assessing criterion validity using a well-validated examination such as the PCOA.

METHODS

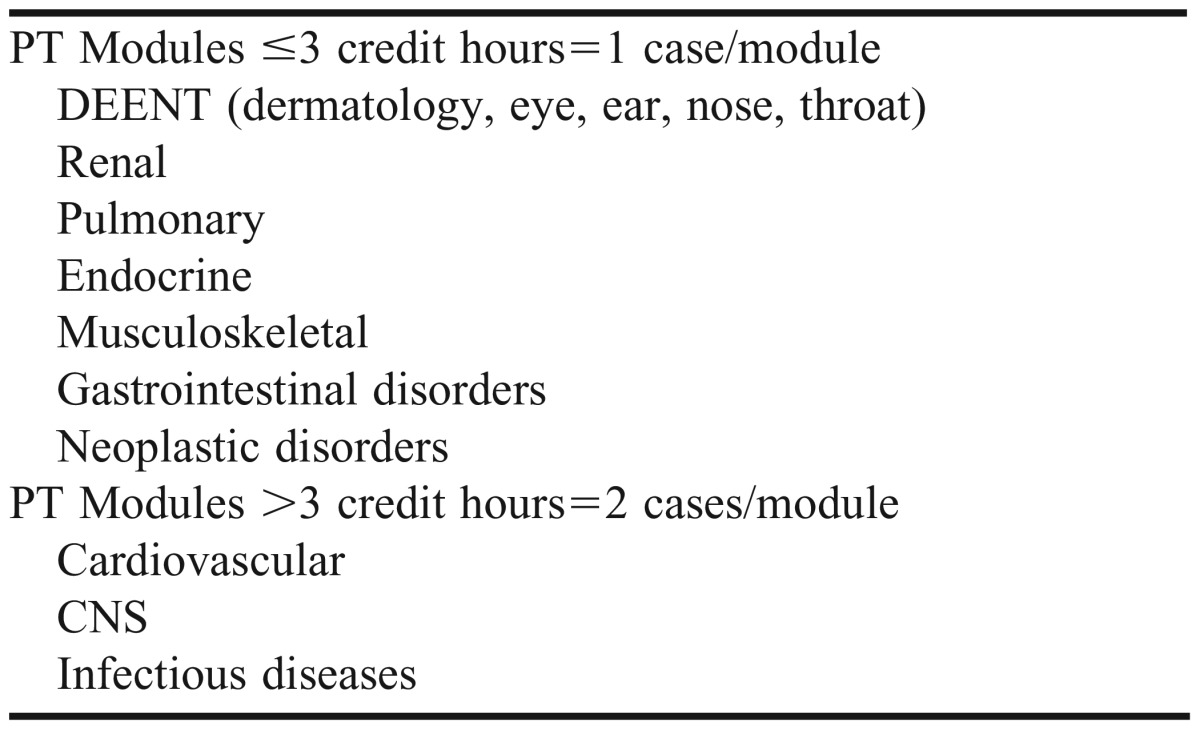

The case-based, comprehensive P3 ASAP Exam was given at the end of the third year to assess the ability of students to apply didactic knowledge to diverse patient scenarios. It combined the essentials of basic and pharmaceutical sciences with the clinical practice of pharmacy in a holistic manner through patient cases and questions written by faculty members. The pharmacotherapeutic modules for different systems (eg, cardiovascular, endocrine) or disease states (eg, infectious diseases, neoplastic diseases) were chosen as the model for writing patient cases. Ten of these modules formed the basis for developing the patient cases that could be used on the P3 ASAP Exam. The number of credit hours of a pharmacotherapeutic module dictated the number of cases initially written for each module (Table 1).

Table 1.

Pharmacotherapy (PT) Modules Credit Hours Determined for Patient Cases

The writing process began with pharmacy practice faculty members teaching in the pharmacotherapeutic modules designing patient cases. This typically included the chief complaint, past medical history, social history, laboratory values, and other specific information. The pharmacy practice faculty member also wrote at least five questions pertaining to the clinical aspects of the case. This was then placed on a central server accessible only by faculty members. The pharmaceutical sciences faculty members who team-taught the modules then wrote questions appropriate for the cases. Thus, each case had at least five therapeutics, three pharmacology, and two medicinal chemistry questions. In addition, at least two to three questions were added from the remaining basic and pharmaceutical sciences and social and administrative areas. In this way, the examination had questions covering the curriculum from each department and spanned the P1 through P3 years. Table 2 shows the pharmaceutical sciences and social/administrative sciences subjects covered in clinical cases. The cases and questions were refined by faculty members on the assessment committee to ensure the cases and items conformed to the prescribed writing style, formatting and, where necessary, were correctly sequenced within the patient case. To ensure independent review, faculty members on the committee only reviewed cases and questions they did not author.

Table 2.

Pharmaceutical and Social/Administrative Sciences Subjects Covered in Clinical Cases

All questions were multiple-choice, with four or five answer choices. Each question was tagged with the case-name, subject area, professional outcome, and in 2014, level of Bloom’s Taxonomy. This facilitated the tracking, compiling, reviewing, and optimizing of the examination as well as postexamination item analysis and improvement. Since its inception in 2010, a bank of questions and cases has been developed for the P3 ASAP Exam. Existing cases have been evaluated for revision, and new cases have been added to the case bank. Each year, a new P3 ASAP Exam is created by choosing 12 cases from the case bank, with about 150 questions. The examination is administered at the end of the P3 year, after completing the didactic courses, and has two parts, each part with a duration of 2.5 hours and an interval of one hour between the two parts.

The content validity was assessed in a 2-stage process: development and judgment-quantification.16 The development stage involved domain identification, item generation, and instrument formation. The domain for the P3 ASAP Exam was identified as the didactic portion of the curriculum spanning P1 to P3 years. Courses that imparted laboratory and patient/provider interaction skills were not included in the domain, as these were deemed more appropriate to assess in a laboratory environment or in an objective structured clinical examination (OSCE). Items (questions) were generated by faculty members writing within their content expertise pertinent to patient cases. The P3 ASAP Exam was then assembled into parts I and II with similar distribution of patient cases and questions.

The judgment-quantification stage entailed expert confirmation that items were content valid and the instrument was content valid. In the first step, faculty groups representing content expertise from pharmaceutical sciences and pharmacy practice departments reviewed the cases and items to ensure content relevance. When needed, the question was sent back to the author with feedback for revision. A follow-up review of the revised item by faculty members ensured the revision was sound. In the second step, assessment committee faculty members representing expertise from both departments reviewed parts I and II of the P3 ASAP Exam to ensure it had a representation of different courses, subject areas, professional outcomes, question difficulty, and levels of Bloom’s Taxonomy.

The criterion validity was assessed with a prospective study comparing student performance on the P3 ASAP Exam with the PCOA. Institutional review board (IRB) approval was obtained, and P4 students from the class of 2012 were invited to participate in this prospective, blinded, self-controlled study. Participants were administered a version of the P3 ASAP Exam that was different from the one they received as P3 students. They were administered the PCOA two weeks following the P3 ASAP Exam. Students did not receive scores on the examinations until after completion of the study. Additionally, students were instructed not to prepare for either examination. Data collected included P3 ASAP Exam and PCOA scores, which were compared by simple linear regression analysis to determine the criterion validity of the P3 ASAP Exam.
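The comparison described above can be illustrated with a minimal sketch of simple linear regression and the Pearson correlation coefficient. The function name and the scores below are hypothetical and are not the study data or the software used in the study; the PCOA score is treated as the predictor (X) and the P3 ASAP Exam score as the outcome (Y).

```python
from math import sqrt


def simple_linear_regression(x, y):
    """Return (slope, intercept, pearson_r) for paired scores x and y."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must be paired and contain at least two scores")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of squares and cross-products around the means.
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    slope = sxy / sxx                      # least-squares slope
    intercept = mean_y - slope * mean_x    # least-squares intercept
    r = sxy / sqrt(sxx * syy)              # Pearson correlation coefficient
    return slope, intercept, r


# Made-up scores for illustration only; NOT the study data.
pcoa_scores = [300, 320, 350, 380, 400, 430]
asap_scores = [82, 88, 92, 101, 104, 112]

slope, intercept, r = simple_linear_regression(pcoa_scores, asap_scores)
print(f"Y = {slope:.2f}X + {intercept:.1f}, r = {r:.2f}, r^2 = {r * r:.2f}")
```

In simple linear regression, the coefficient of determination equals the square of the Pearson correlation coefficient, so the two values can be reported together.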

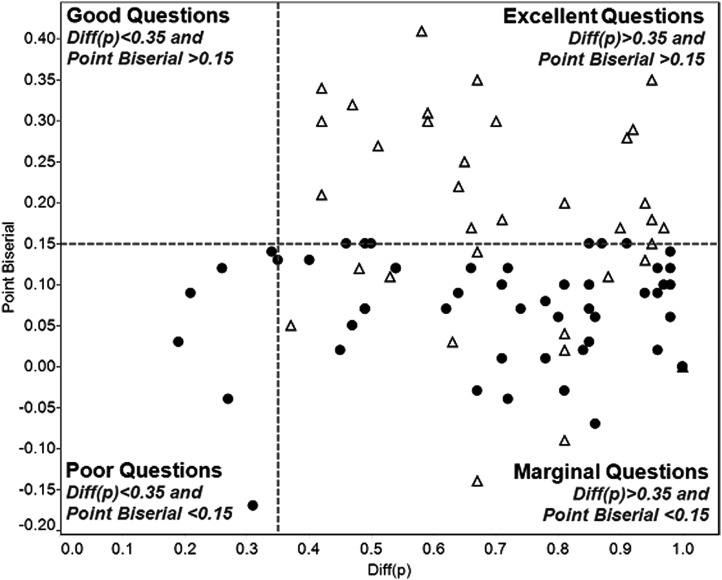

Reliability of the P3 ASAP Exam score from 2010 to 2014 was assessed with the KR-20, an index of internal reliability for examinations with more than fifty questions.17 Again, IRB approval was obtained for this portion. Spearman’s correlation was calculated to determine if the KR-20 increased significantly over the years. In addition, the P3 ASAP Exam analysis used the data visualization software Tableau (Tableau Software, Seattle, WA) to create a scatterplot of the questions according to point-biserial and response rate. A low point-biserial was defined as a value <0.15, and a poor response rate (difficulty) was defined as <35% of the students answering a question correctly.18 The scatterplot was separated into four quadrants that categorized questions as poor, marginal, good, and excellent. Poor questions had point-biserials <0.15 and response rates <35%, marginal questions had point-biserials <0.15 and response rates >35%, good questions had point-biserials >0.15 and response rates <35%, and excellent questions had point-biserials >0.15 and response rates >35%. Questions identified as poor or marginal were sent to faculty members for revision before use in subsequent years and were tracked to see if the question quality improved.
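The item statistics described above can be sketched as follows. This is an illustration under stated assumptions rather than the analysis pipeline used in the study: the function names are hypothetical, KR-20 is computed with the population variance of total scores, the point-biserial is left uncorrected (the item is included in the total score), and values falling exactly on a cutoff are placed in the upper category because the text does not specify boundary handling.

```python
from math import sqrt


def kr20(responses):
    """KR-20 for a list of student rows, each a list of 0/1 item scores."""
    n = len(responses)
    k = len(responses[0])
    totals = [sum(row) for row in responses]
    mean_total = sum(totals) / n
    # Population variance of total scores (an assumption; some texts use n - 1).
    var_total = sum((t - mean_total) ** 2 for t in totals) / n
    if var_total == 0:
        raise ValueError("total scores must vary")
    # Sum over items of p * q, where p is the proportion answering correctly.
    pq_sum = 0.0
    for j in range(k):
        p = sum(row[j] for row in responses) / n
        pq_sum += p * (1 - p)
    return (k / (k - 1)) * (1 - pq_sum / var_total)


def point_biserial(responses, j):
    """Correlation between item j (0/1) and the uncorrected total score."""
    n = len(responses)
    item = [row[j] for row in responses]
    totals = [sum(row) for row in responses]
    mean_i = sum(item) / n
    mean_t = sum(totals) / n
    sxy = sum((a - mean_i) * (b - mean_t) for a, b in zip(item, totals))
    sxx = sum((a - mean_i) ** 2 for a in item)
    syy = sum((b - mean_t) ** 2 for b in totals)
    if sxx == 0 or syy == 0:
        return 0.0  # no variance: the item cannot discriminate
    return sxy / sqrt(sxx * syy)


def classify_item(pbis, proportion_correct, pbis_cut=0.15, rate_cut=0.35):
    """Assign a question to one of the four quadrants described in the text."""
    low_pbis = pbis < pbis_cut
    low_rate = proportion_correct < rate_cut
    if low_pbis and low_rate:
        return "poor"
    if low_pbis:
        return "marginal"
    if low_rate:
        return "good"
    return "excellent"


# Tiny made-up response matrix (4 students x 3 items), for illustration only.
demo = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
print(round(kr20(demo), 2))
print(classify_item(point_biserial(demo, 1), 0.5))
```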

RESULTS

Professional outcomes and courses have been assessed since the first administration of the P3 ASAP Exam. The addition of ExamSoft, an online testing software from ExamSoft Worldwide, Inc. (Dallas, TX) allowed for category tags to be used to analyze professional outcomes, courses, and Bloom’s levels on the 2014 P3 ASAP Exam. The curriculum is designed around three professional outcomes areas: (I) Acquire and apply the principles of pharmaceutical sciences to the profession of pharmacy; (II) Practice pharmacy in accordance with professional, legal, and ethical standards; (III) Improve the quality of health-care services for patients through leadership, advocacy, compassion, and cultural competency. All three professional outcomes areas were represented on the P3 ASAP Exam, with 57% of the content tagged as outcome I, 36% as outcome II, and 7% as outcome III.

Because the professional outcomes were accomplished through instruction in basic and pharmaceutical sciences, clinical pharmacy sciences, and social and administrative sciences respectively, they also represented these subject areas on the examination. Furthermore, the examination represented curriculum spanning the P1 through P3 years; specifically, 24% from the P1 year, 25% from the P2 year, and 51% from the P3 year. Outcome Ig (apply pharmacodynamics principles to explain the mechanisms of action, therapeutic uses, indications, and contraindications of drug substances) and IId (design individualized therapeutic regimens that are evidence-based, safe, and effective) were the most frequently tested. In contrast, there were five outcomes not covered on the 2014 P3 ASAP Exam because no case-relevant questions were written in a multiple-choice format. For example, outcome Id (prepare extemporaneously compounded sterile and nonsterile dosage forms) was better assessed in a laboratory practicum.

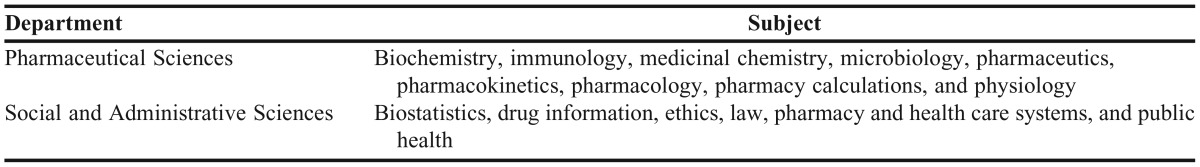

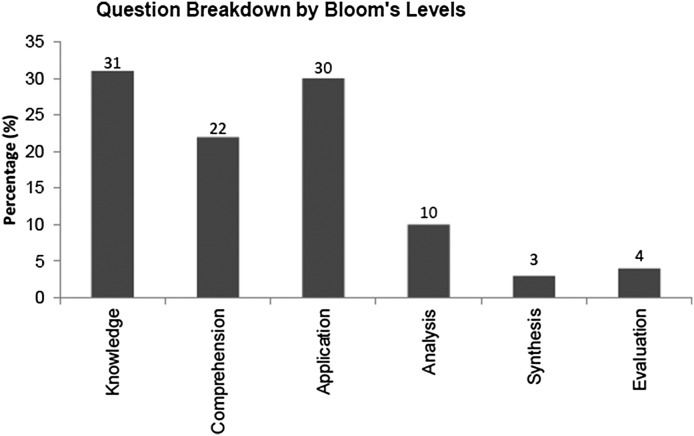

With respect to Bloom’s Taxonomy, faculty members were responsible for assigning the appropriate Bloom’s level to the questions they authored. Faculty members attended educational sessions covering Bloom’s Taxonomy to help ensure consistency. Mapping questions on the 2014 P3 ASAP Exam to Bloom’s Taxonomy showed that the questions tested students’ knowledge, comprehension, application, and analytical skills, with a smaller percentage of questions testing synthesis and evaluation skills (Figure 1). The school utilizes other forms of assessment, such as OSCEs, to evaluate synthesis and evaluation skills. Further analysis showed that 21 out of the 26 professional outcomes were tested on the P3 ASAP Exam.

Figure 1.

Percent Distribution of the P3 ASAP Exam Administered in 2014 by Bloom’s Levels.

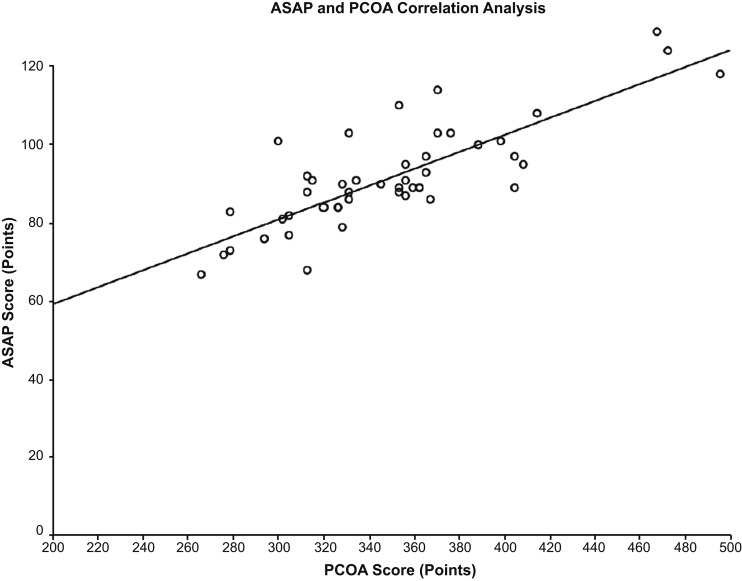

Forty-seven of the 98 (48%) P4 students participated in the criterion validity assessment. The least-squares method gave the estimated regression equation (Y=0.22X + 16.5; Figure 2), where X is the PCOA score and Y is the predicted P3 ASAP Exam score. Linear regression analysis showed a strong positive correlation between PCOA and P3 ASAP Exam performance, with a Pearson correlation coefficient of 0.81 and an r² of 0.65 (p<0.001). Student performance on the two examinations was thus significantly correlated, supporting the criterion validity of the P3 ASAP Exam.

Figure 2.

Linear Regression Analysis of PCOA vs P3 ASAP Exam Scores.

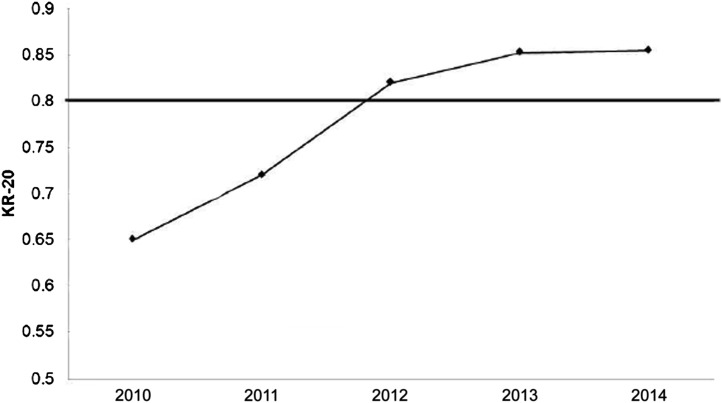

Figure 3 displays the KR-20 value for the P3 ASAP Exam since 2010. The KR-20 on the 2010 examination was 0.65, has increased steadily every year, and has been above 0.80 since 2012. The KR-20 on the 2014 P3 ASAP Exam was 0.86.

Figure 3.

Reliability of P3 ASAP Exam from 2010 to 2014.

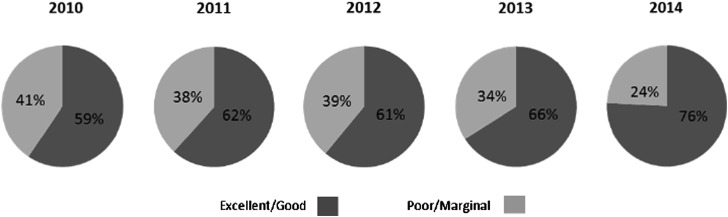

Psychometrics to assess question quality with point-biserial and difficulty was better utilized beginning with the 2012 P3 ASAP Exam, when an assessment and information systems analyst was hired. After that time, questions improved, with an overall decrease in “poor” questions and an increase in “excellent” questions (Figure 4). Of the 153 questions on the 2013 P3 ASAP Exam, 52 fell in the bottom two quadrants and were sent for revision. Thirty-five were used on the 2014 P3 ASAP Exam. Of these, 24 questions (68.6%) improved on the 2014 examination (Figure 5). Two questions moved from the poor to excellent quadrant, three moved from the poor to marginal quadrant, 19 moved from the marginal to excellent quadrant, and 11 questions remained in the marginal quadrant.
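The counts reported above can be tallied in a short sketch. The quadrant ranking used to decide whether a question "improved" is an assumption made for this illustration; the counts themselves are taken from the text.

```python
# Ordering of quadrants from worst to best (an assumption for this sketch).
RANK = {"poor": 0, "marginal": 1, "good": 2, "excellent": 3}

# (2013 quadrant, 2014 quadrant) -> number of questions, as reported in the text.
transitions = {
    ("poor", "excellent"): 2,
    ("poor", "marginal"): 3,
    ("marginal", "excellent"): 19,
    ("marginal", "marginal"): 11,
}

reused = sum(transitions.values())
# A question counts as improved if it moved to a higher-ranked quadrant.
improved = sum(
    count
    for (before, after), count in transitions.items()
    if RANK[after] > RANK[before]
)
percent_improved = round(100 * improved / reused, 1)
print(f"{improved} of {reused} reused questions improved ({percent_improved}%)")
```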

Figure 4.

Percentage of Excellent/Good vs Poor/Marginal Questions in P3 ASAP Exam.

Figure 5.

Performance of 2013 P3 ASAP Exam Poor and Marginal Questions After Revision on the 2014 P3 ASAP Exam. Solid dark circles (•) are 2013 questions and open triangles (Δ) are the same questions revised for 2014.

DISCUSSION

The PCOA administered in 2016 to the P3 students is compliant with the ACPE 2016 standards and key elements. The P3 ASAP Exam assessed student knowledge and performance and helped ensure assessment of the school’s professional outcomes in compliance with ACPE Standard 15 (version 2) for accreditation in the 2012 site visit. The P3 ASAP Exam was used to measure student competency at the culmination of didactic learning to ensure student readiness for APPEs. Because of its high-stakes nature, the school wanted to ensure the examination was valid and reliable. Also, because of the complexity and amount of data, much of the analysis was made possible by hiring an assessment and information systems analyst. Furthermore, support from an internal grant helped fund the criterion validity study using the PCOA.

Content validity of the P3 ASAP Exam was continually assessed by faculty members writing cases and/or questions and revising them based on peer review. The examination assessed students in all areas of the professional outcomes with an emphasis on areas I and II, which formed the bulk of the curriculum. However, professional outcome area III, which includes components of leadership, advocacy, compassion, and cultural competence, lies more in the affective domain and may be better tested through observation. Analysis of professional outcomes showed that 80% of the outcomes were addressed on the examination.

As intended, the P3 ASAP Exam included questions from the P1, P2, and P3 courses, of which about half of the questions mapped to the P3 courses. This was appropriate because more disease-specific pharmacotherapeutic modules were taught in the P3 year. However, the majority of questions were assigned professional outcome I. The pharmacotherapeutic modules were team-taught by pharmacy practice and pharmaceutical science faculty members. While the pharmacology and medicinal chemistry faculty members wrote questions ultimately assigned as professional outcome I, much of the content was taught in the pharmacotherapeutic module and pertained to medications specific to the patient case on the examination. In this way, there were many questions assigned professional outcome I that were clinically applicable to the patient case.

Criterion validity was assessed in a prospective, blinded, self-controlled study comparing student performance on the P3 ASAP Exam with their performance on the PCOA. This is the first report of a pharmacy school evaluating the criterion validity in this way. The two examinations were given in the span of two weeks, which was intended to prevent a confounding variable of improved knowledge. The examination was considered valid based on the strong positive correlation between scores earned on the P3 ASAP Exam and the PCOA. However, the validation efforts described here do have limitations. The criterion validation study had a small sample size, with 47 students participating, and later studies were not performed to ensure the results could be replicated. The participants did take the P3 ASAP Exam prior to the PCOA, which could have affected the PCOA score, but this was minimized by strongly encouraging participants not to study for either examination. Additionally, the scores of the P3 ASAP Exam were not released prior to the PCOA, removing a possible incentive to study for the PCOA.

Reliability was assessed beginning in 2010 and was evaluated with each subsequent P3 ASAP Exam offering. Starting in 2012, the examination demonstrated a stable KR-20 value above 0.80, which is considered sufficiently reliable.19 Reliability increases with an increased number of questions in the instrument.20 The KR-20 on the 2014 P3 ASAP Exam was 0.86, which was slightly lower than the 0.92 reported for the PCOA.21 This was expected because the P3 ASAP Exam had 150 questions, whereas the PCOA had 200 questions.
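The relationship between test length and reliability can be illustrated with the Spearman-Brown prophecy formula. This formula was not part of the study's analysis and is offered only as a hedged sketch; it assumes that any added questions would be of the same quality as the existing ones, and the function name is hypothetical.

```python
def spearman_brown(reliability, length_factor):
    """Projected reliability when test length is multiplied by length_factor."""
    return (length_factor * reliability) / (1 + (length_factor - 1) * reliability)


# Project the 2014 KR-20 (0.86 at 150 questions) to a 200-question examination.
projected = spearman_brown(0.86, 200 / 150)
print(round(projected, 2))  # → 0.89
```

Under these assumptions, lengthening the examination to 200 questions would be expected to raise the coefficient to about 0.89, which is consistent with test length accounting for part of the difference from the value reported for the PCOA.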

Additionally, as seen in Figure 3, there was a dramatic improvement in reliability from 2010 to 2012. This is likely because of the improved question quality resulting from a continuous question review process. Figure 4 shows the breakdown of poor, marginal, good, and excellent questions for each year of the P3 ASAP Exam. This illustrates how the breakdown between the groups improved over time. Figure 5 further presents the analysis by showing how the majority of the 2013 poor/marginal questions improved in quality when the revised versions were used again in 2014. The remaining 11 questions in the marginal quadrant for both years will be sent again for revision. If the quality does not improve on subsequent analysis, the questions will be removed from the question bank. The revised questions from 2013 not used in 2014 will be analyzed on upcoming examinations and follow the same procedures depending on the analysis results.

While the PCOA delineates each content area into subcategories, student performance is only reported for the general category. For example, category 4F (Medication Therapy Management on the PCOA) is separated into 14 subcategories, but only the overall score in category 4F is reported.22,23 With internal examinations, schools can include other assessment areas of interest as we did with Bloom’s Taxonomy. The assessment committee is beginning to evaluate the implications of levels of Bloom’s Taxonomy used in the P3 ASAP Exam on the curriculum. Further, the construct validity will be examined for predictability of success indicators such as performance on the North American Pharmacist Licensure Examination (NAPLEX). Future direction includes exploring the feasibility of adding an OSCE to the P3 ASAP Exam.

CONCLUSION

The study provided evidence to support that the P3 ASAP Exam possessed content validity, criterion validity, and reliability necessary to evaluate curricular outcome achievement. This was possible via a robust writing-reviewing process and detailed psychometric analyses. Internal examinations can provide valuable analyses to aid curricular revision. Our analysis included reports on student performance within the subcategories for each professional outcome allowing us to better identify areas for curricular revision. Additionally, students were provided individual strength and opportunity reports based on their performance on the P3 ASAP Exam which served as an APPE-readiness tool.

REFERENCES

- 1. Accreditation Standards and Key Elements for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree. “Standards 2016.” Accreditation Council for Pharmacy Education. https://www.acpe-accredit.org/pdf/Standards2016FINAL.pdf. Accessed February 19, 2015.

- 2. Abate MA, Stamatakis MK, Haggett RR. Excellence in curriculum development and assessment. Am J Pharm Educ. 2003;67(3):Article 89.

- 3. Boyce EG. Finding and using readily available sources of assessment data. Am J Pharm Educ. 2008;72(5):Article 102. doi: 10.5688/aj7205102.

- 4. Plaza CM. Progress examinations in pharmacy education. Am J Pharm Educ. 2007;71(4):Article 66. doi: 10.5688/aj710466.

- 5. Szilagyi JE. Curricular progress assessments: the Milemarker. Am J Pharm Educ. 2008;72(5):Article 101. doi: 10.5688/aj7205101.

- 6. PCOA. National Association of Boards of Pharmacy. http://www.nabp.net/programs/assessment/pcoa. Accessed February 14, 2014.

- 7. Guidance for the Accreditation Standards and Key Elements for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree. “Standards 2016.” Accreditation Council for Pharmacy Education. https://www.acpe-accredit.org/pdf/GuidanceforStandards2016FINAL.pdf. Accessed February 19, 2015.

- 8. Messick S. Meaning and values in test validation: the science and ethics of assessment. Educ Res. 1989;18(2):5–11.

- 9. Peeters MJ, Beltyukova SA, Martin BA. Educational testing and validity of conclusions in the scholarship of teaching and learning. Am J Pharm Educ. 2013;77(9):Article 186. doi: 10.5688/ajpe779186.

- 10. Alston GL, Love BL. Development of a reliable, valid annual skills mastery assessment examination. Am J Pharm Educ. 2010;74(5):Article 80. doi: 10.5688/aj740580.

- 11. Latif DA, Stull R. Relationship between an annual examination to assess student knowledge and traditional measures of academic performance. Am J Pharm Educ. 2001;65(4):346–349.

- 12. Medina MS, Britton ML, Letassy NA, Dennis V, Draugalis JR. Incremental development of an integrated assessment method for the professional curriculum. Am J Pharm Educ. 2013;77(6):Article 122. doi: 10.5688/ajpe776122.

- 13. Mehvar R, Supernaw RB. Outcome assessment in a PharmD program: the Texas Tech experience. Am J Pharm Educ. 2002;66(3):219–223.

- 14. Meszaros K, Barnett MJ, McDonald K, et al. Progress examination for assessing students' readiness for advanced pharmacy practice experiences. Am J Pharm Educ. 2009;73(6):Article 109. doi: 10.5688/aj7306109.

- 15. Sansgiry SS, Nadkarni A, Lemke T. Perceptions of PharmD students towards a cumulative examination: the Milemarker process. Am J Pharm Educ. 2004;68(4):Article 93.

- 16. Lynn MR. Determination and quantification of content validity. Nurs Res. 1986;35(6):382–385.

- 17. Thompson NA. KR-20. In: Salkind NJ, ed. Encyclopedia of Research Design. Thousand Oaks, CA: SAGE Publications, Inc.; 2010:668–669. SAGE Research Methods. Accessed February 2, 2016.

- 18. Ebel RL, Frisbie DA. Essentials of Educational Measurement. 5th ed. Englewood Cliffs, NJ: Prentice Hall; 1991.

- 19. Charter RA. Statistical approaches to achieving sufficiently high test score reliabilities for research purposes. J Gen Psychol. 2008;135(3):241–251. doi: 10.3200/GENP.135.3.241-251.

- 20. Cortina JM. What is coefficient alpha? An examination of theory and applications. J Appl Psychol. 1993;78(1):98–104.

- 21. 2011-2014 PCOA Administration Highlights. National Association of Boards of Pharmacy. http://www.nabp.net/programs/assessment/pcoa/pcoa-highlights. Accessed November 20, 2014.

- 22. PCOA Content Areas. National Association of Boards of Pharmacy. http://www.nabp.net/programs/assessment/pcoa/pcoa-content-areas. Accessed February 20, 2015.

- 23. PCOA Score Reports. National Association of Boards of Pharmacy. http://www.nabp.net/programs/assessment/pcoa/pcoa-score-reports. Accessed February 20, 2015.