Abstract

Recent studies have shown that Alzheimer's disease (AD) is related to alteration in brain connectivity networks. One type of connectivity, called effective connectivity, defined as the directional relationship between brain regions, is essential to brain function. However, there have been few studies on modeling the effective connectivity of AD and characterizing its difference from normal controls (NC). In this paper, we investigate the sparse Bayesian Network (BN) for effective connectivity modeling. Specifically, we propose a novel formulation for the structure learning of BNs, which involves one L1-norm penalty term to impose sparsity and another penalty to ensure that the learned BN is a directed acyclic graph, a required property of BNs. We show, through both theoretical analysis and extensive experiments on eleven moderate and large benchmark networks with various sample sizes, that the proposed method has much improved learning accuracy and scalability compared with ten competing algorithms. We apply the proposed method to FDG-PET images of 42 AD and 67 NC subjects, and identify the effective connectivity models for AD and NC, respectively. Our study reveals that the effective connectivity of AD is different from that of NC in many ways, including the global-scale effective connectivity, the intra-lobe, inter-lobe, and inter-hemispheric effective connectivity distributions, as well as the effective connectivity associated with specific brain regions. These findings are consistent with known pathology and clinical progression of AD, and will contribute to AD knowledge discovery.

General Terms: Algorithms

Keywords: Brain network, Alzheimer’s disease, neuroimaging, FDG-PET, Bayesian network, sparse learning

1. INTRODUCTION

Alzheimer’s disease (AD) is the most common cause of dementia and the fifth leading cause of death in people over 65 in the US. The current annual cost of AD care in the U.S. is more than $100 billion, which will continue to grow fast. The existing knowledge about the cause of AD is very limited. Clinical diagnosis is imprecise with a definite diagnosis only possible by autopsy. Also, there is currently no cure for AD, while most drugs only modestly alleviate symptoms. To tackle these challenging issues in AD studies, fast advancing neuroimaging techniques hold great promise. Recent studies have shown that neuroimaging can provide sensitive and reliable measures of AD onset and progression, which can complement the conventional clinical-based assessments and cognitive measures.

In neuroimaging-based AD research, one important area is brain connectivity modeling, i.e., identification of how different brain regions interact to produce a cognitive function in AD, compared with normal aging. Research in this area can substantially promote AD knowledge discovery and identification of novel connectivity-based AD biomarkers to be used in clinical practice. There are two types of connectivity being studied: functional connectivity refers to the covarying pattern of different brain regions; effective connectivity refers to the directional relationship between regions [1].

A vast majority of the existing research focuses on functional connectivity modeling. Various methods have been adopted such as correlation analysis [2], Principal Component Analysis (PCA) [3], PCA-based Scaled Subprofile Model [4], Independent Component Analysis [5], and Partial Least Squares [6]. Recently, sparse models have also been introduced, such as sparse multivariate or vector autoregressions [7] and sparse inverse covariance estimation [8]. Sparse models have shown great effectiveness because neuroimaging datasets are featured by "small n large p", i.e., the number of AD patients (n) can be close to or less than the number of brain regions modeled (p). Also, many past studies based on anatomical brain databases have shown that the true brain network is indeed sparse [9].

Compared with functional connectivity modeling, effective connectivity modeling has the advantage of helping identify the pathway/mechanism whereby distinct brain regions communicate with each other. However, the existing research in effective connectivity modeling is much less extensive. Models that have been adopted include structural equation models [10] and dynamic causal models [11]. The limitations of these models include (i) they are confirmative, rather than explanatory, i.e., they require a prior model of connectivity to start with; (ii) they require a substantially larger sample size than the number of regions modeled; a typical number of regions included is less than 10 given the sample size limit. These limitations make them inappropriate for AD connectivity modeling, because there is little prior knowledge of which regions should be included and how they are connected.

We propose sparse Bayesian Networks (BN) for effective connectivity modeling. A BN is an explanatory model and the sparse estimation makes it possible to include a large number of brain regions. In a BN representation of effective connectivity, the nodes are brain regions. A directed arc from node Xi to Xj (Xi is called a parent of Xj) indicates a direct influence from Xi to Xj. Sparsity consideration has been common in the BN learning literature. For example, some early work used score functions, such as BIC and MDL, to measure the goodness-of-fit of a BN, in which a penalty term on the model complexity is usually included in the score [12]. Recently, driven by modern applications such as genetics, learning of large-scale BNs has become very popular, and sparsity consideration is indispensable there. The Sparse Candidate (SC) algorithm [13], one of the first BN structure learning algorithms to be applied to a large number of variables, assumes that the maximum number of parents for each node is limited to a small constant. The L1MB-DAG algorithm developed in [14] uses LASSO [15] to identify a small set of potential parents for each variable. Some other algorithms, such as the Max-Min Hill-Climbing (MMHC) [16], Grow-Shrink [17], TC and TC_bw [18], all follow the same line. Most of these existing algorithms employ a two-stage approach: Stage 1 is to identify the potential parents of each variable; Stage 2 usually applies some heuristic search algorithms (e.g., hill-climbing) or orientation methods (e.g., Meek's rules [19]) to identify the parents out of the potential parent set. An apparent weakness of the two-stage approach is that if a true parent is missed in Stage 1, it will never be recovered in Stage 2. Another weakness of the existing algorithms is computational efficiency, i.e., it may take hours or days to learn a large-scale BN such as one with 500 nodes.

In this paper, we propose a new sparse BN learning algorithm, which is called SBN. It is a one-stage approach that identifies the parents of all variables directly. The main contributions of this paper include:

We propose a novel sparse BN learning algorithm, i.e., SBN, using one L1-norm penalty term to impose sparsity and another penalty term to ensure the learned BN is a directed acyclic graph (DAG).

We present theoretical guidance on how to select the regularization parameter associated with the second penalty.

We perform theoretical analysis to reason why the two-stage approach popularly adopted in the existing literature has a high risk of failing to identify the true parents. Also, we conduct extensive experiments on synthetic data to compare SBN and the existing algorithms in terms of the learning accuracy and scalability.

We apply SBN to FDG-PET data of 42 AD patients and 67 normal control (NC) subjects enrolled in the ADNI (Alzheimer's Disease Neuroimaging Initiative) project, and identify the effective connectivity models for AD and NC. Our study reveals that the effective connectivity of AD is different from that of NC in many ways, including the global-scale effective connectivity, the intra-lobe, inter-lobe, and inter-hemisphere effective connectivity distributions, as well as the effective connectivity associated with specific brain regions. The findings are consistent with known pathology and clinical progression of AD.

2. BAYESIAN NETWORK: KEY DEFINITIONS AND CONCEPTS

This section introduces the key definitions and concepts of BNs that are relevant to this paper:

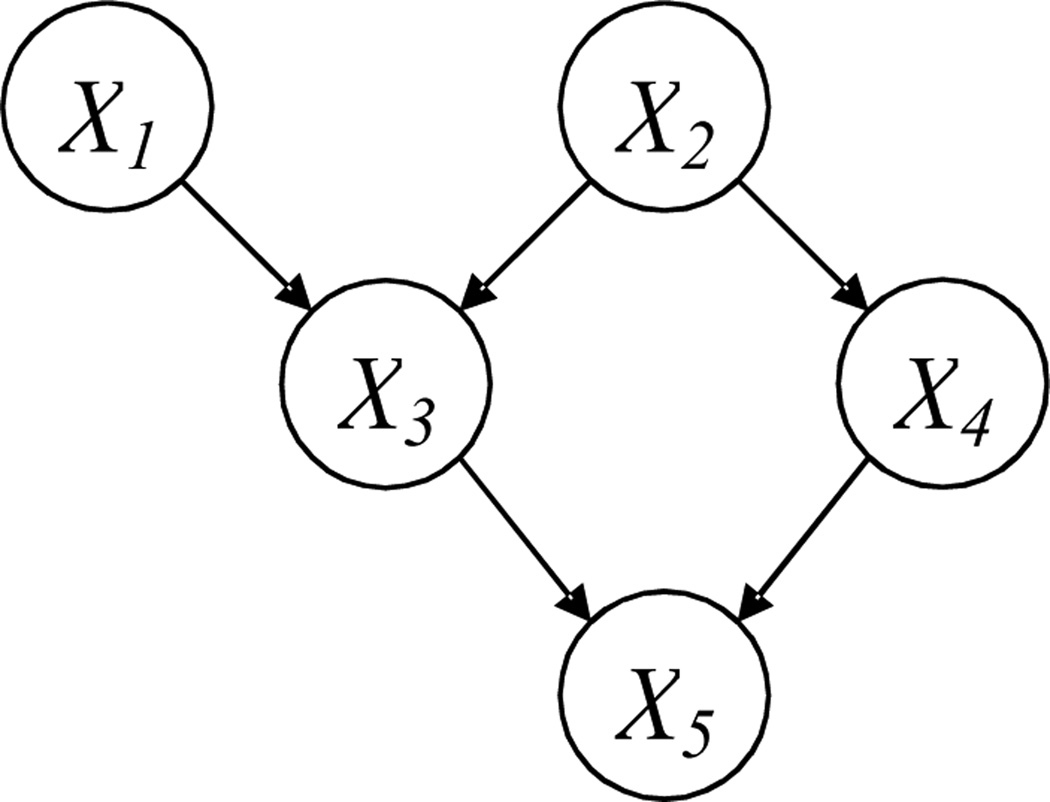

A BN is composed of a structure and a set of parameters. The structure (Fig. 1) is a DAG that consists of p nodes [X1, …, Xp] and directed arcs between some nodes; no cycle is allowed in a DAG. Each node represents a random variable. If there is a directed arc from Xi to Xj, Xi is called a parent of Xj and Xj is called a child of Xi. Two nodes are called spouses if they share a common child. If there is a directed path from Xi to Xj, i.e., Xi → ⋯ → Xj, Xi is called an ancestor of Xj. A directed arc is also a directed path and a parent is also an ancestor according to this definition. The Markov Blanket (MB) of Xj is a set of variables given which Xj will be independent of all other variables. The MB includes the parents, children, and spouses of Xj.

Figure 1.

A BN structure

In this paper, we use the following notations with respect to a BN structure: Denote the structure by a p × p matrix G, with entry Gij = 1 representing a directed arc from Xi to Xj. The set of parents of a node Xi is denoted by PA(Xi) = [Xi1, …, Xik]T. In addition, we define a p × p matrix, P, which records all the directed paths in the structure, i.e., if there is a directed path from Xi to Xj, entry Pij = 1; otherwise, Pij = 0.
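As a minimal sketch of this notation (assuming NumPy and 0-based node indices; the helper name `markov_blanket` and the toy structure are illustrative, not part of the paper), the parents, children, and spouses of a node can be read directly from G:

```python
import numpy as np

def markov_blanket(G, j):
    """Return the Markov blanket of node j as a set of 0-based indices.

    G[i, k] = 1 encodes a directed arc from X_i to X_k, as in the text.
    """
    parents = set(np.flatnonzero(G[:, j]).tolist())
    children = set(np.flatnonzero(G[j, :]).tolist())
    spouses = set()
    for c in children:
        # Every other parent of a child of X_j is a spouse of X_j.
        spouses |= set(np.flatnonzero(G[:, c]).tolist())
    return (parents | children | spouses) - {j}

# Toy structure (an assumption for illustration): X0 -> X2 <- X1, X2 -> X3
G = np.zeros((4, 4), dtype=int)
G[0, 2] = G[1, 2] = G[2, 3] = 1

mb_of_x0 = markov_blanket(G, 0)  # child X2 and spouse X1
mb_of_x2 = markov_blanket(G, 2)  # parents X0, X1 and child X3
```

In this toy graph X0 has no parents, so its blanket consists only of its child and the child's other parent.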

In addition to the structure, other important components of a BN are the parameters. The parameters are the conditional probability distribution of each node given its parents. Specifically, when the nodes follow a multivariate Gaussian distribution, a regression-type parameterization can be adopted, i.e., $X_i = \beta_i^T PA(X_i) + \varepsilon_i$ with $\varepsilon_i \sim N(0, \sigma_i^2)$ and $\beta_i = [\beta_{i_1 i}, \cdots, \beta_{i_k i}]^T$. Then, the parameters of a BN are B = [β1, …, βp]. Without loss of generality, we assume that the nodes are standardized, i.e., each with a zero mean and unit variance. This means, if using xi = [xi1, …, xin] to denote the sample vector for Xi, and n to denote the sample size, we have $\frac{1}{n}\sum_{j=1}^{n} x_{ij} = 0$ and $\frac{1}{n-1} x_i x_i^T = 1$.
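The regression-type parameterization and the standardization step can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the noise variances are set to one, the node order is assumed to be a topological order, the sample variance uses the n − 1 denominator, and the function names are hypothetical.

```python
import numpy as np

def simulate_gaussian_bn(B, n, rng):
    """Draw n samples from X_i = beta_i^T PA(X_i) + eps_i with unit noise variance.

    B[k, i] is the coefficient of parent X_k in the regression of X_i.
    B is assumed strictly upper triangular, so index order is a topological order.
    """
    p = B.shape[0]
    X = np.zeros((p, n))
    for i in range(p):
        X[i] = B[:, i] @ X + rng.standard_normal(n)
    return X

def standardize(X):
    """Center each row and scale it to unit sample variance (denominator n - 1)."""
    Xc = X - X.mean(axis=1, keepdims=True)
    return Xc / Xc.std(axis=1, ddof=1, keepdims=True)

rng = np.random.default_rng(0)
B = np.zeros((3, 3))
B[0, 1] = 0.8   # arc X0 -> X1
B[1, 2] = 0.8   # arc X1 -> X2
n = 50
X = standardize(simulate_gaussian_bn(B, n, rng))

row_sums = X.sum(axis=1)             # zero mean for every node
squared_norms = (X * X).sum(axis=1)  # x_i x_i^T = n - 1 for every node
```

With this convention each standardized sample vector has squared norm n − 1, which is the quantity that appears in the bound (n − 1)/λ1 used later in the paper.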

3. THE PROPOSED SPARSE BN STRUCTURE LEARNING ALGORITHM

One of the challenging issues in BN structure learning is to ensure that the learned structure must be a DAG, i.e., no cycle is present. To achieve this, we first identify a sufficient and necessary condition for a DAG, which is given as Lemma 1 below.

Lemma 1. A sufficient and necessary condition for a DAG is βji × Pij = 0 for every pair of nodes Xi and Xj.

Proof. To prove the necessary condition, suppose that a BN structure, G, is a DAG. Assume that βji × Pij ≠ 0 for a pair of nodes Xi and Xj. Then, there exists a directed arc from Xj to Xi (βji ≠ 0) and a directed path from Xi to Xj (Pij = 1), i.e., there is a cycle in G, which contradicts our presumption that G is a DAG. To prove the sufficient condition, suppose that βji × Pij = 0 for every pair of nodes Xi and Xj. If G is not a DAG, i.e., there is a cycle, it means that there exist two variables, Xi and Xj, with a directed arc from Xj to Xi (βji ≠ 0) and a directed path from Xi to Xj (Pij = 1). This contradicts our assumption that βji × Pij = 0 for every pair of nodes Xi and Xj.
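Lemma 1 translates into a direct acyclicity test. The sketch below is illustrative and assumes NumPy; the path matrix is obtained here with Warshall's transitive closure (a choice made for brevity, not the procedure used in the paper), and the function names are hypothetical.

```python
import numpy as np

def reachable(B):
    """P[i, j] is True if a directed path leads from X_i to X_j.

    B[k, i] != 0 encodes an arc X_k -> X_i (X_k is a parent of X_i).
    """
    P = (B != 0)
    for k in range(P.shape[0]):
        # Warshall step: i reaches j if i reaches k and k reaches j.
        P = P | (P[:, k][:, None] & P[k, :][None, :])
    return P

def satisfies_lemma1(B):
    """Check beta_ji * P_ij = 0 for every pair of nodes."""
    P = reachable(B)
    # Entry [j, i] of P.T is P[i, j], so this pairs beta_ji with P_ij.
    return not np.any((B != 0) & P.T)

chain = np.zeros((3, 3))
chain[0, 1] = 0.5     # X0 -> X1
chain[1, 2] = 0.5     # X1 -> X2

cyclic = chain.copy()
cyclic[2, 0] = 0.3    # X2 -> X0 closes the cycle X0 -> X1 -> X2 -> X0

chain_is_dag = satisfies_lemma1(chain)
cyclic_is_dag = satisfies_lemma1(cyclic)
```

The chain passes the test, while the added arc X2 → X0 pairs a nonzero β20 with P02 = 1 and therefore fails it.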

Based on Lemma 1, we further present our formulation of the sparse BN structure learning. It is an optimization problem with the objective function and constraints given by:

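$$\hat{B} = \arg\min_{B} \sum_{i=1}^{p} \left\{ (x_i - \beta_i^T x_{/i})(x_i - \beta_i^T x_{/i})^T + \lambda_1 \|\beta_i\|_1 \right\} \quad \text{s.t. } \beta_{ji} \times P_{ij} = 0,\ i, j = 1, \ldots, p,\ i \neq j \tag{1}$$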

The notations are explained as follows: xi = [xi1, …, xin] denotes the sample vector for Xi, where n is the sample size. x/i denotes the sample matrix for all the variables except Xi. The first term in the objective function, $(x_i - \beta_i^T x_{/i})(x_i - \beta_i^T x_{/i})^T$, is a profile likelihood to measure the model fit. The second term, ‖βi‖1, is the sum of the absolute values of the elements in βi and thus is the so-called L1-norm penalty [15]. The regularization parameter, λ1, controls the number of nonzero elements in the solution to βi, β̂i: the larger λ1, the fewer nonzero elements. Because fewer nonzero elements in β̂i correspond to fewer arcs in the learned BN structure, a larger λ1 results in a sparser structure. In addition, the constraints are to assure that the learned BN is a DAG (see Lemma 1 and Theorem 1 below). We remark that these constraints are functions of B only, since P = expm(G) [20]. Here, expm(G) is the matrix exponential of G.

Solving the constrained optimization in (1) is difficult. Therefore, the penalty method [21] is employed to transform it into an unconstrained optimization problem, through adding an extra L1-norm penalty into the original objective function, i.e.,

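$$\hat{B}_{ap} = \arg\min_{B} \sum_{i=1}^{p} f_i(\beta_i), \quad f_i(\beta_i) = (x_i - \beta_i^T x_{/i})(x_i - \beta_i^T x_{/i})^T + \lambda_1 \|\beta_i\|_1 + \lambda_2 \sum_{j \in X_{/i}} |\beta_{ji} \times P_{ij}| \tag{2}$$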

where j ∈ X/i denotes that the variable indexed by j, i.e., Xj, is a variable different from Xi. Here, the term λ2 ∑j∈X/i |βji × Pij| pushes βji × Pij toward zero. Under some mild conditions, there exists a λ2* such that for all λ2 ≥ λ2*, B̂ap is also a minimizer for (1) [21]. Theorem 1 gives a practical estimation for λ2*.
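To make the roles of the two penalties concrete, the following sketch evaluates the per-node penalized objective. It assumes that the fit term is the residual sum of squares of Xi regressed on the other variables, that the data are standardized with the n − 1 denominator, and that P is supplied as a fixed 0/1 matrix; the function name `f_i` mirrors the notation of the text but the code itself is illustrative.

```python
import numpy as np

def f_i(i, B, X, P, lam1, lam2):
    """Penalized objective for node i.

    X: p x n standardized data, B[j, i] = beta_ji (with B[i, i] = 0),
    P[i, j] = 1 if a directed path leads from X_i to X_j.
    """
    beta = B[:, i]
    resid = X[i] - beta @ X
    fit = resid @ resid                                   # residual sum of squares
    sparsity = lam1 * np.abs(beta).sum()                  # L1 penalty on the parents of X_i
    acyclicity = lam2 * np.abs(beta * P[i, :]).sum()      # penalty on beta_ji * P_ij
    return fit + sparsity + acyclicity

rng = np.random.default_rng(1)
X = rng.standard_normal((3, 40))
X = X - X.mean(axis=1, keepdims=True)
X = X / X.std(axis=1, ddof=1, keepdims=True)

B = np.zeros((3, 3))
P = np.zeros((3, 3))
value_at_zero = f_i(0, B, X, P, lam1=2.0, lam2=5.0)  # equals x_0 x_0^T = n - 1

B[1, 0] = 0.5                      # candidate arc X1 -> X0
no_path = f_i(0, B, X, P, 2.0, 5.0)
P[0, 1] = 1                        # now suppose X0 already reaches X1
with_path = f_i(0, B, X, P, 2.0, 5.0)
```

The difference `with_path - no_path` is exactly λ2|β10|, i.e., the extra cost of an arc that would close a cycle.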

Theorem 1. Any λ2 > (n − 1)²p/λ1 − λ1 will guarantee B̂ap to be a DAG.

Proof. To prove this, we first need to show that with a certain value of λ1 and any value of λ2, B̂ap is bounded. This can be seen from $\lambda_1 \|\hat{\beta}_i\|_1 \le (x_i - \hat{\beta}_i^T x_{/i})(x_i - \hat{\beta}_i^T x_{/i})^T + \lambda_1 \|\hat{\beta}_i\|_1 + \lambda_2 \sum_{j \in X_{/i}} |\hat{\beta}_{ji} \times P_{ij}| \le x_i x_i^T = n - 1$, for each β̂i. The last inequality holds because $x_i x_i^T$ is the value of the function on the left-hand side with βi = 0, which cannot be smaller than the function value with βi = β̂i. The last equality holds since we have standardized all the variables. Thus, we know that maxk∈PA(Xi)|β̂ki| ≤ (n − 1)/λ1. Now, we use proof by contradiction to show that, with any λ2 > (n − 1)²p/λ1 − λ1, we will get a DAG. Suppose that such a λ2 doesn’t guarantee a DAG. Then, there must be at least a pair of variables Xi and Xj with βji × Pij ≠ 0, i.e., βji ≠ 0 and Pij = 1. Based on the first order optimality condition, βji ≠ 0 i.f.f. . Here, denotes the elements in β̂i without β̂ji, and x/(i,j) denotes the sample matrix for all the variables except Xi and Xj. However, we have

which results in

Theorem 1 implies that if we specify any λ2 > (n − 1)²p/λ1 − λ1, we will get a minimizer of (1) through solving (2). However, in practice, directly solving (2) by specifying a large λ2 may converge slowly. This is because the unconstrained problem in (2) may be ill-conditioned when λ2 is too large [40]. To avoid this, the “warm start” method [21] can be used, which works in the following way: first, it specifies a series of values for λ2, i.e., $\lambda_2^{(0)} < \lambda_2^{(1)} < \cdots < \lambda_2^{(M)}$, where $\lambda_2^{(0)}$ is small and $\lambda_2^{(M)} > (n-1)^2 p/\lambda_1 - \lambda_1$; next, it optimizes (2) with $\lambda_2^{(0)}$ to get a minimizer $\hat{B}^{(0)}$, using an arbitrary initial value; then, it optimizes (2) with $\lambda_2^{(1)}$, using $\hat{B}^{(0)}$ as an initial value; this process iterates, until it optimizes (2) with $\lambda_2^{(M)}$. Because each minimizer serves as the initial value for the next optimization problem, this method can be quite efficient.
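The warm-start procedure can be sketched as a loop over an increasing grid of λ2 values. The geometric grid, the 1% margin above the Theorem 1 threshold, and all function names below are assumptions made for illustration; the paper does not specify how the series is chosen.

```python
import numpy as np

def lambda2_threshold(n, p, lam1):
    """Bound from Theorem 1: any lambda_2 above this value yields a DAG."""
    return (n - 1) ** 2 * p / lam1 - lam1

def lambda2_schedule(n, p, lam1, start=1e-2, steps=8):
    """Increasing lambda_2 values whose last element exceeds the threshold."""
    end = max(1.01 * lambda2_threshold(n, p, lam1), start)
    return np.geomspace(start, end, steps)

def warm_start(solve, B_init, schedule):
    """Solve (2) for each lambda_2 in turn, reusing the previous minimizer."""
    B = B_init
    for lam2 in schedule:
        B = solve(B, lam2)
    return B

schedule = lambda2_schedule(n=100, p=10, lam1=5.0)

visited = []
def toy_solver(B_init, lam2):
    # Hypothetical stand-in for one run of the solver on (2) at a fixed lambda_2.
    visited.append(lam2)
    return B_init

final = warm_start(toy_solver, np.zeros((2, 2)), schedule)
```

In practice `toy_solver` would be replaced by an actual optimizer of (2) that accepts an initial value for B.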

Given λ1 and λ2, the BCD algorithm [22] can be employed to solve (2). The BCD algorithm updates each βi iteratively, assuming that all other parameters are fixed. In our situation, this is equivalent to optimizing fi(βi) iteratively and the algorithm will terminate when some convergence conditions are satisfied. We remark that fi(βi), after some transformation, is similar to LASSO [15], i.e.,

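$$f_i(\beta_i) = (x_i - \beta_i^T x_{/i})(x_i - \beta_i^T x_{/i})^T + \sum_{j \in X_{/i}} (\lambda_1 + \lambda_2 |P_{ij}|)\,|\beta_{ji}| \tag{3}$$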

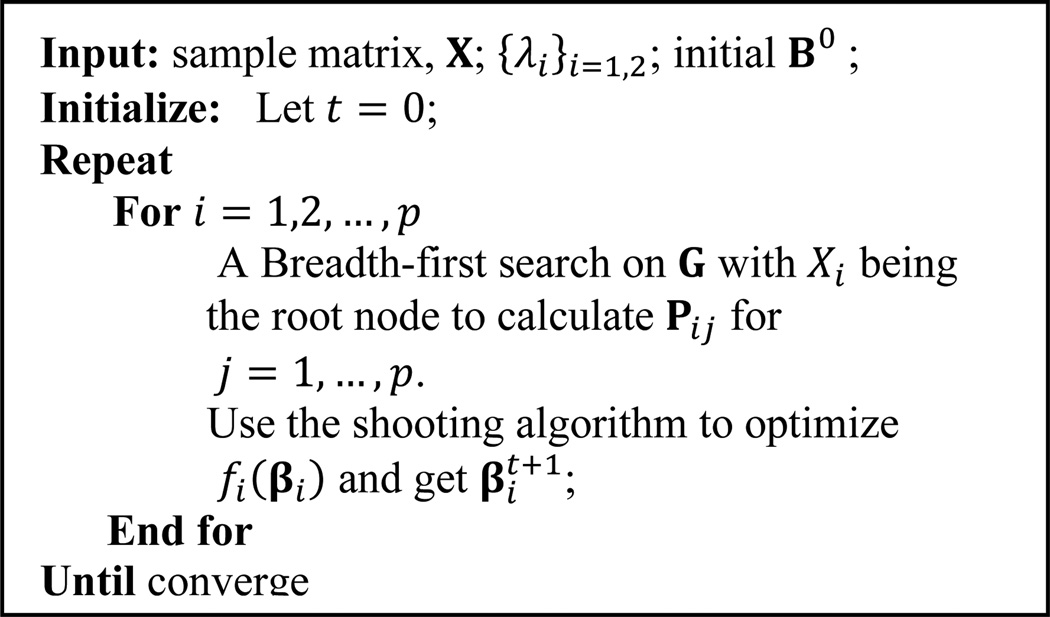

As a result, the shooting algorithm [23] for LASSO may be used to optimize fi(βi) in each iteration. Note that at each iteration for optimizing fi(βi), we also need to calculate Pij for j ∈ X/i. This can be done by a breadth-first search on G with Xi being the root node. A more detailed description of the BCD algorithm used to solve (2) is given in Figure 2.

Figure 2.

The BCD algorithm used for solving (2)
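The procedure described above can be sketched in a few dozen lines. This is a simplified illustration, not a transcription of Figure 2: it assumes standardized data, a residual-sum-of-squares fit term, an all-zero initial B, and a fixed λ2 without warm start; the names `paths_from` and `sbn_bcd` are hypothetical.

```python
import numpy as np
from collections import deque

def paths_from(i, B):
    """Boolean vector whose entry j is True if a directed path X_i -> ... -> X_j exists.

    B[k, j] != 0 encodes an arc X_k -> X_j; the search is breadth-first from X_i.
    """
    seen = np.zeros(B.shape[0], dtype=bool)
    queue = deque([i])
    while queue:
        u = queue.popleft()
        for v in np.flatnonzero(B[u]):
            if not seen[v]:
                seen[v] = True
                queue.append(v)
    return seen

def sbn_bcd(X, lam1, lam2, sweeps=20, inner=100, tol=1e-8):
    """Block coordinate descent over the columns of B, starting from B = 0."""
    p, n = X.shape
    B = np.zeros((p, p))
    sq_norms = (X * X).sum(axis=1)
    for _ in range(sweeps):
        B_prev = B.copy()
        for i in range(p):
            # Weighted L1 penalty: lam1 everywhere, plus lam2 where X_i already reaches X_j.
            w = lam1 + lam2 * paths_from(i, B)
            for _ in range(inner):  # shooting: coordinate-wise soft thresholding
                largest_step = 0.0
                for j in range(p):
                    if j == i:
                        continue
                    partial_resid = X[i] - B[:, i] @ X + B[j, i] * X[j]
                    c = X[j] @ partial_resid
                    new = np.sign(c) * max(abs(c) - w[j] / 2.0, 0.0) / sq_norms[j]
                    largest_step = max(largest_step, abs(new - B[j, i]))
                    B[j, i] = new
                if largest_step < tol:
                    break
        if np.abs(B - B_prev).max() < tol:
            break
    return B

# Toy data (an assumption for illustration): a three-node chain.
rng = np.random.default_rng(0)
n = 200
x0 = rng.standard_normal(n)
x1 = 0.8 * x0 + rng.standard_normal(n)
x2 = 0.8 * x1 + rng.standard_normal(n)
X = np.vstack([x0, x1, x2])
X = X - X.mean(axis=1, keepdims=True)
X = X / X.std(axis=1, ddof=1, keepdims=True)

lam1 = 10.0
lam2 = 10 * ((n - 1) ** 2 * X.shape[0] / lam1 - lam1)
B_hat = sbn_bcd(X, lam1, lam2)

# Lemma 1 check: no parent of X_i may be reachable from X_i.
is_dag = all(not np.any((B_hat[:, i] != 0) & paths_from(i, B_hat)) for i in range(3))
```

Because a chain and its reversal are indistinguishable for Gaussian data, the sketch may orient the arcs in either direction; what the penalty enforces is acyclicity, which `is_dag` verifies through the condition of Lemma 1. The sketch recomputes the residual from scratch in every coordinate update, so it does not attain the O(pn) per-block cost discussed in the text.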

Finally, we want to mention that the L2-norm penalty, λ2∑j∈X/i(βji × Pij)², might also be used in (2). The advantage is that it is a differentiable function of βji. Also, as shown in [21], βji × Pij → 0 when λ2 → ∞. However, the limitation of the L2-norm penalty, compared with the L1-norm penalty, is that there is no guarantee that a finite λ2 exists to assure βji × Pij = 0 for all pairs of Xi and Xj.

Time complexity analysis of the proposed algorithm: Each iteration of the BCD algorithm consists of two operations: a shooting algorithm and a breadth-first search on G. These two operations cost O(pn) [24] and O(p + |G|), respectively. Here |G| is the number of nonzero elements in G. If G is sparse, i.e., |G| = Cp with a small constant C, O(p + |G|) = O(p). Thus, the computational cost at each iteration is only O(pn). Furthermore, each sweep through all columns of B costs O(p²n). Our simulation study shows that it usually takes no more than 5 sweeps to converge.

4. THEORETICAL ANALYSIS OF THE COMPETITIVE ADVANTAGE OF THE PROPOSED SBN ALGORITHM

Simulation studies in Sec. 5 will show that SBN is more accurate than various existing algorithms that employ a two-stage approach. This section aims to provide some theoretical insights about why it is so. Recall that Stage 1 of the two-stage approach is to identify potential parents of each variable Xi. The existing algorithms achieve this by identifying the MB of Xi. A typical way is variable selection based on regression, i.e., to build a regression of Xi on all other variables and consider the variables selected to be the MB. The key differences between various algorithms are the type of regression used and the method in variable selection. For example, the TC algorithm [18] uses ordinary regression and a t-test for variable selection; the L1MB-DAG algorithm [14] uses LASSO.

In the regression of Xi, the coefficients for the variables that are not in the MB will be small (theoretically zero due to the definition of MB). However, the coefficients for the parents may also be very small due to the correlation between the parents and children. As a result, some parents may not be selected in the variable selection, i.e., they will be missed in Stage 1 of the two-stage approach, leading to greater BN learning errors. In contrast, the SBN may not suffer from this, because it is a one-stage approach that identifies the parents directly.

To further illustrate this point, we analyze one two-stage algorithm, the TC algorithm. TC does variable selection using a t-test. To determine whether a variable should be selected, a t-test uses the statistic β̂/se(β̂), where β̂ is the estimate for the regression coefficient of this variable and se(β̂) is the standard error. The larger the β̂/se(β̂), the higher chance the variable will be selected. Theorems 2 and 3 below show that even though the value of β̂/se(β̂) corresponding to a parent of Xi is large in the true BN, its value may decrease drastically in the regression of Xi on all other variables. Theorem 2 focuses on a specific type of BNs, a general tree, in which all variables have one common ancestor and there is at most one directed path between two variables. Theorem 3 focuses on a general inverse tree, which becomes a general tree if all the arcs are reversed. The proof of Theorem 2 can be found in the Appendix. The proof of Theorem 3 is not shown here due to space limitations.

Theorem 2. Consider a general tree with m + 2 variables, whose structure and parameters are given by X1 = e1, X2 = β12X1 + e2, Xi = β2iX2 + ei, i = 3,4, …, m + 2. All the variables have unit variance. If X2 is regressed on all other variables in Stage 1 of TC, i.e., , the coefficient for X2's parent, X1, is:

Theorem 3. Consider a general inverse tree with m + l + 2 variables, whose structure and parameters are given by Xi = ei, i = 1,2, …, l,l + 3, …, l + m, . All the variables have unit variance. If Xl + 1 is regressed on all other variables, i.e.,, the coefficients for Xl+1 's parent, Xk(k = 1,2, …, l), are:

For example, consider a general tree with β12 = 0.3, β2i = 0.8, m = 8. Based on Theorem 2, and .

Consider a general inverse tree with seven variables: Xi,i = 1, ⋯, 5, are parents of X6 which is a parent of X7; [β16, ⋯, β56] = [0.24,0.325,0.256,0.304,0.216]; β67 = 0.3. Based on Theorem 3, and is equal to which is less than [β16/se(β16), ⋯, β56/se(β56)] since the later one is equal to

Note that these theorems derive the relationship between βMB (βMB/se(βMB)) and β (β/se(β)) at the population-level (i.e., sampling error is not considered), so they use the notation “β” not “β̂”.

5. SIMULATION STUDIES ON SYNTHETIC DATA

We show four sets of simulations. The first one is to show that, on a general tree, the existing algorithms based on the two-stage approach may miss some true parents with high probabilities, while the proposed SBN performs well. The second simulation is to compare the structure learning accuracy of SBN with other competing algorithms, on benchmark networks. The third and fourth simulations are to investigate the scalability of SBN and compare it with other competing algorithms.

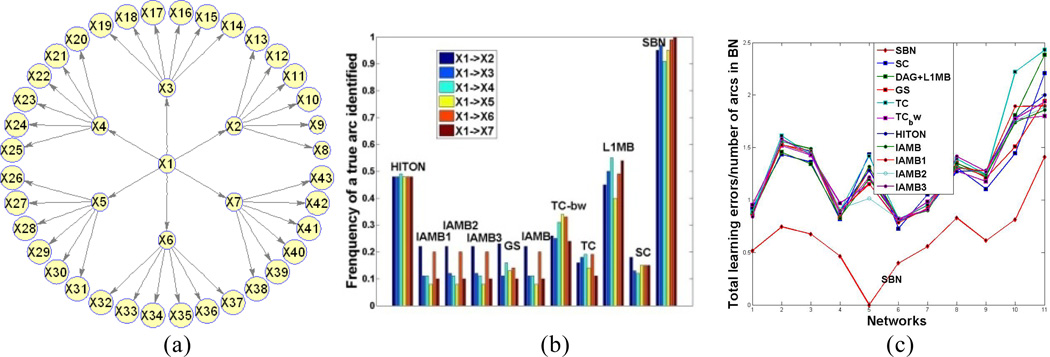

5.1 Learning accuracy for general tree

Ten existing algorithms are selected: HITON-PC [25], IAMB and three of its variants [26], GS [17], SC [13], TC and its advanced version TC-bw [18], and L1MB [14]. We simulate data from the general tree in Figure 3(a) with a sample size of 200.

Figure 3.

(a): The general tree in Sec. 5.1 (regression coefficients are 0.3 for arcs between X1 and Xi, i = 2, …, 7 and 0.8 for others); (b) Simulation results for the general tree; (c) Simulation results for 11 benchmark networks.

We apply the selected algorithms on the simulated data; the parameters of each algorithm are selected in the way that the authors have suggested in their papers, respectively. In applying the proposed SBN, λ1 is selected by BIC; λ2 is set to be 10[(n − 1)²p/λ1 − λ1], which empirically guarantees a DAG to be learned. The initial value of SBN is the output of L1MB, which uses LASSO in Stage 1 to identify the MB for each variable. We treat the identified MB by L1MB as parents and use the resulting “BN” (not necessarily a DAG) as the initial value for SBN. The results over 100 repetitions are shown in Figure 3(b). The X-axis records the 10 selected algorithms and the proposed SBN (the last one). The Y-axis records the frequency of a true arc being identified. Each color bar corresponds to a true arc indicated in the legend. Six true arcs are shown, i.e., the arcs between X1 and Xi, i = 2, ⋯, 7. Because X1 is the parent of Xi, the Y-axis actually shows how well the parent of Xi can be identified by each of the algorithms. Figure 3(b) shows that SBN performs much better than all others. This can be explained by Theorem 2. Specifically, the MB of Xi includes X1 and six children. Although the coefficient linking X1 to Xi is as high as 0.3, this coefficient in the regression that regresses Xi on its MB reduces to 0.028, due to the inclusion of the children in the regression. As a result, X1 will have a high probability of being excluded from the MB identified in Stage 1 of the existing algorithms.
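The drop from 0.3 to 0.028 can be checked at the population level (no sampling error) by solving the normal equations on the implied covariance matrix. The sketch below is illustrative and assumes unit-variance variables, one parent with coefficient 0.3, and six children with coefficient 0.8; the function names are hypothetical.

```python
import numpy as np

def tree_covariance(b_parent, b_child, m):
    """Population covariance of (parent, node, m children) with unit variances.

    Index 0 is the parent X1, index 1 is the node Xi, indices 2.. are its children.
    """
    p = m + 2
    S = np.eye(p)
    S[0, 1] = S[1, 0] = b_parent
    for c in range(2, p):
        S[1, c] = S[c, 1] = b_child
        S[0, c] = S[c, 0] = b_parent * b_child   # parent and child are linked through the node
        for d in range(c + 1, p):
            S[c, d] = S[d, c] = b_child ** 2     # two children share the node as common cause
    return S

def regression_on_others(S, target):
    """Population coefficients of the regression of one variable on all others."""
    others = [k for k in range(S.shape[0]) if k != target]
    return np.linalg.solve(S[np.ix_(others, others)], S[others, target])

S = tree_covariance(b_parent=0.3, b_child=0.8, m=6)
coef_parent = regression_on_others(S, target=1)[0]   # coefficient of the parent
```

Here `coef_parent` evaluates to approximately 0.028, so the parent's contribution is almost entirely absorbed by the six strongly correlated children.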

5.2 Learning accuracy for Benchmark networks

We select seven moderately large networks from BNR [27]. We also use the tiling technique to produce two large BNs, alarm2 and hailfinder2. Two other networks with specific structures, factors and chain, are also considered. The 11 networks are (number of nodes/edges): 1. factors (27/68), 2. alarm (37/46), 3. barley (48/84), 4. carpo (61/74), 5. chain (7/6), 6. hailfinder (56/66), 7. insurance (27/52), 8. mildew (35/46), 9. water (32/66), 10. alarm2 (296/410), 11. hailfinder2 (280/390). To specify the parameters of a network, i.e., to specify the regression coefficients of each variable on its parents, we randomly sample from ±1 + N(0,1/16). Then, we simulate data from the networks with sample size 100, and apply the 11 algorithms (the 10 competing algorithms and SBN) to learn the BN structures. The results over 100 repetitions are shown in Figure 3(c). The X-axis records the 11 networks. The Y-axis records the ratio of the total learning error (false positives plus false negatives) to the number of arcs in the true BN. Each curve corresponds to one of the 11 algorithms under comparison. We can observe that the lowest curve (i.e., best performance) is SBN.
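The coefficient sampling and the error measure on the Y-axis can be sketched as follows. Two assumptions are made here and should be checked against the original experimental setup: N(0, 1/16) is read as a normal distribution with variance 1/16 (standard deviation 1/4), and a reversed arc is counted as one false positive plus one false negative. The function names are hypothetical.

```python
import numpy as np

def sample_coefficients(G, rng):
    """Draw a coefficient of the form +/-1 + N(0, 1/16) for every arc of G."""
    signs = rng.choice([-1.0, 1.0], size=G.shape)
    noise = rng.normal(0.0, 0.25, size=G.shape)   # standard deviation 1/4
    return G * (signs + noise)

def error_ratio(G_true, G_hat):
    """(false positives + false negatives) / number of arcs in the true BN."""
    fp = np.sum((G_hat != 0) & (G_true == 0))
    fn = np.sum((G_hat == 0) & (G_true != 0))
    return (fp + fn) / np.count_nonzero(G_true)

# Toy true structure: X0 -> X1 -> X2
G_true = np.zeros((3, 3), dtype=int)
G_true[0, 1] = G_true[1, 2] = 1

# Toy learned structure: one correct arc, one missed arc, one spurious arc
G_hat = np.zeros((3, 3), dtype=int)
G_hat[0, 1] = 1
G_hat[0, 2] = 1

rng = np.random.default_rng(0)
B_true = sample_coefficients(G_true, rng)
ratio = error_ratio(G_true, G_hat)   # one false positive and one false negative over two arcs
```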

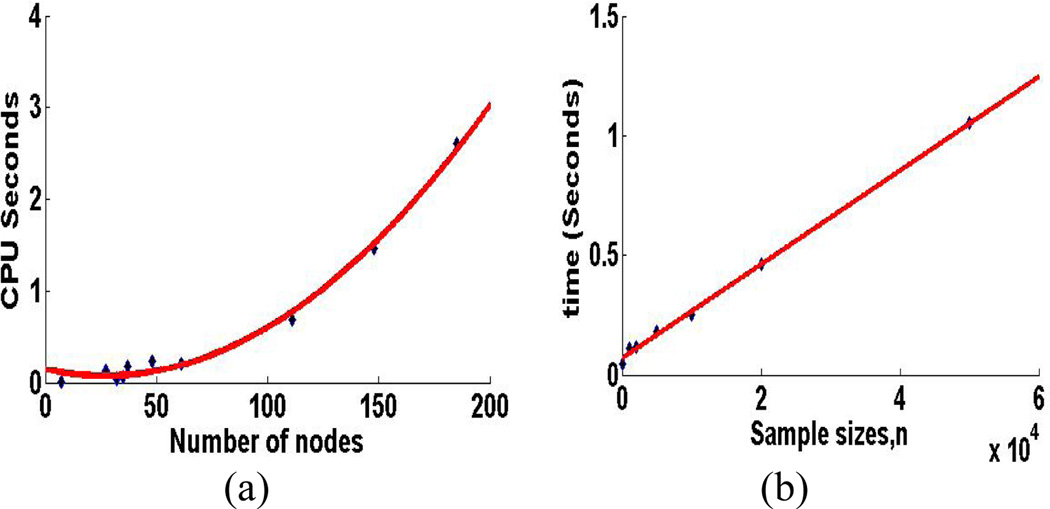

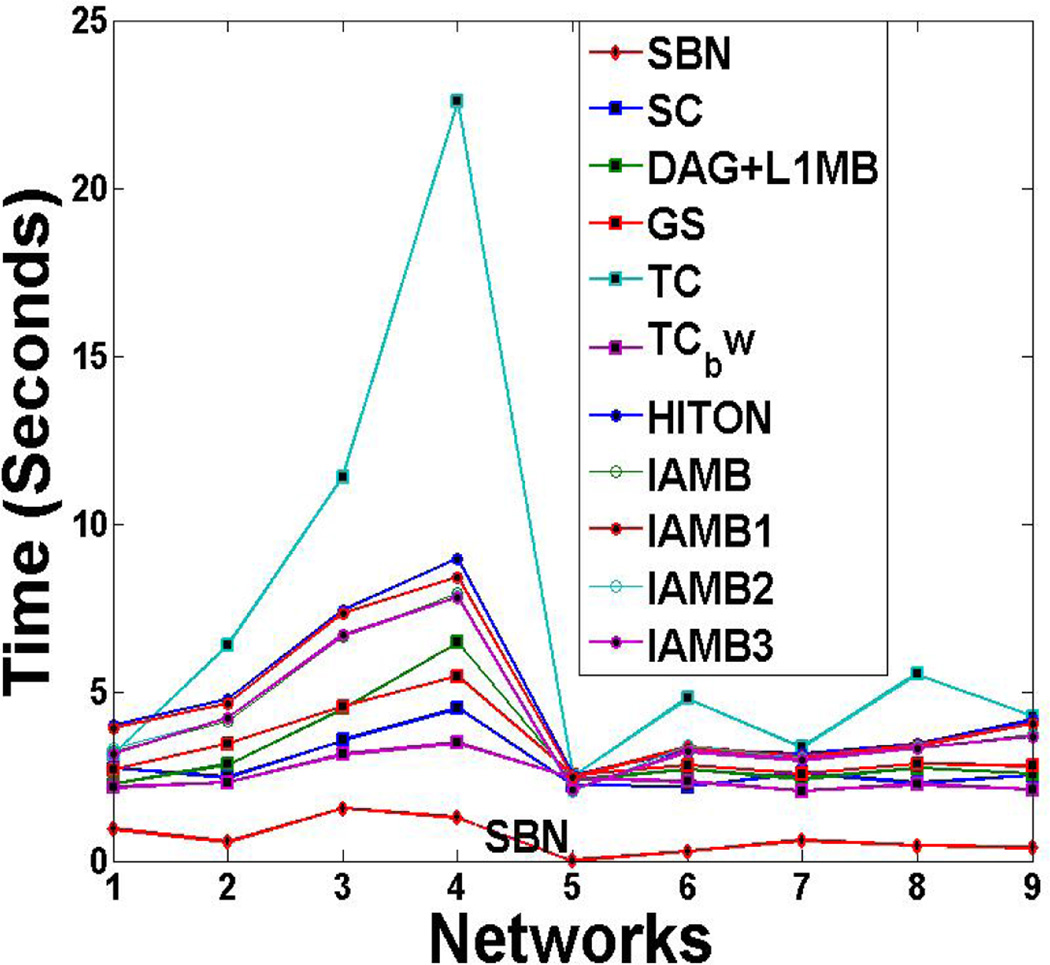

5.3 Scalability

We study two aspects of scalability for SBN: the scalability with respect to the number of variables in a BN, p, and the scalability with respect to the sample size, n. We use the CPU time for each sweep through all the columns of B as the performance measure. Specifically, we fix n = 1000 and vary p by using the 11 benchmark networks. Also, we fix p = 37 (the alarm network) and vary n. The results over 100 repetitions are shown in Figure 4 (a) and (b), respectively. It can be seen that the computational cost is linear in n and quadratic in p, which confirms our theoretical time complexity analysis in Section 3.

Figure 4.

Scalability of SBN with respect to (a) the number of variables in a BN, p, and (b) the sample size, n, on a computer with an Intel Core 2 processor (2.2 GHz) and 4 GB of memory

6. BRAIN EFFECTIVE CONNECTIVITY MODELING OF AD BY SBN

FDG-PET baseline images of 49 AD and 67 normal control (NC) subjects from the ADNI project (http://www.loni.ucla.edu/ADNI) were used in this study. After spatially normalizing the images to the Montreal Neurological Institute (MNI) template coordinate space, average PET counts were extracted from the 116 brain anatomical regions of interest (AVOIs) defined by the Automated Anatomical Labeling [28] technique. We then selected 42 AVOIs that are considered to be potentially relevant to AD, as reported in the literature. Each of the 42 AVOIs became a node in the SBN. Please see Table 2 for the name of each AVOI brain region. These regions are distributed in the four major neocortical lobes of the brain, i.e., the frontal, parietal, occipital, and temporal lobes.

Table 2.

Names of the AVOIs for effective connectivity modeling (L = left hemisphere, R = right hemisphere)

| No. | Frontal lobe | No. | Parietal lobe | No. | Occipital lobe | No. | Temporal lobe |
|---|---|---|---|---|---|---|---|
| 1 | Frontal_Sup_L | 13 | Parietal_Sup_L | 21 | Occipital_Sup_L | 27 | Temporal_Sup_L |
| 2 | Frontal_Sup_R | 14 | Parietal_Sup_R | 22 | Occipital_Sup_R | 28 | Temporal_Sup_R |
| 3 | Frontal_Mid_L | 15 | Parietal_Inf_L | 23 | Occipital_Mid_L | 29 | Temporal_Pole_Sup_L |
| 4 | Frontal_Mid_R | 16 | Parietal_Inf_R | 24 | Occipital_Mid_R | 30 | Temporal_Pole_Sup_R |
| 5 | Frontal_Sup_Medial_L | 17 | Precuneus_L | 25 | Occipital_Inf_L | 31 | Temporal_Mid_L |
| 6 | Frontal_Sup_Medial_R | 18 | Precuneus_R | 26 | Occipital_Inf_R | 32 | Temporal_Mid_R |
| 7 | Frontal_Mid_Orb_L | 19 | Cingulum_Post_L | | | 33 | Temporal_Pole_Mid_L |
| 8 | Frontal_Mid_Orb_R | 20 | Cingulum_Post_R | | | 34 | Temporal_Pole_Mid_R |
| 9 | Rectus_L | | | | | 35 | Temporal_Inf_L |
| 10 | Rectus_R | | | | | 36 | Temporal_Inf_R |
| 11 | Cingulum_Ant_L | | | | | 37 | Fusiform_L |
| 12 | Cingulum_Ant_R | | | | | 38 | Fusiform_R |
| | | | | | | 39 | Hippocampus_L |
| | | | | | | 40 | Hippocampus_R |
| | | | | | | 41 | ParaHippocampal_L |
| | | | | | | 42 | ParaHippocampal_R |

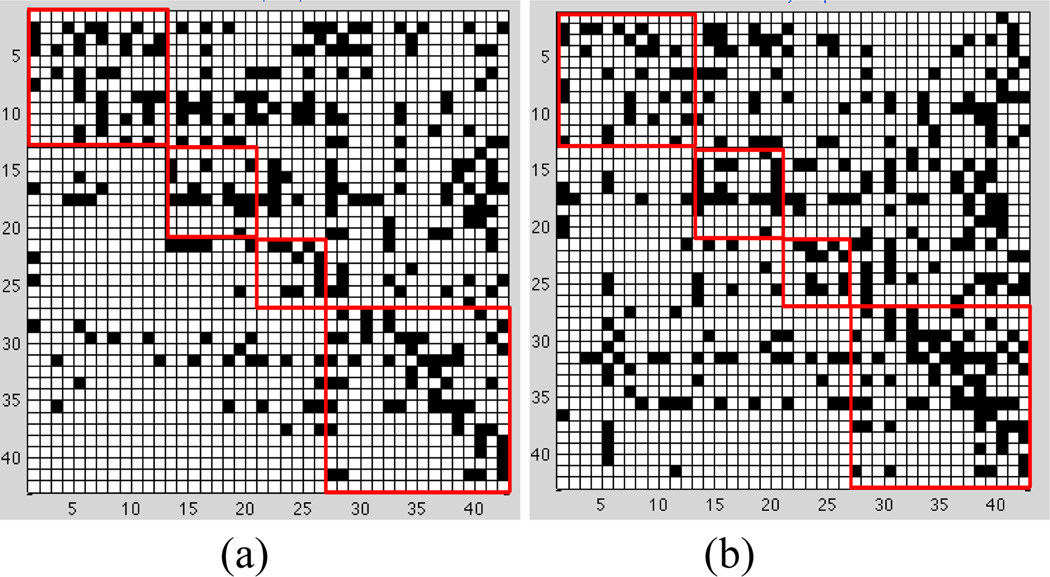

We apply SBN to learn a BN for AD and another one for NC, to represent their respective effective connectivity models. In the learning of an AD (or NC) effective connectivity model, the value for λ1 needs to be selected. In this paper, we adopt two criteria in selecting λ1: one is to minimize the prediction error of the model and the other is to minimize the BIC. Both criteria have been popularly adopted in sparse learning [12,14,15]. The two criteria lead to similar findings discovered from the effective connectivity models, so only the results based on the minimum prediction error are shown in this section. Specifically, Figure 6 shows the effective connectivity models for AD and NC. Each model is represented by a "matrix". Each row/column is one AVOI, Xj. A black cell at the i-th row and j-th column of the matrix represents that Xi is a parent of Xj. On each matrix, four red squares are used to highlight the four lobes, i.e., the frontal, parietal, occipital, and temporal lobes, from top-left to bottom-right. The black cells inside each red square reflect intra-lobe effective connectivity, whereas the black cells outside the squares reflect inter-lobe effective connectivity. The following interesting observations can be drawn from the effective connectivity models:

Figure 6.

Brain effective connectivity models by SBN. (a) AD. (b) NC.

Global-scale effective connectivity

The total number of arcs in a BN connectivity model, equal to the number of black cells in a matrix plot in Figure 6, represents the amount of effective connectivity (i.e., the amount of directional information flow) in the whole brain. This number is 285 and 329 for AD and NC, respectively. In other words, AD has 13.4% less effective connectivity than NC. Loss of connectivity in AD has been widely reported in the literature [34, 38–40].

Intra-/inter-lobe effective connectivity distribution

Aside from having different amounts of effective connectivity at the global scale, AD may also have a different distribution pattern of connectivity across the brain from NC. Therefore, we count the number of arcs in each of the four lobes and between each pair of lobes in the AD and NC effective connectivity models. The results are summarized in Table 3. It can be seen that the temporal lobe of AD has 22.9% less effective connectivity than NC. The decrease in connectivity in the temporal lobe of AD has been extensively reported in the literature [2, 29, 30]. The interpretation may be that AD is characterized by a dramatic cognitive decline and the temporal lobe is responsible for delivering memory and other cognitive functions. As a result, the temporal lobe is affected early and severely by AD and the connectivity network in this lobe is severely disrupted. On the other hand, the frontal lobe of AD has 27.6% more connectivity than NC. This has been interpreted as compensatory reallocation or recruitment of cognitive resources [29–32]. Because the regions in the frontal lobe are typically affected later in the course of AD (our data are mild to moderate AD), the increased connectivity in the frontal lobe may help preserve some cognitive functions in AD patients. In addition, AD shows a decrease in the amount of effective connectivity in the parietal lobe, which has also been reported to be affected by AD. There is no significant difference between AD and NC in the occipital lobe. This is reasonable because the occipital lobe is primarily involved in the brain’s visual function, which is not affected by AD.

Table 3.

Intra- and inter-lobe effective connectivity amounts

(a) AD

| | Frontal | Parietal | Occipital | Temporal |
|---|---|---|---|---|
| Frontal | 37 | 28 | 18 | 43 |
| Parietal | | 16 | 14 | 42 |
| Occipital | | | 10 | 23 |
| Temporal | | | | 54 |

(b) NC

| | Frontal | Parietal | Occipital | Temporal |
|---|---|---|---|---|
| Frontal | 29 | 32 | 12 | 61 |
| Parietal | | 20 | 16 | 42 |
| Occipital | | | 11 | 36 |
| Temporal | | | | 70 |

Furthermore, the most significant reduction in inter-lobe connectivity in AD is found between the frontal and temporal lobes, i.e., AD has 29.5% less effective connectivity than NC. This may be attributed to the temporal lobe disruption of the default mode network in AD [34].
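The percentages quoted in this subsection and in the global-scale comparison follow directly from the counts in Table 3. The short check below transcribes the table as upper-triangular arrays (diagonal entries are intra-lobe counts, off-diagonal entries are inter-lobe counts); the variable and function names are illustrative.

```python
import numpy as np

# Rows and columns are ordered Frontal, Parietal, Occipital, Temporal.
ad = np.array([[37, 28, 18, 43],
               [0, 16, 14, 42],
               [0, 0, 10, 23],
               [0, 0, 0, 54]])
nc = np.array([[29, 32, 12, 61],
               [0, 20, 16, 42],
               [0, 0, 11, 36],
               [0, 0, 0, 70]])

def percent_change(ad_count, nc_count):
    """Relative change of AD with respect to NC, in percent."""
    return 100.0 * (ad_count - nc_count) / nc_count

total_ad = int(ad.sum())   # total number of arcs in the AD model
total_nc = int(nc.sum())   # total number of arcs in the NC model

total_change = percent_change(total_ad, total_nc)              # global scale
temporal_change = percent_change(ad[3, 3], nc[3, 3])           # intra-temporal
frontal_change = percent_change(ad[0, 0], nc[0, 0])            # intra-frontal
frontal_temporal_change = percent_change(ad[0, 3], nc[0, 3])   # frontal-temporal
```

The table entries sum to the 285 and 329 arcs reported for AD and NC, which indicates that each inter-lobe entry counts arcs in both directions.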

Direction of local effective connectivity

One advantage of BNs over undirected graphical models in brain connectivity modeling is that the directed arcs in a BN reflect the directional effect of one region over another, i.e., the effective connectivity. Specifically, if there is a directed arc from brain region Xi to Xj, it indicates that Xi takes a dominant role in the communication with Xj. The connectivity models in Figure 6 reveal a number of interesting findings in this regard:

There are substantially fewer black cells in the area defined by rows 27–42 and columns 1–26 in AD than NC. Recall that rows 27–42 correspond to regions in the temporal lobe. Thus, this pattern indicates a substantial reduction in arcs pointing from temporal regions to the other regions in the AD brain, i.e., temporal regions lose their dominating roles in communicating information with the other regions as a result of AD. The loss is most severe in the communication from temporal to frontal regions.

Rows 31 and 35, corresponding to brain regions “Temporal_Mid_L” and “Temporal_Inf_L”, respectively, are among the rows with the largest number of black cells in NC, i.e., these two regions take a strongly dominant role in communicating with other regions in normal brains. However, the dominance of the two regions is reduced by 34.8% and 36.8%, respectively, in AD. A possible interpretation is that these are neocortical regions associated with amyloid deposition and early FDG hypometabolism in AD [34–37, 41–42].

Columns 39 and 40 correspond to regions “Hippocampus_L” and “Hippocampus_R”, respectively. There are a total of 33 black cells in these two columns in NC, i.e., 33 other regions dominantly communicate information with the hippocampus. However, this number reduces to 22 (33.3% reduction) in AD. The reduction is more severe in Hippocampus_L (50%). The hippocampus is well known to play a prominent role in making new memories and in recall. It has been widely reported that the hippocampus is affected early in the course of AD, leading to memory loss – the most common symptom of AD.

There are a total of 93 arcs pointing from the left hemisphere to the right hemisphere of the brain in NC; this number reduces to 71 (23.7% reduction) in AD. The number of arcs from the right hemisphere to the left hemisphere in AD is close to that in NC. This provides evidence that AD may be associated with inter-hemispheric disconnection and the disconnection is mostly unilateral, which has also been reported by some other papers [43–44].
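The counts in the findings above are all sums over blocks, rows, or columns of the binary matrices in Figure 6. The following sketch shows how such counts could be obtained, assuming the convention used in the text: a black cell at row i, column j denotes an arc from region i to region j. The matrix `toy` is a made-up 4-region example, not the study's data, and the function names are hypothetical. Indices are 0-based here, whereas the paper numbers regions from 1.

```python
import numpy as np


def count_arcs(adj, source_rows, target_cols):
    """Number of arcs pointing from the source regions to the target regions.

    adj[i, j] == 1 means there is an arc from region i to region j.
    """
    return int(adj[np.ix_(source_rows, target_cols)].sum())


def in_degree(adj, col):
    """Number of regions with an arc pointing into region `col`."""
    return int(adj[:, col].sum())


def out_degree(adj, row):
    """Number of regions that region `row` points to."""
    return int(adj[row, :].sum())


def percent_reduction(nc_count, ad_count):
    """Reduction from the NC count to the AD count, in percent."""
    return 100.0 * (nc_count - ad_count) / nc_count


# Toy adjacency matrix of a 4-region DAG (hypothetical, for illustration only).
toy = np.array([[0, 1, 1, 0],
                [0, 0, 1, 0],
                [0, 0, 0, 1],
                [0, 0, 0, 0]])

print(count_arcs(toy, [0, 1], [2, 3]))  # arcs from regions {0, 1} to {2, 3}
print(in_degree(toy, 2), out_degree(toy, 0))

# Reductions quoted in the text, recomputed from the reported counts.
print(round(percent_reduction(33, 22), 1))  # hippocampus in-arcs, NC vs. AD
print(round(percent_reduction(93, 71), 1))  # left-to-right arcs, NC vs. AD
```

With the real matrices, the temporal-to-other block would correspond to `count_arcs(adj, range(26, 42), range(0, 26))`, again under the 0-based indexing assumed here.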

7. CONCLUSION

In this paper, we proposed a BN structure learning algorithm, called SBN, for identifying effective connectivity of AD from FDG-PET data. SBN adopted a novel formulation that involves one L1-norm penalty term to impose sparsity on the learning and another penalty to ensure the learned BN to be a DAG. We performed theoretical analysis on the competitive advantage of SBN over the existing algorithms in terms of learning accuracy. Our analysis showed that the existing algorithms employ a two-stage approach in BN structure identification, which makes them have a high risk of failing to identify the parents of each variable. Also, we performed theoretical analysis on the time complexity of SBN, which showed that it is linear in the sample size and quadratic in the number of variables. Furthermore, we conducted experiments to compare SBN with 10 competing algorithms and found that SBN has significantly better performance in learning accuracy.

We applied SBN to identify the effective connectivity models of AD and NC from FDG-PET data, and found that the effective connectivity of AD differs from that of NC in many ways. Clinically, our findings may provide an additional tool for monitoring disease progression, evaluating treatment effects, and enabling early detection of network disconnection in prodromal AD. Future studies may be conducted to verify the statistical significance of the identified effective connectivity differences between AD and NC, and to estimate the strength of the directed arcs identified by SBN to help improve the sensitivity and specificity of the effective connectivity models. Finally, although this paper focuses on the structure learning of Gaussian BNs, the same formulation can be adapted to discrete BNs, which will be explored in our future research.

Figure 5.

Comparison of SBN with competing algorithms on the CPU time in structure learning of the other nine benchmark networks

Acknowledgments

This research is sponsored in part by NSF IIS-0953662 and NSF MCB-1026710.

Appendix A

There are some notation changes as follows for the convenience of the derivation: we use b0 = β12, b1 = β23, b2 = β24, …, bn = β2m, Y1 = X3, Y2 = X4, …, Yn = Xm.

Based on Wright’s second decomposition rule [33], the covariance matrix of all the variables, Tn, can be represented as a function of the parameters of the BN, b0, ⋯,bn, i.e.,

| (A-1) |
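As a sketch of the structure that Wright's rule implies here, assume that all variables are standardized to unit variance and ordered as (X2, X1, Y1, …, Yn). The ordering is an assumption, chosen so that deleting the first row and column leaves a matrix whose first row is 1, b0b1, …, b0bn, as used later in the derivation. Under these assumptions the covariance matrix would take the following form:

```latex
T_n =
\begin{pmatrix}
1      & b_0     & b_1     & \cdots & b_n     \\
b_0    & 1       & b_0 b_1 & \cdots & b_0 b_n \\
b_1    & b_0 b_1 & 1       & \cdots & b_1 b_n \\
\vdots & \vdots  & \vdots  & \ddots & \vdots  \\
b_n    & b_0 b_n & b_1 b_n & \cdots & 1
\end{pmatrix}
```

Each off-diagonal entry is the product of the coefficients along the single path connecting the two variables: X1 and X2 are linked by b0, X2 and Yk by bk, X1 and Yk by b0bk through X2, and Yk and Yl by bkbl through their common parent X2.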

Now, consider the regression of X2 on all other variables, i.e.,. According to the Least Square criterion, the regression coefficients,

| (A-2) |

where Cn is the sub-matrix obtained from Tn by deleting its 1st row and 1st column. Denote the inverse of Cn by An = (aij). Then,

| (A-3) |

Our final objective is to express by the parameters of the BN. This can be achieved if we can express a11,a12,a13,⋯,a1n+1 by the parameters of the BN, which is the goal of the following derivation.

It is known that

| (A-4) |

where C1j,n is the matrix obtained by deleting the 1st row and the jth column from Cn. So, the problem becomes the calculation of det(Cn) and det(C1j,n).

(i) Calculation of det(Cn): We first show the result:

| (A-5) |

Next, we will use the induction method in 1)–2) below to prove (A-5):

Then we need to prove that (A-5) holds for n. Based on the definition of a determinant,

| (A-6) |

where c1j is the entry at the 1st row and jth column of Cn.

Now we need to derive det(C1j,n) (only results are shown below due to page limits):

When j = 1,

| (A-7) |

When j ≠ 1,

| (A-8) |

Then, insert (A-7), (A-8), and c11 = 1, c12 = b0b1, …, c1n+1 = b0bn into (A-6):

This completes the proof for (A-5).
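As an independent cross-check on the induction, and not a transcription of (A-5), a closed form for det(Cn) can also be obtained from the matrix determinant lemma. The sketch assumes standardized variables with |bk| < 1, so that Cn has unit diagonal and off-diagonal entries bkbl; the symbols u and D are introduced here for illustration only.

```latex
C_n = D + u u^{\top},
\qquad u = (b_0, b_1, \ldots, b_n)^{\top},
\qquad D = \operatorname{diag}\!\left(1 - b_0^2,\; 1 - b_1^2,\; \ldots,\; 1 - b_n^2\right)

\det(C_n) = \det(D)\left(1 + u^{\top} D^{-1} u\right)
          = \prod_{k=0}^{n} \left(1 - b_k^2\right)
            \left(1 + \sum_{k=0}^{n} \frac{b_k^2}{1 - b_k^2}\right)
```

For n = 1 this reduces to 1 − b0²b1², which agrees with the determinant of the 2 × 2 matrix with unit diagonal and off-diagonal entry b0b1.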

(ii) Calculation of det(C1j,n): det(C1j,n) has been obtained in (A-7) and (A-8). Inserting (A-5), (A-7), and (A-8) into (A-4), we get:

Furthermore, a11 + a12b0b1 + a13b0b2 + ⋯ + a1n+1b0bn = 1. Inserting this into (A-3), we get . Plugging in the a11 above, we can get:

| (A-9) |

Obviously, the fraction at the right-hand side is between 0 and 1. Therefore, .
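The shrinkage argument can be checked numerically. The sketch below builds the covariance matrix implied by Wright's rule for standardized variables, regresses X2 on all other variables, and compares the coefficient of X1 with b0. The conclusion being checked, that the regression coefficient of the parent X1 is smaller in magnitude than b0 whenever X2 has a child with a nonzero coefficient, is taken here to be what the text after (A-9) states. The closed form used for comparison comes from the Sherman–Morrison identity and is not necessarily the form printed in (A-9). All function names and the example coefficients are hypothetical.

```python
import numpy as np


def wright_covariance(b):
    """Covariance of (X2, X1, Y1, ..., Yn) for standardized variables.

    b = [b0, b1, ..., bn], where b0 is the X1 -> X2 coefficient and
    b1..bn are the X2 -> Yk coefficients (all assumed to satisfy |bk| < 1).
    """
    b = np.asarray(b, dtype=float)
    size = b.size + 1
    T = np.empty((size, size))
    T[0, 1:] = b                  # cov(X2, X1) = b0, cov(X2, Yk) = bk
    T[1:, 0] = b
    T[1:, 1:] = np.outer(b, b)    # cov(X1, Yk) = b0*bk, cov(Yk, Yl) = bk*bl
    np.fill_diagonal(T, 1.0)      # unit variances
    return T


def regress_x2_on_rest(b):
    """Least-squares coefficients of X2 on (X1, Y1, ..., Yn)."""
    T = wright_covariance(b)
    C = T[1:, 1:]                 # covariance among the predictors
    c = T[1:, 0]                  # covariance between predictors and X2
    return np.linalg.solve(C, c)


def parent_coefficient_closed_form(b):
    """Coefficient of X1 via Sherman-Morrison (an assumed equivalent of A-9)."""
    b = np.asarray(b, dtype=float)
    b0, children = b[0], b[1:]
    s = np.sum(children**2 / (1.0 - children**2))
    return b0 / (1.0 + (1.0 - b0**2) * s)


b = [0.6, 0.5, -0.4, 0.3]         # hypothetical coefficients
coef = regress_x2_on_rest(b)
print("numerical  :", coef[0])
print("closed form:", parent_coefficient_closed_form(b))
print("shrunk relative to b0:", abs(coef[0]) < abs(b[0]))
```

The shrinkage grows with the number and strength of the children of X2, which illustrates why a parent with a weak coefficient could be missed when it is screened through a regression on the full Markov blanket.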

Next we derive the formula for . It is known that . Since and det(Cn) is given in (A-5), we can get:

| (A-10) |

Also, . Putting this together with (A-9) and (A-10), we can get:

It is obvious that the part in the “{ }” is less than one. Therefore,.

REFERENCES

- 1. Horwitz B. The Elusive Concept of Brain Connectivity. Neuroimage. 2003;19:466–470. doi: 10.1016/s1053-8119(03)00112-5.

- 2. Azari N, Rapoport S. Patterns of Interregional Correlations of Cerebral Glucose Metabolic Rates in Patients with Dementia of the Alzheimer Type. Neurodegeneration. 1992;1:101–111.

- 3. Friston KJ. Functional and Effective Connectivity in Neuroimaging: A Synthesis. Human Brain Mapping. 1994;2:56–78.

- 4. Alexander G, Moeller J. Application of the Scaled Subprofile Model to Functional Imaging in Neuropsychiatric Disorders: A Principal Component Approach to Modeling Brain Function in Disease. Human Brain Mapping. 1994;2:79–94.

- 5. Calhoun V, Adali T, Pearlson G, Pekar J. Spatial and Temporal Independent Component Analysis of Functional MRI Data Containing a Pair of Task-Related Waveforms. Human Brain Mapping. 2001;13:43–53. doi: 10.1002/hbm.1024.

- 6. McIntosh A, Bookstein F, Haxby J, Grady C. Spatial Pattern Analysis of Functional Brain Images Using Partial Least Squares. Neuroimage. 1996;3:143–157. doi: 10.1006/nimg.1996.0016.

- 7. Chiang J, Wang Z, McKeown MJ. Sparse Multivariate Autoregressive (mAR)-based Partial Directed Coherence (PDC) for EEG Analysis. Proceedings of the 2009 IEEE International Conference on Acoustics, Speech and Signal Processing; 2009. pp. 457–460.

- 8. Huang S, Li J, Sun L, Ye J, Fleisher A, Wu T, Chen K, Reiman E. Learning Brain Connectivity of Alzheimer’s Disease by Sparse Inverse Covariance Estimation. NeuroImage. 2010;50:935–949. doi: 10.1016/j.neuroimage.2009.12.120.

- 9. Hilgetag C, Kotter R, Stephan KE, Sporns O. Computational Methods for the Analysis of Brain Connectivity. In: Ascoli GA, editor. Computational Neuroanatomy. Totowa, NJ: Humana Press; 2002.

- 10. Bullmore E, Horwitz B, Honey G, Brammer M, Williams S, Sharma T. How Good is Good Enough in Path Analysis of fMRI? Neuroimage. 2000;11:289–301. doi: 10.1006/nimg.2000.0544.

- 11. Friston KJ, Harrison L, Penny W. Dynamic Causal Modeling. Neuroimage. 2003;19:1273–1302. doi: 10.1016/s1053-8119(03)00202-7.

- 12. Lam W, Bacchus F. Learning Bayesian Belief Networks: an Approach based on the MDL Principle. Computational Intelligence. 1994;10:269–293.

- 13. Friedman N, Nachman I, Pe’er D. Learning Bayesian Network Structure from Massive Datasets: The “Sparse Candidate” Algorithm. UAI 1999. 1999.

- 14. Schmidt M, Niculescu-Mizil A, Murphy K. Learning Graphical Model Structures using L1-Regularization Paths. AAAI 2007. 2007.

- 15. Tibshirani R. Regression Shrinkage and Selection via the Lasso. Journal of the Royal Statistical Society, Series B. 1996;58(1):267–288.

- 16. Tsamardinos I, Brown L, Aliferis C. The Max-Min Hill-Climbing Bayesian Network Structure Learning Algorithm. Machine Learning. 2006;65(1):31–78.

- 17. Margaritis D, Thrun S. Bayesian Network Induction via Local Neighborhoods. NIPS 1999. 1999.

- 18. Pellet J, Elisseeff A. Using Markov Blankets for Causal Structure Learning. JMLR. 2008;9:1295–1342.

- 19. Meek C. Causal Inference and Causal Explanation with Background Knowledge. UAI 1995. 1995.

- 20. Estrada E, Hatano N. Communicability in Complex Networks. Phys. Rev. E. 2008;77:036111. doi: 10.1103/PhysRevE.77.036111.

- 21. Gill PE. Class Notes for Math 271ABC: Numerical Optimization. UCSD: Department of Mathematics.

- 22. Bertsekas D. Nonlinear Programming. 2nd Edition. Belmont: Athena Scientific; 1999.

- 23. Fu W. Penalized Regressions: the Bridge versus the Lasso. Journal of Computational and Graphical Statistics. 1998;7(3):397–416.

- 24. Friedman J, Hastie T, Hofling H, Tibshirani R. Pathwise Coordinate Optimization. The Annals of Applied Statistics. 2007;2:302–332.

- 25. Aliferis C, Tsamardinos I, Statnikov A. HITON, a Novel Markov Blanket Algorithm for Optimal Variable Selection. AMIA 2003. 2003:21–25.

- 26. Tsamardinos I, Aliferis C. Towards Principled Feature Selection: Relevancy, Filters and Wrappers. AISTAT 2003. 2003.

- 27. http://www.cs.huji.ac.il/labs/compbio/Repository.

- 28. Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M. Automated Anatomical Labeling of Activations in SPM using a Macroscopic Anatomical Parcellation of the MNI MRI Single-Subject Brain. Neuroimage. 2002;15:273–289. doi: 10.1006/nimg.2001.0978.

- 29. Supekar K, Menon V, Rubin D, Musen M, Greicius M. Network Analysis of Intrinsic Functional Brain Connectivity in AD. PLoS Comput Biol. 2008;4(6):1–11. doi: 10.1371/journal.pcbi.1000100.

- 30. Wang K, Liang M, Wang L, Tian L, Zhang X, Li K, Jiang T. Altered Functional Connectivity in Early AD: A Resting-State fMRI Study. Human Brain Mapping. 2007;28:967–978. doi: 10.1002/hbm.20324.

- 31. Gould R, Arroyo B, Brown R, Owen A, Bullmore E, Howard R. Brain Mechanisms of Successful Compensation during Learning in AD. Neurology. 2006;67(6):1011–1017. doi: 10.1212/01.wnl.0000237534.31734.1b.

- 32. Stern Y. Cognitive Reserve and Alzheimer Disease. Alzheimer Disease and Associated Disorders. 2006;20:69–74. doi: 10.1097/01.wad.0000213815.20177.19.

- 33. Korb KB, Nicholson AE. Bayesian Artificial Intelligence. London, UK: Chapman & Hall/CRC; 2003.

- 34. Greicius M, Srivastava G, Reiss A, Menon V. Default-mode Network Activity Distinguishes AD from Healthy Aging: Evidence from Functional MRI. PNAS. 2004;101:4637–4642. doi: 10.1073/pnas.0308627101.

- 35. Alexander GE, Chen K, Pietrini P, Rapoport S, Reiman E. Longitudinal PET Evaluation of Cerebral Metabolic Decline in Dementia: A Potential Outcome Measure in AD Treatment Studies. Am. J. Psychiatry. 2002;159:738–745. doi: 10.1176/appi.ajp.159.5.738.

- 36. Braak H, Braak E. Evolution of the Neuropathology of Alzheimer's Disease. Acta Neurol Scand Suppl. 1996;165:3–12. doi: 10.1111/j.1600-0404.1996.tb05866.x.

- 37. Braak H, Braak E, Bohl J. Staging of Alzheimer-Related Cortical Destruction. Eur Neurol. 1993;33:403–408. doi: 10.1159/000116984.

- 38. Hedden T, Van Dijk K, Becker J, Mehta A, Sperling R, Johnson K, Buckner R. Disruption of Functional Connectivity in Clinically Normal Older Adults Harboring Amyloid Burden. J. Neurosci. 2009;29:12686–12694. doi: 10.1523/JNEUROSCI.3189-09.2009.

- 39. Andrews-Hanna J, Snyder A, Vincent J, Lustig C, Head D, Raichle M, Buckner R. Disruption of Large-Scale Brain Systems in Advanced Aging. Neuron. 2007;56:924–935. doi: 10.1016/j.neuron.2007.10.038.

- 40. Wu X, Li R, Fleisher AS, Reiman E, Guan X, Zhang Y, Chen K, Yao L. Altered Default Mode Network Connectivity in Alzheimer’s Disease - A Resting Functional MRI and BN Study. Human Brain Mapping. 2011; in press. doi: 10.1002/hbm.21153.

- 41. Ikonomovic M, Klunk W, Abrahamson E, Mathis C, Price J, Tsopelas N, Lopresti B, et al. Post-mortem Correlates of in vivo PiB-PET Amyloid Imaging in a Typical Case of Alzheimer's Disease. Brain. 2008;131:1630–1645. doi: 10.1093/brain/awn016.

- 42. Klunk W, Engler H, Nordberg A, et al. Imaging Brain Amyloid in Alzheimer's Disease with Pittsburgh Compound-B. Ann Neurol. 2004;55:306–319. doi: 10.1002/ana.20009.

- 43. Reuter-Lorenz P, Mikels J. A Split-Brain Model of Alzheimer’s Disease? Behavioral Evidence for Comparable Intra and Interhemispheric Decline. Neuropsychologia. 2005;43:1307–1317. doi: 10.1016/j.neuropsychologia.2004.12.007.

- 44. Lipton A, Benavides R, Hynan LS, Bonte FJ, Harris TS, White CL, Bigio EG, 3rd. Lateralization on Neuroimaging does not Differentiate Frontotemporal Lobar Degeneration from Alzheimer’s Disease. Dement Geriatr Cogn Disord. 2004;17(4):324–327. doi: 10.1159/000077164.