Abstract

Physicians underrecognize and undertreat anaphylaxis. Effective interventions are needed to improve physician knowledge and competency regarding evidence-based anaphylaxis diagnosis and management (ADAM). We designed and evaluated an educational program to improve ADAM among pediatrics, internal medicine, and emergency medicine residents at two academic medical centers. Anonymous questionnaires queried participants' demographics, prior clinical experience with ADAM, perceived competency, and comfort. A pretest assessing baseline knowledge preceded a 45-minute allergist-led, evidence-based presentation that included practice with epinephrine autoinjectors and was immediately followed by a posttest. A follow-up test assessed long-term knowledge retention 12 weeks later. A total of 159 residents completed the pretest, 152 completed the posttest, and 86 completed the follow-up test. There were no significant differences by specialty or site. Out of a possible score of 10, the mean pretest score (7.31 ± 1.50) was lower than both the posttest score (8.79 ± 1.29) and the follow-up score (8.17 ± 1.72) (P < 0.001 for both). Although participants' perceived confidence in diagnosing or managing anaphylaxis improved from baseline to follow-up (P < 0.001 for both), their self-reported clinical experience with ADAM or autoinjector use was unchanged. An allergist-led, face-to-face educational intervention improves residents' short-term knowledge and perceived confidence in ADAM. Limited clinical experience or reinforcement may contribute to the decline in knowledge observed at follow-up.

1. Introduction

Teaching physicians effectively about low-probability, high-consequence medical conditions, such as anaphylaxis, is challenging. Medical education curricula emphasize more common high-stakes conditions (e.g., stroke) where misdiagnosis or mismanagement leads to poor outcomes. Physicians may lack opportunities to gain firsthand clinical experience with infrequent conditions or to reinforce what little they have learned about them. Effective interventions are needed.

Clinicians face several challenges when dealing with anaphylaxis, a potentially life-threatening allergic reaction requiring immediate identification and treatment. First, there are no universally accepted diagnostic criteria for anaphylaxis [1, 2]. A comprehensive clinical definition of anaphylaxis from an NIH expert panel has not achieved widespread acceptance among physicians despite high reported sensitivity and negative predictive value [1, 3, 4]. Second, there are no pathognomonic anaphylaxis signs or symptoms. Physicians may overlook the diagnosis because the clinical presentation may vary among patients, even in the same patient with a history of multiple episodes [1, 5]. Third, physicians may not consider anaphylaxis when patients do not present stereotypically (e.g., laryngeal edema after bee sting). Additionally, diagnostic tests are rarely useful during an acute episode. Furthermore, the usual probability-based methods of clinical reasoning and decision-making are difficult to apply to anaphylaxis [6, 7]. Estimates of anaphylaxis prevalence and incidence are unclear due to the lack of symptom recognition, poor physician awareness of diagnostic criteria [1, 2], and paucity of robust, validated methods for identifying anaphylaxis diagnoses using currently available administrative claims [8, 9]. Thus, it is unsurprising that medical personnel underrecognize and undertreat anaphylaxis [1, 2].

Anaphylaxis diagnosis and management (ADAM) by physicians need improvement, regardless of the stage of training [10–22]. Despite the establishment and dissemination of treatment guidelines, medical providers consistently underutilize or incorrectly administer and dose epinephrine, which is accepted as first-line treatment [10–27]. Instead of prompt epinephrine administration, practitioners continue to utilize second-line agents, such as antihistamines and glucocorticoids, contrary to evidence-based recommendations [10–29].

Improving provider education regarding ADAM is an unmet but modifiable deficiency [30–33]. Allergists are particularly well suited to address this gap, as evidenced by the few published studies reporting successful allergist-led interventions [34–36]. We developed, implemented, and evaluated an educational program consisting of a face-to-face didactic session and hands-on training in the proper use of epinephrine autoinjectors, conducted by allergy trainees or attending physicians. We hypothesized that this allergist-led intervention would improve residents' knowledge, competence, and perceived confidence in anaphylaxis diagnosis and management. Allergists, and likely nonallergist providers as well, can adapt our simple, non-resource-intensive intervention to a variety of settings to educate other providers about evidence-based ADAM.

2. Methods

2.1. Study Description and Eligibility

This longitudinal study examined full-time resident physicians at all training levels who were enrolled in an Accreditation Council for Graduate Medical Education-accredited training program in internal medicine (N = 204), pediatrics (N = 153), or emergency medicine (N = 40), from July 2010 to June 2013, at tertiary care university hospitals in two health systems. Residents were recruited during an hour-long educational conference in which they received an explanatory letter about the study and were asked to participate. To maximize participation and account for differences in academic schedules, residents were recruited during two distinct department-specific conferences in different academic blocks. The institutional review boards at both institutions approved this study and waived the need to obtain written informed consent from participants.

2.2. Intervention, Quizzes, and Questionnaire

At the recruitment session, residents completed an anonymous questionnaire that queried participants' demographics, prior clinical experience, perceived competency, and comfort with ADAM, as well as a 10-item multiple choice pretest that assessed baseline knowledge of anaphylaxis. Attending physicians (Artemio M. Jongco and Susan J. Schuval) and/or trainees (Sheila Bina and Robert J. Sporter) from the Division of Allergy and Immunology at the respective institutions presented a 45-minute evidence-based didactic lecture using PowerPoint (Microsoft, Redmond, WA), followed by hands-on practice with needleless epinephrine autoinjector trainers (EpiPen® trainer). Study personnel observed participants' technique, provided constructive criticism when appropriate, and answered participants' questions related to the educational content or autoinjector use. Immediately following the presentation, the residents completed a similar 10-item posttest to evaluate knowledge acquisition. Approximately 12 weeks later, during another nonanaphylaxis conference, residents completed a similar 10-item follow-up quiz and questionnaire. To foster a safe and nonpunitive learning environment, only residents and study personnel were present at conference sessions. No identifying information was collected from the participants, nor were identifiers recorded on quizzes or questionnaires. Hence, linking an individual's responses at different time points or connecting an individual's performance to his/her identity was not possible. Only residents who participated in both programs were included in the analysis. Examples of the questionnaires and quizzes are provided in Supplementary Material available online at http://dx.doi.org/10.1155/2016/9040319.

The authors developed the quizzes, consisting of clinical scenarios that evaluated knowledge of evidence-based anaphylaxis diagnosis and management. To ensure that quiz questions were roughly equivalent in complexity, the scientific content was identical from one quiz to another, with minor modifications (e.g., clinical parameters, order of questions, and answer choices). Quizzes were graded numerically on a scale from 0 to 10. Before the study, the authors reviewed the scientific content of the educational intervention, quizzes, and answers. The authors pilot-tested the quiz questions on a small group of rotating residents in the Division of Allergy & Immunology at Hofstra Northwell School of Medicine. Participants did not have access to quiz answers at any point during the study. Achieving 8 correct answers on the quiz was considered to be the minimum level of competence for medical knowledge.

2.3. Statistical Analysis

All statistical analyses were conducted using SAS 9.3 (SAS Institute Inc., Cary, NC). Graphs were generated using Prism 6 (GraphPad Software, San Diego, CA). The results of the descriptive analysis were shown as mean ± standard deviation with 95% confidence intervals and as percentages. The chi-square test was used to measure the association between the categorical variables, and Wilcoxon rank sum test or Kruskal-Wallis test was used to compare the groups on the continuous variables. A two-tailed P < 0.05 was considered significant. Bonferroni adjustment was applied for multiple comparisons.
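
The Bonferroni adjustment mentioned above can be illustrated with a short sketch. It is not the authors' SAS code; the function names are hypothetical, and the choice of three comparisons is an assumption based on the three pairwise contrasts among pretest, posttest, and follow-up, which is consistent with the adjusted threshold of 0.017 reported in the Results.

```python
def bonferroni_alpha(family_alpha: float, n_comparisons: int) -> float:
    """Per-comparison significance threshold under a Bonferroni adjustment."""
    if n_comparisons < 1:
        raise ValueError("n_comparisons must be at least 1")
    return family_alpha / n_comparisons


def is_significant(p_value: float, family_alpha: float, n_comparisons: int) -> bool:
    """True if p_value falls below the Bonferroni-adjusted threshold."""
    return p_value < bonferroni_alpha(family_alpha, n_comparisons)


# Assumption: three pairwise comparisons among the three time points.
adjusted_alpha = bonferroni_alpha(0.05, 3)
print(round(adjusted_alpha, 3))

# The posttest versus follow-up comparison reported in the Results (P = 0.0086).
print(is_significant(0.0086, 0.05, 3))
```

Dividing the family-wise level by the number of comparisons is the simplest and most conservative form of adjustment, which is why a P value of 0.0086 still clears the threshold here.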

3. Results

The two academic health centers employed a total of 397 residents who were eligible to participate (204 internal medicine, 153 pediatrics, and 40 emergency medicine residents). A total of 159 residents participated in the pretest (response rate of 40.05%). Of these 159 residents, 152 (95.60%) completed the posttest, and 86 (54.09%) were available for the follow-up test. Table 1 provides the distribution of sample characteristics by site and specialty. Since chi-squared analysis failed to reveal a significant difference by site (P = 0.86) or by specialty (P = 0.95) over time, data were combined in subsequent analyses.
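
The participation percentages quoted above follow directly from the reported counts. The sketch below simply reproduces that arithmetic; the variable and function names are illustrative and not part of the study.

```python
# Participation counts as reported in the Results.
ELIGIBLE = 397
PRETEST = 159
POSTTEST = 152
FOLLOW_UP = 86


def percent(part: int, whole: int) -> float:
    """Return part as a percentage of whole."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100.0 * part / whole


response_rate = percent(PRETEST, ELIGIBLE)         # pretest takers among eligible residents
posttest_retention = percent(POSTTEST, PRETEST)    # posttest takers among pretest takers
follow_up_retention = percent(FOLLOW_UP, PRETEST)  # follow-up takers among pretest takers

print(f"Response rate: {response_rate:.2f}%")
print(f"Posttest retention: {posttest_retention:.2f}%")
print(f"Follow-up retention: {follow_up_retention:.2f}%")
```

Note that the response rate uses eligible residents as the denominator, whereas the two retention figures use the pretest participants.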

Table 1.

Sample characteristics by site and specialty.

| | Pretest N (%) | Posttest N (%) | Follow-up test N (%) | Total | P value |
|---|---|---|---|---|---|
| Location | | | | | |
| Health system 1 | 88 (55.4) | 80 (52.6) | 45 (52.3) | 213 | 0.86 |
| Health system 2 | 71 (44.6) | 72 (47.4) | 41 (47.7) | 184 | |
| Specialty | | | | | |
| Pediatrics | 60 (37.7) | 58 (38.2) | 35 (40.7) | 153 | 0.95 |
| Internal medicine | 84 (52.8) | 79 (52.0) | 41 (47.7) | 204 | |
| Emergency medicine | 15 (9.5) | 15 (9.8) | 10 (11.6) | 40 | |

Table 2 summarizes participant characteristics at baseline and follow-up. Chi-squared analysis revealed that the proportion of participants who had demonstrated epinephrine autoinjector use increased from baseline to follow-up (P = 0.006). The proportion of participants who had diagnosed (P = 0.06) or managed (P = 0.06) anaphylaxis or used an epinephrine autoinjector (P = 0.08) in the past did not differ significantly from baseline to follow-up. Of the participants who reported having managed anaphylaxis prior to the study, the most common venue was the emergency department (42.35%), followed by general ward (30.59%), intensive care unit (24.71%), and then allergy office (2.35%) (data not shown). Also, the proportion of participants who self-reported being confident in their ability to diagnose (P < 0.001) or manage (P < 0.001) anaphylaxis increased from baseline to follow-up. Table 3 summarizes participants' self-reported behaviors and attitudes at follow-up. During the interval since the intervention, despite increased self-reported confidence in ADAM, residents appear to have had few opportunities to apply what they had learned about anaphylaxis or to refer patients with anaphylaxis to allergists.

Table 2.

Summary of sample demographic characteristics.

| Characteristic | Pretest N (%) | Follow-up test N (%) | P value |
|---|---|---|---|
| Gender | | | |
| Male | 64 (43.2) | 38 (45.8) | 0.71 |
| Class year | | | |
| PGY1 | 77 (52.0) | 48 (55.8) | 0.81 |
| PGY2 | 34 (23.0) | 17 (19.8) | |
| PGY3 | 37 (25.0) | 21 (24.4) | |
| US medical school graduate | | | |
| Yes | 109 (73.6) | 63 (73.3) | 0.95 |
| Diagnosed anaphylaxis in past | | | |
| Yes | 49 (33.1) | 39 (45.4) | 0.06 |
| Managed anaphylaxis in past | | | |
| Yes | 74 (50.0) | 54 (62.8) | 0.06 |
| Used epinephrine autoinjector in past | | | |
| Yes | 13 (8.8) | 14 (16.3) | 0.08 |
| Demonstrated epinephrine autoinjector in past | | | |
| Yes | 55 (37.2) | 48 (55.8) | 0.006 |
| Referred patient to allergist in past | | | |
| Yes | 53 (35.8) | 30 (34.8) | 0.89 |
| Confidence in diagnosing anaphylaxis | | | |
| Yes | 90 (60.8) | 72 (83.7) | <0.001 |
| Confidence in managing anaphylaxis | | | |
| Yes | 77 (52.4) | 68 (79.1) | <0.0001 |
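
As an illustration of the chi-square comparisons summarized in Table 2, the sketch below computes a Pearson chi-square test for one row (demonstrated epinephrine autoinjector use). It rests on assumptions that the paper does not state: the baseline denominator of 148 respondents is inferred from the reported 55 (37.2%), the follow-up denominator is taken as 86, and no continuity correction is applied. The function name `chi_square_2x2` is hypothetical, and the authors' SAS procedure may differ in its details.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square test (no continuity correction) for the table [[a, b], [c, d]].

    Returns the test statistic and the two-sided P value for 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be nonzero")
    statistic = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # For 1 degree of freedom, the upper tail of the chi-square distribution
    # equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Assumed counts: 55 of 148 at baseline and 48 of 86 at follow-up answered "yes".
statistic, p_value = chi_square_2x2(55, 148 - 55, 48, 86 - 48)
print(f"chi-square = {statistic:.2f}, P = {p_value:.3f}")
```

Under these assumptions, the result is close to the P value reported for this row, but this should be read as a plausibility check rather than a reproduction of the original analysis.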

Table 3.

Summary of self-reported behaviors and attitudes at 12-week follow-up.

| Behavior and/or attitude | N (%) |
|---|---|
| Diagnosed anaphylaxis since lecture | 21/86 (24.42) |
| Managed anaphylaxis since lecture | 25/86 (29.07) |
| Used epinephrine autoinjector since lecture | 9/86 (10.47) |
| Demonstrated epinephrine autoinjector since lecture | 16/86 (18.60) |
| Referred patient to allergist since lecture | 19/86 (22.09) |
| Confidence in diagnosing anaphylaxis since lecture | 77/86 (89.53) |
| Confidence in managing anaphylaxis since lecture | 79/86 (91.86) |

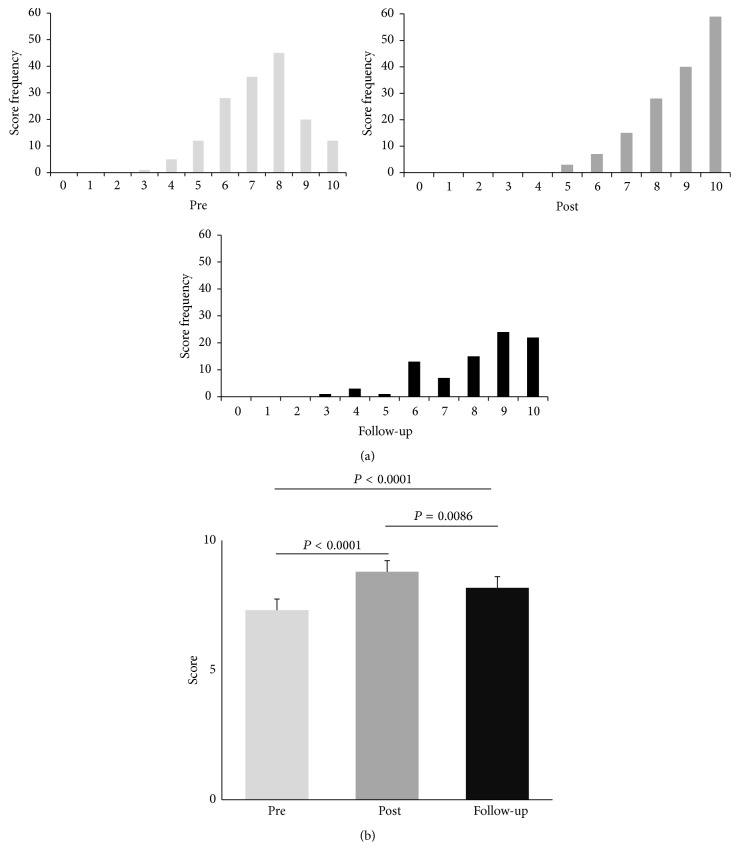

Figure 1(a) illustrates the nonnormal distribution of quiz scores. The mean pretest score of 7.31 ± 1.50 (95% CI 7.08–7.54) was lower than the posttest score of 8.79 ± 1.29 (95% CI 8.58–9.00) and follow-up score of 8.17 ± 1.72 (95% CI 7.80–8.54). Figure 1(b) shows that pretest scores were significantly lower than posttest scores and follow-up scores (P < 0.001 for both). Follow-up scores were significantly lower than posttest scores (P = 0.0086). These results remained significant after Bonferroni adjustment for multiple comparisons (α = 0.017). Furthermore, our intervention appears to have helped the participants achieve the medical knowledge competency threshold of 8 correct answers: the mean posttest and follow-up scores exceeded 8, whereas the mean pretest score did not.

Figure 1.

Summary of quiz scores. (a) Distribution of quiz scores at different time points. (b) Mean quiz scores at different time points. Error bars represent standard deviation.
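
The 95% confidence intervals reported for the mean quiz scores can be approximated from the summary statistics alone. The sketch below uses a normal approximation (z = 1.96) with the sample sizes given in the Results; this is an assumption, since the paper does not state how the intervals were computed, and the follow-up interval obtained this way differs from the reported one in the second decimal place. The function name `normal_ci` is hypothetical.

```python
import math

Z_95 = 1.96  # two-sided 95% critical value of the standard normal distribution


def normal_ci(mean: float, sd: float, n: int, z: float = Z_95) -> tuple[float, float]:
    """Approximate confidence interval for a mean from its SD and sample size."""
    if n < 1:
        raise ValueError("n must be at least 1")
    half_width = z * sd / math.sqrt(n)
    return mean - half_width, mean + half_width


# Mean, standard deviation, and number of participants at each time point.
summaries = {
    "pretest": (7.31, 1.50, 159),
    "posttest": (8.79, 1.29, 152),
    "follow-up": (8.17, 1.72, 86),
}

for label, (mean, sd, n) in summaries.items():
    low, high = normal_ci(mean, sd, n)
    print(f"{label}: {low:.2f} to {high:.2f}")
```

Because the follow-up group is the smallest, its interval is the widest, and an interval based on the t distribution would be slightly wider still.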

Table 4 lists possible covariates of quiz score. Quiz scores at the three time points did not significantly differ by specialty (P = 0.59) or by training level (P = 0.62). Moreover, the quiz scores did not vary according to their self-reported experience of past anaphylaxis diagnosis (P = 0.10), management (P = 0.09), past use (P = 0.49), demonstration of epinephrine autoinjector (P = 0.16), or past referral to allergist (P = 0.37). However, quiz scores did significantly differ depending on residents' reported confidence in the ability to diagnose (P = 0.01) or manage (P = 0.004) anaphylaxis.

Table 4.

Possible covariates of quiz scores.

| Variable | P value |
|---|---|
| Specialty | 0.59 |
| Level of training | 0.62 |
| Diagnosed anaphylaxis in past | 0.10 |
| Managed anaphylaxis in past | 0.09 |
| Used epinephrine autoinjector in past | 0.49 |
| Demonstrated epinephrine autoinjector in past | 0.16 |
| Referred patient to allergist in past | 0.37 |
| Confidence in diagnosing anaphylaxis | 0.01 |
| Confidence in managing anaphylaxis | 0.004 |

4. Discussion

In this study, we demonstrate that allergist-led didactic lectures and hands-on practice with epinephrine autoinjectors are effective educational interventions that enhance short-term resident knowledge of evidence-based ADAM. Although follow-up quiz scores at 12 weeks remained higher than baseline, they were lower than the scores obtained immediately after the intervention. These findings corroborate the literature, which shows that continuing opportunities to apply knowledge and to practice skills are essential to maintain knowledge and competency [34, 35]. Indeed, the majority of participants reported having limited opportunity to apply their new knowledge or skills in the 12-week interval between intervention and follow-up. We suspect that, in the absence of educational reinforcement, resident performance would have continued to decline had it been reevaluated beyond 12 weeks.

Several findings that failed to reach statistical significance further underscore the importance of continuing medical education and ongoing opportunities to practice clinical skills in order to maintain ADAM proficiency. There were trends suggesting an increase in the proportion of participants who had diagnosed (P = 0.06) or managed (P = 0.06) anaphylaxis or used an epinephrine autoinjector (P = 0.08) at follow-up compared to baseline. Moreover, past anaphylaxis diagnosis (P = 0.10) and management (P = 0.09) may be covariates of quiz score, although neither association was statistically significant. Further research is needed to identify the optimal frequency and modality of continuing medical education that will result in maximal retention of knowledge and competency.

Interestingly, study participants reported high levels of confidence in diagnosing or managing anaphylaxis at baseline and follow-up, despite limited clinical experience. In fact, the levels of self-reported confidence increased from baseline to follow-up. This observed disconnect between physician self-assessment and objective measures of competence is unsurprising since physicians have a limited ability for self-assessment [37]. Physicians' overestimation of their own competence may compromise patient safety and clinical outcomes. It may be beneficial to help physicians at all training levels to become more cognizant of this disconnect. Moreover, training programs should consider restructuring current educational endeavors to include increased allocation of time and resources for educating trainees about low probability, high consequence conditions like anaphylaxis, since simple, non-resource-intensive interventions, such as the one described in this paper, can lead to measurable improvements in resident knowledge, and possibly clinical competence.

We acknowledge that our medical knowledge competency threshold score of 8 is somewhat arbitrary and may not necessarily reflect clinical competence. Clinical competence, which relies upon a foundation of basic clinical skills, scientific knowledge, and moral development, includes a cognitive function (i.e., acquiring and using knowledge to solve problems); an integrative function (i.e., using biomedical and psychosocial data in clinical reasoning); a relational function (i.e., communicating effectively with patients and colleagues); and an affective/moral function (i.e., the willingness, patience, and emotional awareness to use these skills judiciously and humanely) [38]. Although evaluating medical knowledge through a quiz represents an incomplete assessment of clinical competence at best, it is still reasonable to hypothesize that medical knowledge correlates with clinical competence to some degree and that lower levels of medical knowledge may negatively impact the quality and efficacy of care delivered by the provider. Thus, the observation that the follow-up quiz scores trended back down towards baseline is worrisome for a possible concomitant decline in the quality of care delivered by the residents. Whether the residents demonstrated any change in their clinical practice after the intervention is unknown and outside the scope of the current study. More research is needed to determine the effect of educational interventions such as this in real-life clinical practice.

This study has several limitations. First, only 40% of all eligible participants were included in the study, and only about half of these participated in the 12-week follow-up evaluation. This is likely due to scheduling difficulties and competing demands on resident time, although we cannot exclude the possibility of participation bias. Notably, our participation rate is similar to that reported in other studies of physicians and residents [39–41].

Second, since we did not collect identifying information, we could not ensure that recruited participants completed the entire study, track individual performance over time, or give participants personalized feedback on their quiz performance.

Third, extensively validated quiz questions (e.g., questions from previous certification examinations) were not used due to the lack of access. The content of each question on the quizzes was directly linked to each one of our evidence-based learning goals, thus serving as a measure of face validity. Further, there was consensus among the board certified allergists/content experts who developed, verified, and honed the quiz questions, thereby providing us with a measure of content validity. Finally, because the quizzes were utilized at more than one site, in more than one clinical department, and on a modest sample size, we believe that the generalizability of the instrument was attained to a respectable degree.

Finally, this study only utilized traditional educational modalities of didactic lecture and hands-on practice. More research is needed to evaluate the efficacy of various educational interventions, especially with regard to long-term knowledge retention, improved performance on objective measures of clinical competence, and actual patient outcomes. Simulation-based education may hold promise in this regard [30–32, 35].

This study had several strengths. It was multicenter and included participants from multiple specialties. Also, the larger sample size of this study and the longer follow-up interval distinguish this study from other published studies of educational interventions. Furthermore, since the intervention is relatively simple and not resource-intensive, it can be adapted and implemented in a variety of educational settings.

5. Conclusion

Physicians, regardless of the stage of training, underdiagnose and undertreat anaphylaxis. Teaching providers about evidence-based ADAM is challenging. The allergist-led face-to-face educational intervention described above improves residents' short-term knowledge, competence, and perceived confidence in ADAM. Lack of clinical experience and/or educational reinforcement may contribute to knowledge and competence decline over time. Thus, continuing medical education, coupled with ongoing opportunities to apply knowledge and practice skills, is necessary. Innovative educational interventions are needed to improve and maintain resident knowledge and clinical competence regarding evidence-based ADAM. More research is also needed to determine the impact of such interventions on patient outcomes.

Supplementary Material

The educational materials generated for this study are included as Supplementary Material. Appendix 1 contains the slides presented by the authors during the 45-minute evidence-based didactic lecture. Appendices 2 and 3 are sample quizzes developed by the authors: Appendix 2 is a pretest, and Appendix 3 is a posttest/follow-up test. Appendices 4 and 5 are sample questionnaires developed by the authors to query demographics and participant experience with anaphylaxis diagnosis and management: Appendix 4 is a pretest questionnaire, and Appendix 5 is a posttest/follow-up questionnaire.

Ethical Approval

The institutional review boards of Northwell Health System and Stony Brook Children's Hospital approved this study.

Disclosure

This paper was presented as a poster at the 2014 annual meeting of the American Academy of Allergy, Asthma, and Immunology, San Diego, CA, February 2014.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

References

- 1. Lieberman P., Nicklas R. A., Oppenheimer J., et al. The diagnosis and management of anaphylaxis practice parameter: 2010 update. Journal of Allergy and Clinical Immunology. 2010;126(3):477.e42–480.e42. doi: 10.1016/j.jaci.2010.06.022.

- 2. Sclar D. A., Lieberman P. L. Anaphylaxis: underdiagnosed, underreported, and undertreated. American Journal of Medicine. 2014;127(1):S1–S5. doi: 10.1016/j.amjmed.2013.09.007.

- 3. Sampson H. A., Muñoz-Furlong A., Campbell R. L., et al. Second symposium on the definition and management of anaphylaxis: summary report—Second National Institute of Allergy and Infectious Disease/Food Allergy and Anaphylaxis Network symposium. Journal of Allergy and Clinical Immunology. 2006;117(2):391–397. doi: 10.1016/j.jaci.2005.12.1303.

- 4. Campbell R. L., Hagan J. B., Manivannan V., et al. Evaluation of National Institute of Allergy and Infectious Diseases/Food Allergy and Anaphylaxis Network criteria for the diagnosis of anaphylaxis in emergency department patients. Journal of Allergy and Clinical Immunology. 2012;129(3):748–752. doi: 10.1016/j.jaci.2011.09.030.

- 5. Wood R. A., Camargo C. A., Jr., Lieberman P., et al. Anaphylaxis in America: the prevalence and characteristics of anaphylaxis in the United States. Journal of Allergy and Clinical Immunology. 2014;133(2):461–467. doi: 10.1016/j.jaci.2013.08.016.

- 6. Kassirer J. P. The principles of clinical decision making: an introduction to decision analysis. Yale Journal of Biology and Medicine. 1976;49(2):149–164.

- 7. Cahan A., Gilon D., Manor O., et al. Probabilistic reasoning and clinical decision-making: do doctors overestimate diagnostic probabilities? QJM: An International Journal of Medicine. 2003;96(10):763–769. doi: 10.1093/qjmed/hcg122.

- 8. Schneider G., Kachroo S., Jones N., et al. A systematic review of validated methods for identifying anaphylaxis, including anaphylactic shock and angioneurotic edema, using administrative and claims data. Pharmacoepidemiology and Drug Safety. 2012;21(1):240–247. doi: 10.1002/pds.2327.

- 9. Walsh K. E., Cutrona S. L., Foy S., et al. Validation of anaphylaxis in the Food and Drug Administration's Mini-Sentinel. Pharmacoepidemiology and Drug Safety. 2013;22(11):1205–1213. doi: 10.1002/pds.3505.

- 10. Gandhi J. S. Use of adrenaline by junior doctors. Postgraduate Medical Journal. 2002;78(926):763. doi: 10.1136/pmj.78.926.763.

- 11. Gompels L. L., Bethune C., Johnston S. L., Gompels M. M. Proposed use of adrenaline (epinephrine) in anaphylaxis and related conditions: a study of senior house officers starting accident and emergency posts. Postgraduate Medical Journal. 2002;78(921):416–418. doi: 10.1136/pmj.78.921.416.

- 12. Wang J., Sicherer S. H., Nowak-Wegrzyn A. Primary care physicians' approach to food-induced anaphylaxis: a survey. Journal of Allergy and Clinical Immunology. 2004;114(3):689–691. doi: 10.1016/j.jaci.2004.05.024.

- 13. Haymore B. R., Carr W. W., Frank W. T. Anaphylaxis and epinephrine prescribing patterns in a military hospital: underutilization of the intramuscular route. Allergy and Asthma Proceedings. 2005;26(5):361–365.

- 14. Krugman S. D., Chiaramonte D. R., Matsui E. C. Diagnosis and management of food-induced anaphylaxis: a national survey of pediatricians. Pediatrics. 2006;118(3):e554–e560. doi: 10.1542/peds.2005-2906.

- 15. Jose R., Clesham G. J. Survey of the use of epinephrine (adrenaline) for anaphylaxis by junior hospital doctors. Postgraduate Medical Journal. 2007;83(983):610–611. doi: 10.1136/pgmj.2007.059097.

- 16. Thain S., Rubython J. Treatment of anaphylaxis in adults: results of a survey of doctors at Dunedin Hospital, New Zealand. The New Zealand Medical Journal. 2007;120(1252):U2492.

- 17. Droste J., Narayan N. Hospital doctors' knowledge of adrenaline (epinephrine) administration in anaphylaxis in adults is deficient. Resuscitation. 2010;81(8):1057–1058. doi: 10.1016/j.resuscitation.2010.04.020.

- 18. José R. J., Fiandeiro P. T. Knowledge of adrenaline (epinephrine) administration in anaphylaxis in adults is still deficient. Has there been any improvement? Resuscitation. 2010;81(12):1743. doi: 10.1016/j.resuscitation.2010.08.042.

- 19. Kastner M., Harada L., Waserman S. Gaps in anaphylaxis management at the level of physicians, patients, and the community: a systematic review of the literature. Allergy. 2010;65(4):435–444. doi: 10.1111/j.1398-9995.2009.02294.x.

- 20. Lowe G., Kirkwood E., Harkness S. Survey of anaphylaxis management by general practitioners in Scotland. Scottish Medical Journal. 2010;55(3):11–14. doi: 10.1258/rsmsmj.55.3.11.

- 21. Droste J., Narayan N. Anaphylaxis: lack of hospital doctors' knowledge of adrenaline (epinephrine) administration in adults could endanger patients' safety. European Annals of Allergy and Clinical Immunology. 2012;44(3):122–127.

- 22. Grossman S. L., Baumann B. M., Garcia Peña B. M., Linares M. Y.-R., Greenberg B., Hernandez-Trujillo V. P. Anaphylaxis knowledge and practice preferences of pediatric emergency medicine physicians: a national survey. Journal of Pediatrics. 2013;163(3):841–846. doi: 10.1016/j.jpeds.2013.02.050.

- 23. Simons F. E. R., Sheikh A. Evidence-based management of anaphylaxis. Allergy. 2007;62(8):827–829. doi: 10.1111/j.1398-9995.2007.01433.x.

- 24. Sheikh A., Shehata Y. A., Brown S. G. A., Simons F. E. R. Adrenaline for the treatment of anaphylaxis: Cochrane systematic review. Allergy. 2009;64(2):204–212. doi: 10.1111/j.1398-9995.2008.01926.x.

- 25. Simons K. J., Simons F. E. R. Epinephrine and its use in anaphylaxis: current issues. Current Opinion in Allergy and Clinical Immunology. 2010;10(4):354–361. doi: 10.1097/aci.0b013e32833bc670.

- 26. Simpson C. R., Sheikh A. Adrenaline is first line treatment for the emergency treatment of anaphylaxis. Resuscitation. 2010;81(6):641–642. doi: 10.1016/j.resuscitation.2010.04.002.

- 27. Worth A., Soar J., Sheikh A. Management of anaphylaxis in the emergency setting. Expert Review of Clinical Immunology. 2010;6(1):89–100. doi: 10.1586/eci.09.73.

- 28. Sheikh A., Ten Broek V., Brown S. G. A., Simons F. E. R. H1-antihistamines for the treatment of anaphylaxis: Cochrane systematic review. Allergy. 2007;62(8):830–837. doi: 10.1111/j.1398-9995.2007.01435.x.

- 29. Choo K. J. L., Simons E., Sheikh A. Glucocorticoids for the treatment of anaphylaxis: Cochrane systematic review. Allergy. 2010;65(10):1205–1211. doi: 10.1111/j.1398-9995.2010.02424.x.

- 30. Jacobsen J., Lindekær A. L., Østergaard H. T., et al. Management of anaphylactic shock evaluated using a full-scale anaesthesia simulator. Acta Anaesthesiologica Scandinavica. 2001;45(3):315–319. doi: 10.1034/j.1399-6576.2001.045003315.x.

- 31. Owen H., Mugford B., Follows V., Plummer J. L. Comparison of three simulation-based training methods for management of medical emergencies. Resuscitation. 2006;71(2):204–211. doi: 10.1016/j.resuscitation.2006.04.007.

- 32. Gaca A. M., Frush D. P., Hohenhaus S. M., et al. Enhancing pediatric safety: using simulation to assess radiology resident preparedness for anaphylaxis from intravenous contrast media. Radiology. 2007;245(1):236–244. doi: 10.1148/radiol.2451061381.

- 33. Arga M., Bakirtas A., Catal F., et al. Training of trainers on epinephrine autoinjector use. Pediatric Allergy and Immunology. 2011;22(6):590–593. doi: 10.1111/j.1399-3038.2011.01143.x.

- 34. Hernandez-Trujillo V., Simons F. E. R. Prospective evaluation of an anaphylaxis education mini-handout: the AAAAI anaphylaxis wallet card. The Journal of Allergy and Clinical Immunology: In Practice. 2013;1(2):181–185. doi: 10.1016/j.jaip.2012.11.004.

- 35. Kennedy J. L., Jones S. M., Porter N., et al. High-fidelity hybrid simulation of allergic emergencies demonstrates improved preparedness for office emergencies in pediatric allergy clinics. The Journal of Allergy and Clinical Immunology: In Practice. 2013;1(6):608–617. doi: 10.1016/j.jaip.2013.07.006.

- 36. Yu J. E., Kumar A., Bruhn C., Teuber S. S., Sicherer S. H. Development of a food allergy education resource for primary care physicians. BMC Medical Education. 2008;8:45. doi: 10.1186/1472-6920-8-45.

- 37. Davis D. A., Mazmanian P. E., Fordis M., Van Harrison R., Thorpe K. E., Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. The Journal of the American Medical Association. 2006;296(9):1094–1102. doi: 10.1001/jama.296.9.1094.

- 38. Epstein R. M., Hundert E. M. Defining and assessing professional competence. The Journal of the American Medical Association. 2002;287(2):226–235. doi: 10.1001/jama.287.2.226.

- 39. Kellerman S. E., Herold J. Physician response to surveys. A review of the literature. American Journal of Preventive Medicine. 2001;20(1):61–67. doi: 10.1016/s0749-3797(00)00258-0.

- 40. VanGeest J. B., Johnson T. P., Welch V. L. Methodologies for improving response rates in surveys of physicians: a systematic review. Evaluation and the Health Professions. 2007;30(4):303–321. doi: 10.1177/0163278707307899.

- 41. Grava-Gubins I., Scott S. Effects of various methodologic strategies: survey response rates among Canadian physicians and physicians-in-training. Canadian Family Physician. 2008;54(10):1424–1430.
