Abstract

A framework for automated detection and classification of cancer from microscopic biopsy images using clinically significant and biologically interpretable features is proposed and examined. The stages of the proposed methodology are enhancement of microscopic images, segmentation of background cells, feature extraction, and classification. For each design step of the framework, an appropriate and efficient method is chosen after a comparative analysis of the methods commonly used in that category. The contrast limited adaptive histogram equalization (CLAHE) approach is used to highlight the details of the tissue and structures. For the segmentation of background cells, the k-means segmentation algorithm is used because it performs better than other commonly used segmentation methods. In the feature extraction phase, various biologically interpretable and clinically significant shape and morphology based features are extracted from the segmented images. These include gray level texture features, color based features, color gray level texture features, Law's Texture Energy based features, Tamura's features, and wavelet features. Finally, the K-nearest neighbor (KNN) method is used to classify images into normal and cancerous categories because it performs better than other commonly used methods for this application. The performance of the proposed framework is evaluated using well-known parameters for four fundamental tissues (connective, epithelial, muscular, and nervous) of 1000 randomly selected microscopic biopsy images.

1. Introduction

Cancer detection has always been a major issue for pathologists and medical practitioners in diagnosis and treatment planning. The manual identification of cancer from microscopic biopsy images is subjective and may vary from expert to expert, depending on their expertise and other factors, including the lack of specific and accurate quantitative measures for classifying biopsy images as normal or cancerous. The automated identification of cancerous cells from microscopic biopsy images helps alleviate these issues and provides better results when approaches based on biologically interpretable and clinically significant features are used to identify the disease.

About 32% of the Indian population gets cancer at some point during their lifetime. Cancer is one of the common diseases in India and one of the leading causes of mortality, with about 0.3 million deaths per year [1]. The chances of being affected by this disease are increased by changes in people's habits, such as increased tobacco use, deteriorating dietary habits, and lack of physical activity. Recent combined advances in medicine and engineering have increased the possibility of curing cancer. The chances of cure depend primarily on its detection and diagnosis, and the choice of treatment depends on the level of malignancy. Medical professionals use several techniques to detect cancer, including imaging modalities such as X-ray, Computed Tomography (CT), Positron Emission Tomography (PET), ultrasound, and Magnetic Resonance Imaging (MRI), and pathological tests such as urine and blood tests.

For accurate detection of cancer, pathologists use histopathology biopsy images, that is, they examine the microscopic tissue structure of the patient. Biopsy image analysis is therefore a vital technique for cancer detection [2, 3]. Histopathology is the study of the symptoms and indications of disease using microscopic biopsy images. To visualize the various parts of the tissue under a microscope, the sections are dyed with one or more staining components. The main goal of staining is to reveal components at the cellular level, and counterstains are used to provide color, visibility, and contrast. Hematoxylin-Eosin (H&E) staining has been used by pathologists for several decades. Hematoxylin stains cell nuclei blue, while eosin stains cytoplasm and connective tissue pink. Histology [4] is the study of the structure and function of cells and tissues and their interpretation. Microscopic biopsies are commonly used for disease screening because they are less invasive. Microscopic biopsy images are characterized by the presence of isolated cells and cell clusters. Microscopic biopsy specimens are easier to analyze than histopathology specimens because complicated structures are absent [5]. Accurate manual identification of cancer by pathologists and medical practitioners observing cell or tissue structure under the microscope has always been a major issue.

In histopathology, the cancer detection process normally consists of categorizing the biopsy image as cancerous or noncancerous [6]. In microscopic biopsy image analysis, doctors and pathologists observe many abnormalities and categorize the sample based on various characteristics of the cell nuclei, such as color, shape, size, and proportion to cytoplasm. High resolution microscopic biopsy images provide reliable information for differentiating abnormal and normal tissues. The differences between normal and cancerous cells are shown in Table 1 [7].

Table 1.

Difference between normal and cancerous cells [7].

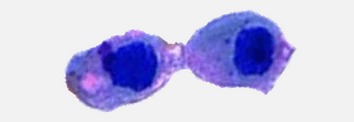

| Normal cells | Cancerous cells | Description of cancerous cells |

|---|---|---|

|

|

Large and variably shaped nuclei |

|

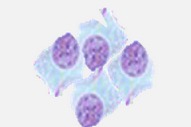

| ||

|

|

Many dividing cells and disorganized arrangements |

|

| ||

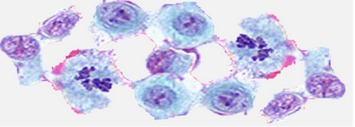

|

|

Variation in size and shape of nuclei |

|

| ||

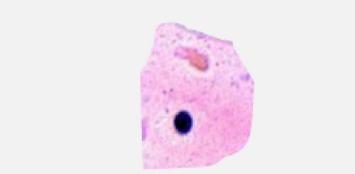

|

|

Loss of normal feature (shape and morphology) |

For the detection and diagnosis of cancer from microscopic biopsy images, the histopathologists normally look at the specific features in the cells and tissue structures. The various common features used for the detection and diagnosis of cancer from the microscopic biopsy images include shape and size of cells, shape and size of cell nuclei, and distribution of the cells. The brief descriptions of these features are given as follows.

(A) Shape and Size of the Cells. In normal tissues, the overall shape and size of the cells are mostly regular. Cancerous cells may be either larger or smaller than normal cells. Normal cells have regular shapes and function normally, whereas cancer cells usually do not function in a useful way and their shapes are often irregular.

(B) Size and Shape of the Cell's Nucleus. The shape and size of the nucleus of a cancer cell are often abnormal, and the nucleus is often off-center. An image of a cell resembles an omelet, in which the central yolk is the nucleus and the surrounding white is the cytoplasm. The nuclei of cancer cells are larger than those of normal cells, deviate from the center of mass, and are darker. The segmentation step therefore focuses mainly on separating the regions of interest (cells) from the background tissue and the nuclei from the cytoplasm.

(C) Distribution of the Cells in Tissue. The function of each tissue depends on the distribution and arrangement of its normal cells. Cancerous tissues contain fewer healthy cells per unit area. These characteristics of microscopic biopsy images are captured by shape and morphology based features, texture features, color based features, color gray level cooccurrence matrix (GLCM) features, Law's Texture Energy (LTE), Tamura's features, and wavelet features, which are biologically interpretable and clinically significant.

The main aim of this paper is to design and develop a framework and a software tool for automated detection and classification of cancer from microscopic biopsy images using the clinically significant and biologically interpretable features described above. The paper focuses on selecting an appropriate method for each design stage of the framework after a comparative analysis of the commonly used methods in each category. The stages of the proposed methodology are enhancement of microscopic images, segmentation of background cells, feature extraction, and classification.

The rest of the paper has been structured as follows. Section 2 describes the related works, Section 3 presents the methods and models, Section 4 describes the results and discussions, and finally Section 5 draws the conclusion of the work presented in this paper.

2. Related Works

In recent years, a few works have been reported in the literature on the design and development of tools for automated cancer detection from microscopic biopsy images. Kumar and Srivastava [9] presented a detailed review of computer aided diagnosis (CAD) for cancer detection from microscopic biopsy images. Demir and Yener [10] presented a method for the automatic diagnosis of biopsy images, in the form of a cellular level diagnosis system based on image processing techniques. Bhattacharjee et al. [11] presented a review of computer aided diagnosis systems that detect cancer from microscopic biopsy images using image processing techniques.

Bergmeir et al. [12] proposed a model that extracts texture features using local histograms and GLCM. Their quasisupervised learning algorithm operates on two datasets: the first contains normal tissues labeled only indirectly, and the second contains an unlabeled collection of mixed normal and cancerous tissue samples. The method was applied to a dataset of 22,080 vectors whose dimensionality was reduced from 132 to 119. Using a manually created ground truth, the regions containing cancerous tissue were identified with a true positive rate of 88% and a false positive rate of 19%.

Mouelhi et al. [13] used Haralick's texture features [14], histogram of oriented gradients (HOG), and color component based statistical moments (CCSM) feature selection and extraction approaches to classify cancerous cells in microscopic biopsy images. The features used in that work are contrast, correlation, energy, and homogeneity (GLCM texture features [14]) together with RGB, gray level, and HSV features.

Huang and Lai [15] presented segmentation and classification techniques for histology images based on texture features; using an SVM, they obtained a maximum classification accuracy of 92.8%.

Landini et al. [16] presented a method for the morphologic characterization of cell neighborhoods in neoplastic and preneoplastic tissue in microscopic biopsy images. The authors used watershed transforms to compute the cell and nucleus areas and other parameters. A distance measure over the neighborhood was used to calculate the neighborhood complexity with reference to the v-cells. The best classification obtained with the KNN classifier was 83% for the dysplastic and neoplastic classes, with 58% correct classification.

Sinha and Ramkrishan [17] extracted features of microscopic biopsy images including eccentricity, area ratio, compactness, average values of color components, energy, entropy, correlation, and the area of cells and nuclei. The classification accuracies obtained with Bayesian, K-nearest neighbor, neural network, and support vector machine classifiers were 82.3%, 70.60%, 94.1%, and 94.1%, respectively.

Kasmin et al. [18] extracted features of microscopic biopsy images including area, perimeter, convex area, solidity, major axis length, orientation, filled area, eccentricity, ratio of cell and nucleus area, circularity, and mean intensity of cytoplasm. The KNN and neural network classifiers achieved classification accuracies of 86% and 92%, respectively.

In this paper, a framework for automated detection and classification of cancer from microscopic biopsy images using clinically significant and biologically interpretable features is proposed and examined. A color k-means based method is used to segment the images. The hybrid features extracted from the segmented images include shape and morphological features, GLCM texture features, Tamura features, Law's Texture Energy based features, histogram of oriented gradients, wavelet features, and color features. A k-nearest neighbor based method is proposed for classification, and the efficacy of other classifiers such as SVM, random forest, and fuzzy k-means is also examined. For testing, 2828 microscopic biopsy images from the histology database [8] are used. The obtained results show that the proposed method performs better than the other methods discussed above. The overall summary and comparison of the proposed method and the other methods are presented in Table 6 in Section 4 (results and analysis).

Table 6.

The comparison of the proposed method with other standard methods.

| Authors (year) | Feature set used | Methods of classification | Parameters used (%) | Dataset used |
|---|---|---|---|---|
| Huang and Lai (2010) [15] | Texture features | Support vector machine (SVM) | Accuracy = 92.8 | 1000 × 1000, 4000 × 3000, and 275 × 275 HCC biopsy images |
| Di Cataldo et al. (2010) [45] | Texture and morphology | Support vector machine (SVM) | Accuracy = 91.77 | Digitized histology lung cancer IHC tissue images |
| He et al. (2008) [46] | Shape, morphology, and texture | Artificial neural network (ANN) and SVM | Accuracy = 90.00 | Digitized histology images |
| Mookiah et al. (2011) [47] | Texture and morphology | Error backpropagation neural network (BPNN) | Accuracy = 96.43, sensitivity = 92.31, and specificity = 82 | 83 normal and 29 OSF images |
| Krishnan et al. (2011) [48] | HOG, LBP, and LTE | LDA | Accuracy = 82 | Normal-83, OSFWD-29 |
| Krishnan et al. (2011) [48] | HOG, LBP, and LTE | Support vector machine (SVM) | Accuracy = 88.38 | Histology images: Normal-90, OSFWD-42, OSFD-26 |
| Caicedo et al. (2009) [8] | Bag of features | Support vector machine (SVM) | Sensitivity = 92, specificity = 88 | 2828 histology images |
| Sinha and Ramkrishan (2003) [17] | Texture and statistical features | KNN | Accuracy = 70.6 | Blood cells histology images |
| The proposed approach | Texture, shape and morphology, HOG, wavelet, color, Tamura's features, and LTE | KNN | Average: accuracy = 92.19, sensitivity = 94.01, specificity = 81.99, BCR = 88.02, F-measure = 75.94, MCC = 71.74 | 2828 histology images |
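The parameters reported for the proposed approach in Table 6 can all be derived from the counts of a binary confusion matrix. The sketch below is a minimal illustration under stated assumptions: cancerous images are the positive class, BCR is read as the balanced classification rate (the mean of sensitivity and specificity), and all denominators are nonzero. The function name `classification_metrics` is hypothetical and not part of the authors' tool.

```python
import math

def classification_metrics(tp, fp, tn, fn):
    """Binary metrics from confusion-matrix counts (cancerous = positive class)."""
    sensitivity = tp / (tp + fn)          # true positive rate (recall)
    specificity = tn / (tn + fp)          # true negative rate
    precision = tp / (tp + fp)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "bcr": (sensitivity + specificity) / 2,   # balanced classification rate
        "f_measure": 2 * precision * sensitivity / (precision + sensitivity),
        "mcc": (tp * tn - fp * fn) / mcc_den if mcc_den else 0.0,  # Matthews correlation
    }
```

For example, `classification_metrics(tp=8, fp=3, tn=7, fn=2)` gives an accuracy and BCR of 0.75 but an MCC of only about 0.50, which illustrates why Table 6 reports several measures rather than accuracy alone.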

3. Methods and Models

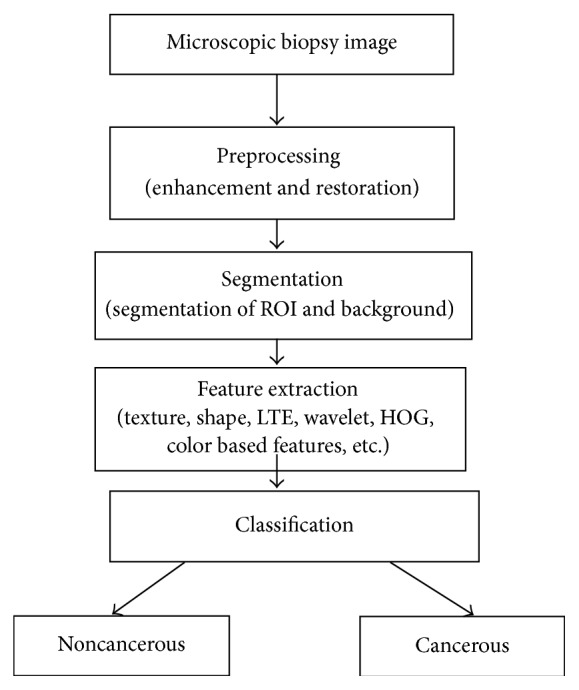

The detection and classification of cancer from microscopic biopsy images is a challenging task because an image usually contains many clusters and overlapping objects. The stages of the proposed methodology are enhancement of microscopic images, segmentation of background cells, feature extraction, and classification. The contrast limited adaptive histogram equalization approach [19, 20] is used to enhance the microscopic biopsy images, and the k-means segmentation algorithm is used to segment the background cells. In the feature extraction phase, various biologically interpretable and clinically significant shape and morphology based features are extracted from the segmented images, including gray level texture features, color based features, color gray level texture features, Law's Texture Energy (LTE) based features, Tamura's features, and wavelet features. Finally, K-nearest neighbor (KNN), fuzzy KNN, and support vector machine (SVM) based classifiers are examined for classifying normal and cancerous biopsy images. These approaches are tested on four fundamental tissues (connective, epithelial, muscular, and nervous) of 1000 randomly selected microscopic biopsy images. The performance of the classifiers is evaluated using well-known parameters, and the results and analysis show that the fuzzy KNN based classifier performs better for the selected feature set. The flowchart of the proposed work is given in Figure 1.

Figure 1.

Model of automated cancer detection from microscopic biopsy images.

3.1. Enhancements

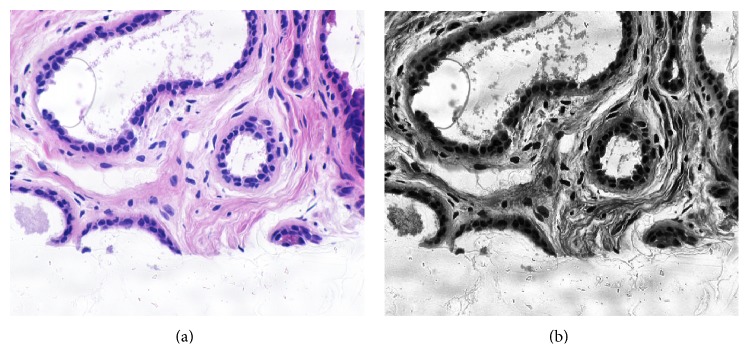

The main purpose of preprocessing is to remove specific degradations, for example by reducing noise and enhancing the contrast of the regions of interest. Biopsy images acquired from a microscope may be deficient in some respects, such as poor contrast and uneven staining, and need to be improved through image enhancement, which increases the contrast between the foreground (objects of interest) and the background [21]. The contrast limited adaptive histogram equalization (CLAHE) approach [20] is used to enhance the microscopic biopsy images. Figure 2 shows an original image and the same image enhanced using CLAHE.

Figure 2.

The original (a) and enhanced microscopic biopsy image with CLAHE (b).
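As a rough illustration of the idea behind CLAHE, the sketch below splits a grayscale image into tiles, clips each tile's histogram at a limit, redistributes the clipped counts uniformly over all bins, and equalizes the tile with the resulting cumulative distribution. It is a deliberately simplified sketch, not the implementation used in this work: it assumes 8-bit input, expresses the clip limit as a fraction of the tile's pixel count (an assumed convention), and omits the bilinear interpolation between neighboring tile mappings that full CLAHE uses to avoid block artifacts. In practice a library routine such as scikit-image's `exposure.equalize_adapthist` or OpenCV's `createCLAHE` would be the usual choice; the names `clipped_equalize` and `tiled_clahe` are hypothetical.

```python
import numpy as np

def clipped_equalize(tile, clip_limit=0.01, nbins=256):
    """Equalize one uint8 tile after clipping its histogram (hypothetical helper)."""
    if tile.size == 0:
        return tile
    hist = np.bincount(tile.ravel(), minlength=nbins).astype(float)
    limit = max(clip_limit * tile.size, 1.0)          # clip height in pixel counts (assumed convention)
    excess = np.clip(hist - limit, 0.0, None).sum()   # counts above the limit
    hist = np.minimum(hist, limit) + excess / nbins   # redistribute them uniformly over all bins
    cdf = np.cumsum(hist)
    cdf = (cdf - cdf[0]) / max(cdf[-1] - cdf[0], 1e-12)
    lut = np.round(cdf * (nbins - 1)).astype(np.uint8)  # gray-level mapping for this tile
    return lut[tile]

def tiled_clahe(image, tiles=(8, 8), clip_limit=0.01):
    """Per-tile contrast-limited equalization WITHOUT inter-tile interpolation."""
    img = np.asarray(image, dtype=np.uint8)
    out = np.empty_like(img)
    row_edges = np.linspace(0, img.shape[0], tiles[0] + 1).astype(int)
    col_edges = np.linspace(0, img.shape[1], tiles[1] + 1).astype(int)
    for r0, r1 in zip(row_edges[:-1], row_edges[1:]):
        for c0, c1 in zip(col_edges[:-1], col_edges[1:]):
            out[r0:r1, c0:c1] = clipped_equalize(img[r0:r1, c0:c1], clip_limit)
    return out
```

Lowering `clip_limit` reduces how strongly noise in nearly uniform regions is amplified, which is the motivation for clipping the histogram in the first place.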

3.2. Segmentation

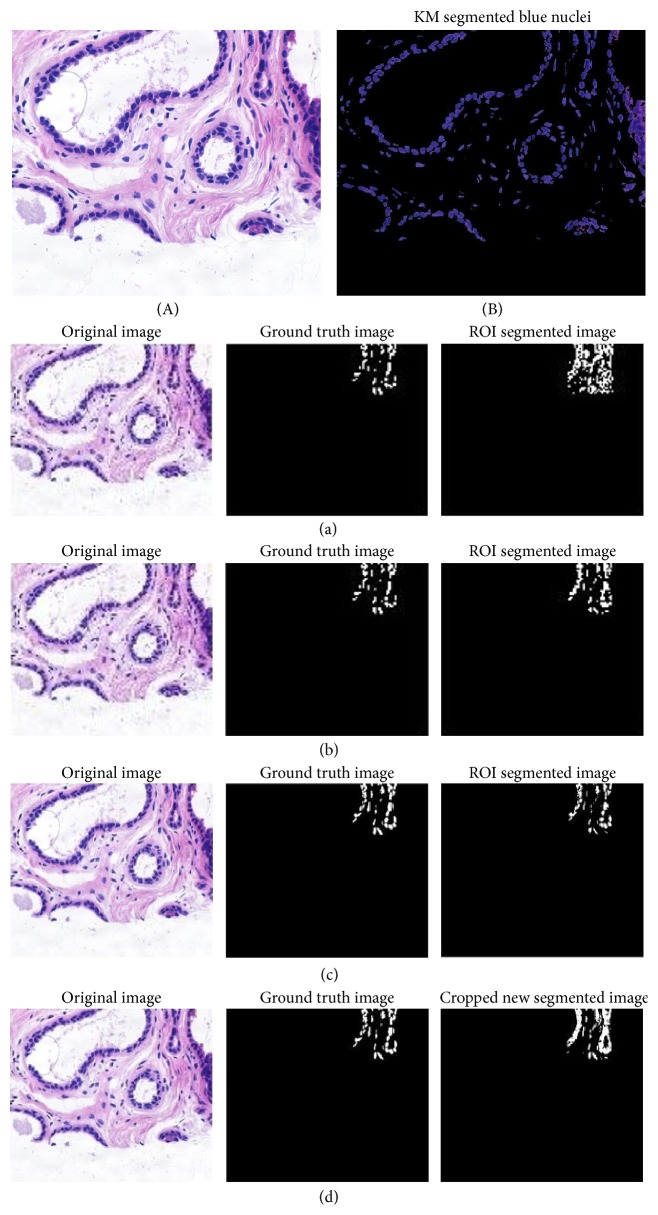

Several segmentation methods, such as threshold based, region-based, and clustering based algorithms, have been adapted for cytoplasm, cell, and nuclei segmentation [22] of microscopic biopsy images. However, the choice of segmentation method depends on the type of features to be preserved and extracted. The k-means clustering based segmentation algorithm is used because it preserves the desired information. For testing and experimentation, twenty (20) randomly selected microscopic biopsy images from the histology dataset [8] were used, and their ground truth (GT) images were created manually by cropping the region of interest (ROI). Figure 3 shows an original and a k-means segmented microscopic biopsy image, and Figures 3(a) to 3(d) present the visual results of texture based segmentation, FCM segmentation, k-means segmentation, and color based segmentation [20, 23–26]. These visual results show that the k-means clustering based segmentation method performs better in most cases than the other segmentation approaches under consideration, and the experiments indicate that clustering based algorithms, specifically the k-means based method, are best suited to microscopic biopsy images.

Figure 3.

Original (A) and segmented microscopic biopsy image with K-means segmentation approach (B). (a) Original, ground truth, and ROI segmented by texture based segmentation. (b) Original, ground truth, and ROI segmented by FCM segmentation. (c) Original, ground truth, and ROI segmented by k-means segmentation. (d) Original, ground truth, and ROI segmented by color based segmentation.

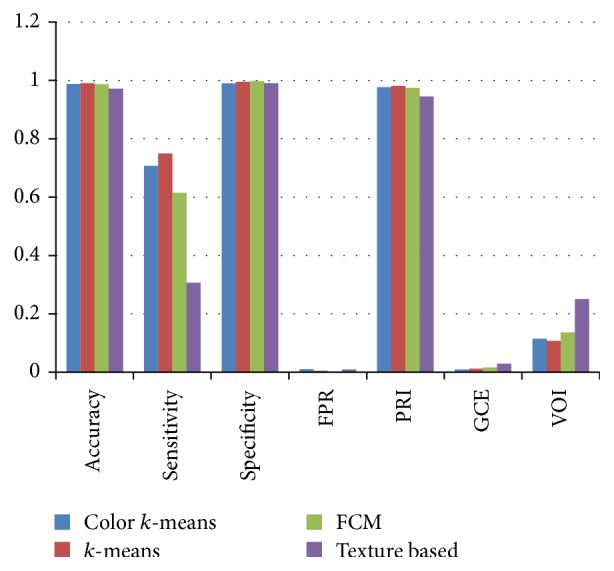

Finally, the ROI segmented images are compared with the ground truth images for a quantitative evaluation of the segmentation approaches on all 20 sample images from the histology dataset. The performance of the K-means [27], fuzzy c-means [28], texture based [29], and color based [30] segmentation approaches was evaluated using popular segmentation measures: accuracy, sensitivity, specificity, false positive rate (FPR), probabilistic Rand index (PRI), global consistency error (GCE), and variation of information (VOI).
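The first four of these measures compare a binary ROI mask with its ground truth pixel by pixel. A minimal sketch, assuming both masks are arrays of the same shape in which nonzero (or `True`) marks ROI pixels; the function name `segmentation_scores` is hypothetical:

```python
import numpy as np

def segmentation_scores(pred, truth):
    """Pixel-wise accuracy, sensitivity, specificity, and FPR of a binary ROI mask."""
    p = np.asarray(pred, dtype=bool).ravel()
    t = np.asarray(truth, dtype=bool).ravel()
    tp = np.sum(p & t)      # ROI pixels correctly segmented
    tn = np.sum(~p & ~t)    # background pixels correctly left out
    fp = np.sum(p & ~t)     # background pixels wrongly included
    fn = np.sum(~p & t)     # ROI pixels missed
    return {
        "accuracy": float((tp + tn) / p.size),
        "sensitivity": float(tp / (tp + fn)) if tp + fn else 0.0,
        "specificity": float(tn / (tn + fp)) if tn + fp else 0.0,
        "fpr": float(fp / (fp + tn)) if fp + tn else 0.0,
    }
```

By construction, specificity and FPR sum to one, which the values in Table 2 confirm for every method.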

Brief descriptions of some of these performance measures are given as follows.

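(i) Probabilistic Rand Index (PRI). The PRI is a nonparametric measure of the goodness of a segmentation algorithm. The Rand index between a test segmentation (S) and a ground truth (G) is computed by adding the number of pixel pairs that have the same label in both S and G to the number of pixel pairs that have different labels in both, and dividing the sum by the total number of pixel pairs. Given a set of ground-truth segmentations {G_k}, the PRI is estimated using (1), where N is the number of pixels, c_ij is the event that pixels i and j have the same label in the test image S_test, and p_ij is the probability that i and j have the same label in the ground truths, estimated as the fraction of the G_k in which they share a label:

$$\mathrm{PRI}(S_{\text{test}}, \{G_k\}) = \frac{1}{\binom{N}{2}} \sum_{i<j} \left[ c_{ij}\, p_{ij} + (1 - c_{ij})(1 - p_{ij}) \right] \tag{1}$$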

(ii) Variation of Information (VOI). The variation of information is a measure of the distance between two clusterings (partitions of the same set of elements) [31]. A clustering X with k clusters X_1, …, X_k is represented by a random variable X ∈ {1, …, k} with P(i) = |X_i| / n, where n = Σ_i |X_i| is the total number of elements. The variation of information between two clusterings X and Y is then given by (2):

$$\mathrm{VOI}(X, Y) = H(X) + H(Y) - 2\,I(X, Y) \tag{2}$$

where H(X) is the entropy of X and I(X, Y) is the mutual information between X and Y. I(X, Y) measures how much knowing an item's cluster in X reduces the uncertainty about its cluster in Y, so VOI is zero when the two clusterings are identical and grows as they disagree.

(iii) Global Consistency Error (GCE). Let S denote the set of segments generated by the segmentation algorithm being evaluated and G the set of reference segments, and suppose that segments s ∈ S and g ∈ G both contain pixel p_i. A local refinement error is first estimated using (3), where ∖ denotes set difference, |·| denotes the number of pixels in a set, and R(X, p_i) is the set of pixels in the region of segmentation X that contains pixel p_i [31]. The global consistency error is then computed using (4), where n is the total number of pixels in the image. GCE quantifies the amount of segmentation error, ranging from 0 (no error) to 1 (no agreement):

$$E(S, G, p_i) = \frac{\lvert R(S, p_i) \setminus R(G, p_i) \rvert}{\lvert R(S, p_i) \rvert} \tag{3}$$

$$\mathrm{GCE}(S, G) = \frac{1}{n} \min\left\{ \sum_{i=1}^{n} E(S, G, p_i),\ \sum_{i=1}^{n} E(G, S, p_i) \right\} \tag{4}$$
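PRI, VOI, and GCE can all be computed from the contingency table that counts the pixels shared by each pair of labels in S and G. The sketch below is a minimal illustration under these assumptions: the label images are arrays of equal shape, VOI is expressed in bits, and PRI is obtained as the mean Rand index over the ground truths. That shortcut is equivalent to (1) because the summand c_ij p_ij + (1 − c_ij)(1 − p_ij) is linear in p_ij when p_ij is the fraction of ground truths in which pixels i and j share a label. All function names are hypothetical.

```python
import numpy as np

def contingency(seg, gt):
    """Count pixels for every (segment label, ground-truth label) pair."""
    _, si = np.unique(np.asarray(seg).ravel(), return_inverse=True)
    _, gi = np.unique(np.asarray(gt).ravel(), return_inverse=True)
    table = np.zeros((si.max() + 1, gi.max() + 1))
    np.add.at(table, (si, gi), 1)
    return table

def pairs(x):
    """Number of unordered pairs among x items (works elementwise on arrays)."""
    return x * (x - 1) / 2.0

def rand_index(seg, gt):
    t = contingency(seg, gt)
    total = pairs(t.sum())
    # agreements = pairs with the same label in both + pairs with different labels in both
    agree = total + 2 * pairs(t).sum() - pairs(t.sum(axis=1)).sum() - pairs(t.sum(axis=0)).sum()
    return float(agree / total)

def probabilistic_rand_index(seg, ground_truths):
    # (1) is linear in p_ij, so PRI equals the mean Rand index over the ground truths
    return float(np.mean([rand_index(seg, g) for g in ground_truths]))

def entropy_bits(p):
    p = p[p > 0]
    return -np.sum(p * np.log2(p))

def variation_of_information(seg, gt):
    p = contingency(seg, gt)
    p /= p.sum()                                   # joint label distribution
    px, py = p.sum(axis=1), p.sum(axis=0)
    nz = p > 0
    mi = np.sum(p[nz] * np.log2(p[nz] / np.outer(px, py)[nz]))
    return float(entropy_bits(px) + entropy_bits(py) - 2 * mi)   # (2)

def global_consistency_error(seg, gt):
    t = contingency(seg, gt)
    a = t.sum(axis=1, keepdims=True)               # segment sizes in S
    b = t.sum(axis=0, keepdims=True)               # segment sizes in G
    # every pixel in cell (i, j) has E(S, G, p) = (a_i - n_ij) / a_i, as in (3)
    e_sg = np.sum(t * (a - t) / a)
    e_gs = np.sum(t * (b - t) / b)
    return float(min(e_sg, e_gs) / t.sum())        # (4)
```

Note that GCE tolerates refinement: a segmentation that merges two ground-truth regions into one still scores 0, which is one reason it is reported together with PRI and VOI in Table 2.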

Table 2 and Figure 4 compare the segmentation algorithms in terms of average accuracy, sensitivity, specificity, FPR, PRI, GCE, and VOI for 20 sample images taken from the histology dataset [8]. The k-means, color k-means, fuzzy c-means, and texture based methods all reach high accuracy, specificity, and PRI (above 0.94), but they differ considerably in sensitivity, GCE, and VOI. The k-means based algorithm achieves the highest accuracy (0.9904), sensitivity (0.7490), and PRI (0.9811) and the lowest VOI (0.1082). Fuzzy c-means attains a slightly higher specificity and lower FPR, and color k-means a slightly lower GCE, but both have lower sensitivity than k-means. Because k-means offers the best overall balance across all the measures, it is chosen as the segmentation method in the proposed framework for cancer detection from microscopic biopsy images.

Table 2.

Quantitative evaluation of segmentation methods on the basis of average values of various performance metrics for a set of 20 microscopic images [8].

| Method | Accuracy | Sensitivity | Specificity | FPR | PRI | GCE | VOI |
|---|---|---|---|---|---|---|---|
| Color k-means | 0.987799 | 0.707025 | 0.989218 | 0.010782 | 0.975985 | 0.009205 | 0.115479 |
| k-means | 0.990444 | 0.748991 | 0.994933 | 0.005067 | 0.981119 | 0.012839 | 0.10818 |
| FCM | 0.987008 | 0.614717 | 0.998235 | 0.001765 | 0.974447 | 0.015902 | 0.136348 |
| Texture based | 0.97144 | 0.306398 | 0.990445 | 0.009555 | 0.944609 | 0.029276 | 0.250797 |

Figure 4.

Comparisons of various segmentation methods on the basis of average accuracy, sensitivity, specificity, FPR, PRI, GCE, and VOI for 20 sample images from histology dataset [8].

3.3. Feature Extraction

After segmentation, features are extracted from the regions of interest to detect and grade potential cancers. Feature extraction is one of the important steps in the analysis of biopsy images. Features are extracted at both the tissue level and the cell level of microscopic biopsy images for better prediction. To better capture shape information, we use both region-based and contour-based methods to extract anticircularity, area irregularity, and contour irregularity of the nuclei as three shape features that reflect nuclear irregularity in biopsy images. Cell level features quantify the properties of individual cells without considering the spatial dependency between them; for a single cell, shape and morphological, textural, histogram of oriented gradients (HOG), and wavelet features are extracted. Tissue level features quantify the distribution of the cells across the tissue; for this, they primarily use either the spatial dependency of the cells or the gray level dependency of the pixels.

Based on these characteristics, some important shape and morphological based features are explained as follows.

(i) Nucleus Area (A). The nucleus area is the total number of pixels in the nucleus region, as given in (5):

$$A = \sum_{i} \sum_{j} B(i, j) \tag{5}$$

where A is the nucleus area and B is the binary segmented image (B(i, j) = 1 for nucleus pixels and 0 otherwise), indexed by row i and column j.

(ii) Brightness of Nucleus. The average value of intensity of the pixels belonging to the nucleus region is known as nucleus brightness.

(iii) Nucleus Longest Diameter (NLD). The nucleus longest diameter is the length of the nucleus region along its major axis, computed using (6):

$$\mathrm{NLD} = \sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2} \tag{6}$$

where (x_1, y_1) and (x_2, y_2) are the end points of the major axis.

(iv) Nucleus Shortest Diameter (NSD). The nucleus shortest diameter is the length of the nucleus region along its minor axis, computed using (7):

$$\mathrm{NSD} = \sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2} \tag{7}$$

where (x_1, y_1) and (x_2, y_2) are the end points of the minor axis.

(v) Nucleus Elongation. This is represented by the ratio of the shortest diameter to the longest diameter of the nucleus region, shown in

$$\text{Elongation} = \frac{\mathrm{NSD}}{\mathrm{NLD}} \tag{8}$$

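(vi) Nucleus Perimeter (P). The nucleus perimeter is the length of the boundary of the nucleus region. Tracing the K boundary points (x_k, y_k) in order, with (x_{K+1}, y_{K+1}) = (x_1, y_1), it is computed using (9):

$$P = \sum_{k=1}^{K} \sqrt{(x_{k+1} - x_k)^2 + (y_{k+1} - y_k)^2} \tag{9}$$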

(vii) Nucleus Roundness (γ). The ratio of the nucleus area to the area of the circle whose diameter equals the nucleus longest diameter is known as nucleus roundness, as shown in (10):

$$\gamma = \frac{A}{\pi\,(\mathrm{NLD}/2)^2} = \frac{4A}{\pi\,\mathrm{NLD}^2} \tag{10}$$

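(viii) Solidity. Solidity is the ratio of the actual cell/nucleus area to the area of its convex hull, as shown in (11):

$$\text{Solidity} = \frac{A}{A_{\text{convex}}} \tag{11}$$

where A_convex is the area of the convex hull of the region.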

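(ix) Eccentricity. The ratio of the major axis length to the minor axis length is known as eccentricity and is defined in (12):

$$\text{Eccentricity} = \frac{\text{major axis length}}{\text{minor axis length}} \tag{12}$$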

(x) Compactness. Compactness is the ratio of the area to the square of the perimeter. It is formulated as

$$\text{Compactness} = \frac{A}{P^2} \tag{13}$$
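The shape descriptors above can be computed from a binary nucleus mask. The following sketch is illustrative only and rests on assumptions not stated in the text: the major and minor axis lengths (used for NLD, NSD, elongation, roundness, and eccentricity) are taken from the ellipse with the same second-order central moments as the region, the perimeter is the crack length (the number of pixel edges between nucleus and background) rather than a traced contour, and the convex hull is built over pixel corners so that a filled rectangle has solidity 1. The function names are hypothetical.

```python
import numpy as np

def convex_hull_area(points):
    """Area of the convex hull of 2-D points (monotone chain + shoelace formula)."""
    pts = sorted(set(map(tuple, points)))
    if len(pts) < 3:
        return 0.0
    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    hull = np.array(lower[:-1] + upper[:-1], dtype=float)
    x, y = hull[:, 0], hull[:, 1]
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))

def nucleus_shape_features(mask):
    B = np.asarray(mask, dtype=bool)
    rows, cols = np.nonzero(B)
    area = float(B.sum())                                           # (5)
    padded = np.pad(B, 1).astype(int)                               # crack perimeter: count
    perimeter = float(np.abs(np.diff(padded, axis=0)).sum()         # nucleus/background edges
                      + np.abs(np.diff(padded, axis=1)).sum())
    # Equal-moment ellipse; +1/12 accounts for the extent of each unit pixel
    cov = np.cov(np.vstack([cols, rows]), bias=True) + np.eye(2) / 12.0
    lam_minor, lam_major = np.linalg.eigvalsh(cov)                  # ascending order
    nld, nsd = 4.0 * np.sqrt(lam_major), 4.0 * np.sqrt(lam_minor)   # full axis lengths
    corners = np.concatenate([np.column_stack([rows + dr, cols + dc])
                              for dr in (0, 1) for dc in (0, 1)])
    return {
        "area": area,
        "perimeter": perimeter,
        "nld": nld,
        "nsd": nsd,
        "elongation": nsd / nld,                                    # (8)
        "roundness": 4.0 * area / (np.pi * nld ** 2),               # (10)
        "solidity": area / convex_hull_area(corners),               # (11)
        "eccentricity": nld / nsd,                                  # (12), as defined in the text
        "compactness": area / perimeter ** 2,                       # (13)
    }
```

The crack perimeter overestimates the length of diagonal boundaries, so compactness computed this way is biased low for round nuclei; a traced-contour perimeter as in (9) reduces that bias.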

Seven sets of features are used to compute the feature vector of the microscopic biopsy images, as explained below.

(i) Texture Features (F1–F22). These texture features are computed from the gray level cooccurrence matrix [32–34]: autocorrelation, contrast, correlation, cluster prominence, cluster shade, difference variance, dissimilarity, energy, entropy, homogeneity, maximum probability, sum of squares, sum average, sum variance, sum entropy, difference entropy, information measure of correlation 1, information measure of correlation 2, inverse difference (INV), inverse difference normalized (INN), and inverse difference moment normalized.

(ii) Morphology and Shape Feature (F23–F32). Shape and morphology features are described in [35, 36]. The shape and morphological features considered in this paper are area, perimeter, major axis length, minor axis length, equivalent diameter, orientation, convex area, filled area, solidity, and eccentricity.

(iii) Histogram of Oriented Gradient (HOG) (F33–F68). The histogram of oriented gradients is an effective feature set for identifying objects [32]. It is included for better and more accurate identification of the objects present in microscopic biopsy images.

(iv) Wavelet Features (F69–F100). Wavelets are small waves used to transform signals for effective processing [3]. Wavelets are useful in the multiresolution analysis of microscopic biopsy images because they are fast and give better compression than other transforms. The Fourier transform represents a signal as a series of sine waves that are localized only in frequency, whereas wavelets are localized in both time and frequency; this accounts for the efficiency of wavelet transforms [37]. Daubechies wavelets are used because they have fractal structures, which makes them useful for microscopic biopsy images. The mean, entropy, energy, contrast, homogeneity, and sum of the wavelet coefficients are taken as features.

(v) Color Features (F101–F106). The color features are computed in the HSV color model, whose components are hue, saturation, and value [34]. The model is represented as a six-sided pyramid: the vertical axis represents value (brightness), the horizontal distance from the axis represents saturation, and the angle around the axis represents hue. The mean and standard deviation of each HSV component are taken as features.

(vi) Tamura's Features (F107–F109). Tamura's features are computed on the basis of three fundamental texture properties: contrast, coarseness, and directionality [3]. Contrast measures the variety of the texture pattern and is affected by the spread of black and white intensities [32]. Coarseness measures the granularity of an image [32] and can be represented by the average size of regions that have the same intensity [38]. Directionality measures the directions of the gray values within the image [32].

(vii) Law's Texture Energy (LTE) (F110–F115). These texture description features use one-dimensional vectors for average gray level, edges, spots, ripples, and waves to generate the masks. Laws' masks describe image texture without using the frequency domain [39]. Laws found that several masks of appropriate sizes were very informative for discriminating between different kinds of texture, such as those present in microscopic biopsy images. Samples are then classified on the basis of expected values of variance-like square measures of these convolutions, called texture energy measures. The LTE method applies texture energy transforms to the image to estimate the energy within the pass band of each filter [40].
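The GLCM-based texture features in (i) start from a co-occurrence matrix. The sketch below builds a symmetric, normalized GLCM for one pixel offset and computes six of the listed features (contrast, dissimilarity, energy, homogeneity, entropy, and correlation) using common definitions. The quantization of 8-bit input to eight gray levels, the single horizontal offset, the base-2 logarithm, and the function names are assumptions made for illustration. Energy is taken here as the sum of squared GLCM entries (the angular second moment); some references define it as the square root of that quantity.

```python
import numpy as np

def glcm(image, offset=(0, 1), levels=8):
    """Symmetric, normalized GLCM of an 8-bit image for a non-negative (row, col) offset."""
    dy, dx = offset
    if dy < 0 or dx < 0:
        raise ValueError("this sketch only handles non-negative offsets")
    q = (np.asarray(image, dtype=np.int64) * levels) // 256   # quantize 0..255 to 0..levels-1
    h, w = q.shape
    ref, nbr = q[:h - dy, :w - dx], q[dy:, dx:]               # reference and neighbor pixels
    P = np.zeros((levels, levels))
    np.add.at(P, (ref.ravel(), nbr.ravel()), 1)
    P = P + P.T                                               # count both directions
    return P / P.sum()

def glcm_features(P):
    i, j = np.indices(P.shape)
    mu_i, mu_j = np.sum(i * P), np.sum(j * P)
    sd_i = np.sqrt(np.sum((i - mu_i) ** 2 * P))
    sd_j = np.sqrt(np.sum((j - mu_j) ** 2 * P))
    nz = P > 0
    if sd_i * sd_j > 0:
        corr = np.sum((i - mu_i) * (j - mu_j) * P) / (sd_i * sd_j)
    else:
        corr = float("nan")                                   # undefined for a constant image
    return {
        "contrast": float(np.sum((i - j) ** 2 * P)),
        "dissimilarity": float(np.sum(np.abs(i - j) * P)),
        "energy": float(np.sum(P ** 2)),                      # angular second moment
        "homogeneity": float(np.sum(P / (1.0 + np.abs(i - j)))),
        "entropy": float(-np.sum(P[nz] * np.log2(P[nz]))),
        "correlation": float(corr),
    }
```

A black-and-white checkerboard, for instance, gives the maximum contrast (49 with eight levels) and a correlation of -1 for the horizontal offset, while a constant image gives zero contrast and unit energy and homogeneity.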

Table 3 lists each feature type and the number of features selected for the classification of microscopic biopsy images.

Table 3.

The distribution of various features extracted from images and their ranges.

| Name of features | Number of features (range F1–F115) |
|---|---|
| Texture features | 22 (F1–F22) |
| Morphology and shape feature | 10 (F23–F32) |
| Histogram of oriented gradient (HOG) | 36 (F33–F68) |
| Wavelet features | 32 (F69–F100) |
| Color features | 6 (F101–F106) |
| Tamura's features | 3 (F107–F109) |
| Law's Texture Energy | 6 (F110–F115) |
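To make the Law's Texture Energy features (F110–F115) concrete, the sketch below forms 5 × 5 masks as outer products of Laws' length-5 level (L5), edge (E5), spot (S5), wave (W5), and ripple (R5) vectors, filters the image with each mask, and takes the mean absolute response as that mask's texture energy. The choice of six mask pairs, the subtraction of the global mean instead of a local mean, and averaging over the whole image rather than a local window are all assumptions, since the text does not specify them; the function name is hypothetical. Because every mask used is either symmetric or antisymmetric, correlation and convolution give the same absolute responses.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Laws' 1-D length-5 vectors: Level, Edge, Spot, Wave, Ripple
LAWS_1D = {
    "L5": np.array([1, 4, 6, 4, 1], dtype=float),
    "E5": np.array([-1, -2, 0, 2, 1], dtype=float),
    "S5": np.array([-1, 0, 2, 0, -1], dtype=float),
    "W5": np.array([-1, 2, 0, -2, 1], dtype=float),
    "R5": np.array([1, -4, 6, -4, 1], dtype=float),
}

# Illustrative choice of six 2-D masks (vertical vector, horizontal vector);
# the six masks actually used for F110-F115 are not specified in the text.
MASK_PAIRS = [("L5", "E5"), ("E5", "L5"), ("E5", "E5"), ("S5", "S5"), ("W5", "W5"), ("R5", "R5")]

def laws_texture_energy(image):
    img = np.asarray(image, dtype=float)
    img = img - img.mean()                          # remove the (global) mean gray level
    windows = sliding_window_view(img, (5, 5))      # every 5x5 neighborhood ("valid" region)
    energies = {}
    for v, h in MASK_PAIRS:
        mask = np.outer(LAWS_1D[v], LAWS_1D[h])
        response = np.einsum("ijkl,kl->ij", windows, mask)   # 2-D correlation with the mask
        energies[v + h] = float(np.mean(np.abs(response)))   # texture energy of this mask
    return energies
```

Vertical stripes, for example, respond to L5E5 (a horizontal edge detector smoothed vertically) but give exactly zero energy for E5L5, which is the kind of directional selectivity these masks are meant to capture.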

3.4. Classification

The classification of microscopic biopsy images is the most challenging task in the automatic detection of cancer from microscopic biopsy images, since it determines whether a biopsy is benign or malignant. Many classifiers have been used for this purpose; commonly used methods include artificial neural networks (ANN), Bayesian classification, K-nearest neighbor classifiers, support vector machines (SVM), and random forests (RF). Supervised machine learning approaches are used here for the classification of microscopic biopsy images. Supervised learning involves several steps: preparing the data (feature set), choosing an appropriate algorithm, fitting (training) the model, and using the fitted model for prediction. The K-nearest neighbor (KNN), fuzzy KNN, support vector machine (SVM), and random forest classifiers are used to classify normal and cancerous biopsy images.
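The KNN decision rule itself is short. The sketch below assumes the feature vectors (for example, the 115 features of Table 3) are rows of an array, z-score normalizes them with training-set statistics so that no single feature dominates the Euclidean distance, and takes a majority vote among the k nearest training images. The value k = 3, the normalization step, the tie-breaking rule, and the function name are illustrative assumptions; the text does not state them.

```python
import numpy as np

def knn_predict(X_train, y_train, X_test, k=3):
    """Majority-vote KNN on z-score normalized features (hypothetical helper)."""
    X_train = np.asarray(X_train, dtype=float)
    X_test = np.asarray(X_test, dtype=float)
    y_train = np.asarray(y_train)
    mu = X_train.mean(axis=0)
    sd = X_train.std(axis=0)
    sd[sd == 0] = 1.0                                   # leave constant features unscaled
    A = (X_train - mu) / sd
    B = (X_test - mu) / sd
    d2 = ((B[:, None, :] - A[None, :, :]) ** 2).sum(axis=2)   # squared Euclidean distances
    nearest = np.argsort(d2, axis=1, kind="stable")[:, :k]   # indices of the k nearest images
    preds = []
    for votes in y_train[nearest]:
        labels, counts = np.unique(votes, return_counts=True)
        preds.append(labels[np.argmax(counts)])         # ties go to the smallest label
    return np.array(preds)
```

With labels 0 (normal) and 1 (cancerous), `knn_predict(X_train, y_train, X_test)` returns one predicted label per test image. Fuzzy KNN, also examined in this work, replaces the hard vote with distance-weighted class memberships.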

4. Results and Discussions

The proposed methodology was implemented in MATLAB R2013b on a PC with a 3.4 GHz Intel Core i7 processor and 2 GB of RAM running Windows 7, using images digitized at 5x magnification.

For testing and experimentation, a total of 2828 histology images were taken from the histology image dataset (histologyDS2828), together with the annotations available for a subset of its images [8]. By fundamental tissue type, the dataset contains 484 connective, 804 epithelial, 514 muscular, and 1026 nervous tissue images, acquired at magnifications of 2.5x, 5x, 10x, 20x, and 40x. Although the method is magnification independent, the results in this work are reported for samples digitized at 5x magnification. The features extracted from microscopic biopsy images must be biologically interpretable and clinically significant to support a better diagnosis of cancer. Table 4 gives a brief description of the dataset used for the identification of cancer from microscopic biopsy images.

Table 4.

Image distribution of fundamental tissues dataset of 2828 histology images [8].

| Fundamental tissue | Number of images |
|---|---|
| Connective | 484 |
| Epithelial | 804 |
| Muscular | 514 |
| Nervous | 1026 |
| Total | 2828 |

The proposed methodology for the detection and diagnosis of cancer from microscopic biopsy images consists of four stages: image enhancement, segmentation, feature extraction, and classification.

Contrast limited adaptive histogram equalization (CLAHE) is used to enhance the microscopic biopsy images because experiments showed that it highlights the regions of interest in the images better.
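The "contrast limited" step that distinguishes CLAHE from ordinary histogram equalization can be illustrated on a single tile. In this Python/NumPy sketch (an illustration, not the implementation used in the study), the tile histogram is clipped at a limit, the clipped excess is spread evenly over all bins, and the normalized cumulative histogram becomes the gray-level lookup table. Full CLAHE additionally divides the image into a grid of tiles and bilinearly interpolates between neighboring tile mappings, which is omitted here; `clip_factor` (the clip limit as a multiple of the mean bin count) is a hypothetical parameter with an illustrative default.

```python
import numpy as np


def clipped_equalization_map(tile, n_bins=256, clip_factor=2.0):
    """Gray-level lookup table for one tile using contrast-limited equalization.

    `tile` holds integer gray levels in [0, n_bins - 1]. `clip_factor` is a
    hypothetical parameter: the clip limit as a multiple of the mean bin count.
    """
    tile = np.asarray(tile, dtype=np.int64)
    hist = np.bincount(tile.ravel(), minlength=n_bins).astype(float)
    clip_limit = clip_factor * tile.size / n_bins
    excess = np.maximum(hist - clip_limit, 0.0).sum()   # total count above the limit
    # Clip each bin, then spread the excess evenly over all bins (a single pass;
    # practical CLAHE implementations may redistribute iteratively).
    hist = np.minimum(hist, clip_limit) + excess / n_bins
    cdf = np.cumsum(hist) / hist.sum()                  # normalized cumulative histogram
    return np.round(cdf * (n_bins - 1)).astype(np.int64)


def equalize_tile(tile, **kwargs):
    """Apply the contrast-limited mapping to every pixel of the tile."""
    lut = clipped_equalization_map(tile, **kwargs)
    return lut[np.asarray(tile, dtype=np.int64)]


# A low-contrast 8 x 8 tile with gray levels 100..103.
tile = np.repeat([100, 101, 102, 103], 16).reshape(8, 8)
print(np.ptp(equalize_tile(tile, clip_factor=2.0)), np.ptp(equalize_tile(tile, clip_factor=256.0)))
```

Raising `clip_factor` until no bin is clipped recovers plain histogram equalization of the tile; lower values limit how far a narrow intensity range is stretched, which keeps noise in nearly uniform regions from being over-amplified.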

To better preserve the desired information in microscopic biopsy images during segmentation, various clustering and texture based segmentation approaches were examined. For microscopic biopsy images, as much nuclei information as possible must be recovered to make reliable and accurate detection and diagnosis based on cell and nucleus parameters. Based on the results and analysis presented in Section 4, the k-means segmentation algorithm [40] was used for segmenting the microscopic biopsy images, as it performs better than the other methods. In the k-means clustering, the number of clusters was set to k = 3, and the squared Euclidean distance was used as the distance measure for assigning pixels to the cluster centers.
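A minimal NumPy sketch of this segmentation step, with k = 3 and squared Euclidean distance as described above, clusters the per-pixel values (for example, the RGB values of the enhanced image) with Lloyd's algorithm. The pixel features, the k-means++ seeding, the iteration cap, and the seed are assumptions, since the initialization used in the study is not stated; the function names are hypothetical.

```python
import numpy as np


def _sq_dists(X, centers):
    """Squared Euclidean distances between rows of X (n, d) and centers (k, d) -> (n, k)."""
    return ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)


def _kmeans_pp_init(X, k, rng):
    """k-means++ seeding (an assumed choice): spread the initial centers out."""
    centers = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        d2 = _sq_dists(X, np.array(centers)).min(axis=1)
        total = d2.sum()
        idx = rng.choice(len(X), p=d2 / total) if total > 0 else rng.integers(len(X))
        centers.append(X[idx])
    return np.array(centers)


def kmeans(X, k=3, n_iter=100, seed=0):
    """Lloyd's k-means on an (n, d) array; returns (labels, centers)."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    centers = _kmeans_pp_init(X, k, rng)
    for _ in range(n_iter):
        labels = _sq_dists(X, centers).argmin(axis=1)  # assign each pixel to nearest center
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])                                             # recompute the cluster means
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return _sq_dists(X, centers).argmin(axis=1), centers


def segment_image(image, k=3, **kwargs):
    """Cluster the pixels of an (H, W) or (H, W, C) image; return an (H, W) label map."""
    img = np.asarray(image, dtype=float)
    h, w = img.shape[:2]
    labels, centers = kmeans(img.reshape(h * w, -1), k=k, **kwargs)
    return labels.reshape(h, w), centers
```

The returned label map assigns every pixel to one of the three clusters; which cluster corresponds to nuclei, cytoplasm, or background has to be decided afterwards, for example from the cluster centers.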

In the feature extraction phase, various biologically interpretable and clinically significant features were extracted from the segmented images: gray level texture features (F1–F22), shape and morphology based features (F23–F32), histogram of oriented gradients (F33–F68), wavelet features (F69–F100), color based features (F101–F106), Tamura's features (F107–F109), and Laws' Texture Energy based features (F110–F115). Finally, a 2828 × 115 feature matrix was formed from all the feature sets, where 2828 is the number of microscopic images in the dataset and 115 is the total number of features extracted.
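The index ranges of the feature groups follow directly from their sizes (22 + 10 + 36 + 32 + 6 + 3 + 6 = 115). The sketch below derives the F-index ranges from the group sizes and stacks per-group blocks into the N × 115 matrix. The group names, the helper functions, and the all-zero placeholder blocks are illustrative; they only demonstrate the bookkeeping, not real feature values.

```python
import numpy as np

# Feature-group sizes in the order listed above (names are illustrative).
GROUP_SIZES = {
    "gray-level texture": 22,
    "morphology and shape": 10,
    "HOG": 36,
    "wavelet": 32,
    "color": 6,
    "Tamura": 3,
    "Laws' texture energy": 6,
}


def feature_ranges(group_sizes):
    """Map each group to its 1-based (first, last) feature index, e.g. F1-F22."""
    ranges, start = {}, 1
    for name, size in group_sizes.items():
        ranges[name] = (start, start + size - 1)
        start += size
    return ranges


def assemble_feature_matrix(blocks, group_sizes):
    """Horizontally stack per-group blocks (each n_images x size) into one matrix."""
    for name, size in group_sizes.items():
        if blocks[name].shape[1] != size:
            raise ValueError(f"{name}: expected {size} columns, got {blocks[name].shape[1]}")
    return np.hstack([blocks[name] for name in group_sizes])


for name, (first, last) in feature_ranges(GROUP_SIZES).items():
    print(f"{name}: F{first}-F{last}")

n_images = 2828
placeholder = {name: np.zeros((n_images, size)) for name, size in GROUP_SIZES.items()}
print(assemble_feature_matrix(placeholder, GROUP_SIZES).shape)
```

Keeping the group sizes in one ordered table makes an inconsistent range, such as a Tamura range running past F109, easy to catch.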

A random selection of 1000 samples was used to test the various classification algorithms. The 10-fold cross validation approach was used to partition the data into training and testing sets, so that in each fold 900 samples were used for training and 100 samples for testing. The K-nearest neighbor (KNN) classifier is a simple classifier in which a feature vector is assigned to the class most common among its k nearest neighbors in the training set. For KNN classification, the number of nearest neighbors was set to k = 5, and the Euclidean distance metric and the “nearest” rule (majority vote, with ties broken in favor of the nearest neighbor) were used to decide how to classify each sample. The proposed method was also tested with a support vector machine (SVM) classifier using a linear kernel function and 10-fold cross validation. In an SVM classification model, the kernel parameters and the soft margin parameter C play a vital role; the best combination of C and γ was selected by a grid search over exponentially growing sequences of C and γ. Each combination of parameter values was checked using 10-fold cross validation, and the parameters with the best cross validation accuracy were selected. For the SVM's linear kernel function, quadratic programming (QP) optimization was used to find the separating hyperplane. For the random forest, the default of 500 trees was used, with mtry = 10 (the number of features randomly sampled as split candidates at each node).
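The KNN setting and the 10-fold partition described above can be sketched as follows. This is an illustration in Python, not the authors' MATLAB code: `knn_predict` uses Euclidean distance, k = 5, and a majority vote in which ties are broken in favor of the nearest neighbor (our reading of the “nearest” rule), and `kfold_indices` is a hypothetical helper that shuffles the sample indices into ten disjoint test folds, so that 1000 samples give 100 test and 900 training samples per fold. Stratification by class is not attempted.

```python
import numpy as np


def knn_predict(X_train, y_train, X_test, k=5):
    """Euclidean KNN: majority vote among the k nearest neighbors.

    Ties between classes are broken in favor of the nearest tied neighbor.
    """
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train)
    preds = []
    for x in np.asarray(X_test, dtype=float):
        dist = np.linalg.norm(X_train - x, axis=1)
        nn_labels = y_train[np.argsort(dist, kind="stable")[:k]]  # nearest first
        classes, counts = np.unique(nn_labels, return_counts=True)
        tied = classes[counts == counts.max()]
        # With a single winner this picks it; with a tie, the nearest tied label.
        preds.append(next(lbl for lbl in nn_labels if lbl in tied))
    return np.array(preds)


def kfold_indices(n_samples, n_folds=10, seed=0):
    """Yield (train_idx, test_idx) pairs for shuffled k-fold cross validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), n_folds)
    for i, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        yield train_idx, test_idx


def cross_validated_accuracy(X, y, k=5, n_folds=10, seed=0):
    """Mean test accuracy of knn_predict over the folds."""
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    accs = []
    for train_idx, test_idx in kfold_indices(len(X), n_folds, seed):
        pred = knn_predict(X[train_idx], y[train_idx], X[test_idx], k=k)
        accs.append(np.mean(pred == y[test_idx]))
    return float(np.mean(accs))
```

With k = 5 and two classes a tie cannot occur, so the tie-break matters only for an even k or for more than two classes.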

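The performance of the classifiers was evaluated from the 2 × 2 confusion matrix, from which the numbers of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN) were obtained. The performance parameters accuracy, sensitivity, specificity, balanced classification rate (BCR), F-measure, and Matthews correlation coefficient (MCC) were then calculated using (14)–(19).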

These performance measures are defined as follows.

Accuracy. The classification accuracy of a technique is the proportion of correctly classified samples (i.e., true positives and true negatives) [40]:

$$\text{Accuracy} = \frac{TP + TN}{N}, \tag{14}$$

where N = TP + TN + FP + FN is the total number of test samples.

Sensitivity. Sensitivity is the proportion of positive samples that are correctly classified [41]:

$$\text{Sensitivity} = \frac{TP}{TP + FN}. \tag{15}$$

Its value ranges between 0 and 1, where 0 and 1 correspond to the worst and best classification, respectively.

Specificity. Specificity is the proportion of negative samples that are correctly classified [42]:

$$\text{Specificity} = \frac{TN}{TN + FP}. \tag{16}$$

Its value also ranges between 0 and 1, where 0 and 1 correspond to the worst and best classification, respectively.

Balanced Classification Rate (BCR). The balanced classification rate is the arithmetic mean of sensitivity and specificity [43, 44]:

$$\text{BCR} = \frac{\text{Sensitivity} + \text{Specificity}}{2}. \tag{17}$$

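F-Measure. The F-measure is the harmonic mean of precision, TP/(TP + FP), and recall (i.e., sensitivity). It is defined as

$$F = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}. \tag{18}$$

The value of the F-measure ranges between 0 and 1, where 0 means the worst classification and 1 means the best classification.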

Matthews Correlation Coefficient (MCC). The MCC is a measure of the quality of binary classifications [43]. It is calculated as

$$\text{MCC} = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}. \tag{19}$$

Its value ranges between −1 and +1, where +1 corresponds to perfect prediction, 0 to prediction no better than random, and −1 to total disagreement between prediction and observation.
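The six measures can be computed from the four confusion-matrix counts as sketched below. BCR is taken as the arithmetic mean of sensitivity and specificity, which reproduces the BCR values reported in Table 5 (for example, for RF on connective tissues, (0.993668 + 0.493996)/2 = 0.743832). Returning 0.0 when a denominator is zero is an assumed convention, and the counts in the usage line are illustrative, not results from the study.

```python
import math


def _ratio(num, den):
    """Safe division; 0.0 for an empty denominator (an assumed convention)."""
    return num / den if den else 0.0


def classification_metrics(tp, tn, fp, fn):
    """Accuracy, sensitivity, specificity, BCR, F-measure and MCC from a 2 x 2 confusion matrix."""
    n = tp + tn + fp + fn
    accuracy = _ratio(tp + tn, n)
    sensitivity = _ratio(tp, tp + fn)   # recall, true positive rate
    specificity = _ratio(tn, tn + fp)   # true negative rate
    precision = _ratio(tp, tp + fp)
    bcr = (sensitivity + specificity) / 2
    f_measure = _ratio(2 * precision * sensitivity, precision + sensitivity)
    mcc = _ratio(tp * tn - fp * fn,
                 math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)))
    return {
        "accuracy": accuracy, "sensitivity": sensitivity, "specificity": specificity,
        "BCR": bcr, "F-measure": f_measure, "MCC": mcc,
    }


# Illustrative counts only.
print(classification_metrics(tp=40, tn=50, fp=5, fn=5))
```

Because the F-measure ignores true negatives, it can be low even when accuracy, sensitivity, and specificity are all high, as happens for SVM on connective tissues in Table 5.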

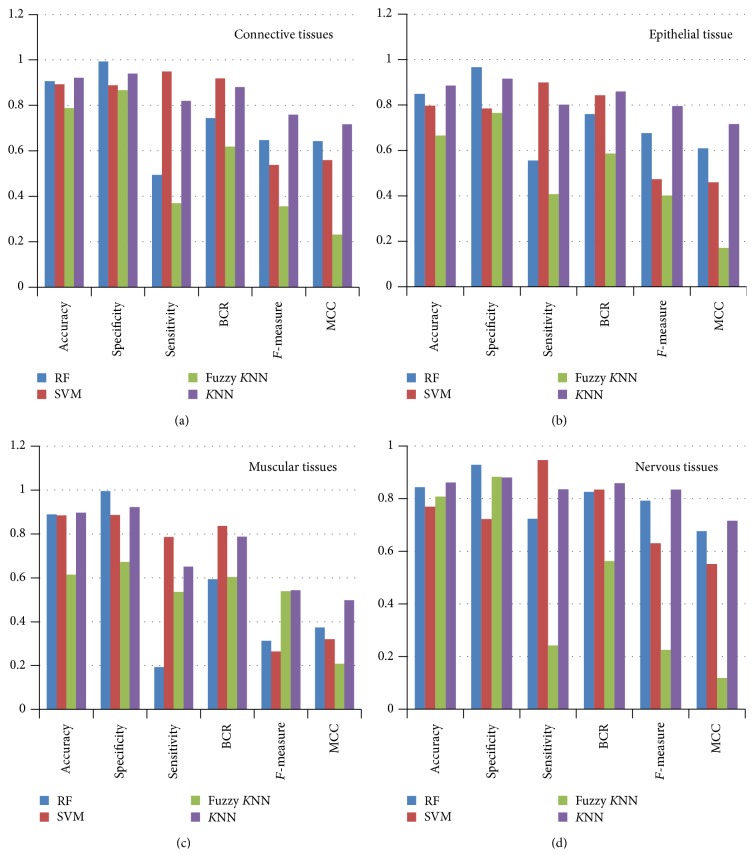

Discussions of Results. Table 5 shows the classification results of the proposed framework for microscopic biopsy images of the four tissue types, containing both cancerous and noncancerous cases, obtained with four popular classifiers: k-nearest neighbor, SVM, fuzzy KNN, and random forest.

Table 5.

Comparative performances of various classifiers for the chosen features for various tissue types.

| Tissue | Classifier | Accuracy | Specificity | Sensitivity | BCR | F-measure | MCC |
|---|---|---|---|---|---|---|---|
| Connective | RF | 0.907245 | 0.993668 | 0.493996 | 0.743832 | 0.647373 | 0.642137 |
| Connective | SVM | 0.89245 | 0.888438 | 0.948297 | 0.918756 | 0.538314 | 0.55879 |
| Connective | Fuzzy KNN | 0.787879 | 0.867476 | 0.370074 | 0.618789 | 0.356613 | 0.231013 |
| Connective | KNN | 0.921909 | 0.940164 | 0.819922 | 0.880263 | 0.759395 | 0.717455 |
| Epithelial | RF | 0.849306 | 0.966243 | 0.555332 | 0.760788 | 0.675868 | 0.609494 |
| Epithelial | SVM | 0.796998 | 0.7851 | 0.898525 | 0.842279 | 0.472804 | 0.4587 |
| Epithelial | Fuzzy KNN | 0.665834 | 0.76465 | 0.407057 | 0.585984 | 0.401181 | 0.17053 |
| Epithelial | KNN | 0.884727 | 0.916446 | 0.801733 | 0.859435 | 0.795319 | 0.71626 |
| Muscular | RF | 0.889878 | 0.995023 | 0.193145 | 0.594084 | 0.313309 | 0.37318 |
| Muscular | SVM | 0.884379 | 0.886718 | 0.786303 | 0.83681 | 0.263764 | 0.320547 |
| Muscular | Fuzzy KNN | 0.614958 | 0.672503 | 0.535894 | 0.604364 | 0.538571 | 0.208941 |
| Muscular | KNN | 0.897321 | 0.923277 | 0.650761 | 0.787092 | 0.543009 | 0.49783 |
| Nervous | RF | 0.843102 | 0.92827 | 0.723262 | 0.825766 | 0.792403 | 0.676888 |
| Nervous | SVM | 0.769545 | 0.723056 | 0.946068 | 0.834923 | 0.630126 | 0.552038 |
| Nervous | Fuzzy KNN | 0.808453 | 0.882722 | 0.242776 | 0.562835 | 0.225886 | 0.11837 |
| Nervous | KNN | 0.861763 | 0.880866 | 0.835733 | 0.858482 | 0.834116 | 0.716492 |

From Table 5 and Figure 5(a), the following observations are made for the test cases containing connective tissue.

(i) For the identification of cancer from biopsy images of connective tissue with KNN, the average values of accuracy, specificity, sensitivity, BCR, F-measure, and MCC are 0.921909, 0.940164, 0.819922, 0.880263, 0.759395, and 0.717455, respectively.

(ii) With SVM, the average values of accuracy, specificity, sensitivity, BCR, F-measure, and MCC for connective tissue are 0.89245, 0.888438, 0.948297, 0.918756, 0.538314, and 0.55879, respectively.

(iii) With fuzzy KNN, the corresponding values are 0.787879, 0.867476, 0.370074, 0.618789, 0.356613, and 0.231013, respectively.

(iv) With the random forest classifier, the corresponding values are 0.907245, 0.993668, 0.493996, 0.743832, 0.647373, and 0.642137, respectively.

Figure 5.

Performance analysis of the classifiers for the four fundamental tissues: (a) connective, (b) epithelial, (c) muscular, and (d) nervous tissue.

From Table 5 and Figure 5(b), the following observations are made for the test cases containing epithelial tissue.

(i) For the identification of cancer from biopsy images of epithelial tissue with KNN, the average values of accuracy, specificity, sensitivity, BCR, F-measure, and MCC are 0.884727, 0.916446, 0.801733, 0.859435, 0.795319, and 0.71626, respectively.

(ii) With SVM, the average values of accuracy, specificity, sensitivity, BCR, F-measure, and MCC for epithelial tissue are 0.796998, 0.7851, 0.898525, 0.842279, 0.472804, and 0.4587, respectively.

(iii) With fuzzy KNN, the corresponding values are 0.665834, 0.76465, 0.407057, 0.585984, 0.401181, and 0.17053, respectively.

(iv) With the random forest classifier, the corresponding values are 0.849306, 0.966243, 0.555332, 0.760788, 0.675868, and 0.609494, respectively.

From Table 5 and Figure 5(c), the following observations are made for the test cases containing muscular tissue.

(i) For the identification of cancer from biopsy images of muscular tissue with KNN, the average values of accuracy, specificity, sensitivity, BCR, F-measure, and MCC are 0.897321, 0.923277, 0.650761, 0.787092, 0.543009, and 0.49783, respectively.

(ii) With SVM, the average values of accuracy, specificity, sensitivity, BCR, F-measure, and MCC for muscular tissue are 0.884379, 0.886718, 0.786303, 0.83681, 0.263764, and 0.320547, respectively.

(iii) With fuzzy KNN, the corresponding values are 0.614958, 0.672503, 0.535894, 0.604364, 0.538571, and 0.208941, respectively.

(iv) With the random forest classifier, the corresponding values are 0.889878, 0.995023, 0.193145, 0.594084, 0.313309, and 0.37318, respectively.

From Table 5 and Figure 5(d), the following observations are made for the test cases containing nervous tissue.

(i) For the identification of cancer from biopsy images of nervous tissue with KNN, the average values of accuracy, specificity, sensitivity, BCR, F-measure, and MCC are 0.861763, 0.880866, 0.835733, 0.858482, 0.834116, and 0.716492, respectively.

(ii) With SVM, the average values of accuracy, specificity, sensitivity, BCR, F-measure, and MCC for nervous tissue are 0.769545, 0.723056, 0.946068, 0.834923, 0.630126, and 0.552038, respectively.

(iii) With fuzzy KNN, the corresponding values are 0.808453, 0.882722, 0.242776, 0.562835, 0.225886, and 0.11837, respectively.

(iv) With the random forest classifier, the corresponding values are 0.843102, 0.92827, 0.723262, 0.825766, 0.792403, and 0.676888, respectively.

From the above discussion of all four categories of test cases, it is observed that KNN achieves the highest accuracy and MCC among the four classifiers for every tissue type, including nervous tissue.
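This comparison can be checked mechanically against Table 5. The sketch below transcribes the accuracy and MCC columns and reports, for each tissue, the classifier with the highest value; it only re-reads the published numbers and does not recompute them. The dictionary layout and the `best_by` helper are illustrative.

```python
# (accuracy, MCC) per tissue and classifier, transcribed from Table 5.
TABLE5 = {
    "connective": {"RF": (0.907245, 0.642137), "SVM": (0.89245, 0.55879),
                   "Fuzzy KNN": (0.787879, 0.231013), "KNN": (0.921909, 0.717455)},
    "epithelial": {"RF": (0.849306, 0.609494), "SVM": (0.796998, 0.4587),
                   "Fuzzy KNN": (0.665834, 0.17053), "KNN": (0.884727, 0.71626)},
    "muscular": {"RF": (0.889878, 0.37318), "SVM": (0.884379, 0.320547),
                 "Fuzzy KNN": (0.614958, 0.208941), "KNN": (0.897321, 0.49783)},
    "nervous": {"RF": (0.843102, 0.676888), "SVM": (0.769545, 0.552038),
                "Fuzzy KNN": (0.808453, 0.11837), "KNN": (0.861763, 0.716492)},
}


def best_by(metric_index):
    """Classifier with the highest value of metric 0 (accuracy) or 1 (MCC) per tissue."""
    return {tissue: max(scores, key=lambda clf: scores[clf][metric_index])
            for tissue, scores in TABLE5.items()}


print("Best accuracy:", best_by(0))
print("Best MCC:", best_by(1))
```

Both metrics select KNN for all four tissues; by accuracy alone the runner-up is RF in every case, but RF's low sensitivity on connective and muscular tissue pulls its MCC well below that of KNN.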

From all the above observations, it is concluded that the KNN classifier gives its best results on biopsy images of connective tissue. The maximum values of accuracy, sensitivity, and specificity obtained are 0.9552, 0.9615, and 0.9543, respectively. As discussed above, the k-nearest neighbor classifier also performs better than the other classifiers for the remaining tissue types. Table 6 gives a comparative analysis of the proposed framework with other standard methods available in the literature; it shows that the proposed method performs better than all the other methods compared.

5. Conclusions

An automated procedure was presented for the detection and classification of cancer from microscopic biopsy images using a clinically significant and biologically interpretable set of features. The proposed analysis was based on tissue-level microscopic observations of cells and nuclei. The microscopic biopsy images were enhanced with a contrast limited adaptive histogram equalization based method and segmented with the k-means clustering method. After segmentation, a hybrid set of 115 features was extracted for each of the 2828 histology images taken from the histology database [8]. From these, 1000 samples were selected randomly for classification; in each fold of the 10-fold cross validation, 900 of these samples were used for training and 100 for testing. The classification approaches tested were K-nearest neighbor (KNN), fuzzy KNN, support vector machine (SVM), and random forest (RF) classifiers. From Table 5, we conclude that KNN is the best suited classification algorithm for distinguishing noncancerous from cancerous microscopic biopsy images for all four fundamental tissues. SVM provides moderate results for all tissue types but does not match KNN. Fuzzy KNN performs comparatively poorly. The RF classifier yields very low sensitivity for some tissue types and lower accuracy than the KNN classifier on this dataset. Hence, the experimental results show that the KNN classifier performs better for all categories of test cases in the selected test data, namely connective, epithelial, muscular, and nervous tissues. Among these categories, the proposed method performs best on the connective tissue test cases, for which the average accuracy, specificity, sensitivity, BCR, F-measure, and MCC are 0.921909, 0.940164, 0.819922, 0.880263, 0.759395, and 0.717455, respectively.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

References

- 1. Ali I., Wani W. A., Saleem K. Cancer scenario in India with future perspectives. Cancer Therapy. 2011;8:56–70.

- 2. Tabesh A., Teverovskiy M., Pang H.-Y., et al. Multifeature prostate cancer diagnosis and gleason grading of histological images. IEEE Transactions on Medical Imaging. 2007;26(10):1366–1378. doi: 10.1109/tmi.2007.898536.

- 3. Madabhushi A. Digital pathology image analysis: opportunities and challenges. Imaging in Medicine. 2009;1(1):7–10. doi: 10.2217/iim.09.9.

- 4. Esgiar A. N., Naguib R. N. G., Sharif B. S., Bennett M. K., Murray A. Fractal analysis in the detection of colonic cancer images. IEEE Transactions on Information Technology in Biomedicine. 2002;6(1):54–58. doi: 10.1109/4233.992163.

- 5. Yang L., Tuzel O., Meer P., Foran D. J. Medical Image Computing and Computer-Assisted Intervention—MICCAI 2008. Berlin, Germany: Springer; 2008. Automatic image analysis of histopathology specimens using concave vertex graph; pp. 833–841.

- 6. Gonzalez R. C. Digital Image Processing. Pearson Education India; 2009.

- 7. Liao S., Law M. W. K., Chung A. C. S. Dominant local binary patterns for texture classification. IEEE Transactions on Image Processing. 2009;18(5):1107–1118. doi: 10.1109/tip.2009.2015682.

- 8. Caicedo J. C., Cruz A., Gonzalez F. A. Artificial Intelligence in Medicine. Vol. 5651. Berlin, Germany: Springer; 2009. Histopathology image classification using bag of features and kernel functions; pp. 126–135. (Lecture Notes in Computer Science).

- 9. Kumar R., Srivastava R. Some observations on the performance of segmentation algorithms for microscopic biopsy images. Proceedings of the International Conference on Modeling and Simulation of Diffusive Processes and Applications (ICMSDPA '14); October 2014; Varanasi, India. Department of Mathematics, Banaras Hindu University; pp. 16–22.

- 10. Demir C., Yener B. New York, NY, USA: Rensselaer Polytechnic Institute; 2005. Automated cancer diagnosis based on histopathological images: a systematic survey.

- 11. Bhattacharjee S., Mukherjee J., Nag S., Maitra I. K., Bandyopadhyay S. K. Review on histopathological slide analysis using digital microscopy. International Journal of Advanced Science and Technology. 2014;62:65–96. doi: 10.14257/ijast.2014.62.06.

- 12. Bergmeir C., Silvente M. G., Benítez J. M. Segmentation of cervical cell nuclei in high-resolution microscopic images: a new algorithm and a web-based software framework. Computer Methods and Programs in Biomedicine. 2012;107(3):497–512. doi: 10.1016/j.cmpb.2011.09.017.

- 13. Mouelhi A., Sayadi M., Fnaiech F., Mrad K., Romdhane K. B. Automatic image segmentation of nuclear stained breast tissue sections using color active contour model and an improved watershed method. Biomedical Signal Processing and Control. 2013;8(5):421–436. doi: 10.1016/j.bspc.2013.04.003.

- 14. Haralick R. M., Shanmugam K., Dinstein I. Textural features for image classification. IEEE Transactions on Systems, Man and Cybernetics. 1973;3(6):610–621. doi: 10.1109/TSMC.1973.4309314.

- 15. Huang P.-W., Lai Y.-H. Effective segmentation and classification for HCC biopsy images. Pattern Recognition. 2010;43(4):1550–1563. doi: 10.1016/j.patcog.2009.10.014.

- 16. Landini G., Randell D. A., Breckon T. P., Han J. W. Morphologic characterization of cell neighborhoods in neoplastic and preneoplastic epithelium. Analytical and Quantitative Cytology and Histology. 2010;32(1):30–38.

- 17. Sinha N., Ramkrishan A. G. Automation of differential blood count. Proceedings of the Conference on Convergent Technologies for Asia-Pacific Region (TINCON '03); 2003; Bangalore, India. pp. 547–551.

- 18. Kasmin F., Prabuwono A. S., Abdullah A. Detection of leukemia in human blood sample based on microscopic images: a study. Journal of Theoretical & Applied Information Technology. 2012;46(2).

- 19. Srivastava R., Gupta J. R. P., Parthasarathy H. Enhancement and restoration of microscopic images corrupted with poisson's noise using a nonlinear partial differential equation-based filter. Defence Science Journal. 2011;61(5):452–461.

- 20. Pisano E. D., Zong S., Hemminger B. M., et al. Contrast limited adaptive histogram equalization image processing to improve the detection of simulated spiculations in dense mammograms. Journal of Digital Imaging. 1998;11(4):193–200. doi: 10.1007/bf03178082.

- 21. Perona P., Malik J. Scale-space and edge detection using anisotropic diffusion. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1990;12(7):629–639. doi: 10.1109/34.56205.

- 22. Al-Kofahi Y., Lassoued W., Lee W., Roysam B. Improved automatic detection and segmentation of cell nuclei in histopathology images. IEEE Transactions on Biomedical Engineering. 2010;57(4):841–852. doi: 10.1109/TBME.2009.2035102.

- 23. Pham D. L., Xu C., Prince J. L. Current methods in medical image segmentation. Annual Review of Biomedical Engineering. 2000;2(1):315–337. doi: 10.1146/annurev.bioeng.2.1.315.

- 24. Eid R., Landini G., Unit O. P. Oral epithelial dysplasia: can quantifiable morphological features help in the grading dilemma?. Proceedings of the 1st ImageJ User and Developer Conference; 2006; Luxembourg City, Luxembourg.

- 25. Bonnet N. Some trends in microscope image processing. Micron. 2004;35(8):635–653. doi: 10.1016/j.micron.2004.04.006.

- 26. Krishnan M. M. R., Chakraborty C., Paul R. R., Ray A. K. Hybrid segmentation, characterization and classification of basal cell nuclei from histopathological images of normal oral mucosa and oral submucous fibrosis. Expert Systems with Applications. 2012;39(1):1062–1077. doi: 10.1016/j.eswa.2011.07.107.

- 27. Ng H. P., Ong S. H., Foong K. W. C., Goh P. S., Nowinski W. L. Medical image segmentation using k-means clustering and improved watershed algorithm. Proceedings of the 7th IEEE Southwest Symposium on Image Analysis and Interpretation; March 2006; IEEE; pp. 61–65.

- 28. Chuang K.-S., Tzeng H.-L., Chen S., Wu J., Chen T.-J. Fuzzy c-means clustering with spatial information for image segmentation. Computerized Medical Imaging and Graphics. 2006;30(1):9–15. doi: 10.1016/j.compmedimag.2005.10.001.

- 29. Pal N. R., Pal S. K. A review on image segmentation techniques. Pattern Recognition. 1993;26(9):1277–1294. doi: 10.1016/0031-3203(93)90135-j.

- 30. Wu M.-N., Lin C.-C., Chang C.-C. Brain tumor detection using color-based K-means clustering segmentation. Proceedings of the 3rd International Conference on Intelligent Information Hiding and Multimedia Signal Processing (IIHMSP '07); November 2007; IEEE; pp. 245–248.

- 31. Srivastava S., Sharma N., Singh S. K., Srivastava R. A combined approach for the enhancement and segmentation of mammograms using modified fuzzy C-means method in wavelet domain. Journal of Medical Physics. 2014;39(3):169–183. doi: 10.4103/0971-6203.139007.

- 32. Kong J., Sertel O., Shimada H., Boyer K. L., Saltz J. H., Gurcan M. N. Computer-aided evaluation of neuroblastoma on whole-slide histology images: classifying grade of neuroblastic differentiation. Pattern Recognition. 2009;42(6):1080–1092. doi: 10.1016/j.patcog.2008.10.035.

- 33. Loukas C. G., Linney A. A survey on histological image analysis-based assessment of three major biological factors influencing radiotherapy: proliferation, hypoxia and vasculature. Computer Methods and Programs in Biomedicine. 2004;74(3):183–199. doi: 10.1016/j.cmpb.2003.07.001.

- 34. Orlov N., Shamir L., Macura T., Johnston J., Eckley D. M., Goldberg I. G. WND-CHARM: multi-purpose image classification using compound image transforms. Pattern Recognition Letters. 2008;29(11):1684–1693. doi: 10.1016/j.patrec.2008.04.013.

- 35. Diamond J., Anderson N. H., Bartels P. H., Montironi R., Hamilton P. W. The use of morphological characteristics and texture analysis in the identification of tissue composition in prostatic neoplasia. Human Pathology. 2004;35(9):1121–1131. doi: 10.1016/j.humpath.2004.05.010.

- 36. Doyle S., Hwang M., Shah K., Madabhushi A., Feldman M., Tomaszeweski J. Automated grading of prostate cancer using architectural and textural image features. Proceedings of the 4th IEEE International Symposium on Biomedical Imaging: From Nano to Macro (ISBI '07); April 2007; pp. 1284–1287.

- 37. Duda R. O., Hart P. E. Pattern Classification and Scene Analysis. Vol. 3. New York, NY, USA: Wiley; 1973.

- 38. Jain A. K. Fundamentals of Digital Image Processing. Vol. 3. Englewood Cliffs, NJ, USA: Prentice-Hall; 1989.

- 39. Krishnan M. M. R., Venkatraghavan V., Acharya U. R., et al. Automated oral cancer identification using histopathological images: a hybrid feature extraction paradigm. Micron. 2012;43(2-3):352–364. doi: 10.1016/j.micron.2011.09.016.

- 40. Ojala T., Pietikäinen M., Mäenpää T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2002;24(7):971–987. doi: 10.1109/tpami.2002.1017623.

- 41. Wei L., Yang Y., Nishikawa R. M. Microcalcification classification assisted by content-based image retrieval for breast cancer diagnosis. Pattern Recognition. 2009;42(6):1126–1132. doi: 10.1016/j.patcog.2008.08.028.

- 42. Lalli G., Kalamani D., Manikandaprabu N. A perspective pattern recognition using retinal nerve fibers with hybrid feature set. Life Science Journal. 2013;10(2):2725–2730.

- 43. Yang Y., Wei L., Nishikawa R. M. Microcalcification classification assisted by content-based image retrieval for breast cancer diagnosis. Proceedings of the 14th IEEE International Conference on Image Processing (ICIP '07); September 2007; pp. 1–4.

- 44. Hadjiiski L., Filev P., Chan H.-P., et al. Computerized detection and classification of malignant and benign microcalcifications on full field digital mammograms. In: Krupinski E. A., editor. Digital Mammography: 9th International Workshop, IWDM 2008 Tucson, AZ, USA, July 20–23, 2008 Proceedings. Vol. 5116. Berlin, Germany: Springer; 2008. pp. 336–342. (Lecture Notes in Computer Science).

- 45. Di Cataldo S., Ficarra E., Acquaviva A., Macii E. Automated segmentation of tissue images for computerized IHC analysis. Computer Methods and Programs in Biomedicine. 2010;100(1):1–15. doi: 10.1016/j.cmpb.2010.02.002.

- 46. He L., Peng Z., Everding B., et al. A comparative study of deformable contour methods on medical image segmentation. Image and Vision Computing. 2008;26(2):141–163. doi: 10.1016/j.imavis.2007.07.010.

- 47. Mookiah M. R., Shah P., Chakraborty C., Ray A. K. Brownian motion curve-based textural classification and its application in cancer diagnosis. Analytical and Quantitative Cytology and Histology. 2011;33(3):158–168.

- 48. Krishnan M. M. R., Chakraborty C., Paul R. R., Ray A. K. Quantitative analysis of sub-epithelial connective tissue cell population of oral submucous fibrosis using support vector machine. Journal of Medical Imaging and Health Informatics. 2011;1(1):4–12. doi: 10.1166/jmihi.2011.1013.