Abstract

Adoption of clinical decision support has been limited. Important barriers include an emphasis on algorithmic approaches to decision support that do not align well with clinical work flow and human decision strategies, and the expense and challenge of developing, implementing, and refining decision support features in existing electronic health records (EHRs). We applied decision-centered design to create a modular software application to support physicians in managing and tracking colorectal cancer screening. Using decision-centered design facilitates a thorough understanding of cognitive support requirements from an end user perspective as a foundation for design. In this project, we used an iterative design process, including ethnographic observation and cognitive task analysis, to move from an initial design concept to a working modular software application called the Screening & Surveillance App. The beta version is tailored to work with the Veterans Health Administration’s EHR Computerized Patient Record System (CPRS). Primary care providers using the beta version Screening & Surveillance App more accurately answered questions about patients and found relevant information more quickly compared to those using CPRS alone. Primary care providers also reported reduced mental effort and rated the Screening & Surveillance App positively for usability.

Keywords: ethnographic observation, cognitive task analysis, agile software development, cancer prevention, health promotion

INTRODUCTION

The recent rapid adoption of electronic health records (EHRs) offers a seemingly natural platform for the implementation, dissemination, and adoption of clinical decision support. Studies show, however, that integration of effective clinical decision support has proven difficult (Bright et al., 2012; Greenes, 2007; Kilsdonk, Peute, Knijnenburg, & Jaspers, 2011; Oluoch et al., 2012; Patterson, Hughes, Kerse, Cardwell, & Bradley, 2012; Sahota et al., 2011). Some have highlighted a misalignment between the types of decision support features offered, the cognitive processes of health care professionals, and the work environment (Miller & Militello, 2015; Sidebottom, Collins, Winden, Knutson, & Britt, 2012; Streiff et al., 2012; Tawfik, Anya, & Nagar, 2012; Wan, Makeham, Zwar, & Petche, 2012; Wu, Davis, & Bell, 2012). The health care community tends to define clinical decision support quite narrowly, focusing primarily on rule-based, if-then algorithms to alert or remind the physician. This narrow focus ignores strategies for supporting decision making commonly used in other complex environments, such as aviation, nuclear power, and cybersecurity. This focus also reduces the decision to a single player (generally the physician) in isolation, often overlooking the role of the patient and the larger health care team.

These problems are exacerbated by the fact that clinical decision support elements are generally added after the EHR has been designed, implemented, and fielded. Thus, the addition or revision of clinical decision support frequently requires significant time and money to implement and is often severely constrained by the software architecture and existing EHR interface. As a result, decision support elements are difficult to revise and refine as needed to accommodate variations in clinical work flow, updates to national guidelines, emerging screening and treatment modalities, and other changes in the rapidly evolving health care environment (Scott, Rundall, Vogt, & Hsu, 2007).

We describe a project to develop decision support for colorectal cancer (CRC) screening that addresses these problems. CRC screening represents an interesting challenge because it appears to be a straightforward clinical care issue—one that should be easily supported by rule-based clinical reminders that appear in the patient record several months before screening is due and persist until screening has been ordered or the physician documents that the patient has declined screening. The evidence, however, suggests that CRC screening rates remain low despite the introduction of clinical reminders. As of 2012, only 65% of eligible adults in the United States were up-to-date with CRC screening; 7% had been screened but were not up-to-date, and 28% had never been screened (Centers for Disease Control and Prevention, 2013).

CRC Screening

CRC is the second leading cause of cancer deaths in the United States for cancers that affect both men and women (Levin et al., 2008). CRC screening strategies are highly effective at detecting early cancers when they are most treatable (Frazier, Colditz, Fuchs, & Kuntz, 2000; Zauber et al., 2012); yet, CRC screening rates remain lower than those for other screenable cancers. Several factors make CRC screening difficult to track. First, several screening modalities are available, including colonoscopy, flexible sigmoidoscopy, high-sensitivity fecal occult blood tests, and fecal immunochemical tests (U.S. Preventive Services Task Force, 2008). Second, depending on the modality used and the risk factors of an individual, screening is recommended at different intervals, some as long as 10 years. Furthermore, CRC risks and screening options are not well known to the general public. As a result, educating patients about CRC can take a significant proportion of the limited time physicians and patients have to interact face-to-face, which makes CRC screening a prime candidate for postponement or cursory discussion during busy patient encounters.

Decision-Centered Design

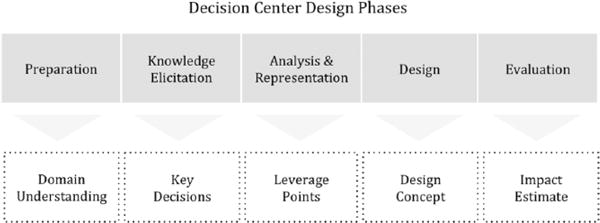

Decision-centered design (Militello & Klein, 2013), a conceptual framework grounded in cognitive engineering, offers an alternate approach to computational or algorithmic decision support. Decision-centered design focuses on first understanding the difficult decisions that end users must make and the context in which they occur and then determining what types of interventions would best support decision making. Figure 1 depicts five phases associated with decision-centered design. Rule-based reminders and alerts may be a component of resulting decision support solutions, but they are rarely the primary focus.

Figure 1.

Overview of decision-centered design phases. Adapted from Working Minds: A Practitioner’s Guide to Cognitive Task Analysis (p. 181), by B. Crandall, G. A. Klein, & R. R. Hoffman, 2006, Cambridge, MA: MIT Press. Copyright 2006 by Massachusetts Institute of Technology. Adapted with permission.

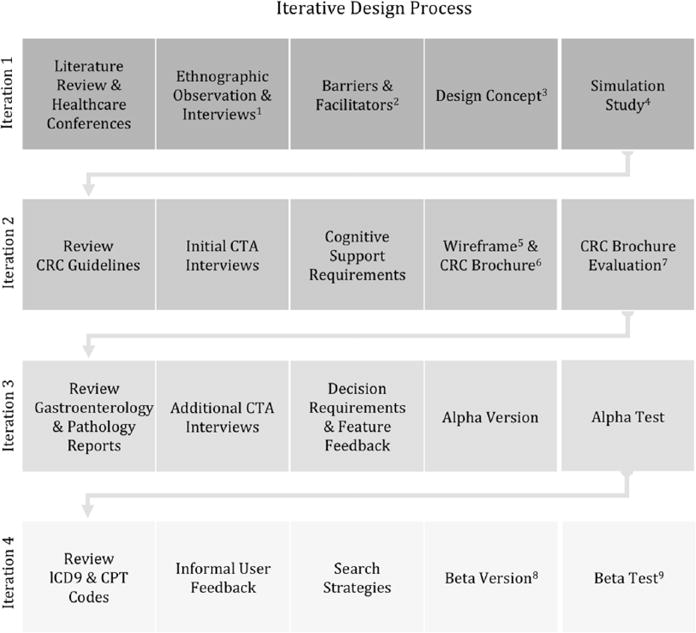

For this project in particular, we conducted ethnographic observations and cognitive task analysis (CTA) interviews to identify cognitive support requirements and understand the work context. Note that the term decision requirements is used broadly to include a range of complex cognitive activities in the decision-centered design literature (Kaempf, Klein, Thordsen, & Wolf, 1996). For clarity, we use the more descriptive term cognitive support requirements in this article. (See Figure 2 for an overview of how we instantiated decision-centered design phases in the iterative design process.) The resulting decision support solution is designed to work with existing clinical reminders for CRC screening. We considered support for both clinicians and patients. From a clinician perspective, we developed visualizations that support complex macrocognitive processes, such as sensemaking, problem detection, and collaborative decision making. We sought to aid the primary care provider in quickly obtaining key information from the EHR to build a story about the patient’s current status to support sensemaking and anomaly detection and to aid in engaging the patient in the decision process.

Figure 2.

Decision-centered design instantiated in the iterative design process. Numbered superscript annotations refer to artifacts (either elsewhere in this manuscript or in publications) from each iteration: ¹Saleem et al. (2009). ²Saleem et al. (2005). ³Figure 2. ⁴Saleem et al. (2011). ⁵Figure 3. ⁶Figure 4. ⁷Militello, Borders et al. (2014). ⁸Figure 5. ⁹Militello et al. (2015).

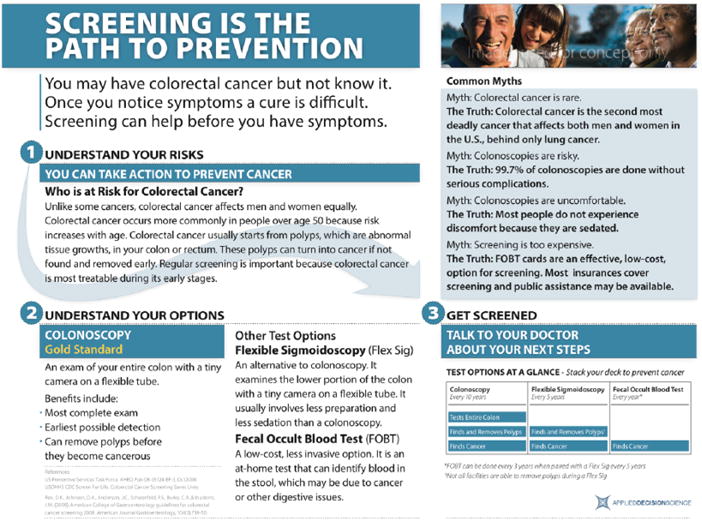

From the patient perspective, we developed a one-page educational brochure that provides key information about CRC and screening options and addresses common misconceptions about CRC screening. The brochure can be shared on the computer screen during the patient encounter, printed to send home with the patient, or disseminated via e-mail or a patient portal. Our intent was to provide patients with accessible, targeted educational materials addressing common concerns that influence patient decision making regarding CRC screening.

A Modular Software Application

To avoid the constraints of existing EHR software architecture and user interface conventions, we developed a modular software application called the Screening & Surveillance App. The app is independent from the EHR but appears to be integrated from the end user perspective. This modular software queries the EHR and laboratory data to extract information already stored in the EHR and lab files. The app displays this information in a visualization designed to support physicians in tracking and managing CRC screening and surveillance for their patients. A link to an easily displayed and printable version of the patient-centered educational brochure is accessible within the Screening & Surveillance App. This modular application does not require any changes to the existing EHR other than the inclusion of a button that provides a link to the Screening & Surveillance App. The beta version of the Screening & Surveillance App works with the Veterans Health Administration’s Computerized Patient Record System (CPRS) but could be easily tailored to work with other EHRs.
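One way to picture this separation between the app and the EHR is an adapter interface, sketched below in Python. This is a minimal illustration under stated assumptions, not the architecture of the Screening & Surveillance App: the names `CrcRecord`, `EhrAdapter`, `InMemoryAdapter`, and `load_history` are hypothetical, and the in-memory adapter stands in for a site-specific component that would query CPRS or another EHR.

```python
from dataclasses import dataclass
from datetime import date
from typing import Protocol


@dataclass(frozen=True)
class CrcRecord:
    """One CRC-related item pulled from an EHR or lab file (hypothetical shape)."""
    performed_on: date
    test_name: str
    source: str  # e.g., "GI report", "lab report", "progress note"


class EhrAdapter(Protocol):
    """Assumed interface: anything that can return CRC records for a patient."""

    def fetch_crc_records(self, patient_id: str) -> list[CrcRecord]: ...


class InMemoryAdapter:
    """Stand-in for a site-specific adapter; a real one would query the EHR."""

    def __init__(self, records: dict[str, list[CrcRecord]]) -> None:
        self._records = records

    def fetch_crc_records(self, patient_id: str) -> list[CrcRecord]:
        return list(self._records.get(patient_id, []))


def load_history(adapter: EhrAdapter, patient_id: str) -> list[CrcRecord]:
    """App-side code depends only on the adapter interface, not on any one EHR."""
    return sorted(adapter.fetch_crc_records(patient_id), key=lambda r: r.performed_on)


# Hypothetical test data, deliberately stored out of chronological order.
demo_adapter = InMemoryAdapter({
    "patient-1": [
        CrcRecord(date(2015, 3, 2), "FIT", "lab report"),
        CrcRecord(date(2010, 6, 15), "colonoscopy", "GI report"),
    ]
})
history = load_history(demo_adapter, "patient-1")
```

Under this assumption, tailoring the app to a different EHR would mean writing a new adapter while leaving the display code unchanged.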

In this article, we describe an applied research project employing a decision-centered design conceptual framework (Figure 1) to understand the work context in which CRC screening occurs and to identify cognitive support requirements that informed the design of the Screening & Surveillance App and the patient educational brochure.

METHODS FOR DESIGN AND DEVELOPMENT

Methods used in this project include ethnographic observation, CTA, agile software development, and usability testing. All research activities were either determined to be exempt from institutional review board review or approved via expedited review.

Ethnographic Observation for Foundational Research

The initial phase of this research began with an exploratory study aimed at documenting barriers to CRC screening and follow-up when using computerized decision support.

Ethnographic methods

We conducted ethnographic observation at four sites recognized for their advanced health information technology: two Veterans Affairs (VA) medical centers, Partners HealthCare System, and an Eskenazi Health clinic, which uses software developed by the Regenstrief Institute (Saleem et al., 2009). Although the emphasis of this data collection was on ethnographic observation, we also conducted opportunistic interviews, asking clarifying questions during brief breaks in the clinical flow. In addition, we conducted two to three key informant interviews at each site to obtain an overview of practices and tools used to support primary care providers in managing and tracking CRC screening for their patients. We also hosted focus groups at two sites to offer observation participants an opportunity to provide additional input, if desired. (Note: The other two sites declined to participate in focus groups.) The observations, key informant interviews, and focus groups happened in the span of 3 days at each site, each informing the others. Across the four sites, we observed 62 patient encounters from intake to outtake, conducted key informant interviews with seven physicians, and hosted two focus groups.

Ethnographic data were analyzed using a codebook derived from sociotechnical systems theory (Harrison, Koppel, & Bar-Lev, 2007; Waterson, 2015). A team of four qualitative researchers reviewed transcribed field notes to identify barriers related to the social subsystem, technical subsystem, and external subsystem. At least two coders independently reviewed each transcript and then discussed codes until consensus was reached. For more details about the methods used for data collection and analysis, see Saleem et al. (2009).

Ethnographic findings

Of the barriers identified in this exploratory study, we focused on those most relevant to provider decision making for CRC testing. We found that by organizing individual barriers in terms of macrocognitive activities (Klein et al., 2003), we were better able to characterize the barriers in the context of cognitive work. This post hoc organization of the data using the macrocognitive framework helped the design team maintain a focus on user needs within the narrative of clinical work. The macrocognitive framework allowed designers to anticipate implications of individual barriers and aspects of cognitive performance not specified initially.

The first macrocognitive activity is sensemaking, defined as a deliberate effort to understand connections or build a story in order to anticipate a trajectory and act appropriately (Klein, Moon, & Hoffman, 2006). A significant barrier to sensemaking was simply finding the information needed to build a relevant story about the patient. CRC screening history is stored in disparate places in all the EHRs observed in this study, making it difficult to find and integrate. Some of the most important information comes from a gastroenterology (GI) clinic; yet, coordination with a clinic within the same health care system was not always straightforward. GI clinics outside the health care system represented an even greater barrier. Often GI reports were delivered in a form that did not integrate well with the EHR. Thus in some cases, the primary care provider was required to search the EHR for digital files, as well as faxed reports in a paper folder. In other cases, the GI report was available in the EHR but as a Portable Document Format (PDF) file that precluded searching and integration with other health data.

A second macrocognitive activity these leading EHRs did not adequately support was problem detection, or the ability to notice an anomaly that might require nonroutine action (Klein, Pliske, Crandall, & Woods, 2005). One commonly cited barrier was that the clinical reminder recommendations were not always accurate (Saleem et al., 2005), and the information technology provided no cues that would allow the user to detect this problem. Sometimes, the EHR would indicate that the patient was due for screening when the patient was not. Because the clinical reminder provided no rationale for the recommendation, the primary care provider would generally order the test only to have it rejected by the GI clinic. One provider reported that she had ordered the same test three times based on the clinical reminder, and it had been rejected each time, creating considerable frustration for the patient. The current clinical decision support provided no direct way for the primary care provider to recognize an inaccurate recommendation. Even if the primary care provider had a hunch that the reminder was incorrect, there were no direct links to the guideline or prior findings driving the recommendation to facilitate comparison.

Another type of problem that was difficult to detect included tests that were ordered but did not occur. Primary care providers reported that it was very difficult for them to determine whether support staff and patients followed up after the order was made. Thus, there was no easy way to investigate (or even know) if a patient did not receive the test that was ordered until the next visit with the patient—which might be months or years in the future.

A third macrocognitive challenge identified was collaborative decision making. In order to increase screening rates, it is not enough for the primary care provider to know when screening is due; the provider must engage the patient in the decision process. The patient must be convinced that screening is beneficial and reasonably accessible. Providers reported that educating patients about CRC screening can be a lengthy discussion. This barrier can be attributed to variation in communication skills and time pressure rather than a limitation of the EHR. However, the information technology provides a natural platform for providing highly accessible patient education materials to better support patient–provider collaborative decision making.

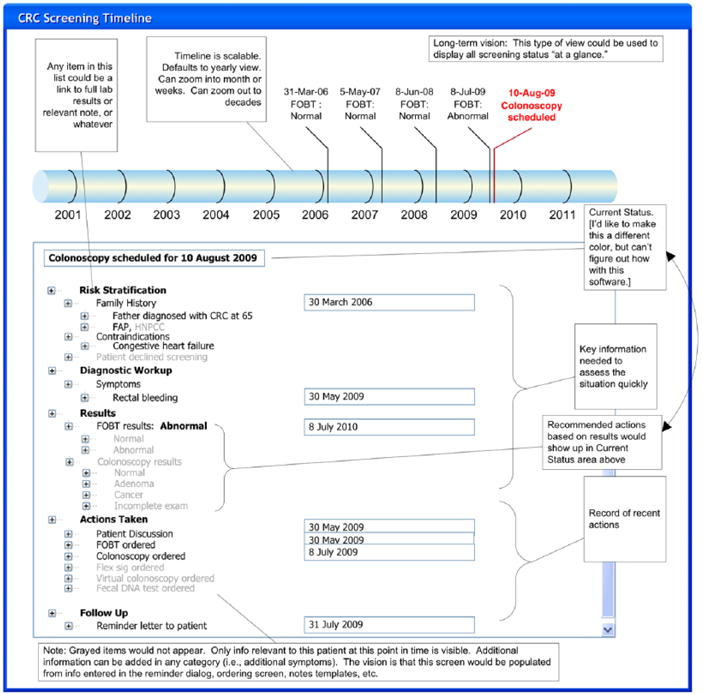

Concept design

Based on these findings, our vision was to develop a time-based display of CRC testing history (Figure 3) with easy access to supporting information, such as risk stratification, diagnostic workup, and results, to facilitate rapid story building and support sensemaking. This early concept also included strategies for linking the recommendations to the source (i.e., GI clinic and/or national guidelines) and for increasing visibility into events that happen after the order was placed to facilitate problem detection. Last, we wanted to explore the efficacy of just-in-time educational materials to facilitate a streamlined discussion to support provider–patient collaborative decision making. Mock-ups of these early design ideas were tested in a laboratory simulation study to facilitate and refine the concept development (Saleem et al., 2011).

Figure 3.

Screening & Surveillance App design concept.

Initial CTA

A second phase of the project employed CTA methods (Hoffman & Militello, 2008). Objectives of the CTA were (a) to identify key cognitive support requirements that drive primary care provider decision making, (b) to increase our understanding of commonalities and differences in CRC screening work flow in different health care systems, and (c) to explore strategies for reducing barriers to CRC testing.

Initial CTA methods

An initial CTA included in-depth interviews with four primary care providers at the Roudebush Veterans Affairs Medical Center in Indianapolis, Indiana, and three primary care providers at the Kettering Health Network in Kettering, Ohio (Lopez & Militello, 2012). Participant experience as a primary care provider ranged from 1.5 years to 11 years (M = 6.28 years). Each interview lasted between 60 and 90 min and included a task diagram to understand work flow (Militello & Hutton, 1998), exploration of critical incidents and/or scenarios (Crandall et al., 2006), and a discussion of reactions to the design concepts. (See online appendix for a copy of the interview guide.) Interview notes were typed within 1 week of interviews. Two researchers reviewed notes to identify cognitive support requirements. After reaching consensus on cognitive support requirements, the same two researchers developed a table detailing cognitive support requirements and documenting important contextual information (Table 1).

TABLE 1.

Cognitive Support Requirements

| No. | Cognitive Support Requirement | Description |
|---|---|---|
| 1. | Determine whether the patient is in screening or surveillance mode. | For experienced primary care providers, this is an important sensemaking frame. Screening versus surveillance mode has important implications for what information the provider will access prior to the patient discussion as well as how the provider will present and discuss testing options with the patient. For those in surveillance mode, it is important to review prior findings and to ensure that the patient understands that prior findings could increase the patient’s risk for CRC. Thus, the importance of further testing at recommended intervals is greater. For those in screening mode, no additional information gathering is generally needed, and the conversation may be simpler. |
| 2. | Obtain a big-picture perspective of the patient’s testing history. | Prior CRC test data may be found in progress notes, lab reports, GI reports, and pathology reports. During a patient encounter, it is difficult to use the EHR to access each of the required screens, locate the relevant information, and mentally integrate the data in a timely manner while talking to the patient. In fact, in some cases, finding and integrating relevant data is enough of a barrier that physicians rely on patient memory of past CRC tests and findings rather than search the EHR. This represents a considerable barrier to effective sensemaking. |
| 3. | Know where the patient is in the screening cycle. | In most cases, the primary care provider orders a test and receives a report from the specialty clinic or lab in a few weeks. In some cases, however, the primary care provider does not receive a report. In these cases, it is difficult to determine where the process fell apart. There is generally no visibility into what happens after the test is ordered and why a test did not occur, greatly hindering the provider’s ability to detect problems with the process. |
| 4. | Consider conditions or medications that have implications for CRC testing. | Quickly reviewing relevant conditions and medications helps the primary care provider recognize nonroutine situations and make patient-based recommendations for testing. For example, some primary care providers reported that they consider whether the patient has a condition that may increase the risk associated with the anesthesia often used with colonoscopy. For those patients, they may recommend another test modality. |
| 5. | Assess and monitor a patient’s individual risk level for CRC. | Information related to risk stratification might be found in multiple places in the EHR, including prior progress notes and GI reports. Furthermore, risk stratification may change based on test findings or even changes in family history (e.g., a first-degree relative recently diagnosed with CRC). Primary care providers indicated that it would be useful to have the most recent data relevant to risk level available so they can quickly assess and ask relevant questions to determine whether there is a need to update their understanding of the patient’s CRC risk. |
| 6. | Educate and inform patients. | Primary care providers report that they want each patient to understand what colon cancer is and what the screening options are. Most report that they emphasize colonoscopy as a gold standard of care because it provides a more complete view of the colon and because the gastroenterologist is able to remove precancerous polyps during the procedure. Primary care providers report common CRC misconceptions from patients include underestimation of the risk of CRC, overestimation of the risk of colonoscopy procedure, fear that the colonoscopy procedure will be uncomfortable, and belief that CRC screening is expensive. Note that these concerns reported by physicians in CTA interviews align with issues and concerns derived from surveys of patients (Beydoun & Beydoun, 2008; Vernon, 1997). |

Note. CRC = colorectal cancer; CTA = cognitive task analysis; EHR = electronic health record; GI = gastroenterology.

Initial CTA findings

These initial CTA interviews resulted in six cognitive support requirements (Table 1). The first five cognitive support requirements are relevant to the primary care provider’s need to review and integrate patient data as part of sensemaking and problem detection. The sixth cognitive support requirement is relevant to collaborative decision making.

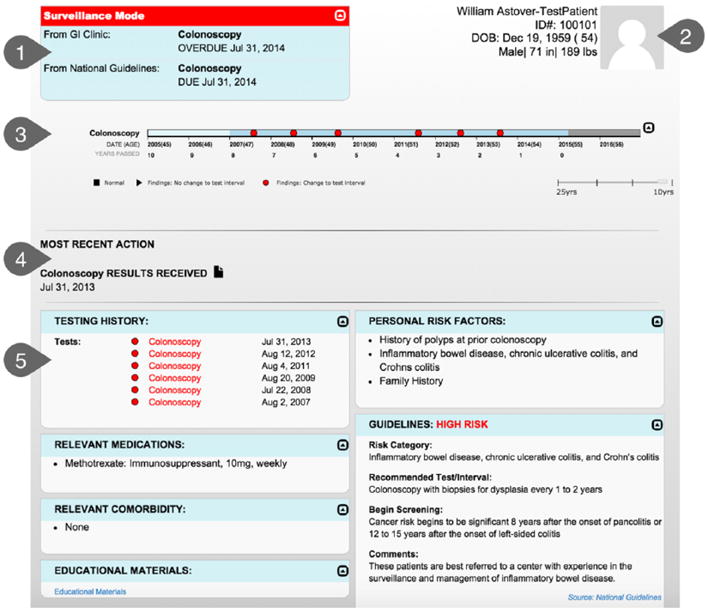

Wireframe and educational material

Based on these findings, we refined the design concept for the Screening & Surveillance App and developed wireframes illustrating key visualizations (Figure 4). The wireframe leveraged our understanding of information priorities to guide data layout. For example, the most important information is whether the patient is in screening or surveillance mode and the recommendation for the next test. For patients in routine screening mode, this may be the only information needed. Therefore, we included an at-a-glance panel in the upper left with this key information.

Figure 4.

Wireframe based on initial cognitive task analysis findings.

We also designed a one-page educational brochure to aid the primary care provider in educating patients about CRC testing (Figure 5). Providers reported that a discussion of CRC and screening options can take a large portion of the limited exam time. We anticipated that an educational brochure geared toward the patient may facilitate a more focused discussion between the primary care provider and patient and also provide a printable artifact for the patient to take away as a reminder of the importance of CRC testing even if the discussion is cut short. Making it available during the exam increases the likelihood that the provider will share the brochure with the patient and answer questions as time allows. For this brochure, our goal was to provide a single-page, easily displayed, and printable resource that provides basic information about CRC and screening options and addresses common misconceptions.

Figure 5.

Single-page educational brochure.

Additional CTA

Additional CTA methods

In a third phase of the research, the research team conducted additional CTA interviews with four primary care providers at Vanderbilt University Medical Center and four at Baylor Healthcare System. Both are recognized leaders in effective use of health information technology. Participant experience ranged from 5 to 27 years (M = 16.25 years). The Baylor health care providers represent an important perspective as the Baylor Healthcare System has higher screening rates for CRC than many other areas of the United States (Baylor, 72%; national average, 65%). These interviews were conducted to broaden our scope and understanding regarding CRC testing work flow at various health care systems and to obtain feedback regarding the wireframe for the Screening & Surveillance App.

The additional CTA interviews were similar to those in the initial CTA. We collected background information about each participant, created a task diagram to discuss work flow, explored critical incidents and scenarios, and obtained reactions to the Screening & Surveillance App wireframes. Interview notes were typed within 1 week of data collection. For these data, two researchers reviewed each set of notes for additional cognitive support requirements and feedback about the design. Researchers met to reach consensus on findings and discuss implications for design.

Additional CTA findings

No additional cognitive support requirements were identified, increasing our confidence that the cognitive support requirements articulated in the first round of interviews were applicable and generalizable beyond the two initial data collection sites. Findings also included specific feedback about key features of the design (e.g., at-a-glance panel, timeline).

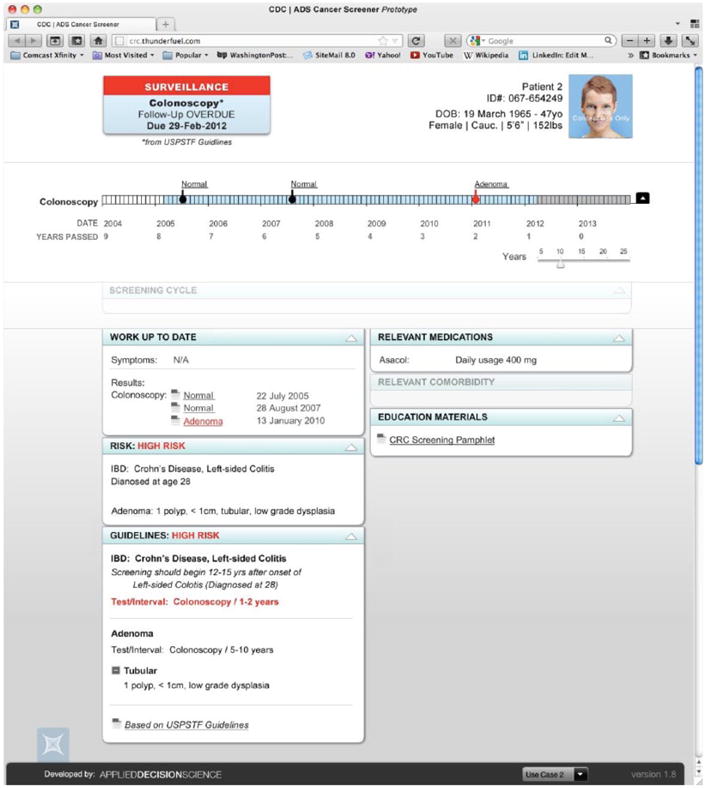

Refined wireframe

Results from these interviews allowed us to refine aspects of the user interface design, such as language choice. For example, although screening mode and surveillance mode were not universal terms, they were easily understood by all interviewees. The results also informed decisions about data presentation. One key refinement included the presentation of data on the timeline. Initial wireframes distinguished normal from abnormal findings. Interviewees reported, however, that findings could be abnormal (e.g., diverticulitis) but have no relevance for CRC screening or risk, creating ambiguity in our categories. As a result, we changed the design to distinguish three categories of findings: those that change the CRC testing interval, those that do not change the testing interval, and normal findings. The additional CTA findings informed the refined wireframes for the Screening & Surveillance App that were instantiated in the beta version (Figure 6).

Figure 6.

Beta version of the Screening & Surveillance App. 1.0 = recommendations at a glance; 2.0 = patient demographics; 3.0 = timeline; 4.0 = most recent action panel; 5.0 = dashboard.
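The three-way categorization of findings adopted in the refined wireframe can be expressed as a small decision rule. The sketch below is illustrative only: the enum `FindingCategory`, the function `categorize`, and its two boolean inputs are hypothetical names, and the article does not describe how the app itself decides whether a finding changes the testing interval.

```python
from enum import Enum


class FindingCategory(Enum):
    NORMAL = "normal finding"
    ABNORMAL_NO_INTERVAL_CHANGE = "abnormal finding, testing interval unchanged"
    CHANGES_INTERVAL = "finding changes the testing interval"


def categorize(is_abnormal: bool, changes_interval: bool) -> FindingCategory:
    """Assign a finding to one of the three categories used on the timeline.

    Both inputs are assumed to be supplied by an upstream step (for example,
    a reviewer or a rule set) that this sketch does not model.
    """
    if changes_interval:
        return FindingCategory.CHANGES_INTERVAL
    if is_abnormal:
        return FindingCategory.ABNORMAL_NO_INTERVAL_CHANGE
    return FindingCategory.NORMAL


# Diverticulitis is the interviewees' example of an abnormal finding that has
# no relevance for CRC screening, so the testing interval stays the same.
diverticulitis = categorize(is_abnormal=True, changes_interval=False)
```

Checking the interval question first reflects the interviewees' point that what matters for CRC testing is the effect on the interval, not whether a finding is abnormal in general.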

Agile Software Development

We used an agile software development process, which allowed for rapid iteration. Prior to beginning development, we developed a list of user stories, or potential ways to use the application. Development tasks were created from the user stories and then divided into small increments, called sprints, with short time frames, usually 2 weeks. We conducted a series of sprints to develop fully functioning, individual components of the application. Following each sprint, the development and research teams conducted sprint reviews in which the application components developed in the current sprint were reviewed. The individual sprint components were integrated into an alpha version and tested to ensure that the intended functionality worked as designed before the beta version was released. This step included defect testing, which involved testing the application in extreme usage scenarios (i.e., patients with complex or unusual screening history) and exploring both expected and unexpected combinations of mouse clicks and scrolling.

Development environment

We obtained access to the Advanced Federal Healthcare Innovation Lab (AFHIL) hosted by Hewlett-Packard to interact with a test version of CPRS. AFHIL provided access to a recent version of CPRS and a set of test patient files.

Informal user feedback

Although we obtained informal user feedback throughout the project, we deliberately gathered more detailed informal feedback from potential end users as working components of the Screening & Surveillance App were developed. We were particularly interested in data-seeking strategies. Although previous analyses had highlighted the types of data end users seek in the context of sensemaking, problem detection, and collaborative decision making, we wanted to better understand the strategies that primary care providers develop to find the most recent GI notes and reports as well as other key information sources. Depending upon findings from the GI report, a second step may be to look for pathology reports and lab reports. A third step might be to look in progress notes for other relevant notes about CRC testing for this patient. By articulating these strategies, we were able to continue to make refinements that would make it easier to locate and integrate key information needed for decision making.

SCREENING & SURVEILLANCE APP BETA VERSION

The beta version of the Screening & Surveillance App includes five specific decision support features. Each is described in turn.

Recommendations at a Glance

The recommendations-at-a-glance panel in the upper left displays key information that primary care providers need to know before discussing CRC screening with any patient. This information includes whether the patient is in screening or surveillance mode, when the next test is due, and what test is recommended. In most cases, this is all the information the primary care provider will need before making a recommendation to the patient.

Two sources of recommendations may be available. First, the Screening & Surveillance App applies an algorithm we developed that matches patient factors to the U.S. Preventive Services Task Force guidelines (U.S. Preventive Services Task Force, 2008) and displays the recommended testing interval and modality. Second, if there is a recommendation from a GI clinic available, that recommendation will be displayed. If either the patient or the primary care provider has questions about what is driving the recommendation, the at-a-glance panel can be expanded to compare recommendations from the GI clinic to recommendations from the U.S. Preventive Services Task Force. In this example, note that surveillance mode is highlighted in red because it is a cue to the provider that this case may require additional information.
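The idea of showing a guideline-derived recommendation next to an optional GI clinic recommendation can be sketched as follows. This is a simplified illustration, not the algorithm developed for the app, which the article does not specify. The interval table holds placeholder values for illustration only and should not be read as clinical guidance, the names `Recommendation`, `guideline_recommendation`, and `at_a_glance` are hypothetical, and the sketch omits the screening versus surveillance mode that the panel also displays.

```python
from dataclasses import dataclass
from typing import Optional

# Placeholder intervals (years) for illustration only; not clinical guidance.
ILLUSTRATIVE_INTERVAL_YEARS = {
    "colonoscopy": 10,
    "flexible sigmoidoscopy": 5,
}


@dataclass(frozen=True)
class Recommendation:
    source: str    # "guideline" or "GI clinic"
    modality: str
    due_year: int


def guideline_recommendation(last_modality: str, last_test_year: int) -> Recommendation:
    """Look up the interval for the last test and compute when the next is due."""
    interval = ILLUSTRATIVE_INTERVAL_YEARS[last_modality]
    return Recommendation("guideline", last_modality, last_test_year + interval)


def at_a_glance(guideline: Recommendation, gi_clinic: Optional[Recommendation]) -> dict:
    """Collect what the panel would show; the GI clinic recommendation is optional."""
    shown = [guideline] if gi_clinic is None else [guideline, gi_clinic]
    sources_differ = gi_clinic is not None and (
        (gi_clinic.modality, gi_clinic.due_year)
        != (guideline.modality, guideline.due_year)
    )
    return {"recommendations": shown, "sources_differ": sources_differ}


# Hypothetical patient: last colonoscopy in 2012, GI clinic asked for an earlier repeat.
guideline = guideline_recommendation("colonoscopy", 2012)
gi = Recommendation("GI clinic", "colonoscopy", 2017)
panel = at_a_glance(guideline, gi)
```

A flag such as `sources_differ` is one possible cue for prompting the provider to expand the panel and compare the two sources, in line with the comparison view described above.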

Patient Demographics

The patient demographics window in the upper right of the screen is intended to reduce the likelihood of wrong-patient errors. There is a placeholder for a patient photo (when available) as well as name, identification number, date of birth, sex, height, and weight. If multiple patient records are open at the same time, prominent display of this information should increase the likelihood that the provider will quickly notice if the data and the patient sitting in the exam room do not match.

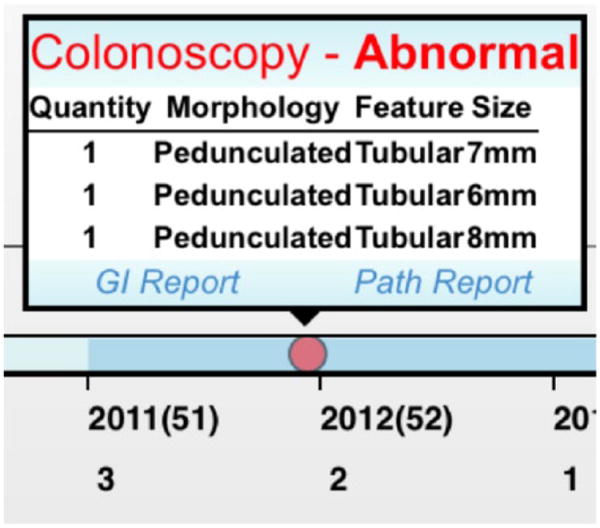

Timeline

In many EHRs, the data about a patient’s CRC testing history are stored in multiple locations, including progress notes, pathology reports, lab reports, and GI notes. The timeline integrates relevant information from all of these sources into a simple, easily scanned visualization. Tests that revealed normal findings are indicated with a black square symbol. A black triangle symbol indicates abnormal findings that do not change the testing interval. A red circle symbol indicates that findings from the test did change the recommended screening interval.
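The symbol legend described above can be expressed as a small lookup. The sketch below is illustrative only: the `ScreeningEvent` record, its field names, and the `timeline_markers` function are hypothetical, and only the three symbol and color pairings come from the description of the timeline.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import List, Tuple


class Finding(Enum):
    NORMAL = "normal"
    ABNORMAL_SAME_INTERVAL = "abnormal, interval unchanged"
    ABNORMAL_NEW_INTERVAL = "abnormal, interval changed"


# Legend from the timeline description: finding -> (color, shape).
LEGEND = {
    Finding.NORMAL: ("black", "square"),
    Finding.ABNORMAL_SAME_INTERVAL: ("black", "triangle"),
    Finding.ABNORMAL_NEW_INTERVAL: ("red", "circle"),
}


@dataclass
class ScreeningEvent:  # hypothetical record shape
    performed: date
    test: str
    source: str        # e.g., "GI note", "pathology report", "lab report"
    finding: Finding


def timeline_markers(events: List[ScreeningEvent]) -> List[Tuple[date, str, str, str]]:
    """Merge events from all sources into one date-ordered list of markers."""
    ordered = sorted(events, key=lambda e: e.performed)
    return [(e.performed, e.test) + LEGEND[e.finding] for e in ordered]
```

Sorting by date after pooling events from every source is what turns several scattered record locations into a single scannable history.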

To obtain additional information about a specific test, the end user can click on the symbol for the test of interest to view a summary of findings, such as the size, number, and type of polyps found (Figure 7). Links to the pathology and GI reports are also available if more context is needed.

Figure 7. Additional information available by clicking on any symbol on the timeline.

Most-Recent-Action Panel

The most-recent-action panel provides insight into the patient’s progress through the testing process. This field displays the most recent action, including test ordered, test scheduled, results received, or test declined. In many health care systems, the primary care provider has no visibility into what happens after a test is ordered, making it difficult to discover tests that do not occur after an order is in place, as well as reports that are never received. The most-recent-action panel is designed to aid the primary care provider in detecting problems when disruptions to the testing process occur.
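As a rough illustration of how a most-recent-action field could help surface stalled tests, consider the sketch below. The four action labels come from the description above; the `Action` record, the function names, and the 90-day threshold are hypothetical. The panel as described displays the most recent action for the provider to interpret, so the automated flag shown here is an assumption rather than a feature of the app.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional

# "test ordered" and "test scheduled" are treated as open (unresolved) states;
# "results received" and "test declined" close the cycle.
OPEN_ACTIONS = {"test ordered", "test scheduled"}


@dataclass
class Action:  # hypothetical record shape
    when: date
    kind: str  # "test ordered", "test scheduled", "results received", "test declined"


def most_recent_action(history: List[Action]) -> Optional[Action]:
    """Return the latest action on record, or None if there is no history."""
    return max(history, key=lambda a: a.when, default=None)


def looks_stalled(history: List[Action], today: date, max_open_days: int = 90) -> bool:
    """Flag a test that was ordered or scheduled long ago with nothing since.

    The 90-day default is an arbitrary placeholder, not a clinical standard.
    """
    latest = most_recent_action(history)
    if latest is None or latest.kind not in OPEN_ACTIONS:
        return False
    return (today - latest.when).days > max_open_days
```

For example, a history whose only entry is a test ordered five months ago would be flagged, whereas the same history followed by a results-received entry would not.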

Dashboard

The dashboard displays information supporting the patient’s risk stratification, including family history, personal history, and hereditary conditions. The dashboard also includes the specific guideline and risk factor that are driving the recommendations for this patient, increasing transparency regarding how the recommendations for this patient were generated. This transparency allows the physician to understand the limitations of the recommendation-generating algorithm. For example, if the patient reports that a family member was recently diagnosed with colon cancer, the provider can quickly see whether the algorithm has taken this recent information into account. For more complex cases in which the provider needs additional information about other factors that may inform the content of the CRC testing recommendations or discussions, the dashboard provides information about relevant comorbidities and relevant medications. Finally, the dashboard includes a link to the patient educational brochure. In the future, the dashboard could include links to other patient-centered materials.

Table 2 shows how our characterization of the cognitive work deepened from broad macrocognitive activities to specific cognitive support requirements to individual design features. This table is intended to illustrate the value of decision-centered design in keeping the macrocognitive activities in the foreground throughout the design and development process, increasing the likelihood that the resulting decision support tool will in fact support end users in managing cognitive complexity critical to their work. It is important to note that this table represents a somewhat sanitized and simplified version of linkages, as individual design features may support more than one cognitive support requirement or macrocognitive activity. The timeline, for example, might aid problem detection or even be used in a conversation between patient and provider to support collaborative decision making.

TABLE 2. Linking Macrocognitive Activities to Cognitive Support Requirements and Design Features

| Macrocognitive Activity | Cognitive Support Requirement | Design Feature |
|---|---|---|
| Sensemaking | Determine whether the patient is in screening or surveillance mode | At-a-glance panel |
| Sensemaking | Obtain a big picture perspective of the patient’s testing history | Timeline |
| Problem detection | Know where the patient is in the screening cycle | Most recent actions |
| Problem detection | Consider conditions or medications that have implications for CRC testing | Dashboard: Relevant comorbidities and medication |
| Problem detection | Assess and monitor a patient’s individual risk level for CRC | Dashboard: Personal risk factors and Guidelines |
| Collaborative decision making | Educate and inform patients | Dashboard: Educational materials |

Note. CRC = colorectal cancer.

BETA TEST

We conducted a two-phase beta test to assess the effectiveness of the Screening & Surveillance App. For an in-depth description of methods and findings from the beta evaluation, see Militello et al. (2015). To obtain feedback from participants representing a broad range of health care settings, we conducted an online evaluation that included 24 primary care providers in public and private health systems. Each participant reviewed a 5-min video demonstrating key features of the Screening & Surveillance App and then worked through two patient scenarios in which they were asked to review the data presented as if they were preparing to discuss CRC screening with the test patients.

In keeping with a decision-centered design approach, we tested the Screening & Surveillance App in the context of scenarios designed to highlight cognitive challenges. The intent of this strategy is to assess how well the design supports macrocognitive activities. Patient scenarios were designed to include information that is key to story building and problem detection but is often difficult to obtain. For example, one scenario included a patient in a high-risk category with a complicated screening history, including frequent colonoscopy due to Crohn’s disease. A second scenario included a patient with average risk for CRC who was overdue for screening according to the national guidelines but also had decreased lung function that might increase risk associated with the anesthesia generally used during colonoscopy.

After reviewing the patient data, participants answered 10 CRC-related questions about the patient. At the end of the session, participants completed the Subjective Workload Dominance (SWORD) technique (Vidulich, 1989; Vidulich & Tsang, 1987; Vidulich, Ward, & Schueren, 1991) and the Health Information Technology Usability Evaluation Scale (Health ITUES; Yen, Sousa, & Bakken, 2014). Participants were able to accurately answer questions about test patients, rated the Screening & Surveillance App as imposing less workload than their usual EHR, and found the app usable and useful.

The next step was to collect comparative performance data for the Screening & Surveillance App. This second study was conducted in person (rather than online). We developed a limited facsimile of CPRS to allow for an A-B comparison. Participants were 10 primary care providers recruited from a single Veterans Affairs medical center. Using a similar design to the online study, participants viewed the demonstration video; worked through the patient scenarios, including responding to 10 questions about each patient; and responded to mental effort and usability surveys. The key difference in this study was that participants worked through two scenarios using the Screening & Surveillance App and two scenarios using CPRS alone. We collected performance data, such as response accuracy to questions about each patient, time required to complete each scenario, screens accessed, and mouse clicks required.

Participants performed significantly better on three of four performance measures using the Screening & Surveillance App. Specifically, their responses to questions about patients were more accurate, they completed the scenarios more quickly, and they accessed fewer screens to find the data they needed to discuss CRC screening with their patients. There was no significant difference in the number of mouse clicks when using the Screening & Surveillance App as compared to CPRS alone. Ratings on the Health ITUES (Yen et al., 2014) indicate that they found the Screening & Surveillance App usable. Ratings on the Rating Scale of Mental Effort (Zijlstra & Van Doorn, 1958) suggest that participants found the Screening & Surveillance App required less mental effort than the EHR they use every day.

We conducted a third study to assess the effectiveness of the patient-centered educational brochure. One hundred forty-seven people ranging in age from 50 to 95 years (M = 72.21 years) participated in the evaluation. Using a between-subjects pre-/posttest design, one half of the participants reviewed the one-page brochure, and the other half reviewed a two-page CRC fact sheet published by the Centers for Disease Control and Prevention (http://www.cdc.gov/cancer/colorectal/pdf/basic_fs_eng_color.pdf). Findings indicated that participants were significantly more knowledgeable about CRC and screening options, and more open to screening, after reviewing the CRC materials. Furthermore, there was no significant difference between scores for those who reviewed the one-page brochure and those who reviewed the two-page fact sheet, suggesting that the single-page format was equally effective. For an in-depth description of the study methods and findings, see Militello, Borders, et al. (2014).

DISCUSSION AND CONCLUSION

Decision-centered design provides an important cognitive engineering framework for creating decision support that goes beyond rule-based reminders and alerts. The focus on understanding the barriers and challenges to effective sensemaking, problem detection, and collaborative decision making within a specific clinical context (outpatient primary care) led to a broader approach to decision support. Previous approaches to CRC decision support design focused exclusively on priming providers to recommend CRC testing at evidence-based intervals and resulted in somewhat brittle algorithm-based clinical reminders. An understanding of the work flow and common variations (Militello, Arbuckle, et al., 2014), as well as cognitive support requirements, provided the foundation needed to develop an integrated visualization that supports primary care providers in tracking and managing CRC screening for their patients. We anticipate that a more traditional user-centered design approach may have resulted in an improved user interface and more accurate clinical reminders but may not have expanded the decision support to include sensemaking, problem detection, and collaborative decision making.

In the four benchmark institutions observed during the initial ethnographic observation phase of this research, none of the leading health information technology systems included a time-based display, visibility into the screening process after the test was ordered, or patient-centered educational materials integrated into the clinical reminder or decision support applications of the EHR. Yet, all were designed by informaticists working closely with primary care providers to obtain end user feedback, consistent with user-centered design practices. The iterative decision-centered design process, moving from exploratory ethnographic observation to in-depth CTA interviews to informal user feedback about specific design components, allowed for an efficient design and development process without significant false starts or redesigns.

This project represents an important instantiation of a modular application to enhance decision support. Many have highlighted the potential value of this approach for overcoming challenges associated with developing effective decision support within an existing EHR (Kawamoto, 2007; Sim et al., 2001). Several recent and ongoing efforts focus on developing a technical infrastructure that will facilitate the use of modular applications to enhance existing EHRs. For example, as part of the Strategic Health IT Advanced Research Projects (SHARP) program, researchers at Harvard are focused on developing an architecture that allows for easy implementation of applications across health records (Mandl et al., 2012). Along with the SHARP program, there are others, such as the Clinical Decision Support Consortium (Middleton, 2009) and the Morningside Initiative (Greenes et al., 2010), that are also working toward platforms that will support integration of third-party applications into EHRs. Greenes and colleagues (2010) are also developing an infrastructure that will allow testing of multiple third-party plug-ins for decision support. OpenCDS is an open-source collaboration that leverages HL7/Object Management Group Decision Support Service standards and HL7 virtual medical record standards to enable clinical decision support tools that are application independent and can therefore be widely adapted (Kawamoto, 2011). In spite of these many efforts to support modular application development, few examples exist in clinical practice today.

Although some have called for better integration of cognitive engineering with more quantitative approaches, such as lean engineering (Baxter & Sommerville, 2011), we did not take this approach. Integrating more quantitative methods may have provided additional insight into quantifiable error rates (e.g., CRC tests ordered in error or outside of intervals recommended by national guidelines) and more precise time data (e.g., how much time during the patient encounter is devoted to discussion of CRC testing). Another limitation is the exclusive focus on a patient-centered educational brochure. There may be additional value in creating a provider-centered version of the brochure to aid in communication from the provider’s perspective.

Findings from the beta evaluation indicate that the types of decision support available in the Screening & Surveillance App represent a significant improvement over the currently available decision support in CPRS, one of the leading EHRs. Primary care providers were able to more quickly find information about test patients’ CRC testing history and risk factors and more accurately answer questions about test patients using the Screening & Surveillance App than when using CPRS, the EHR they use routinely. There is no doubt that if given the opportunity to implement this modular application in a clinical setting, we will encounter challenges that did not arise in a beta test using test patients in a usability laboratory. Although we have tried to account for common types of variability and design a capability that will integrate into work flow across a broad range of health care systems, it is likely that further refinements will be required if this app is widely adopted. Additional testing will be required at each step, particularly considering the rapidly changing health care industry. Despite this, we anticipate that the focus on cognitive support requirements has resulted in a design that will continue to be relevant in spite of changes in health care technologies, staffing, guidelines, and other evolving variables. Furthermore, the modular application format will allow for relatively rapid and inexpensive updates and refinements.

Acknowledgments

This research was funded by the Small Business Innovation Research program, through a contract with the Centers for Disease Control and Prevention (Contract No. 200-2012-52902), and also by the Agency for Healthcare Research and Quality (Contract HSA2902006000131). The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention, the Agency for Healthcare Research and Quality, the Department of Veterans Affairs, or the U.S. government.

Biographies

Laura G. Militello, MS, is cofounder and senior scientist at Applied Decision Science, LLC. She has been studying decision making in complex settings for over 20 years. Her research interests include the impact of electronic health records on clinical decision making and strategies for supporting expertise via technology design and training.

Jason J. Saleem, PhD, is an assistant professor with the Department of Industrial Engineering at the University of Louisville. His research involves innovation for next-generation electronic health records and health information technology to support higher-quality care and safety. His research also focuses on provider–patient interaction with respect to exam room computing as well as the coordination of multiple computing devices in a health care setting.

Morgan R. Borders, BA, is a research assistant at Applied Decision Science, LLC. She has worked at Applied Decision Science since 2012 studying decision making in health care and military domains. Her research interests include decision making, particularly in the context of supporting individuals in adopting healthy behaviors.

Christen E. Sushereba, BA, is currently a graduate student studying human factors psychology with an emphasis on interface design at Wright State University in Dayton, Ohio. She worked as a research associate at Applied Decision Science for 4 years, where she applied decision-centered design to electronic health records, medical devices, and military systems.

Donald Haverkamp, MPH, is an epidemiologist with the Centers for Disease Control and Prevention. He is currently a field assignee in New Mexico, collaborating with Indian Health Service to improve cancer screening among American Indians and Alaska Native people.

Steven P. Wolf, MBA, is cofounder and CEO at Applied Decision Science, LLC. He has studied decision making in complex environments for more than 10 years. His primary focus is on developing improved interface and technology solutions to support improved decision making and the development of expertise.

Bradley N. Doebbeling, MD, MSc, FACP, FNAP, is a professor and research chair in the School for the Science of Healthcare Delivery at the College of Health Solutions, Arizona State University. He is a physician researcher in informatics, health care systems engineering, and implementation science and a thought leader in developing strategies using informatics and engagement for health system transformation.

Footnotes

Author(s) Note: The author(s) of this article are U.S. government employees and created the article within the scope of their employment. As a work of the U.S. federal government, the content of the article is in the public domain.

Contributor Information

Laura G. Militello, Applied Decision Science, Dayton, Ohio

Jason J. Saleem, University of Louisville, Louisville, Kentucky

Morgan R. Borders, Applied Decision Science, Dayton, Ohio

Christen E. Sushereba, Applied Decision Science, Dayton, Ohio

Donald Haverkamp, Centers for Disease Control and Prevention, Albuquerque, New Mexico

Steven P. Wolf, Applied Decision Science, Cincinnati, Ohio

Bradley N. Doebbeling, Arizona State University, Phoenix

References

- Baxter G, Sommerville I. Socio-technical systems: From design methods to systems engineering. Interacting With Computers. 2011;23:4–17.

- Beydoun H, Beydoun M. Predictors of colorectal cancer screening behaviors among average-risk older adults in the United States. Cancer Causes & Control. 2008;19:339–359. doi: 10.1007/s10552-007-9100-y.

- Bright TJ, Wong A, Dhurjati R, Bristow E, Bastian L, Coeytaux RR, Lobach D. Effect of clinical decision-support systems: A systematic review. Annals of Internal Medicine. 2012;157:29–43. doi: 10.7326/0003-4819-157-1-201207030-00450.

- Centers for Disease Control and Prevention. Vital signs: Colorectal cancer screening test use. United States, 2012. Morbidity and Mortality Weekly Report. 2013;62:881–888.

- Crandall B, Klein G, Hoffman RR. Working minds: A practitioner’s guide to cognitive task analysis. Cambridge, MA: MIT Press; 2006.

- Frazier AL, Colditz GA, Fuchs CS, Kuntz KM. Cost-effectiveness of screening for colorectal cancer in the general population. JAMA. 2000;284:1954–1961. doi: 10.1001/jama.284.15.1954.

- Greenes R. Definition, scope, and challenges. In: Greenes R, editor. Clinical decision support. Burlington, MA: Elsevier; 2007. pp. 4–31.

- Greenes R, Bloomrosen M, Brown-Connolly NE, Curtis C, Detmer D, Enberg R, Shah H. The Morningside Initiative: Collaborative development of a knowledge repository to accelerate adoption of clinical decision support. Open Medical Informatics Journal. 2010;4:278–290. doi: 10.2174/1874431101004010278.

- Harrison MI, Koppel R, Bar-Lev S. Unintended consequences of information technologies in health care: An interactive sociotechnical analysis. Journal of the American Medical Informatics Association. 2007;14:542–549. doi: 10.1197/jamia.M2384.

- Hoffman R, Militello LG. Perspectives on cognitive task analysis: Historical origins and modern communities of practice. New York, NY: Taylor and Francis; 2008.

- Kaempf GL, Klein G, Thordsen ML, Wolf S. Decision making in complex naval command-and-control environments. Human Factors. 1996;38:220–231.

- Kawamoto K. Integration of knowledge resources into applications to enable clinical decision support: Architectural considerations. In: Greenes R, editor. Clinical decision support: The road ahead. Burlington, MA: Elsevier; 2007. pp. 503–539.

- Kawamoto K. OpenCDS: An open-source, standards-based, service-oriented framework for scalable CDS. Paper presented at the SOA in Healthcare 2011 Conference; Herndon, VA; July 2011. Retrieved from http://opencds.org/

- Kilsdonk E, Peute LW, Knijnenburg SL, Jaspers MW. Factors known to influence acceptance of clinical decision support systems. Studies in Health Technology and Informatics. 2011;169:150–154.

- Klein G, Moon BM, Hoffman RR. Making sense of sensemaking 1: Alternative perspectives. IEEE Intelligent Systems. 2006;21(4):70–73.

- Klein G, Pliske R, Crandall B, Woods DD. Problem detection. Cognition, Technology & Work. 2005;7:14–28.

- Klein G, Ross KG, Moon BM, Klein DE, Hoffman RR, Hollnagel E. Macrocognition. IEEE Intelligent Systems. 2003;18(3):81–85.

- Levin B, Lieberman DA, McFarland B, Smith RA, Brooks D, Andrews KS, Winawer SJ. Screening and surveillance for the early detection of colorectal cancer and adenomatous polyps, 2008: A joint guideline from the American Cancer Society, the US Multi-Society Task Force on Colorectal Cancer, and the American College of Radiology. CA: A Cancer Journal for Clinicians. 2008;58:130–160. doi: 10.3322/CA.2007.0018.

- Lopez CE, Militello LG. Colorectal cancer screening decision support: Final report. 2012. Unpublished technical report (Contract No. 200-2011-M-41884).

- Mandl KD, Mandel JC, Murphy SN, Bernstam EV, Ramoni RL, Kreda DA, Kohane IS. The SMART platform: Early experience enabling substitutable applications for electronic health records. Journal of the American Medical Informatics Association. 2012. doi: 10.1136/amiajnl-2011-000622.

- Middleton B. The Clinical decision support consortium. In: Adlassnig K-P, Blobel B, Mantas J, editors. Medical informatics in a united and healthy Europe. Amsterdam: IOS Press; 2009.

- Militello LG, Arbuckle NB, Saleem JJ, Patterson ES, Flanagan ME, Haggstrom DA, Doebbeling BN. Sources of variation in primary care clinical workflow: Implications for the design of cognitive support. Health Informatics Journal. 2014;20:35–49. doi: 10.1177/1460458213476968.

- Militello LG, Borders MR, Arbuckle NB, Flanagan ME, Hall NP, Saleem JJ, Doebbeling BN. Proceedings of the Human Factors and Ergonomics Society 58th Annual Meeting. Santa Monica, CA: Human Factors and Ergonomics Society; 2014. Persuasive health educational materials for colorectal cancer screening; pp. 609–613.

- Militello LG, Diiulio JB, Borders MR, Sushereba CE, Saleem JJ, Haverkamp D, Imperiale TF. Evaluating a modular decision support application for colorectal cancer screening. 2015. doi: 10.4338/ACI-2016-09-RA-0152.

- Militello LG, Hutton RJB. Applied cognitive task analysis (ACTA): A practitioner’s toolkit for understanding cognitive task demands. Ergonomics Special Issue: Task Analysis. 1998;41:1618–1641. doi: 10.1080/001401398186108.

- Militello LG, Klein G. Decision-centered design. In: Lee JD, Kirlik A, editors. The Oxford handbook of cognitive engineering. Oxford, UK: Oxford University Press; 2013. pp. 261–271.

- Miller A, Militello LG. The role of cognitive engineering in improving clinical decision support. In: Bisantz AM, Burns CM, Fairbanks RJ, editors. Cognitive engineering applications in health care. New York, NY: CRC Press; 2015. pp. 7–26.

- Oluoch T, Santas X, Kware D, Were M, Biondich P, Bailey C, de Keizer N. The effect of electronic medical record-based clinical decision support on HIV care in resource constrained settings: A systematic review. International Journal of Medical Informatics. 2012;81:e83–92. doi: 10.1016/j.ijmedinf.2012.07.010.

- Patterson SM, Hughes C, Kerse N, Cardwell CR, Bradley MC. Interventions to improve the appropriate use of polypharmacy for older people. Cochrane Database of Systematic Reviews. 2012;5:CD008165. doi: 10.1002/14651858.CD008165.pub2.

- Sahota N, Lloyd R, Ramakrishna A, Mackay JA, Prorok JC, Weise-Kelly L, … Haynes RB. Computerized clinical decision support systems for acute care management: A decision-maker-researcher partnership systematic review of effects on process of care and patient outcomes. Implementation Science. 2011;6:91–98. doi: 10.1186/1748-5908-6-91.

- Saleem JJ, Haggstrom DA, Militello LG, Flanagan M, Kiess CL, Arbuckle N, Doebbeling BN. Redesign of a computerized clinical reminder for colorectal cancer screening: A human-computer interaction evaluation. BMC Medical Informatics and Decision Making. 2011;11:74. doi: 10.1186/1472-6947-11-74.

- Saleem JJ, Militello LG, Arbuckle NB, Flanagan M, Haggstrom D, Patterson E, Doebbeling B. Proceedings of the American Medical Informatics Association Annual Symposium. Washington, DC: American Medical Informatics Association; 2009. Clinical decision support at three benchmark institutions for health IT: Provider perceptions of colorectal cancer screening; pp. 558–562.

- Saleem JJ, Patterson ES, Militello L, Render ML, Orshansky G, Asch SA. Exploring barriers and facilitators to the use of computerized clinical reminders. Journal of the American Medical Informatics Association. 2005;12:438–447. doi: 10.1197/jamia.M1777.

- Scott T, Rundall TG, Vogt TM, Hsu J. Implementing an electronic medical record system. Oxford, UK: Radcliffe; 2007.

- Sidebottom AC, Collins B, Winden TJ, Knutson A, Britt HR. Reactions of nurses to the use of electronic health record alert features in an inpatient setting. Computers Informatics Nursing. 2012;30:218–226. doi: 10.1097/NCN.0b013e3182343e8f.

- Sim I, Gorman P, Greenes RA, Haynes RB, Kaplan B, Lehmann H, Tang PC. Clinical decision support systems for the practice of evidence-based medicine. Journal of the American Medical Informatics Association. 2001;8:527–534. doi: 10.1136/jamia.2001.0080527.

- Streiff MB, Carolan HT, Hobson DB, Kraus PS, Holzmueller CG, Demski R, Haut ER. Lessons from the Johns Hopkins multi-disciplinary venous thromboembolism (VTE) prevention collaborative. BMJ. 2012;344:e3935. doi: 10.1136/bmj.e3935.

- Tawfik H, Anya O, Nagar AK. Understanding clinical work practices for cross-boundary decision support in e-health. IEEE Transactions on Information Technology in Biomedicine. 2012;16:530–554. doi: 10.1109/TITB.2012.2187673.

- U.S. Preventive Services Task Force. Screening for colorectal cancer: U.S. Preventive Services Task Force recommendation statement. Annals of Internal Medicine. 2008;149:627–637. doi: 10.7326/0003-4819-149-9-200811040-00243.

- Vernon S. Participation in colorectal cancer screening: A review. Journal of the National Cancer Institute. 1997;89:1406–1422. doi: 10.1093/jnci/89.19.1406.

- Vidulich MA. Proceedings of the Human Factors Society 33rd Annual Meeting. Santa Monica, CA: Human Factors Society; 1989. The use of judgment matrices in subjective workload assessment: The Subjective Workload Dominance (SWORD) technique; pp. 1406–1410.

- Vidulich MA, Tsang PS. Proceedings of the Human Factors Society 31st Annual Meeting. Santa Monica, CA: Human Factors Society; 1987. Absolute magnitude estimation and relative judgment approaches to subjective workload assessments; pp. 1057–1061.

- Vidulich MA, Ward GF, Schueren J. Using the Subjective Workload Dominance (SWORD) technique for projective workload assessment. Human Factors. 1991;33:677–691.

- Wan Q, Makeham M, Zwar NA, Petche S. Qualitative evaluation of a diabetes electronic decision support tool: Views of users. BMC Medical Informatics and Decision Making. 2012;12:61. doi: 10.1186/1472-6947-12-61.

- Waterson P, Robertson MM, Cooke NJ, Militello L, Roth E, Stanton NA. Defining the methodological challenges and opportunities for an effective science of sociotechnical systems and safety. Ergonomics. 2015;58:565–599. doi: 10.1080/00140139.2015.1015622.

- Wu HW, Davis PK, Bell DS. Advancing clinical decision support using lessons from outside of healthcare: An interdisciplinary systematic review. BMC Medical Informatics and Decision Making. 2012;12:90. doi: 10.1186/1472-6947-12-90.

- Yen PY, Sousa KH, Bakken S. Examining construct and predictive validity of the Health-IT Usability Evaluation Scale: Confirmatory factor analysis and structural equation modeling results. Journal of the American Medical Informatics Association. 2014;21:241–248. doi: 10.1136/amiajnl-2013-001811.

- Zauber AG, Winawer SJ, O’Brien MJ, Lansdorp-Vogelaar I, van Ballegooijen M, Hankey BF, Waye JD. Colonoscopic polypectomy and long-term prevention of colorectal-cancer deaths. New England Journal of Medicine. 2012;366:687–696. doi: 10.1056/NEJMoa1100370.

- Zijlstra F, Van Doorn L. The construction of a subjective effort scale. Delft University of Technology; Delft, Netherlands: 1958. Technical report.
