Abstract

How are spatial and object attention coordinated to achieve rapid object learning and recognition during eye movement search? How do prefrontal priming and parietal spatial mechanisms interact to determine the reaction time costs of intra-object attention shifts, inter-object attention shifts, and shifts between visible objects and covertly cued locations? What factors underlie individual differences in the timing and frequency of such attentional shifts? How do transient and sustained spatial attentional mechanisms work and interact? How can volition, mediated via the basal ganglia, influence the span of spatial attention? A neural model is developed of how spatial attention in the where cortical stream coordinates view-invariant object category learning in the what cortical stream under free viewing conditions. The model simulates psychological data about the dynamics of covert attention priming and switching requiring multifocal attention without eye movements. The model predicts how “attentional shrouds” are formed when surface representations in cortical area V4 resonate with spatial attention in posterior parietal cortex (PPC) and prefrontal cortex (PFC), while shrouds compete among themselves for dominance. Winning shrouds support invariant object category learning, and active surface-shroud resonances support conscious surface perception and recognition. Attentive competition between multiple objects and cues simulates reaction-time data from the two-object cueing paradigm. The relative strength of sustained surface-driven and fast-transient motion-driven spatial attention controls individual differences in reaction time for invalid cues. Competition between surface-driven attentional shrouds controls individual differences in detection rate of peripheral targets in useful-field-of-view tasks. The model proposes how the strength of competition can be mediated, though learning or momentary changes in volition, by the basal ganglia. A new explanation of crowding shows how the cortical magnification factor, among other variables, can cause multiple object surfaces to share a single surface-shroud resonance, thereby preventing recognition of the individual objects.

Keywords: Surface perception, Spatial attention, Transient attention, Sustained attention, Object attention, Object recognition, Parietal cortex, Prefrontal cortex, Attentional shroud, Crowding

1. Introduction

Typical environments contain many visual stimuli that are processed to varying degrees for perception, recognition, and control of action (Broadbent, 1958). Attention allocates visual processing resources to pursue these purposes while preserving reactivity to rapid changes in the environment (Kastner & Ungerleider, 2000; Posner & Petersen, 1990). If, for example, a car is coming towards us at high speed, the immediate imperative is to get out of the way. Once it has passed, we may want to determine its make and model to file a police report. However, to succeed at either task, the visual system must first learn what an object is. Under unsupervised learning experiences, nothing tells the brain that there are objects in the world, and any single view of an object is distorted by cortical magnification (Daniel & Whitteridge, 1961; Drasdo, 1977; Fischer, 1973; Polimeni, Balasubramanian, & Schwartz, 2006; Schwartz, 1977; Tootell, Silverman, Switkes, & De Valois, 1982; Van Essen, Newsome, & Maunsell, 1984). Yet the brain somehow learns what objects are. This article develops the hypothesis that information about an object may be accumulated as the eyes foveate several views of an object. Similar views learn to activate the same view category, and several view categories may learn to activate a view-invariant object category. The article proposes how at least two mechanistically distinct types of attention may modulate the learning of both view categories and view-invariant object categories.

While we can easily describe the subjective experience of attention (James, 1890), a rigorous description of the underlying units or mechanisms has proved elusive (Scholl, 2001). This may in part be due to the emphasis on describing attention as acting on the visual system rather than acting in the visual system. Early models, such as the `spotlight of attention' (Duncan, 1984; Posner, 1980; Scholl, 2001) and biased competition types of models (Grossberg, 1976, 1980b; Grossberg, Mingolla, & Ross, 1994; Itti & Koch, 2001; Treisman & Gelade, 1980; Wolfe, Cave, & Franzel, 1989), suggested that attention could enhance and suppress aspects of a visual scene, but most of these early models did not consider what attention must accomplish to lead to behavior, notably the learning and recognition of the objects that are situated in a scene. A notable exception is Adaptive Resonance Theory, or ART, which predicted how learned top-down expectations can focus attention upon critical feature patterns that can be quickly learned, without causing catastrophic forgetting, when the involved cells undergo a synchronous resonance (Carpenter & Grossberg, 1987, 1991, 1993; Grossberg, 1976, 1980b). Since that time, the modeling of Grossberg and his colleagues have distinguished at least three distinct types of “object” attention, and described different functional and computational roles for them:

-

(1)

Surface attention (Cao, Grossberg, & Markowitz, 2011; Fazl, Grossberg, & Mingolla, 2009; Grossberg, 2007, 2009): Spatial attention can fit itself to an object's surface shape to form an “attentional shroud” (Cavanagh, Labianca, & Thornton, 2001; Moore & Fulton, 2005; Tyler & Kontsevich, 1995). Such a shroud is formed and maintained through feedback interactions between the surface and spatial attention to form a surface-shroud resonance.

-

(2)

Boundary attention (Grossberg & Raizada, 2000; Raizada & Grossberg, 2001): Boundary attention can flow along and enhance an object's perceptual grouping, or boundary (Roelfsema, Lamme, & Spekreijse, 1998; Scholte, Spekreijse, & Roelfsema, 2001), even across illusory contours (Moore, Yantis, & Vaughan, 1998; Wannig, Stanisor, & Roelfsema, 2011). Both boundary and surface attention can facilitate figure-ground separation of an object (Grossberg & Swaminathan, 2004; Grossberg & Yazdanbakhsh, 2005).

-

(3)

Prototype attention (Carpenter & Grossberg, 1987, 1991, 1993; Grossberg, 1976, 1980b): Prototype attention can selectively enhance the pattern of critical features, or prototype, that is used to select a learned object category (Blaser, Pylyshyn, & Holcombe, 2000; Carpenter & Grossberg, 1987; Cavanagh et al., 2001; Duncan, 1984; Grossberg, 1976, 1980b; Kahneman, Treisman, & Gibbs, 1992; O'Craven, Downing & Kanwisher, 1999).

Early ART models focused on prototype attention and its role in the learning of recognition categories. In particular, ART predicted how prototype attention can help to dynamically stabilize the memory of learned recognition categories, notably view categories. Surface attention, represented as an “attentional shroud”, has more recently been proposed to play a critical role in regulating view-invariant object category learning. In particular, the ARTSCAN model (Cao et al., 2011; Fazl et al., 2009; Grossberg, 2007, 2009) predicted how the brain knows which view categories, whose learning is regulated by prototype attention, should be bound together through learning into a view-invariant object category, so that view categories of different objects are not erroneously linked together. The ARTSCAN model hereby proposed how spatial attention in the where cortical processing stream could regulate prototype attention within the what cortical processing stream.

The current article builds on this foundation by developing the distributed ARTSCAN, or dARTSCAN, model. ARTSCAN modeled how parietal cortex within the where cortical processing stream can regulate the learning of invariant object categories within the inferior temporal and prefrontal cortices of the what cortical processing stream. In ARTSCAN, a spatial attentional shroud focused only on one object surface at a time. Such a shroud forms when a surface-shroud resonance is sustained between cortical areas such as V4 in the what cortical stream and parietal cortex in the where cortical stream. The dARTSCAN model extends ARTSCAN capabilities in three ways.

First, dARTSCAN proposes how spatial attention may be hierarchically organized in the parietal and prefrontal cortices in the where cortical processing stream, with spatial attention in parietal cortex existing in either unifocal or multifocal states. In particular, dARTSCAN suggests how the span of spatial attention may be varied in a task-sensitive manner via learning or volitional signals that are mediated by the basal ganglia. In particular, spatial attention may be focused on one object (unifocal) to control view-invariant object category learning; spread across multiple objects (multifocal) to regulate useful-field-of view, and may vary between video game players and non-video game players; or spread across an entire visual scene, as during the computation of scenic gist (Grossberg & Huang, 2009). This enhanced analysis begins to clarify how, even when spatial attention seems to be focused on one object, the rest of the scene does totally vanish from consciousness.

Second, dARTSCAN does not rely only on the sustained spatial attention of a surface-shroud resonance. It also proposes how both sustained and transient components of spatial attention (Corbetta, Patel, & Shulman, 2008; Corbetta & Shulman, 2002; Egeth & Yantis, 1997) may interact within parietal and prefrontal cortex in the where cortical processing stream. Surface inputs to spatial attention are derived from what stream sources, such as cortical area V4, that operate relatively slowly. Transient inputs to spatial attention are derived from where stream sources, such as cortical areas MT and MST, that operate more quickly.

This analysis distinguishes two mechanistically different types of transient attentional components. ARTSCAN already predicted how a shift of spatial attention to a different object, that can occur when a surface-shroud resonance collapses, triggers a transient parietal signal that resets the currently active view-invariant object category in inferotemporal cortex (IT), and thereby enables the newly attended object to be categorized without interference from the previously attended object. In this way, a shift of spatial attention in the where cortical stream can cause a correlated shift of object attention in the what cortical stream. Chiu and Yantis (2009) have described fMRI evidence in humans for such a transient reset signal. This transient parietal signal is a marker against which further experimental tests of model mechanisms can be based; e.g., a test of the predicted sequence of V4-parietal surface-shroud collapse (shift of spatial attention), transient parietal burst (reset signal), and collapse of currently active view-invariant category in cortical area IT (shift of categorization rules).

The transient parietal reset signal that coordinates a shift of spatial and object attention across the where and what cortical processing streams, respectively, is mechanistically different from transient attention shifts that are directly due to where stream inputs via MT and MST, which are predicted below to play an important role in quickly updating prefrontal priming mechanisms and explaining two-object cueing data of Brown and Denney (2007).

Third, dARTSCAN models how prefrontal cortex and parietal cortex may cooperate to control efficient object priming, learning, and search.

This dARTSCAN analysis provides a neurobiological explanation of how attention may engage, move, and disengage, and how inhibition of return (IOR) may occur, as objects are freely explored with eye movements (Posner, 1980; Posner, Cohen, & Rafal, 1982; Posner & Petersen, 1990).

These three innovations are described in greater detail in Section 3 and beyond. Given these enhanced capabilities, the dARTSCAN model is able to explain, quantitatively simulate, and predict data from three experimental paradigms: two-object priming, useful-field-of-view and crowding. The two object priming task, first used by Egly, Driver, and Rafal (1994), investigates object-based attention by measuring differences in reaction time (RT) between a validly cued target, an invalidly-cued target that requires a shift of attention within the cued object, and an invalidly-cued target which requires a shift of attention between two objects. Two adaptations of the original task are examined, an extension of the original that includes location cues and targets (Brown & Denney, 2007) and a version of the task that examines individual differences between subjects (Roggeveen, Pilz, Bennett, & Sekuler, 2009). The useful-field-of-view (UFOV) task, first used by Sekuler and Ball (1986) measures the ability of a subject to detect the location of an oddball among many distracters over a wide field of view in a display, which is masked after a brief exposure. Crowding is a phenomenon in which a letter which is clearly visible when peripherally presented alone, is unrecognizable when surrounded by two nearby flanking letters (Bouma, 1970, 1973; Green & Bavelier, 2007; Levi, 2008). These data and concepts about the coordination of spatial and object attention are more fully explained in the subsequent sections.

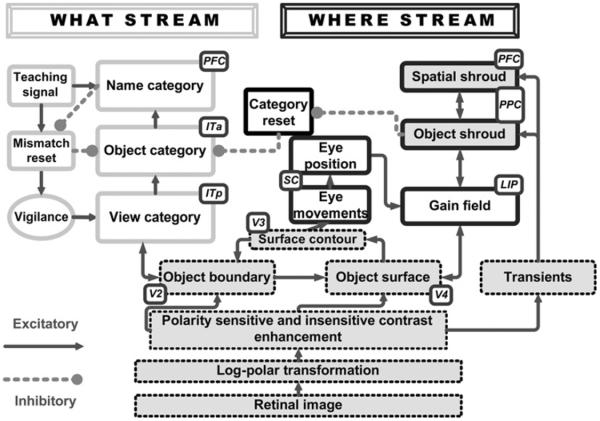

2. Spatial attention in the regulation of invariant object category learning

How does the brain learn to recognize an object from multiple viewpoints while scanning a scene with eye movements? How does the brain avoid the problem of erroneously classifying parts of different objects together? How are attention and eye movements coordinated to facilitate object learning? The dARTSCAN model builds upon the ARTSCAN model (Fig. 1; Cao et al., 2011; Fazl et al., 2009; Grossberg, 2007, 2009), which showed how the brain can learn view-invariant object representations under free viewing conditions. The ARTSCAN model proposes how an object's pre-attentively formed surface representation in cortical area V4 generates a form-fitting distribution of spatial attention, or “attentional shroud” (Tyler & Kontsevich, 1995), in the parietal cortex of the where cortical stream. All surface representations dynamically compete for spatial attention to form a shroud. The winning shroud remains active due to a surface-shroud resonance that is supported by positive feedback between a surface and its shroud, and that persists during active scanning of the object with eye movements.

Fig. 1.

Circuit diagram of the ARTSCAN model. A FACADE model (Grossberg, 1994, 1997; Grossberg & Todorović, 1988) simplified front end is in dashed boxes. What cortical stream elements are in gray outlined boxes, while where cortical stream elements are in black outlined boxes.

A full understanding of the structure of surface-shroud resonances will require an analysis of how an object's distributed, multiple-scale, 3D boundary and surface representations in prestriate cortical areas such as V4 (e.g., Fang & Grossberg, 2009; Grossberg, Kuhlmann, & Mingolla, 2007; Grossberg, Markowitz, & Cao, 2011; Grossberg & Yazdanbakhsh, 2005) activate parietal cortex in such a way that different aspects of the boundary and surface representations can be selectively attended. The simple visual stimuli used in the psychophysical experiments that are explained in this article can be simulated with correspondingly simple surface-shroud resonances. Once such a shroud is activated, it regulates eye movements and category learning about the attended object in the following way.

The first view-specific category to be learned for the attended object also activates a cell population at a higher processing stage. This cell population will become a view-invariant object category. Both types of categories are assumed to form in the inferotemporal (IT) cortex of the what cortical processing stream. For definiteness, suppose that view categories get activated in posterior IT (ITp) and view-invariant categories get activated in anterior IT (ITa) (Baker, Behrmann, & Olson, 2002; Booth & Rolls, 1998; Logothetis, Pauls, & Poggio, 1995).

As the eyes explore different views of the object, previously active view-specific categories are reset to enable new view-specific categories to be learned. What prevents the emerging view-invariant object category from also being reset? The shroud maintains the activity of the emerging view-invariant category representation by inhibiting a reset mechanism, also in parietal cortex, that would otherwise inhibit the view-invariant category. As a result, all the view-specific categories can be linked through associative learning to the emerging view-invariant object category. Indeed, these associative linkages create the view invariance property.

These mechanisms can bind together different views that are derived from eye movements across a fixed object, as well as different views that are exposed when an object moves with respect to a fixed observer. Indeed, in both cases, the surface-shroud resonance corresponding to the object does not collapse. Further development of ARTSCAN, to the pARTSCAN model (Cao et al., 2011) shows, in addition, how attentional shrouds can be used to learn view-, position-, and size-invariant object categories, and how views from different objects can, indeed, be merged during learning, and used to explain challenging neurophysiological data recorded in monkey inferotemporal cortex, if the shroud reset mechanism is prevented from acting (e.g., Li & DiCarlo, 2008, 2010). Although the objects that are learned in psychophysical displays are often two-dimensional images that are viewed at a fixed position in depth, ART models are capable of learning recognition categories of very complex objects–e.g., see the list of industrial applications at http://techlab.bu.edu/resources/articles/C5–so the current analysis also generalizes to three-dimensional objects.

Shroud collapse disinhibits the reset signal, which in turn inhibits the active view-invariant category. Then a new shroud, corresponding to a different object, forms in the where cortical stream as new view-specific and view-invariant categories of the new object are learned in the what cortical stream. The ARTSCAN model thereby begins to mechanistically clarify basic properties of spatial attention shifts (engage, move, disengage) and IOR.

The ARTSCAN model has been used to explain and predict a variety of data. A key ARTSCAN prediction is that a spatial attention shift (shroud collapse) causes a transient reset burst in parietal cortex that, in turn, causes a shift in categorization rules (new object category activation). This prediction has been supported by experiments using rapid event-related functional magnetic resonance imaging (fMRI; Chiu & Yantis, 2009). Positive feedback from a shroud to its surface is predicted to increase the contrast gain of the attended surface, as has been reported in both psychophysical experiments (Carrasco, Penpeci-Talgar, & Eckstein, 2000) and neurophysiological recordings from cortical areas V4 (Reynolds, Chelazzi, & Desimone, 1999; Reynolds & Desimone, 2003; Reynolds, Pasternak, & Desimone, 2000). In addition, the surface-shroud resonance strengthens feedback signals between the attended surface and its generative boundaries, thereby facilitating figure-ground separation of distinct objects in a scene (Grossberg, 1994; Grossberg & Swaminathan, 2004; Grossberg & Yazdanbakhsh, 2005; Kelly & Grossberg, 2000).

In particular, surface contour signals from a surface back to its generative boundaries strengthen consistent boundaries, inhibit irrelevant boundaries, and trigger figure-ground separation. When the surface contrast is enhanced by top-down spatial attention as part of a surface-shroud resonance, its surface contour signals (which are contrast-sensitive) become stronger, and thus its consistent boundaries become stronger as well, thereby facilitating figure-ground separation. This feedback interaction between surfaces and boundaries via surface contour signals is predicted to occur from V2 thin stripes to V2 pale stripes.

Corollary discharges of these surface contour signals are predicted to be mediated via cortical area V3A (Caplovitz & Tse, 2007; Nakamura & Colby, 2000) and to generate saccadic commands that are restricted to the attended surface (Theeuwes, Mathot, & Kingstone, 2010) until the shroud collapses and spatial attention shifts to enshroud another object.

Why is it plausible, mechanistically speaking, for surface contour signals to be a source of eye movement target locations, and for these commands to be chosen in cortical area V3A and beyond? It is not possible to generate eye movements that are restricted to a single object until that object is separated from other objects in a scene by figure-ground separation. If figure-ground separation begins in cortical area V2, then these eye movement commands need to be generated no earlier than cortical area V2. Surface contour signals are plausible candidates from which to derive eye movement target commands because they are stronger at contour discontinuities and other distinctive contour features that are typical end points of saccadic movements. ARTSCAN proposed how surface contour signals are contrast-enhanced at a subsequent processing stage to select the largest signal as the next saccadic eye movement command. Cortical area V3A is known to be a region where vision and motor properties are both represented, indeed that “neurons within V3A…process continuously moving contour curvature as a trackable feature…not to solve the “ventral problem” of determining object shape but in order to solve the “dorsal problem” of what is going where” (Caplovitz & Tse, 2007, p. 1179).

Last but not least, ARTSCAN quantitatively simulates key data about reaction time costs for attention shifts between objects relative to those within an object (Brown & Denney, 2007; Egly et al., 1994). However, ARTSCAN cannot simulate all cases in the Brown and Denney (2007) experiments. Nor was ARTSCAN used to simulate the UFOV task or crowding.

3. Sustained and transient attention, spatial priming, and useful-field-of-view

The dARTSCAN model incorporates three key additional processes to explain a much wider range of data about spatial attention:

-

(1)

The breadth of spatial attention (“multifocal attention”) can vary in a task-selective and learning-responsive way (Alvarez, Horowitz, Arsenio, Dimase, & Wolfe, 2005; Cavanagh & Alvarez, 2005; Cave, Bush, & Taylor, 2010; Franconeri, Alvarez, & Enns, 2007; Green & Bavelier, 2003; Jans, Peters, & De Weerd, 2010; McMains & Somers, 2004, 2005; Muller, Malinowski, Gruber, & Hillyard, 2003; Pylyshyn & Storm, 1988; Pylyshyn et al., 1994; Scholl, Pylyshyn, & Feldman, 2001; Tomasi, Ernst, Caparelli, & Chang, 2004; Yantis & Serences, 2003). The current model proposes how spatial attention can be distributed across multiple objects simultaneously, while still being compatible with the strictly unifocal attention in ARTSCAN.

Below we illustrate how flexibly altering the maximal distribution of spatial attention can be volitionally regulated by the basal ganglia using an inhibitory mechanism that is predicted to be homologous to the one that regulates visual imagery (Grossberg, 2000b) and the storage of sequences of items in working memory (Grossberg & Pearson, 2008).

-

(2)

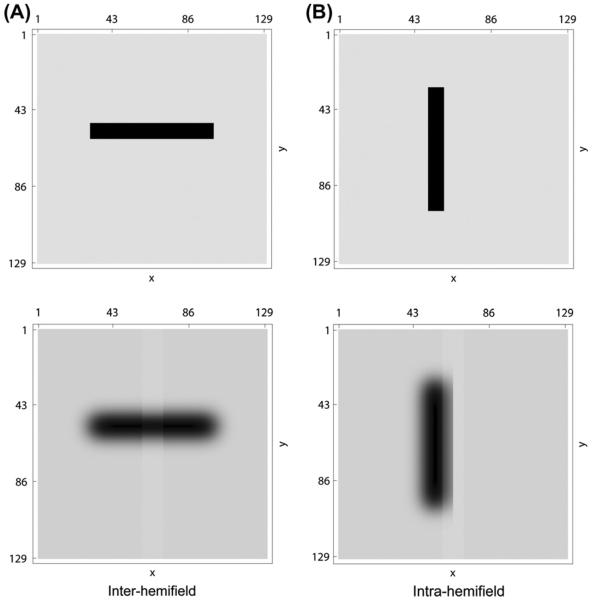

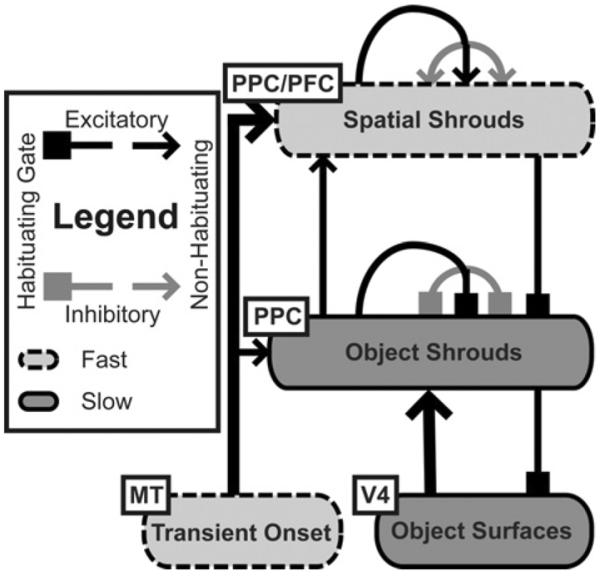

Both sustained surface-driven spatial attention and transient motion-driven spatial attention interact to control maintenance and shifts of spatial attention (Fig. 2). A large experimental literature attempts to anatomically and functionally differentiate components of sustained and transient attention (Dosenbach, Fair, Cohen, Schlaggar, & Petersen, 2008; Dosenbach et al., 2007; Gee, Ipata, Gottlieb, Bisley, & Goldberg, 2008; Gottlieb, Kusunoki, & Goldberg, 2005; Hillyard, Vogel, & Luck, 1998; Ploran et al., 2007; Reynolds, Alborzian, & Stoner, 2003; Yantis & Jonides, 1990; Yantis et al., 2002). The ARTSCAN model only incorporates sustained, surface-driven attention necessary for view-invariant category learning. On the other hand, as noted in Section 1, ART-SCAN also posits a transient reset signal that coordinates shifts of spatial attention with shifts of categorization rules.

-

(3)

In addition to parietal cortex, prefrontal cortex plays a role in priming spatial attention (Fig. 2). Many experiments document such a role for prefrontal cortex (Boch & Goldberg, 1989; Goldman & Rakic, 1979; Kastner & Ungerleider, 2000; Ungerleider & Haxby, 1994; Zikopoulos & Barbas, 2006). The ARTSCAN model is agnostic about the role of PFC in deploying spatial attention.

The above three sets of processes, working together, enable our model to explain a much larger range of data about how attention and recognition interact, notably to better characterize how multifocal attention can help to track and recognize multiple objects in familiar scenes, and focal attention can support view-invariant object category learning for unfamiliar objects.

Fig. 2.

The recurrent, feedforward and feedback connections of object and spatial shrouds. Hemifield separation is not shown.

The large cognitive literature about multifocal attention (Cavanagh & Alvarez, 2005; Pylyshyn et al., 1994) produced concepts such as Fingers of Instantiation (FINST; Pylyshyn, 1989, 2001), Sprites (Cavanagh et al., 2001), and situated vision (Pylyshyn, 2000; Scholl, 2001) which have in common an idea that objects which are not being focally attended are nonetheless spatially represented in the attentional system (Scholl, 2001). This is necessary to allow rapid shifts of attention between objects, to track several identical objects simultaneously, and to underpin visual orientation by marking the allocentric coordinates of several objects in a scene (Mathot & Theeuwes, 2010a; Pylyshyn, 2001). Such concepts are consistent with the daily experience that scenic features outside our focal attention do not disappear.

One challenge to extending the ARTSCAN shroud architecture is how these ideas might be integrated into a system that continues to allow the learning of view-invariant object categories over several saccades, which requires that attention be object-based and unifocal. Multiple shrouds cannot exist during multi-saccade exploration because saccades from one attended object to another would fail to reset the active view-invariant object category, causing distinct objects to be falsely conflated as parts of a single object. Moreover, since multiple surfaces would be recipients of contrast gain from shroud-to-surface feedback, peripheral parts of the object being learned and other objects nearby could similarly be conflated. This suggests that there are at least two model states in which stable shrouds can form. In one, unifocal attention can be maintained on a single surface over multiple saccades, allowing the learning of view-invariant object categories. In the other, multiple shrouds can simultaneously coexist, at a lower intensity not sufficient to gate multi-saccade learning, but allowing rapid recognition of familiar objects and attention to be deployed on multiple objects, regardless of familiarity, between saccades. As noted in item (1) above, volitional control of inhibition in the attention circuit, likely mediated by the basal ganglia (Brown, Bullock, & Grossberg, 2004), allows unfamiliar objects that have weak shrouds to be foveated, followed by an increase in competition to create a single strong shroud that can support learning of a view-invariant object category.

As noted in item (3) above, another challenge, for both the ARTSCAN model, as well as other biased competition models (Itti & Koch, 2001; Lee & Maunsell, 2009; Reynolds & Heeger, 2009; Treisman & Gelade, 1980; Wolfe et al., 1989), is to understand the mechanism of attention priming. Brief transient cues orient attention to an area of a visual scene, which leads to improved behavioral performance if a task-relevant stimulus is presented at the same position within several hundred milliseconds (Desimone & Duncan, 1995; Posner & Petersen, 1990). If the experiment continues and a second task-relevant stimulus appears at the same position after the first has disappeared or is no longer task-relevant, it takes longer for that area to be attended again, due to inhibition-of-return (Grossberg, 1978a, 1978b; Koch & Ullman, 1985; Posner et al., 1982; see Itti and Koch (2001) for a review). ARTSCAN and biased competition models can account for IOR, but they do not incorporate a mechanism that can explain how a brief bottom-up input onset can prime visual attention in the absence of intervening visual stimuli for several hundred milliseconds. But such priming seems to be necessary to explain an extension by Brown and Denney (2007) of the two-object cueing task first used by Egly et al. (1994); see Fig. 3. Priming by prefrontal cortex is incorporated into the model as a natural complement to allowing multifocal attention within the model parietal cortex (Fig. 2).

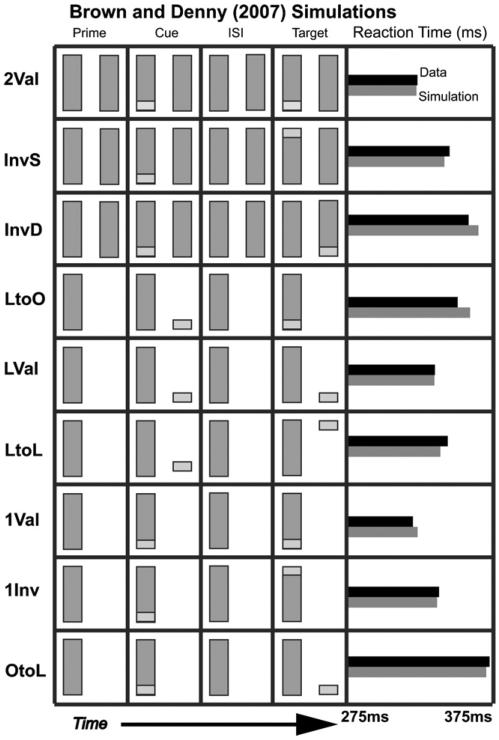

Fig. 3.

Brown and Denney (2007) data and reaction time (RT) simulations. Each experimental case is shown, with the four columns corresponding to what is seen in each display segment. Experimental RTs (black) and simulated RTs (gray) are shown at the far right. [Data reprinted with permission from Brown and Denney (2007).]

We hereby propose that a hierarchy of attentional shrouds in parietal and prefrontal cortex (Figs. 1 and 2) can smoothly switch between behavioral modes, while being sensitive to both transient event onsets and offsets, as well as to sustained shroud-mediated spatial attention, and which we will employ in order to explain key properties of cognitive models of multifocal attention, such as FINST (Pylyshyn, 1989, 2001).

The idea of a hierarchy of attention is consistent with accumulating anatomical and physiological evidence that magnocellular pathways play a role in priming object recognition in inferotemporal cortex through orbitofrontal cortex (Bar et al., 2006; Zikopoulos & Barbas, 2006). Evidence for multiple attention representations has also been found by mapping retinotopy in the visual system using fMRI, which has shown multiple, attention-sensitive maps in connecting areas of the intra-parietal sulcus (Silver, Ress, & Heeger, 2005; Swisher, Halko, Merabet, McMains, & Somers, 2007), as well as in retinotopic and head-centric representations in other areas of parietal cortex and areas of prefrontal cortex such as the frontal eye fields and dorsolateral prefrontal cortex (Saygin & Sereno, 2008).

The lower shroud level in the hierarchy, the object shroud layer, behaves similarly to the shrouds found in the ARTSCAN model (Fazl et al., 2009; PPC in Figs. 1 and 2). Neurons in this layer can resonate strongly with a single surface to gate learning in the what stream to allow the formation of view-invariant object categories. Multiple ensembles of neurons in the object shroud layer can also weakly resonate with several surfaces, allowing multifocal attention and rapid recognition of familiar scenes. Object shroud neurons can thus exist in two different regimes, which alternate reactively in response to changing visual stimuli, or can be volitionally controlled by modifying the inhibitory gain through the basal ganglia (Brown et al., 2004; Matsuzaki, Kyuhou, Matsuura-Nakao, & Gemba, 2004; Xiao, Zikopoulos, & Barbas, 2009). The first regime was studied in the original ARTSCAN model, where a single high-intensity shroud covers an object surface and gates learning during a multi-saccadic exploration of an unfamiliar object. The second regime allows multiple low-intensity object shrouds to exist simultaneously, supporting rapid recognition of a familiar scene's “gist”. Gist was modeled in the ARTSCENE model (Grossberg & Huang, 2009; Huang & Grossberg, 2010) as a large-scale texture category. Since object shroud neurons gate learning regimes lasting several seconds, they provide sustained attention and are slow to respond to changes in a scene.

In contrast to this property, reaction times in response to scenes that contain several objects are reduced if a task-relevant stimulus appears at one of the object positions within a few hundred milliseconds (Desimone & Duncan, 1995; Posner & Petersen, 1990). The attentional shrouds of the ARTSCAN model require a surface to be present to sustain a shroud-surface resonance. If that surface disappears, its corresponding shroud will collapse and another shroud will form over the next most salient object in a scene, which will start a new learning regime. This means that if a location is briefly cued, attention will shift once the cue disappears and the cued location will immediately be subject to IOR, which is inconsistent with attentional priming. Also in the ARTSCAN model, spatial attention is not preferentially sensitive to the appearance of a new object of equal contrast to the existing objects in a scene, unless the other objects in a scene had already been attended. Thus both attentional priming and fast reactions to cue changes are not adequately represented in the ARTSCAN model.

These ARTSCAN properties derive from that model's exclusive consideration of focal sustained attention and how it shifts through time. The dARTSCAN model also incorporates, and elaborates functional roles for, inputs that are sensitive to stimulus transients (Fig. 2, MT; also see Section 5.6 and Appendix A.6), consistent with models of motion perception (Berzhanskaya, Grossberg, & Mingolla, 2007) and models that combine transient and sustained contributions to spatial attention (e.g., Grossberg, 1997). Moreover, the model proposes how such transient inputs can contribute both to the formation of shrouds in the parietal cortex, and to the development of top-down priming from the prefrontal cortex.

Accordingly, in the higher level of the hierarchy, the spatial shroud level is formed by ensembles of neurons primarily driven by transient signals from the where cortical processing stream (see Sections 5.8 and Appendix A.8), notably signals due to object appearances, disappearances, and motion (PFC, Fig. 2). Unlike object shrouds, several shrouds in the spatial shroud layer can coexist at all times. This allows objects unattended in the object shroud layer to maintain spatial representations, allowing rapid (between saccades) shifts of attention, transient interruption of multiple-view learning, and attentional priming of locations at which an object recently disappeared or was occluded. Recurrent feedback allows a spatial shroud to stay active at a cued location for several hundred milliseconds, regardless of whether a surface is present at the location. Spatial shroud neurons also improve reaction times to transient or otherwise highly salient stimuli through top-down feedback onto object shroud neurons.

Top-down priming feedback enables quantitative simulation of reaction time differences in several cases presented in Brown and Denney (2007), particularly the LVal case (Fig. 3, row 5) where there is a valid location cue. As described in greater detail in Section 6.1.1, without priming, there would be no attentional representation of the cue through the interstimulus interval (ISI). In particular, as noted above, the original ARTSCAN model requires a surface to be present to sustain a shroud-surface resonance. This means that if a location is briefly cued, attention will shift once the cue disappears, and the cued location will immediately be subject to IOR. Prefrontal attentional priming overcomes this deficiency by sustaining an attentional prime throughout the ISI.

4. Individual differences and the basal ganglia: useful-field-of-view and RT

There are systematic individual differences in how attention is deployed and maintained (Green & Bavelier, 2003, 2006a, 2006b, 2007; Richards, Bennett, & Sekuler, 2006; Scalf et al., 2007; Sekuler & Ball, 1986; Tomasi et al., 2004). Green and Bavelier have, in particular, compared how video game players (VGPs) and non-video game players (NVGPs) perform in spatial attention tasks. Another line of research examined the differences in performance in the same individual under different conditions, such as before and after a training session (Green & Bavelier, 2003, 2006a, 2006b, 2007; Richards et al., 2006; Scalf et al., 2007; Tomasi et al., 2004). Finally, on psychophysics tasks such as the two object-cueing task first used by Egly et al. (1994), bootstrapping methods have been used to study between-subject differences on the same task, rather than the average population response to differing stimuli (Roggeveen et al., 2009). The dARTSCAN model proposes how these multiple modes of behavior and performance differences between individuals may arise from differences in inhibitory gain, mediated by the basal ganglia. We suggest how volitional or learning-dependent variations in the strength of inhibition that governs attentional processing may explain individual differences. As noted above, variations of this mechanism may be used in multiple brain systems to control other processes, such as visual imagery (Grossberg, 2000b) and working memory storage and span (Grossberg & Pearson, 2008).

VGPs have been found to have superior performance in several tasks involving visual attention, including flanker recognition, multiple object tracking (MOT), useful-field-of-view (UFOV), attentional blink, subitizing, and crowding (Green & Bavelier, 2003, 2006a, 2006b; Sekuler & Ball, 1986). Additionally, VPG's have better visual acuity than NVGPs (Green & Bavelier, 2007). Most of these performance improvements have also been found when a group of NVGPs trains on action video games for various periods of time typically 30–60 h; e.g., Green and Bavelier (2003). This indicates that playing action video games causes substantial changes in the basic capacity and performance of the visual attention system that is not specific to any individual or subpopulation. In some tasks, such as UFOV, similar changes have been found as the result of aging (Richards et al., 2006; Scalf et al., 2007; Sekuler & Ball, 1986).

As described above, the object shroud layer can switch between unifocal and multifocal attention through volitional control of inhibitory gain mediated by the basal ganglia. The model predicts that training through action video games increases the range of volitional control available in both shroud layers. Similarly, aging reduces the range of inhibitory control in both shroud layers. This allows VGPs (and the young) to spread their attention more broadly in the spatial shroud player, and increases the capacity of the object shroud attention layer so that the same object causes less inhibition than it would in a NVGP. We test this prediction using the UFOV task. The results are shown in Section 6.2 and Fig. 6 below. Roggeveen et al. (2009) revisited the two-object cueing task of Egly et al. (1994), who found that people respond faster when an invalid cue is presented on the same object as the target, than when the cue is presented on a different object. Roggeveen et al. (2009) reported that, while part of their subject pool showed the same effect, another part showed the opposite effect and responded faster when the invalid cue was on the other object. They also found that a valid cue improves reaction time in nearly all subjects. These data are challenging to explain in an object-based attention paradigm because, if the object is attended, how can a target on an unattended object lead to a faster response? The current model is able to produce both effects by varying the relative strength of the slower, surface-(object shroud) resonance, and the faster, transient-driven response of spatial shrouds. The model predicts that the rate with which attention spreads on a surface varies for each individual, which is attributed to different relative gains between the surface-(object shroud) resonance and the (object shroud)-(spatial shroud) resonance. For those individuals who respond faster when the invalid cue is on the other object, attention in the object shroud layer has not yet completely covered the cued object. Since inhibition in the object shroud attention layer is distance-dependent, the area of a surface immediately beyond the leading edge of the spread of attention is actively suppressed relative to the un-cued object (see Figs. 6 and 7, Section 6.1). This parametric modification leads to the model prediction that varying the cue and ISI duration will shift the proportion of subjects who exhibit same-object preference. The model also predicts that altering the visual geometry of the display or the strength of the cue will alter the width and slope of the distribution of individual differences.

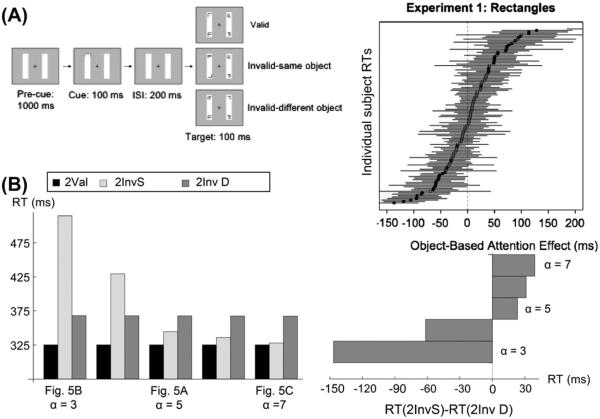

Fig. 6.

Roggeveen et al. (2009) individual difference data and modeled behavior. (A) The experimental paradigm and individual preferences for InvS, and InvD targets. (B) Simulated reaction times for two-object conditions (left plot), and reaction time differences (right plot) between InvS and InvD conditions, as the (spatial shroud)-(object shroud) resonance changes from weakest (left, α = 3, Eq. (33)) to strongest (right, α = 7, Eq. (33)) by increasing the response gain to changing inputs in the object shroud layer. This shifts preference from InvD (bottom) to InvS (top). [Data reprinted with permission from Roggeveen et al. (2009).]

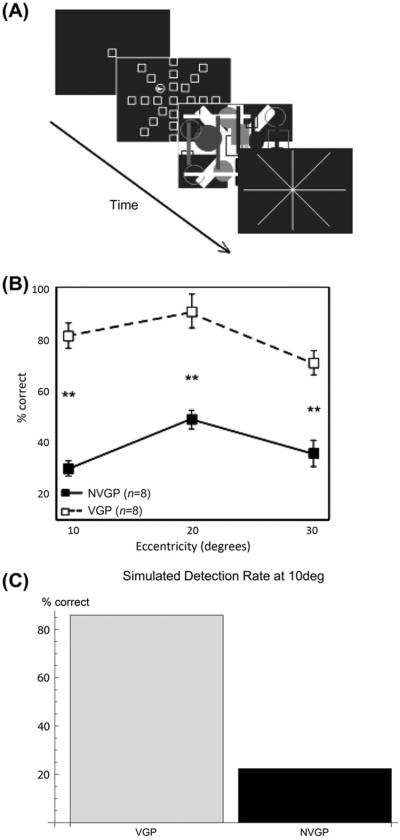

Fig. 7.

Green and Bavelier (2007) useful-field-of-view data and simulations. (A) Experimental paradigm used by Green and Bavelier (2003): After fixation, a brief (10 ms) display with an oddball is shown, followed by a mask. The task is to pick the direction of the oddball. (B) Experimental data for video game players (VPGs) and non-video game players (NVGPs) from Green and Bavelier (2003). [(A) and (B) reprinted with permission from Green and Bavelier (2007).] (C) VGPs are simulated by having 20% lower inhibitory gain in the object and spatial shroud layers (see T = .04 and TC = .032 in Eq. (36) as well as W = .04 and WC = .00016 in Eq. (42)), which facilitates detection through the mask period.

5. Model description

The dARTSCAN extension of the ARTSCAN model focuses on the where stream side of the original model (Fig. 1). Both models share similar boundary and surface processing. The current model uses a simpler approximation of cortical magnification to reduce the computational burden in simulation due to adding transient cells, prefrontal priming, and variable field-of-view. As briefly summarized above, both parietal and prefrontal shrouds are now posited: object shrouds, which are similar to the original ARTSCAN shroud representation and are primarily driven by surface signals, and spatial shrouds, which are primarily driven by transient signals (Fig. 2).

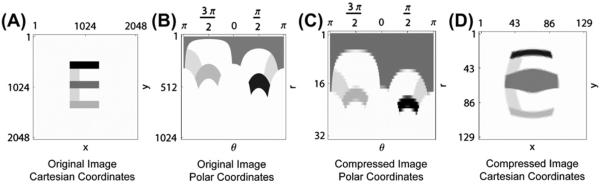

5.1. Cortical magnification

Visual representations in the early visual system are subject to cortical magnification, which has often been simulated using a log-polar approximation (Fazl et al., 2009; Polimeni et al., 2006). Working with models that include cortical magnification creates several complications. Because cortically magnified images are spatially variant, depending on the center of gaze, fixed convolutions or center-surround processing create effects that vary with eccentricity (Bonmassar & Schwartz, 1997). In addition, log-polar transformations do not fit neatly into the neighborhood relations of a matrix, which is the most convenient data structure for representing images and layers of neurons computationally.

It is possible, however, to maintain cortical magnification as a function of eccentricity while also keeping the neighborhoods of the matrix form, by approximating the central portion of the visual field using radial symmetric compression instead of a log-polar mapping. This has the advantage of simplifying computation of the model without ignoring the basic geometry reflected in the anatomy of the visual system (see Appendix A.1).

Given that model preprocessing before cortical activation is highly simplified, it is assumed that the model retina, rather than cortical area V1, already samples input images in a spatially-variant manner, using symmetric log compression to approximate cortical magnification, so that objects near the fovea have magnified representations and objects near the periphery have compressed representations. This is important in the model to bias attention towards foveated stimuli, which have correspondingly larger representations. Model retinal cell activities are normalized by receptive field size, and serve as input to the model lateral geniculate nucleus (LGN).

5.2. Hemifield independence

Model interactions also exhibit hemifield independence, which is consistent both with the anatomical separation of processing as well as behavioral observations (Alvarez & Cavanagh, 2005; Luck, Hillyard, Mangun, & Gazzaniga, 1989; Mathot, Hickey, & Theeuwes, 2010; Swisher et al., 2007; Youakim, Bender, & Baizer, 2001). Hemifield independence is implemented by using different sets of connection weights to control the strength of connections between neurons in the same hemifield, and neurons of opposite hemifields (see Appendix A.2, Eqs. (10)–(13)). Left and right hemifield representations use one set of distance-dependent connection weights between neurons that are in the same hemifield of the respective layer, or projecting layers. They use a different set of distance-dependent connection weights for neurons that are in the opposite hemifield of the same layer, or projecting layers. The connection strength between neighboring neurons is weighted near network boundaries to normalize the total input for each neuron (Grossberg & Hong, 2006; Hong & Grossberg, 2004). Thus, there are no boundary artifacts, either near the vertical meridian or the edges of the visual field.

5.3. LGN polarity-sensitive ON and OFF cells

The model LGN normalizes contrast of the input pattern using polarity-sensitive ON cells that respond to input increments and OFF cells that respond to image decrements. ON and OFF cells obey cell membrane, or shunting (Eqs. (1)–(3)), equations that receive retinal outputs within on-center off-surround networks that join other ON and OFF cells, respectively (Eqs. (14) and (15)). These single-opponent cells output to a layer of double-opponent ON and OFF cells in the what cortical processing stream (Eqs. (18) and (19)), as well as to transient cells in the where cortical processing stream (Eqs. (30)–(32)).

5.4. Boundaries

The model omits oriented simple cell receptive fields and various properties of ocularity, disparity-sensitivity, and motion processing that are found in primary sensory cortex, since no inputs used for the current model simulations require it. Instead, polarity-insensitive complex cells are directly computed at each position as a sum of rectified signals from pairs of polarity-sensitive double-opponent ON and OFF cells (Eq. (20)).

Object boundaries (Eq. (21)) are computed using bottom-up inputs from complex cells (Eq. (20)) that are amplified through a modulatory input from top-down inputs from surface contour cells (see Appendix A.4 and Eq. (23)). Surface contour signals are generated by surfaces that fill-in within closed boundaries. They select and enhance the boundaries that succeed in generating the surfaces that may enter conscious perception, thereby assuring that a consistent set of boundaries and surfaces are formed, while also, as an automatic consequence, initiating the figure-ground separation of objects from one another (Grossberg, 1994, 1997).

Surface contours are generated by contrast-sensitive networks at the boundaries of successfully filled-in surfaces; that is, surfaces which are surrounded by a connected boundary and thus do not allow their lightness or color signals to spill out into the scenic background. During 3-D vision and figure-ground separation, not all boundaries are connected (Grossberg, 1994). However, in response to the simplified input images that are simulated in this article, all object boundaries are connected and cause successful filling-in. As a result, surface contours are computed at all object boundaries, and can strengthen these boundaries via their positive feedback. Moreover, when the contrast of a surface is increased by feedback from an attentional shroud, the surface contour signals increase, so the strength of the boundaries around the attended surface increase also.

More complex boundary computations, such as those in 3D laminar visual cortical models (e.g., Cao & Grossberg, 2005; Fang & Grossberg, 2009) can be added as the model is further developed to process more complex visual stimuli, without undermining the current results.

5.5. Surfaces

Bottom-up inputs from double-opponent ON cells (Eq. (18)) trigger surface filling-in via a diffusion process (Eq. (26)) which is gated by object boundaries (Eq. (28)) that play the role of filling-in barriers (Grossberg, 1994; Grossberg & Todorović, 1988). The ON cell inputs are modulated by top-down feedback from object shrouds (Eq. (33)) that increase contrast gain during a surface-(object shroud) resonance. Such a resonance habituates through time in an activity-dependent way (Eq. (29)), thereby weakening the contrast gain caused by (object shroud)-mediated attention. Winning shrouds will thus eventually collapse, allowing new surfaces to be attended and causing IOR.

Filled-in surfaces generate surface contour output signals through contrast-sensitive shunting on-center off-surround networks (Eq. (23)). As noted above, surface contour signals provide feedback to boundary contours, which increases the strength of the closed boundary representations that induced the corresponding surfaces, while decreasing the strength of boundaries that do not form surfaces.

Although surface filling-in has often been modeled by a diffusion process since computational models of filling-in were introduced by Cohen and Grossberg (1984) and Grossberg and Todorović (1988), Grossberg and Hong (2006) have modeled key properties of filling-in using long-range horizontal connections that operate a thousand times faster than diffusion.

5.6. Transient inputs

Where stream transient cells in cortical area MT are modeled using a leaky integrator (Eq. (30)). Transient cells receive bottom-up input from double-opponent ON cells (Eq. (18)) proportional to the ratio of the contrast increment between previous and current stimuli at their position (Eq. (31)) for a brief period (Eq. (32)) after any change. Such ratio contrast sensitivity is a basic property of responses to input increments in suitably defined membrane, or shunting, equations (Grossberg, 1973, 1980a, 1980b). Any increment in contrast will trigger a transient cell response. After the period of sensitivity ends, transient activity quickly decays. OFF channel transient cells were omitted since only stimuli brighter than the background were simulated.

5.7. Object shrouds

The model where cortical stream enables one or several attentional shrouds to form in the object shroud layer, thereby supporting two different modes of behavior. The first, in which only a single shroud forms, allows an object shroud to perform the same role as in the original ARTSCAN model, gating learning when a sequence of several saccades explores a single object's surface to learn a view-invariant object category. The second, where multiple, weaker, shrouds can simultaneously coexist, supports conscious perception and concurrent recognition of several familiar object surfaces.

Object shroud neurons (Eq. (33)) receive strong bottom-up input from surface neurons (Eq.(26)) and modulatory input from transient cells to help salient onsets capture attention during sustained learning (Eq. (30); see Fig. 2). Object shroud neurons also receive top-down habituating (Eq. (38)) feedback from spatial shroud neurons (Eq. (39)), as well as recurrent on-center off-surround (Eqs. (35) and (36)) habituating (Eq. (37)) feedback from other object shroud neurons. Recurrent feedback among object shroud neurons habituates faster than spatial shroud feedback, which in turn habituates faster than feedback onto the surface layer. This combination of feedback produces several important effects. The first loop, surface-(object shroud)-surface (Fig. 2), enables a local cue on a surface that has successfully bid for object shroud attention to trigger the filling-in of attention along the entire surface (Eqs. (26)–(28)). Fully enshrouding an object which attracts attention through a local cue is slow compared to transient capture of highly salient objects, since it depends on slower surface dynamics. The second loop, recurrent on-center off-surround feedback in the object shroud layer, allows object shrouds to compete weakly in the multifocal case, to provide contrast enhancement, and strongly in the unifocal case, so that view-invariant object categories may be learned. Once a shroud has won in the unifocal case, surface-(object shroud) resonance dominates until habituation occurs. The level of competition between object shrouds depends on the inhibitory gain (Eq. (36)), which can be volitionally controlled through the basal ganglia. The third loop, (object shroud)-(spatial shroud)-(object shroud) enhances responses to salient transient signals, facilitates the spread of object shroud attention along surfaces, and helps maintain an (object shroud)-surface resonance over the whole surface, as parts of the object shroud start to habituate, by up-modulating the bottom-up surface signal. Once an object shroud that has habituated is out-competed and collapses, it is difficult for a new object shroud to form in the same position until the habituating gates recover, leading to IOR.

5.8. Spatial shrouds

When object shrouds are supporting view-invariant category learning, they must be stable on the order of seconds, to allow multiple saccades to explore an object (Fazl et al., 2009). The visual system however, is also capable of considerably faster responses, in particular to transient events (Desimone & Duncan, 1995). Spatial shrouds allow the model to respond quickly to transient stimuli when they are present, without compromising the stability required to support view-invariant object category learning in more stable environments (see Fig. 2). This basic fast-slow dynamic also underpins the model's explanation of individual difference data in the two-object cueing task, and allows the model to successfully simulate cases in the Brown and Denney (2007) data which require rapid responses to transient cues and targets, as is explained in Section 3.1.

Spatial shrouds (Eq. (39)) receive bottom-up input from transient neurons (Eq. (30)), and weaker bottom-up input from object shroud neurons (Eq. (40)). Spatial shroud neurons interact via a recurrent on-center off-surround network (Eqs. (41) and (42)) that does not habituate. As a result, multiple spatial shrouds can survive for hundreds of milliseconds in relatively stable environments unless they are out-competed by new transients. Spatial shroud cells are always sensitive to salient environmental stimuli and can mark multiple objects simultaneously, even if these objects are not being actively learned or recognized, allowing maintenance of allocentric visual orientation consistent with situated vision (Pylyshyn, 2001). The spatial shroud layer has non-habituating recurrent feedback capable of maintaining spatial shroud activity through time, so that an active spatial shroud can persistently prime object shroud formation over a surface presented at the corresponding location, unless the object shroud neurons are deeply habituated, causing IOR. There is no volitional attention from planning areas in the model, although we hypothesize that feedback to the spatial shroud layer might come from planning and executive control areas.

5.9. Computing model behavioral data

The data sets that are simulated use reaction time and detection thresholds to assess behavioral performance. We simulate these behavioral outputs by measuring activity levels at regions of interest (ROIs) important for the experimental display. To measure reaction time, we integrate activity in the object shroud layer over time until it reaches a threshold (chosen for best fit in the 2Val condition), then assume a constant delay between detection and motor output. To measure detection performance in the UFOV, we use the size of a Weber fraction comparing the level of response in the object shroud layer for the target and distracters at the end of the masking period as a direct proxy for performance.

6. Results

6.1. Two-object cueing

The two-object cueing task (Egly et al., 1994) is a sensitive probe to examine the object-based effects of attention. Two versions of the experiment extend the basic two-object task. One version includes one object and non-object positions with the same geometry (Brown & Denney, 2007). The second version shows that the general population effect found in Brown and Denney (2007) for same-object vs. inter-object attention switches is not uniform among all subjects. In both experiments, presentation occurs in four states (see Fig. 3). The first stage, called “prime,” displays two rectangles, equidistant from the fixation point, equal in size and such that the distance between the two ends of a rectangle is the same as the distance between the rectangles (Fig. 3, column 1, rows 1–3). In the cases of one object with possible position cues and targets, only one of the two rectangles is shown (see Fig. 3, column 1, rows 4–9). The rectangles can either cross the vertical meridian, or be presented in separate hemifields. In the second stage of the experiment (Fig. 3, column 2), one end of a rectangle (or the equivalent location, if there is only one rectangle) is cued, which is followed by the ISI (Fig. 3, column 3). Finally, a target is presented at one of the four possible cue positions (Fig. 3, column 4). This cue can be valid to the target or invalid.

The original ARTSCAN model could simulate the order of reaction times in four of the main cases presented in Brown and Denney (2007); namely, the primary cases that illustrate the object cueing advantage (cases 2Val, InvS, InvD and OtoL in Fig. 3). The hierarchy of attentional interactions between PPC and PFC in the dARTSCAN model can simulate all nine cases successfully. This can be done due to the addition of transient cells, which shorten reaction times for targets, and by replacing the single shroud layer with the PPC-PFC hierarchy, which allows attention priming and balancing between the dynamics of the fast spatial shroud layer and the slower object shroud layer.

The following cases explain how the model fits the entire data set.

6.1.1. Valid cues

There are three display conditions in which the cue is valid. From fastest to slowest reaction times, these are:

-

(1)

One rectangle is presented throughout the experiment, with the cue and the target at the same position on the rectangle (1Val; Figs. 3 and 4).

-

(2)

Two rectangles are presented throughout the experiment, with the cue and target at the same position (2Val; Figs. 3 and 5).

-

(3)

One rectangle is presented throughout the experiment, with the cue and the target presented outside the object (LVal; Figs. 3 and 4).

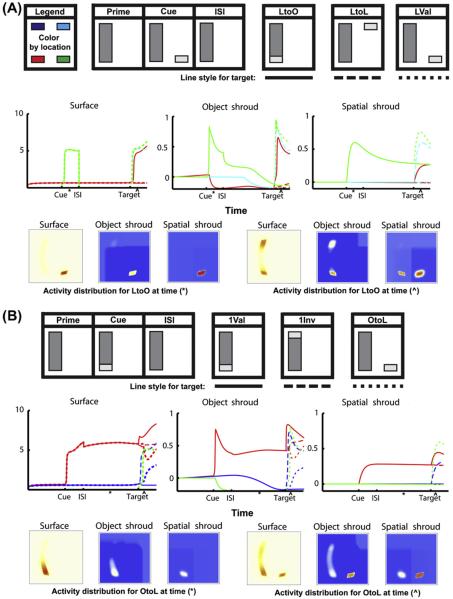

Fig. 4.

Simulations of Brown and Denney (2007) one-object cueing data showing the dynamics of the surface, object shroud, and spatial shroud layers. The top row in each panel shows a schematic of input presentations for the one-object cases used to fit the Brown and Denney (2007) data, with colors corresponding to each region of interest (ROI) indicated at the upper left corner (not all positions are shown in each plot). In all simulations, the temporal dynamics of surface (S, Eq. (26)), object shroud (AO, Eq. (33)), and spatial shroud (AS, Eq. (39)), variables are shown in the first, second and third columns respectively, for each ROI. Solid, dashed and dotted lines show the target conditions that are defined in the top row. The bottom row of each panel shows the distribution of activity in the same layers at the times (*) and (^). (A) The three cases where a position is cued. Note that while the object shroud over the cued location falls to zero, the spatial shroud is maintained, thereby priming object shroud formation when the target is valid. (B) The three cases where there is one object, which is cued.

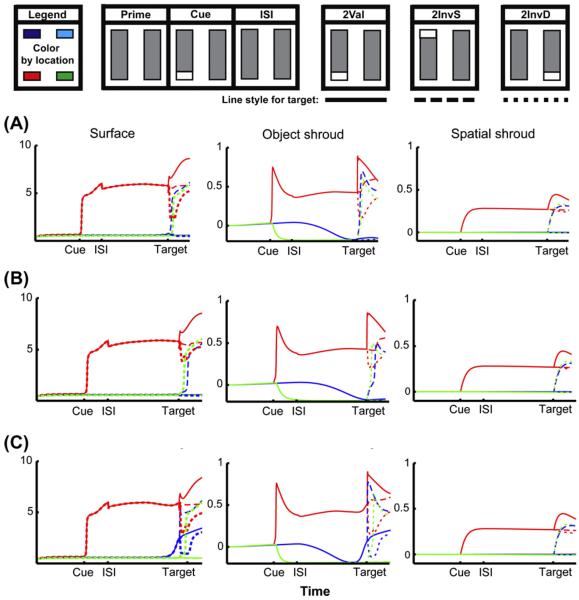

Fig. 5.

Simulations from Brown and Denney (2007) two-object cueing data, and individual difference data from Roggeveen et al. (2009) showing surface, object shroud, and spatial shroud dynamics. The top row shows a schematic of input presentations for the three two-object cases used to fit the Brown and Denney (2007) data, with colors corresponding to each ROI indicated at the upper left corner (not all positions are shown in each plot). In all simulations, the temporal dynamics of surface (S, Eq. (26)), object shroud (AO, Eq. (33)), and spatial shroud (AS, Eq. (39)), variables are shown in the first, second and third columns respectively, for each ROI. (A) The surface activity for the 2InvS case (dark blue) starts to increase slightly before target presentation, as does the activity of the object shroud. This indicates that the surface is about to be completely enshrouded, and that active inhibition has been released from the end of the rectangle opposite the cue because it has passed the edge separating the inhibitory surround from the excitatory center of the resonating shroud. This set of simulations corresponds to the behavioral results shown in middle simulation of Fig. 6B (α = 5, Eq. (33)). (B) The surface activity of the case in which 2InvD response is faster than 2InvS (green vs. dark blue), corresponding to the far left simulations in Fig. 6B (α = 3, Eq. (33)). Note that the bubble of inhibition (blue curve, object shroud layer is less than 0 and decreasing) corresponding to the 2InvS target is forming when the target is presented. (C) The surface activity of the case in which 2InvS response is faster than 2InvD (dark blue vs. green), corresponding to the far right simulations in Fig. 6B (α = 7, Eq. (33)). Note that surface-(object shroud) resonance has enshrouded the entire object prior to the InvS target being presented. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

The 1Val condition has a faster reaction time than the 2Val condition, because the presence of the second rectangle bidding for attention adds to the inhibition that the cued object must overcome to resonate with an object shroud. In both the object-valid conditions, there is a surface visible at the location of the cue throughout the experiment, and a resonant object shroud is maintained from cue presentation through target detection. In the LVal case, on the other hand, this does not occur: only a spatial shroud corresponding to the cued location endures through the ISI. While the spatial shroud primes the object shroud when the target appears, the object shroud cannot resonate until a new surface representation is formed. This in turn cannot take place until new boundaries have formed, something unnecessary when changing the contrast of an existing visible surface. If there were no spatial shroud present to prime the object shroud, the process would take longer, since after the surface representation formed, it would then have to bid for attention against a weak shroud representation on the rectangle visible throughout the experiment, substantially delaying the formation of a resonant shroud.

6.1.2. Invalid cues: one object

There are four display conditions in which one rectangle is visible throughout the experiment, but the cue is invalid. From fastest to slowest in human and model reaction times, these are:

-

(1)

The cue is presented at one end of the (only) rectangle, and the target appears at the far end of the rectangle (1Inv; Figs. 3 and 4).

-

(2)

The cue is presented at a position outside the rectangle, and the target is presented at another location outside the rectangle consistent with the spacing of 1Inv (LtoL; Figs. 3 and 4).

-

(3)

The cue is presented at a position outside of the rectangle, and the target within it (LtoO; Figs. 3 and 4).

-

(4)

The cue is presented in the rectangle, and the target is presented at a position outside of it (OtoL; Figs. 3 and 4).

Condition 1Inv has the quickest reaction time because a strong object shroud has spread over the cued object, facilitating detection. It is slower than the valid case because un-cued portions of the attended object lack a strong spatial shroud in addition to the strong object shroud. The more interesting cases are the middle two: why should a target at an invalid position be detected faster than an invalidly-cued target on an un-cued, but visible, object that has a weak object shroud formed over it? It is because the model is sensitive to transient events. An existing (object shroud)-surface resonance, especially one supporting view-invariant object category learning, is difficult to break. It can be broken through inhibition created by a competing shroud, or by exhausting its habituating gates. The model responds locally to transient effects as a function of the contrast ratio in the surround. This contrast-sensitive response is larger when a target appears against the background of the display, than on a rectangle of the display. This implies that a relatively intense spatial shroud forms on the target in the LtoL case, which allows a rapid where stream transient input to create a strong (object shroud)-(spatial shroud) resonance. This process can occur faster than more modest contrast increment on the weakly attended surface in the LtoO, which will continue to be supported by a slower surface-(object shroud) resonance. This transient-activated shroud hierarchy does not provide the same level of benefit in the OtoL case, however, since in the OtoL case a strong object shroud (rather than a weak one) has formed over the object because it was cued, thereby creating a much higher hurdle to overcome.

6.1.3. Invalid cues: two-objects and individual differences

The classic finding of Egly et al. (1994) supporting object-based attention is that, when there are two identical rectangles presented at a distance equal to their length throughout the experiment, and one end of one rectangle is cued, reaction times occur in the following order:

- (1)

-

(2)

A target appearing on the other end of the same rectangle (InvS; Figs. 3 and 5).

-

(3)

A target appearing at the same end on the other rectangle (InvD; Figs. 3 and 5).

Brown and Denney (2007) replicated this finding by measuring mean reaction times for 30 subjects (Fig. 3). Roggeveen et al. (2009) re-examined the paradigm using a variant of this task (Moore et al., 1998) and focused on the object-based attention for each individual, rather than over the population as a whole. They found that about 18% of individuals had significantly better reaction times for InvS than InvD, while another 18% of individuals had a significant reverse effect, preferring InvD to InvS. The rest of the subjects showed smaller differences in both directions, creating a fairly smooth distribution. Nearly all (96%) of subjects reacted more quickly to a valid cue.

This variant of the task requires discrimination between a target and distracters, rather than simple detection (Moore et al., 1998), which means that in all invalid trials, there is a distracter at the cued location. This is the likely cause of the comparatively large size of reaction time differences, about 200 ms faster for a valid cue, compared to the detection paradigm used in Brown and Denney (2007), which showed 40 ms differences for the same comparison. However, this does not explain why some subjects reacted more quickly to an invalidly-cued target on the same rectangle, and some reacted faster to an equidistant invalidly-cued target on the other rectangle.

As noted in Section 4, our model predicts that the difference between individuals who react faster for an invalidly-cued target on the same object, and those that do the opposite, is the relative gain between the faster dynamics of (spatial shroud)-(object shroud) resonance, and the slower dynamics of surface-(object shroud) resonance. As can be seen in Fig. 6, as the relative strength of the spatial shroud resonance is increased (from left to right on the bottom row), reaction time decreases slightly across the board, but massively for InvS. This is because a strong object shroud can spread over the rectangle before the target and distracters appear (see Fig. 5C vs. B), which diminishes the effect of the distracter at the cued location, where there is also a strong spatial shroud. If the shroud has not had time to spread over the entire bar (as in the left hand case) then there is a bubble of inhibition at the far end of the cued bar, suppressing attention to the target, while enhancing the distracter. This predicted difference between the slower parietal-V4 vs. faster prefrontal–parietal resonances may be testable using rapid, event-related fMRI or EEG/MEG.

Goldsmith and Yeari (2003) employ a variation of the Egly et al. (1994) task with two cue conditions: in the `exogenous' condition, the cues appear at one of four locations at the ends of the two rectangles, while in the `endogenous' task a third rectangle, smaller and oriented towards one of the four target locations appears near the fixation point. Since the model does not explicitly consider the effects of orientation, or learning that an oriented bar may cue a distant location, it is beyond the purview of the model to account for the endogenous-target-valid case. However, given the interference of a highly transient third object (the `endogenous' cue), and the model's clarification of how spatial attention may learn to be focused or spread, depending on task conditions, it is consistent with the findings of the current model that there is little reaction time difference between the invalid-same object and invalid-different object cases, since attention has been drawn away from both of them.

6.2. Useful-field-of-view

The UFOV paradigm measures how widely subjects can spread their attention to detect a brief stimulus that is then masked (Fig. 7A; Green & Bavelier, 2003; Sekuler & Ball, 1986). The task starts with a fixation point, which is followed by a brief (10–30 ms presentation) appearance of 24 additional elements, all but one of which is identical to the fixation point. The elements are arranged in eight spokes, at equally spaced angles and at three eccentricities. A mask then appears, followed by an eight-spoke display of lines. The subject then indicates the direction along which the oddball appeared. VGPs perform better at this task than non-VGPs (Fig. 7B).

We simulated a simplified version of this display with a contrast increment oddball, with video game players having a 20% lower inhibitory gain in the object shroud and spatial shroud layers (see Eqs. (36) and (42)). The results show that just this small change in inhibitory gain in both the PPC and PFC attentional networks has a large effect on the detection performance.

This occurs because the inhibitory gain in the attention layers of the model serves as a resource constraint, which helps VGPs detect the location of the oddball in two distinct ways. The spatial shroud layer receives strong transient input, which is excited by the appearance of the target and distracters. Initially, all the targets and distracters are represented in the spatial shroud layer before recurrent feedback causes competition. The initial signal that each element can project to the spatial shroud layer through transient response is determined by the inhibitory gain in that layer. Therefore, VGPs have an early spatial shroud response to the target which is less likely to be washed out by competition from distracters and the mask. Inhibitory gain also serves as a resource constraint in the object shroud layer. However, since shrouds in the object shroud layer require surface resonance, and object shrouds will expand over any surface that begins resonating with its corresponding shroud, the resource constraint is how many objects can be represented in the object shroud layer. Decreasing the inhibitory gain the object shroud layer increases the chance that an object shroud can begin resonating with the target before the mask, rendering its location detectable.

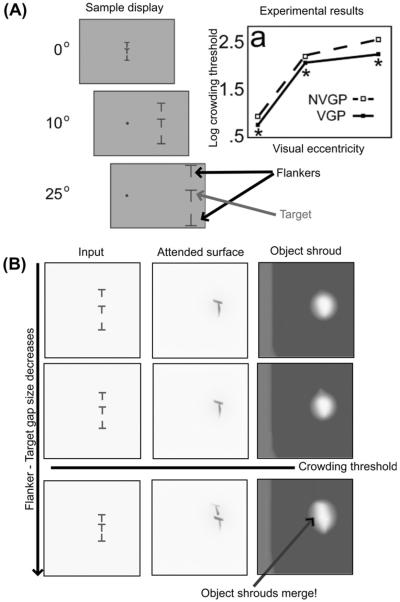

6.3. Crowding

In the crowding paradigm, an object, such as a letter, is visible and recognizable when presented by itself, but it is not recognizable when the letter is presented concurrently with similar flanking letters (Green & Bavelier, 2006a; Levi, 2008; Toet & Levi, 1992). The distance between the target letter and the flanking letters at which the target letter becomes unrecognizable is called the crowding threshold, and is a function of the eccentricity of the target and the flankers, and their relative position (Bouma, 1970, 1973; Levi, 2008). A related concept is the spatial resolution of attention (Intriligator & Cavanagh, 2001), which is the minimum distance between several simple identical objects, like filled circles, that allow an observer to covertly move attention from one circle to another based on a set of instructions, without losing track of which circle they should attend. The spatial resolution of attention is also proportional to eccentricity, and falls off faster than acuity loss due to cortical magnification (He, Cavanagh, & Intriligator, 1996; Intriligator & Cavanagh, 2001).

Crowding has been attributed both to pre-attentive visual processing in early visual areas, as well as to attentional mechanisms (see Levi (2008) for a review). It is likely that it is a combination of the two. Some models of early vision, such as LAMINART, predict how flankers can cause either facilitation or suppression of stimuli between them, depending on distance and contrast (Grossberg & Raizada, 2000) using interacting pre-attentive grouping and competitive mechanisms. A second proposal is that larger receptive fields in the what stream capture features from multiple objects and conflate them. A third proposal is that observers confuse the location of the target and the flankers due to positional uncertainty, but that object-feature binding remains intact. Yet another proposal is that crowding is the result of failed contour completion (Levi, 2008). However, because of the similarities between crowding and the spatial resolution of attention, measured without the use of flanking objects (He et al., 1996; Intriligator & Cavanagh, 2001; Moore, Elsinger, & Lleras, 2001), it is unlikely that crowding is solely due to pre-attentive factors in early visual processing.

Our model has a natural explanation for crowding due to its linkage of where stream spatial attentional processes to what stream object learning and recognition processes. In particular, the model predicts that when at least three peripherally presented similar objects are nearby, and subject to the cortical magnification factor, an object shroud forming over one object can spread to another object, confounding recognition when several objects are covered by a single shroud (see Fig. 8). Said in another way, when a single shroud covers multiple objects, it defeats figure-ground separation and forces the objects to be processed like one larger unit. This means that there is both featural and positional confusion, but this is the result of how the shroud forces the failure of object recognition, rather than being due to large receptive fields or simple positional uncertainty. This does not occur with just two peripheral objects, since the highest intensity part of each object shroud can shift to the most distant extrema of the two objects, and can thereby inhibit the gap between the objects.

Fig. 8.

Green and Bavelier (2007) crowding data and qualitative behavioral simulations. (A) Top panels show a crowding display and data from Green and Bavelier (2007), which show lower thresholds for VGPs. [Data reprinted with permission from Green and Bavelier (2007).] (B) Bottom panels show simulations of the object shrouds that form in response to three displays. The three inputs are all at the same eccentricity, but the gap between the target and flankers shrinks from top to bottom. At the smallest gap, a single object shroud forms over multiple objects, so that individual object recognition is compromised.

In summary, our proposed explanation of crowding exploits the dARTSCAN predictions of how where stream processing of attentional shrouds influences what stream learning and selection of object recognition categories, and how cortical magnification influences attention within and between object surfaces.

7. Discussion

The current article introduces three innovations to further develop the original ARTSCAN model concept of how where stream spatial attention can modulate what stream recognition learning and attention (Cao et al., 2011; Fazl et al., 2009; Grossberg, 2007, 2009): First, we show how both where stream transient inputs from object onsets, offsets, and motion can interact with what stream sustained inputs from object surfaces to control spatial attention. Second, we show how both parietal object shrouds and prefrontal spatial shrouds can help to regulate the priming, onset, persistence, and reset of spatial attention in different ways and at different speeds. Third, we show how basal ganglia control of inhibition across spatial attentional regions can control focal vs. multifocal attention, either through fast volitional control or slower task-selective learning. The interactions among these processes can explain a seemingly contradictory set of data, notably how both same-object and different-object biases are both compatible with a model founded on object-based attention.

7.1. Comparison with other models