Abstract

The appropriate use of everyday objects requires the integration of action and function knowledge. Previous research suggests that action knowledge is represented in frontoparietal areas while function knowledge is represented in temporal lobe regions. Here we used multivoxel pattern analysis to investigate the representation of object-directed action and function knowledge while participants executed pantomimes of familiar tool actions. A novel approach for decoding object knowledge was used in which classifiers were trained on one pair of objects and then tested on a distinct pair; this permitted a measurement of classification accuracy over and above object-specific information. Region of interest (ROI) analyses showed that object-directed actions could be decoded in tool-preferring regions of both parietal and temporal cortex, while no independently defined tool-preferring ROI showed successful decoding of object function. However, a whole-brain searchlight analysis revealed that while frontoparietal motor and peri-motor regions are engaged in the representation of object-directed actions, medial temporal lobe areas in the left hemisphere are involved in the representation of function knowledge. These results indicate that both action and function knowledge are represented in a topographically coherent manner that is amenable to study with multivariate approaches, and that the left medial temporal cortex represents knowledge of object function.

Keywords: conceptual representation, fMRI, multivariate pattern classification, object-directed actions, tool use

Introduction

On a daily basis we are constantly recognizing, grasping, manipulating, and thinking about manipulable objects (e.g., scissors, knife, corkscrew). The appropriate use of tools (e.g., cutting with scissors) requires the integration of action knowledge, information in the motor domain about how a tool is physically manipulated, with function knowledge, conceptual information about the purpose or goal of tool use. A number of studies have investigated the neural representation of tool knowledge from the perspective of understanding the boundaries of category-specificity in the brain: the activation elicited by viewing or naming tool stimuli is contrasted with that elicited by other categories, such as faces, bodies, places, houses, or animals. Differential blood oxygen level-dependent (BOLD) contrast for tool stimuli is typically observed in a largely left hemisphere network across temporal, parietal, and frontal cortex (Martin et al. 1996; Chao et al. 1999; Chao and Martin 2000; Rumiati et al. 2004; Noppeney et al. 2006; Mahon et al. 2007, 2013; Garcea and Mahon 2014; for reviews, see Lewis 2006; Mahon and Caramazza 2009; Martin 2007, 2009). The regions that consistently exhibit differential BOLD contrast when viewing tools, or manipulable objects, include the left inferior and superior parietal lobules, the left posterior middle temporal gyrus, the left ventral premotor cortex, and the medial fusiform gyrus (fusiform activation is typically bilateral). However, it remains unclear how tool-preferring regions contribute to the action and function representations associated with tool use.

Insights about the neural bases of action and function knowledge come from neuropsychological studies of brain-damaged patients, and neuroimaging studies of healthy participants performing a range of different tasks. For instance, Buxbaum et al. (2000) and Buxbaum and Saffran (2002) found that some apraxic patients with left frontoparietal lesions were disproportionately impaired for knowledge of object manipulation relative to knowledge of object function, while the opposite dissociation has been observed in patients with temporal lobe damage (Sirigu et al. 1991; Negri et al. 2007). Thus, broadly speaking, frontoparietal lesions are associated with impairments for action knowledge while temporal lobe lesions are associated with impairments for function knowledge. Evidence from brain imaging studies corroborates this general pattern. Differential activation has been observed in a left frontoparietal network when participants perform an action judgment task (Kellenbach et al. 2003; Boronat et al. 2005), while differential activation has been observed in retrosplenial cortex and lateral anterior inferotemporal cortex when participants are engaged in a function judgment task (Canessa et al. 2008). More recently, Ishibashi et al. (2011) found that repetitive transcranial magnetic stimulation (rTMS) over inferior parietal cortex caused longer response latencies for action judgments, while rTMS over the left anterior temporal lobe led to longer response latencies for function judgments (for similar TMS results, see Pobric et al. 2010; Pelgrims et al. 2011).

In the current functional magnetic resonance imaging (fMRI) study, we use multivoxel pattern analysis (MVPA; Haxby et al. 2001) to study the role of these areas in representing action and function knowledge during the execution of pantomimes of tool use. MVPA has been widely used to show that the pattern of neural responses across a population of voxels contains information that is not present in the average amplitude of responses across that population (e.g., Spiridon and Kanwisher 2002; Norman et al. 2006; Kriegeskorte et al. 2008). Of particular relevance to the current investigation is the study of Gallivan et al. (2013) who used MVPA to successfully decode neural representations of action during a delayed-movement task that required grasp or reach actions toward a target object.

However, and perhaps surprisingly, MVPA has not to date been used to discriminate pantomimes of familiar tools, or representations of object function. The purpose of the current study was to determine where in the human brain information about object-directed actions and object function is coded during the execution of pantomimes of familiar tools. Because participants pantomimed object-directed actions during fMRI without the object in hand and with the pantomime out of their view, the findings bear on the brain network that supports object pantomiming and, potentially, on the representation of action knowledge. Prior work (e.g., Buxbaum et al. 2000; Buxbaum and Saffran 2002; Kellenbach et al. 2003; Myung et al. 2010; Yee et al. 2010) shows that regions in the left inferior parietal lobule support action knowledge, and that similar regions are activated when simply viewing manipulable objects (e.g., Chao and Martin 2000; Rumiati et al. 2004; Mahon et al. 2007, 2013). However, it remains an open question whether those same inferior parietal regions can decode pantomimes of object-directed actions. We therefore performed a region of interest (ROI) analysis on the tool-preferring regions to test whether multivoxel activity patterns in those regions carry information about the action and function properties of familiar tools. One additional area, left somatomotor cortex, was selected as a control region because it was expected to decode actions accurately, given that participants performed an overt pantomime task. Finally, to test whether action and function representations could be decoded outside independently defined tool-preferring regions, and to examine the specificity of any observed ROI-based effects, we performed a whole-brain “searchlight” analysis (Kriegeskorte et al. 2006).

In all analyses, we trained multivoxel classifiers (binary linear support vector machines, SVMs) to discriminate pantomimes according to either their action or their function. The SVM classifiers were trained and tested in a cross-item manner. For instance, an SVM classifier can be trained to discriminate the action pantomimes for “scissors” and “screwdriver”, and then tested with “pliers” versus “corkscrew”. Because the action associated with scissors is similar to that for pliers, and likewise for screwdriver and corkscrew, successful transfer from training to test would indicate that the activity pattern across the classified voxels carries a code that distinguishes the actions, over and above the objects themselves.

Materials and Methods

Participants

Ten participants took part in the main experiment (4 females; mean age = 21.1 years, SD = 2.2 years). All participants were right-handed, as assessed with the Edinburgh Handedness Questionnaire. All had normal or corrected-to-normal vision and no history of neurological disorders. All participants gave written informed consent in accordance with the University of Rochester Institutional Review Board.

General Procedure

“A Simple Framework” (Schwarzbach 2011), written in MATLAB with Psychtoolbox (Brainard 1997; Pelli 1997), was used to control stimulus presentation. Participants viewed stimuli binocularly through a mirror attached to the head coil and adjusted to allow foveal viewing of a back-projected monitor (refresh rate = 120 Hz).

The scanning session began with a T1 anatomical scan, followed by (i) 4 functional runs of an experiment designed to localize the primary somatomotor cortex and (ii) 2 functional runs of the tool-use pantomime experiment. A subset of participants (n = 7) also completed a separate scanning session consisting of a T1 anatomical scan followed by (i) a 6-min resting-state functional magnetic resonance imaging (MRI) scan, (ii) 8 three-minute functional runs of a category localizer experiment (see below for design and stimuli), (iii) another 6-min resting-state functional MRI scan, and (iv) diffusion tensor imaging (DTI; 15 min). The resting-state fMRI and DTI data are not analyzed herein. The scanning sessions were video recorded in their entirety from inside the scanner room with permanently installed cameras, allowing real-time (and offline) confirmation that participants completed all cued actions.

Primary Somatomotor Cortex Localizer Design and Materials

We developed an fMRI localizer to identify the primary somatomotor representation of the right hand. Participants were prompted by a visual cue to rotate or flex their left or right hand or foot. Participants lay supine in the scanner, and a cue (e.g., “RH Rotate”) was presented in white font on a black screen; the participant then rotated their right hand at the wrist. Eight actions (left/right × rotate/flex × hand/foot) were presented in 12-s mini-blocks interspersed with 12-s fixation periods. Each action was presented twice during each run, with the constraint that an action did not repeat in 2 successive mini-blocks. During flexion trials, participants were instructed to bring their hand or foot from a resting, inferior position upward into an extended position, and then to return it smoothly (∼0.5 oscillations per second). Likewise, during rotation trials, participants were instructed to rotate their hand or foot comfortably at the wrist or ankle while minimizing elbow or hip movement, respectively (∼0.5 oscillations per second). Participants were given explicit directions and practice with the cues before entering the scanner. Because participants lay supine in the scanner, all actions were performed out of their view.
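The ordering constraint above (each action twice per run, never in 2 successive mini-blocks) can be met with simple rejection sampling. The sketch below is an assumption about how such an order could be generated, not the presentation code used in the study; only the “RH Rotate” cue appears in the text, so the other cue labels follow that pattern by assumption, and `constrained_order` is a hypothetical helper.

```python
import random

def constrained_order(conditions, reps=2, seed=None, max_tries=10_000):
    """Return `reps` copies of each condition in random order, with no condition
    occurring on two successive trials (rejection sampling: reshuffle until valid)."""
    rng = random.Random(seed)
    trials = [c for c in conditions for _ in range(reps)]
    for _ in range(max_tries):
        rng.shuffle(trials)
        if all(a != b for a, b in zip(trials, trials[1:])):
            return list(trials)
    raise RuntimeError("no valid order found; increase max_tries")

# 8 localizer actions: side (L/R) x effector (H = hand, F = foot) x movement.
# Labels other than "RH Rotate" are assumed to follow the same pattern.
actions = [f"{s}{e} {m}" for s in "LR" for e in "HF" for m in ("Rotate", "Flex")]

order = constrained_order(actions, reps=2, seed=0)  # 16 mini-blocks per run
# Each mini-block is 12 s of movement followed by 12 s of fixation.
task_seconds = len(order) * (12 + 12)
```

This gives 384 s of mini-blocks per run, not counting any initial or final fixation, which the text does not describe. The same helper would cover the pantomime task's constraint (9 items, twice per run, no repeat on successive trials).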

Tool-Use Pantomime Task Design and Materials

Participants lay supine in the scanner, and a cue (e.g., the word “scissors”) was presented in white font on a black screen; the participant then shaped their right hand as if holding scissors and gestured as if cutting. Participants performed the pantomime for as long as the item was on the screen (12 s, with 12 s of fixation between trials). As in the somatomotor localizer, the pantomimes were performed out of participants' view because of their supine position in the scanner. Six target items (scissors|pliers|knife|screwdriver|corkscrew|bottle opener) and 3 filler items (stapler|hole puncher|paperclip) were presented. Each item was presented twice during each run, in random order, with the constraint that an item did not repeat on successive trials. In the experimental design, the target items were organized into 2 triads of 3 items. One triad was scissors, pliers, and knife (scissors are similar to pliers in manner of manipulation, but similar to knife in function). The other triad was corkscrew, screwdriver, and bottle opener (corkscrew is similar to screwdriver in manner of manipulation, but similar to bottle opener in function). The items were selected based on prior behavioral work from our group (Garcea and Mahon 2012), and the triad structure (and some of the items themselves) originated from the work of Buxbaum and colleagues (Buxbaum et al. 2000; Buxbaum and Saffran 2002; Boronat et al. 2005). The filler items loosely formed a third triad and were thus indistinguishable in structure from the critical items; they were included to provide an independent means of determining which voxels are activated during transitive actions, while preserving a clean separation between the criteria used for voxel selection and voxel testing.
However, because the independent functional localizer was equally effective for defining voxels (and none of the effects differed according to whether filler items were used for voxel selection), the filler items were not analyzed and are not discussed further.
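The triad structure fixes which objects can serve as cross-item training and test pairs. A minimal sketch, assuming the class assignments stated above (scissors/pliers and screwdriver/corkscrew share manner of manipulation; scissors/knife and corkscrew/bottle opener share function), enumerates the possible splits. Under this reading, each analysis has exactly 4 train/test folds, which matches the 4 accuracies averaged per participant; knife never enters the action analysis, and pliers and screwdriver never enter the function analysis. The names below are hypothetical.

```python
from itertools import product

# Classes for each cross-item analysis, taken from the two triads.
ACTION_CLASSES = (("scissors", "pliers"), ("screwdriver", "corkscrew"))    # same manipulation
FUNCTION_CLASSES = (("scissors", "knife"), ("corkscrew", "bottle opener"))  # same function

def cross_item_folds(class_a, class_b):
    """Train on one item from each class and test on the remaining item from each class.

    Returns ((train_a, train_b), (test_a, test_b)) tuples. With 2 items per class
    there are 2 x 2 = 4 folds, and no item is ever in both the train and test pair.
    """
    folds = []
    for a_train, b_train in product(class_a, class_b):
        a_test = next(x for x in class_a if x != a_train)
        b_test = next(x for x in class_b if x != b_train)
        folds.append(((a_train, b_train), (a_test, b_test)))
    return folds

action_folds = cross_item_folds(*ACTION_CLASSES)
function_folds = cross_item_folds(*FUNCTION_CLASSES)
```

The first action fold (train scissors vs. screwdriver, test pliers vs. corkscrew) and the first function fold (train scissors vs. corkscrew, test knife vs. bottle opener) correspond to the examples given in the text.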

Category Localizer Design and Materials

To map tool-preferring regions, a subset of participants (n = 7) who had completed the main experiment also completed 8 runs of a category localizer in a separate scanning session. Participants viewed intact and phase-scrambled images of tools, places, faces, and animals (for details on stimuli, see Fintzi and Mahon 2013; Mahon et al. 2013). Twelve items per category (8 exemplars per item; 384 total stimuli) were presented in mini-blocks of 6 s (500 ms per image, 0 ms interstimulus interval) interspersed with 6-s fixation periods. Within each run, 8 mini-blocks of intact stimuli and 4 mini-blocks of phase-scrambled stimuli were presented. To identify the brain areas selectively involved in tool-related visual processing, we computed BOLD contrast maps showing differential activity for tool stimuli compared with animal stimuli in each participant (P < 0.05, uncorrected).
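The stimulus counts above are internally consistent, as a short check using only the stated numbers shows: 4 categories × 12 items × 8 exemplars gives 384 stimuli, and 500 ms images with no gap fill a 6 s mini-block with 12 images.

```python
# Category localizer bookkeeping, using only counts stated in the text.
N_CATEGORIES = 4           # tools, places, faces, animals
ITEMS_PER_CATEGORY = 12
EXEMPLARS_PER_ITEM = 8
total_stimuli = N_CATEGORIES * ITEMS_PER_CATEGORY * EXEMPLARS_PER_ITEM

MINIBLOCK_MS, IMAGE_MS, ISI_MS = 6000, 500, 0
images_per_miniblock = MINIBLOCK_MS // (IMAGE_MS + ISI_MS)
```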

MRI Parameters

Whole-brain BOLD imaging was conducted on a 3-T Siemens MAGNETOM Trio scanner with a 32-channel head coil at the Rochester Center for Brain Imaging. High-resolution T1-weighted structural images were acquired using a magnetization-prepared rapid gradient echo (MPRAGE) pulse sequence at the start of each session (TR = 2530 ms, TE = 3.44 ms, flip angle = 7°, FOV = 256 mm, matrix = 256 × 256, 1 × 1 × 1 mm voxels, sagittal left-to-right slices). An echo-planar imaging pulse sequence was used for T2* contrast (TR = 2000 ms, TE = 30 ms, flip angle = 90°, FOV = 256 mm, matrix = 64 × 64, 30 sagittal left-to-right slices, voxel size = 4 × 4 × 4 mm). The first 2 volumes of each run were discarded to allow for signal equilibration.
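The in-plane resolution and slab coverage follow directly from the acquisition parameters. The check below assumes no inter-slice gap and assumes that the three-minute category localizer runs include the 2 discarded volumes; neither is stated explicitly.

```python
# Acquisition geometry implied by the parameters above.
FOV_MM, EPI_MATRIX, T1_MATRIX = 256, 64, 256
epi_in_plane_mm = FOV_MM / EPI_MATRIX   # 4.0 mm, consistent with 4 x 4 x 4 mm EPI voxels
t1_in_plane_mm = FOV_MM / T1_MATRIX     # 1.0 mm, consistent with 1 mm isotropic T1 voxels

N_SLICES, SLICE_MM = 30, 4
coverage_mm = N_SLICES * SLICE_MM       # left-to-right coverage, assuming no slice gap

TR_S, DISCARDED = 2.0, 2
localizer_run_s = 3 * 60                # category localizer runs were 3 min long
volumes_per_run = int(localizer_run_s / TR_S)
volumes_analyzed = volumes_per_run - DISCARDED   # assumes the 3 min includes discarded volumes
```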

fMRI Data Analysis

All MRI data were analyzed using BrainVoyager (v. 2.8). Preprocessing of the functional data included, in the following order, slice scan time correction (sinc interpolation), motion correction with respect to the first volume of the first functional run, and temporal filtering with linear trend removal (cutoff: 2 cycles per run). No spatial smoothing was applied. Functional data were registered (after contrast inversion of the first volume) to high-resolution de-skulled anatomy on a participant-by-participant basis in native space. For each participant, echo-planar and anatomical volumes were then transformed into standardized Talairach space (Talairach and Tournoux 1988), and functional data were interpolated to 3 × 3 × 3 mm voxels.
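The temporal filtering step can be sketched as regressing out a linear trend plus sine and cosine terms of 1 and 2 cycles per run. This is an assumption about how a cutoff of 2 cycles per run is realized (the exact BrainVoyager implementation is not described here), and `highpass_detrend` is a hypothetical name. Because the sinusoids complete whole cycles and the trend is mean-centered, the run mean is preserved.

```python
import numpy as np

def highpass_detrend(ts, n_cycles=2):
    """Remove a linear trend plus sine/cosine drifts of 1..n_cycles cycles per run.

    ts: array of shape (n_volumes,) or (n_volumes, n_voxels).
    Returns the filtered series with the run mean preserved.
    """
    ts = np.asarray(ts, dtype=float)
    n = ts.shape[0]
    t = np.arange(n) / n                       # time within the run, scaled to [0, 1)
    cols = [np.ones(n), t - t.mean()]          # constant + mean-centered linear trend
    for k in range(1, n_cycles + 1):
        cols += [np.sin(2 * np.pi * k * t), np.cos(2 * np.pi * k * t)]
    X = np.column_stack(cols)
    beta, *_ = np.linalg.lstsq(X, ts, rcond=None)
    drift = X[:, 1:] @ beta[1:]                # every fitted component except the constant
    return ts - drift
```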

For the principal experiment and the localizers, a general linear model was used to fit β estimates to the events of interest. Experimental events were convolved with a standard 2-γ hemodynamic response function. The first derivatives of the 3D motion-correction parameters from each run were added to all models as regressors of no interest to absorb variance attributable to head movement.
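The GLM step can be sketched as follows: boxcar regressors for the 12 s events are convolved with a double-gamma HRF, the first temporal derivatives of the 6 motion parameters are added as nuisance regressors along with a constant, and β estimates are obtained by ordinary least squares. The HRF parameters (response peak near 5 s, undershoot near 15 s, undershoot ratio 1/6) are assumed common defaults, not values reported in the study, and all function names are hypothetical.

```python
import math
import numpy as np

def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, zero for t <= 0."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    out[pos] = t[pos] ** (shape - 1) * np.exp(-t[pos] / scale) / (scale ** shape * math.gamma(shape))
    return out

def double_gamma_hrf(tr, duration=32.0, peak_shape=6.0, under_shape=16.0, ratio=1 / 6):
    """Positive gamma (peak ~5 s) minus a scaled undershoot gamma (peak ~15 s)."""
    t = np.arange(0, duration, tr)
    h = gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, under_shape)
    return h / h.max()

def block_regressor(onsets_s, dur_s, n_vols, tr):
    """Boxcar for the given onsets/duration (in seconds), convolved with the HRF."""
    box = np.zeros(n_vols)
    for on in onsets_s:
        box[int(on // tr): int((on + dur_s) // tr)] = 1.0
    return np.convolve(box, double_gamma_hrf(tr))[:n_vols]

def fit_glm(Y, task_regs, motion_params):
    """OLS fit; returns task betas only.

    Y: (n_vols,) or (n_vols, n_voxels); task_regs: (n_vols, n_conditions);
    motion_params: (n_vols, 6). Their first derivatives are nuisance regressors.
    """
    motion_deriv = np.vstack([np.zeros((1, motion_params.shape[1])),
                              np.diff(motion_params, axis=0)])
    X = np.column_stack([task_regs, motion_deriv, np.ones(len(Y))])
    beta, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return beta[: task_regs.shape[1]]
```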

Definition of Regions of Interest

We defined ROIs for the primary somatomotor representation of the right hand and for tool-preferring regions. The right-hand somatomotor area was identified in each participant by selecting voxels around the central sulcus based on the contrast (right hand) > (left hand + left foot + right foot; weighted equally), thresholded at a corrected α (false discovery rate, FDR, q < 0.05 or stricter). Tool-preferring voxels were defined for the left inferior parietal lobule, the left posterior middle temporal gyrus, and the bilateral medial fusiform gyrus, individually in each participant (n = 7). To have the same number of voxels contributed from each ROI and each participant in subsequent analyses, a 6 mm-radius sphere centered on the peak voxel was defined (see Table 1 for Talairach coordinates).
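The FDR threshold for the somatomotor ROI can be illustrated with the Benjamini–Hochberg step-up procedure. This sketch assumes that BH is the FDR variant applied (the text does not name the procedure), and `fdr_mask` is a hypothetical helper: sort the P-values, find the largest rank k with p(k) ≤ (k/m)·q, and keep every voxel up to that rank.

```python
import numpy as np

def fdr_mask(pvals, q=0.05):
    """Benjamini-Hochberg step-up procedure.

    Returns a boolean array (same shape as pvals) marking tests that survive FDR q.
    """
    p = np.asarray(pvals, dtype=float)
    order = np.argsort(p, axis=None)           # flat indices, ascending P
    ranked = p.ravel()[order]
    m = ranked.size
    passed = ranked <= q * np.arange(1, m + 1) / m
    mask = np.zeros(m, dtype=bool)
    if passed.any():
        k = np.nonzero(passed)[0].max()        # largest rank meeting the BH bound
        mask[order[: k + 1]] = True            # all tests up to that rank survive
    return mask.reshape(p.shape)
```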

Table 1.

ROIs with corresponding Talairach coordinates

| Localizer | ROI | Talairach x | Talairach y | Talairach z |
|---|---|---|---|---|
| Primary somatomotor localizer | Right hand area | −33 ± 4.3 | −29 ± 4.5 | 52 ± 3.2 |
| Tool-preferring ROIs defined by category localizer | Left aIPS tool area | −36 ± 6.4 | −47 ± 7.7 | 43 ± 6.9 |
| | Left pMTG tool area | −42 ± 5.9 | −62 ± 6.0 | −7 ± 6.5 |
| | Left mFG tool area | −27 ± 3.6 | −53 ± 9.2 | −13 ± 5.9 |
| | Right mFG tool area | 27 ± 4.2 | −52 ± 8.4 | −14 ± 2.9 |

Note: A subset of participants (n = 7) participated in a category localizer to functionally define tool areas within the inferior parietal lobule (anterior intraparietal sulcus), the posterior middle temporal gyrus, and the medial fusiform gyrus. These regions were defined as a 6 mm-radius sphere centered on the peak coordinate showing greater activation to tools compared with animals. aIPS, anterior intraparietal sulcus; pMTG, posterior middle temporal gyrus; mFG, medial fusiform gyrus.
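The number of voxels in a 6 mm-radius sphere depends on the grid it is drawn on. Assuming the sphere is defined on the 3 × 3 × 3 mm functional grid (the text does not say which grid was used), the sketch below keeps voxels whose centers lie within 6 mm of the peak, which gives 33 voxels per ROI away from the volume edges. Function names are hypothetical.

```python
import numpy as np

def sphere_offsets(radius_mm=6.0, voxel_mm=3.0):
    """Integer voxel offsets whose centers lie within radius_mm of the center voxel."""
    r = int(np.floor(radius_mm / voxel_mm))
    g = np.arange(-r, r + 1)
    di, dj, dk = np.meshgrid(g, g, g, indexing="ij")
    d2 = (di**2 + dj**2 + dk**2) * voxel_mm**2     # squared distance in mm^2
    keep = d2 <= radius_mm**2
    return np.column_stack([di[keep], dj[keep], dk[keep]])

def sphere_roi(peak_ijk, vol_shape, radius_mm=6.0, voxel_mm=3.0):
    """Voxel indices of a sphere around peak_ijk, clipped to the volume bounds."""
    idx = np.asarray(peak_ijk) + sphere_offsets(radius_mm, voxel_mm)
    inside = np.all((idx >= 0) & (idx < np.asarray(vol_shape)), axis=1)
    return idx[inside]
```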

Statistical Analysis

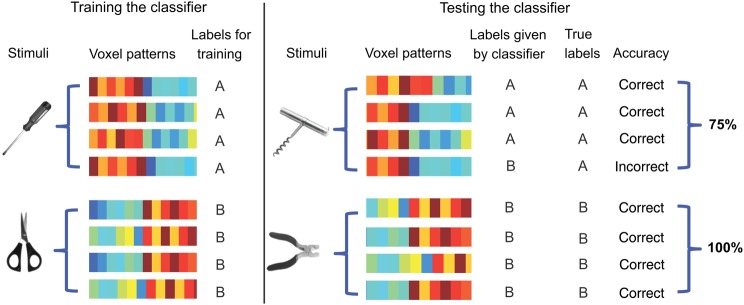

For all analyses, we used binary linear SVMs. All MVPA analyses were performed within individual participants, using software written in MATLAB with the BVQX toolbox for MATLAB (http://support.brainvoyager.com/available-tools/52-MATLAB-tools-bvxqtools). A binary SVM classifier uses a linear kernel to compute a hyperplane in a multidimensional space that separates the patterns being discriminated with the largest possible margin. To prepare inputs for the pattern classifier, β weights were extracted for each of the 6 items (scissors|pliers|knife|screwdriver|corkscrew|bottle opener) for all voxels in the ROI. The classifiers were trained and tested in a cross-item manner: the SVM classifier was trained to discriminate one pair of objects and then tested on a new pair of objects. Figure 1 illustrates how the cross-item SVM decodes actions. A similar procedure was used to decode function. For example, the classifier was trained to discriminate “scissors” from “corkscrew” and then tested with “knife” versus “bottle opener”. Because scissors and knives share a function (cutting), as do corkscrews and bottle openers (opening), accurate classification across objects indicates that the voxels being classified differentiate the function, over and above the objects themselves.
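A self-contained sketch of a binary linear SVM and a single cross-item test follows. It is written in Python with NumPy rather than the MATLAB/BVQX code the study used, and the solver (Pegasos-style stochastic subgradient descent on the L2-regularized hinge loss) is a stand-in chosen to keep the example dependency-free. It also assumes several β patterns per item (e.g., one per trial), which the text does not specify; all function names are hypothetical.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, epochs=200, seed=0):
    """Soft-margin linear SVM fit with Pegasos-style stochastic subgradient descent.

    X: (n_samples, n_voxels); y: labels in {-1, +1}. A constant feature is appended,
    so the returned weight vector has the bias as its last element.
    """
    rng = np.random.default_rng(seed)
    Xb = np.column_stack([X, np.ones(len(X))])
    w = np.zeros(Xb.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(Xb)):
            t += 1
            eta = 1.0 / (lam * t)                  # Pegasos step size
            violates = y[i] * (Xb[i] @ w) < 1      # inside the margin or misclassified
            w *= 1.0 - eta * lam                   # shrinkage from the L2 penalty
            if violates:
                w += eta * y[i] * Xb[i]            # hinge-loss subgradient step
    return w

def predict(w, X):
    return np.sign(np.column_stack([X, np.ones(len(X))]) @ w)

def cross_item_accuracy(patterns, train_pair, test_pair, **svm_kw):
    """Train on one pair of items and test on a different pair.

    patterns: dict mapping item name -> (n_patterns, n_voxels) array.
    The first item of each pair is labelled +1 and the second -1, so pairs must be
    ordered so that position matches class (e.g., same manner of manipulation).
    """
    def stack(pair):
        a, b = patterns[pair[0]], patterns[pair[1]]
        return np.vstack([a, b]), np.r_[np.ones(len(a)), -np.ones(len(b))]

    X_tr, y_tr = stack(train_pair)
    X_te, y_te = stack(test_pair)
    w = train_linear_svm(X_tr, y_tr, **svm_kw)
    return float(np.mean(predict(w, X_te) == y_te))
```

For example, `cross_item_accuracy(patterns, ("scissors", "screwdriver"), ("pliers", "corkscrew"))` trains on the first pair and scores the held-out pair; because no test item is seen during training, above-chance accuracy has to come from information shared across items.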

Figure 1.

Schematic of cross-item multivoxel pattern analysis. The SVM classifier was trained to discriminate the pantomime of using, for example, a screwdriver from the pantomime of using a pair of scissors. The trained classifier was then tested on a new pair of objects that match the training objects in manner of manipulation (in this case, corkscrew and pliers). If the labels assigned by the classifier match the true labels significantly better than chance (50%), then the classifier discriminates the actions over and above the specific objects. The same procedure was used to decode object function over and above the specific objects themselves.

The classification accuracies for action and function knowledge were computed by averaging together the 4 accuracies generated by using different pairs of objects for classifier training and testing, separately for each participant. We then averaged classification accuracies across participants, and compared the group mean with chance (50%) using a one-sample t-test (2-tailed). We also compared the classification performance for action and function directly using paired t-tests (2-tailed).
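The group-level statistics reduce to one-sample and paired t statistics with n − 1 degrees of freedom, which matches the reported t(9) for the 10-participant somatomotor ROI and t(6) for the 7-participant tool ROIs. The sketch computes only the t values; two-tailed P-values would come from the t distribution (e.g., `scipy.stats.ttest_1samp` and `scipy.stats.ttest_rel`). Function names are hypothetical.

```python
import numpy as np

def subject_accuracy(fold_accuracies):
    """Average the cross-item fold accuracies for one participant."""
    return float(np.mean(fold_accuracies))

def one_sample_t(acc, chance=0.5):
    """t statistic and df for the group mean accuracy against chance."""
    acc = np.asarray(acc, dtype=float)
    n = acc.size
    t = (acc.mean() - chance) / (acc.std(ddof=1) / np.sqrt(n))
    return t, n - 1

def paired_t(acc_action, acc_function):
    """Paired t test = one-sample t test on per-participant differences against 0."""
    diff = np.asarray(acc_action, dtype=float) - np.asarray(acc_function, dtype=float)
    return one_sample_t(diff, chance=0.0)
```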

A whole-brain pattern analysis was also performed in each individual using a searchlight approach (Kriegeskorte et al. 2006). In this analysis, a cross-item classifier was moved through the brain voxel by voxel. At every voxel, the β values for each of the 6 object stimuli were extracted for the surrounding cube of voxels (5 × 5 × 5 = 125 voxels) and passed to the classifier, and classifier performance for that set of voxels was written to the central voxel. This yielded 2 whole-brain maps of classification accuracy for every participant, one for action and one for function. We then tested whether classification accuracy exceeded the 50% chance level in each voxel across participants using one-sample t-tests (2-tailed), and compared the decoding of action and function directly using paired t-tests (2-tailed). All whole-brain results were cluster-size corrected: individual voxels were thresholded at P < 0.05 (uncorrected), and a cluster-size threshold, determined with a Monte Carlo simulation of cluster size (AlphaSim), was then applied to maintain the Type I error rate at 5%.
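The searchlight procedure can be sketched as a loop over in-mask voxels that passes the patterns from a 5 × 5 × 5 cube (125 voxels, i.e., 15 mm on a side at 3 mm resolution) to a scoring function and writes the result to the center voxel. How the original code handled cubes that extend past the brain mask or the volume edge is not stated; this sketch simply uses whichever in-mask voxels remain, which is an assumption. In practice `score_fn` would run the cross-item classifier and return its mean accuracy; the names here are hypothetical.

```python
import numpy as np

def searchlight(data, mask, score_fn, half_width=2):
    """Cube searchlight.

    data: (n_patterns, X, Y, Z) beta maps; mask: boolean (X, Y, Z) brain mask.
    For each in-mask voxel, the in-mask voxels of the surrounding
    (2 * half_width + 1)^3 cube are passed to score_fn as an
    (n_patterns, n_cube_voxels) matrix, and the score is written to the center voxel.
    Voxels outside the mask are left as NaN.
    """
    out = np.full(mask.shape, np.nan)
    h = half_width
    for x, y, z in zip(*np.nonzero(mask)):
        cube = (slice(max(x - h, 0), x + h + 1),
                slice(max(y - h, 0), y + h + 1),
                slice(max(z - h, 0), z + h + 1))
        feats = data[(slice(None),) + cube][:, mask[cube]]
        out[x, y, z] = score_fn(feats)
    return out
```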

Results

To decode action and function representations during tool use, we trained cross-item binary SVM classifiers using different pairs of objects for classifier training and testing (see Statistical Analysis). Separate classification analyses were carried out to decode information about object-directed actions (manner of manipulation) and object function (the purpose or goal of tool use). Classification accuracy for both action and function knowledge was evaluated in each ROI, as defined by contrasts over independent data sets that identified (i) the primary somatomotor representation of the right hand, (ii) the left inferior parietal lobule (in the vicinity of the anterior intraparietal sulcus), (iii) the left posterior middle temporal gyrus, and (iv) the bilateral medial fusiform gyri.

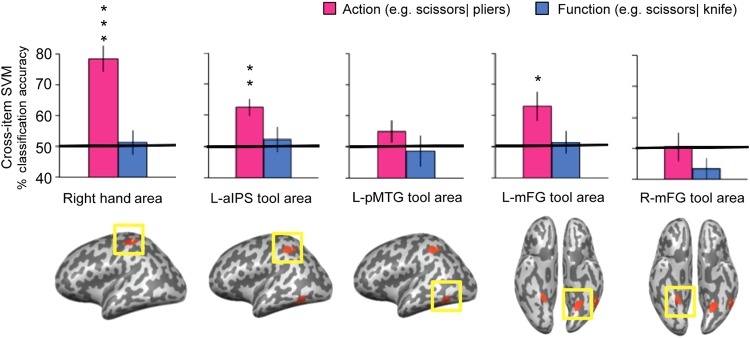

For actions, successful cross-item decoding was observed in the primary somatomotor representation of the right hand (t(9) = 6.53, P < 0.001), the left anterior intraparietal sulcus tool-preferring ROI (t(6) = 4.45, P < 0.005), and the left medial fusiform tool-preferring ROI (t(6) = 3.35, P < 0.03) (see Fig. 2). Action decoding did not differ from chance in the left posterior middle temporal gyrus or the right medial fusiform gyrus.

Figure 2.

ROI analyses of cross-item classification. The bars represent classification accuracy (pink, action; blue, function). Black asterisks indicate significance in 2-tailed t-tests across subjects against 50% (*P < 0.05; **P < 0.01; ***P < 0.001). Solid black lines indicate chance accuracy (50%). Error bars represent the standard error of the mean across subjects. The pictures in the bottom row show group results for the ROIs defined by the localizers (all ROIs were subject-specific for the actual analysis), thresholded at P < 0.05 (cluster corrected). Right hand area, the primary somatomotor representation of the right hand; L-aIPS, left anterior intraparietal sulcus; L-pMTG, left posterior middle temporal gyrus; L-mFG, left medial fusiform gyrus; R-mFG, right medial fusiform gyrus.

For object function, no significant decoding was found in any of those a priori defined ROIs. When contrasting classification accuracy for action with function directly, higher classification accuracy for actions was observed in the right hand somatomotor area (t(9) = 7.35, P < 0.001) and marginally in the left anterior intraparietal sulcus tool area (t(6) = 2.26, P = 0.07). No ROI showed higher accuracy for the decoding of function than action.

To test whether representations of object function could be decoded in brain regions outside the a priori defined ROIs, and to test the specificity of the action decoding observed in those ROIs, we therefore carried out whole-brain searchlight analyses.

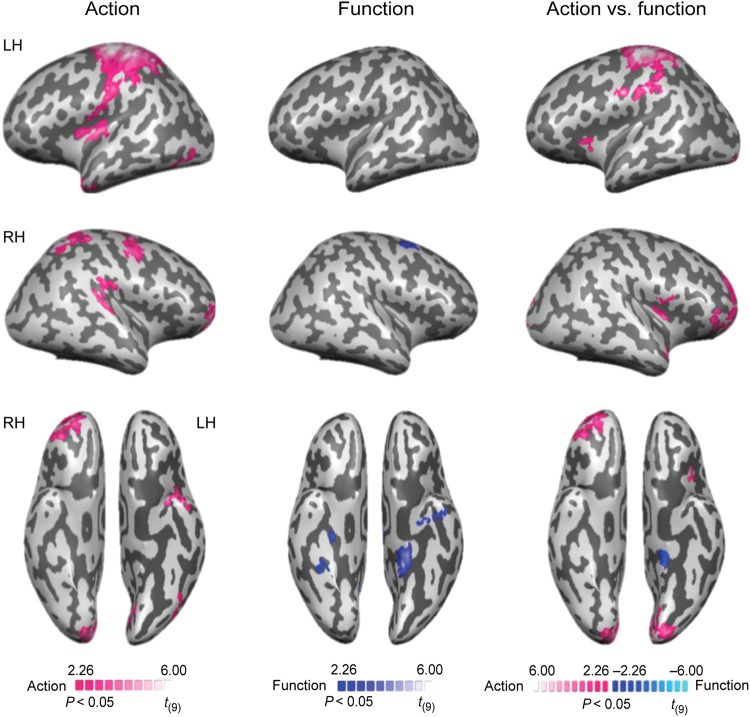

For action classification, the searchlight analysis revealed successful decoding in frontoparietal brain areas, including bilateral motor and premotor cortex and the left anterior intraparietal sulcus (see Fig. 3 left and Table 2). In the temporal lobe, action was accurately classified in the left anterior temporal lobe (Fig. 4D). A region of lateral occipital-temporal cortex, posterior to the functionally defined left posterior middle temporal gyrus, also showed significant decoding of action (Fig. 4A middle). The bilateral putamen and the right cerebellum also decoded action (Fig. 4B).

Figure 3.

Searchlight analyses of cross-item classification. (Left) The decoding of action. (Middle) The decoding of function. (Right) The direct comparison between the decoding of action and function. All results are thresholded at P < 0.05 (cluster corrected).

Table 2.

Talairach coordinates, cluster sizes, significance levels, and anatomical regions for the cross-item classification searchlight results

| Region | Talairach x | Talairach y | Talairach z | Cluster size (mm³) | t-value | P-value |
|---|---|---|---|---|---|---|
| Action (vs. 50% chance) | | | | | | |
| Dorsal premotor cortex LH | −25 | −14 | 51 | 48 818 | 7.56 | <0.001 |
| Ventral premotor cortex LH | −49 | −11 | 18 | – | 5.12 | <0.001 |
| Motor cortex LH | −28 | −26 | 54 | – | 13.77 | <0.001 |
| Anterior intraparietal sulcus LH | −31 | −47 | 45 | – | 5.46 | <0.001 |
| Anterior temporal lobe LH | −28 | 1 | −30 | 5488 | 5.26 | <0.001 |
| Lateral occipital-temporal cortex LH | −37 | −77 | −3 | 1864 | 4.69 | <0.001 |
| Putamen LH | −31 | −8 | 6 | 12 687 | 8.88 | <0.001 |
| Superior frontal gyrus RH | 23 | 55 | 6 | 8355 | 7.13 | <0.001 |
| Dorsal premotor cortex RH | 53 | −8 | 46 | 9185 | 6.38 | <0.001 |
| Motor cortex RH | 32 | −32 | 51 | 10 056 | 5.52 | <0.001 |
| Paracentral lobule RH | 2 | −14 | 45 | 4206 | 9.97 | <0.001 |
| Lingual gyrus RH | 8 | −83 | −9 | 3373 | 4.11 | <0.002 |
| Putamen RH | 26 | −11 | 9 | 8287 | 5.84 | <0.001 |
| Cerebellum RH | 11 | −47 | −25 | 10 212 | 9.47 | <0.001 |
| Function (vs. 50% chance) | | | | | | |
| Parahippocampal gyrus LH | −16 | −38 | −12 | 2283 | 4.90 | <0.001 |
| Anterior temporal lobe LH | −40 | −14 | −27 | 1639 | 4.74 | <0.001 |
| Dorsal premotor cortex RH | 23 | 4 | 51 | 1861 | 6.07 | <0.001 |
| Retrosplenial cortex RH | 5 | −44 | 21 | 2375 | 4.12 | <0.003 |
| Hippocampus RH | 29 | −20 | −15 | 1558 | 6.36 | <0.001 |
| Direct comparison: action > function | | | | | | |
| Ventral premotor cortex LH | −52 | −8 | 21 | 42 093 | 6.01 | <0.001 |
| Motor cortex LH | −25 | −29 | 48 | – | 10.05 | <0.001 |
| Anterior intraparietal sulcus LH | −37 | −44 | 51 | – | 6.15 | <0.001 |
| Paracentral lobule LH | −7 | −11 | 54 | – | 8.39 | <0.001 |
| Cuneus LH | −10 | −83 | 3 | 5851 | 5.26 | <0.001 |
| Putamen LH | −25 | −5 | 6 | 3913 | 7.25 | <0.001 |
| Inferior frontal gyrus RH | 41 | 28 | 6 | 11 695 | 8.66 | <0.001 |
| Motor cortex RH | 23 | −29 | 42 | 5012 | 5.75 | <0.001 |
| Paracentral lobule RH | 2 | −20 | 48 | – | 8.19 | <0.001 |
| Lingual gyrus RH | 11 | −95 | −3 | 10 517 | 10.30 | <0.001 |
| Putamen RH | 23 | 4 | −3 | 7553 | 4.69 | <0.001 |
| Cerebellum RH | 8 | −53 | −12 | 6169 | 6.56 | <0.001 |
| Direct comparison: function > action | | | | | | |
| Parahippocampal gyrus LH | −19 | −38 | −12 | 945 | 4.71 | <0.001 |

Note: All results are thresholded at P < 0.05 (cluster corrected). Regions for which the cluster size (mm³) is indicated as ‘–’ were contiguous with the region directly above and are therefore included in the volume reported above. LH, left hemisphere; RH, right hemisphere.

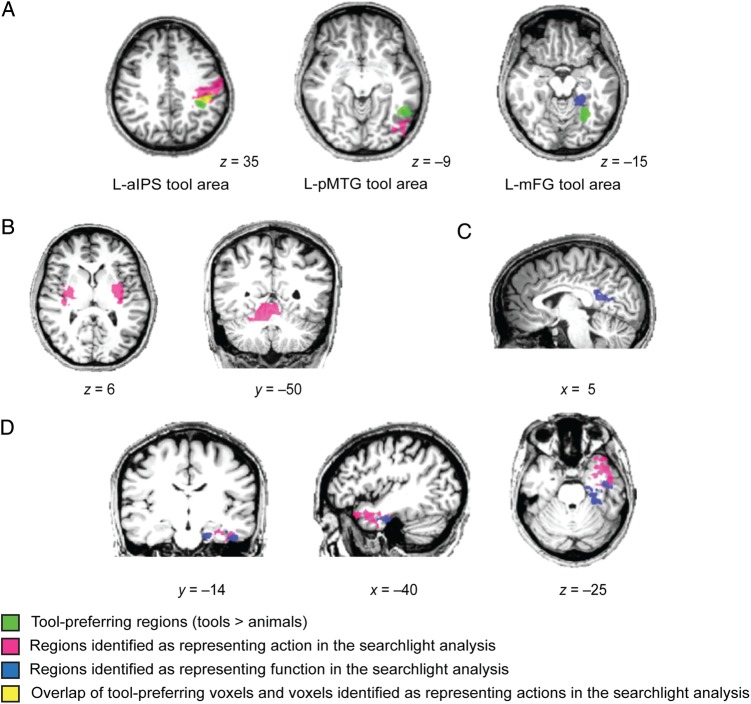

Figure 4.

Direct comparison of tool-preferring regions and searchlight analysis. (A) The searchlight results for the decoding of action (pink) and function (blue) near the tool-preferring regions. Tool-preferring regions (green) are shown based on group results. The overlap of tool-preferring voxels and voxels identified as representing actions in the searchlight analysis is shown in yellow. (B) The searchlight results for the decoding of action within the bilateral putamen and the right cerebellum. (C) The searchlight results for the decoding of function within right retrosplenial cortex. (D) The searchlight results for the decoding of action and function within the anterior temporal lobe. All results are overlaid on a representative brain (radiological convention). Numbers at the bottom of each slice denote coordinates in Talairach space. All results are thresholded at P < 0.05 (cluster corrected).

Significant decoding of function was observed in bilateral medial temporal cortex, including the left parahippocampal gyrus, left perirhinal cortex within the anterior temporal lobe, and the right hippocampus (Fig. 3 middle and Table 2). The right dorsal premotor cortex (PMd) and right retrosplenial cortex (Fig. 4C) also decoded function.

A direct comparison between the decoding of action and function was also performed (see Fig. 3 right and Table 2). Higher classification accuracy for action than function was observed in left frontoparietal brain areas, including premotor cortex, motor cortex and the anterior intraparietal sulcus. Bilateral occipital regions and the right cerebellum also showed higher classification accuracy for action than function. The reverse comparison revealed higher accuracies for the decoding of function only in the left parahippocampal gyrus.

Discussion

In the current study, we used multivariate analyses of fMRI data to test whether tools with similar actions or functions produce similar activity patterns during the execution of pantomimes. The principal findings are that (i) frontoparietal motor and peri-motor regions represent action similarity in terms of the actual motor patterns involved in the action, (ii) action pantomimes can also be successfully decoded in the left medial fusiform gyrus, and (iii) medial temporal areas in the left hemisphere represent abstract knowledge of the goal or purpose of object-directed actions.

It is interesting, but perhaps unsurprising, that regions in and around motor cortex contain neural codes that represent the similarity of object pantomimes. In other words, pantomiming the use of a pair of scissors elicits a pattern of neural responses in motor and peri-motor regions that is more similar to the pattern elicited when pantomiming the use of pliers than to the pattern elicited when pantomiming the use of a knife. The searchlight analysis confirmed the finding of high classification accuracy for actions in left frontoparietal cortex, notably including the left inferior parietal lobule in the vicinity of the left anterior intraparietal sulcus (Fig. 3 left and Fig. 4A left). It is well known that the anterior intraparietal sulcus is involved in visually guided grasping, and specifically in hand shaping for object apprehension (Binkofski et al. 1999; Culham et al. 2003; Frey et al. 2005; Tunik et al. 2005; Cavina-Pratesi et al. 2010; Gallivan, McLean, Smith, et al. 2011; Gallivan, McLean, Valyear, et al. 2011). Our results indicate that this region distinguishes action pantomimes over and above the objects themselves. An important precedent for this observation is the study of Yee et al. (2010), who observed adaptation of BOLD activity in frontoparietal areas between objects that were similar in manner of manipulation and function (e.g., pen–pencil).

Action Decoding in the Left Medial Fusiform Gyrus

Of particular relevance to theories about the causes of category-specificity in the ventral stream is the observation that the similarity of object pantomimes was also represented in the left medial fusiform gyrus, a region independently defined as showing differential BOLD contrast for manipulable objects (see also Chao et al. 1999; Noppeney et al. 2006; Mahon et al. 2007). Although this effect did not survive cluster correction in the whole-brain searchlight analysis, its presence in the a priori defined ROI analysis merits consideration. One explanation for the specificity for manipulable objects in the medial fusiform gyrus is that it is driven by innate connectivity between that region of the visual system and the motor system (Mahon et al. 2007, 2009; Mahon and Caramazza 2009, 2011). This general framework could explain the above-chance discrimination of objects on the basis of their action properties in terms of (i) privileged connectivity between motor and peri-motor regions and the left medial fusiform gyrus, and (ii) spreading activation from the motor system to the visual system during the execution of object pantomimes. Consistent with this possibility, neural recordings from human patients performing cued hand movements indicate that the human medial temporal lobe contains neurons that represent hand position and kinematic properties of hand actions during a motor task (Tankus and Fried 2012).

However, what drives pattern similarity for object-associated pantomimes may differ across brain regions. In motor and peri-motor regions, pattern similarity is likely driven by the actual physical similarity of the actions (whether the grasp component or the complex pantomime itself). At the same time, participants were presumably thinking about the object whose pantomime they were executing (the object name served as the cue), so properties such as visual shape may drive neural similarity in posterior temporal lobe regions. Chao et al. (1999) proposed that tool-preferring areas in the ventral stream are involved in storing information about object form. More recently, Cant and Goodale (2007) showed that regions in and around the medial fusiform gyrus on the ventral surface of posterior temporal-occipital cortex represent object texture more than shape, whereas regions in lateral occipital cortex represent object shape. Furthermore, objects in our stimulus set that are manipulated in similar ways are also more structurally similar. Thus, actions may have been decoded successfully in the left medial fusiform tool-preferring ROI because of similarities in shape rather than similarities in action per se. One argument against this interpretation is that the same objects could not be discriminated on the basis of action information (or, for that matter, visual information) in the right medial fusiform tool-preferring ROI. Previous studies suggest that shape information is represented bilaterally in the ventral stream (Haushofer et al. 2008; Op de Beeck et al. 2008; Drucker and Aguirre 2009; Peelen and Caramazza 2012). If the effect observed in the left medial fusiform gyrus were merely an effect of visual similarity, there would be no obvious reason for it to be confined to the left hemisphere.

Praxis knowledge and abilities are known to be left-lateralized in parietal cortex, and there is privileged functional connectivity between the left medial fusiform gyrus and left parietal action representations (e.g., Almeida et al. 2013; Mahon et al. 2007, 2013). Thus, consistent with prior arguments (see Mahon et al. 2007), we would argue that although representations in the left medial fusiform gyrus concern visual and surface properties of objects, the pattern of effects observed in that region may be driven by inputs from motor-relevant regions of parietal cortex. Nevertheless, future work will be required to adjudicate decisively between these accounts, that is, whether action decoding in the left medial fusiform gyrus is driven by information spreading from motor-relevant structures or by similarity in visual form.

Action Decoding in Lateral Occipital Cortex

The searchlight analysis revealed significant decoding of actions in lateral occipital-temporal cortex, posterior to the middle temporal gyrus tool area (see Fig. 4A middle). This area is very close to, or overlaps with, the extrastriate body area (EBA), which is defined by its selectivity for images of body parts but is also active during hand movements (Astafiev et al. 2004; David et al. 2007; Peelen and Downing 2007; Bracci et al. 2010). Gallivan et al. (2013) found that activity patterns in the EBA could be used to decode upcoming hand movements, but not upcoming movements performed with a tool, and suggested that this area does not incorporate tools into the body schema. Thus, because participants were not manipulating an actual object during the scan, actions may have been decoded with high accuracy in extrastriate regions similar to those activated during hand movements.

The flip side of this observation is that neither the ROI nor the searchlight analysis showed that actions could be classified in the left posterior middle temporal gyrus. By contrast, Gallivan et al. (2013) reported that the posterior middle temporal gyrus tool area differentiated upcoming object-directed actions. Combining our results with those of past studies therefore suggests the following empirical generalization: manual actions can be decoded in and around the extrastriate body area when the action does not involve a tool in hand, whereas actions can be decoded in the left posterior middle temporal gyrus only when the action does involve a tool in hand. Future work using complex actions, as in our study, but with actual objects, either in hand or not, could test this generalization decisively.

The Neural Representation of Object Function

The ROI analyses showed that object function could not be decoded from multivoxel patterns in any of the a priori regions defined by tool preferences in BOLD amplitude, nor in the a priori defined somatomotor representation of the right hand. However, the searchlight analysis revealed better decoding for function than for action in the left medial temporal lobe, mainly the parahippocampal gyrus (see Fig. 3 right). Although the medial fusiform gyrus tool area is close to the parahippocampal gyrus, the two areas overlap little when the respective maps are overlaid (Fig. 4A right). Previous work found that the degree of neural adaptation in the same (or a similar) region correlated with the functional similarity between word pairs that participants were reading (Yee et al. 2010). An important contribution of the current findings is that they bridge the literature on tool-specificity in the brain (e.g., Chao and Martin 2000; Noppeney et al. 2006; Mahon et al. 2007; for review see Martin 2007, 2009) and prior work that has sought to dissociate action- and function-based tool knowledge. Importantly in this regard, we trained classifiers in a novel cross-item manner, training on one pair of objects and testing on a distinct pair, which allowed us to examine similarity in action and similarity in function between objects, over and above the objects themselves.
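The cross-item logic can be made concrete with a minimal sketch. Patterns from one pair of objects (one object per class, where a class is a shared action or a shared function) train the classifier, and patterns from a distinct pair test it, so above-chance accuracy cannot be explained by information specific to the training objects. A correlation-based nearest-centroid classifier stands in here for whatever classifier the study actually used; the function names, class labels, and voxel patterns are all hypothetical.

```python
from statistics import mean


def pearson(u, v):
    """Pearson correlation between two equal-length voxel patterns."""
    mu, mv = mean(u), mean(v)
    du = [a - mu for a in u]
    dv = [b - mv for b in v]
    den = (sum(a * a for a in du) * sum(b * b for b in dv)) ** 0.5
    if den == 0:
        return 0.0  # a flat pattern carries no similarity information
    return sum(a * b for a, b in zip(du, dv)) / den


def centroid(patterns):
    """Voxel-wise mean of a list of patterns."""
    return [mean(values) for values in zip(*patterns)]


def cross_item_accuracy(train, test):
    """Train on one pair of objects and test on a distinct pair.

    Sketch only: nearest-centroid classification is an assumed stand-in.
    train, test: dict mapping a class label (e.g. an action or a function)
    to the patterns of the single object representing that class; the
    objects in `test` must differ from those in `train`.
    """
    centroids = {label: centroid(patterns) for label, patterns in train.items()}
    correct = total = 0
    for true_label, patterns in test.items():
        for pattern in patterns:
            # Predict the class whose training centroid correlates best.
            predicted = max(centroids, key=lambda lab: pearson(pattern, centroids[lab]))
            correct += int(predicted == true_label)
            total += 1
    return correct / total


# Hypothetical 4-voxel patterns: objects A1 and B1 train, A2 and B2 test.
train_pair = {"A": [[1.0, 0.0, 1.0, 0.0], [0.9, 0.1, 1.1, 0.0]],
              "B": [[0.0, 1.0, 0.0, 1.0], [0.1, 0.9, 0.0, 1.1]]}
test_pair = {"A": [[1.2, 0.1, 0.8, 0.0]],
             "B": [[0.0, 1.1, 0.2, 0.9]]}
accuracy = cross_item_accuracy(train_pair, test_pair)
```

Averaging accuracy over both directions (training on the first pair and testing on the second, then the reverse) would be one natural way to use both pairs; that, too, is an assumption of the sketch rather than a description of the study's procedure.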

A key finding that we report is that the left medial temporal lobe contains a population of voxels that represents object function more than object-directed action. It has been proposed (Bar and Aminoff 2003; Aminoff et al. 2007) that the parahippocampal gyrus plays a central role in processing contextual associations. In the same vein, right retrosplenial cortex in medial parietal cortex showed above-chance classification accuracy for object function (Fig. 4C). This agrees with the work of Canessa et al. (2008), who also observed that retrosplenial cortex was engaged more strongly when making judgments about function than about action. Bar and Aminoff (2003) argued that retrosplenial cortex is involved in analyzing nonspatial contextual associations. Our findings suggest that the notion of “contextual association” may need to be expanded to include the purpose for which a tool is designed. Another finding that motivates further investigation is that object function could be decoded in right dorsal premotor cortex. This raises the question of whether that region of frontal cortex has privileged connectivity with temporal lobe regions that also decode object function.

The Role of the Anterior Temporal Lobe

The searchlight analysis revealed that the left anterior temporal lobe carries both action and function information associated with objects (Fig. 4D). Similarly, Peelen and Caramazza (2012) showed that objects sharing a conceptual feature (action or location) elicited highly similar patterns of fMRI responses within the anterior temporal lobes. More recently, Clarke and Tyler (2014) found that activation patterns within the anterior medial temporal lobe reflected the semantic similarity between individual objects. Together with ours, these MVPA findings support a role for the anterior temporal lobe in conceptual object representation. Neuropsychological evidence also supports the central role of the anterior temporal lobe in semantic memory: patients with neurodegenerative diseases affecting the anterior temporal lobe can present with impaired conceptual knowledge of objects, while basic shape perception and other cognitive abilities are spared (Warrington 1975; Snowden et al. 1989; Hodges et al. 1992; Patterson et al. 2007).

Notably, action and function representations overlapped little within the anterior temporal lobe in our study. Regions identified with the searchlight analysis as decoding actions included superior and lateral anterior temporal regions, whereas regions implicated in decoding object function involved inferior and medial anterior temporal regions (mainly perirhinal cortex; Fig. 4D). This result suggests some degree of dissociation between the representations of action and function information within the anterior temporal lobe.

Conclusion

We used multivoxel pattern analysis to dissociate representations of object-directed actions from representations of object function during the execution of tool-use pantomimes. Our findings extend previous observations that frontoparietal and medial temporal areas represent action and function knowledge, respectively. Importantly, our findings converge with previous studies in indicating a clear neural substrate for knowledge of object function. These findings generate the expectation that the broad network of regions representing object function and object-directed action should exhibit task-dependent functional coupling, and that disruption of that network may underlie the varied patterns of selective impairment of object knowledge observed after focal brain lesions.

Funding

This work was supported in part by NINDS grant NS076176 to B.Z.M., by a University of Rochester Center for Visual Science predoctoral training fellowship (National Institutes of Health training grant 5T32EY007125-24) to F.E.G., and by National Eye Institute core grant P30 EY001319 to the Center for Visual Science.

Notes

Conflict of Interest: None declared.

References

- Almeida J, Fintzi AR, Mahon BZ. 2013. Tool manipulation knowledge is retrieved by way of the ventral visual object processing pathway. Cortex. 49:2334–2344.

- Aminoff E, Gronau N, Bar M. 2007. The parahippocampal cortex mediates spatial and nonspatial associations. Cereb Cortex. 17:1493–1503.

- Astafiev SV, Stanley CM, Shulman GL, Corbetta M. 2004. Extrastriate body area in human occipital cortex responds to the performance of motor actions. Nat Neurosci. 7:542–548.

- Bar M, Aminoff E. 2003. Cortical analysis of visual context. Neuron. 38:347–358.

- Binkofski F, Buccino G, Posse S, Seitz RJ, Rizzolatti G, Freund HJ. 1999. A fronto-parietal circuit for object manipulation in man: evidence from an fMRI-study. Eur J Neurosci. 11:3276–3286.

- Binkofski F, Buxbaum LJ. 2013. Two action systems in the human brain. Brain Lang. 127:222–229.

- Boronat CB, Buxbaum LJ, Coslett HB, Tang K, Saffran EM, Kimberg DY, Detre JA. 2005. Distinctions between manipulation and function knowledge of objects: Evidence from functional magnetic resonance imaging. Brain Res Cogn Brain Res. 23:361–373.

- Bracci S, Ietswaart M, Peelen MV, Cavina-Pratesi C. 2010. Dissociable neural responses to hands and non-hand body parts in human left extrastriate visual cortex. J Neurophysiol. 103:3389.

- Brainard DH. 1997. The psychophysics toolbox. Spat Vis. 10:433–436.

- Buxbaum LJ, Saffran EM. 2002. Knowledge of object manipulation and object function: dissociations in apraxic and nonapraxic subjects. Brain Lang. 82:179–199.

- Buxbaum LJ, Veramonti T, Schwartz MF. 2000. Function and manipulation tool knowledge in apraxia: knowing ‘what for’ but not ‘how’. Neurocase. 6:83–97.

- Canessa N, Borgo F, Cappa SF, Perani D, Falini A, Buccino G, Tettamanti M, Shallice T. 2008. The different neural correlates of action and functional knowledge in semantic memory: an fMRI study. Cereb Cortex. 18:740–751.

- Cant JS, Goodale MA. 2007. Attention to form or surface properties modulates different regions of human occipitotemporal cortex. Cereb Cortex. 17:713–731.

- Cavina-Pratesi C, Monaco S, Fattori P, Galletti C, McAdam TD, Quinlan DJ, Goodale MA, Culham JC. 2010. Functional magnetic resonance imaging reveals the neural substrates of arm transport and grip formation in reach-to-grasp actions in humans. J Neurosci. 30:10306–10323.

- Chao LL, Haxby JV, Martin A. 1999. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nat Neurosci. 2:913–919.

- Chao LL, Martin A. 2000. Representation of manipulable man-made objects in the dorsal stream. Neuroimage. 12:478–484.

- Clarke A, Tyler LK. 2014. Object-specific semantic coding in human perirhinal cortex. J Neurosci. 34:4766–4775.

- Culham JC, Danckert SL, De Souza JF, Gati JS, Menon RS, Goodale MA. 2003. Visually guided grasping produces fMRI activation in dorsal but not ventral stream brain areas. Exp Brain Res. 153:180–189.

- David N, Cohen MX, Newen A, Bewernick BH, Shah NJ, Fink GR, Vogeley K. 2007. The extrastriate cortex distinguishes between the consequences of one's own and others’ behavior. Neuroimage. 36:1004–1014.

- Drucker DM, Aguirre GK. 2009. Different spatial scales of shape similarity representation in lateral and ventral LOC. Cereb Cortex. 19:2269–2280.

- Fintzi AR, Mahon BZ. 2013. A bimodal tuning curve for spatial frequency across left and right human orbital frontal cortex during object recognition. Cereb Cortex. 24:1311–1318.

- Frey SH, Newman-Norlund R, Grafton ST. 2005. A distributed left hemisphere network active during planning of everyday tool use skills. Cereb Cortex. 15:681–695.

- Gallivan JP, McLean DA, Smith FW, Culham JC. 2011. Decoding effector-dependent and effector-independent movement intentions from human parieto-frontal brain activity. J Neurosci. 31:17149–17168.

- Gallivan JP, McLean DA, Valyear KF, Culham JC. 2013. Decoding the neural mechanisms of human tool use. eLife. 2:e00425.

- Gallivan JP, McLean DA, Valyear KF, Pettypiece CE, Culham JC. 2011. Decoding action intentions from preparatory brain activity in human parieto-frontal networks. J Neurosci. 31:9599–9610.

- Garcea FE, Mahon BZ. 2012. What is in a tool concept? Dissociating manipulation knowledge from function knowledge. Mem Cogn. 40:1303–1313.

- Garcea FE, Mahon BZ. 2014. Parcellation of left parietal tool representations by functional connectivity. Neuropsychologia. 60:131–143.

- Haushofer J, Livingstone MS, Kanwisher N. 2008. Multivariate patterns in object-selective cortex dissociate perceptual and physical shape similarity. PLoS Biol. 6:e187.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. 2001. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 293:2425–2430.

- Hodges JR, Patterson K, Oxbury S, Funnell E. 1992. Semantic dementia: progressive fluent aphasia with temporal lobe atrophy. Brain. 115:1783–1806.

- Ishibashi R, Lambon Ralph MA, Saito S, Pobric G. 2011. Different roles of lateral anterior temporal and inferior parietal lobule in coding function and manipulation tool knowledge: Evidence from an rTMS study. Neuropsychologia. 49:1128–1135.

- Kellenbach ML, Brett M, Patterson K. 2003. Actions speak louder than functions: The importance of manipulability and action in tool representation. J Cogn Neurosci. 15:20–46.

- Kriegeskorte N, Goebel R, Bandettini P. 2006. Information-based functional brain mapping. Proc Natl Acad Sci USA. 103:3863–3868.

- Kriegeskorte N, Mur M, Ruff DA, Kiani R, Bodurka J, Esteky H, Tanaka K, Bandettini PA. 2008. Matching categorical object representations in inferior temporal cortex of man and monkey. Neuron. 60:1126–1141.

- Lewis J. 2006. Cortical networks related to human use of tools. Neuroscientist. 12:211–231.

- Mahon BZ, Anzellotti S, Schwarzbach J, Zampini M, Caramazza A. 2009. Category-specific organization in the human brain does not require visual experience. Neuron. 63:397–405.

- Mahon BZ, Caramazza A. 2009. Concepts and categories: a cognitive neuropsychological perspective. Annu Rev Psychol. 60:27–51.

- Mahon BZ, Caramazza A. 2011. What drives the organization of object knowledge in the brain? Trends Cogn Sci. 15:97–103.

- Mahon BZ, Kumar N, Almeida J. 2013. Spatial frequency tuning reveals interactions between the dorsal and ventral visual systems. J Cogn Neurosci. 25:862–871.

- Mahon BZ, Milleville S, Negri GAL, Rumiati RI, Caramazza A, Martin A. 2007. Action-related properties of objects shape object representations in the ventral stream. Neuron. 55:507–520.

- Martin A. 2007. The representation of object concepts in the brain. Annu Rev Psychol. 58:25–45.

- Martin A. 2009. Circuits in mind: The neural foundations for object concepts. In: Gazzaniga M, editor. The Cognitive Neurosciences. 4th ed. Cambridge (MA): MIT Press. p. 1031–1045.

- Martin A, Wiggs CL, Ungerleider LG, Haxby JV. 1996. Neural correlates of category-specific knowledge. Nature. 379:649–653.

- Myung JY, Blumstein SE, Yee E, Sedivy JC, Thompson-Schill SL, Buxbaum LJ. 2010. Impaired access to manipulation features in apraxia: evidence from eyetracking and semantic judgment tasks. Brain Lang. 112:101–112.

- Negri GA, Lunardelli A, Reverberi C, Gigli GL, Rumiati RI. 2007. Degraded semantic knowledge and accurate object use. Cortex. 43:376–388.

- Noppeney U, Price CJ, Penny WD, Friston KJ. 2006. Two distinct neural mechanisms for category-selective responses. Cereb Cortex. 16:437–445.

- Norman KA, Polyn SM, Detre GJ, Haxby JV. 2006. Beyond mind-reading: multi-voxel pattern analysis of fMRI data. Trends Cogn Sci. 10:424–430.

- Op de Beeck HP, Torfs K, Wagemans J. 2008. Perceived shape similarity among unfamiliar objects and the organization of the human object vision pathway. J Neurosci. 28:10111–10123.

- Patterson K, Nestor PJ, Rogers TT. 2007. Where do you know what you know? The representation of semantic knowledge in the human brain. Nat Rev Neurosci. 8:976–987.

- Peelen MV, Caramazza A. 2012. Conceptual object representations in human anterior temporal cortex. J Neurosci. 32:15728–15736.

- Peelen MV, Downing PE. 2007. The neural basis of visual body perception. Nat Rev Neurosci. 8:636–648.

- Pelgrims B, Olivier E, Andres M. 2011. Dissociation between manipulation and conceptual knowledge of object use in supramarginalis gyrus. Hum Brain Mapp. 32:1802–1810.

- Pelli DG. 1997. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spat Vis. 10:437–442.

- Pobric G, Jefferies E, Lambon Ralph MA. 2010. Amodal semantic representations depend on both anterior temporal lobes: Evidence from repetitive transcranial magnetic stimulation. Neuropsychologia. 48:1336–1342.

- Rumiati RI, Weiss PH, Shallice T, Ottoboni G, Noth J, Zilles K, Fink GR. 2004. Neural basis of pantomiming the use of visually presented objects. NeuroImage. 21:1224–1231.

- Schwarzbach J. 2011. A simple framework (ASF) for behavioral and neuroimaging experiments based on the psychophysics toolbox for MATLAB. Behav Res Methods. 43:1194–1201.

- Sirigu A, Duhamel JR, Poncet M. 1991. The role of sensorimotor experience in object recognition. Brain. 114:2555–2573.

- Snowden JS, Goulding PJ, Neary D. 1989. Semantic dementia: A form of circumscribed cerebral atrophy. Behav Neurol. 2:167–182.

- Spiridon M, Kanwisher N. 2002. How distributed is visual category information in human occipital-temporal cortex? An fMRI study. Neuron. 35:1157–1165.

- Talairach J, Tournoux P. 1988. Co-planar stereotaxic atlas of the human brain. New York: Thieme Medical Publishing.

- Tankus A, Fried I. 2012. Visuomotor coordination and motor representation by human temporal lobe neurons. J Cogn Neurosci. 24:600–610.

- Tunik E, Frey SH, Grafton ST. 2005. Virtual lesions of the anterior intraparietal area disrupt goal-dependent on-line adjustments of grasp. Nat Neurosci. 8:505–511.

- Warrington EK. 1975. The selective impairment of semantic memory. Q J Exp Psychol. 27:635–657.

- Yee E, Drucker DM, Thompson-Schill SL. 2010. fMRI-adaptation evidence of overlapping neural representations for objects related in function or manipulation. NeuroImage. 50:753–763.