Despite the ethical imperative to publish clinical trials when human subjects are involved, such data frequently remain unpublished. This study evaluated 2009–2011 ASCO annual meeting abstracts to ascertain factors associated with eventual publication of clinical trial results. It is the largest reported study, to date, examining why oncology trials are not published.

Keywords: Oncology clinical trials, ClinicalTrials.gov, Publication rates

Abstract

Background.

Despite the ethical imperative to publish clinical trials when human subjects are involved, such data frequently remain unpublished. The objectives were to tabulate the rate and ascertain factors associated with eventual publication of clinical trial results reported as abstracts in the Proceedings of the American Society of Clinical Oncology (ASCO).

Materials and Methods.

Abstracts describing clinical trials for patients with breast, lung, colorectal, ovarian, and prostate cancer from 2009 to 2011 were identified by using a comprehensive online database (http://meetinglibrary.asco.org/abstracts). Included abstracts reported the results of a treatment or intervention assessed in a discrete, prospective clinical trial. Publication status at 4–6 years was determined by using a standardized search of PubMed. Primary outcomes were the rates of publication for abstracts of randomized and nonrandomized clinical trials. Secondary outcomes included factors influencing the publication of results.

Results.

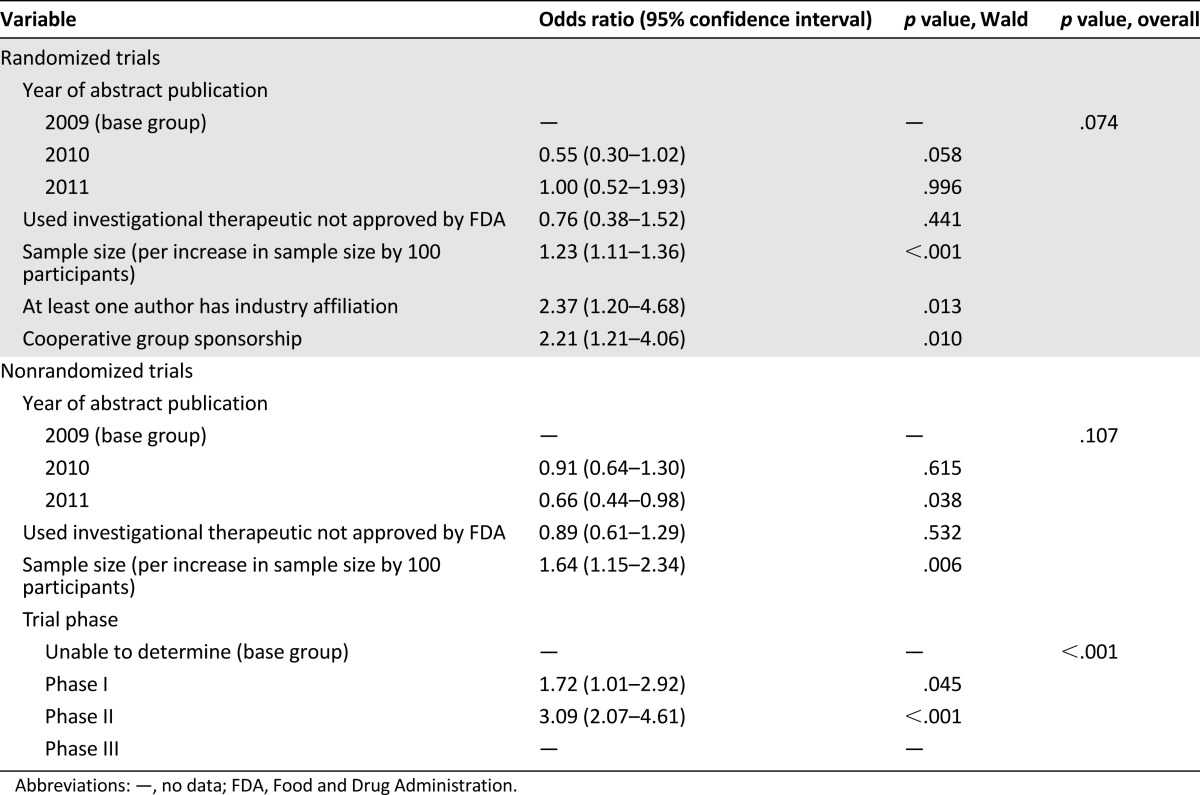

A total of 1,075 abstracts describing 378 randomized and 697 nonrandomized clinical trials were evaluated. Across all years, 75% of randomized and 54% of nonrandomized trials were published, with an overall publication rate of 61%. Sample size was a statistically significant predictor of publication for both randomized and nonrandomized trials (odds ratio [OR] per increase of 100 participants = 1.23 [1.11–1.36], p < .001; and 1.64 [1.15–2.34], p = .006, respectively). Among randomized studies, the presence of an industry coauthor and the involvement of a cooperative group were each associated with a higher likelihood of publication (OR 2.37, p = .013; and 2.21, p = .01, respectively). Among nonrandomized studies, phase II trials were more likely to be published than phase I (p < .001). Use of an experimental agent was not a predictor of publication in randomized (OR 0.76 [0.38–1.52]; p = .441) or nonrandomized trials (OR 0.89 [0.61–1.29]; p = .532).

Conclusion.

This is the largest reported study examining why oncology trials are not published. The data show that 4–6 years after appearing as abstracts, 39% of oncology clinical trials remain unpublished. Larger sample size and advanced trial phase were associated with eventual publication; among randomized trials, an industry-affiliated author or cooperative group involvement was associated with a higher likelihood of publication. Unfortunately, we found that, despite widespread recognition of the problem and the creation of central data repositories, timely publication of oncology clinical trial results remains unsatisfactory.

Implications for Practice:

The Declaration of Helsinki: Ethical Principles for Medical Research Involving Human Subjects notes the ethical obligation to report clinical trial data, whether positive or negative. This obligation is listed alongside requirements for risk minimization, access, confidentiality, and informed consent, all bedrocks of the clinical trial system; yet clinical trials are often not published, particularly if their results are negative or the trials were difficult to complete. This study found that only 61% of clinical trials reported in American Society of Clinical Oncology (ASCO) Annual Meeting abstracts from 2009–2011 were published 4–6 years later: 75% of randomized trials and 54% of nonrandomized trials. Clinicians need to insist that every study in which they participate is published.

Introduction

A large percentage of clinical trial results remain unpublished in the biomedical literature long after their data reach maturity [1–6]. In few areas of medicine is the timely dissemination of clinical trial results more crucial than in oncology, because this knowledge is used to optimize dynamic treatment decisions, often involving the ongoing, off-label use of drugs already approved [7]. Unfortunately, prior investigations have found that as many as half of completed oncology clinical trials remain unpublished [8–11].

In addition to quantifying the magnitude of the problem, prior studies have sought to identify factors influencing the publication of clinical trial results [12]. Publication bias, the preferential reporting of trials with positive outcomes, is well documented [12–14]. Larger studies and statistically significant results make publication more likely [6, 15, 16], whereas trial discontinuation and industry funding have been reported as detrimental to publication [1, 17, 18].

The implementation of ClinicalTrials.gov for public reporting of clinical trials has improved the dissemination of results. However, a large fraction of clinical trials are still not published [1] or posted on ClinicalTrials.gov, despite legal requirements to do so [19]. Lack of exposure of valuable, but often negative, clinical trial data can affect patient care, undermine the validity of systematic reviews and meta-analyses [13], and lead to study duplication. Most concerning, however, is that failure to disseminate results violates the faith patients have that those who conduct a study will report its outcomes.

We conducted a retrospective review to ascertain the rates of and factors associated with publication of clinical trial results initially reported as abstracts in the Proceedings of the American Society of Clinical Oncology (ASCO) 4–6 years earlier. The last similar analysis of this problem evaluated ASCO abstracts published between 1989 and 2003 and found that 26% of large randomized trials remained unpublished in peer-reviewed journals. Now, a decade later, with increased awareness of this problem, we revisit this topic.

Methods

Study Selection

ASCO Annual Meeting abstracts from 2009, 2010, and 2011 were queried by using a comprehensive online database (http://meetinglibrary.asco.org/abstracts). The following cancer types were included: breast, lung, colorectal, ovarian, and prostate. Only abstracts under the heading “ASCO Annual Meeting” were queried, and all abstracts listed under each cancer type were searched. Abstracts included for data extraction were those reporting results of an oncology treatment or intervention assessed in a discrete, prospective clinical trial; accordingly, we included abstracts reporting preliminary, safety, or final data. We excluded studies of biomarkers or assays, subgroup analyses, and quality-of-life analyses, as well as meta-analyses and studies that pooled data from multiple sources.

Data Extraction

We extracted the following characteristics for each trial: year, title, first author name, disease, therapy given, whether the therapy was approved by the Food and Drug Administration (FDA), sample size, presence of randomization, phase, region of the world, and whether statistical significance was reported for the primary outcome or for any outcome.

P.R.M. extracted all data from abstracts, with T.F. and S.E.B. adjudicating equivocal determinations. All information was entered into our study database manually. If any data were unavailable on an abstract that otherwise met study criteria, that information was scored as indeterminate. When multiple phases of a trial (e.g., phase I/II) were reported in an abstract, the highest phase was entered into our database. To assign a region of the world, we extracted the continent of each author’s home institution. If multiple regions of the world were involved, we designated that abstract as being from “multiple” sites; for an abstract to be classified as “European,” for example, all authors had to report home institutions in Europe. FDA approval status was determined by querying http://www.fda.gov/Drugs/. Drugs were deemed experimental if they lacked FDA approval for any indication.
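The region, phase, and experimental-agent rules above are mechanical enough to state as code. The sketch below is an illustration only: the authors entered data manually, and the function names, the list-of-continents input, and the "indeterminate" label for an abstract with no affiliation data are assumptions made for this example.

```python
from typing import Iterable, Optional, Sequence

ROMAN_PHASES = {"I": 1, "II": 2, "III": 3, "IV": 4}

def assign_region(author_continents: Iterable[str]) -> str:
    """Continent shared by every author's home institution, else "multiple"."""
    continents = set(author_continents)
    if not continents:
        return "indeterminate"          # assumption: no affiliation data on the abstract
    if len(continents) == 1:
        return next(iter(continents))   # e.g. "Europe" only if ALL authors are in Europe
    return "multiple"

def highest_phase(label: Optional[str]) -> Optional[int]:
    """Record the highest phase of a combined label, e.g. "I/II" -> 2."""
    if not label:
        return None                     # phase not stated: indeterminate
    parts = [p.strip().upper() for p in label.split("/")]
    phases = [ROMAN_PHASES[p] for p in parts if p in ROMAN_PHASES]
    return max(phases) if phases else None

def is_experimental(fda_approved_indications: Sequence[str]) -> bool:
    """A drug is experimental if it lacks FDA approval for ANY indication."""
    return len(fda_approved_indications) == 0
```

Requiring a single shared continent is what makes “European” strict: one North American coauthor moves the abstract to “multiple.”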

By scrutinizing the titles and author affiliations of abstracts reporting randomized clinical trials, we assessed cooperative group and industry involvement. Cooperative group studies often listed a known cooperative group in the title of the abstract; otherwise, author affiliations were scrutinized for cooperative group names. If either feature was found, the abstract was labeled a cooperative group study. Cooperative groups of any size or geographic location were accepted. Assignment of “industry coauthor” required at least one author to list a pharmaceutical company affiliation.
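The two labeling rules for randomized trials can likewise be sketched as simple text matching. The group acronyms and company names below are illustrative examples, not the lists the authors used, and case-insensitive substring matching is an assumption: the actual assignments were made by manual review of titles and affiliations.

```python
from typing import Iterable, Sequence

# Illustrative acronyms of real cooperative groups; NOT the authors' list.
COOPERATIVE_GROUPS = ("ECOG", "SWOG", "NSABP", "EORTC", "CALGB", "RTOG")
# Illustrative company names; NOT the authors' list.
EXAMPLE_COMPANIES = ("Novartis", "Genentech", "AstraZeneca", "Pfizer")

def _mentions_any(texts: Iterable[str], names: Sequence[str]) -> bool:
    """Case-insensitive substring match of any name in any text."""
    lowered = [t.lower() for t in texts]
    return any(name.lower() in text for name in names for text in lowered)

def is_cooperative_group_study(title: str, affiliations: Sequence[str],
                               groups: Sequence[str] = COOPERATIVE_GROUPS) -> bool:
    # A group named in the title OR in any author affiliation is sufficient.
    return _mentions_any([title, *affiliations], groups)

def has_industry_coauthor(affiliations: Sequence[str],
                          companies: Sequence[str] = EXAMPLE_COMPANIES) -> bool:
    # At least one author must list a pharmaceutical company affiliation.
    return _mentions_any(affiliations, companies)
```

Because the industry flag only fires when a company appears in an author affiliation, it misses trials that industry funded without contributing an author, which is why the Discussion treats it as specific but not sensitive.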

Publication Status

A computer-based query of PubMed assessed publication status for each abstract. Each search used the therapy name and, at a minimum, the last names of the first two authors. If this was unsuccessful, other key words from the abstract and authors were entered. All PubMed results were then analyzed for similarity to the ASCO abstract. When necessary, proper study names, numbers of patients under study, and dates of enrollment were used to confirm identity. Two searches were performed, with the first in August 2014 and the second in March 2015.
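The search strategy can be expressed as a PubMed query string. The sketch below assumes the standard PubMed field tags `[tiab]` (title/abstract) and `[au]` (author) and builds a request URL for the NCBI E-utilities `esearch` endpoint. The manuscript does not state the exact query syntax the authors used, and the drug and author names in the usage lines are placeholders. Confirming that a hit matches the ASCO abstract (study name, number of patients, enrollment dates) remains a manual step.

```python
from typing import Sequence
from urllib.parse import urlencode

# Public NCBI E-utilities search endpoint.
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"

def pubmed_query(therapy: str, author_last_names: Sequence[str],
                 extra_terms: Sequence[str] = ()) -> str:
    """Therapy name AND (at least) the first two authors' last names,
    optionally combined with extra keywords taken from the abstract."""
    if len(author_last_names) < 2:
        raise ValueError("need at least the first two authors' last names")
    clauses = [f'"{therapy}"[tiab]']
    clauses += [f"{name}[au]" for name in author_last_names]
    clauses += [f'"{term}"[tiab]' for term in extra_terms]
    return " AND ".join(clauses)

def esearch_request_url(query: str, retmax: int = 20) -> str:
    """URL for an esearch request; fetching it returns matching PubMed IDs."""
    return ESEARCH_URL + "?" + urlencode({"db": "pubmed", "term": query, "retmax": retmax})

# Placeholder drug and author names, for illustration only.
query = pubmed_query("erlotinib", ["Smith", "Jones"])
url = esearch_request_url(query)
```

Building the query and the URL separately keeps the logic testable offline; the fallback search with other keywords corresponds to passing `extra_terms`.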

Determination of Statistical Significance

In abstracts reporting randomized trials, we determined whether statistical significance was reported for the primary endpoint or for any endpoint. Three attributes were accepted as evidence of statistical significance: a p value of <.05; nonoverlapping confidence intervals if a p value was not provided; or use of the term “statistical significance” or a variation thereof. To be classified as having statistical significance in its primary endpoint, an abstract had to clearly identify the primary endpoint and report its statistical analysis. In our database, this was recorded as “yes,” “no,” or “unable to determine.” When multiple primary endpoints were reported, statistical significance in any one of them was sufficient to record “yes.” Abstracts that either did not report a primary endpoint or reported a primary endpoint but did not list results for that endpoint were labeled “unable to determine.” For example, if overall survival percentages were reported for two drugs without p values or confidence intervals, the study was recorded as “unable to determine.” If a study’s final results were not mature at the time of abstract publication and were described as such, the study was also recorded as “unable to determine.”
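The classification rules for the primary endpoint amount to a small decision procedure, sketched below. The record layout is hypothetical, and one case the text leaves open is handled by assumption: when some primary endpoints are nonsignificant and the others cannot be assessed, the abstract stays “unable to determine.”

```python
from dataclasses import dataclass
from typing import List, Optional

YES, NO, UNDETERMINED = "yes", "no", "unable to determine"

@dataclass
class PrimaryEndpoint:
    """Hypothetical record of what an abstract reports for one primary endpoint."""
    results_reported: bool = True                 # False: endpoint named, no results listed
    mature: bool = True                           # False: authors flag data as immature
    p_value: Optional[float] = None
    cis_nonoverlapping: Optional[bool] = None     # consulted only when no p value is given
    states_significance: Optional[bool] = None    # "statistically significant" wording, if any

def endpoint_verdict(ep: PrimaryEndpoint) -> str:
    if not ep.results_reported or not ep.mature:
        return UNDETERMINED
    evidence = []
    if ep.p_value is not None:
        evidence.append(ep.p_value < 0.05)
    elif ep.cis_nonoverlapping is not None:
        evidence.append(ep.cis_nonoverlapping)
    if ep.states_significance is not None:
        evidence.append(ep.states_significance)
    if not evidence:
        return UNDETERMINED    # e.g. survival percentages with no p value or CI
    return YES if any(evidence) else NO

def primary_outcome_significance(endpoints: List[PrimaryEndpoint]) -> str:
    if not endpoints:          # no primary endpoint clearly identified
        return UNDETERMINED
    verdicts = [endpoint_verdict(ep) for ep in endpoints]
    if YES in verdicts:        # significance in any primary endpoint suffices
        return YES
    if all(v == NO for v in verdicts):
        return NO
    return UNDETERMINED        # assumption for mixed "no"/undetermined endpoints
```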

Statistical Analysis

Statistical analysis was performed using Stata (version 12.1; StataCorp, College Station, TX, http://www.stata.com/). Descriptive statistics are reported. Sample size, a continuous variable, was categorized into quartiles determined separately for randomized and nonrandomized trials. The main outcome of this study is publication rate. To identify potential factors associated with higher publication rates for randomized and nonrandomized trials, univariable comparisons were performed by using the chi-square test. Multivariable logistic regression models were constructed separately for randomized and nonrandomized studies by using the following covariates: (a) for randomized trials: sample size, a coauthor with pharmaceutical company affiliation, cooperative group participation, use of a non-FDA-approved therapeutic, and year of publication; (b) for nonrandomized studies: use of a non-FDA-approved therapeutic, sample size, trial phase, and year of publication.
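Written out, the two models plausibly take the form below. This is a sketch inferred from the covariate lists and from the per-100-participant odds ratios reported in the Results: entering sample size \(n_i\) linearly in units of 100 participants and treating year as a categorical term (\(\gamma\), \(\gamma'\)) are assumptions, since the exact coding is not stated. For nonrandomized trials, trials of unknown phase serve as the reference category, as in the Results.

```latex
% Randomized trials
\operatorname{logit}\Pr(\text{published}_i)
  = \beta_0 + \beta_1\,\frac{n_i}{100} + \beta_2\,\text{industry}_i
  + \beta_3\,\text{coop}_i + \beta_4\,\text{experimental}_i + \gamma_{\text{year}(i)}

% Nonrandomized trials (reference: unknown phase)
\operatorname{logit}\Pr(\text{published}_i)
  = \alpha_0 + \alpha_1\,\frac{n_i}{100} + \alpha_2\,\text{experimental}_i
  + \delta_{\text{I}}\,\mathbf{1}[\text{phase I}]_i
  + \delta_{\text{II}}\,\mathbf{1}[\text{phase II}]_i + \gamma'_{\text{year}(i)}

% Each reported odds ratio is the exponentiated coefficient
\text{OR}_k = e^{\beta_k}
```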

Results

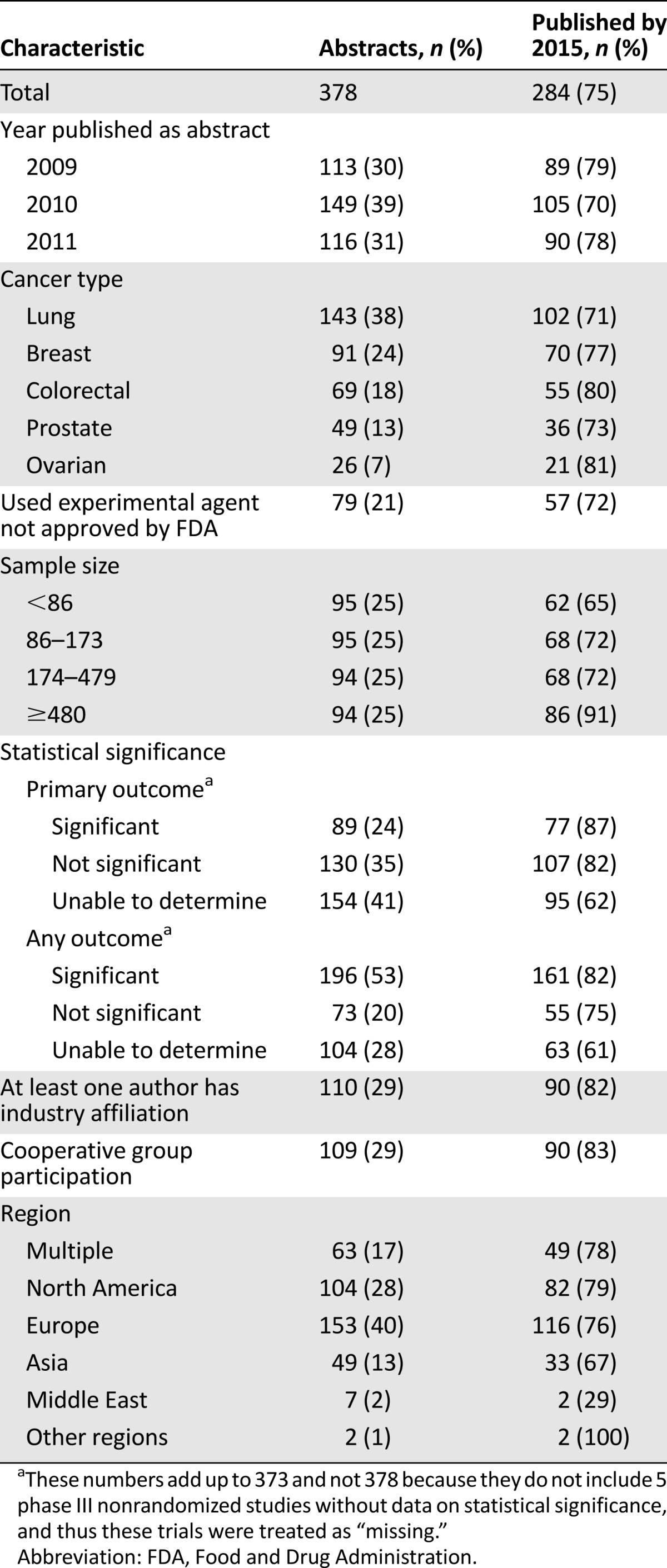

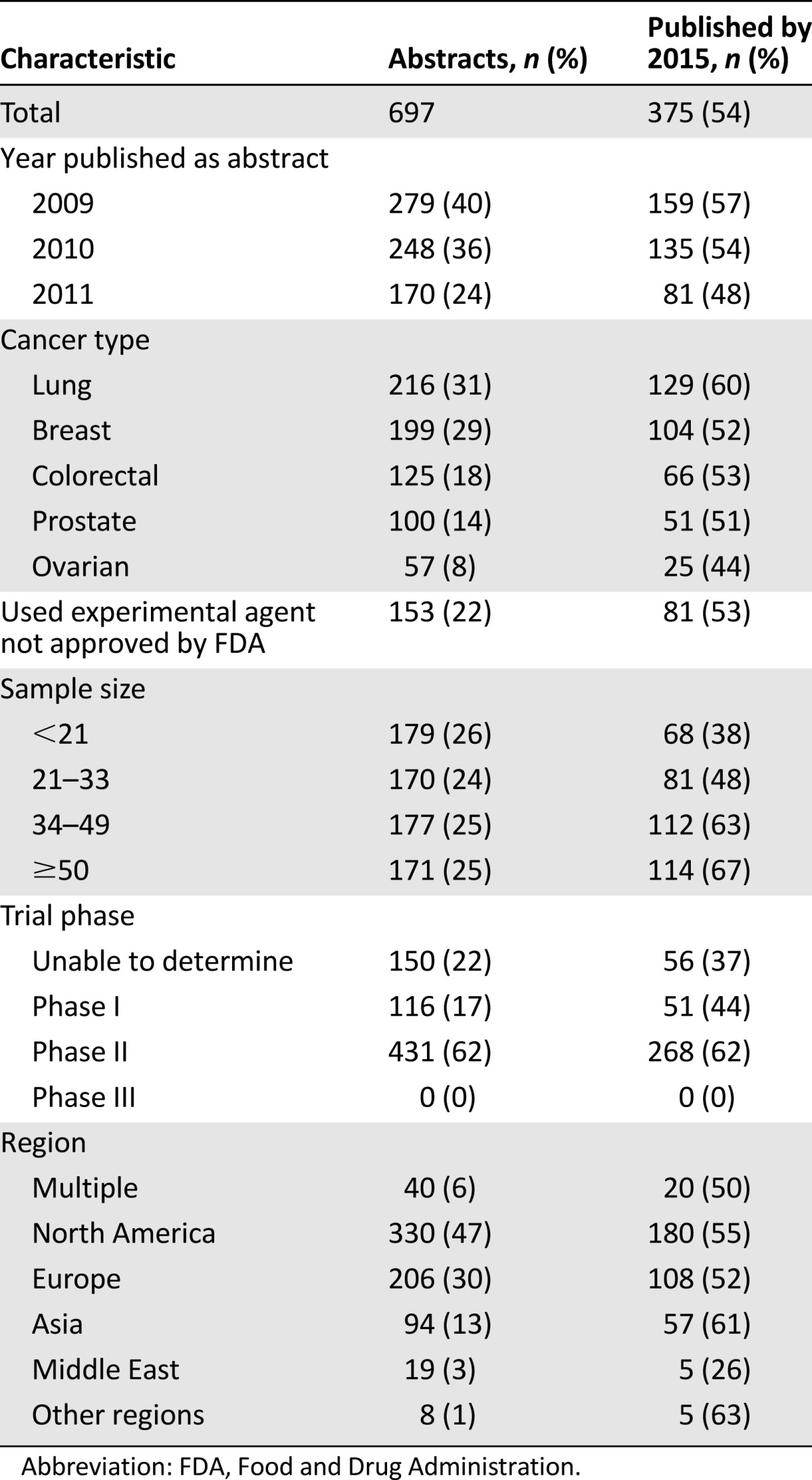

In all, we identified and extracted data from 1,075 abstracts published in 2009–2011: 378 randomized and 697 nonrandomized trials. Baseline characteristics of the abstracts reporting randomized and nonrandomized trials are shown in Tables 1 and 2, respectively. These tables also give the percentage of the clinical trials reported in the abstracts that had been published at the time of this analysis. Overall, 61% of the clinical trials reported in the abstracts were published in peer-reviewed journals, including 75% of randomized and 54% of nonrandomized trials (p < .001).
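The randomized versus nonrandomized comparison is a 2 × 2 chi-square test. The sketch below uses the Pearson statistic without continuity correction (the manuscript does not say which variant was used) and approximate counts back-calculated from the reported percentages, about 284 of 378 and 376 of 697; the exact counts are in Tables 1 and 2.

```python
import math

def chi_square_2x2(a: int, b: int, c: int, d: int):
    """Pearson chi-square (no continuity correction) for the 2x2 table
    [[a, b], [c, d]]; returns (statistic, p value) with 1 degree of freedom."""
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 df the statistic is a squared standard normal, so
    # P(chi2 > x) = erfc(sqrt(x / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))

# Approximate counts: ~75% of 378 randomized and ~54% of 697 nonrandomized published.
rand_pub, rand_total = 284, 378
nonrand_pub, nonrand_total = 376, 697
stat, p = chi_square_2x2(rand_pub, rand_total - rand_pub,
                         nonrand_pub, nonrand_total - nonrand_pub)
print(f"chi-square = {stat:.1f}, p = {p:.1g}")
```

With these approximate counts the pooled rate is 660 of 1,075 (61%), and the p value falls far below the .001 threshold reported above.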

Table 1.

Characteristics of abstracts reporting results of randomized trials

Table 2.

Characteristics of abstracts reporting results of nonrandomized trials

Among abstracts reporting on randomized trials, 30% were from 2009, 39% from 2010, and 31% from 2011. Among abstracts reporting on nonrandomized trials, 40% were from 2009, 36% from 2010, and 24% from 2011. Abstracts describing lung cancer trials were the most common, comprising 38% and 31% of abstracts reporting the results of randomized and nonrandomized trials, respectively. An experimental agent was evaluated in 21% and 22% of randomized and nonrandomized trials, respectively.

To evaluate the effect of a study’s sample size on eventual publication, abstracts were grouped by quartiles. For randomized trials, quartiles were <86, 86–173, 174–479, and ≥480; quartiles for nonrandomized trials were <21, 21–33, 34–49, and ≥50. In both cases, publication rates increased with sample size: from 65% to 91% for abstracts reporting the results of the randomized trials and from 38% to 67% in the nonrandomized trial set, with p < .001 for this difference.
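Assigning a trial to a sample-size quartile with the cut points given above reduces to a binary search over the lower bounds of quartiles 2 to 4. The function name is hypothetical. Deriving the cut points from raw data (for example, with Python's `statistics.quantiles`) can shift them slightly depending on the quantile method.

```python
from bisect import bisect_right
from typing import Sequence

# Lower bounds of quartiles 2, 3, and 4, taken from the text.
RANDOMIZED_CUTS = (86, 174, 480)      # <86 | 86-173 | 174-479 | >=480
NONRANDOMIZED_CUTS = (21, 34, 50)     # <21 | 21-33  | 34-49   | >=50

def sample_size_quartile(n: int, cuts: Sequence[int]) -> int:
    """Quartile (1-4) for sample size n; a value equal to a cut point
    falls into the higher quartile, matching the ranges above."""
    return bisect_right(cuts, n) + 1
```

`bisect_right` places a value equal to a cut point after it, which is what makes 86 land in the second randomized quartile rather than the first.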

When the outcomes of the randomized clinical trials were examined, we found that 24% of abstracts reported that the primary outcome had achieved statistical significance, whereas 35% stated that the trial results failed to reach statistical significance. In the remaining 41% of abstracts describing randomized trials, either the primary outcome could not be determined or the authors did not report statistics. Among nonrandomized trials, 17% and 62% of abstracts described the results of phase I and phase II trials, respectively; trial phase could not be determined in 22% of abstracts.

A coauthor affiliated with industry was found in 29% of the abstracts reporting on randomized trials, and the results of 82% of those trials were identified as published. A cooperative group participated in 29% of the abstracts describing randomized trials, and 83% of those trials were identified as published. Studies with cooperative group participation were more likely to be published than those without their participation (83% vs. 72%; p = .026).

Abstracts submitted to ASCO came from around the world, and rates of subsequent publication of trial results in a peer-reviewed journal did not differ by region (p = .474). The type of trial, however, varied by geography: European investigators were responsible for 40% of the abstracts describing randomized trials, whereas North American investigators were responsible for 47% of those describing nonrandomized trials. This difference in geographic distribution between the two trial types was statistically significant (p < .001).

We next performed a multivariable analysis of predictors of publication for abstracts reporting randomized and nonrandomized studies; the results are shown in Table 3. Among abstracts reporting the results of randomized trials, the publication rate did not differ significantly by the year the abstract was presented at ASCO (p = .074). The use of an experimental agent was also not a significant predictor (odds ratio [OR] 0.76 [0.38–1.52]; p = .441), whereas sample size was a statistically significant predictor of publication (OR 1.23 per increase of 100 participants [1.11–1.36]; p < .001). The presence of an industry-affiliated author and the involvement of a cooperative group were each associated with similarly higher odds of publication: OR 2.37 ([1.20–4.68]; p = .013) and OR 2.21 ([1.21–4.06]; p = .010), respectively.
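Because the sample-size effect is reported per 100 participants, the odds ratio for other increments follows by scaling the log-odds coefficient, assuming (as the per-100 reporting implies) that sample size enters the model linearly. The sketch below applies this to the point estimates only; confidence limits scale the same way on the log scale. These are odds ratios, not ratios of publication probabilities.

```python
import math

def odds_ratio_for_increase(or_per_100: float, extra_participants: float) -> float:
    """Odds ratio for an arbitrary increase in sample size, given the odds
    ratio per 100 participants and assuming a linear sample-size term."""
    beta_per_100 = math.log(or_per_100)   # change in log-odds per 100 participants
    return math.exp(beta_per_100 * extra_participants / 100)

# Point estimates from the multivariable models (Table 3).
or_rand_500 = odds_ratio_for_increase(1.23, 500)    # randomized: +500 participants
or_nonrand_50 = odds_ratio_for_increase(1.64, 50)   # nonrandomized: +50 participants
print(f"{or_rand_500:.2f} {or_nonrand_50:.2f}")
```

An increase of 500 participants in a randomized trial thus corresponds to an odds ratio of about 1.23^5 ≈ 2.8, and 50 additional participants in a nonrandomized trial to about √1.64 ≈ 1.28.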

Table 3.

Factors associated with rate of publication of abstracts reporting randomized and nonrandomized trials in the multivariable logistic regression model

In a similar multivariable analysis of abstracts reporting nonrandomized trials, there was again no significant difference in publication rate by the year the ASCO abstract appeared (p = .107). As with abstracts reporting randomized trials, the use of an experimental agent was not associated with publication (OR 0.89 [0.61–1.29]; p = .532), whereas sample size was again a statistically significant predictor of publication (OR 1.64 per increase of 100 participants [1.15–2.34]; p = .006). Compared with trials of unknown phase, abstracts reporting phase I studies were more likely to be published (OR 1.72 [1.01–2.92]; p = .045), as were those reporting phase II studies (OR 3.09 [2.07–4.61]; p < .001). Overall, phase I and II studies were more likely to be published than trials of unknown phase (p < .001).

Among phase I trials, a larger percentage of those using FDA-approved drugs were subsequently published (36/75; 48%) than those using only experimental agents (15/41; 37%), but this difference was not significant (p = .25). After adjustment for patient number and year of publication, the odds of publication for studies using nonexperimental drugs were 0.82 (95% CI: 0.36–1.88; p = .647) times those for studies using experimental drugs.

Discussion

Our data demonstrate a continued failure to publish clinical trial data in peer-reviewed journals for a substantial number of studies deemed worthy of dissemination as an ASCO Annual Meeting abstract. With respect to the factors influencing eventual publication, we found that abstracts reporting randomized trials were more likely to be published than abstracts of nonrandomized trials. Sample size influenced eventual publication of data in abstracts from both randomized and nonrandomized trials, with likelihood increasing as the number of patients enrolled increased. Among randomized trials, multivariable analysis showed significantly higher rates of publication for abstracts with at least one industry author or affiliation with a cooperative group. In nonrandomized trials, we found a statistically significant association of publication with advanced trial phase.

The nonpublication of completed clinical trials is a problem not limited to oncology; a recent analysis of clinical trials from all disciplines found that 30% of completed clinical trials had not reported results in any public domain 4 years after completion [20], despite the fact that Section 801 of the FDA Amendments Act requires that study results be posted on ClinicalTrials.gov [21].

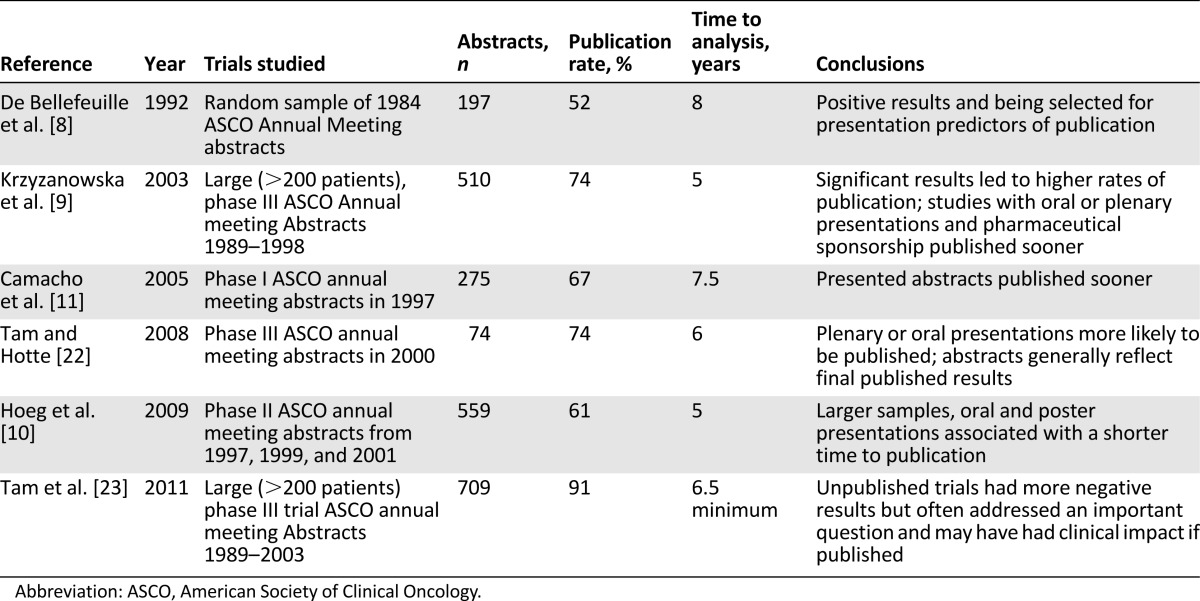

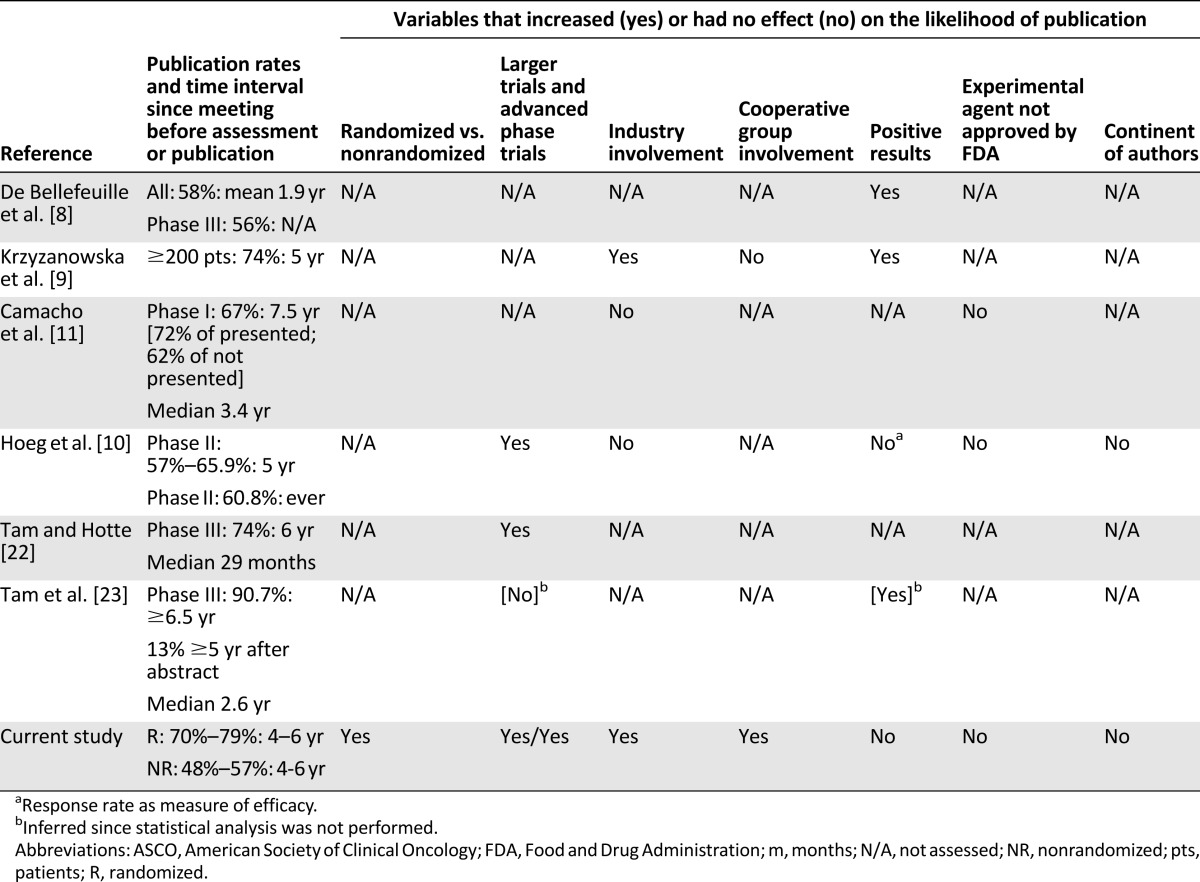

Our analysis is the most extensive examination of the publication of oncology abstracts from the largest annual international oncology meeting. Prior studies using similar methods in oncology are summarized in Table 4, and a comparison of our analysis and others is summarized in Table 5 [8–11, 22, 23]. We found that the clinical trial data from 75% of abstracts reporting randomized trials and from 54% of those describing nonrandomized trials were published by March 2015, a statistically significant difference (p < .001). Our 75% publication rate for abstracts reporting randomized trials in the ASCO database from 2009–2011 falls within the 56%–91% range reported by others who assessed publication rates of randomized or large trials [1, 9, 22–27]. Our finding that clinical data from only 54% of abstracts reporting nonrandomized trials had been published 4–6 years after their ASCO submission is comparable to, though slightly below, the 56%–74% reported by others 5–7.5 years after publication of the abstract [9, 10]. Our analysis has added significance, however, because it reports publication rates after the creation and growth of central data repositories such as ClinicalTrials.gov and wide recognition of the problem of nonpublication. Against this background, the finding that 39% of clinical trials presented at an international meeting remain unpublished is quite discouraging.

Table 4.

Summary of studies evaluating the published status of ASCO annual meeting abstracts

Table 5.

Summary of studies evaluating the publication status of oncology abstracts submitted to the ASCO annual meeting

Previous studies have documented a positive influence of sample size on publication rate in oncology [9, 25, 26] and in the general literature [15], with a higher rate of nonpublication of early compared with later-phase trials [20, 28, 29]. Our results confirm this finding. The loss of small trial data is troubling because phase I trials and trials involving devices are granted an exclusion from reporting requirements on ClinicalTrials.gov [21]. Thus, it is possible that a trial could take place without any public record, and our findings indicate that this may be a frequent occurrence. This is detrimental, not only because of the possibility that an inactive combination may be pursued a second time by investigators unaware of its failure in a prior study, but also because clinicians attempting to improve individual therapy may use inactive combinations ad hoc, unaware that such a combination has already been found to be ineffective.

We also examined the impact of cooperative group and industry involvement, using the presence of a coauthor from industry as a surrogate for industry involvement. In multivariable analysis, we found both to be independent predictors of publication. Our results differ from those of a previous study with respect to the publication of abstracts that involved cooperative groups [9]. Prior studies report mixed associations between industry sponsorship and publication. Although most have found that industry sponsorship is associated with lower rates of publication [1, 3, 15, 20, 30, 31], some have found it to be a positive predictor of publication [32–34]. This dichotomy exists in oncology as well: some investigators have found sponsorship to be associated with lower rates of publication [10, 11], whereas others found industry sponsorship to be a positive predictor of publication [9]. We add our data to those showing a positive association, acknowledging a limitation of our method: the presence of an industry coauthor is likely specific for industry involvement, but not sensitive.

Although others have reported that positive results lead to preferential publication in the oncology literature [8, 9], our data did not demonstrate different rates of publication of trials reported in abstract form according to classification of their results as significant or nonsignificant. Mixed results are reported in the general literature regarding the importance of this property as a predictor of publication [6, 15, 30, 35]. One possible explanation for this is multiple hypothesis testing. Even negative studies may result in spuriously positive results for some post hoc endpoint, which authors may promote to spur acceptance of a manuscript [36, 37]. An alternate and desirable explanation is a cultural improvement in the acceptance and publication of nonsignificant results, as seen in some recent publications [38, 39].

Finally, as others have reported, we did not find a significant increase in publication for abstracts reporting clinical trials of an experimental agent, or differences based on the continents of the authors, although the data on the latter point are not unanimous [10, 11]. Whether a drug had received FDA approval for at least one indication (nonexperimental) was not a significant predictor of publication in any of our models. This is concerning, because once drugs are approved, they may be used by providers for off-label indications. Thus, it is likely that some oncologists are using agents in ways that have been tested but whose results have not been published. In short, the failure to publish may have tangible real-world consequences.

There are several limitations to our analysis. First, in any study of this nature, determining publication status can be difficult. Some trials open but do not accrue enough patients to allow a meaningful outcome, although these would be unlikely to have been accepted as ASCO abstracts. Second, we relied on ASCO Annual Meeting abstracts, rather than a comprehensive registry, to identify clinical trials, so our analysis depended on being able to extract pertinent data from these abstracts. A 2004 analysis of ASCO abstracts concluded that the reporting of study details in ASCO abstracts is flawed [40], and other authors have reached the same conclusion [41]. Third, our study, finalized in March 2015, allowed 4–6 years for abstracts from 2009 to 2011 to mature; for studies accruing patients with a rare or slowly progressing disease, this may have been insufficient time. Finally, we used coauthorship by a member of industry as a surrogate for industry involvement in a trial, a method we acknowledge to have poor sensitivity.

The ethics of conducting clinical trials requires physicians to report their data, regardless of outcome [1]. Failure to do so is an ethical breach that also places patients at risk [42]. Ours is the largest summary of publication of recent oncology trials presented at a major conference and their subsequent publication in the biomedical literature. Discouragingly, our findings are consistent with previous reports both in oncology and in other disciplines and do not show improvement with time or with the creation of central data repositories such as ClinicalTrials.gov.

Solutions to this problem include a change in thinking about what publications matter, changing views of journal impact factors, and reducing barriers to publication. Major journals must lead the way by encouraging—indeed seeking out—negative studies for publication and must highlight these with editorial comments just as they highlight trials with “positive” outcomes. The Clinical Trial Results section in The Oncologist (http://theoncologist.alphamedpress.org/cgi/collection/clinical-trial-results) is one such solution, anchored in the principle that the results of all studies, including those that did not complete accrual or meet other endpoints, should be published. The section includes several model processes, such as an automated process for creating Kaplan-Meier and waterfall plots. Others have proposed solutions that are alternatives to conventional publishing [43]. Physicists, for example, prepublish manuscripts in an electronic archive (arXiv), which then undergo commentary from the scientific community and revision (http://arxiv.org/). arXiv, which archives manuscripts from multiple scientific disciplines, reached 1 million “eprints” in January 2015. Ultimately, our obligation to patients who enroll in clinical trials is to report the results.

Author Contributions

Conception/Design: Paul R. Massey, Vinay Prasad, Susan E. Bates, Tito Fojo

Collection and/or assembly of data: Paul R. Massey, Ruibin Wang

Data analysis and interpretation: Paul R. Massey, Ruibin Wang, Vinay Prasad, Susan E. Bates, Tito Fojo

Manuscript writing: Paul R. Massey, Vinay Prasad

Final approval of manuscript: Vinay Prasad, Susan E. Bates, Tito Fojo

Disclosures

The authors indicated no financial relationships.

References

- 1.Jones CW, Handler L, Crowell KE, et al. Non-publication of large randomized clinical trials: Cross sectional analysis. BMJ. 2013;347:f6104. doi: 10.1136/bmj.f6104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Riveros C, Dechartres A, Perrodeau E, et al. Timing and completeness of trial results posted at ClinicalTrials.gov and published in journals. PLoS Med. 2013;10:e1001566; discussion e1001566. doi: 10.1371/journal.pmed.1001566. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Ross JS, Mulvey GK, Hines EM, et al. Trial publication after registration in ClinicalTrials.Gov: A cross-sectional analysis. PLoS Med. 2009;6:e1000144. doi: 10.1371/journal.pmed.1000144. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Misakian AL, Bero LA. Publication bias and research on passive smoking: Comparison of published and unpublished studies. JAMA. 1998;280:250–253. doi: 10.1001/jama.280.3.250. [DOI] [PubMed] [Google Scholar]

- 5.Dickersin K, Min YI, Meinert CL. Factors influencing publication of research results. Follow-up of applications submitted to two institutional review boards. JAMA. 1992;267:374–378. [PubMed] [Google Scholar]

- 6.Ioannidis JP. Effect of the statistical significance of results on the time to completion and publication of randomized efficacy trials. JAMA. 1998;279:281–286. doi: 10.1001/jama.279.4.281. [DOI] [PubMed] [Google Scholar]

- 7.Krzyzanowska MK. Off-label use of cancer drugs: A benchmark is established. J Clin Oncol. 2013;31:1125–1127. doi: 10.1200/JCO.2012.46.9460. [DOI] [PubMed] [Google Scholar]

- 8.De Bellefeuille C, Morrison CA, Tannock IF. The fate of abstracts submitted to a cancer meeting: Factors which influence presentation and subsequent publication. Ann Oncol. 1992;3:187–191. doi: 10.1093/oxfordjournals.annonc.a058147. [DOI] [PubMed] [Google Scholar]

- 9.Krzyzanowska MK, Pintilie M, Tannock IF. Factors associated with failure to publish large randomized trials presented at an oncology meeting. JAMA. 2003;290:495–501. doi: 10.1001/jama.290.4.495. [DOI] [PubMed] [Google Scholar]

- 10.Hoeg RT, Lee JA, Mathiason MA, et al. Publication outcomes of phase II oncology clinical trials. Am J Clin Oncol. 2009;32:253–257. doi: 10.1097/COC.0b013e3181845544. [DOI] [PubMed] [Google Scholar]

- 11.Camacho LH, Bacik J, Cheung A, et al. Presentation and subsequent publication rates of phase I oncology clinical trials. Cancer. 2005;104:1497–1504. doi: 10.1002/cncr.21337. [DOI] [PubMed] [Google Scholar]

- 12.Easterbrook PJ, Berlin JA, Gopalan R, et al. Publication bias in clinical research. Lancet. 1991;337:867–872. doi: 10.1016/0140-6736(91)90201-y. [DOI] [PubMed] [Google Scholar]

- 13.Ahmed I, Sutton AJ, Riley RD. Assessment of publication bias, selection bias, and unavailable data in meta-analyses using individual participant data: A database survey. BMJ. 2012;344:d7762. doi: 10.1136/bmj.d7762. [DOI] [PubMed] [Google Scholar]

- 14.Dickersin K, Chan S, Chalmers TC, et al. Publication bias and clinical trials. Control Clin Trials. 1987;8:343–353. doi: 10.1016/0197-2456(87)90155-3. [DOI] [PubMed] [Google Scholar]

- 15.von Elm E, Röllin A, Blümle A, et al. Publication and non-publication of clinical trials: longitudinal study of applications submitted to a research ethics committee. Swiss Med Wkly. 2008;138:197–203. doi: 10.4414/smw.2008.12027. [DOI] [PubMed] [Google Scholar]

- 16.Lee K, Bacchetti P, Sim I. Publication of clinical trials supporting successful new drug applications: A literature analysis. PLoS Med. 2008;5:e191. doi: 10.1371/journal.pmed.0050191. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Chapman SJ, Shelton B, Mahmood H, et al. Discontinuation and non-publication of surgical randomised controlled trials: Observational study. BMJ. 2014;349:g6870. doi: 10.1136/bmj.g6870. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Kasenda B, von Elm E, You J, et al. Prevalence, characteristics, and publication of discontinued randomized trials. JAMA. 2014;311:1045–1051. doi: 10.1001/jama.2014.1361. [DOI] [PubMed] [Google Scholar]

- 19.Anderson ML, Chiswell K, Peterson ED, et al. Compliance with results reporting at ClinicalTrials.gov. N Engl J Med. 2015;372:1031–1039. doi: 10.1056/NEJMsa1409364. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Saito H, Gill CJ. How frequently do the results from completed US clinical trials enter the public domain?—A statistical analysis of the ClinicalTrials.gov database. PLoS One. 2014;9:e101826. doi: 10.1371/journal.pone.0101826. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.FDAAA 801 Requirements. Available at https://clinicaltrials.gov/ct2/manage-recs/fdaaa.

- 22.Tam VC, Hotte SJ. Consistency of phase III clinical trial abstracts presented at an annual meeting of the American Society of Clinical Oncology compared with their subsequent full-text publications. J Clin Oncol. 2008;26:2205–2211. doi: 10.1200/JCO.2007.14.6795.

- 23.Tam VC, Tannock IF, Massey C, et al. Compendium of unpublished phase III trials in oncology: Characteristics and impact on clinical practice. J Clin Oncol. 2011;29:3133–3139. doi: 10.1200/JCO.2010.33.3922.

- 24.Manzoli L, Flacco ME, D’Addario M, et al. Non-publication and delayed publication of randomized trials on vaccines: Survey. BMJ. 2014;348:g3058. doi: 10.1136/bmj.g3058.

- 25.Ross JS, Mocanu M, Lampropulos JF, et al. Time to publication among completed clinical trials. JAMA Intern Med. 2013;173:825–828. doi: 10.1001/jamainternmed.2013.136.

- 26.Jones N. Half of US clinical trials go unpublished. Nature. 2013. doi: 10.1038/nature.2013.14286.

- 27.Rosenthal R, Kasenda B, Dell-Kuster S, et al. Completion and publication rates of randomized controlled trials in surgery: An empirical study. Ann Surg. 2015;262:68–73. doi: 10.1097/SLA.0000000000000810.

- 28.Prenner JL, Driscoll SJ, Fine HF, et al. Publication rates of registered clinical trials in macular degeneration. Retina. 2011;31:401–404. doi: 10.1097/IAE.0b013e3181eef2ad.

- 29.Decullier E, Chan AW, Chapuis F. Inadequate dissemination of phase I trials: A retrospective cohort study. PLoS Med. 2009;6:e1000034. doi: 10.1371/journal.pmed.1000034.

- 30.Lexchin J, Bero LA, Djulbegovic B, et al. Pharmaceutical industry sponsorship and research outcome and quality: Systematic review. BMJ. 2003;326:1167–1170. doi: 10.1136/bmj.326.7400.1167.

- 31.Bekelman JE, Li Y, Gross CP. Scope and impact of financial conflicts of interest in biomedical research: A systematic review. JAMA. 2003;289:454–465. doi: 10.1001/jama.289.4.454.

- 32.Glick N, MacDonald I, Knoll G, et al. Factors associated with publication following presentation at a transplantation meeting. Am J Transplant. 2006;6:552–556. doi: 10.1111/j.1600-6143.2005.01203.x.

- 33.Barnes DE, Bero LA. Why review articles on the health effects of passive smoking reach different conclusions. JAMA. 1998;279:1566–1570. doi: 10.1001/jama.279.19.1566.

- 34.Lundh A, Sismondo S, Lexchin J, et al. Industry sponsorship and research outcome. Cochrane Database Syst Rev. 2012;12:MR000033. doi: 10.1002/14651858.MR000033.pub2.

- 35.Hopewell S, Loudon K, Clarke MJ, et al. Publication bias in clinical trials due to statistical significance or direction of trial results. Cochrane Database Syst Rev. 2009:MR000006.

- 36.Boutron I, Dutton S, Ravaud P, et al. Reporting and interpretation of randomized controlled trials with statistically nonsignificant results for primary outcomes. JAMA. 2010;303:2058–2064. doi: 10.1001/jama.2010.651.

- 37.Vera-Badillo FE, Shapiro R, Ocana A, et al. Bias in reporting of end points of efficacy and toxicity in randomized, clinical trials for women with breast cancer. Ann Oncol. 2013;24:1238–1244. doi: 10.1093/annonc/mds636.

- 38.Fassnacht M, Berruti A, Baudin E, et al. Linsitinib (OSI-906) versus placebo for patients with locally advanced or metastatic adrenocortical carcinoma: A double-blind, randomised, phase 3 study. Lancet Oncol. 2015;16:426–435. doi: 10.1016/S1470-2045(15)70081-1.

- 39.Blay JY, Pápai Z, Tolcher AW, et al. Ombrabulin plus cisplatin versus placebo plus cisplatin in patients with advanced soft-tissue sarcomas after failure of anthracycline and ifosfamide chemotherapy: A randomised, double-blind, placebo-controlled, phase 3 trial. Lancet Oncol. 2015;16:531–540. doi: 10.1016/S1470-2045(15)70102-6.

- 40.Krzyzanowska MK, Pintilie M, Brezden-Masley C, et al. Quality of abstracts describing randomized trials in the proceedings of American Society of Clinical Oncology meetings: Guidelines for improved reporting. J Clin Oncol. 2004;22:1993–1999. doi: 10.1200/JCO.2004.07.199.

- 41.Hopewell S, Clarke M. Abstracts presented at the American Society of Clinical Oncology conference: How completely are trials reported? Clin Trials. 2005;2:265–268. doi: 10.1191/1740774505cn091oa.

- 42.Hudson KL, Collins FS. Sharing and reporting the results of clinical trials. JAMA. 2015;313:355–356. doi: 10.1001/jama.2014.10716.

- 43.Vale RD. Accelerating scientific publication in biology. Proc Natl Acad Sci USA. 2015;112:13439–13446. doi: 10.1073/pnas.1511912112.