Abstract

Purpose:

This study aimed to determine whether written test results improved when advanced cardiac life support (ACLS) was taught in a flipped classroom/team-based learning (FC/TBL) format rather than a lecture-based (LB) format at the University of California-Irvine School of Medicine, USA.

Methods:

Medical students took ACLS based on the 2010 guidelines in FC/TBL format (2015), and were compared with 3 classes taught in LB format (2012-14). The FC/TBL model comprised 27.5 hours of instruction (TBL 10.5, podcasts 9, small-group simulation 8 hours), and the LB model 20 hours (lecture 12, simulation 8 hours). TBL covered 13 cardiac cases; LB had none. The seven simulation cases and the didactic content, delivered by lecture (2012-14) or podcast (2015), were the same, as was testing: 50 multiple-choice questions (MCQ), 20 rhythm matchings, and 7 fill-in clinical cases.

Results:

A total of 354 students took the course (259 [73.1%] in LB format in 2012-14, and 95 [26.9%] in FC/TBL format in 2015). Two of the 3 tests (MCQ and fill-in) improved with FC/TBL. Overall, median scores increased from 93.5% (IQR 90.6, 95.4) to 95.1% (92.8, 96.7; P=0.0001). For the fill-in test, scores rose from 94.1% for LB (89.6, 97.2) to 96.6% for FC/TBL (92.4, 99.2; P=0.0001). For the MCQ test, scores rose from 88% for LB (84, 92) to 90% for FC/TBL (86, 94; P=0.0002). For the rhythm test, the median was 100% for both formats. More students failed 1 of the 3 tests with LB than with FC/TBL (24.7% vs. 14.7%), and more failed 2 or 3 components (8.1% vs. 3.2%; P=0.006). Conversely, 82.1% passed all 3 with FC/TBL vs. 67.2% with LB (difference 14.9%, 95% CI 4.8-24.0%).

Conclusion:

An FC/TBL format for ACLS marginally improved written test results.

Keywords: Advanced cardiac life support, Choice behavior, Learning, Students, United States

Introduction

Traditional education reflects the passive transfer of information from a lecturer to students in a large group. This approach has been shown to be inferior to active learning [1], which promotes more thorough and lasting understanding [2]. Active learning is a process whereby students engage in activities, such as reading, writing, discussion, or problem solving, that promote analysis, synthesis, and evaluation of class content [3]. Team-based learning (TBL) is one example of active learning and uses a "flipped classroom" (FC) approach to promote knowledge retention. In an FC, students receive their first exposure to material before class, for example, by reading or watching podcasts at home. In TBL, students then collaborate in class through group interactions to solve clinical problems and reflect on their learning [4]. Medical education has been slow to adopt these practices. While low-fidelity simulation has been used in ACLS training for more than 30 years, small-group learning as an adjunct has not been studied [5]. ACLS training, as dictated by the American Heart Association (AHA), typically consists of classroom didactics reinforced with simulation. A recent AHA report indicates a paradigm shift in its core training course, to be implemented with the 2015 guidelines, toward an FC with small-group learning, simulations, and online learning modules [6]. We aimed to be the first to assess and report on this FC/TBL model for ACLS training, which we implemented at the University of California-Irvine School of Medicine. We hypothesized that FC/TBL would enhance learning, as shown by improved performance on multimodal written testing among 4th-year (senior) medical students, compared with 3 recent historical control classes taught the same content in a lecture-based (LB) format.

Methods

The School of Medicine admits 104 students per class. Although the ACLS course is "mandatory," the number of students enrolled varies from year to year because of conflicts with MBA and MPH coursework and delayed graduation for those pursuing a PhD. Ninety-five final-year students from the School of Medicine participated in the FC/TBL ACLS course in 2015.

The simulation portion of the course was taught in 8 rooms of a 6,000-square-foot simulation center, each equipped with a crash cart/defibrillator/transcutaneous pacemaker, an airway/intubation mannequin, and a high-fidelity simulator (Laerdal SimMan, Wappingers Falls, New York). The TBL component was taught in a large lecture hall with tiered seating.

In 2015, we taught the 2010 AHA ACLS course to final-year students late in the academic year (March) in an FC/TBL model and compared their written test performance with that of 3 control classes taught in LB format (2012-14). The FC/TBL model comprised 27.5 scheduled hours (TBL 10.5, podcasts 9, small-group simulation 8 hours), and the LB format 20 hours (lectures 12, small-group simulation 8 hours). In essence, 12 hours of classroom lectures (2012-14) were replaced by 9 hours of recorded podcasts (2015), presenting the same material by the same instructor, plus 10.5 hours of TBL. The teaching formats are compared in Table 1.

Table 1.

Scheduled hours by component for the two teaching formats of the advanced cardiac life support course at the University of California-Irvine School of Medicine, United States of America

| Component (hours) | Flipped classroom/team-based learning (2015) | Lecture-based learning (2012-14) |
|---|---|---|
| Podcasts | 9 | 0 |
| Lectures | 0 | 12 |
| Team-based learning | 10.5 | 0 |
| Small-group simulation | 8 | 8 |
| Total classroom time | 18.5 | 20 |
| Total instructional time | 27.5 | 20 |
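The two totals in Table 1 differ only in whether podcasts are counted. The short Python check below recomputes both rows from the component hours; treating podcasts as instructional but not classroom time is our reading of the table, stated here as an assumption.

```python
# Scheduled hours per component, transcribed from Table 1.
HOURS = {
    "FC/TBL (2015)": {"podcasts": 9.0, "lectures": 0.0, "tbl": 10.5, "simulation": 8.0},
    "LB (2012-14)": {"podcasts": 0.0, "lectures": 12.0, "tbl": 0.0, "simulation": 8.0},
}

# Assumption: podcasts are watched outside the classroom, so they count toward
# total instructional time but not toward classroom time.
OUT_OF_CLASS = {"podcasts"}


def totals(components: dict[str, float]) -> tuple[float, float]:
    """Return (classroom hours, total instructional hours) for one teaching format."""
    instructional = sum(components.values())
    classroom = sum(h for name, h in components.items() if name not in OUT_OF_CLASS)
    return classroom, instructional


for fmt, comps in HOURS.items():
    classroom, instructional = totals(comps)
    print(f"{fmt}: classroom {classroom} h, instructional {instructional} h")
```

The 7.5-hour gap in instructional time (27.5 vs. 20) is entirely podcast-plus-TBL time replacing the 12 lecture hours.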

We used ungraded 10-question quizzes at the beginning of each class session both to encourage and to gauge student compliance with assigned podcast viewing. Three to four multiple-choice (MC) questions were drawn from each 20- to 45-minute podcast.

The TBL group application exercises covered 13 cardiac- and peri-arrest cases; the LB format had none. These TBL exercises were:

Acute coronary syndrome (ACS)

Ventricular fibrillation (VF)

Refractory VF

Post-cardiac arrest care

Respiratory distress

Pulseless electrical activity (PEA) case 1: septic shock

PEA case 2: hyperkalemia

Asystole

Symptomatic bradycardia

Paroxysmal supraventricular tachycardia (PSVT, SVT) with good perfusion

Ventricular tachycardia (VT), stable

VT, unstable

Acute ischemic stroke

We used 8 cardiac- and peri-arrest simulation cases with high-fidelity mannequins for both formats, in small groups of 5-9 with 1 instructor per group. These were:

ACS/ventricular fibrillation (VF) cardiac arrest/3rd-degree atrioventricular block (AVB)/ST-elevation myocardial infarction (STEMI) diagnosis

Atrial fibrillation (AF) with rapid ventricular response (RVR)

Stable, then unstable, ventricular tachycardia (VT)

PSVT

PEA

Symptomatic bradycardia

PEA case 2: hyperkalemia

Unknown SVT at rate 150: atrial flutter with 2:1 conduction vs. PSVT vs. sinus tachycardia

Torsades de pointes/polymorphic VT

The 3 written evaluations were a multiple-choice question (MCQ) test (not published because it is copyrighted by the American Heart Association), a cardiac rhythm test (Appendix 1), and a clinical management test (Appendix 2). The 50 MCQ items, developed by the AHA, covered the content of the ACLS Provider Manual [7]. The questions focused on basic and advanced airway management, algorithm application, resuscitative pharmacology, and special situations such as drowning and stroke recognition. The AHA passing score for the MCQ test was over 84% correct.

The rhythm knowledge evaluation consisted of 20 examples of brady- and tachyarrhythmias, heart blocks, asystole/agonal rhythm, multifocal atrial tachycardia, and ventricular fibrillation, which students were required to match to rhythm diagnoses on a one-to-one basis. The instructor-defined passing score for the rhythm test (Appendix 1) was at least 18 of 20 correctly matched.

The clinical management ("therapeutic modalities") test was a fill-in-the-blank test comprising 7 clinical scenarios: acute coronary syndrome, symptomatic bradycardia, pulseless electrical activity, refractory ventricular fibrillation, stable and then unstable ventricular tachycardia, third-degree heart block, and asystole (Appendix 2). The instructor-established passing score for this test was over 87% correct.

All written evaluation tools were based on content from the ACLS Provider Manual or obtained from the AHA. Two expert ACLS instructors and experienced clinicians (an anesthesiologist and an emergency physician) and 1 regional faculty member evaluated all testing protocols and tools before the course was implemented. We weighted the 3 components equally in the composite "correct answer" score, even though their maximum possible points differed: 50 (MCQ), 20 (rhythm test, Appendix 1), and 61 ("therapeutic modalities" test, Appendix 2). Written testing was identical across all 4 classes, and 3 hours were allotted for it.
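Equal weighting means each component contributes one third of the composite regardless of its point total. A minimal sketch, assuming the composite is the plain mean of the three component percentages and reading the passing thresholds literally as stated ("over" as strictly greater than); the `WrittenScores` type and function names are hypothetical, not the course's grading software.

```python
from dataclasses import dataclass

# Maximum points per component, as stated in the Methods.
MCQ_MAX, RHYTHM_MAX, FILL_IN_MAX = 50, 20, 61


@dataclass
class WrittenScores:
    mcq: int      # correct answers out of 50
    rhythm: int   # correct matches out of 20
    fill_in: int  # points out of 61 ("therapeutic modalities" test)


def composite_percent(s: WrittenScores) -> float:
    """Equal-weight composite (assumed): mean of the three component percentages."""
    parts = (s.mcq / MCQ_MAX, s.rhythm / RHYTHM_MAX, s.fill_in / FILL_IN_MAX)
    return 100 * sum(parts) / len(parts)


def failed_components(s: WrittenScores) -> int:
    """Count components below the passing thresholds described in the Methods.

    Integer cross-multiplication avoids floating-point edge cases at the cut-offs.
    """
    failures = 0
    if not s.mcq * 100 > 84 * MCQ_MAX:          # MCQ: over 84% correct
        failures += 1
    if not s.rhythm >= 18:                      # rhythm: at least 18 of 20
        failures += 1
    if not s.fill_in * 100 > 87 * FILL_IN_MAX:  # fill-in: over 87% correct
        failures += 1
    return failures


# Hypothetical student, for illustration only.
student = WrittenScores(mcq=45, rhythm=20, fill_in=58)
print(round(composite_percent(student), 1), failed_components(student))
```

Under equal weighting, one rhythm item (5% of that component) moves the composite more than one MCQ item (2%), which is worth keeping in mind when reading composite medians.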

Statistical methods: The data were analyzed using Stata (version 14.0, StataCorp, College Station, TX). We used the Kruskal-Wallis rank sum test to compare scores of the FC/TBL class with the pooled scores of the 3 control classes taught in LB format. We calculated confidence intervals for differences in proportions. Statistical significance was set at P<0.05. The study was approved by the institutional review board of the University of California, Irvine (IRB number: HS# 2014-1195).
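With only two groups (the FC/TBL class vs. the pooled LB classes), the Kruskal-Wallis test is equivalent to the Wilcoxon rank-sum (Mann-Whitney) test. The stdlib-only Python sketch below shows how the tie-corrected H statistic and its chi-square p-value are formed; it is an illustration on toy inputs, not the authors' Stata analysis, and the function names are hypothetical.

```python
import math
from collections import Counter


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of positions i..j, converted to 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def kruskal_wallis_two_groups(a: list[float], b: list[float]) -> tuple[float, float]:
    """Tie-corrected Kruskal-Wallis H and its chi-square (1 df) p-value for two groups.

    Undefined when every value is tied (the tie-correction denominator is then zero).
    """
    pooled = a + b
    n = len(pooled)
    ranks = average_ranks(pooled)
    rank_sum_a = sum(ranks[: len(a)])
    rank_sum_b = sum(ranks[len(a):])
    h = 12 / (n * (n + 1)) * (rank_sum_a**2 / len(a) + rank_sum_b**2 / len(b)) - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n) over runs of tied values.
    ties = sum(t**3 - t for t in Counter(pooled).values())
    h /= 1 - ties / (n**3 - n)
    h = max(h, 0.0)  # guard against tiny negative values from rounding
    # With 2 groups H has 1 degree of freedom, and P(chi2_1 > H) = erfc(sqrt(H / 2)).
    p = math.erfc(math.sqrt(h / 2))
    return h, p


h, p = kruskal_wallis_two_groups([1, 2, 3], [4, 5, 6])
print(f"H = {h:.3f}, P = {p:.4f}")
```

Test scores with many ties at the top (e.g., the median 100% on the rhythm test) are exactly the case where the tie correction matters.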

Results

A total of 354 students took the course (259 in LB format in 2012-14 and 95 in FC/TBL format in 2015). Entering MCAT scores were similar for the classes tested (average 32.1 for 2015 vs. 31.8 for 2012-14), and the average college grade point average was 3.68 for both the control and experimental groups, suggesting similar baseline academic achievement.

Two of the 3 tests showed statistically significant improvement with the FC/TBL format. For all tests combined, median scores increased from 93.5% (IQR 90.6, 95.4) to 95.1% (92.8, 96.7; P=0.0001). For the 7-case fill-in-the-blank test, scores improved from 94.1% correct for LB (89.6, 97.2) to 96.6% for FC/TBL (92.4, 99.2; P=0.0001). For the 50-item MCQ test, scores improved from 88% correct for LB (84, 92) to 90% for FC/TBL (86, 94; P=0.0002). On the 20-item rhythm-matching test, students performed well under both formats (median 100%). More students failed 1 of the 3 written tests with LB than with FC/TBL (24.7% vs. 14.7%), and more failed 2 or 3 components of the written test (8.1% vs. 3.2%, respectively; P=0.006 for the difference in number of failed tests). Conversely, 82.1% passed all 3 parts of the written test with FC/TBL vs. 67.2% with LB (absolute difference 14.9%, 95% CI 4.8-24.0%).
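The paper does not state which interval method was used for the difference in pass rates. The Python sketch below uses the simple Wald (normal-approximation) interval as an illustration; the counts 78/95 and 174/259 are back-calculated from the reported percentages and are an assumption, so the bounds need not match the reported 4.8-24.0% exactly.

```python
import math


def diff_in_proportions_ci(x1: int, n1: int, x2: int, n2: int, z: float = 1.959964):
    """Difference p1 - p2 with a Wald (normal-approximation) 95% confidence interval."""
    p1, p2 = x1 / n1, x2 / n2
    diff = p1 - p2
    se = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return diff, diff - z * se, diff + z * se


# Assumed counts, back-calculated from the reported percentages:
# 82.1% of 95 FC/TBL students is about 78; 67.2% of 259 LB students is about 174.
diff, lo, hi = diff_in_proportions_ci(78, 95, 174, 259)
print(f"difference {diff:.1%}, 95% CI {lo:.1%} to {hi:.1%}")
```

The Wald interval here runs from roughly 5.3% to 24.5%; score-based methods (e.g., Newcombe's) shift the bounds slightly, which likely accounts for the small discrepancy with the reported interval.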

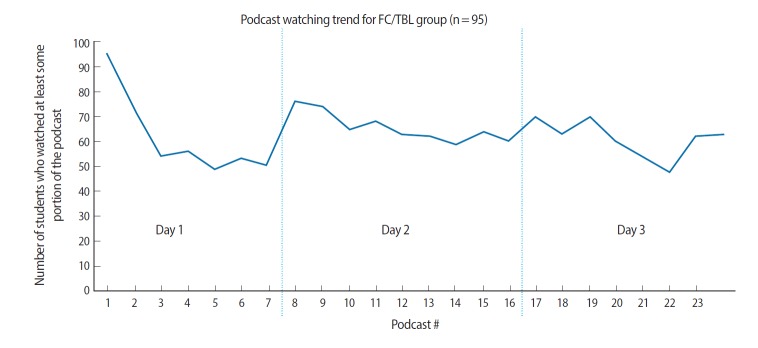

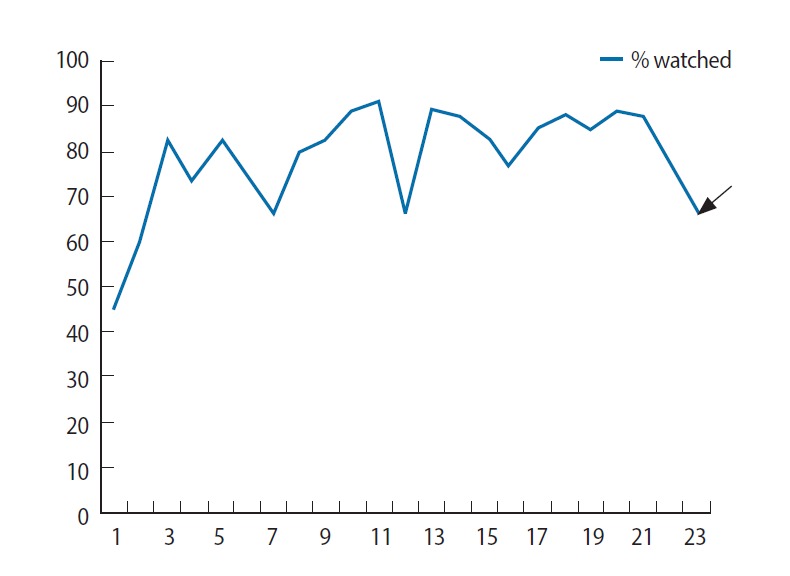

We assessed the time that students in the FC/TBL group spent watching podcasts using per-podcast analytics from the Mediasite Enterprise Video Platform (http://www.sonicfoundry.com/mediasite/, Madison, WI). We treated the logged internet protocol (IP) address assigned to a device (laptop, computer, or tablet on a network) as a unique identifier representing a single student. An average of 63 students (range 47-95; 66.3% of the 95 enrolled) viewed each of the 23 podcasts, which together spanned 9 hours of instruction. The podcasts averaged 23.25 minutes in length, and on average 77.1% of each podcast was watched. The number of students watching at least some portion of a podcast generally declined as the 3-day TBL phase progressed, and it also declined within each day across the assigned podcasts (Fig. 1). However, those who watched the podcasts generally maintained or increased the proportion of each podcast viewed, until the final podcast on acute ischemic stroke (Fig. 2).
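Counting viewers by distinct IP address can be sketched as below. This assumes a hypothetical export of `(podcast_id, ip_address)` view records, not Mediasite's actual analytics format, and it inherits the identifier's limits: one student using two devices counts twice, while several students sharing an address on the same network count once.

```python
from collections import defaultdict


def viewers_per_podcast(view_log: list[tuple[str, str]]) -> dict[str, int]:
    """Count distinct IP addresses per podcast from (podcast_id, ip_address) records.

    Podcasts with no views at all do not appear in the result.
    """
    seen: dict[str, set[str]] = defaultdict(set)
    for podcast_id, ip in view_log:
        seen[podcast_id].add(ip)  # repeat views from the same IP count once
    return {podcast_id: len(ips) for podcast_id, ips in seen.items()}


def mean_viewing_rate(counts: dict[str, int], enrolled: int) -> float:
    """Average number of viewers per podcast, as a fraction of enrolled students."""
    return sum(counts.values()) / len(counts) / enrolled


# Hypothetical log: podcast p1 viewed twice from one device and once from another.
log = [("p1", "10.0.0.1"), ("p1", "10.0.0.1"), ("p1", "10.0.0.2"), ("p2", "10.0.0.3")]
counts = viewers_per_podcast(log)
print(counts, mean_viewing_rate(counts, enrolled=4))
```

With the reported average of 63 viewers per podcast and 95 enrolled students, this ratio is 63/95, or about 66.3%.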

Fig. 1.

Downward trend within each day in assigned podcast viewing by medical students during the advanced cardiac life support course at the University of California-Irvine School of Medicine, United States of America.

Fig. 2.

Proportion of each of the 23 podcasts viewed, which was maintained or increased until the final podcast on acute ischemic stroke, among students in the advanced cardiac life support course at the University of California-Irvine School of Medicine, United States of America.

When we compared the aggregate time each student spent online watching each podcast with the podcast's run time, students appeared to spend, on average, 100% of the run time watching. In other words, viewing time approximated the run time of the podcasts even though less than the full podcast was watched. This suggests that students "rewound" and rewatched portions of the podcasts to a moderate degree.
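The inference above rests on two quantities that per-viewer logs can separate: coverage (how much of a recording was seen at least once) and viewing time relative to run time. The sketch below assumes a hypothetical log of playback segments with start and end positions and a playback speed (not the Mediasite analytics format); it shows how rewinding can bring viewing time to 100% of run time while coverage stays at 77%, and how faster playback pulls viewing time back down.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: float        # position in the recording (seconds) where playback began
    end: float          # position where playback stopped
    speed: float = 1.0  # playback rate, e.g. 1.5 or 2.0


def coverage_fraction(segments: list[Segment], duration: float) -> float:
    """Share of the recording seen at least once: length of the union of watched intervals."""
    covered = 0.0
    cur_start = cur_end = None
    for seg in sorted(segments, key=lambda s: s.start):
        if cur_end is None or seg.start > cur_end:
            # Disjoint from the current run: close it out and start a new one.
            if cur_end is not None:
                covered += cur_end - cur_start
            cur_start, cur_end = seg.start, seg.end
        else:
            # Overlapping (rewatched) material extends the run without double counting.
            cur_end = max(cur_end, seg.end)
    if cur_end is not None:
        covered += cur_end - cur_start
    return covered / duration


def time_ratio(segments: list[Segment], duration: float) -> float:
    """Wall-clock viewing time divided by the recording's run time."""
    return sum((s.end - s.start) / s.speed for s in segments) / duration


# Hypothetical viewer of a 1000-second podcast: watches the first 770 s,
# then rewinds and rewatches the first 230 s at normal speed.
segs = [Segment(0, 770), Segment(0, 230)]
print(coverage_fraction(segs, 1000), time_ratio(segs, 1000))
```

Because speed-ups shrink viewing time and rewinds inflate it, a time ratio near 1.0 alone cannot distinguish complete single-speed viewing from partial viewing with rewinds; the coverage fraction is what separates the two.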

Discussion

Given the improvement in test scores, we advocate for and support the AHA decision to incorporate FC and TBL techniques in the educational program for the 2015 ACLS guidelines. Per the AHA, evaluation of mastery in the ACLS course includes both content (written) and performance assessments. Successful students must demonstrate team leadership and psychomotor skills in managing cardiac arrest and peri-arrest scenarios. Although evidence for the content and construct validity of the written portion of ACLS assessment is largely lacking, and some evidence is to the contrary, written and performance assessments have been shown to correlate moderately. A previous study advocated for the two evaluation methods as complementary, since they demonstrate different psychomotor and cognitive skills [8,9]. Because similar content was delivered in both formats of our course, improvements in test scores suggest greater mastery of the required material. In the high-stakes environment of cardiac arrest, a higher level of demonstrated mastery is clearly preferable.

Further, although scores improved only marginally, fewer students failed 1, 2, or 3 components of the written tests. Recognizing that the only written assessment currently required for ACLS is MC, we felt that additional cognitive assessment was necessary to judge mastery of a broader set of ACLS skills, including rhythm interpretation and fill-in-the-blank clinical management.

Narrative student feedback on our TBL sessions was mixed, even though small-group sessions received an average rating of 4.7 on a five-point scale in numerical course evaluations. Some students viewed the TBL exercise as "inefficient," stating that discussion with classmates seemed unnecessary, and several said they could have done the work alone. Others valued the "team" learning and wanted to dispense with individual exercise time. These preferences seem to defeat the purpose of TBL, which relies on deliberate redundancy: individual integration of the material, then small-group discussion, followed by large-group validation. Many opined that, because the TBL exercise was not in MC format, it did not prepare them for their future testing needs.

We chose to implement TBL using individual and group readiness assurance tests (IRAT and GRAT), with a worksheet that required recall of ACLS algorithm steps in clinical management. These exercises were a distinct departure from the usual MC testing of our students. We believe that medical school testing in MC format has significant limitations. Recall that the United States Medical Licensing Examination (USMLE) Steps 1 and 2, and the "shelf" exams for basic science topics and clinical clerkships, are given entirely in MC format. One student criticized the IRAT and GRAT exercises in class, and the similar fill-in-the-blank case management testing, as "guess what the instructor is thinking." Yet for a student only 2 months from graduation, that is precisely the point: the fill-in-the-blank clinical scenario management test is designed to demonstrate the ability to "think like a doctor," rather than to regurgitate facts by choosing the best of five MC options. This perhaps speaks to a need to substantially diversify the USMLE format. We favor expanding evaluation methods that require the spontaneous generation of thought.

The FC/TBL model entails additional time and costs. The ACLS course required an additional 30 hours of class preparation (podcasts and worksheet development) by the instructor. Podcast recording cost an estimated $900 (9 hours at $100 per hour for technician time and use of the recording studio). Additional costs for post-production and hosting of the podcasts were absorbed by the infrastructure of the Division of Educational Technology at the School of Medicine. The remaining 21 hours of instructor preparation cost in excess of $2,000.

We had technical problems with the podcasts because of limited bandwidth when 95 students tried to view them simultaneously in the same location. Many students reported going home to watch them.

Students frequently say that they prefer podcasts to real-time instruction because they can both speed up playback (to 1.5 or 2 times normal speed) and review portions that they need to see again; they view this as more efficient. We found that these dynamics (speeding up, reviewing, and skipping content) balanced each other out: students who watched the podcasts spent 100% of the run time watching, even though they covered only 77.1% of each recording on average. Therefore, instruction by podcast does not appear to "save time" for medical students, as they spend the same time studying without being exposed to all of the content.

The FC model involves first exposure to material before class, so that face-to-face classroom time can engage students in active learning [10]. There are few, if any, reports of the FC model being used to teach ACLS. We therefore applied this model to an ACLS course and complemented it with TBL activities to promote group interaction, reflection, and student engagement. Our findings suggest that FC/TBL is a suitable model for teaching ACLS, as supported by marginal improvements in student assessments and by student feedback: "I felt the flipped classroom format was an excellent way to start the course--the worksheets stimulated a very helpful discussion," and, "I loved that we went over the cases and the script over and over through podcasts, quizzes, worksheets, and verbally. I think it appealed to each different style of learner."

Our study has limitations. For the FC component, our analytics show that up to one-third of students apparently did not watch the podcasts at all, and those who did watched, on average, about three-quarters of each recording. This confounds any claim that the new format was an improvement over the LB format. The improved performance may simply have been due to the greater total instructional time devoted to the course. We specifically allotted time in the mornings to watch the day's podcasts and scheduled TBL for the afternoons; other FC designs allow students to view content completely asynchronously at their sole discretion.

We used a single instructor for the large-group component of TBL. Other models, which may be preferable, have content experts facilitate small-group discussion; our large classroom did not support small-group discussion during TBL. Our ACLS class was graded pass/fail and included required remediation per AHA guidelines. Ultimately, almost all students passed the course and received a certification card. This "low-stakes" educational environment may have bred complacency in study habits and effort. Furthermore, placing the course at the end of the 4th year, just before or after Match Day (depending on the academic year), may have reduced students' motivation to master the course content. Finally, the class forming the experimental group in 2015 had received iPads on entry to medical school and was highly technologically savvy by its 4th year, which may have enhanced the effectiveness of the FC model.

In conclusion, an FC/TBL format for ACLS marginally improved written test results for final-year medical students over the traditional LB format, and significantly reduced the number of students who failed 1, 2, or 3 components of the written evaluation.

Appendix 1. Electrocardiography rhythm strip test and answer sheet

Appendix 2. UC Irvine Department of Emergency Medicine. Advanced cardiac life support course therapeutic modalities test, April 2015

Footnotes

Conflict of interest

No potential conflict of interest relevant to this article was reported.

Supplementary material

Audio recording of the abstract.

References

- 1. Subramanian A, Timberlake M, Mittakanti H, Lara M, Brandt ML. Novel educational approach for medical students: improved retention rates using interactive medical software compared with traditional lecture-based format. J Surg Educ. 2012;69:449–452. doi: 10.1016/j.jsurg.2012.05.013.

- 2. Littlewood KE, Shilling AM, Stemland CJ, Wright EB, Kirk MA. High-fidelity simulation is superior to case-based discussion in teaching the management of shock. Med Teach. 2013;35:e1003–e1010. doi: 10.3109/0142159X.2012.733043.

- 3. Center for Research on Learning and Teaching. Active learning [Internet]. Ann Arbor, MI: University of Michigan; 2012 [cited 2016 Jan 1]. Available from: http://www.crlt.umich.edu/tstrategies/tsal

- 4. Thompson BM, Schneider VF, Haidet P, Levine RE, McMahon KK, Perkowski LC, Richards BF. Team-based learning at ten medical schools: two years later. Med Educ. 2007;41:250–257. doi: 10.1111/j.1365-2929.2006.02684.x.

- 5. Wayne DB, Butter J, Siddall VJ, Fudala MJ, Wade LD, Feinglass J, McGaghie WC. Mastery learning of advanced cardiac life support skills by internal medicine residents using simulation technology and deliberate practice. J Gen Intern Med. 2006;21:251–256. doi: 10.1111/j.1525-1497.2006.00341.x.

- 6. American Heart Association. 2015 ACLS guideline update [Internet]. Dallas, TX: American Heart Association; 2015. Available from: http://view.heartemail.org/?j=fe581176716c07757410&m=fe681570756c02757514&ls=fdbb1573706d07747d11717762&l=fe8713787760007572&s=fe3015777561047c741379&jb=ffcf14&ju=fe24107971630d7d751274&r=0

- 7. Sinz E, Navarro K, Soderberg ES. Advanced cardiovascular life support provider manual. Dallas, TX: American Heart Association; 2011.

- 8. Rodgers DL, Bhanji F, McKee BR. Written evaluation is not a predictor for skills performance in an advanced cardiovascular life support course. Resuscitation. 2010;81:453–456. doi: 10.1016/j.resuscitation.2009.12.018.

- 9. Strom SL, Anderson CL, Yang L, Canales C, Amin A, Lotfipour S, McCoy CE, Langdorf MI. Correlation of simulation examination to written test scores for advanced cardiac life support testing: prospective cohort study. West J Emerg Med. 2015;16:907–912. doi: 10.5811/westjem.2015.10.26974.

- 10. Brame C. Flipping the classroom [Internet]. Nashville, TN: Vanderbilt University Center for Teaching; 2013 [cited 2016 Jan 1]. Available from: http://cft.vanderbilt.edu/guides-sub-pages/flipping-the-classroom/
