Abstract

Introduction and purpose:

A radiology information system (RIS) must be well designed in order to reduce workload and improve the quality of services. Heuristic evaluation is one of the methods that identify usability problems with the least time, cost and resources. The aim of the present study is to evaluate the usability of RISs in hospitals.

Research Method:

This cross-sectional descriptive study (2015) uses the heuristic evaluation method to evaluate the usability of the RISs used in 3 hospitals of Tabriz city. The data were collected using a standard checklist based on the 13 principles of the Nielsen heuristic evaluation method. Usability of the RISs was measured by the number of checklist components observed, and usability problems by the number of non-observed components as well as non-existent or unrecognizable components.

Results:

Evaluation of the RISs in hospitals 1, 2 and 3 yielded 173, 202 and 196 observed components, respectively. On average, 190 of the 291 components related to the 13 Nielsen principles were observed, giving a usability of 65.41% for the RISs in the studied population. Usability problems arising from non-observed components amounted to 26.35%.

Discussion and Conclusion:

The established and visible nature of some components, such as application response time, visual feedback, colors, layout, and the design and arrangement of software objects, draws more attention to these components as principal components in UI design. In addition, inadequate analysis before system design leads to a lack of attention to secondary needs such as software Help and security.

Keywords: Radiology information system, Heuristic evaluation, Usability, User interface

1. INTRODUCTION

The hospital, as one of the most important social organizations, plays a major role in a country’s health status, the provision of health and treatment services, and the promotion of health (1). Radiology is one of the diagnostic units in which part of the hospital’s fixed capital and human resources is concentrated (2). The radiology unit is active in all aspects of the care process, from detection and diagnosis to treatment, follow-up and evaluation of the course of treatment (3). Its activities include receiving referred patients, performing diagnostic examinations, providing diagnostic results to the referring physician and sending billing codes to the revenue management system (4). These activities can be optimized with the potential of information technology (5). The development and use of digital imaging systems play an important role in satisfying the needs of users and ultimately help improve the performance of the radiology unit and, in turn, the hospital (3-6).

RISs are networked software packages for managing the processes of the radiology department, and are used by the department for storing, manipulating and distributing patient data and images (7).

Today, few versions of information systems are complete, and their problems are usually recognized only after deployment. Evaluation is among the activities that provide valuable help in improving the design of information systems (8). Evaluation of health information systems is an important factor in the development of medical informatics (9). In fact, evaluating a health information system is of great importance for verifying its applicability in the provision of related services and for organizational investment decisions (10). The aspect of evaluation that deals with the interaction between the user and the information system is called usability evaluation (11).

Usability is defined as the “extent to which a product can be used by specific users to achieve a particular goal, and provide user satisfaction during use in addition to having effectiveness and efficiency” (12). A usable system helps users to work quickly, easily and with minimum mental effort (11). Usability evaluation methods can be divided into two broad categories: evaluation by users, where users try the software and evaluate it, and evaluation by specialists, where a specialist evaluates the usability of the system (11, 13, 14).

Heuristic evaluation is one of the specialist inspection methods; it assesses the compliance of a system with standard principles of user interface design for information systems (3). It is one of the easiest and quickest methods of usability evaluation, and takes less cost, time and resources than other evaluation methods for identifying usability problems (15). In heuristic evaluation, experts inspect the user interface and compare it with accepted usability principles. The results of the analysis are recorded as a list of potential usability problems (11).

In general, radiology is a busy and vital hospital unit, and computerizing its tasks is important. The software of this unit should interact appropriately with its users. Given the advantages of evaluating information systems described above, especially of heuristic evaluation, interaction problems between the user and the software can be identified and corrected. Since no study had been conducted on the status of RISs in the hospitals of Tabriz, and given the importance of the vital activities of radiology, the present research was conducted to determine the status of usability and to identify the usability problems of RISs in Tabriz hospitals with a digital radiology unit.

2. METHODOLOGY

The present cross-sectional descriptive study (2015) evaluates the RISs in three hospitals with digital radiology equipment in the city of Tabriz, using the heuristic evaluation method. Among the 10 public and two private hospitals of Tabriz, only one public hospital and two private hospitals used radiology software to manage, store and send images. Two hospitals did not have a radiology unit, five hospitals used analog systems, and two hospitals were setting up digital equipment in their radiology units, whose information systems were not yet in operation. The RISs in all three hospitals were completely local and worked separately from the hospital information system. The RISs were accessed for evaluation within the radiology units of the two private hospitals and the one teaching hospital. The evaluation was performed mainly on the RIS user interface and, in some cases, included observing the status of hardware equipment.

The data collection tool was the Nielsen standard checklist for heuristic evaluation of usability (16), which contains 13 principles for evaluating the usability of software. The principles in this checklist are: Visibility of System Status; Match Between System and the Real World; User Control and Freedom; Consistency and Standards; Help Users Recognize, Diagnose, and Recover from Errors; Error Prevention; Recognition Rather Than Recall; Flexibility and Minimalist Design; Aesthetic and Minimalist Design; Help and Documentation; Skills; Pleasurable and Respectful Interaction with the User; and Privacy.

After the checklist was translated by the researcher, the content validity of the tool was assessed by a number of experts (including four holders of a master’s degree in software and four PhD students in medical informatics). The purpose of this assessment was to answer the question of whether the content of the instrument is capable of measuring its defined purpose. Since the checklist was standard and the necessity of all components for evaluating usability had already been confirmed, only content validity was investigated.

Three trained evaluators evaluated the RISs independently using the components of the 13 Nielsen principles. The evaluators had work experience in user interface design and usability evaluation. The evaluation took place in May 2015: the evaluators visited the hospitals during the day and, in addition to working with the system, observed the reactions and performance of users and completed the checklist. Any disagreement about an evaluated component was resolved by holding group meetings, discussing and re-examining the component, and applying the majority opinion.
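The majority rule used to settle disagreements can be illustrated with a minimal sketch. The function name `majority_rating` and the rating labels are hypothetical and are not part of the checklist or of the study's tooling; the sketch covers only the final vote, not the group discussion and re-examination that preceded it.

```python
from collections import Counter


def majority_rating(ratings):
    """Return the rating given by a strict majority of evaluators.

    Returns None when no rating is shared by more than half of the
    evaluators, i.e. when the component needs further discussion.
    """
    label, count = Counter(ratings).most_common(1)[0]
    return label if count > len(ratings) / 2 else None


# Hypothetical ratings from three evaluators for two checklist components.
print(majority_rating(["observed", "observed", "not_observed"]))
print(majority_rating(["observed", "not_observed", "not_applicable"]))
```

When more than two rating labels are in use, three evaluators can disagree entirely; the sketch returns `None` in that case rather than picking a rating arbitrarily.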

Ratios were used to calculate usability and usability problems. The usability of each RIS was calculated as the ratio of the number of observed components to the total number of components; the usability problems in each hospital were calculated as the ratio of the number of non-observed and non-existent components to the total number of components. The usability rate of the RISs across the three hospitals was obtained by averaging the usability of the individual hospitals. The ratios were calculated in SPSS version 19, and graphs were drawn in Microsoft Excel 2010.
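The ratio calculations described above can be sketched as follows. This is an illustration under stated assumptions, not the study's SPSS procedure: the function names and the counts for a 20-item checklist are hypothetical.

```python
def usability_rate(observed, total):
    """Percentage of checklist components that were observed."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * observed / total


def problem_rate(non_observed, nonexistent, total):
    """Percentage of components that were non-observed or non-existent."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * (non_observed + nonexistent) / total


def mean_rate(rates):
    """Simple average of per-system rates."""
    return sum(rates) / len(rates)


# Hypothetical counts for two systems assessed against a 20-item checklist.
TOTAL = 20
systems = [
    {"observed": 15, "non_observed": 4, "nonexistent": 1},
    {"observed": 12, "non_observed": 5, "nonexistent": 3},
]

usability = [usability_rate(s["observed"], TOTAL) for s in systems]
problems = [problem_rate(s["non_observed"], s["nonexistent"], TOTAL) for s in systems]

print(usability, mean_rate(usability))
print(problems, mean_rate(problems))
```

Averaging the per-system rates, as in the text, equals pooling the counts only because every system is scored against the same total number of components.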

3. RESULTS

The findings of the present research indicate that the mean usability of the RISs in the three hospitals is 65.41%. The usability of each hospital is shown in Figure 1.

Figure 1.

The calculated usability for each hospital

According to Figure 1, the most usable RIS was that of the second hospital, with a usability of 69.42% (n = 202), and the RIS of the first hospital had the lowest usability, at 59.45% (n = 173). The third hospital, with a usability of 67.3% (n = 196), is in second place.
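As a check on the reported figures, the percentages follow from the observed counts and the 291 checklist components. The symbols U_1, U_2 and U_3 (usability of each hospital) are introduced here for illustration only, and the third value is shown to two decimals (reported above as 67.3%).

```latex
\begin{align*}
U_1 &= \frac{173}{291} \approx 59.45\%, \qquad
U_2 = \frac{202}{291} \approx 69.42\%, \qquad
U_3 = \frac{196}{291} \approx 67.35\%,\\
\bar{U} &= \frac{U_1 + U_2 + U_3}{3} = \frac{571}{873} \approx 65.41\%.
\end{align*}
```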

The evaluation showed that, among the principles studied, the highest rates of component observance were for the ninth principle (Aesthetic and Minimalist Design), with an average of 83.33%, the seventh principle (Recognition Rather Than Recall), with an average of 80%, and the first principle (Visibility of System Status), with an average of 78.16%. Figure 2 shows the usability related to each Nielsen principle.

Figure 2.

The average rate of usability calculated for each Nielsen principle

The usability problems of the RISs are discussed below. They are examined from two aspects:

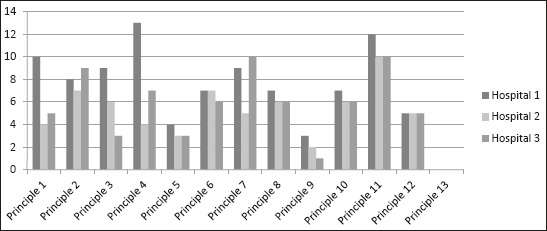

a) Problems arising from non-observance of components: these problems are caused by a checklist component not being observed in the software.

b) Problems arising from non-existent or missing principles and components: these problems are caused by ignoring or not considering some components during the design and implementation of the software, or by hardware facilities too limited to support these components.

The average rate of usability problems associated with non-observance of components was 26.35%. Figure 3 shows the number of non-observed components in each principle, by hospital.

Figure 3.

The number of non-observed components in each principle, by hospital

Most of the problems were identified in the first hospital, with 94 non-observed components, followed by the third hospital with 71 and the second hospital with 65.
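The reported mean of 26.35% for non-observed components can be reproduced from these counts and the 291 checklist components. The symbol below is introduced for illustration only.

```latex
\[
\bar{P}_{\text{non-observed}}
  = \frac{1}{3}\left(\frac{94}{291} + \frac{71}{291} + \frac{65}{291}\right)
  = \frac{230}{873} \approx 26.35\%
\]
```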

3.1. Extracted problems according to Nielsen components (principles)

Visibility of System Status: in evaluating the first principle, the major usability problems in all three hospitals were related to “lack of visual feedback on the cursor position on the options of menus or dialog boxes”, “failure to keep the user informed during significant system delays”, “lack of adequate facilities for moving between windows” and “ambiguity in selecting and deselecting menu options”.

Match Between System and the Real World: problems such as “inappropriate and illogical ordering of menu options”, “lack of logical and meaningful grouping of menu options”, “command language that relies on computer-specific jargon”, “lack of appropriate alignment of decimal points” and “no automatic insertion of commas in numbers” were confirmed in the RISs of all three hospitals.

User Control and Freedom: the major problems were related to “problems in arranging overlapping windows” and “inability of users to adjust files and system defaults”.

Consistency and Standards: the problems were “lack of definition of valid inputs and defaults for question-and-answer interfaces”, “inconsistent abbreviations” and “field labels not typographically distinguished from the fields themselves”.

Help Users Recognize, Diagnose, and Recover from Errors: the two main problems found in the software of all three hospitals were “not informing the user about the severity of the error” and “lack of multiple levels of detail in error messages for expert and novice users”. In addition, “lack of proper expression of error messages in relation to the error source” and “lack of a consistent style, form, terminology and abbreviations in all error messages” were identified in the first hospital, and “failure to use correct and gentle wording to express errors” in the second and third hospitals, as problems under the fifth principle.

Error Prevention: the following problems were found in the RISs of all three hospitals: “inability of the user to enter more than one group of data on a single screen”, “no dots or other marks to indicate field length”, “primary keys not used consistently throughout the system”, “not preventing user errors whenever possible”, “failure to warn users when they are exposed to a serious potential risk” and “lack of intelligent interpretation of changes in user commands”.

Recognition Rather Than Recall: the main usability problems in the RISs of all three hospitals were related to “failure to maintain the relationship between soft function keys and the function keys on the keyboard”, “no use of symbols to break long input strings into several pieces”, “no online access for users to a map of the menu structure when there are many or complex menu levels” and “menu items not being activated where needed and deactivated where not needed”.

Flexibility and Minimalist Design: the problems identified in all three hospitals were “lack of sufficient detail in error messages for expert and novice users”, “lack of an appropriate command grammar supporting both novice and expert users”, “no facility for novice users to enter the simplest and most common form of each command”, “no facility for expert users to enter several commands in a single string” and “no automatic filling in of zeros required by the system”.

Aesthetic and Minimalist Design: investigation of the components of the ninth principle identified “lack of a simple, short and clear title on data entry pages” as a common problem in the RISs of all three hospitals. In addition, in the RISs of the first and second hospitals, “icons in a set not being visually and conceptually distinct” was found as a non-observed component.

Help and Documentation: the RISs of all three hospitals had major problems with “supporting data entry screens and dialog boxes with additional and strategic guidelines”, “providing detailed information when an item is selected from the menu” and “user access to different levels of detail”.

Skills: the identified problems were “inability of users to choose between textual and symbolic display of data”, “lack of support for both expert and novice users through different levels of detail”, “no translation of data for the user”, “failure to avoid mixing alphabetic and numeric characters in field contents”, “no type-ahead option in deep, multilevel menus” and “poor assignment of function keys to important, general, high-frequency tasks”.

Pleasurable and Respectful Interaction with the User: the problems identified were “failure to avoid excessive detail in icon design”, “absence of an alternative pointing device to support graphics” and “no automatic, unambiguous completion of partial entries by the system in data entry fields”.

Privacy: there are only three components in this principle, none of which is included in the systems; they were therefore counted as problems associated with non-existent components in the RISs.

For problems of non-existent or undetectable components, the mean rate of usability problems was 8.59%. Figure 4 shows the number of non-existent or undetectable components in each principle, by hospital. According to these calculations, most of the problems of this kind were related to the tenth, fourth, third and thirteenth principles.

Figure 4.

Number of non-existent or undetectable components in each principle, by hospital.

Study of the non-existent components showed that the lack of definition of Help and of access provisions during the analysis and design of the RISs in all three hospitals resulted in some components, including those of the tenth and thirteenth principles, not being applied in the RISs.

4. DISCUSSION

Several studies have shown that some information systems have not been successful in achieving their predetermined goals and user satisfaction (17). Failing to modify or replace weak information systems can have negative effects on user performance (confusion, fatigue, loss of time) (18). In the present study, the highest usability was found, in order, for the principles of Aesthetic and Minimalist Design (83.33%), Recognition Rather Than Recall (80%) and Visibility of System Status (78.16%). A heuristic evaluation of a laboratory information system (LIS) likewise found that the principles of Aesthetic and Minimalist Design and Visibility of System Status were well respected (18).

Non-compliance with the components of the Consistency and Standards principle was the lowest in the RISs of the three hospitals. In an emergency information system, by contrast, the principle of Consistency and Standards had the highest rate of non-compliance, followed by Recognition Rather Than Recall and Match Between System and the Real World (15). To explain this contradiction, it can be said that although the general principles of interaction between user and software are observed in user interfaces, the strengths and weaknesses of user interface design in some systems are influenced by the nature of the system (19-22).

According to the results, problems due to unclear or non-existent components in the RISs fall into three broad categories: problems of inappropriate or missing definition of Help for the software; problems caused by the lack of defined views for different levels of users; and problems caused by the absence of sound in the software or by hardware too weak to support and play software sound.

In evaluating the RISs in the present study, it was concluded that software designers paid no attention to defining Help and documentation to assist users. The same result was found in a heuristic evaluation of an emergency information system (EIS) (15) and in heuristic evaluations of LISs (17, 18). One interpretation is that, because designers generally regard software Help as a secondary, additional facility, they do not treat it as a main requirement. However, users frequently encounter questions while working with the system, and many of these questions must be answered by management and system support staff; the capability to guide users in working with the system can therefore be provided as documentation within the system (15).

Another usability problem of the RISs in the present study is the lack of defined views for access to software objects. In all three RISs, no provisions were defined for usernames, passwords and access levels, so all users at every level, including radiologists, radiology technicians and system operators, could easily access all parts of the software. Given the importance of security, privacy and confidentiality in the electronic age, especially in the field of health, this is one of the most important problems in e-health; access control should be observed in all cases, regardless of the field for which the software is designed (23).

In the present study, because of limitations in sound hardware in the hospitals, including speakers and sound cards, it was not possible to evaluate user voice commands and voice interaction between software and user. This was therefore counted as a software usability problem. Although radiology software should ideally be equipped with a sound system and speech recognition for transcribing physicians’ orders (19), the RISs in the three hospitals worked in their most basic mode, completely local and separate from the hospital information system (HIS).

In general, the results showed that although these systems are new and would be expected to be accurately designed on the basis of user requirements and the relevant standards, they have many problems in some areas, such as security, the definition of different views to reduce unauthorized use, and convenient Help.

If these problems persist uncorrected, in the long term they harm user performance by causing confusion and wasted time and, as a result, user dissatisfaction. They also cause errors, thus reducing the quality of treatment and ultimately threatening the patient’s life. It is therefore necessary that software designers, in particular user interface designers, consider these principles and components when designing software, modifying existing software and providing new versions.

5. CONCLUSION

The results of this study showed that many problems can be prevented through greater care and observance of the standards and rules for system design, especially the standards related to human-computer interfaces. Problems such as lack of guidance in the system, inappropriate messages, improper display of numbers, lack of attention to auto-fill in the appropriate fields and inappropriate abbreviations can be solved by observing predefined international standards, thereby preventing user confusion and wasted time.

Acknowledgment

This study, conducted as an MSc thesis, was funded by the School of Health Management and Medical Informatics of Tabriz University of Medical Sciences (No: 5/4/1867).

Footnotes

• Author’s contribution: All authors were fully involved in the study and preparation of the manuscript including: (1) the conception and design of the study, or acquisition of data, or analysis and interpretation of data, (2) drafting the article or revising it critically for important intellectual content.

• Conflict of interest: none declared.

REFERENCES

1. Sadoughi F, Khoshkam M, Farahi SR. Usability Evaluation of Hospital Information Systems in Hospitals Affiliated with Mashhad University of Medical Sciences, Iran. Health Information Management. 2012;9(3).

2. Rabiei R, Moghaddasi H, Hosseini AS, Paydar S. A Study on Radiology Management Information System (RMIS) in Teaching Hospitals Affiliated to Medical Universities in Tehran. Health Information Management. 2013;12(1).

3. Cimino JJ, Shortliffe EH. Biomedical Informatics: Computer Applications in Health Care and Biomedicine (Health Informatics). Springer-Verlag New York, Inc; 2006.

4. Wolbarst AB. Imaging Systems for Medical Diagnostics: Fundamentals, Technical Solutions and Applications for Systems Applying Ionizing Radiation, Nuclear Magnetic Resonance and Ultrasound. LWW; 2006.

5. Houghton J. ICTs and the Environment in Developing Countries: Opportunities and Developments. The Development Dimension ICTs for Development Improving Policy Coherence: Improving Policy Coherence. 2010:149.

6. Griffin DJ. Hospitals: What they are and how they work. Jones & Bartlett Publishers; 2011.

7. Dreyer KJ, Hirschorn DS, Thrall JH, Mehta A. PACS: a guide to the digital revolution. Springer Science & Business Media; 2006.

8. Anderson JG, Aydin CE, Jay SJ. Evaluating health care information systems: methods and applications. Sage Publications, Inc; 1993.

9. Friedman CP, Wyatt J. Evaluation methods in biomedical informatics. Springer Science & Business Media; 2006.

10. Van Bemmel J, Musen M. What is medical informatics. Handbook of Medical Informatics. 1997:1.

11. Nielsen J. Usability engineering. Elsevier; 1994.

12. Jokela T, Iivari N, Matero J, Karukka M. The standard of user-centered design and the standard definition of usability: analyzing ISO 13407 against ISO 9241-11. Proceedings of the Latin American conference on Human-computer interaction. ACM; 2003.

13. Thyvalikakath TP, Monaco V, Thambuganipalle H, Schleyer T. Comparative study of heuristic evaluation and usability testing methods. Studies in health technology and informatics. 2009;143:322.

14. Yen PY, Bakken S. A comparison of usability evaluation methods: heuristic evaluation versus end-user think-aloud protocol - an example from a web-based communication tool for nurse scheduling. AMIA annual symposium proceedings. 2009.

15. Khajouei R, Azizi A, Atashi A. Usability Evaluation of an Emergency Information System: A Heuristic Evaluation. Journal of Health Administration. 2013;16(52):61–72.

16. Pierotti D. Usability techniques: Heuristic evaluation-a system checklist. 2006.

17. Nabovati E, Vakili-Arki H, Eslami S, Khajouei R. Usability evaluation of Laboratory and Radiology Information Systems integrated into a hospital information system. Journal of medical systems. 2014;38(4):1–7. doi: 10.1007/s10916-014-0035-z.

18. Agharezaei Z, Khajouei R, Ahmadian L, Agharezaei L. Usability Evaluation of a Laboratory Information System. Director General. 2013;10(2).

19. Salvado VFM, de Assis Moura L Jr. Heuristic evaluation for automatic radiology reporting transcription systems. Information Sciences Signal Processing and their Applications (ISSPA), 2010 10th International Conference on. IEEE; 2010.

20. Zhang J, Johnson TR, Patel VL, Paige DL, Kubose T. Using usability heuristics to evaluate patient safety of medical devices. Journal of biomedical informatics. 2003;36(1):23–30. doi: 10.1016/s1532-0464(03)00060-1.

21. Chan J, Shojania KG, Easty AC, Etchells EE. Usability evaluation of order sets in a computerised provider order entry system. BMJ quality & safety. 2011;20(11):932–40. doi: 10.1136/bmjqs.2010.050021.

22. Edwards PJ, Moloney KP, Jacko JA, Sainfort F. Evaluating usability of a commercial electronic health record: A case study. International Journal of Human-Computer Studies. 2008;66(10):718–28.

23. Shoniregun CA, Dube K, Mtenzi F. Electronic healthcare information security. Springer Science & Business Media; 2010.