Abstract

We address the problem of analyzing sets of noisy time-varying signals that all report on the same process but confound straightforward analyses due to complex inter-signal heterogeneities and measurement artifacts. In particular we consider single-molecule experiments which indirectly measure the distinct steps in a biomolecular process via observations of noisy time-dependent signals such as a fluorescence intensity or bead position. Straightforward hidden Markov model (HMM) analyses attempt to characterize such processes in terms of a set of conformational states, the transitions that can occur between these states, and the associated rates at which those transitions occur; but require ad-hoc post-processing steps to combine multiple signals. Here we develop a hierarchically coupled HMM that allows experimentalists to deal with inter-signal variability in a principled and automatic way. Our approach is a generalized expectation maximization hyperparameter point estimation procedure with variational Bayes at the level of individual time series that learns an single interpretable representation of the overall data generating process.

1. Introduction

Over the past two decades, single-molecule biophysical techniques have revolutionized the study of Nature's biomolecular machines by enabling direct observation of some of the cell's most fundamental and complex biochemical reactions (Tinoco & Gonzalez, 2011; Joo et al., 2008; Borgia et al., 2008; Neuman & Nagy, 2008; Cornish & Ha, 2007). At the molecular level, such reactions can be described as a ‘kinetic scheme’ that details the number of conformational states accessible to the biomolecular machine, the order in which these states are explored, and the associated transition rates. In single-molecule biophysics, the goal of inference is to reconstruct this kinetic scheme from a noisy time-dependent observable, such as a fluorescence intensity or bead position, which indirectly reports on the state transitions in a biomolecular process.

The learning task that motivates this work is the estimation of a consensus kinetic scheme from a multitude of time series. Single-molecule experiments commonly yield observations for an ‘ensemble’ of hundreds of molecules. An illustration of this analysis problem can be seen in figure 1. The time series shown are obtained in a single-molecule fluorescence resonance energy transfer (smFRET) experiment, where a fluctuating intensity ratio EFRET = IA/(ID + IA) of two fluorescent molecules, known as the donor and acceptor, reports on the conformational transitions of a molecule of interest. Conditioned on the conformational state these observables are approximately normally distributed, but their means vary significantly over the ensemble owing to a combination of image-processing artifacts and physical inhomogeneities. Moreover, the number of conformational transitions that can be observed for each molecule is limited by the life-time of the fluorescent labels, which have a fixed probability of photobleaching upon each excitation. Learning a common set of states and associated transition probabilities from time series such as in figure 1 remains a challenging task. Existing approaches use maximum likelihood (McKinney et al., 2006; Greenfeld et al., 2012) or variational Bayesian (Bronson et al., 2009) estimation on HMMs to infer independent models for each individual time series, resulting in a set of similar but variable parameter estimates. Experimentalists then resort to an ad-hoc semi-manual binning of states with similar observation means, after which the corresponding binned transition counts can be averaged over the ensemble to obtain a more informed estimate of the consensus kinetic rates, implicitly assuming an identical set of transition probabilities for all time series.

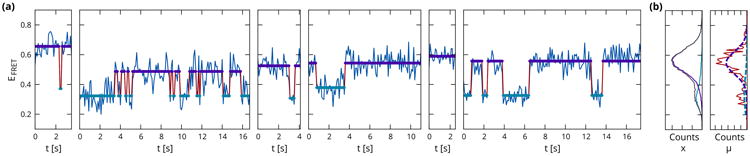

Figure 1.

(a) Sample time series from a single-molecule fluorescent energy transfer (smFRET) experiment, which measures a ratio of intensities of two fluorescent labels EFRET = IA/(ID + IA) to detect conformational transitions between two states (see section 5). Analysis with a variational Bayesian HMM shows states with means and precisions that vary significantly from molecule to molecule. Moreover photobleaching of the fluorophores results in time series of variable (exponentially distributed) lengths. (b) Histogram of observables xn, t, and inferred state means μn, k (red) along with empirical Bayes estimates of priors (dotted lines) for an ensemble of N = 336 time series from a single experiment.

The contribution presented here is to develop hierarchically coupled HMMs to learn a distribution on the parameters for each state. Specifically, we assume a HMM joint p(xn, zn | θn) for the observables xn and latent states zn for each time series n ∈ [N], conditioned on a set of parameters θn drawn from a shared prior p(θ | ψ). In the statistical community this is known as a conditionally independent hierarchical model (Kass & Steffey, 1989). The hyperparameters are estimated with an empirical Bayes approach (Morris, 1983). This generalized expectation (EM) procedure iteratively performs variational Bayes (VB) estimation for each time series, after which the summed lower bound is maximized with respect to ψ.

The resulting procedure, which we will call variational empirical Bayes (VEB), infers a single consensus parameter distribution from an ensemble of time series that represents the kinetic scheme that is of experimental interest. The VEB procedure also yields more accurate inference results in individual time series. This is a well-known feature of empirical Bayes models (Berger, 1982), that intuitively follows from the fact that the shared prior p(θ | ψ) incorporates knowledge of parameter values across the ensemble that can aid the inference process in individual time series. Finally our hierarchical construction allows comparison of prior densities to their ensemble-averaged posterior estimates, providing the experimentalist with a intuitive diagnostic for the agreement between observed experimental data and a chosen graphical model, which can then inform the next iteration of model design.

2. Related Work

Variational approaches are a mainstay of Bayesian inference (Jordan et al., 1999; Wainwright & Jordan, 2008; Bishop, 2006). Variational Bayes estimation for HMMs has previously been applied to smFRET experiments (Bronson et al., 2009; Fei et al., 2009; Bronson et al., 2010), and these techniques have both been used directly (Sorgenfrei et al., 2011) and in a modified form (Okamoto & Sako, 2012) in the context of several other single-molecule experiments.

Methods that obtain point estimates for a set of hyperparameters are known under a variety of names, including empirical Bayes, type II maximum likelihood, generalized maximum likelihood and evidence approximation (Bishop, 2006). A common use for such techniques is to adaptively set the hyperparameters of a model to values that are appropriate for a given inference application. These approaches have the well-documented feature of allowing more accurate inferences for individual samples in learning scenarios where parameters are correlated (Morris, 1983; Kass & Steffey, 1989; Carlin & Louis, 1996), which can be interpreted as a generalization of the Stein effect (Casella, 1985; Stein, 1981; Berger, 1982). In some cases, such as mixture models, type II maximum likelihood methods can also be employed in quasi non-parametric manner, by using a larger than required number of mixture components and relying on the inference procedure to leave superfluous components unpopulated (Corduneanu & Bishop, 2001).

In this biophysical application, the hyperparameters provide an explicit representation of the common features in multiple time series, which are individually represented as HMMs with Gaussian observations

For each n ∈ [N] time series, the set of parameters θn = {μn, λn, An, πn} are drawn from the priors

This graphical model (fig 2a) represents each of the k ∈ [K] consensus states with a set hyperparameters ψ = {mk, βk, ak, bk, αk, ρ}, introducing a hierarchical coupling that can be used to represent an ensemble of HMMs with similar yet not identical parameters. This type of construction is more expressive than a model with identical parameters for each time series (fig 2b). At the same time the use of differentiated priors defines a correspondence between the states in individual time series and a set of consensus states, eliminating label-switching issues across the ensemble.

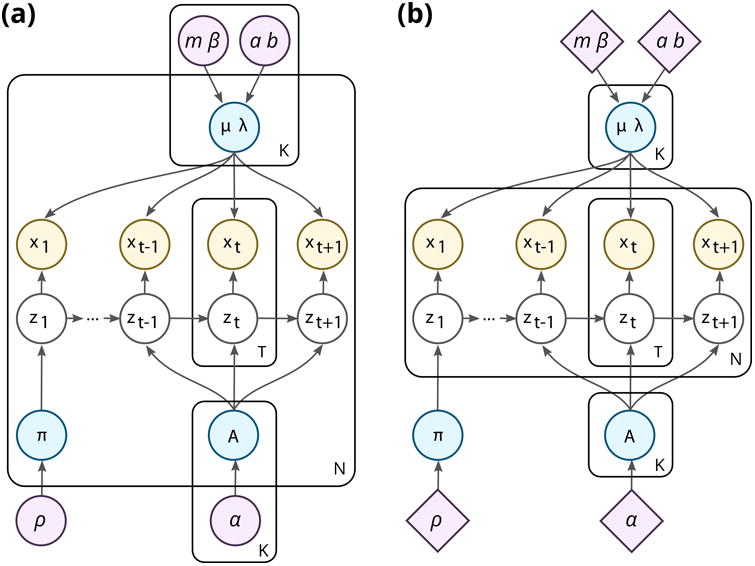

Figure 2.

HMMs for ensembles of time series. (a) Our model employs a separate set of hyperparameters for each state, representing a consensus model whose parameters are allowed to vary across the ensemble. (b) A fully shared approach with a single set of K states, which are identical for all N time series, and are each drawn from the same (typically uninformative) prior.

As we will see in our discussion of results, estimation of the hyperparameters allows us to take advantage of the known features of empirical Bayes methods, including increased accuracy of inferred parameters and adaptive selection of model complexity. However, from the point of view of modeling single-molecule biophysical systems the most substantial advantage of this type of approach is one of dimensionality reduction. A comparatively small set of K(K + 5) hyperparameters provides a single model that incorporates the information contained in hundreds of single-molecule time series in a statistically robust manner. In contrast to applications where empirical Bayes estimation is used to aid posterior inference, the learned hyperparameters in this hierarchical model are in fact the primary quantity of interest.

3. Variational empirical Bayes

All variants of expectation maximization procedures have equivalent formulations in terms of the minimization of a Kullback-Leibler (KL) divergence, or the maximization of a (lower bound marginal) likelihood. In the first formulation an optimization criterion for parametric empirical Bayes models can be written as

| (1) |

Intuitively this self-consistency relationship states that the joint prior p(z, θ|ψ*) should be chosen to be as similar as possible to the posterior p(z, θ | x, ψ*).

A dual problem for equation 1 can be constructed by introduction of a variational approximation to the posterior. Given any function q(z, θ), the marginal likelihood p(x|ψ), also known as the evidence, can be trivially represented as

Taking the logarithm of the above equation, followed by an expectation over q(z, θ) yields the relationship

In a model where p(x, z, θ|ψ) = p(x | z, θ)p(z, θ | ψ) we can additionally write

Because Lveb ≤ logp(x|ψ), this quantity is often called an evidence lower bound (ELBO). Maximization of Lveb with respect to q(z, θ) is equivalent to minimization of the KL-divergence between q(z, θ) and the posterior, whereas maximization with respect to ψ minimizes the KL-divergence between q(z, θ) and the prior. These two steps can be combined to construct an iterative optimization algorithm

If the posterior could be calculated directly, we could solve the first equation by setting q(z, θ) = p(z, θ|x, ψ). The second equation then gives us the solution the hyperparameter estimation problem posed in equation 1. In this scenario, parametric empirical Bayes estimation is equivalent to an expectation maximization (EM) algorithm for the marginal likelihood p(x | ψ).

For models where the posterior cannot be calculated directly we can substitute any analytically convenient form for q(z, θ) and calculate an approximation

An often convenient choice is a factorized form

which in conjugate exponential family models guarantees that the approximate posterior has the same analytical form as the prior, i.e. q(θn) = p(θn | ψ̂n), where ψ̂n is a set of variational posterior parameters that can typically be expressed as a weighted average of the hyperparameters and the expectation of a set of sufficient statistics (Beal, 2003).

With the above factorization, we can construct an approximate inference procedure for parametric empirical Bayes models, which we call variational empirical Bayes (VEB), that optimizes Lveb through

This method is directly related to existing variational inference techniques on HMMs, which employ the lower bound

Because optimization w.r.t. q(z, θ) simply reduces to performing VB estimation on each of the individual time series.

The hyperparameter updates ∂Lveb/∂ψ = 0 take the form of a coupled set of equations over the n ∈ [N] time series in the ensemble

We can express these coupled equations in terms of a set of ensemble averages, defined by

For a Dirichlet prior, the hyperparameter updates simply match the log expectation values

In terms of ψ these log expectation values can be expressed in terms of the digamma function Ψ

While these equations have no analytical solution their stationary point can be found efficiently with a Newton iteration method (Minka, 2012).

Maximimzation with respect to the Normal-Gamma prior on μ and λ similarly yields four relationships between prior expectation values and their ensemble posterior averages

which are equivalent to the following updates for ψ

where the ensemble expectations are given by

4. Performance on simulated data

Because VEB estimation is a generalized EM algorithm for the hyperparameters, it is in principle subject to the usual concerns about overfitting and the number of restarts required to avoid local maxima. At the same time results for type II maximum likelihood estimation of mixture weights suggest (Corduneanu & Bishop, 2001) that such estimation schemes may leave superfluous states unpopulated, and the empirical Bayes literature suggests that hyperparameter estimation results in more accurate posterior inference (Morris, 1983). As a pragmatic approach to evaluating the performance of this method we have sampled simulated datasets from a range of experimentally plausible parameters, and compared inference results from the VEB algorithm to those obtained with maximum likelihood (ML) and variational Bayesian (VB) methods.

We performed inference on simulated datasets containing N = 500 time series, each with T = 100 time points, exploring K = 3, 4, 5 evenly spaced states at distance Δμ = 0.2, and increase the noise relative to this separation. Noise levels for σ = λ−1/2/Δμ range from 0.1 (unrealistically noiseless) to 0.9 (unrealistically noisy), where 0.5 can be considered an upper limit of data that still permits analysis with state-of-the-art algorithms. In typical experiments the variance of apparent state means Vp(θ|ψ)[μ] is of a similar order of magnitude as the variance of the observables Vp(x|θ, z) [x]. For the purposes of this numerical experiment, we simulated a relatively homogeneous ensemble with Vp(θ|ψ)[μ] = 0.4 Vp(x|θ, z) [x] in order to isolate the influence of the experimental signal-to-noise ratio on the difficulty of the inference problem.

In single-molecule biophysical applications, the goal of inference is to reconstruct a kinetic scheme in the form of a set of consensus states and associated transition probabilities. For this reason the quantities that are of primary interest are the transition pseudo-counts

In ML and VB and estimation, the inferred states in each time series are unconnected, so we must construct some form of mapping (n, i) ↦ (n, k) to identify a set of consensus states. Here we use an approach that could typically be applied in existing experimental work flows, which is to simply cluster the inferred state means using a Gaussian mixture model. The remapped set of counts ξn,kl can now be used to calculate the error relative to the true counts that were sampled from the generative model.

We identify an ‘occupancy’ error for the diagonal terms and a ‘transition’ error for the off-diagonal terms, both defined in terms of the number of false positives and false negatives in the inferred transition counts. These quantities have the advantage of having simple intuitive interpretations. One is the error in the fraction of time spent in each state, whereas the other is the fraction of spurious/missed transitions.

The error rates obtained from this analysis are shown in figure 3a. By both measures, the VEB algorithm significantly outperforms the ML and VB approaches over the entire range of noise levels. From an experimental perspective, this suggests that switching from a VB to a VEB procedure yields an improvement in accuracy comparable to increasing the signal-to-noise ratio of the measurements by a factor 2.

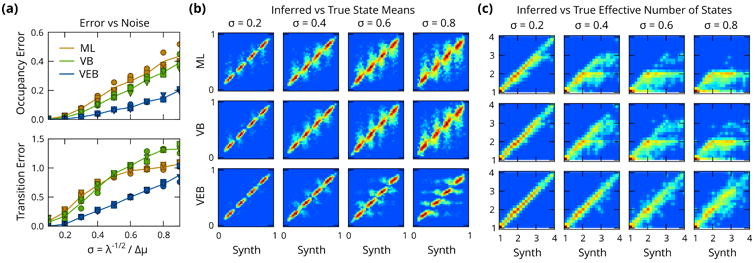

Figure 3.

Validation on simulated data. (a) Errors in inferred transition pseudocounts, separated into diagonal ‘occupancy’ and off-diagonal ‘transition’ terms. By both measures the VEB algorithm (blue) significantly outperforms VB (green) and ML (orange) methods. (b) Histograms (logarithmically scaled) of inferred vs true state mean μz(t), for simulated datasets with K = 4. The increasing inference error is visible in the progressively blurred distribution along the vertical axis, which is markedly reduced when using VEB inference. (c) Effective number of states. ML and VB estimation (using the BIC and ELBO for model selection respectively), both systematically underestimate the number of states, whereas VEB algorithm shows a significantly improved performance, even at high noise.

Figure 3b shows 2-dimensional histograms (logarithmically scaled) that represent the joint distribution of inferred and true means μk. Learning parameter distributions for each state results in well-defined peaks of the inferred posterior means, whereas the distributions for ML and VB become progressively poorly defined as the noise level increases.

To evaluate the accuracy of inference in terms of the number of states populated in each time series, we calculate a quantity known as the effective number of degrees of freedom, which is based on the Shannon entropy of the time-averaged posterior

Note that for a discrete distribution with K choices, equal probability Q(z) = 1/K for each outcome yields

whereas any distribution of lower entropy will have a correspondingly smaller number Keff, resulting in a continuous measure of the degrees of freedom as a function of state occupancy.

Figure 3c shows 2-dimensional histograms of the inferred and true number of effective states for the same dataset shown in figure 3b. In VB and ML inference, we perform model selection on each time series using the lower bound Lvb and BIC respectively. VEB is given the correct maximum number of states at the hyperparameter level, but no additional model selection is performed for individual time series. The results in figure 3c show a clear picture: at high noise levels, both VB and ML estimation procedures underestimate the number of states, whereas VEB estimation is much more robust.

These results can each be seen as instances of the well known Stein effect (Berger, 1982): an estimator that exploits shared information between observations, in our case different time series, can provide more accurate inferences than an estimator that lacks this shared information. Empirical Bayes methods obtain information about the distribution of the parameters p(θ|ψ) that is not available in ML and VB approaches.

In other words, the prior knowledge encoded in the estimated hyperparameters can be used to obtain more robust inference results, as visible in the transition probabilities (fig 3a) and state means (fig 3b).

We additionally observe that VEB inference populates the correct number of states in individual time series without needing model selection criteria (fig 3c), which is consistent with the results obtained for mixture models (Corduneanu & Bishop, 2001). A caveat is that all three analysis methods have thus far been supplied with a correct guess of the maximum number of states at the ensemble level. We will return to the question of ensemble model selection in section 6.

5. Analysis of smFRET data

The VEB method introduced in this paper can be implemented for any single-molecule experiment amenable to analysis with HMMs and related graphical models. Here we focus on the analysis of smFRET experiments, which track conformational changes in real time by measuring the anti-correlated intensity changes of a pair of fluorophores, known as the donor and acceptor. Figure 4 shows analysis of a set of experiments that investigate the mechanism of protein synthesis, or translation, by the bacterial ribosome. Specifically these experiments focus on a process known as translocation, the precise motion of the ribosome along its messenger RNA (mRNA) template as it synthesizes the protein encoded by the mRNA (for a review see (Tinoco & Gonzalez, 2011)).

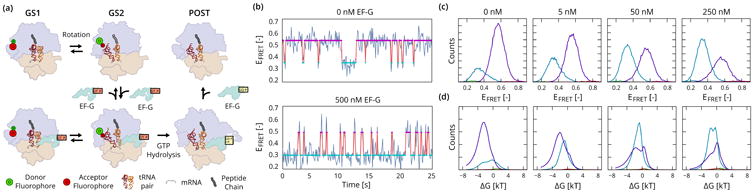

Figure 4.

smFRET studies of translocation in the bacterial ribosome (Fei et al., 2009). (a) The dominant pathway for translocation is believed to have three steps: A reversible rotation of the two subunits (purple and tan), followed by the binding of EF-G (green) which stabilizes the rotated GS2 state long enough for a GTP-driven transition to the post-translocation (POST) complex to take place. (b) smFRET signals show a shift of the equilibrium from the GS1 state (magenta, EFRET ≃ 0.55) towards the GS2 state (cyan, EFRET ≃ 0.35) in the presence of EF-G. (c) Histograms of observables xn, t, split by state, showing a continuous shift of the equilibrium towards GS2 as the concentration of EF-G is increased. Out of 4 states used for inference, only two dominant states are significantly populated. (d) Summed posterior distributions on the relative free energy q(ΔGn, k), showing a weak bi-modal distribution in the free energy of the GS2 state whose lower component becomes more populated with increasing EF-G concentration.

The hypothesized mechanism for translocation (figure 4a) breaks down into 3 kinetic steps. The first step is a thermally driven, reversible transition between two conformational states of the pre-translocation ribosomal complex (labeled GS1 and GS2). This transition is followed by the binding of a GTPase translation factor, EF-G, which has the effect of stabilizing the GS2 long enough to enable a GTP hydrolysis-driven movement of the ribosome along the mRNA template. The first two steps in this kinetic scheme can be studied experimentally by substitution of GTP with a non-hydrolyzable GTP analogue, preventing the GTP hydrolysis-driven final step in the translocation reaction. Figure 4b shows two time series that measure reversible transitions between the GS1 and GS2 states, customarily plotted as the intensity ratio of the donor and acceptor fluorophores, which is given by EFRET = IA/(IA + ID). The first, recorded in the absence of EF-G, shows a preference for the GS1 state. The second time series, taken from an experiment where 500 nM EF-G was added to the solution, shows a shift of the equilibrium towards the GS2 state.

Analysis of 4 experiments performed with EF-G concentrations of 0 nM to 250 nM are shown in figure 4c. We perform VEB inference with 4 states on the ensemble of traces from all experiments to learn a set of shared states for the entire series. In each of the experiments we observe two dominant states, corresponding to the GS2 (cyan) and GS1 (magenta) state respectively. Histograms of observations, on the top row, show a shift in the GS1-GS2 equilibrium towards the GS2 state as the concentration of EF-G is increased.

The marginal probability for each state can be expressed in terms of a free energy Gk via the relation q(z=k) ∼ exp[−Gk/kBT]. An energy relative to other states can be defined as

allowing formulation of a set of posterior distributions

Figure 4d shows the posterior ensemble-averaged distributions on ΔGk. The distribution for the GS1 state exhibits a bi-modal signature, indicative of a mixed population of EF-G bound and unbound molecules. At low EF-G concentrations, the lower mode dominates, whereas the upper mode takes over at high EF-G concentrations, indicating an energetically favorable GS2 state. This type of bi-modal signature in the posterior would be difficult, if not outright impossible to discern with existing non-hierarchical approaches.

6. Model Selection Criteria

Our analysis of simulated data in section 4 suggests that the VEB procedure may exhibit a resistance to overfitting similar to what has been observed in other type II maximum likelihood studies. In particular, we could supply the method with a larger than sufficient maximum number of states at the hyperparameter level and hope to learn a model that leaves superfluous degrees of freedom unpopulated. To see how well such an approach works in practice we compare analysis of our experimental dataset in the absence of EF-G to a simulated dataset with similar hyperparameters, i.e. we first perform inference with a single population on the experimental dataset, and then use the inferred hyperparameters to generate our simulated data.

Results of this analysis are shown in figure 5. Inference on experimental data shows a more or less monotonic increase of the lower bound. This same trend is visible in the lower bound on held-out data obtained from 10fold cross-validation. The reason for this becomes apparent when examining histograms of the observations, shown in figure 5c. On a semi-logarithmic scale normally distributed observables should show a parabolic profile. The experimental histograms at K = 2 show significant discrepancies with respect to this distribution, in the form of asymmetries and long tails, which are separated into additional states when inference is performed with a higher number of degrees of freedom.

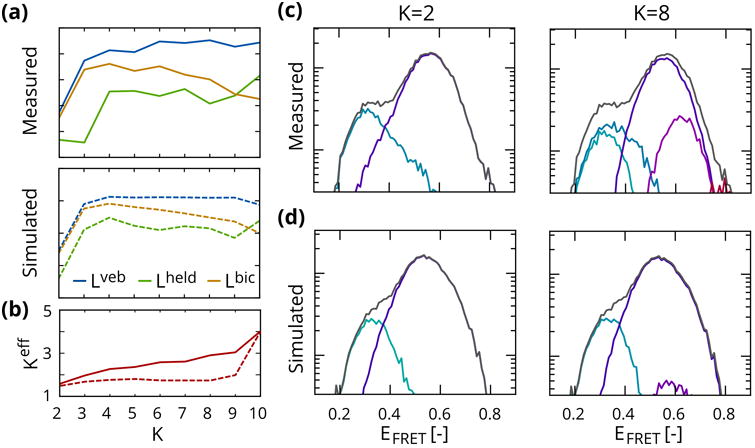

Figure 5.

Dependence on number of states in experimental and simulated data. (a) Lower bound Lveb (blue), lower bound on held out data (green) and heuristic lower bound Lbic (orange). (b) The effective number of states increases monotonically for experimental data, but does not reveal significant over-fitting on simulated data. (c) Experimental data show asymmetries and long tails, resulting in population of additional states. (d) In simulated data, which lack these discrepancies with respect to the generative model, additional states are left largely unpopulated.

Given that the parameters for each time series are i.i.d., we can attempt to use a heuristic like the BIC = −2Lveb + K(K + 5) log N for model selection. This yields a model with 4 states. We now use the estimated hyperparameters to sample a simulated dataset from the generative model. In the absence of artifacts and discrepancies with respect to the underlying model, the monotonic increase of Lveb is absent, and we in fact observe an all but infinitesimal decrease when overfitting the data. This decrease is also present, if not necessarily more pronounced, in the lower bound on held out data obtained from cross-validation. This qualitatively different outcome is also reflected in the effective number of states, shown in figure 5b, which increases much more modestly as a function of K as compared to the experimental case.

In short, these results show that the VEB method does in principle have a built-in resistance to overfitting, in the sense that superfluous states are left unpopulated by the algorithm. Moreover solutions that do over-fit the data exhibit a decreased evidence lower bound. At the same time analysis of actual biophysical data shows that this feature of the method is in practice outweighed by discrepancies between the data and the hypothesized generative model. Model selection on real biological datasets is therefore limited by our ability to construct a generative model that is not overly sensitive to such discrepancies.

7. Discussion

We have formulated an approximate parametric empirical Bayes procedure on HMMs based on variational inference, for learning of a single model from a multitude of biological time series. The estimation of parameter distributions that lies at the core of this method can be thought of as a mechanism for introducing a “loose” sharing of parameters between time series. This type of approach could therefore be useful in any type of learning scenario where it is desirable to extract a single interpretable model from an ensemble of similar yet heterogeneous time series.

The methodology employed here has a number of desirable features, including increased accuracy of posterior inference and a built-in resistance to overfitting. However its main advantage in the context of single-molecule biophysics is that it enables robust estimation on large numbers of time series. The learned hyper-parameters not only provide a single summary representation of an entire experiment, but are also useful in validating mechanistic hypotheses encoded in different graphical model variants. Comparison of prior and posterior densities allows detailed evaluation of the agreement between observed data and a chosen graphical model, even in the case where one may not wish to blindly employ the evidence lower bound as a model selection criterion. For example, the bi-modal signature of the posterior in figure 4 suggests that a more detailed analysis could be performed by assuming a mixture of parameter distributions p(A | ψm) conditioned on a new latent state that indicates the presence of an EF-G molecule bound to the ribosomal complex.

In experimental platforms beyond smFRET, several obvious extensions to graphical models related to the HMM could extend the scope of applications of this VEB approach. Double Markov chain models can be used to model diffusive motion governed by a latent state, as observed in tethered particle motion experiments (Beausang et al., 2007). Factorial HMMs (Ghahramani & Jordan, 1997), can be used to model systems with orthogonal degrees of freedom such as the internal state of a molecular machine that performs a stepping motion along a substrate, or dynamic binding and unbinding of external factors.

All code used in this publication is available as open source on http://ebfret.github.com.

Acknowledgments

The authors would like to thank Matt Hoffman and David Blei for helpful discussions. This work was supported by an NSF CAREER Award (MCB 0644262) and an NIH-NIGMS grant (R01 GM084288) to R.L.G.; and a Rubicon fellowship from the Netherlands Organization for Scientific Research (NWO) to J.W.M.

Contributor Information

Jan-Willem van de Meent, Email: JANWILLEM.VANDEMEENT@COLUMBIA.EDU.

Jonathan E. Bronson, Email: JONATHAN.BRONSON@GMAIL.COM.

Frank Wood, Email: FWOOD@ROBOTS.OX.AC.UK.

Ruben L. Gonzalez, Jr., Email: RLG2118@COLUMBIA.EDU.

Chris H. Wiggins, Email: CHRIS.WIGGINS@COLUMBIA.EDU.

References

- Beal MJ. Phd thesis. University College; London: 2003. Variational Algorithms for Approximate Bayesian Inference. [Google Scholar]

- Beausang JF, Zurla C, Manzo C, Dunlap D, Finzi L, Nelson PC. DNA looping kinetics analyzed using diffusive hidden Markov model. Biophys J. 2007 May;92(8):L64–6. doi: 10.1529/biophysj.107.104828. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berger J. Bayesian Robustness and the Stein Effect. JASA. 1982 Jun;77(378):358–368. [Google Scholar]

- Bishop CM. Pattern recognition and machine learning. Springer; New York: 2006. [Google Scholar]

- Borgia A, Williams PM, Clarke J. Single-molecule studies of protein folding. Ann Rev Biochem. 2008 Jan;77:101–25. doi: 10.1146/annurev.biochem.77.060706.093102. [DOI] [PubMed] [Google Scholar]

- Bronson JE, Fei J, Hofman JM, Gonzalez RL, Wiggins CH. Learning rates and states from biophysical time series: a Bayesian approach to model selection and single-molecule FRET data. Biophys J. 2009 Dec;97(12):3196–205. doi: 10.1016/j.bpj.2009.09.031. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bronson JE, Hofman JM, Fei J, Gonzalez RL, Wiggins CH. Graphical models for inferring single molecule dynamics. BMC Bioinf. 2010 Jan;11(Suppl 8)(Suppl 8):S2. doi: 10.1186/1471-2105-11-S8-S2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carlin BP, Louis TA. Bayes and Empirical Bayes Methods for Data Analysis. Chapman & Hall; London: 1996. Number 1. [Google Scholar]

- Casella G. An introduction to empirical Bayes data analysis. Am Statistician. 1985;39(2):83–87. [Google Scholar]

- Corduneanu Adrian, Bishop CM. Variational Bayesian model selection for mixture distributions. AISTATS. 2001 [Google Scholar]

- Cornish PV, Ha T. A survey of single-molecule techniques in chemical biology. ACS Chem Biol. 2007 Jan;2(1):53–61. doi: 10.1021/cb600342a. [DOI] [PubMed] [Google Scholar]

- Fei J, Bronson JE, Hofman JM, Srinivas RL, Wiggins CH, Gonzalez RL. Allosteric collaboration between elongation factor G and the ribosomal L1 stalk directs tRNA movements during translation. Proc Nat Acad Sci USA. 2009 Sep;106(37):15702–7. doi: 10.1073/pnas.0908077106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ghahramani Z, Jordan MI. Factorial Hidden Markov Models. Machine Learning. 1997;29(2-3):245–273. [Google Scholar]

- Greenfeld M, Pavlichin DS, Mabuchi H, Herschlag D. Single Molecule Analysis Research Tool (SMART): An Integrated Approach for Analyzing Single Molecule Data. PloS One. 2012 Jan;7(2):e30024. doi: 10.1371/journal.pone.0030024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Joo C, Balci H, Ishitsuka Y, Buranachai C, Ha T. Advances in single-molecule fluorescence methods for molecular biology. Ann Rev Biochem. 2008 Jan;77:51–76. doi: 10.1146/annurev.biochem.77.070606.101543. [DOI] [PubMed] [Google Scholar]

- Jordan MI, Ghahramani Z, Jaakkola TS. An introduction to variational methods for graphical models. Machine Learning. 1999;233:183–233. [Google Scholar]

- Kass RE, Steffey D. Approximate Bayesian inference in conditionally independent hierarchical models (parametric empirical Bayes models) JASA. 1989;84(407) [Google Scholar]

- McKinney SA, Joo C, Ha T. Analysis of single-molecule FRET trajectories using hidden Markov modeling. Biophys J. 2006 Sep;91(5):1941–51. doi: 10.1529/biophysj.106.082487. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Minka Thomas P. Technical Report 8. MIT; 2012. Estimating a Dirichlet distribution. [Google Scholar]

- Morris CN. Parametric Empirical Bayes Inference: Theory and Applications. JASA. 1983 Mar;78(381):47–55. [Google Scholar]

- Neuman KC, Nagy A. Single-molecule force spectroscopy: optical tweezers, magnetic tweezers and atomic force microscopy. Nat Methods. 2008 Jun;5(6):491–505. doi: 10.1038/nmeth.1218. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Okamoto K, Sako Y. Variational Bayes Analysis of a Photon-Based Hidden Markov Model for Single-Molecule FRET Trajectories. Biophys J. 2012 Sep;103(6):1315–24. doi: 10.1016/j.bpj.2012.07.047. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sorgenfrei S, Chiu CY, Gonzalez RL, Yu YJ, Kim P, Nuckolls C, Shepard KL. Label-free single-molecule detection of DNA-hybridization kinetics with a carbon nanotube field-effect transistor. Nat Nanotechnol. 2011 Feb;6(2):126–32. doi: 10.1038/nnano.2010.275. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Stein CM. Estimation of the Mean of a Multivariate Normal Distribution. Ann Stat. 1981 Nov;9(6):1135–1151. [Google Scholar]

- Tinoco I, Gonzalez RL. Biological mechanisms, one molecule at a time. Genes Dev. 2011 Jun;25(12):1205–31. doi: 10.1101/gad.2050011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wainwright MJ, Jordan MI. Graphical Models, Exponential Families, and Variational Inference. Found Trends Machine Learning. 2008;1(12):1–305. [Google Scholar]