Abstract

Background

Direct ophthalmoscopy is an essential skill that students struggle to learn. A novel 'teaching ophthalmoscope' has been developed that allows a third person to observe the user's view of the fundus.

Objectives

To evaluate the potential use of this device as an aid to learning, and as a tool for objective assessment of competence.

Methods

Participants were randomised to be taught fundoscopy either with a conventional direct ophthalmoscope (control) or with the teaching device (intervention). Following this teaching session, participant competence was assessed within two separate objective structured clinical examination (OSCE) stations: the first with the conventional ophthalmoscope and the second with the teaching device. Each station was marked by two independent masked examiners. Students were also asked to rate their own confidence in fundoscopy on a scale of 1-10. Scores of competence and confidence were compared between groups. The agreement between examiners was used as a marker for inter-rater reliability and compared between the two OSCE stations.

Results

Fifty-five medical students participated. The intervention group scored significantly better than controls on station 2 (19.8 vs 17.6; P=0.01). They reported significantly greater levels of confidence in fundoscopy (7.3 vs 4.9; P<0.001). Independent examiner scores showed significantly improved agreement when using the teaching device during assessment of competence, compared to the conventional ophthalmoscope (r=0.90 vs 0.67; P<0.001).

Conclusion

The teaching ophthalmoscope is associated with improved confidence and objective measures of competence, when compared with a conventional direct ophthalmoscope. Used to assess competence, the device offers greater reliability than the current standard.

Introduction

Almost two decades after Roberts et al1 first described the risk of ocular fundoscopy becoming a ‘forgotten art', the direct ophthalmoscope continues to be a topic of heated discussion.2, 3 Roberts observed that the direct ophthalmoscope was an under-utilised tool in the clinical setting and that doctors lacked confidence in its use. Since then, medical students, junior doctors, and general practitioners have consistently reported low levels of confidence in ophthalmoscopy.4, 5, 6, 7, 8 Although some parties have suggested that direct ophthalmoscopy might be replaced by ocular fundus photographs,3, 9 pan-optic ophthalmoscopes2, 10 or by smartphone technology,11 the reality is that the conventional handheld direct ophthalmoscope remains the most readily accessible tool for fundoscopy to the majority of frontline clinicians. It can be used to quickly and effectively detect a number of life-threatening and disabling diseases and so remains a diagnostic tool with which all graduating doctors should be familiar.2, 12, 13, 14 Yet, clinicians still appear to avoid the direct ophthalmoscope in practice, putting patient safety at risk and placing increasing demands on eye specialists.2, 5, 15, 16, 17 In an effort to preserve the skill-set of future doctors, various groups have tried novel approaches to improve the teaching and assessment of direct ophthalmoscopy, with varying degrees of success.18, 19, 20, 21, 22, 23

Little attention has been paid to one of the fundamental difficulties in learning this skill: by the nature of the conventional ophthalmoscope's design, it is not possible for a student and teacher (or assessor) to share the same view of the fundus. This makes it challenging for the student to appreciate exactly what is expected of them and for the educator to provide useful feedback to improve the student's technique.24 When assessing competence, it is difficult for an examiner to verify the student's reported findings or appreciate actual fundus visualisation, limiting any thorough or truly objective evaluation.20, 24, 25, 26

Teaching cameras and mirrors facilitate learning in slit-lamp biomicroscopy and microsurgery and may have a role in improving ophthalmoscopy training. A novel teaching device has previously been described that allows a user to perform direct ophthalmoscopy while their view of the fundus can be visualised by an observer simultaneously on a nearby computer screen.26 This prototype device is a modified version of a commercially available direct ophthalmoscope (Welch Allyn model ref. 11720-BI, New York, USA). By incorporating a beam-splitter and miniature video camera within the housing of the ophthalmoscope head, a live video feed of the user's view is transmitted via a USB cable to a computer running standard webcam software (refer to Supplementary Information I). Aside from the USB cable projecting from the ophthalmoscope head, the device appears, handles, and is used in the same way as a conventional ophthalmoscope.

Objective

The aim of this study is to evaluate the described ‘teaching ophthalmoscope' as an aid to both learning and assessing fundoscopy among medical students. The study compares this device with a conventional direct ophthalmoscope by investigating:

The effect on medical student competence and self-reported confidence when used as an aid to teaching.

The agreement of the two devices as an assessment tool and the difference in inter-observer reliability of examiners during an objective structured clinical examination (OSCE).

Materials and methods

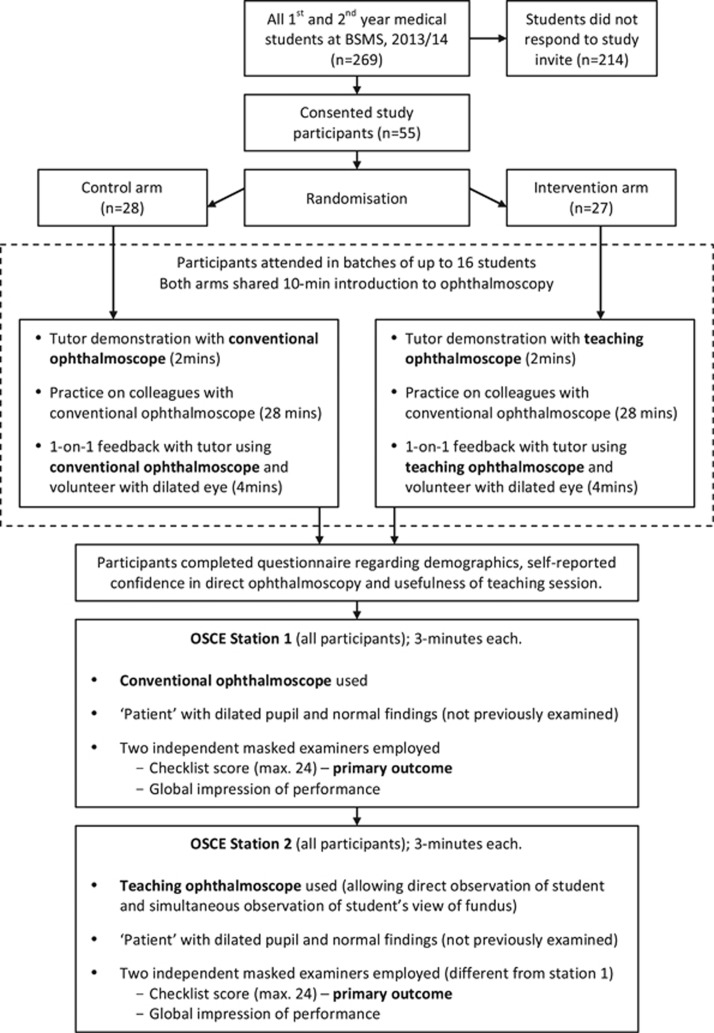

This randomised (1 : 1) parallel-group study was conducted at Brighton and Sussex Medical School (BSMS; Figure 1). Approval was granted by the BSMS Research Governance and Ethics Committee in November 2013 (13/175/SCH).

Figure 1.

Methodology and participant flow diagram. BSMS, Brighton and Sussex Medical School; OSCE, objective structured clinical examination.

Participant recruitment and randomisation

Eligible participants were first- and second-year (preclinical) medical students enrolled at BSMS during the academic year 2013/2014. There were no exclusion criteria. At BSMS, medical students are introduced to fundoscopy in year 2, and begin clinical rotations (including ophthalmology) during years 3–5.

All eligible students were invited to participate in the study. Consented participants attended one of four study sessions in batches of up to 16 students. At each session, the students were allocated a unique numerical identifier, and a computer-based random allocation sequence was generated in order to randomly assign each participant to either a control arm or an intervention arm. Randomisation was conducted by two administrators from whom the identity of participants was concealed.
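The allocation step described above can be illustrated with a minimal sketch. The paper does not say which software or allocation scheme was used, so the function name `allocate`, the fixed seed, and the choice to split each session into two near-equal arms are all assumptions made for illustration only.

```python
import random


def allocate(participant_ids, seed=None):
    """Randomly split anonymised identifiers into two arms of near-equal size.

    Hypothetical helper: the study's actual allocation software is not described.
    """
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # With an odd number of participants, the extra one falls in 'control'.
    return {
        "intervention": sorted(shuffled[:half]),
        "control": sorted(shuffled[half:]),
    }


# Hypothetical session of 16 students identified only by number.
session_ids = list(range(1, 17))
arms = allocate(session_ids, seed=2013)
print(arms)
```

Because only numerical identifiers are passed in, whoever runs the allocation never sees participant names, which mirrors the concealment described in the text.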

Study design

At each of the four sessions, both randomised study arms shared a 10-min didactic introduction on using the direct ophthalmoscope. The students were subsequently divided into their randomised arms. Each arm received a 2-min demonstration on ophthalmoscopy by a trained tutor, followed by a 32-min period where students were encouraged to practice on their colleagues using three available direct ophthalmoscopes (Welch Allyn model ref. 11720-BI). During this time, each student was allocated a strict four minutes of one-to-one feedback and guidance with the tutor, while examining a volunteer's normal eye that had been dilated with 1% tropicamide. In the control arm, a conventional direct ophthalmoscope (Welch Allyn model ref. 11720-BI) was used for the tutor's demonstration and during the allotted individual feedback time. This is in contrast to the intervention arm, in which the teaching ophthalmoscope was used for both the tutor's demonstration and for providing the 4-min one-to-one feedback. The same two tutors were employed at each study session, and exclusively taught either the control or intervention arm. Both tutors share an equivalent amount of experience in fundoscopy and clinical skills teaching, and were trained in providing feedback to students.

After this period of time, all participants were asked to complete a questionnaire detailing baseline characteristics (age, gender, year of study, and prior fundoscopy experience). The questionnaire also asked them to rate both their ‘confidence using a direct ophthalmoscope' and the ‘usefulness of the teaching and feedback they received.' Ratings were provided on a scale of 1 (‘not at all') to 10 (‘extremely'). Similar scales of self-reported confidence have been used previously.4, 5, 24

All participants, regardless of their study arm, were subsequently required to complete two ‘OSCE stations', each of which was 3 min in duration. In the first station, they were asked to use a conventional ophthalmoscope (Welch Allyn model ref. 11720-BI) to perform a fundus examination of a normal ‘patient', whose pupil was dilated with 1% tropicamide. This ‘patient' was a different volunteer to those examined during the study session. Two masked assessors independently marked each participant according to a weighted checklist (refer to Supplementary Information II) that was based on a validated OSCE scoring system for fundoscopy.27 The checklist was adapted to allow weighting for examination of the optic disc, vessels, and macula in order to reflect the undergraduate guidelines set out by the International Council of Ophthalmology.13 The assessors were also asked to independently provide a ‘global impression', indicating whether the student was at a level that satisfied, exceeded, or did not satisfy expectations of a graduating medical student performing fundus examination of a normal eye.28, 29

During the second ‘OSCE station', all students, regardless of their study arm, were required to use the teaching ophthalmoscope to examine the fundus of a previously unencountered ‘patient' with a dilated pupil (using 1% tropicamide). Two additional masked assessors were employed with equivalent training to those in the first ‘station'. These assessors had the opportunity to directly observe not only the student's clinical examination, but also the student's view of the fundus, using the teaching ophthalmoscope. This second station was otherwise identical in design to the first with equivalent time permitted and scoring systems employed.

Outcomes

In order to compare the two ophthalmoscopes as a method of learning, the primary outcome compared between groups was student competence, measured as checklist scores in each of the two OSCE stations. For each station, the score was calculated as a mean of the two independent examiner scores. Mean scores between the study groups were compared using the independent t-test. In order to evaluate the consistency of each teaching method, variance of OSCE scores was compared between groups using the Brown–Forsythe test.30
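As a rough illustration of the variance comparison, the Brown–Forsythe test can be computed as a one-way ANOVA on each score's absolute deviation from its own group median. The sketch below uses only the Python standard library; the function name `brown_forsythe_f` and the two small score lists are hypothetical and are not the study data.

```python
from statistics import mean, median


def brown_forsythe_f(*groups):
    """Return (F, df_between, df_within) for the Brown-Forsythe test."""
    # Absolute deviation of every score from its own group's median.
    deviations = [[abs(x - median(g)) for x in g] for g in groups]
    k = len(deviations)
    n_total = sum(len(z) for z in deviations)
    grand_mean = mean([v for z in deviations for v in z])
    # One-way ANOVA sums of squares, computed on the deviations.
    between = sum(len(z) * (mean(z) - grand_mean) ** 2 for z in deviations)
    within = sum((v - mean(z)) ** 2 for z in deviations for v in z)
    f_stat = (between / (k - 1)) / (within / (n_total - k))
    return f_stat, k - 1, n_total - k


# Hypothetical checklist scores: one tightly clustered arm, one widely spread arm.
tight = [19, 20, 20, 21, 20, 19, 21, 20]
wide = [14, 23, 17, 22, 12, 24, 18, 21]
f_stat, df1, df2 = brown_forsythe_f(tight, wide)
print(f"F = {f_stat:.2f} on ({df1}, {df2}) degrees of freedom")

# Two groups with identical spread but different means give F = 0.
f_same, _, _ = brown_forsythe_f([1, 2, 3, 4, 5], [11, 12, 13, 14, 15])
```

The F statistic would then be referred to an F distribution with the returned degrees of freedom to obtain a P-value. Using medians rather than means is what makes the test robust to non-normal score distributions.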

A secondary measure of student competence was the examiners' global impression for each student, categorised as ‘exceeding', ‘satisfying', or ‘not satisfying' the expectations of a graduating medical student performing fundoscopy on a normal eye. Owing to the high stakes of students ‘not satisfying' expectations, the proportion of students falling into this category was compared between study groups using the χ2-test.

Student ratings of ‘confidence' and ‘usefulness of teaching' were compared between groups using the independent t-test as a secondary outcome.

Agreement between the two methods of assessment (conventional vs teaching ophthalmoscope) was evaluated using the Bland–Altman method,31 plotting the mean score of the two OSCE stations against the difference between these scores. Further Bland–Altman plots were constructed in order to evaluate agreement between the two examiners when using the conventional ophthalmoscope (OSCE 1) or the teaching ophthalmoscope (OSCE 2). Inter-observer reliability was also compared between assessment methods using: (i) Pearson's correlation coefficient calculated using the paired checklist scores; (ii) Cohen's kappa coefficient calculated using the categorical global impressions.
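The quantities behind a Bland–Altman plot are simple to compute: for each student, the mean of the two scores and their difference, then the mean difference (fixed bias) and the 95% limits, taken here as the mean difference ± 1.96 standard deviations of the differences. The sketch below assumes that conventional definition; the function name and the four paired scores are hypothetical.

```python
from statistics import mean, stdev


def bland_altman(scores_a, scores_b):
    """Return the plotted points, the fixed bias and the 95% limits."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    means = [(a + b) / 2 for a, b in zip(scores_a, scores_b)]
    bias = mean(diffs)
    half_width = 1.96 * stdev(diffs)  # sample SD of the paired differences
    return {
        "means": means,   # x-axis of the plot
        "diffs": diffs,   # y-axis of the plot
        "bias": bias,
        "lower": bias - half_width,
        "upper": bias + half_width,
    }


# Hypothetical paired OSCE scores for four students (not study data).
osce1 = [18, 20, 22, 16]
osce2 = [17, 21, 21, 17]
result = bland_altman(osce1, osce2)
print(result["bias"], result["lower"], result["upper"])
```

A bias close to zero with wide limits would indicate no systematic offset between methods but substantial disagreement for individual students.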

Results

In the academic year 2013/2014, 269 students were deemed eligible to participate. Of these, 55 students enrolled and consented to take part. All of these students were randomly assigned to one of the two study groups (28 control; 27 intervention), and there were no losses or exclusions following randomisation. A complete set of data was collected for each study participant and included in the analysis (Figure 1).

Baseline characteristics

The mean participant age was 22.1 years (SD 3.6) with 27 (49.1%) male participants (Table 1). Twenty-five (45.5%) participants were in their first year of medical school, having had no prior experience of fundoscopy. The remaining 30 (54.5%) were all second-year medical students with one prior teaching session on fundoscopy. No students had any clinical experience of fundoscopy.

Table 1. Baseline characteristics of study population.

| | Control (n=28) | Intervention (n=27) | Total (n=55) |
|---|---|---|---|
| Age, years | 21.9 (3.5) | 22.3 (3.6) | 22.1 (3.6) |
| Gender (male) | 14 (50%) | 13 (48.1%) | 27 (49.1%) |
| First year medical students | 12 (42.9%) | 13 (48.1%) | 25 (45.5%) |
| Second year medical students | 16 (57.1%) | 14 (51.9%) | 30 (54.5%) |
| Previous teaching on fundoscopy? (responded ‘yes') | 16 (57.1%) | 14 (51.9%) | 30 (54.5%) |

Data presented as means (SD) or numbers (%).

Primary outcome

On station 1 (using a conventional ophthalmoscope), the control group scored a mean value of 18.4 (95% CI: 17.1–19.7; SD=3.4) out of a possible score of 24 points (Table 2). The intervention group scored 19.1 (CI: 17.5–20.7; SD=4.3). Using the independent t-test, the difference in scores between groups was not statistically significant (P=0.515). On station 2 (using the teaching ophthalmoscope), the control group scored 17.6 (CI: 16.2–19.0; SD=3.8) and the intervention group scored 19.8 (CI: 19.0–20.7; SD=2.3). The difference in these scores between groups was statistically significant (P=0.012). The Brown–Forsythe test was used to compare the variance of scores between the two study arms. Although there was no significant difference between groups in station 1 (P=0.517), the intervention arm demonstrated significantly more consistent results in the second OSCE station (P=0.012).
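For readers who wish to check the station 2 comparison, the t statistic can be approximated from the reported summary statistics alone. The sketch below assumes the equal-variance (pooled) form of the independent t-test, which the paper does not specify, and works from rounded means and SDs, so it can only approximate the published result. The function name `pooled_t` is hypothetical.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t statistic (equal variances assumed) from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Station 2: intervention 19.8 (SD 2.3, n=27) vs control 17.6 (SD 3.8, n=28).
t_stat, df = pooled_t(19.8, 2.3, 27, 17.6, 3.8, 28)
print(f"t = {t_stat:.2f} on {df} degrees of freedom")
```

With 53 degrees of freedom, a t statistic of roughly 2.6 lies beyond the two-sided 5% critical value of about 2.0, which is in keeping with the reported P=0.012.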

Table 2. Outcome measures.

| | All participants (n=55) | Control group (n=28) | Intervention group (n=27) | P-value |
|---|---|---|---|---|
| Primary outcomes | | | | |
| OSCE 1 checklist score<sup>a</sup> (conventional ophthalmoscope) | 18.8 (17.7–19.8) | 18.4 (17.2–19.7) | 19.1 (17.5–20.7) | 0.515<sup>b</sup> |
| OSCE 2 checklist score<sup>a</sup> (teaching ophthalmoscope) | 18.7 (17.8–19.6) | 17.6 (16.2–19.0) | 19.8 (19.0–20.7) | **0.012**<sup>b</sup> |
| SD of OSCE 1 | 3.8 | 3.4 | 4.3 | 0.517<sup>c</sup> |
| SD of OSCE 2 | 3.4 | 3.8 | 2.3 | **0.012**<sup>c</sup> |
| Secondary outcomes | | | | |
| OSCE Station 1 Examiners' global impression | | | | |
| Graded as ‘exceeding' by both examiners | 0 | 0 | 0 | |
| One grade ‘exceeding', one ‘satisfying' | 6 | 3 | 3 | |
| Graded as ‘satisfying' by both examiners | 29 | 12 | 17 | |
| One grade ‘satisfying', one ‘unsatisfying' | 17 | 13 | 4 | |
| Graded as ‘unsatisfying' by both examiners | 3 | 0 | 3 | |
| At least one ‘unsatisfying' grade | 20 (36.4%) | 13 (46.4%) | 7 (25.9%) | 0.11<sup>d</sup> |
| OSCE Station 2 Examiners' global impression | | | | |
| Graded as ‘exceeding' by both examiners | 2 | 0 | 2 | |
| One grade ‘exceeding', one ‘satisfying' | 3 | 2 | 1 | |
| Graded as ‘satisfying' by both examiners | 33 | 11 | 22 | |
| One grade ‘satisfying', one ‘unsatisfying' | 0 | 0 | 0 | |
| Graded as ‘unsatisfying' by both examiners | 17 | 15 | 2 | |
| At least one ‘unsatisfying' grade | 17 (30.9%) | 15 (53.6%) | 2 (7.4%) | **<0.001**<sup>d</sup> |
| Self-reported student confidence | 6.1 (5.6–6.5) | 4.9 (4.4–5.3) | 7.3 (6.8–7.8) | **<0.001**<sup>b</sup> |
| Student-reported ‘usefulness of feedback' | 8.2 (7.8–8.7) | 8.2 (7.7–8.8) | 8.3 (7.6–8.9) | 0.92<sup>b</sup> |

Data presented as mean (95% confidence intervals) or numbers (percentages). P<0.05 considered significant and displayed in bold.

<sup>a</sup>Checklist score marked out of 24.

<sup>b</sup>P-value calculated according to independent t-test.

<sup>c</sup>P-value calculated according to Brown–Forsythe test.

<sup>d</sup>P-value calculated according to χ2-test.

Secondary outcomes

In addition to the checklist score, examiners were asked to provide a global impression of the student's performance (Table 2). In the first OSCE station (using the conventional ophthalmoscope), 13 (46.4%) of the students in the control group received at least one report that their performance did not satisfy the expected requirements. This compares with 7 (25.9%) in the intervention group (P=0.11).

In station 2 (using the teaching ophthalmoscope), 15 (53.6%) of the students in the control group received at least one ‘unsatisfying' report. This compares with only 2 (7.4%) of students in the intervention group. This difference was statistically significant (P<0.001).
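The two χ2 comparisons of ‘at least one unsatisfying grade' can be approximated from the counts given above. The sketch below assumes a Pearson χ2-test on a 2×2 table without continuity correction (the paper does not state whether a correction was applied) and uses the closed-form tail probability that holds for one degree of freedom. The function name `chi2_2x2` is hypothetical.

```python
import math


def chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 degree of freedom the upper-tail probability has a closed form.
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Rows: control, intervention. Columns: at least one 'unsatisfying' grade, none.
station1 = chi2_2x2(13, 15, 7, 20)   # station 1: 13/28 vs 7/27
station2 = chi2_2x2(15, 13, 2, 25)   # station 2: 15/28 vs 2/27
print(f"Station 1: chi2 = {station1[0]:.2f}, P = {station1[1]:.3f}")
print(f"Station 2: chi2 = {station2[0]:.2f}, P = {station2[1]:.5f}")
```

Under these assumptions the station 1 P-value comes out at about 0.11 and the station 2 P-value falls well below 0.001, in line with the reported figures.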

Students reported both self-confidence in fundoscopy and the usefulness of feedback provided using a scale of 1 (not at all) to 10 (extremely). Mean confidence for all students was 6.1 (95% CI 5.6–6.5). Whereas students in the control group reported a mean confidence score of 4.9 (4.4–5.3), students in the intervention group reported a mean score of 7.3 (6.8–7.8). This difference was highly significant (P<0.001). There was no statistical difference between groups in the students' satisfaction of teaching and feedback received (mean score=8.2 vs 8.3; P=0.92).

Comparing methods of assessment

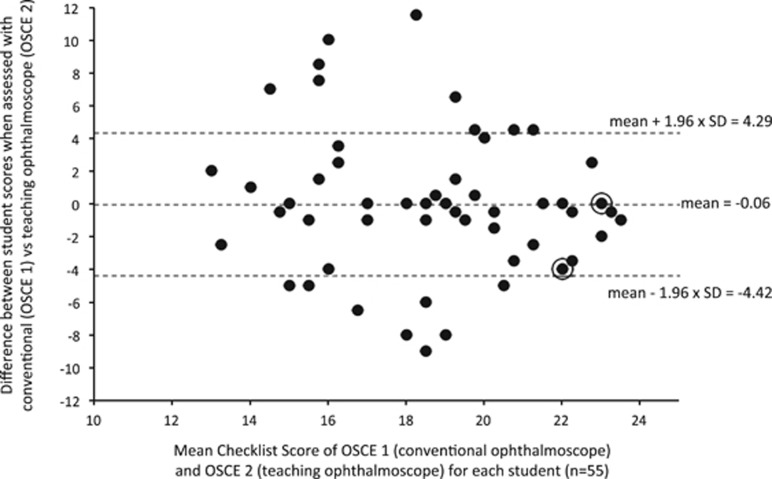

Figure 2 demonstrates the agreement between the two different methods of assessment (ie, ‘does the score a student receives in an OSCE using the conventional ophthalmoscope agree with the score they receive using the teaching ophthalmoscope?'). Students from both control and intervention groups were included for this analysis (n=55). While there was no fixed bias evident (mean difference=−0.06), the 95% levels of agreement were >4 marks above and below the mean difference. The two different methods of assessment tended to agree more at the upper and lower ends of student performance.

Figure 2.

Agreement between the conventional ophthalmoscope and the teaching ophthalmoscope as an assessment tool. Data is presented using the Bland–Altman method. The students' mean score for both OSCE 1 (assessed using the conventional ophthalmoscope) and OSCE 2 (assessed using the teaching ophthalmoscope) is plotted against the difference between these scores. Data includes students from both the control group and the intervention group (n=55). Dashed lines represent the mean difference in scores using the two assessment methods and the 95% levels of agreement. Circled points highlight multiple (2 or more) observations at the same point.

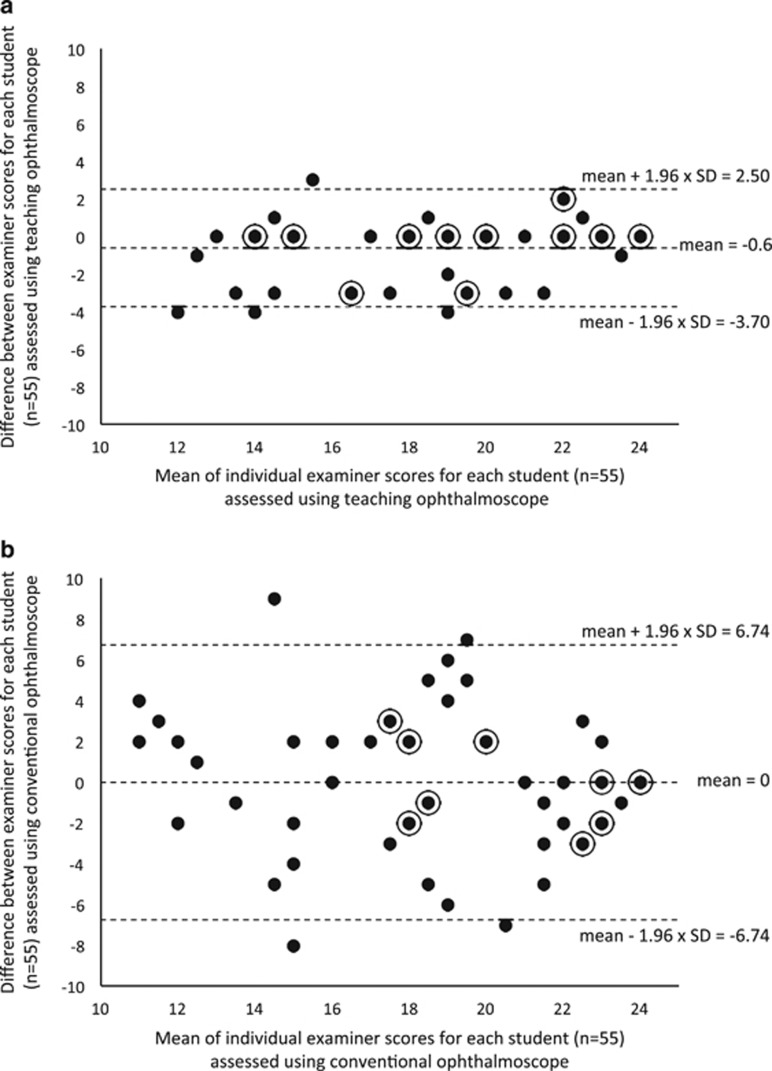

Additional Bland–Altman plots were also used to compare the agreement between the two examiners in OSCE 1 using the conventional ophthalmoscope with the agreement between the two examiners in OSCE 2 using the teaching ophthalmoscope (Figure 3a and b). Although neither method demonstrated a significant fixed bias between examiners, there was clearly a much narrower level of examiner agreement using the teaching ophthalmoscope (Figure 3a) compared with the conventional ophthalmoscope (Figure 3b). Indeed the levels of agreement were consistent across the entire range of student scores. Using the conventional ophthalmoscope, examiner scores were moderately correlated (Pearson's r=0.67; 95% CI 0.38–0.79; P<0.001). Examiner scores using the teaching ophthalmoscope were better correlated: r=0.90 (95% CI 0.56–0.94; P<0.001). The difference in examiner score correlation between the two assessment methods was statistically significant (P<0.001).

Figure 3.

Comparison of inter-observer (examiner) agreement using the teaching ophthalmoscope (a) and the conventional ophthalmoscope (b) as an assessment tool. Data is presented using the Bland–Altman method with the mean examiner score for each student plotted against the difference between examiner scores. The plots include all students from both the control group and the intervention group (n=55). Dashed lines represent the mean difference in examiner scores and the 95% levels of agreement. Circled points highlight multiple (2 or more) observations at the same point.
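The paper does not name the test used to compare the two examiner correlations (r=0.67 vs r=0.90). One common approach is Fisher's r-to-z transformation, sketched below as an assumption rather than as the authors' method. It treats the two correlations as coming from independent samples, which is only an approximation here because the same 55 students were assessed at both stations. The function name `compare_correlations` is hypothetical.

```python
import math


def compare_correlations(r1, n1, r2, n2):
    """Two-sided z-test for the difference between two Pearson correlations."""
    z1, z2 = math.atanh(r1), math.atanh(r2)       # Fisher r-to-z transformation
    se = math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))   # SE of the difference in z
    z = (z2 - z1) / se
    p = math.erfc(abs(z) / math.sqrt(2))          # two-sided normal tail area
    return z, p


# Conventional ophthalmoscope (r=0.67) vs teaching ophthalmoscope (r=0.90), n=55 each.
z_stat, p_value = compare_correlations(0.67, 55, 0.90, 55)
print(f"z = {z_stat:.2f}, P = {p_value:.4f}")
```

Under these assumptions the difference gives P<0.001, which agrees with the significance level reported in the text.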

Regarding the agreement between examiners on scores of global impression (ie, ‘exceeding', ‘satisfying', or ‘not satisfying' requirements), Cohen's kappa coefficient was deemed a more appropriate calculation owing to the categorical nature of the data. Subtracting out the agreement that can be expected owing to chance alone, there was no agreement between OSCE examiners using the conventional ophthalmoscope to assess students (κ=−0.014; 95% CI −0.33 to 0.30). This is in contrast to the very high agreement between examiners using the teaching ophthalmoscope (κ=0.89; 95% CI 0.77–1.0).
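Cohen's kappa compares the observed proportion of agreement with the agreement expected by chance from each examiner's marginal totals. The sketch below rebuilds a 3×3 agreement table for station 2 from the paired counts in Table 2. Because the table does not report which examiner gave which grade in the three discordant ‘exceeding'/‘satisfying' pairs, the sketch assumes that examiner A gave the higher grade in all three; this split is an assumption made for illustration.

```python
def cohens_kappa(table):
    """table[i][j] = students given category i by examiner A and j by examiner B."""
    n = sum(sum(row) for row in table)
    k = len(table)
    observed = sum(table[i][i] for i in range(k)) / n
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    # Chance agreement: product of the two examiners' marginal proportions.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / n**2
    return (observed - expected) / (1 - expected)


# Categories in order: exceeding, satisfying, unsatisfying (station 2, n=55).
# Assumed split: all three discordant pairs are A='exceeding', B='satisfying'.
station2_table = [
    [2, 3, 0],
    [0, 33, 0],
    [0, 0, 17],
]
kappa = cohens_kappa(station2_table)
print(f"kappa = {kappa:.2f}")
```

Under this assumed split the result is close to the reported κ=0.89, and other ways of dividing the three discordant pairs change the value only in the third decimal place.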

Discussion

The results of this study highlight that there is a potential role for this novel device as an aid to both learning and assessing fundoscopy among medical students:

There is an improved effect on objective measures of medical student competence as well as self-reported confidence when the teaching ophthalmoscope is used as an aid to teaching.

Used as an assessment tool during an OSCE, the teaching ophthalmoscope shows improved inter-observer agreement when compared with a conventional ophthalmoscope.

These two topics will be discussed separately.

Improving student competence and confidence

Although the fundus examination is poorly performed and under-utilised by students and doctors, student competence can be improved with formal instruction.32 It has been noted that undergraduate exposure to ophthalmic teaching is becoming increasingly limited.33, 34 Many attempts have been made to improve the teaching of direct ophthalmoscopy, with the hope that if clinicians are more confident and more competent, they will use the ophthalmoscope more appropriately.18, 19, 20, 21, 22, 23 Of these, only the use of peer optic nerve photographs have demonstrated an improvement in medical students' performance of direct ophthalmoscopy.23 In most institutions this is likely to be a time- and labour-intensive intervention, requiring access to specialist photographic equipment. Other interventions such as pan-optic ophthalmoscopes10 and smartphone adaptors11 have been proposed, but these are not yet common among most frontline clinicians and their educational benefit is still yet to be explored. Presently, we describe a teaching intervention that demonstrates an improvement in both confidence and objective performance amongst medical students performing direct ophthalmoscopy when compared with controls in a randomised trial.

Students that were taught using the conventional ophthalmoscope scored 18.4 (76.8%) and 17.6 (73.4%) on each of the two OSCE stations. The marking scheme used was a modified version of a previously validated system.27 There is remarkable similarity in the mean checklist score of our control group and a similar cohort in the previous report (77.5%), adding further weight to its validity. In the present study, students in the intervention group achieved higher checklist scores of 19.1 (80.0%) and 19.8 (82.6%) in the two OSCE stations, although the difference from controls was statistically significant only in the second station (t-test P=0.52 and 0.01, respectively). This approaches but does not yet match (as might be expected) the scores of postgraduate ophthalmology trainees (94.0%).27

In a previous study, 42% of 2nd-year medical students reported being confident in direct ophthalmoscopy.4 In the present study, the mean level of self-reported confidence in fundoscopy among the control arm was found to be 49% and in the intervention group this was 73% (t-test P<0.001). This compares to an average confidence score of 3.12 out of 5 (62%) found in a mixed sample of Canadian pre-clerk medical students and ophthalmology trainees.27 Self-reported confidence has also been shown to be directly correlated with experience.4, 5, 24, 27 While none of these scores are directly comparable to our data set, when taken together they offer some additional context. Further validation could be achieved in future by obtaining questionnaire data from more experienced clinicians and students that have received no fundoscopy teaching for comparison with our study population.

Improving the reliability of objective assessment

It has been observed that fundoscopy is a difficult skill to assess effectively and there is still no gold standard for reliable assessment.20 This is largely owing to the inability to directly observe students' performance, leaving the examiner's rating to be influenced by a number of variable factors.35 Our method of assessing fundoscopy using the new teaching ophthalmoscope shows improved inter-observer reliability over the current standard. The improvement in reliability between examiners is independent of whether checklist scores or global impressions are used to evaluate students. The reported differences in examiner agreement may be partly due to differences in characteristics that are inherent to each individual examiner, or to variation in the student's repeated performances (that is, intra-observer reliability). By reporting inter-observer reliability, the analysis accounts for all the sources of error contributing to intra-observer reliability plus any additional differences between examiners.

As an assessment tool, the teaching ophthalmoscope was able to detect statistically significant differences between the control group and the intervention group, while the conventional ophthalmoscope was not. Although this could be interpreted as being confounded by a ‘learning effect' using the teaching ophthalmoscope, the sizeable difference in ‘confidence' between groups is reassuring, as this outcome measure would not be influenced by any potential learning effect. Rather, it is more appropriate for this ability to detect a difference between students to be interpreted as a form of construct validity. The teaching device is a more sensitive method of assessment in detecting differences between students than the conventional ophthalmoscope. By allowing the examiner to observe the student's view of the patient's fundus, this device may permit a more systematic and sensitive evaluation of the components necessary for thorough fundoscopic examination. The International Council of Ophthalmology has outlined specific competencies that should be met by medical students including examination of the optic disc, macula, and retinal vessels using the direct ophthalmoscope.13 Until now, direct observation of the student achieving each of these specific criteria has not been possible.

Limitations

Although OSCE examiners were masked to the participants' study group, it was clearly not possible to mask students or tutors. In an effort to limit potential bias introduced by the tutors' lack of masking, different tutors were used for each study group. By using tutors that were specifically trained and had similar amounts of experience, attempts were made to limit this as a potential confounding factor. Reassurance is offered by the fact that both groups of students reported very similar scores when asked to rate the usefulness of teaching that they received (8.2 vs 8.3).

Further work would be needed in order to comment on both the diagnostic ability of medical students, and the long-term retention of student proficiency and confidence. Based on prior work done by Mottow-Lippa et al,18 it is proposed that this tool may work most effectively as part of a longitudinal, embedded ophthalmology curriculum.

Conclusion

Improving both student confidence and competence in using the direct ophthalmoscope is essential if we are to prevent fundoscopy from becoming a ‘forgotten art.' We present an evaluation of a novel ‘teaching ophthalmoscope' that allows a third person (student, tutor or examiner) to directly observe the user's view of the patient's fundus. By allowing an educator and student to share the same fundal view, this device improved student-reported confidence and objective measures of competence, when compared with controls. By providing an examiner with direct visualisation of the student's view, the teaching ophthalmoscope offers greater reliability than the current standard for objective assessment.

The authors declare no conflict of interest.

Footnotes

Supplementary Information accompanies this paper on Eye website (http://www.nature.com/eye)

References

1. Roberts E, Morgan R, King D, Clerkin L. Funduscopy: a forgotten art? Postgrad Med J 1999; 75(883): 282–284.

2. Yusuf IH, Salmon JF, Patel CK. Direct ophthalmoscopy should be taught to undergraduate medical students-yes. Eye 2015; 29(8): 987–989.

3. Purbrick RMJ, Chong NV. Direct ophthalmoscopy should be taught to undergraduate medical students-No. Eye 2015; 29(8): 990–991.

4. Gupta RR, Lam WC. Medical students' self-confidence in performing direct ophthalmoscopy in clinical training. Can J Ophthalmol 2006; 41(2): 169–174.

5. Dalay S, Umar F, Saeed S. Fundoscopy: a reflection upon medical training? Clin Teach 2013; 10(2): 103–106.

6. Noble J, Somal K, Gill HS, Lam WC. An analysis of undergraduate ophthalmology training in Canada. Can J Ophthalmol 2009; 44(5): 513–518.

7. Wu EH, Fagan MJ, Reinert SE, Diaz JA. Self-confidence in and perceived utility of the physical examination: a comparison of medical students, residents, and faculty internists. J Gen Intern Med 2007; 22(12): 1725–1730.

8. Shuttleworth GN, Marsh GW. How effective is undergraduate and postgraduate teaching in ophthalmology? Eye 1997; 11(5): 744–750.

9. Bruce BB, Thulasi P, Fraser CL, Keadey MT, Ward A, Heilpern KL et al. Diagnostic accuracy and use of nonmydriatic ocular fundus photography by emergency physicians: phase II of the FOTO-ED study. Ann Emerg Med 2013; 62(1): 28–33.e1.

10. McComiskie JE, Greer RM, Gole GA. Panoptic versus conventional ophthalmoscope. Clin Experiment Ophthalmol 2004; 32(3): 238–242.

11. Livingstone I, Bastawrous A, Giardini ME, Jordan S. Peek. Portable eye examination kit. The smartphone ophthalmoscope. Invest Ophthalmol Vis Sci 2014; 55(13): 1612.

12. Tso MOM, Goldberg MF, Lee AG, Selvarajah S, Parrish RK, Zagorski Z. An international strategic plan to preserve and restore vision: four curricula of ophthalmic education. Am J Ophthalmol 2007; 143(5): 859–865.

13. International Task Force on Ophthalmic Education of Medical Students, International Council of Ophthalmology. Principles and guidelines of a curriculum for ophthalmic education of medical students. Klin Monbl Augenheilkd 2006; 223 Suppl 5: S1–S19.

14. Benbassat J, Polak BCP, Javitt JC. Objectives of teaching direct ophthalmoscopy to medical students. Acta Ophthalmol 2012; 90(6): 503–507.

15. Bruce B, Thulasi P, Fraser C. Nonmydriatic ocular fundus photography in the emergency department. N Engl J Med 2011; 364(4): 387–389.

16. Morad Y, Barkana Y, Avni I, Kozer E. Fundus anomalies: what the pediatrician's eye can't see. Int J Qual Health Care 2004; 16(5): 363–365.

17. Nicholl DJ, Yap CP, Cahill V, Appleton J, Willetts E, Sturman S. The TOS study: can we use our patients to help improve clinical assessment? J R Coll Physicians Edinb 2012; 42(4): 306–310.

18. Mottow-Lippa L, Boker JR, Stephens F. A prospective study of the longitudinal effects of an embedded specialty curriculum on physical examination skills using an ophthalmology model. Acad Med 2009; 84(11): 1622–1630.

19. Kelly LP, Garza PS, Bruce BB, Graubart EB, Newman NJ, Biousse V. Teaching ophthalmoscopy to medical students (the TOTeMS study). Am J Ophthalmol 2013; 156(5): 1056–1061.e10.

20. McCarthy DM, Leonard HR, Vozenilek JA. A New Tool for Testing and Training Ophthalmoscopic Skills. J Grad Med Educ 2012; 4(1): 92–96.

21. Hoeg TB, Sheth BP, Bragg DS. Evaluation of a tool to teach medical students direct ophthalmoscopy. Wisconsin Med J 2009; 108(1): 24–26.

22. Bradley P. A simple eye model to objectively assess ophthalmoscopic skills of medical students. Med Educ 1999; 33(8): 592–595.

23. Milani BY, Majdi M, Green W, Mehralian A, Moarefi M, Oh FS et al. The use of peer optic nerve photographs for teaching direct ophthalmoscopy. Ophthalmology 2013; 120(4): 761–765.

24. Schulz C, Hodgkins P. Factors associated with confidence in fundoscopy. Clin Teach 2014; 11(6): 431–435.

25. Li K, He J-F. Teaching ophthalmoscopy to medical students (the TOTeMS study). Am J Ophthalmol 2014; 157(6): 1328–1329.

26. Schulz C. A novel device for teaching fundoscopy. Med Educ 2014; 48(5): 524–525.

27. Haque R, Abouammoh MA, Sharma S. Validation of the Queen's University Ophthalmoscopy Objective Structured Clinical Examination Checklist to predict direct ophthalmoscopy proficiency. Can J Ophthalmol 2012; 47(6): 484–488.

28. Malau-Aduli BS, Mulcahy S, Warnecke E, Otahal P, Teague P-A, Turner R et al. Inter-rater reliability: comparison of checklist and global scoring for OSCEs. Creative Educ 2012; 3(6): 937.

29. Wilkinson TJ, Frampton CM, Thompson-Fawcett M, Egan T. Objectivity in objective structured clinical examinations: checklists are no substitute for examiner commitment. Acad Med 2003; 78(2): 219–223.

30. Brown MB, Forsythe AB. Robust tests for the equality of variances. J Am Stat Assoc 1974; 69(346): 364–367.

31. Martin Bland J, Altman D. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet 1986; 1(8476): 307–310.

32. Cordeiro MF, Jolly BC, Dacre JE. The effect of formal instruction in ophthalmoscopy on medical student performance. Med Teach 1993; 15(4): 321–325.

33. Welch S, Eckstein M. Ophthalmology teaching in medical schools: a survey in the UK. Br J Ophthalmol 2011; 95(5): 748–749.

34. Shah M, Knoch D, Waxman E. The state of ophthalmology medical student education in the United States and Canada, 2012 through 2013. Ophthalmology 2014; 121(6): 1160–1163.

35. Kogan JR, Conforti L, Bernabeo E, Iobst W, Holmboe E. Opening the black box of clinical skills assessment via observation: a conceptual model. Med Educ 2011; 45(10): 1048–1060.
