Abstract

Objective

We investigated whether language production is atypically resource-demanding in adults who stutter (AWS) versus typically-fluent adults (TFA).

Methods

Fifteen TFA and 15 AWS named pictures overlaid with printed Semantic, Phonological or Unrelated Distractor words while monitoring frequent low tones versus rare high tones. Tones were presented at a short or long stimulus onset asynchrony (SOA) relative to picture onset. Group, Tone Type, Tone SOA and Distractor type effects on P3 amplitudes were the main focus. P3 amplitude was also investigated separately in a simple tone oddball task.

Results

P3 morphology was similar between groups in the simple task. In the dual task, a P3 effect was detected in TFA in all three Distractor conditions at each Tone SOA. In AWS, a P3 effect was attenuated or undetectable at the Short Tone SOA depending on Distractor type.

Conclusions

In TFA, attentional resources were available for P3-indexed processes in tone perception and categorization in all Distractor conditions at both Tone SOAs. For AWS, availability of attentional resources for secondary task processing was reduced as competition in word retrieval was resolved.

Significance

Results suggest that language production can be atypically resource-demanding in AWS. Theoretical and clinical implications of the findings are discussed.

Keywords: Language production, attention, stuttering, adults, brain electrophysiology

INTRODUCTION

Stuttering is a fluency disorder that begins in childhood and persists in ~1% of adults (Yairi and Ambrose, 2013). Persistent stuttering has been associated with a variety of negative quality-of-life, vocational and emotional sequelae (Iverach et al., 2009; Beilby et al., 2013; Bricker-Katz et al., 2013). Intervention focused on improving fluency as well as social, emotional and cognitive well-being can benefit adults who stutter (AWS) (Bothe et al., 2006). However, treatment gains and end-user perceptions of intervention approaches are often limited (McClure and Yaruss, 2003). A contributing factor may be that interventions incompletely address the underlying deficit.

The ability to produce speech is driven by mechanisms of language production (e.g., the activation, selection and phonological encoding of words that convey target concepts) and by mechanisms of motor speech production (e.g., planning/programming, executing and monitoring articulation). Producing speech also demands attention, or top-down cognitive control over which language and speech motor information is enhanced or inhibited given the goals of speaking. Crucially, human attentional capacity is limited. Even for typically-fluent adults (TFA), challenging speaking conditions may tax the allocation of attentional resources to, and cause decrements in, language and/or motor speech production (Ferreira and Pashler, 2002; Dromey and Benson, 2003).

A ‘demands-and-capacities’ mismatch has also been proposed in relation to stuttering (Starkweather and Givens-Ackerman, 1997). According to this model, adequate capacity in language and motor functioning is required to produce speech fluently. If conditions exist in which demand exceeds capacity, fluency can break down. Based on findings from the first author’s studies and other research, the aim of this study was to investigate whether language production is atypically demanding of attentional resources in AWS.

Attention and Language Production

More than a decade ago, Ferreira and Pashler (2002) investigated whether language production in TFA is supported by domain-specific (modular) versus domain-general cognitive resources. Their research demonstrated that lexical-semantic processing draws upon domain-general resources (i.e., cognitive resources available to support a range of human functioning). Later research (Cook and Meyer, 2008) demonstrated that processing the phonological codes of words in language production also consumes domain-general cognitive resources. These and other findings have been used to support the view that language production demands at least some form of attention, or central cognitive control (see Roelofs and Piai, 2011).

One proposed role of attention in language production is to enhance the activation of target concepts and words (lexical-semantic processing) until the phonological and articulatory properties of those words can be encoded. Roelofs (2011) suggested that this role of attention in language production is particularly important, because concepts and phonological forms are only distantly-connected in the network architecture of the mental lexicon. Thus, activated conceptual and lexical information associated with a target word must be maintained until sufficient activation can spread through the mental lexicon to the phonological code for that word. As noted previously, attentional capacity is limited, and a greater proportion of cognitive resources is allocated to processes that are more effortful or demanding (Kahneman, 1973).

Language Production and Attentional Demand in AWS: Behavioral Evidence

As reviewed elsewhere (Maxfield, 2015; Maxfield et al., 2010, 2012, 2014), psycholinguistic research has produced some evidence that both lexical-semantic and phonological processing may operate differently in AWS versus TFA, including evidence that these processes may be atypically resource-demanding in AWS. For example, some studies using word association, picture naming, vocabulary and other relatively simple language production tasks have produced evidence that the accuracy or efficiency with which AWS retrieve conceptually-appropriate words may be diminished (Crowe and Kroll, 1991; Wingate, 1988; Newman and Ratner, 2007; Pellowski, 2011; Watson et al., 1994; Bosshardt and Fransen, 1996). In an investigation pairing sentence production with a secondary task, AWS stuttered less often on sentences less rich in semantic content (Bosshardt, 2006). From an attentional perspective, one interpretation is that lexical-semantic processing is not only less accurate/efficient but also particularly resource-demanding in AWS and, thus, may be sacrificed to preserve fluency.

In addition to lexical-semantic processing, language production involves phonological encoding. Several relatively simple word production experiments found no evidence of atypical phonological encoding in AWS (Hennessey et al., 2008; Wijnen and Boers, 1994; Burger and Wijnen, 1999; Newman and Ratner, 2007). However, sub-vocalized phonological tasks have produced evidence of phonological processing decrements in AWS (Sasisekaran et al., 2006; Sasisekaran and De Nil, 2006; Bosshardt and Nandyal, 1988; Postma et al., 1990; Hand and Haynes, 1983; Rastatter and Dell, 1987). Additional studies found that increasing cognitive load in phonological encoding both slowed sub-vocalized phonological judgments in AWS (Weber-Fox et al., 2004; Jones et al., 2012) and affected overt speech production in AWS (Postma and Kolk, 1990; Eldridge and Felsenfeld, 1998; Brocklehurst and Corley, 2011; Byrd et al., 2012). From an attentional perspective, these results suggest that phonological encoding requirements may, sometimes but not always, be resource-demanding enough in AWS to limit the availability of attentional resources to support other functions (e.g., processes in motor speech production).

Language Production and Attentional Demand in AWS: ERP Evidence

Recently, the first author and colleagues began investigating real-time language production in AWS using brain event-related potentials (ERPs) (Maxfield et al., 2010, 2012, 2014). The aim of this work has been to extend psycholinguistic research with AWS by investigating ERP components that, in principle, index language and cognitive processing more precisely than behavioral measures such as naming reaction time (RT) and accuracy. One outcome of this research is evidence that AWS may atypically enhance focal attention on the path to picture naming.

In Maxfield et al. (2010), we investigated whether lexical-semantic processing in picture naming operates similarly in AWS versus TFA, using ERPs recorded during a picture-word priming task adopted from Jescheniak et al. (2002). On most trials of that experiment, a presented picture was followed 150 milliseconds (ms) later by an auditory probe word. 1500 ms after the probe word, a cue to name the picture appeared on the screen (i.e., pictures were named at a delay so as to limit muscle artifact during processing of the auditory probe words, to which ERPs were recorded). Probe words were semantically associated with the target picture labels, or semantically- and phonologically-unrelated. Instructions were to prepare to name the picture on each trial, ignore the auditory probe word (so as to deemphasize phonological processing of probes), and name the pictures when cued. The main expectation was that the N400 ERP component, which indexes contextual priming in language processing (Kutas and Federmeier, 2011), would be elicited to the probe words but attenuated in amplitude when the labels of pictures preceding the probes were semantically-related versus unrelated. This standard semantic N400 priming effect was seen in TFA. However, a reverse semantic N400 priming effect (larger amplitudes for semantically-related versus unrelated probes) was seen for AWS. One interpretation was that - at picture onset - semantic associates of the target picture labels were atypically inhibited in AWS. When those neighbors subsequently appeared as probe words, enhanced processing was necessary to reactivate (or disinhibit) them, indexed by an enhanced N400 amplitude on semantically-related trials. We likened this effect to ‘center-surround inhibition’, a compensatory attentional mechanism for retrieving words poorly-represented in the mental lexicon (Dagenbach et al., 1990). As described by Carr and Dagenbach (1990), “…when activation from the sought-for code is in danger of being swamped or hidden by activation in other related codes, activation in the sought-for code is enhanced, and activation in related codes is dampened by the operation of the center-surround retrieval mechanism” (p. 343).

In Maxfield et al. (2012), we investigated whether phonological processing in picture naming operates similarly in AWS versus TFA, also using ERPs recorded in a picture-word priming task. On most trials of that experiment, a picture was presented followed 150 ms later by an auditory probe word, and then a cue to name the picture 1500 ms later. Once again, ERPs were recorded to the probe words, which were either phonologically-related to the target picture labels, or semantically- and phonologically-unrelated. Task instructions were modified from Maxfield et al. (2010) such that, instead of ignoring the auditory probe words, participants here were required to remember them (so as to emphasize phonological processing of the probes). After the picture was named on each trial, participants were asked to verify the auditory probe word. Once again, the expectation was that the N400 ERP component would be elicited to the probe words but attenuated in amplitude when the labels of the pictures preceding the probes were phonologically-related versus unrelated. This phonological N400 priming effect was seen for TFA. However, a reverse phonological N400 priming effect (larger amplitudes for phonologically-related versus unrelated probes) was seen in AWS. Again, we speculated that - at picture onset - phonological associates of target picture labels were atypically inhibited. When those neighbors subsequently appeared as probe words, enhancements in processing were necessary to reactivate (or disinhibit) them, indexed by enhanced N400 amplitude on phonologically-related trials.

In Maxfield et al. (2014), we investigated whether a task other than picture-word priming would also reveal atypical processing in language production in AWS. For this purpose, we adopted a modified version of a masked picture priming task from Chauncey et al. (2009). On each trial, a picture was named, emphasizing accuracy over speed. The picture was preceded by a masked printed prime word, which was barely perceptible to participants if at all. Prime words were either identical to the target picture labels, or semantically- and phonologically-unrelated. ERPs were recorded from picture onset. Among other findings, a P280 ERP component was modulated with priming in AWS but not TFA. P280 has been associated with enhanced focal attention to facilitate processing of target words under attentionally-demanding conditions (Rudell and Hua, 1996; Mangels et al., 2001). That AWS evidenced P280 activation without lexical priming, once again, suggests atypical attentional control as AWS initiate word retrieval.

Current Study

The possibility that atypical attentional control mediates processes in language production in AWS raises an important question, namely whether language production disproportionately draws resources away from secondary task processing. This can be addressed by pairing a) a picture naming task that heightens competition in lexical retrieval with b) a secondary non-linguistic task that demands attention concurrently with picture naming. An example is the task used by Ferreira and Pashler (2002) to investigate central resource consumption in word retrieval. Participants engaged in a picture-word interference (PWI) task (Task 1) while judging the pitch of tones (Task 2). Tones presented in close proximity to pictures elicited longer RTs than tones presented distally, consistent with a psychological refractory period effect. In Semantic PWI, naming RTs were prolonged (the standard Semantic PWI effect) and, crucially, tone judgment RTs increased relative to a control condition. This indicates that lexical-semantic processing interferes with tone discrimination (as tone judgment times would otherwise have been unaffected). In Phonological PWI, naming RTs were shortened but tone judgment RTs were unaffected (but see Roelofs, 2008 for a different pattern of results using a visual rather than an auditory Task 2).

In the current experiment, we modified the Ferreira and Pashler (2002) task to include ERP in addition to RT measures. The ERP component of interest here is P3. A standard experimental approach for eliciting P3 involves presenting frequent stimuli interspersed with task-relevant infrequent stimuli requiring a button press. Relative to frequent stimuli, ERP activity to infrequent stimuli typically has a larger positive-going amplitude, most prominently at posterior electrodes, reflecting activation of the P3 component (Spencer et al., 2001). As summarized by Luck (1998), “P3 amplitude can be used as a relatively pure measure of the availability of cognitive processing resources for accomplishing target perception and categorization” (p. 223). To investigate the impact of PWI on P3 amplitude, we recorded tone-elicited ERPs in a modified version of the dual PWI/tone discrimination task used in Ferreira and Pashler (2002). Tones were low or high in pitch, occurred relatively frequently (Standard low tones) or infrequently (Target high tones, requiring a button press), close to picture onset (Short Tone SOA = 50 ms) or distant from picture onset (Long Tone SOA = 900 ms), following pictures overlaid with Unrelated, Semantically-related or Phonologically-related Distractors. Analysis aimed to determine whether P3 amplitude was influenced by Tone Stimulus Onset Asynchrony (SOA), Distractor Type and/or the interaction of these factors similarly between groups. If lexical-semantic and/or phonological processes in language production are particularly resource-demanding in AWS, then we would expect disproportionately attenuated P3 amplitudes at the Short Tone SOA in either condition.

We also compared P3 amplitude in AWS versus TFA in a simple (single-task) tone oddball task. This was included to rule out the possibility that P3 morphology differed between our two participant groups in the absence of any explicit language production demands. There is some prior evidence that P3 elicited in simple oddball paradigms can differ in morphology in at least some AWS versus TFA (e.g., Morgan et al., 1997; Hampton and Weber-Fox, 2008; Sassi et al., 2011).

METHOD

Participants

Participants were a convenience sample of 15 TFA (5 male, mean age=23 years, 8 months) and 15 AWS (12 male, mean age=26 years). The difference in age between groups was not statistically significant (t[28]=1.35, p=.19). In relation to gender, although there is some evidence that auditory P3 amplitudes are larger in women versus men (Hoffman and Polich, 1999), other studies did not show this effect (Sangal and Sangal, 1996; Yagi et al., 1999) including a large-sample study by Polich (1986). Auditory P3 topography may be affected by gender, with P3 amplitudes larger at electrode Pz relative to central and frontal sites in women but not men (e.g., Polich, 1986; Polich et al., 1988; Cahill and Polich, 1992). If present, gender effects on P3 tend to be small (Polich and Herbst, 2000). As reported in the Results, neither P3 amplitude nor topography differed between groups in our simple oddball task despite the different gender make-up of the AWS versus TFA groups.

Each participant gave written informed consent before testing, and received $50 upon completion. At time of testing, participants reported that they were in good health, had no history of neurological injury or disease, were not taking medications that affect cognitive functions, had normal or corrected-to-normal vision, had normal hearing, and had typical speech and language abilities. All participants were right-handed. All were born in the United States, spoke English as their only language, and minimally had a high-school education. Specifically, 7 TFA had a high school education or GED equivalent, 1 completed vocational technical school, 6 had an earned undergraduate college degree, and 1 had an earned master’s degree. Five AWS had a high school education or GED equivalent, 1 completed vocational technical school, 6 had an earned undergraduate college degree, 2 had an earned master’s degree, and 1 had an earned doctoral degree.

The Peabody Picture Vocabulary Test, Fourth Edition, Form B (PPVT-4; Dunn and Dunn, 2007) and the Expressive Vocabulary Test, Second Edition, Form B (EVT-2; Williams, 2007) were administered to assess receptive and expressive vocabulary knowledge, respectively. Group did not affect PPVT-4 scores (TFA mean score=107.76, SD=9.54; AWS mean score=104.59, SD=10.33) (t[28]=.81, p=.43). Minimally, all participants scored within one standard deviation from the mean on the PPVT-4, with two AWS and three TFA scoring better than two standard deviations above the mean (two AWS also scored one point below two standard deviations above the mean). Nor did Group affect EVT-2 scores (TFA mean score=104.94, SD=10.04; AWS mean score=100.29, SD=10.17) (t[28]=1.12, p=.27). Minimally, all participants scored within one standard deviation from the mean on the EVT-2. Three TFA and two AWS scored better than two standard deviations above the mean on the EVT-2. In general, the groups were well-matched by age, educational level, and receptive/expressive vocabulary knowledge.

For the AWS, the presence of stuttering was verified by the first author using speech samples (conversational and reading) produced by each participant. Quality-of-life impacts of stuttering were measured using the Overall Assessment of the Speaker’s Experience with Stuttering (OASES) (Yaruss and Quesal, 2006). Self-rated impact of stuttering was mild for three AWS, mild-moderate for seven AWS and moderate for five AWS. None of the AWS participants reported severe quality-of-life impacts stemming from their experiences with stuttering.

Stimuli

Stimuli for the dual-task experiment included 25 target and 25 filler black-line drawings of common objects. Each drawing elicited a single noun label, in English, with 90% or better agreement, according to norms from the International Picture Naming Project (IPNP) (Szekely et al., 2004). The 25 targets comprised a subset of stimuli used by Damian and Martin (1999) in their series of picture-word experiments (18 drawings match those in Damian and Martin-Appendix A, and 7 drawings match those in Damian and Martin-Appendix B).

Each of the 50 drawings was assigned three distractor words. One was Semantically- (i.e., Categorically-) related to the label, the second was Phonologically-related (minimally sharing the initial two phonemes and the initial two letters), and the third was Unrelated in form or meaning. With two exceptions, the distractors assigned to the 25 target drawings were the same used by Damian and Martin (1999). Two target distractors were replaced to prevent duplication, as they were assigned to more than one picture in the Damian and Martin (1999) stimulus sets. Three distractor words were also assigned to each of the 25 filler pictures, with an eye toward matching the average frequency of filler distractors with those of target distractors.

Procedure

Testing had three components. First, each participant completed a simple oddball tone monitoring task in which low (1000 Hz) and high (1500 Hz) pure tones, each 60 ms in duration, were presented continuously at an SOA of 2000 ms. The probability of low versus high tones was 80% versus 20%. Participants were instructed to press a button to high tones, using the index finger of their right hand, as quickly and accurately as possible. This task comprised 180 trials and lasted ~6 minutes. Continuous EEG was recorded during this task as described in the Recording and Apparatus section.
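The trial counts and duration of the simple oddball task follow from the stated parameters. The following is a minimal sketch, assuming exact 80/20 proportions and a simple shuffle; the source does not describe how the tone sequence was actually randomized, and the function name and seed are illustrative only.

```python
import random


def make_oddball_sequence(n_trials=180, p_high=0.20, seed=0):
    """Return a shuffled list of 'low'/'high' tone labels.

    Assumption: exact proportions with plain shuffling. The original
    randomization scheme is not reported.
    """
    n_high = round(n_trials * p_high)
    sequence = ["high"] * n_high + ["low"] * (n_trials - n_high)
    random.Random(seed).shuffle(sequence)
    return sequence


sequence = make_oddball_sequence()
n_low = sequence.count("low")    # Standard tones
n_high = sequence.count("high")  # Target tones

# One tone every 2000 ms (the stated SOA), converted to minutes.
duration_min = len(sequence) * 2000 / 1000 / 60
```

Under these assumptions the task yields 144 Standard and 36 Target tones over 6 minutes, which matches the approximate duration reported above.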

Next, participants were familiarized with the 50 black-line drawings selected for the main task, after which they completed a practice task. Participants were told that, in addition to discriminating high versus low tones, a picture-distractor word pair would appear on each trial. Instructions were to name the picture, as quickly and accurately as possible, while judging the tone. Practice included 100 trials (each of the 25 filler pictures, presented twice with its unrelated distractor word, with each tone type at each tone SOA). Trial structure was the same as in the main task. EEG was not recorded during this warm-up task.

For the main task, 600 trials were presented in a single, large block. Each trial included a crosshair (+) presented for 500 ms, replaced by a Picture-Distractor pair, followed by a (1000 Hz or 1500 Hz) tone at an SOA of either 50 ms or 900 ms relative to picture onset. Distractor word SOA was always 0 ms relative to picture onset. The distractor word on each trial was masked (using 7 upper-case X’s) at 200 ms after picture onset. Trials were separated by a 500-ms intertrial interval, during which a blank screen was shown. The time-out period for responding was 3000 ms for naming and 2500 ms for tone judgments. Each picture appeared a total of 12 times. Each target picture appeared with each of its three distractor words, once with the low tone at each tone SOA, and once with the high tone at each tone SOA. To achieve an oddball effect (75% low tones, 25% high tones), each filler picture appeared with each of its three distractor words, only with a low tone, twice at each tone SOA. Trial type was completely randomized. Continuous EEG was recorded during this task, too, as described next.
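The design described above can be checked by enumerating the trial list. This is a sketch only: the picture identifiers are placeholders, and the enumeration ignores randomization order, which the source describes simply as complete randomization.

```python
from collections import Counter
from itertools import product

DISTRACTORS = ("Semantic", "Phonological", "Unrelated")
SOAS_MS = (50, 900)  # Short and Long Tone SOA


def build_trials(n_targets=25, n_fillers=25):
    """Enumerate (picture, distractor, tone SOA, tone) for every trial."""
    trials = []
    # Targets: each distractor x each SOA, once with each tone type.
    for pic in range(n_targets):
        for distractor, soa, tone in product(DISTRACTORS, SOAS_MS, ("low", "high")):
            trials.append((f"target{pic:02d}", distractor, soa, tone))
    # Fillers: each distractor x each SOA, low tone only, presented twice.
    for pic in range(n_fillers):
        for distractor, soa in product(DISTRACTORS, SOAS_MS):
            trials.extend([(f"filler{pic:02d}", distractor, soa, "low")] * 2)
    return trials


trials = build_trials()
tone_counts = Counter(t[3] for t in trials)
per_picture = Counter(t[0] for t in trials)
high_per_cell = Counter((t[1], t[2]) for t in trials if t[3] == "high")
```

The enumeration gives 600 trials, 450 low and 150 high tones (75% versus 25%), and 12 presentations per picture. It also shows that each Distractor Type-by-Tone SOA cell contains 25 Target (high) tones before any trials are excluded.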

Recording and Apparatus

This experiment was conducted in the same facility as our previous work (Maxfield et al., 2010, 2012, 2015), thus involving many of the same recording tools and settings. The experiment took place in a sound-attenuating booth contained within a laboratory. Participants viewed the visual stimuli on a 19-inch monitor located inside the booth, at a viewing distance of ~90 cm and at an angle subtending ~6.8 degrees. The height and width of the picture stimuli did not exceed 10.7 centimeters. Presentation of the experimental stimuli, and logging of behavioral responses, was controlled by E-Prime software, Version 1.1 (Psychology Software Tools). A hardware response box recorded both naming and push-button RTs. The auditory tone stimuli were presented through E-A-RTone 3A (Aearo) insert earphones.
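The reported visual angle is consistent with the stated stimulus size and viewing distance. A brief check, assuming the angle refers to the maximum picture dimension (10.7 cm) viewed at 90 cm; the function name is illustrative.

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Visual angle subtended by a stimulus centered on the line of sight."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


# Maximum picture dimension and approximate viewing distance from the text.
angle = visual_angle_deg(10.7, 90.0)
```

The result is close to 6.8 degrees, in agreement with the value given above.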

During each task, EEG was recorded continuously from each participant at a sampling rate of 500 Hz using a nylon QuikCap and SCAN software, Version 4.3 (Neuroscan). Thirty-two active recording electrodes constructed of Ag/AgCl were located at standard positions in the International 10–20 system (Klem et al., 1999) and referenced to a midline vertex electrode. The ground electrode was positioned anterior to Fz on the midline. Electro-ocular activity was recorded from two bipolar-referenced vertical electro-oculograph electrodes, and from two bipolar-referenced horizontal electro-oculograph electrodes. Recording impedance did not exceed 5 kOhm. Online low-pass filtering was used (corner frequency of 100 Hz, DC time constant).

EEG-to-ERP Reduction

The data processing workflow also resembled that used in our previous studies (Maxfield et al., 2010, 2012, 2015). The continuous EEG record for each participant in each of the two tasks was first epoched. The epoch for each trial contained EEG data elicited by a tone, beginning 300 ms before tone onset and terminating 1200 ms after tone onset. Trials on which pictures were named incorrectly and/or tone judgments were incorrect were excluded. A procedure using Independent Component Analysis to de-mix the EEG data and remove ocular artifacts (Glass et al., 2004; Bell and Sejnowski, 1995) was implemented in Matlab to maximize the number of trials retained for averaging (Picton et al., 2000). After ocular artifact correction, noisy channels were identified. Channels were noisy if the fast-average amplitude exceeded 200 microvolts (consistent with large drift) or if the differential amplitude exceeded 100 microvolts (consistent with high-frequency noise). A trial was rejected if more than three channels were noisy. A three-dimensional spline interpolation procedure was implemented in Matlab (Nunez and Srinivasan, 2006, Appendices J1–J3) to replace noisy channels in accepted trials. Next, averages were computed. For the dual-task data, no fewer than 20 artifact-free trials went into the ERP averages for each participant in each condition. For the simple tone task, no fewer than 131 trials comprised the ERP averages in the Standard condition and no fewer than 20 trials comprised the ERP averages in the Target condition for each participant. Finally, the ERP averages were low-pass filtered (corner frequency of 40 Hz), re-referenced to averaged mastoids, truncated (−100 to 1000 ms) and baseline-corrected (−100 to 0 ms).
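The channel and trial rejection rules described above can be expressed compactly. The sketch below assumes that the fast-average and differential amplitudes have already been computed for each channel on a given trial; how those two measures are derived is not specified here, so the inputs, function names, and example values are hypothetical.

```python
# Thresholds stated in the text (microvolts).
FAST_AVERAGE_LIMIT_UV = 200.0  # exceeding this is consistent with large drift
DIFFERENTIAL_LIMIT_UV = 100.0  # exceeding this is consistent with high-frequency noise
MAX_NOISY_CHANNELS = 3         # more noisy channels than this rejects the trial


def noisy_channels(fast_average_uv, differential_uv):
    """Return the sorted names of channels exceeding either threshold.

    Both arguments map channel name -> precomputed amplitude in microvolts.
    """
    return sorted(
        ch for ch in fast_average_uv
        if fast_average_uv[ch] > FAST_AVERAGE_LIMIT_UV
        or differential_uv[ch] > DIFFERENTIAL_LIMIT_UV
    )


def classify_trial(fast_average_uv, differential_uv):
    """Reject a trial with more than three noisy channels; otherwise accept.

    Noisy channels in accepted trials are candidates for interpolation.
    """
    bad = noisy_channels(fast_average_uv, differential_uv)
    decision = "reject" if len(bad) > MAX_NOISY_CHANNELS else "accept"
    return decision, bad


# Hypothetical amplitudes for one trial (illustrative values only).
fast = {"Fz": 35.0, "Cz": 250.0, "Pz": 40.0, "P3": 20.0, "P4": 30.0}
diff = {"Fz": 20.0, "Cz": 15.0, "Pz": 120.0, "P3": 10.0, "P4": 12.0}
decision, bad = classify_trial(fast, diff)
```

In this made-up example two channels exceed a threshold, so the trial would be accepted and those two channels replaced by interpolation.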

Analysis

Dual-task behavioral data

For the dual task, naming accuracy, naming RT, tone judgment accuracy and tone judgment RT were analyzed separately. Naming on each trial was correct if the participant produced the target label within the time-out period. Naming was incorrect for trials eliciting no response, a whole-word substitution, a phonological error, a multi-word response, or any response after the time-out period. Tone judgment on each trial was correct if the participant withheld responding to a low (Standard) tone or pressed the button to a high (Target) tone within the time-out period. Each set of accuracy data was submitted to a repeated-measures ANOVA with Group entered as a between-subjects variable with two levels (TFA, AWS), Distractor Type entered as a within-subjects factor with three levels (Semantic, Phonological, Unrelated), Tone Type entered as a within-subjects factor with two levels (Low, High), and Tone SOA entered as a within-subjects factor with two levels (Short, Long). Untrimmed naming RTs were also analyzed using this same approach. Untrimmed tone judgment RTs were analyzed in a repeated-measures ANOVA with three of the same factors (Group, Distractor Type, and Tone SOA), but without Tone Type as a factor because correct tone responses were only given to high tones. All four ANOVAs had an alpha-level of 0.05. For any test violating the assumption of sphericity, we report p-values based on adjusted degrees of freedom (Greenhouse and Geisser, 1959) along with original F-values. Statistically significant interactions were followed up with Bonferroni-corrected pair-wise comparisons.
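The accuracy criteria above can be written as two scoring rules. This is a sketch under stated assumptions: responses are logged with an RT in milliseconds or `None` when no response occurred, a response exactly at the time-out counts as within it, and any naming response that is not the exact target label (substitution, phonological error, multi-word response) is represented as a non-matching string. The function names are hypothetical.

```python
NAMING_TIMEOUT_MS = 3000  # time-out for naming, from the Procedure
TONE_TIMEOUT_MS = 2500    # time-out for tone judgments, from the Procedure


def naming_correct(response, target_label, rt_ms):
    """Correct only if the target label was produced within the time-out."""
    in_time = rt_ms is not None and rt_ms <= NAMING_TIMEOUT_MS
    return in_time and response == target_label


def tone_judgment_correct(tone, press_rt_ms):
    """Correct if a press occurred to a high tone, or was withheld to a low tone."""
    pressed = press_rt_ms is not None and press_rt_ms <= TONE_TIMEOUT_MS
    return pressed if tone == "high" else not pressed


examples = [
    naming_correct("dog", "dog", 850),    # target label, in time
    naming_correct("cat", "dog", 850),    # whole-word substitution
    naming_correct("dog", "dog", 3200),   # response after the time-out
    tone_judgment_correct("high", 600),   # hit
    tone_judgment_correct("high", None),  # miss
    tone_judgment_correct("low", None),   # correct rejection
    tone_judgment_correct("low", 700),    # false alarm
]
```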

Dual-task ERP data

As discussed by Luck (1998), a challenge in measuring P3 activity in a psychological refractory period context is that ERP activity from Task 1 can overlap with ERP activity from Task 2 differently at different SOAs. His solution was to compute difference waves (Target ERPs minus Standard ERPs) separately for each Tone SOA condition in order to attenuate activity unrelated to P3. The logic of this approach is that both Target and Standard ERPs to Task 2 should be similarly influenced by overlapping Task 1 activity. Subtracting them should isolate mostly P3 activity while attenuating overlapping ERP activity from Task 1 (see Luck, 1998).

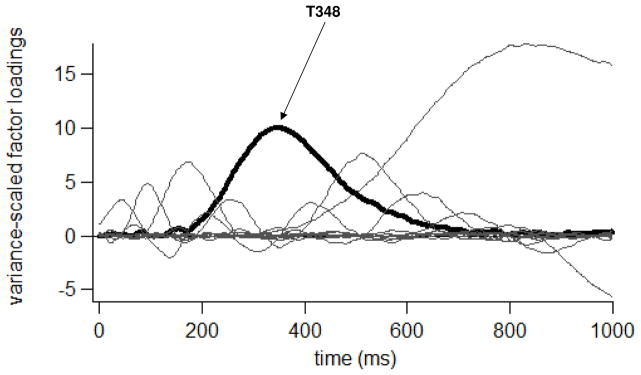

We adopted this approach. However, before computing Target minus Standard differences, the averaged ERP data were preprocessed using a covariance-based temporal principal component analysis (tPCA) (Dien and Frishkoff, 2005). PCA is a data reduction technique that can be used to facilitate objective identification of ERP components, address overlap of ERP components, and control type-1 measurement error. The aim of the tPCA was to identify distinct windows of time (hereafter, temporal factors) during which similar voltage variance was registered across consecutive sampling points in the averaged ERP waveforms. Each temporal factor is defined by a set of loadings and by a set of scores. The variance-scaled loadings describe the time-course of each temporal factor. The temporal factor scores summarize the ERP activity during the time window defined by each temporal factor for each participant, at each electrode, and in each condition. tPCA, when followed up by topographic analysis of temporal factor scores, has been shown to optimize power for detecting statistically significant effects in ERP data sets (Kayser and Tenke, 2003; Dien, 2010).

To compute the tPCA, the averaged ERP waveforms were combined into a data matrix comprising 501 columns (one column per time point in the 0–1000 ms epoch) and 11,520 rows (the averaged ERP voltages for 30 participants, at each of the 32 electrodes, in each of the 12 Distractor Type-by-Tone Type-by-Tone SOA conditions). As reported below, 12 temporal factors were retained based on the Visual Scree Test (Cattell, 1966). The 12 retained temporal factors were rotated to simple structure using Promax (Hendrickson and White, 1964) with Kaiser normalization and k=3 (following recommendations in Richman, 1986; Tataryn et al., 1999; Dien, 2010). The tPCA and Promax rotation were carried out using the Matlab-based PCA Toolbox (Dien, 2010).
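The dimensions of the tPCA input matrix follow from the sampling rate and the design. The sketch below reproduces that arithmetic and shows one possible way to index rows; the row ordering (participant, then electrode, then condition) is an assumption made for illustration and is not taken from the source or from the PCA Toolbox.

```python
N_PARTICIPANTS = 30
N_ELECTRODES = 32
N_CONDITIONS = 3 * 2 * 2  # Distractor Type x Tone Type x Tone SOA
SAMPLING_RATE_HZ = 500
EPOCH_MS = (0, 1000)      # window entered into the tPCA

# One row per participant x electrode x condition waveform.
n_rows = N_PARTICIPANTS * N_ELECTRODES * N_CONDITIONS

# One column per sample, counting both endpoints of the window.
n_cols = (EPOCH_MS[1] - EPOCH_MS[0]) * SAMPLING_RATE_HZ // 1000 + 1


def row_index(participant, electrode, condition):
    """Zero-based row of one averaged waveform (hypothetical ordering)."""
    return (participant * N_ELECTRODES + electrode) * N_CONDITIONS + condition


def column_latency_ms(column):
    """Latency in ms of a zero-based column (2 ms per sample at 500 Hz)."""
    return EPOCH_MS[0] + column * 1000 // SAMPLING_RATE_HZ
```

With these values the matrix has 11,520 rows and 501 columns, matching the dimensions reported above.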

In order to target P3 effects, a temporal factor with a time-course most consistent with P3 was selected. As reported below, the selected temporal factor had a peak latency of 348 ms. Filtering the averaged ERP data by this temporal factor isolated the ERP variance within a time window peaking at ~350 ms after tone onset for each participant, at each electrode, in each condition. To verify the presence of a P3 effect, the temporal factor scores were submitted to repeated-measures ANOVA with Group entered as a between-subjects factor with two levels (TFA, AWS), Distractor Type entered as a within-subjects factor with three levels (Semantic, Phonological, Unrelated), Tone Type entered as a within-subjects factor with two levels (Low, High), and Tone Lag (SOA) entered as a within-subjects factor with two levels (Short, Long). Two topographic factors were also entered as within-subjects factors: Laterality with five levels (Left Inferior, Left Superior, Midline, Right Superior, Right Inferior) and Anteriority with three levels (Frontal, Central, Posterior). The 15 electrodes included for analysis were grouped by Laterality and Anteriority as follows: F7, T7, P7 (Left Inferior); F3, C3, P3 (Left Superior); Fz, Cz, Pz (Midline); F4, C4, P4 (Right Superior); and F8, T8, P8 (Right Inferior). The aim of this analysis was to determine whether temporal factor score amplitudes differed to Target (High) tones versus Standard (Low) tones (i.e., had a larger positive-going amplitude to Target versus Standard tones consistent with a P3 component) as a main effect and/or interacting with Group, Distractor Type, Tone Lag, Laterality and/or Anteriority. As reported in the Results, robust P3 effects were detected for the TFA group in all six Distractor Type-by-Tone Lag conditions. For the AWS, however, P3 effects were only detected for a subset of Distractor Type-by-Tone Lag conditions.
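The 5-by-3 electrode grid used for the topographic factors can be represented as a lookup table. In this sketch the within-group order (Frontal, Central, Posterior) is inferred from the order in which electrodes are listed above; the helper name is illustrative.

```python
ANTERIORITY = ("Frontal", "Central", "Posterior")

# Laterality level -> electrodes ordered Frontal, Central, Posterior.
ELECTRODE_GRID = {
    "Left Inferior": ("F7", "T7", "P7"),
    "Left Superior": ("F3", "C3", "P3"),
    "Midline": ("Fz", "Cz", "Pz"),
    "Right Superior": ("F4", "C4", "P4"),
    "Right Inferior": ("F8", "T8", "P8"),
}


def topographic_levels(electrode):
    """Return the (Laterality, Anteriority) levels for an analyzed electrode."""
    for laterality, sites in ELECTRODE_GRID.items():
        if electrode in sites:
            return laterality, ANTERIORITY[sites.index(electrode)]
    raise KeyError(f"{electrode} is not among the 15 analyzed electrodes")


n_electrodes = sum(len(sites) for sites in ELECTRODE_GRID.values())
```

For example, `topographic_levels("Pz")` returns `("Midline", "Posterior")`, the site where P3 is typically most prominent.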

Next, difference scores were computed using the same set of temporal factor scores. Standard (Low) tone scores were subtracted from Target (High) tone scores, separately for each group, in each Distractor Type, at each Tone Lag, and at each of the 15 electrodes included in the analysis. The Difference scores were then submitted to repeated-measures ANOVA with Group as a between-subjects factor with two levels (TFA, AWS), Distractor Type as a within-subjects factor with three levels (Semantic, Phonological, Unrelated) and Tone SOA as a within-subjects factor with two levels (Short, Long). Laterality and Anteriority were also entered as within-subjects factors as described previously. The aim of this analysis was to determine whether the amplitude of isolated P3 effects differed as a function of Group, Conditions, scalp topography or their interaction.
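The Target-minus-Standard subtraction can be sketched as follows. This is an illustration under assumptions: the scores are made-up values rather than tPCA output, the subtraction is applied per participant, and the dictionary layout and function name are hypothetical.

```python
# Synthetic factor scores for illustration only; real scores come from the tPCA.
def difference_scores(scores):
    """Return Target-minus-Standard scores.

    `scores` maps (participant, distractor, tone_type, soa, electrode) -> factor score.
    The result maps (participant, distractor, soa, electrode) -> difference.
    """
    diffs = {}
    for (pid, distractor, tone, soa, electrode), value in scores.items():
        if tone != "Target":
            continue
        standard = scores[(pid, distractor, "Standard", soa, electrode)]
        diffs[(pid, distractor, soa, electrode)] = value - standard
    return diffs

toy = {
    ("p01", "Semantic", "Target", "Short", "Pz"): 1.5,
    ("p01", "Semantic", "Standard", "Short", "Pz"): 0.25,
}
result = difference_scores(toy)
print(result)  # {('p01', 'Semantic', 'Short', 'Pz'): 1.25}
```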

For both ANOVAs, we report p-values based on adjusted degrees of freedom (Greenhouse and Geisser, 1959) when necessary along with original F-values. Statistically significant interactions were followed up with Bonferroni-corrected pairwise comparisons.
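For readers unfamiliar with the degrees-of-freedom adjustment, the sketch below computes the standard Greenhouse-Geisser epsilon from the covariance matrix of a within-subjects factor and applies it to a nominal F[2,56] test. The covariance matrix is made up for illustration, the function name is hypothetical, and this is not necessarily how the statistics software used in the study implements the correction.

```python
def greenhouse_geisser_epsilon(cov):
    """Greenhouse-Geisser epsilon from a symmetric k x k covariance matrix."""
    k = len(cov)
    row_means = [sum(row) / k for row in cov]
    col_means = [sum(cov[i][j] for i in range(k)) / k for j in range(k)]
    grand_mean = sum(row_means) / k
    # Double-center the covariance matrix.
    centered = [[cov[i][j] - row_means[i] - col_means[j] + grand_mean
                 for j in range(k)] for i in range(k)]
    trace = sum(centered[i][i] for i in range(k))
    # For a symmetric matrix, the sum of squared entries equals trace(centered^2).
    sum_sq = sum(x * x for row in centered for x in row)
    return trace ** 2 / ((k - 1) * sum_sq)

# Made-up covariance matrix for a three-level factor (not study data).
toy_cov = [[1.0, 0.0, 0.0],
           [0.0, 1.0, 0.0],
           [0.0, 0.0, 10.0]]
eps = greenhouse_geisser_epsilon(toy_cov)

# Adjusted degrees of freedom for a nominal F[2,56] test.
adj_df = (eps * 2, eps * 56)
print(round(eps, 2), adj_df)
```

Epsilon equals 1 when sphericity holds and cannot fall below 1/(k-1), so the adjustment can only shrink the degrees of freedom, which makes the test more conservative.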

Simple oddball task behavioral data

For the simple oddball task, tone judgment accuracy and tone judgment RT were analyzed separately. Tone judgment on each trial was correct if the participant withheld responding to a low (Standard) tone or pressed the button to a high (Target) tone within the time-out period. Tone judgment accuracy data were submitted to a repeated-measures ANOVA with Group entered as a between-subjects factor with two levels (TFA, AWS) and Tone Type entered as a within-subjects factor with two levels (Low, High). Untrimmed tone judgment RTs were analyzed using an independent-samples t-test comparing Group (TFA versus AWS).

Simple oddball task ERP data

ERP data for the simple oddball task were also submitted to temporal PCA, following the same general procedures outlined previously. A temporal factor most consistent with the P3 component was selected. Factor scores associated with this temporal factor were analyzed in a repeated-measures ANOVA with Group entered as a between-subjects factor with two levels (TFA, AWS) and Tone Type entered as a within-subjects factor with two levels (Low, High). Laterality and Anteriority were also entered as within-subjects factors as described previously. The aim of this analysis was to determine whether temporal factor score amplitudes differed to Target (High) tones versus Standard (Low) tones as a main effect and/or interacting with Group and/or scalp topography. As reported in the Results, a robust P3 effect was detected for both groups. Difference scores were then computed using the same set of temporal factor scores. Standard (Low) tone scores were subtracted from Target (High) tone scores, separately for each group at each of the 15 targeted electrodes. The Difference scores were then compared between Groups using repeated-measures ANOVA with Laterality and Anteriority entered as within-subjects factors. The aim of this analysis was to determine whether the amplitude of isolated P3 effects differed by Group and/or the interaction of Group and scalp topography.

RESULTS

Findings from the simple oddball task are reported first to provide a frame of reference for evaluating the dual-task behavioral and ERP findings.

Simple Oddball Task Behavioral Data

Behavioral data for the Simple Tone Oddball Task are summarized in Table 1. Tone judgment accuracy was not affected by Group, Tone Type or their interaction. Tone judgment RT was marginally affected by Group (t[28]=1.79, p=.08), with tone judgments faster for AWS (mean=323.98 ms) than for TFA (mean=365.63 ms).

Table 1.

Mean accuracy and RT (with standard deviations) for each Group in the Simple Tone Oddball Task.

| | TFA | AWS |
|---|---|---|
| Button Press Accuracy | | |
| Standard (n=144 items) | 143.67 (.82) | 143.6 (.91) |
| Target (n=36 items) | 35.93 (.26) | 35.87 (.35) |
| Button Press RT (in ms) | | |
| Target | 365.63 (48.54) | 323.98 (75.99) |
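As a consistency check, the reported Group effect on tone judgment RT can be recomputed from the Table 1 means and standard deviations. The sketch assumes 15 participants per group and an equal-variance (pooled) test, which is consistent with the reported 28 degrees of freedom but is not stated explicitly in the text; the function name is hypothetical.

```python
import math

def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Independent-samples t statistic (equal variances assumed) from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df

# Target-tone RT means and SDs from Table 1 (TFA first, then AWS).
t, df = pooled_t(365.63, 48.54, 15, 323.98, 75.99, 15)
print(round(t, 2), df)  # 1.79 28
```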

Simple Oddball Task ERP Data

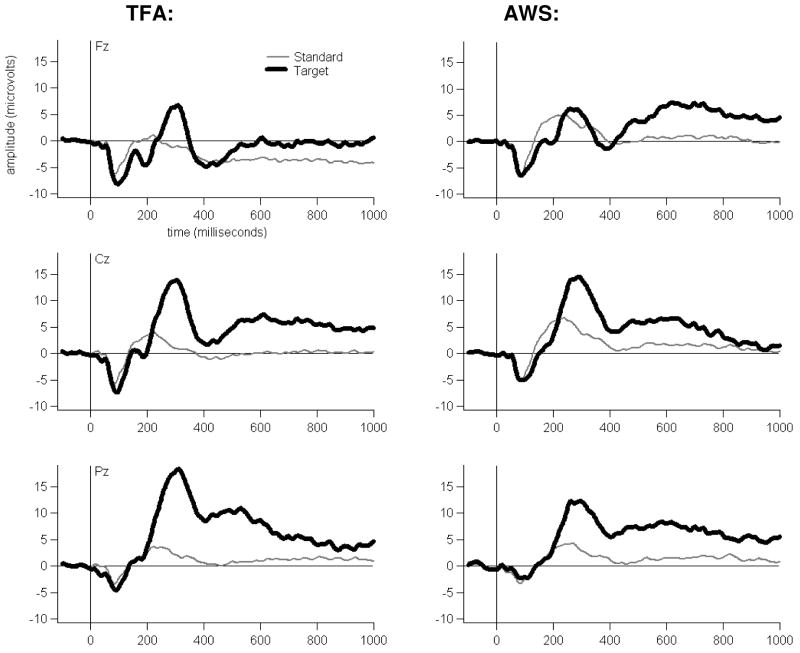

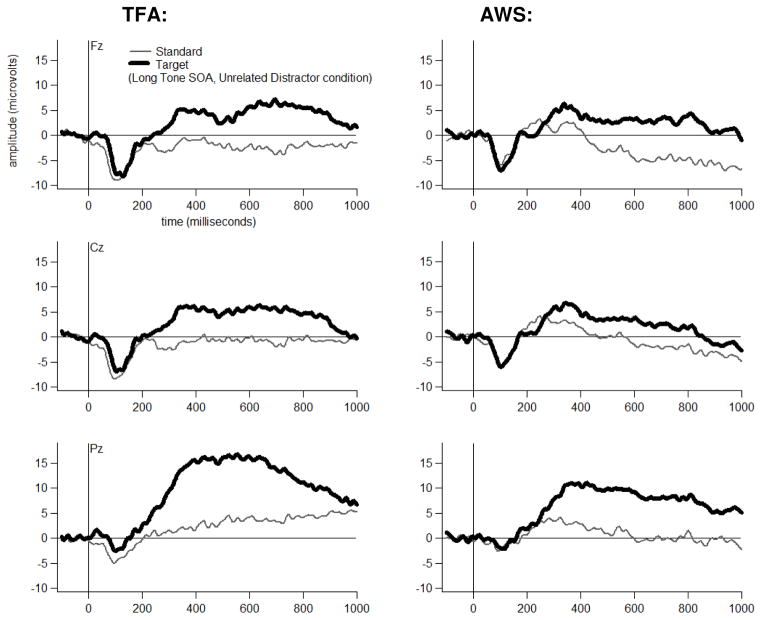

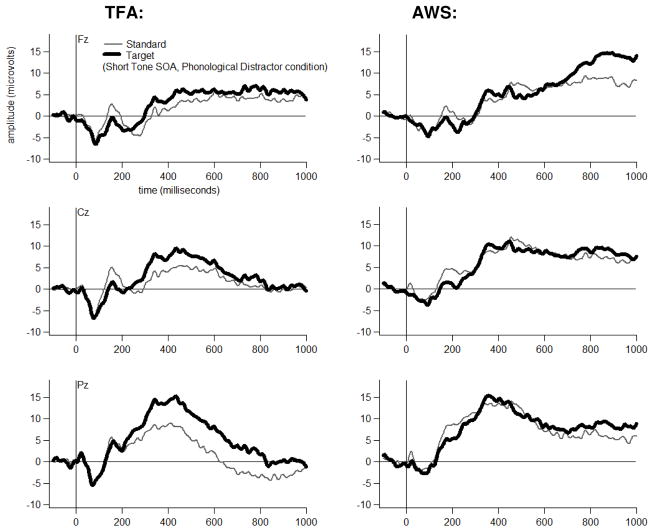

Simple oddball task grand average ERP waveforms are shown for each Group, at three midline electrodes, in Figure 1. As shown, the tones generally elicited a pattern of early (exogenous) ERP activity followed by later positive-going activity modulated by Tone Type, particularly at electrode Pz.

Figure 1.

Simple Tone Oddball Task grand average waveforms at Fz, Cz and Pz for each Group to each Tone Type.

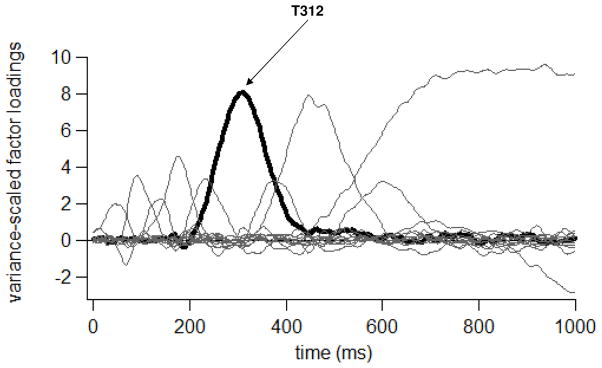

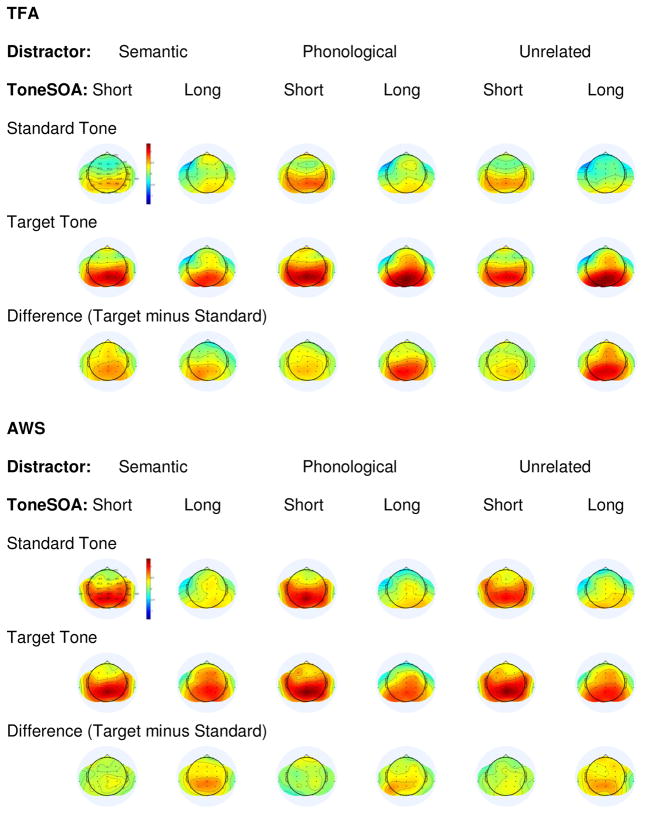

The temporal PCA resulted in 14 Promax-rotated temporal factors, accounting for 84.10% of the variance in the simple oddball average ERP data set. One temporal factor was defined by a set of loadings that peaked in amplitude at 312 ms after tone onset (hereafter, T312, see Figure 2). The T312 factor scores were affected by an interaction of Laterality, Anteriority and Tone Type (F[8,224]=5.84, p=.003). As shown in Figure 3, T312 scores had a larger positive-going amplitude to Target versus Standard tones in both Groups, primarily at posterior electrodes. Group did not affect T312 amplitudes, either as a main effect or interacting with Laterality, Anteriority and/or Tone Type. Still, to investigate whether gender may have affected P3 activity disproportionately in either Group, we checked whether T312 scores to Target tones were larger in the TFA (composed mostly of women) than in the AWS (composed mostly of men) at any of the midline electrodes, consistent with other research cited previously (e.g., Hoffman and Polich, 1999). No such effect was observed at Fz (p=.82), Cz (p=.83) or Pz (p=.13). We also checked for a characteristic increase in P3 amplitude at Pz versus frontal and central sites often reported for women but not men, also mentioned previously. For both Groups, T312 activity to Target tones was larger at Pz than at Fz (p<.001 for each Group). If anything, this suggests that female participants impacted P3 topography similarly in each Group despite their different numbers.

Figure 2.

Promax-rotated factor loadings for each of 14 temporal factors generated from the Simple Tone Oddball Task ERP data.

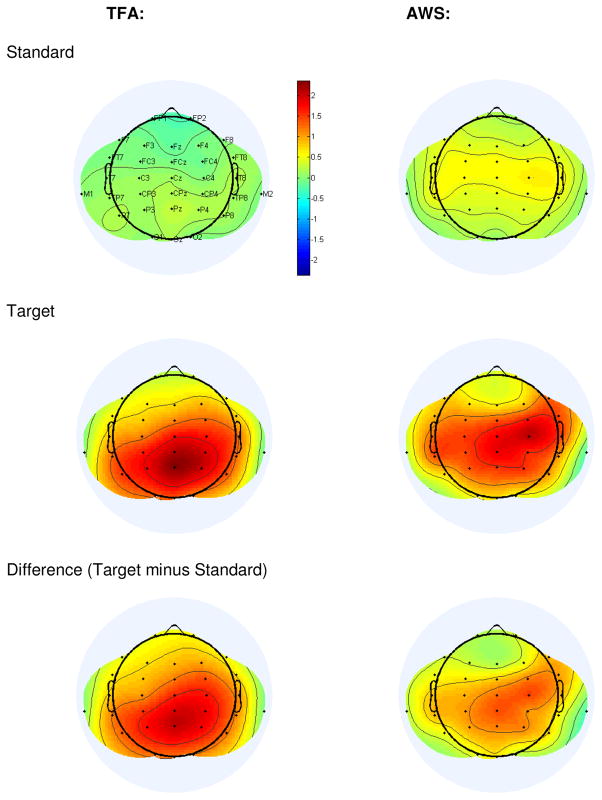

Figure 3.

Topographic plots of grand average T312 scores, shown separately for each Group to each Tone Type and for the Target minus Standard Difference.

T312 difference scores were analyzed (Target minus Standard) to determine whether detected P3 effects differed in magnitude between Groups. The Difference scores were shown not to be affected by Group, either as a main effect or interacting with Laterality and/or Anteriority. Here, too, we checked whether T312 difference score amplitudes differed at Pz versus Fz and Cz. For both Groups, T312 difference score amplitudes were larger at Pz versus Fz (p<.001 for each Group) suggesting, once again, that female participants impacted P3 topography similarly in each group.

Dual-Task Behavioral Data

Dual-task behavioral data are summarized in Table 2. Statistically significant effects were as follows.

Table 2.

Mean accuracy and RT (with standard deviations) for each Group in each Tone Type x Tone SOA x Distractor Type condition.

| | TFA, Short Tone SOA | TFA, Long Tone SOA | AWS, Short Tone SOA | AWS, Long Tone SOA |
|---|---|---|---|---|
| Naming Accuracy (n=25 items per condition) in Standard Tone Context | | | | |
| Semantic | 24.13 (1.41) | 24.27 (1.03) | 24.6 (.63) | 24 (.65) |
| Phonological | 24.67 (.62) | 24.87 (.35) | 24.67 (.82) | 24.93 (.26) |
| Unrelated | 24.6 (.63) | 24.8 (.41) | 24.73 (.59) | 24.87 (.35) |
| Naming Accuracy (n=25 items per condition) in Target Tone Context | | | | |
| Semantic | 24.6 (.63) | 24.27 (.62) | 24.4 (.63) | 24.53 (.64) |
| Phonological | 24.27 (1.03) | 24.73 (.59) | 24.87 (.35) | 24.6 (.51) |
| Unrelated | 24.93 (.26) | 24.73 (.46) | 24.73 (.46) | 24.73 (.59) |
| Naming RT (in ms) in Standard Tone Context | | | | |
| Semantic | 776.74 (79.73) | 861.1 (242.74) | 768.74 (139.84) | 902.63 (263.35) |
| Phonological | 681.43 (82.24) | 795.27 (285.73) | 677.53 (112.74) | 831.37 (297.86) |
| Unrelated | 716.08 (66.31) | 827.56 (265.71) | 704.33 (127.95) | 855.08 (280.52) |
| Naming RT (in ms) in Target Tone Context | | | | |
| Semantic | 788.67 (100.13) | 887.75 (274.63) | 798.96 (125.29) | 925.66 (299.31) |
| Phonological | 713.9 (85.92) | 819.98 (287.71) | 685.88 (111.71) | 831.09 (306.24) |
| Unrelated | 729.31 (97.47) | 834.51 (292.13) | 749.13 (123.33) | 862.43 (293.06) |
| Button Press Accuracy (n=25 items per condition) to Standard Tones | | | | |
| Semantic | 24.73 (.46) | 24.73 (.46) | 24.4 (.83) | 24.6 (.63) |
| Phonological | 24.53 (.92) | 24.87 (.35) | 24.73 (.59) | 24.8 (.41) |
| Unrelated | 24.87 (.35) | 24.8 (.41) | 24.73 (.46) | 24.8 (.41) |
| Button Press Accuracy (n=25 items per condition) to Target Tones | | | | |
| Semantic | 24.4 (.74) | 24.07 (1.1) | 24.07 (1.1) | 24.33 (.72) |
| Phonological | 24.73 (.59) | 24.2 (.94) | 24.07 (1.16) | 24.53 (.74) |
| Unrelated | 24.6 (.83) | 24.53 (.64) | 24.07 (1.62) | 24.53 (.74) |
| Button Press RT (in ms) to Target Tones | | | | |
| Semantic | 802.69 (195.97) | 566.3 (203.42) | 736.05 (141.82) | 522.11 (134.49) |
| Phonological | 756.38 (173.79) | 532.28 (208.53) | 727.69 (160.57) | 487.94 (122.38) |
| Unrelated | 768.41 (175.85) | 528.54 (181.62) | 715.1 (141.18) | 474.45 (111.02) |

Naming accuracy

Naming accuracy was affected by the interaction of Group, Distractor Type, Tone Type and Tone SOA (F[2,56]=4, p=.03). Bonferroni-corrected pairwise t-tests revealed that, for the TFA group, naming accuracy was slightly, albeit significantly, poorer in the Phonological condition (mean=24.27) than in Unrelated (mean=24.93) in the context of Target (High) Tones presented at a Short SOA (p=.02) (see Table 2). In contrast, for the AWS group, naming accuracy was slightly poorer in the Semantic condition (mean=24) than in Unrelated (mean=24.87) in the context of Standard (Low) Tones presented at a Long SOA (p=.003).

Naming RT

Naming RT was affected by Distractor Type (F[2,56]=83.77, p<.001). Bonferroni-corrected pairwise comparisons revealed that naming RTs were slower in Semantic Distractor Type (mean=838.78 ms) than in Unrelated (mean=784.8 ms). In contrast, naming RTs were faster in Phonological Distractor Type (mean=754.56 ms) versus Unrelated. The former is consistent with the standard Semantic interference effect, while the latter is consistent with Phonological facilitation.

Naming RT was also affected by Tone SOA (F[1,28]=9.91, p=.004), with naming RTs shorter in the Short Tone SOA Condition (mean=732.56 ms) than in the Long Tone SOA Condition (mean=852.87 ms).

Finally, naming RT was affected by Tone Type (F[1,28]=16.18, p<.001), with naming RTs shorter in the context of Standard (Low) Tones (mean=783.15 ms) than in the context of Target (High) Tones (mean=802.27 ms).
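The marginal naming RT means reported in this subsection can be recovered from the Table 2 cell means. The sketch below assumes that an unweighted average over cells is appropriate, which holds here because the design is balanced with 15 participants per group; the dictionary layout and function name are hypothetical.

```python
from statistics import mean

# Naming RT cell means (ms) copied from Table 2.
# Key: (tone context, distractor); value: [TFA Short, TFA Long, AWS Short, AWS Long].
naming_rt = {
    ("Standard", "Semantic"):     [776.74, 861.10, 768.74, 902.63],
    ("Standard", "Phonological"): [681.43, 795.27, 677.53, 831.37],
    ("Standard", "Unrelated"):    [716.08, 827.56, 704.33, 855.08],
    ("Target", "Semantic"):       [788.67, 887.75, 798.96, 925.66],
    ("Target", "Phonological"):   [713.90, 819.98, 685.88, 831.09],
    ("Target", "Unrelated"):      [729.31, 834.51, 749.13, 862.43],
}

def marginal(tone=None, distractor=None, columns=(0, 1, 2, 3)):
    """Unweighted mean over the selected cells (None means 'all levels')."""
    values = [row[c]
              for (t, d), row in naming_rt.items()
              if (tone is None or t == tone) and (distractor is None or d == distractor)
              for c in columns]
    return mean(values)

semantic = marginal(distractor="Semantic")
phonological = marginal(distractor="Phonological")
unrelated = marginal(distractor="Unrelated")
short_soa = marginal(columns=(0, 2))   # columns 0 and 2 hold the Short Tone SOA cells
long_soa = marginal(columns=(1, 3))    # columns 1 and 3 hold the Long Tone SOA cells
standard = marginal(tone="Standard")
target = marginal(tone="Target")

for name, value in [("Semantic", semantic), ("Phonological", phonological),
                    ("Unrelated", unrelated), ("Short SOA", short_soa),
                    ("Long SOA", long_soa), ("Standard", standard), ("Target", target)]:
    print(f"{name}: {value:.2f} ms")
```

Each recomputed value agrees with the corresponding mean reported in the text to within rounding.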

Button press accuracy

Tone judgment accuracy was affected by Distractor Type (F[2,56]=4.47, p=.017), with more errors in Semantic Distractor Type (mean=24.42) than in Unrelated (mean=24.62).

Accuracy in tone judgments was also affected by an interaction of Group, Tone SOA and Tone Type (F[1,28]=7.72, p=.01). Bonferroni-corrected t-tests revealed that TFA had less accurate tone judgments for Target (High) tones (mean=24.27) than for Standard (Low) tones (mean=24.8) at the Long Tone SOA. In contrast, AWS had less accurate tone judgments for Target (High) Tones (mean=24.07) than for Standard (Low) tones (mean=24.62) at the Short Tone SOA.

Button press RT

Tone judgment RT was affected by Distractor Type (F[2,56]=13.82, p<.001). Crucially, tone judgments were slower in Semantic Distractor Type (mean=656.79 ms) than in Unrelated (mean=621.63 ms).

Tone judgment RT was also affected by Tone SOA (F[1,28]=263.65, p<.001), with tone judgments slower at the Short Tone SOA (mean=751.05 ms) than at the Long Tone SOA (mean=518.6 ms). This difference represents the basic psychological refractory period effect, in which Task 2 responses are delayed when tasks overlap (see Pashler, 1984).

Dual-Task ERP Data

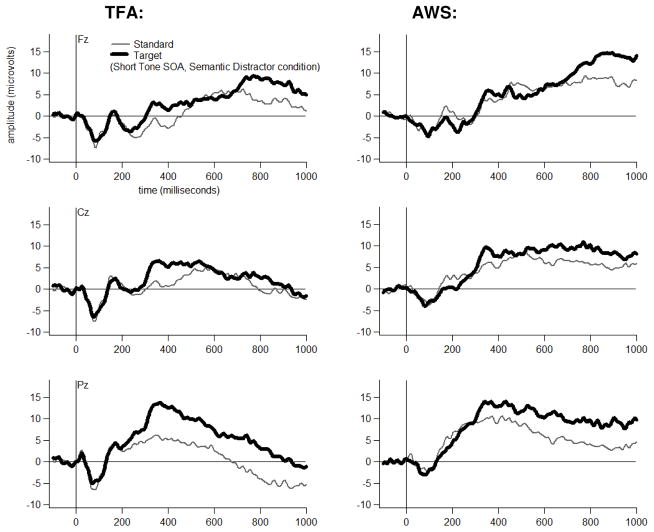

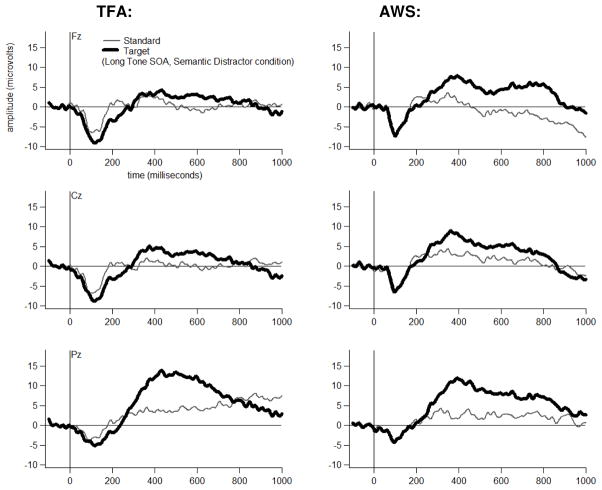

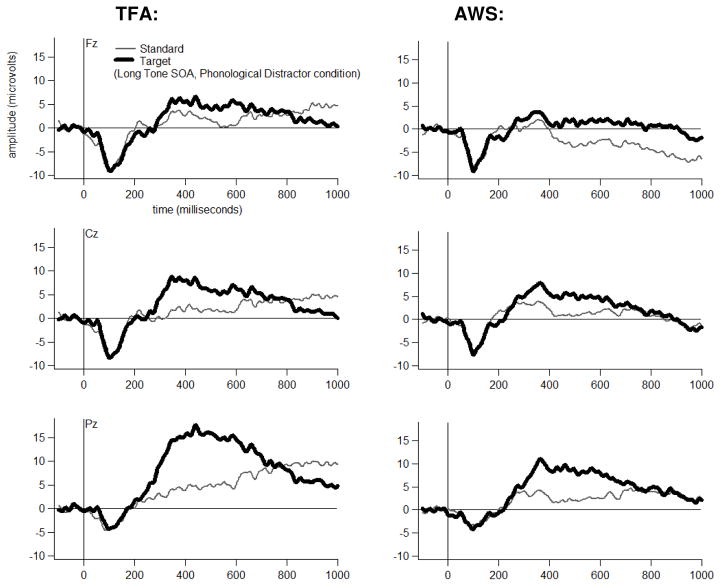

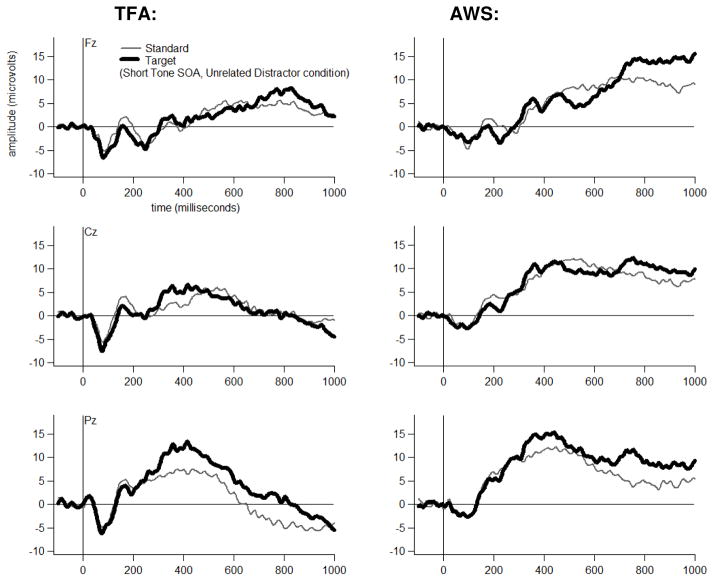

Grand average ERP waveforms are shown for each Group, at three midline electrodes, for each Tone Type, separately for each Distractor Type-by-Tone SOA combination in Figures 4 through 9. The tones generally elicited a pattern of early (exogenous) ERP activity followed by later positive-going activity that was often modulated by Tone Type, particularly at electrode Pz. This Tone Type effect appeared to be attenuated in at least some Distractor Type-by-Tone SOA conditions for the AWS.

Figure 4.

Dual-task grand average waveforms at Fz, Cz and Pz for each Group to each Tone Type in the Semantic Distractor condition at the Short Tone SOA.

Figure 9.

Dual-task grand average waveforms at Fz, Cz and Pz for each Group to each Tone Type in the Unrelated Distractor Condition at the Long Tone SOA.

The temporal PCA resulted in 12 Promax-rotated temporal factors, accounting for 80.79% of the variance in the average ERP data set. One temporal factor was defined by a set of loadings that peaked in amplitude at 348 ms after tone onset (hereafter, T348, see Figure 10). T348 factor scores were affected by an interaction of Group, Distractor Type, Tone Type, Tone SOA, Laterality and Anteriority (F[16,448]=2.01, p=.047). Figure 11 depicts grand average T348 scores topographically.

Figure 10.

Promax-rotated factor loadings for each of 12 temporal factors generated from the Dual-Task ERP data set.

Figure 11.

Topographic plots of grand average T348 scores, shown separately for each Group in each Distractor condition to each Tone Type and for the Target minus Standard Difference at each Tone SOA.

Bonferroni-corrected t-tests revealed that, for the TFA, T348 scores to Target (High) tones had a larger positive-going amplitude than T348 scores to Standard (Low) tones in each Distractor Type-by-Tone SOA condition at electrode Pz (p≤.01). Table 3 lists the other electrodes at which a significant Target versus Standard difference was also detected (p<.05) in the TFA in each Distractor Type, at each Tone SOA.

Table 3.

Electrodes by region at which a significant P3 effect was detected for each Group in each Tone Type x Tone SOA x Distractor Type condition (top and middle sections), and Electrodes by region at which P3 difference scores differed between Groups in each Tone Type x Tone SOA x Distractor Type condition (bottom section).

| Group | Distractor Type | Tone SOA | Left Inferior | Left Superior | Midline | Right Superior | Right Inferior |
|---|---|---|---|---|---|---|---|
| TFA | Semantic | Short | T7, P7 | C3, P3 | Fz, Cz, Pz | C4, P4 | T8, P8 |
| TFA | Semantic | Long | P7 | C3, P3 | Pz | P4 | |
| TFA | Phonological | Short | T7, P7 | F3, C3, P3 | Fz, Cz, Pz | C4, P4 | P8 |
| TFA | Phonological | Long | T7, P7 | C3, P3 | Cz, Pz | C4, P4 | T8, P8 |
| TFA | Unrelated | Short | P7 | P3 | Cz, Pz | C4, P4 | |
| TFA | Unrelated | Long | T7, P7 | F3, C3, P3 | Fz, Cz, Pz | F4, C4, P4 | T8, P8 |
| AWS | Semantic | Short | | | Cz, Pz | P4 | |
| AWS | Semantic | Long | T7, P7 | C3, P3 | Cz, Pz | C4, P4 | P8 |
| AWS | Phonological | Short | | | | | |
| AWS | Phonological | Long | P7 | P3 | Pz | P4 | |
| AWS | Unrelated | Short | | | | | |
| AWS | Unrelated | Long | T7, P7 | F3, C3, P3 | Fz, Cz, Pz | F4, C4, P4 | P8 |
| AWS vs. TFA | Semantic | Short | | P3 | Pz | P4 | P8 |
| AWS vs. TFA | Semantic | Long | | | | | |
| AWS vs. TFA | Phonological | Short | P7 | C3 | Pz | | |
| AWS vs. TFA | Phonological | Long | | | | | |
| AWS vs. TFA | Unrelated | Short | | | | | |
| AWS vs. TFA | Unrelated | Long | P7 | P3 | Pz | P4 | P8 |

For the AWS, T348 scores to Target (High) tones had a larger positive-going amplitude than T348 scores to Standard (Low) tones for four of the six Distractor Type-by-Tone SOA conditions at electrode Pz (p<.05). A Tone Type effect was not detected for AWS at Pz for the Phonological Distractor+Short SOA condition (p=.48) or for the Unrelated Distractor+Short SOA conditions (p=.09). Nor was a Tone Type effect detected for these two conditions at any of the other electrodes. Table 3 lists the electrodes at which a significant Target versus Standard difference was detected (p<.05) in each Distractor Type, at each Tone SOA.

Inspection of Figure 11 suggests that T348 scores may have differed between Groups in each Tone Type. To investigate this possibility, T348 scores were compared between Groups separately for each Tone Type, in each Distractor Type, at each Tone SOA. T348 scores were shown to be larger in amplitude for the AWS versus TFA, in the Semantic Distractor+Standard Tone+Short SOA condition, at electrode P3 (p=.043) and, marginally, at electrode Pz (p=.08). T348 scores were also shown to be marginally larger in amplitude for the AWS versus TFA, in the Unrelated Distractor+Standard Tone+Short SOA condition at electrodes Cz (p=.06) and C4 (p=.09).

Next, we analyzed Difference scores (Target minus Standard) to determine whether detected P3 effects differed in magnitude between Groups as a function of Distractor Type and Tone SOA. The Difference scores were shown to be affected by an interaction of Group, Distractor Type, Tone SOA, Laterality and Anteriority (F[16,448]=2.01, p=.047). Bonferroni-corrected t-tests, comparing Group at electrode Pz for each Distractor Type-by-Tone SOA combination, revealed attenuated Difference score amplitudes for AWS versus TFA in the Semantic Distractor+Short SOA (p=.038), Phonological Distractor+Short SOA (p=.026), and Unrelated Distractor+Long SOA (p=.018) conditions. Table 3 shows other electrodes at which Difference scores were significantly attenuated (p≤.05) in AWS versus TFA. Dual-task P3 results most relevant to the study aims are summarized in Table 4.

Table 4.

Summary of dual-task P3 results.

| Distractor Type | Tone SOA | Was a P3 effect (Target versus Standard difference) detected? | Did P3 difference scores (Target minus Standard) differ in amplitude between Groups? |
|---|---|---|---|
| Semantic | Short | Yes, both groups | Yes, attenuated in AWS versus TFA at Pz and other posterior sites |
| Semantic | Long | Yes, both groups | No |
| Phonological | Short | Yes for TFA; No for AWS | Yes, attenuated in AWS versus TFA at Pz and other central and posterior sites |
| Phonological | Long | Yes, both groups | No |
| Unrelated | Short | Yes for TFA; No for AWS | No |
| Unrelated | Long | Yes, both groups | Yes, attenuated in AWS versus TFA at Pz and other posterior sites |

DISCUSSION

The study aim was to investigate whether lexical-semantic and phonological processes in language production are atypically demanding of attentional resources in AWS. Fifteen TFA and 15 AWS completed a dual task in which they named pictures overlaid with printed distractor words while judging two tone types (frequent Standard and rare Target, pressing a button to rare Target tones). Tones were presented nearly simultaneously with picture-distractor pairs on some trials while, on other trials, they were presented after a sizable delay. In the naming task, distractor words were Semantically-related to the picture labels, Phonologically-related or Unrelated. The amplitude of the P3 component elicited to rare Target tones was measured in the context of Semantic, Phonological or Unrelated picture-word interference. Additionally, P3 amplitude was compared between groups in a simple tone oddball task. No differences in P3 amplitude were detected between groups in the simple task. In the dual task, however, P3 effects were attenuated in AWS in the context of picture-word interference. As the amplitude of the P3 can be used to index available processing resources (Luck, 1998), this suggests that resolving competition in word retrieval can atypically demand attentional resources in AWS.

Simple Task Results

No behavioral or P3 effects robustly differentiated AWS from TFA in the simple tone oddball task. The AWS did trend toward faster tone judgment RTs. Previous studies have reported that manual RTs were slower in AWS versus TFA, but other studies have found no such evidence; the current results add to this conflicting literature (see Bloodstein and Ratner, 2008). Faster tone judgment RTs for at least some of our AWS may have reflected a strategy of emphasizing speed of performance on the simple task, although this was not at the expense of accuracy of target tone detection.

Visual inspection of the simple task ERP data was suggestive of attenuated P3 amplitudes in AWS versus TFA. However, a statistically significant between-groups difference was not detected. A tendency toward reduced P3 amplitudes has also been observed in other AWS participant groups (e.g., Hampton and Weber-Fox, 2008).

The scalp distribution of temporal factor scores associated with P3 activity also appeared to differ slightly in AWS versus TFA. For AWS, P3 activity appeared more localized at right central electrodes versus at right posterior electrodes in TFA. However, this difference was not statistically significant. These results contrast with a previous report in which five of eight AWS had greater P3 amplitudes over left scalp sites (Morgan et al., 1997).

The current simple task P3 results suggest that, in the absence of dual-task demands, P3 morphology for our AWS at least grossly resembled that seen in the TFA.

Dual-Task Behavioral Results

For the dual task, we sought to replicate Ferreira and Pashler (2002) by demonstrating that both naming times and tone judgment times were slower in the Semantic Distractor condition. This effect was observed, suggesting that conditions in the current dual task at least approximated those in Ferreira and Pashler (2002).

For both groups, naming RTs were affected by Distractor Type. Naming RTs were slower in the Semantic Distractor condition than in Unrelated. In contrast, naming RTs were faster in the Phonological Distractor condition than in Unrelated. This pattern is consistent with Ferreira and Pashler (2002), who reported that TFA had slower naming RTs in the Semantic Distractor condition (the standard Semantic Interference effect) and faster naming RTs in the Phonological Distractor condition (the standard Phonological Facilitation effect).

For both groups in the current experiment, tone judgment RTs were also slower in the Semantic Distractor condition than in Unrelated, particularly at the Short Tone SOA. This finding, too, is consistent with Ferreira and Pashler (2002). The combination of prolonged naming RTs with Semantic Distraction and prolonged tone judgment RTs in the context of Semantic Distraction was interpreted by Ferreira and Pashler (2002) as suggesting that lexical-semantic processing in picture naming bottlenecks centrally with processing of the tones.

Several other RT effects were observed. Naming RTs were shorter at the Short versus Long Tone SOA. One interpretation is that participants adopted a strategy of delaying naming when tones were not immediately presented. Alternatively, participants may have strategically named pictures more quickly as they held the tone in working memory (i.e., at the Short Tone SOA). Additionally, naming RTs were shorter in the presence of Standard (Low) tones than Target (High) tones. This suggests that the additional processes of context-updating and/or preparing push-button responses uniquely required by Target tones prolonged the process(es) of resolving picture-word interference and/or programming verbal responses to the pictures.

In addition to RT effects, several accuracy effects were observed. For TFA, naming accuracy was poorer in the Phonological Distractor+Target Tone+Short Tone SOA condition than in Unrelated Distractor+Target Tone+Short SOA. A possible explanation for this result may be found in Roelofs (2008) who pointed out that, during speech production, auditory processing is suppressed. Additionally, in a dual-task context, Task 1 can interfere with Task 2 performance. In order to maintain Task 2 performance in the current task, TFA may have strategically shifted attention to Task 2 relatively early on each trial, particularly when Target tones were presented. As a result, more errors may have occurred in the Phonological Distractor condition as attention shifted prematurely away from naming. These results are not consistent with Ferreira and Pashler (2002), who reported that TFA had poorer naming accuracy in the Semantic Distractor condition in the context of all Tone SOAs and Distractor SOAs.

More similar to what Ferreira and Pashler (2002) observed, AWS in the current task did have poorer naming accuracy on Standard Tone+Long SOA trials when distractors were Semantically Related vs. Unrelated. One interpretation is that semantic interference made it more difficult for AWS to encode and/or maintain target words in short-term memory until standard tones were verified at the Long Tone SOA.

Both groups had less accurate tone judgments in the Semantic Distractor condition than in Unrelated. This finding is consistent with Ferreira and Pashler (2002), who suggested that more tone judgment errors were made in semantic interference due to heightened demands of response selection posed by increased competition in word retrieval.

Finally, tone type affected accuracy of tone judgments differently in the two groups. TFA had less accurate tone judgments for Target (High) tones than for Standard (Low) tones at the Long SOA. Although, as noted previously, the TFA may have shifted attention to Task 2 tone processing relatively early on each trial, perhaps their attention to tone processing was not maintained as robustly at the Long Tone SOA. In contrast, AWS had less accurate tone judgments for Target (High) tones than for Standard (Low) tones at the Short Tone SOA. Perhaps demands of resolving picture-word interference were less easily overcome for AWS at the Short Tone SOA when Target versus Standard tones were presented.

Dual-Task P3 Results: TFA

Another aim of the dual task used here was to determine whether a P3 effect could be detected at each Tone SOA in each Distractor condition. For the TFA, a P3 effect was detected at both Tone SOAs in all three Distractor conditions. Differences were observed in the scalp topographies of P3 effects. In general, different scalp topographies may suggest that different neural sources were involved in generating P3 effects in the different Tone SOA by Distractor conditions and/or that the same neural sources were involved in generating P3 effects but activated to different degrees in the different Tone SOA by Distractor conditions (see Alain et al., 1999).

TFA always exhibited a topographically-widespread positivity peaking at 348 ms after Target tone onset, consistent with P3 activation. This time course is consistent with P3 latencies reported in other dual-task literature (Luck, 1998; Dell’Acqua et al., 2005). In the Semantic Distractor condition at the Short Tone SOA, a topographically-widespread P3 effect was observed. In the Semantic Distractor condition at the Long SOA, the P3 effect had a more focal topography. This same pattern was observed in the Phonological Distractor condition at the Short versus Long Tone SOA.

In the Unrelated Distractor condition at the Short Tone SOA significant P3 effects were identified primarily at posterior electrode sites. Conversely, P3 topography was more widespread at the Long Tone SOA. One possibility is that TFA recruited different neural sources in tone perception and categorization in the context of Unrelated distractors versus in the other Distractor conditions.

It is significant that P3 effects were detected for TFA in all Distractor conditions at all Tone SOAs. As mentioned previously, Ferreira and Pashler (2002) hypothesized that at least some aspects of word retrieval bottleneck centrally with processing in a secondary tone monitoring task. They suggested that the bottleneck was located specifically at the level of response selection. This stage of cognitive processing involves “…determining an appropriate response from some input representation” (Ferreira and Pashler, 2002, p. 1197). In the case of picture naming, response selection is involved in the selection of specific words and their constituent phonemes determined by pictured objects; in oddball tone categorization, response selection is involved in the selection of a manual response determined by Target tones. The P3 component is generally thought to reflect processing in perception and categorization of task-relevant stimuli but not in response selection, although there is debate about whether P3 might also index response selection to some extent (see Dien et al., 2004). Based on the standard interpretation of P3, the presence here of a P3 effect in all Distractor conditions at both Tone SOAs suggests that at least some attentional resources were always available for tone perception and categorization in TFA, even simultaneously with semantic and phonological processes in word retrieval. On the other hand, topographic differences in P3 effects, outlined previously, suggest that processes in language production and processes in perceiving/categorizing auditory stimuli may bottleneck to some extent in TFA, in addition to a later (and possibly more severe) bottleneck at the level of response selection as proposed by Ferreira and Pashler (2002). Other ERP data indirectly support this conclusion. Dell’Acqua et al. (2010) found that Semantic PWI heightened sensory processing in TFA. 
Heightened sensory processing in PWI may, in turn, affect resource allocation to sensory processing in a secondary task, as observed here in topographically different P3 effects for TFA depending on Distractor Type and Tone SOA.

Dual-Task P3 Results: AWS

In contrast to the TFA, a robust P3 effect was not observed for AWS in some Distractor-by-Tone SOA conditions. Furthermore, when P3 effects were detected for AWS, they were sometimes attenuated in amplitude relative to TFA.

AWS demonstrated a relatively local P3 effect, detected only at the Cz, Pz and P4 electrodes, in the Semantic Distractor condition at the Short Tone SOA. Furthermore, even though P3 activation was detected at these electrodes for AWS, the amplitude of this effect was smaller than P3 amplitude at these same electrodes in TFA. One interpretation is that resolving Semantic competition was more resource-demanding for AWS than for TFA. Conversely, a topographically-widespread P3 was detected in the Semantic Distractor condition at the Long SOA. As the tone was presented at the longer latency, resolution of semantic competition may have allowed more attentional resources to be allocated toward processes in perceiving and categorizing the tones.

No P3 effect was detected statistically at any electrode for AWS in the Phonological Distractor condition at the Short Tone SOA. One interpretation is that, for AWS, resolving phonological competition was so resource-demanding as to severely draw cognitive resources away from tone categorization. A P3 effect was detected for AWS in the Phonological Distractor condition at the Long Tone SOA, but only at the P7, P3, Pz and P4 electrodes (see Table 3). This implies that, even at the Long Tone SOA, AWS still allocated significant attentional resources toward phonological processing, perhaps due to prolonged difficulty resolving phonological competition and/or maintaining target picture labels in phonological memory for overt naming. As noted in the Introduction, previous reports suggest that sub-vocalized phonological encoding can take longer in AWS versus TFA and that phonological working memory may be limited in AWS (also see Bajaj, 2007).

Finally, a P3 effect was not detected in AWS in the Unrelated Distractor condition at the Short Tone SOA. A widespread P3 effect was detected in the Unrelated Distractor condition at the Long Tone SOA; however, the amplitude of this effect was reduced for AWS versus TFA. These findings suggest that resolving Unrelated PWI, in addition to Semantic and Phonological PWI, was atypically resource-demanding in AWS.

The P3 data reported here suggest that processes in word retrieval can be atypically demanding of attentional resources in AWS. Specifically, processes in resolving lexical-semantic competition and, separately, processes in resolving phonological competition in word retrieval both drew disproportionate attentional resources away from P3-indexed processes in perceiving and categorizing tones in a (near) simultaneous auditory monitoring task in AWS. An important consideration is how this effect might originate at a neuro-mechanistic level. There is evidence that P3 is generated by multiple brain sources (Key et al., 2005). For example, auditory P3 has been associated with activity in a distributed network of generators in the frontal, temporal and parietal cortices (Kanovsky et al., 2003). Some other brain regions involved in oddball processing (Rektor et al., 2007) are active in language production too, including prefrontal cortex, cingulate gyrus and lateral temporal cortex (Indefrey and Levelt, 2004). Crucially, involvement of different P3 generators can fluctuate depending on task demands (Brazdil et al., 2001, 2003; Kanovsky et al., 2003; Rektor et al., 2007). For example, parietal and frontal lateral generators associated with auditory P3 were shown to slow when auditory monitoring was paired with motor responding compared to auditory monitoring without motor responding, suggesting those generators play a role in both oddball processing and motor preparation (Kanovsky et al., 2003). From this perspective, the current results might suggest that heightened language production demands can significantly alter the cortical/subcortical network involved in oddball processing in AWS. More research will be needed to localize specific bottlenecks.

As discussed next, the current findings also open up several other questions, including how resolving both lexical-semantic and phonological competition in the same production might (additively) impact attentional resources in AWS, how AWS process information across a broader variety of dual-task contexts (e.g., non-linguistic and linguistic), how modality affects dual-task processing in AWS, and the impact language production may have on processes other than perception in AWS. Implications for intervention are also briefly discussed.

Future Directions and Implications

The current results suggest that both lexical-semantic and phonological processes in picture naming can be atypically demanding on attentional resources in AWS relative to TFA. Although each aspect of processing was manipulated separately here, spontaneous word production involves both lexical-semantic and phonological processing. Unknown is whether resolving competition at both levels for a single utterance would result in additive demands on attention in AWS. This could be investigated by using a PWI manipulation that introduces both lexical-semantic and phonological competition simultaneously (for example, see Damian and Martin, 1998).

The current results also suggest that resolving Unrelated distraction in word retrieval can atypically demand attentional resources in AWS. This raises the question of whether word retrieval exerts unique attentional demands in AWS, or whether dual-task processing in general is problematic for AWS. As cited in the Introduction, there is evidence that speech production can suffer in AWS when attention demands of language production are high. Still other evidence suggests that AWS have limited attentional control in managing dual tasks combining speech and manual movements (e.g., Smits-Bandstra and De Nil, 2009). Instances of stuttering, themselves, also seem to draw attentional resources away from simultaneous task performance (e.g., Saltuklaroglu et al., 2009). One possibility is that AWS have limited attentional control at a central level that affects processing and performance in many different domains (e.g., linguistic and motor). One approach for testing this hypothesis could be to compare P3 amplitudes in AWS versus TFA in a non-speech/non-linguistic dual task (e.g., Luck, 1998).

Still another question raised by the current results is whether the auditory modality of our secondary task was uniquely challenging for AWS. Brain imaging studies suggest that stuttering may be associated with deficits in auditory-motor integration (Daliri and Max, 2015; Beal et al., 2010; Brown et al., 2005; Cai et al., 2012; Chang et al., 2011; Max et al., 2004). Daliri and Max (2015) reported that AWS had atypical auditory evoked potentials. Thus, the auditory modality of the secondary task used here may have posed particular problems for AWS. Investigating P3-indexed attentional control in other modalities (e.g., visual) may shed light on whether vulnerability of sensory processing to attentional demands of concurrent processes in speech production extends beyond the auditory domain. Khedr et al. (2000) investigated ERPs in AWS using both auditory and visual stimuli. In the visual modality, P1 amplitude was attenuated in AWS versus TFA, whereas P2, N2 and P3 morphology were similar between groups. A next step is to investigate whether the results obtained in the current study can be replicated in a visual-only dual task (e.g., similar to that in Roelofs, 2008).

Finally, it remains to be seen whether processes in language production can draw attentional resources directly away from processes in speech motor preparation and control in AWS, just as language production can directly interfere with perception and categorization of auditory stimuli in this speaker group. Speech as well as non-speech motor performance has been shown to reflect greater instability in AWS versus TFA, particularly as utterances increase in length and grammatical complexity (e.g., Kleinow and Smith, 2000; McLean et al., 1990; Zimmerman, 1980). Investigating speech motor readiness potentials (see Wohlert, 1993) in the context of simpler versus increased language production demands might shed light more directly on whether allocation of attentional resources to processes in prearticulatory speech motor readiness is delayed or diminished by increasing language processing demands in AWS.

From an intervention perspective, the current results raise the question of whether AWS might benefit from therapy aimed at improving attentional control in general, and in language production in particular. An attentional training program was recently shown to improve fluency in pre-teens who stutter (Nejati et al., 2013). Unknown is whether the training had the effect of stabilizing lexical retrieval, speech motor and/or other processes involved in producing speech. Also unknown is whether this type of intervention might benefit AWS similarly. An intervention that more specifically aims to stabilize attentional control of the action system underlying language production has been developed for people with aphasia (Crosson et al., 2005). AWS could potentially benefit from this type of intervention too.

Summary and Conclusions

Results of the present study suggest that AWS allocate disproportionate attentional resources to both lexical-semantic and phonological processes in language production. Additional research is necessary to better understand the combined effect of these processes on attentional resources in AWS, and the general capacity for AWS to process information in a range of dual tasks. More research is also necessary to clarify whether auditory processing is uniquely sensitive to dual-task processing in AWS. Also of critical importance will be investigations of whether attentional demands of language production atypically draw resources away from processes in speech motor planning, programming and/or execution in AWS.

Results of this study raise the possibility that attentional training may have a place in interventions for stuttering. Until this intervention focus is developed, it is important to consider the impact of existing interventions on language and cognitive processing in AWS. Two main approaches to the treatment of adulthood stuttering are Stuttering Management and Fluency Shaping. In Stuttering Management, the aim is to teach clients to stutter without unnecessary avoidance behaviors, tension or struggle. In Fluency Shaping, the aim is to teach clients to stutter less frequently (Prins and Ingham, 2009). These approaches are sometimes combined to form a comprehensive therapy approach for adulthood stuttering (Blomgren, 2010). In Stuttering Management, clients learn to eliminate avoidance behaviors commonly used to minimize or disguise the presence of stuttering. Often these include linguistic avoidance behaviors (e.g., word substitutions, circumlocutions, retrials) (Vanryckeghem et al., 2004). In principle, reducing linguistic avoidance behaviors should reduce any tendency to use the mental lexicon atypically. This, in turn, may have the effect of stabilizing word retrieval processes in AWS. Although stabilizing word retrieval is not an explicit aim of reducing linguistic avoidance behaviors, evidence from the current study and from other cited research supports the view that normalizing word retrieval behavior should continue to be a target of intervention for adulthood stuttering, in addition to the usual focus on establishing better speech motor control.

Figure 5.

Dual-task grand average waveforms at Fz, Cz and Pz for each Group to each Tone Type in the Semantic Distractor condition at the Long Tone SOA.

Figure 6.

Dual-task grand average waveforms at Fz, Cz and Pz for each Group to each Tone Type in the Phonological Distractor condition at the Short Tone SOA.

Figure 7.

Dual-task grand average waveforms at Fz, Cz and Pz for each Group to each Tone Type in the Phonological Distractor condition at the Long Tone SOA.

Figure 8.

Dual-task grand average waveforms at Fz, Cz and Pz for each Group to each Tone Type in the Unrelated Distractor condition at the Short Tone SOA.

HIGHLIGHTS

Processes in language production may demand atypical attentional control in adults who stutter (AWS).

EEG P3 findings suggest that language production can be atypically resource demanding in AWS.

Normalizing word retrieval behavior should continue to be a target of intervention for AWS.

Acknowledgments

This research was supported by grants from the National Institutes of Health awarded to the first author (R03DC011144) and Victor Ferreira (HD051030). We are grateful to Kalie Morris, Caitlin Kellar, Fanne L’Abbe and Alissa Belmont for their efforts in collecting and processing the data presented here. We thank Dr. Emanuel Donchin for comments on an early draft of this manuscript. Participation of adults who stutter is greatly appreciated.

Footnotes

CONFLICT OF INTEREST

None.


References

- Bajaj A. Working memory involvement in stuttering: exploring the evidence and research implications. J Fluency Disorder. 2007;32:218–238. doi: 10.1016/j.jfludis.2007.03.002.

- Beal DS, Cheyne DO, Gracco VL, Quraan MA, Taylor MJ, De Nil LF. Auditory evoked fields to vocalization during passive listening and active generation in adults who stutter. Neuroimage. 2010;52:2994–3003. doi: 10.1016/j.neuroimage.2010.04.277.

- Beilby JM, Byrnes ML, Meagher EL, Yaruss JS. The impact of stuttering on adults who stutter and their partners. J Fluency Disorder. 2013;38:14–29. doi: 10.1016/j.jfludis.2012.12.001.

- Bell AJ, Sejnowski TJ. An information-maximization approach to blind separation and blind deconvolution. Neural Comput. 1995;7:1129–1159. doi: 10.1162/neco.1995.7.6.1129.

- Blomgren M. Stuttering Treatment for Adults: An Update. Semin Speech Lang. 2010;31:272–282. doi: 10.1055/s-0030-1265760.

- Bloodstein O, Bernstein Ratner N. A Handbook on stuttering. 6th ed. New York: Thomson-Delmar; 2008.

- Bosshardt HG. Cognitive processing load as a determinant of stuttering: Summary of a research programme. Clin Linguist Phon. 2006;20:371–385. doi: 10.1080/02699200500074321.

- Bosshardt HG, Fransen H. Online sentence processing in adults who stutter and adults who do not stutter. J Speech Lang Hear Res. 1996;39:785–797. doi: 10.1044/jshr.3904.785.

- Bosshardt HG, Nandyal I. Reading rates of stutterers and nonstutterers during silent and oral reading. J Fluency Disorder. 1988;13:407–420.

- Bothe AK, Davidow JH, Bramlett RE, Ingham RJ. Stuttering treatment research 1970–2005: I. Systematic review incorporating trial quality assessment of behavioral, cognitive, and related approaches. Am J Speech Lang Pathol. 2006;15:321–341. doi: 10.1044/1058-0360(2006/031).