Abstract

Non-human primates use various communicative means in interactions with others. While primate gestures are commonly considered to be intentionally and flexibly used signals, facial expressions are often referred to as inflexible, automatic expressions of affective internal states. To explore whether and how non-human primates use facial expressions in specific communicative interactions, we studied five species of small apes (gibbons) by employing a newly established Facial Action Coding System for hylobatid species (GibbonFACS). We found that, despite individuals often being in close proximity to each other, in social (as opposed to non-social) contexts the duration of facial expressions was significantly longer when gibbons were facing another individual compared to non-facing situations. Social contexts included grooming, agonistic interactions and play, whereas non-social contexts included resting and self-grooming. Additionally, gibbons used facial expressions while facing another individual more often in social contexts than in non-social contexts, where facial expressions were produced regardless of the attentional state of the partner. Also, facial expressions were more likely to be ‘responded to’ by the partner’s facial expressions when facing another individual than when not facing. Taken together, our results indicate that gibbons use their facial expressions differentially depending on the social context and are able to use them in a directed way in communicative interactions with other conspecifics.

Introduction

In searching for the evolutionary roots of human communication, comparative researchers have dedicated much attention to the question of whether communication of non-human primates is also characterized by voluntary and intentional use of different signal types, which is a key feature of human communication. If so, non-human primates should use their signals purposefully, direct them to other group members and adjust them to the attentional state of the recipient. This would indicate that they have some voluntary control over the production of their signals. There are only a few studies investigating systematically whether and how non-human primates use facial expressions in social interactions. Waller et al. [1] found that orang-utans modify their facial expressions depending on the attentional state of the recipients. The authors offer a lower-level explanation for the differential use of facial expressions, since sensitivity to the attentional state of others could be the result of the salience of the face as a social stimulus. However, it is important to emphasize that currently there is only a small set of studies available that provide data on the use of facial expressions as a function of the recipient’s attentional state.

Most existing research on facial expressions has been devoted to investigating the role of facial expressions in coordinating social interaction, facilitating group cohesion and maintaining individual social relationships in non-human primates [2–6], including several species of monkeys and great apes (e.g., Pan troglodytes: [7–9]; Pongo pygmaeus: [10–13]; Macaca mulatta: [14–17]; Callithrix jacchus: [18–22]), but little is known about the various species of small apes (Hylobatidae) [23–25]. Gibbons are equipped with extensive facial muscles [26], which they use to perform a variety of facial movements [25,27]. Still, from the little that is currently known, their facial communication seems less complex than that of more terrestrial and/or socially more complex primate species [24,26].

The newly developed Facial Action Coding System for hylobatid species (GibbonFACS: [24]) offers the opportunity to examine facial expressions in much greater detail than previous methods and enables the objective and standardized comparison of facial movements across species [28,29]. The coding system can be used to identify the muscular movements underlying facial expressions and thus to define facial expressions as a combination of such facial muscle contractions (Action Units [AUs]) or more general head/eye movements (Action Descriptors [ADs]). In a previous study we used GibbonFACS and provided a detailed description of the repertoire, the rate of occurrence and the diversity of facial expressions in five different hylobatid species comprising three genera (Symphalangus, Hylobates and Nomascus) [25]. The focus of that investigation was to compare the different genera and to reveal potential correlations with socio-ecological factors (group size and monogamy level) on a species level. In the current study we focus on how hylobatids adjust their use of facial expressions to the behaviour of a recipient. Liebal et al. [23] observed four distinct facial expressions in siamangs (‘mouth-open half’, ‘mouth-open full’, ‘grin’, and ‘pull a face’), which were used across different social contexts. Most importantly, these four types of facial expressions were almost exclusively used when the recipient was visually attending. This is currently the only evidence indicating that hylobatids adjust the use of their facial expressions to the behaviour of their audience.

To confirm these previous findings and to systematically investigate the use of facial expressions in hylobatids in social contexts, we explored the influence of the attentional state of a potential receiver on the production of facial expressions. Additionally, in order to examine the influence of a given facial expression on the recipient’s behaviour, we investigated whether recipients respond themselves by using a facial expression. To do so, we analysed the distribution of consecutive facial expressions (two facial expressions between the pair partners within a defined period of time), but excluded identical facial expressions to rule out more basic, reflexive responses like facial mimicry (the repetition of the same facial expression of the sender by the receiver; [13,30,31]).

If facial expressions of hylobatids have a social function and individuals are capable of adjusting their use to the behaviour of the recipient and the context in which they are used, we predicted that 1) senders use facial expressions with longer duration when the recipient is facing the sender than when they are not and 2) senders use facial expressions more frequently in social than in non-social contexts when the recipient is visually attending. Both this longer duration and the more frequent use of facial expressions in social contexts would enhance signal transmission and indicate that they indeed have an intended communicative function instead of merely representing undirected behaviours. Furthermore we predicted that 3) consecutive facial expressions, which indicate that the recipient responds to the sender’s facial expression by producing another facial expression, are more common when individuals are facing each other than when they are not.

Materials and Methods

Ethics Statement

Animal husbandry and research comply with the “EAZA Minimum Standards for the Accommodation and Care of Animals in Zoos and Aquaria”, the “WAZA Ethical Guidelines for the Conduct of Research on Animals by Zoos and Aquariums” and the “Guidelines for the Treatment of Animals in Behavioral Research and Teaching” of the Association for the Study of Animal Behavior (ASAB). IRB approval was not necessary because no special permission for the use of animals in observational studies is required in Germany. Further information on this legislation can be found in paragraphs 7.1, 7.2 and 8.1 of the German Protection of Animals Act (“Deutsches Tierschutzgesetz”). Our study was approved by all participating zoos.

Subjects

Mated pairs of five different gibbon species were observed, comprising three pairs of siamangs (Symphalangus syndactylus), two pairs of pileated gibbons (Hylobates pileatus), one pair of white-handed gibbons (Hylobates lar), one pair of yellow-cheeked gibbons (Nomascus gabriellae) and one pair of southern white-cheeked gibbons (Nomascus siki), resulting in a total of 16 individuals. All pairs except one were housed together with their one to three offspring (for details of the individuals and group composition see S1 Table).

Data collection and coding

Data collection took place between March 2009 and July 2012 in different zoos in the UK (Twycross), France (Mulhouse), Switzerland (Zurich) and Germany (Rheine). The behaviour of each pair was video-recorded in 15 min bouts using the focal animal sampling method [32] (with both animals always in view). We focused on situations in which the pair was within reaching distance and so had the opportunity to interact, and we coded only facial expressions that occurred while the partners were within that distance of each other. Recordings were evenly distributed across different times of the day and conducted on several consecutive days. This resulted in a total of 21 hours of observation, with a mean observation time of 158 minutes per pair (SD = 34 min). We later coded the video footage with the software Interact (Mangold International GmbH, Version 9.6) and identified facial expressions using GibbonFACS [24]. A facial expression was defined, using GibbonFACS, as a single facial movement or a combination of more than one facial movement (so-called Action Units (AU) or Action Descriptors (AD)), regardless of whether it was used in interactions with others or not. The facial expression was coded at the apex of the expression. In total, 1080 facial expression events were identified. For each facial expression, we also measured its duration (from onset through apex to offset) and coded whether individuals were facing each other while producing the facial expression (i.e., whether the faces of both individuals were clearly directed at each other). All other instances were coded as non-facing. For each facial expression, we also coded the context in which it was used. We differentiated between social contexts (Agonism, Play, Grooming) and non-social contexts (Selfgrooming, Resting) and coded “Unclear” when we were not able to assign any of those contexts.

We conducted a reliability analysis on 10% of the data, which was calculated using Wexler’s Agreement as for the human FACS and all other non-human animal FACS systems [33]. Agreement was 0.83, which in FACS methodology is considered very good agreement [33]. Agreement for the measurements of context (social, non-social, unclear) and facing (facing vs non-facing) was tested using Cohen’s Kappa [34] and ranged from substantial to almost perfect (context: K = 0.74, P < 0.001; facing: K = 0.85, P < 0.001).
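The two agreement measures named above can be illustrated with a short sketch. It assumes the usual FACS form of Wexler's ratio (twice the number of AUs scored by both coders, divided by the total number of AUs scored by the two coders) and the standard definition of Cohen's Kappa. The function names and the example codings are hypothetical and are not taken from the study data.

```python
from collections import Counter


def wexler_agreement(coder1_aus, coder2_aus):
    """Agreement for one event: 2 * (AUs scored by both) / (AUs scored by coder 1 + coder 2)."""
    a, b = set(coder1_aus), set(coder2_aus)
    total = len(a) + len(b)
    if total == 0:
        return 1.0  # assumption: two empty codings count as full agreement
    return 2 * len(a & b) / total


def cohens_kappa(labels1, labels2):
    """Cohen's Kappa for two equally long sequences of categorical labels."""
    if len(labels1) != len(labels2) or len(labels1) == 0:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(labels1)
    observed = sum(x == y for x, y in zip(labels1, labels2)) / n
    counts1, counts2 = Counter(labels1), Counter(labels2)
    # Chance agreement: product of the two coders' marginal proportions per category
    expected = sum(counts1[k] * counts2[k] for k in counts1) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical example: the coders share two of the five AUs scored in total
example_wexler = wexler_agreement(["AU10", "AU25", "AU27"], ["AU25", "AU27"])
# Hypothetical facing (F) / non-facing (NF) codings of four events
example_kappa = cohens_kappa(["F", "F", "NF", "NF"], ["F", "F", "NF", "F"])
```

In this toy example the Wexler ratio is 2 × 2 / 5 and Kappa is (0.75 − 0.5) / (1 − 0.5). In practice the Wexler ratio would be averaged over all double-coded events.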

Statistical Analyses: Duration of facial expressions

To test whether the duration of facial expressions (response variable; log-transformed) was influenced by whether individuals of a mated pair were facing each other or not (hereafter 'facing') and/or by whether they used facial expressions in a social compared to a non-social context (hereafter 'context'), we used a Linear Mixed Effects Model (LMM; [35]) into which we included these two predictors as well as their interaction as fixed effects, and individual and number of video clip as random effects. To keep type I error rates at the nominal level of 5%, we included random slopes of facing and context (manually dummy-coded) and their interaction within individuals and video clip, but not the correlation parameters between random slopes and random intercepts [36,37]. We used R [38] and lme4 [39] to perform the LMM.
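The model structure described above can be written out as an equation. This is a sketch in illustrative notation, not the notation of the original analysis: it assumes dummy codes N = 1 for non-facing and S = 1 for social context, with d the duration of event k produced by individual i in video clip j.

```latex
\begin{aligned}
\log(d_{ijk}) ={}& \beta_0 + \beta_1 N_{ijk} + \beta_2 S_{ijk} + \beta_3 N_{ijk} S_{ijk} \\
 &+ a_{0i} + a_{1i} N_{ijk} + a_{2i} S_{ijk} + a_{3i} N_{ijk} S_{ijk} \\
 &+ b_{0j} + b_{1j} N_{ijk} + b_{2j} S_{ijk} + b_{3j} N_{ijk} S_{ijk} \\
 &+ \varepsilon_{ijk}
\end{aligned}
```

Here the β terms are the fixed effects, the a terms are the random intercept and random slopes for individual, the b terms are the corresponding terms for video clip, and ε is the residual. All random terms are assumed to be normally distributed with mean zero and, in line with the omission of the correlation parameters, mutually uncorrelated.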

We checked for model stability by excluding levels of the random effects one at a time from the data and comparing the estimates derived with those obtained from the entire data set, which indicated that no influential cases existed. Variance Inflation Factors (VIF; [40]) were derived using the function vif of the R-package car [41] applied to a standard linear model with the random effects and the interaction excluded, and indicated that collinearity was not an issue (maximum VIF: 1.14). We checked whether the assumptions of normally distributed and homogeneous residuals were fulfilled by visual inspection of a qq-plot of the residuals and of residuals plotted against fitted values, which indicated no violation of these assumptions.
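For a model with exactly two predictors and no interaction, the Variance Inflation Factor of each predictor reduces to 1 / (1 − r²), where r is the Pearson correlation between the two predictors. The sketch below illustrates this special case in plain Python. It is not the vif function of the car package, and the dummy-coded example vectors are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equally long numeric sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def vif_two_predictors(x, y):
    """VIF shared by both predictors in a two-predictor linear model."""
    r = pearson_r(x, y)
    return 1.0 / (1.0 - r * r)


# Hypothetical dummy codes for eight events (1 = facing, 1 = social context)
facing = [1, 1, 0, 0, 1, 0, 0, 0]
social = [1, 1, 1, 0, 0, 0, 0, 1]
example_vif = vif_two_predictors(facing, social)
```

With these toy vectors r² is 1/15, so the VIF is 15/14 (about 1.07). Read the other way round, a VIF of 1.14 corresponds to an r² of about 0.12 between two predictors.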

An overall test of the significance of the fixed effects as a whole [36] was obtained using a likelihood ratio test (R function anova with argument test set to “Chisq”) testing the full model against the null model (comprising only the random effects). P-values for the individual effects were based on likelihood ratio tests comparing the full with the respective reduced models (R function drop1; [37]).
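The arithmetic behind such a likelihood ratio test can be sketched as follows. The statistic is twice the difference in log-likelihood between the full and the reduced model, and it is referred to a chi-square distribution whose degrees of freedom equal the number of dropped parameters. This sketch uses only the Python standard library and therefore implements closed forms for 1 and 3 degrees of freedom only. It is not the R anova or drop1 implementation, and the log-likelihood values in the example are hypothetical.

```python
import math


def lrt_statistic(loglik_full, loglik_reduced):
    """Likelihood ratio statistic: 2 * (logLik of full model - logLik of reduced model)."""
    return 2.0 * (loglik_full - loglik_reduced)


def chi2_upper_tail(x, df):
    """Upper-tail probability of a chi-square statistic (closed forms for df = 1 and df = 3)."""
    if x < 0:
        raise ValueError("chi-square statistic must be non-negative")
    half = x / 2.0
    tail = math.erfc(math.sqrt(half))
    if df == 1:
        return tail
    if df == 3:
        return tail + math.sqrt(2.0 * x / math.pi) * math.exp(-half)
    raise ValueError("only df = 1 and df = 3 are implemented in this sketch")


# Hypothetical log-likelihoods that give a statistic of 5.32 on 1 degree of freedom
example_stat = lrt_statistic(-100.0, -102.66)
example_p = chi2_upper_tail(example_stat, 1)
```

A statistic of 5.32 on 1 degree of freedom gives a P-value of about 0.02, and a statistic of 19.96 on 3 degrees of freedom gives a P-value below 0.001.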

Results

Types of facial expressions

In total, we found 45 different types of facial expressions (see S2 Table). Some types were single AUs or ADs; however, the majority were combinations of several AUs and/or ADs. Most types of facial expressions were used in both facing and non-facing situations (24 types), followed by types that were used only in non-facing situations (17 types); only 4 types were used exclusively in facing situations (see Table 1).

Table 1. Type of facial expressions used exclusively when facing another individual, those used exclusively when not facing another individual, and those that occurred in both facing and non-facing situations.

For more details on the morphology regarding previous descriptions of these facial expressions, see S2 Table. All facial expressions including AU10, AU16, AU25, AU26, AU27 are forms of ‘open-mouth’ displays [23]. Frequencies and other details are reported in S2 and S3 Tables.

| Use of facial expressions (number of types of facial expressions) | Facial expressions (single AUs/ADs or in combination with other AUs/ADs) |
|---|---|
| Only Facing (4) | {AU9+AU10+AU16+AU25+AU27} {AU10+AU12+AU25+AU27} {AU16+AU25+AU26+AUEye*} {AU1+2+AU10+AU16+AU25+AU27} |
| Only Non-Facing (17) | {AU1+2} {AU8} {AU12} {AU17} {AD500} {AU1+2+AU18} {AU10+AU25} {AU16+AU25} {AU41+AUEye*} {AU7+AU25+AU26} {AU1+2+AU5+AU25+AU26} {AU8+AU25+AU26+AD19} {AU9+AU10+AU25+AU27} {AU12+AU25+AU26+AD37} {AU25+AU26+AUEye+AD37} {AU25+AU26+AD37+AD500} {AU10+AU12+AU16+AU25+AU27+AUEye*} |
| Facing and Non-Facing (24) | {AU18} {AU25} {AU41} {AUEye*} {AU25+AU26} {AU25+AU27} {AU8+AU25+AU26} {AU10+AU25+AU26} {AU10+AU25+AU27} {AU12+AU25+AU26} {AU12+AU25+AU27} {AU16+AU25+AU26} {AU16+AU25+AU27} {AU18+AU25+AU26} {AU25+AU26+AD19} {AU25+AU27+AD19} {AU25+AU26+AD37} {AU8+AU25+AU26+AD37} {AU10+AU16+AU25+AU26} {AU10+AU16+AU25+AU27} {AU12+AU16+AU25+AU26} {AU12+AU16+AU25+AU27} {AU10+AU12+AU16+AU25+AU26} {AU10+AU12+AU16+AU25+AU27} |

(*AUEye includes either AU43 (eye closure) or AU45 (eye blink), we did not differentiate between the two AUs here).
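The grouping used in Table 1 can be reproduced from event-level data with a few lines of code. The sketch below assumes that each event is available as a combination of AU/AD labels plus a facing flag, and that the order of labels within a combination does not matter. The function name and the example events are hypothetical.

```python
def classify_types(events):
    """Group expression types by whether they occurred only facing, only non-facing, or both.

    events: iterable of (labels, facing) pairs, where labels is an iterable of
    AU/AD strings and facing is True when the pair partners faced each other.
    """
    states_by_type = {}
    for labels, facing in events:
        # A frozenset makes {AU25+AU26} and {AU26+AU25} the same expression type
        states_by_type.setdefault(frozenset(labels), set()).add(bool(facing))

    groups = {"only_facing": set(), "only_non_facing": set(), "both": set()}
    for expression_type, states in states_by_type.items():
        if states == {True}:
            groups["only_facing"].add(expression_type)
        elif states == {False}:
            groups["only_non_facing"].add(expression_type)
        else:
            groups["both"].add(expression_type)
    return groups


# Hypothetical events, not taken from the data set
example_events = [
    (["AU25", "AU26"], True),
    (["AU26", "AU25"], False),
    (["AU18"], False),
    (["AU9", "AU10"], True),
]
example_groups = classify_types(example_events)
```

Counting the members of each group gives the numbers shown in parentheses in the first column of the table.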

Duration of facial expressions when facing another individual compared to non-facing in social versus non-social context

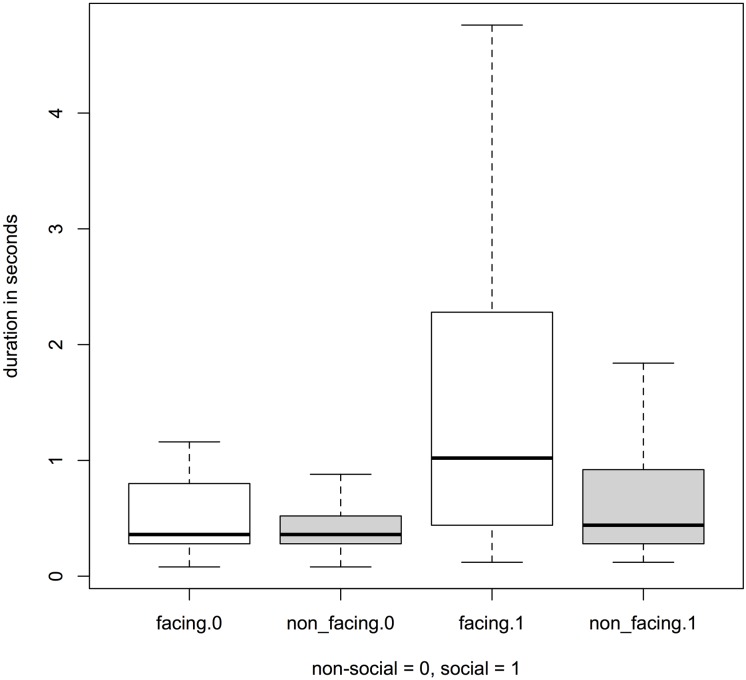

Using a Linear Mixed Effects Model (see Materials and Methods for details), we tested the influence of different factors on the duration of facial expressions and found that the full model was significantly different from the null model (likelihood ratio test: χ² = 19.96, df = 3, P < 0.001; see also Table 2), indicating that the three fixed effects (facing, context and their interaction) collectively have an impact on the response. A test of the significance of the interaction was obtained by applying the function drop1 [37] to the full model. It revealed a significant interaction between facing and context (likelihood ratio test of the interaction: χ² = 5.32, df = 1, P = 0.02; Fig 1). Thus, facial expressions produced while facing another individual had a longer duration compared to non-facing events, but only in social and not in non-social contexts (see Estimates and Std. Errors in Table 2; Full Model: Duration).

Table 2. Estimates and Std. Errors for the coefficients of the two full models.

| Model | Estimates | Std. Error | Lower CL | Upper CL |
|---|---|---|---|---|
| Full Model: Duration | | | | |
| (Intercept) | 6.320 | 0.145 | 6.035 | 6.607 |
| FacingNon-facing | -0.167 | 0.143 | -0.447 | 0.114 |
| ContextSocial | 0.398 | 0.170 | 0.047 | 0.735 |
| FacingNon-facing:ContextSocial | -0.398 | 0.172 | -0.736 | -0.06 |
| Full Model: Facing | | | | |
| (Intercept) | 2.417 | 0.177 | 2.083 | 2.833 |
| ContextSocial | -1.705 | 0.28 | -2.24 | -1.115 |
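Because the duration model was fitted to log-transformed durations, differences between coefficients translate into ratios of typical durations. The sketch below works this out from the duration coefficients in Table 2, using fixed effects only and ignoring the random effects. It assumes that the interaction estimate is negative, as its confidence limits (-0.736 to -0.06) indicate, and that facing and non-social context are the reference levels. The function names are hypothetical.

```python
import math

# Fixed-effect estimates of the duration model (log scale)
B_INTERCEPT = 6.320
B_NON_FACING = -0.167
B_SOCIAL = 0.398
B_INTERACTION = -0.398  # assumption: negative, consistent with its confidence limits


def predicted_log_duration(non_facing, social):
    """Fixed-effects prediction on the log scale; both arguments are 0/1 dummy codes."""
    return (B_INTERCEPT
            + B_NON_FACING * non_facing
            + B_SOCIAL * social
            + B_INTERACTION * non_facing * social)


def facing_to_non_facing_ratio(social):
    """Ratio of typical duration when facing to typical duration when not facing."""
    difference = predicted_log_duration(0, social) - predicted_log_duration(1, social)
    return math.exp(difference)


ratio_social = facing_to_non_facing_ratio(1)
ratio_non_social = facing_to_non_facing_ratio(0)
```

Under these assumptions, expressions produced while facing are typically about 1.76 times as long as non-facing ones in social contexts, compared with about 1.18 times in non-social contexts. The confidence interval of the non-facing coefficient includes zero, so the latter ratio is not distinguishable from 1.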

Fig 1. Duration (in sec) of facial expressions when facing another individual compared to not facing in social and non-social contexts.

Facial expressions while facing another individual were significantly longer compared to non-facing events, but only in social (right) and not in non-social (left) situations (outliers were excluded for better visualization).

Frequency of facing events versus non-facing events in social compared to non-social contexts

To test whether facial expressions while facing another individual (response variable, binary) were used more frequently in social compared to non-social contexts, we used a Generalized Linear Mixed Model with binomial error structure and logit link function [42] into which we included context (predictor) as a fixed effect, and individual and video clip as random effects. We also included the random slopes of context (manually dummy-coded) within individual and video clip in the model. Apart from that we used the same software and procedures as described above.

Overall, the full model was significantly different from the null model (likelihood ratio test: χ² = 13.84, df = 1, P < 0.001), indicating that the fixed effect had an impact on the response variable. Results showed that the occurrence of facial expressions while facing another individual was more common in the social context compared to the non-social context (see Estimates and Std. Errors in Table 2; Full Model: Facing). Importantly, this difference did not result from a longer duration of facing events compared to non-facing events in social contexts. Even in social contexts, gibbons did not face each other 74.6% of the time.

Pattern of consecutive facial expressions

In order to test whether the behaviour of the recipient is influenced by the use of a facial expression of the other individual, we compared consecutive facial expressions in pairs when individuals were facing to when they were not facing each other. In order to rule out facial mimicry [13,30,31], we focused on consecutive, but different types of facial expressions. To investigate whether two consecutive facial expressions (response, binary) were more common in situations in which individuals faced each other compared to non-facing situations, we used a Generalized Linear Mixed Model with binomial error structure and logit link function into which we included facing (predictor) as a fixed effect, and individual as random effect. We also included the random slope of facing (manually dummy coded) within individual into the model. Apart from that we used the same software and procedures as described above. Testing for different cut-offs (time intervals between the consecutive facial expressions), we found that the full model was significantly different from the null model (results for different cut-offs see Table 3). Results show that consecutive facial expression events were more common while individuals were facing each other compared to non-facing events (likelihood ratio tests for different cut-offs see Table 3). Results of the analyses including the mimic events showed the same pattern (likelihood ratio tests for different cut-offs see S4 Table).
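The selection of consecutive facial expressions can be sketched as a simple pass over time-ordered events. The sketch below is one possible reading of the procedure, not the coding scheme actually used. It assumes that the interval is measured from onset to onset, that only directly adjacent events within one video clip are compared, and that two expressions count as identical when they consist of the same set of AUs/ADs. The Event fields and function name are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


@dataclass(frozen=True)
class Event:
    onset: float             # seconds from the start of the clip
    individual: str          # identifier of the producing pair partner
    labels: FrozenSet[str]   # AU/AD combination
    facing: bool             # True if the partners faced each other


def consecutive_pairs(events: List[Event], cutoff: float,
                      exclude_identical: bool = True) -> List[Tuple[Event, Event]]:
    """Return (first, response) pairs produced by different partners within cutoff seconds."""
    ordered = sorted(events, key=lambda e: e.onset)
    pairs = []
    for first, second in zip(ordered, ordered[1:]):
        if second.individual == first.individual:
            continue  # a response must come from the other pair partner
        if second.onset - first.onset > cutoff:
            continue  # too late to count as consecutive
        if exclude_identical and second.labels == first.labels:
            continue  # same expression repeated: treated as possible mimicry
        pairs.append((first, second))
    return pairs


# Hypothetical clip with two partners, A and B
example_events = [
    Event(0.0, "A", frozenset({"AU25", "AU26"}), True),
    Event(1.5, "B", frozenset({"AU10", "AU25", "AU27"}), True),
    Event(10.0, "A", frozenset({"AU18"}), False),
    Event(11.0, "B", frozenset({"AU18"}), False),
    Event(20.0, "A", frozenset({"AU25"}), False),
    Event(24.5, "B", frozenset({"AU41"}), False),
]
pairs_2s = consecutive_pairs(example_events, cutoff=2.0)
pairs_5s = consecutive_pairs(example_events, cutoff=5.0)
pairs_2s_with_mimicry = consecutive_pairs(example_events, cutoff=2.0, exclude_identical=False)
```

Running the function with several cut-offs, and with exclude_identical switched off, mirrors the comparison across time intervals and the additional analysis that includes mimic events.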

Table 3. Results of likelihood ratio tests of GLMM.

Cut-off refers to the time interval between the consecutive facial expressions.

| Cut-off | Estimate | Std. Error | Lower CL | Upper CL | χ² (df) | P-value |
|---|---|---|---|---|---|---|
| 2 seconds | | | | | 7.348 (1) | 6.7 × 10⁻³ |
| (Intercept) | -3.288 | 0.34 | -4.047 | -2.683 | | |
| facingNonfacing | -1.854 | 0.825 | -4.228 | -0.475 | | |
| 3 seconds | | | | | 6.599 (1) | 10.2 × 10⁻³ |
| (Intercept) | -2.987 | 0.296 | -3.884 | -2.453 | | |
| facingNonfacing | -1.444 | 0.61 | -3.082 | -0.345 | | |
| 4 seconds | | | | | 9.101 (1) | 2.6 × 10⁻³ |
| (Intercept) | -2.752 | 0.266 | -3.718 | -2.266 | | |
| facingNonfacing | -1.701 | 0.611 | -3.32 | -0.578 | | |
| 5 seconds | | | | | 8.421 (1) | 3.7 × 10⁻³ |
| (Intercept) | -2.618 | 0.251 | -3.452 | -2.157 | | |
| facingNonfacing | -1.512 | 0.565 | -3.047 | -0.49 | | |
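The estimates in Table 3 are on the logit scale, which makes them hard to read directly. The sketch below converts them into probabilities and odds ratios. It uses the fixed effects only, ignores the random effects, and assumes that facing is the reference level of the predictor, as the coefficient name facingNonfacing suggests. The function names are hypothetical.

```python
import math

# (intercept, facingNonfacing) per cut-off in seconds, taken from Table 3
TABLE_3 = {
    2: (-3.288, -1.854),
    3: (-2.987, -1.444),
    4: (-2.752, -1.701),
    5: (-2.618, -1.512),
}


def inv_logit(x):
    """Convert a value on the logit scale into a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def summarize(intercept, slope):
    """Return (P(consecutive | facing), P(consecutive | non-facing), odds ratio)."""
    p_facing = inv_logit(intercept)
    p_non_facing = inv_logit(intercept + slope)
    odds_ratio = math.exp(slope)  # odds for non-facing relative to facing
    return p_facing, p_non_facing, odds_ratio


summary = {cutoff: summarize(*coefs) for cutoff, coefs in TABLE_3.items()}
```

For the 2-second cut-off, for example, the estimated probability of a consecutive facial expression is about 0.036 when facing, and the odds for non-facing events are about 0.16 times those for facing events. All four odds ratios lie below 1.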

Discussion

In this study, we investigated whether different gibbon species adjust their use of facial expressions in interactions with others to the recipient’s behaviour and thus use them differently when others are visually attending versus not attending. Gibbons directed at least some of their facial expressions to specific individuals when they were visually attending, and facial expressions that were used when both individuals were facing each other lasted significantly longer than those not directed at others. A similar pattern has been reported for orang-utans (Pongo pygmaeus) in the context of social play [1]. Interestingly, our study shows that this pattern is present only in social but not in non-social contexts (social contexts included grooming, agonistic behaviour and play; non-social contexts included self-grooming and resting). It is important to note that in both social and non-social contexts, individuals were in close proximity to one another, but only in social contexts was facing another individual associated with longer durations of facial expressions. Therefore, we can exclude the possibility that simply ‘looking at someone’s face’ causes longer durations of facial expressions.

From the total of 45 facial expressions, only four occurred exclusively when facing another individual. These four types are morphologically very similar and mostly represent variations of the ‘mouth open’ facial expressions and thus could also be interpreted as the same expression performed with varying degrees of intensity. However, although these facial expressions were not context-specific as they occurred in more than one context, future studies are needed to investigate whether these variants of one facial expression serve different functions [43].

Furthermore, facial expressions when facing another individual occurred more frequently in social compared to non-social contexts. This shows that individuals direct facial expressions to the other individual more often when interacting with each other and that they influence the recipient’s behaviour in such a way that the recipient responds by using another facial expression (other communicative behaviours were not considered in this study). Based on the current data, we cannot rule out the potential explanation that activities in social contexts differ from those in non-social contexts and, as a consequence, facilitate more frequent facial expressions and longer durations.

In order to examine whether directed facial expressions elicited a response of the recipient, we investigated whether facial expressions of one individual were immediately followed by another facial expression from their pair partner. We found that these consecutive facial expressions were significantly more common if the individual producing a facial expression was facing the recipient compared to events when the interacting individuals were not facing each other. This shows that recipients were influenced by the facial expressions of the other individual as indicated by their facial response.

Since we focused only on those consecutive facial expressions that differed in type from the first facial expression produced, it is at least less likely that the response of the other individual was merely driven by facial mimicry, i.e. the mechanism that induces the same facial expression in others [e.g. 30]. The exclusion of facial mimicry instances can be considered very conservative, as these instances could potentially also include purposeful communication instead of merely reflexive reactions to the sender’s initial facial expression. For example, it has been shown (among non-human primates, at least in chimpanzees) that individuals can be selective as to which facial expression is mimicked or not [44]. This seems to support the conclusion that higher-level cognitive processes are involved. However, to fully understand how hylobatids use consecutive facial expressions and how this might interact with other, more reflexive behaviours (such as mimicry of other expressions), future research needs to be conducted.

To determine in more detail whether facial expressions are under purely voluntary control compared to reflexive production, experimental studies are necessary in order to maximally rule out other low-level explanations [e.g. 9]. Since we only considered facial expressions, but not gestures, body postures or vocalizations as possible reactions to an initial facial expression, we could have underestimated the reactions elicited by facial expressions. Therefore, future studies should apply a multimodal approach to narrow down the mechanisms underlying communicative expressive signals in non-human primates' social interactions.

Together our findings suggest that small apes have at least some control over the production of their facial expressions because they use them differentially depending on the recipient’s state of attention. This suggests that some gibbon facial expressions do indeed represent intentionally used signals. However, the adjustment to the recipient’s behaviour is just one of several criteria to identify instances of intentional communication [45]. Future research needs to consider additional markers of intentionality to fully conclude intentional use of facial expressions.

Supporting Information

(DOCX)

Different types of facial expressions observed, number of occurrence, contexts and references to similar descriptions in the literature.

(DOCX)

Facial expression events for each individual (ID) categorized by context and facing (F) and non-facing (NF) in the whole data set. Explicit descriptions, which instances are excluded from the analyses, are marked. Grey marked categories were excluded from the analyses. Rationales for exclusion are provided in description below table.

(DOCX)

This table includes all data points (facial expression events) which occurred given our criteria (see Methods). It contains information about the signalling individual (species, gender, individual of which pair) and information about the signal (AU combination, duration, context and whether individuals of the pair were facing each other or not).

(CSV)

Acknowledgments

This project was funded by Deutsche Forschungsgemeinschaft (DFG) within the Excellence initiative Languages of Emotion “Comparing emotional expression across species—GibbonFACS.” We thank Robert Zingg and Zoo Zurich (Switzerland), Jennifer Spalton and Twycross Zoo (UK), Neil Spooner and Howletts Wild Animal Park (UK), and Corinne Di Trani, Mulhouse Zoo (France) and Johann Achim and NaturZoo Rheine (Germany) for allowing us to collect footage of their animals. We also thank Manuela Lembeck, Wiebke Hoffman and Paul Kuchenbuch for support in collecting the video footage. We thank Manuela Lembeck and Laura Asperud Thomsen for reliability coding. Thanks to Roger Mundry and Bodo Winter who helped with the statistical analyses. LO was financially supported by the European Research Council (Starting Independent Researcher Grant #309555 to G.S. van Doorn).

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

This project was supported by Deutsche Forschungsgemeinschaft (DFG)/Excellence Cluster "Languages of Emotion" (EXC 302).

References

- 1. Waller BM, Caeiro CC, Davila-Ross M. Orangutans modify facial displays depending on recipient attention. PeerJ. 2015;3: e827. doi:10.7717/peerj.827

- 2. Darwin C. The Expression of the Emotions in Man and Animals. New York: Oxford University Press; 1872.

- 3. Parr LA, Preuschoft S, de Waal F. Afterword: research on facial emotion in chimpanzees, 75 years since Kohts. In: de Waal F, editor. Infant Chimpanzee and Human Child. New York, US: Oxford University Press; 2002. pp. 411–52.

- 4. Waller BM, Micheletta J. Facial Expression in Nonhuman Animals. Emot Rev. 2013;5: 54–59. doi:10.1177/1754073912451503

- 5. Chevalier-Skolnikoff S. Facial Expression of Emotion in Nonhuman Primates. In: Ekman P, editor. Darwin and Facial Expression: A Century of Research in Review. Vol. 16 Cambridge: Marlor Books; 1940.

- 6. Dobson SD. Socioecological correlates of facial mobility in nonhuman anthropoids. Am J Phys Anthropol. 2009;139: 413–420. doi:10.1002/ajpa.21007

- 7. Parr L, Cohen M, De Waal F. Influence of Social Context on the Use of Blended and Graded Facial Displays in Chimpanzees. Int J Primatol. 2005;26: 73–103. doi:10.1007/s10764-005-0724-z

- 8. Parr LA, Waller BM. Understanding chimpanzee facial expression: insights into the evolution of communication. Soc Cogn Affect Neurosci. 2006;1: 221–8. doi:10.1093/scan/nsl031

- 9. Hopkins WD, Taglialatela JP, Leavens D. Do chimpanzees have voluntary control of their facial expressions and vocalizations? 2011; Available: http://sro.sussex.ac.uk/13565/

- 10. Mackinnon J. The behaviour and ecology of wild orang-utans (Pongo pygmaeus). Anim Behav. 1974;22: 3–74. doi:10.1016/S0003-3472(74)80054-0

- 11. Maple T. Orang Utan Behaviour. New York: Van Nostrand Reinhold Company; 1980.

- 12. Steiner JE, Glaser D, Hawilo ME, Berridge KC. Comparative expression of hedonic impact: Affective reactions to taste by human infants and other primates. Neurosci Biobehav Rev. 2001;25: 53–74. doi:10.1016/S0149-7634(00)00051-8

- 13. Davila Ross M, Menzler S, Zimmermann E. Rapid facial mimicry in orangutan play. Biol Lett. 2008;4: 27–30. doi:10.1098/rsbl.2007.0535

- 14. Hinde R, Rowell T. Communication by postures and facial expressions in the rhesus monkey (Macaca mulatta). Proc Zool Soc London. 1962;138: 1–21.

- 15. Partan S. Single and multichannel signal composition: facial expressions and vocalizations of rhesus macaques (Macaca mulatta). Behaviour. 2002;139: 993–1027.

- 16. Ghazanfar AA, Takahashi DY, Mathur N, Fitch WT. Cineradiography of monkey lip-smacking reveals putative precursors of speech dynamics. Curr Biol. 2012;22: 1176–82. doi:10.1016/j.cub.2012.04.055

- 17. Gulledge JP, Fernández-Carriba S, Rumbaugh DM, Washburn DA. Judgments of Monkey’s (Macaca mulatta) Facial Expressions by Humans: Does Housing Condition “Affect” Countenance? Psychol Rec. 2015;65: 203–207. doi:10.1007/s40732-014-0069-0

- 18. Epple G. Soziale Kommunikation bei Callithrix jacchus Erxleben 1777. In: Starck D, Schneider R, Kuhn H-J, editors. Progress in primatology: first congress of the international primatology society. Stuttgart: Gustav Fischer; 1967. pp. 247–254.

- 19. Hook-Costigan M, Rogers L. Lateralized use of the mouth in production of vocalizations by marmosets. Neuropsychologia. 1998;36: 1265–1273. doi:10.1016/S0028-3932(98)00037-2

- 20. Stevenson M, Poole T. An ethogram of the common marmoset (Callithrix jacchus jacchus): general behavioural repertoire. Anim Behav. 1976;24: 428–51.

- 21. van Hooff J. The facial displays of Catarrhine monkeys and apes. In: Morris D, editor. Primate ethology. Chicago: Aldine de Gruyter; 1967. pp. 7–68.

- 22.Kemp C, Kaplan G. Facial expressions in common marmosets (Callithrix jacchus) and their use by conspecifics. Anim Cogn. 2013; 10.1007/s10071-013-0611-5 [DOI] [PubMed] [Google Scholar]

- 23. Liebal K, Pika S, Tomasello M. Social communication in siamangs (Symphalangus syndactylus): use of gestures and facial expressions. Primates. 2004;45: 41–57. 10.1007/s10329-003-0063-7

- 24. Waller BM. GibbonFACS: A Muscle-based Facial Movement Coding System for Hylobatids. Int J Primatol. 2012;4: 809–821.

- 25. Scheider L, Liebal K, Oña L, Burrows A, Waller B. A comparison of facial expression properties in five hylobatid species. Am J Primatol. 2014;76: 618–628. 10.1002/ajp.22255

- 26. Burrows AM, Diogo R, Waller BM, Bonar CJ, Liebal K. Evolution of the muscles of facial expression in a monogamous ape: evaluating the relative influences of ecological and phylogenetic factors in hylobatids. Anat Rec (Hoboken). 2011;294: 645–63. 10.1002/ar.21355

- 27. Waller BM, Lembeck M, Kuchenbuch P, Burrows AM, Liebal K. GibbonFACS: A Muscle-Based Facial Movement Coding System for Hylobatids. Int J Primatol. 2012;33: 809–821. 10.1007/s10764-012-9611-6

- 28. Vick SJ, Paukner A. Variation and context of yawns in captive chimpanzees (Pan troglodytes). Am J Primatol. 2010;72: 262–269. 10.1002/ajp.20781

- 29. Waller BM, Misch A, Whitehouse J, Herrmann E. Children, but not chimpanzees, have facial correlates of determination. Biol Lett. 2014;10: 20130974. 10.1098/rsbl.2013.0974

- 30. Dimberg U, Thunberg M, Elmehed K. Unconscious Facial Reactions to Emotional Facial Expressions. Psychol Sci. 2000;11: 86–89. 10.1111/1467-9280.00221

- 31. Decety J, Jackson PL. A social neuroscience perspective on empathy. Curr Dir Psychol Sci. 2006;15: 54–58.

- 32. Altmann J. Observational Study of Behavior: Sampling Methods. Behaviour. 1974;49: 227–266.

- 33. Ekman P, Friesen W, Hager J. Facial action coding system. Salt Lake City: Research Nexus; 2002.

- 34. Cohen J. A coefficient of agreement for nominal scales. Educ Psychol Meas. 1960;20: 37–46.

- 35. Baayen R. Analyzing Linguistic Data. Cambridge: Cambridge University Press; 2008.

- 36. Forstmeier W, Schielzeth H. Cryptic multiple hypotheses testing in linear models: Overestimated effect sizes and the winner’s curse. Behav Ecol Sociobiol. 2011;65: 47–55. 10.1007/s00265-010-1038-5

- 37. Barr DJ, Levy R, Scheepers C, Tily HJ. Random effects structure for confirmatory hypothesis testing: Keep it maximal. J Mem Lang. 2013;68: 255–278.

- 38. R Core Team. R: A Language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2012.

- 39. Bates D, Maechler M, Bolker B. lme4: Linear mixed-effects models using S4 classes. R package version 0.999999–0; 2012.

- 40. Field A. Discovering statistics using SPSS. London, UK: Sage Publications; 2005.

- 41. Fox J, Weisberg S. An R Companion to Applied Regression. 2nd ed. Thousand Oaks, CA: Sage Publications; 2011.

- 42. McCullagh P, Nelder J. Generalized linear models. London, UK: Chapman and Hall; 1996.

- 43. Waller BM, Cherry L. Facilitating play through communication: significance of teeth exposure in the gorilla play face. Am J Primatol. 2012;74: 157–64. 10.1002/ajp.21018

- 44. Davila-Ross M, Jesus G, Osborne J, Bard KA. Chimpanzees (Pan troglodytes) Produce the Same Types of “Laugh Faces” when They Emit Laughter and when They Are Silent. PLoS One. 2015;10: e0127337. 10.1371/journal.pone.0127337

- 45. Liebal K, Waller M, Burrows A, Slocombe K. Primate Communication: A Multimodal Approach. Cambridge: Cambridge University Press; 2014.

Associated Data

Supplementary Materials

(DOCX)

Different types of facial expressions observed, number of occurrences, contexts and references to similar descriptions in the literature.

(DOCX)

Facial expression events for each individual (ID), categorized by context and by facing (F) versus non-facing (NF), in the whole data set. Instances excluded from the analyses are explicitly marked, and categories marked in grey were excluded from the analyses. Rationales for exclusion are provided in the description below the table.

(DOCX)

This table includes all data points (facial expression events) that met our criteria (see Methods). It contains information about the signalling individual (species, gender, individual of which pair) and about the signal (AU combination, duration, context and whether the individuals of the pair were facing each other or not).

(CSV)

Data Availability Statement

All relevant data are within the paper and its Supporting Information files.