Abstract

Background:

The Accreditation Council for Graduate Medical Education (ACGME) fully implemented all aspects of the Next Accreditation System (NAS) on July 1, 2014. In lieu of periodic accreditation site visits of programs and institutions, the NAS requires active, ongoing oversight by the sponsoring institutions (SIs) to maintain accreditation readiness and program quality.

Methods:

The Ochsner Health System Graduate Medical Education Committee (GMEC) has instituted a structured, process-driven approach to improvement at the program level, in which a Program Evaluation Committee reviews key performance data and constructs an annual program evaluation for each accredited residency. The Ochsner GMEC evaluates the aggregate program data and creates an Annual Institutional Review (AIR) document that provides direction and focus for ongoing program improvement. This descriptive article reviews the 2014 process and the metrics collected and analyzed to demonstrate the program review and institutional oversight provided by the Ochsner graduate medical education (GME) enterprise.

Results:

The 2014 AIR provided an overview of performance and quality of the Ochsner GME program for the 2013-2014 academic year with particular attention to program outcomes; resident supervision, responsibilities, evaluation, and compliance with duty‐hour standards; results of the ACGME survey of residents and core faculty; and resident participation in patient safety and quality activities and curriculum. The GMEC identified other relevant institutional performance indicators that are incorporated into the AIR and reflect SI engagement in and contribution to program performance at the individual program and institutional levels.

Conclusion:

The Ochsner GME office and its program directors face the ever-increasing challenges of today's healthcare environment as well as escalating institutional and program accreditation requirements. This SI's commitment to advancing its GME enterprise is evident, and institutional oversight is yielding opportunities for continued improvement.

Keywords: Accreditation, education–medical–graduate, internship and residency, program evaluation, quality improvement

INTRODUCTION

Ochsner Clinic Foundation (OCF) is accredited by the Accreditation Council for Graduate Medical Education (ACGME) as the sponsoring institution for medical and surgical graduate medical education (GME) programs. Ochsner Medical Center (OMC) in New Orleans, LA, is the primary educational facility for the Ochsner-sponsored programs and is accredited by the Joint Commission. Five additional hospitals are affiliated with OMC to meet the training needs of various programs: Leonard J. Chabert Medical Center, Houma, LA; New Orleans Children's Hospital, New Orleans, LA; Touro Infirmary, New Orleans, LA; Interim Louisiana State University Hospital, New Orleans, LA; and East Jefferson General Hospital, Metairie, LA. These 5 hospitals are also accredited by the Joint Commission.

In the 2013-2014 academic year, OCF sponsored 23 ACGME-accredited medical residencies that served 251 residents. In comparison, during the 2008-2009 academic year, OCF sponsored 18 ACGME-accredited medical residencies with 226 trainees. The ever-increasing number of Ochsner-sponsored programs and residents affirms the commitment of the Ochsner Health System to GME.

Forty-two residency and fellowship programs are affiliated with OCF for training, reaching more than 390 unique residents (70 full-time equivalents). All affiliated programs are also accredited by the ACGME.

The OCF commitment to medical education expands beyond GME to include undergraduate medical education. Six hundred unique medical students were provided the equivalent of more than 1,400 student months of training at OCF in the 2013-2014 academic year. The presence of medical students is an important learning opportunity for residents as they develop as mentors and teachers.

ANNUAL PROGRAM EVALUATION PROCESS

The ACGME fully implemented all aspects of the Next Accreditation System (NAS) on July 1, 2014. In lieu of periodic accreditation site visits of programs and institutions, the NAS requires active, ongoing oversight by the sponsoring institutions (SIs) to maintain accreditation readiness and program quality. The Ochsner Health System Graduate Medical Education Committee (GMEC) has instituted a structured, process-driven approach to improvement at the program level, in which a Program Evaluation Committee (PEC) reviews key performance data and constructs an annual program evaluation (APE) for each accredited residency. This annual evaluation of GME programs and the SI highlights program strengths and provides a framework for identifying areas for ongoing improvement. The evaluation follows a standardized process that directs review of key program indicators and individual resident performance against expected outcomes.

All sponsored programs participate in the Annual Review of Program, a structured self-evaluation typically conducted in May or June by a PEC composed of key faculty, residents, program managers, and others who can provide constructive feedback on key elements and outcomes of the program [1].

The results of the review are presented as the APE and provided to GME administration and the designated institutional official (DIO) for further review, with a particular focus on ongoing program improvement and action plans. The Ochsner GMEC has added a letter to the acronym, creating the APE & I, to reflect the importance of ongoing program improvement.

Each of our 23 PECs uploads its formal APE & I template document to our resident management system, New Innovations (New Innovations, Inc.). The DIO reviews and scores each program's APE & I against multiple objective and subjective accreditation metrics. The findings are then presented and reviewed at a meeting for each sponsored program that includes the DIO, the assistant vice president for GME, and the program's director, program manager, and department chair. Each of these meetings focuses on the following performance metrics:

- Overall contents of the APE & I
- ACGME resident and core faculty survey results
- Internal anonymous evaluation of the program by the residents and faculty
- Updates to existing ACGME citations
- Major program changes submitted to the ACGME
- Program action plan for improvement

These discussions yield feedback and recommendations on each program's proposed action plans and on the performance metrics it will use to determine when improvement has been achieved. The sessions also provide an opportunity to share best practices across all Ochsner-sponsored programs.

A summative report of these meetings is presented to the GMEC in late fall, when the Annual Institutional Review (AIR) is conducted. This report allows the GMEC to review results both cumulatively and by program, providing sufficient data and information for effective oversight and direction. In doing so, the SI ensures compliance with ACGME institutional requirements I.B.5.a)-I.B.5.a).(3) (Figure 1), which specify the indicators required for conducting the AIR: results of the most recent institutional self-study (not applicable to our institution until 2027), results of both ACGME annual surveys (residents and faculty), and notification of each ACGME-accredited program's accreditation status and self-study visits (the latter will first be captured in 2017 for 2 sponsored programs) [2]. The process described to this point has been completed for 2014; the final step is submission of a written annual executive summary of the AIR to the governing body. Selected performance metrics are detailed in the next section.

Figure 1.

Importance of institutional oversight as evidenced by Accreditation Council for Graduate Medical Education requirements.

PERFORMANCE METRICS

Excellence in Resident Satisfaction

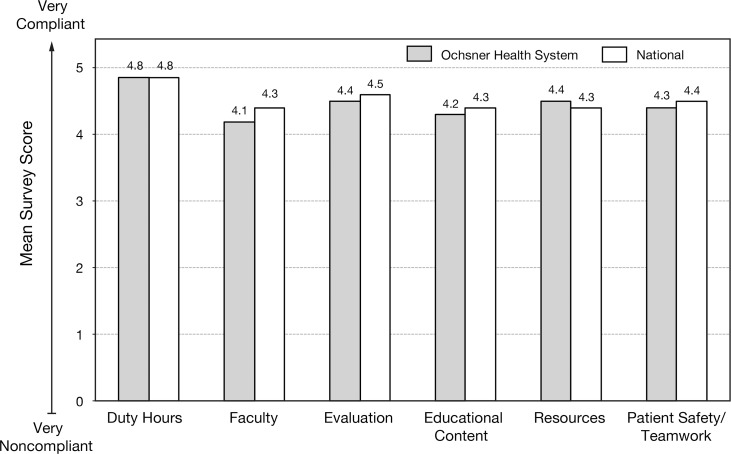

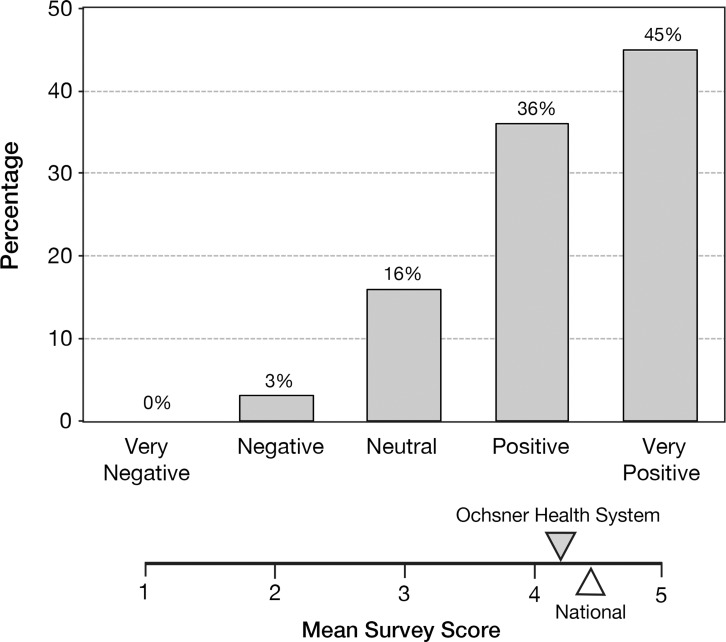

The ACGME conducts an annual survey of residents that requires a 70% participation rate; 231 of 246 eligible OMC trainees completed the survey, an institutional response rate of 94%. The GMEC evaluates the results of this survey at the programmatic and institutional levels. Ochsner programs consistently score at or slightly above the national means on the elements of this survey. Institutional metrics require this level of performance, and programs scoring below these thresholds must include the corresponding elements in their action plans for program improvement. The survey includes questions that evaluate compliance with duty-hour requirements, effectiveness of faculty teaching, learning opportunities, resource availability, and overall satisfaction with the program (Figures 2 and 3).
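As a purely illustrative aid, the threshold logic in the preceding paragraph (a minimum 70% participation rate, and action-plan items for any survey element on which a program scores below the national mean) can be sketched in Python. This is a hypothetical sketch, not the GMEC's or the ACGME's actual tooling; the function names, the `SurveyElement` structure, and the sample element scores are assumptions, and only the 231-of-246 response figure comes from the text.

```python
from __future__ import annotations

from dataclasses import dataclass

# Minimum ACGME resident survey participation rate cited in the text.
REQUIRED_PARTICIPATION = 0.70


@dataclass
class SurveyElement:
    """Hypothetical record pairing a program's mean score with the national mean."""
    name: str
    program_mean: float
    national_mean: float


def response_rate(completed: int, eligible: int) -> float:
    """Return the fraction of eligible trainees who completed the survey."""
    if eligible <= 0:
        raise ValueError("eligible must be a positive count")
    return completed / eligible


def meets_participation(completed: int, eligible: int) -> bool:
    """True if the response rate reaches the required participation threshold."""
    return response_rate(completed, eligible) >= REQUIRED_PARTICIPATION


def action_plan_items(elements: list[SurveyElement]) -> list[str]:
    """Names of elements scored below the national mean (candidates for the action plan)."""
    return [e.name for e in elements if e.program_mean < e.national_mean]


if __name__ == "__main__":
    # Institutional response reported in the text: 231 of 246 eligible trainees.
    print(f"{response_rate(231, 246):.0%}")  # 94%
    print(meets_participation(231, 246))     # True

    # Hypothetical program scores, for illustration only.
    sample = [
        SurveyElement("Duty hours", program_mean=4.6, national_mean=4.5),
        SurveyElement("Faculty", program_mean=4.0, national_mean=4.1),
    ]
    print(action_plan_items(sample))  # ['Faculty']
```

The strict `<` comparison treats a score exactly at the national mean as acceptable, matching the expectation that programs score at or above the national means; how near-misses are handled in practice is not specified in the text.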

Figure 2.

Ochsner Health System mean scores for elements of the Accreditation Council for Graduate Medical Education Annual Resident Survey compared to national mean scores.

Figure 3.

Residents' overall evaluation of the training programs at Ochsner Health System.

Updates to Existing ACGME Program Citations

The SI requires programs to update their existing ACGME/Residency Review Committee (RRC) citations annually. As a result of these updates, and because 2 years of RRC review have produced no additional citations, 9 of 23 (39%) accredited programs have had all of their previous citations removed. We anticipate that several additional programs within this SI will have all previous citations removed after the annual RRC review for the 2014-2015 academic year.

Excellence in Accreditation

Excellence in accreditation is another metric evaluated annually. All of our training programs have successfully transitioned to the NAS, under which the ACGME no longer reports cycle lengths (formerly 1-5 years). Under this new process, several Ochsner programs have been granted continuous accreditation with a self-study in 10 or more years. Compliance with the NAS, the new process for institutional review, and the written report from our first Clinical Learning Environment Review (CLER) were primary areas of focus for the DIO, GMEC, and GME administrative staff in the 2013-2014 academic year and will remain priorities in coming years.

On October 17, 2013, based on our last institutional accreditation site visit in April 2013, the ACGME Institutional Review Committee awarded OMC continuous accreditation under the NAS (rather than the 5-year accreditation of the previous system), with an institutional self-study scheduled for November 2027. Our initial CLER visit was conducted on July 23-24, 2013 [3]. The GME office received approximately 10 days' notice of the visit, consistent with the intent that such visits be essentially unannounced. The GME office had completed a CLER readiness self-assessment in May 2013 and had scored itself on the 6 focus areas of such a visit. The ACGME's final CLER results were essentially the same as our self-assessment and identified 2 specific opportunities: standardizing transitions of care and engaging our faculty and trainees in using data to identify and reduce healthcare disparities.

Excellence in Board Certification

A key indicator of the success of medical education in any program is the first-time board pass rate for those completing the program. Graduates of the 2013-2014 Ochsner class achieved an 89.94% first-time board pass rate on their certifying examinations (the institutional metric is ≥85%; national metrics vary by sponsoring board and/or respective RRC).
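Similarly, the board-certification metric reduces to comparing a first-time pass rate with the institutional threshold of at least 85%. The minimal Python sketch below is hypothetical (the function names are assumptions); it uses only the 89.94% rate and the 85% threshold reported above, because the underlying counts of examinees are not given.

```python
# Institutional metric from the text: first-time board pass rate of at least 85%.
INSTITUTIONAL_THRESHOLD = 0.85


def first_time_pass_rate(passed_first_attempt: int, first_time_takers: int) -> float:
    """Fraction of first-time examinees who passed (hypothetical helper)."""
    if first_time_takers <= 0:
        raise ValueError("first_time_takers must be a positive count")
    return passed_first_attempt / first_time_takers


def meets_board_metric(rate: float, threshold: float = INSTITUTIONAL_THRESHOLD) -> bool:
    """True if the pass rate meets or exceeds the threshold."""
    return rate >= threshold


if __name__ == "__main__":
    # 2013-2014 Ochsner graduates: 89.94% first-time pass rate, per the text.
    print(meets_board_metric(0.8994))  # True
```

Because national benchmarks vary by certifying board and RRC, a per-specialty comparison would pass a different `threshold` for each program rather than relying on the institutional default.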

Resident Supervision

Supervision is monitored at the program level by the program director and at the institutional level by the GMEC, through review of the annual Resident Survey and the APE & I and during quarterly meetings with both the Chief Resident Council and the program directors group. All residents providing patient care receive direct supervision, indirect supervision, or oversight from appropriately credentialed Ochsner staff physicians. In the 2013 CLER visit, approximately 10% of our trainees reported feeling oversupervised, 5% undersupervised, and 85% appropriately supervised.

Resident Evaluation

Resident evaluation is required to ensure the formation of clinically competent physicians. Processes specified by the ACGME/RRCs are objectively assessed annually as part of the ACGME Resident Survey. In the 2013-2014 survey, Ochsner residents reported that they had ready access to their personal evaluations (98% compliance) and an opportunity to anonymously evaluate their faculty and program annually (99% compliance). However, in the same survey, our trainees identified a need for improvement in 6 areas pertaining to resident evaluation, educational content, and faculty:

- Program uses evaluations to improve (63% compliance)
- Satisfied with feedback after assignments (68% compliance)
- Appropriate balance for education (69% compliance)
- Education (not) compromised by service obligations (63% compliance)
- Faculty and staff interested in residency education (66% compliance)
- Faculty and staff create an environment of inquiry (64% compliance)

The GMEC in partnership with individual programs will develop action plans to address the areas in which opportunities to improve have been identified.

The ACGME released the annual resident and faculty survey results after all programs in this SI had completed their APE & Is. Although this timing was unfortunate, the survey results were incorporated retrospectively. The delay also meant that each program relied on the anonymous evaluations of the program completed by residents and faculty in the resident management system (New Innovations). New Innovations allows programs to capture written comments that may reveal opportunities for program improvement not readily captured in the ACGME surveys. We hope that by spring 2016 the ACGME will time the release of the surveys to better support the APE & I process conducted annually in May and June.

Resident Duty-Hour Compliance

The implementation of expanded duty-hour restrictions, and the changes needed to maintain compliance with them, require the GMEC to evaluate compliance monthly. The primary objective of the duty-hour policy is to minimize resident sleep deprivation and excess fatigue that may contribute to errors and patient harm. Overall, programs are compliant with duty-hour requirements, as evidenced by the 2013-2014 ACGME Resident Survey.

Objective data provided by the ACGME allow comparisons between Ochsner and nationally published data. Ochsner GME exceeded national duty-hour compliance in the following areas:

- In-house call no more frequently than every third night (100% compliance)
- Night float no more than 6 nights (99% compliance)
- Eight hours between duty periods (differs by level of training) (98% compliance)

Ongoing challenges to duty-hour compliance most commonly arise from transitions of care or violations of the short break rule. Our programs are committed to full compliance with ACGME accreditation standards, actively managing individual resident duty hours while improving patient care by promoting consistency and efficiency in handoffs and care transitions.

Resident Patient Safety, Quality, and Performance Improvement Participation

The ACGME's transition to the NAS and the clear imperative to link medical education to the outcomes of care have led to several new initiatives. Linking graduate and undergraduate medical education to care outcomes closely aligns curriculum and practice with institutional strategies. This effort also engages students and residents in applying safety and quality science to resolve real-world problems.

GME staff and faculty are fully engaged with Ochsner Health System operations to deliver quality patient care in the safest possible environment. This engagement is achieved through several mechanisms and processes. All house staff are required to complete the Institute for Healthcare Improvement Open School modules, which provide a fundamental understanding of the science of performance improvement and patient safety. By 2015, all current Ochsner house staff will have completed this training, which is now required of all entering residents and fellows. Beginning with the 2014-2015 incoming class, TeamSTEPPS (Agency for Healthcare Research and Quality) theory and methods are also part of required learning, further integrating all house staff into this training process, which is designed to optimize patient outcomes by enhancing teamwork and communication skills.

The NAS, milestones, and CLER criteria clearly define requirements for house staff participation in patient safety and quality initiatives. As part of the GME office's oversight of all programs, the APE & I includes an assessment of each program's engagement in quality and safety initiatives. During the 2013-2014 academic year, more than 40 projects were identified as initiated and/or completed, ranging from program- or department-specific initiatives to those addressing organizational issues such as transitions of care.

Ochsner GME continues to be an active participant in the Alliance of Independent Academic Medical Centers (AIAMC) National Initiative programs. As a participant in National Initiative IV, a team composed of faculty, administrative staff, and residents took on the ambitious objective of creating a process in Epic (Epic Systems Corporation) to support resident handoffs during transitions of care. The project was successfully launched in November 2013 and continues to move through PDSA (Plan, Do, Study, Act) cycles as the team refines and expands its scope, even after the initiative concluded in March 2015. Ochsner was recently selected to participate in AIAMC National Initiative V, which will focus on enhancing quality improvement by engaging our trainees and faculty in using data to better identify and address healthcare disparities.

The Resident Quality Council continues to serve as a forum in which house staff raise awareness of the safety and quality issues they have identified and as a venue for discussing quality metrics, data, and ongoing education. The council is transitioning to greater ownership by residents and fellows as they engage more actively in institutional quality and safety priorities. The strength of this commitment is reflected in the recently established Excellence in Quality Improvement and Patient Safety Award, a plaque and financial gift presented to the resident who has contributed most significantly to this effort. The third of these annual awards was presented at the house staff commencement ceremony in June 2015.

CONCLUSION

The process detailed above for developing a program's APE & I is one chapter in the continuing accreditation life of each program within an SI. A new chapter has been added each year for each program since its last scheduled ACGME accreditation site visit. The APE & Is become essential elements of a program's self-study, which should document the program's strengths and opportunities for improvement, along with the specific action plans developed and completed to ensure continuous improvement. This annual process should support the self-study period and the writing of the self-study narrative and should ultimately contribute to success at the self-study/accreditation site visit. At Ochsner, our programs will first enter self-study in fall 2017, and our institutional self-study is not scheduled until November 2027.

In the coming years, we have much to learn from this new process. However, it is essential that we as an institution begin collecting actionable annual data in a transparent manner that will facilitate an effective self-study. Many variations on the above process may lead to equally effective periods of self-study at other institutions. What we have described represents but one institution's attempt to seize an opportunity to improve both program and institutional excellence while lessening the previous burden of accreditation. We will remain open to sharing our learning as our institution's journey continues.

ACKNOWLEDGMENTS

Ronald G. Amedee, MD is the designated institutional official and Janice C. Piazza is the assistant vice president for graduate medical education at Ochsner Health System.

This article meets the Accreditation Council for Graduate Medical Education and the American Board of Medical Specialties Maintenance of Certification competencies for Systems-Based Practice and Practice-Based Learning and Improvement.

REFERENCES

1. Nasca TJ, Philibert I, Brigham T, Flynn TC. The next GME accreditation system—rationale and benefits. N Engl J Med. 2012 Mar 15;366(11):1051-1056. doi: 10.1056/NEJMsr1200117.

2. Philibert I, Lieh-Lai M. A practical guide to the ACGME self-study. J Grad Med Educ. 2014 Sep;6(3):612-614. doi: 10.4300/JGME-06-03-55.

3. Weiss KB, Bagian JP, Nasca TJ. The clinical learning environment: the foundation of graduate medical education. JAMA. 2013 Apr 24;309(16):1687-1688. doi: 10.1001/jama.2013.1931.