Abstract

Background

Health professions education is characterised by work-based learning and relies on effective verbal feedback. However, the literature reports problems in feedback practice, including lack of both learner engagement and explicit strategies for improving performance. It is not clear what constitutes high quality, learner-centred feedback or how educators can promote it. We hoped to enhance feedback in clinical practice by distinguishing the elements of an educator’s role in feedback considered to influence learner outcomes, and then developing descriptions of observable educator behaviours that exemplify them.

Methods

An extensive literature review was conducted to identify i) information substantiating specific components of an educator’s role in feedback asserted to have an important influence on learner outcomes and ii) verbal feedback instruments in health professions education that may describe important educator activities in effective feedback. This information was used to construct a list of elements thought to be important in effective feedback. Based on these elements, descriptions of observable educator behaviours that represent effective feedback were developed and refined during three rounds of a Delphi process and a face-to-face meeting with experts across the health professions and education.

Results

The review identified more than 170 relevant articles (involving health professions, education, psychology and business literature) and ten verbal feedback instruments in health professions education (plus modified versions). Eighteen distinct elements of an educator’s role in effective feedback were delineated. Twenty-five descriptions of educator behaviours that align with the elements were ratified by the expert panel.

Conclusions

This research clarifies the distinct elements of an educator’s role in feedback considered to enhance learner outcomes. The corresponding set of observable educator behaviours aims to describe how an educator could engage, motivate and enable a learner to improve. This creates the foundation for developing a method to systematically evaluate the impact of verbal feedback on learner performance.

Keywords: Feedback, Clinical practice, Delphi process, Health professions education, Educator behaviour

Background

Health professions education is characterised by work-based learning where a student or junior clinician (a ‘learner’) learns from a senior clinician (an ‘educator’) through processes of modelling, explicit teaching, task repetition, and performance feedback [1, 2]. Feedback, which follows an educator observing a learner perform a clinical task, is an integral part of this education. This may occur ‘on the run’, during routine clinical practice or as scheduled feedback during workplace-based assessments, planned review sessions, or at mid- or end-of-attachment performance appraisals.

Feedback has been defined as a process in which learners seek to find out more about the similarities and differences between their performance and the target performance, so they can improve their work [3]. This definition focuses on the active role of the learner and highlights that feedback should impact on subsequent learner performance.

Feedback needs to help the learner develop a clear understanding of the target performance, how it differs from their current performance and what they can do to close the gap [4–6]. To accomplish this, a learner has to construct new understandings, and develop effective strategies to improve their performance. A learner also has to be motivated to devote their time and effort to implementing these plans, and to persist until they achieve the target performance.

In an attempt to enhance learner-centred feedback, it is enticing to focus on the learner and their role in the feedback exchange. However, given that educators typically lead educational interactions, particularly in the early stages, targeting the educator’s role in feedback may have a greater influence in cultivating learner-centred feedback. A skilled educator can create an optimal learning environment that engages, motivates and supports learners, thereby enabling them to take an active role in evaluating their performance, setting valuable goals and devising effective strategies to improve their performance [7, 8]. Learners who have experienced such sessions could then carry forward a clear model of high quality feedback into future interactions throughout their professional life.

Experts in health professions education assert that feedback is a key element in developing expertise [6, 9–14]. Learners in the health professions also believe feedback can help them and they want it [15–18]. However, there is limited evidence to support the conviction that feedback improves the performance of health professionals. The strongest evidence is from two meta-analyses, which indicated that audit followed by feedback improved adherence to clinical guidelines [19, 20]. Beyond the health professions there is stronger evidence. In a synthesis of 500 meta-analyses (180,000 studies), feedback was reported to have one of the most powerful influences on learning and achievement in schools [4]. Another meta-analysis of 131 studies compared feedback alone with no feedback on objective measures of performance of diverse tasks. That analysis also supported the conclusion that feedback improved performance [21].

Despite the enviable theoretical benefits of feedback, problems have been reported in practice. In observational studies of face-to-face feedback, educators often delivered a monologue of their conclusions and recommendations. Learners spoke little, asked few questions, minimised self-assessment (if asked) and were not involved in deciding what was talked about, explaining their perspective or planning ways to improve [22–27].

Observational studies and reviews of feedback forms indicated that educators’ comments were often not specific, did not identify what was done satisfactorily and what needed improvement, and did not include an improvement plan [23, 28–30].

Educators have reported that they did not feel confident in their feedback skills. In particular, they avoided direct corrective comments as they feared these could undermine a learner’s self-esteem, trigger a defensive emotional response or spoil the learner-educator relationship. Educators experienced negative feelings themselves, such as feeling uncomfortable or mean [17, 22, 23, 31].

Feedback does not always improve performance and can even cause harm [4, 19, 20, 32, 33]. In Kluger and DeNisi’s meta-analysis [21], approximately a third of studies found that performance deteriorated following feedback.

Learners have reported that they do not always implement feedback advice. Their reasons included that they did not consider there was a problem, did not believe the educator’s comments were credible or relevant [34, 35], or did not understand what needed improving or how to do it [34, 36]. Learners have also reported experiencing strong negative emotions such as anger, anxiety, shame, frustration and demotivation following feedback, especially if they thought feedback comments were unfair, derogatory, personal or unhelpful [17, 36–38].

Our goal is to promote high quality feedback by helping educators to refine the way they participate in feedback, and subsequently to enhance learner outcomes. It is not clear what comprises high quality, learner-centred feedback or how educators can promote it [39, 40]. One explanation for the mismatch between the theoretical benefits of feedback and the problems experienced in practice is that feedback involves multiple unidentified elements that may influence the outcome. Therefore, it would be useful to clarify the components of an educator’s role in feedback required to achieve the aim of engaging, motivating and enabling a learner to improve their skills, and to develop a list of key educator behaviours that describe how these objectives could be accomplished in clinical practice.

In this study we chose to target the educator’s role first because educators have substantial influence and a primary responsibility to model high quality feedback skills. The setting we focused on was scheduled face-to-face verbal feedback following observation of a learner performing a task, as this is a particularly common form of feedback in the workplace education of health professionals.

Methods

In this paper we describe the first phase in this process, which had two stages. The first stage involved conducting an extensive literature review to delineate the key elements of an educator’s role in effective feedback. In the second stage, a set of corresponding educator behaviours was created and then refined in collaboration with an expert panel.

Stage 1: literature review

The literature review was conducted to identify distinct elements of an educator’s role in feedback asserted to help a learner to improve their performance, together with the supporting evidence. The elements describe the key goals of an educator in high quality, learner-centred feedback, i.e. what needs to be achieved but not necessarily how to do it. In addition, published instruments (or portions thereof) designed to assess face-to-face verbal feedback in health professions education were reviewed for descriptions of educator behaviours considered to be important in effective feedback.

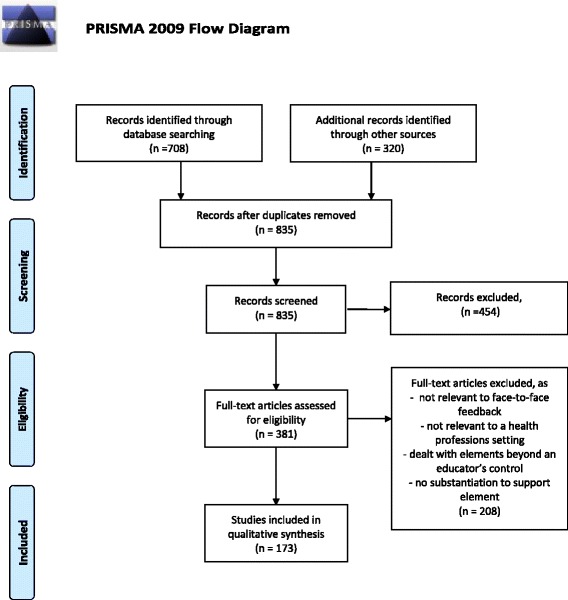

The target information was embedded within diverse articles spread across a broad literature base and was poorly identified by standardised database search terms. We therefore utilised a ‘snowball’ technique [13, 41]. This began with identifying systematic reviews on feedback plus published articles and book chapters in the health professions, education, psychology and business by prominent experts. When authors cited articles to support claims and recommendations, the original substantiating source was traced. Additional relevant articles were identified through bibliographies and citation tracking. This continued to the point of saturation where no new elements were identified. In addition, published instruments (or portions thereof) designed to assess face-to-face verbal feedback in health professions education were searched to identify relevant educator activities. Published literature was searched across the full holdings of Medline, Embase, CINAHL, PsycINFO and ERIC up to March 2013, and then continued to be scanned for previously unidentified elements until September 2015 (see Fig. 1).
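The snowball search with a saturation stopping rule can be sketched in code. This is an illustrative sketch only, not the procedure the research team ran: the function name, the citation map, the element labels and the rule of stopping after the first wave of articles that yields no new element are all assumptions made for the example.

```python
def snowball_until_saturation(seeds, cites, elements_in):
    """Trace cited sources wave by wave until a wave adds no new element.

    Hypothetical inputs, for illustration only:
      seeds       -- list of starting article ids (e.g. key reviews)
      cites       -- dict mapping an article id to the ids it cites
      elements_in -- dict mapping an article id to the set of feedback
                     elements that article substantiates
    Returns the set of elements found and the list of articles reviewed.
    """
    reviewed = []          # articles actually read, in order
    queued = set(seeds)    # articles already scheduled, to avoid repeats
    elements = set()       # distinct elements identified so far
    wave = list(seeds)
    while wave:
        new_elements = set()
        next_wave = []
        for article in wave:
            reviewed.append(article)
            new_elements |= elements_in.get(article, set()) - elements
            for source in cites.get(article, []):
                if source not in queued:
                    queued.add(source)
                    next_wave.append(source)
        if not new_elements:
            break  # assumed saturation rule: a whole wave added nothing new
        elements |= new_elements
        wave = next_wave
    return elements, reviewed


# Hypothetical citation data.
cites = {
    "review_A": ["study_1", "study_2"],
    "study_1": ["theory_X"],
    "theory_X": ["old_1"],
    "old_1": ["old_2"],
}
elements_in = {
    "review_A": {"specific comments"},
    "study_1": {"action plan"},
    "study_2": {"specific comments"},
    "theory_X": {"learner autonomy"},
    "old_2": {"not reached"},
}
found, reviewed = snowball_until_saturation(["review_A"], cites, elements_in)
print(sorted(found))
print(reviewed)
```

In this toy data the search stops after reviewing `old_1`, because that wave contributes no new element, so `old_2` is never read. A real review would need a more cautious stopping rule than a single empty wave.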

Fig. 1. PRISMA flow diagram for the literature review

Element construction

Elements were constructed by analysing and triangulating supporting information extracted during the literature review. Potential elements and substantiation were extracted by one researcher (CJ) and verified by core research team members (JK and EM). Similar elements were grouped and those with overlapping properties were collapsed. The core research team used an iterative process of thematic analysis [42] to develop a list of elements that described distinct aspects of an educator’s role in feedback.

Stage 2: Development and refinement of the educator behaviour statements

The next step was to operationalise the elements by reconstructing them as statements describing observable educator behaviours that exemplify high quality feedback in clinical practice. An initial set of statements was developed by the core research team, using the same iterative process of thematic analysis, in accordance with the following criteria [43]: the statement describes an observable educator behaviour that is considered important for effective feedback resulting in improved learner performance; targets a single, distinct concept; and uses unambiguous language with self-evident meaning.

A Delphi technique, in which sharing of anonymous survey responses enables consensus to develop as opinions converge over sequential rounds, was used to develop expert consensus on the statement set [44–46]. An expert panel was formed and all panel members provided informed consent. Over three rounds, members refined the individual statements and the composition of the list as a whole, and developed consensus on each statement (defined as over 70% panel agreement) [47].
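The consensus rule can be made concrete with a short calculation. The sketch below is illustrative only: the paper states the threshold (over 70% panel agreement) but not how agreement was counted, so treating ‘important’ and ‘very important’ as agreement and keeping ‘don’t know’ responses in the denominator are assumptions, and the ratings are invented.

```python
from collections import Counter

# Assumption: these labels count as "agreement" on the importance question.
AGREE_RATINGS = {"important", "very important"}
CONSENSUS_THRESHOLD = 0.70  # consensus defined as over 70% panel agreement


def agreement_proportion(ratings):
    """Return the share of panel ratings that fall in AGREE_RATINGS.

    Assumption: "don't know" responses stay in the denominator.
    """
    if not ratings:
        raise ValueError("no ratings supplied")
    counts = Counter(ratings)
    agreeing = sum(counts[label] for label in AGREE_RATINGS)
    return agreeing / len(ratings)


def has_consensus(ratings, threshold=CONSENSUS_THRESHOLD):
    """True when agreement is strictly greater than the threshold."""
    return agreement_proportion(ratings) > threshold


# Hypothetical ratings from an eleven-member panel for one statement.
example_ratings = (
    ["very important"] * 5 + ["important"] * 3 + ["neutral"] * 2 + ["don't know"]
)
print(f"{agreement_proportion(example_ratings):.0%}", has_consensus(example_ratings))
```

With eleven panel members, eight agreeing ratings (about 73%) pass the threshold and seven (about 64%) do not, so in this sketch a statement needs at least eight of eleven members in agreement.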

Expert panel

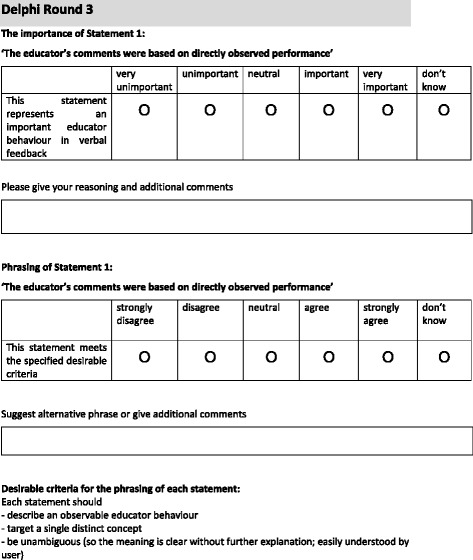

The research team invited nine Australian experts with experience in health professions education, feedback, psychology, education and instrument development to join research team members (JK and EM) to create a panel to refine the statement set. The primary researcher (CJ) acted as the facilitator. A structured survey presenting the initial statements was distributed to panel members using online survey software. For each statement, panel members were asked to consider two questions: i) importance: ‘this statement represents an important educator behaviour in verbal feedback’ (rating options were ‘very unimportant, unimportant, neutral, important, very important or don’t know’) and ii) phrasing: ‘this statement meets the specified criteria’ (rating options were ‘disagree, neutral, agree, strongly agree or don’t know’). For each question, panel members were asked to provide their reasoning and additional comments in free text boxes. Criteria for each statement and examples of two questions from the survey are presented in Fig. 2.

Fig. 2. Desirable criteria and example of two questions from Delphi Round 3 survey

After each round, the ratings and comments were analysed using an iterative process of thematic analysis [42], and the educator behaviour statements refined accordingly. For the following round, a revised set of statements was circulated. This was accompanied by summarised anonymous panel responses from the previous round for participants to consider before continuing with the survey.

Following the conclusion of the three Delphi rounds, a face-to-face meeting of panel members was convened to resolve outstanding decisions. The meeting was audiotaped, transcribed verbatim and analysed using thematic analysis, and a set of educator behaviours was finalised.

Ethics approval for this study was obtained from Monash University Human Research Ethics Committee Project Number: CF13/1912-2013001005.

Results

Literature review

The database search identified a key set of reports [4, 10, 11, 13, 19–21, 48–54] that led to the identification of more than 170 relevant articles. These articles included observational studies of feedback, interviews and surveys of educators and learners, summaries of written feedback forms, feedback models, eminent expert commentary, consensus documents, systematic reviews and meta-analyses, and established theories across education, health professions education, psychology and business literature. There was little high quality evidence to clarify the effects of specific elements of feedback.

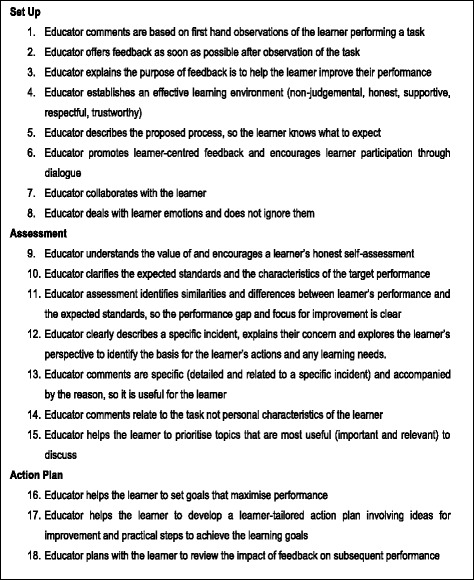

Literature review: elements

Eighteen elements that describe the educator’s role in high quality feedback were created by identifying substantiating information offered to support expert argument across diverse literature. These are presented in Fig. 3. The order follows the usual flow of a feedback interaction: set up (including some elements that apply throughout), discussing the assessment and developing an action plan.

Fig. 3. Key elements of an educator’s role in effective feedback, extracted and substantiated from the literature

Literature review: face-to-face verbal feedback instruments

The literature search identified 10 instruments (and additional modified versions) that, to some extent, assessed face-to-face verbal feedback in health professions education. It was hoped that these instruments would include items that described educator behaviours associated with effective feedback in clinical practice. However, none of these instruments was designed to assess an educator’s contribution to an episode of face-to-face verbal feedback following observation of a learner performing a task in the workplace. Three instruments assessed a simulated patient’s feedback comments [55–59], three assessed an instructor’s debriefing to a group following a healthcare simulation scenario [60–62], two instruments assessed brief feedback associated with an Objective Structured Clinical Examination (in which the primary aim of the study was to determine if a senior medical student’s feedback was of a similar standard to a doctor’s) [63, 64], and two longitudinally assessed an educator’s overall clinical supervision skills, including feedback, across a clinical attachment [65–67].

Development and refinement of the educator behaviour statements using a Delphi technique

Panel

All nine invited experts agreed to participate, creating an eleven-member panel; the primary researcher acted as facilitator. All panel members had senior education appointments at a hospital or university (the majority were professors and/or directors). The panel included seven health professionals (medicine, nursing, physiotherapy, dietetics and psychology) and several internationally recognised experts in feedback, education and training, simulation and instrument development. There was a high level of engagement by the panel throughout; all members completed each survey in full and made frequent, detailed additional comments.

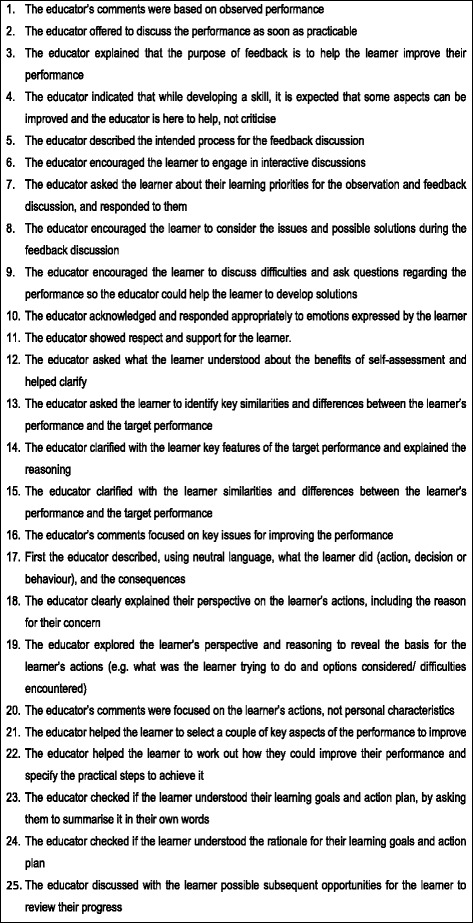

Development of observable behaviour statements

The initial set of observable educator behaviours, developed by the core research team from the elements, contained 23 statements, as some elements required more than one statement for operationalisation. This set was submitted to the Delphi process. After every round, the individual statements and the set as a whole were modified, based on the panel’s ratings and comments. Revisions included refining statements to better target the underlying concept, and rewording statements to better align with the specified criteria (see Fig. 2). Overlapping statements were combined and new ones were developed.

One example of how an element was refashioned into corresponding observable educator behaviours is described here. Element 4 states an “educator establishes an effective learning environment”. This was operationalised into “the educator showed respect and support for the learner” (Behaviour Statement 11) and “the educator indicated that while developing a skill, it is expected that some aspects can be improved and the educator is here to help, not criticise” (Behaviour Statement 4).

After completion of the third round, there were 25 statements in the set. Expert consensus was achieved for i) statement importance: all except one and ii) statement phrasing: all except three. These outstanding issues were resolved at the face-to-face panel meeting.

The final list, presented in Fig. 4, included 25 statements that explicitly describe observable educator behaviour in high quality verbal feedback.

Fig. 4. List of educator behaviours that demonstrate high quality verbal feedback in clinical practice

Discussion

We sought to distinguish the key elements of an educator’s role in feedback, endorsed by the literature, and to develop consensus on a set of observable behaviours that could engage, motivate and enable a learner to improve their performance in clinical practice. Support for these elements came from triangulating information from observational studies of feedback, surveys and interviews of educators and learners, summaries of written feedback forms, systematic reviews and meta-analyses of feedback, and established psychological and behavioural theories, in addition to expert argument, published across health professions, education, psychology and business literature. However, there is little high quality evidence to substantiate these educator behaviours and they require formal testing to explore their impact in clinical practice. One of the drivers for this research was the desire to investigate whether specific constituents of feedback argued to be important do indeed enhance learning.

Characteristics of educator feedback behaviours in high quality feedback

We identified 18 distinct elements and 25 educator behaviours; this exposes the complexity of a feedback interaction. To facilitate further discussion and consideration, we propose four overarching themes that may describe the key concepts of high quality feedback.

The learner has to ‘do the learning’

A learner needs to develop a clear vision of the target performance, how it differs from their performance and the practical steps they can take to improve their subsequent performance (Statements: 14–16, 22–24) [4, 5, 68]. This requires the learner to make sense of an educator’s comments, to compare the new information with their previous understanding of the issue and resolve gaps or discrepancies [14, 69, 70]. A learner has to actively construct their own understanding; an educator cannot deliver it ‘ready-made’ to them. Feedback is best done as soon as the learner and educator can engage after the performance (Statement: 2). A learner can only work on one or two changes at a time, in accordance with theories of cognitive load [71]. This would suggest that it is important to prioritise the most important and relevant issues (Statement: 21) [14, 24]. As feedback is an iterative process, the progress achieved (or difficulties encountered) after implementing the action plan should be reviewed (Statement: 25) [5, 14, 72].

The primary purpose of the learner’s self-assessment is to develop their evaluative judgement, contributing to their self-regulatory skills (Statements: 7–8, 12–13) [73, 74]. The learner is positioned to take responsibility for their own learning. As they compare their performance to the target performance, it offers an opportunity for them to clarify their vision of the target performance (Statement: 14), calibrate their assessment to the educator’s assessment (Statement: 15), and highlight their priorities and ideas about how their performance could be improved (Statements: 7–8) [72].

Once the learner is seen as ‘the enacter’ of feedback, the educator’s role becomes ‘the enabler’. The educator uses their expertise to discuss the performance gap, explore the learner’s perspective and reasoning, clarify misunderstandings, help to solve problems, offer guidance in setting priorities and effective goals, and suggest ideas for improvement (multiple statements).

The learner is autonomous

High quality feedback supports a learner’s intrinsic motivation to develop their expertise and respects their autonomy [75]. It recognises that the learner decides which changes to make (if any) and how they will do this. Feedback information is only ‘effective’ if a learner chooses to implement it. This is more likely to occur when a learner believes an educator’s comments are true and fair, and will help them to achieve their personal goals. It is also more likely when an educator’s comments are based on specific first-hand observations (Statement: 1) and serve as a starting point for an open-minded discussion with the learner about the reasons for their actions, which enables identification of learning needs (Statements: 17–19) [10, 76, 77]. An educator’s comments are best directed to actions that can be changed, not personal characteristics (Statement: 20), that is, ‘what the learner did, not what the learner is’ [10, 21, 77]. Comments that target a person’s sense of ‘self’ (including valued self-concepts like ‘being a health professional’) or general corrective comments may stimulate strong defensive reactions, and do not appear to improve task performance [21, 37, 78, 79]. To support a learner’s intrinsic motivation, an educator should offer suggestions as opposed to giving directives, explain the reasons for their recommendations and help a learner to develop an action plan that aligns with their (often revised) goals, priorities and preferences (Statements: 7, 14, 18, 22, 24) [75, 80, 81].

The importance of the learner-educator relationship

The learner-educator relationship strongly influences face-to-face feedback; the personal interaction can enrich or diminish the potential for learning [4, 8, 82]. During the encounter, a learner’s interpretation of the educator’s message is affected by their knowledge and experience of the educator. If a learner believes an educator has the learner’s ‘best interests at heart’ and is respectful and honest, this creates a trusting relationship and an environment that supports learning (Statements: 3–4, 11) [8]. This sense of trust, or psychological safety, encourages the learner to take a ‘learning focus’ not a ‘performance focus’, so the learner can concentrate on improving their skills, as opposed to trying to appear competent by covering up difficulties (Statement: 9) [14, 78, 83]. Performance evaluation often stimulates emotions [6]. An educator may help by responding to a learner’s emotions appropriately (Statement: 10) [84]. In addition, an educator should aim for a feedback process that is transparent and therefore predictable, which may help a learner manage feelings of anxiety about what is likely to happen in the session (Statement: 5) [39, 85].

Collaboration

Collaboration, through dialogue, is essential for high quality feedback (multiple items). The learner and educator work together, with the common aim of creating an individually-tailored action plan to help the learner improve. The behaviours specified in the items are designed to promote shared understanding and decision-making. Feedback is more than two separate contributions; each one seeks, responds to and builds on the other’s input. Face-to-face verbal feedback offers a unique opportunity for direct, immediate and flexible interaction. This makes it possible for a learner or educator to seek further information, clarify what was meant, raise different perspectives, debate the value of various options and modify proposals in response to the other’s comments. Collaboration optimises the potential for a fruitful outcome because insufficient information, misunderstandings and other obstacles to success can be dealt with during the discussion.

Research strengths and limitations

This research has several strengths. It addresses an important gap in health professions education with a practice-orientated solution. The research design was systematic and rigorous, starting with an extensive literature search followed by expert scrutiny. The literature search continued to the point of saturation but we cannot be sure that all relevant information was assembled. Countering the potential for oversight was the in-depth scrutiny by experts in the health professions and education.

Conclusion

Work-based learning in the health professions [86] relies on effective verbal feedback, but problems with current feedback practice are common. This research advances the feedback literature by creating an endorsed, explicit and comprehensive set of educator behaviours intended to engage, motivate and support a learner during a feedback interaction. The recommended educator behaviours provide a platform for developing a method to systematically evaluate the impact of verbal feedback on learner performance.

*Examples of survey format and responses are available from the first author on request.

Acknowledgements

Christina Johnson, Jennifer Keating and Elizabeth Molloy received the following grant: Office for Learning and Teaching (OLT) and Higher Education Research and Development Society of Australasia Inc (HERDSA) Researching New Directions in Learning and Teaching: Seed Funding to Support Project Mentoring and Collaboration.

Footnotes

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

CJ: conceived the research, participated in developing the protocol, gathered, analysed and interpreted the data and prepared the manuscript. JK: assisted in developing the research concept and the protocol, participated in the panel, analysed and interpreted the data and assisted in preparing the manuscript. DB: participated in the panel and suggested revisions to the manuscript. MD: participated in the panel and suggested revisions to the manuscript. DK: participated in the panel and suggested revisions to the manuscript. MH: participated in the panel and suggested revisions to the manuscript. BM: participated in the panel and suggested revisions to the manuscript. WM: participated in the panel and suggested revisions to the manuscript. BN: participated in the panel and suggested revisions to the manuscript. DN: participated in the panel and suggested revisions to the manuscript. CP: participated in the panel and suggested revisions to the manuscript. EM: assisted in developing the research concept and the protocol, participated in the panel, analysed and interpreted the data and assisted in preparing the manuscript. All authors read and approved the final manuscript.

Authors’ information

CJ (MHPE, FRACP) is Medical Director, Monash Doctors Education; Consultant Physician, Monash Health; Senior Lecturer in the Faculty of Medicine, Nursing and Health Sciences, and PhD candidate, HealthPEER, Monash University.

JK (PhD) is Professor, Department of Physiotherapy and Associate Dean Allied Health, Monash University.

DB (PhD) is Director of the Centre for Research on Assessment and Digital Learning, Deakin University, Geelong; Emeritus Professor of Adult Education, Faculty of Arts and Social Sciences, University of Technology Sydney and Research Professor in the Institute of Work-Based Learning, Middlesex University, London.

MD (PhD) is Associate Professor and Deputy Dean, Learning and Teaching, School of Human, Health and Social Sciences, Central Queensland University. Her clinical background is physiotherapy.

DK (PhD) is Associate Professor and Clinical Chair Health Workforce and Simulation, Holmesglen Institute and Healthscope Hospitals, Faculty of Health Science, Youth & Community Studies; Adjunct Senior Lecturer, Monash University. Her clinical background is intensive care nursing.

MH (PhD) is Associate Professor, Academic Director, Student Admissions, Faculty of Medicine, Nursing and Health Sciences, Monash University. Her background is clinical psychology.

BM is Adjunct Professor of Medicine, Monash University and the Clinical Lead, Australian Medical Council National Test Centre; Deputy Chair, Australian Medical Council Examinations Committee and former Chair, Confederation of Postgraduate Medical Education Councils of Australia.

WM (PhD) is Teaching Associate, Faculty of Education, Monash University. Her background is in cognitive and educational psychology.

BN is Professor of Medicine and Associate Dean, Faculty of Health and Medicine, University of Newcastle; Director, Centre for Medical Professional Development, Hunter New England Health Service; Committee Member for Royal Australasian College of Physicians National Panel of Examiners and Chair of Workplace Based Assessment, Australian Medical Council.

DN (PhD) is Professor of Simulation Education in Healthcare, HealthPEER, Monash University.

CP (PhD) is Senior Lecturer, Department of Nutrition and Dietetics, Monash University and an Office for Learning and Teaching Fellow. Her clinical background is nutrition and dietetics.

EM (PhD) is Associate Professor, HealthPEER, Monash University. Her clinical background is physiotherapy.

Contributor Information

Christina E. Johnson, Phone: +61 410 454 535, Email: christina.johnson@monashhealth.org

Jennifer L. Keating, Email: Jenny.Keating@monash.edu

David J. Boud, Email: David.Boud@uts.edu.au

Megan Dalton, Email: Megan.Dalton@cqu.edu.au

Margaret Hay, Email: Margaret.Hay@monash.edu

Barry McGrath, Email: Barry.Mcgrath@monash.edu

Wendy A. McKenzie, Email: Wendy.McKenzie@monash.edu

Kichu Balakrishnan R. Nair, Email: Kichu.Nair@newcastle.edu.au

Debra Nestel, Email: Debra.Nestel@monash.edu

Claire Palermo, Email: Claire.Palermo@monash.edu

Elizabeth K. Molloy, Email: Elizabeth.Molloy@monash.edu

References

- 1.Morris C, Blaney D. Work-based learning. In: Swanwick T, editor. Understanding medical education: evidence, theory and practice. Oxford: Association for the Study of Medical Education; 2010. pp. 69–82. [Google Scholar]

- 2.Higgs J. Ways of knowing for clinical practice. In: Delany C, Molloy E, editors. Clinical education in the health professions. Sydney: Elsevier; 2009. p. 26. [Google Scholar]

- 3.Boud D, Molloy E. What is the problem with feedback? In: Boud D, Molloy E, editors. Feedback in higher and professional education. London: Routledge; 2013. p. 6. [Google Scholar]

- 4.Hattie J, Timperley H. The power of feedback. Rev Educ Res. 2007;77(1):81–112. doi: 10.3102/003465430298487. [DOI] [Google Scholar]

- 5.Sadler DR. Formative assessment and the design of instructional systems. Instr Sci. 1989;18(2):119–144. doi: 10.1007/BF00117714. [DOI] [Google Scholar]

- 6.Molloy E, Boud D. Changing conceptions of feedback. In: Boud D, Molloy E, editors. Feedback in higher and professional education. London: Routledge; 2013. pp. 11–33. [Google Scholar]

- 7.Nicol D. Resituating feedback from the reactive to the proactive. In: Boud D, Molloy E, editors. Feedback in higher and professional education. London: Routledge; 2013. pp. 34–49. [Google Scholar]

- 8.Carless D. Trust and its role in facilitating dialogic feedback. In: Boud D, Molloy E, editors. Feedback in higher and professional education. London: Routledge; 2013. pp. 90–103. [Google Scholar]

- 9.Ericsson KA. Deliberate practice and acquisition of expert performance: a general overview. Acad Emerg Med. 2008;15(11):988–994. doi: 10.1111/j.1553-2712.2008.00227.x. [DOI] [PubMed] [Google Scholar]

- 10.Ende J. Feedback in clinical medical education. JAMA. 1983;250(6):777–781. doi: 10.1001/jama.1983.03340060055026. [DOI] [PubMed] [Google Scholar]

- 11.Kilminster S, Cottrell D, Grant J, Jolly B. AMEE Guide No. 27: effective educational and clinical supervision. Med. Teach. 2007;29(1):2–19. doi: 10.1080/01421590701210907. [DOI] [PubMed] [Google Scholar]

- 12.Norcini JJ, Burch V. Workplace-based assessment as an educational tool: AMEE Guide No. 31. Med. Teach. 2007;29(9-10):855–871. doi: 10.1080/01421590701775453. [DOI] [PubMed] [Google Scholar]

- 13.Archer JC. State of the science in health professional education: effective feedback. Med Educ. 2010;44(1):101–108. doi: 10.1111/j.1365-2923.2009.03546.x. [DOI] [PubMed] [Google Scholar]

- 14.Nicol D, Macfarlane-Dick D. Formative assessment and self‐regulated learning: a model and seven principles of good feedback practice. Stud High Educ. 2006;31(2):199–218. doi: 10.1080/03075070600572090. [DOI] [Google Scholar]

- 15.Bing-You RG, Stratos GA. Medical students’ needs for feedback from residents during the clinical clerkship year. Teach. Learn. Med. 1995;7(3):172–176. doi: 10.1080/10401339509539736. [DOI] [Google Scholar]

- 16.Cohen SN, Farrant PBJ, Taibjee SM. Assessing the assessments: U.K. dermatology trainees’ views of the workplace assessment tools. Br J Dermatol. 2009;161(1):34–39. doi: 10.1111/j.1365-2133.2009.09097.x. [DOI] [PubMed] [Google Scholar]

- 17.Hewson MG, Little ML. Giving feedback in medical education. J Gen Intern Med. 1998;13(2):111–116. doi: 10.1046/j.1525-1497.1998.00027.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Weller JM, Jones A, Merry AF, Jolly B, Saunders D. Investigation of trainee and specialist reactions to the mini-Clinical evaluation exercise in anaesthesia: implications for implementation. Br J Anaesth. 2009;103(4):524–530. doi: 10.1093/bja/aep211. [DOI] [PubMed] [Google Scholar]

- 19.Veloski J, Boex JR, Grasberger MJ, Evans A, Wolfson DB. Systematic review of the literature on assessment, feedback and physicians’ clinical performance*: BEME Guide No. 7. Med. Teach. 2006;28(2):117–128. doi: 10.1080/01421590600622665. [DOI] [PubMed] [Google Scholar]

- 20.Ivers N, Jamtvedt G, Flottorp S, Young JM, Odgaard-Jensen J, French SD, O’Brien MA, Johansen M, Grimshaw J, Oxman AD. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012;6:CD000259. doi: 10.1002/14651858.CD000259.pub3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Kluger AN, DeNisi A. The effects of feedback interventions on performance: a historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychol Bull. 1996;119(2):254–284. doi: 10.1037/0033-2909.119.2.254. [DOI] [Google Scholar]

- 22.Kogan JR, Conforti LN, Bernabeo EC, Durning SJ, Hauer KE, Holmboe ES. Faculty staff perceptions of feedback to residents after direct observation of clinical skills. Med Educ. 2012;46(2):201–215. doi: 10.1111/j.1365-2923.2011.04137.x. [DOI] [PubMed] [Google Scholar]

- 23.Blatt B, Confessore S, Kallenberg G, Greenberg L. Verbal interaction analysis: viewing feedback through a different lens. Teach. Learn. Med. 2008;20(4):329–333. doi: 10.1080/10401330802384789. [DOI] [PubMed] [Google Scholar]

- 24.Molloy E. Time to pause: feedback in clinical education. In: Delany C, Molloy E, editors. Clinical education in the health professions. Sydney: Elsevier; 2009. pp. 128–146. [Google Scholar]

- 25.Salerno S, O’Malley P, Pangaro L, Wheeler G, Moores L, Jackson J. Faculty development seminars based on the one-minute preceptor improve feedback in the ambulatory setting. J Gen Intern Med. 2002;17(10):779–787. doi: 10.1046/j.1525-1497.2002.11233.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Holmboe ES, Yepes M, Williams F, Huot SJ. Feedback and the mini clinical evaluation exercise. J Gen Intern Med. 2004;19(5p2):558–561. doi: 10.1111/j.1525-1497.2004.30134.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Frye AW, Hollingsworth MA, Wymer A, Hinds MA. Dimensions of feedback in clinical teaching: a descriptive study. Acad Med. 1996;71(1):S79–81. doi: 10.1097/00001888-199601000-00049. [DOI] [PubMed] [Google Scholar]

- 28.Pelgrim EAM, Kramer AWM, Mokkink HGA, Van der Vleuten CPM. Quality of written narrative feedback and reflection in a modified mini-clinical evaluation exercise: an observational study. BMC Med. Educ. 2012;12:97. doi: 10.1186/1472-6920-12-97. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Hamburger EK, Cuzzi S, Coddington DA, Allevi AM, Lopreiato J, Moon R, Yu C, Lane JL. Observation of resident clinical skills: outcomes of a program of direct observation in the continuity clinic setting. Acad Pediatr. 2011;11(5):394–402. [DOI] [PubMed]

- 30.Huang WY, Dains JE, Monteiro FM, Rogers JC. Observations on the teaching and learning occurring in offices of community-based family and community medicine clerkship preceptors. Fam Med. 2004;36(2):131–136. [PubMed] [Google Scholar]

- 31.Ende J, Pomerantz A, Erickson F. Preceptors’ strategies for correcting residents in an ambulatory care medicine setting: a qualitative analysis. Acad Med. 1995;70(3):224–229. doi: 10.1097/00001888-199503000-00014. [DOI] [PubMed] [Google Scholar]

- 32.Sargeant J, Mann K, Sinclair D, Van der Vleuten C, Metsemakers J. Challenges in multisource feedback: intended and unintended outcomes. Med Educ. 2007;41(6):583–591. doi: 10.1111/j.1365-2923.2007.02769.x. [DOI] [PubMed] [Google Scholar]

- 33.Litzelman DK, Stratos GA, Marriott DJ, Lazaridis EN, Skeff KM. Beneficial and harmful effects of augmented feedback on physicians’ clinical-teaching performances. Acad Med. 1998;73(3):324–332. doi: 10.1097/00001888-199803000-00022. [DOI] [PubMed] [Google Scholar]

- 34.Bing-You RG, Paterson J, Levine MA. Feedback falling on deaf ears: residents’ receptivity to feedback tempered by sender credibility. Med. Teach. 1997;19(1):40–44. doi: 10.3109/01421599709019346. [DOI] [Google Scholar]

- 35.Lockyer J, Violato C, Fidler H. Likelihood of change: a study assessing surgeon use of multisource feedback data. Teach. Learn. Med. 2003;15(3):168–174. doi: 10.1207/S15328015TLM1503_04. [DOI] [PubMed] [Google Scholar]

- 36.Sargeant J, Mann K, Ferrier S. Exploring family physicians’ reactions to multisource feedback: perceptions of credibility and usefulness. Med Educ. 2005;39(5):497–504. doi: 10.1111/j.1365-2929.2005.02124.x. [DOI] [PubMed] [Google Scholar]

- 37.Sargeant J, Mann K, Sinclair D, Van der Vleuten C, Metsemakers J. Understanding the influence of emotions and reflection upon multi-source feedback acceptance and use. Adv in Health Sci Educ. 2008;13(3):275–288. doi: 10.1007/s10459-006-9039-x. [DOI] [PubMed] [Google Scholar]

- 38.Moss HA, Derman PB, Clement RC. Medical student perspective: working toward specific and actionable clinical clerkship feedback. Med. Teach. 2012;34(8):665–667. doi: 10.3109/0142159X.2012.687849. [DOI] [PubMed] [Google Scholar]

- 39.Molloy E, Boud D. Feedback models for learning, teaching and performance. In: Spector JM, Merrill MD, Elen J, Bishop MJ, editors. Handbook of research on educational communications and technology. 4th edn. New York: Springer Science + Business Media; 2014. pp. 413–424. [Google Scholar]

- 40.Pangaro L, ten Cate O. Frameworks for learner assessment in medicine: AMEE Guide No. 78. Med. Teach. 2013;35(6):e1197–e1210. doi: 10.3109/0142159X.2013.788789. [DOI] [PubMed] [Google Scholar]

- 41.Booth A. Unpacking your literature search toolbox: on search styles and tactics. Health Info. Libr. J. 2008;25(4):313–317. doi: 10.1111/j.1471-1842.2008.00825.x. [DOI] [PubMed] [Google Scholar]

- 42.Miles MB, Huberman AM, Saldaña J. Qualitative data analysis: a methods sourcebook. 3rd edn. Los Angeles: Sage; 2014. [Google Scholar]

- 43.Streiner DL, Norman GR. Health measurement scales: a practical guide to their development and use. 3rd edn. New York: Oxford University Press; 2003. [Google Scholar]

- 44.Bond S, Bond J. A Delphi study of clinical nursing research priorities. J Adv Nurs. 1982;7:565–575. doi: 10.1111/j.1365-2648.1982.tb00277.x. [DOI] [PubMed] [Google Scholar]

- 45.Duffield C. The Delphi technique: a comparison of results obtained using two expert panels. Int J Nurs Stud. 1993;30(3):227–237. doi: 10.1016/0020-7489(93)90033-Q. [DOI] [PubMed] [Google Scholar]

- 46.Powell C. The Delphi technique: myths and realities. J Adv Nurs. 2003;41(4):376–382. doi: 10.1046/j.1365-2648.2003.02537.x. [DOI] [PubMed] [Google Scholar]

- 47.Riddle DL, Stratford PW, Singh JA, Strand CV. Variation in outcome measures in hip and knee arthroplasty clinical trials: a proposed approach to achieving consensus. J. Rheumatol. 2009;36(9):2050–2056. doi: 10.3899/jrheum090356. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Van De Ridder JMM, Stokking KM, McGaghie WC, Ten Cate OTJ. What is feedback in clinical education? Med Educ. 2008;42(2):189–197. doi: 10.1111/j.1365-2923.2007.02973.x. [DOI] [PubMed] [Google Scholar]

- 49.Boud D, Molloy E, editors. Feedback in higher and professional education. London: Routledge; 2013.

- 50.Harvard Business Review Guide to Giving Effective Feedback. Boston MA: Harvard Business Review Press; 2012.

- 51.Swanwick T, editor. Understanding medical education: evidence, theory and practice. Oxford: Association for the Study of Medical Education; 2010.

- 52.Cantillon P, Wood D, editors. ABC of learning and teaching in medicine. 2nd edn. Oxford: Blackwell Publishing Ltd; 2010.

- 53.Essential guide to educational supervision in postgraduate medical education. Oxford: Blackwell Publishing; 2009.

- 54.Vickery AW, Lake FR. Teaching on the run tips 10: giving feedback. Med J Aust. 2005;183(5):267–268. doi: 10.5694/j.1326-5377.2005.tb07035.x. [DOI] [PubMed] [Google Scholar]

- 55.Wind LA, Van Dalen J, Muijtjens AM, Rethans JJ. Assessing simulated patients in an educational setting: the MaSP (Maastricht assessment of simulated patients). Med Educ. 2004;38(1):39–44. doi: 10.1111/j.1365-2923.2004.01686.x. [DOI] [PubMed] [Google Scholar]

- 56.Perera J, Perera J, Abdullah J, Lee N. Training simulated patients: evaluation of a training approach using self-assessment and peer/tutor feedback to improve performance. BMC Med. Educ. 2009;9:37. doi: 10.1186/1472-6920-9-37. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.May W, Fisher D, Souder D. Development of an instrument to measure the quality of standardized/simulated patient verbal feedback. Med Educ Dev. 2012;2:e3. doi: 10.4081/med.2012.e3. [DOI] [Google Scholar]

- 58.Schlegel C, Woermann U, Rethans JJ, van der Vleuten C. Validity evidence and reliability of a simulated patient feedback instrument. BMC Med. Educ. 2012;12:6. doi: 10.1186/1472-6920-12-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Bouter S, van Weel-Baumgarten E, Bolhuis S. Construction and validation of the Nijmegen Evaluation of the Simulated Patient (NESP): assessing simulated patients’ ability to role-play and provide feedback to students. Acad Med. 2013;88(2):253–259. doi: 10.1097/ACM.0b013e31827c0856. [DOI] [PubMed] [Google Scholar]

- 60.Gururaja RP, Yang T, Paige JT, Chauvin SW. Examining the effectiveness of debriefing at the point of care in simulation-based operating room team training. In: Henriksen K, Battles JB, Keyes MA, Grady ML, editors. Advances in patient safety: new directions and alternative approaches (vol 3: performance and tools). Rockville: Agency for Healthcare Research and Quality; 2008. [PubMed] [Google Scholar]

- 61.Brett-Fleegler M, Rudolph J, Eppich W, Monuteaux M, Fleegler E, Cheng A, Simon R. Debriefing assessment for simulation in healthcare: development and psychometric properties. Simul Healthc. 2012;7(5):288–94. [DOI] [PubMed]

- 62.Arora S, Ahmed M, Paige J, Nestel D, Runnacles J, Hull L, Darzi A, Sevdalis N. Objective structured assessment of debriefing: bringing science to the art of debriefing in surgery. Ann Surg. 2012;256(6):982–8. [DOI] [PubMed]

- 63.Reiter HI, Rosenfeld J, Nandagopal K, Eva KW. Do clinical clerks provide candidates with adequate formative assessment during objective structured clinical examinations? Adv in Health Sci Educ. 2004;9(3):189–199. doi: 10.1023/B:AHSE.0000038172.97337.d5. [DOI] [PubMed] [Google Scholar]

- 64.Moineau G, Power B, Pion A-MJ, Wood TJ, Humphrey-Murto S. Comparison of student examiner to faculty examiner scoring and feedback in an OSCE. Med Educ. 2011;45(2):183–191. doi: 10.1111/j.1365-2923.2010.03800.x. [DOI] [PubMed] [Google Scholar]

- 65.Stalmeijer RE, Dolmans DHJM, Wolfhagen IHAP, Peters WG, Coppenolle L, Scherpbier AJJA. Combined student ratings and self-assessment provide useful feedback for clinical teachers. Adv in Health Sci Educ. 2010;15(3):315–328. doi: 10.1007/s10459-009-9199-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Stalmeijer RE, Dolmans DHJM, Wolfhagen IHAP, Muijtjens AMM, Scherpbier AJJA. The development of an instrument for evaluating clinical teachers: involving stakeholders to determine content validity. Med. Teach. 2008;30(8):e272–e277. doi: 10.1080/01421590802258904. [DOI] [PubMed] [Google Scholar]

- 67.Fluit C, Bolhuis S, Grol R, Ham M, Feskens R, Laan R, Wensing M. Evaluation and feedback for effective clinical teaching in postgraduate medical education: Validation of an assessment instrument incorporating the CanMEDS roles. Med Teach. 2012;34(11):893–901. [DOI] [PubMed]

- 68.Locke EA, Latham GP. Building a practically useful theory of goal setting and task motivation: a 35-year odyssey. Am Psychol. 2002;57(9):705–717. doi: 10.1037/0003-066X.57.9.705. [DOI] [PubMed] [Google Scholar]

- 69.Kaufman DM. Applying educational theory in practice. In: Cantillon P, Wood D, editors. ABC of learning and teaching in medicine. 2nd edn. Oxford: Blackwell Publishing Ltd; 2010. pp. 1–5. [Google Scholar]

- 70.Wadsworth BJ. Piaget’s theory of cognitive and affective development: foundations of constructivism. 5th edn. White Plains: Longman Publishing; 1996. [Google Scholar]

- 71.Sweller J. Cognitive load during problem solving: effects on learning. Cogn Sci. 1988;12(2):257–285. doi: 10.1207/s15516709cog1202_4. [DOI] [Google Scholar]

- 72.Boud D, Molloy E. Rethinking models of feedback for learning: the challenge of design. Assessment Eval Higher Educ. 2012;38(6):698–712. doi: 10.1080/02602938.2012.691462. [DOI] [Google Scholar]

- 73.Butler DL, Winne PH. Feedback and self-regulated learning: a theoretical synthesis. Rev Educ Res. 1995;65(3):245. doi: 10.3102/00346543065003245. [DOI] [Google Scholar]

- 74.Tai JH-M, Canny BJ, Haines TP, Molloy EK. The role of peer-assisted learning in building evaluative judgement: opportunities in clinical medical education. Adv in Health Sci Educ Theory Pract. 2015;12. [Epub ahead of print]. PMID: 26662035. [DOI] [PubMed]

- 75.Deci EL, Ryan RM. The ‘what’ and ‘why’ of goal pursuits: human needs and the self-determination of behavior. Psychol Inq. 2000;11(4):227. doi: 10.1207/S15327965PLI1104_01. [DOI] [Google Scholar]

- 76.Rudolph JW, Simon R, Rivard P, Dufresne RL, Raemer DB. Debriefing with good judgment: combining rigorous feedback with genuine inquiry. Anesthesiol Clin. 2007;25(2):361–376. doi: 10.1016/j.anclin.2007.03.007. [DOI] [PubMed] [Google Scholar]

- 77.Silverman J, Kurtz S. The Calgary-Cambridge approach to communication skills teaching II: the SET-GO method of descriptive feedback. Educ Gen Pract. 1997;8:16–23. [Google Scholar]

- 78.Brett JF, Atwater LE. 360° feedback: accuracy, reactions, and perceptions of usefulness. J Appl Psychol. 2001;86(5):930–942. doi: 10.1037/0021-9010.86.5.930. [DOI] [PubMed] [Google Scholar]

- 79.Kernis MH, Johnson EK. Current and typical self-appraisals: differential responsiveness to evaluative feedback and implications for emotions. J Res Pers. 1990;24(2):241–257. doi: 10.1016/0092-6566(90)90019-3. [DOI] [Google Scholar]

- 80.Ten Cate OTJ. Why receiving feedback collides with self determination. Adv in Health Sci Educ. 2013;18(4):845–849. doi: 10.1007/s10459-012-9401-0. [DOI] [PubMed] [Google Scholar]

- 81.Ten Cate OTJ, Kusurkar RA, Williams GC. How self-determination theory can assist our understanding of the teaching and learning processes in medical education. AMEE Guide No. 59. Med. Teach. 2011;33(12):961–973. doi: 10.3109/0142159X.2011.595435. [DOI] [PubMed] [Google Scholar]

- 82.Jolly B, Boud D. Written feedback. In: Boud D, Molloy E, editors. Feedback in higher and professional education. London: Routledge; 2013. pp. 104–124. [Google Scholar]

- 83.Dweck CS. Motivational processes affecting learning. Am Psychol. 1986;41(10):1040–1048. doi: 10.1037/0003-066X.41.10.1040. [DOI] [Google Scholar]

- 84.Sargeant J, McNaughton E, Mercer S, Murphy D, Sullivan P, Bruce DA. Providing feedback: exploring a model (emotion, content, outcomes) for facilitating multisource feedback. Med. Teach. 2011;33(9):744–749. doi: 10.3109/0142159X.2011.577287. [DOI] [PubMed] [Google Scholar]

- 85.Rudolph JW, Simon R, Raemer DB, Eppich WJ. Debriefing as formative assessment: closing performance gaps in medical education. Acad Emerg Med. 2008;15(11):1010–1016. doi: 10.1111/j.1553-2712.2008.00248.x. [DOI] [PubMed] [Google Scholar]

- 86.Holmboe ES, Sherbino J, Long DM, Swing SR, Frank JR. The role of assessment in competency-based medical education. Med. Teach. 2010;32(8):676–682. doi: 10.3109/0142159X.2010.500704. [DOI] [PubMed] [Google Scholar]