Abstract

Penalized regression methods, such as L1 regularization, are routinely used in high-dimensional applications, and there is a rich literature on optimality properties under sparsity assumptions. In the Bayesian paradigm, sparsity is routinely induced through two-component mixture priors having a probability mass at zero, but such priors encounter daunting computational problems in high dimensions. This has motivated continuous shrinkage priors, which can be expressed as global-local scale mixtures of Gaussians, facilitating computation. In contrast to the frequentist literature, little is known about the properties of such priors and the convergence and concentration of the corresponding posterior distribution. In this article, we propose a new class of Dirichlet–Laplace priors, which possess optimal posterior concentration and lead to efficient posterior computation. Finite sample performance of Dirichlet–Laplace priors relative to alternatives is assessed in simulated and real data examples.

Keywords: Bayesian, Convergence rate, High dimensional, Lasso, L1, Penalized regression, Regularization, Shrinkage prior

1. INTRODUCTION

The overwhelming emphasis in the literature on high-dimensional data analysis has been on rapidly producing point estimates with good empirical and theoretical properties. However, in many applications, it is crucial to obtain a realistic characterization of uncertainty in estimates of parameters, functions of parameters, and predictions. Usual frequentist approaches to characterize uncertainty, such as constructing asymptotic confidence regions or using the bootstrap, can break down in high-dimensional settings. For example, in regression when the number of subjects n is much smaller than the number of predictors p, one cannot naively appeal to asymptotic normality, and resampling from the data may not provide an adequate characterization of uncertainty.

Most penalized estimators correspond to the mode of a Bayesian posterior distribution. For example, Lasso/L1 regularization [28] is equivalent to maximum a posteriori (MAP) estimation under a Gaussian linear regression model having a double exponential (Laplace) prior on the coefficients. Given this connection, it is natural to ask whether we can use the entire posterior distribution to provide a probabilistic measure of uncertainty. In addition to providing a characterization of uncertainty, a Bayesian perspective has distinct advantages in terms of tuning parameter choice, allowing key penalty parameters to be marginalized over the posterior distribution instead of relying on cross-validation.

From a frequentist perspective, we would like to be able to choose a default shrinkage prior that leads to similar optimality properties to those shown for L1 penalization and other approaches. However, instead of showing that a penalized estimator obtains the minimax rate under sparsity assumptions, we would like to show that the entire posterior distribution concentrates at the optimal rate, i.e., the posterior probability assigned to a shrinking neighborhood of the true parameter value converges to one, with the neighborhood size proportional to the frequentist minimax rate.

An amazing variety of shrinkage priors have been proposed in the Bayesian literature, but with essentially no theoretical justification in the high-dimensional settings for which they were designed. [14] and [6] provided conditions on the prior for asymptotic normality of linear regression coefficients allowing the number of predictors p to increase with sample size n, with [14] requiring a very slow rate of growth and [6] assuming p ≤ n. These results required the prior to be sufficiently flat in a neighborhood of the true parameter value, essentially ruling out shrinkage priors. [3] considered shrinkage priors in providing simple sufficient conditions for posterior consistency in linear regression where the number of variables grows slower than the sample size, though no rate of contraction was provided.

In studying posterior contraction in high-dimensions, several properties of the prior distribution are critical, including the prior concentration around sparse vectors and the implied dimensionality of the prior. Studying these properties of shrinkage priors is challenging due to the lack of exact zeros, with the prior draws being sparse in only an approximate sense. This technical hurdle has prevented any previous results on posterior concentration in high-dimensional settings for shrinkage priors. Investigating these properties is critical not just in studying frequentist optimality properties of Bayesian procedures but for Bayesians in obtaining improved insight into prior elicitation. Without such a technical handle, prior selection remains an art. Our overarching goal is to obtain theory allowing design of novel priors, which are appealing from a Bayesian perspective while having frequentist optimality properties.

2. A NEW CLASS OF SHRINKAGE PRIORS

2.1 Bayesian sparsity priors in normal means problem

For concreteness, we focus on the normal means problem ([9, 11, 20]), although the methods developed in this paper generalize trivially to high-dimensional linear and generalized linear models. In the normal means setting, one aims to estimate an n-dimensional mean based on a single observation corrupted with i.i.d. standard normal noise:

$$ y_i = \theta_i + \epsilon_i, \quad \epsilon_i \sim \mathrm{N}(0, 1), \quad 1 \le i \le n. \tag{1} $$

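Let l0[q; n] denote the subset of ℝn given by

$$ l_0[q; n] = \{\theta \in \mathbb{R}^n : \#(1 \le j \le n : \theta_j \neq 0) \le q\}. $$

For a vector x ∈ ℝr, let ‖x‖2 denote its Euclidean norm. If the true mean θ0 is qn-sparse, i.e., θ0 ∈ l0[qn; n], with qn = o(n), the squared minimax rate in estimating θ0 in l2 norm is 2qn log(n/qn)(1 + o(1)) [11], i.e.,

$$ \inf_{\hat{\theta}} \sup_{\theta_0 \in l_0[q_n; n]} E_{\theta_0} \|\hat{\theta} - \theta_0\|_2^2 \asymp q_n \log(n/q_n). \tag{2} $$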

In the above display, Eθ0 denotes an expectation with respect to a Nn(θ0, In) density. In the presence of sparsity, one loses a logarithmic factor in the ambient dimension for not knowing the locations of the zeroes. Moreover, (2) implies that one only needs a number of replicates of the order of the sparsity to consistently estimate the mean. Appropriate thresholding/penalized estimators achieve the minimax rate (2); see [9] for detailed references.
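As a rough numerical illustration of the rate in (2), the following sketch simulates a single draw from the normal means model with a sparse mean and compares the squared error of the raw observation with that of a hard-thresholding estimator. The universal threshold √(2 log n), the function names and the values of n, qn and the signal size are illustrative assumptions, not choices made in this article.

```python
import math
import random

def minimax_rate(n, q):
    """Leading term 2 q log(n/q) of the squared minimax risk over q-sparse means."""
    return 2.0 * q * math.log(n / q)

def hard_threshold(y, t):
    """Keep entries whose magnitude exceeds t and set the rest to zero."""
    return [v if abs(v) > t else 0.0 for v in y]

def squared_error(est, truth):
    """Squared Euclidean distance between an estimate and the truth."""
    return sum((e - t) ** 2 for e, t in zip(est, truth))

rng = random.Random(0)
n, q, signal = 1000, 10, 7.0              # illustrative values only
theta0 = [signal] * q + [0.0] * (n - q)   # q-sparse true mean
y = [t + rng.gauss(0.0, 1.0) for t in theta0]

# Universal threshold sqrt(2 log n): an assumed, standard thresholding rule.
est = hard_threshold(y, math.sqrt(2.0 * math.log(n)))
err_raw = squared_error(y, theta0)        # of order n for the unshrunk data
err_thr = squared_error(est, theta0)
print(minimax_rate(n, q), err_raw, err_thr)
```

The unshrunk observation pays an error of order n, whereas the thresholded estimate pays an error on the scale of qn log(n/qn), which is the point of the comparison.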

For a subset S ⊂ {1, …, n}, let |S| denote the cardinality of S and define θS = (θj : j ∈ S) for a vector θ ∈ ℝn. Denote supp(θ) to be the support of θ, the subset of {1, …, n} corresponding to the non-zero entries of θ. For a vector θ ∈ ℝn, a natural way to incorporate sparsity is to use point mass mixture priors:

$$ \theta_j \sim (1 - \pi)\,\delta_0 + \pi\, g_\theta, \quad j = 1, \ldots, n, \tag{3} $$

where π = Pr(θj ≠ 0), 𝔼{|supp(θ)| | π} = nπ is the prior guess on model size (sparsity level), and gθ is an absolutely continuous density on ℝ. These priors are highly appealing in allowing separate control of the level of sparsity and the size of the signal coefficients. If the sparsity parameter π is estimated via empirical Bayes, the posterior median of θ is a minimax-optimal estimator [20] which can adapt to arbitrary sparsity levels as long as qn = o(n).

In a fully Bayesian framework, it is common to place a beta prior on π, leading to a beta-Bernoulli prior on the model size, which conveys an automatic multiplicity adjustment [27]. In a recent paper, [9] established that prior (3) with an appropriate beta prior on π and suitable tail conditions on gθ leads to a minimax optimal rate of posterior contraction, i.e., the posterior concentrates most of its mass on a ball around θ0 of squared radius of the order of qn log(n/qn):

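$$ \lim_{n \to \infty} E_{\theta_0} \mathbb{P}\big(\|\theta - \theta_0\|_2 < M s_n \mid y\big) = 1, \tag{4} $$

where M > 0 is a constant and $s_n^2 = q_n \log(n/q_n)$. [24] obtained consistency in model selection using point-mass mixture priors with appropriate data-driven hyperparameters.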

2.2 Global-local shrinkage rules

Although point mass mixture priors are intuitively appealing and possess attractive theoretical properties, posterior sampling requires a stochastic search over an enormous space, leading to slow mixing and convergence [26]. Computational issues, together with the consideration that many of the θj's may be small but not exactly zero, have motivated a rich literature on continuous shrinkage priors; for some flavor, refer to [3, 8, 16, 18, 25]. [26] noted that essentially all such shrinkage priors can be represented as global-local (GL) mixtures of Gaussians,

$$ \theta_j \sim \mathrm{N}(0, \psi_j \tau), \quad \psi_j \sim f, \quad \tau \sim g, \tag{5} $$

where τ controls global shrinkage towards the origin while the local scales {ψj} allow deviations in the degree of shrinkage. If g puts sufficient mass near zero and f is appropriately chosen, GL priors in (5) can intuitively approximate (3) but through a continuous density concentrated near zero with heavy tails.

GL priors potentially have substantial computational advantages over point mass priors, since the normal scale mixture representation allows for conjugate updating of θ and ψ in a block. Moreover, a number of frequentist regularization procedures such as ridge, lasso, bridge and elastic net correspond to posterior modes under GL priors with appropriate choices of f and g. For example, one obtains a double-exponential prior corresponding to the popular L1 or lasso penalty if f is an exponential distribution. However, many aspects of shrinkage priors are poorly understood, with the lack of exact zeroes compounding the difficulty in studying basic properties, such as prior expectation, tail bounds for the number of large signals, and prior concentration around sparse vectors. Hence, subjective Bayesians face difficulties in incorporating prior information regarding sparsity, and frequentists tend to be skeptical due to the lack of theoretical justification.

This skepticism is warranted, as it is clearly the case that reasonable-seeming priors can have poor performance in high-dimensional settings. For example, choosing π = 1/2 in prior (3) leads to an exponentially small prior probability of 2^{−n} assigned to the null model, so that it becomes literally impossible to override that prior informativeness with the information in the data to pick the null model. However, with a beta prior on π, this problem can be avoided [27]. In the same vein, if one places i.i.d. N(0, 1) priors on the entries of θ, then the induced prior on ‖θ‖ is highly concentrated around √n, leading to misleading inferences on θ almost everywhere. Although these are simple examples, similar multiplicity problems [27] can transpire more subtly in cases where complicated models/priors are involved, and hence it is fundamentally important to understand properties of the prior and the posterior in the setting of (1).
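The two examples above are easy to check numerically. The sketch below draws θ with i.i.d. N(0, 1) entries and reports ‖θ‖2/√n, which should be close to one, and evaluates the log prior probability n log(1/2) of the null model under π = 1/2. The dimension and the number of draws are arbitrary illustrative choices.

```python
import math
import random

def prior_norm_ratio(n, rng):
    """Draw theta with i.i.d. N(0, 1) entries and return ||theta||_2 / sqrt(n)."""
    sq = sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(n))
    return math.sqrt(sq / n)

rng = random.Random(0)
n = 10_000                                  # illustrative dimension
ratios = [prior_norm_ratio(n, rng) for _ in range(20)]
print(min(ratios), max(ratios))             # both close to 1

# Log prior probability of the null model when pi = 1/2 in (3): n * log(1/2).
log_null_prob = n * math.log(0.5)
print(log_null_prob)
```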

There has been a recent awareness of these issues, motivating a basic assessment of the marginal properties of shrinkage priors for a single θj. Recent priors such as the horseshoe [8] and generalized double Pareto [3] are carefully formulated to obtain marginals having a high concentration around zero with heavy tails. This is well justified, but as we will see below, such marginal behavior alone is not sufficient; it is necessary to study the joint distribution of θ on ℝn. With such motivation, we propose a class of Dirichlet-kernel priors in the next subsection.

2.3 Dirichlet-kernel priors

Let ϕ0 denote the standard normal density on ℝ. Also, let DE(τ) denote a zero-mean double-exponential or Laplace distribution with density f(y) = (2τ)^{−1} e^{−|y|/τ} for y ∈ ℝ. Integrating out the local scales ψj, (5) can be equivalently represented as a global scale mixture of a kernel 𝒦(·),

$$ \theta_j \overset{\text{i.i.d.}}{\sim} \mathcal{K}(\cdot\,, \tau), \quad \tau \sim g, \tag{6} $$

where $\mathcal{K}(x) = \int \psi^{-1/2} \phi_0(x/\sqrt{\psi})\, f(\psi)\, d\psi$ is a symmetric unimodal density on ℝ and $\mathcal{K}(x, \tau) := \tau^{-1/2} \mathcal{K}(x/\sqrt{\tau})$. For example, ψj ~ Exp(1/2) corresponds to a double-exponential kernel 𝒦 ≡ DE(1), while ψj ~ IG(1/2, 1/2) results in a standard Cauchy kernel 𝒦 ≡ Ca(0, 1).

These choices lead to a kernel which is bounded in a neighborhood of zero. However, if one instead uses a half-Cauchy prior $\psi_j^{1/2} \sim \mathrm{Ca}_+(0, 1)$, then the resulting horseshoe kernel [7, 8] is unbounded with a singularity at zero. This phenomenon, coupled with tail robustness properties, leads to excellent empirical performance of the horseshoe. However, the joint distribution of θ under a horseshoe prior is understudied, and further theoretical investigation is required to understand its operating characteristics. One can imagine that it concentrates more along sparse regions of the parameter space compared to common shrinkage priors, since the singularity at zero potentially allows most of the entries to be concentrated around zero, with the heavy tails ensuring concentration around the relatively small number of signals.

The above class of priors relies on obtaining a suitable kernel 𝒦 through appropriate normal scale mixtures. In this article, we offer a fundamentally different class of shrinkage priors that alleviate the requirements on the kernel, while having attractive theoretical properties. In particular, our proposed class of Dirichlet-kernel (Dk) priors replaces the single global scale τ in (6) by a vector of scales (ϕ1τ, …, ϕnτ), where ϕ = (ϕ1, …, ϕn) is constrained to lie in the (n − 1)-dimensional simplex and is assigned a Dir(a, …, a) prior:

$$ \theta_j \mid \phi_j, \tau \sim \mathcal{K}(\cdot\,, \phi_j \tau), \quad \phi \sim \mathrm{Dir}(a, \ldots, a). \tag{7} $$

In (7), 𝒦 is any symmetric (about zero) unimodal density with exponential or heavier tails; for computational purposes, we restrict attention to the class of kernels that can be represented as scale mixture of normals [32]. While previous shrinkage priors obtain marginal behavior similar to the point mass mixture priors (3), our construction aims at resembling the joint distribution of θ under a two-component mixture prior.

We focus on the Laplace kernel from now on for concreteness, noting that all the results stated below can be generalized to other choices. The corresponding hierarchical prior given τ,

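$$ \theta_j \mid \phi, \tau \sim \mathrm{DE}(\phi_j \tau), \quad \phi \sim \mathrm{Dir}(a, \ldots, a), \tag{8} $$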

is referred to as a Dirichlet–Laplace prior, denoted θ | τ ~ DLa(τ).

To understand the role of ϕ, we undertake a study of the marginal properties of θj conditional on τ, integrating out ϕj. The results are summarized in Proposition 2.1 below.

Proposition 2.1. If θ | τ ~ DLa(τ), then the marginal distribution of θj given τ is unbounded with a singularity at zero for any a < 1. Further, in the special case a = 1/n, the marginal distribution is a wrapped Gamma distribution WG(τ^{−1}, 1/n), where WG(λ, α) has a density f(x; λ, α) ∝ |x|^{α−1} e^{−λ|x|} on ℝ.

Thus, marginalizing over ϕ, we obtain an unbounded kernel 𝒦, so that the marginal density of θj | τ has a singularity at 0 while retaining exponential tails. A proof of Proposition 2.1 can be found in the appendix.

The parameter τ plays a critical role in determining the tails of the marginal distribution of θj’s. We consider a fully Bayesian framework where τ is assigned a prior g on the positive real line and learnt from the data through the posterior. Specifically, we assume a gamma(λ, 1/2) prior on τ with λ = na. We continue to refer to the induced prior on θ implied by the hierarchical structure,

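$$ \theta_j \mid \phi, \tau \sim \mathrm{DE}(\phi_j \tau), \quad \phi \sim \mathrm{Dir}(a, \ldots, a), \quad \tau \sim \mathrm{gamma}(na, 1/2), \tag{9} $$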

as a Dirichlet–Laplace prior, denoted θ ~ DLa.
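To make the hierarchy concrete, a draw from the Dirichlet–Laplace prior can be simulated in three steps: a Dirichlet vector ϕ, a gamma global scale τ, and Laplace coordinates with scales ϕjτ. The sketch below is a minimal illustration using only the Python standard library; the function name is hypothetical, and the second argument of gamma(λ, 1/2) is read as a rate (so the scale is 2), which is an assumption about the parametrization.

```python
import random

def sample_dl_prior(n, a, rng):
    """One draw of (theta, phi, tau) from the Dirichlet-Laplace hierarchy."""
    # Dirichlet(a, ..., a) via normalised independent gamma(a, 1) variables.
    # For very small a the gamma draws can underflow to zero; a = 1/2 is safe.
    g = [rng.gammavariate(a, 1.0) for _ in range(n)]
    total = sum(g)
    phi = [v / total for v in g]
    # tau ~ gamma(shape = n * a, rate = 1/2); gammavariate takes a scale, hence 2.0.
    tau = rng.gammavariate(n * a, 2.0)
    # theta_j ~ DE(phi_j * tau): a random sign times an exponential magnitude.
    theta = [rng.choice((-1.0, 1.0)) * phi_j * tau * rng.expovariate(1.0)
             for phi_j in phi]
    return theta, phi, tau

theta, phi, tau = sample_dl_prior(50, 0.5, random.Random(0))
print(tau, sorted(abs(t) for t in theta)[-5:])   # global scale and the five largest |theta_j|
```

Repeating the draw for smaller values of a shows more of the mass of ϕ piling up on a few coordinates, which is the mechanism by which the prior favors approximately sparse vectors.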

There is a frequentist literature on including a local penalty specific to each coefficient. The adaptive Lasso [31, 34] relies on empirically estimated weights that are plugged in. [23] instead sample the penalty parameters from a posterior, with a sparse point estimate obtained for each draw. These approaches do not produce a full posterior distribution but focus on sparse point estimates.

2.4 Posterior computation

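The proposed class of DL priors leads to straightforward posterior computation via an efficient data-augmented Gibbs sampler. The DLa prior (9) can be equivalently represented as

$$ \theta_j \sim \mathrm{N}(0, \psi_j \phi_j^2 \tau^2), \quad \psi_j \sim \mathrm{Exp}(1/2), \quad \phi \sim \mathrm{Dir}(a, \ldots, a), \quad \tau \sim \mathrm{gamma}(na, 1/2). $$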

We detail the steps in the normal means setting, noting that the algorithm is trivially modified to accommodate normal linear regression, robust regression with heavy-tailed residuals, probit models, logistic regression, factor models and other hierarchical Gaussian cases. To reduce auto-correlation, we rely on marginalization and blocking as much as possible. Our sampler cycles through (i) θ | ψ, ϕ, τ, y, (ii) ψ | ϕ, τ, θ, (iii) τ | ϕ, θ and (iv) ϕ | θ. We use the fact that the joint posterior of (ψ, ϕ, τ) is conditionally independent of y given θ. Steps (ii) – (iv) together give us a draw from the conditional distribution of (ψ, ϕ, τ) | θ, since

$$ [\psi, \phi, \tau \mid \theta] = [\psi \mid \phi, \tau, \theta]\,[\tau \mid \phi, \theta]\,[\phi \mid \theta]. $$

Steps (i) – (iii) are standard and hence not derived. Step (iv) is non-trivial and we develop an efficient sampling algorithm for jointly sampling ϕ. Usual one-at-a-time updates of a Dirichlet vector lead to tremendously slow mixing and convergence, and hence the joint update in Theorem 2.2 is an important feature of our proposed prior; a proof can be found in the Appendix. Consider the following parametrization for the three-parameter generalized inverse Gaussian (giG) distribution: Y ~ giG(λ, ρ, χ) if f(y) ∝ y^{λ−1} e^{−0.5(ρy + χ/y)} for y > 0.

Theorem 2.2. The joint posterior of ϕ | θ has the same distribution as (T1/T, …, Tn/T), where Tj are independently distributed according to a giG(a − 1, 1, 2|θj|) distribution, and $T = \sum_{j=1}^n T_j$.

Summaries of each step are provided below.

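- To sample θ | ψ, ϕ, τ, y, draw θj independently from a $\mathrm{N}(\mu_j, \sigma_j^2)$ distribution with $\sigma_j^2 = \{1 + 1/(\psi_j \phi_j^2 \tau^2)\}^{-1}$ and $\mu_j = \sigma_j^2 y_j$.

- The conditional posterior of ψ | ϕ, τ, θ can be sampled efficiently in a block by independently sampling $\tilde{\psi}_j \mid \phi, \tau, \theta$ from an inverse-Gaussian distribution iG(μj, λ) with μj = ϕjτ/|θj|, λ = 1, and setting $\psi_j = 1/\tilde{\psi}_j$.

- Sample the conditional posterior of τ | ϕ, θ from a $\mathrm{giG}(\lambda - n, 1, 2 \sum_{j=1}^n |\theta_j|/\phi_j)$ distribution, where λ = na.

- To sample ϕ | θ, draw T1, …, Tn independently with Tj ~ giG(a − 1, 1, 2|θj|) and set ϕj = Tj/T with $T = \sum_{j=1}^n T_j$.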
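The structure of these updates can be sketched in a few lines for the special case a = 1/2. The code below is a simplified variant rather than the sampler described above: it works directly with the product scales sj = ϕjτ, which are independent gamma(a, 1/2) variables, so that sj | θj ~ giG(a − 1, 1, 2|θj|) reduces to an inverse-Gaussian law when a = 1/2 and no general giG sampler is needed. The function names, the small floor `eps` on |θj| used for numerical stability, and the run lengths are all assumptions made for illustration.

```python
import math
import random

def rinvgauss(mu, lam, rng):
    """Inverse-Gaussian(mean=mu, shape=lam) draw (Michael-Schucany-Haas method)."""
    y = rng.gauss(0.0, 1.0) ** 2
    w = mu * y / (2.0 * lam)
    # Numerically stable form of the smaller root of the quadratic.
    x = mu / (1.0 + w + math.sqrt(w * (w + 2.0)))
    return x if rng.random() <= mu / (mu + x) else mu * mu / x

def dl_gibbs_half(y, n_iter=500, burn=100, seed=0, eps=1e-8):
    """Posterior means of theta in the normal means model under DL with a = 1/2."""
    rng = random.Random(seed)
    n = len(y)
    theta = list(y)                       # initialise at the data
    post_sum = [0.0] * n
    for it in range(n_iter):
        for j in range(n):
            abs_t = max(abs(theta[j]), eps)          # floor avoids degenerate scales
            # s_j | theta_j ~ giG(-1/2, 1, 2|theta_j|) = iG(sqrt(2|theta_j|), 2|theta_j|)
            s = rinvgauss(math.sqrt(2.0 * abs_t), 2.0 * abs_t, rng)
            # 1/psi_j | s_j, theta_j ~ iG(s_j/|theta_j|, 1)
            psi = 1.0 / rinvgauss(s / abs_t, 1.0, rng)
            # theta_j | psi_j, s_j, y_j is normal with prior variance psi_j * s_j^2
            v = psi * s * s
            shrink = v / (1.0 + v)
            theta[j] = shrink * y[j] + math.sqrt(shrink) * rng.gauss(0.0, 1.0)
        if it >= burn:
            for j in range(n):
                post_sum[j] += theta[j]
    return [t / (n_iter - burn) for t in post_sum]

demo_rng = random.Random(1)
y_demo = [7.0, 6.0, 8.0] + [demo_rng.gauss(0.0, 1.0) for _ in range(27)]
post_mean = dl_gibbs_half(y_demo, n_iter=600, burn=100, seed=2)
print(post_mean[:3], max(abs(v) for v in post_mean[3:]))
```

Because the product scales are independent across coordinates, this variant updates each coordinate separately; for general a, sampling τ and ϕ as in steps (iii) and (iv) requires a generalized inverse Gaussian sampler.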

3. CONCENTRATION PROPERTIES OF DIRICHLET–LAPLACE PRIORS

In this section, we study a number of properties of the joint density of the Dirichlet–Laplace prior DLa on ℝn and investigate the implied rate of posterior contraction (4) in the normal means setting (1). Recall the hierarchical specification of DLa from (9). Letting ψj = ϕjτ for j = 1, …, n, a standard result (see, for example, Lemma IV.3 of [33]) implies that ψj ~ gamma(a, 1/2) independently for j = 1, …, n. Therefore, (9) can be alternatively represented as

$$ \theta_j \mid \psi_j \sim \mathrm{DE}(\psi_j), \quad \psi_j \sim \mathrm{gamma}(a, 1/2), \quad j = 1, \ldots, n. \tag{10} $$

The formulation (10) is analytically convenient since the joint distribution factors as a product of marginals and the marginal density can be obtained analytically in Proposition 3.1 below. The proof follows from standard properties of the modified Bessel function [15]; a proof is sketched in the Appendix.

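Proposition 3.1. The marginal density Π of θj for any 1 ≤ j ≤ n is given by

$$ \Pi(\theta_j) = \frac{1}{2^{(1+a)/2}\, \Gamma(a)}\, |\theta_j|^{(a-1)/2}\, K_{1-a}\big(\sqrt{2 |\theta_j|}\big), \tag{11} $$

where

$$ K_\nu(x) = \frac{\Gamma(\nu + 1/2)\,(2x)^\nu}{\sqrt{\pi}} \int_0^\infty \frac{\cos t}{(t^2 + x^2)^{\nu + 1/2}}\, dt $$

is the modified Bessel function of the second kind.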
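For a = 1/2 the marginal density has an elementary form, which gives a quick sanity check on the general expression. The display below is a worked special case, obtained by integrating the DE(ψj) density against the gamma(1/2, 1/2) density of ψj (with 1/2 read as a rate, an assumption about the parametrization) and using the standard identities $\int_0^\infty x^{\nu-1} e^{-\beta/x - \gamma x}\,dx = 2(\beta/\gamma)^{\nu/2} K_\nu(2\sqrt{\beta\gamma})$ and $K_{1/2}(x) = \sqrt{\pi/(2x)}\, e^{-x}$.

```latex
\Pi(\theta_j)
  = \int_0^\infty \frac{1}{2\psi}\, e^{-|\theta_j|/\psi}\;
    \frac{\psi^{-1/2} e^{-\psi/2}}{\sqrt{2\pi}}\, d\psi
  = \frac{1}{2\sqrt{2\pi}} \cdot 2\,(2|\theta_j|)^{-1/4}\,
    K_{1/2}\!\big(\sqrt{2|\theta_j|}\big)
  = \frac{1}{2\sqrt{2}}\, |\theta_j|^{-1/2}\, e^{-\sqrt{2|\theta_j|}} .
```

The factor $|\theta_j|^{-1/2}$ exhibits the singularity at zero, and the substitution $u = \sqrt{2|\theta_j|}$ confirms that the expression integrates to one over ℝ.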

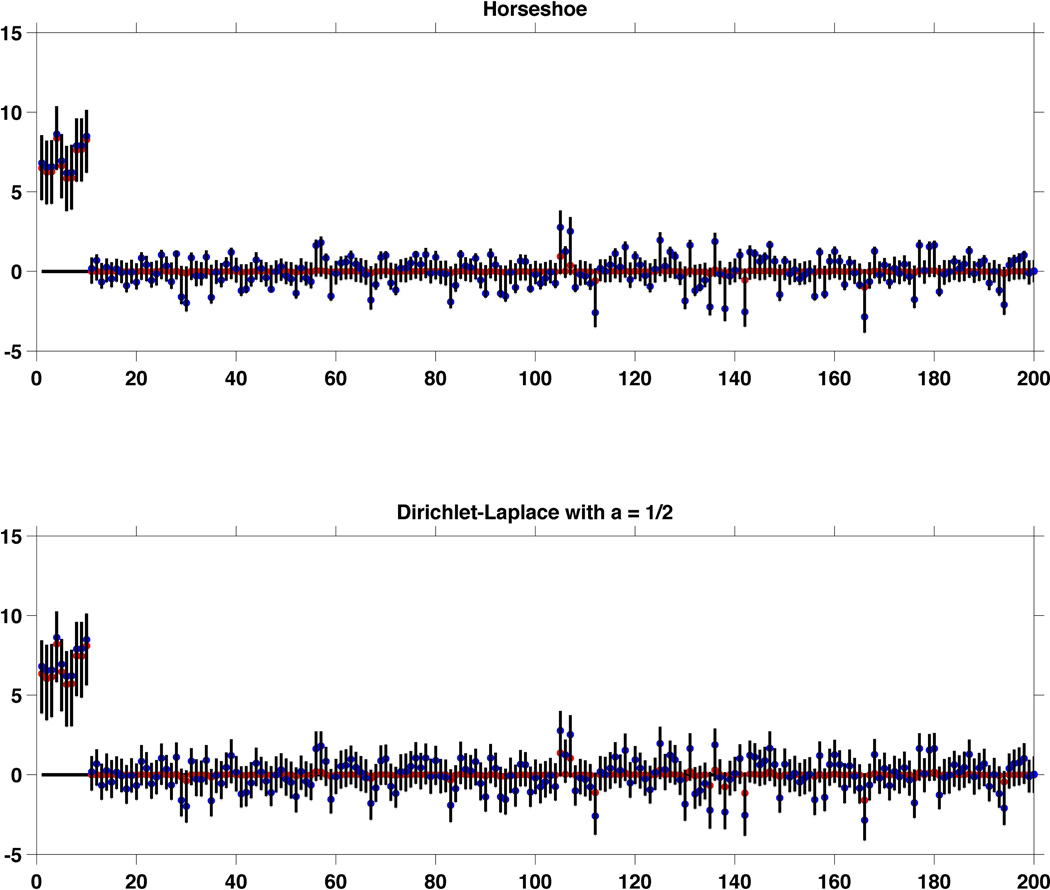

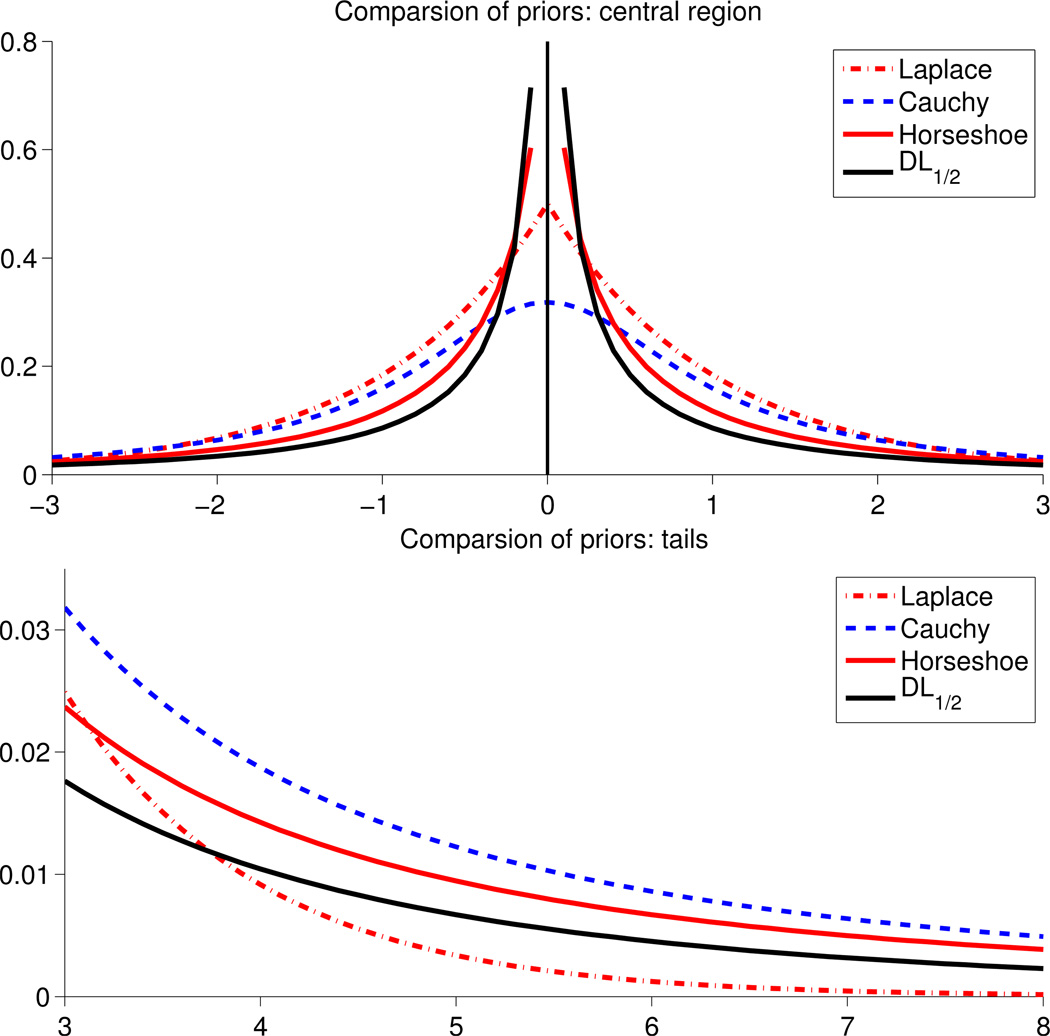

Figure 1 plots the marginal density (11) to compare with common shrinkage priors.

Figure 3.

Simulation results from a single replicate with n = 200, qn = 10, A = 7. Blue circles = entries of y, red circles = posterior median of θ, shaded region: 95% point wise credible interval for θ. Left panel: Horseshoe, right panel: DL1/2 prior

We continue to denote the joint density of θ on ℝn by Π, so that $\Pi(\theta) = \prod_{j=1}^n \Pi(\theta_j)$. For a subset S ⊂ {1, …, n}, let ΠS denote the marginal distribution of θS = {θj : j ∈ S} ∈ ℝ|S|. For a Borel set A ⊂ ℝn, let ℙ(A) = ∫A Π(θ)dθ denote the prior probability of A, and ℙ(A | y(n)) the posterior probability given data y(n) = (y1, …, yn) and the model (1). Finally, let Eθ0/Pθ0 respectively indicate an expectation/probability w.r.t. the Nn(θ0, In) density. We now establish that under mild restrictions on ‖θ0‖, the posterior arising from the DLa prior (9) contracts at the minimax rate of convergence for an appropriate choice of the Dirichlet concentration parameter a.

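Theorem 3.1. Consider model (1) with θ ~ DLan as in (9), where an = n^{−(1+β)} for some β > 0 small. Assume θ0 ∈ l0[qn; n] with qn = o(n) and $\|\theta_0\|_2^2 \le q_n \log^4 n$. Then, with $s_n^2 = q_n \log(n/q_n)$ and for some constant M > 0,

$$ \lim_{n \to \infty} E_{\theta_0} \mathbb{P}\big(\|\theta - \theta_0\|_2 < M s_n \mid y\big) = 1. \tag{12} $$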

If an = 1/n instead, then (12) holds when qn ≳ log n.

A proof of Theorem 3.1 can be found in Section 6. Theorem 3.1 is the first result obtaining posterior contraction rates for a continuous shrinkage prior in the normal means setting or the closely related high-dimensional regression problem. Theorem 3.1 states that when the parameter a in the Dirichlet–Laplace prior is chosen, depending on the sample size, to be n^{−(1+β)} for any β > 0 small, the resulting posterior contracts at the minimax rate (2), provided $\|\theta_0\|_2^2 \le q_n \log^4 n$. By the Cauchy–Schwarz inequality, $\|\theta_0\|_1^2 \le q_n \|\theta_0\|_2^2$ for θ0 ∈ l0[qn; n], and the bound on ‖θ0‖2 then implies that ‖θ0‖1 ≤ qn(log n)^2. Hence, the condition in Theorem 3.1 permits each non-zero signal to grow at a (log n)^2 rate, which is a fairly mild assumption. Moreover, in a recent technical report, the authors showed that a large subclass of global-local priors (5), including the Bayesian lasso, leads to a sub-optimal rate of posterior convergence; i.e., the expression in (12) converges to 0 under conditions given there. Therefore, Theorem 3.1 indeed provides a substantial improvement over a large class of GL priors.

The choice an = n^{−(1+β)} will be evident from the various auxiliary results in Section 3.1, specifically Lemma 3.3 and Theorem 3.4. The conclusion of Theorem 3.1 continues to hold when an = 1/n under an additional mild assumption on the sparsity qn. In Tables 1 and 2 of Section 4, detailed empirical results are provided with an = 1/n as a default choice. Based on empirical experience, we find that a = 1/n may have a tendency to over-shrink the signals in cases where there are several relatively small signals. In such settings, depending on the practitioner’s utility function, the singularity at zero can be softened using a DLa prior for a larger value of a. We report the results for a = 1/2 in Section 4, whence computational gains arise as the distribution of Tj in (iv) turns out to be inverse-Gaussian (iG), for which exact samplers are available. A fully Bayesian procedure with a discrete hyperprior on a is considered in the real data application.

Table 1.

Squared error comparison over 100 replicates. Average squared error across replicates reported for DL (Dirichlet–Laplace) with a = 1/n and a = 1/2, LS (Lasso), EBMed (Empirical Bayes median), PM (Point mass prior), BL (Bayesian lasso) and HS (horseshoe). Signal strength A = 5, 6.

| n | 100 | 100 | 100 | 100 | 100 | 100 | 200 | 200 | 200 | 200 | 200 | 200 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| qn (% of n) | 5 | 5 | 10 | 10 | 20 | 20 | 5 | 5 | 10 | 10 | 20 | 20 |
| A | 5 | 6 | 5 | 6 | 5 | 6 | 5 | 6 | 5 | 6 | 5 | 6 |
| DL1/n | 21.90 | 14.09 | 32.43 | 21.81 | 55.26 | 38.71 | 44.86 | 31.57 | 50.24 | 35.39 | 95.14 | 85.54 |
| DL1/2 | 14.77 | 12.54 | 21.79 | 18.26 | 37.13 | 31.06 | 28.52 | 25.46 | 43.74 | 37.45 | 75.37 | 65.29 |
| LS | 22.21 | 20.67 | 36.41 | 36.54 | 65.91 | 66.60 | 43.37 | 43.51 | 74.05 | 75.98 | 139.20 | 137.94 |
| EBMed | 15.42 | 13.68 | 28.26 | 27.86 | 57.93 | 58.00 | 28.94 | 27.50 | 56.03 | 57.65 | 120.67 | 119.85 |
| PM | 14.50 | 11.99 | 24.97 | 23.66 | 49.92 | 49.16 | 26.66 | 24.86 | 49.96 | 50.89 | 103.40 | 101.69 |
| BL | 30.84 | 31.61 | 44.32 | 46.65 | 61.23 | 63.46 | 59.70 | 62.30 | 88.10 | 94.02 | 125.89 | 128.72 |
| HS | 12.50 | 9.31 | 21.63 | 17.63 | 39.60 | 35.89 | 23.41 | 18.75 | 42.95 | 38.21 | 81.07 | 74.31 |

Table 2.

Squared error comparison over 100 replicates. Average squared error across replicates reported for DL (Dirichlet–Laplace) with a = 1/n and a = 1/2, LS (Lasso), EBMed (Empirical Bayes median), PM (Point mass prior), BL (Bayesian lasso) and HS (horseshoe). Signal strength A = 7, 8.

| n | 100 | 100 | 100 | 100 | 100 | 100 | 200 | 200 | 200 | 200 | 200 | 200 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| qn (% of n) | 5 | 5 | 10 | 10 | 20 | 20 | 5 | 5 | 10 | 10 | 20 | 20 |
| A | 7 | 8 | 7 | 8 | 7 | 8 | 7 | 8 | 7 | 8 | 7 | 8 |
| DL1/n | 8.20 | 7.19 | 17.29 | 15.35 | 32.00 | 29.40 | 16.07 | 14.28 | 33.00 | 30.80 | 65.53 | 59.61 |
| DL1/2 | 11.77 | 11.87 | 17.57 | 18.36 | 30.24 | 30.42 | 20.30 | 22.70 | 36.51 | 35.30 | 61.43 | 65.79 |
| LS | 21.25 | 19.09 | 38.68 | 37.25 | 68.97 | 69.05 | 41.82 | 41.18 | 75.55 | 75.12 | 137.21 | 136.25 |
| EBMed | 13.64 | 12.47 | 29.73 | 27.96 | 60.52 | 60.22 | 26.10 | 25.52 | 57.19 | 56.05 | 119.41 | 119.35 |
| PM | 12.15 | 10.98 | 25.99 | 24.59 | 51.36 | 50.98 | 22.99 | 22.26 | 49.42 | 48.42 | 101.54 | 101.62 |
| BL | 33.05 | 33.63 | 49.85 | 50.04 | 68.35 | 68.54 | 64.78 | 69.34 | 99.50 | 103.15 | 133.17 | 136.83 |
| HS | 8.30 | 7.93 | 18.39 | 16.27 | 37.25 | 35.18 | 15.80 | 15.09 | 35.61 | 33.58 | 72.15 | 70.23 |

In the regression setting y ~ Nn(Xβ, In) with the number of covariates pn ≤ n, it is typically assumed that the eigenvalues of (XTX)/n are bounded between two absolute constants [4, 19]. In such cases, Theorem 3.1 can be used to derive posterior convergence rates for the vector of regression coefficients β in l2 norm. [4] studied posterior consistency in this setting for a class of shrinkage priors but did not provide contraction rates. While our methods can be extended trivially to the ultra high-dimensional regression setting, substantial work will be required to establish theoretical properties under standard restricted isometry type conditions on the design matrix commonly used to prove oracle inequalities for frequentist penalized methods [29]. Even with point mass mixture priors, such contraction results are unknown with the exception of a recent technical report [10]. While we propose a heuristic variable selection method which seems to work well in practice, obtaining model selection consistency results similar to [19, 24] is challenging and will be addressed elsewhere.

3.1 Auxiliary results

In this section, we state a number of properties of the DL prior which provide a better understanding of the joint prior structure and also crucially help us in proving Theorem 3.1. We first provide useful bounds on the joint density of the DL prior in Lemma 3.2 below; a proof can be found in the Appendix.

Lemma 3.2. Consider the DLa prior on ℝn for a small. Let S ⊂ {1, …, n} and η ∈ ℝ|S|.

If min1≤j≤|S| |ηj| > δ for δ small, then

| (13) |

where C > 0 is an absolute constant.

If ‖η‖2 ≤ m for m large, then

| (14) |

where C > 0 is an absolute constant.

It is evident from Figure 1 that the univariate marginal density Π has an infinite spike near zero. We quantify the probability assigned to a small δ-neighborhood of the origin in Lemma 3.3 below.

Lemma 3.3. Assume θ1 ∈ ℝ has a probability density Π as in (11). Then, for δ > 0 small,

$$ \mathbb{P}(|\theta_1| > \delta) \le C\, \frac{\log(1/\delta)}{\Gamma(a)}, $$

where C > 0 is an absolute constant.

A proof of Lemma 3.3 can be found in the Appendix.
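The mass that the marginal prior places away from the origin can also be examined by simulation. For a = 1/2, integrating the exponential tail of |θ1| given ψ1 against the gamma(1/2, 1/2) density of ψ1 (with 1/2 read as a rate) gives the closed form ℙ(|θ1| > δ) = exp(−√(2δ)); this special case is worked out here for illustration and is not part of the lemma. The sketch below compares that expression with a Monte Carlo estimate; the function name and the numbers of draws are arbitrary.

```python
import math
import random

def dl_half_tail_mc(delta, n_draws, rng):
    """Monte Carlo estimate of P(|theta_1| > delta) under DL with a = 1/2."""
    hits = 0
    for _ in range(n_draws):
        psi = rng.gammavariate(0.5, 2.0)     # gamma(shape 1/2, rate 1/2), i.e. scale 2
        mag = psi * rng.expovariate(1.0)     # |theta_1| given psi is exponential with mean psi
        hits += mag > delta
    return hits / n_draws

rng = random.Random(0)
for delta in (0.01, 0.1, 1.0):
    estimate = dl_half_tail_mc(delta, 100_000, rng)
    closed_form = math.exp(-math.sqrt(2.0 * delta))
    print(delta, estimate, closed_form)
```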

In the case of point mass mixture priors (3), the induced prior on the model size |supp(θ)| follows a Binomial(n, π) prior (given π), facilitating study of the multiplicity phenomenon [27]. However, ℙ(θ = 0) = 0 for any continuous shrinkage prior, which compounds the difficulty in studying the degree of shrinkage for these classes of priors. Letting suppδ(θ) = {j : |θj| > δ} denote the indices of the entries in θ larger than δ in magnitude, we propose |suppδ(θ)| as an approximate measure of model size for continuous shrinkage priors. We show in Theorem 3.4 below that for an appropriate choice of δ, |suppδ(θ)| does not exceed a constant multiple of the true sparsity level qn with posterior probability tending to one, a property which we refer to as posterior compressibility.

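Theorem 3.4. Consider model (1) with θ ~ DLan as in (9), where an = n^{−(1+β)} for some β > 0 small. Assume θ0 ∈ l0[qn; n] with qn = o(n). Let δn = qn/n. Then,

$$ \lim_{n \to \infty} E_{\theta_0} \mathbb{P}\big(|\mathrm{supp}_{\delta_n}(\theta)| > A q_n \mid y\big) = 0, \tag{15} $$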

for some constant A > 0. If an = 1/n instead, then (15) holds when qn ≳ log n.

The choice of δn in Theorem 3.4 guarantees that the entries in θ smaller than δn in magnitude produce a negligible contribution to ‖θ‖. Observe that the prior distribution of |suppδn(θ)| is Binomial(n, ζn), where ζn = ℙ(|θ1| > δn). When an = n^{−(1+β)}, ζn can be bounded above by (log n)/n^{1+β} in view of Lemma 3.3 and the fact that Γ(x) ≥ 1/x for x small. Therefore, the prior expectation 𝔼|suppδn(θ)| ≤ (log n)/n^β. This implies an exponential tail bound for ℙ(|suppδn(θ)| > Aqn) by Chernoff’s method, which is instrumental in deriving Theorem 3.4. A proof of Theorem 3.4 along these lines can be found in the Appendix.

The posterior compressibility property in Theorem 3.4 ensures that the dimensionality of the posterior distribution of θ (in an approximate sense) doesn’t substantially overshoot the true dimensionality of θ0, which together with the bounds on the joint prior density near zero and infinity in Lemma 3.2 delivers the minimax rate in Theorem 3.1.

4. SIMULATION STUDY

To illustrate the finite-sample performance of the proposed DL prior, we tabulate the results from a replicated simulation study with various dimensionality n and sparsity level qn in Tables 1 and 2. In each setting, we sample 100 replicates of an n-dimensional vector y from a Nn(θ0, In) distribution with θ0 having qn non-zero entries which are all set to be a constant A > 0. We chose two values of n, namely n = 100, 200. For each n, we let the model size qn be 5%, 10% or 20% of n and vary A over 5, 6, 7, 8. This results in 24 simulation settings in total; for each setting, the squared error loss corresponding to the posterior median, averaged across simulation replicates, is tabulated. The simulations were designed to mimic the setting in Section 3 where θ0 is sparse with a few moderate-sized coefficients.
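The design just described can be written as a small harness that enumerates the 24 settings and averages the squared error of any estimator over replicates. In the sketch below, the function names are hypothetical and the estimator is a placeholder (the raw observation y, whose expected squared error is n); a posterior median under a given prior would be supplied in its place to produce entries comparable to those in the tables.

```python
import random

def make_theta0(n, pct, A):
    """True mean with q = pct% of n entries equal to A and the rest zero."""
    q = round(n * pct / 100)
    return [A] * q + [0.0] * (n - q)

def average_squared_error(estimator, theta0, n_rep, rng):
    """Average of ||estimator(y) - theta0||^2 over n_rep simulated datasets."""
    total = 0.0
    for _ in range(n_rep):
        y = [t + rng.gauss(0.0, 1.0) for t in theta0]
        est = estimator(y)
        total += sum((e - t) ** 2 for e, t in zip(est, theta0))
    return total / n_rep

# All combinations of n, sparsity percentage and signal strength A.
settings = [(n, pct, A) for n in (100, 200) for pct in (5, 10, 20) for A in (5, 6, 7, 8)]

rng = random.Random(0)
# Placeholder estimator: the identity map, used only to exercise the harness.
baseline = {s: average_squared_error(lambda y: y, make_theta0(*s), 100, rng)
            for s in settings}
print(len(settings), baseline[(100, 5, 5)], baseline[(200, 20, 8)])
```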

Based on the discussion in Section 3, we present the results for the DL prior with a = 1/n and a = 1/2. To offer grounds for comparison, we have tabulated the results for Lasso (LS), Empirical Bayes median (EBMed) [20], posterior median with a point mass prior (PM) [9], posterior median corresponding to the Bayesian lasso (BL) [25] and the horseshoe (HS) [8]. For the fully Bayesian analysis using point mass mixture priors, we use a complexity prior on the subset-size, πn(s) ∝ exp{−κs log(2n/s)} with κ = 0.1, and independent standard Laplace priors for the non-zero entries as in [9]. A wide difference between most of the competitors and the proposed DL1/n is observed in Table 2. As evident from Tables 1 and 2, DL1/2 is robust to smaller signal strength, as is the horseshoe.

We also illustrate a high-dimensional simulation setting akin to an example in [8], where one has a single observation y from an n = 1000 dimensional Nn(θ0, In) distribution, with θ0[1 : 10] = 10, θ0[11 : 100] = A, and θ0[101 : 1000] = 0. We then vary A from 2 to 7 and summarize the squared error averaged across 100 replicates in Table 3. We only compare the Bayesian shrinkage priors here; the squared error for the posterior median is tabulated. Table 3 clearly illustrates the need for prior elicitation in high dimensions.

Table 3.

Squared error comparison over 100 replicates. Average squared error for the posterior median reported for BL (Bayesian Lasso), HS (horseshoe) and DL (Dirichlet–Laplace) with a = 1/n and a = 1/2 respectively.

| n | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 |
|---|---|---|---|---|---|---|
| A | 2 | 3 | 4 | 5 | 6 | 7 |
| BL | 299.30 | 385.68 | 424.09 | 450.20 | 474.28 | 493.03 |
| HS | 306.94 | 353.79 | 270.90 | 205.43 | 182.99 | 168.83 |
| DL1/n | 368.45 | 679.17 | 671.34 | 374.01 | 213.66 | 160.14 |
| DL1/2 | 267.83 | 315.70 | 266.80 | 213.23 | 192.98 | 177.20 |

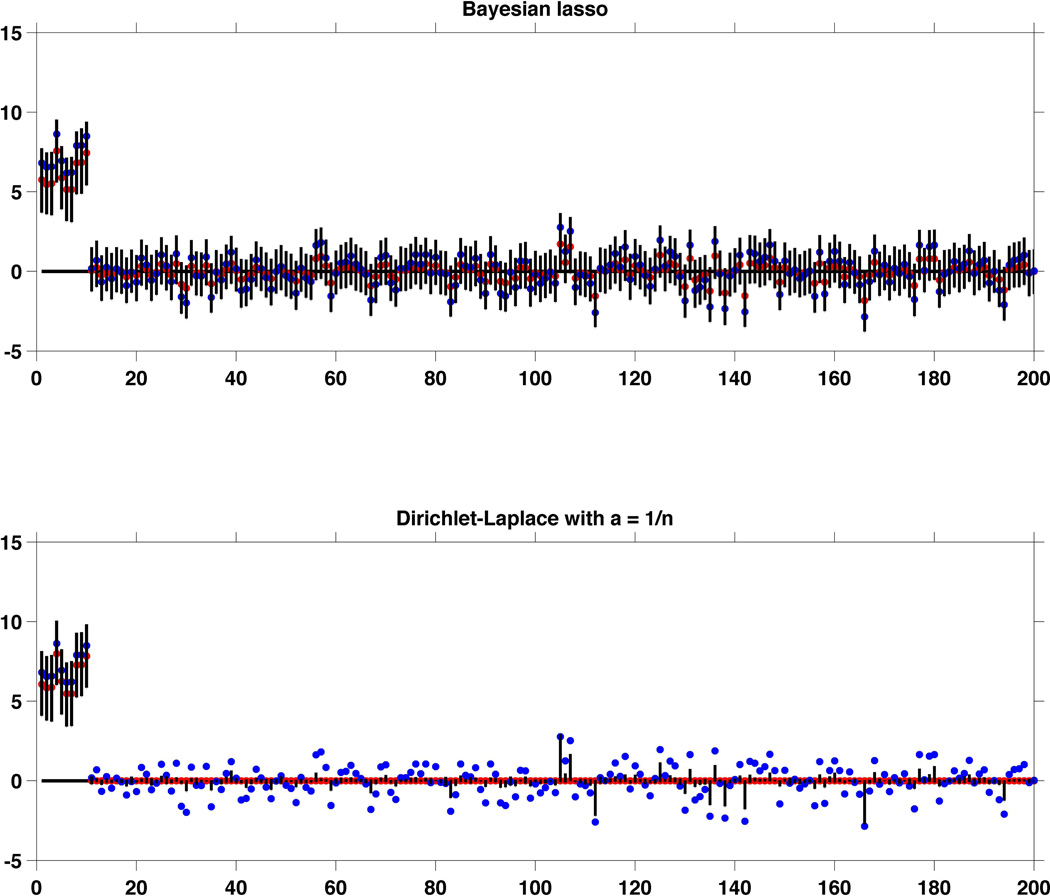

For visual illustration and comparison, we present the results from a single replicate in the first simulation setting with n = 200, qn = 10 and A = 7 in Figures 2 and 3. The blue circles indicate the entries of y, while the red circles correspond to the posterior median of θ. The shaded region corresponds to a 95% pointwise credible interval for θ.

Figure 2.

Simulation results from a single replicate with n = 200, qn = 10, A = 7. Blue circles = entries of y, red circles = posterior median of θ, shaded region: 95% point wise credible interval for θ. Left panel: Bayesian lasso, right panel: DL1/n prior

5. PROSTATE DATA APPLICATION

We consider a popular dataset [12, 13] from a microarray experiment consisting of expression levels for 6033 genes for 50 normal control subjects and 52 patients diagnosed with prostate cancer. The data take the form of a 6033 × 102 matrix with the (i, j)th entry corresponding to the expression level for gene i on patient j; the first 50 columns correspond to the normal control subjects with the remaining 52 for the cancer patients. The goal of the study is to discover genes whose expression levels differ between the prostate cancer patients (treatment) and normal subjects (control). A two-sample t-test with 100 degrees of freedom was implemented for each gene and the resulting t-statistic ti was converted to a z-statistic zi = Φ^{−1}(T100(ti)), where T100 denotes the distribution function of the t-distribution with 100 degrees of freedom and Φ that of the standard normal. Under the null hypothesis H0i of no difference in expression levels between the treatment and control group for the ith gene, the null distribution of zi is N(0, 1). Figure 4 shows a histogram of the z-values, comparing it to a N(0, 1) density with a multiplier chosen to make the curve integrate to the same area as the histogram. The shape of the histogram suggests the presence of certain interesting genes [12].
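The conversion from t-statistics to z-values is a composition of two distribution functions and can be sketched with the standard library alone. In the code below the Student-t distribution function is approximated by Simpson's rule, which is a numerical stand-in chosen to avoid external dependencies; the function names, the number of integration steps and the clamping constant are illustrative assumptions.

```python
import math
from statistics import NormalDist

def t_cdf(t, df, steps=2000):
    """Student-t CDF via Simpson's rule on [0, |t|]; steps must be even."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))

    def pdf(x):
        return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

    b = abs(t)
    h = b / steps
    s = pdf(0.0) + pdf(b)
    for i in range(1, steps):
        s += (4 if i % 2 else 2) * pdf(i * h)
    half = s * h / 3                      # integral of the density over [0, |t|]
    return 0.5 + half if t >= 0 else 0.5 - half

def t_to_z(t, df=100):
    """Map a t-statistic to a z-value with the same tail probability."""
    p = min(max(t_cdf(t, df), 1e-15), 1.0 - 1e-15)   # keep p strictly inside (0, 1)
    return NormalDist().inv_cdf(p)

for t in (-3.0, 0.0, 2.0):
    print(t, t_to_z(t))
```

With 100 degrees of freedom the t-distribution is close to normal, so the transformation moves moderate statistics only slightly toward zero.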

Figure 4.

Histogram of z-values

The classical Bonferroni correction for multiple testing flags only 6 genes as significant, while the much less conservative two-group empirical Bayes method of [20] found 139 significant genes. The local Bayes false discovery rate (fdr) [5] control method identified 54 genes as non-null. For a detailed analysis of this dataset using existing methods, refer to [12, 13].
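
As a rough illustration of the Bonferroni step mentioned above, the following sketch flags z-values whose two-sided p-value falls below α/m. The level α = 0.05, the function name `bonferroni_flags`, and the toy input are assumptions made for this example; the text does not state the level used, and the values below are not the prostate data.

```python
from statistics import NormalDist


def bonferroni_flags(z_values, alpha=0.05):
    """Return indices whose two-sided normal p-value is below alpha / m."""
    m = len(z_values)
    std_normal = NormalDist()      # N(0, 1) null distribution of each z_i
    threshold = alpha / m          # Bonferroni-adjusted per-test level
    flagged = []
    for i, z in enumerate(z_values):
        p_value = 2.0 * (1.0 - std_normal.cdf(abs(z)))
        if p_value < threshold:
            flagged.append(i)
    return flagged


# Toy z-values (hypothetical, for illustration only).
toy_z = [0.3, -1.2, 5.1, 0.8, -4.9, 2.0]
print(bonferroni_flags(toy_z))
```

Because the threshold shrinks like 1/m, only very large |zi| survive when m = 6033, which is in line with the small number of genes flagged by this correction.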

To apply our method, we set up a normal means model zi = θi + εi, i = 1, …, 6033, and assign θ a DLa prior. Instead of fixing a, we place a discrete uniform prior on a supported on the interval [1/6000, 1/2], with the support points of the form 10(k + 1)/6000, k = 0, 1, …, K. Such a fully Bayesian approach allows the data to dictate the choice of the tuning parameter a, which the theory specifies only up to a constant, and also avoids potential numerical issues arising from fixing a = 1/n when n is large. Updating a is straightforward since the full conditional distribution of a is again a discrete distribution on the chosen support points.
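
The discrete update of a can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the update conditions on ϕ and τ, with ϕ ~ Dirichlet(a, …, a) and τ ~ gamma(na, 1/2), so that the full conditional of a on the grid is proportional to the product of these two densities. The grid upper limit (the largest point not exceeding 1/2) and all function names are likewise assumptions.

```python
import math
import random


def support_points(n_scale=6000, upper=0.5):
    """Grid 10(k + 1)/n_scale for k = 0, 1, ..., keeping points <= upper."""
    points = []
    k = 0
    while 10 * (k + 1) / n_scale <= upper:
        points.append(10 * (k + 1) / n_scale)
        k += 1
    return points


def log_conditional(a, phi, tau):
    """Unnormalised log full conditional of a under the assumed model."""
    n = len(phi)
    sum_log_phi = sum(math.log(p) for p in phi)
    # Dirichlet(a, ..., a) density of phi times gamma(n a, rate 1/2) density
    # of tau; the log Gamma(n a) terms cancel and terms free of a are dropped.
    return (-n * math.lgamma(a) + (a - 1.0) * sum_log_phi
            + n * a * math.log(0.5) + (n * a - 1.0) * math.log(tau))


def sample_a(phi, tau, grid, rng=random):
    """Draw a from its discrete full conditional on the grid."""
    logs = [log_conditional(a, phi, tau) for a in grid]
    top = max(logs)
    weights = [math.exp(v - top) for v in logs]  # subtract max for stability
    return rng.choices(grid, weights=weights, k=1)[0]


# Hypothetical current state of the sampler, for illustration only.
rng = random.Random(0)
print(sample_a([0.7, 0.1, 0.1, 0.1], tau=2.0, grid=support_points(), rng=rng))
```

Working on the log scale and subtracting the maximum before exponentiating keeps the weights finite even when n is in the thousands.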

A referee pointed out that the z-values for 6033 genes obtained using n = 102 observations are unlikely to be independent and recommended investigating the robustness of our approach under correlated errors. We therefore conducted a small simulation study to evaluate the performance of our method when the error distribution is misspecified. We generated data from the model yi = θi + εi with (ε1, …, εn)T ~ Nn(0, Ω), where Ω corresponds to the covariance matrix of an autoregressive sequence with pure error variance σ2 and autoregressive coefficient ρ. We placed a discrete uniform prior on a supported on the interval [1/1000, 1/2], with the support points of the form 10(k + 1)/1000, k = 0, 1, …, K. We observe that the mean squared error of the posterior median for 50 replicated datasets with n = 1000, qn = 50, A = 4, 5, 6, σ2 = 1 increases by only 3% on average when ρ increases from 0 to 0.1. This suggests that the proposed method is robust to mild misspecification in the covariance structure. However, if further prior information is available regarding the covariance structure, it should be incorporated in the modeling framework.
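
A data-generating step of this kind can be sketched as below. The sketch makes two assumptions that the text does not spell out: the "pure error variance" σ2 is read as the innovation variance of a stationary first-order autoregression, and the qn nonzero means are all set equal to A and placed in the first qn coordinates. The function names are hypothetical.

```python
import math
import random


def ar1_errors(n, rho, sigma2=1.0, rng=random):
    """Stationary AR(1) sequence: eps_t = rho * eps_{t-1} + innovation_t."""
    innov_sd = math.sqrt(sigma2)
    # Draw the first value from the stationary law, variance sigma2 / (1 - rho^2).
    eps = [rng.gauss(0.0, innov_sd / math.sqrt(1.0 - rho ** 2))]
    for _ in range(n - 1):
        eps.append(rho * eps[-1] + rng.gauss(0.0, innov_sd))
    return eps


def simulate_y(n, q, A, rho, sigma2=1.0, rng=random):
    """Return (theta, y) with y_i = theta_i + eps_i and q means equal to A."""
    theta = [float(A)] * q + [0.0] * (n - q)
    eps = ar1_errors(n, rho, sigma2, rng)
    return theta, [t + e for t, e in zip(theta, eps)]


# One replicate under the settings quoted in the text (n = 1000, qn = 50).
theta, y = simulate_y(n=1000, q=50, A=4, rho=0.1, rng=random.Random(1))
print(len(y), sum(theta))
```

Setting rho = 0 recovers the independent-error model, so the same function can generate both arms of the comparison.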

For the real data application, we ran the Gibbs sampler in Section 2.4 for 10,000 draws, discarding the first 5,000 as burn-in. Mixing and convergence of the Gibbs sampler were satisfactory based on examination of trace plots, with the 5,000 retained samples having an effective sample size of 2369.2 averaged across the θi’s. The computational time per iteration scaled approximately linearly with the dimension. The posterior mode of a was at 1/20.
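
The text does not say which effective sample size estimator was used. One common choice, shown here purely as an assumption, divides the chain length by 1 + 2 Σ ρk, where ρk is the lag-k sample autocorrelation and the sum is truncated at the first non-positive term. The function name is hypothetical.

```python
def effective_sample_size(chain):
    """N / (1 + 2 * sum of autocorrelations), truncated at the first lag <= 0."""
    n = len(chain)
    mean = sum(chain) / n
    centred = [x - mean for x in chain]
    var = sum(c * c for c in centred) / n
    if var == 0.0:
        return float(n)            # constant chain: nothing to correct
    rho_sum = 0.0
    for lag in range(1, n):
        acov = sum(centred[t] * centred[t + lag] for t in range(n - lag)) / n
        rho = acov / var
        if rho <= 0.0:             # truncate once the autocorrelation dies out
            break
        rho_sum += rho
    return n / (1.0 + 2.0 * rho_sum)


# A strongly trending toy chain has far fewer effective draws than its length.
print(effective_sample_size([float(t) for t in range(100)]))
```

Applying such a function to the retained draws of each θi and averaging would give a summary comparable in spirit to the figure quoted above.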

In this application, we expect there to be two clusters of |θi|s, with one concentrated closely near zero corresponding to genes that are effectively not differentially expressed and another away from zero corresponding to interesting genes for further study. As a simple automated approach, we cluster the |θi|s at each MCMC iteration using k-means with 2 clusters. For each iteration, the number of non-zero signals is then estimated by the size of the smaller of the two clusters. A final estimate (M) of the number of non-zero signals is obtained by taking the mode over all the MCMC iterations. The M largest (in absolute magnitude) entries of the posterior median are identified as the non-zero signals.
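
The selection scheme just described can be sketched as follows. This is an illustrative version only: it uses a simple one-dimensional Lloyd iteration started from the minimum and maximum of the |θi|s, whereas the k-means implementation and initialisation used for the reported results are not specified. The function names and toy draws are hypothetical.

```python
from collections import Counter
from statistics import median


def smaller_cluster_size(values, n_iter=100):
    """Size of the smaller cluster from 1-D 2-means (Lloyd's algorithm)."""
    lo, hi = min(values), max(values)   # initial centres: the two extremes
    near_lo, near_hi = list(values), []
    for _ in range(n_iter):
        near_hi = [v for v in values if abs(v - hi) < abs(v - lo)]
        near_lo = [v for v in values if abs(v - hi) >= abs(v - lo)]
        if not near_hi or not near_lo:
            break
        new_lo = sum(near_lo) / len(near_lo)
        new_hi = sum(near_hi) / len(near_hi)
        if (new_lo, new_hi) == (lo, hi):  # centres stopped moving
            break
        lo, hi = new_lo, new_hi
    return min(len(near_lo), len(near_hi))


def select_signals(draws):
    """draws: list of MCMC draws of theta. Returns (M, selected indices)."""
    sizes = [smaller_cluster_size([abs(t) for t in draw]) for draw in draws]
    m_hat = Counter(sizes).most_common(1)[0][0]   # mode over iterations
    n = len(draws[0])
    post_median = [median(draw[j] for draw in draws) for j in range(n)]
    ranked = sorted(range(n), key=lambda j: abs(post_median[j]), reverse=True)
    return m_hat, sorted(ranked[:m_hat])


# Three toy draws of a 5-dimensional theta (hypothetical values).
toy_draws = [[5.0, 0.1, -0.2, 4.8, 0.0],
             [5.2, 0.0, 0.1, 5.1, -0.1],
             [4.9, -0.1, 0.0, 5.0, 0.2]]
print(select_signals(toy_draws))
```

Taking the mode of the per-iteration counts, rather than clustering the posterior median once, lets the estimate of M reflect posterior uncertainty in which coordinates are large.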

Using the above selection scheme, our method declared 128 genes as non-null. Interestingly, out of the 128 genes, 100 are common with the ones selected by EBMed. Also, all 54 genes obtained using FDR control form a subset of the selected 128 genes. The horseshoe was overly conservative: using the same clustering procedure, it selected only 1 gene (index: 610), the one with the largest effect size (refer to Table 11.2 in [13]).

6. PROOF OF THEOREM 3.1

We prove Theorem 3.1 for an = 1/n in detail and note the places where the proof differs in the case an = n^{−(1+β)}. Recall θS ≔ {θj, j ∈ S} and, for δ ≥ 0, suppδ(θ) ≔ {j : |θj| > δ}. Let Eθ0 and Pθ0 denote, respectively, expectation and probability with respect to the Nn(θ0, In) density.

For a sequence of positive real numbers rn to be chosen later, let δn = rn/n. Define . Let

be a subset of σ(y(n)), the sigma-field generated by y(n), as in Lemma 5.2 of [9] such that . Let 𝒮n be the collection of subsets S ⊂ {1, 2, …, n} such that |S| ≤ Aqn. For each such S and a positive integer j, let {θS,j,i : i = 1, …, NS,j} be a 2jrn net of ΘS,j,n = {θ ∈ ℝn : suppδn(θ) = S, 2jrn ≤ ‖θ − θ0‖2 ≤ 2(j + 1)rn} created as follows. Let {ϕS,j,i : i = 1, …, NS,j} be a jrn net of the |S|-dimensional ball {‖ϕ − θ0S‖ ≤ 2(j+1)rn}; we can choose this net in a way that NS,j ≤ C^{|S|} for some constant C (see, for example, Lemma 5.2 of [30]). Letting and for k ∈ Sc, we show this collection indeed forms a 2jrn net of ΘS,j,n. To that end, fix θ ∈ ΘS,j,n. Clearly, ‖θS − θ0S‖ ≤ 2(j +1)rn. Find 1 ≤ i ≤ NS,j such that . Then,

proving our claim. Therefore, the union of balls BS,j,i of radius 2jrn centered at θS,j,i for 1 ≤ i ≤ NS,j covers ΘS,j,n. Since Eθ0ℙ(|suppδn(θ)| > Aqn | y(n)) → 0 by Theorem 3.4, it is enough to work with Eθ0ℙ(θ : ‖θ − θ0‖2 > 2Mrn, suppδn(θ) ∈ 𝒮n | y(n)). Using the standard testing argument for establishing posterior convergence rates (see, for example, the proof of Proposition 5.1 in [9]), we arrive at

| (16) |

where

Let . The proof of Theorem 3.1 is completed by deriving an upper bound to βS,j,i in the following Lemma 6.1 akin to Lemma 5.4 in [9].

Lemma 6.1. .

Proof.

| (17) |

Next, we find an upper bound to

| (18) |

Let υq(r) denote the q-dimensional Euclidean ball of radius r centered at zero and |υq(r)| denote its volume. For the sake of brevity, denote υq = |υq(1)|, so that |υq(r)| = r^q υq. The numerator of (18) can be clearly bounded above by |υ_{|S|}(2jrn)| sup_{|θj| > δn ∀ j ∈ S} ΠS(θS). Since the set {‖θS0 − θ0S0‖2 < rn} is contained in the ball υ_{|S0|}(‖θ0S0‖2 + rn) = {‖θS0‖2 ≤ ‖θ0S0‖2 + rn} and ‖θ0S0‖2 = ‖θ0‖2, the denominator of (18) can be bounded below by |υ_{|S0|}(rn)| inf_{υ_{|S0|}(tn)} ΠS0(θS0), where tn = ‖θ0‖2 + rn. Putting together these inequalities and invoking Lemma 3.2, we have

| (19) |

Using υq ≍ (2πe)^{q/2} q^{−q/2−1/2} (see Lemma 5.3 in [9]) and , we can bound from above by . Therefore, we have

| (20) |
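
The volume asymptotic υq ≍ (2πe)^{q/2} q^{−q/2−1/2} used in this step can be checked numerically. The sketch below compares it with the exact unit-ball volume π^{q/2}/Γ(q/2 + 1), a standard formula that is not stated in the text; by Stirling's formula the ratio of the two tends to 1/√π, so it stays bounded away from zero and infinity. The function names are hypothetical.

```python
import math


def log_unit_ball_volume(q):
    """log of pi^(q/2) / Gamma(q/2 + 1), the volume of the unit ball in R^q."""
    return 0.5 * q * math.log(math.pi) - math.lgamma(0.5 * q + 1.0)


def log_asymptotic(q):
    """log of (2*pi*e)^(q/2) * q^(-q/2 - 1/2)."""
    return (0.5 * q * math.log(2.0 * math.pi * math.e)
            - (0.5 * q + 0.5) * math.log(q))


# The exact-to-asymptotic ratio should settle near 1/sqrt(pi) as q grows.
for q in (1, 10, 100, 1000):
    ratio = math.exp(log_unit_ball_volume(q) - log_asymptotic(q))
    print(q, ratio)
print("limit:", 1.0 / math.sqrt(math.pi))
```

Both quantities are computed on the log scale because the volumes themselves underflow for large q.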

Now, since and , we have and hence . Substituting in (20), we have

Finally, ℙ(|θ1| < δn)^{n−|S|}/ℙ(|θ1| < δn)^{n−qn} ≤ ℙ(|θ1| < δn)^{−|S|}. Using Lemma 3.3, ℙ(|θ1| < δn) ≥ (1 − log n/n), which implies that ℙ(|θ1| < δn)^{−|S|} ≤ e^{log n}.

Substituting the upper bound for βS,j,i obtained in Lemma 6.1, and noting that |S| ≤ Aqn and |NS,j| ≤ e^{C|S|}, the expression in the left hand side of (16) can be bounded above by

Since , it follows that Eθ0ℙ(θ : ‖θ − θ0‖2 > 2Mrn, suppδn(θ) ∈ 𝒮n | y(n)) → 0 for large M > 0.

When an = n^{−(1+β)}, the conclusion of Lemma 6.1 remains unchanged and the proof of Theorem 3.4 does not require qn ≳ log n. The rest of the proof remains exactly the same.

Figure 1.

Marginal density of the DL prior with a = 1/2 in comparison to other shrinkage priors.

ACKNOWLEDGEMENTS

We thank Dr. Ismael Castillo and Dr. James Scott for sharing source code. Dr. Anirban Bhattacharya, Dr. Debdeep Pati and Dr. Natesh S. Pillai acknowledge support from the Office of Naval Research (ONR BAA 14-0001). Dr. Pillai is also partially supported by NSF-DMS 1107070. Dr. David B. Dunson is partially supported by the DARPA MSEE program and the grant number R01 ES017240-01 from the National Institute of Environmental Health Sciences (NIEHS) of the National Institutes of Health (NIH).

APPENDIX

Proof of Proposition 2.1

When a = 1/n, ϕj ~ Beta(1/n, 1 − 1/n) marginally. Hence, the marginal distribution of θj given τ is proportional to

Substituting z = ϕj/(1 − ϕj) so that ϕj = z/(1 + z), the above integral reduces to

In the general case, ϕj ~ Beta(a, (n − 1)a) marginally. Substituting z = ϕj/(1 − ϕj) as before, the marginal density of θj is proportional to

The above integral can clearly be bounded below by a constant multiple of

The above expression clearly diverges to infinity as |θj| → 0 by the monotone convergence theorem.

Proof of Theorem 2.2

Integrating out τ, the joint posterior of ϕ | θ has the form

| (A.1) |

We now state a result from the theory of normalized random measures (see, for example, (36) in [22]). Suppose T1, …, Tn are independent random variables with Tj having a density fj on (0,∞). Let ϕj = Tj/T with . Then, the joint density f of (ϕ1, …, ϕn−1) supported on the simplex 𝒮n−1 has the form

| (A.2) |

where . Setting in (A.2), we get

| (A.3) |

We aim to equate the expression in (A.3) with the expression in (A.1). Comparing the exponent of ϕj gives us δ = 2 − a. The other requirement n − 1 − nδ = λ − n − 1 is also satisfied, since λ = na. The proof is completed by observing that fj corresponds to a giG(a − 1, 1, 2|θj|) when δ = 2 − a.
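
For completeness, the second requirement can be verified by direct substitution of δ = 2 − a; this is only a restatement of the arithmetic implied above:

```latex
\[
n - 1 - n\delta \;=\; n - 1 - n(2 - a) \;=\; na - n - 1 \;=\; \lambda - n - 1
\qquad \text{when } \lambda = na .
\]
```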

Proof of Proposition 3.1

By (10),

The result follows from 8.432.7 in [15].

Proof of Lemma 3.2

Letting h(x) = log Π(x), we have log ΠS(η) = ∑1≤j≤|S| h(ηj).

We first prove (13). Since Π(x), and hence h(x), is monotonically decreasing in |x|, and |ηj| > δ for all j, we have log ΠS(η) ≤ |S|h(δ). Using Kα(z) ≍ z^{−α} for |z| small and Γ(a) ≍ a^{−1} for a small, we have from (11) that Π(δ) ≍ a^{−1}|δ|^{(a−1)} and hence h(δ) ≍ (1 − a) log(δ^{−1}) − log a^{−1} + C ≤ C log(δ^{−1}).

We next prove (14). Noting that Kα(z) ≳ e^{−z}/z for |z| large (Section 9.7 of [1]), we have from (11) that for |x| large. Using the Cauchy–Schwarz inequality twice, we have , which implies .

Proof of Lemma 3.3

Using the representation (9), we have ℙ(|θ1| > δ | ψ1) = e^{−δ/ψ1}, so that

| (A.4) |

where C > 0 is a constant independent of δ. Using a bound for the incomplete gamma function from Theorem 2 of [2],

| (A.5) |

for δ small. The proof is completed by noting that (1/2)^a is bounded above by a constant and C + log(1/δ) ≤ 2 log(1/δ) for δ small enough.

Proof of Theorem 3.4

For θ ∈ ℝn, let fθ(·) denote the probability density function of a Nn(θ, In) distribution and fθi denote the univariate marginal N(θi, 1) distribution. Let S0 = supp(θ0). Since |S0| = qn, it suffices to prove that

Let . By (10), is independent of {θi, i ∈ S0} conditionally on y(n). Hence

| (A.6) |

where and respectively denote the numerator and denominator of the expression in (A.6). Observe that

| (A.7) |

where is a subset of σ(y(n)) as in Lemma 5.2 of [9] (replacing θ by and θ0 by 0) defined as

with for some sequence of positive real numbers rn. We set here. With this choice, from (A.7),

| (A.8) |

We have , with the equality following from the representation in (10). Using Lemma 3.3, , implying . Next, clearly ℙ(ℬn) ≤ ℙ(|suppδn(θ)| > Aqn). As indicated in Section 3.1, |suppδn (θ)| ~ Binomial(n, ζn), with ζn = ℙ(|θ1| > δn) ≤ log n/n in view of Lemma 3.3. A version of Chernoff’s inequality for the binomial distribution [17] states that for B ~ Binomial(n, ζ) and ζ ≤ a < 1,

| (A.9) |

When an = 1/n, qn ≥ C0 log n for some constant C0 > 0. Setting an = Aqn/n, clearly ζn < an for some A > 1/C0. Substituting in (A.9), we have ℙ(|suppδn(θ)| > Aqn) ≤ e^{Aqn log(e log n) − Aqn log Aqn}. Choosing A ≥ 2e/C0 and using the fact that qn ≥ C0 log n, we obtain ℙ(|suppδn(θ)| > Aqn) ≤ e^{−Aqn log 2}. Substituting the bounds for ℙ(ℬn) and in (A.8) and choosing larger A if necessary, the expression in (A.7) goes to zero.

If an = n^{−(1+β)}, ζn ≤ log n/n^{1+β} in view of Lemma 3.3. In (A.9), set an = Aqn/n as before. Clearly ζn < an. Substituting in (A.9), we have ℙ(|suppδn(θ)| > Aqn) ≤ e^{−CAqn log n}. Substituting the bounds for ℙ(ℬn) and in (A.8), the expression in (A.7) goes to zero.
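
The display (A.9) is not reproduced in this text. As an illustration only, the sketch below assumes a standard Chernoff-type bound of the form ℙ(B > an) ≤ {(ζ/a)^a e^{a−ζ}}^n for ζ ≤ a < 1, which is consistent with the exponent Aqn log(e log n) − Aqn log Aqn obtained above, and compares it with the exact binomial tail. The function names and the values of n and m are hypothetical.

```python
import math


def binomial_upper_tail(n, zeta, m):
    """Exact P(B > m) for B ~ Binomial(n, zeta), summed on the log scale."""
    total = 0.0
    for k in range(m + 1, n + 1):
        log_term = (math.lgamma(n + 1) - math.lgamma(k + 1)
                    - math.lgamma(n - k + 1)
                    + k * math.log(zeta) + (n - k) * math.log(1.0 - zeta))
        total += math.exp(log_term)
    return total


def chernoff_bound(n, zeta, a):
    """Assumed form {(zeta / a)^a * exp(a - zeta)}^n, valid for zeta <= a < 1."""
    return math.exp(n * (a * math.log(zeta / a) + a - zeta))


n = 1000
zeta = math.log(n) / n              # zeta_n <= log n / n, as in the text
for m in (20, 40, 80):              # m plays the role of A * q_n
    print(m, binomial_upper_tail(n, zeta, m), chernoff_bound(n, zeta, m / n))
```

In each case the exact tail should sit below the bound, and both decay rapidly as m grows relative to the mean nζ ≈ log n, which is the mechanism behind the exponentially small bound on ℙ(|suppδn(θ)| > Aqn).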

Footnotes

Following standard practice in this literature, we use n to denote the dimensionality and it should not be confused with the sample size.

Given sequences an, bn, we denote an = O(bn) or an ≲ bn if there exists a global constant C such that an ≤ Cbn and an = o(bn) if an/bn → 0 as n → ∞.

This formulation only holds when τ ~ gamma(na, 1/2) and is not true for the general DLa(τ) class with τ ~ g.

The EBMed procedure was implemented using the package [21].

Given a draw for s, a subset S of size s is drawn uniformly. Set θj = 0 for all j ∉ S and draw θj, j ∈ S i.i.d. from standard Laplace. The beta-bernoulli priors in (3) induce a similar prior on the subset size.

Contributor Information

Anirban Bhattacharya, Email: anirbanb@stat.tamu.edu.

Debdeep Pati, Email: debdeep@stat.fsu.edu.

Natesh S. Pillai, Email: pillas@fas.harvard.edu.

David B. Dunson, Email: dunson@stat.duke.edu.

REFERENCES

- 1. Abramowitz M, Stegun IA. Handbook of mathematical functions: with formulas, graphs, and mathematical tables. Vol. 55. Dover Publications; 1965.

- 2. Alzer H. On some inequalities for the incomplete gamma function. Mathematics of Computation. 1997;66(218):771–778.

- 3. Armagan A, Dunson D, Lee J. Generalized double Pareto shrinkage. Statistica Sinica, to appear. 2013.

- 4. Armagan A, Dunson DB, Lee J, Bajwa WU, Strawn N. Posterior consistency in linear models under shrinkage priors. Biometrika. 2013;100(4):1011–1018.

- 5. Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. Journal of the Royal Statistical Society. Series B (Methodological). 1995:289–300.

- 6. Bontemps D. Bernstein–von Mises theorems for Gaussian regression with increasing number of regressors. The Annals of Statistics. 2011;39(5):2557–2584.

- 7. Carvalho CM, Polson NG, Scott JG. Handling sparsity via the horseshoe. Journal of Machine Learning Research W&CP. 2009;5:73–80.

- 8. Carvalho CM, Polson NG, Scott JG. The horseshoe estimator for sparse signals. Biometrika. 2010;97(2):465–480.

- 9. Castillo I, van der Vaart A. Needles and straws in a haystack: Posterior concentration for possibly sparse sequences. The Annals of Statistics. 2012;40(4):2069–2101.

- 10. Castillo I, Schmidt-Hieber J, van der Vaart AW. Bayesian linear regression with sparse priors. arXiv preprint arXiv:1403.0735. 2014.

- 11. Donoho DL, Johnstone IM, Hoch JC, Stern AS. Maximum entropy and the nearly black object. Journal of the Royal Statistical Society. Series B (Methodological). 1992:41–81.

- 12. Efron B. Microarrays, empirical Bayes and the two-groups model. Statistical Science. 2008:1–22.

- 13. Efron B. Large-scale inference: empirical Bayes methods for estimation, testing, and prediction. Vol. 1. Cambridge University Press; 2010.

- 14. Ghosal S. Asymptotic normality of posterior distributions in high-dimensional linear models. Bernoulli. 1999;5(2):315–331.

- 15. Gradshteyn IS, Ryzhik IM. Tables of Integrals, Series and Products. Corrected and enlarged edition. New York: Academic Press; 1980.

- 16. Griffin JE, Brown PJ. Inference with normal-gamma prior distributions in regression problems. Bayesian Analysis. 2010;5(1):171–188.

- 17. Hagerup T, Rüb C. A guided tour of Chernoff bounds. Information Processing Letters. 1990;33(6):305–308.

- 18. Hans C. Elastic net regression modeling with the orthant normal prior. Journal of the American Statistical Association. 2011;106(496):1383–1393.

- 19. Johnson VE, Rossell D. Bayesian model selection in high-dimensional settings. Journal of the American Statistical Association. 2012;107(498):649–660. doi: 10.1080/01621459.2012.682536.

- 20. Johnstone IM, Silverman BW. Needles and straw in haystacks: Empirical Bayes estimates of possibly sparse sequences. The Annals of Statistics. 2004;32(4):1594–1649.

- 21. Johnstone IM, Silverman BW. EbayesThresh: R and S-PLUS programs for empirical Bayes thresholding. J. Statist. Soft. 2005;12:1–38.

- 22. Kruijer W, Rousseau J, van der Vaart A. Adaptive Bayesian density estimation with location-scale mixtures. Electronic Journal of Statistics. 2010;4:1225–1257.

- 23. Leng C. Variable selection and coefficient estimation via regularized rank regression. Statistica Sinica. 2010;20(1):167.

- 24. Narisetty NN, He X. Bayesian variable selection with shrinking and diffusing priors. The Annals of Statistics. 2014;42(2):789–817.

- 25. Park T, Casella G. The Bayesian lasso. Journal of the American Statistical Association. 2008;103(482):681–686.

- 26. Polson NG, Scott JG. Shrink globally, act locally: Sparse Bayesian regularization and prediction. In: Bernardo JM, Bayarri MJ, Berger JO, Dawid AP, Heckerman D, Smith AFM, West M, editors. Bayesian Statistics 9. New York: Oxford University Press; 2010. pp. 501–538.

- 27. Scott JG, Berger JO. Bayes and empirical-Bayes multiplicity adjustment in the variable-selection problem. The Annals of Statistics. 2010;38(5):2587–2619.

- 28. Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society. Series B (Methodological). 1996:267–288.

- 29. van de Geer SA, Bühlmann P. On the conditions used to prove oracle results for the lasso. Electronic Journal of Statistics. 2009;3:1360–1392.

- 30. Vershynin R. Introduction to the non-asymptotic analysis of random matrices. arXiv preprint arXiv:1011.3027. 2010.

- 31. Wang H, Leng C. Unified lasso estimation by least squares approximation. Journal of the American Statistical Association. 2007;102(479):1039–1048.

- 32. West M. On scale mixtures of normal distributions. Biometrika. 1987;74(3):646–648.

- 33. Zhou M, Carin L. Negative binomial process count and mixture modeling. arXiv preprint arXiv:1209.3442. 2012. doi: 10.1109/TPAMI.2013.211.

- 34. Zou H. The adaptive lasso and its oracle properties. Journal of the American Statistical Association. 2006;101(476):1418–1429.