Abstract

The present paper is a novel contribution to the field of bioinformatics by using grammatical inference in the analysis of data. We developed an algorithm for generating star-free regular expressions which turned out to be good recommendation tools, as they are characterized by a relatively high correlation coefficient between the observed and predicted binary classifications. The experiments have been performed for three datasets of amyloidogenic hexapeptides, and our results are compared with those obtained using the graph approaches, the current state-of-the-art methods in heuristic automata induction, and the support vector machine. The results showed the superior performance of the new grammatical inference algorithm on fixed-length amyloid datasets.

1. Introduction

Grammatical inference (GI) is an intensively studied area of research that sits at the intersection of several fields including formal languages, machine learning, language processing, and learnability theory. The main task of the field is about finding some unknown rule when given some elements: examples and counterexamples. This presentation of elements may be finite (in practice) or infinite (in theory). As this study will be especially focused on obtaining a regular expression from finite positive and negative data, the various models of incremental learning and their decidability questions have not been mentioned. The book by de la Higuera [1] can be of major help on such theoretical aspects of grammatical inference.

Here and subsequently S = (S +, S −) stands for a sample where S + is the set of examples and S − is the set of counterexamples over a fixed alphabet Σ. Our aim is to obtain a compact description of a finite language L satisfying all the following conditions: (i) L ⊂ Σ+, (ii) S +⊆L, and (iii) S −∩L = ∅. We will consider a star-free regular expression (i.e., without the Kleene closure operator) as the compact description of a language L. It is worthy to emphasize that such a formulation of an induction problem is justified by intended applications in bioinformatics. A sample in biological or medical domains consists of positive and negative objects (mainly proteins) with certain properties, whereas a star-free regular expression may serve to predict new objects. The data explored by Tian et al. [2] and Maurer-Stroh et al. [3] are good illustrations. They consist of examples and counterexamples of amyloids, that is, proteins which have been associated with the pathology of more than 20 serious human diseases. In the experimental part of the present paper, we are going to undertake an examination of binary classification efficiency for selected real biological/medical data. By binary classification, we mean mapping a string to one out of two classes by means of induced regular expressions (regex). For classification, especially for two-class problems, a variety of measures has been proposed. Since our experiments lie in a (bio)medical context, the Matthews Correlation Coefficient is regarded as a primary score, as the goal of this whole process is to predict new strings that are likely to be positive.

There is a number of closely related works to our study. Angluin showed that the problem of inferring minimum-size regular expression satisfying (i), (ii), and (iii) remains NP-complete even if a regex is required to be star-free (containing no “∗” operations) [4]. In our previous work [5] similar bioinformatics datasets have been analyzed, but with different acceptors—directed acyclic word graphs. Some of classical automata learning algorithms like ECGI [6], k-RI [7], and k-TSSI [8] could be applied to the problem, but they do not make use of counterexamples. Many authors advocated the benefit of viewing the biological sequences as sentences derived from a formal grammar or automaton. As a good bibliographical starting point, see articles by Coste and Kerbellec [9], Sakakibara [10], and Searls [11]. In connection with this problem of data classification, it is worth remembering that there is a field of computer science that can be also involved, namely, machine learning (ML), which includes such methods as classification trees, clustering, the support vector machine [12], and rough sets [13]. All above-mentioned ML methods are aimed at compact description of input data, though in various ways. In view of our applications, they have, however, a drawback. The problem is that they are not suited for variable-length data.

In the present algorithm a star-free regular expression (SFRE) is achieved based on a learning sample containing the examples and counterexamples (these examples and counterexamples are also called positive and negative words). It is a two-phase procedure. In the first phase an initial graph is built in order to reveal possible substring interchanges. In the second phase all maximal cliques of the graph are yielded to build a SFRE. We have implemented our induction algorithm of a SFRE and started applying it to a real bioinformatics task, that is, classification of amyloidogenic hexapeptides. Amyloids are proteins capable of forming fibrils instead of the functional structure of a protein [14] and are responsible for a group of diseases called amyloidosis, such as Alzheimer's, Huntington's disease, and type II diabetes [15]. Furthermore, it is believed that short segments of proteins, like hexapeptides consisting of 6-residue fragments, can be responsible for amyloidogenic properties [16]. Since it is not possible to experimentally test all such sequences, several computational tools for predicting amyloid chains have emerged, inter alia, based on physicochemical properties [17] or using machine learning approach [18–21].

To test the performance of our SFRE approach, the following six additional programs have been used in experiments: the implementation of the Trakhtenbrot-Barzdin state merging algorithm, as described in [22]; the implementation of Rodney Price's Abbadingo winning idea of evidence-driven state merging [23]; a program based on the Rlb state merging algorithm [24]; ADIOS (for Automatic Distillation of Structure)—a context-free grammar learning system, which relies on a statistical method for pattern extraction and on structured generalization [25]; our previous approach with directed acyclic word graphs [5]; and, as an instance of ML methods, the support vector machine [26].

A rigorous statistical procedure has been applied to compare all the above methods in terms of a correlation between the observed and predicted binary classification (Matthews Correlation Coefficient, MCC). The proposed approach significantly outperforms both GI-based methods and ML algorithm on fixed-length amyloid datasets.

2. Materials and Methods

2.1. Datasets

The algorithm for generating star-free regular expressions SFRE has been tested over three recently published Hexpepset datasets, that is, Waltz [3], WALTZ-DB [27], and exPafig [5]. The first two databases consist of only experimentally asserted amyloid sequences. Note that the choice of experimental verified short peptides is very limited since very few data are available. The Waltz dataset has been published in 2010 and is composed of 116 hexapeptides known to induce amyloidosis (S +) and by 161 hexapeptides that do not induce amyloidosis (S −). The WALTZ-DB has been prepared by the same science team in the Switch Lab from KU Leuven and published in 2015. This dataset expands the Waltz set to total number of hexapeptides of 1089. According to Beerten et al. (2015), additional 720 hexapeptides were derived from 63 different proteins and combined with 89 peptides taken from the literature [27]. In the WALTZ-DB database, 244 hexapeptides are regarded as positive for amyloid formation (S +) and 845 hexapeptides as negative for amyloid formation (S −).

SFRE algorithm was also validated and trained on database (denoted by exPafig), which was computationally obtained with Pafig method [2], and then statistically processed [5]. exPafig consists of 150 amyloid positive hexapeptides (S +) and 2259 negative hexapeptides (S −). As seen, the database is strongly imbalanced.

2.2. An Algorithm for the Induction of a SFRE

2.2.1. Definitions

Definition 1 . —

Σ will be a finite nonempty set, the alphabet. Σ+ will denote the set of all nonempty strings over the alphabet Σ. If s, t ∈ Σ+, the concatenation of s and t, written st, will denote the string formed by making a copy of s and following it by a copy of t. If A, B⊆Σ+, then

(1)

To simplify the representations for finite languages, we define the notion of star-free regular expressions over alphabet Σ as follows.

Definition 2 . —

The set of star-free regular expressions (SFREs) over Σ will be the set of strings R such that

- (1)

∅ ∈ R which represents the empty set;

- (2)

Σ⊆R; each element a of the alphabet represents language {a};

- (3)

if r A and r B are SFREs representing languages A and B, respectively, then (r A + r B) ∈ R and (r A r B) ∈ R representing A ∪ B, AB, respectively, where the symbols (, ), + are not in Σ.

We will freely omit unnecessary parentheses from SFREs assuming that concatenation has higher priority than union. If r ∈ R represents language A, we will write L(r) = A.

Definition 3 . —

A sample S over Σ will be an ordered pair S = (S +, S −) where S +, S − are finite subsets of Σ+ and S +∩S − = ∅. S + will be called the positive part of S, and S − the negative part of S. A star-free regular expression r is consistent (or compatible) with a sample S = (S +, S −) if and only if S +⊆L(r) and S −∩L(r) = ∅.

Definition 4 . —

A graph G is a finite nonempty set of objects called vertexes together with a (possibly empty) set of unordered pairs of distinct vertexes of G called edges. The vertex set of G is denoted by V(G), while the edge set is denoted by E(G). The edge e = {u, v} is said to join the vertexes u and v. If e = {u, v} is an edge of a graph G, then u and v are adjacent vertexes. In a graph G, a clique is a subset of the vertex set C⊆V(G) such that every two vertexes in C are adjacent. By definition, a clique may be also composed of only one vertex. If a clique does not exist exclusively within the vertex set of a larger clique, then it is called a maximal clique.

Definition 5 . —

Let Σ be an alphabet and let G be a graph. Suppose that every vertex in G is associated with an ordered pair of nonempty strings over Σ; that is, V(G) = {v 1, v 2,…, v n}, where v i = (u i, w i) ∈ Σ+ × Σ+ for 1 ≤ i ≤ n. Let C = {v i1, v i2,…, v im} be a clique in G. Then

(2) is a star-free regular expression over Σ induced by C.

For the simplicity's sake, we also denote the set L(u i1 + ⋯+u im) = {u i1,…, u im} by U and the set L(w i1 + ⋯+w im) = {w i1,…, w im} by W in the context of C.

2.2.2. The Algorithm

In this section, we are going to show how to generate a SFRE compatible with a given sample. These expressions do not have many theoretical properties but have marvelous accomplishment in the analysis of some bioinformatics data in terms of classification quality.

Let S = (S +, S −) be a sample over Σ in which every string is at least of length 2. Construct the graph G with vertex set

| (3) |

and with edge set E(G) given by

| (4) |

Next, find a set of cliques 𝒞 = {C 1, C 2,…, C k} in G such that S +⊆∑i=1 k r(C i). For this purpose one can take advantage of an algorithm proposed by Tomita et al. [28] for generating all maximal cliques. Although it takes O(n3n/3) time in the worst case for an n-vertex graph, computational experiments described in Section 3 demonstrate that it runs very fast in practice (a few seconds for thousands of vertexes). Finally, return the union of SFREs induced by all maximal cliques 𝒞; that is, e = r(C 1) + r(C 2)+⋯+r(C k).

In order to reduce the computational complexity of the induction, instead of Tomita's algorithm, the ensuing randomized procedure could be applied. Consecutive cliques C i with their catenations U i W i are determined until S +⊆⋃i=1 k U i W i. The catenations emerge in the following manner. In step i + 1, a vertex v s1 = (u, w) ∈ V(G) for which uw ∉ ⋃m=1 i U m W m is chosen at random. Let U i+1 = {u} and W i+1 = {w}. Then sets U i+1 and W i+1 are updated by adding words from the randomly chosen neighbor of v s1, say v s2, and subsequently by adding words from the randomly chosen neighbor v s3 of {v s1, v s2}, and so forth. In the end, a maximal clique C i+1 is obtained for which L(r(C i+1)) = U i+1 W i+1. Naturally, e = r(C 1) + r(C 2)+⋯+r(C k) fulfills S +⊆L(e), and the whole procedure runs in polynomial time with respect to the input size.

Here are some elementary properties of a resultant expression e and the complexity of the induction algorithm.

-

(i)

S −∩L(e) = ∅ is implied from (4).

-

(ii)

If all strings in a sample have equal length, let us say ℓ, then all strings from L(e) also are of the same length ℓ.

-

(iii)

Let n = ∑s∈S | s|. A graph G, based on (3) and (4), may be constructed in O(n 3) time. Determining a set of cliques 𝒞 and corresponding regular expressions r(C 1), r(C 2),…, r(C k) also takes no more than O(n 3) time, assuming that the graph is represented by adjacency lists. Thus, the overall computational complexity is O(n 3).

2.2.3. An Illustrative Run

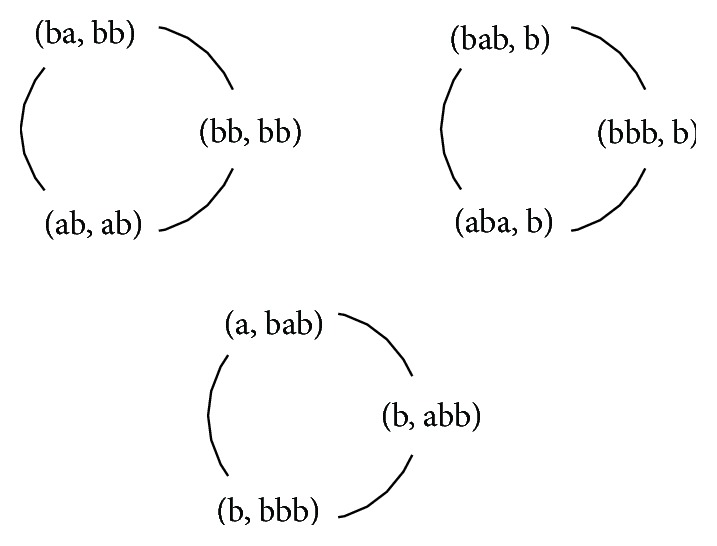

Suppose , is a sample (one of possible explanations for the input is, each follows at least one ). A constructed graph G is depicted in Figure 1. It has three maximal cliques and regardless of the method—either Tomita's or randomized algorithm was selected—all of them would be determined in this case. The final SFRE induced by the cliques is

| (5) |

Among all words of length four over the alphabet it does not accept , but accepts .

Figure 1.

A graph G built from a sample S, according to definitions (3) and (4).

2.3. Validation with Other Methods

The SFRE classification quality over hexapeptides from three datasets was compared to three state-of-the-art tools for heuristic state merging DFA induction: the Trakhtenbrot-Barzdin state merging algorithm (denoted Traxbar) [22], Rodney Price's Abbadingo winning idea of evidence-driven state merging (Blue-fringe) [23], Rlb state merging algorithm (Rlb) [24], and a context-free grammar learning system ADIOS [25]. The compared set of methods was extended by our previous approach with directed acyclic word graphs (DAWG) [5] and the support vector machine with linear kernel function (SVM) [26].

Trakhtenbrot and Barzdin described an algorithm for constructing the smallest DFA consistent with a complete labeled training set [29]. The input to the algorithm is the prefix-tree acceptor which directly embodies the training set. This tree is collapsed into a smaller graph by merging all pairs of states that represent compatible mappings from string suffixes to labels. This algorithm for completely labeled trees has been generalized by Lang [22] to produce a (not necessarily minimum) machine consistent with a sparsely labeled tree (we used implementations from the archive http://abbadingo.cs.nuim.ie/dfa-algorithms.tar.gz for the Traxbar and for the two remaining state merging algorithms).

The second algorithm that starts with the prefix-tree acceptor for the training set and folds it up into a compact hypothesis by merging pairs of states is Blue-fringe. This program grows a connected set of red nodes that are known to be unique states, surrounded by a fringe of blue nodes that will either be merged with red nodes or be promoted to red status. Merges only occur between red nodes and blue nodes. Blue nodes are known to be the roots of trees, which greatly simplifies the code for correctly doing a merge. The only drawback of this approach is that the pool of possible merges is small, so occasionally the program has to do a low scoring merge.

The idea that lies behind the third algorithm, Rlb, is as follows. It dispenses with the red-blue restriction and is able to do merges in any order. However, to have a practical run time, only merges between nodes that lie within a distance “window” of the root on a breadth-first traversal of the hypothesis graph are considered. This introduction of a new parameter is a drawback to this program, as is the fact that its run time scales very badly with training string length. However, on suitable problems, it works better than the Blue-fringe algorithm. The detailed description of heuristics for evaluating and performing merges can be found in Lang's work [24].

ADIOS starts by loading the corpus (examples) onto a directed graph whose vertexes are all lexicon entries, augmented by two special symbols, begin and end. Each corpus sentence defines a separate path over the graph, starting at begin and ending at end, and is indexed by the order of its appearance in the corpus. Loading is followed by an iterative search for significant patterns, which are added to the lexicon as new units. The algorithm generates candidate patterns by traversing in each iteration a different search path, seeking subpaths that are shared by a significant number of partially aligned paths. The significant patterns are selected according to a context-sensitive probabilistic criterion defined in terms of local flow quantities in the graph. At the end of each iteration, the most significant pattern is added to the lexicon as a new unit, the subpaths it subsumes are merged into a new vertex, and the graph is rewired accordingly. The search for patterns and equivalence classes and their incorporation into the graph are repeated until no new significant patterns are found. The Java implementation of ADIOS made available to us by one of the authors was used in our experiments.

DAWG is a two-phase procedure. In the first phase, an initial directed graph is built in a way that resembles the construction of the minimal DFA, but nondeterminism is also allowed. In the second phase, the directed graph is extended in an iterative process by putting some additional labels onto the existing arcs. The order of putting new labels alters the results; hence a greedy heuristic has been proposed in order to obtain the words most consistent with a sample. We used the same implementation of DAWG as in our earlier work on classification of biological sequences [5].

SVM constructs a hyperplane or set of hyperplanes in a high-dimensional space, which can be used for classification, regression, or other tasks. A good separation is achieved by the hyperplane that has the largest distance to the nearest training data points of any class (so-called functional margin), since, in general, the larger the margin, the lower the generalization error of the classifier. In the experiments, we took advantage of scikit SVM, a machine learning Python library with default parameters [30].

2.4. Experiment Design and Statistical Analysis

To estimate the SFRE's and compared approaches' ability to classify unseen hexapeptides repeated stratified k-fold cross-validation (cv) strategy was used. Note that holdout method is the simplest kind of cross-validation, but multiple cv is thought to be more reliable than holdout due to its evaluation variance [31]. The simplest form of cross-validation is to split the data randomly into k mutually exclusive folds, building a model on all but one fold, and to evaluate the model on the skipped fold. The procedure is repeated k-times, each time evaluating the model on the next omitted fold. The overall assessment of the model is based on the mean of k-individual evaluations. Since the cv assessment would depend on the random assignment samples, a common practice is to stratify the folds themselves [32]. In a stratified variant of cv, the pseudorandom folds are generated in such a way that each fold contains approximately the same percentage of samples of each class as the whole set. Although the cv is considered as one of the most utilized validation methods, it is well known that cv-based estimators have high variance and nonzero bias [33–36]. It is therefore recommended to use a repeated cross-validation approach [37].

The main problem with (repeated) cv is that the training and test sets are not independent samples. Dietterich [31] found that comparing algorithms on the basis of repeated resampling of the same data can cause very high Type-I errors. It means that statistical hypothesis test, like the standard paired t-test, incorrectly rejects a true null hypothesis (so-called false positive). Note that cv can be viewed as a kind of random subsampling. To correct the variance estimate of dependent samples, Nadeau and Bengio [38] proposed the following statistic of the corrected resampled t-test:

| (6) |

where x j is the difference of the performance quality between two compared algorithms on j-run (1 ≤ j ≤ n). We assume that in each run n 1 samples are used for training and n 2 samples for testing. stands for the variance of the n differences. This statistic obeys approximately Student's t-distribution with n − 1 degrees of freedom. The only difference to the standard t-test is that the factor 1/n in the denominator is by the factor 1/n + n 2/n 1. The corrected resampled t-test has the Type-I error close to the significance level and—opposite to the McNemar test and the 5 × 2 cv test—low Type-II error (i.e., the failure to reject a false null hypothesis). If we consider test based on r-times k-fold cv, the statistic

| (7) |

has k · r − 1 degrees of freedom and is called corrected repeated k-fold cv test. To detect performance differentiation of compared algorithms we use 10 × 10 cv scheme with 10 (instead of 99) degrees of freedom. This scheme was shown [39] to have excellent replicability. Note that, to perform multiple comparisons involving a control method (i.e., SFRE), we are supposed to control the family-wise error (FWER) [40, 41]. FWER is the probability of making Type-I error when testing many null hypotheses simultaneously. Several methods of relaxing the FWER have been proposed [42]. To keep the probability of rejecting any true null hypothesis small, in our experiments we applied Holm correction [43].

The predictive performance of algorithms was evaluated with the confusion matrix and some of the figures of merit associated with it. First, the following four scores were defined as tp, fp, fn, and tn, representing the numbers of true positives (correctly recognized amyloids), false positives (nonamyloids recognized as amyloids), false negatives (amyloids recognized as nonamyloids), and true negatives (correctly recognized nonamyloids), respectively. The following three figures of merit were considered here, since they are widely used.

The Sensitivity, also known as true positive rate, represents the percentage of correctly identified positive cases and is defined as

| (8) |

Specificity, known is as true negative rate, represents the percentage of correctly identified negative cases and is calculated as

| (9) |

Matthews Correlation Coefficient is defined as

| (10) |

Note that several other scores derived from the confusion matrix can be used for estimating the prediction reliability. These three figures of merit, that is, Sensitivity, Specificity, and Matthews Correlation Coefficient, seem to be indispensable for the following reasons. Sensitivity and Specificity tend to be anticorrelated and monitor different aspects of the prediction process. Both of them may range from 0 to +1, where +1 means perfect prediction. Second, Matthews Correlation Coefficient [44] considers both the true positives and true negatives as successful predictions. MCC is always between −1 and +1. A value of −1 indicates total disagreement, 0 random prediction, and +1 perfect prediction. What is important in our case is, MCC is resistant to imbalanced dataset.

3. Result and Discussion

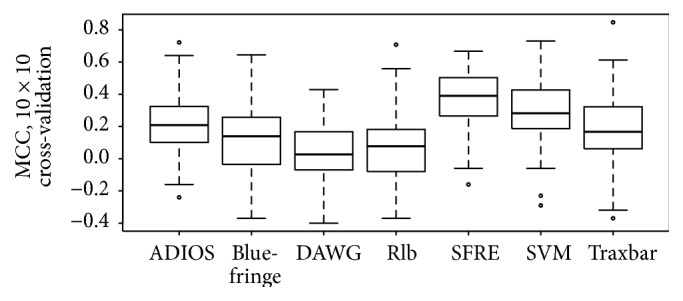

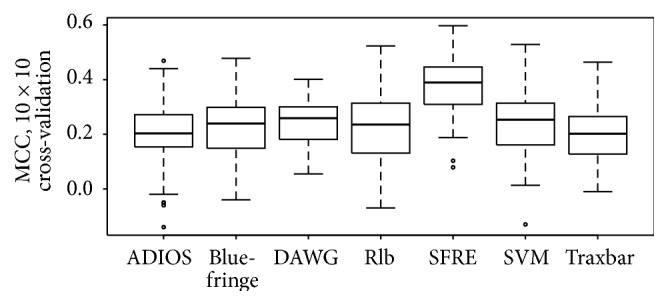

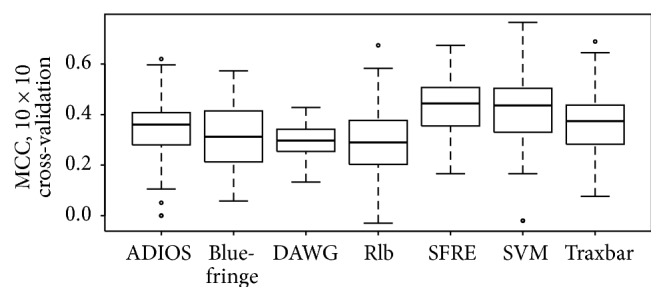

Figure 2 and Table 1, Figure 3 and Table 2, and Figure 4 and Table 3 summarize the performances of the SFRE algorithm and compared methods on Waltz, WALTZ-Db, and exPafig databases, respectively. The figures present boxplots representing the MCC values obtained from 10 × 10 cross-validation, whereas the tables give unadjusted and adjusted by Holm procedure p values for the comparison of the SFRE algorithm (the control method) with the remaining algorithms. Note that adjusted p for each method and each database is lower than desired level of a confidence α, 0.05, in our experiments. These p values indicate that there are significant performance differences between SFRE algorithm and compared methods.

Figure 2.

Performance comparison of ADIOS, Blue-fringe, DAWG, Rlb, SFRE, SVM, and Traxbar methods on Waltz database [3]. Boxplots represent the MCC values obtained from 10 × 10 cross-validation. The ratio of S +/S − is 116/161.

Table 1.

p values for the comparison of the SFRE (the control algorithm) with the other methods on Waltz database. The initial level of confidence α = 0.05 is adjusted by Holm procedure.

| SFRE versus | Unadjusted p | Holm p |

|---|---|---|

| ADIOS | 1.872447e − 04 | 3.744893e − 04 |

| Blue-fringe | 5.341761e − 09 | 2.136704e − 08 |

| DAWG | 1.089587e − 13 | 6.537519e − 13 |

| RLB | 7.529027e − 12 | 3.764514e − 11 |

| SVM | 9.527442e − 03 | 9.527442e − 03 |

| Traxbar | 4.257174e − 07 | 1.277152e − 06 |

Figure 3.

Performance comparison of ADIOS, Blue-fringe, DAWG, Rlb, SFRE, SVM, and Traxbar methods on WALTZ-DB database [27]. Boxplots represent the MCC values obtained from 10 × 10 cross-validation. The ratio of S +/S − is 240/836.

Table 2.

p values for the comparison of the SFRE (the control algorithm) with the other methods on WALTZ-DB database. The initial level of confidence α = 0.05 is adjusted by Holm procedure.

| SFRE versus | Unadjusted p | Holm p |

|---|---|---|

| ADIOS | 4.904483e − 13 | 1.961793e − 12 |

| Blue-fringe | 4.495071e − 10 | 4.495071e − 10 |

| DAWG | 4.838106e − 14 | 2.419053e − 13 |

| RLB | 3.326237e − 11 | 6.652474e − 11 |

| SVM | 1.703864e − 11 | 5.111592e − 11 |

| Traxbar | 9.161256e − 16 | 5.496754e − 15 |

Figure 4.

Performance comparison of ADIOS, Blue-fringe, DAWG, Rlb, SFRE, SVM, and Traxbar methods on exPafig database [5]. Boxplots represent the MCC values obtained from 10 × 10 cross-validation. The ratio of S +/S − is 150/2259.

Table 3.

p values for the comparison of the SFRE (the control algorithm) with the other methods on exPafig database. The initial level of confidence α = 0.05 is adjusted by Holm procedure.

| SFRE versus | unadjusted p | Holm p |

|---|---|---|

| ADIOS | 1.501499e − 05 | 4.504496e − 05 |

| Blue-fringe | 1.019785e − 08 | 4.079139e − 08 |

| DAWG | 4.319299e − 12 | 2.591579e − 11 |

| RLB | 7.401268e − 11 | 3.700634e − 10 |

| SVM | 3.295963e − 02 | 3.295963e − 02 |

| Traxbar | 1.368667e − 04 | 2.737334e − 04 |

SFRE algorithm outperforms all other compared methods in terms of MCC over both experimentally asserted datasets, Waltz and WALTZ-DB, and computationally generated exPafig. It is worth noting that all p values except for comparing with SVM algorithm are lower than not only 0.05, but also the often used 0.01, hence confirming the superiority of the SFRE.

Comparative analysis of the three figures of merit (Sensitivity, Specificity, and Matthews Correlation Coefficient) is summarized in Table 4. These quantities are reported for seven compared predictors and three databases (Waltz, WALTZ-DB, and exPafig). Numerical results reported in Table 4 show that SFRE has the highest Average MCC (0.40) followed by SVM (0.31), ADIOS and Traxbar (0.25), Blue-fringe (0.22), and DAWG and Rlb (0.19). Furthermore, SFRE has the highest MCC score compared to the other predictors on each dataset (0.37, 0.38, and 0.44, resp.). Although the results of MCC score seem to be not high (at the level of 0.40), it should be noted that many of the amyloid predictors are reported to have similar or lower values [45]. It is also worth mentioning that all methods have gained the highest MCC values for the computationally generated exPafig dataset.

Table 4.

Performance of compared methods on Waltz, WALTZ-DB, and exPafig databases in terms of Sensitivity (Sen), Specificity (Spe), and Matthews Correlation Coefficient (MCC). The results are ordered by decreasing Average MCC (Ave MCC).

| Method | Waltz | WALTZ-DB | exPafig | Ave MCC | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Sen | Spe | MCC | Sen | Spe | MCC | Sen | Spe | MCC | ||

| SFRE | 0.30 | 0.97 | 0.37 | 0.33 | 0.95 | 0.38 | 0.25 | 1.00 | 0.44 | 0.40 |

| SVM | 0.35 | 0.90 | 0.30 | 0.15 | 0.98 | 0.24 | 0.22 | 1.00 | 0.40 | 0.31 |

| ADIOS | 0.36 | 0.82 | 0.22 | 0.64 | 0.59 | 0.20 | 0.51 | 0.90 | 0.34 | 0.25 |

| Traxbar | 0.56 | 0.61 | 0.17 | 0.46 | 0.76 | 0.20 | 0.42 | 0.96 | 0.37 | 0.25 |

| Blue-fringe | 0.58 | 0.53 | 0.11 | 0.36 | 0.85 | 0.23 | 0.33 | 0.96 | 0.32 | 0.22 |

| DAWG | 0.90 | 0.13 | 0.04 | 0.81 | 0.47 | 0.24 | 0.73 | 0.80 | 0.30 | 0.19 |

| Rlb | 0.36 | 0.70 | 0.07 | 0.26 | 0.90 | 0.22 | 0.25 | 0.97 | 0.29 | 0.19 |

SFRE has a higher Specificity score than other methods except SVM in case of WALTZ-DB (0.95 to 0.98, resp.) and exPafig databases (both Spe of 1.00). These two predictors have a very good capacity at predicting nonamyloid hexapeptides, with Spe higher than 0.90 for each database. The counterpart is their poor Sensitivity. Concerning Sen score, DAWG, our earlier proposal, has the highest value on each database (0.90, 0.81, and 0.73, resp.). SFRE algorithm showed a low Sensitivity for each tested dataset (0.30, 0.33, and 0.25, resp.).

The evaluation of SFRE on three amyloidogenic hexapeptide datasets revealed its accuracy to predict nonamyloid segments. We showed that the new grammatical inference algorithm gives the best Matthews Correlation Coefficient in comparison to six other methods, including support vector machine.

4. Conclusions

In the present paper, the way in which regex induction may support predicting new hexapeptides has been revealed. We, therefore, studied the following problem: given a sample S = (S +, S −), find a “general” star-free regular expression e such that S +⊆L(e), S −∩L(e) = ∅, and L(e) − S + contain only strings of “similar characteristics” to those of S +. To this end, a new GI method has been proposed which is especially suited to the fixed-length datasets. The conducted experiments showed that our algorithm outperforms compared methods in terms of a correlation between the observed and predicted binary classification (MCC) and with real datasets taken from a biomedical domain.

The proposed idea is not free from objections. Among the most serious complications is the exponential computational complexity of generating maximal cliques, which is the second phase of the algorithm. However, it can be overcome by using a proposed randomized procedure instead. Our first experiments on larger datasets uncovered that this is a good direction for the future research.

The high Sensitivity of DAWG approach and high Specificity of SFRE method over tested databases suggest the second direction of future research. These two classifiers could be combined into a metapredictor having, hopefully, both good Sensitivity and Specificity. Such meta-approaches are reported to gain often better results in terms of aggregate indicators (as MCC) than individual predictors [45].

Acknowledgments

This research was supported by National Science Center (Grant DEC-2011/03/B/ST6/01588) and by a statutory grant of the Wroclaw University of Technology.

Competing Interests

The authors declare no conflict of interests.

Authors' Contributions

Wojciech Wieczorek proposed and implemented SFRE algorithm; Olgierd Unold designed the methodology and experiments. Wojciech Wieczorek conceived and performed the experiments; Olgierd Unold designed and performed the statistical data analysis. Both authors wrote and approved the final paper.

References

- 1.de la Higuera C. Grammatical Inference: Learning Automata and Grammars. Cambridge University Press; 2010. [DOI] [Google Scholar]

- 2.Tian J., Wu N., Guo J., Fan Y. Prediction of amyloid fibril-forming segments based on a support vector machine. BMC Bioinformatics. 2009;10(supplement 1, article S45) doi: 10.1186/1471-2105-10-s1-s45. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Maurer-Stroh S., Debulpaep M., Kuemmerer N., et al. Exploring the sequence determinants of amyloid structure using position-specific scoring matrices. Nature Methods. 2010;7(3):237–242. doi: 10.1038/nmeth.1432. [DOI] [PubMed] [Google Scholar]

- 4.Angluin D. An application of the theory of computational complexity to the study of inductive inference [Ph.D. thesis] Oakland, Calif, USA: University of California; 1976. [Google Scholar]

- 5.Wieczorek W., Unold O. Induction of directed acyclic word graph in a bioinformatics task. Proceedings of the 12th International Conference of Grammatical Inference; September 2014; Kyoto, Japan. pp. 207–217. [Google Scholar]

- 6.Rulot H., Vidal E. Modelling (sub)string length based constraints through a grammatical inference method. In: Devijver P. A., Kittler J., editors. Pattern Recognition Theory and Applications. Vol. 30. Springer; 1987. pp. 451–459. (NATO ASI Series). [DOI] [Google Scholar]

- 7.Angluin D. Inference of reversible languages. Journal of the ACM. 1982;29(3):741–765. doi: 10.1145/322326.322334. [DOI] [Google Scholar]

- 8.Garcia P., Vidal E., Oncina J. Learning Locally Testable Languages in the Strict Sense. ALT; 1990. [Google Scholar]

- 9.Coste F., Kerbellec G. Learning automata on protein sequences. 7th Journées Ouvertes Biologie Informatique Mathématiques (JOBIM '06); July 2006; Bordeaux, France. pp. 199–210. [Google Scholar]

- 10.Sakakibara Y. Grammatical inference in bioinformatics. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2005;27(7):1051–1062. doi: 10.1109/tpami.2005.140. [DOI] [PubMed] [Google Scholar]

- 11.Searls D. B. The language of genes. Nature. 2002;420(6912):211–217. doi: 10.1038/nature01255. [DOI] [PubMed] [Google Scholar]

- 12.Alpaydin E. Introduction to Machine Learning. 2nd. Cambridge, Mass, USA: MIT Press; 2010. [Google Scholar]

- 13.Polkowski L., Skowron A. Rough Sets in Knowledge Discovery 2: Applications, Case Studies and Software Systems. Physica; 1998. [Google Scholar]

- 14.Jaroniec C. P., MacPhee C. E., Bajaj V. S., McMahon M. T., Dobson C. M., Griffin R. G. High-resolution molecular structure of a peptide in an amyloid fibril determined by magic angle spinning NMR spectroscopy. Proceedings of the National Academy of Sciences of the United States of America. 2004;101(3):711–716. doi: 10.1073/pnas.0304849101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Uversky V. N., Fink A. L. Conformational constraints for amyloid fibrillation: the importance of being unfolded. Biochimica et Biophysica Acta (BBA)—Proteins and Proteomics. 2004;1698(2):131–153. doi: 10.1016/j.bbapap.2003.12.008. [DOI] [PubMed] [Google Scholar]

- 16.Thompson M. J., Sievers S. A., Karanicolas J., Ivanova M. I., Baker D., Eisenberg D. The 3D profile method for identifying fibril-forming segments of proteins. Proceedings of the National Academy of Sciences of the United States of America. 2006;103(11):4074–4078. doi: 10.1073/pnas.0511295103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Hamodrakas S. J. Protein aggregation and amyloid fibril formation prediction software from primary sequence: towards controlling the formation of bacterial inclusion bodies. The FEBS Journal. 2011;278(14):2428–2435. doi: 10.1111/j.1742-4658.2011.08164.x. [DOI] [PubMed] [Google Scholar]

- 18.Stanislawski J., Kotulska M., Unold O. Machine learning methods can replace 3D profile method in classification of amyloidogenic hexapeptides. BMC Bioinformatics. 2013;14(1, article 21) doi: 10.1186/1471-2105-14-21. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Unold O. Fuzzy grammar-based prediction of amyloidogenic regions. JMLR: Workshop and Conference Proceedings. 2012;21:210–219. [Google Scholar]

- 20.Unold O. How to support prediction of amyloidogenic regions—the use of a GA-based wrapper feature selections. Proceedings of the 2nd International Conference on Advances in Information Mining and Management (IMMM '12); October 2012; Venice, Italy. [Google Scholar]

- 21.Liu B., Zhang W., Jia L., Wang J., Zhao X., Yin M. Prediction of ‘aggregation-prone’ peptides with hybrid classification approach. Mathematical Problems in Engineering. 2015;2015:9. doi: 10.1155/2015/857325.857325 [DOI] [Google Scholar]

- 22.Lang K. J. Random DFA's can be approximately learned from sparse uniform examples. Proceedings of the 5th Annual Workshop on Computational Learning Theory (COLT '92); July 1992; Pittsburgh, Pa, USA. ACM; pp. 45–52. [DOI] [Google Scholar]

- 23.Lang K. J., Pearlmutter B. A., Price R. A. Results of the abbadingo one DFA learning competition and a new evidence-driven state merging algorithm. Proceedings of the 4th International Colloquium on Grammatical Inference, (ICGI '98) Ames, Iowa, USA, July 1998; 1998; Springer; pp. 1–12. [Google Scholar]

- 24.Lang K. J. Montpelier, Vt, USA: NECI; 1997. Merge Order count. [Google Scholar]

- 25.Solan Z., Horn D., Ruppin E., Edelman S. Unsupervised learning of natural languages. Proceedings of the National Academy of Sciences of the United States of America. 2005;102(33):11629–11634. doi: 10.1073/pnas.0409746102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Cortes C., Vapnik V. Support-vector networks. Machine Learning. 1995;20(3):273–297. doi: 10.1007/BF00994018. [DOI] [Google Scholar]

- 27.Beerten J., Van Durme J., Gallardo R., et al. WALTZDB: a benchmark database of amyloidogenic hexapeptides. Bioinformatics. 2015;31(10):1698–1700. doi: 10.1093/bioinformatics/btv027. [DOI] [PubMed] [Google Scholar]

- 28.Tomita E., Tanaka A., Takahashi H. The worst-case time complexity for generating all maximal cliques and computational experiments. Theoretical Computer Science. 2006;363(1):28–42. doi: 10.1016/j.tcs.2006.06.015. [DOI] [Google Scholar]

- 29.Trakhtenbrot B., Barzdin Y. Finite Automata: Behavior and Synthesis. North-Holland Publishing; 1973. [Google Scholar]

- 30.Pedregosa F., Varoquaux G., Gramfort A., et al. Scikit-learn: machine learning in Python. Journal of Machine Learning Research. 2011;12:2825–2830. [Google Scholar]

- 31.Dietterich T. G. Approximate statistical tests for comparing supervised classification learning algorithms. Neural Computation. 1998;10(7):1895–1923. doi: 10.1162/089976698300017197. [DOI] [PubMed] [Google Scholar]

- 32.Kotsiantis S., Pintelas P. Combining bagging and boosting. International Journal of Computational Intelligence. 2004;1(4):324–333. [Google Scholar]

- 33.Kohavi R. A study of cross-validation and bootstrap for accuracy estimation and model selection. Proceedings of the 14th International Joint Conference on Artificial Intelligence (IJCAI '95); August 1995; Montreal, Canada. pp. 1137–1143. [Google Scholar]

- 34.Braga-Neto U. M., Dougherty E. R. Is cross-validation valid for small-sample microarray classification? Bioinformatics. 2004;20(3):374–380. doi: 10.1093/bioinformatics/btg419. [DOI] [PubMed] [Google Scholar]

- 35.Hastie T., Tibshirani R., Friedman J., Hastie T., Friedman J., Tibshirani R. The Elements of Statistical Learning. 2, no. 1. Berlin, Germany: Springer; 2009. [Google Scholar]

- 36.Arlot S., Celisse A. A survey of cross-validation procedures for model selection. Statistics Surveys. 2010;4:40–79. doi: 10.1214/09-ss054. [DOI] [Google Scholar]

- 37.Krstajic D., Buturovic L. J., Leahy D. E., Thomas S. Cross-validation pitfalls when selecting and assessing regression and classification models. Journal of Cheminformatics. 2014;6(1, article 10) doi: 10.1186/1758-2946-6-10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Nadeau C., Bengio Y. Inference for the generalization error. Machine Learning. 2003;52(3):239–281. doi: 10.1023/a:1024068626366. [DOI] [Google Scholar]

- 39.Bouckaert R. R., Frank E. Evaluating the replicability of significance tests for comparing learning algorithms. In: Dai H., Srikant R., Zhang C., editors. Advances in Knowledge Discovery and Data Mining. Vol. 3056. Springer; 2004. pp. 3–12. (Lecture Notes in Computer Science). [DOI] [Google Scholar]

- 40.Demšar J. Statistical comparisons of classifiers over multiple data sets. The Journal of Machine Learning Research. 2006;7:1–30. [Google Scholar]

- 41.Japkowicz N., Shah M. Evaluating Learning Algorithms: A Classification Perspective. Cambridge, UK: Cambridge University Press; 2011. [Google Scholar]

- 42.Romano J. P., Shaikh A. M., Wolf M. Control of the false discovery rate under dependence using the bootstrap and subsampling. TEST. 2008;17(3):417–442. doi: 10.1007/s11749-008-0126-6. [DOI] [Google Scholar]

- 43.Holm S. A simple sequentially rejective multiple test procedure. Scandinavian Journal of Statistics. 1979;6(2):65–70. [Google Scholar]

- 44.Matthews B. W. Comparison of the predicted and observed secondary structure of T4 phage lysozyme. Biochimica et Biophysica Acta (BBA)—Protein Structure. 1975;405(2):442–451. doi: 10.1016/0005-2795(75)90109-9. [DOI] [PubMed] [Google Scholar]

- 45.Emily M., Talvas A., Delamarche C. MetAmyl: a METa-predictor for AMYLoid proteins. PLoS ONE. 2013;8(11) doi: 10.1371/journal.pone.0079722.e79722 [DOI] [PMC free article] [PubMed] [Google Scholar]