Abstract

Recognition and identification of aesthetic preference is indispensable in industrial design. Humans tend to pursue products with aesthetic value and make buying decisions based on their aesthetic preferences. Neuromarketing aims to understand consumer responses to marketing stimuli by using imaging techniques and by recognizing physiological parameters. Numerous studies have examined the relationship between humans, art and aesthetics. In this paper, we present a novel preference-based measurement of user aesthetics using electroencephalogram (EEG) signals for virtual 3D shapes with motion. The 3D shapes are designed to resemble bracelets and were generated using the Gielis superformula. EEG signals were collected with a medical-grade device, the B-Alert X10 from Advanced Brain Monitoring, at a sampling frequency of 256 Hz and a resolution of 16 bits. The signals recorded while subjects viewed the 3D bracelet shapes were decomposed into alpha, beta, theta, gamma and delta rhythms using time–frequency analysis, and then classified into two classes, like and dislike, using support vector machine (SVM) and K-nearest neighbor (KNN) classifiers. A classification accuracy of up to 80 % was obtained using KNN with alpha, theta and delta rhythms extracted from the frontal channels Fz, F3 and F4.

Keywords: Neuro-aesthetics, Electroencephalogram (EEG), Brain-computer interface (BCI), 3-Dimensional (3D) shape preference, Aesthetic design, Support vector machines (SVM), K-nearest neighbors (KNN)

Introduction

Recognition and identification of user aesthetics is important in industrial design because it allows users to have a better experience of pleasure and satisfaction with a product. Aesthetic appreciation can trigger pleasurable responses in the brain that lead users to prefer a particular product design over others. However, aesthetic preferences differ between individuals owing to numerous factors, such as experiential background and societal influences (Konecni 1978).

Palmer et al. (2013) believe that aesthetic responses in humans are triggered by what they perceive. Aesthetic responses are not always conscious; depending on whether what is perceived has relatively low or high arousal and/or valence, the aesthetic response may shift spontaneously from the subconscious to the conscious. Numerous studies of aesthetic responses in humans (Brown and Dissanayake 2009; Cupchik 1995; Palmer et al. 2013; Prinz 2007) lead us to believe that aesthetic experiences of everyday user products can be recognized and classified from EEG signals.

Neuromarketing is a new research field that studies human emotional responses and preferences toward market stimuli using imaging technologies [e.g. functional magnetic resonance imaging (fMRI), electroencephalography (EEG) and magnetoencephalography (MEG)] and conventional measurements of physiological parameters (e.g. blood pressure, heart rate, body core temperature and respiration rate). EEG records brainwaves at a subject's scalp and is widely used as the input to a brain-computer interface (BCI); it is a mature method that has been applied in domains such as wheelchair control (Shalbaf et al. 2014) and anaesthesia depth measurement (Wang et al. 2014). The use of EEG to understand human preference has been widely studied for different stimuli, ranging from music (Hadjidimitriou and Hadjileontiadis 2012, 2013), automotive brands (Murugappan et al. 2014) and shoes (Yılmaz et al. 2014) to images with various colours and patterns (Khushaba et al. 2012) and beverage brands (Brown et al. 2012). Several well-known companies (e.g. PepsiCo, Hyundai, Google, Walt Disney Co., Microsoft, Yahoo and eBay's PayPal) use neuromarketing techniques and services to determine consumers' thoughts and preferences in order to improve their sales and marketing results.

Many researchers, including Li and Lu (2009) and Wang et al. (2014), have used EEG frequency bands as features for studying emotion. Several studies of preference, including Moon et al. (2013), Kim et al. (2015) and Hadjidimitriou and Hadjileontiadis (2012), have likewise used frequency bands as input features.

Moon et al. (2013) measured preference for visual stimuli using band power and asymmetry scores as features for four preference classes with the Emotiv Epoc headset, obtaining accuracies of up to 97.39 % (±0.73 %). Kim et al. (2015) measured preference for images on an individual basis using band power as the feature for two preference classes with the Emotiv Epoc headset, obtaining an average accuracy of up to 88.54 %. Hadjidimitriou and Hadjileontiadis (2012) measured music preference using band power as the feature for two preference classes with the Emotiv Epoc headset, obtaining accuracies of up to 86.52 % (±0.76 %). Although emotion recognition has been widely studied, preference recognition has received comparatively little exploration.

During aesthetic judgment, multiple cognitive operations take place in different areas of the brain at different times (Nadal et al. 2008). Cela-Conde et al. (2004) used MEG to show that activity in the dorsolateral prefrontal cortex (DLPFC) is related to aesthetic perception, with higher activation for aesthetic (“like”) conditions in the left hemisphere of the brain, corresponding to location F3 in the international 10–20 electrode placement system (Beam et al. 2009).

Prinz (2007) believes that emotions play an important role in directing aesthetic preference, and Aurup (2011) also supports the view that human emotions affect preferences. Since emotions may be related to aesthetic preference, and the frontal lobe of the brain plays an important role in triggering emotional responses (Liu et al. 2010; Schmidt and Trainor 2001; Wang et al. 2011), signals from the frontal lobe may contain aesthetic responses as well. Pakhomov and Sudin (2013) further suggest that decision-making is processed similarly to emotional decisions.

Different stimuli elicit cognitive responses differently. Brown et al. (2011) reported an accuracy of 41 % when classifying three classes with pictures as stimuli, compared with 82 % with film clips as stimuli. However, no previous study has used neutral, moving 3D shapes as stimuli to classify either emotion or preference, which motivated this study.

In this novel study, we investigate the possibility of classifying human aesthetic preferences for three-dimensional (3D) shapes using EEG signals. In previous studies of aesthetic preference, visual stimuli were presented as static two-dimensional (2D) images, whereas in this study each stimulus is presented as a rotating 3D object so that its aesthetics can be better appreciated than in a static 2D presentation. Several studies show differences in brain activity between the perception of 2D and 3D stimuli (Cottereau et al. 2014; Todd 2004). Brainwaves are acquired from the scalp with an EEG recording device while subjects view the 3D bracelet-like stimuli. Support vector machine (SVM) and K-nearest neighbor (KNN) classifiers are then used to detect the user's preference from features obtained through time–frequency analysis.

Data acquisition

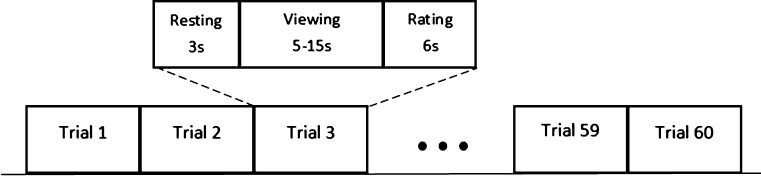

The data acquisition flow is shown in Fig. 1. In each experiment, every subject completed 60 trials, one for each of the 60 3D shape stimuli. Each trial consists of three main states: rest, view and rate.

Fig. 1.

The flow structure of the data acquisition process

At the beginning of each trial, a blank screen is displayed for 3 s as a resting state, to prevent brain activity related to the previous trial from carrying over. This is followed by a viewing state of 5 to 15 s in which the 3D stimulus is shown. Once the 5-s minimum has elapsed, the subject may proceed to the rating state at will by pressing the space bar; if the subject has not done so by 15 s, the system proceeds to the rating state automatically. Letting subjects decide their own viewing time is intended to keep them from becoming bored and fatigued during data acquisition: asking subjects to view and rate stimuli repetitively at fixed intervals can lead to boredom (Shackleton 1981), which may in turn cause fatigue (Craig et al. 2012). Allowing subjects to move to the rating state early saves time and reduces fatigue, since they no longer need to wait for the maximum time before rating. The EEG signals acquired during the rating state were not included in the analysis.
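The viewing-state rule can be expressed as a small function. This is a minimal sketch, not the experiment software: it assumes that a space-bar press before the 5-s minimum is simply ignored (the text only says the subject may proceed after the minimum), and the names `viewing_duration` and `rest_and_view_bounds_s` are hypothetical. It also computes the bounds on rest plus viewing time over 60 trials; rating time is not bounded in the text, which is why the reported experiment durations are longer than these bounds.

```python
REST_S = 3.0        # blank screen before each stimulus
MIN_VIEW_S = 5.0    # earliest time the subject may move on
MAX_VIEW_S = 15.0   # automatic transition to the rating state
N_TRIALS = 60


def viewing_duration(keypress_times_s):
    """Seconds the stimulus is shown, given space-bar press times after onset.

    Presses before MIN_VIEW_S are ignored (an assumption); with no valid
    press, the system moves on at MAX_VIEW_S.
    """
    for t in sorted(keypress_times_s):
        if MIN_VIEW_S <= t <= MAX_VIEW_S:
            return t
    return MAX_VIEW_S


def rest_and_view_bounds_s(n_trials=N_TRIALS):
    """Minimum and maximum total rest + viewing time, excluding rating."""
    return n_trials * (REST_S + MIN_VIEW_S), n_trials * (REST_S + MAX_VIEW_S)


print(viewing_duration([2.1, 6.4]))   # 6.4: the early press is ignored
print(viewing_duration([]))           # 15.0: automatic transition
print(rest_and_view_bounds_s())       # (480.0, 1080.0) seconds, i.e. 8 to 18 min
```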

After the viewing state, a rating scale from 1 to 5 (1: like very much, 2: like, 3: undecided, 4: do not like, 5: do not like at all), adopted from related studies (Hadjidimitriou and Hadjileontiadis 2012, 2013), is displayed to the subject. A 5-point scale was chosen because the shapes are new to the subjects: subjects may express strong feelings toward stimuli early in the experiment, and the scale leaves enough room to rate later stimuli relative to those early ones. Each experiment lasted between 14 and 24 min overall.

Stimuli

Recognition of preference for 3D stimuli is a new area of research, so this study used a simple and controllable generation method to produce neutral shapes of varying complexity. The stimuli are bracelet-like 3D objects generated using the Gielis superformula (Gielis 2003). Modifying the parameters of the superformula (1) allows a wide variety of natural and elegantly designed shapes to be generated.

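$$
r(\phi) = \left( \left| \frac{\cos\left(\frac{m\phi}{4}\right)}{a} \right|^{n_2} + \left| \frac{\sin\left(\frac{m\phi}{4}\right)}{b} \right|^{n_3} \right)^{-\frac{1}{n_1}} \tag{1}
$$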

where r is the radius, ϕ is the angle, a > 0 and b > 0 control the size of the supershape, m is the symmetry number, and n1, n2 and n3 are the shape coefficients.
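Equation (1) is straightforward to evaluate numerically. The sketch below is a minimal NumPy version of the 2D superformula only; it does not reproduce the 3D bracelet construction, whose exact use of the parameters c and t is not given here. The function name `superformula` and the example parameter values are illustrative choices, not the stimuli used in the study.

```python
import numpy as np


def superformula(phi, m, n1, n2, n3, a=1.0, b=1.0):
    """Radius r(phi) of the Gielis superformula, Eq. (1)."""
    angle = m * np.asarray(phi, dtype=float) / 4.0
    return (np.abs(np.cos(angle) / a) ** n2
            + np.abs(np.sin(angle) / b) ** n3) ** (-1.0 / n1)


phi = np.linspace(-np.pi, np.pi, 361)
circle = superformula(phi, m=4, n1=2, n2=2, n3=2)   # cos^2 + sin^2 = 1, so r = 1
star = superformula(phi, m=5, n1=2, n2=7, n3=7)     # five-fold symmetric (n2 = n3)
x, y = star * np.cos(phi), star * np.sin(phi)       # polar to Cartesian outline
```

With n2 = n3 and a = b, the radius repeats every 2π/m in ϕ, which is why m acts as the symmetry number.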

By combining two superformulas through the spherical product, as shown in (2), (3) and (4), the generated shapes are extended to three dimensions.

| 2 |

| 3 |

| 4 |

where −π ≤ θ < π and ≤ ϕ < .

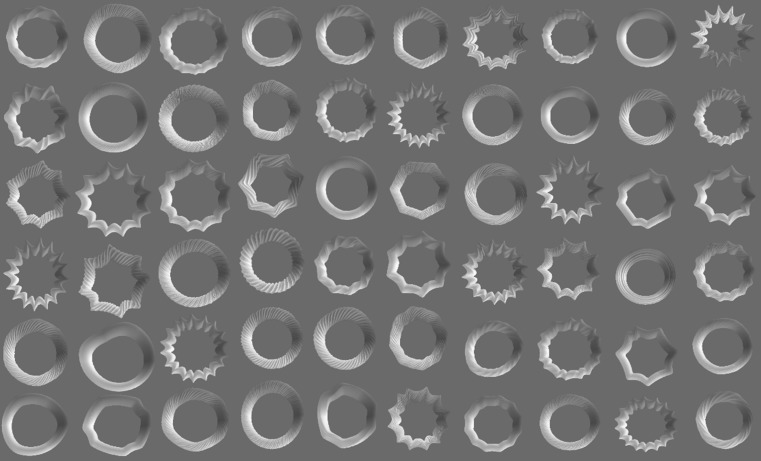

A total of 10 parameters (m1, n11, n12, n13, m2, n21, n22, n23, c and t) are required to generate a 3D bracelet model. The 60 bracelet models shown in Fig. 2 were generated using random real numbers between 0 and 20 for parameters m1, n11, n13, m2, n21 and n23, random real numbers between 15 and 30 for n12 and n22, and a random integer between 0 and 10 for c, with t fixed at 5.5 to maintain the radius of the generated shape. Random generation was intended to produce both pleasing and non-pleasing shapes, and the ranges were chosen so that the shapes would look as close as possible to a bracelet-like object while still varying enough to include both. Jewellery, including bracelets, is a commercially significant class of 3D objects for which aesthetic preference is an important factor in purchasing decisions.
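The random draw of parameters can be sketched as follows. This assumes uniform sampling within the stated ranges (the text does not name the distribution or whether the endpoints are inclusive), and the function name and seed are hypothetical.

```python
import numpy as np


def random_bracelet_parameters(rng):
    """Draw one hypothetical parameter set using the ranges stated in the text."""
    return {
        "m1": rng.uniform(0.0, 20.0),
        "n11": rng.uniform(0.0, 20.0),
        "n12": rng.uniform(15.0, 30.0),
        "n13": rng.uniform(0.0, 20.0),
        "m2": rng.uniform(0.0, 20.0),
        "n21": rng.uniform(0.0, 20.0),
        "n22": rng.uniform(15.0, 30.0),
        "n23": rng.uniform(0.0, 20.0),
        "c": int(rng.integers(0, 11)),  # integer in 0..10 inclusive
        "t": 5.5,                       # fixed to keep the bracelet radius constant
    }


rng = np.random.default_rng(seed=2016)  # seed chosen arbitrarily for reproducibility
stimuli = [random_bracelet_parameters(rng) for _ in range(60)]
```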

Fig. 2.

The 60 3D stimuli generated by Superformula

Humans can perceive 3D structure from 2D presentations through different cues, such as shading and texture (Georgieva et al. 2008), and motion is a natural factor in recognizing objects (Vuong and Tarr 2004). The 3D stimuli were therefore displayed on a computer screen rotating about different axes, so that they could be viewed from different angles. A rotating presentation was also chosen because humans can represent 2D or 3D objects in their minds as rotating, an ability known as mental rotation (Shepard and Metzler 1971). Each stimulus was presented to each subject only once to avoid a mere exposure effect (Zajonc 2001).

Subjects

A total of five subjects (3 females and 2 males) with an age range of 22–26 (mean age = 22.8) were selected to participate in this study.

None of the subjects had a history of psychiatric illness, and all had normal or corrected-to-normal vision. All subjects were informed of the aim, estimated duration and design of the study before the experiment. To reduce artefacts in the acquired EEG signals, subjects were asked to minimize their movements, to sit in their most comfortable position, and not to touch their faces or rest their hands on them during the experiment.

Consent was obtained from all subjects prior to conducting any tests.

Data acquisition device

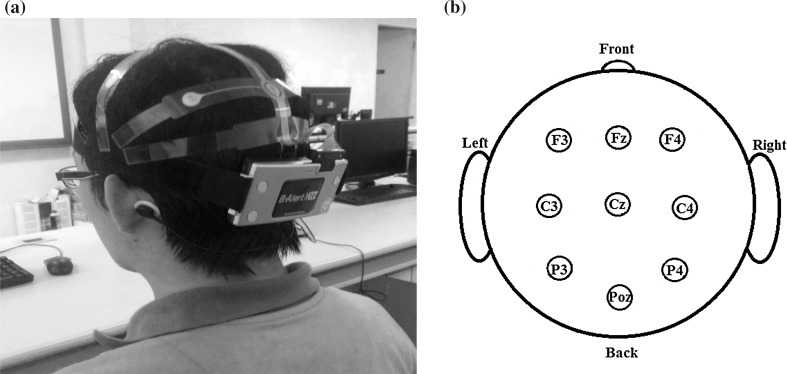

A medical-grade wireless EEG device from Advanced Brain Monitoring (ABM), the B-Alert X10 headset shown in Fig. 3a, was used in this study to acquire EEG signals from the subjects.

Fig. 3.

a The ABM B-Alert X10 headset. b Electrode positions of the ABM B-Alert X10 according to the international 10–20 electrode placement system

The B-Alert X10 has 9 electrode channels (F3, Fz, F4, C3, Cz, C4, P3, POz and P4) placed on the subject's scalp according to the international 10–20 electrode placement system, as shown in Fig. 3b, with reference electrodes on the left and right mastoids.

The sampling frequency of the device is 256 Hz with a resolution of 16 bits.

Signal processing and feature extraction

Because humans can process visual stimuli rapidly, in around 150 ms (Thorpe et al. 1996), a fixed viewing window of 5 s was used for feature extraction. Since 5 s is also the minimum viewing time, this window is available in every trial, and in most trials subjects chose to proceed to the rating state at or shortly after the 5-s mark. Classification using the fixed 5-s window performed better than classification using the total viewing time.

The acquired EEG signals are decontaminated automatically by the ABM software development kit (SDK) in MATLAB, which handles five types of artefacts: EMG (electromyography), eye blinks, excursions, saturations and spikes. The ABM SDK replaces samples affected by excursions, saturations and spikes with zero values, and nearest neighbor interpolation (NNI) (Garcia 2010) is then applied to replace these zero values.
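The zero-filling step can be sketched as follows. This is a plain nearest-sample replacement written for illustration: it assumes that a value of exactly zero marks an artefact-rejected sample, as described for the ABM SDK, and it does not reproduce the smoothing method of Garcia (2010). The function name is hypothetical.

```python
import numpy as np


def fill_zeros_nearest(signal):
    """Replace zero-valued samples with the value of the nearest non-zero sample.

    Assumes zeros mark rejected samples; a genuine 0.0 reading would also be
    replaced. On an exact tie, the earlier (left) neighbour is used.
    """
    x = np.asarray(signal, dtype=float).copy()
    bad = x == 0.0
    if bad.all():
        raise ValueError("no valid samples to interpolate from")
    good_idx = np.flatnonzero(~bad)
    bad_idx = np.flatnonzero(bad)
    # Position of each bad index among the sorted good indices.
    pos = np.searchsorted(good_idx, bad_idx)
    left = good_idx[np.clip(pos - 1, 0, len(good_idx) - 1)]
    right = good_idx[np.clip(pos, 0, len(good_idx) - 1)]
    nearest = np.where(np.abs(bad_idx - left) <= np.abs(right - bad_idx), left, right)
    x[bad_idx] = x[nearest]
    return x


print(fill_zeros_nearest([0, 1.0, 0, 0, 4.0, 0]))  # [1. 1. 1. 4. 4. 4.]
```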

The features of interest in this experiment are derived from five frequency bands, alpha (8–13 Hz), beta (13–30 Hz), gamma (30–49 Hz), theta (4–8 Hz) and delta (1–4 Hz), because increases and decreases in each rhythm reflect different brain states.

This experiment adopts the feature extraction method of Hadjidimitriou and Hadjileontiadis (2012, 2013), in which feature estimation is based on event-related synchronization/desynchronization (ERS/ERD) theory. The feature F is computed as in (5) to obtain quantities relevant to brain activation.

$$
F = \frac{A - R}{R} \tag{5}
$$

where A represents the estimated quantity during stimulus viewing (SV) and R represents the estimated quantity during rest state (RS).

For each trial j, channel i and frequency band (fb), A is computed as the average of the time–frequency (TF) values within that band over time, as in (6), and R is computed in the same way, as in (7).

| 6 |

| 7 |

where, for each trial j and channel i, the TF values are averaged within each of the five frequency bands and over time, normalized by the number of data points in the frequency band of interest, and [t, f] denotes a discrete (time, frequency) point in the TF plane.

The short-time Fourier transform (STFT) was computed with MATLAB's built-in spectrogram function, using segments of 256 samples, a Hamming window, 256 discrete Fourier transform (DFT) points, no overlap between segments, and a sampling rate of 256 Hz.
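The feature pipeline described above (STFT, band averaging, relative change from rest) can be sketched with NumPy alone. This mirrors the stated spectrogram parameters but rests on assumptions that the text does not confirm: the TF values are taken to be squared STFT magnitudes, band edges are treated as half-open intervals, and F is computed as the usual ERD/ERS relative change F = (A − R)/R. All function names are hypothetical.

```python
import numpy as np

FS = 256    # sampling frequency (Hz)
NFFT = 256  # segment length and number of DFT points
# Band edges from the text; treating them as half-open [low, high) intervals is
# an assumption so that shared edges (e.g. 8 Hz) fall in exactly one band.
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 13),
         "beta": (13, 30), "gamma": (30, 49)}


def stft_power(x):
    """|STFT|^2 of non-overlapping, Hamming-windowed 256-sample segments."""
    x = np.asarray(x, dtype=float)
    n_seg = len(x) // NFFT                       # a trailing partial segment is dropped
    segments = x[: n_seg * NFFT].reshape(n_seg, NFFT) * np.hamming(NFFT)
    spectrum = np.fft.rfft(segments, n=NFFT, axis=1)
    freqs = np.fft.rfftfreq(NFFT, d=1.0 / FS)    # 0, 1, ..., 128 Hz
    return freqs, np.abs(spectrum) ** 2          # shape (n_seg, NFFT // 2 + 1)


def band_means(x):
    """Average TF value in each band over all segments (the role of A or R)."""
    freqs, power = stft_power(x)
    return {name: power[:, (freqs >= lo) & (freqs < hi)].mean()
            for name, (lo, hi) in BANDS.items()}


def ers_erd_features(view, rest):
    """Relative change F = (A - R) / R per band (assumed ERD/ERS form)."""
    a, r = band_means(view), band_means(rest)
    return {name: (a[name] - r[name]) / r[name] for name in BANDS}


# Synthetic check: a 10 Hz sine whose amplitude doubles from rest (3 s) to
# viewing (first 5 s), so alpha power rises fourfold and F_alpha is about 3.
t_rest = np.arange(3 * FS) / FS
t_view = np.arange(5 * FS) / FS
F = ers_erd_features(2.0 * np.sin(2 * np.pi * 10 * t_view),
                     np.sin(2 * np.pi * 10 * t_rest))
print(round(F["alpha"], 3))  # 3.0
```

Because the window, DFT length and sampling rate are all 256, each DFT bin is exactly 1 Hz wide, so the band masks select whole-Hz bins.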

The numbers of feature vectors (F) in the like and dislike classes for the training and testing sets of each subject are shown in Table 1. The training set contains 79 like and 102 dislike feature vectors, and the testing set contains 16 like and 40 dislike feature vectors.

Table 1.

Training and testing feature for each subject

| Subject | Training: Like F | Training: Dislike F | Training: Total F | Testing: Like F | Testing: Dislike F | Testing: Total F |
|---|---|---|---|---|---|---|
| 1 | 16 | 14 | 30 | 1 | 8 | 9 |
| 2 | 11 | 20 | 31 | 1 | 7 | 8 |
| 3 | 21 | 24 | 45 | 6 | 9 | 15 |
| 4 | 15 | 17 | 32 | 4 | 7 | 11 |
| 5 | 16 | 27 | 43 | 4 | 9 | 13 |
| Total | 79 | 102 | 181 | 16 | 40 | 56 |
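The totals in Table 1 can be checked by summing the per-subject counts. The sketch also derives two quantities that follow directly from the table and are useful when reading Tables 2 and 3: the overall train-to-test ratio, and the accuracy that a classifier would obtain by always predicting Dislike on the 56 test vectors. Each test vector is worth about 1.8 percentage points of accuracy, so all reported accuracies are multiples of 1/56.

```python
# Counts copied from Table 1: (train_like, train_dislike, test_like, test_dislike)
TABLE_1 = {
    1: (16, 14, 1, 8),
    2: (11, 20, 1, 7),
    3: (21, 24, 6, 9),
    4: (15, 17, 4, 7),
    5: (16, 27, 4, 9),
}

train_like = sum(row[0] for row in TABLE_1.values())
train_dislike = sum(row[1] for row in TABLE_1.values())
test_like = sum(row[2] for row in TABLE_1.values())
test_dislike = sum(row[3] for row in TABLE_1.values())

n_train = train_like + train_dislike
n_test = test_like + test_dislike
train_to_test = n_train / n_test                 # close to the stated 3:1
majority_accuracy = 100 * test_dislike / n_test  # always predicting "Dislike"
accuracy_step = 100 / n_test                     # one test vector, in percent

print(f"train {train_like}/{train_dislike}, test {test_like}/{test_dislike}")
print(f"train:test = {train_to_test:.2f}:1, majority-class accuracy = {majority_accuracy:.1f} %")
```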

Classification

The classifiers used are a Gaussian-kernel support vector machine (SVM) (Gunn 1998) with the box constraint set to 1, and a K-nearest neighbor (KNN) classifier (Song et al. 2007) with the number of nearest neighbors k set to 4; values of k from 4 to 8 were tested, and k = 4 gave the best average accuracy. Both classifiers were built with MATLAB's default functions, fitcsvm for SVM and fitcknn for KNN. Hadjidimitriou and Hadjileontiadis (2012) and Moon et al. (2013), who also used brain rhythms to classify human preference, likewise selected k = 4. The features were grouped into two classes, Like and Dislike.
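The KNN rule with k = 4 can be sketched in a few lines. This is a minimal NumPy illustration, not MATLAB's fitcknn: it assumes Euclidean distance and breaks 2–2 ties in favour of the smallest class label. MATLAB's default tie-breaking is believed to behave similarly, but treat that as an assumption. The function name and the toy data are hypothetical.

```python
import numpy as np


def knn_predict(X_train, y_train, X_test, k=4):
    """Majority vote among the k nearest training vectors (Euclidean distance)."""
    X_train = np.asarray(X_train, dtype=float)
    X_test = np.asarray(X_test, dtype=float)
    y_train = np.asarray(y_train)
    classes = np.unique(y_train)                 # sorted class labels
    predictions = []
    for x in X_test:
        dist = np.linalg.norm(X_train - x, axis=1)
        nearest = np.argsort(dist, kind="stable")[:k]
        votes = np.array([(y_train[nearest] == c).sum() for c in classes])
        # np.argmax returns the first maximum, so a 2-2 tie goes to the
        # smallest class label (an assumed tie-breaking rule).
        predictions.append(classes[np.argmax(votes)])
    return np.array(predictions)


# Toy data: label 0 stands for Dislike and 1 for Like (hypothetical coding).
X_train = [[0, 0], [0, 1], [1, 0], [1, 1], [10, 10], [10, 11], [11, 10], [11, 11]]
y_train = [0, 0, 0, 0, 1, 1, 1, 1]
print(knn_predict(X_train, y_train, [[0.5, 0.5], [10.5, 10.5]]))  # [0 1]
```

For the SVM, scikit-learn's `SVC(kernel="rbf", C=1.0, gamma=1.0)` is one plausible analogue of a Gaussian-kernel `fitcsvm` model with box constraint 1 and an unscaled kernel; the text does not state the kernel scale, so this correspondence is an assumption.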

The Like class contains the trials that subjects rated as like or like very much, while the Dislike class contains the trials rated as do not like or do not like at all. Trials rated as undecided (the neutral rating, 3) were excluded. The ratio of training to testing cases is approximately 3:1 (181:56 overall).
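The labelling rule can be written as a lookup keyed by the verbal rating labels, which avoids depending on the numeric coding of the scale. The 3:1 split is shown as a hypothetical random split; the text does not say how trials were assigned to the training and testing sets. All names are illustrative.

```python
import numpy as np

# Verbal labels of the 5-point scale; Like/Dislike grouping as described above.
RATING_TO_CLASS = {
    "like very much": "Like",
    "like": "Like",
    "undecided": None,            # neutral trials are excluded
    "do not like": "Dislike",
    "do not like at all": "Dislike",
}


def label_trials(ratings):
    """Return (trial_indices, labels) for trials that fall in Like or Dislike."""
    kept = [(i, RATING_TO_CLASS[r]) for i, r in enumerate(ratings)
            if RATING_TO_CLASS[r] is not None]
    return [i for i, _ in kept], [c for _, c in kept]


def split_3_to_1(n_items, rng):
    """Hypothetical random 3:1 split of item positions into train and test."""
    order = rng.permutation(n_items)
    n_train = int(round(0.75 * n_items))
    return np.sort(order[:n_train]), np.sort(order[n_train:])


idx, labels = label_trials(["like", "undecided", "do not like at all", "like very much"])
print(idx, labels)  # [0, 2, 3] ['Like', 'Dislike', 'Like']
```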

Results

Classification was tested with several combinations and selections of channels, particularly the frontal channels (F3, F4 and Fz), since the frontal lobe is believed to contribute to aesthetic responses in humans (Liu et al. 2010; Schmidt and Trainor 2001; Wang et al. 2011), and the parietal-occipital midline channel (POz), which has been found to be involved in processing 3D shape from motion (Paradis et al. 2000). Both classifiers were tested with 25 different combinations of rhythms and 13 different combinations of channels.
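Each channel/rhythm combination defines a different feature vector. A minimal sketch of how such vectors could be assembled, assuming the F values are stored as an array of shape (trials, channels, bands) and concatenated channel by channel; the array layout, channel order and function name are hypothetical.

```python
import numpy as np

# Channel and band orders are assumptions made for this illustration.
CHANNELS = ["F3", "Fz", "F4", "C3", "Cz", "C4", "P3", "POz", "P4"]
BANDS = ["alpha", "beta", "gamma", "theta", "delta"]


def select_features(F, channels, bands):
    """Concatenate F values for the chosen channels and bands.

    F has shape (n_trials, len(CHANNELS), len(BANDS)); the result has shape
    (n_trials, len(channels) * len(bands)), ordered channel by channel.
    """
    F = np.asarray(F)
    ci = [CHANNELS.index(c) for c in channels]
    bi = [BANDS.index(b) for b in bands]
    return F[:, ci][:, :, bi].reshape(F.shape[0], -1)


# Example: the best KNN setting, Fz, F3 and F4 with alpha, theta and delta.
F = np.arange(2 * 9 * 5, dtype=float).reshape(2, 9, 5)  # stand-in for real features
X = select_features(F, ["Fz", "F3", "F4"], ["alpha", "theta", "delta"])
print(X.shape)  # (2, 9)
```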

The classification results for KNN and SVM are shown in Table 2 and Table 3, respectively.

Table 2.

Classification accuracy by using KNN

| Rhythms | All | Fz F3 F4 | F3 F4 | F3 F4 POz | F3 F4 Fz POz | F3 | F3 POz | F4 | F4 POz | F4 Fz | POz | F3 F4 P3 P4 | P3 P4 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| α (%) | 59 | 59 | 61 | 64 | 61 | 61 | 64 | 66 | 61 | 55 | 55 | 55 | 54 |
| β (%) | 63 | 61 | 52 | 55 | 64 | 59 | 63 | 66 | 63 | 66 | 63 | 63 | 64 |
| θ (%) | 70 | 68 | 64 | 64 | 66 | 66 | 63 | 61 | 66 | 71 | 63 | 70 | 66 |
| γ (%) | 57 | 57 | 61 | 61 | 59 | 64 | 64 | 59 | 64 | 54 | 55 | 64 | 70 |
| δ (%) | 68 | 71 | 73 | 73 | 70 | 66 | 71 | 68 | 63 | 68 | 63 | 73 | 57 |
| α γ β (%) | 59 | 61 | 55 | 59 | 59 | 63 | 63 | 59 | 57 | 57 | 64 | 54 | 59 |
| β γ (%) | 55 | 61 | 66 | 54 | 68 | 57 | 59 | 68 | 55 | 63 | 75 | 57 | 63 |
| α β (%) | 59 | 57 | 54 | 55 | 63 | 66 | 66 | 55 | 52 | 59 | 61 | 50 | 61 |
| α β γ δ θ (%) | 68 | 71 | 79 | 70 | 70 | 68 | 57 | 77 | 70 | 75 | 63 | 77 | 61 |
| α β γ δ (%) | 64 | 71 | 71 | 64 | 63 | 68 | 70 | 55 | 59 | 63 | 59 | 68 | 61 |
| α θ (%) | 63 | 66 | 64 | 55 | 61 | 59 | 66 | 63 | 57 | 63 | 57 | 68 | 57 |
| γ θ (%) | 71 | 66 | 64 | 64 | 66 | 75 | 57 | 64 | 70 | 68 | 59 | 71 | 66 |
| θ δ (%) | 70 | 71 | 71 | 66 | 68 | 64 | 64 | 71 | 70 | 71 | 55 | 73 | 68 |
| α γ β θ (%) | 57 | 64 | 61 | 61 | 61 | 63 | 64 | 68 | 66 | 68 | 55 | 59 | 71 |
| β γ θ (%) | 71 | 68 | 71 | 70 | 73 | 64 | 63 | 50 | 63 | 68 | 57 | 68 | 61 |
| α β θ (%) | 57 | 66 | 59 | 63 | 61 | 63 | 63 | 68 | 66 | 68 | 55 | 63 | 68 |
| α γ θ (%) | 63 | 63 | 63 | 57 | 59 | 61 | 66 | 64 | 61 | 64 | 61 | 63 | 63 |
| β θ (%) | 71 | 68 | 73 | 71 | 70 | 66 | 64 | 46 | 64 | 68 | 61 | 63 | 63 |
| γ θ δ (%) | 70 | 71 | 71 | 70 | 68 | 64 | 64 | 70 | 71 | 70 | 55 | 73 | 68 |
| α γ (%) | 63 | 57 | 61 | 64 | 59 | 66 | 64 | 61 | 59 | 57 | 55 | 55 | 52 |
| α δ θ (%) | 70 | 80 | 79 | 68 | 71 | 71 | 57 | 73 | 71 | 73 | 63 | 77 | 57 |
| α β δ θ (%) | 71 | 71 | 79 | 68 | 70 | 68 | 59 | 77 | 64 | 73 | 64 | 77 | 63 |
| β δ θ (%) | 64 | 70 | 70 | 70 | 71 | 63 | 68 | 68 | 70 | 70 | 52 | 71 | 61 |
| α δ (%) | 63 | 70 | 73 | 64 | 61 | 64 | 73 | 66 | 61 | 64 | 52 | 70 | 57 |

Where α represents alpha, β represents beta, θ represents theta, γ represents gamma, and δ represents delta rhythm bands respectively

Table 3.

Classification accuracy by using SVM

| Rhythms | All | Fz F3 F4 | F3 F4 | F3 F4 POz | F3 F4 Fz POz | F3 | F3 POz | F4 | F4 POz | F4 Fz | POz | F3 F4 P3 P4 | P3 P4 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| α (%) | 61 | 61 | 70 | 68 | 61 | 71 | 71 | 77 | 77 | 64 | 68 | 70 | 55 |
| β (%) | 64 | 70 | 71 | 71 | 68 | 71 | 73 | 71 | 73 | 71 | 71 | 70 | 68 |
| θ (%) | 70 | 68 | 64 | 59 | 63 | 71 | 68 | 70 | 61 | 57 | 71 | 70 | 66 |
| γ (%) | 71 | 71 | 71 | 71 | 71 | 71 | 71 | 71 | 71 | 71 | 71 | 71 | 71 |
| δ (%) | 66 | 64 | 71 | 68 | 70 | 68 | 71 | 71 | 68 | 63 | 66 | 57 | 70 |
| α γ β (%) | 61 | 55 | 57 | 64 | 59 | 71 | 66 | 68 | 66 | 61 | 66 | 68 | 59 |
| β γ (%) | 63 | 71 | 71 | 70 | 64 | 71 | 70 | 71 | 71 | 71 | 71 | 70 | 68 |
| α β (%) | 59 | 59 | 59 | 63 | 63 | 70 | 66 | 66 | 68 | 61 | 68 | 66 | 59 |
| α β γ δ θ (%) | 71 | 73 | 71 | 71 | 71 | 64 | 66 | 73 | 73 | 68 | 68 | 71 | 66 |
| α β γ δ (%) | 71 | 70 | 64 | 66 | 70 | 73 | 66 | 68 | 66 | 66 | 61 | 71 | 63 |
| α θ (%) | 71 | 71 | 75 | 68 | 68 | 71 | 64 | 68 | 70 | 66 | 68 | 77 | 55 |
| γ θ (%) | 70 | 70 | 63 | 61 | 59 | 71 | 68 | 61 | 66 | 55 | 71 | 70 | 66 |
| θ δ (%) | 71 | 73 | 64 | 70 | 73 | 66 | 64 | 70 | 73 | 64 | 64 | 70 | 63 |
| α γ β θ (%) | 71 | 68 | 70 | 70 | 71 | 66 | 66 | 66 | 66 | 66 | 71 | 73 | 55 |
| β γ θ (%) | 75 | 63 | 66 | 61 | 66 | 71 | 66 | 71 | 64 | 61 | 68 | 70 | 70 |
| α β θ (%) | 71 | 70 | 75 | 70 | 71 | 70 | 64 | 64 | 66 | 61 | 70 | 75 | 61 |
| α γ θ (%) | 71 | 70 | 71 | 70 | 71 | 71 | 63 | 71 | 70 | 66 | 68 | 77 | 55 |
| β θ (%) | 73 | 63 | 63 | 61 | 70 | 71 | 66 | 68 | 64 | 64 | 68 | 70 | 68 |
| γ θ δ (%) | 71 | 70 | 66 | 70 | 73 | 68 | 63 | 70 | 73 | 61 | 64 | 70 | 63 |
| α γ (%) | 61 | 61 | 73 | 66 | 63 | 71 | 68 | 79 | 75 | 64 | 68 | 66 | 61 |
| α δ θ (%) | 71 | 73 | 71 | 70 | 73 | 64 | 64 | 75 | 68 | 71 | 61 | 71 | 63 |
| α β δ θ (%) | 71 | 73 | 71 | 71 | 73 | 64 | 64 | 73 | 73 | 68 | 68 | 71 | 66 |
| β δ θ (%) | 71 | 73 | 64 | 68 | 71 | 71 | 66 | 70 | 68 | 63 | 61 | 70 | 66 |
| α δ (%) | 70 | 70 | 70 | 64 | 68 | 70 | 71 | 71 | 68 | 71 | 59 | 66 | 63 |

Where α represents alpha, β represents beta, θ represents theta, γ represents gamma, and δ represents delta rhythm bands respectively

The accuracy of the KNN classifier ranges from 46 to 80 %, while that of the SVM classifier ranges from 55 to 79 %. The mean accuracy is 68 % for the SVM classifier and 64 % for the KNN classifier.
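The range and mean figures above can be recomputed from Tables 2 and 3. The sketch below copies the 24 × 13 accuracies of each table in row order (values as printed, already rounded to whole percentages) and reports the minimum, maximum and mean; because the printed values are rounded, the recomputed means are approximate.

```python
from statistics import mean

# Rows follow the rhythm order of Tables 2 and 3; columns follow the 13 channel sets.
KNN_TABLE = """
59 59 61 64 61 61 64 66 61 55 55 55 54
63 61 52 55 64 59 63 66 63 66 63 63 64
70 68 64 64 66 66 63 61 66 71 63 70 66
57 57 61 61 59 64 64 59 64 54 55 64 70
68 71 73 73 70 66 71 68 63 68 63 73 57
59 61 55 59 59 63 63 59 57 57 64 54 59
55 61 66 54 68 57 59 68 55 63 75 57 63
59 57 54 55 63 66 66 55 52 59 61 50 61
68 71 79 70 70 68 57 77 70 75 63 77 61
64 71 71 64 63 68 70 55 59 63 59 68 61
63 66 64 55 61 59 66 63 57 63 57 68 57
71 66 64 64 66 75 57 64 70 68 59 71 66
70 71 71 66 68 64 64 71 70 71 55 73 68
57 64 61 61 61 63 64 68 66 68 55 59 71
71 68 71 70 73 64 63 50 63 68 57 68 61
57 66 59 63 61 63 63 68 66 68 55 63 68
63 63 63 57 59 61 66 64 61 64 61 63 63
71 68 73 71 70 66 64 46 64 68 61 63 63
70 71 71 70 68 64 64 70 71 70 55 73 68
63 57 61 64 59 66 64 61 59 57 55 55 52
70 80 79 68 71 71 57 73 71 73 63 77 57
71 71 79 68 70 68 59 77 64 73 64 77 63
64 70 70 70 71 63 68 68 70 70 52 71 61
63 70 73 64 61 64 73 66 61 64 52 70 57
"""

SVM_TABLE = """
61 61 70 68 61 71 71 77 77 64 68 70 55
64 70 71 71 68 71 73 71 73 71 71 70 68
70 68 64 59 63 71 68 70 61 57 71 70 66
71 71 71 71 71 71 71 71 71 71 71 71 71
66 64 71 68 70 68 71 71 68 63 66 57 70
61 55 57 64 59 71 66 68 66 61 66 68 59
63 71 71 70 64 71 70 71 71 71 71 70 68
59 59 59 63 63 70 66 66 68 61 68 66 59
71 73 71 71 71 64 66 73 73 68 68 71 66
71 70 64 66 70 73 66 68 66 66 61 71 63
71 71 75 68 68 71 64 68 70 66 68 77 55
70 70 63 61 59 71 68 61 66 55 71 70 66
71 73 64 70 73 66 64 70 73 64 64 70 63
71 68 70 70 71 66 66 66 66 66 71 73 55
75 63 66 61 66 71 66 71 64 61 68 70 70
71 70 75 70 71 70 64 64 66 61 70 75 61
71 70 71 70 71 71 63 71 70 66 68 77 55
73 63 63 61 70 71 66 68 64 64 68 70 68
71 70 66 70 73 68 63 70 73 61 64 70 63
61 61 73 66 63 71 68 79 75 64 68 66 61
71 73 71 70 73 64 64 75 68 71 61 71 63
71 73 71 71 73 64 64 73 73 68 68 71 66
71 73 64 68 71 71 66 70 68 63 61 70 66
70 70 70 64 68 70 71 71 68 71 59 66 63
"""


def parse_table(text):
    """Flatten a whitespace-separated table of integer accuracies (%)."""
    rows = [[int(v) for v in line.split()] for line in text.strip().splitlines()]
    if any(len(row) != 13 for row in rows):
        raise ValueError("each row should have 13 channel sets")
    return [v for row in rows for v in row]


for name, table in (("KNN", KNN_TABLE), ("SVM", SVM_TABLE)):
    values = parse_table(table)
    print(f"{name}: {min(values)}-{max(values)} %, mean {mean(values):.1f} %")
```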

Several combinations of rhythms and channels produced accuracies of up to 79 % with SVM. Combinations reaching 75 % include channels F3 and F4 with alpha and theta rhythms; F3 and F4 with alpha, beta and theta rhythms; F4 and POz with alpha and gamma rhythms; F3, F4, P3 and P4 with alpha, beta and theta rhythms; F4 with alpha, theta and delta rhythms; and all channels with beta, gamma and theta rhythms. F4 with the alpha rhythm, F4 and POz with the alpha rhythm, F3, F4, P3 and P4 with alpha, gamma and theta rhythms, and F3, F4, P3 and P4 with alpha and theta rhythms produced 77 %, while F4 with alpha and gamma rhythms produced the best SVM accuracy of 79 %.

The KNN classifier produced accuracies of up to 80 %. Combinations reaching 75 % include F3 with gamma and theta rhythms, POz with beta and gamma rhythms, and F4 and Fz with all rhythms, whereas combinations reaching 77 % include F4 with all rhythms; F4 with alpha, beta, theta and delta rhythms; F3, F4, P3 and P4 with alpha, beta, theta and delta rhythms; F3, F4, P3 and P4 with alpha, theta and delta rhythms; and F3, F4, P3 and P4 with all rhythms. An accuracy of 79 % was obtained using channels F3 and F4 with all rhythms, with alpha, beta, theta and delta rhythms, and with alpha, theta and delta rhythms.

For both classifiers, rhythms from the left and right frontal channels achieved accuracies of up to 79 %. Channels F3 and F4 are located over the left and right DLPFC, respectively. The DLPFC plays an important role in several cognitive processes, e.g. working memory (Pochon et al. 2001), emotion processing (Grimm et al. 2008) and moral decision-making (Jeurissen et al. 2014). The left DLPFC is activated when memorizing verbal stimuli, whereas the right DLPFC is activated when memorizing visual stimuli (Geuze et al. 2008). This might explain why channel F4 obtained 75 % using SVM with alpha, theta and delta rhythms; 77 % using KNN with all rhythms, KNN with alpha, beta, theta and delta rhythms, and SVM with the alpha rhythm; and 79 % using SVM with alpha and gamma rhythms, where the gamma rhythm is believed to play a part in memory encoding and retrieval (Jackson et al. 2011). In addition, suppression of low alpha activity at right frontal channels indicates high arousal (Mikutta et al. 2012), which may be related to preference.

In addition, channels F3 and F4 with alpha and theta rhythms, and with alpha, beta and theta rhythms, obtained 75 % using SVM, while F3 and F4 with all rhythms, with alpha, beta, theta and delta rhythms, and with alpha, theta and delta rhythms obtained 79 % using KNN. This suggests that channels located over the DLPFC are suitable for classifying human preference for moving 3D shapes, whereas using all channels, or channel POz alone, did not yield higher accuracies. Furthermore, alpha, theta and delta rhythms from all three frontal channels (Fz, F3 and F4) achieved 80 % with KNN. This suggests that rhythms from the frontal channels are informative and suitable for identifying human preference for 3D shapes.

Using rhythms from only the left and right parietal channels (P3 and P4, respectively) did not yield an accuracy above 71 % with either classifier. However, when these were combined with rhythms from the left and right DLPFC channels (F3, F4, P3 and P4), accuracies of up to 77 % were obtained with both classifiers.

Conclusion and future work

The aim of this study was to investigate the feasibility of recognizing aesthetic preferences for moving 3D shapes using EEG signals. This paper described the process and approach for recognizing human preference for bracelet-like 3D shapes.

EEG signals were acquired from 5 subjects using the 9 channels of the ABM B-Alert X10 BCI device. The decontaminated signals underwent NNI, followed by feature extraction using time–frequency analysis to obtain the alpha, beta, gamma, theta and delta rhythms. SVM and KNN classifiers were trained with different combinations and selections of rhythms and EEG channels, and then tested on a separate set of data to measure their accuracy. In general, the SVM classifiers classified the EEG signals more consistently than the KNN classifiers, with a higher mean accuracy (68 % vs. 64 %) and a narrower accuracy range (55–79 % vs. 46–80 %).

The best accuracy, 80 %, was obtained with KNN using alpha, theta and delta rhythms from the three frontal channels of the ABM B-Alert X10 (Fz, F3 and F4). The experimental results suggest that rhythms from frontal channels are suitable for identifying human preference for moving 3D shapes.

In future work, we plan to continue investigating specific channels and rhythms in order to optimize accuracy with a minimum number of channels and rhythms, and to investigate different machine learning classifiers and features. In the near future, preference recognition will also be implemented in a real-time application such as interactive evolutionary computation (IEC) to optimize shapes in different domains without physical interaction; the optimized shapes could then be printed with 3D printers for real-life use.

Acknowledgments

This project is supported through the research Grant Ref: FRGS/2/2013/ICT02/UMS/02/1 from the Ministry of Education, Malaysia.

Contributor Information

Lin Hou Chew, Phone: +60149941909, Email: chewqueenie@hotmail.com.

Jason Teo, Phone: +60128320099, Email: jtwteo@ums.edu.my.

James Mountstephens, Phone: +60128349044, Email: jmountstephens@hotmail.com.

References

- Aurup GMM (2011). User preference extraction from bio-signals: an experimental study (Doctoral dissertation, Concordia University)

- Beam W, Borckardt JJ, Reeves ST, George MS. An efficient and accurate new method for locating the F3 position for prefrontal TMS applications. Brain Stimul. 2009;2(1):50–54. doi: 10.1016/j.brs.2008.09.006.

- Brown S, Dissanayake E (2009) The arts are more than aesthetics: Neuroaesthetics as narrow aesthetics. Neuroaesthetics 43–57

- Brown L, Grundlehner B, Penders J (2011) Towards wireless emotional valence detection from EEG. In: Engineering in Medicine and Biology Society (EMBC), 2011 annual international conference of the IEEE, pp. 2188–2191, August 2011

- Brown C, Randolph AB, Burkhalter JN. The story of taste: using EEGs and self-reports to understand consumer choice. Kennesaw J Undergrad Res. 2012;2(1):5.

- Cela-Conde CJ, Marty G, Maestú F, Ortiz T, Munar E, Fernández A, Roca M, Rosselló J, Quesney F. Activation of the prefrontal cortex in the human visual aesthetic perception. Proc Natl Acad Sci USA. 2004;101(16):6321–6325. doi: 10.1073/pnas.0401427101.

- Cottereau BR, McKee SP, Norcia AM. Dynamics and cortical distribution of neural responses to 2D and 3D motion in human. J Neurophysiol. 2014;111(3):533–543. doi: 10.1152/jn.00549.2013.

- Craig A, Tran Y, Wijesuriya N, Nguyen H. Regional brain wave activity changes associated with fatigue. Psychophysiology. 2012;49(4):574–582. doi: 10.1111/j.1469-8986.2011.01329.x.

- Cupchik GC. Emotion in aesthetics: reactive and reflective models. Poetics. 1995;23(1):177–188. doi: 10.1016/0304-422X(94)00014-W.

- Garcia D. Robust smoothing of gridded data in one and higher dimensions with missing values. Comput Stat Data Anal. 2010;54(4):1167–1178. doi: 10.1016/j.csda.2009.09.020.

- Georgieva SS, Todd JT, Peeters R, Orban GA. The extraction of 3D shape from texture and shading in the human brain. Cereb Cortex. 2008;18(10):2416–2438. doi: 10.1093/cercor/bhn002.

- Geuze E, Vermetten E, Ruf M, de Kloet CS, Westenberg HG. Neural correlates of associative learning and memory in veterans with posttraumatic stress disorder. J Psychiatr Res. 2008;42(8):659–669. doi: 10.1016/j.jpsychires.2007.06.007.

- Gielis J. A generic geometric transformation that unifies a wide range of natural and abstract shapes. Am J Bot. 2003;90(3):333–338. doi: 10.3732/ajb.90.3.333.

- Grimm S, Beck J, Schuepbach D, Hell D, Boesiger P, Bermpohl F, Niehaus L, Boeker H, Northoff G. Imbalance between left and right dorsolateral prefrontal cortex in major depression is linked to negative emotional judgment: an fMRI study in severe major depressive disorder. Biol Psychiatry. 2008;63(4):369–376. doi: 10.1016/j.biopsych.2007.05.033.

- Gunn SR. Support vector machines for classification and regression. Technical report. University of Southampton, Department of Electrical and Computer Science; 1998.

- Hadjidimitriou SK, Hadjileontiadis LJ. Toward an EEG-based recognition of music liking using time-frequency analysis. IEEE Trans Biomed Eng. 2012;59(12):3498–3510. doi: 10.1109/TBME.2012.2217495.

- Hadjidimitriou SK, Hadjileontiadis LJ. EEG-based classification of music appraisal responses using time-frequency analysis and familiarity ratings. IEEE Trans Affect Comput. 2013;4(2):161–172. doi: 10.1109/T-AFFC.2013.6.

- Jackson J, Goutagny R, Williams S. Fast and slow gamma rhythms are intrinsically and independently generated in the subiculum. J Neurosci. 2011;31(34):12104–12117. doi: 10.1523/JNEUROSCI.1370-11.2011.

- Jeurissen D, Sack AT, Roebroeck A, Russ BE, Pascual-Leone A. TMS affects moral judgment, showing the role of DLPFC and TPJ in cognitive and emotional processing. Front Neurosci. 2014;8:18. doi: 10.3389/fnins.2014.00018.

- Khushaba RN, Kodagoda S, Dissanayake G, Greenacre L, Burke S, Louviere J. A neuroscientific approach to choice modeling: electroencephalogram (EEG) and user preferences. In: 2012 International Joint Conference on Neural Networks (IJCNN). IEEE; June 2012. pp. 1–8.

- Kim Y, Kang K, Lee H, Bae C. Preference measurement using user response electroencephalogram. In: Computer science and its applications. Berlin, Heidelberg: Springer; 2015. pp. 1315–1324.

- Konecni VJ. Determinants of aesthetic preference and effects of exposure to aesthetic stimuli: social, emotional, and cognitive factors. Prog Exp Personal Res. 1978;9:149–197.

- Li M, Lu BL. Emotion classification based on gamma-band EEG. In: 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). IEEE; 2009. pp. 1223–1226.

- Liu Y, Sourina O, Nguyen MK. Real-time EEG-based human emotion recognition and visualization. In: 2010 International Conference on Cyberworlds (CW). IEEE; October 2010. pp. 262–269.

- Mikutta C, Altorfer A, Strik W, Koenig T. Emotions, arousal, and frontal alpha rhythm asymmetry during Beethoven’s 5th symphony. Brain Topogr. 2012;25(4):423–430. doi: 10.1007/s10548-012-0227-0.

- Moon J, Kim Y, Lee H, Bae C, Yoon WC. Extraction of user preference for video stimuli using EEG-based user responses. ETRI J. 2013;35(6):1105–1114. doi: 10.4218/etrij.13.0113.0194.

- Murugappan M, Murugappan S, Gerard C. Wireless EEG signals based neuromarketing system using Fast Fourier Transform (FFT). In: 2014 IEEE 10th International Colloquium on Signal Processing and its Applications (CSPA). IEEE; March 2014. pp. 25–30.

- Nadal M, Munar E, Capó MÀ, Rossello J, Cela-Conde CJ. Towards a framework for the study of the neural correlates of aesthetic preference. Spat Vis. 2008;21(3):379–396. doi: 10.1163/156856808784532653.

- Pakhomov A, Sudin N. Thermodynamic view on decision-making process: emotions as a potential power vector of realization of the choice. Cogn Neurodyn. 2013;7(6):449–463. doi: 10.1007/s11571-013-9249-x.

- Palmer SE, Schloss KB, Sammartino J. Visual aesthetics and human preference. Annu Rev Psychol. 2013;64:77–107. doi: 10.1146/annurev-psych-120710-100504.

- Paradis AL, Cornilleau-Peres V, Droulez J, Van De Moortele PF, Lobel E, Berthoz A, Le Bihan D, Poline JB. Visual perception of motion and 3-D structure from motion: an fMRI study. Cereb Cortex. 2000;10(8):772–783. doi: 10.1093/cercor/10.8.772.

- Pochon JB, Levy R, Poline JB, Crozier S, Lehéricy S, Pillon B, Dubois B. The role of dorsolateral prefrontal cortex in the preparation of forthcoming actions: an fMRI study. Cereb Cortex. 2001;11(3):260–266. doi: 10.1093/cercor/11.3.260.

- Prinz J. Emotion and aesthetic value. In: American Philosophical Association Pacific Meeting. Vol. 15; 2007.

- Schmidt LA, Trainor LJ. Frontal brain electrical activity (EEG) distinguishes valence and intensity of musical emotions. Cogn Emot. 2001;15(4):487–500. doi: 10.1080/02699930126048.

- Shackleton VJ. Boredom and repetitive work: a review. Pers Rev. 1981;10(4):30–36. doi: 10.1108/eb055445.

- Shalbaf R, Behnam H, Moghadam HJ. Monitoring depth of anesthesia using combination of EEG measure and hemodynamic variables. Cogn Neurodyn. 2014;9(1):41–51. doi: 10.1007/s11571-014-9295-z.

- Shepard RN, Metzler J. Mental rotation of three-dimensional objects. Science. 1971;171(3972):701–703. doi: 10.1126/science.171.3972.701.

- Song Y, Huang J, Zhou D, Zha H, Giles CL. IKNN: informative k-nearest neighbor pattern classification. In: Knowledge Discovery in Databases: PKDD 2007. Berlin, Heidelberg: Springer; 2007. pp. 248–264.

- Thorpe S, Fize D, Marlot C. Speed of processing in the human visual system. Nature. 1996;381(6582):520–522. doi: 10.1038/381520a0.

- Todd JT. The visual perception of 3D shape. Trends Cogn Sci. 2004;8(3):115–121. doi: 10.1016/j.tics.2004.01.006.

- Vuong QC, Tarr MJ. Rotation direction affects object recognition. Vision Res. 2004;44(14):1717–1730. doi: 10.1016/j.visres.2004.02.002.

- Wang XW, Nie D, Lu BL. EEG-based emotion recognition using frequency domain features and support vector machines. Neural Inf Process. 2011;7062:734–743. doi: 10.1007/978-3-642-24955-6_87.

- Wang H, Li Y, Long J, Yu T, Gu Z. An asynchronous wheelchair control by hybrid EEG–EOG brain–computer interface. Cogn Neurodyn. 2014;8(5):399–409. doi: 10.1007/s11571-014-9296-y.

- Yılmaz B, Korkmaz S, Arslan DB, Güngör E, Asyalı MH. Like/dislike analysis using EEG: determination of most discriminative channels and frequencies. Comput Methods Programs Biomed. 2014;113(2):705–713. doi: 10.1016/j.cmpb.2013.11.010.

- Zajonc RB. Mere exposure: a gateway to the subliminal. Curr Dir Psychol Sci. 2001;10(6):224–228. doi: 10.1111/1467-8721.00154.