Abstract

Behavioral studies in many species and studies in robotics have demonstrated two sources of information critical for visually-guided navigation: sense (left-right) information and egocentric distance (proximal-distal) information. A recent fMRI study found sensitivity to sense information in two scene-selective cortical regions, the retrosplenial complex (RSC) and the occipital place area (OPA), consistent with hypotheses that these regions play a role in human navigation. Surprisingly, however, another scene-selective region, the parahippocampal place area (PPA), was not sensitive to sense information, challenging hypotheses that this region is directly involved in navigation. Here we examined how these regions encode egocentric distance information (e.g., a house seen from close up versus far away), another type of information crucial for navigation. Using fMRI adaptation and a regions-of-interest analysis approach in human adults, we found sensitivity to egocentric distance information in RSC and OPA, while PPA was not sensitive to such information. These findings further support that RSC and OPA are directly involved in navigation, while PPA is not, consistent with the hypothesis that scenes may be processed by distinct systems guiding navigation and recognition.

Keywords: Scene Recognition, Navigation, Occipital Place Area (OPA), Parahippocampal Place Area (PPA), Retrosplenial Complex (RSC)

1. INTRODUCTION

The navigability of a scene is completely different when mirror reversed (e.g., walking through a cluttered room to exit a door either on the left or right), or when viewed from a proximal or distal perspective (e.g., walking to a house that is either 50 feet or 500 feet in front of you). Indeed, behavioral evidence has demonstrated that both sense (left-right) and egocentric distance (proximal-distal) information are used in navigation by insects (Wehner et al. 1996), fish (Sovrano et al. 2002), pigeons (Gray et al. 2004), rats (Cheng 1986), rhesus monkeys (Gouteux et al. 2001), and humans (Hermer and Spelke 1994; Fajen and Warren 2003). Similarly, studies in robotics highlight the necessity of sense and egocentric distance information for successful visually-guided navigation (Schoner et al. 1995). The term navigation has been defined by the above studies and many other reports as a process of relating one’s egocentric system to fixed points in the world as one traverses the environment (Gallistel, 1990; Wang and Spelke 2002). Here we use this standard definition of navigation.

A recent fMRI adaptation study (Dilks et al. 2011) found sensitivity to one of the two critical types of information guiding navigation (i.e., sense information) in two human scene-selective cortical regions, the retrosplenial complex (RSC) (Maguire 2001), and the occipital place area (OPA) (Dilks et al. 2013), also referred to as the transverse occipital sulcus (Grill-Spector 2003), consistent with hypotheses that these regions play a role in human navigation (Maguire, 2001; Epstein 2008; Dilks et al., 2011). By contrast, another scene-selective region, the parahippocampal place area (PPA) (Epstein and Kanwisher 1998), was not sensitive to sense information, challenging hypotheses that this region is directly involved in navigation (Epstein and Kanwisher 1998; Ghaem et al. 1997; Janzen and van Turennout 2004; Cheng and Newcombe 2005; Rosenbaum et al. 2004; Rauchs et al. 2008; Spelke et al. 2010). Here we investigate how these regions encode egocentric distance information (e.g., a house seen from close up versus far away), another type of information crucial for navigation. Given that RSC and OPA are sensitive to sense information – one type of information that is crucial for navigation – we predict that these regions will also be sensitive to egocentric distance information. By contrast, since PPA is not sensitive to sense information, we predict that this region will also not be sensitive to egocentric distance information.

To test our predictions, we used an event-related fMRI adaptation paradigm (Grill-Spector and Malach 2001) in human adults. Participants viewed trials consisting of two successively presented images of either scenes or objects. Each pair of images consisted of one of the following: (1) the same image presented twice; (2) two completely different images; or (3) an image viewed from either a proximal or distal perspective followed by the opposite version of the same stimulus. If scene representations in scene-selective cortex are sensitive to egocentric distance information, then images of the same scene viewed from proximal and distal perspectives will be treated as different images, producing no adaptation across distance changes in scene-selective cortex. On the other hand, if scene representations are not sensitive to egocentric distance information, then images of the same scene viewed from proximal and distal perspectives will be treated as the same image, and the neural activity in scene-selective cortex will show adaptation across egocentric distance changes. We examined the representation of egocentric distance information in the three known scene-selective regions (PPA, RSC, and OPA) in human cortex.

2. METHODS

2.1. Participants

Thirty healthy individuals (ages 18–54; 17 females; 26 right-handed) were recruited for the experiment. All participants gave informed consent. All had normal or corrected-to-normal vision. One participant was excluded for excessive motion, and another participant did not complete the scan due to claustrophobia. Thus, we report the results from 28 participants.

2.2. Design

We localized scene-selective regions of interest (ROIs) and then used an independent set of data to investigate the responses of these regions to pairs of scenes or objects that were identical, different, or varied in their perceived egocentric distance. For the localizer scans, we used a standard method described previously to identify ROIs (Epstein and Kanwisher 1998). Specifically, a blocked design was used in which participants viewed images of faces, objects, scenes, and scrambled objects. Each participant completed 3 runs. Each run was 336 s long and consisted of 4 blocks per stimulus category. The order of the stimulus category blocks in each run was palindromic (e.g., faces, objects, scenes, scrambled objects, scrambled objects, scenes, objects, faces) and was randomized across runs. Each block contained 20 images from the same category for a total of 16 s blocks. Each image was presented for 300 ms, followed by a 500 ms interstimulus interval (ISI). We also included five 16 s fixation blocks: one at the beginning, three in the middle interleaved between each palindrome, and one at the end of each run. Participants performed a one-back task, responding every time the same image was presented twice in a row.
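As a quick consistency check on the localizer timing described above, the arithmetic can be sketched in Python. This is only an illustration: the variable names are ours and are not taken from the original analysis code, and durations are kept in integer milliseconds to avoid rounding.

```python
# Localizer run timing, using only the values stated in the text.
IMAGE_MS = 300             # duration of each image
ISI_MS = 500               # interstimulus interval after each image
IMAGES_PER_BLOCK = 20
CATEGORIES = 4             # faces, objects, scenes, scrambled objects
BLOCKS_PER_CATEGORY = 4
FIXATION_BLOCKS = 5
FIXATION_BLOCK_MS = 16_000

# One stimulus block: 20 images, each followed by an ISI.
block_ms = IMAGES_PER_BLOCK * (IMAGE_MS + ISI_MS)

# One run: all stimulus blocks plus the fixation blocks.
stimulus_ms = CATEGORIES * BLOCKS_PER_CATEGORY * block_ms
run_ms = stimulus_ms + FIXATION_BLOCKS * FIXATION_BLOCK_MS

print(block_ms / 1000)  # block duration in seconds
print(run_ms / 1000)    # run duration in seconds
```

With these values the sketch gives 16 s per block and 336 s per run, matching the durations reported above.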

For the experimental scans, participants completed 8 runs, each with 96 experimental trials (48 ‘scene’ trials and 48 ‘object’ trials, intermixed) and an average of 47 fixation trials, used as a baseline condition. Each run was 397 s long. On each fixation trial, a white fixation cross (subtending 0.5° of visual angle) was displayed on a gray background. On each non-fixation trial, an image of either a scene or an object was presented for 300 ms, followed by an ISI of 400 ms and then by another image of the same stimulus category presented for 300 ms – following the method of Kourtzi and Kanwisher (2001) and many subsequent papers. After presentation of the second image, there was a jittered interval of ~3 s (ranging from 1 to 6 s) before the next trial began. Each pair of images consisted of one of the following: (1) the same image presented twice (Same condition); (2) two completely different images (Different condition); or (3) an image viewed from either a proximal or distal perspective followed by the opposite perspective of that same image (Distance condition) (Fig. 1A). In total, each subject viewed 128 trials of each condition (Same, Different, Distance). Note that in the Distance condition, we were careful to manipulate only perceived egocentric distance information, while not changing the angle from which the scenes were viewed. To ensure that viewing angle did not change between the Distance conditions in our stimuli, we first identified the same point in both the proximal and distal perspectives of each image (e.g., a window) and measured its distance (in pixels) away from two other points (to the right and left) in each image (e.g., fence posts). Next, we calculated the ratio of the distance between the central point and the point on the left to the distance between the central point and the point on the right, and finally compared the ratios between the two perspectives. 
We found no difference in viewing angle between the near and far images of scenes (mean ratio: near = 2.35, far = 2.33; t(9) = 0.25, p = 0.81). Further, there were equal numbers of trials in which a proximal image preceded a distal image, and vice versa. This aspect of the experimental design is important because it allowed us to test whether the effects we measured in our experiment were indeed due to changes in perceived egocentric distance, and not due to i) angle changes, ii) the potential perception of navigating through the scene, which might be perceived if every trial consisted of a proximal image preceding a distal image, or iii) ‘boundary extension’ (Intraub and Richardson 1989), which is discussed in more detail in the Results section. Trial sequence was generated using the FreeSurfer optseq2 function, optimized for the most accurate estimations of hemodynamic response (Burock et al. 1998; Dale et al. 1999). The images used as stimuli were photographs of 10 different scenes (5 indoor, 5 outdoor) from both a proximal and distal perspective. Thus, there were 20 different images of scenes (Supplementary Figure 1). Each set of images was created by first taking a photo from a distal perspective, and then walking in a straight line ~20 feet to ~100 feet and taking a photo from this proximal perspective. The camera zoom function was never utilized when generating the stimulus set to ensure that the stimuli did not induce a percept of zooming in/out between the ‘near’ and ‘far’ conditions. Two independent groups of participants rated the stimuli as either ‘near’ or ‘far’ to ensure that changes in our stimuli were indeed perceived as changes in egocentric distance during the fMRI experiment, and that the stimuli spanned a wide range of distances (Fig. 1B). One group was sitting upright at a computer when making these ratings, while the other group was supine in a mock scanner when making the ratings. 
The second group was included to make certain that lying down in the scanner did not affect judgments of egocentric distance. Indeed, the near/far judgments were highly correlated across groups (r² = 0.95, p < 0.0001). Thus, we can conclude that changes in egocentric distance in the stimuli are perceived as nearer or farther away both while sitting upright at a computer, and while supine in a scanner. Similarly, we included 10 images of objects viewed from both proximal and distal perspectives against backgrounds of varying textures to test the specificity of distance information in the scene-selective regions. Importantly, the background textures provided depth cues ensuring that participants perceived the objects as proximal and distal, and not simply as small or big. All stimuli were grayscale and 9° × 7° in size. Subjects were instructed to remain fixated on a white cross that was presented on the screen in between each pair of stimuli. Each image was presented at the central fixation and then moved 1° of visual angle either left or right. Participants performed an orthogonal task (not related to whether it was proximal or distal, or whether an image was a scene or object), responding via button box whether images in a pair were moving in the same or opposite direction. The motion task was particularly chosen to eliminate any early retinotopic confounds, and to further disrupt the potential perception of navigating through the scene.
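The viewing-angle check described in this section (the ratio of the center-to-left distance to the center-to-right distance, compared across the proximal and distal versions of a scene) can be illustrated with a minimal Python sketch. The function name and the pixel coordinates below are hypothetical and are not taken from the actual stimuli or analysis code.

```python
import math

def left_right_ratio(center, left, right):
    """Ratio of the center-to-left distance to the center-to-right distance (in pixels)."""
    return math.dist(center, left) / math.dist(center, right)

# Hypothetical (x, y) pixel coordinates of the same three landmarks in the
# proximal and distal photographs of one scene (illustrative values only).
near = {"center": (400, 300), "left": (100, 300), "right": (550, 300)}
far = {"center": (400, 300), "left": (300, 300), "right": (450, 300)}

near_ratio = left_right_ratio(near["center"], near["left"], near["right"])
far_ratio = left_right_ratio(far["center"], far["left"], far["right"])

# Similar ratios across the two perspectives are what the check looks for.
print(near_ratio, far_ratio)
```

In this made-up example the landmarks are three times farther apart in the near image, yet the ratio is the same in both images, which is the pattern the check is designed to confirm.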

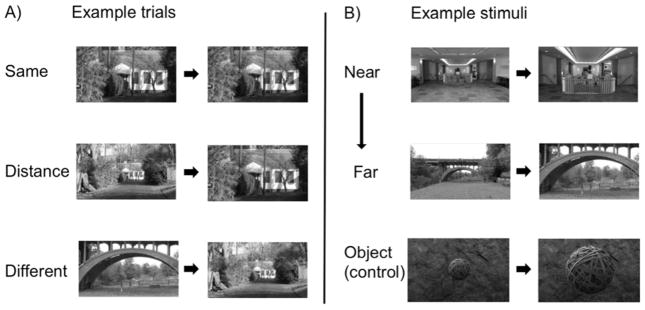

Figure 1.

(A) Example trials from each condition (i.e., Same, Distance, Different). (B) Example stimuli from the Distance condition, ranging from Near to Far. An independent set of 25 participants rated the stimuli as either near or far to ensure that our stimuli spanned a wide range of distances. The top row of panel B shows the scene pair that participants rated as the nearest distance change, the middle row as a medium distance change, and the next row as the farthest distance change. The bottom row is an example of an object trial that depicts the object from both proximal and distal perspectives against a background texture that provided depth cues, ensuring that participants perceived the objects as proximal and distal, and not simply as small or big.

2.3. fMRI scanning

Scanning was done on a 3T Siemens Trio scanner at the Facility for Education and Research in Neuroscience (FERN) at Emory University (Atlanta, GA). Functional images were acquired using a 32-channel head matrix coil and a gradient echo single-shot echo planar imaging sequence. Sixteen slices were acquired for both the localizer scans (repetition time = 2 s), and the experimental scans (repetition time = 1 s). For all scans: echo time = 30 ms; voxel size = 3.1 × 3.1 × 4.0 mm with a 0.4 mm interslice gap; and slices were oriented approximately between perpendicular and parallel to the calcarine sulcus, covering the occipital and temporal lobes. Whole-brain, high-resolution T1-weighted anatomical images were also acquired for each participant for anatomical localization.

2.4. Data analysis

fMRI data analysis was conducted using the FSL software (Smith et al. 2004) and custom MATLAB code. Before statistical analysis, images were skull-stripped (Smith 2002), and registered to the subjects’ T1-weighted anatomical image (Jenkinson et al. 2002). Additionally, localizer data, but not experimental data, were spatially smoothed (6 mm kernel), as described previously (e.g., Dilks et al., 2011), detrended, and fit using a double-gamma function. However, we also analyzed the experimental data after spatially smoothing with a 6 mm kernel, and the overall results did not change. After preprocessing, scene-selective regions PPA, RSC, and OPA were bilaterally defined in each participant (using data from the independent localizer scans) as those regions that responded more strongly to scenes than objects (p < 10⁻⁴, uncorrected) – following the method of Epstein and Kanwisher (1998) (Fig. 2). PPA was identified bilaterally in all 28 participants, RSC was identified in the right hemisphere in all 28 participants, and in the left hemisphere in 26 participants, and OPA was identified bilaterally in 26 participants. As a control region, we also functionally defined a bilateral foveal confluence (FC) ROI, the region of cortex responding to foveal stimulation (Dougherty et al. 2003). Specifically, the FC ROI was bilaterally defined in each of the 28 participants (using data from the localizer scans) as the regions that responded more strongly to scrambled objects than to intact objects (p < 10⁻⁶, uncorrected), as described previously (MacEvoy and Yang 2012; Linsley and MacEvoy 2014; Persichetti et al. 2015). For each ROI of each participant, the mean time courses (percentage signal change relative to a baseline fixation) for the experimental conditions (i.e., Same, Different, Distance) were extracted across voxels.

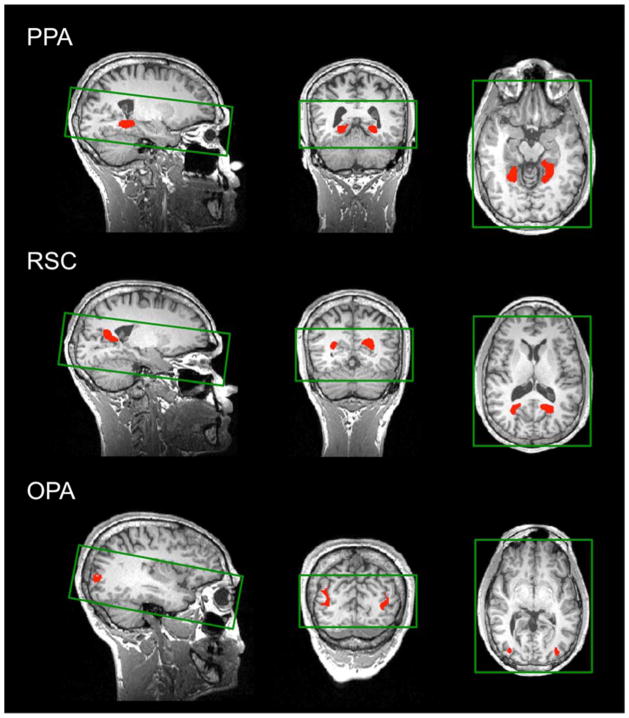

Figure 2.

Using independent data, PPA, RSC, and OPA (shown in red) were localized as regions that responded more strongly to scenes than objects (p < 10⁻⁴). The green rectangles overlaid on the brain images show the slice prescription used in the experiment (16 slices). Note that the slice prescription covered the entirety of the occipital and temporal lobes in all subjects, and thus we were able to capture all of the ROIs.

Next, the Same and Different condition time courses were separately averaged across the scene-selective ROIs and participants to identify an average response across ‘peak’ time points. More specifically, we identified the window spanning from the first time point to exhibit the expected adaptation effect (i.e., Different > Same) to the last time point to exhibit it. We determined which time points showed the expected adaptation effect by conducting a paired t-test between the Different and Same conditions at each time point. We found that the Different condition was significantly greater than the Same condition at all time points from 5 s to 9 s after trial onset (all p values < 0.05). Conversely, none of the time points before 5 s or after 9 s showed this adaptation effect (all p values > 0.50). Finally, for each participant, the average response across this window was extracted for each scene-selective ROI and each condition (Different, Distance, Same), and repeated-measures ANOVAs were performed on each.
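The two steps in this paragraph, a paired comparison at each time point and an average over the 5 s to 9 s window, can be sketched as follows. This is a minimal illustration rather than the code used in the study: the function names and the example time course are hypothetical, and we assume that sample i of a time course corresponds to i s after trial onset (consistent with the 1 s repetition time of the experimental scans). Converting the t statistic to a p value requires a t distribution, for example via `scipy.stats.ttest_rel`, which is omitted here to keep the sketch dependency-free.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(a, b):
    """Paired t statistic for a - b across participants (df = n - 1)."""
    diffs = [x - y for x, y in zip(a, b)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))

def window_mean(time_course, start_s=5, end_s=9, tr_s=1):
    """Mean of the samples from start_s to end_s inclusive.

    Assumes sample i was acquired i * tr_s seconds after trial onset.
    """
    first, last = start_s // tr_s, end_s // tr_s
    return mean(time_course[first:last + 1])

# Hypothetical percent-signal-change time course for one participant,
# one ROI, and one condition, sampled once per second from trial onset.
different = [0.0, 0.0, 0.1, 0.2, 0.3, 0.5, 0.6, 0.6, 0.5, 0.4, 0.2, 0.1]

# Average response over the samples at 5, 6, 7, 8, and 9 s.
print(window_mean(different))
```

In practice `paired_t` would be applied at each time point to the per-participant Different and Same values, and `window_mean` would then be applied to each participant's time course for each ROI and condition.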

A 3 (ROI: PPA, OPA, RSC) x 3 (condition: Different, Distance, Same) x 2 (hemisphere: Left, Right) repeated-measures ANOVA was conducted. We found no significant ROI x condition x hemisphere interaction at the average response (F(4,96) = 0.97, p = 0.43, ηp² = 0.04). Thus, both hemispheres were collapsed for further analyses.

3. RESULTS

As predicted, we found that RSC and OPA were sensitive to egocentric distance information in images of scenes. For RSC, a 3 level (condition: Different, Distance, Same) repeated-measures ANOVA on the average response from 5 s to 9 s (see Methods for details) revealed a significant main effect of condition (F(2,52) = 6.04, p < 0.005, ηp² = 0.19), with a significantly greater response to the Different condition compared to the Same condition (main effect contrast, p < 0.001, d = 0.91), and a marginally significant difference between the Distance and Same conditions (main effect contrast, p = 0.05, d = 0.47). There was no significant difference between the Distance and Different conditions (main effect contrast, p = 0.17, d = 0.40) (Fig. 3). These results demonstrate the expected fMRI adaptation effect (i.e., Different > Same) in RSC, but no adaptation across perceived egocentric distance (i.e., Distance > Same), revealing that RSC is sensitive to changes in egocentric distance information in images of scenes.

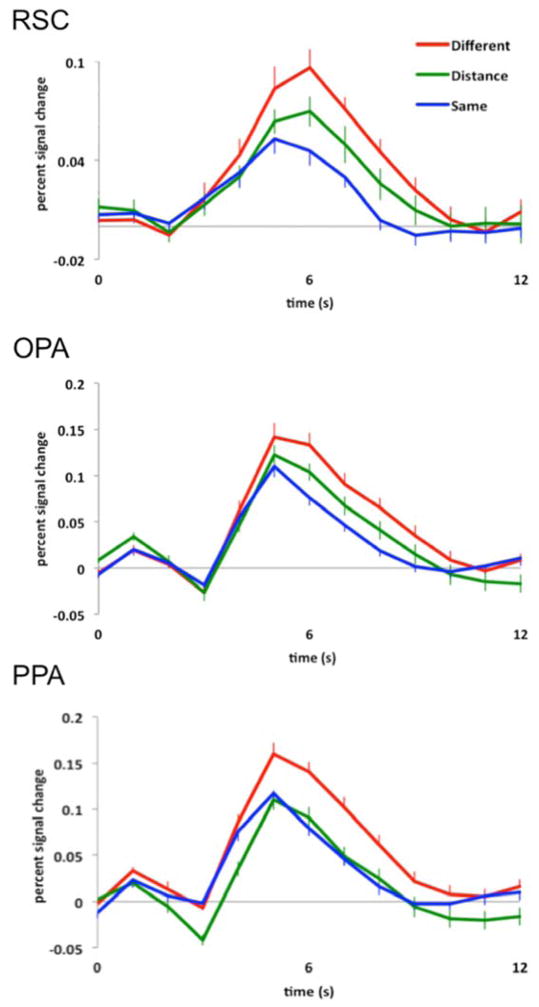

Figure 3.

Hemodynamic time courses (percentage signal change) of three scene selective regions of cortex, RSC, OPA, and PPA to (1) two completely different images of scenes (red line labeled “Different”), (2) the same image of a scene presented twice (blue line labeled “Same”), and (3) an image of a scene viewed from either a proximal or distal perspective followed by the opposite version of the same stimulus (green line labeled “Distance”). Note sensitivity to egocentric distance information in both RSC and OPA, but invariance to such information in PPA.

Similarly, for OPA, a 3 level (condition: Different, Distance, Same) repeated-measures ANOVA on the average response revealed a significant main effect of condition (F(2,50) = 7.93, p < 0.001, ηp² = 0.24), with a significantly greater response to the Different condition compared to the Same condition (main effect contrast, p < 0.001, d = 1.05), and a significant difference between the Distance and Same conditions (main effect contrast, p < 0.05, d = 0.62). There was no significant difference between the Distance and Different conditions (main effect contrast, p = 0.12, d = 0.43) (Fig. 3). These results demonstrate the expected fMRI adaptation effect (i.e., Different > Same) in OPA, but no adaptation across perceived egocentric distance (i.e., Distance > Same), revealing that OPA is sensitive to changes in egocentric distance information in images of scenes.

By contrast, PPA was not sensitive to egocentric distance information in images of scenes. A 3 level (condition: Different, Distance, Same) repeated-measures ANOVA on the average response revealed a significant main effect of condition (F(2,54) = 11.36, p < 0.001, ηp² = 0.30), with a significantly greater response to the Different condition compared to either the Same or Distance conditions (main effect contrasts, both p values < 0.005, both d’s > 0.90), and no significant difference between the Distance and Same conditions (main effect contrast, p = 0.73, d = 0.08) (Fig. 3).

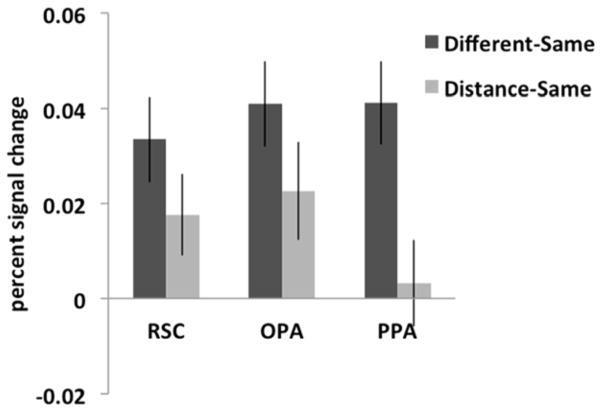

The above analyses suggest that the three scene-selective regions encode egocentric distance information in images of scenes differently, so we directly tested this suggestion by comparing the differences in response across the three ROIs. Specifically, for each ROI the difference between the average responses for two different images of scenes and the same images (i.e., expected adaptation) was compared to the difference between the average responses for proximal vs. distal images and the same images (Fig. 4). Crucially, a 3 (ROI: OPA, RSC, PPA) x 2 (difference score: Different-Same, Distance-Same) repeated-measures ANOVA revealed a significant interaction (F(2,50) = 4.35, p < 0.02, ηp² = 0.15), with a significantly greater difference between the Different and Same conditions than between the Distance and Same conditions for PPA, relative to both RSC and OPA (interaction contrasts, both p values < 0.05, both ηp² > 0.15). There was not a significant difference in the responses between the RSC and OPA (interaction contrast, p = 0.85, ηp² = 0.001). These results show that the scene-selective regions encode egocentric distance information differently: RSC and OPA are sensitive to egocentric distance information in scenes, while PPA is not. To further probe this difference across the three ROIs, we conducted three additional analyses. First, results from paired t-tests comparing the Different-Same and Distance-Same conditions for each ROI independently revealed no significant difference in RSC or OPA (t(26)=1.43, p=0.17; t(25)=1.60, p=0.12, respectively), but a significant difference in PPA (t(27)=3.49, p<0.01). Second, results from one-sample t-tests comparing the Distance-Same condition to 0 for each ROI independently revealed a significant difference in RSC and OPA (t(26)=2.10; t(25)=2.19, both p-values<0.05), but no significant difference in PPA (t(27)=0.35, p=0.73).

Figure 4.

For each scene-selective ROI, the difference between the peak responses for two different images of scenes and the same images (labeled “Different-Same”) was compared to the difference between the peak responses for two images of the same scene viewed from either a proximal or distal perspective and the opposite version of the same image and the same images (labeled “Distance-Same”). A 3 (ROI: RSC, OPA, PPA) x 2 (difference score: Different-Same, Distance-Same) repeated-measures ANOVA revealed a significant interaction (F(2,50) = 4.35, p < 0.02, ηp² = 0.15), with a significantly greater difference between the Different and Same conditions than between the Distance and Same conditions for PPA, relative to RSC or OPA. This result suggests that the scene-selective regions represent egocentric distance information differently: RSC and OPA are sensitive to egocentric distance information in scenes, while PPA is not sensitive to such information.

Third, we ran a one-way repeated-measures ANOVA asking whether the signal change for the Distance-Same condition was different across the three regions. Indeed, we found a significant effect (F(2,50)=3.43, p<0.05), with PPA responding significantly less to Distance-Same compared to RSC and OPA (both p-values < 0.02), and no difference between RSC and OPA (p=0.67). Taken together, these results demonstrate significant adaptation to egocentric distance information in PPA only, not RSC or OPA.
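The difference scores used in these follow-up analyses (Different-Same and Distance-Same) and the one-sample comparison against 0 can be sketched as below. This is a hedged illustration only: the per-participant values are invented, the helper name `one_sample_t` is ours, and only the t statistic is computed (obtaining a p value would require a t distribution from a statistics library).

```python
from math import sqrt
from statistics import mean, stdev

def one_sample_t(values, mu=0.0):
    """One-sample t statistic testing the mean of values against mu (df = n - 1)."""
    return (mean(values) - mu) / (stdev(values) / sqrt(len(values)))

# Hypothetical per-participant average responses (percent signal change)
# for one ROI; each list holds one value per participant.
same = [0.20, 0.25, 0.30, 0.22]
distance = [0.30, 0.33, 0.41, 0.35]
different = [0.35, 0.40, 0.45, 0.36]

# Difference scores relative to the Same (fully adapted) condition.
different_minus_same = [d - s for d, s in zip(different, same)]
distance_minus_same = [d - s for d, s in zip(distance, same)]

# t statistic for Distance-Same against 0 in this made-up ROI.
print(one_sample_t(distance_minus_same))
```

Under the adaptation logic used here, a Distance-Same score reliably above 0 indicates release from adaptation (sensitivity to the distance change), whereas a score near 0 indicates adaptation across the distance change.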

But might it be the case that the sensitivity to egocentric distance information in images of scenes in RSC or OPA is due to a feed-forward effect from earlier visual areas, rather than indicative of egocentric distance sensitivity to scenes in particular? While we do not think this could be the case (because participants were asked to fixate, and thus the stimuli were moving across the fovea), we directly addressed this question by comparing the average response to the three conditions in an independently defined region of cortex representing the fovea, and found that ‘foveal confluence’ did not even show fMRI adaptation for Different versus Same scenes (main effect contrast, p = 0.30, d = 0.22), thus confirming that neither OPA nor RSC’s sensitivity to egocentric distance information in scenes is due to adaptation in early visual cortex.

Finally, might it be the case that the invariance to perceived egocentric distance in images of scenes in PPA is due to ‘boundary extension’, instead of actual insensitivity to egocentric distance information? Boundary extension is a process in which people, when asked to remember a photograph of a scene, remember a more expansive view than was shown in the original photograph. Thus, the representation of the scene extends beyond the picture’s boundaries, particularly when the view is close up (Intraub and Richardson 1989). Consistent with these behavioral data, Park and colleagues (2007), using an fMRI adaptation paradigm, found adaptation in PPA when a wide-view of a scene followed a close-up view of a scene, but not when the wide view preceded the close-up view, and concluded that PPA is involved in boundary extension. If the effect in our study can be explained by boundary extension, then we should see the same pattern of results as reported by Park and colleagues. To test this hypothesis, we divided the Distance trials in half: one half was made up of trials in which a wide-view of a scene followed a close-up view of a scene, and the other half was the reverse condition. If we are observing a boundary extension effect in PPA, then adaptation should be greater when a wide-view of a scene follows a close-up view than in the reverse condition. A 4 level (condition: Different, Wide-Close, Close-Wide, Same) repeated-measures ANOVA on the average response revealed a significant main effect of condition (F(3,81) = 5.22, p < 0.005, ηp² = 0.16), with a significantly greater response to the Different versus Same condition (p < 0.001, d = 0.92), demonstrating the expected fMRI adaptation. Crucially, however, there were no significant differences between the Wide-Close, Close-Wide, or Same conditions (p values > 0.40, both d’s < 0.20). 
Thus, these results confirm that the effects found in our study are due to insensitivity to egocentric distance information in images of scenes in PPA, rather than to boundary extension. The reason for this conflicting result is not entirely clear, but could be due to differences between the two studies with respect to i) the level of processing (i.e., perception versus memory: boundary extension does not occur while sensory information is present, as is the case in this study, but rather involves distortion of the scene representation over time) (Intraub and Richardson 1989), ii) task demands (i.e., in our study participants were performing an orthogonal task, while in Park et al.’s study participants were asked to memorize the layout and overall details of the scene), or iii) the definition of the PPA (we defined the PPA using the contrast scenes versus objects, while Park et al. defined the PPA using the contrast scenes versus faces).

Given that our stimuli included objects as well as scenes, we were also able to investigate how RSC, OPA, and PPA might respond to changes in egocentric distance information in images of objects (the non-preferred category). We found that none of the responses within scene-selective regions exhibit the expected adaptation effect (i.e., Different > Same) to object stimuli (all p values > 0.15). Thus, the question of sensitivity to egocentric distance information in objects for scene-selective regions is moot.

4. DISCUSSION

The current study asked how scene-selective regions encode egocentric distance information. As predicted, the results demonstrate that the regions of scene-selective cortex are differentially sensitive to perceived egocentric distance information. Specifically, using an fMRI adaptation paradigm we found that two scene-selective regions (i.e., RSC and OPA) were sensitive to egocentric distance information, while the PPA, another scene-selective region, was not sensitive to such information. These results are specific to images of scenes, not to images of objects, and cannot be explained by viewing angle changes across scene images, by a feed-forward effect from earlier visual areas, or by ‘boundary extension’.

But, might it be the case that the sensitivity to egocentric distance information in images of scenes in RSC or OPA is simply due to size changes of features in the scenes (i.e., proximal features in the scene subtend larger visual angles than distal features), rather than characteristic of egocentric distance sensitivity to scenes in particular? We do not think this could be the case because as a scene image switches from a distal to proximal perspective (and vice versa) some features of the scene (e.g., a tree or a bridge) increase (or decrease) in size, while other features (e.g., the ground plane or sky) decrease (or increase) in size. Given the varying size changes within each pair of scene images, it seems highly unlikely then that a scene region that simply tracks size (i.e., responding to either ‘big’ or ‘small’ features in the scene) would respond, and thus size alone cannot explain the sensitivity to changes in egocentric distance in scenes found in RSC and OPA.

Our finding that PPA is not sensitive to egocentric distance information, one kind of information critical for navigation, provides further evidence challenging the hypothesis that PPA is directly involved in navigation (Epstein and Kanwisher 1998; Ghaem et al. 1997; Janzen and van Turennout 2004; Cheng and Newcombe 2005; Rosenbaum et al. 2004; Rauchs et al. 2008; Spelke et al. 2010). Recall that Dilks and colleagues (2011) also found that PPA was not sensitive to sense information, another type of information crucial for navigation. Rather, we hypothesize then that human scene processing may be composed of two systems: one responsible for navigation, including RSC and OPA, and another responsible for the recognition of scene category, including PPA. While navigation is no doubt crucial to our successful functioning (e.g., walking to the market or even getting around one’s own house), it is reasonable to argue that the ability to recognize a scene as belonging to a specific category (e.g., kitchen, beach, or city) also plays a necessary role in one’s everyday life. After all, our ability to categorize a scene makes it possible to know what to expect from, and how to behave in, different environments (Bar 2004). Taken together, these arguments support the necessity of both navigation and scene categorization systems, and the current data suggest that visual scene processing may not serve a single purpose (i.e., for navigation), but rather has multiple purposes guiding us not only through our environments, but also guiding our behaviors within them. If our two-systems-for-scene-processing hypothesis is correct, then the PPA may contribute to the ‘categorization system’, while the RSC, OPA, or both may contribute to the ‘navigation system’. 
Indeed, support for this hypothesis comes from two multi-voxel pattern analysis (MVPA) studies demonstrating that while activity patterns in both PPA and RSC contain information about scene category (e.g., beaches, forests, highways), only the activation patterns in PPA, not RSC, are related to behavioral performance on a scene categorization task (Walther et al. 2011; Walther et al. 2009).

Our hypothesis that the scene processing system may be divided into two systems might sound familiar. For example, Epstein (2008) proposed that human scene processing is divided into two systems – both serving the primary function of navigation – with PPA representing the local scene, and RSC supporting orientation within the broader environment. This hypothesis is quite different from what we propose here. While we agree that the primary role of the RSC is navigation, we disagree that the PPA shares this role. Rather, we hypothesize that the PPA is a part of a functionally distinct pathway devoted to scene recognition and categorization. Thus, our two-systems-for-scene-processing hypothesis is instead more like the two functionally distinct systems of visual object processing proposed by Goodale and Milner (1992), with one system responsible for recognition, and another for visually-guided action. Note that our hypothesis of two distinct systems for human scene processing – a categorization system including PPA, and a navigation system including RSC and OPA – does not mean that the two systems cannot and do not interact. Indeed, two recent studies found functional correlations between the RSC and anterior portions of the PPA, and between the OPA and posterior PPA (Baldassano et al. 2013; Nasr et al. 2013), suggesting these two regions are functionally (and most likely anatomically) connected, thereby facilitating crosstalk between the two systems.

It is well established that PPA responds to ‘spatial layout’, or the geometry of local space, initially based on evidence that this region responds significantly more strongly to images of sparse, empty rooms than to these same images when the walls, floors and ceilings have been fractured and rearranged (Epstein and Kanwisher 1998). At first glance, the idea that PPA encodes geometric information may seem contradictory to its involvement in the recognition of scene category. In fact, such spatial layout representation in PPA has even led to hypotheses that the PPA might be the neural locus for a ‘geometry module’ (Hermer and Spelke 1994), necessary for reorientation and navigation (Epstein and Kanwisher 1998). But spatial layout information need not be used for navigation only, and could also easily facilitate the recognition of scene category. Indeed, several behavioral and computer vision studies have found that scenes can be categorized based on their overall spatial layout (Walther et al. 2011; Oliva and Schyns 1997; Oliva and Torralba 2001; Greene and Oliva 2009). However, spatial layout representation in PPA is only half of the story. A number of recent studies have found that PPA is also sensitive to object information, especially object information that might facilitate the categorization of a scene. For example, several studies found that PPA responds to i) objects that are good exemplars of specific scenes (e.g., a bed or a refrigerator) (MacEvoy and Epstein 2009; Harel et al. 2013), ii) objects that are strongly associated with a given context (e.g., a toaster) versus low ‘contextual’ objects (e.g., an apple) (Bar 2004; Bar and Aminoff 2003; Bar et al. 2008), and iii) objects that are large and not portable, thus defining the space around them (e.g., a bed or a couch versus a small fan or a box) (Mullally and Maguire 2011). Taken together, the above findings are consistent with our idea that PPA may be involved in the recognition of scene category.

In conclusion, we have shown that RSC and OPA are sensitive to egocentric distance information in images of scenes, while PPA is not. This finding, coupled with the finding that RSC and OPA are also sensitive to sense information while PPA is not, suggests that the computations directly involved in navigation do not occur in the PPA. These results are consistent with the hypothesis that there exist two distinct pathways for processing scenes: one for navigation, including RSC and OPA, and another for the recognition of scene category, including PPA. Ongoing studies are directly testing this hypothesis by correlating behavioral measures of navigation and categorization tasks with the fMRI signal in each scene-selective ROI. Furthermore, the current study does not distinguish the precise roles of RSC and OPA in navigation. It is possible that RSC and OPA are both involved in navigation more generally, but support different functions within navigation. Specifically, OPA may be involved in navigating the local visual environment (Kamps et al., submitted), while RSC may be involved in more complex forms of navigation (i.e., orienting the individual to the broad environment) (Vass and Epstein 2013; Marchette et al. 2014). Ongoing studies are investigating this possibility.

Acknowledgments

We would like to thank the Facility for Education and Research in Neuroscience (FERN) Imaging Center in the Department of Psychology, Emory University, Atlanta, GA. We also thank Frederik S. Kamps and Samuel Weiller for insightful conversation and technical support, as well as Russell Epstein and the members of his lab for helpful feedback. This work was supported by Emory College, Emory University (DD) and National Eye Institute grant T32 EY7092-28 (AP). The authors declare no competing financial interests.

References

- Baldassano C, Beck DM, Fei-Fei L. Differential connectivity within the Parahippocampal Place Area. NeuroImage. 2013;75:228–237. doi: 10.1016/j.neuroimage.2013.02.073.

- Bar M. Visual objects in context. Nat Rev Neurosci. 2004;5:617–629. doi: 10.1038/nrn1476.

- Bar M, Aminoff E. Cortical analysis of visual context. Neuron. 2003;38:347–358. doi: 10.1016/s0896-6273(03)00167-3.

- Bar M, Aminoff E, Schacter DL. Scenes Unseen: The Parahippocampal Cortex Intrinsically Subserves Contextual Associations, Not Scenes or Places Per Se. J Neurosci. 2008;28:8539–8544. doi: 10.1523/JNEUROSCI.0987-08.2008.

- Burock MA, Buckner RL, Woldorff MG, Rosen BR, Dale AM. Randomized event-related experimental designs allow for extremely rapid presentation rates using functional MRI. Neuroreport. 1998;9:3737–3739. doi: 10.1097/00001756-199811160-00030.

- Cheng K. A purely geometric module in the rat’s spatial representation. Cognition. 1986;23:149–178. doi: 10.1016/0010-0277(86)90041-7.

- Cheng K, Newcombe NS. Is there a geometric module for spatial orientation? Squaring theory and evidence. Psychon Bull Rev. 2005;12:1–23. doi: 10.3758/bf03196346.

- Dale AM, Greve DN, Burock MA. Stimulus sequences for event-related fMRI. Hum Brain Mapp. 1999;8:109–114. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<109::AID-HBM7>3.0.CO;2-W.

- Dilks DD, Julian JB, Kubilius J, Spelke ES, Kanwisher N. Mirror-image sensitivity and invariance in object and scene processing pathways. J Neurosci. 2011;31:11305–11312. doi: 10.1523/JNEUROSCI.1935-11.2011.

- Dilks DD, Julian JB, Paunov AM, Kanwisher N. The occipital place area is causally and selectively involved in scene perception. J Neurosci. 2013;33:1331–1336a. doi: 10.1523/JNEUROSCI.4081-12.2013.

- Dougherty RF, Koch VM, Brewer AA, Fischer B, Modersitzki J, Wandell BA. Visual field representations and locations of visual areas V1/2/3 in human visual cortex. J Vis. 2003;3:586–598. doi: 10.1167/3.10.1.

- Epstein R, Graham KS, Downing PE. Viewpoint-specific scene representations in human parahippocampal cortex. Neuron. 2003;37:865–876. doi: 10.1016/s0896-6273(03)00117-x.

- Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392:598–601. doi: 10.1038/33402.

- Epstein RA. Parahippocampal and retrosplenial contributions to human spatial navigation. Trends Cogn Sci. 2008;12:388–396. doi: 10.1016/j.tics.2008.07.004.

- Epstein RA, Higgins JS. Differential parahippocampal and retrosplenial involvement in three types of visual scene recognition. Cereb Cortex. 2007;17:1680–1693. doi: 10.1093/cercor/bhl079.

- Epstein RA, Higgins JS, Thompson-Schill SL. Learning places from views: variation in scene processing as a function of experience and navigational ability. J Cogn Neurosci. 2005;17:73–83. doi: 10.1162/0898929052879987.

- Fajen BR, Warren WH. Behavioral dynamics of steering, obstacle avoidance, and route selection. J Exp Psychol Hum Percept Perform. 2003;29:343–362. doi: 10.1037/0096-1523.29.2.343.

- Gallistel CR. The Organization of Learning. MIT Press; Cambridge, MA: 1990.

- Ghaem O, Mellet E, Crivello F, Tzourio N, Mazoyer B, Berthoz A, Denis M. Mental navigation along memorized routes activates the hippocampus, precuneus, and insula. Neuroreport. 1997;8:739–744. doi: 10.1097/00001756-199702100-00032.

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15:20–25. doi: 10.1016/0166-2236(92)90344-8.

- Gouteux S, Thinus-Blanc C, Vauclair J. Rhesus monkeys use geometric and nongeometric information during a reorientation task. J Exp Psychol Gen. 2001;130:505–519. doi: 10.1037//0096-3445.130.3.505.

- Gray ER, Spetch ML, Kelly DM, Nguyen A. Searching in the Center: Pigeons (Columba livia) Encode Relative Distance From Walls of an Enclosure. J Comp Psychol. 2004;118:113–117. doi: 10.1037/0735-7036.118.1.113.

- Greene M, Oliva A. Recognition of natural scenes from global properties: Seeing the forest without representing the trees. Cognit Psychol. 2009;58:137–176. doi: 10.1016/j.cogpsych.2008.06.001.

- Grill-Spector K. The neural basis of object perception. Curr Opin Neurobiol. 2003;13:159–166. doi: 10.1016/s0959-4388(03)00040-0.

- Grill-Spector K, Malach R. fMR-adaptation: a tool for studying the functional properties of human cortical neurons. Acta Psychol (Amst). 2001;107:293–321. doi: 10.1016/s0001-6918(01)00019-1.

- Harel A, Kravitz DJ, Baker CI. Deconstructing visual scenes in cortex: gradients of object and spatial layout information. Cereb Cortex. 2013;23:947–957. doi: 10.1093/cercor/bhs091.

- Hermer L, Spelke ES. A geometric process for spatial reorientation in young children. Nature. 1994;370:57–59. doi: 10.1038/370057a0.

- Intraub H, Richardson M. Wide-angle memories of close-up scenes. J Exp Psychol Learn Mem Cogn. 1989;15:179–187. doi: 10.1037//0278-7393.15.2.179.

- Janzen G, van Turennout M. Selective neural representation of objects relevant for navigation. Nat Neurosci. 2004;7:673–677. doi: 10.1038/nn1257.

- Jenkinson M, Bannister P, Brady M, Smith S. Improved Optimization for the Robust and Accurate Linear Registration and Motion Correction of Brain Images. NeuroImage. 2002;17:825–841. doi: 10.1016/s1053-8119(02)91132-8.

- Kamps FS, Julian JB, Kubilius J, Kanwisher N, Dilks DD. The occipital place area represents the local elements of scenes. Submitted. doi: 10.1016/j.neuroimage.2016.02.062.

- Kourtzi Z, Kanwisher N. Representation of perceived object shape by the human lateral occipital complex. Science. 2001;293:1506–1509. doi: 10.1126/science.1061133.

- Linsley D, MacEvoy SP. Evidence for participation by object-selective visual cortex in scene category judgments. J Vis. 2014;14:19–19. doi: 10.1167/14.9.19.

- MacEvoy SP, Epstein RA. Decoding the Representation of Multiple Simultaneous Objects in Human Occipitotemporal Cortex. Curr Biol. 2009;19:943–947. doi: 10.1016/j.cub.2009.04.020.

- MacEvoy SP, Yang Z. Joint neuronal tuning for object form and position in the human lateral occipital complex. NeuroImage. 2012;63:1901–1908. doi: 10.1016/j.neuroimage.2012.08.043.

- Maguire EA. The retrosplenial contribution to human navigation: a review of lesion and neuroimaging findings. Scand J Psychol. 2001;42:225–238. doi: 10.1111/1467-9450.00233.

- Marchette SA, Vass LK, Ryan J, Epstein RA. Anchoring the neural compass: coding of local spatial reference frames in human medial parietal lobe. Nat Neurosci. 2014;17:1598–1606. doi: 10.1038/nn.3834.

- Mullally SL, Maguire EA. A new role for the parahippocampal cortex in representing space. J Neurosci. 2011;31:7441–7449. doi: 10.1523/JNEUROSCI.0267-11.2011.

- Nasr S, Devaney KJ, Tootell RBH. Spatial encoding and underlying circuitry in scene-selective cortex. NeuroImage. 2013;83:892–900. doi: 10.1016/j.neuroimage.2013.07.030.

- Oliva A, Schyns PG. Coarse Blobs or Fine Edges? Evidence That Information Diagnosticity Changes the Perception of Complex Visual Stimuli. Cognit Psychol. 1997;34:72–107. doi: 10.1006/cogp.1997.0667.

- Oliva A, Torralba A. Modeling the shape of a scene: a holistic representation of the spatial envelope. Int J Comput Vis. 2001;42:145–175.

- Park S, Chun MM. Different roles of the parahippocampal place area (PPA) and retrosplenial cortex (RSC) in panoramic scene perception. NeuroImage. 2009;47:1747–1756. doi: 10.1016/j.neuroimage.2009.04.058.

- Park S, Intraub H, Yi D-J, Widders D, Chun MM. Beyond the Edges of a View: Boundary Extension in Human Scene-Selective Visual Cortex. Neuron. 2007;54:335–342. doi: 10.1016/j.neuron.2007.04.006.

- Persichetti AS, Aguirre GK, Thompson-Schill SL. Value Is in the Eye of the Beholder: Early Visual Cortex Codes Monetary Value of Objects during a Diverted Attention Task. J Cogn Neurosci. 2015;27:893–901. doi: 10.1162/jocn_a_00760.

- Rauchs G, Orban P, Balteau E, Schmidt C, Degueldre C, Luxen A, Maquet P, Peigneux P. Partially segregated neural networks for spatial and contextual memory in virtual navigation. Hippocampus. 2008;18:503–518. doi: 10.1002/hipo.20411.

- Rosenbaum RS, Ziegler M, Winocur G, Grady CL, Moscovitch M. “I have often walked down this street before”: fMRI studies on the hippocampus and other structures during mental navigation of an old environment. Hippocampus. 2004;14:826–835. doi: 10.1002/hipo.10218.

- Schoner G, Dose M, Engels C. Dynamics of behavior: Theory and applications for autonomous robot architectures. Robot Auton Syst. 1995;16:213–245.

- Smith SM. Fast robust automated brain extraction. Hum Brain Mapp. 2002;17:143–155. doi: 10.1002/hbm.10062.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TEJ, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, Niazy RK, Saunders J, Vickers J, Zhang Y, De Stefano N, Brady JM, Matthews PM. Advances in functional and structural MR image analysis and implementation as FSL. NeuroImage. 2004;23(Suppl 1):S208–219. doi: 10.1016/j.neuroimage.2004.07.051.

- Sovrano VA, Bisazza A, Vallortigara G. Modularity and spatial reorientation in a simple mind: encoding of geometric and nongeometric properties of a spatial environment by fish. Cognition. 2002;85:B51–B59. doi: 10.1016/s0010-0277(02)00110-5.

- Spelke E, Lee SA, Izard V. Beyond core knowledge: Natural geometry. Cogn Sci. 2010;34:863–884. doi: 10.1111/j.1551-6709.2010.01110.x.

- Vass LK, Epstein RA. Abstract Representations of Location and Facing Direction in the Human Brain. J Neurosci. 2013;33:6133–6142. doi: 10.1523/JNEUROSCI.3873-12.2013.

- Walther DB, Caddigan E, Fei-Fei L, Beck DM. Natural Scene Categories Revealed in Distributed Patterns of Activity in the Human Brain. J Neurosci. 2009;29:10573–10581. doi: 10.1523/JNEUROSCI.0559-09.2009.

- Walther DB, Chai B, Caddigan E, Beck DM, Fei-Fei L. Simple line drawings suffice for functional MRI decoding of natural scene categories. Proc Natl Acad Sci U S A. 2011;108:9661–9666. doi: 10.1073/pnas.1015666108.

- Wang RF, Spelke ES. Human spatial representation: insights from animals. Trends Cogn Sci. 2002;6:376–382. doi: 10.1016/s1364-6613(02)01961-7.

- Wehner R, Michel B, Antonsen P. Visual navigation in insects: coupling of egocentric and geocentric information. J Exp Biol. 1996;199:129–140. doi: 10.1242/jeb.199.1.129.
