Abstract

How are lexical representations retrieved during sign production? Similar to spoken languages, lexical representation in sign language must be accessed through semantics when naming pictures. However, it remains an open issue whether lexical representations in sign language can be accessed via routes that bypass semantics when retrieval is elicited by written words. Here we address this issue by exploring under which circumstances sign retrieval is sensitive to semantic context. To this end we replicate in sign language production the cumulative semantic cost: The observation that naming latencies increase monotonically with each additional within-category item that is named in a sequence of pictures. In the experiment reported here, deaf participants signed sequences of pictures or signed sequences of Italian written words using Italian Sign Language. The results showed a cumulative semantic cost in picture naming but, strikingly, not in word naming. This suggests that only picture naming required access to semantics, whereas deaf signers accessed the sign language lexicon directly (i.e., bypassing semantics) when naming written words. The implications of these findings for the architecture of the sign production system are discussed in the context of current models of lexical access in spoken language production.

The cognitive machinery that underlies language processing has long attracted the interest of many researchers. Psycholinguistic research has dealt with several linguistic issues (e.g., comprehension, production, speech perception, reading), methodologies (e.g., behavioral, neuroimaging, neuropsychological), and populations (e.g., monolingual, bilingual, brain-damaged individuals). The overwhelming majority of that research, however, has concentrated on spoken languages, and only to a lesser degree considered signed languages. Thus, although we have many detailed models of lexical access in spoken language production, there is little data available that would be informative about which aspects of those models may or may not apply to sign language production. Our goal here was to begin to address this gap in our knowledge about sign language production by exploring a key feature of language production: the retrieval of words from the mental lexicon. In particular, we explore whether sign retrieval undergoes the same semantic constraints as spoken word retrieval and whether the same dissociation between picture and word naming that is observed for spoken languages is also observed for signed languages.

Lexical access refers to the processes involved in the retrieval of words from the speaker’s memory system. Lexical access in language production is semantically driven because speakers must first select the semantic representations corresponding to the words they want to utter. A well-documented phenomenon in the field is that the speed and accuracy of word retrieval are affected by semantic context. Semantic context effects have been extensively studied in recent years as they provide leverage on key issues that all models of word production must address (for a recent review, see Navarrete, Del Prato, Peressotti, & Mahon, 2014). Perhaps the most exploited experimental paradigm showing semantic context effects in language production is the picture-word interference task, in which participants name a picture (e.g., train) while ignoring a simultaneously presented distractor word that can be semantically related (e.g., car) or semantically unrelated (e.g., cat). In that task, the semantic relationship between the target responses and the distractor words is manipulated for each specific trial: Therefore, the semantic context is manipulated at an intra-trial level. Research on language production has also focused on semantic context effects that are based on manipulations at the inter-trial level, that is, across different trials in a picture-naming task. For instance, the time required to name a picture of an object (e.g., train) is reduced when the previously named picture corresponds to a semantic coordinate (e.g., car; e.g., Huttenlocher & Kubicek, 1983). However, when a semantic coordinate is named several trials before rather than on the immediately preceding trial, naming latencies are slowed down by a semantic coordinate relationship (e.g., Brown, 1981). This effect is referred to as long-lasting semantic interference in picture naming.

There is general agreement that facilitation from coordinate pictures in naming tasks is due to semantic priming (i.e., spreading activation between linked representations at the semantic level that percolates down to the lexical level). However, models of lexical access diverge in their explanation of the origin of semantic interference (e.g., Belke, 2013; Navarrete, Del Prato, & Mahon, 2012; Oppenheim, Dell, & Schwartz, 2010; for a recent review, see Spalek, Damian, & Bölte, 2013). Broadly speaking, two approaches have been proposed to account for long-lasting semantic interference. One approach, recently emphasized by Oppenheim, Dell, and Schwartz (2007; 2010), implements an incremental learning mechanism through which semantic-to-lexical connection weights are adjusted after each naming event (for precedent, see Damian & Als, 2005; Howard, Nickels, Coltheart, & Cole-Virtue, 2006). Specifically, the production of a word, given a target picture, strengthens the connections between the semantic and the lexical representations of that word (e.g., cat) and, at the same time, weakens the connections between the semantic and lexical representations of semantic coordinates of that word (e.g., dog, horse). Thus, when on a subsequent trial, a within-category item has to be selected (e.g., dog), naming latencies are relatively longer because of the weakened semantic-to-lexical connection (see also Navarrete et al., 2012; Navarrete, Mahon, & Caramazza, 2010; Vitkovitch & Humphreys, 1991). An alternative account of long-lasting semantic interference is based on the hypothesis that lexical selection is a competitive process, in that the time needed to select a word from the mental lexicon depends on the levels of activation of activated nontarget words (e.g., Levelt, Roelofs, & Meyer, 1999; Roelofs, 1992). 
According to that view, when a word is produced in response to a picture stimulus, it retains lexical activation for a certain period of time, making it a stronger competitor when a semantic coordinate has to be retrieved on a subsequent trial (Howard et al., 2006).
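As a rough illustration of the first (incremental learning) account described above, the following toy sketch tracks a single shared category-to-word connection weight per item. It is not the model of Oppenheim and colleagues: the function name `simulate_category`, the starting weight of 1.0, and the fixed adjustment `rate` are assumptions made purely for illustration. Naming an item strengthens its own weight and weakens the weights of its category coordinates, so each later category member is reached through a progressively weaker connection.

```python
def simulate_category(items, rate=0.1):
    """Return the connection weight each item has at the moment it is named.

    Hypothetical toy model: every item starts with a semantic-to-lexical
    weight of 1.0; naming a target adds `rate` to its own weight and
    subtracts `rate` from every other member of the same category.
    """
    weights = {item: 1.0 for item in items}
    weight_at_naming = []
    for target in items:
        # Record how strong the connection is when this item must be retrieved.
        weight_at_naming.append(weights[target])
        for item in items:
            if item == target:
                weights[item] += rate  # strengthen the named word
            else:
                weights[item] -= rate  # weaken its semantic coordinates
    return weight_at_naming


if __name__ == "__main__":
    vehicles = ["train", "car", "ship", "airplane", "bus"]
    for name, weight in zip(vehicles, simulate_category(vehicles)):
        print(f"{name}: weight when named = {weight:.2f}")
```

Under these assumptions the weight available at retrieval drops by a constant amount with each ordinal position, which is the kind of linear cost the account is meant to capture; actual latencies would depend on how weights map onto selection time, which this sketch does not model.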

Common across these two main approaches for modeling long-lasting semantic interference is the expectation that interference should emerge when words are accessed via semantic representations (as, for instance, when the stimuli to be named are pictures) but not when words can be accessed by bypassing semantic representations (as, for instance, when the stimuli are printed words). Congruent with these expectations, compelling evidence shows that long-lasting semantic interference emerges when the task implies lexical retrieval via semantic representations, as in picture naming or in naming to definition tasks (e.g., Damian, Vigliocco, & Levelt, 2001; Navarrete et al., 2012; Wheeldon & Monsell, 1994), whereas there is no semantic interference when the stimuli are words and the task at hand is word reading (Belke, 2013; Damian et al., 2001; Navarrete et al., 2010; but see Vitkovitch & Cooper, 2012; Vitkovitch, Cooper-Pye, & Ali, 2010).

Here, we sought to replicate this interaction between semantic interference and stimulus type (pictures or words) in sign language production. Naming a picture is a semantically driven process that entails adjustments to semantic-to-lexical connections; therefore, a semantic interference effect is expected in sign production with picture stimuli. Of critical relevance for models of sign production is the word condition. In that condition, the presence or lack of a semantic interference effect will indicate how words in sign language are accessed from printed word stimuli: via semantics or directly from the printed stimulus.

In the main experiment we report here, deaf participants named in Italian Sign Language a sequence of pictures or “read” (i.e., translated) a sequence of Italian printed words. We used a continuous naming paradigm in which participants are presented with a sequence of pictures from diverse semantic categories in a (seemingly) random order. A reliable phenomenon with this paradigm is the cumulative semantic cost: picture-naming latencies increase for every successive category exemplar that is named within the sequence. Specifically, naming latencies increase linearly as a function of ordinal position within-category. For instance, in a sequence like “train—apple—table—car—hammer—ship—violin—tomato—airplane—etc,” naming latencies to the second vehicle (e.g., car) are slower than naming latencies to the first vehicle (e.g., train); likewise, the naming latencies to the third vehicle (e.g., ship) are slower, and by the same amount, than naming latencies to the second vehicle, and so on (for early work, see Brown, 1981; for more recent work, see Alario & Martin, 2010; Costa, Strijkers, Martin, & Thierry, 2009; Howard et al., 2006; Navarrete et al., 2010; Runnqvist, Strijkers, Alario, & Costa, 2012). The critical issue in this study is the contrasting predictions regarding naming of picture and word stimuli.

As mentioned previously, because naming a picture is a semantically driven process, we predict a cumulative semantic cost when participants sign picture names (e.g., Howard et al., 2006). This prediction is also congruent with recent studies showing semantic interference in sign production using a picture-sign interference task (i.e., the signed version of the picture-word interference task; Baus, Gutiérrez-Sigut, Quer, & Carreiras, 2008; for a recent review, see Corina, Gutierrez, & Grosvald, 2014). In relation to word stimuli, in Italian Sign Language, there is no direct correspondence between printed words and the corresponding signs (i.e., no orthography to phonology conversion procedures can be applied). In order to sign written words, deaf participants must retrieve lexical representations from memory because the response cannot be directly derived from the orthographic stimulus. Critically, deaf participants of this study had Italian Sign Language as a primary language and spoken and written Italian as a second language. Thus, naming Italian printed words with a sign of the Italian Sign Language is a translation task. The predictions about the word stimuli condition benefit from consideration of prior work in speech production in bilinguals.

The only study exploring the cumulative semantic cost in a bilingual context is that by Runnqvist and colleagues (2012). Those authors compared Spanish–Catalan bilinguals when naming a sequence of pictures using one language (i.e., Spanish or Catalan) to when they were using two languages (i.e., some pictures in Spanish and others in Catalan). The results revealed a cumulative semantic cost that transferred between languages, that is, when within-category items were named in two different languages (i.e., some items in Spanish and the rest in Catalan). Critically, the magnitude of the cumulative semantic cost between languages was similar to the magnitude of the cost obtained when a single language was used (i.e., when all within-category items were named in the same language). However, Runnqvist and colleagues’ study was with picture naming only, and thus does not speak directly to word translation. Kroll and Stewart (1994) explored semantic context effects during word translation in oral languages. In their influential paper, Kroll and Stewart explored semantic effects in a group of Dutch–English bilinguals that performed a word translation task from L1 (i.e., first language) to L2 (i.e., second language) or from L2 to L1 in the context of the blocked naming paradigm. In this task, participants are slower to name pictures if they are grouped together within a block of all within-category items (e.g., cat, dog, horse) compared with blocks of items from different categories (e.g., cat, table, lemon; for recent discussions of the blocking effect, see Navarrete et al., 2012; 2014). Kroll and Stewart (1994) reported a semantic interference effect, with slower translation responses in the homogenous condition than in the heterogeneous condition, in the L1-to-L2 translation condition only. In the L2-to-L1 translation condition, there was no semantic interference effect.
Based on the assumption that lexical links from L2 to L1 are stronger than those from L1-to-L2, the authors concluded that semantic interference emerges only in the circumstance in which word translation is semantically driven (L1–L2), whereas no effects are obtained when the translation is lexically driven (L2–L1). In the same vein, if deaf participants can sign Italian written words via a direct lexical route that bypasses semantic access (as it is assumed in the case of oral language readers of alphabetic orthographies), no cumulative semantic cost should be observed with printed word stimuli, replicating the main finding of Kroll and Stewart (1994) for the L2-to-L1 translation. By contrast, if deaf participants use a different procedure to retrieve the signs, which mandatorily involves the activation of semantic-to-lexical connections, then a cumulative semantic cost with word stimuli would emerge as is predicted in picture naming. Further motivation for this last prediction is provided by the recent findings of Vinson, Thompson, Skinner, Fox, and Vigliocco (2010). Those authors found that native British Sign Language participants produced more semantic errors when signing English written words in homogenous blocks than in heterogeneous blocks, although no differences between homogenous and heterogeneous blocks were observed in the number of nonsemantic errors.

Finally, in order to allow a direct comparison with the above-reviewed studies based on spoken languages, a control group of hearing participants of comparable age was submitted to the same two conditions, that is, word and picture naming.

Experiment

Method

Participants

Twenty-two Italian Sign Language speakers, students at the Magarotto School for deaf people (Padova, Italy), were included in the experimental group (mean age = 18; range: 15–30; standard deviation [SD] = 4.26). All participants had normal or corrected to normal vision and none had cognitive deficits, or other sensory deficits aside from being deaf. They used Italian Sign Language as their primary and preferred means of communication at school and in everyday life. All deaf participants were also proficient users of spoken and written Italian, which was part of the school curriculum. Twenty participants were native Italian Sign Language signers. Two participants were nonnative signers, that is, the first language to which they were exposed was not Italian Sign Language; both became fluent Italian Sign Language speakers when they entered the Magarotto School at the age of 13. All students participated voluntarily in the experiment and provided written informed consent; for participants younger than 18 years, written consent from parents was required and obtained. Twenty hearing native Italian speakers, without knowledge of Italian Sign Language, of the same age (mean age = 18; range: 15–30; SD = 4.36) took part in the experiment requiring spoken responses. The hearing participants were students of the University of Padova or students at other schools in Padova (Italy).

Materials

Eighty-eight color photographs were taken from the Internet and sized to fit within a square of 400×400 pixels. Fifty of the 88 photographs belonged to 10 different semantic categories, with 5 items in each semantic category (see Appendix). The rest of the photographs were filler items and did not come from the same categories as the critical items.

Design

The eighty-eight pictures were randomly inserted into a sequence with the following constraints. Pictures from each category were separated by lags of 2, 4, 6, or 8 intervening items. The first 5 items of the sequence were filler items. Filler items and the order of the categories in the sequence were randomly assigned. This process was repeated nine times following the same constraints and structure, resulting in 10 experimental sequences (see Navarrete et al., 2010, for precedent on these constraints). Two different versions of each sequence were created: one containing picture stimuli and the other containing word stimuli (in Italian). Each participant received four different experimental sequences (i.e., blocks). The first 2 experimental blocks contained picture stimuli and the last two word stimuli, or vice versa. This was counterbalanced evenly across participants.
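The sequence constraints described above can be expressed as a small validity check. The sketch below is illustrative only and is not the procedure used to build the experimental sequences: the function names, the representation of a sequence as a list of category labels with `None` marking fillers, and the default parameters are assumptions. It computes the number of intervening items between successive members of each category and verifies that the leading items are fillers and that every lag is one of the allowed values.

```python
ALLOWED_LAGS = frozenset({2, 4, 6, 8})  # intervening items between category members


def category_lags(sequence):
    """Map each category to the lags between its successive members.

    `sequence` is a list of category labels; None marks a filler item.
    A lag is the number of items intervening between two successive
    members of the same category.
    """
    positions = {}
    for index, category in enumerate(sequence):
        if category is not None:
            positions.setdefault(category, []).append(index)
    return {
        category: [later - earlier - 1 for earlier, later in zip(idx, idx[1:])]
        for category, idx in positions.items()
    }


def satisfies_constraints(sequence, lead_fillers=5, allowed_lags=ALLOWED_LAGS):
    """Check that the sequence starts with fillers and uses only allowed lags."""
    if any(label is not None for label in sequence[:lead_fillers]):
        return False
    return all(
        lag in allowed_lags
        for lags in category_lags(sequence).values()
        for lag in lags
    )


if __name__ == "__main__":
    # Shortened, made-up sequence: five fillers, then three "vehicle" items.
    demo = [None] * 5 + ["vehicle", None, None, "vehicle",
                         None, None, None, None, "vehicle"]
    print(category_lags(demo))
    print(satisfies_constraints(demo))
```

A generator for full 88-item sequences could repeatedly draw random candidate orders and keep the first one that passes such a check, although the text does not specify how the randomization was implemented.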

Procedure

An experimental trial for the deaf participants involved the following events. At the center of the screen the instruction “Press z + m” was presented. Participants were required to press the keys “z” and “m” on the keyboard with the index fingers of the left and right hands, respectively. As soon as the two keys were pressed, the target stimulus appeared on the screen. Participants were asked to name the item as fast and as accurately as possible with the corresponding sign. Items were presented until one of the two keys was released from the keyboard. An inter-trial interval of 1,500ms was initiated when the second key was released from the keyboard. After the inter-trial interval, the instructions for the next trial were presented (“Press z + m”). Reaction times were measured for both the first and the second key releases (analyses below are based on the first release). There was a short pause between each block. There was no familiarization and participants were not corrected throughout the experimental session (as in previous studies of the cumulative semantic cost, e.g., Howard et al., 2006; Navarrete et al., 2010). Before the start of the experiment, participants were trained with the naming procedure. Fourteen new filler pictures were selected for the training phase. Only when the participant was able to perform the task correctly did the experiment begin. Eighteen participants required only one training block, whereas four required two training blocks.

In the control experiment with native Italian speakers, the instruction “Press z + m” was not presented and participants orally named (i.e., in Italian spoken language) picture and word stimuli. All participants in the control experiment completed one training block.

Analysis

Analyses were performed only on experimental items and separately for word and picture blocks. Naming latencies were measured from the onset of the target picture until the first key release in the deaf group and until speech onset in the hearing group. The experimenter was located behind the participants and judged the response for correctness. Three types of responses were scored as errors and excluded from the analyses of response latencies: (a) production of clearly erroneous names; (b) production of disfluencies or utterance repairs or hesitations; and (c) response times less than 250ms or greater than 2,500ms. For the deaf group, disfluencies included those responses in which the different parameters of the sign were produced in an intermittent or discontinuous manner, and repairs referred to those responses in which participants started to produce a wrong sign, stopped, and then produced the correct sign. Two deaf participants (one starting with picture blocks and one with word blocks) produced more than 25% of errors and were discarded from the analysis. Separate analyses for pictures and words, collapsing across the two blocks, were performed. Analyses were carried out treating participants and categories as random factors on the within-subject factor, ordinal position within-category (five levels: 1–5).
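The latency trimming and the by-position summary described above can be sketched as follows. This is a minimal illustration, not the analysis code used in the study: the trial representation (dictionaries with `position`, `rt`, and `correct` keys), the function names, and the use of the standard polynomial contrast weights −2, −1, 0, 1, 2 as a linear trend score are assumptions. Only the 250 ms and 2,500 ms cutoffs and the five ordinal positions come from the text.

```python
from collections import defaultdict
from statistics import mean

MIN_RT_MS = 250    # responses faster than this are excluded
MAX_RT_MS = 2500   # responses slower than this are excluded


def keep_trial(trial):
    """Keep correct responses whose latency lies within the cutoffs."""
    return trial["correct"] and MIN_RT_MS <= trial["rt"] <= MAX_RT_MS


def mean_rt_by_position(trials):
    """Mean latency for each ordinal position within-category (1 to 5)."""
    by_position = defaultdict(list)
    for trial in trials:
        if keep_trial(trial):
            by_position[trial["position"]].append(trial["rt"])
    return {pos: mean(rts) for pos, rts in sorted(by_position.items())}


def linear_trend(means):
    """Linear contrast over five positions (weights -2, -1, 0, 1, 2).

    A positive value indicates latencies that rise with ordinal position.
    """
    weights = {1: -2, 2: -1, 3: 0, 4: 1, 5: 2}
    return sum(weights[pos] * value for pos, value in means.items())


if __name__ == "__main__":
    # Made-up trials for one hypothetical participant.
    trials = [
        {"position": 1, "rt": 700, "correct": True},
        {"position": 1, "rt": 200, "correct": True},    # too fast, dropped
        {"position": 2, "rt": 720, "correct": True},
        {"position": 3, "rt": 740, "correct": True},
        {"position": 4, "rt": 760, "correct": True},
        {"position": 5, "rt": 780, "correct": True},
        {"position": 5, "rt": 900, "correct": False},   # error, dropped
    ]
    means = mean_rt_by_position(trials)
    print(means)
    print(linear_trend(means))
```

In a by-participants analysis, one such contrast score would be computed per participant (and, for the by-items analysis, per category) before being submitted to the statistical test.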

Results

Deaf group

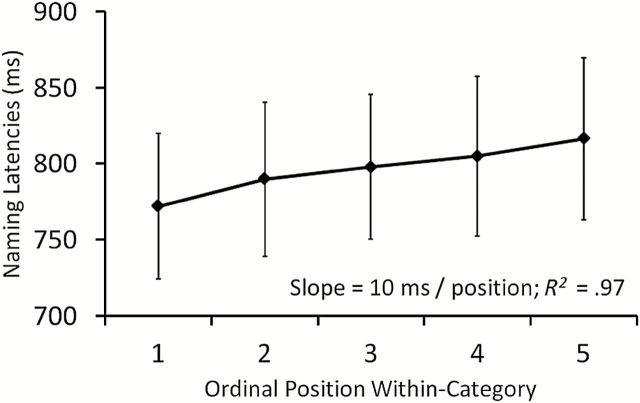

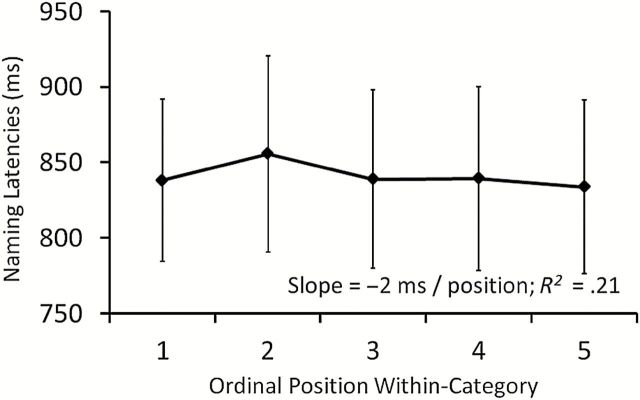

In the picture blocks, the effect of ordinal position within-category was significant in the analysis of the naming latencies (F1 (4, 76) = 4.01, p < .01, ηp² = .17; F2 (4, 36) = 4.05, p < .01, ηp² = .31) but not in the error analysis (Fs < 1). Response times increased for each subsequent within-category item (see Table 1). In the word blocks, the effect of ordinal position within-category was not significant in either the analysis of naming latencies or the analysis of error rates (Fs < 1; see Table 1). In an analysis of the linear trend, response times in the picture blocks increased linearly with each additional within-category item that was named (F1 (1, 19) = 20.29, p < .01, ηp² = .51; F2 (1, 9) = 13.78, p < .01, ηp² = .6; see Figure 1); there was no effect of a linear trend in the word blocks (F1 (1, 19) = 1.01, p = .32, ηp² = .05; F2 (1, 9) = 1.21, p = .3, ηp² = .11; see Figure 2). In a further analysis of naming latencies, we explored the interaction between the factor ordinal position within-category and the factor block (i.e., picture vs. words). The interaction between those two factors was marginally significant by participants and not significant by items (F1 (4, 76) = 2.18, p = .08, ηp² = .1; F2 (4, 36) = 1.69, p = .17, ηp² = .15). Critically, in the analysis of the linear trend, the interaction between those factors was significant (F1 (1, 19) = 14.25, p < .01, ηp² = .42; F2 (1, 9) = 10.29, p < .02, ηp² = .53), reflecting a cumulative semantic cost with picture targets but not with word targets.

Table 1.

Mean naming latencies (response times [RT] in milliseconds), standard deviations of the RTs (SD), and percentage of error rates (E) by ordinal position within-category for picture and word stimuli in the deaf group

| Ordinal position | Pictures RT | Pictures SD | Pictures E | Words RT | Words SD | Words E |
|---|---|---|---|---|---|---|
| 1 | 772 | 215 | 6 | 838 | 240 | 12.3 |
| 2 | 790 | 227 | 5.5 | 856 | 290 | 11.5 |
| 3 | 798 | 213 | 6.5 | 839 | 264 | 11.5 |
| 4 | 805 | 234 | 6.5 | 839 | 272 | 12.8 |
| 5 | 816 | 238 | 5.5 | 834 | 257 | 12 |
| Mean | 796 | | 6 | 841 | | 12 |

Figure 1.

Mean naming latencies by ordinal position within-category collapsed across blocks for picture stimuli in the deaf group.

Figure 2.

Mean naming latencies by ordinal position within-category collapsed across blocks for word stimuli in the deaf group.

It may be argued that the absence of the effect in the word blocks is due to the fact that naming latencies were too slow to detect an effect. This argument is weakened by the fact that naming times for words were, on average, only 40 ms slower than for pictures (this difference was marginally significant in the item analysis; t1 < 1; t2 (1, 9) = 2, p = .07). Moreover, the concern that response latencies were too slow to detect an effect is decisively ruled out by the fact that there was a reliable effect of repetition priming when naming words, with longer word naming latencies in the first block than in the second block (886 and 798ms, respectively; F1 (1, 19) = 12.67, p < .01, ηp² = .4; F2 (1, 9) = 122.27, p < .01, ηp² = .93). Furthermore, there was a negative correlation between response times and written-word frequency for word stimuli (r = −.55, p < .01) that remained when word length (i.e., number of letters) was partialled out (r = −.42, p < .01).¹ In sum, these results indicate that the lack of a cumulative semantic cost in the word naming condition is a real effect.

Hearing group

In picture blocks, the effect of ordinal position within-category was significant in the analysis of naming latencies (F1 (4, 76) = 13.81, p < .01, ηp² = .42; F2 (4, 36) = 13.85, p < .01, ηp² = .61) and error rates (F1 (4, 76) = 5.06, p < .01, ηp² = .21; F2 (4, 36) = 5.26, p < .01, ηp² = .36). Response times and error rates increased for each subsequent within-category item (see Table 2). In the word blocks, the effect of ordinal position within-category was not significant in the analysis of naming latencies (F1 < 1; F2 (4, 36) = 1.37, p = .26, ηp² = .13). In the analysis of the error rates, the effect of ordinal position within-category was significant in the participant analysis only (F1 (4, 76) = 2.5, p < .05, ηp² = .11; F2 (4, 36) = 2.1, p = .1, ηp² = .19); errors decreased with each within-category item that was named (see Table 2). In the analysis of the linear trend, response times in the picture blocks increased linearly with each additional within-category item that was named (F1 (1, 19) = 31.2, p < .01, ηp² = .62; F2 (1, 9) = 34.16, p < .01, ηp² = .79); there was no effect of a linear trend in the word blocks (F1 (1, 19) = 1.74, p = .2, ηp² = .08; F2 (1, 9) = 2.41, p = .15, ηp² = .21). Overall, response times for word trials were faster than response times for picture trials (555 and 855ms, respectively; t1 (1, 19) = 14, p < .01; t2 (1, 9) = 14.55, p < .01). As for the deaf group, we explored the interaction between the factor ordinal position within-category and the factor block (i.e., picture vs. words). The interaction between those factors was significant (F1 (4, 76) = 12.87, p < .01, ηp² = .4; F2 (4, 36) = 15.64, p < .01, ηp² = .63). Paralleling the results of the deaf group, in the analysis of the linear trend, the interaction between those two factors was also significant (F1 (1, 19) = 31.69, p < .01, ηp² = .62; F2 (1, 9) = 36.55, p < .01, ηp² = .8), reflecting the cumulative semantic cost with picture targets but not with word targets.

Table 2.

Mean naming latencies (response times [RT] in milliseconds), standard deviations of the RTs (SD), and percentage of error rates (E) by ordinal position within-category for picture and word stimuli in the hearing group

| Ordinal position | Pictures RT | Pictures SD | Pictures E | Words RT | Words SD | Words E |
|---|---|---|---|---|---|---|
| 1 | 806 | 114 | 10 | 557 | 94 | 6.5 |
| 2 | 828 | 104 | 8.7 | 558 | 95 | 5 |
| 3 | 856 | 111 | 11 | 555 | 95 | 5.3 |
| 4 | 882 | 113 | 9.7 | 556 | 97 | 2.8 |
| 5 | 888 | 90 | 18.5 | 551 | 97 | 3.2 |
| Mean | 852 | | 12 | 555 | | 5 |

General Discussion

Deaf speakers of Italian Sign Language signed sequences of words or sequences of pictures. A cumulative semantic cost with picture stimuli was observed, such that naming times increased with each additional within-category item that was named, replicating previous studies with spoken word production (e.g., Brown, 1981). Critically, no cumulative semantic cost was observed with word stimuli. Finally, in the control experiment, a cumulative semantic cost was observed when native Italian speakers named, in spoken language, picture stimuli but not when they named word stimuli, in line with previous findings (Belke, 2013; Navarrete et al., 2010). The results of this study suggest that semantic interference in sign production emerges in those circumstances in which lexical access is semantically driven (e.g., from target pictures) but not in those circumstances in which lexical access is lexically driven (e.g., from word stimuli). Below we discuss the implications of our results for a processing model of signed production, as well as for models of spoken word production and reading aloud.

First, our results offer a straightforward demonstration of the distinction between lexical and semantic representations in signed languages. This distinction has been questioned by theories that, based on the iconicity present in some signs, postulated a unique representational level for deaf signers, that is, the semantic phonology (Stokoe, 1991). According to that view, the form of a sign can be derived on the basis of some semantic features of its meaning, obviating the distinction between meaning and form. Contrary to this idea, our results suggest that deaf speakers can access the lexical representation of a sign from the orthographic representation of the word and that semantic mediation is not required for this form of translation.

Beyond this broad distinction between semantic and lexical representations, extant theories of spoken language production agree on the notion that the translation of the speaker’s communicative intention into a specific set of sounds is completed in (at least) two distinct stages of processing. The first stage entails the activation of at least one lexical representation, which is semantically and syntactically mediated, whereas the second stage entails the activation of form-based representations (i.e., phonological segments, e.g., Caramazza, 1997; Levelt et al., 1999). Perhaps the simplest evidence for the distinction between lexical and phonological stages is the tip-of-the-tongue (TOT) experience, the sensation of being temporarily unable to retrieve a known word for production. Interestingly, speakers experiencing a TOT can often retrieve some information associated with the intended word such as some of its phonemes or, in languages with grammatical gender, its grammatical gender (e.g., Brown, 1991; 2012). Support for a clear distinction between lexical and phonological representations in sign languages has been presented by Thompson, Emmorey, and Gollan (2005), who demonstrated that in deaf speakers the “tip of the fingers” phenomenon is highly similar to the TOT phenomenon in hearing speakers. Thompson and colleagues interpreted their results as supporting a two-stage model of sign production in which lexical and phonological levels are clearly separated, similar to what is generally assumed for oral languages (see also Brentari, 1998; Liddell & Johnson, 1989). Congruent with this interpretation, recent studies have replicated in signed languages some well-documented phonological phenomena previously obtained in spoken languages.
For instance, in a picture-sign interference paradigm in Catalan Sign Language, Baus and colleagues (2008) manipulated the phonological overlap between the picture target and the sign distractor (specifically, the parameters of Handshape, Movement, and Location). The results showed that the time to sign the picture was affected by the presence of phonological overlap. These studies support the distinction between lexical and phonological representations in signed languages; the results reported here support the distinction between semantic and lexical representations. Taken together, this evidence suggests that, similar to what is accepted in models of spoken word production (e.g., Levelt et al., 1999), sign production entails the retrieval of at least three distinct types of information: semantic, lexical, and phonological.

On the other hand, the absence of a cumulative semantic cost in word reading by deaf participants (i.e., translation to sign) would seem to be at odds with recent findings by Vinson and colleagues (2010) in a blocked naming paradigm. These authors report more semantic errors when native British Sign Language deaf participants sign English written words in homogenous blocks than in heterogeneous blocks. In the study by Vinson and colleagues, however, participants could lift their hands to begin signing regardless of whether or not they had already retrieved the sign and were ready to produce it. As a consequence, response latencies were extremely fast (350–400ms) and not analyzed (see footnote 2 in Vinson et al., 2010). By contrast, in the experiment reported herein, participants were instructed to release their fingers only when the response was available, making the procedure similar to that used in standard spoken naming experiments, where participants are instructed to name the stimuli and to avoid any extraneous sound that could trigger the microphone. Thus, it is difficult to directly compare the results obtained by Vinson and colleagues with those obtained in standard naming studies.

A second implication of our study derives from the observation that semantic interference effects in the hearing and deaf groups were the same, suggesting that lexical retrieval mechanisms in signed and spoken languages might rely on highly similar cognitive principles. By contrast, the two groups of participants differed substantially in the pattern of latency effects: Although hearing participants were faster to name words than pictures, the reverse was true for deaf participants. As detailed previously, this fact can be explained because for deaf participants the word “naming” condition is in fact a translation task, and word translation may require greater processing demands than picture naming. Congruent with this, in a recent naming study in Chinese Sign Language, Hu, Wang, Liu, Peng, Yang, Li, Zhang, and Ding (2013) observed greater activation of the left-inferior frontal gyrus with word stimuli than with picture stimuli, and activation in left-inferior frontal gyrus has been associated with task demands (e.g., Thompson-Schill, D’Esposito, Aguirre, & Farah, 1997).

In a study with English–French bilingual speakers, Kroll, Michael, Tokowicz, and Dufour (2002) reported that L2 proficiency determines the speed of both L1-to-L2 and L2-to-L1 translation (i.e., forward and backward translation). Specifically, as L2 proficiency increased, both forward and backward translation latencies decreased. That study also reported a translation asymmetry effect: translation was faster from L2 to L1 than from L1 to L2. The translation asymmetry was larger for less proficient bilinguals, although highly proficient bilinguals showed the asymmetry as well. Kroll and colleagues interpreted this asymmetry as congruent with the bilingualism model proposed by Kroll and Stewart (1994), according to which there are distinct routes for translation. Whereas L2-to-L1 translation can be achieved mainly on the basis of direct links between the two lexicons, L1-to-L2 translation entails access to the meaning (see above). Participants in the present study were recruited from a boarding school for deaf students where Italian Sign Language is used for teaching and is the primary and preferred language for communication. We cannot, however, exclude the possibility that the deaf participants differed in their level of proficiency in Italian. Therefore, and in line with Kroll and colleagues (2002), it is plausible that latencies in the word naming condition were (overall) affected by the participants' proficiency in Italian. Critically, and more relevant to our principal aim here, such differences would not modulate the presence or absence of a cumulative semantic cost. Indeed, our results converge with those reported by Kroll and Stewart (1994), where highly fluent Dutch–English bilinguals did not show semantic interference when translating English words into Dutch (L2-to-L1). Therefore, the lack of semantic effects when deaf participants are naming printed Italian words (L2) in Italian Sign Language (L1) would be consistent with Kroll and Stewart’s results. This finding is most striking because it suggests that direct lexical links can also be established between two languages of different modalities (auditory-motor vs. visuo-motor), such as Italian and Italian Sign Language.

In relation to psycholinguistic models of spoken word production, this study extends previous results (e.g., Navarrete et al., 2010) in showing that no cumulative cost is obtained in response to word stimuli even when there is no clear correspondence between the orthography (i.e., the letter string) and the response (i.e., the sign). These data suggest that the absence of the cumulative cost in the previous studies in spoken languages cannot simply depend on sublexical processing of the word stimuli. An important open issue in the field of lexical access concerns the origin(s) of semantic interference in language production tasks. The findings reported here suggest that semantic interference arises as a consequence of semantically driven processes (Belke, 2013; Howard et al., 2006; Navarrete et al., 2010; Oppenheim et al., 2010) and are difficult to reconcile with accounts that assume that semantic interference is exclusively a lexical phenomenon (e.g., Vitkovitch & Cooper, 2012; Vitkovitch et al., 2010).

Our results also have implications for psycholinguistic models of bilingualism, in particular with respect to the issue of cross-linguistic activation. There is general agreement that the two languages of a bilingual speaker are concurrently activated in the course of language production in a monolingual context (e.g., Colomé & Miozzo, 2010; Costa, Caramazza, & Sebastián-Gallés, 2000; Kroll, Bobb, & Wodniecka, 2006; for discussion, see Costa, Heij, & Navarrete, 2006). Similarly, research in comprehension also suggests cross-language activation of the two languages of a bilingual speaker (e.g., Dijkstra, 2005; Marian & Spivey, 2003). Nevertheless, the issue of whether there is cross-activation between languages of different modalities has been addressed only in recent years. For instance, Morford, Wilkinson, Villwock, Piñar, and Kroll (2011) asked American Sign Language (ASL)-English bilinguals to perform a semantic judgment task on pairs of written English words. Morford and colleagues observed that the time to perform the semantic task depended on whether or not the two written English words were phonologically related in ASL (i.e., the language not in use in the task). They concluded that bimodal bilinguals co-activate both languages during the semantic task. Shook and Marian (2012) have reported similar findings using a visual world search paradigm. Specifically, in that study, ASL-English bilinguals were instructed, in English, to search for a target object in a display containing three other distractor objects. Bimodal bilinguals were slower to select the target object from the display when one of the distractor objects was phonologically related to the target object in ASL (see also Kubus, Villwock, Morford, & Rathmann, 2014; Morford, Kroll, Piñar, & Wilkinson, 2014; Ormel, Hermans, Knoors, & Verhoeven, 2012). The results of the present study, which reveal the existence of direct links between written words and sign language lexical representations, are in line with the idea of cross-activation between the linguistic systems of two languages of different modalities.

It is generally agreed that there are (at least) two routes through which a word can be read aloud in alphabetic languages (such as Italian or English; e.g., Ellis & Young, 1988; Harris & Coltheart, 1986; Morton, 1980). The nonlexical route operates according to grapheme–phoneme correspondence rules that map letters to phonemes. The semantic route involves reading via the semantic representation of the word. The nonlexical route cannot support the translation of written words into signs, because there is no clear correspondence between the letter string and the sign. If deaf participants retrieved lexical representations in Italian Sign Language through semantically driven access (i.e., the semantic route), a cumulative semantic cost should have emerged. Since this was not the case, the use of the semantic route can also be excluded. Importantly, a third reading route has also been proposed: a direct mapping from an orthographic representation of a word onto a production lexical representation, the lexical route. This third route was motivated by the performance of some brain-damaged patients, and it has since received support at the experimental level (e.g., Fias, Reynvoet, & Brysbaert, 2001; Peressotti & Job, 2003). The neuropsychological evidence consists of patients with deficits to the semantic system as well as to the grapheme-to-phoneme conversion mechanism who are nonetheless able to read some words aloud (e.g., Funnell, 1983; Law, Wong, & Chiu, 2005; Sartori, Masterson, & Job, 1987). The interaction we observed between semantic interference and stimulus type (pictures vs. words) suggests that deaf participants name written words through direct mappings between orthographic representations and production-level lexical representations of the sign, that is, through the lexical route. It is important to note that the input representation that is mapped to the output lexical representation may be a modality-specific (i.e., orthographic-specific) lexical representation.

It is worth noting that models of reading aloud assume cascading activation between the different stages of processing involved in word reading. That is, although a word may be read through a lexical route, some amount of activation will be propagated back up to the semantic system and to the nonlexical route (Coltheart, Rastle, Perry, Langdon, & Ziegler, 2001; Perry, Ziegler, & Zorzi, 2007). In the same vein, although deaf participants in our experiment signed printed words through the lexical route, activation would be propagated up to the semantic system. Evidence that this is also the case in signed languages comes from the recent naming study conducted by Hu and colleagues (2011). Those authors obtained a high overlap of activation in several brain regions for both types of stimuli (i.e., words and pictures) and suggested that word and picture naming share several cognitive processes (i.e., conceptual, lexical, phonological, and articulatory processes). Nevertheless, Hu and colleagues reached a different conclusion from the one we reached here. They interpreted the similar pattern of activation as congruent with the notion that deaf participants retrieve lexical representations for both stimulus types (pictures and words) through the semantic system. By contrast, the interaction between semantic interference and stimulus type we observed here leads us to conclude that deaf participants name words through direct mappings between orthographic representations and production representations. That said, there is nothing in the model we propose that would prevent deaf participants from reading via semantic representations. In other words, as is the case for speakers of oral languages, task demands are likely to bias which route is the critical route for naming word stimuli. An important issue for future research will be to unpack the task parameters that bias the use of different reading (i.e., translation) routes in deaf signers.

Conclusion

This research aimed to test whether lexical retrieval during sign production is sensitive to semantic context. Our results suggest that this is the case only when lexical representations are retrieved through the mappings that link semantic to lexical representations (i.e., from picture stimuli). These results parallel those obtained in spoken word production and suggest that deaf signers can directly map between orthographic representations of their spoken language (potentially at the lexical level) and production representations of their sign language. This finding closely parallels the results obtained with unimodal bilinguals (i.e., speakers of two or more oral languages), in support of the view that the surface difference between sign and oral languages does not prevent full bilingualism (Piñar, Dussias, & Morford, 2011; Shook & Marian, 2009).

Funding

National Science Foundation (NSF-1349042).

Conflicts of Interest

No conflicts of interest were reported.

Acknowledgments

The authors are grateful to Silvia Totolo and Elisa Sabbadin for their assistance in running the experiments.

Appendix

Experimental materials organized by semantic category

Animals: donkey, cow, horse, pig, sheep.

Clothes: skirt, pants, sweater, jacket, sock.

Fruit: apple, banana, lemon, orange, pear.

Furniture: bed, chair, sofa, stool, table.

Tableware: cup, fork, glass, knife, spoon.

Transportation: truck, car, helicopter, plane, bus.

Musical instruments: drum, guitar, piano, trumpet, violin.

Body parts: ear, eye, finger, hand, nose.

Vegetables: garlic, eggplant, zucchini, onion, carrot.

White goods: washing machine, refrigerator, microwave, oven, dishwasher.

Note

The correlation between response times and frequency was also significant for picture stimuli (r = -.4, p < .01), as well as when word length was partialled out (r = -.31, p < .04).

References

- Alario F. X., Martín F. M. (2010). On the origin of the “cumulative semantic inhibition” effect. Memory & Cognition, 38, 57–66. 10.3758/MC.38.1.57

- Baus C., Gutiérrez-Sigut E., Quer J., Carreiras M. (2008). Lexical access in Catalan Signed Language (LSC) production. Cognition, 108, 856–865. 10.1016/j.cognition.2008.05.012

- Belke E. (2013). Long-lasting inhibitory semantic context effects on object naming are necessarily conceptually mediated: Implications for models of lexical-semantic encoding. Journal of Memory and Language, 69, 228–256. 10.1016/j.jml.2013.05.008

- Brentari D. (1998). A prosodic model of sign language phonology. Cambridge, MA: MIT Press.

- Brown A. S. (1981). Inhibition in cued retrieval. Journal of Experimental Psychology: Human Learning and Memory, 7, 204–215.

- Brown A. S. (1991). A review of the tip-of-the-tongue experience. Psychological Bulletin, 109, 204–223. 10.1037//0033-2909.109.2.204

- Brown A. S. (2012). The tip of the tongue state. New York, NY: Psychology Press.

- Caramazza A. (1997). How many levels of processing are there in lexical access? Cognitive Neuropsychology, 14, 177–208. 10.1080/026432997381664

- Colomé A., Miozzo M. (2010). Which words are activated during bilingual word production? Journal of Experimental Psychology: Learning, Memory, and Cognition, 36, 96–109. 10.1037/a0017677

- Coltheart M., Rastle K., Perry C., Langdon R., Ziegler J. (2001). DRC: A dual route cascaded model of visual word recognition and reading aloud. Psychological Review, 108, 204–256. 10.1037//0033-295X.108.1.204

- Corina D. P., Gutierrez E., Grosvald M. (2014). Sign language production: An overview. In Goldrick M., Ferreira V., Miozzo M. (Eds.), The Oxford handbook of language production (pp. 393–416). New York, NY: Oxford University Press.

- Costa A., Caramazza A., Sebastián-Gallés N. (2000). The cognate facilitation effect: Implications for models of lexical access. Journal of Experimental Psychology: Learning, Memory, and Cognition, 26, 1283–1296. 10.1037/0278-7393.26.5.1283

- Costa A., Heij W. L., Navarrete E. (2006). The dynamics of bilingual lexical access. Bilingualism: Language and Cognition, 9, 137–151. 10.1017/S1366728906002495

- Costa A., Strijkers K., Martin C., Thierry G. (2009). The time course of word retrieval revealed by event-related brain potentials during overt speech. Proceedings of the National Academy of Sciences of the United States of America, 106, 21442–21446. 10.1073/pnas.0908921106

- Damian M. F., Als L. C. (2005). Long-lasting semantic context effects in the spoken production of object names. Journal of Experimental Psychology: Learning, Memory, and Cognition, 31, 1372–1384. 10.1037/0278-7393.31.6.1372

- Damian M. F., Vigliocco G., Levelt W. J. M. (2001). Effects of semantic context in the naming of pictures and words. Cognition, 81, B77–B86. 10.1016/S0010-0277(01)00135-4

- Dijkstra T. (2005). Bilingual visual word recognition and lexical access. In Kroll J. F., De Groot A. M. B. (Eds.), Handbook of bilingualism: Psycholinguistic approaches (pp. 179–201). New York, NY: Oxford University Press.

- Ellis A. W., Young A. W. (1988). Human cognitive neuropsychology. Hove, UK: Lawrence Erlbaum Associates, Ltd.

- Fias W., Reynvoet B., Brysbaert M. (2001). Are Arabic numerals processed as pictures in a Stroop interference task? Psychological Research, 65, 242–249. 10.1007/s004260100064

- Funnell E. (1983). Phonological processes in reading: New evidence from acquired dyslexia. British Journal of Psychology, 74, 159–180.

- Harris M., Coltheart M. (1986). Language processing in children and adults: An introduction. London, UK: Routledge and Kegan Paul.

- Howard D., Nickels L., Coltheart M., Cole-Virtue J. (2006). Cumulative semantic inhibition in picture naming: Experimental and computational studies. Cognition, 100, 464–482. 10.1016/j.cognition.2005.02.006

- Hu Z., Wang W., Liu H., Peng D., Yang Y., Li K., Zhang J.X., Ding G. (2011). Brain activations associated with sign production using word and picture inputs in deaf signers. Brain and Language, 116, 64–70. 10.1016/j.bandl.2010.11.006

- Huttenlocher J., Kubicek L. F. (1983). The source of relatedness effects on naming latency. Journal of Experimental Psychology: Learning, Memory, and Cognition, 9, 486–496. doi.apa.org/journals/xlm/9/3/486.pdf

- Kroll J. F., Stewart E. (1994). Category interference in translation and picture naming: Evidence for asymmetric connections between bilingual memory representations. Journal of Memory and Language, 33, 149–174. 10.1006/jmla.1994.1008

- Kroll J. F., Bobb S. C., Wodniecka Z. (2006). Language selectivity is the exception, not the rule: Arguments against a fixed locus of language selection in bilingual speech. Bilingualism: Language and Cognition, 9, 119–135. 10.1017/S1366728906002483

- Kroll J. F., Michael E., Tokowicz N., Dufour R. (2002). The development of lexical fluency in a second language. Second Language Research, 18, 137–171. 10.1191/0267658302sr201oa

- Kubus O., Villwock A., Morford J. P., Rathmann C. (2014). Word recognition in deaf readers: Cross-language activation of German Sign Language and German. Applied Psycholinguistics, 1–24. 10.1017/S0142716413000520

- Law S. P., Wong W., Chiu K. M. (2005). Preserved reading aloud with semantic deficits: Evidence for a non-semantic lexical route for reading Chinese. Neurocase, 11, 167–175. 10.1080/13554790590944618

- Levelt W. J., Roelofs A., Meyer A. S. (1999). A theory of lexical access in speech production. The Behavioral and Brain Sciences, 22, 1–75. 10.1017/S0140525X99001776

- Liddell S., Johnson R. (1989). American Sign Language: The phonological base. Sign Language Studies, 64, 197–277.

- Marian V., Spivey M. (2003). Competing activation in bilingual language processing: Within- and between-language competition. Bilingualism: Language and Cognition, 6, 97–115. 10.1017/S1366728903001068

- Morford J. P., Kroll J. F., Piñar P., Wilkinson E. (2014). Bilingual word recognition in deaf and hearing signers: Effects of proficiency and language dominance on cross-language activation. Second Language Research, 30, 251–271. 10.1177/0267658313503467

- Morford J. P., Wilkinson E., Villwock A., Piñar P., Kroll J. F. (2011). When deaf signers read English: Do written words activate their sign translations? Cognition, 118, 286–292. 10.1016/j.cognition.2010.11.006

- Morton J. (1980). The Logogen model and orthographic structure. In Frith U. (Ed.), Cognitive processes in spelling (pp. 117–133). London: Academic Press.

- Navarrete E., Del Prato P., Peressotti F., Mahon B. Z. (2014). Lexical retrieval is not by competition: Evidence from the blocked naming paradigm. Journal of Memory and Language, 76, 253–272. 10.1016/j.jml.2014.05.003

- Navarrete E., Del Prato P., Mahon B. Z. (2012). Factors determining semantic facilitation and interference in the cyclic naming paradigm. Frontiers in Psychology, 3, 38. 10.3389/fpsyg.2012.00038

- Navarrete E., Mahon B. Z., Caramazza A. (2010). The cumulative semantic cost does not reflect lexical selection by competition. Acta Psychologica, 134, 279–289. 10.1016/j.actpsy.2010.02.009

- Oppenheim G. M., Dell G. S., Schwartz M. F. (2007). Cumulative semantic interference as learning. Brain and Language, 103, 175–176. 10.1016/j.bandl.2007.07.102

- Oppenheim G. M., Dell G. S., Schwartz M. F. (2010). The dark side of incremental learning: A model of cumulative semantic interference during lexical access in speech production. Cognition, 114, 227–252. 10.1016/j.cognition.2009.09.007

- Ormel E., Hermans D., Knoors H., Verhoeven L. (2012). Cross-language effects in written word recognition: The case of bilingual deaf children. Bilingualism: Language and Cognition, 15, 288–303. 10.1017/S1366728911000319

- Peressotti F., Job R. (2003). Reading aloud: Dissociating the semantic pathway from the non-semantic pathway of the lexical route. Reading and Writing, 16, 179–194. 10.1023/A:1022843209384

- Perry C., Ziegler J. C., Zorzi M. (2007). Nested incremental modeling in the development of computational theories: The CDP+ model of reading aloud. Psychological Review, 114, 273–315. 10.1037/0033-295X.114.2.273

- Piñar P., Dussias P. E., Morford J. P. (2011). Deaf readers as bilinguals: An examination of deaf readers’ print comprehension in light of current advances in bilingualism and second language processing. Language and Linguistics Compass, 5, 691–704. 10.1111/j.1749-818x.2011.00307.x

- Roelofs A. (1992). A spreading-activation theory of lemma retrieval in speaking. Cognition, 42, 107–142. 10.1016/0010-0277(92)90041-F

- Runnqvist E., Strijkers K., Alario F. X., Costa A. (2012). Cumulative semantic interference is blind to language: Implications for models of bilingual speech production. Journal of Memory and Language, 66, 850–869. 10.1016/j.jml.2012.02.007

- Sartori G., Masterson J., Job R. (1987). Direct-route reading and the locus of lexical decision. In Coltheart M., Sartori G., Job R. (Eds.), The cognitive neuropsychology of language (pp. 59–77). Hillsdale, NJ: Lawrence Erlbaum Associates.

- Shook A., Marian V. (2009). Language processing in bimodal bilinguals. In Caldwell E. (Ed.), Bilinguals: Cognition, education and language processing (pp. 35–64). Hauppauge, NY: Nova Science Publishers.

- Shook A., Marian V. (2012). Bimodal bilinguals co-activate both languages during spoken comprehension. Cognition, 124, 314–324. 10.1016/j.cognition.2012.05.014

- Spalek K., Damian M. F., Bölte J. (2013). Is lexical selection in spoken word production competitive? Introduction to the special issue on lexical competition in language production. Language and Cognitive Processes, 28, 597–614. 10.1080/01690965.2012.718088

- Stokoe W. (1991). Semantic phonology. Sign Language Studies, 71, 107–114.

- Thompson R., Emmorey K., Gollan T. H. (2005). “Tip of the fingers” experiences by deaf signers: Insights into the organization of a sign-based lexicon. Psychological Science, 16, 856–860. 10.1111/j.1467-9280.2005.01626.x

- Thompson-Schill S. L., D’Esposito M., Aguirre G. K., Farah M. J. (1997). Role of left inferior prefrontal cortex in retrieval of semantic knowledge: A reevaluation. Proceedings of the National Academy of Sciences of the United States of America, 94, 14792–14797. 10.1073/pnas.94.26.14792

- Vinson D. P., Thompson R. L., Skinner R., Fox N., Vigliocco G. (2010). The hands and mouth do not always slip together in British sign language: Dissociating articulatory channels in the lexicon. Psychological Science, 21, 1158–1167. 10.1177/0956797610377340

- Vitkovitch M., Cooper E. (2012). My word! Interference from reading object names implies a role for competition during picture name retrieval. Quarterly Journal of Experimental Psychology, 65, 1229–1240. 10.1080/17470218.2012.655699

- Vitkovitch M., Humphreys G. W. (1991). Perseverant naming errors in speeded picture naming: It’s in the links. Journal of Experimental Psychology: Learning, Memory, and Cognition, 17, 664–680.

- Vitkovitch M., Cooper-Pye E., Ali L. (2010). The long and the short of it! Naming a set of prime words before a set of related picture targets at two different intertrial intervals. European Journal of Cognitive Psychology, 22, 161–171. 10.1080/09541440902743348

- Wheeldon L. R., Monsell S. (1994). Inhibition of spoken word production by priming a semantic competitor. Journal of Memory and Language, 33, 332–356. 10.1006/jmla.1994.1016