Abstract

Humans use shading as a cue to three-dimensional form by combining low-level information about light intensity with high-level knowledge about objects and the environment. Here, we examine how cuttlefish Sepia officinalis respond to light and shadow to shade the white square (WS) feature in their body pattern. Cuttlefish display the WS in the presence of pebble-like objects, and they can shade it to render the appearance of surface curvature to a human observer, which might benefit camouflage. Here we test how they colour the WS on visual backgrounds containing two-dimensional circular stimuli, some of which were shaded to suggest surface curvature, whereas others were uniformly coloured or divided into dark and light semicircles. WS shading, measured by lateral asymmetry, was greatest when the animal rested on a background of shaded circles and three-dimensional hemispheres, and less on plain white circles or black/white semicircles. In addition, shading was enhanced when light fell from the lighter side of the shaded stimulus, as expected for real convex surfaces. Thus, the cuttlefish acts as if it perceives surface curvature from shading, and takes account of the direction of illumination. However, the direction of WS shading is insensitive to the directions of background shading and illumination; instead the cuttlefish tend to turn to face the light source.

Keywords: cephalopod, visual depth, animal vision, visual ecology, visual cognition

1. Introduction

To add a third dimension to the two-dimensional retinal image, humans use stereopsis, motion parallax and also pictorial cues [1]. These different types of information are processed in separate cortical pathways, which are then integrated to give a single representation of depth [2]. Pictorial cues are so called because artists use them to give the illusion of depth on a flat canvas; they include shadows, shading, specular highlights and occlusion, but they are ambiguous. For example, our inferences about shape from shading assume that intensity changes are caused by differences in illumination rather than reflection, and that illumination is from a single source (figure 1) [3]. The rules for interpreting pictorial cues are grounded in physical probability, but their implementation requires a combination of long-range, viewer-informed analysis with low-level retinal input. Such processes are considered by some to be cortical and cognitive [4], and hence may be impossible for non-human animals. In contrast, Marr [5] argued that any visual system, be it biological or machine, should work on similar principles, being largely scene-based, object-centred and bottom-up, with internal representations tailored to visual ecology.

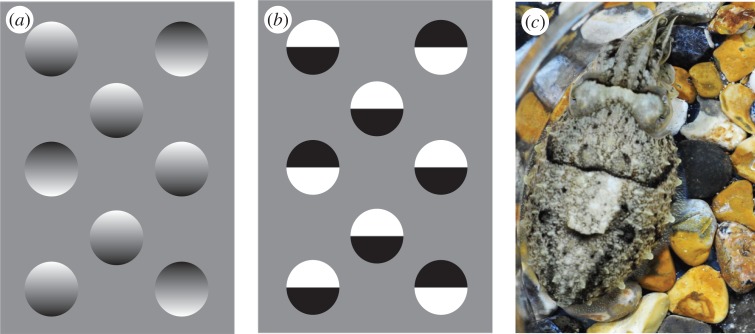

Figure 1.

Gradient shading creates an illusion of depth to human viewers. (a) Tokens look concave or convex depending on the shading. We generally assume light comes from above, but can switch this percept. However, we cannot see all tokens in the same form simultaneously, which Ramachandran [3] terms the common light source rule. (b) This sense of depth is lost in tokens where light and dark areas are distinct. (c) A cuttlefish showing asymmetrical shading of the white square (WS) component. (Online version in colour.)

Most studies of depth perception in non-human animals concern stereopsis and motion parallax [6], but there is evidence that pigeons use pictorial cues, including occlusion, size and texture density, to determine the relative distances of objects in a scene [7]. Cook et al. [8] found that pigeons can distinguish convex and concave surfaces defined by shading, but for a human viewer the three-dimensional relief seen in the two-dimensional stimuli used does not depend solely on shading, and it is not clear how the birds made this discrimination. To the best of our knowledge, no study has investigated how a non-human animal takes account of the direction of illumination. Here, we investigate how the European cuttlefish Sepia officinalis uses shading to sense pictorial depth, and hence to shade its own coloration pattern. How does the depth perception of this cephalopod mollusc, with no cerebral cortex, compare with our own ability to derive shape from shading (figure 1) [3]?

Cuttlefish, along with other cephalopods, offer a unique approach to biological vision through their ability to rapidly change their appearance for camouflage and communication [9–12]: they can display a wide range of body patterns, and their choice of pattern reports what the animal sees. Cuttlefish have excellent vision, with large eyes giving a near 360° view of the substrate [13], and visually driven camouflage as a response to the background has been used to investigate visual edge detection, texture perception and object recognition [11,12,14,15].

The cuttlefish coordinate the expression of some 40 discrete visual features on the skin, known as behavioural components, to produce a number of distinct (though variable) body patterns [16]. In particular, on backgrounds containing discrete objects such as pebbles or printed circles cuttlefish often display disruptive body patterns (figure 1c) [14], which often include the white square (WS) component (figure 1c). Consistent with its cryptic function, the WS is displayed when the background contains pebbles or printed white circles of about the same size as the square itself (approx. 10 mm across for the animals here) [12,14,16–20]. The WS is also expressed in the presence of edge fragments or features defined by texture differences [11,19]. This implies that cuttlefish do not simply match the physical stimulus on their retina (e.g. light patches), but use multiple visual cues to infer the nature of the background, much as humans do for finding and recognizing objects [1,5].

Depth perception is used to measure the distance to objects, and also for interpreting three-dimensional shape. Cuttlefish may well have veridical depth perception, based on stereopsis and/or motion parallax. They probably use stereopsis (based on vergence angle of the eyes) to measure the range of prey before a strike [20], and can distinguish real pebble substrates from photographs that offer near-identical pictorial cues [10]. Evidence for the use of pictorial depth cues is seen in cuttlefishes' ability to recognize contour fragments as belonging to single larger (occluded) objects [19], and to sense texture gradients [21]. In addition, on light/dark checkerboard backgrounds, real visual depth (i.e. with one set of squares lying above the other) enhances the expression of disruptive patterns when the light squares lie above the dark, but not vice versa [10]. This finding suggests that the cuttlefish combine direct measurement of depth (perhaps by stereopsis or motion parallax) with the rule that light surfaces lie in front of dark surfaces.

Cuttlefish not only sense depth; they also asymmetrically colour the WS [22,23], which, at least for the human eye, produces pictorial shading, giving a sense of three-dimensional relief and making the WS appear convex when physically it is almost flat (figure 1c) [11,22,23]. Although we cannot be sure how a natural adversary (such as a predatory fish) would see it, the shading could enhance camouflage, either by allowing the WS to resemble a pebble in the background, or by disrupting the planar surface of the mantle and hence obscuring the animal's three-dimensional shape. Regardless of its particular function, this remarkable ability allows us to investigate how cuttlefish see shape from shading. Here, we compare the coloration of the WS produced when cuttlefish settle on a substrate containing three-dimensional hemispheres to that on a range of two-dimensional test patterns (figure 2), which are similar to those used by Ramachandran [3] to study how humans interpret pictorial shading. We also tested how the animals combined illumination with pictorial cues in three illumination scenarios: (i) light from the side congruent with the shading cues for a convex surface; (ii) light from the side incongruent with the background stimulus; and (iii) light from both sides.

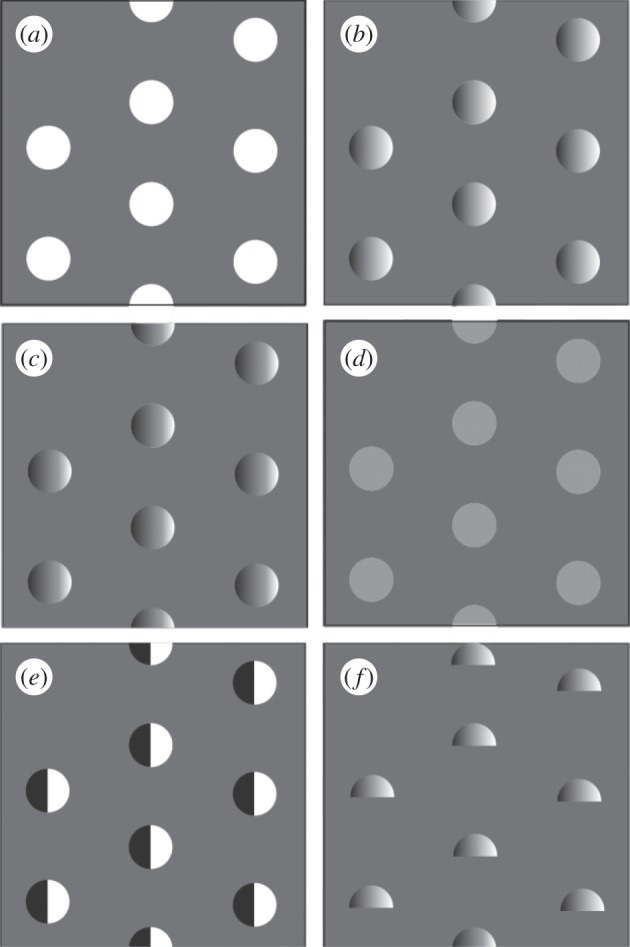

Figure 2.

The six test stimuli, which were placed on a background of 25% reflectance (75% grey). (a) White circles: nominal reflectance 100%. (b) Gradient A: shaded from white to black with 50% grey lying in the centre and a mean of 50% grey. (c) Gradient B: same as gradient A but with 75% grey in the centre and a mean of 75% grey. (d) Low-contrast circles: with reflectance of 50%. (e) Semicircles: circles composed of half white and half black. (f) Three-dimensional objects: hemispheres made of white Plasticine. All stimuli were 24 mm in diameter. A negative control of uniform grey of 25% reflectance is not shown.

2. Material and methods

(a). Animals and experimental setup

We tested 10 juvenile cuttlefish aged eight to nine months with mantle lengths 70 ± 5 mm. Juveniles are used in most studies of this kind for practical reasons, not because in this context (to the best of our knowledge) their visual behaviour differs from adults. The animals were kept in purpose-built research facilities at the Sea Life Brighton aquarium, and housed together in a large (100 × 50 × 75 cm) continuous-flow tank. Experiments were conducted in a 90 × 75 cm filming tank 15 cm deep with seawater, which was refreshed for each animal. Responses of all 10 animals (taken in a random order) to a single stimulus were assayed on a single day. After testing, each animal was placed in a temporary holding tank (35 × 25 cm) until all 10 had been assayed, at which point they were returned to the main holding tank. The filming tank was housed within black-painted metal walls with a large mirror fixed at 45° and a small opening in the front to enable animals to be photographed without disturbance. Photographs were taken with a Nikon D700 digital SLR camera. Animals were placed in a circular, transparent Perspex test arena with a diameter of 25 cm, which was placed on test backgrounds with the stimuli extending 25 cm beyond the arena walls, so that the animal was always surrounded by the stimulus pattern. Animals were transferred to the arena and left for 15 min or longer, until they had settled on the background and were producing a stable body pattern. Three images were taken (in tiff format) of the animal with the surrounding arena at 5 min intervals.

(b). Stimuli

There were seven test backgrounds (figure 2), as follows. Negative control (not shown): a uniform 75% grey background (where 0% is white and 100% is black). White circles (figure 2a): circles of an area that would elicit a strong WS response in the cuttlefish based on their size [17], with a diameter of 24 mm. Gradient A (figure 2b): circles of the same area as for white circles, but with a gradient of shading from white to black with 50% grey lying in the centre and a mean of 50% grey. Gradient B (figure 2c): as for gradient A but with 75% grey in the centre and a mean of 75% grey. Low-contrast circles (figure 2d): circles of the same area as white circles, but low contrast with the background at 50% grey, having the same contrast as the mean of gradient A. Semicircles (figure 2e): circles composed of half white and half black with no gradient (this step edge does not result in illusory depth to human viewers; figure 1). Three-dimensional objects (figure 2f): hemispheres made of white Plasticine with the same base diameter as the circles in the previous stimuli, which were arranged on a uniform 75% grey background in the same number, separation and pattern as the printed circles. All two-dimensional backgrounds were designed using Adobe Illustrator, printed onto white heavyweight paper and laminated with matte laminator pouches. Backgrounds measured 50 × 50 cm.

(c). Illumination

The test arena was lit by either one or two Joby Gorillapod LED lights covered with a layer of white felt to act as a diffuser. The internal light metering system of the SLR camera was checked regularly to ensure constant light levels. Lights were positioned at a 45° angle relative to the arena, 25 cm from the bottom of the test tank and 35 cm from the arena. Backgrounds were each tested under three lighting scenarios: (i) lighting congruent with configuration of light/dark areas for gradient and semicircle backgrounds (this scenario was also used for the other backgrounds as a control); (ii) lighting incongruent with configuration of light/dark areas for gradient and semicircle backgrounds (this scenario was also used for the other backgrounds as a control); and (iii) background lit evenly from both directions. The orientation of either background or lighting was changed between animals to pseudo-randomize the absolute direction of the light. For lighting scenario (iii), where the background was lit from both sides, light intensity was adjusted to give the same overall intensity as for (i) and (ii). This resulted in a total of 18 treatments.

(d). Image analysis

Three images of each animal were taken in tiff format at 5, 10 and 15 min after the start of each treatment, resulting in 630 images. After checking for consistency over time, images taken at 15 min were used in analysis, resulting in 210 images. These were pre-processed manually in Adobe Photoshop: images were each rotated so that the animal faced left and the body axis was horizontal, and the image area containing the animal's mantle was then cropped, with this area constant throughout the image set. These images were retained as tiffs, and files were renamed and reordered using a random number generator to randomize for animal and treatment, and to remove bias in the analysis.
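The renaming step can be illustrated with a minimal sketch. It is not the procedure the authors ran: the file names, the `blind_filenames` helper and the seed are hypothetical, and the only detail taken from the text is that 210 images were shuffled and renamed so that animal and treatment could not be read from the file name.

```python
import random


def blind_filenames(filenames, seed):
    """Shuffle file names and assign neutral codes.

    Returns (coded_names_in_presentation_order, key), where key maps each
    coded name back to the original file name for un-blinding later.
    """
    rng = random.Random(seed)      # fixed seed (assumption) so the key is reproducible
    shuffled = list(filenames)
    rng.shuffle(shuffled)          # random presentation order
    width = len(str(len(shuffled)))
    key = {}
    for index, original in enumerate(shuffled, start=1):
        code = f"img_{index:0{width}d}.tif"   # neutral name with no animal/treatment information
        key[code] = original
    return list(key), key


# Hypothetical original names; the real naming scheme is not given in the text.
originals = [f"original_{i:03d}.tif" for i in range(210)]
order, key = blind_filenames(originals, seed=2012)
```

Keeping the `key` dictionary separate from the scored image set is what allows the scores to be reassigned to animals and treatments once scoring is finished.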

(e). White square presence

The presence or absence of the WS body pattern component was scored by a single observer (S.Z.) for the entire randomized mantle image set. Where any WS defining boundary was discernable, it was classified as present; where no WS characteristics were discernable, it was classified as absent.

(f). White square asymmetry

A total of 144 images showed the WS component. To measure the degree of asymmetry between each WS half, we calculated the Jaccard distance (JD), which is commonly used to assess similarities/differences in binary matrices [24,25]. To do this, the cropped mantle image files were processed using a MATLAB script [26]: images were thresholded using Bradley adaptive thresholding (figure 3b); two abutting rectangles a and b (constant area, 100 × 50 pixels) were positioned manually to sample each side of the animal's WS (figure 3b); matrix b was transposed along the horizontal axis to match the position of matrix a. This was repeated three times for each image with randomization of presentation to allow for error in manually positioning the rectangles through the mid axis of the WS. The JD was calculated for each pair of rectangles. Data were then reassigned to original animals/treatments and a mean was taken of the three values.
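As an illustration of the measure, the following minimal sketch computes a Jaccard distance between two small binary blocks. It is not the authors' MATLAB script [26]. It assumes that the blocks have already been thresholded, that a value of 1 marks a light pixel, and that "transposed along the horizontal axis" means mirroring block b top-to-bottom so that it overlays block a; all function names are hypothetical.

```python
def mirror_about_horizontal_axis(block):
    """Flip a binary block top-to-bottom (assumed meaning of the mirroring step)."""
    return block[::-1]


def jaccard_distance(a, b):
    """Jaccard distance between two equal-sized binary blocks (lists of rows of 0/1)."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(a, b):
        for pixel_a, pixel_b in zip(row_a, row_b):
            if pixel_a and pixel_b:
                intersection += 1
            if pixel_a or pixel_b:
                union += 1
    if union == 0:
        return 0.0                 # two empty blocks are treated as identical
    return 1.0 - intersection / union


def mean_jd(repeats):
    """Mean JD over repeated manual placements of the (a, b) rectangle pair."""
    distances = [jaccard_distance(a, mirror_about_horizontal_axis(b)) for a, b in repeats]
    return sum(distances) / len(distances)


# Toy 2 x 3 blocks standing in for the 100 x 50 pixel rectangles.
rect_a = [[1, 1, 0],
          [0, 0, 0]]
rect_b = [[0, 0, 0],
          [1, 1, 1]]
jd = jaccard_distance(rect_a, mirror_about_horizontal_axis(rect_b))
```

In this toy case the mirrored blocks share two light pixels out of three in their union, so the distance is 1 − 2/3 ≈ 0.33. A perfectly symmetrical WS would give 0.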

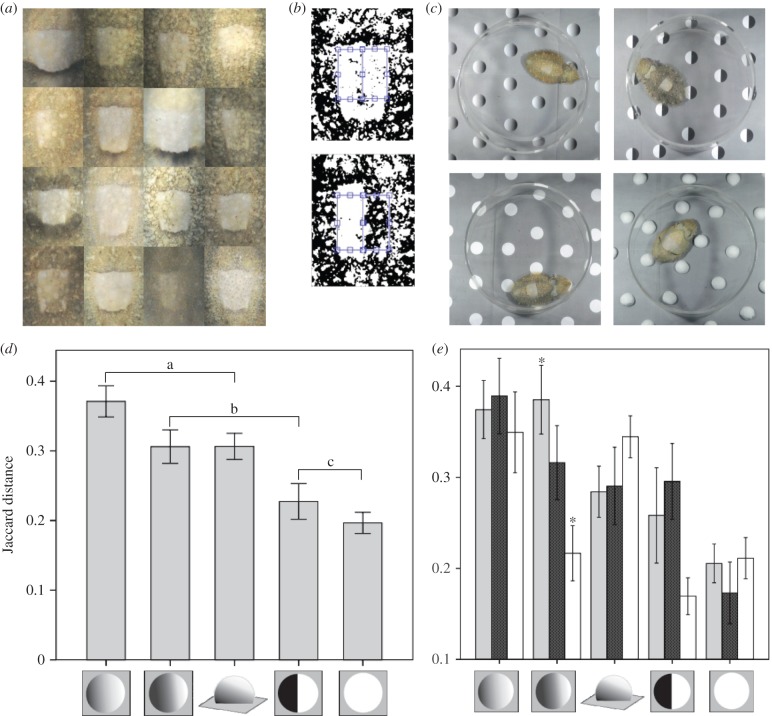

Figure 3.

(a) Some of the range of observed WS shading responses across all treatments. (b) Two examples of thresholded WS regions with little shading (top) and heavily asymmetrical shading (bottom), with blue rectangles showing areas compared. (c) Examples of typical responses to four of the test stimuli (note the dark posterior mantle bar on the animal with real hemispheres). (d) Mean difference in WS shading between halves as determined by JD for the stimuli shown. Brackets show homogeneous subgroups determined by Tukey's HSD post hoc tests following univariate analysis of variance. Error bars show standard error. (e) Mean difference in WS shading between halves as determined by JD for the stimuli showing the effects of different illumination conditions. Light grey bars: direction of illumination congruent with shading where appropriate; stippled bars: direction of illumination incongruent with shading cues where appropriate; white bars: illumination from both directions. Asterisks indicate significant difference between conditions determined by Tukey's post hoc pairwise comparisons. Error bars show 1 standard error. (Online version in colour.)

Statistical analysis was done with SPSS [27]. Data were normally distributed (Kolmogorov–Smirnov, p = 0.06), so generalized linear modelling univariate analysis was used to test for interactions between the two factors (stimulus and illumination) on the JD, with Bonferroni-adjusted pairwise comparisons to identify where significance lay.

It was not practical to score the level of expression of the WS independently of asymmetry, but the comparatively low levels of asymmetry in response to the black and white semicircles, which gave the weakest WS response, imply that asymmetry is not a correlate of weak expression.

(g). Orientation

In all original images of the test arena, we measured body orientation relative to a chosen point (the position of the ‘congruent’ light) over 360° using the MB-Ruler program. For statistical analysis, we used absolute angles from the standard illumination point over 180° to avoid the problem of high and low values being similar in reality. Statistical analysis was done with SPSS; data were not normally distributed (Kolmogorov–Smirnov test). Because this analysis was an unplanned addition to the experiment, we did not include these data in the overall analysis, but tested for interactions of orientation with stimulus and illumination using generalized linear mixed modelling with a gamma distribution, log-link function and sequential Bonferroni adjustment for pairwise comparisons. We used Spearman's rank test to test for a correlation between WS asymmetry and body orientation.
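The conversion from a 360° bearing to an absolute angle over 180° can be sketched as follows. This is one plausible reading of the step, not the authors' procedure, and the function name `fold_angle` is hypothetical.

```python
def fold_angle(angle_deg):
    """Map a bearing measured over 0-360 degrees to an absolute deviation in [0, 180].

    Bearings of 10 and 350 degrees are both 10 degrees away from the reference
    point, so they fold to the same value.
    """
    wrapped = angle_deg % 360.0
    return min(wrapped, 360.0 - wrapped)


# Invented bearings relative to the 'congruent' light position.
bearings = [10.0, 350.0, 180.0, 270.0]
folded = [fold_angle(b) for b in bearings]   # [10.0, 10.0, 180.0, 90.0]
```

Folding removes the wrap-around at 0°/360°, which would otherwise make an animal facing just left of the light look maximally different from one facing just right of it.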


3. Results

Ten juvenile cuttlefish were tested on seven types of visual background (figure 2, and a uniform grey). Three illumination conditions were used: from left or right (thus congruent or incongruent with shading in the case of asymmetrical stimuli), or from both sides, giving a total of 210 trials. We recorded presence or absence of the WS component, WS asymmetry and body orientation relative to the illumination and the background.

(a). White square presence

A uniform grey background (stimulus 5; negative control) invariably elicited a uniform body pattern with no WS [16], whereas low-contrast circles did so in 73% of tests. These responses are expected from previous studies [11,15], and as the WS was absent, the responses were removed from subsequent analysis of WS asymmetry. Responses to black and white semicircles were more interesting. When the illumination was congruent with the stimulus (i.e. from the light side), all 10 animals displayed a WS, whereas when illumination was incongruent with the stimulus six gave a uniform body pattern. Thus, there is a significant effect of illumination direction on WS expression (χ2; p = 0.0143; two-tailed), consistent with the animal seeing the stimulus pattern as a convex surface lit from the side. The WS component was expressed in all tests with high-contrast white circles, shaded circles and the hemispheres.

Interestingly, in 65% of cases where the WS was present the right side of the square was darker than the left (binomial test p = 0.044), which is reminiscent of other observations of lateralization in cephalopod behaviour [25,28]. As the animals were housed together, we were unable to assess whether the direction of lateralization was animal-specific, but a previous study found that a single animal could shade either left or right halves of the WS [22].

(b). White square asymmetry

Left–right asymmetry was measured by the JD [29,30] between each half of the WS, for all images where the WS was present (n = 144). The JD is the difference in light and dark areas between the two halves of the WS, and is objective, robust and simple to use. With this measure, gradient A (figures 2b and 3d) gave the greatest asymmetry (mean JD 0.371 ± 0.122), and the lowest was from the white circles (mean JD 0.197 ± 0.083). Generalized linear modelling univariate analysis revealed a highly significant effect of stimulus (d.f. = 4, F = 10.76, p < 0.001), no overall effect of illumination condition (d.f. = 2, F = 2.125, p = 0.124), and a significant interaction between illumination and stimulus (d.f. = 8, F = 2.343, p = 0.022).

Tukey's post hoc tests showed significantly greater asymmetry in responses to gradient A than in responses to either the white circles or the semicircles. There were no significant differences between the responses to gradient A and gradient B (p = 0.155), nor to the three-dimensional objects (p = 0.160). Responses to white circles and semicircles (if the WS was expressed) did not differ significantly (p = 0.843). There were no significant differences in responses to gradient B and semicircles (p = 0.075), nor to three-dimensional objects (p > 0.5), with the former statistic probably owing to the animals' sensitivity to the light direction for both gradient B and the semicircles (figure 3e). Specifically, post hoc tests for the effects of the interaction between the illumination condition and stimulus found that for gradient B, illumination congruent with shading cues resulted in significantly greater asymmetry compared with bi-directional illumination (p = 0.008). With incongruent illumination, the shading asymmetry did not significantly differ from either the congruent or bi-directional illumination (figure 3e). Together, these observations suggest that cuttlefish use pictorial shading of background features and the direction of illumination as cues for WS expression and shading.


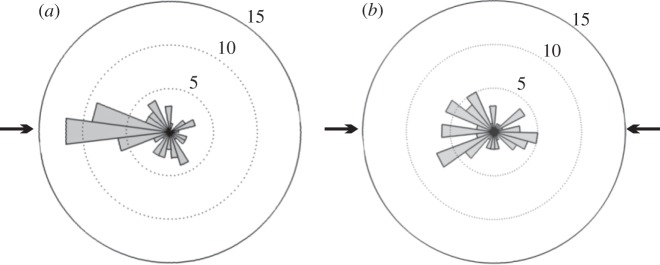

(c). Body orientation with respect to the illumination

One might expect cuttlefish to orient the shading of the WS with respect to the light source, so as to enhance the impression of convexity, but in fact there was no correlation between WS asymmetry and the animals' orientation relative to the light source (rs(142) = −0.050, p = 0.55). Unexpectedly, however, cuttlefish tend to face the light sources (figure 4). We tested whether there was an interaction between this orientation response and the background or illumination conditions. Generalized linear mixed models found no significant effect of stimulus or illumination condition per se on orientation (F = 0.882 (4, 129), p = 0.477 and F = 2.044 (2, 129), p = 0.134, respectively), but they did reveal a significant interaction between stimulus and illumination (F = 2.768 (8, 129), p = 0.007). Specifically, pairwise contrasts show significantly reduced orientation to illumination in the case of gradient A when illumination was incongruent with the stimulus compared with when the illumination was congruent (adjusted p = 0.008) or bidirectional (adjusted p = 0.040).
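For readers unfamiliar with the rank correlation reported above, the following minimal sketch shows how a Spearman coefficient can be computed as a Pearson correlation on average ranks. It is not the SPSS procedure used in the study, the function names are hypothetical, and the data are invented for illustration. The 142 degrees of freedom quoted above correspond to n − 2 for the 144 images in which the WS was present.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Invented values: WS asymmetry (JD) and folded body orientation (degrees).
asymmetry = [0.20, 0.35, 0.35, 0.41, 0.18]
orientation = [15.0, 120.0, 40.0, 95.0, 60.0]
r_s = spearman(asymmetry, orientation)
```

A coefficient near zero, as reported in the text, indicates that the rank order of WS asymmetry carries essentially no information about the rank order of body orientation.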

Figure 4.

Examples of animal body orientation relative to direction of light, showing the influence of light direction. (a) Mean body orientation for all animals on all stimuli where lighting is from a single direction. (b) Mean body orientation for animals in response to the same stimuli, but where illumination is from both directions. Arrows indicate direction of illumination in both cases, numbers indicate number of incidences and bars indicate animals with heads positioned towards that direction.

4. Discussion

Asymmetry between the left and right sides in body patterns is well known in cephalopod communication signals; for example, male Sepia apama use unilateral moving bands of dark chromatophores in courtship displays and agonistic contests [31,32]. However, shading of the WS (figures 1 and 2) may be the sole use of asymmetry in a cuttlefish camouflage pattern [23]. Human viewers naturally tend to see a convex surface rather than a shaded pattern, and it may be that this is how the shading works to prevent detection by predatory fish. Compared with plain white circles, Sepia accentuates the shading in response to white hemispheres and also to shaded two-dimensional circles (figures 1–3). It is noteworthy that uniform circles of the same average contrast as the shaded stimuli failed to elicit a WS in 73% of tests, and instead the cuttlefish produced a uniform body pattern [16], which suggests that the animals see the shaded circles as discrete three-dimensional objects [11,15]. Evidence that the animals are not simply matching the intensity distribution on the surface is given by the observation that the asymmetry displayed in response to the black/white semicircle stimulus was no greater than to an unshaded white circle (figure 3d). Moreover, for the black/white semicircles, the animals were more likely to express the WS, rather than a uniform coloration pattern, when illumination was congruent with the white side of the pattern than when it was incongruent or bi-directional.

The implication that cuttlefish use pictorial shading to fine-tune camouflage is reinforced by the observation that they are sensitive to the direction of illumination. As mentioned above, for black/white semicircles they are more likely to express the WS with congruent than with incongruent illumination (i.e. light falling from the direction of the light semicircle). For gradient B, the shading (i.e. asymmetry) of the WS is enhanced when the illumination is from the lighter side of the stimuli, as expected for a convex surface, compared with bi-directional illumination (figure 3e). Illumination direction did not affect the WS asymmetry for gradient A, which gave the strongest overall asymmetry. This evidence suggests that shading dominates directional lighting cues, but that pictorial and illumination cues interact under certain conditions, so the cuttlefish is more likely to see a lightness gradient as being due to a shaded convex surface if the shading is consistent with the illumination. The cuttlefish do not, however, shade their pattern consistent with the illumination, but rather tend to turn to face the light source (figure 4a).

The fact that WS asymmetry in response to three-dimensional hemispheres was the same as that in response to the two two-dimensional gradient stimuli does not mean that the two-dimensional and three-dimensional stimuli look identical to the cuttlefish, as the overall body patterns differed. For example, they often displayed a posterior transverse mantle bar in combination with an overall paling of the body in response to the hemispheres, but not to the two-dimensional stimuli (figure 3c) [16]. This might be due to a mismatch between the angle/intensity of the illumination conditions and the pictorial cues in the two-dimensional objects, but it is more likely that the animals sense intrinsic differences between two- and three-dimensional stimuli. One interesting possibility is that different cues to depth and surface relief affect separate visuomotor responses, which are manifest in the different components of the animals' body pattern (e.g. the WS versus the posterior mantle bar), but further work is required to understand the fascinating question of how cuttlefish integrate multiple cues to depth and form to produce camouflage [10,33].

(a). Pictorial depth and aquatic camouflage

Lateral asymmetry in our experiments is substantial, up to a mean of 37% (figure 3d). How the asymmetry is deployed in nature and how it is perceived by fish are matters for further study. The evidence here is however consistent with the hypothesis that cuttlefish, and presumably the animals that prey upon them, use pictorial depth cues in visual images much like humans [3]. Nonetheless, the response is not ‘perfect’ in that although they are sensitive to the direction of illumination in their response to shaded objects the animals do not shade the WS consistent with the illumination.

In fact, it would not be surprising if there were differences between humans and cuttlefish owing to attributes of their respective visual environments. European cuttlefish live over a range of depths in coastal waters from less than 2 m. In clear coastal water, shadows and relief are readily visible down to at least 10–15 m (S.J. 2000, personal observation). By comparison, owing to the high refractive index of water, specular highlights are less prominent than in air. In addition, lighting geometry is different above and below the water surface. On land, the sun moves across the sky, whereas in water, directional illumination is from above through Snell's window, and there is much diffuse light from scatter by suspended particles, meaning that pictorial relief will rapidly lose directionality over distance. However, where water is shallow and clear the cuttlefish need to distinguish between general visual texture and true relief cues to ensure good camouflage, and so combining illumination with pictorial shading could be crucial in providing protection from visual predators.

Ethics

This work was carried out in 2012 in accordance with Sea Life Centre guidelines under ethical approval from the Sea Life Centre (Merlin Entertainments).

Authors' contributions

S.Z. conceived, designed and carried out the experiments, carried out the data analysis and drafted the manuscript; D.O. helped plan experiments and draft the manuscript; S.J. coordinated the study and helped draft the manuscript. All authors gave final approval for publication.

Competing interests

We have no competing interests.

Funding

S.Z. and S.J. were supported by Office of Naval Research MURI grant no. N00014-09-1-1053.

References

- 1.Bruce V, Green PR, Georgeson MA. 1996. Visual perception: physiology, psychology and ecology, 3rd edn Hove, UK: Psychology Press. [Google Scholar]

- 2.Welchman AE, Deubelius A, Conrad V, Bülthoff HH, Kourtzi Z. 2005. 3D shape perception from combined depth cues in human visual cortex. Nat. Neurosci. 8, 820–827. ( 10.1038/nn1461) [DOI] [PubMed] [Google Scholar]

- 3.Ramachandran VS. 1988. Perception of shape from shading. Nature 331, 163–166. ( 10.1038/331163a0) [DOI] [PubMed] [Google Scholar]

- 4.Gerardin P, Kourtzi Z, Mamassian P. 2010. Prior knowledge of illumination for 3D perception in the human brain. Proc. Natl Acad. Sci. USA 107, 16 309–16 314. ( 10.1073/pnas.1006285107) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Marr D. 1982. Vision: a computational investigation into the human representation and processing of visual information. New York, NY: Henry Holt and Co. [Google Scholar]

- 6. Kral K. 2003. Behavioural–analytical studies of the role of head movements in depth perception in insects, birds and mammals. Behav. Process. 64, 1–12. (doi:10.1016/S0376-6357(03)00054-8)

- 7. Cavoto BR, Cook RG. 2006. The contribution of monocular depth cues to scene perception by pigeons. Psychol. Sci. 17, 628–634. (doi:10.1111/j.1467-9280.2006.01755.x)

- 8. Cook RG, Qadri MA, Kieres A, Commons-Miller N. 2012. Shape from shading in pigeons. Cognition 124, 284–303. (doi:10.1016/j.cognition.2012.05.007)

- 9. Chiao C-C, Hanlon RT. 2001. Cuttlefish camouflage: visual perception of size, contrast and number of white squares on artificial checkerboard substrata initiates disruptive coloration. J. Exp. Biol. 204, 2119–2125.

- 10. Kelman EJ, Osorio D, Baddeley R. 2008. Review on sensory neuroethology of Cuttlefish camouflage and visual object recognition. J. Exp. Biol. 211, 1757–1763. (doi:10.1242/jeb.015149)

- 11. Zylinski S, Osorio D, Shohet AJ. 2009. Perception of edges and visual texture in the camouflage of the common cuttlefish, Sepia officinalis. Phil. Trans. R. Soc. B 364, 439–448. (doi:10.1098/rstb.2008.0264)

- 12. Chiao CC, Chubb C, Hanlon RT. 2015. A review of visual perception mechanisms that regulate rapid adaptive camouflage in cuttlefish. J. Comp. Physiol. A 201, 933–945. (doi:10.1007/s00359-015-0988-5)

- 13. Mäthger LM, Hanlon RT, Håkansson J, Nilsson D-E. 2013. The W-shaped pupil in cuttlefish (Sepia officinalis): functions for improving horizontal vision. Vision Res. 83, 19–24. (doi:10.1016/j.visres.2013.02.016)

- 14. Kelman EJ, Baddeley RJ, Shohet AJ, Osorio D. 2007. Perception of visual texture and the expression of disruptive camouflage by the cuttlefish Sepia officinalis. Proc. R. Soc. B 274, 1369–1375. (doi:10.1098/rspb.2007.0240)

- 15. Zylinski S, Osorio D, Shohet AJ. 2009. Edge detection and texture classification by cuttlefish. J. Vision 9, 1–10. (doi:10.1167/9.13.13)

- 16. Hanlon RT, Messenger JB. 1988. Adaptive coloration in young cuttlefish (Sepia officinalis L): the morphology and development of body patterns and their relation to behaviour. Phil. Trans. R. Soc. Lond. B 320, 437–487. (doi:10.1098/rstb.1988.0087)

- 17. Barbosa A, Mäthger LM, Buresch KC, Kelly J, Chubb C, Chiao C-C, Hanlon RT. 2008. Cuttlefish camouflage: the effects of substrate contrast and size in evoking uniform, mottle or disruptive body patterns. Vision Res. 48, 1242–1253. (doi:10.1016/j.visres.2008.02.011)

- 18. Chiao C-C, Kelman EJ, Hanlon RT. 2005. Disruptive body patterning of cuttlefish (Sepia officinalis) requires visual information regarding edges and contrast of objects in natural substrate backgrounds. Biol. Bull. 208, 7–11. (doi:10.2307/3593095)

- 19. Zylinski S, Darmaillacq A-S, Shashar N. 2012. Visual interpolation for contour completion by the European cuttlefish (Sepia officinalis) and its use in dynamic camouflage. Proc. R. Soc. B 279, 2386–2390. (doi:10.1098/rspb.2012.0026)

- 20. Messenger JB. 1968. Visual attack of cuttlefish Sepia officinalis. Anim. Behav. 16, 342–357. (doi:10.1016/0003-3472(68)90020-1)

- 21. Josef N, Mann O, Sykes AV, Fiorito G, Reis J, Maccusker S, Shashar N. 2014. Depth perception: cuttlefish (Sepia officinalis) respond to visual texture density gradients. Anim. Cogn. 17, 1–8. (doi:10.1007/s10071-014-0774-8)

- 22. Anderson JC, Baddeley RJ, Osorio D, Shashar N, Tyler CW, Ramachandran VS, Crook AC, Hanlon RT. 2003. Modular organization of adaptive colouration in flounder and cuttlefish revealed by independent component analysis. Netw. Comput. Neural Syst. 14, 321–333. (doi:10.1088/0954-898X_14_2_308)

- 23. Langridge KV. 2006. Symmetrical crypsis and asymmetrical signalling in the cuttlefish Sepia officinalis. Proc. R. Soc. B 273, 959–967. (doi:10.1098/rspb.2005.3395)

- 24. Hanlon RT, Chiao CC, Mäthger LM, Barbosa A, Buresch KC, Chubb C. 2009. Cephalopod dynamic camouflage: bridging the continuum between background matching and disruptive coloration. Phil. Trans. R. Soc. B 364, 429–437. (doi:10.1098/rstb.2008.0270)

- 25. Byrne RA, Kuba MJ, Meisel DV. 2004. Lateralized eye use in Octopus vulgaris shows antisymmetrical distribution. Anim. Behav. 68, 1107–1114. (doi:10.1016/j.anbehav.2003.11.027)

- 26. MathWorks. 2012. MATLAB and statistics toolbox release 2012b. Natick, MA: MathWorks.

- 27. IBM. 2012. SPSS statistics for Windows 21.0. Armonk, NY: IBM Corp.

- 28. Jozet-Alves C, Viblanc VA, Romagny S, Dacher M, Healy SD, Dickel L. 2012. Visual lateralization is task and age dependent in cuttlefish, Sepia officinalis. Anim. Behav. 83, 1313–1318. (doi:10.1016/j.anbehav.2012.02.023)

- 29. Hubalek Z. 1982. Coefficients of association and similarity, based on binary (presence–absence) data: an evaluation. Biol. Rev. 57, 669–689. (doi:10.1111/j.1469-185X.1982.tb00376.x)

- 30. Choi S-S, Cha S-H, Tappert CC. 2010. A survey of binary similarity and distance measures. J. Syst. Cybern. Informatics 8, 43–48.

- 31. Hall KC, Hanlon RT. 2002. Principal features of the mating system of a large spawning aggregation of the giant Australian cuttlefish Sepia apama (Mollusca: Cephalopoda). Mar. Biol. 140, 533–545. (doi:10.1007/s00227-001-0718-0)

- 32. Zylinski S, How M, Osorio D, Hanlon RT, Marshall N. 2011. To be seen or to hide: visual characteristics of body patterns for camouflage and communication in the Australian giant cuttlefish Sepia apama. Am. Nat. 177, 681–690. (doi:10.1086/659626)

- 33. Ulmer KM, Buresch KC, Kossodo M, Mäthger L, Siemann LA, Hanlon RT. 2013. Vertical visual features have a strong influence on cuttlefish camouflage. Biol. Bull. 224, 110–118.