Abstract

Reconstructing images from noisy and incomplete measurements is always challenging, especially for medical MR images, whose important details and features must be preserved. This work proposes a novel dictionary learning model that integrates two sparse regularization methods: the total generalized variation (TGV) approach and adaptive dictionary learning (DL). In the proposed method, TGV selectively regularizes different image regions at different levels, largely avoiding oil-painting artifacts. At the same time, dictionary learning adaptively represents image features sparsely and effectively recovers image details. The proposed model is solved by the variable splitting technique and the alternating direction method of multipliers (ADMM). Extensive simulation experiments demonstrate that the proposed method consistently and efficiently recovers MR images and outperforms current state-of-the-art approaches, achieving higher PSNR and lower HFEN values.

1. Introduction

Magnetic Resonance Imaging (MRI) plays an essential role as a medical diagnostic tool, providing clinicians with important anatomical information without ionizing radiation. MRI produces high-resolution images with excellent soft-tissue depiction and is noninvasive and nonionizing. However, its imaging speed is limited by physical and physiological constraints. Long scan times cause patient discomfort and lead to motion artifacts, so it is necessary to reduce the acquisition time. Yet reducing the amount of acquired data may degrade image quality and compromise diagnostic value. For these reasons, accurate reconstruction from highly undersampled k-space data is of great importance for both fast MR acquisition and clinical diagnosis [1].

Generally, existing regularization approaches fall into two categories: predefined transforms and adaptively learned dictionaries. Methods in the first category usually rely on total variation and wavelet transforms. For example, Ma et al. employed the total variation (TV) penalty and the wavelet transform for MRI reconstruction [2]. TV regularization considers only first-order derivatives. It is well known to preserve sharp edges, but it often produces staircasing artifacts and patchy, cartoon-like images that appear unnatural. Total generalized variation (TGV), which involves higher-order derivatives, was proposed and studied in [3–6], and it preserves higher-order smoothness better. TGV is equivalent to TV in terms of edge preservation and noise removal, and it remains effective in imaging situations where the piecewise-constant assumption underlying TV does not hold. It describes intensity variations in smooth regions more precisely, and thus reduces oil-painting artifacts while still preserving sharp edges as TV does [7, 8]. Recently, Guo et al. proposed a method that combines the shearlet transform and TGV (SHTGV) [9]. SHTGV recovers both textures and smoothly varying intensities, whereas shearlet-only or TGV-only models either return a cartoon-like image or lose textures. It preserves edges and fine features and yields more “natural-looking” images. Although SHTGV improves reconstruction, it still relies on an analytically designed dictionary. Such a dictionary only forces the reconstructed image to be sparse with respect to spatial differences, has intrinsic deficiencies, and lacks adaptability to various images [10–12].

The second category of regularization methods exploits nonlocal similarity and sparse representation. Most existing DL models adopt a two-step iterative scheme. First, the sparse representation coefficients are computed with the dictionary fixed; then the dictionary is optimized based on the current coefficients [13–15]. Numerical experiments have shown that these data-driven approaches achieve considerable improvements over methods based on predefined dictionaries [16, 17]. For instance, Ravishankar and Bresler assumed that every image patch has a sparse representation and proposed a two-step alternating method named dictionary learning-based MRI (DLMRI) reconstruction [18]. The first step adaptively learns the dictionary; the second reconstructs the image from highly undersampled k-space data. Nevertheless, most existing methods do not explicitly model sharp edges, which may lead to the loss of fine details. Motivated by this deficiency, we use total generalized variation to compensate for the shortcomings of DL-based methods [16–18].

In this work, we exploit the strengths of both total generalized variation and patch-based adaptive dictionaries for MR image reconstruction. The idea is motivated by preceding work on dictionary learning-based sparse representation and on second-order total generalized variation regularization. The proposed algorithm integrates the TGV regularizer and dictionary learning; it recovers both edges and details of images and selectively regularizes different image regions at different levels. The solution is derived with the variable splitting technique and the alternating direction method of multipliers (ADMM) [19, 20]. It alternates between computing the dictionary and the sparse coefficients of the image patches and estimating the reconstructed image. The main contribution of this paper is the development of a more accurate and robust method. First, the adaptively learned dictionary alleviates the artifacts caused by the piecewise smooth assumption and allows images with complex structures to be recovered accurately. Second, total generalized variation involves higher-order derivatives, which accommodate higher degrees of smoothness, so it is more appropriate for representing the regularity of piecewise smooth images.

The remainder of this paper is organized as follows. Section 2 briefly reviews dictionary learning and total generalized variation. Section 3 presents the proposed model, which integrates dictionary learning and the TGV regularizer, and solves it in detail. Section 4 reports numerical simulation results under a variety of sampling schemes and noise levels that demonstrate the advantages of the proposed method. Finally, Section 5 concludes the paper.

2. Background and Related Work

In this section, we review some classical models and algorithms for image reconstruction in the context of sparse representation. After briefly reviewing the dictionary learning model and total generalized variation, we derive the proposed dictionary learning with total generalized variation (DLTGV) algorithm in Section 3 by incorporating dictionary learning into the plain TGV algorithm. The following notation is used throughout the paper. Let $u \in \mathbb{C}^{n\times n}$ be the underlying image to be reconstructed, and let $b \in \mathbb{C}^{Q}$ represent the undersampled Fourier measurements. The partially sampled Fourier encoding matrix $K \in \mathbb{C}^{Q\times n^2}$ maps u (treated as a vector of length $n^2$) to the measurement domain such that $b = Ku + \xi$, where ξ is the measurement error. The MRI reconstruction problem is to retrieve u from the observation b given the partially sampled Fourier encoding matrix K.

2.1. Dictionary Learning Recovery Model

Besides predefined sparsifying transforms, sparse and redundant representations of image patches based on learned dictionaries have drawn considerable attention in recent years. An adaptively updated dictionary can represent images better than a preconstructed one. Because it adapts to various image contents, dictionary learning is highly capable of preserving fine structures and details in image recovery problems. Patch-based sparsity efficiently captures local image structures and can potentially alleviate aliasing artifacts. The sparse coding and simple dictionary updating steps let the algorithm converge in a small number of iterations. The sparse model $J(u) = (\lambda_0/2)\left[\|A\Gamma - Ru\|_2^2 + \lambda_1\|\Gamma\|_1\right]$ serves as the regularization term for MRI reconstruction and leads to the following objective function:

| (1) |

The present method then solves the objective function (1) by reformulating it as follows:

| (2) |

where $A = [a_1, a_2, \ldots, a_J] \in \mathbb{C}^{M\times J}$ is the dictionary and $\Gamma = [x_1, x_2, \ldots, x_I] \in \mathbb{C}^{J\times I}$ collects the sparse coefficients of the I image patches. Ru stands for the extracted patches, one vectorized patch per column. The MR image is reconstructed as a minimizer of a linear combination of two terms, corresponding to least-squares data fitting and dictionary learning-based sparse representation. The first term enforces data fidelity in k-space, while the second enforces sparsity of the image patches with respect to an adaptive dictionary. The parameter $\lambda_0$ balances the sparsity level of the image patches against the approximation error in the dictionary update, and $\lambda_1$ weights the $\ell_1$ penalty on the coefficients. In our work, $\lambda_1$ was determined empirically and performed robustly for many natural and medical images. The number of atoms is $J = T \cdot M$, where T denotes the overcompleteness factor of the dictionary. The classical method for solving model (2) is DLMRI, which proceeds in a two-step alternating manner and has outperformed methods based on fixed bases. We make DL techniques more effective by adding a high-order regularization of the image, as presented in Section 3.
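To make the roles of R, A, and Γ concrete, the following Python sketch evaluates the regularizer J(u) for a real-valued image. It is a minimal illustration under stated assumptions, not the DLMRI or DLTGV implementation. The square patch size, the stride, reading $\|\cdot\|_2$ on a matrix as the Frobenius norm, and the names `extract_patches` and `dl_regularizer` are all hypothetical choices made here.

```python
import numpy as np


def extract_patches(u, patch_size=6, stride=1):
    """Hypothetical patch-extraction operator R.

    Returns an (M, I) matrix whose columns are vectorized
    patch_size x patch_size patches of u (M = patch_size**2),
    taken without wrap-around at the image border.
    """
    n1, n2 = u.shape
    columns = []
    for i in range(0, n1 - patch_size + 1, stride):
        for j in range(0, n2 - patch_size + 1, stride):
            columns.append(u[i:i + patch_size, j:j + patch_size].reshape(-1))
    return np.stack(columns, axis=1)


def dl_regularizer(u, A, Gamma, lam0, lam1, patch_size=6, stride=1):
    """J(u) = (lam0 / 2) * (||A Gamma - R u||^2 + lam1 * ||Gamma||_1).

    A has shape (M, J) with J = T * M atoms; Gamma has shape (J, I).
    The squared norm is taken over all patches (Frobenius norm).
    """
    Ru = extract_patches(u, patch_size, stride)
    fit = np.linalg.norm(A @ Gamma - Ru) ** 2
    sparsity = np.abs(Gamma).sum()
    return 0.5 * lam0 * (fit + lam1 * sparsity)
```

With 6 × 6 patches (M = 36) and an overcompleteness factor T = 4, the dictionary would have J = 144 atoms. These values are illustrative only.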

2.2. Total Generalized Variation

Image reconstruction with TGV achieves better results than TV in many practical situations. The TGV of order k is defined as follows:

| (3) |

where $C_c^k(\Omega, \mathrm{Sym}^k(\mathbb{R}^d))$ is the space of compactly supported, k-times continuously differentiable symmetric tensor fields, and $\mathrm{Sym}^k(\mathbb{R}^d)$ is the space of symmetric k-tensors on $\mathbb{R}^d$. Choosing k = 1 and α = (1,1) yields the classical total variation. TGV thus constitutes an image model that incorporates smoothness from the first up to the kth derivative. In particular, the second-order TGV can be written as

| (4) |

where the directional derivatives $\nabla_1 u$ and $\nabla_2 u$ are approximated by $D_1 u$ and $D_2 u$. Here $D_1$ and $D_2$ are the circulant matrices corresponding to forward finite difference operators with periodic boundary conditions along the x-axis and y-axis, respectively. Then ∇u is approximated by Du, and the symmetrized derivative $\mathcal{E}(p)$ of $p = (p_1, p_2)$ is approximated by

| (5) |
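The periodic forward differences $D_1$ and $D_2$ and their adjoints can be written with array shifts. Because they are circulant, the 2D FFT diagonalizes them. A minimal NumPy sketch follows. It assumes that the x-axis corresponds to array columns (axis 1) and the y-axis to rows (axis 0); the function names are hypothetical.

```python
import numpy as np


def D1(u):
    """Forward difference along x (axis 1) with periodic boundary."""
    return np.roll(u, -1, axis=1) - u


def D2(u):
    """Forward difference along y (axis 0) with periodic boundary."""
    return np.roll(u, -1, axis=0) - u


def D1T(v):
    """Adjoint of D1: (D1^T v)[i, j] = v[i, j-1] - v[i, j]."""
    return np.roll(v, 1, axis=1) - v


def D2T(v):
    """Adjoint of D2: (D2^T v)[i, j] = v[i-1, j] - v[i, j]."""
    return np.roll(v, 1, axis=0) - v


def fourier_eigenvalues(shape):
    """Eigenvalues of the circulant D1 and D2 under np.fft.fft2:
    fft2(D1 u) = lam1 * fft2(u) and fft2(D2 u) = lam2 * fft2(u)."""
    n1, n2 = shape
    lam1 = np.exp(2j * np.pi * np.arange(n2) / n2) - 1.0  # varies along axis 1
    lam2 = np.exp(2j * np.pi * np.arange(n1) / n1) - 1.0  # varies along axis 0
    return (np.broadcast_to(lam1[None, :], shape),
            np.broadcast_to(lam2[:, None], shape))
```

Because the transposes $D_1^T$ and $D_2^T$ have the complex-conjugate eigenvalues, $D_1^T D_1 + D_2^T D_2$ is diagonalized by the FFT with eigenvalues $|\lambda_1|^2 + |\lambda_2|^2$.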

We rewrite $\mathrm{TGV}_\alpha^2$ as an $\ell_1$ minimization so that, after discretization, the model can be solved efficiently by ADMM. Image reconstruction with TGV regularization produces piecewise polynomial intensities, and the convexity of TGV makes it computationally tractable. See [3, 4] for further details and comparisons.
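For a fixed vector field p, the discrete second-order TGV objective can be evaluated directly; $\mathrm{TGV}_\alpha^2(u)$ is the minimum of this quantity over p. The sketch below makes several assumptions. It uses forward differences for both ∇u and the symmetrized derivative $\mathcal{E}(p)$, and isotropic norms (pointwise Euclidean and Frobenius). It also follows the weighting convention of [3], in which $\alpha_1$ multiplies the first-order term and $\alpha_0$ the second-order term. Since equations (4) and (5) are not reproduced here, this is not necessarily the paper's exact discretization.

```python
import numpy as np


def D1(u):
    return np.roll(u, -1, axis=1) - u  # forward difference along x, periodic


def D2(u):
    return np.roll(u, -1, axis=0) - u  # forward difference along y, periodic


def sym_grad(p1, p2):
    """Symmetrized derivative E(p) of p = (p1, p2), returned as the three
    distinct entries (e11, e12, e22) of a symmetric 2x2 field."""
    e11 = D1(p1)
    e22 = D2(p2)
    e12 = 0.5 * (D2(p1) + D1(p2))
    return e11, e12, e22


def tgv2_objective(u, p1, p2, alpha1, alpha0):
    """alpha1 * sum_l |Du(l) - p(l)|_2 + alpha0 * sum_l ||E(p)(l)||_F.
    Minimizing this over p gives the discrete TGV_alpha^2(u)."""
    r1, r2 = D1(u) - p1, D2(u) - p2
    first = np.sqrt(np.abs(r1) ** 2 + np.abs(r2) ** 2).sum()
    e11, e12, e22 = sym_grad(p1, p2)
    second = np.sqrt(np.abs(e11) ** 2 + 2 * np.abs(e12) ** 2
                     + np.abs(e22) ** 2).sum()
    return alpha1 * first + alpha0 * second
```

With p = 0 the second term vanishes and the value reduces to $\alpha_1$ times the isotropic TV of u. Since TGV is a minimum over p, it therefore never exceeds $\alpha_1 \cdot \mathrm{TV}(u)$.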

3. Proposed Algorithm DLTGV

In this work, we propose a new regularization scheme that combines adaptive dictionary learning with the second-order total generalized variation $\mathrm{TGV}_\alpha^2$. The aim is to reconstruct target images with many directional features and high-order smoothness. The dictionary learning component involves the dictionary and the coefficient matrix of the image patches. The proposed method reconstructs the image from highly undersampled k-space data using a variable splitting solver based on the alternating direction method of multipliers (ADMM). In smooth regions of the image u, the second derivative is locally small. Hence, regularizing the overall (nonconvex) objective with TGV performs better and leads to a more faithful reconstruction of the MR image. The proposed method recovers both edges and details of images, selectively regularizes different image regions at different levels, and thus largely avoids oil-painting artifacts.

3.1. Proposed New Model

To reconstruct the MR image u using dictionary learning and total generalized variation regularization, we propose the following model:

| (6) |

where the parameter β > 0 is related to the noise level of ξ. We use the second-order $\mathrm{TGV}_\alpha^2$ in the proposed method. With the discrete formulation of $\mathrm{TGV}_\alpha^2$ in (4), the proposed model (6) becomes

| (7) |

As in (5), the discrete version of (7) is

| (8) |

3.2. Algorithm to Solve Model (8)

As discussed in the previous section, dictionary updating and sparse coding for (2) are performed sequentially. Using $\mathrm{TGV}_\alpha^2$ as the regularizer avoids the staircasing effect often observed with total variation regularization. In the first step of the alternating scheme for the proposed model, the image u is fixed, and the dictionary and the sparse representations of the image patches are jointly updated. In the second step, the dictionary and sparse representation are fixed, and the image u is updated by the ADMM algorithm to enforce data consistency.

We now derive the minimization of (8) with respect to the image u. Besides the second term, the reformulated model (8) contains two $\ell_1$ terms. We therefore solve it with ADMM and introduce auxiliary variables y and z, one for each $\ell_1$ term:

| (9) |

So (8) is equivalent to

| (10) |

Applying ADMM yields the following algorithm:

| (11) |

As in the previous section, ADMM decomposes the optimization problem into five sets of subproblems, which are solved as follows.

3.2.1. Solve $y^{n+1}$ and $z^{n+1}$

The first two subproblems are similar, and their solutions are given explicitly by shrinkage operations. The solution to the y subproblem is

| (12) |

where $y^{n+1}(l) \in \mathbb{R}^2$ represents the component of $y^{n+1}$ located at $l \in \Omega$, and the isotropic shrinkage operator $\mathrm{shrink}_2$ is defined as

| (13) |

Likewise, we have the solution to the z problem as

| (14) |

where $z^{n+1}(l) \in S^{2\times 2}$ (the space of symmetric 2 × 2 matrices) is the component of $z^{n+1}$ corresponding to the pixel $l \in \Omega$, and

| (15) |

Note that 0 here is the 2 × 2 zero matrix and $\|\cdot\|_F$ is the Frobenius norm.
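The two shrinkage operators have standard closed forms. Each pointwise vector (or symmetric matrix) is scaled toward zero by the threshold, and it is set to zero if its norm falls below the threshold. The sketch below implements these standard forms in NumPy. The threshold t that (12) and (14) pass in is not reproduced here; it is typically a regularization weight divided by an ADMM penalty parameter, and it is left as an argument. A symmetric 2 × 2 field is stored by its three distinct entries, so the off-diagonal entry counts twice in the Frobenius norm. The function names are hypothetical.

```python
import numpy as np


def shrink2(v1, v2, t):
    """Isotropic shrinkage of the vector field (v1, v2):
    max(|v| - t, 0) * v / |v|, with the convention 0 / 0 = 0."""
    mag = np.sqrt(np.abs(v1) ** 2 + np.abs(v2) ** 2)
    scale = np.maximum(mag - t, 0.0) / np.where(mag > 0, mag, 1.0)
    return scale * v1, scale * v2


def shrink_frobenius(s11, s12, s22, t):
    """Frobenius-norm shrinkage of a symmetric 2x2 field stored as
    (s11, s12, s22); the off-diagonal entry appears twice in the norm."""
    mag = np.sqrt(np.abs(s11) ** 2 + 2 * np.abs(s12) ** 2 + np.abs(s22) ** 2)
    scale = np.maximum(mag - t, 0.0) / np.where(mag > 0, mag, 1.0)
    return scale * s11, scale * s12, scale * s22
```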

3.2.2. Update Dictionary and Coefficient

The minimization of (8) with respect to the dictionary and the coefficients can be solved separately. In this dictionary learning and coefficient updating step, the image u is fixed, and the subproblem corresponding to the second term is

| (16) |

The parameter $\tau_1$ in (16) is the required sparsity level. As in K-SVD and DLMRI, (16) is solved by alternately updating the dictionary A and the sparse coefficients Γ. In the sparse coding step, (16) is solved by orthogonal matching pursuit (OMP) with the dictionary A fixed. In the dictionary updating step, the atoms of the dictionary ($a_k$, $1 \le k \le J$) are updated sequentially, using singular value decomposition (SVD) to minimize the approximation error. In this way, K-SVD learns a sparsifying dictionary that suppresses aliasing and noise. Sparse coding with the learned dictionary then yields the coefficients Γ, from which the target image u is reconstructed.
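The two alternating steps can be sketched in NumPy as follows. The first function is a basic orthogonal matching pursuit that codes one patch with at most $\tau_1$ atoms. The second performs a single K-SVD atom update via a rank-1 SVD of the restricted residual. This is a simplified illustration of the standard techniques that assumes unit-norm atoms. It is not the K-SVD or DLMRI code used in the experiments, and the function names are hypothetical.

```python
import numpy as np


def omp(A, x, tau1):
    """Orthogonal matching pursuit: approximate x using at most tau1
    columns (unit-norm atoms) of A; returns a length-J coefficient vector."""
    coef = np.zeros(A.shape[1], dtype=np.result_type(A, x))
    residual = x.copy()
    support = []
    sol = None
    for _ in range(tau1):
        # pick the atom most correlated with the current residual
        k = int(np.argmax(np.abs(A.conj().T @ residual)))
        if k not in support:
            support.append(k)
        # least-squares refit on the current support (the "orthogonal" step)
        sol, *_ = np.linalg.lstsq(A[:, support], x, rcond=None)
        residual = x - A[:, support] @ sol
        if np.linalg.norm(residual) < 1e-12:
            break
    if support:
        coef[support] = sol
    return coef


def ksvd_atom_update(A, Gamma, Y, k):
    """One K-SVD step: refit atom k and its nonzero coefficients so that
    the rank-1 term best explains the residual of the patches using it."""
    users = np.nonzero(Gamma[k, :])[0]
    if users.size == 0:
        return A, Gamma  # unused atom: left unchanged in this sketch
    A, Gamma = A.copy(), Gamma.copy()
    # residual of the patches that use atom k, with atom k's contribution removed
    E = Y[:, users] - A @ Gamma[:, users] + np.outer(A[:, k], Gamma[k, users])
    U, s, Vh = np.linalg.svd(E, full_matrices=False)
    A[:, k] = U[:, 0]                # new unit-norm atom
    Gamma[k, users] = s[0] * Vh[0, :]
    return A, Gamma
```

Sparse coding of all patches applies `omp` column by column to Ru. A full dictionary update sweeps `ksvd_atom_update` over k = 0, ..., J − 1. Because the support of Γ is kept fixed, each atom update cannot increase the approximation error.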

3.2.3. Solve $u^{n+1}$ and $p^{n+1}$

To solve the (u, p) subproblem, we discretize the directional derivatives with periodic boundary conditions and define the Lagrangian function. Setting its partial derivatives with respect to u, $p_1$, and $p_2$ to zero gives the normal equations

| (17) |

Depending on the form of K, many methods can be used to solve the linear system (17). Here we illustrate the idea with the compressive sensing reconstruction problem. We focus on incomplete Fourier measurements, which have a wide range of applications in medical imaging. We denote $K = F_p = PF$, where F is the matrix of the 2D discrete Fourier transform and P is a selection matrix. P consists of the rows of the identity matrix that correspond to the sampled k-space locations.
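In code, K = PF and its adjoint $K^H = F^H P^T$ are usually applied as operators rather than stored as matrices. The sketch below assumes a boolean k-space mask in place of P and an orthonormal FFT, so that $F^H F = I$; whether the original implementation uses this normalization is not stated. Under these assumptions, applying $F K^H K F^H$ simply multiplies by the 0/1 mask, which is the diagonal structure that makes (17) easy to solve. The function names are hypothetical.

```python
import numpy as np


def forward_op(u, mask):
    """K u = P F u: orthonormal 2D FFT, keep only sampled k-space locations."""
    return np.fft.fft2(u, norm="ortho")[mask]


def adjoint_op(b, mask):
    """K^H b = F^H P^T b: zero-fill unsampled locations, then inverse FFT."""
    full = np.zeros(mask.shape, dtype=complex)
    full[mask] = b
    return np.fft.ifft2(full, norm="ortho")
```

`adjoint_op(b, mask)` is also the familiar zero-filled reconstruction, a common starting point for iterative CS-MRI solvers.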

For incomplete Fourier measurements, (17) looks complicated. However, it is easy to solve because circulant matrices are diagonalized by the 2D Fourier transform F, and we now show how to obtain a closed-form solution to (17). After grouping like terms in (17), we obtain the linear system

| (18) |

where the block matrix is defined as

| (19) |

Next, we multiply the linear system from the left by a preconditioning matrix so that the coefficient matrix becomes block-wise diagonal:

| (20) |

The above operation can also be equivalently performed by multiplying each equation in (17) from the left with F. Denote

| (21) |

As in the scalar case, Fu, $Fp_1$, and $Fp_2$ can be obtained by Cramer's rule, so u, $p_1$, and $p_2$ have the following closed forms:

| (22) |

where the division is component-wise and

| (23) |
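Every block of the preconditioned system is diagonal in the Fourier domain. The circulant difference operators become multiplications by their eigenvalues, and $F K^H K F^H = P^T P$ is the 0/1 sampling mask. The 3 × 3 system therefore decouples into one small system per frequency. The NumPy sketch below applies Cramer's rule with component-wise division to all frequencies at once. The per-frequency entries of (23) are not reproduced here, so the function takes a generic coefficient array; its layout and names are hypothetical.

```python
import numpy as np


def det3(M):
    """Determinant of a stack of 3x3 matrices stored as M[row, col, ...]."""
    return (M[0, 0] * (M[1, 1] * M[2, 2] - M[1, 2] * M[2, 1])
            - M[0, 1] * (M[1, 0] * M[2, 2] - M[1, 2] * M[2, 0])
            + M[0, 2] * (M[1, 0] * M[2, 1] - M[1, 1] * M[2, 0]))


def cramer_solve(M, r):
    """Solve M[:, :, w] x[:, w] = r[:, w] at every frequency w.

    M: (3, 3, n1, n2) per-frequency coefficients (diagonal blocks in
    the Fourier domain); r: (3, n1, n2) transformed right-hand side.
    Returns x with shape (3, n1, n2); all divisions are component-wise.
    """
    dtype = np.result_type(M, r)
    d = det3(M)
    x = np.empty(r.shape, dtype=dtype)
    for i in range(3):
        Mi = M.astype(dtype, copy=True)
        Mi[:, i] = r          # replace column i by the right-hand side
        x[i] = det3(Mi) / d   # Cramer's rule, frequency by frequency
    return x
```

In an actual solver, M would hold the Fourier-domain entries from (23), such as the mask and the eigenvalues of $D_1$ and $D_2$. The three components of x would then be mapped back to the image domain with an inverse FFT.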

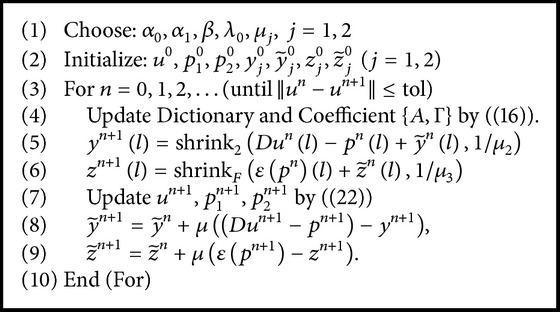

We now summarize the proposed method for MRI reconstruction, which we call dictionary learning with total generalized variation (DLTGV). A detailed description is given in Algorithm 1. DLTGV alternately updates the image-patch coefficients, the auxiliary variables, and the target solution u. The main difference between SHTGV and DLTGV is that DLTGV replaces the shearlet transform with dictionary learning. In DLTGV, the adaptively learned dictionary alleviates the artifacts caused by the piecewise smooth assumption and allows images with complex structures to be recovered accurately. The performance of DLTGV also depends on the choice of parameters, which is discussed in Section 4.

Algorithm 1.

DLTGV.

4. Experimental Results

In this section, the performance of the proposed method is evaluated under a variety of sampling schemes and undersampling factors. The sampling schemes used in our experiments include radial trajectory sampling, 2D random sampling, and Cartesian sampling with random phase encoding (1D random). Reconstruction results were obtained on both simulated MRI data and complex-valued data. The synthetic experiments used in vivo MR scans of size 512 × 512 (many of which were used in [18]). The complex-valued images [21, 22] in Figures 5 and 6 are of size 512 × 512, and those in Figures 7 and 8 are of size 256 × 256. Following many prior works on CS-MRI, data acquisition was simulated by subsampling the 2D discrete Fourier transform of the MR images (except in the tests with real acquired data).
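Simulated undersampling of this kind amounts to multiplying the fully sampled 2D DFT by a binary mask. The acceleration factor is the ratio of total to sampled k-space locations. The sketch below generates two masks: a uniform 2D random mask, and a 1D random (Cartesian phase-encoding) mask with a fully sampled central band. The uniform density, the central-band width `n_center`, and the centred (fftshift) layout are illustrative assumptions, not the masks used in these experiments.

```python
import numpy as np


def acceleration(mask):
    """Undersampling factor = total k-space locations / sampled locations."""
    return mask.size / np.count_nonzero(mask)


def random_2d_mask(shape, factor, rng):
    """Uniform 2D random mask sampling round(N / factor) of N locations."""
    n = shape[0] * shape[1]
    picked = rng.choice(n, size=int(round(n / factor)), replace=False)
    mask = np.zeros(n, dtype=bool)
    mask[picked] = True
    return mask.reshape(shape)


def random_1d_mask(shape, factor, rng, n_center=8):
    """Cartesian mask: whole phase-encoding rows are kept (readout fully
    sampled), namely a central band of n_center rows plus random other rows.
    The mask is in centred layout (DC at the array centre)."""
    n1, _ = shape
    n_rows = int(round(n1 / factor))
    center = np.arange(n1 // 2 - n_center // 2, n1 // 2 + n_center // 2)
    others = np.setdiff1d(np.arange(n1), center)
    extra = rng.choice(others, size=max(n_rows - center.size, 0), replace=False)
    mask = np.zeros(shape, dtype=bool)
    mask[np.concatenate([center, extra]), :] = True
    return mask
```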

Figure 5.

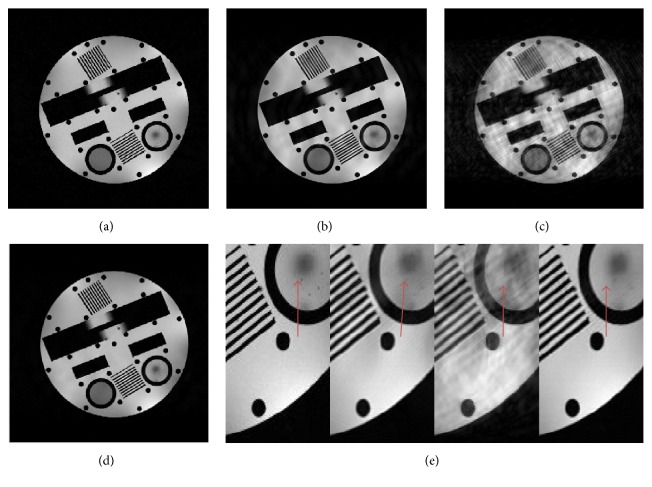

Reconstruction comparison on the physical phantom. (a) Fully sampled image. ((b), (c), (d)) Reconstruction results of DLMRI, SHTGV, and DLTGV at 8-fold undersampling. (e) Enlargements of local regions of (a), (b), (c), and (d).

Figure 6.

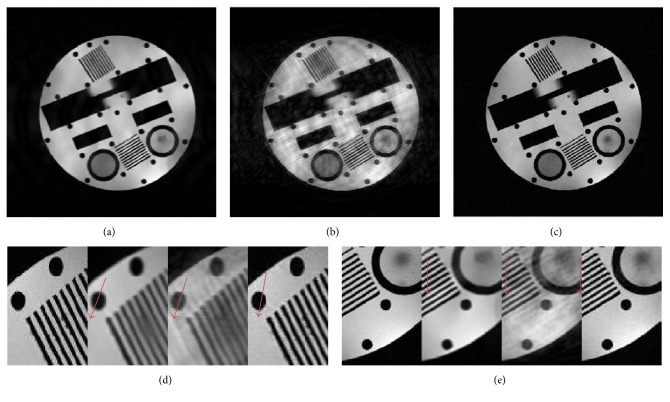

Reconstruction comparison on a physical MR image with noise of σ = 30. ((a), (b), (c)) Reconstruction results of DLMRI, SHTGV, and DLTGV at 8-fold undersampling. ((d), (e)) Enlargements of regions of (a), (b), and (c).

Figure 7.

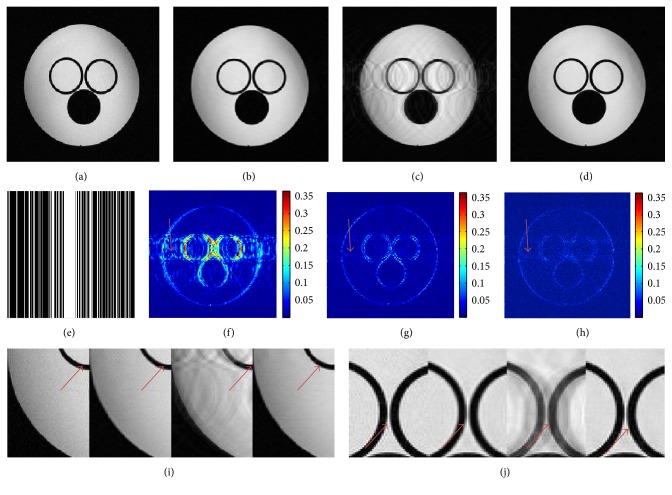

Reconstructed water phantom images at 35% undersampling. (a) The fully sampled image. ((b), (c), (d)) The reconstruction images corresponding to DLMRI, SHTGV, and DLTGV. (e) Mask data with 35% Cartesian sampling. ((f), (g), (h)) Reconstruction error magnitudes for DLMRI, SHTGV, and DLTGV. ((i), (j)) Enlargements of (a), (b), (c), and (d).

Figure 8.

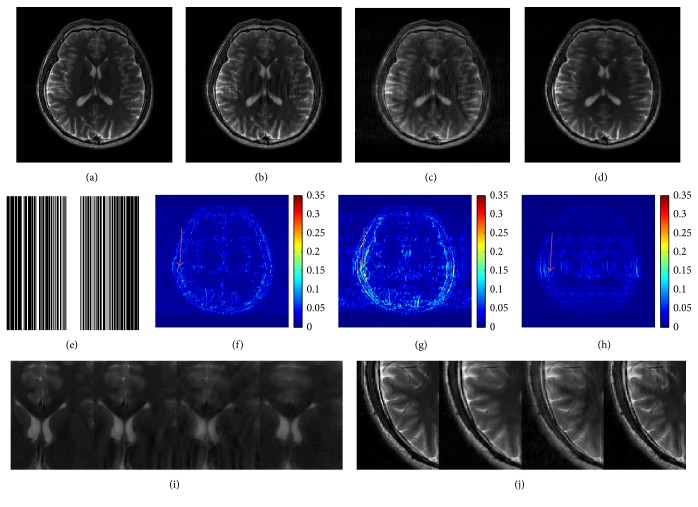

Reconstructed brain images at 40% undersampling. (a) The fully sampled image. ((b), (c), (d)) The reconstruction images using DLMRI, SHTGV, and DLTGV. (e) Mask data with 40% Cartesian sampling. ((f), (g), (h)) Reconstruction error magnitudes for DLMRI, SHTGV, and DLTGV. ((i), (j)) Enlargements of (a), (b), (c), and (d).

In the experiments, the proposed method was compared with DLMRI and SHTGV, leading methods that have shown substantial improvements over other CS-MRI methods. Dictionary learning is implemented identically in DLMRI and DLTGV, using the K-SVD algorithm. The parameters of DLMRI and SHTGV were set to their default values. We used the peak signal-to-noise ratio (PSNR) and the high-frequency error norm (HFEN) to quantify reconstruction quality. All experiments were implemented in MATLAB 7.11 on a PC equipped with an Intel Core i7-3632QM CPU and 4 GB RAM.
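The two metrics can be sketched as follows. PSNR is computed here with the peak taken as the maximum magnitude of the reference image. HFEN is computed as the relative ℓ2 norm of the Laplacian-of-Gaussian (LoG) filtered difference, using a 15 × 15 LoG kernel with standard deviation 1.5, a common choice in the CS-MRI literature. These choices, the symmetric border padding, and the kernel construction (modelled on MATLAB's `fspecial('log', ...)`) are assumptions made for illustration, not the exact evaluation code used here. The function names are hypothetical.

```python
import numpy as np


def psnr(rec, ref):
    """PSNR in dB, with the peak taken as max |ref| (assumed convention)."""
    rmse = np.sqrt(np.mean(np.abs(rec - ref) ** 2))
    return 20.0 * np.log10(np.abs(ref).max() / rmse)


def log_kernel(size=15, sigma=1.5):
    """Zero-sum Laplacian-of-Gaussian kernel, modelled on fspecial('log')."""
    half = (size - 1) / 2.0
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    r2 = x ** 2 + y ** 2
    g = np.exp(-r2 / (2.0 * sigma ** 2))
    g /= g.sum()
    h = g * (r2 - 2.0 * sigma ** 2) / sigma ** 4
    return h - h.mean()  # zero sum: flat regions give zero response


def filter_same(img, kernel):
    """Centred 2D correlation with symmetric padding (output size = input)."""
    kh, kw = kernel.shape
    padded = np.pad(img, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="symmetric")
    out = np.zeros(img.shape)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def hfen(rec, ref, kernel=None):
    """||LoG(|rec|) - LoG(|ref|)||_2 / ||LoG(|ref|)||_2."""
    k = log_kernel() if kernel is None else kernel
    diff = filter_same(np.abs(rec), k) - filter_same(np.abs(ref), k)
    return np.linalg.norm(diff) / np.linalg.norm(filter_same(np.abs(ref), k))
```

Because the LoG kernel sums to zero, a constant intensity offset leaves HFEN unchanged but still lowers PSNR. HFEN therefore emphasizes errors in edges and fine structures.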

4.1. Impact of Undersampling Schemes

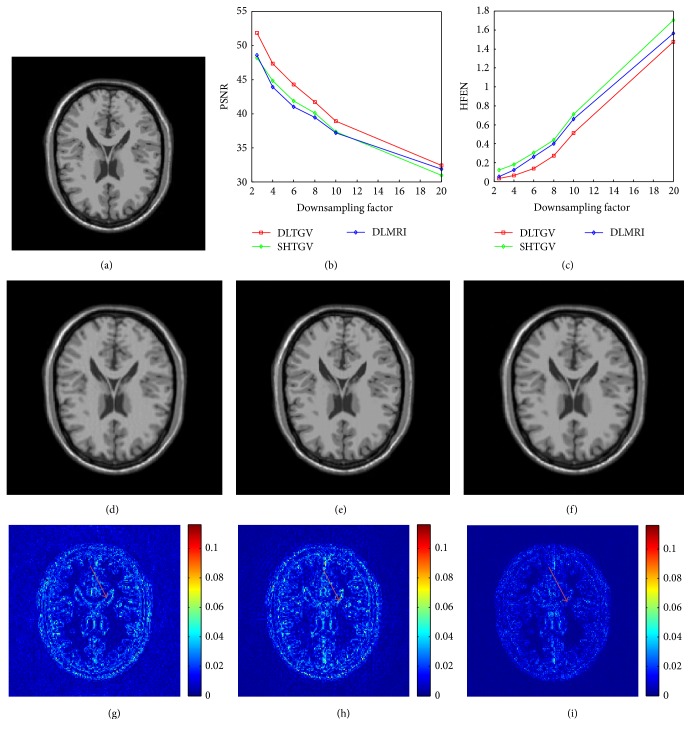

In this subsection, we evaluated the performance of DLTGV at different undersampling factors with a pseudo radial sampling trajectory. Figure 1 shows the reconstruction results with pseudo radially sampled k-space at undersampling factors of 2.5, 4, 6, 8, 10, and 20. The PSNR and HFEN values of DLMRI, SHTGV, and DLTGV at these factors are plotted in Figures 1(b) and 1(c) and listed in Table 1. For visual comparison, Figures 1(d), 1(e), and 1(f) show the reconstruction results of the three methods at 8-fold undersampling, and Figures 1(g), 1(h), and 1(i) show the corresponding reconstruction error magnitudes. As Figure 1 shows, the error magnitude image of DLTGV (Figure 1(i)) contains fewer pixel errors and less residual structural detail than those of DLMRI (Figure 1(g)) and SHTGV (Figure 1(h)).

Figure 1.

(a) The reference image. ((b), (c)) PSNR and HFEN versus the undersampling factor. ((d), (e), (f)) Reconstruction results of DLMRI, SHTGV, and DLTGV under the pseudo radial sampling trajectory. ((g), (h), (i)) The corresponding reconstruction error magnitudes of (d), (e), and (f).

Table 1.

Reconstruction PSNR (dB) and HFEN values (in parentheses) at different undersampling factors with the same pseudo radial sampling trajectory.

| Method | 20-fold | 10-fold | 8-fold | 6-fold | 4-fold | 2.5-fold |
|---|---|---|---|---|---|---|
| DLMRI | 31.86 (1.56) | 37.17 (0.66) | 39.45 (0.40) | 41.01 (0.26) | 43.92 (0.12) | 48.60 (0.05) |
| SHTGV | 30.92 (1.70) | 37.31 (0.71) | 40.06 (0.44) | 41.85 (0.31) | 44.82 (0.18) | 48.18 (0.12) |
| DLTGV | 32.39 (1.48) | 38.89 (0.51) | 41.70 (0.27) | 44.30 (0.14) | 47.34 (0.06) | 51.87 (0.03) |

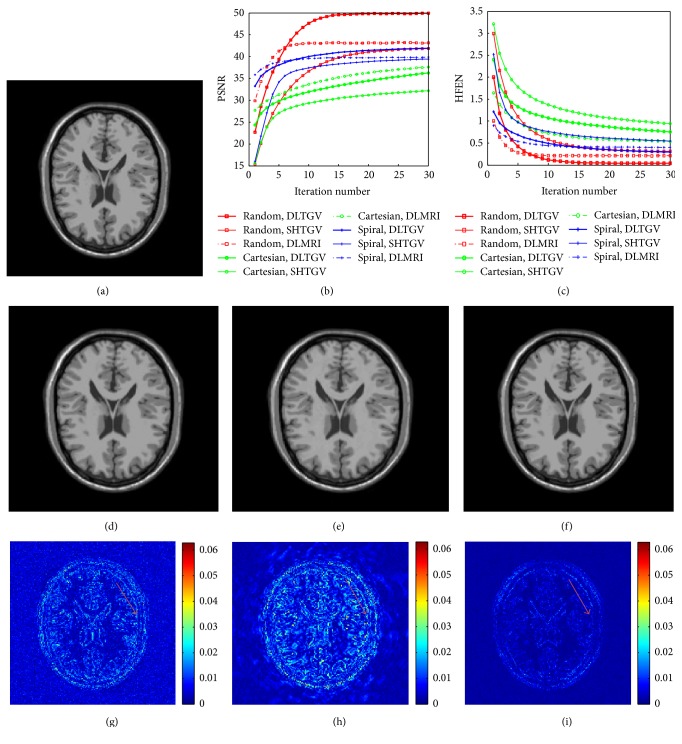

Figure 2 presents the results at 7.11-fold undersampling for three sampling schemes: 2D random sampling, sampling of central k-space phase-encoding lines, and spiral sampling. The PSNR and HFEN curves of DLMRI, SHTGV, and DLTGV are plotted in Figures 2(b) and 2(c). The more incoherent the acquisition, the better the reconstruction. Accordingly, the PSNRs obtained with 2D random sampling improve more than those of the other sampling schemes. The results obtained with 2D random sampling are presented in Figures 2(d), 2(e), and 2(f). The error magnitude image for DLTGV shows that its reconstruction is more consistent with the reference than those of the other methods. At the same undersampling rate, DLTGV outperforms the other methods for every sampling trajectory.

Figure 2.

Reconstruction of an axial T2-weighted brain image at 7.11-fold undersampling. (a) The reference image. ((b), (c)) PSNR and HFEN versus the number of iterations. ((d), (e), (f)) Reconstruction results of DLMRI, SHTGV, and DLTGV under 2D random sampling. ((g), (h), (i)) The corresponding reconstruction error magnitudes of (d), (e), and (f).

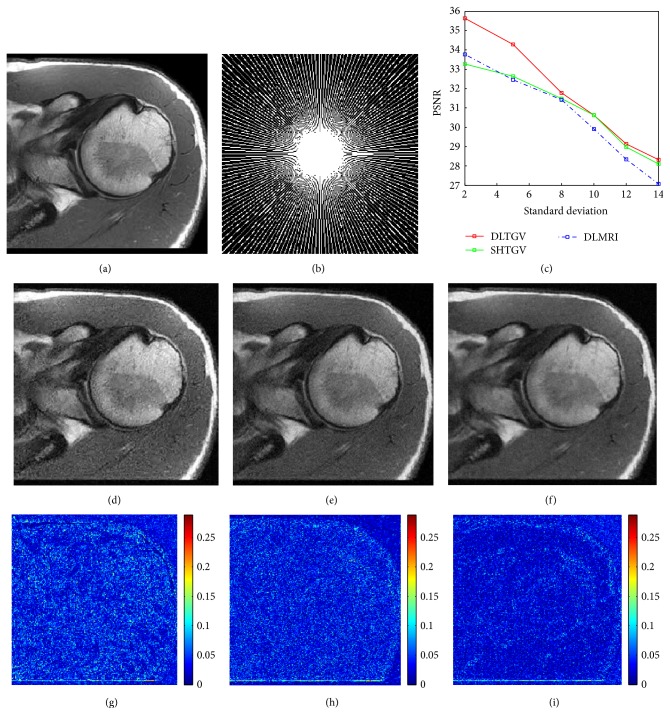

4.2. Performance with Noise

To investigate the sensitivity of DLTGV to different levels of complex white Gaussian noise, DLMRI, SHTGV, and DLTGV were applied to reconstruct an image under pseudo radial sampling at 6.09-fold acceleration. Complex white Gaussian noise of different levels was added to the k-space samples. Figure 3(c) plots the PSNRs of the images recovered by DLMRI (blue curve), SHTGV (green curve), and DLTGV (red curve) at standard deviations σ = 2, 5, 8, 10, 12, and 14. For σ = 2, the PSNR is only 33.75 dB for DLMRI and 33.28 dB for SHTGV, whereas DLTGV reaches 35.67 dB, so the gap between the methods is pronounced at low noise levels. The reconstructions and the corresponding reconstruction error magnitudes at σ = 14 are shown in Figures 3(d)–3(f) and Figures 3(g)–3(i), respectively. The DLTGV reconstruction appears less blurred than the DLMRI result. It is also clearer than the DLMRI and SHTGV reconstructions and relatively free of aliasing artifacts. These results indicate that the proposed method provides a more accurate reconstruction of image contrast and a sharper anatomical depiction in the noisy case.
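Adding complex white Gaussian noise to the k-space samples can be sketched as follows. The text does not state whether σ refers to each of the real and imaginary parts, or on which intensity scale the images are normalized. The sketch assumes independent zero-mean Gaussian noise with standard deviation σ in each part; the function name is hypothetical.

```python
import numpy as np


def add_complex_noise(b, sigma, rng):
    """Return b plus i.i.d. complex Gaussian noise; the real and imaginary
    parts each have zero mean and standard deviation sigma (assumed convention)."""
    noise = rng.normal(0.0, sigma, b.shape) + 1j * rng.normal(0.0, sigma, b.shape)
    return b + noise
```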

Figure 3.

(a) Reference image. (b) Sampling mask in k-space with 6.09-fold undersampling. (c) PSNR versus noise level for DLMRI, SHTGV, and DLTGV. ((d), (e), (f)) Reconstructed images using DLMRI, SHTGV, and DLTGV. ((g), (h), (i)) Reconstruction error magnitudes for DLMRI, SHTGV, and DLTGV with noise σ = 14.

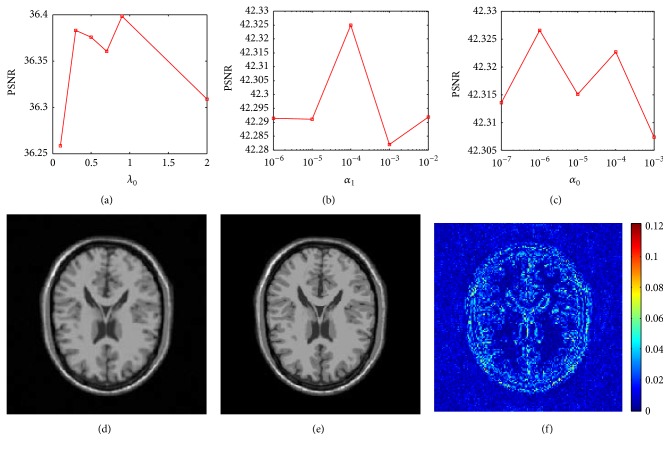

4.3. Parameter Evaluation

Following the detail-preserving regularity scheme [9], this section evaluates the sensitivity of the proposed method to parameter settings. One parameter is varied at a time while the rest are kept fixed at their nominal values. The parameter evaluation in Figure 4 uses radial trajectory sampling with 8-fold undersampling. The parameters β and $\lambda_1$ were observed to work well at their nominal values and hence are not studied separately. The three parameters $\lambda_0$, $\alpha_1$, and $\alpha_0$ are related to the noise level and to the sparsity of the underlying image under dictionary learning and TGV regularization. Figure 4 plots PSNR against each of these parameters. Finer tuning of the parameters may lead to better results, but the results with the nominal settings are consistently good. The plots in Figure 4 indicate that the nominal parameter values work reasonably well. This suggests that the algorithm is not very sensitive to these parameters and can be used without extensive tuning.
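The one-at-a-time protocol can be written generically. In the sketch below, `evaluate` is a hypothetical callable, for instance one that runs DLTGV with the given parameters and returns the PSNR; it is not part of any released code. Only the nominal values in the usage note come from the caption of Figure 4.

```python
def one_at_a_time_sweep(evaluate, nominal, grid):
    """Vary each parameter over its grid while the others stay at their
    nominal values; return {name: [(value, score), ...]}."""
    results = {}
    for name, values in grid.items():
        curve = []
        for value in values:
            params = dict(nominal)   # start from the nominal setting
            params[name] = value     # perturb a single parameter
            curve.append((value, evaluate(**params)))
        results[name] = curve
    return results
```

For example, `nominal = {"lam0": 0.9, "alpha1": 1e-4, "alpha0": 1e-6}` corresponds to the setting in Figure 4(e). `grid` would hold the ranges plotted in Figures 4(a) to 4(c).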

Figure 4.

Parameter evaluation. (a) PSNR versus $\lambda_0$. (b) PSNR versus $\alpha_1$. (c) PSNR versus $\alpha_0$. (d) The reference image. (e) Reconstruction with $\lambda_0 = 0.9$, $\alpha_1 = 10^{-4}$, and $\alpha_0 = 10^{-6}$. (f) The reconstruction error of (e).

4.4. Reconstruction of Complex-Valued Data

Figure 5 displayed the comparison under Cartesian sampling on a physical phantom commonly used to assess the resolution of MRI systems. Figures 5(b), 5(c), and 5(d) showed the results of DLMRI, SHTGV, and DLTGV at 8-fold undersampling. The PSNRs of DLMRI, SHTGV, and DLTGV were 29.02 dB, 22.78 dB, and 33.05 dB, respectively, so our result surpassed those of DLMRI and SHTGV by 4.03 dB and 10.27 dB. The three reconstructions differed clearly in visual quality. The DLMRI and SHTGV reconstructions displayed visible aliasing artifacts along the horizontal direction, whereas the DLTGV reconstruction was sharper and had fewer artifacts in that direction. Zoomed-in views are presented in Figure 5(e).

Furthermore, we added noise with σ = 30 to investigate the sensitivity of the proposed method on complex-valued data. DLMRI, SHTGV, and DLTGV obtained PSNR values of 26.33 dB, 22.00 dB, and 31.55 dB, respectively. The reconstruction results of the three methods are shown in Figures 6(a), 6(b), and 6(c), and enlargements of two regions of interest are presented in Figures 6(d) and 6(e). These results indicate the superior denoising ability of the proposed method compared with the other two methods. Moreover, the red arrows in Figures 6(d) and 6(e) show that DLTGV exhibited less blurring than DLMRI and SHTGV.

To further verify the performance of DLTGV, we used the datasets [21, 22], which include the complex-valued water phantom image in Figure 7(a) and the T2-weighted brain image in Figure 8(a). For the water phantom image in Figure 7, a Cartesian sampling trajectory with 35% undersampling was employed. Visually, the proposed method produced better resolution and fewer artifacts than the other two methods. Quantitatively, the PSNR values of DLMRI and SHTGV were 34.06 dB and 26.16 dB, whereas that of DLTGV reached 36.56 dB. As Figures 7(i) and 7(j) show, the DLTGV reconstruction contained fewer aliasing artifacts along the horizontal direction than the other reconstructions.

Results for the T2-weighted brain image are displayed in Figure 8, using a Cartesian sampling trajectory with 40% undersampling. The PSNRs obtained by DLMRI, SHTGV, and DLTGV were 33.70 dB, 29.02 dB, and 35.16 dB, respectively. Figures 8(i) and 8(j) present enlarged views comparing the reference image with the reconstructions by DLMRI, SHTGV, and DLTGV. DLTGV provided a better reconstruction of the long edges between tissues and suppressed aliasing artifacts. In general, the proposed method produced greater intensity fidelity to the image reconstructed from the full data.

5. Conclusion

In this paper, we proposed a novel algorithm based on adaptive dictionary learning and TGV regularization to reconstruct MR images from highly undersampled k-space data. TGV regularization of the overall (nonconvex) objective leads to better performance, and the dictionary learning component involves the dictionary and the coefficient matrix of the image patches. To handle the nondifferentiable terms in our model, we apply ADMM to solve the optimization problem. Thanks to the fast sparse coding and simple dictionary updating steps, the whole algorithm converges in a small number of iterations. The proposed method recovers both edges and details of images and selectively regularizes different image regions at different levels, thus largely avoiding oil-painting artifacts. Numerical experiments show that the proposed method converges quickly and outperforms existing methods under a variety of sampling trajectories and k-space acceleration factors. In particular, it achieves better reconstructions than SHTGV and DLMRI. It provides highly accurate reconstructions even for severely undersampled MR measurements.

Acknowledgments

The authors would like to thank Xiaobo Qu et al. and Ravishankar et al. for sharing their experiment materials and source codes. This work was supported in part by the National Natural Science Foundation of China under 61261010, 61362001, 61362008, and 61503176, the international scientific and technological cooperation projects of Jiangxi Province (20141BDH80001), Jiangxi Advanced Projects for Post-Doctoral Research Funds (2014KY02) and International Postdoctoral Exchange Fellowship Program, Natural Science Foundation of Jiangxi Province (20151BAB207008, 20132BAB211035), and Young Scientists Training Plan of Jiangxi Province (20133ACB21007, 20142BCB23001).

Competing Interests

The authors declare that there are no competing interests regarding the publication of this paper.

References

- 1. Lustig M., Donoho D., Pauly J. M. Sparse MRI: the application of compressed sensing for rapid MR imaging. Magnetic Resonance in Medicine. 2007;58(6):1182–1195. doi: 10.1002/mrm.21391.

- 2. Ma S., Yin W., Zhang Y., Chakraborty A. An efficient algorithm for compressed MR imaging using total variation and wavelets. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR '08); June 2008; IEEE; pp. 1–8.

- 3. Bredies K., Kunisch K., Pock T. Total generalized variation. SIAM Journal on Imaging Sciences. 2010;3(3):492–526. doi: 10.1137/090769521.

- 4. Knoll F., Bredies K., Pock T., Stollberger R. Second order total generalized variation (TGV) for MRI. Magnetic Resonance in Medicine. 2011;65(2):480–491. doi: 10.1002/mrm.22595.

- 5. Bredies K., Valkonen T. Inverse problems with second-order total generalized variation constraints. Proceedings of the International Conference on Sampling Theory and Applications; May 2011; Singapore. p. 201.

- 6. Valkonen T., Bredies K., Knoll F. Total generalized variation in diffusion tensor imaging. SIAM Journal on Imaging Sciences. 2013;6(1):487–525. doi: 10.1137/120867172.

- 7. Papafitsoros K., Schönlieb C. B. A combined first and second order variational approach for image reconstruction. Journal of Mathematical Imaging and Vision. 2014;48(2):308–338. doi: 10.1007/s10851-013-0445-4.

- 8. Fei Y., Luo J. Undersampled MRI reconstruction comparison of TGV and TV. Proceedings of the 3rd IEEE/IET International Conference on Audio, Language and Image Processing (ICALIP '12); July 2012; Shanghai, China. pp. 657–660.

- 9. Guo W., Qin J., Yin W. A new detail-preserving regularity scheme. UCLA CAM Report 13-04; 2013.

- 10. Bredies K. Recovering piecewise smooth multichannel images by minimization of convex functionals with total generalized variation penalty. In: Efficient Algorithms for Global Optimization Methods in Computer Vision: International Dagstuhl Seminar, Dagstuhl Castle, Germany, November 20–25, 2011, Revised Selected Papers. Lecture Notes in Computer Science, Vol. 8293. Berlin, Germany: Springer; 2014. pp. 44–77.

- 11.Qu X., Hou Y., Lam F., Guo D., Zhong J., Chen Z. Magnetic resonance image reconstruction from undersampled measurements using a patch-based nonlocal operator. Medical Image Analysis. 2014;18(6):843–856. doi: 10.1016/j.media.2013.09.007. [DOI] [PubMed] [Google Scholar]

- 12.Liu R. W., Shi L., Huang W., Xu J., Yu S. C. H., Wang D. Generalized total variation-based MRI Rician denoising model with spatially adaptive regularization parameters. Magnetic Resonance Imaging. 2014;32(6):702–720. doi: 10.1016/j.mri.2014.03.004. [DOI] [PubMed] [Google Scholar]

- 13.Aharon M., Elad M., Bruckstein A. K-SVD: an algorithm for designing overcomplete dictionaries for sparse representation. IEEE Transactions on Signal Processing. 2006;54(11):4311–4322. doi: 10.1109/tsp.2006.881199. [DOI] [Google Scholar]

- 14.Rubinstein R., Peleg T., Elad M. Analysis K-SVD: a dictionary-learning algorithm for the analysis sparse model. IEEE Transactions on Signal Processing. 2013;61(3):661–677. doi: 10.1109/tsp.2012.2226445. [DOI] [Google Scholar]

- 15.Huang Y., Paisley J., Lin Q., Ding X., Fu X., Zhang X.-P. Bayesian nonparametric dictionary learning for compressed sensing MRI. IEEE Transactions on Image Processing. 2014;23(12):5007–5019. doi: 10.1109/tip.2014.2360122. [DOI] [PubMed] [Google Scholar]

- 16.Liu Q., Wang S., Yang K., Luo J., Zhu Y., Liang D. Highly Undersampled magnetic resonance image reconstruction using two-level Bregman method with dictionary updating. IEEE Transactions on Medical Imaging. 2013;32(7):1290–1301. doi: 10.1109/TMI.2013.2256464. [DOI] [PubMed] [Google Scholar]

- 17.Liu Q., Wang S., Ying L., Peng X., Zhu Y., Liang D. Adaptive dictionary learning in sparse gradient domain for image recovery. IEEE Transactions on Image Processing. 2013;22(12):4652–4663. doi: 10.1109/TIP.2013.2277798. [DOI] [PubMed] [Google Scholar]

- 18.Ravishankar S., Bresler Y. MR image reconstruction from highly undersampled k-space data by dictionary learning. IEEE Transactions on Medical Imaging. 2011;30(5):1028–1041. doi: 10.1109/TMI.2010.2090538. [DOI] [PubMed] [Google Scholar]

- 19.Boyd S., Parikh N., Chu E., Peleato B., Eckstein J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Foundations and Trends in Machine Learning. 2010;3(1):1–122. doi: 10.1561/2200000016. [DOI] [Google Scholar]

- 20.Deng W., Yin W. TR12-14. Rice University CAAM; 2012. On the global and linear convergence of the generalized alternating direction method of multipliers. [Google Scholar]

- 21.Qu X., Guo D., Ning B., et al. Undersampled MRI reconstruction with patch-based directional wavelets. Magnetic Resonance Imaging. 2012;30(7):964–977. doi: 10.1016/j.mri.2012.02.019. [DOI] [PubMed] [Google Scholar]

- 22.Ning B., Qu X., Guo D., Hu C., Chen Z. Magnetic resonance image reconstruction using trained geometric directions in 2D redundant wavelets domain and non-convex optimization. Magnetic Resonance Imaging. 2013;31(9):1611–1622. doi: 10.1016/j.mri.2013.07.010. [DOI] [PubMed] [Google Scholar]