Abstract

Background

Prior educational interventions to increase evidence-seeking by medical students have been unsuccessful.

Methods

We report two quasirandomized controlled trials to increase seeking of medical evidence by third-year medical students. In the first trial (1997–1998), we placed computers in clinical locations and taught their use in a 6-hour course. Based on negative results, we created SUMSearch™, an Internet site that automates searching for medical evidence by simultaneous meta-searching of MEDLINE and other sites. In the second trial (1999–2000), we taught SUMSearch's use in a 5½-hour course. Both courses were taught during the medicine clerkship. For each trial, we surveyed the entire third-year class at 6 months, after half of the students had taken the course (intervention group). The students who had not received the intervention were the control group. We measured self-report of search frequency and satisfaction with search quality and speed.

Results

The proportion of all students who reported searching for medical evidence at least weekly increased significantly from 19% (1997–1998) to 42% (1999–2000). The proportion of all students who were satisfied with their search results also increased significantly between study years. However, in neither study year did the interventions increase searching or satisfaction with results. Satisfaction with the speed of searching was 27% in 1999–2000; it did not increase between study years and was not changed by the interventions.

Conclusion

None of our interventions affected searching habits. Even with automated searching, students report low satisfaction with search speed. We are concerned that students using current strategies for seeking medical evidence will be less likely to seek and appraise original studies when they enter medical practice and have less time.

Background

Unfortunately, most clinicians either do not seek evidence or seek it ineffectively [1]. Practicing clinicians rarely search MEDLINE [2], and when they do, they usually find only half of the germane research that a medical librarian finds [3]. Even librarians can find evidence to answer only half of the questions posed [4]. As a result, many clinicians are "practicing with less than has been proved" [5].

To encourage evidence-seeking, students have been taught critical appraisal of medical research. Regardless of the instructional format (such as "Journal Club"), research has demonstrated that this instruction does not increase medical students' actual use of literature [6,7].

We hypothesized that we could increase students' frequency of searching for evidence by focusing on how to find evidence, as well as how to appraise it. We conducted two controlled trials to test this hypothesis. In the first trial, we taught students in the intervention group how to search for evidence as well as how to appraise evidence. Prior to the second trial, we created an Internet site, SUMSearch (http://SUMSearch.UTHSCSA.edu) [8], to automate finding evidence. We taught how to appraise evidence and how to use SUMSearch to students in the intervention group. Results were measured with questionnaires at the end of the study periods.

Materials and methods

Subjects

Subjects were third-year medical students at The University of Texas Health Science Center at San Antonio. During the medicine clerkship, we taught the students how to find and appraise medical evidence. We performed two quasirandomized controlled studies (1997–1998 and 1999–2000). For each trial, we surveyed the entire class of approximately 200 third-year medical students at 6 months, after approximately half of the class had taken the course (intervention group). The remaining students comprised the control group.

Group assignment was quasirandomized. At our institution, students place themselves into 16 groups of 10 to 12 members. The Dean's Office combines these 16 groups into 8 larger groups to achieve similar distributions of gender, ethnicity, and academic performance, and then randomly assigns the groups to a sequence of rotations: a student from each group draws from a bowl a number that indicates the group's order of clerkship rotations.
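The bowl draw described above amounts to a random permutation of rotation sequences across groups. The following is a minimal Python sketch of that lottery, assuming 8 groups and, hypothetically, that sequences 1 to 4 place the medicine clerkship in the first half of the year; the source does not state which sequences do, and the group labels are illustrative.

```python
import random

# Hypothetical labels for the 8 combined groups formed by the Dean's Office.
GROUPS = [f"group-{i}" for i in range(1, 9)]

# Hypothetical assumption: rotation sequences 1-4 place the medicine clerkship
# in the first half of the year, so those groups have taken the course by the
# 6-month survey (intervention); the remaining groups form the control group.
FIRST_HALF_SEQUENCES = {1, 2, 3, 4}


def draw_rotation_order(groups, seed=None):
    """Simulate the bowl draw: each group draws one sequence number without
    replacement, i.e. a random permutation of 1..len(groups)."""
    rng = random.Random(seed)
    numbers = list(range(1, len(groups) + 1))
    rng.shuffle(numbers)
    return dict(zip(groups, numbers))


order = draw_rotation_order(GROUPS, seed=1)
intervention = sorted(g for g, n in order.items() if n in FIRST_HALF_SEQUENCES)
control = sorted(g for g, n in order.items() if n not in FIRST_HALF_SEQUENCES)
print("intervention:", intervention)
print("control:", control)
```

Because every group draws exactly one number, the split between intervention and control is fixed at four groups each under this assumption; only which groups land in each arm is random.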

Interventions

1997–1998 Trial

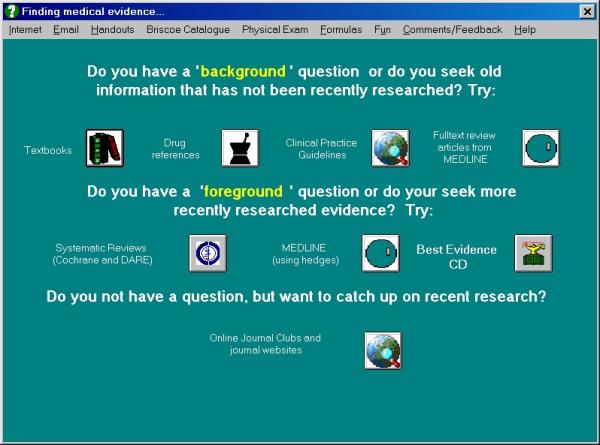

We made four computers available to all students in key clinical locations. Each computer provided the following software compatible with Microsoft Windows®: Scientific American Textbook of Medicine®, the Cochrane Library®, and Best Evidence®. MEDLINE was accessible with the OVID® Windows® Client, which was customized with search filters [9]. Internet access also allowed use of the Database of Abstracts of Reviews of Effectiveness (DARE), practice guidelines, online journals, and journal clubs. The computers had a customized interface (Figure 1) that displayed and organized these resources. The interface, which could not be removed, offered context-sensitive online help (Additional File 1: "lifelong.hlp") and tracked the usage of each resource. We used the usage logs to guide enhancements to the interface. Because we prioritized accessibility and rapid log-in, we did not use usernames and passwords, so usage could not be tracked for individual students. We taught students in the intervention group how to find and appraise medical evidence using the resources on these computers (Table 1).

Figure 1.

Computer interface used during the 1997–1998 trial. The interface could not be removed from display, offered context-sensitive help and tracked the usage of each resource.

Table 1.

Comparison of Content and Structure of Study Interventions.

| Session | Length | Content | 1997–98 | 1999–2000 |

| 1 | 90 min | Levels of evidence for journal articles. | X | X |

| Role of systematic reviews and meta-analyses. | X | X | ||

| Recognizing study type. | X | X | ||

| Markers of quality on Internet. | X | X | ||

| Using scope of question (broad vs. specific) to select resource for search. | X | X | ||

| 2 | 90 min | Understanding and using the MeSH vocabulary, including exploding terms. | X | X |

| Textword searching and truncating words. | ||||

| MeSH terms vs. textwords. | ||||

| Using limits (AIM, English, human). | ||||

| Using filters or hedges with OVID®. | X | |||

| 3 | 90 min | Using filters or hedges with OVID®. | X | |

| Using filters with PubMed. | X | |||

| How to retrieve full-text review articles. | X | |||

| Search algorithm. | X | |||

| How to formulate questions. | X | |||

| How to format queries for electronic searching (using SUMSearch™ as example). | X | |||

| DARE for systematic reviews. | X | |||

| National Guidelines Clearinghouse. | X | |||

| 4 | 60 min | DARE for systematic reviews. | X | |

| Specialty organization guidelines. | X | |||

| Scientific American Textbook of Medicine. | X | |||

| Online Journal Clubs (ACP Journal Club and Journal Watch) and Journal sites. | X | |||

| Review of searches performed by students for assigned talk. | X | |||

1999–2000 Trial

We made several changes in our resources after the first study. Because of developments in the Internet, we moved access to all resources to the Internet via the public address http://Clinical.UTHSCSA.edu. This allowed access from home and hospital networks and simplified maintenance. We replaced the Windows® interface on our clinical computers with a customized Internet browser whose home page was this address. We had observed during the first trial that searching for evidence, even after instruction, was still very difficult for students. Because searching for medical evidence can follow an algorithm, we created SUMSearch (http://SUMSearch.UTHSCSA.edu), an Internet site that automates searching for medical evidence [8]. SUMSearch searches for medical evidence at high-quality Internet sites, including DARE, the National Guideline Clearinghouse, and PubMed. SUMSearch searches PubMed with four different strategies: three find systematic reviews, practice guidelines, and traditional review articles, respectively, and the fourth finds original research using validated search filters [8]. In addition, SUMSearch automatically reformats and re-executes the search for original research multiple times until the best possible results are found.
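To make the idea of automated, multi-strategy searching concrete, here is a minimal Python sketch. It is not SUMSearch's actual code or filters: the pairing of each strategy with a PubMed publication-type tag (`[pt]`), the narrow-to-broad "ladder" of queries, and the `min_hits` threshold are all illustrative assumptions. The hit counter is injected as a function; a real implementation might call the NCBI E-utilities ESearch service, whose response includes a result count, but here it is stubbed so the example runs offline.

```python
from typing import Callable

# Illustrative PubMed publication-type tags for the four searches. The tag
# syntax is real PubMed syntax; these pairings are assumptions, not SUMSearch's filters.
STRATEGY_TAGS = {
    "systematic reviews": "meta-analysis[pt]",
    "practice guidelines": "practice guideline[pt]",
    "review articles": "review[pt]",
    "original research": "randomized controlled trial[pt]",
}


def build_queries(terms: str) -> dict[str, str]:
    """Combine the user's terms with each strategy's filter."""
    return {name: f"({terms}) AND {tag}" for name, tag in STRATEGY_TAGS.items()}


def adaptive_search(terms: str, count_hits: Callable[[str], int],
                    min_hits: int = 5) -> tuple[str, list[tuple[str, int]]]:
    """Hypothetical re-execution loop for original research: start with the
    narrowest filter and broaden until at least `min_hits` citations are found.
    Returns the chosen query and the (query, hits) pairs tried."""
    ladder = [
        f"({terms}) AND randomized controlled trial[pt]",
        f"({terms}) AND clinical trial[pt]",
        f"({terms})",
    ]
    tried = []
    for query in ladder:
        hits = count_hits(query)
        tried.append((query, hits))
        if hits >= min_hits:
            return query, tried
    return ladder[-1], tried  # fall back to the broadest query


# Usage with a stubbed hit counter (no network access); the counts are made up.
fake_counts = {
    "(asthma AND montelukast) AND randomized controlled trial[pt]": 2,
    "(asthma AND montelukast) AND clinical trial[pt]": 12,
}
query, tried = adaptive_search("asthma AND montelukast",
                               lambda q: fake_counts.get(q, 100))
print(query)
```

Injecting `count_hits` keeps the search logic separate from any particular database interface, so the same loop could be tested offline and later pointed at a live service.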

Based on feedback from students between studies during the 1998–1999 academic year, we also changed our educational intervention. We reduced instruction on each individual resource, but we increased instruction on SUMSearch (Table 1).

At the same time as our second trial, the Department of Family Medicine was also teaching an evidence-based medicine course in its clerkship. Thus, the students who had been on the Family Medicine rotation during the first half of the year, approximately half of the class, received that intervention. The Family Medicine intervention consisted of three 90-minute sessions that taught how to frame clinical questions, search for evidence, and critically appraise evidence. A comparison of the two courses indicated that the Internal Medicine course focused on how to find evidence, while the Family Medicine course focused on how to appraise evidence.

Outcomes

The principal outcomes were self-reported search frequency, satisfaction with search results, and satisfaction with the speed of searching. We asked about these principal outcomes identically in both trials. In the second trial, we also asked whether literature searches had influenced patient care. In both trials, we assessed retention of course content, although we do not report these results here.

For both trials, surveys were administered at 6 months, after half of the students in the third-year class had taken the course. The median time between completion of the course and administration of the questionnaires was 3 months for students in the intervention groups.

Analyses

Outcomes measuring search frequency and influence from searching were dichotomized as "at least once a week" versus "less often or no answer". Outcomes measuring satisfaction were dichotomized as "satisfied with more than half of searches" versus "less often or no answer".
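The dichotomization rule above can be sketched in a few lines of Python. The answer categories shown are hypothetical, since the questionnaire's exact response options are not reported; the key point the sketch illustrates is that a missing answer (`None`) is counted with "less often", not dropped.

```python
# Hypothetical answer categories; the questionnaire's exact wording is not given.
AT_LEAST_WEEKLY = {"daily", "several times a week", "once a week"}
MORE_THAN_HALF = {"all searches", "more than half of searches"}


def dichotomize(answer, positive_categories):
    """Return 1 for a positive answer, 0 for 'less often' or no answer (None)."""
    return 1 if answer in positive_categories else 0


responses = ["daily", "once a month", None, "once a week", "never"]
frequent = [dichotomize(a, AT_LEAST_WEEKLY) for a in responses]
print(frequent)
```

Coding non-answers as negative makes the reported proportions conservative: a student who skipped the item is treated as an infrequent searcher.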

Questionnaire results were compared between the intervention and control groups. We tested significance with the two-tailed chi-square test. In the second trial, we performed a Mantel-Haenszel analysis to quantify the independent contributions of the Internal Medicine and Family Medicine courses to the outcomes [10].
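As an illustration of these two analyses, the Python sketch below computes a 2×2 Pearson chi-square (assuming no continuity correction, which the text does not specify) and a Mantel-Haenszel summary odds ratio. The chi-square is applied to the 1997–1998 search-frequency counts from Table 2; the Mantel-Haenszel strata are hypothetical, because the stratified counts are not reported. This is a sketch, not the spreadsheet [10] used in the study.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square without continuity correction for the table
    [[a, b], [c, d]] (rows: group; columns: outcome yes / no).
    Returns (chi2, p) with p from the chi-square distribution with 1 df."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(chi2 / 2))  # P(X > chi2) for 1 df
    return chi2, p


def mantel_haenszel_or(strata):
    """Mantel-Haenszel summary odds ratio over 2x2 strata given as (a, b, c, d)."""
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in strata)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in strata)
    return numerator / denominator


# 1997-1998 search frequency from Table 2: intervention 12 of 63, control 15 of 96.
chi2, p = chi_square_2x2(12, 63 - 12, 15, 96 - 15)
print(f"chi2 = {chi2:.2f}, p = {p:.2f}")

# Hypothetical strata (e.g., with vs. without the Family Medicine course).
strata = [(10, 20, 5, 25), (8, 12, 6, 14)]
print(f"MH odds ratio = {mantel_haenszel_or(strata):.2f}")
```

The 1-df p-value uses the identity that a chi-square variable with one degree of freedom is the square of a standard normal, so its upper tail equals `erfc(sqrt(chi2 / 2))`.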

Results

1997–1998 study

Of 207 third-year medical students in the first trial, 167 (81%) students completed the year-end questionnaire: 63 (62%) of 102 students who had attended the course and 87 (83%) of 105 who had not. We excluded 17 incomplete questionnaires.

Table 2 summarizes their self-reported behaviors. The intervention and control groups did not differ significantly in self-report of frequency of literature searches (20% and 17%, respectively), satisfaction with quality of results (32% and 28%, respectively), and satisfaction with speed of searching (24% and 17%, respectively).

Table 2.

Self-report of searching behavior

Each cell gives the percentage of students (95% CI); the number of students follows the semicolon.

| Group | Frequency | Satisfaction | Speed | Influenced |
|---|---|---|---|---|
| 1997–1998 study | | | | |
| Overall (n=159) | 18 (12–24); 30 | 30 (23–37); 50 | 19 (13–25); 31 | NA |
| Intervention (n=63) | 20 (10–31); 12 | 32 (20–43); 20 | 24 (13–25); 15 | NA |
| Control (n=96) | 17 (10–26); 15 | 28 (19–37); 27 | 17 (9–24); 16 | NA |
| 1999–2000 study | | | | |
| Overall (n=157) | 44* (36–51); 66 | 47* (39–55); 74 | 27 (20–34); 42 | 15 (10–21); 24 |
| Intervention (n=84) | 38 (28–48); 34 | 40 (30–50); 36 | 22 (14–31); 20 | 14 (8–22); 13 |
| Control (n=67) | 48 (35–59); 32 | 57 (44–68); 38 | 33 (22–44); 22 | 16 (8–26); 11 |

*Between the two studies, search frequency and satisfaction with search results increased significantly (p<0.05). No within-study comparisons were significant.
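A percentage with its 95% CI, as reported in each cell of Table 2, can be sketched with the Wald (normal-approximation) interval. The paper does not state which interval method it used, so this sketch may differ from the published bounds by a point or so. The example uses the 1999–2000 control group's search frequency (32 of 67 students).

```python
import math


def wald_ci(successes, n, z=1.96):
    """Proportion with a Wald (normal-approximation) 95% confidence interval,
    clipped to [0, 1]."""
    p = successes / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


# 1999-2000 control group, searching at least weekly: 32 of 67 students.
p, low, high = wald_ci(32, 67)
print(f"{100 * p:.0f}% ({100 * low:.0f}-{100 * high:.0f})")
```

For small groups or proportions near 0% or 100%, an exact or Wilson interval would be a more robust choice than the Wald interval shown here.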

1999–2000 study

Of 205 third-year medical students in the second trial, 157 (77%) completed the questionnaire: 90 (87%) of 104 students who had completed their medicine clerkship and 67 (66%) of 101 students who had not. Of the students with missing questionnaires, 24 were on one rotation that we could not access during the survey period. These 24 students had been randomly assigned to a sequence of rotations that placed both their Internal Medicine and Family Medicine rotations in the latter part of the year; thus, the control group contains more missing data.

As in the first study, there were no significant differences in self-reported habits between the two groups. We detected a nonsignificant trend for control group students to search more often and to be more satisfied with the quality and speed of their searches.

Lastly, we stratified the analysis by whether students had received both courses, one course, or no course. No combined impact was detected on frequency of searching or satisfaction with searching. The only significant finding was self-report of being influenced by a literature search. Mantel-Haenszel analysis confirmed that this effect was attributable to the Family Medicine course, even though the Family Medicine course was not associated with increased search frequency.

Comparing the two studies

Among all students, the self-report of frequent searching and satisfaction with results significantly increased from the 1997–1998 to the 1999–2000 study (Table 2). Searching once a week or more increased from 18% of all students in the first study to 44% of all students in the second study. Students were more satisfied with their results (47%) than with speed (27%) of their searches. Satisfaction with speed of searches did not increase.

Power analysis

In the 1997–1998 study, the intervention group was smaller (60 students); in the 1999–2000 study, the control group was smaller (67 students). Each study had approximately an 80% chance of detecting a 20% absolute difference between the intervention and control groups [11].
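The power statement above can be checked approximately with the standard normal-approximation formula for comparing two proportions. This Python sketch is not the table-based method of reference [11]; the scenario is an assumption: control prevalence of 17% (the 1997–1998 control value in Table 2), an intervention prevalence 20 percentage points higher, 96 control students, and 60 intervention students.

```python
import math
from statistics import NormalDist


def power_two_proportions(p_control, p_intervention, n_control, n_intervention,
                          alpha=0.05):
    """Approximate power of a two-sided test comparing two proportions
    (normal approximation: pooled SE under H0, unpooled SE under H1)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    p_bar = (n_control * p_control + n_intervention * p_intervention) / (
        n_control + n_intervention)
    se0 = math.sqrt(p_bar * (1 - p_bar) * (1 / n_control + 1 / n_intervention))
    se1 = math.sqrt(p_control * (1 - p_control) / n_control
                    + p_intervention * (1 - p_intervention) / n_intervention)
    diff = abs(p_intervention - p_control)
    return z.cdf((diff - z_alpha * se0) / se1)


# Assumed 1997-1998 scenario: control 17%, intervention 37%, n = 96 and 60.
print(f"{power_two_proportions(0.17, 0.37, 96, 60):.2f}")
```

Under these assumptions the result is close to 0.8, consistent with the approximately 80% stated in the text; a different baseline prevalence would shift it somewhat.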

There were 60 non-responders in the 1997–1998 study. If all 42 non-responders in the intervention group, and none of the 18 non-responders in the control group, had actually become frequent searchers, the prevalence of frequent searchers would have been 53% and 14%, respectively. This result, and a similar reanalysis of the 1999–2000 data, would have been statistically significant.
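The worst-case reanalysis above can be reproduced with a short Python sketch. It uses the enrollment figures from the 1997–1998 results (102 intervention and 105 control students), the 42 and 18 non-responders, and the 12 and 15 frequent searchers among responders. The chi-square is assumed to be uncorrected Pearson, as in a standard 2×2 test.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) and its 1-df p-value."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    return chi2, math.erfc(math.sqrt(chi2 / 2))


# 1997-1998: enrolled students, non-responders, and frequent searchers among
# responders, for the intervention (i) and control (c) groups.
enrolled_i, nonresp_i, frequent_i = 102, 42, 12
enrolled_c, nonresp_c, frequent_c = 105, 18, 15

# Worst case for the null result: every intervention non-responder became a
# frequent searcher, and no control non-responder did.
yes_i = frequent_i + nonresp_i
yes_c = frequent_c
prev_i, prev_c = yes_i / enrolled_i, yes_c / enrolled_c

chi2, p = chi_square_2x2(yes_i, enrolled_i - yes_i, yes_c, enrolled_c - yes_c)
print(f"{100 * prev_i:.0f}% vs {100 * prev_c:.0f}%, p = {p:.1e}")
```

This reproduces the 53% and 14% figures; the extreme assumption is what makes the difference significant, which is why the Discussion weighs how plausible it is.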

Discussion

Neither of the two Internal Medicine courses nor the 1999–2000 Internal Medicine course in conjunction with the Family Medicine course increased the self-report of search frequency. Despite teaching both searching for and appraising evidence, our results are consistent with the negative impact of "journal clubs"[6,7]. Possibly, students lack time to search for evidence. In both study years, students were less satisfied with the speed of their searches than the results of their searches.

Although all students reported more frequent searching and greater satisfaction with search results in 1999–2000, our courses had no impact. In addition, it is doubtful that the SUMSearch™ Internet site, which was taught only to the intervention students but was available to all students, was responsible. Search frequency increased more in the control groups than in the intervention groups (31 and 18 percentage points, respectively), and in 1999–2000 the control group reported more frequent searching than the intervention group (48% and 38%, respectively). Although search frequency increased, the quality of students' searches is unknown. Other studies have reported that novice searchers are satisfied with searches of uncertain quality [3,12]. Moreover, clinicians were satisfied with their searches even though librarians who later replicated the searches found twice as many relevant studies and half as many irrelevant studies [3].

Our power analysis shows that we might have missed a positive result if all non-responders had answered in a way that favored an intervention effect. However, we believe that non-response more likely indicated reluctance to report infrequent literature searching. In the second study, logistical reasons prevented us from surveying the 24 students on one rotation in the control group. In the first study, all students were surveyed in one large room at the same time; however, fewer students in the intervention group completed their questionnaire. We believe that students who had adopted our teachings and become frequent searchers after our course were unlikely to sit in the room and not complete the questionnaire. Therefore, we believe that better response rates would not have revealed a positive effect but rather would have made the results of the first study even more negative.

Published recommendations vary on how best to search for evidence in order to practice evidence-based medicine. First, some authors recommend starting a search with "Best Evidence" [13]. We taught the use of Best Evidence during the first trial (Table 1), but not during the second trial, because we had informally observed that Best Evidence less often provided answers. Second, others encourage searching the Cochrane Library. We discontinued teaching this strategy before our studies because the Cochrane Library addresses only selected therapeutic questions. Instead, we taught the use of the Internet site of the Database of Abstracts of Reviews of Effectiveness (DARE). DARE contains succinct summaries of Cochrane reviews and of high-quality systematic reviews from other sources that address diagnostic and therapeutic questions. We also recommended searching MEDLINE for additional systematic reviews and the National Guideline Clearinghouse for selected practice guidelines based on systematic literature review (e.g., those of the United States Preventive Services Task Force and the Canadian Task Force on Preventive Health Care). Third, some experts advocate not searching MEDLINE because it is difficult to search [13]. Because very recently published original studies may not yet be included in systematic reviews or secondary publications, we taught, and automated with SUMSearch, the use of PubMed to search MEDLINE and PreMEDLINE for recent studies. Neither our intervention, which taught and automated one strategy [14], nor the Family Medicine intervention, which recommended another strategy using Best Evidence and the Cochrane Library [13], influenced search frequency or satisfaction.

We propose two explanations for why our interventions failed. First, students lacked sufficient time to seek evidence. Studies suggest a wide gap between the 2 minutes clinicians typically allot to seeking evidence [2] and the time needed to search MEDLINE [4,12,13]. Indeed, "some have likened MEDLINE searching to attempting to drink water from a fire hose" [15]. Although MEDLINE is certainly more difficult to search than the other resources recommended for evidence-based medicine, those resources, in aggregate, probably require excessive time if they are searched in sequence as recommended. Even though SUMSearch automated searching of MEDLINE and other resources for evidence-based medicine, students were generally dissatisfied with the speed of their searches (Table 2).

Second, our search strategy, like other existing search strategies, requires clinicians to spend time comparing and contrasting individual documents. All strategies recommend starting a search by seeking individual documents, whether the document is a study, a secondary publication of a single study, or a systematic review. Although efficient when a recent, well-done systematic review captures all the relevant studies, this approach is burdensome when the results of trials or systematic reviews are contradictory or when original studies have been published since the last systematic review. Indeed, a single study may not adequately address a clinical question [16,17].

Admittedly, our course might have increased students' search frequency if the instruction had been integrated across all the clerkships and linked to students' clinical duties. However, even with such improvements, we might only have succeeded in teaching a technique that is too cumbersome to use during clinical care; that is, the technique may require more time than students will have when they enter practice.

Further research and development of more efficient strategies for seeking medical evidence are needed. Haynes recently asked, "Is it time to change how you seek best evidence?" and proposed that we first seek evidence in resources such as UpToDate, Scientific American, or Clinical Evidence [18]. These "systematic textbooks" contain summaries of medical evidence, are reliably updated, and are well referenced, with links to the abstracts of references. Our negative study supports this idea, because such resources may reduce the need to search for and appraise individual studies and systematic reviews. Thus, one proposed strategy is to search a systematic textbook first and then use an automated meta-search engine to seek evidence published since the textbook's most recent literature search. However, research is needed to assess the validity of the content of systematic textbooks and to quantify how up to date these resources are.
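The second step of the proposed strategy reduces to a date filter on meta-search results. The following minimal Python sketch assumes that the systematic textbook reports the date of its last literature search and that each meta-search result carries a publication date. The `Citation` type, the records, and the dates are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Citation:
    title: str
    published: date


def published_since(citations, textbook_search_date):
    """Keep only evidence published after the systematic textbook's last
    literature search (the textbook is assumed to cover anything earlier)."""
    return [c for c in citations if c.published > textbook_search_date]


# Hypothetical meta-search results and textbook search date.
results = [
    Citation("Trial A", date(1999, 3, 1)),
    Citation("Systematic review B", date(2000, 11, 15)),
    Citation("Trial C", date(2001, 2, 10)),
]
new_evidence = published_since(results, textbook_search_date=date(2000, 6, 30))
print([c.title for c in new_evidence])
```

The appeal of this design is that the clinician appraises only the few items newer than the textbook rather than the whole literature, which addresses the time constraint discussed above.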

Conclusion

Exposure to two courses within one academic year and the use of automated searching did not increase students' self-report of frequency of seeking medical evidence. While we recognize the importance of students' understanding how medical evidence is found and appraised, we are concerned that students lack the time to search for original research and will have even less time when they enter medical practice. We encourage innovations in evidence-based medicine that will reduce the time needed to search for medical evidence.

The two trials in this report were reported separately at the Association of American Medical Colleges Group on Educational Affairs/Society of Directors of Research in Medical Education, San Antonio, Texas, 1/31/2000; and at the Clerkship Directors in Internal Medicine Annual Meeting, Denver, Colorado, 9/98.

Competing Interests

Dr. Badgett is the creator and maintainer of SUMSearch.

Dr. Badgett is compensated by UpToDate to coordinate peer review of UpToDate.

Pre-publication history

The pre-publication history for this paper can be accessed here:

http://www.biomedcentral.com/content/backmatter/1472-6920-1-3-b1.pdf

Supplementary Material

1. lifelong.hlp (855 KB) This file provides the online help and contains the course content for the 1997–1998 study. This file can be opened to its table of contents. In addition, the user interface in Figure 1 opens the help file at the content appropriate to the student's location in the interface. This is a Windows help file and can be viewed on any computer with a 32-bit Windows operating system (Windows 95 or more recent).

2. lifelong-BMC.rtf (82 KB) This file is a word processor version of the Windows Help file. This version can be viewed on almost all computers but lacks the features of the Windows Help file.

Acknowledgments

We acknowledge the assistance of the clerkship directors of The University of Texas Health Science Center at San Antonio and the support of the Briscoe Medical Library.

Contributor Information

Robert G Badgett, Email: Badgett@UTHSCSA.edu.

Judy L Paukert, Email: Paukert@UTHSCSA.edu.

Linda S Levy, Email: Levy@UTHSCSA.edu.

References

1. Hersh W, Hickam D. The use of a multi-application computer workstation in a clinical setting. Bull Med Libr Assoc. 1994;82:382–389.

2. Ely JW, Osheroff JA, Ebell MH, Bergus GR, Levy BT, Chambliss ML, Evans ER. Analysis of questions asked by family doctors regarding patient care. BMJ. 1999;319:358–361. doi: 10.1136/bmj.319.7206.358.

3. Haynes RB, McKibbon KA, Walker CJ, Ryan N, Fitzgerald D, Ramsden MF. Online access to MEDLINE in clinical settings. A study of use and usefulness. Ann Intern Med. 1990;112:78–84. doi: 10.7326/0003-4819-112-1-78.

4. Gorman PN, Ash J, Wykoff L. Can primary care physicians' questions be answered using the medical journal literature? Bull Med Libr Assoc. 1994;82:140–146.

5. Mulrow CD. Rationale for systematic reviews. BMJ. 1994;309:597–599. doi: 10.1136/bmj.309.6954.597.

6. Audet N, Gagnon R, Ladouceur R, Marcil M. [How effective is the teaching of critical analysis of scientific publications? Review of studies and their methodological quality]. CMAJ. 1993;148:945–952.

7. Landry FJ, Pangaro L, Kroenke K, Lucey C, Herbers J. A controlled trial of a seminar to improve medical student attitudes toward, knowledge about, and use of the medical literature. J Gen Intern Med. 1994;9:436–439. doi: 10.1007/BF02599058.

8. Booth A, O'Rourke A. Resource Corner. ACP J Club. 2000;132:A15.

9. Haynes RB, et al. Developing optimal search strategies for detecting clinically sound studies in MEDLINE. J Am Med Inform Assoc. 1994;1:447–458. doi: 10.1136/jamia.1994.95153434.

10. Dickman P. strat2x2.xls. 1996. http://www.pauldickman.com/teaching/excel.html

11. Hulley SB, Cummings SR. Designing Clinical Research. Baltimore: Williams & Wilkins; 1988. pp. 139–150.

12. Wildemuth BM, Moore ME. End-user search behaviors and their relationship to search effectiveness. Bull Med Libr Assoc. 1995;83:294–304.

13. Hunt DL, Jaeschke R, McKibbon KA. Users' guides to the medical literature: XXI. Using electronic health information resources in evidence-based practice. Evidence-Based Medicine Working Group. JAMA. 2000;283:1875–1879. doi: 10.1001/jama.283.14.1875.

14. Williams BC, Van Harrison R. Motivating Physicians for Self-Education. SGIM Forum. 2000. p. 23. http://www.sgim.org/Publicweb/forum/issues/forum0007.htm

15. Westberg EE, Miller RA. The Basis for Using the Internet to Support the Information Needs of Primary Care. J Am Med Inform Assoc. 1999;6:6–25. doi: 10.1136/jamia.1999.0060006.

16. Davidoff F, Case K, Fried PW. Evidence-Based Medicine: Why All The Fuss? Ann Intern Med. 1995;122:727. doi: 10.7326/0003-4819-122-9-199505010-00012.

17. Williams BC. How Good Is the Evidence for Evidence-Based Medicine? Heretical Thoughts of a True Believer. SGIM Forum. 1999;22:7–8. http://www.sgim.org/Publicweb/forum/issues/forum9903.html

18. Haynes RB. Of studies, syntheses, synopses, and systems: the 4S evolution of services for finding current best evidence. ACP J Club. 2001;134:A11–3.
