Summary

Introduction

While studies have shown that usability evaluation could uncover many design problems of health information systems, the usability of health information systems in developing countries using their native language is poorly studied. The objective of this study was to evaluate the usability of a nationwide inpatient information system used in many academic hospitals in Iran.

Material and Methods

Three trained usability evaluators independently evaluated the system using Nielsen’s 10 usability heuristics. The evaluators combined the identified problems into a single list and independently rated the severity of each problem. We statistically compared the number and severity of problems identified by the evaluator with HIS experience and by the evaluators without it.

Results

A total of 158 usability problems were identified. After removing duplicates, 99 unique problems remained. The highest mismatch with usability principles was related to the “Consistency and standards” heuristic (25%) and the lowest to “Flexibility and efficiency of use” (4%). The average severity of problems ranged from 2.4 (major problem) to 3.3 (catastrophe problem). The evaluator with HIS experience identified significantly more problems and assigned higher severity ratings (p<0.02).

Discussion

Heuristic Evaluation identified a high number of usability problems in an inpatient information system that is widely used in many academic hospitals. If these problems remain unsolved, they may waste users’ and patients’ time, increase errors, and ultimately threaten patient safety. Many of them can be fixed with simple redesign solutions such as clear labels and better layouts. Further studies are needed to confirm the findings concerning the effect of evaluator experience on the results of Heuristic Evaluation.

Keywords: Hospital information systems, health information systems, user-computer interface, inpatients, usability, Heuristic Evaluation

Introduction

Several health information systems in medicine have been designed to prevent or reduce medical errors, facilitate and accelerate health care activities, prevent duplications, reduce costs and eventually improve patient safety. One of these is the Hospital Information System (HIS), a computerized system used as a set of electronic tools for managing patient information [1, 2]. An HIS can share health information among users by linking computers in different hospital wards. HISs have been implemented in Iran for several years. More than 20 private and governmental companies already develop HISs in Iran. Each of these companies, depending on its experience and knowledge, defines specific features and functionalities for its systems. Most of the developed HISs contain various subsystems including inpatient, outpatient, emergency, pharmacy, accounting, radiology, laboratory and medical records [3].

One of the most important subsystems of an HIS is the inpatient admission system. The data of this system are shared with other hospital information systems, and all hospital wards use these data to fulfill their clinical, administrative, financial, and related communication tasks. This requires the data to be completed accurately and precisely. In addition, the high number of inpatient admissions to health care facilities, especially academic hospitals, has increased the workload of the users of this system. Therefore, the system should be usable to such an extent that its users are able to perform admission activities easily, quickly and pleasantly. Ease of use and a good user experience can reduce work errors and the consequent harm to patients [4].

Many studies have evaluated the usability of health information systems in developed countries, but the usability of health information systems in developing countries such as Iran has not been sufficiently studied. Specific design characteristics such as the use of domestic layouts, logos and languages can affect the usability of information systems. A report by the Iranian ministry of health entitled “History of electronic health records in Iran” [5] suggested that evaluation is an essential requirement for health information systems. Since the distribution of this report, several evaluation studies have been performed on national health information systems [3]. Most of these studies used questionnaires and checklists to study different aspects of the systems, such as the quality of collected information, the impact of the systems on hospital performance and on the quality of health care services, and users’ attitudes regarding the advantages and disadvantages of the systems. A review of these studies revealed that although a few have addressed the usability of the systems, very few have used one of the common usability evaluation methods [3]. Usability focuses on system features such as ease of learning and memorability, efficiency, error prevention, and user satisfaction [6].

Different usability evaluation methods are available, which can be used by usability experts with or without the participation of system users [7–9]. Heuristic Evaluation (HE) is an easy, efficient, and low-cost method that can be carried out by a small number of evaluators without involving users, and that identifies a relatively high number of usability problems in a limited time [10, 11]. This method can be used by both designers and usability experts to evaluate prototypes and user interface elements such as dialog boxes, menus, and navigators [12]. It was first introduced by Jakob Nielsen [13]. Nielsen’s method uses a set of principles to systematically identify usability problems and to determine their degree of severity [6, 8].

Studies have also shown that this is an effective and cost-efficient method for evaluating clinical information systems [14, 15]. With this method, 3 to 5 evaluators can identify most of the usability problems [16]. Evaluators record any violation of the ten principles in the system’s user interface design as a usability problem. Violations are problems that can create obstacles to effective interaction between users and the user interface of a system. Finally, the results can be used to optimize the user interface [6].

Many studies have successfully applied Heuristic Evaluation to the usability evaluation of healthcare information systems. For example, in two studies evaluating a dental practice management system [17] and a computer-based patient education program [18], Heuristic Evaluation identified high numbers of usability problems of high severity.

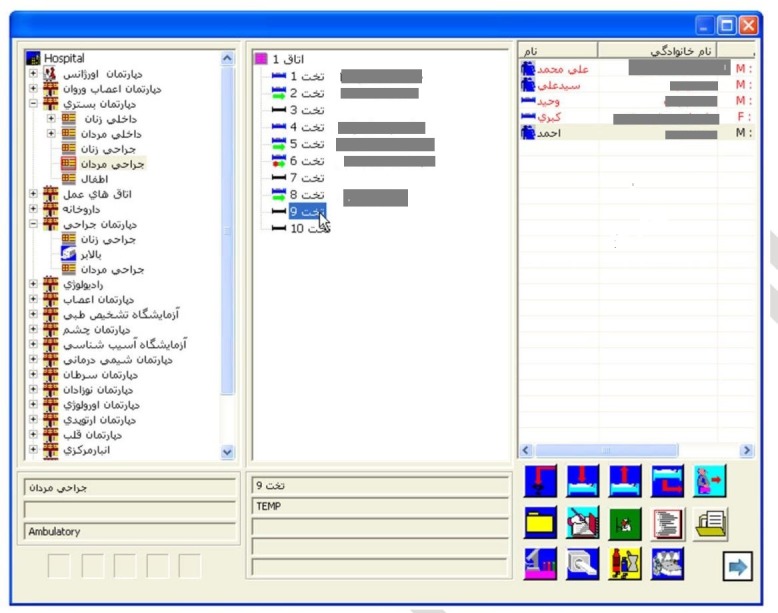

Mashhad University of Medical Sciences (MUMS) is among the first universities to have employed an HIS in Iranian hospitals. This HIS is widely used in many academic hospitals in Iran, including 22 different clinical inpatient departments (► Figure 1). Preliminary studies pointed to the problems users encounter while using this system [19, 20]. So far, a few studies have been conducted to identify usability problems of its subsystems [20, 21], but not of the inpatient information subsystem. Given the critical importance of inpatient admission activities, in this study we evaluated the usability of an inpatient information system integrated into the HIS using Heuristic Evaluation.

Fig. 1.

Poorly designed and unlabelled icons at the bottom left corner of the first page of the MUMS inpatient system. The function of these icons is not clear from their shape or alignment on the page. Users must engage in time-consuming trial and error to work out the function of each icon. It is also hard to recognize or remember the function of the icons the next time they are needed for an action.

Material and Methods

This study was conducted on an inpatient information system embedded into an HIS used in 28 hospitals affiliated with MUMS in Khorasan and in many hospitals in twelve other provinces in Iran. Routinely, about 4,000 daily active users interact with this system in MUMS and more than 23,000 throughout the country. Through the system, a patient’s diagnosis and status are recorded, physicians’ orders are sent to the related departments, and nurses receive the orders and enter their notes and observations in response to them. All functionalities and features of the system evaluated by HE are identical in all hospitals and other healthcare centers. A screenshot of the system user interface (UI) is shown in ► Figure 1.

Three usability evaluators, one of whom had four years of working experience with different modules of the studied HIS, evaluated the usability of the inpatient information system using Nielsen’s ten principles [18] (the principles and their descriptions are shown in ► Table 1). Since the Heuristic Evaluation method focuses on evaluating UI design without involving users, it can be done in a laboratory. Therefore, this study was carried out in a computer laboratory at the paramedical sciences faculty of MUMS. The system is a subsystem of an HIS widely used in many academic hospitals in Iran, with thousands of daily active users. Evaluators received the same theoretical and practical Heuristic Evaluation training before the evaluation. To ensure that all evaluators were sufficiently acquainted with the system and had the same understanding of the principles, the evaluation team held a couple of meetings.

Table 1.

Nielsen’s ten Heuristic Evaluation principles [12]

| No. | Heuristic Evaluation principle | Description |
|---|---|---|
| 1 | Visibility of system status | The system should always keep users informed about what is going on, through appropriate feedback within a reasonable time |
| 2 | Match between system and the real world | The system should speak the users’ language rather than with system-oriented terms. Follow real-world conventions, making information appear in a natural and logical order |
| 3 | User control and freedom | Users often need a clearly marked “emergency exit” when making mistakes. Support undo and redo |
| 4 | Consistency and standards | Users should not be forced to wonder whether different words, situations, or actions mean the same thing. Follow platform conventions. |
| 5 | Help users recognize, diagnose, and recover from errors | Error messages should be expressed in a simple language, and should precisely indicate the problem, and constructively suggest a solution |
| 6 | Error prevention | The design of the system should either eliminate error-prone conditions or check for them and present users with a confirmation option before they commit to the action |
| 7 | Recognition rather than recall | Minimize the user’s memory load by making objects, actions, and options visible. The user should not have to remember information. Instructions should be visible or easily retrievable. |
| 8 | Flexibility and efficiency of use | Accelerators, unseen by the novice user, may often speed up the interaction for the expert user. Allow users to tailor frequent actions. |
| 9 | Aesthetic and minimalist design | Dialogues should not contain information which is irrelevant or rarely needed. Extra information diminishes the relative visibility of relevant information. |
| 10 | Help and documentation | It may be necessary that the system provide help and documentation. Any such information should be easy to search, focused on the user’s task, list concrete steps to be carried out, and not be too large |

In this study evaluators reviewed the user interface of the system and independently evaluated the accordance of its design to the predefined principles. Any violation was noted as a usability problem. All three evaluators had passed theoretical and practical courses of usability engineering and had experiences with usability evaluation methods before the study.

On completion of the evaluation, the identified problems were discussed and combined in a meeting of the three evaluators, and a list of unique problems was produced. Problems that were reported by more than one evaluator and agreed by all evaluators to be the same problem were merged into a single unique problem. Any disagreement concerning the identified problems or their assignment to particular heuristics was resolved through consensus. Copies of this list were distributed among the evaluators for severity rating of the problems as described in ► Table 2. Severity ratings were assigned based on the frequency of the problem, its impact on users, and its persistence [18]. The average of the severities given by the evaluators was calculated as the absolute severity of each problem and was reported based on ► Table 2. Finally, the severity scores assigned by the more experienced evaluator were compared with those of the others using an independent t-test. Statistical analyses were performed using SPSS for Windows (version 17). A p-value of < 0.05 was considered statistically significant.

Table 2.

Rating scale used to rate the severity of usability problems [18]

| Problem | Severity | Description |
|---|---|---|
| No problem | 0 | I don’t agree that this is a usability problem at all |
| Cosmetic | 1 | Cosmetic problem only: need not be fixed unless extra time is available on project |
| Minor | 2 | Minor usability problem: fixing this should be given low priority |
| Major | 3 | Major usability problem: important to fix, so should be given high priority |
| Catastrophe | 4 | Usability catastrophe: imperative to fix this before product can be released |
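The comparison of severity scores between evaluators described above can be illustrated with a small sketch of the independent (pooled-variance) t statistic. The study itself used SPSS; the function name `independent_t` and the two rating lists below are hypothetical and are not the study's data. Only the t statistic and degrees of freedom are computed here, since a p-value would require a t-distribution table or a statistics package.

```python
from math import sqrt
from statistics import mean, variance


def independent_t(a, b):
    """Return Student's t statistic (pooled variance) and degrees of freedom."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    # Pooled variance weights each sample variance by its degrees of freedom.
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / sqrt(pooled * (1 / na + 1 / nb))
    return t, df


# Hypothetical severity ratings on the 0-4 scale of Table 2 (illustrative only).
experienced = [4, 3, 4, 3, 4, 3]
other = [3, 2, 3, 3, 2, 3]

t_stat, df = independent_t(experienced, other)
print(f"t = {t_stat:.2f}, df = {df}")
```

A positive t statistic in this sketch means the first list has the higher mean rating; whether the difference is significant depends on the p-value for the given degrees of freedom.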

Results

In this study, 158 usability problems related to the inpatient information system were identified by three independent evaluators (50, 51 and 57 problems, respectively). After merging the problems identified by the evaluators, 99 unique problems were left, of which 19% (n=19) were identified by all three evaluators and 57% (n=56) by at least two evaluators. ► Table 4 presents the identified usability problems categorized by heuristic, number of evaluators, and severity.

Table 4.

Number of identified usability problems by Heuristic Evaluation in terms of violated heuristic, number of evaluators and severity

| Violated usability heuristic | Total number of problems | Number of unique problems | Problems identified by two evaluators | Problems identified by three evaluators | Problems identified by one evaluator | (Average) severity |
|---|---|---|---|---|---|---|
| Visibility of system status | 14 | 10 | 6 | 3 | 1 | 2.4 (Major) |
| Match between system and the real world | 14 | 9 | 3 | 2 | 4 | 2.9 (Major) |
| User control and freedom | 9 | 8 | 3 | 1 | 4 | 3.1 (Catastrophe) |
| Consistency and standards | 42 | 25 | 11 | 5 | 9 | 3.3 (Catastrophe) |
| Help users recognize, diagnose, and recover from errors | 36 | 18 | 2 | 4 | 12 | 3.1 (Catastrophe) |
| Error prevention | 10 | 7 | 4 | - | 3 | 3.2 (Catastrophe) |
| Recognition rather than recall | 19 | 11 | 6 | 2 | 3 | 2.9 (Major) |
| Flexibility and efficiency of use | 6 | 4 | 1 | 1 | 2 | 3 (Catastrophe) |
| Aesthetic and minimalist design | 8 | 7 | 2 | 1 | 4 | 2.7 (Major) |
| Help and documentation | not implemented in the system | | | | | 4 (Catastrophe) |
| Total | 158 | 99 | 38 | 19 | 42 | |

Most problems discovered in the system were related to “Consistency and standards” heuristic (n=25). The heuristic “Flexibility and efficiency of use” with 4 usability problems had the lowest number of violations. Since no help or other instructive information is implemented in the system, all evaluators assigned severity 4 to this problem related to “Help and documentation” principle. Among other principles, 62 out of 99 unique problems were catastrophe and 37 were major. The minimum average severity of problems was 2.4, related to “Visibility of system status” and the maximum average severity was 3.3, related to “Consistency and standards”.
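The shares reported for each heuristic follow directly from the unique-problem column of Table 4. The short sketch below recomputes them; the counts are copied from the table, while the variable names are only illustrative.

```python
# Number of unique problems per heuristic, copied from Table 4.
unique_problems = {
    "Visibility of system status": 10,
    "Match between system and the real world": 9,
    "User control and freedom": 8,
    "Consistency and standards": 25,
    "Help users recognize, diagnose, and recover from errors": 18,
    "Error prevention": 7,
    "Recognition rather than recall": 11,
    "Flexibility and efficiency of use": 4,
    "Aesthetic and minimalist design": 7,
}

total = sum(unique_problems.values())

# Share of all unique problems attributed to each heuristic, in whole percent.
shares = {name: round(100 * n / total) for name, n in unique_problems.items()}

most_violated = max(unique_problems, key=unique_problems.get)
least_violated = min(unique_problems, key=unique_problems.get)

print(total, most_violated, shares[most_violated])
print(least_violated, shares[least_violated])
```

With these counts the total is 99, and the largest and smallest shares correspond to the 25% and 4% figures quoted for “Consistency and standards” and “Flexibility and efficiency of use”.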

The total number of problems identified by the more experienced evaluator was a little more than those of other two evaluators (57 vs. 50 and 51 problems respectively). After combining the results, the number of unique problems identified by the more experienced evaluator, but not by the other two evaluators was higher (39 vs. 31 and 29 unique problems) than those identified only by one of other evaluators. Moreover, the number of problems identified by experienced evaluator but not by other evaluators was significantly more for each class of heuristics (p=0.01). The average severity assigned by this evaluator to the problems was significantly more than the severity assigned by other two evaluators (3.4 vs. 2.7 and 2.9) (p=0.003 and p=0.014).
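Counts such as "identified by one evaluator only" or "identified by all three evaluators" are set operations on the evaluators' problem lists. The sketch below shows one way to derive them; the evaluator keys and the problem identifiers (`P1` to `P6`) are hypothetical and do not correspond to the problems found in this study.

```python
from collections import Counter

# Hypothetical problem identifiers per evaluator (illustrative only).
findings = {
    "experienced": {"P1", "P2", "P3", "P5", "P6"},
    "evaluator_b": {"P1", "P2", "P4"},
    "evaluator_c": {"P1", "P3", "P4"},
}

# Unique problems: the union of all evaluators' lists.
unique = set().union(*findings.values())

# For each problem, how many evaluators reported it.
hits = Counter(p for found in findings.values() for p in found)

# How many problems were reported by exactly 1, 2 or 3 evaluators.
by_evaluator_count = Counter(hits.values())

# Problems reported by the experienced evaluator and by nobody else.
only_experienced = (
    findings["experienced"] - findings["evaluator_b"] - findings["evaluator_c"]
)

print(len(unique), dict(by_evaluator_count), sorted(only_experienced))
```

In practice the hard part is the consensus step that decides whether two evaluators' descriptions refer to the same problem; the sketch assumes that step has already produced shared identifiers.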

Discussion

In this study, the Heuristic Evaluation method was employed to evaluate the usability of the inpatient information system in hospitals of MUMS in Iran. The most common problems were related to violations of the “Consistency and standards”, “Help users recognize, diagnose, and recover from errors”, and “Recognition rather than recall” heuristics. For easier and more effective use of the system, correcting these problems should be given priority.

The findings concerning the “Consistency and standards” and “Recognition rather than recall” heuristics are consistent with the results of Chan et al. [22], Khajouei et al. [23], Nabovati et al. [20], and Edwards et al. [24], which were conducted in developing and developed countries. Examples of violations of the “Consistency and standards” principle are unlabeled and poorly designed icons that do not conform to their functions (as illustrated in ► Figure 1). These icons could confuse users. In addition, because the colors and locations of these icons differed across screens, they were difficult to work with. These problems could be solved easily by simple redesign solutions such as using labels or clear images that conform to the functions of the icons, and using the same color and location for similar icons throughout the system. Users should not have to wonder whether different words, situations, or actions mean the same thing [6, 25]. Diversity in the presentation of data can result in ineffective communication, impairing continuity of care and the transfer of users’ experience between similar applications [26, 27]. It is critical to use common standards and to keep similar items consistent throughout the system (e.g. in the use of words, graphical objects and colors). Moreover, information presented in any format should be self-explanatory, so that users can initiate actions at any time without recalling their experience from previous use of the system.

The Heuristic Evaluation identified other problems, such as information presentation and table layouts that were inconsistent with the rules of Persian, which is a right-to-left language. In some screens the exit button, which would be expected at the bottom of the screen, was located in the top right corner. Problems of this sort could force users into a cumbersome and time-consuming search to figure out the navigation of the system. It is recommended that the information on the screen always be set out according to users’ habits and expectations. This reduces users’ mental workload, which not only minimizes the number of errors but also lowers the amount of cognitive and physical activity required to perform an action. In addition, users will not have to engage in lengthy trial and error when completing a step in the system. Some items and solutions that can save users’ time include the following:

- The menu items should be sorted logically or alphabetically.

- It should be possible to interrupt activities in progress.

- Undo and Redo functionalities should be added to the system.

- Menus with long lists of items (e.g. the medication list) should be searchable by their first letters.

- To accelerate tasks, the values of some frequently used fields, such as the physician’s name, should be filled in by default.

- In the event of an error related to a data entry field, after the user has viewed and confirmed the error message, the system should lead the user directly to the corresponding field.
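As an illustration of the recommendation that long menus be searchable by their first letters, the following minimal sketch performs a prefix search over a sorted list. It is not taken from the evaluated system: the function name `prefix_matches` and the Latin-script medication names are assumptions chosen for readability, whereas the real system uses Persian labels.

```python
from bisect import bisect_left


def prefix_matches(sorted_items, prefix):
    """Return all items in a sorted, case-folded list that start with prefix."""
    prefix = prefix.casefold()
    # Binary search for the first position where the prefix could appear.
    start = bisect_left(sorted_items, prefix)
    matches = []
    for item in sorted_items[start:]:
        if not item.startswith(prefix):
            break  # Sorted order guarantees no later item can match.
        matches.append(item)
    return matches


# Hypothetical menu content (illustrative only).
menu = sorted(
    name.casefold()
    for name in ["Insulin", "Aspirin", "Atenolol", "Ibuprofen", "Amoxicillin"]
)

print(prefix_matches(menu, "a"))
print(prefix_matches(menu, "As"))
```

Keeping the list sorted also satisfies the first recommendation above, and lets the search narrow the list as each letter is typed.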

This study showed that in the second place, most violations were related to “Help users recognize, diagnose, and recover from errors” heuristic. For example, the texts of some alerts were long and ambiguous. This result has little compatibility with the results of similar studies [10, 18, 21, 22]. Improving clarity of system messages by minor textual revisions helps users understand the problems and recover from their errors. Accuracy of data and information in a system increases system reliability leading to more accurate patient data management and decision making. Eliminating usability problems concerning this heuristic will finally result in improving the quality of working processes [9, 28].

One of the ten heuristics is “Help and documentation”, which has not been implemented in the inpatient information system. In addition to its role in training users, this feature, if provided, could help users solve the problems they may encounter while working with the system. It can prevent user confusion and unnecessary contact with the system administrator, and save the time needed to learn the system. Therefore, providing “Help and documentation” functionality to assist users is recommended.

The current study also showed that, after the aforementioned usability problems, most of the remaining problems were related to violations of the “Visibility of system status” heuristic. Providing information about the state of the system improves understanding of the system’s behavior, and facilitates navigation and decisions about the next actions. This result was similar to those of Nabovati et al. [20] on the laboratory and radiology information subsystems of the same HIS and of Edwards et al. [24] on a commercial electronic health record. The rate of detected problems related to system visibility was lower than that reported in the study by Khajouei et al. [21]. Simple design measures, such as providing an appropriate title for each page of the UI and adding status bars that show the progress of the current operation, can help users recognize the status of the system.

Besides the absence of “Help and documentation” functionality, which is a major drawback of the system, the average severities of the detected problems related to the other heuristics were also at the major and catastrophe levels. Hence, ignoring these problems is an obstacle to achieving the primary goals of HIS, such as improving efficiency, accuracy, and user satisfaction. The findings of this study agree with the results of other studies. In the study of Khajouei et al. [21], the violations of eight out of nine examined principles were catastrophe. In the study of Chan et al. [22], twenty-five percent of the detected problems received high priority, and in the study of Choi et al. [10] the average severity was between 0.5 and 2 (rated on a 5-point Likert scale from 1 (strongly disagree) to 5 (strongly agree)). In addition, in the study of Joshi et al. [18] the mean severity of identified problems was 3 (range 0 – 4), indicating major problems. Finally, the results of this study confirm the results of the studies of Khajouei et al. and Nabovati et al. [20, 21]. This might be because of the rather similar design of these two information systems. This result differs from the results of the studies of Chan et al. and Choi et al. [10, 22] and shows a higher degree of catastrophic violations.

According to the results and the type of identified problems, Heuristic Evaluation can provide fairly good results with few resources. This method can identify several problems that bother users when interacting with a system [29]. Considering usability principles in the design of health information systems can increase users’ efficiency and satisfaction and ultimately improve patient safety and quality of care by reducing problems in the interaction between users and the system. This is particularly important for information systems used in inpatient departments, because when users’ concentration is reduced, for example by a high rate of patient encounters during natural disasters or by the severity of patients’ conditions, effective systems are central to any delicate medical intervention. Patient information management in inpatient departments is crucial. According to previous research, systems with poor usability not only reduce the speed and precision of users’ work, but also lead to confusion, anger, and dissatisfaction among users [30].

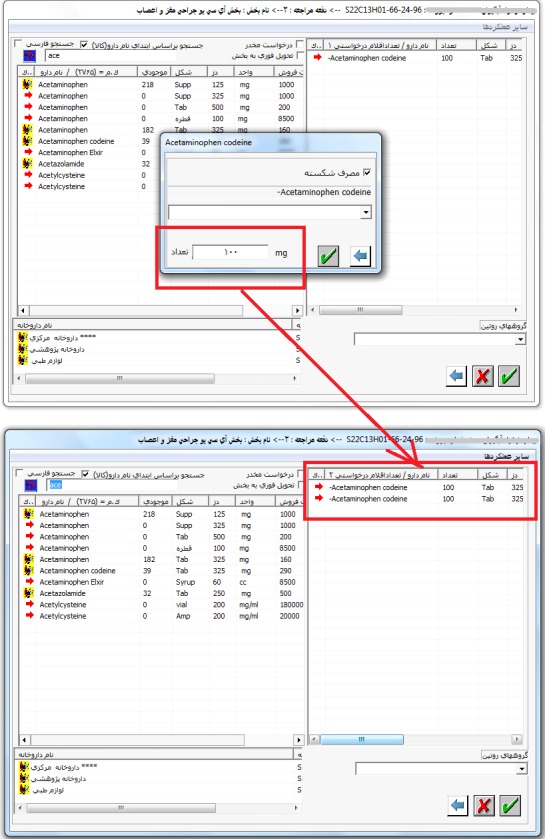

This study has three limitations. First, some studies have shown that many of the violations predicted by inspection methods such as Heuristic Evaluation may not be real problems [31–33]. However, problems recognized by all evaluators collectively might have greater validity. Usability inspection methods should be improved in ways that help researchers determine why such prediction failures occur, so that an informed approach to method improvement can be taken [31]. Some argue that Heuristic Evaluation appears to perform best with superficial, almost ‘obvious’ problems, and that some subtle, complex interaction problems tend to be missed [32, 33]. Although the effectiveness of HE is debatable [33], it should be noted that some of the discovered problems may, in the real world, result in life-threatening errors, especially in the domain of healthcare [7, 29]. An example of such a life-threatening problem can be seen in ► Figure 2. Second, this study was conducted on one system that is used in many hospitals in Iran. The results may not be fully generalizable to systems developed by other companies, but most of the current systems have the same design and hence similar problems. Although this kind of evaluation requires usability expertise and experience, some studies have shown that familiarity with the environment and proper implementation of the evaluation method result in credible findings [18, 34, 35]. Third, the evaluation in this study was carried out by only three evaluators. This does not seem to affect our results, since previous studies recommended recruiting three to five evaluators because a larger number does not provide much additional information [25]. Nevertheless, since using more evaluators or combining evaluation methods may be more effective [36], we recommend that future studies evaluate the impact on the results of recruiting more evaluators with different backgrounds and of combining different methods.

Fig. 2.

Poor design of data entry fields when a fraction of a medication should be taken each time. This is an example of a situation that can threaten the patient’s health or life. This task requires reviewing the two screens and a small pop-up window shown in this figure. To prescribe a fraction of a medication, the physician should check the box called “taking a fraction” (in Persian) in the pop-up window. Then the fraction dosage should be entered in the last field of this window. There are two problems that may endanger the patient’s life if the order proceeds: 1) Two labels are associated with this field. The first, at the right, is “the number” (of medication units, e.g. pills) in Persian, and the second, at the left, is “mg”, indicating the dosage of the medication. This is very confusing and may lead to a highly inaccurate prescription. 2) According to an experienced HIS user, this field should be filled with the fraction dosage. If a user takes this field to be the dosage field and fills it with the corresponding value, this value is presented on the next page in the “number” column rather than the “dosage” column. Dealing with these problems is not only frustrating and time-consuming for physicians but also unsafe for patients.

One of our evaluators had experience working with HIS in a real environment. This study showed that the experienced evaluator identified somewhat more usability problems and gave a higher severity rating to the problems. Studies showed that double experts (usability experts with specific expertise in the kind of interface) are better than usability experts in performing Heuristic Evaluation [6, 34]. Review of previous studies showed that working experience of an evaluator with a system has not been addressed sufficiently so far. In our study, due to the low number of participated evaluators, we cannot definitely conclude that the reported sensitivity is due to the evaluator’s experience in using the HIS.

Despite the widespread use of health information systems in Iran, some of them have many usability problems that should be considered before and after the implementation of the system. This study showed that Heuristic Evaluation method can be used to identify health information system’s usability problems to a large extent. Considering critical role of health information systems on well-being of patients and the high number of identified usability problems in such studies, detection and elimination of usability problems can be lifesaving. Our results showed that many of major and catastrophe problems can be fixed by minimal rearrangement of screen icons or simple redesigns such as using clear labels and better layouts. Using evaluators with usability experience and also system experience can lead to better and more accurate identification of problems.

Table 3.

Final severity of problems based on the average of their severities

| Severity | Average |
|---|---|
| No problem | 0 |
| Cosmetic | 0<average<1 |
| Minor | 1<average<2 |
| Major | 2<average<3 |
| Catastrophe | 3<average<4 |
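The banding in Table 3 can be expressed as a small lookup function. This is only a sketch: the function name `severity_label` is hypothetical, and because Table 3 uses strict inequalities, the treatment of averages that fall exactly on 1, 2, 3 or 4 is an assumption. Here each boundary value is assigned to the higher band, which matches the way Table 4 labels averages of 3 and 4 as Catastrophe.

```python
from statistics import mean


def severity_label(average):
    """Map an average severity (0-4) to the category names of Table 3.

    Assumption: boundary values (1, 2, 3) fall into the higher band.
    """
    if not 0 <= average <= 4:
        raise ValueError("average severity must lie between 0 and 4")
    if average == 0:
        return "No problem"
    if average < 1:
        return "Cosmetic"
    if average < 2:
        return "Minor"
    if average < 3:
        return "Major"
    return "Catastrophe"


# Hypothetical ratings of one problem by three evaluators (illustrative only).
ratings = [3, 4, 3]
print(severity_label(mean(ratings)))

# Average severities reported in Table 4.
print(severity_label(2.4), severity_label(3.3))
```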

Acknowledgments

The authors appreciate IT administrators of the Faculty of Paramedical Sciences, and the staff in the Department of Management and Health Information Technology, Mashhad University of Medical Sciences, especially Mrs. Zahra S. Ershadnia for their kind assistance during this study. We wish to thank Mrs. Kolsum Deldar for her detailed comments and cooperation.

Footnotes

Conflicts of Interest

The authors declare that there are no conflicts of interest in the research.

Protection of Human and Animal Subjects

The study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects, and was reviewed by Ethics Committee of Mashhad University of Medical Sciences.

References

- 1.Menachemi N, Collum TH. Benefits and drawbacks of electronic health record systems. Risk management and healthcare policy 2011; 4: 47–55. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Gulcin Yucela SC, Bo Hoegec, Ahmet F. Ozok. A fuzzy risk assessment model for hospital information system implementation. Expert Systems with Applications 2012; 39(1): 1211-1218. [Google Scholar]

- 3.Ahmadian L, Salehi Nejad S, Khajouei R. Evaluation methods used on health information systems (HISs) in Iran and the effects of HISs on Iranian healthcare: A systematic review. International journal of medical informatics 2015; 84(6):444–453 [DOI] [PubMed] [Google Scholar]

- 4.Khajouei R, Jaspers MW. The impact of CPOE medication systems’ design aspects on usability, workflow and medication orders: a systematic review. Methods of information in medicine 2010; 49(1):3–19. [DOI] [PubMed] [Google Scholar]

- 5.HR. EHR History in Iran: Ministry of Health and Medical Education 2009. [Google Scholar]

- 6.JN. Usability Engineering. USA, San Diego: Academic Press; 1993. [Google Scholar]

- 7.Khajouei R, Peek N, Wierenga PC, Kersten MJ, Jaspers MW. Effect of predefined order sets and usability problems on efficiency of computerized medication ordering. International journal of medical informatics 2010; 79(10): 690-698. [DOI] [PubMed] [Google Scholar]

- 8.Lathan CE, Sebrechts MM, Newman DJ, Doarn CR. Heuristic Evaluation of a web-based interface for internet telemedicine. Telemedicine journal: the official journal of the American Telemedicine Association 1999; 5(2): 177-185. [DOI] [PubMed] [Google Scholar]

- 9. Hertzum M. Images of Usability. International Journal of Human-Computer Interaction 2010; 26(6): 567–600.

- 10. Choi J, Bakken S. Web-based education for low-literate parents in Neonatal Intensive Care Unit: development of a website and Heuristic Evaluation and usability testing. International journal of medical informatics 2010; 79(8): 565–575.

- 11. Turner-Bowker DM, Saris-Baglama RN, Smith KJ, DeRosa MA, Paulsen CA, Hogue SJ. Heuristic Evaluation and usability testing of a computerized patient-reported outcomes survey for headache sufferers. Telemedicine journal and e-health: the official journal of the American Telemedicine Association 2011; 17(1): 40–45.

- 12. Nielsen J, Mack RL. Usability Inspection Methods. New York: John Wiley & Sons; 1994.

- 13. Molich R, Nielsen J. Improving a human-computer dialogue. Commun ACM 1990; 33(3): 338–348.

- 14. Tang Z, Johnson TR, Tindall RD, Zhang J. Applying Heuristic Evaluation to improve the usability of a tele-medicine system. Telemedicine journal and e-health: the official journal of the American Telemedicine Association 2006; 12(1): 24–34.

- 15. Zhang J, Johnson TR, Patel VL, Paige DL, Kubose T. Using usability heuristics to evaluate patient safety of medical devices. Journal of biomedical informatics 2003; 36(1–2): 23–30.

- 16. Thyvalikakath TP, Monaco V, Thambuganipalle H, Schleyer T. Comparative study of Heuristic Evaluation and usability testing methods. Studies in health technology and informatics 2009; 143: 322–327.

- 17. Thyvalikakath TP, Schleyer TK, Monaco V. Heuristic Evaluation of clinical functions in four practice management systems: a pilot study. J Am Dent Assoc 2007; 138(2): 208–212.

- 18. Joshi A, Arora M, Dai L, Price K, Vizer L, Sears A. Usability of a patient education and motivation tool using Heuristic Evaluation. Journal of medical Internet research 2009; 11(4): e47.

- 19. Kimiafar K MG, Sadoughi F, Hosseini F. A study on the user’s views on the quality of teaching hospitals information system of Mashhad University of Medical Sciences-2006. Journal of Health Administration 2007; 10(29): 31–36.

- 20. Nabovati E, Vakili-Arki H, Eslami S, Khajouei R. Usability evaluation of Laboratory and Radiology Information Systems integrated into a hospital information system. Journal of medical systems 2014; 38(4): 35.

- 21. Khajouei R AA, Atashi A. Usability Evaluation of an Emergency Information System: A Heuristic Evaluation. Journal of Health Administration 2013; 16(25): 61–72.

- 22. Chan AJ, Islam MK, Rosewall T, Jaffray DA, Easty AC, Cafazzo JA. Applying usability heuristics to radiotherapy systems. Radiotherapy and oncology: journal of the European Society for Therapeutic Radiology and Oncology 2012; 102(1): 142–147.

- 23. Khajouei R AA, Atashi A. Usability Evaluation of an Emergency Information System: A Heuristic Evaluation. Journal of Health Administration 2013; 16(25): 61–72.

- 24. Edwards PJ, Moloney KP, Jacko JA, Sainfort F. Evaluating usability of a commercial electronic health record: A case study. Int J Hum Comput Stud 2008; 66(10): 718–728.

- 25. Nielsen J. How to Conduct a Heuristic Evaluation. 1995 [cited 2015 Sep 22]. Available from: http://www.nngroup.com/articles/how-to-conduct-a-heuristic-evaluation/.

- 26. Ahmadian L, Cornet R, Van Klei WA, de Keizer NF. Data collection variation in preoperative assessment: a literature review. Comput Inform Nurs 2011; 29(11): 662–670.

- 27. Ahmadian L, Cornet R, Van Klei WA, de Keizer NF. Diversity in preoperative-assessment data collection, a literature review. Stud Health Technol Inform 2008; 136: 127–132.

- 28. Hertzum M, Jacobsen NE. The Evaluator Effect: A Chilling Fact About Usability Evaluation Methods. International Journal of Human-Computer Interaction 2003; 15(1).

- 29. Penha M, Correia WFM, Campos FFdC, Barros MdLN. Heuristic Evaluation of Usability – a Case study with the Learning Management Systems (LMS) of IFPE. International Journal of Humanities and Social Science 2014; 4: 295–303.

- 30. Khajouei R, de Jongh D, Jaspers MW. Usability evaluation of a computerized physician order entry for medication ordering. Studies in health technology and informatics 2009; 150: 532–536.

- 31. Cockton G, Woolrych A. Understanding inspection methods: lessons from an assessment of Heuristic Evaluation. People and Computers XV – Interaction without Frontiers. London: Springer; 2001: 171–191.

- 32. Cockton G, Woolrych A. Sale must end: should discount methods be cleared off HCI’s shelves? Interactions 2002; 9(5): 13–18.

- 33. Law E, Hvannberg E, editors. Analysis of strategies for improving and estimating the effectiveness of Heuristic Evaluation. The third Nordic conference on Human-computer interaction; 2004: ACM.

- 34. Hertzum M, Molich R, Jacobsen NE. What you get is what you see: revisiting the evaluator effect in usability tests. Behaviour & Information Technology 2014; 33(2): 144–163.

- 35. Khajouei R, Ahmadian L, Jaspers MW. Methodological concerns in usability evaluation of software prototypes. J Biomed Inform 2011; 44(4): 700–701.

- 36. Hornbæk K. Dogmas in the assessment of usability evaluation methods. Behaviour & Information Technology 2010; 29(1): 97–111.