Summary

Background

Older adults are at risk for inadequate emergency department (ED) pain care. Unrelieved acute pain is associated with poor outcomes. Clinical decision support systems (CDSS) hold promise to improve patient care, but CDSS quality varies widely, particularly when usability evaluation is not employed.

Objective

To conduct an iterative usability and redesign process of a novel geriatric abdominal pain care CDSS. We hypothesized this process would result in the creation of more usable and favorable pain care interventions.

Methods

Thirteen emergency physicians familiar with the Electronic Health Record (EHR) in use at the study site were recruited. Over a 10-week period, 17 1-hour usability test sessions were conducted across 3 rounds of testing. Participants were given 3 patient scenarios and provided simulated clinical care using the EHR, while interacting with the CDSS interventions. Quantitative System Usability Scores (SUS), favorability scores and qualitative narrative feedback were collected for each session. Using a multi-step review process by an interdisciplinary team, positive and negative usability issues in effectiveness, efficiency, and satisfaction were considered, prioritized and incorporated in the iterative redesign process of the CDSS. Video analysis was used to determine the appropriateness of the CDS appearances during simulated clinical care.

Results

Over the 3 rounds of usability evaluations and subsequent redesign processes, mean SUS progressively improved from 74.8 to 81.2 to 88.9; mean favorability scores improved from 3.23 to 4.29 (1 worst, 5 best). Video analysis revealed that, in the course of the iterative redesign processes, rates of physicians’ acknowledgment of CDS interventions increased; however, most rates of desired actions by physicians (such as more frequent pain score updates) decreased.

Conclusion

The iterative usability redesign process was instrumental in improving the usability of the CDSS; if implemented in practice, it could improve geriatric pain care. The usability evaluation process led to improved acknowledgement and favorability. Incorporating usability testing when designing CDSS interventions for studies may be effective to enhance clinician use.

Keywords: Clinical decision support systems, decision support techniques, aged, acute pain, medical record systems, computerized, man-machine systems

1. Background

1.1 Geriatric pain in the ED

Older adults typically receive poorer pain care in the emergency department – for several reasons [1, 2]. The patients themselves may lack the mobility or agency to advocate for themselves or articulate their complaints. Another reason is that clinicians are wary of impaired drug metabolism in the elderly, and often give lower doses of pain medications, less frequently, for fear of causing adverse events from doses that younger adult patients would tolerate well. Finally, elderly patients often present to the emergency department (ED) with more complex complaints, often due to multiple comorbidities and medications, which require lengthier workups. ED clinicians focused on diagnosis and patient disposition may forget to re-assess pain scores as they would traditional vital signs [3]. And yet, there is increasing evidence that unrelieved acute pain is associated with poorer outcomes during hospitalization – including later pain [4], chronic pain [5], longer hospital lengths of stay, delays to ambulation, and delirium [4, 6, 7]. The American Geriatrics Society (AGS) has issued guidelines for the prompt management of geriatric pain [8, 9]. Yet clinicians, in the ED and elsewhere, may not consider pain scores as they would other vital signs – worthy of frequent reassessments and prompt interventions when the values warrant treatment [1].

Thus, management of pain for older adults in the ED is a process that could be improved. Clinical decision support systems (CDSS) in an ED’s electronic health record (EHR) have the potential to change practice and promote guideline adherence; CDSS has been shown to improve guideline adherence for ED imaging in back pain [10] and mild head injury [11] as well as for CDC guidelines following sexual assault [12].

1.2 Current CDSS Implementations fall short of Best Practices

The adoption of EHR in the United States has been spurred, in large part, through financial incentives, with marked increases in the proportion of clinics and hospitals attesting to Meaningful Use (MU) of EHR [13]. One of the principal stated reasons for these incentives was to improve patient safety and outcomes, through CDS systems [14].

Yet early evaluations across a variety of healthcare settings in the US have had mixed results, without consistently demonstrating a clear benefit to patient safety or outcomes [15–17]. Even now, as CDS systems are integrated with EHR implementation in a variety of settings, many have not consistently shown improved outcomes [18–21]. Well-recognized best practices of CDS, such as integration into workflows, review and feedback [22, 23], are not routinely followed as clinical informatics resources are allocated toward other priorities.

While analyses have been complex, and many factors have been cited as contributing to the uneven benefits of EHR seen thus far [15, 17], one possibility is that MU provided minimal standards for usability [24]. Usability is a quality attribute that assesses the ease of use of an interface, defined in part by learnability, efficiency, memorability, satisfaction, and potential for errors [25]. Poor usability diminishes the effect of CDS systems on clinician workflows and patient care. Instead of presenting opportunities for improved care or advancing evidence-based clinical goals such as geriatric pain care, EHR and poorly implemented CDSS are seen, in some circumstances, as hampering care.

Effective CDS is often the product of an interactive design process based on usability evaluation and redesign [26]. While this process can be time-consuming and laborious, it may improve CDS systems with the goal of presenting clinicians with clinically relevant, minimally disruptive decision support that can improve patient care, helping to realize the promise of EHR.

1.3 Objectives

The objective of this study was to conduct an iterative usability and redesign process of a novel geriatric abdominal pain care CDSS. We hypothesized this process would result in the creation of more usable and favorable pain care interventions.

2. Methods

2.1 CDS Conceptualization & Development

There are several reasons we considered an elderly pain CDS system for ED clinicians to be a rich, high-value opportunity for study. There are many opportunities to increase clinician mindfulness about elderly pain care and improve workflows during an ED visit. In addition, there are quality indicators for optimal geriatric ED pain care that recommend pain assessment, reassessment, and treatment of pain [1]. Abdominal pain in particular tends to be under-treated, compared to other sources of acute pain such as fracture [27].

Thus we set about developing an elderly abdominal pain CDSS, with the goal of improving:

- all elderly pain assessments and reassessments,
- pain treatment when indicated (i.e., when patients have pain), and
- appropriateness of choice and dose of pain medication, using a customized order set for geriatric pain medications.

2.2 CDSS Intervention Design

An interdisciplinary team developed a series of CDS interventions with the goal of improving geriatric emergency pain care. With several authors’ knowledge of ED workflows (NG, FLT, KB), clinical decision support best practices (MSK, JK), optimal management of geriatric pain care (UH), and usability evaluation (MSK, RB, LR), 5 CDS interventions were conceptualized and developed. These CDS interventions were designed to enhance physician awareness about geriatric pain assessment and treatment, and appeared at different points during a patient’s ED workup (► Table 1). The interventions included two alerts (a “10 out of 10” Pain Assessment and Pain Re-Assessment Alert), a documentation reminder to encourage clinicians to address pain (what we call a pain “keycept” – using the EHR to highlight a key concept and allowing the user to respond, in this case, within the HPI (history of presenting illness) documentation template), a one-click pain score update, an elderly analgesic order set, and a discharge pain prompt. This study was approved by the Mount Sinai Program for the Protection of Human Subjects.

Table 1.

A description of the five CDS interventions to promote mindfulness of geriatric pain.

| CDS Intervention | Description |
|---|---|
| 1a. Pain Assessment Alert (Interruptive) | When a clinician enters the chart of a patient who reports a pain score of 10, a standard EHR CDS alert (Epic’s BPA) prompts use of a specialized geriatric pain order set or reassessment/adjustment of the patient’s pain score. |
| 1b. Pain Re-Assessment Alert (Non-interruptive) | The clinician sees a standard EHR CDS alert when an elevated pain score has not been reassessed for a clinically significant amount of time. The clinician is prompted to make use of the customized analgesia order set, or reassess/adjust the pain score. |
| 2. Pain “Keycept” | While documenting in an HPI template, the clinician is prompted to address any pain or reassess/adjust the patient’s pain score. |
| 3. One-click Pain Score Update | Adjusting the patient’s pain score, normally a multi-step process hidden under other activity headers, is facilitated by a button that remains visible among other common activities in the patient’s chart. NB: This intervention was added in Round 2. |
| 4. Customized Analgesia Order Set for Older Adults | The clinician can view and order appropriate doses of common pain medications, based on Beers criteria and tenets of acute geriatric pain care. |
| 5. Discharge Pain Prompt | When a clinician attempts to print discharge papers on a patient with an elevated pain score, a modal window (hard stop) appears, prompting analgesia or re-assessment of the pain score. The papers will not print until the pain score improves or meds are ordered. |

We sought to collect feedback and usability measures from ED physicians on these CDS interventions across three rounds of usability testing sessions.

2.3 Organizational Setting

The CDSS interventions were built and implemented in a testing environment (similar to the production environment) of an EHR module for Emergency Departments, Epic ASAP (Epic Systems, Verona, WI), so that they would be seen and evaluated by physicians participating in our usability analysis.

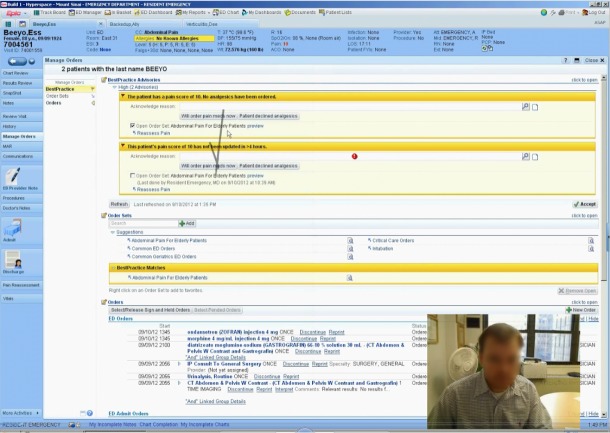

The usability test sessions were conducted in a closed office in the Department of Emergency Medicine at the Icahn School of Medicine at Mount Sinai, from July 25, 2012 to October 3, 2012. The office contained a computer and EHR mirroring the clinical environment to which the test users were accustomed – the only substantive difference was the CDSS interventions being tested. The test EHR environment was built in Epic 2010 ASAP v5, within XenApp (Citrix Corp.) running on a standard desktop PC platform with Windows 7 Enterprise OS (Microsoft). User input was provided via standard 104-key keyboard and optical mouse with scroll wheel. The setup utilized a 17-inch LCD display with web camera for video/audio capture (Logitech Webcam Pro 9000, Logitech Corp). Data were recorded using screen capture and video/audio capture of participants with Morae Recorder version 3.3 software (TechSmith Corp). This allowed for later analysis of test sessions as well as real-time observation of the screen/audio/video by study investigators in a nearby office using Morae Observer [28]. With informed consent, study participants’ faces and audio were captured during the test sessions (► Figure 1).

Fig. 1.

A screen capture of a usability testing session, using Morae software. A physician’s voice and facial expressions are captured while she or he navigates the EHR.

2.4 Participants

Usability participants were recruited from attending physicians from the Mount Sinai Emergency Department, and recent graduates of the residency program who were familiar with the EHR. Several rounds of usability evaluation were planned, to ensure user errors would be identified and corrected, and CDSS usability would improve. Ultimately, there were a total of 17 usability test sessions with participation by 13 unique emergency physicians across three rounds of iterative CDSS improvements. To assess whether the same CDS misses or issues were encountered, Round 2 had a repeat user from Round 1, and Round 3 had a repeat user from Round 2, and a repeat user from Round 1 and 2. All participants were compensated for their time with a $100 gift certificate for completion of each one-hour test session.

2.5 Clinical Scenarios and Tasks

Three novel mock clinical scenarios were developed by the study team for usability test sessions. Each physician usability subject would log into the EHR test environment and “see” 3 older adult patients with acute abdominal pain complaints. Specific measures to simulate ED workflows included:

- placing orders and documenting findings, as ED physicians typically do in this EHR,
- presenting cases at different points of their ED care, and
- switching tasks between patients (e.g., upon ordering tests for one patient, the facilitator presented the study participant with results from a previous patient).

The scenarios were designed so that all 5 interventions would appear at least once over the course of the planned one-hour session – though some would appear multiple times depending on the physicians’ actions. During the clinical simulation, the physicians would learn that 2 of the patients would be discharged (one with a diagnosis of constipation, another with diverticulitis) and that the third would require admission for small bowel obstruction. The different (but nonetheless common) diagnoses for these mock clinical scenarios were chosen to reflect the diversity of ED presentations and improve the fidelity of the simulation.

2.6 Measures & Data Collection

At the conclusion of each usability session, the physician participants were asked to provide structured and unstructured feedback. A System Usability Score survey [29, 30] was completed at the end of each test session utilizing the survey function of Morae software. Following this survey, participants were asked to complete a facilitator-led structured favorability questionnaire [scored 1 as least favorable, 5 as most favorable], and open-ended narrative feedback of each CDSS intervention. Participants were shown pictures of each intervention as they appeared in the EHR and were asked if they found the intervention useful (or not) and if they had any concerns or questions about the intervention. Users were also asked about areas of improvement for each intervention with open-ended feedback.
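As an illustration of how a single SUS response sheet is conventionally turned into a 0–100 score, the following minimal sketch applies the standard published scoring rule for the 10-item questionnaire. It assumes the survey administered in Morae followed that standard scoring; the function names and the example responses are hypothetical and are not study data.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one 10-item SUS questionnaire (each item rated 1-5).

    Standard rule: odd-numbered items contribute (rating - 1),
    even-numbered items contribute (5 - rating); the sum is scaled by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS has exactly 10 items")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for index, rating in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 (positively worded)
            total += rating - 1
        else:
            # Items 2, 4, 6, 8, 10 (negatively worded)
            total += 5 - rating
    return total * 2.5


def mean_sus(sessions: Sequence[Sequence[int]]) -> float:
    """Mean SUS across several sessions (e.g., one round of testing)."""
    scores = [sus_score(s) for s in sessions]
    return sum(scores) / len(scores)


# Hypothetical responses, for illustration only.
best_case = [5, 1, 5, 1, 5, 1, 5, 1, 5, 1]
neutral = [3] * 10
print(sus_score(best_case), sus_score(neutral), mean_sus([best_case, neutral]))
```

A round-level mean, as reported in this study, would simply be `mean_sus` applied to the sessions in that round.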

2.7 Data Analysis for Iterative CDS Redesign

Three rounds of iterative redesign were planned a priori and conducted. After each usability test session, the same interdisciplinary team that developed the initial CDSS met for formative usability evaluation – to discuss the user-provided feedback and study investigator observations, reviewing both structured and unstructured feedback and favorability scores. The majority of this interdisciplinary team was active in the data acquisition phase of the usability studies, allowing informal real-time assessment and discussion among team members prior to formalized meetings. Using this information, the team identified discrete elements within each CDSS intervention that could improve usability, discussed which changes were feasible within the constraints of time and the EHR system, then incorporated changes to these elements in the next iteration of CDSS design.

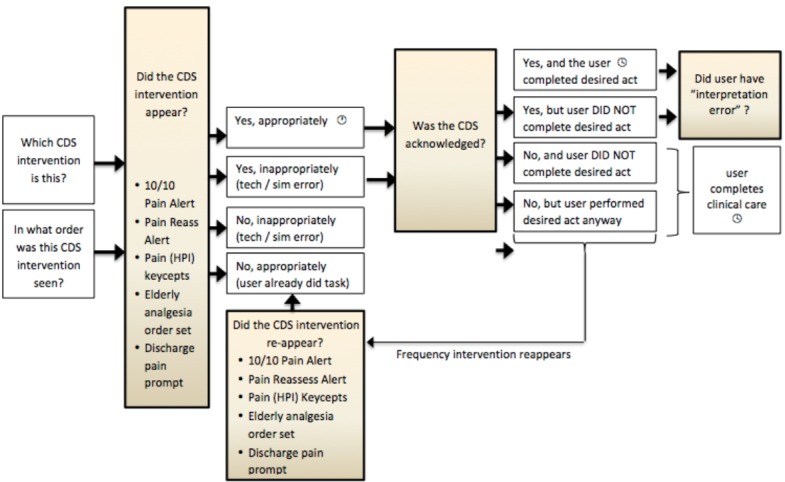

2.8 Test Session Coding and Further Data Analysis

Screen recordings of the 17 physician test sessions, spanning 3 iterations of CDSS redesign, were reviewed and coded for four events (► Figure 2):

- the frequency of the CDSS interventions’ appearance,
- the appropriateness of their appearance,
- whether the participants acknowledged the CDSS, and
- whether the CDSS prompted the desired physician actions.

Fig. 2.

Schematic diagram of usability test coding process. Coders used this flowsheet as they reviewed video recordings of the usability sessions, to document both the CDS appearances and the physician responses. NB: for usability sessions in which the One-Click Pain Score update was visible (Rounds 2 & 3) the intervention was visible for the duration of the testing session, so coding its appropriateness was not possible.

In each recording, the time of appearance of the various CDSS interventions was noted in an MS Excel spreadsheet by a research associate (CS, RB).

Two sessions were chosen at random to be coded as gold standard evaluations of clinician responsiveness to the CDSS interventions. Two ED physicians (NG, FLT) coded the appropriateness of the CDSS appearance and the participants’ response to the intervention, with a 3rd ED physician (UH) adjudicating disagreement. The 3 additional coders (CS, LR, RB) were trained until coding was found to be 90% consistent with the gold standard. Using the gold standard, the three coders tabulated CDSS appearances and participants’ responses for the remaining sessions; the sessions were subsequently reviewed by the investigators (NG, FLT) to check accuracy.
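The text does not specify how consistency with the gold standard was computed. One simple reading is item-by-item percent agreement, sketched below under that assumption; the function names, the label vocabulary, and the example codes are all hypothetical.

```python
from typing import Sequence


def percent_agreement(coder: Sequence[str], gold: Sequence[str]) -> float:
    """Percentage of coded events where the trainee matches the gold standard."""
    if len(coder) != len(gold):
        raise ValueError("both codings must cover the same events")
    if not gold:
        raise ValueError("no events to compare")
    matches = sum(1 for c, g in zip(coder, gold) if c == g)
    return 100.0 * matches / len(gold)


def meets_training_threshold(coder: Sequence[str], gold: Sequence[str],
                             threshold: float = 90.0) -> bool:
    """True once agreement reaches the threshold (90% assumed from the text)."""
    return percent_agreement(coder, gold) >= threshold


# Hypothetical codes for ten CDS appearances in one gold-standard session.
gold = ["success", "miss", "success", "non-adherent", "success",
        "miss", "success", "success", "non-adherent", "success"]
trainee = list(gold)
trainee[3] = "miss"  # one disagreement out of ten events

print(percent_agreement(trainee, gold))         # 90.0
print(meets_training_threshold(trainee, gold))  # True
```

Simple percent agreement does not correct for chance; a chance-corrected statistic would be a different design choice and is not implied by the source.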

Reviewing session coding, we tabulated whether or not the participants acknowledged the CDS intervention, and whether the physician took desired actions (“success” rates) in response to the CDS (e.g., ordering pain medication or updating the pain score). We chose to adopt the nomenclature of McCoy’s method of evaluating provider response to CDS [31], categorizing appearance of CDS as appropriate or inappropriate, and physician responses to appropriate alerts as either successful (when the desired action is performed) or non-adherent (when the desired action is not performed). However, non-adherence implies a user chose to ignore a CDS intervention, and with Morae evaluation software, it was possible to detect instances when the physician simply failed to notice or acknowledge an appropriate alert. Thus, we borrowed from Ong’s approach to CDS evaluations, and scored non-adherence as the “miss” rate [32].
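The tabulation described above can be sketched as follows. This is an illustrative reading rather than the study's actual spreadsheet logic: it assumes both rates use appropriate appearances as the denominator, and that the success rate counts desired actions (so one appearance prompting two actions counts twice, which is how the caption of Table 4 explains rates above 100%). The `CodedAppearance` record and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class CodedAppearance:
    """One coded appearance of a CDS intervention (hypothetical record)."""
    appropriate: bool      # did the CDS appear when it should have?
    acknowledged: bool     # did the physician notice or acknowledge it?
    desired_actions: int   # count of desired actions it prompted (0, 1, 2, ...)


def miss_and_success_rates(appearances: Iterable[CodedAppearance]) -> Tuple[float, float]:
    """Return (miss rate %, success rate %) over appropriate appearances only."""
    appropriate = [a for a in appearances if a.appropriate]
    if not appropriate:
        raise ValueError("no appropriate appearances to tabulate")
    n = len(appropriate)
    misses = sum(1 for a in appropriate if not a.acknowledged)
    actions = sum(a.desired_actions for a in appropriate)
    return 100.0 * misses / n, 100.0 * actions / n


# Hypothetical coding of one intervention in one round.
coded = [
    CodedAppearance(appropriate=True, acknowledged=True, desired_actions=2),
    CodedAppearance(appropriate=True, acknowledged=True, desired_actions=1),
    CodedAppearance(appropriate=True, acknowledged=False, desired_actions=0),
    CodedAppearance(appropriate=True, acknowledged=True, desired_actions=0),
    CodedAppearance(appropriate=False, acknowledged=True, desired_actions=0),
]
print(miss_and_success_rates(coded))  # (25.0, 75.0)
```

Inappropriate appearances are excluded from both rates here, consistent with the categorization of responses to appropriate alerts only.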

3. Results

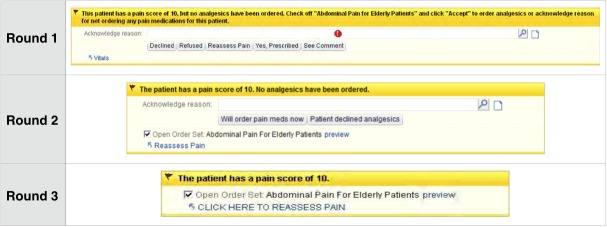

Three rounds of iterative usability testing, feedback, and redesign were completed for the 5 CDS interventions (► Figure 3 for an example of CDS changes across three rounds of iterative testing and redesign; additional CDS interventions are available upon request). The mean age of study participants was 33.7 years (SD 5.00). 38.5% of participants were women. The average user had 1.06 (SD 0.65) years’ experience using this system.

Fig. 3.

Screenshots of the Pain Assessment Alert (Interruptive), across three rounds of usability evaluation and redesign. These alerts appeared when clinicians entered the chart of geriatric ED patients who reported a pain score of 10, and prompted clinicians to use a specialized order set or to reassess or adjust a patient’s pain score in the EHR. With each iteration, the alert became smaller, more terse, and offered fewer options.

With each round of testing, members of the interdisciplinary team reviewed feedback and made changes to the CDS interventions. This iterative process substantially reduced the amount of text used in interventions, decreased the number of required responses or fields, and improved the linking of the intervention to actionable items (► Table 2 for select comments from the participants for each intervention, after each round, that motivated design changes).

Table 2.

Representative comments from physician testers, about each of the CDS interventions, through successive iterations of usability testing.

| Post-testing feedback | Round 1 | Round 2 | Round 3 |
|---|---|---|---|
| Pain Assessment Alerts | “Too complex; I assumed it was an error and ignored it.” | “If I click the box ‘Will order pain meds now’, it would only be helpful if it took me to where I can make the order.” | “Great, straightforward. I would want to do something about a pain score of 10.” |
| Pain HPI Keycept | “I didn’t fully understand this intervention. It’s a little much.” | “I actually liked this one. It was a reminder to do something about a pain score of 10.” | “Those are really good because they’re in your face, if someone had forgotten to order pain meds, then it would be helpful.” |
| One-Click Pain Score Update Button | N/A (this intervention was added in Round 2) | (Providers offered feedback that the idea of a one-click button for updating the pain score would be helpful) | “Before, you would click on the vitals and there would be 800 different boxes to check so it always takes a long time to find the pain assessment. So I liked that it is very easy to see the button and it takes you only to the pain scale quickly.” |
| Elderly Analgesia Order Set | “Good. Has all options laid out, including small doses. ‘Easy’ to order them all. Reminds to start low, slow.” | “Best practice pearls in the order set. I think this is a great reminder, especially for more junior people.” | “I like that the order set gives suggestions of pain control in the elderly.” |
| Discharge Pain Prompt | “A little different – comes in a different place than the BPA. Thought it was an error. Forced me to re-evaluate the pain score.” | “VERY annoying. Because it forces me to document in a specific way. I did document in the text. I felt it didn’t benefit the patient in any way.” | [Repeat-user]: “This obviously has worked for me because I am already programmed to update patients’ pain score before they go home.” [New user]: “I didn’t like it but it was good for me.” |

In collecting qualitative feedback from semi-structured interviews, several recurring comments emerged. Assessment and documentation of pain scores was often cited as a nursing responsibility, and several physicians expressed surprise and irritation at alerts prompting them to perform this function. Several physicians also noted that they suffered alert fatigue (the tendency to ignore alerts after excessive exposure desensitizes the user) from their real-world EHR experience, and stated they ignored CDS alerts in the testing session. Study participants often cited the favorability of turning reminders into actionable alerts with one-click, direct links to recommended activities, which was incorporated into CDS redesign.

System Usability Scores and Favorability Scores were noted to increase across each round of usability testing (► Table 3), with mean SUS rising from 74.8 after Round 1 to 81.2 after Round 2 to 88.9 after Round 3. (Scores below 50 are considered unacceptable, 50–62 low marginal, 63–70 high marginal, and above 70 acceptable; the maximum score is 100 [33].) Feedback regarding the pain keycept intervention explained its high favorability as due to minimal interference with physician workflow. Feedback also demonstrated that many of the interventions described as “annoying” by the subjects were also cited as “most helpful” in terms of promoting good clinical practice and the patients’ best interest.
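The acceptability ranges quoted above can be written as a small lookup, shown here as a sketch. The cut-points come from the ranges stated in the text; how to treat values that fall between the stated ranges (for example 62.5) is not specified, so the boundary handling below is an assumption, and the function name is hypothetical.

```python
def sus_band(score: float) -> str:
    """Map a SUS score (0-100) to the acceptability band described in the text."""
    if not 0 <= score <= 100:
        raise ValueError("SUS scores range from 0 to 100")
    if score < 50:
        return "unacceptable"
    if score < 63:
        # Assumption: values between 62 and 63 are grouped with low marginal.
        return "low marginal"
    if score <= 70:
        return "high marginal"
    return "acceptable"


# Round means reported in this study.
for round_number, mean_score in enumerate([74.8, 81.2, 88.9], start=1):
    print(round_number, mean_score, sus_band(mean_score))
```

Under this mapping, the mean SUS for all three rounds falls in the acceptable band, with Round 1 closest to the marginal range.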

Table 3.

Mean System Usability Scores (SUS) and Favorability scores (Fav.) with minimum and maximum scores, across three rounds of iterative usability testing.

| Iteration | SUS Mean (range) | Fav. Mean (range) | n |
|---|---|---|---|
| 1 | 75 (64–87) | 3.4 (2.2–4.2) | 5 |
| 2 | 81 (70–96) | 2.9 (1.8–3.8) | 5 |
| 3 | 89 (69–100) | 4.5 (4.4–4.7) | 7 |

Coding of the recorded screen captures allowed appearances of geriatric CDS ED pain interventions to be quantified. For each intervention, we tabulated whether the participant failed to notice or acknowledge it (miss rate) and whether the CDS generated the desired action (success rate) (► Table 4). A table of the coded interventions by rounds is available upon request. Overall, with each round of usability testing, physicians’ CDS acknowledgement rates increased (perhaps reflecting improved usability). However, with each round of usability testing, for many CDS interventions, the success rate (such as updating the pain score) decreased. An exception to this is the Discharge Pain Prompt, which was mandatory (a patient could not receive discharge papers unless the pain score was updated to a level less than 10).

Table 4.

Usability Session Coding, by physician misses (interventions not acknowledged despite appropriately appearing in scenarios) and successes (interventions leading to desired physician behavior after appropriately appearing in scenarios). (NB: Discharge Pain Prompts can have action rates above 100% because actions were defined as ordering pain medications and updating the pain score – both of which can be prompted by a single intervention.)

| CDSS Intervention | Miss Rate, Round 1 | Miss Rate, Round 2 | Miss Rate, Round 3 | Success Rate, Round 1 | Success Rate, Round 2 | Success Rate, Round 3 |
|---|---|---|---|---|---|---|
| 1a. 10/10 Pain Assessment Alert | 69.6% | 56.7% | 0.0% | 78.3% | 50.0% | 45.1% |
| 1b. Pain Re-Assessment Alert | 44.4% | 41.2% | 15.4% | 33.3% | 70.6% | 15.4% |
| 2. Pain Keycept (HPI) | 40.0% | 0.0% | 0.0% | 80.0% | 54.5% | 26.7% |
| 3. One-Click Pain Score Update | n/a | 0.0% | 0.0% | n/a | 41.7% | 52.6% |
| 4. Elderly Analgesia Order Set | 95.0% | 5.3% | 16.2% | 20.0% | 47.4% | 41.0% |
| 5. Discharge Pain Prompt | 10.0% | 0.0% | 0.0% | 70.0% | 120.0% | 150% |

4. Discussion

4.1 Significance and Implications

This is the first study to show that iterative usability testing can result in the development of more favorable clinical decision support interventions that have high usability levels for the management of geriatric patients in the ED with pain. This study further demonstrates that developing a new CDSS through iterative testing and feedback is feasible, but requires the investment of several weeks of testing, analysis and redesign. Increased favorability and usability of CDS interventions, however, may not correlate with the actions the interventions were designed to prompt.

When initially designing a CDSS around older adult pain management in the ED, we considered five interventions that we hypothesized would be usable and efficacious. As most authors have overlapping expertise in clinical informatics, decision support, emergency medicine, and geriatric pain care, we had some confidence that the interventions would be efficacious and usable with the earlier test sessions. After the first round of usability testing, however, we noted SUS scores averaged just above marginal, CDS acknowledgement for several interventions was low, and feedback suggested ample room for improvement.

When feedback was incorporated and the CDS interventions redesigned, through formative usability evaluation, we saw improvement – both from subsequent feedback and also our own subjective evaluation. The alerts were simpler and more actionable, yet less disruptive (for example, ► Figure 3). CDS acknowledgement climbed among physicians in subsequent testing rounds. Iterative usability testing and redesign improved CDS.

4.2 CDS Miss Rates and Success Rates

Rates of skipping the CDS declined across iterations, reflecting improved usability. However, three of our CDS interventions also saw decreasing influence on their desired actions – success rates for updating the pain score in response to initial or reassessment alerts fell, as did those for the HPI documentation keycept. We have several theories as to why this may be. One possibility is that the more favorable a CDS intervention is rated, the less likely it is to provoke behavioral change. Perhaps there is a trade-off between CDS favorability and CDS efficacy, at least as a new intervention is introduced in a trial setting. In a real clinical environment, over time, more favorable CDS interventions seem likely to be more effective.

Another possibility is that the test environment did not fully mirror the clinical practice environment – although we took pains to create realistic ED scenarios with patients at various stages of their workup, it remained a simulated environment of our EHR that mimicked the production environment in every way except for the presence of the CDSS. Still, asking a physician to respond to a simulated patient’s pain score is more problematic than, say, asking that physician to initiate the appropriate workup or therapy for a given scenario. For various reasons, recognizing and treating pain often depends on a degree of empathy by the treating clinician, which can be difficult to elicit in simulated encounters. The nature of the test environment may favor a more detached, clinical perspective that fails to properly elicit the desired response from the CDS, despite high rates of acknowledgment.

Finally, as we discovered during the usability sessions, clinicians have preconceived notions about clinical duties. In this case, many of the physician participants expressed that pain care is a nursing responsibility. Development of a geriatric pain care CDS intervention for physicians, while more favorable and more usable, may not result in the desired pain care actions if the physicians regard pain care as the duty of other clinicians.

4.3 EHR and CDS, in Context

While EHR adoption has surged in recent years, including in the ED environment, many physicians are dissatisfied with vendor software; one survey showed only a minority of physicians feel the Meaningful Use program will help improve care [34], and others suggest EHR adoption takes doctors away from the bedside and turns them into data-entry clerks [35]. Still, hospital administrators focused on the bottom line will note that attention to EHR implementation does not negatively affect ED performance metrics, including patient throughput and patient satisfaction scores [36].

Designing better CDS systems has the potential to improve both EHR usability and clinical care. While it is true that formative usability evaluation of CDS is time consuming and expensive, and that efforts like the work described here are just one aspect of the EHR experience that needs improvement, we would argue that similar efforts should be undertaken and shared. It is regrettable but not surprising that software vendors are not prioritizing user experience or clinical care; unless the regulatory environment or marketplace shifts drastically, we cannot rely on others to do this, and must instead advocate such processes for our patients and ourselves.

4.4 Alert Fatigue and Workarounds

Physician participants also commented that, based on their clinical experience, they had already developed alert fatigue and a tendency to ignore the characteristic yellow alerts of this EHR’s CDS. In our testing environment, only alerts from our pain CDSS appeared, but participants may have been predisposed to ignore them from past experience, limiting their efficacy.

In some instances, we also observed the beginning of workarounds – clinicians who responded to CDS by adjusting pain scores or ordering pain meds without the customary re-assessments of pain. Perhaps this was an artifact of the artificial testing environment – though the physicians were instructed to ask the facilitator for new information regarding the simulated patients. Another possibility is that some physicians were unaccustomed to adjusting pain scores (or indeed, adjusting any vital signs, as some physicians delegate these tasks to nursing). A formal requirements assessment prior to initiating this CDSS design process may have uncovered this practice variation, as well as other avenues for improving geriatric ED pain. Certainly in practice we would not expect clinicians to order pain meds or adjust pain scores without first talking with, or re-examining, the patient – but if the workflow is disrupted until such action is taken, the disruption may foster a workaround like this.

Another limitation of this research is that the test sessions were short – a single one-hour session involving 3 simulated patients. Over weeks or months of repeated firing, acceptability scores may decline, alert fatigue may take root, and the effectiveness of the CDS may fall further. A “commandment” of usable CDS is to monitor impact, get feedback and respond – this iterative design phase was just the beginning [22].

5. Conclusion

The usability evaluation process led to improved acknowledgement and favorability. The next step in development of this CDS should be additional usability evaluation in actual clinical care, leading to a trial of this CDSS in a real-world environment. A blinded, randomized, controlled trial may be feasible; a quasi-experimental design or cluster randomized controlled trial may suffice. Only after these trials can conclusions be drawn about the true usability and long-term effectiveness of this geriatric ED pain CDSS. With sufficient sample size and duration, we plan to measure users’ assessment of the CDSS, as well as the impact of the CDSS on how physicians manage pain in older adults.

Footnotes

Statement of Clinical Relevance

Clinical decision support systems (CDSS) have the potential to improve physician behavior with respect to recognized problems such as older adult oligoanalgesia. However, some CDSS efforts are undermined by poor usability. Pain interventions were developed, deployed in usability scenarios, and iteratively improved across three rounds of usability testing, leading to improved provider usability scores.

Statement about Authors’ Conflicts of Interest

The authors of this manuscript declare they have no conflicts of interest to disclose.

Statement Regarding the Protection and Privacy of Human Subjects

Prior to this undertaking, our research plan was reviewed by the Mount Sinai Program for the Protection of Human Subjects, and was given their approval to proceed.

References

- 1. Hwang U, Richardson LD, Harris B, Morrison RS. The quality of emergency department pain care for older adult patients. J Am Geriatr Soc 2010; 58: 2122–2128.

- 2. Platts-Mills TF, Esserman DA, Brown L, Bortsov AV, Sloane PD, McLean SA. Older US emergency department patients are less likely to receive pain medication than younger patients: results from a national survey. Ann Emerg Med 2012; 60: 199–206.

- 3. Fosnocht D, Swanson E, Barton E. Changing attitudes about pain and pain control in emergency medicine. Emerg Med Clin N Amer 2005; 23: 297–306.

- 4. Morrison RS, Magaziner J, McLaughlin MA, Orosz G, Silberzweig SB, Koval KJ, Siu AL. The impact of post-operative pain on outcomes following hip fracture. Pain 2003; 103(3): 303–311.

- 5. Dworkin R. Which individuals with acute pain are most likely to develop a chronic pain syndrome? Pain Forum 1997; 6: 127–136.

- 6. Duggleby W, Lander J. Cognitive status and postoperative pain: older adults. J Pain Sympt Manage 1994; 9: 19–27.

- 7. Lynch E, Lazor M, Gelis J, Orav J, Goldman L, Marcantonio E. The impact of postoperative pain on the development of postoperative delirium. Anesth Analg 1998; 86: 781–785.

- 8. The AGS guideline on the management of persistent pain in older persons. 2002; http://www.americangeriatrics.org/products/positionpapers/persistent_pain_guide.shtml. Accessed January 16, 2007.

- 9. American Geriatrics Society Panel on the Pharmacological Management of Persistent Pain in Older Persons. Pharmacological management of persistent pain in older persons. Pain Med 2009; 10: 1062–1083.

- 10. Jenkins HJ, Hancock MJ, French SD, Maher CG, Engel RM, Magnussen JS. Effectiveness of interventions designed to reduce the use of imaging for low-back pain: a systematic review. CMAJ 2015; 187: 401–408.

- 11. Gupta A, Ip IK, Raja AS. Effect of clinical decision support on documented guideline adherence for head CT in emergency department patients with mild traumatic brain injury. J Am Med Inform Assoc 2014; 21: e347–e351.

- 12. Britton DJ, Bloch RB, Strout TD. Impact of a computerized order set on adherence to Centers for Disease Control guidelines for the treatment of victims of sexual assault. J Emerg Med 2013; 44: 528–535.

- 13. Dashboard.HealthIT.gov [Internet]. Washington, D.C.: Health IT Adoption & Use Dashboard from U.S. HHS/ONC; [updated 2015 Apr; cited 2015 Aug 15]. Available from: http://dashboard.healthit.gov/HITA-doption/?view=0

- 14. Institute of Medicine (U.S.), and Committee on Patient Safety and Health Information Technology. Health IT and Patient Safety: Building Safer Systems for Better Care. Washington, D.C.: National Academies Press; 2012.

- 15. Romano MJ, Stafford RS. Electronic health records and clinical decision support systems: impact on national ambulatory care quality. Arch Intern Med 2011; 171(10): 897.

- 16. Jones SS, Rudin RS, Perry T, Shekelle PG. Health information technology: an updated systematic review with a focus on meaningful use. Ann Intern Med 2014; 160(1): 48–54.

- 17. Chaudhry B, Wang J, Maglione M, Monica W, Roth E, Morton SC, Shekelle PG. Systematic review: Impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med 2006; 144(10): 742–752.

- 18. Strom BL, Schinnar R, Bilker W, Hennessy S, Leonard CE, Pifer E. Randomized clinical trial of a customized electronic alert requiring an affirmative response compared to a control group receiving a commercial passive CPOE alert: NSAID – warfarin co-prescribing as a test case. J Am Med Inform Assoc 2010; 17(4): 411–415.

- 19. Strom BL, Schinnar R, Aberra F, Bilker W, Hennessy S, Leonard CE, Pifer E. Unintended effects of a computerized physician order entry nearly hard-stop alert to prevent a drug interaction: a randomized controlled trial. Arch Intern Med 2010; 170(17): 1578–1583.

- 20. Tamblyn R, Reidel K, Huang A, Taylor L, Winslade N, Bartlett G, Grad R, Jacques A, Dawes M, Larochelle P, Pinsonneault A. Increasing the detection and response to adherence problems with cardiovascular medication in primary care through computerized drug management systems: a randomized controlled trial. Med Decis Making 2010; 30(2): 176–188.

- 21. Gurwitz JH, Field TS, Rochon P, Judge J, Harold LR, Bell CM, Lee M, White K, LaPrino J, Erramuspe-Mainard J, DeFlorio M, Gavendo L, Baril JL, Reed G, Bates DW. Effect of computerized provider order entry with clinical decision support on adverse drug events in the long-term care setting. J Am Geriatr Soc 2008; 56(12): 2225–2233.

- 22. Bates DW, Kuperman GJ, Wang S, Gandhi T, Kittler A, Volk L, Spurr C, Khorasani R, Tanasijevic M, Middleton B. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc 2003; 10: 523–530.

- 23. Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ 2005; 330: 765.

- 24. Gold M, Hossain M, Charles DR, Furukawa MF. Evolving vendor market for HITECH-certified ambulatory EHR products. Am J Manag Care 2013; 11; 353–361.

- 25. Nielsen J. Usability 101: Introduction to Usability [Internet]. Fremont: Nielsen Norman Group; 2012 Jan [cited 2015 Aug 15]. Available from: http://www.nngroup.com/articles/usability-101-introduction-to-usability/

- 26. Horsky J, Schiff GD, Johnston D, Mercincavage L, Bell D, Middleton B. Interface design principles for usable decision support: a targeted review of best practices for clinical prescribing interventions. J Biomed Inform 2012; 45: 1202–1216.

- 27. Hwang U, Belland LK, Handel DA, Yadav K, Heard K, Rivera-Reyes L, Eisenberg A, Noble MJ, Mekala S, Valley M, Wingel G, Todd KH, Morrison RS. Is all pain is treated equally? A multicenter evaluation of acute pain care by age. Pain 2014; 155: 2568–2574.

- 28. Techsmith. Morae – usability testing software. http://www.techsmith.com/morae.asp. Accessed March 27, 2011.

- 29. Brooke J. SUS: a ’quick and dirty’ usability scale. In: Jordan PW, Thomas B, Weerdmeester BA, McClelland IL, editors. Usability Evaluation in Industry. London: Taylor and Francis; 1996. p. 189–194.

- 30. Bangor A, Kortum P, Miller J. Determining what individual SUS scores mean: adding an adjective rating scale. J Usability Studies 2009; 4: 114–123.

- 31. McCoy AB, Thomas EH, Krousel-Wood M, Sittig DF. Clinical decision support alert appropriateness: a review and proposal for improvement. Ochsner J 2014; 14(2): 195–202.

- 32. Ong M-S, Coiera E. Evaluating the effectiveness of clinical alerts: a signal detection approach. AMIA Annu Symp Proc 2011; 1036–1044.

- 33. Lewis JR, Sauro J. The factor structure of the system usability scale. HCI International 2009: Proceedings of the 1st International Conference on Human Centered Design; 2009; San Diego: Springer-Verlag; 94–103.

- 34. Emani S, Ting DY, Healey M, Lipsitz SR, Karson AS, Einbinder JS, Leinen L, Suric V, Bates DW. Physician beliefs about the impact of meaningful use of the EHR: a cross-sectional study. Appl Clin Inform 2014; 27: 789–801.

- 35. Bukata R. EHRs are Inevitable, Yet Studies Still Pose Serious Questions. Emer Phys Monthly 2014; 10: 4. Available from: http://epmonthly.com/article/ehrs-are-inevitable-yet-studies-still-pose-serious-questions/

- 36. Ward MJ, Landman AB, Case K, Berthelot J, Pilgrim RL, Pines JM. The effect of electronic health record implementation on community emergency department operational measures of performance. Ann Emerg Med 2014; 63: 723–730.