Summary

Background

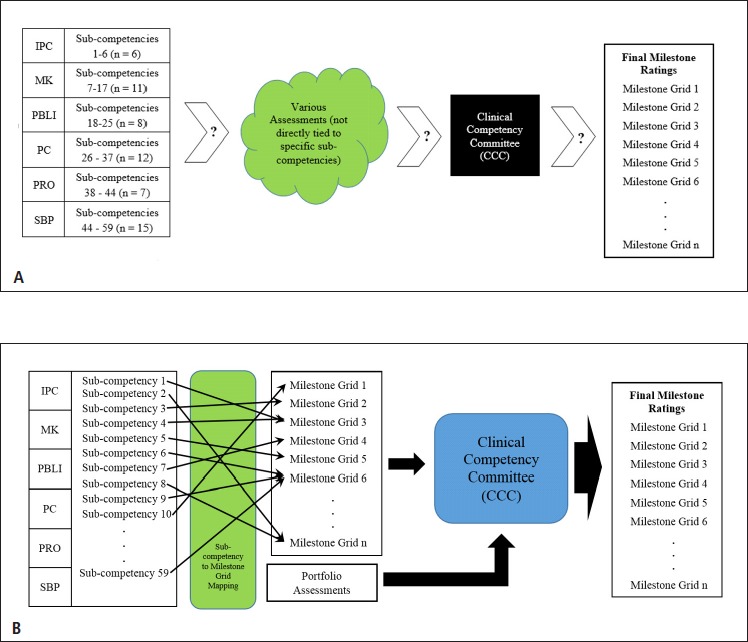

Milestones refer to points along a continuum of a competency from novice to expert. The resident and fellow assessment and program evaluation processes adopted by the ACGME mandate that programs report the educational progress of residents and fellows twice annually using Milestones developed by a specialty-specific ACGME working group of experts. Milestones in clinical training programs are largely unmapped to specific assessment tools. Residents and fellows are mainly assessed using locally derived assessment instruments. These assessments are then reviewed by the Clinical Competency Committee, which assigns and reports trainee ratings using the specialty-specific reporting Milestones.

Methods and Results

The challenge and opportunity facing the nascent specialty of Clinical Informatics is how to optimally utilize this framework across a growing number of accredited fellowships. The authors review how a mapped milestone framework, in which each required sub-competency is mapped to a single milestone assessment grid, can enable the use of milestones for multiple purposes, including individualized learning plans, fellow assessments, and program evaluation. Furthermore, such a mapped strategy will foster the ability to compare fellow progress within and between Clinical Informatics Fellowships in a structured and reliable fashion. Clinical Informatics currently has far less variability across programs than other specialties and thus could easily utilize a more tightly defined set of milestones with a clear mapping to sub-competencies. This approach would enable greater standardization of assessment instruments and processes across programs while allowing for variability in how those sub-competencies are taught.

Conclusions

A mapped strategy for Milestones offers significant advantages for Clinical Informatics programs.

Keywords: Education, clinical informatics, professional training, physician, general healthcare providers, training and education requirements

Background and History

Introduction

In 2014, Milestones for the new American Board of Medical Specialties (ABMS) Clinical Informatics (CI) subspecialty were developed. This paper outlines how graduate medical education has evolved from a focus on program resources to the assessment of individual resident performance outcomes and the birth of Milestones as the primary resident / fellow assessment reporting tool.

It then looks at the development of CI milestones as a case study in developing ACGME-compliant fellow assessment tools that are applicable to any CI fellowship program regardless of which Residency Review Committee provides program accreditation, are internally consistent, and are useful both as assessment instruments and for reporting fellow competence.

Background

At the turn of the 19th century, the idea of rigorous standards for the evaluation of physicians and other medical practitioners was only a vision held by a few far-sighted individuals. Medicine was in a “Wild West” stage, with many individuals believing in the benefit of an unregulated system.

Between 1910 and 1935, formal organizations in medicine, particularly the specialties of ophthalmology, otolaryngology, obstetrics and gynecology, and dermatology, began to organize into a system that would eventually become the accreditation and certification system we know today. We can trace back evaluation challenges that exist in organized medicine today to those early organizational efforts.

In 1897, the professional society for Otolaryngology created the Triological Society and proposed a prescribed period of postgraduate medical education [1]. The movement toward specialty training also took hold in other specialties resulting in the creation of the American Boards of Ophthalmology (1917), Otolaryngology (1924), Obstetrics and Gynecology (1927), and Dermatology (1932). The primary motivation for the creation of the original certification boards was the desire to improve the training of residents.

It was specified in the first Booklet of Information of The Board (Dermatology), published in 1932, that one of the purposes of the Board “is to improve the standards of practice of dermatology and syphilology by instruction in the specialty” [2].

The original boards developed standardized curriculum documents [3] and a process to identify graduate training programs that met the boards’ standards. Improving training and identifying acceptable training programs were completely integrated into the goals of certification boards and remain there to this day. Boards self-identified as agents to attest to the successful completion of a high-standard training program, not as an end in itself [1].

The evolution of Graduate Medical Education (GME) program accreditation moved from specialty content development by boards to the public listing of acceptable programs.

In 1933 Dr. Guy Lane (Secretary of the ABD) prepared a list of the fifteen 3-year institutions or hospitals in this country that were able to provide adequate training in dermatology or syphilology. This list of Opportunities for Post-Graduate Studies was sent to physicians on request [2].

Subsequently, the boards cooperated informally with the AMA’s Council on Medical Education that had experience in surveying and reviewing training programs.

Dr. Fox, Chairman of the Board (American Board of Dermatology), noted that, “Several Boards have accepted the Council’s (AMA-CME) offer and it is anticipated that others will follow suit. The Plan of Cooperation includes the setting of standards for and investigation of approved residencies, fellowships and graduate courses of instruction” [2].

In 1955, the Board of Dermatology joined the AMA Council on Medical Education and Hospitals in forming a Residency Review Committee (RRC) for Dermatology. In 1972 the Liaison Committee on Graduate Medical Education (LCGME) was established; it was reorganized in 1981 to become the Accreditation Council for Graduate Medical Education (ACGME). The purpose of forming these organizations was to support the boards’ goal of improving graduate medical education through an efficient and successful program evaluation process.

The Evaluation Environment

The boards focused initially on adherence by graduate medical education programs to curricular documents published by the boards. These early core content documents aligned the content of the training programs with the examination matters. This period also saw the first requirements for facilities and faculty. The structure carried forward to the RRCs for LCGME and ACGME with the assumption that proper resources and curricula would lead to an effective educational experience for residents and fellows resulting in successful practitioners. The actual assessment of residents and fellows was left to the individual program and resulted in a wide range of assessment methods and materials. The ACGME did not systematically collect outcome data on individual residents and fellows.

In the late 1980s, a discussion arose within the major GME organizations about the success of the accreditation and certification system in achieving the desired targets. The ABMS debated the meaning of certification and recertification and the proper role of the ABMS member boards in meeting their obligations to the public through recertification of diplomates in practice. Within the ACGME, the debate focused on the program framework as the evaluation process target and the accreditation process itself.

The result was the establishment of Maintenance of Certification (MOC) through a four-step process to replace the confirmation of licensure and recertification exams of the past. This program underwent significant revisions and continues to be the subject of major attention by the ABMS boards and their diplomates.

In 1999, the Accreditation Council for Graduate Medical Education (ACGME) established six domains of clinical competency for the profession (ACGME competencies):

- Patient care
- Medical knowledge
- Professionalism
- Practice-based learning and improvement
- Systems-based practice
- Interpersonal and communication skills

These competencies were subsequently adopted by the ABMS and the Association of American Medical Colleges. The common set of competencies created momentum for their adoption and their incorporation into training.

The 2009 decision by ACGME to begin a multiyear process of restructuring its accreditation system – named the Next Accreditation System (NAS) – focused on educational outcomes related to the six competencies [4]. NAS collected program data annually, visited sites every decade, and established a new evaluation element called “milestones” as a measure for individual resident outcomes.

Milestones continue to be developed by a Milestone Working Group co-convened by the ACGME and the relevant ABMS specialty board for each specialty. Groups are composed of ABMS specialty board representatives, program director association members, specialty college members, ACGME Review Committee members, residents, fellows, and others.

This paper will outline the new evaluation process that has been adopted by the ACGME and thoughtfully discuss the implementation of the milestone development process in the new subspecialty of Clinical Informatics. It will further address the challenges in their development and implementation at the subspecialty and program levels. The paper will not address the issues of effectively and efficiently evaluating individuals in a complex profession such as medicine.

Core Concepts

Competency

Competency is a term often used to refer to a specific area of performance that can be described and measured, such as the ACGME competencies that were identified by the Outcomes Project of the ACGME [5].

For the purposes of this article, the 59 clinical informatics sub-competencies specified in the Clinical Informatics Fellowship Program Requirements [6] are referred to as “program sub-competencies”.

Milestones

Milestones, as suggested by their name, imitate a well-known tool in Pediatrics, the developmental milestones, to aid in the evaluation of trainees and the training program. Developmental milestones indicate the normal age by which children are expected to reach certain developmental stages. Like children, trainees are not expected to have achieved all the milestones expected of a “fully-developed”, trained expert, but are expected to add these skills, behaviors, knowledge, and attributes to their repertoire over time during their training and beyond through life-long learning.

In the context of residencies and fellowships, milestones refer to points along a continuum of a competency or sub-competency from novice to expert that characterize expectations for learners at various stages of developing expertise in that competency [5]. For example, a milestone for Clinical Informatics could be the level of performance of a fellow on an ACGME sub-competency in Interpersonal Communication Skills: “Communicate effectively with patients, families, other health professionals (interprofessional team members), health related agencies and the public, as appropriate, across a broad range of socioeconomic and cultural backgrounds.” Fellows are expected to progress in the complexity and difficulty of the skills and behaviors over time.

Milestone Grids

Milestone grids provide specific behavioral descriptors which allow evaluators to specify more precisely where that learner is on the continuum of capability for a competency or set of sub-competencies. Taken together, the milestone grids should be collectively exhaustive in that each required sub-competency is uniquely mapped to one milestone grid and each milestone grid is mapped to at least one sub-competency. If these two conditions are met, the union of all milestone grids will cover all of the sub-competencies.
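The two mapping conditions above can be checked mechanically. The following is a minimal sketch, not part of the original work: the function names and the sub-competency labels (`ICS-a`, `ICS-b`, `ICS-c`) are hypothetical, and the grid names are used only as example labels.

```python
from collections import Counter


def check_grid_mapping(sub_competencies, grids, assignments):
    """Report violations of the two mapping conditions.

    assignments is a list of (sub_competency, grid) pairs.
    """
    counts = Counter(sc for sc, _ in assignments)
    used_grids = {grid for _, grid in assignments}
    return {
        # Condition 1: every sub-competency maps to exactly one grid.
        "unmapped": sorted(sc for sc in sub_competencies if counts[sc] == 0),
        "multiply_mapped": sorted(sc for sc in sub_competencies if counts[sc] > 1),
        # Condition 2: every grid covers at least one sub-competency.
        "empty_grids": sorted(g for g in grids if g not in used_grids),
    }


def is_valid_mapping(report):
    """True when no violation of either condition was found."""
    return not any(report.values())


# Hypothetical labels, for illustration only.
sub_competencies = ["ICS-a", "ICS-b", "ICS-c"]
grids = ["ICS CI 1", "ICS CI 2"]
assignments = [
    ("ICS-a", "ICS CI 1"),
    ("ICS-b", "ICS CI 1"),
    ("ICS-c", "ICS CI 2"),
]

report = check_grid_mapping(sub_competencies, grids, assignments)
print(is_valid_mapping(report))
```

A program could run such a check whenever sub-competencies or grids are revised, so that gaps or double assignments are caught before assessment forms are built.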

For milestone grids to be practical and useful for resident / fellow assessments, each individual milestone grid must exhibit “horizontal coherence” by meeting the following criteria:

- The behavioral descriptors for the level of competency increase from novice to master from left to right and incorporate all competencies represented by that grid.
- Specific behaviors are described which demonstrate the level of capability for all competencies mapped to the grid.

The ACGME further elaborates on the purpose and use of milestones:

ACGME posts reporting Milestones “that each program must use to judge the developmental progress of its residents/fellows twice per year, and on which each program must submit reports to the ACGME’s Accreditation Data System.”

The reporting Milestones are designed to guide a synthetic judgment of progress roughly twice a year. Utilizing language from the Milestones may be helpful as part of a mapping exercise to determine what competencies are best covered in specific rotation and curricular experiences. The reporting Milestones can also be used for self-assessment by a resident/fellow and in creating individual learning plans. Residents and fellows should use the Milestones for self-assessment with input and feedback from a faculty advisor, mentor, or program director [7].

While programs are required to report milestone data twice a year, there is no requirement that milestone grids be used as assessment instruments. While this permits great flexibility for individual programs to create their own assessment instruments, the variability of approaches raises concerns about comparability of assessment data within and among programs.

Direct mapping of competencies to milestone grids offers some advantages (Table 1). However, this remains problematic, since milestones were often not written with a view toward such a mapping and the diversity of existing assessment approaches makes a migration toward mapped milestones difficult. These mapped and unmapped approaches are displayed in Figure 1. As a new subspecialty with a small number of accredited programs, Clinical Informatics may have a much easier time adopting a mapped assessment strategy. However, milestone assessments alone cannot fully capture the performance of fellows. It is therefore important for faculty to base their ratings on direct observation and for programs to include other “portfolio” elements, particularly with regard to work on longitudinal projects, as this is an important requirement of Clinical Informatics Fellowship programs.

Table 1.

Pros and Cons of Unmapped versus Mapped Milestones

| | Unmapped Milestones | Mapped Milestones |
|---|---|---|
| Pros | | |
| Cons | | |

Fig. 1.

- “Unmapped” Assessment (i.e., sub-competencies not directly tied to specific assessment tools)

- “Mapped” Assessment (i.e., each sub-competency mapped directly to a specific Milestone grid)

Creating Clinical Informatics Milestones Grids

Individual CI programs can be accredited by any of nine participating ACGME Residency Review Committees (Anesthesiology, Diagnostic Radiology, Emergency Medicine, Family Medicine, Internal Medicine, Medical Genetics, Pathology, Pediatrics, or Preventive Medicine). Starting in 2018, only fellows who graduated from an ACGME-accredited fellowship will be eligible to sit for the Clinical Informatics Board Examination administered by the American Board of Preventive Medicine (ABPM) [8]. To graduate successfully, trainees must have their performance measured during their time in training; as a result, a need to create the Clinical Informatics Milestones was identified in 2014.

The ACGME created a working group of experts in education in Clinical Informatics, which was advised by a group of educational experts who had been involved in Milestone creation for other specialties. These expert groups joined for a daylong meeting in Chicago to propose the initial Clinical Informatics Milestones. The work of the group was organized along the six ACGME core competencies with the goal of providing Milestones for each of the core competencies. At the same time, attention was paid to achieving goals for trainees, programs, and the accreditation process (Table 2). The milestones developed by this ACGME committee will be the standard for all CI fellowship programs without reference to their primary sponsor at the institutional level, and each of the nine RRCs which provide program accreditation will use this common set of milestones in its review process.

Table 2.

Intended effect of Milestones on trainees, training programs, and the accreditation process

| Stakeholder | Intended effect |
|---|---|
| Trainees | Outline and make transparent performance expectations |
| | Enhance the ability to perform self-assessment |
| | Improve self-directed learning |
| | Improve feedback by trainees for programs to facilitate educational experiences directed towards achieving milestones |
| Training Programs | Provide a detailed description of expectations for trainees based on their length in the program to aid the competency committee |
| | Encourage improved assessment processes (Example: Develop surveys for supervising physician to include milestones) |
| | Create triggers to identify underperforming trainees |
| | Aid in the development of new learning opportunities and training settings |
| | Improve curricula |
| | Creation and publication of performance measures for training programs |
| Accreditation process | Increase transparency and accountability of training programs |
| | Use in the determination for site visit frequency |

To align with the six ACGME core competencies, the existing program requirements for fellowships in Clinical Informatics were reviewed to identify requirements that matched each core competency (Table 3) [9]. Notably, not all program requirements were used; certain requirements were selected not only because they reflect the core competencies but also because they are suitable for measurement in trainees. The final work product of the group was published on the ACGME web site [10].

Table 3.

Areas of Clinical Informatics competency, skill, and attributes in relationship to the six ACGME core competencies

| ACGME Core Competency | Clinical Informatics Area |
|---|---|
| Patient Care | Technology Assessment |
| | Clinical Decision Support Systems |
| | Impact of Clinical Informatics on Patient Care |
| | Project Management |
| | Information System Lifecycle |
| | Assessing User Needs |
| Medical Knowledge | Clinical Informatics Fundamentals and Programming |
| | Leadership and Change Management |
| Systems-based Practice | Patient Safety and Unintended Consequences |
| | Resource Utilization |
| | Workflow and Data Warehouse/Repository |
| Practice-based Learning and Improvement | Recognition of Errors and Discrepancies |
| | Scholarly Activity |
| Professionalism | Demonstrates honesty, integrity, and ethical behavior |
| | Demonstrates responsibility and follow-through on tasks |
| | Gives and receives feedback |
| | Demonstrates responsiveness and sensitivity to individuals’ distinct characteristics and needs |
| | Understands and practices information security and privacy |
| Interpersonal and Communication Skills | Effective Communications with Interprofessional Teams |
| | Communication with Patients and Families |

The Clinical Informatics Milestones developed by ACGME do not cover all the content outlined in the Core Content outlines for the Clinical Informatics subspecialty [11], nor do they address the complete training requirements outlined in the program requirements [5]. It will be left to the program directors in the new subspecialty to expand and to enhance the ACGME Milestones to achieve an even better definition of the requirements for trainees and expectations for training programs.

During the summer of 2014, as the Clinical Informatics fellowship program at the University of Arizona (UA) composed the ACGME accreditation application for a Clinical Informatics Fellowship, the Clinical Informatics Milestone Project had not yet completed its work on the creation of Clinical Informatics Milestones. Since UA’s CI fellow assessment processes were built around milestone assessments, UA created its own “local milestones” with the intention that these would be replaced by the Clinical Informatics Milestone Project versions when they became available.

The creation of local milestones began with a careful review of the 59 program sub-competencies specified in the ACGME Clinical Informatics Fellowship Program Requirements [6]. To avoid creating a separate milestone grid for each clinical informatics sub-competency, similar sub-competencies within each of the six major ACGME competency sections were grouped into ‘clusters’, yielding a total of 18 milestone grids which met the criteria described above. Namely:

- each sub-competency specified in the Clinical Informatics Fellowship Program Requirements is mapped to exactly one milestone grid, and
- each milestone grid is mapped to one or more sub-competencies.

Table 4 depicts a milestone grid which can be used to assess five of the sub-competencies of the ACGME “Interpersonal Communication Skills” competency. Since UA applied to the Internal Medicine (IM) RRC for accreditation, grids were matched to a specific IM Subspecialty Milestone grid whenever possible. In those instances where no IM Subspecialty Milestone grid matched a cluster, a grid was created de novo (Table 5). As an additional ‘calibration check’, Family Practice and Pediatric national milestones were also reviewed but did not offer significant content beyond the Internal Medicine milestones. It is important to note that the final Clinical Informatics grids are designed specifically for the Clinical Informatics subspecialty and as such can be used regardless of which specialty RRC provides program accreditation for an individual program. Each grid incorporates detailed descriptions of what behaviors should be observed for that specific sub-competency ‘cluster’ from novice to expert.

Table 6.

Individualized Learning Plan Worksheet Questions


Table 5.

Listing of Milestone Grids Mapped to the Clinical Informatics Program Sub-competencies

| Competency | IM Grid* | CI Grid** | Grid Title |
|---|---|---|---|
| Interpersonal and Communication Skills | IM ICS 1 | ICS CI 1 | Communicate effectively with patients, families, other health professionals (interprofessional team members), health related agencies and the public, as appropriate, across a broad range of socioeconomic and cultural backgrounds. |
| | IM ICS 2 | ICS CI 2 | Appropriate utilization and completion of health records. |
| Medical Knowledge | IM MK 1 | MK CI 1 | Possesses clinical informatics knowledge. |
| | None | MK CI 2 | Knowledge and application of health care environment, information systems management skills and leadership skills. |
| | None | MK CI 3 | Impact of information systems and processes on decision making, risk management, safety and quality. |
| | None | MK CI 4 | Clinical information systems impact, adoption and improvement. |
| Practice-based Learning and Improvement | IM PBLI 1 | PBLI CI 1 | Monitors practice with a goal for improvement. |
| | IM PBLI 2 | PBLI CI 2 | Learns and improves via performance audit. |
| | IM PBLI 3 | PBLI CI 3 | Learns and improves at the point of care. |
| Patient Care and Procedural Skills | IM PC 1 | PC CI 1 | Gather and synthesize essential and accurate information to define clinical informatics problem(s). |
| | IM PC 2 | PC CI 2 | Develop and achieve comprehensive management plan for clinical informatics problem(s). |
| | IM PC 3 | PC CI 3 | Manage clinical informatics systems and processes with progressive responsibility and independence. |
| | IM PC 4 | PC CI 4 | Skill in the fundamentals of clinical informatics projects, processes and implementations. |
| Professionalism | None | PRO CI 1 | Is sensitive to the impact of information systems on the individual, systems, organizations, and society at-large. |
| | IM PROF 1 IM PROF 3 | PRO CI 3 | Responds to unique characteristics and needs of each patient or system user. |
| Systems-based Practice | IM SBP1 | SBP CI 1 | Work effectively within an interprofessional team (e.g., with peers, consultants, nursing, ancillary professionals, and other support personnel). |
| | IM SBP2 | SBP CI 2 | Recognizes system error and advocates for system improvement. |
| | IM SBP3 | SBP CI 3 | Identifies forces that impact the cost of health care, and advocates for and practices cost-effective care. |

* IM Grid = Internal Medicine Subspecialty Grid on which the Clinical Informatics Grid was based. In those instances where an appropriate grid was not available from the Internal Medicine Subspecialty Grids, “None” is indicated.

** CI Grid = Clinical Informatics Grid designation

For a full listing of these local milestones, see the supplementary online material.

Using Clinical Informatics Milestones at the Program Level

A properly composed set of Milestone Grids is essential for the assessment of learners in each of the following areas:

Fellow Self-Assessment

The CI Fellowship Program Requirements specify that one of the core responsibilities of the CI Fellowship Program Director is to “ensure that each fellow’s individualized learning plan includes documentation of Milestone evaluation (page 7)” [6]. These requirements further state that “Each fellow must have an individualized learning plan that allows him or her to demonstrate proficiency in all required competencies within the specified length of the educational program (page 17)” [6].

Using milestone grids, an individualized learning plan (ILP) can be authored collaboratively for each fellow simply and effectively. Upon matriculation into the fellowship, each fellow completes a self-assessment using all 18 grids, which forms the basis for their individualized learning plan. Each fellow then completes an ILP worksheet by addressing the questions depicted in Table 6; the worksheet is then reviewed, discussed, and finalized with the Program Director.

Assessment of Fellows on Rotations

Each rotation must have clearly stated learning objectives that ideally are derived directly from the CI Fellowship sub-competencies, ensuring that each sub-competency is assigned to at least one rotation (or other training element, such as didactic conferences) and that each rotation has one or more sub-competencies assigned to it.

Using the above information, a complete list of all learning objectives for rotations can be produced. The assessment of a fellow on a rotation is composed of the union of milestone grids which are mapped to the learning objectives for that rotation (since each learning objective is mapped to a single specific milestone grid). This can be augmented by additional questions regarding strengths, weaknesses, etc. based on unique features of the training program and culture of the sponsoring institution. Implementation of this methodology is quite simple within most graduate medical education curricular systems which are engineered to convey milestone assessments in this fashion to those responsible for evaluating fellows.
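As an illustration of how a rotation's assessment form could be assembled from the mapping, the sketch below takes the union of the grids mapped to a rotation's learning objectives and also lists sub-competencies that no rotation covers. It assumes that learning objectives are the sub-competencies themselves; the function names, rotation names, and sub-competency labels are hypothetical.

```python
def grids_for_rotation(objectives, objective_to_grid):
    """Return the distinct milestone grids for a rotation, in first-seen order."""
    grids = []
    for objective in objectives:
        grid = objective_to_grid[objective]  # each objective maps to one grid
        if grid not in grids:
            grids.append(grid)
    return grids


def uncovered_sub_competencies(all_sub_competencies, rotations):
    """Return sub-competencies that no rotation lists as a learning objective."""
    covered = {obj for objectives in rotations.values() for obj in objectives}
    return sorted(set(all_sub_competencies) - covered)


# Hypothetical data, for illustration only.
objective_to_grid = {
    "PC-a": "PC CI 1",
    "PC-b": "PC CI 1",
    "SBP-a": "SBP CI 2",
    "MK-a": "MK CI 1",
}
rotations = {
    "Hypothetical rotation A": ["PC-a", "PC-b", "SBP-a"],
    "Hypothetical rotation B": ["SBP-a"],
}

form = grids_for_rotation(rotations["Hypothetical rotation A"], objective_to_grid)
gaps = uncovered_sub_competencies(objective_to_grid.keys(), rotations)
print(form, gaps)
```

Because two objectives in rotation A share a grid, the evaluator sees that grid only once; the `gaps` list flags sub-competencies that still need a rotation or other training element.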

The ACGME notes that “It is imperative that programs remember that the Milestones are not inclusive of the broader curriculum, and limiting assessments to the Milestones could leave many topics without proper and essential assessment and evaluation” [7]. Milestones thus can serve as one element of a portfolio of assessments including project reports, reflective writing assignments, conference presentation evaluations, performance in didactic and/or online instruction, etc.

Semi-Annual and Summative Reviews of Fellows

A core requirement of the CI Fellowship Program Requirements mandates that the faculty Clinical Competency Committee (CCC) “review all resident evaluations semi-annually and prepare and assure the reporting of Milestones evaluations of each resident semi-annually to ACGME (page 18)” [6].

Prior to each semi-annual review, each fellow repeats the self-assessment for all milestone grids. All milestone grid assessments received from assessors across all rotations / activities during the assessment period are summarized using a simple average and standard deviation for each milestone grid across all assessments received. The CCC reviews all information and issues a final rating for each grid. During subsequent individual meetings with each fellow, the matriculation, prior semiannual, and current ratings provide a detailed snapshot of progress and the ILP can be revised accordingly.
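The per-grid summary described above can be sketched as follows. This is an illustration under stated assumptions rather than the program's actual tooling: ratings are assumed to be numeric levels, the sample standard deviation is used (the text does not say whether sample or population is intended), and the function name and data are hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev


def summarize_ratings(assessments):
    """Summarize (grid, rating) pairs as count, mean, and sample SD per grid."""
    by_grid = defaultdict(list)
    for grid, rating in assessments:
        by_grid[grid].append(rating)

    summary = {}
    for grid, ratings in by_grid.items():
        # A standard deviation is undefined for a single rating.
        sd = stdev(ratings) if len(ratings) > 1 else None
        summary[grid] = {"n": len(ratings), "mean": mean(ratings), "sd": sd}
    return summary


# Hypothetical ratings received during one assessment period.
assessments = [
    ("PC CI 1", 2),
    ("PC CI 1", 3),
    ("PC CI 1", 4),
    ("MK CI 1", 3),
]

summary = summarize_ratings(assessments)
for grid, stats in summary.items():
    print(grid, stats)
```

Reporting the number of ratings alongside the mean helps the CCC judge how much weight a summary deserves before issuing its final rating.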

Additional core requirements include that “the program director must provide a summative evaluation” and “the specialty-specific milestones must be used as one of the tools to ensure fellows are able to practice core professional activities without supervision upon completion of the program (page 19)” [6]. The final CCC ratings for each milestone grid assigned during each of the four semi-annual reviews are depicted on the semi-annual evaluation, which reflects the competency ratings (including trajectory and velocity) for each milestone grid.

Analysis of National Clinical Informatics Milestone Grids

As noted above, in October of 2014, the Clinical Informatics Milestone Project released a set of national milestones for Clinical Informatics. To maintain our mapped strategy, a mapping from the Clinical Informatics Fellowship sub-competencies to the newly published national milestones was required, but we discovered quickly that this mapping was problematic:

For many CI program sub-competencies, the mapping was difficult and ambiguous. For example, the initial mappings by two of the authors, a CI Fellowship Program Director (HS) and a former Executive Director of the American Board of Emergency Medicine familiar with Clinical Informatics (BM), were substantially different, with agreement on only 41% of the sub-competency-to-milestone-grid assignments.

Some sub-competencies did not appear to relate to any of the available grids.

Many CI program sub-competencies did not map easily onto a specific milestone grid. Some mapped most clearly to a milestone grid in an ACGME competency category other than that of the sub-competency (42% for HS and 63% for BM). For example, both authors mapped the Patient Care sub-competency “Demonstrate skill in fundamental programming, database design, and user interface design” to the national milestone grid for Medical Knowledge (MK1: Clinical Informatics Fundamentals and Programming) reflecting either inherent ambiguity or a potential incorrect categorization of the national milestone.
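The two kinds of percentages reported above (agreement between raters, and mappings that cross competency categories) can be computed along the following lines. This is a minimal sketch with hypothetical identifiers and data; it does not reproduce the authors' figures, and simple percent agreement does not correct for chance agreement.

```python
def percent_agreement(map_a, map_b):
    """Percentage of shared sub-competencies assigned to the same grid by both raters."""
    shared = map_a.keys() & map_b.keys()
    if not shared:
        raise ValueError("the two mappings share no sub-competencies")
    agree = sum(1 for sc in shared if map_a[sc] == map_b[sc])
    return 100.0 * agree / len(shared)


def cross_category_rate(mapping, sub_competency_category, grid_category):
    """Percentage of sub-competencies mapped to a grid in a different category."""
    if not mapping:
        raise ValueError("mapping is empty")
    crossed = sum(
        1
        for sc, grid in mapping.items()
        if sub_competency_category[sc] != grid_category[grid]
    )
    return 100.0 * crossed / len(mapping)


# Hypothetical mappings from two raters, for illustration only.
rater_a = {"s1": "MK1", "s2": "PC1", "s3": "PC2", "s4": "SBP1"}
rater_b = {"s1": "MK1", "s2": "PC2", "s3": "PC2", "s4": "PROF1"}
sub_competency_category = {"s1": "PC", "s2": "PC", "s3": "PC", "s4": "SBP"}
grid_category = {"MK1": "MK", "PC1": "PC", "PC2": "PC", "SBP1": "SBP", "PROF1": "PROF"}

agreement = percent_agreement(rater_a, rater_b)
crossed_a = cross_category_rate(rater_a, sub_competency_category, grid_category)
crossed_b = cross_category_rate(rater_b, sub_competency_category, grid_category)
print(agreement, crossed_a, crossed_b)
```

In this toy data, both raters place the Patient Care item `s1` on a Medical Knowledge grid, which is the kind of cross-category assignment described in the example above.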

Discussion

Milestones are a tool to measure and/or report fellow and fellowship performance. The utility of milestone grids in individual programs beyond simple reporting is directly related to the ability to provide a clear and unambiguous map from the sub-competencies to the milestone grids. Furthermore, the lack of a clear map will significantly impair the ability to provide meaningful data across programs, since each program would be likely to have substantially different maps.

In many clinical specialties, and particularly in those with multiple subspecialties, the milestone grids are intentionally written in broad, ambiguous terms to allow for variability across multiple program settings and subspecialties (unmapped approach). However, Clinical Informatics has far less variability across programs and thus could easily utilize a more tightly defined set of milestones with a clear mapping to sub-competencies (mapped approach) while allowing for variability in how those sub-competencies are taught across programs. The small number of currently accredited programs offers a window of opportunity to revise the milestones to map the expected outcomes more accurately.

The question facing us is whether CI Fellowships should use a ‘mapped’ or ‘unmapped’ strategy. CI Fellowships are in the unique position of having time to make such a choice. All of the other existing specialties may evolve over time to a mapped strategy. The ACGME milestones will evolve over time with use and experience and raising this question now is a timely step in the evolution of the new specialty of Clinical Informatics.

Our recommendation is for the Clinical Informatics Program Directors to define and adopt a mapped strategy and enter into conversations with the ACGME to implement this approach.

Table 4.

Sample milestone grid for the ACGME Competency of Interpersonal and Communication Skills covering five specific Clinical Informatics Program Sub-competencies

| Not Yet Assessable | Level 1 | Level 2 | Level 3 | Level 4 | Level 5 |
|---|---|---|---|---|---|
| Does not consult patient or team for input and preferences for plan of care. Does not engage patient or team members in shared decision-making. Engages in nonproductive relationships with patients, caregivers or other health professionals. | Engages patients and team members in discussions of care or project plans and respects patients and team member preferences when offered by them, but does not actively solicit preferences. Attempts to develop therapeutic relationships with patients, caregivers and team members but is not always successful. Defers difficult or ambiguous conversations to others when serving as a liaison among patients, administrators, and clinicians. | Engages patients and team members in shared decision-making in uncomplicated conversations. Requires assistance facilitating discussions in difficult or ambiguous conversations or other aspects of serving as a liaison among patients, families, IT professionals, administrators, and clinicians. Requires guidance or assistance to engage in communication with persons of different professional backgrounds. | Identifies and incorporates patient and/or team member preferences in shared decision-making in complex patient care or project conversations. Quickly establishes effective relationships with patients, caregivers and team members, including persons of different professional backgrounds. Able to function independently and effectively as a liaison among patients, families, IT professionals, administrators, and clinicians. | Role models effective communication and development of therapeutic relationships in both routine and challenging situations. Models cross-cultural and interprofessional communication and establishes effective relationships with persons of diverse professional backgrounds. Assists others with effective communication and development of effective relationships with patients, families, IT professionals, administrators, and clinicians. | |

Clinical Informatics Sub-competencies Covered (with reference to Program Requirements):


Footnotes

Conflicts of Interest: The authors declare that they have no conflicts of interest in the research.

Protection of Human and Animal Subjects: This work did not involve human or animal subjects.

Clinical Relevance Statement: This paper provides specific suggestions for improving Clinical Informatics Fellowship assessment processes and tools at the local and national level. These improvements would foster greater accuracy and consistency of assessment data, which in turn would inform improvements in Clinical Informatics Fellowship requirements and fellow assessment tools.

References

- 1. Cantrell RW, Goldstein JC. The American Board of Otolaryngology, 1924–1999: 75 years of excellence. Arch Otolaryngol Head Neck Surg 1999; 125(10): 1071-1079.

- 2. Livingood CS. History of the American Board of Dermatology, Inc. (1932–1982). J Am Acad Dermatol 1982; 7(6): 821-850.

- 3. Cordes FC, Rucker CW. History of the American Board of Ophthalmology. Trans Am Ophthalmol Soc 1961; 59: 295–332.

- 4. Nasca TJ, Philibert I, Brigham T, Flynn TC. The next GME accreditation system--rationale and benefits. N Engl J Med 2012; 366(11): 1051-1056.

- 5. Sklar DP. Competencies, milestones, and entrustable professional activities: what they are, what they could be. Acad Med 2015; 90(4): 395-397.

- 6. ACGME Program Requirements for Graduate Medical Education in Clinical Informatics. (Review Committees for Anesthesiology, Diagnostic Radiology, Emergency Medicine, Family Medicine, Internal Medicine, Medical Genetics and Genomics, Pathology, Pediatrics, or Preventive Medicine) [Internet]. Chicago, IL: Accreditation Council for Graduate Medical Education; 7/1/2015 [updated 2/3/2014; cited 12/12/2015]. Available from: https://www.acgme.org/acgmeweb/Portals/0/PFAssets/ProgramRequirements/381_clinical_informatics_07012015.pdf.

- 7. Frequently Asked Questions: Milestones [Internet]. Chicago, IL: Accreditation Council for Graduate Medical Education (ACGME) [updated 9/2015; cited 12/12/2015]. Available from: https://www.acgme.org/acgmeweb/Portals/0/MilestonesFAQ.pdf.

- 8. Lehmann CU, Shorte V, Gundlapalli AV. Clinical informatics sub-specialty board certification. Pediatr Rev 2013; 34(11): 525-530.

- 9. Safran C, Shabot MM, Munger BS, Holmes JH, Steen EB, Lumpkin JR, et al. Program requirements for fellowship education in the subspecialty of clinical informatics. J Am Med Inform Assoc 2009; 16(2): 158-166. Erratum in: J Am Med Inform Assoc 2009; 16(4): 605. PubMed PMID: 19074295; PubMed Central PMCID: PMC2649323.

- 10. The Clinical Informatics Milestone Project. [Internet]. Chicago, IL: A Joint initiative of The Accreditation Council for Graduate Medical Education; The American Board of Anesthesiology; The American Board of Emergency Medicine; The American Board of Family Medicine; The American Board of Genetics and Genomics; The American; 10/2014 [cited 12/12/2015]. Available from: https://www.acgme.org/acgmeweb/Portals/0/PDFs/Milestones/ClinicalInformatics.pdf.

- 11. Gardner RM, Overhage JM, Steen EB, Munger BS, Holmes JH, Williamson JJ, et al. Core content for the subspecialty of clinical informatics. J Am Med Inform Assoc 2009; 16(2): 153-157.