Abstract

Using a generalized random recurrent neural network model, and by extending our recently developed mean-field approach, we study the relationship between the network connectivity structure and its low dimensional dynamics. Each connection in the network is a random number with mean 0 and variance that depends on pre- and post-synaptic neurons through a sufficiently smooth function g of their identities. We find that these networks undergo a phase transition from a silent to a chaotic state at a critical point we derive as a function of g. Above the critical point, although unit activation levels are chaotic, their autocorrelation functions are restricted to a low-dimensional subspace. This provides a direct link between the network's structure and some of its functional characteristics. We discuss example applications of the general results to neuroscience where we derive the support of the spectrum of connectivity matrices with heterogeneous and possibly correlated degree distributions, and to ecology where we study the stability of the cascade model for food web structure.

I. INTRODUCTION

Advances in measurement techniques and statistical inference methods allow us to characterize the connectivity properties of large biological systems such as neural and gene regulatory networks [1–4]. In many cases connectivity is shown to be well modeled by a combination of random and deterministic components. For example, in neural networks, the location of neurons in anatomical or functional space, as well as their cell-type identity influences the likelihood that two neurons are connected [2, 5, 6].

For these reasons it has become increasingly popular to study the spectral properties of structured but random connectivity matrices using a range of techniques from mathematics and physics [7–14]. In most cases, the spectrum of the random matrix of interest is studied independently of the dynamics of the biological network it implies. Therefore, these results can be used only to make statements about the dynamics of a linear system where knowing the eigenvalues and eigenvectors is sufficient to characterize the dynamics.

Here we study the dynamics of nonlinear random recurrent networks with a continuous synapse-specific gain function that can depend on the pre- and post-synaptic neurons’ locations in an anatomical or functional space. These networks become spontaneously active at a critical point, derived here, that is directly related to the boundary of the spectrum of a new random matrix model. Given the gain function, we predict analytically the network's leading principal components in the space of individual neurons’ autocorrelation functions.

In the context of analysis of single and multi-unit recordings our results offer a mechanism for relating structured recurrent connectivity to functional properties of individual neurons in the network; and suggest a natural reduced space where the system's trajectories can be fit by a simple state-space model. This approach has been used to explain the dynamics of neurons in motor cortex by comparing the results of training recurrent artificial neural networks (“reservoir computing”) to neural data [15, 16]. These applications have thus far assumed the initial condition (i.e. the network before training) is a completely unstructured neural substrate.

Recently we showed how a certain type of mesoscopic structure can be introduced into the class of random recurrent network models by drawing synaptic weights from a finite number of cell-type-dependent probability distributions [13]. In contrast to networks with a single cell-type [17], these networks can sustain multiple “modes,” characterized in terms of the individual neuron autocorrelation functions.

Here these results are further generalized to networks where the synaptic weight between neurons i and j is drawn from a distribution with mean 0 and variance $g^2(i/N, j/N)/N$, where N is the size of the network. The smoothness conditions satisfied by the gain function g are stated below. This allows us to treat, for example, networks with continuous spatial modulation of the synaptic gain. The solution to the network's system of mean-field equations that we derive offers a new viewpoint on how functional properties of single neurons can in fact be a network phenomenon.

A. Model and main results

Consider a general synapse-specific gain function g(zi, zj) that depends on normalized neuron indices zi = i/N, where i = 1, . . . , N. We assume that there is some length scale s0 > 0 below which g has no discontinuities. That is, we let $g:[0,1]\times[0,1]\to\mathbb{R}$ be a uniformly bounded function that is continuous everywhere on the unit square except possibly on a measure-zero set S0. The function g may depend on N in such a way that its Lipschitz constant satisfies $L(N) = O(N^{\beta})$, with 1 > β ≥ 0. Every point where g does not satisfy the above smoothness conditions must lie on the boundary between squares of side s0 on which it does.

The network connectivity matrix is then $J \in \mathbb{R}^{N\times N}$ with elements

$$J_{ij} = g(z_i, z_j)\,J^0_{ij}, \tag{1}$$

where $J^0$ is a random matrix with elements drawn at random from a distribution with mean 0, variance 1/N and finite fourth moment. In the simulations we use a Gaussian distribution unless noted otherwise.
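The construction in Eq. (1) can be sketched in a few lines of NumPy. This is a minimal illustration rather than the code used for the simulations: it assumes a Gaussian $J^0$ (the default stated above), and the helper `connectivity_matrix` and the example gain function are hypothetical.

```python
import numpy as np

def connectivity_matrix(g, N, rng):
    """Sample J_ij = g(z_i, z_j) * J0_ij, with z_i = i/N and Gaussian J0_ij of variance 1/N.

    `g` must accept broadcastable arrays (z_i as a column, z_j as a row).
    Returns (J, gain), where gain is the deterministic N x N matrix g(z_i, z_j).
    """
    z = np.arange(1, N + 1) / N                                    # normalized indices z_i = i/N
    gain = np.broadcast_to(g(z[:, None], z[None, :]), (N, N)).astype(float)
    J0 = rng.normal(0.0, 1.0 / np.sqrt(N), size=(N, N))            # mean 0, variance 1/N
    return gain * J0, gain

# Hypothetical gain that grows with the post-synaptic index z_i
J, gain = connectivity_matrix(lambda zi, zj: 1.0 + zi + 0.0 * zj, 400, np.random.default_rng(0))
```

Because of `np.broadcast_to`, a constant gain such as `lambda zi, zj: 1.5` also works.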

In this paper we analyze the eigenvalue spectrum of the connectivity matrix J and the corresponding dynamics of the neural network. Note that by requiring that g is bounded and differentiable on the unit square outside of S0 we allow the synaptic gain function to be a combination of discrete modulation (e.g., cell-type dependent connectivity for distinct cell types, as in [13]) and of continuous modulation (e.g., networks with heterogeneous and possibly correlated in- and out-degree distributions, as in [18, 19]).

When g can be written as an outer product of two vectors [i.e. g(zi, zj) = g1(zi)g2(zj)], the model discussed here overlaps with that studied by Wei and by Ahmadian et al. [9, 12], but those works also consider matrix models that are not studied here.

The connectivity matrix J need not represent an all-to-all connected network, as the distribution of elements of J0 can have a finite mass at 0 (see Sec. V). However, in the current work we do not consider sparse models where the number of nonzero elements is finite or scales sublinearly with N. Studies of such matrices exist in the literature (for example, [20, 21]), but are limited to models with no structure.

In Sec. II we show that the spectral density of J is circularly symmetric in the complex plane and is supported by a disk centered at the origin with radius $r = \sqrt{\Lambda_1}$, where

$$\Lambda_1 = \lim_{N\to\infty}\max\left\{\mathrm{Re}\,\lambda\!\left(G^{(2)}\right)\right\} \tag{2}$$

and $G^{(2)} \in \mathbb{R}^{N\times N}$ is a deterministic matrix with elements $G^{(2)}_{ij} = g^2(z_i, z_j)/N$. Note that Λ1 is the Perron-Frobenius eigenvalue of a non-negative matrix, so Λ1 is indeed real and non-negative, and r is real. For a general synapse-specific gain function g it has not been possible so far to obtain an explicit formula for Λ1. However, we have been able to derive explicit analytic formulas in three cases of biological significance. First, in Sec. IV we discuss the case where $G^{(2)}$ is a circulant matrix such that g(zi, zj) = g(zij) with

$$z_{ij} = \min\left(|z_i - z_j|,\ 1 - |z_i - z_j|\right), \tag{3}$$

and show that $\Lambda_1 = 2\int_0^{1/2} g^2(z)\,dz$. This special case is important for large neural networks, where connectivity often varies smoothly as a function of the neurons' indices. Moreover, for this parametrization all the eigenvalues and corresponding eigenvectors can be computed analytically, which makes it possible to make stronger statements about the dynamics, as explained in Secs. III and IV.
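A quick numerical check of Eq. (2) can be made by computing the largest real eigenvalue of $G^{(2)}$ and comparing its square root with the largest eigenvalue modulus of one sampled J. This sketch assumes that a single sample at moderate N is representative; the helper `critical_lambda` and the gain `0.5 + 1.5 z_i z_j` are hypothetical, and agreement is only expected up to finite-N edge fluctuations.

```python
import numpy as np

def critical_lambda(gain):
    """Finite-N version of Eq. (2): largest real part among eigenvalues of G2 = gain**2 / N."""
    N = gain.shape[0]
    return np.max(np.linalg.eigvals(gain**2 / N).real)

rng = np.random.default_rng(1)
N = 600
z = np.arange(1, N + 1) / N
gain = 0.5 + 1.5 * z[:, None] * z[None, :]        # hypothetical smooth, non-negative gain function
J = gain * rng.normal(0.0, 1.0 / np.sqrt(N), size=(N, N))

r_pred = np.sqrt(critical_lambda(gain))            # predicted radius of the support
r_emp = np.max(np.abs(np.linalg.eigvals(J)))       # largest |eigenvalue| of one sample of J
print(f"predicted r = {r_pred:.3f}, empirical max |lambda| = {r_emp:.3f}")
```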

For two additional parametrizations of g, the current mean-field approach is insufficient to fully characterize the dynamics, but we can use the general result [Eq. (2)] to analytically characterize the spectrum of the connectivity matrix. In Sec. V we derive the support of the bulk spectrum and the outliers of a random connectivity matrix with heterogeneous joint in- and out-degree distribution. Finally, in Sec. VI we discuss a third example pertinent to large scale models of ecosystems. These systems are often modeled using g that has a triangular structure and in this case we also derive an analytic formula for Λ1.

Given the connectivity matrix J defined in Eq. (1), the dynamics of the neural network model with N neurons are described by

$$\dot x_i(t) = -x_i(t) + \sum_{j=1}^{N} J_{ij}\,\phi_j(t), \tag{4}$$

where ϕj(t) = tanh[xj(t)]. The x variables can be thought of as the membrane potential of each neuron, and the ϕ variables as the deviation of the firing rates from their average values.
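A minimal forward-Euler sketch of Eq. (4) follows. The step size, duration, and the two homogeneous gains (g = 0.5 and g = 2.0) are illustrative choices, not values taken from this work; the two runs are meant to show the silent and chaotic regimes on either side of the critical point discussed next. The helper name `simulate` is hypothetical.

```python
import numpy as np

def simulate(J, T=100.0, dt=0.05, x0=None, rng=None):
    """Forward-Euler integration of Eq. (4), dx/dt = -x + J tanh(x).

    Time is in units of the synaptic time constant. Returns an array of shape
    (steps + 1, N) holding x on the grid t = 0, dt, 2 dt, ...
    """
    N = J.shape[0]
    if x0 is None:
        rng = np.random.default_rng() if rng is None else rng
        x0 = rng.normal(0.0, 1.0, size=N)
    steps = int(round(T / dt))
    X = np.empty((steps + 1, N))
    X[0] = x0
    for t in range(steps):
        X[t + 1] = X[t] + dt * (-X[t] + J @ np.tanh(X[t]))
    return X

rng = np.random.default_rng(3)
N = 300
J0 = rng.normal(0.0, 1.0 / np.sqrt(N), size=(N, N))
X_silent = simulate(0.5 * J0, rng=rng)    # homogeneous g = 0.5 < 1: activity decays to x = 0
X_chaotic = simulate(2.0 * J0, rng=rng)   # homogeneous g = 2.0 > 1: sustained irregular activity
```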

Using a modified version of dynamic mean field theory we show that in the limit N → ∞ this system undergoes a phase transition, where r is the coordinate that describes this transition and r = 1 is the critical point. Below the critical point (r < 1), the neural network has a single stable fixed point at x = 0. Above the critical point the system is chaotic.

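We analyze the dynamics above the critical point in more detail and find a direct link between the network structure (g) and its functional properties. To that end we define the N-dimensional autocorrelation vectors C(τ) and Δ(τ), with components

$$C_i(\tau) = \langle\phi_i(t)\,\phi_i(t+\tau)\rangle, \qquad \Delta_i(\tau) = \langle x_i(t)\,x_i(t+\tau)\rangle, \tag{5}$$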

where 〈·〉 denotes average over the ensemble of matrices J and time. Note that because the average of each element of J is zero and the nonlinearity is an odd function, autocorrelations are computed about the fixed point xi = ϕi = 0. These vectors are restricted to the potentially low-dimensional subspace spanned by the right eigenvectors of $G^{(2)}$ whose corresponding eigenvalues have a real part greater than 1. Thus, although the network dynamics are chaotic, their autocorrelation functions are confined to a low-dimensional space, which has been suggested as a mechanism that could make computation in the network more robust [22].
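The quantities in Eq. (5) and the active subspace can be estimated from simulated trajectories along the following lines. This sketch replaces the ensemble average with a time average over a single run, and the helper names (`autocorrelation_vectors`, `active_subspace`, `fraction_in_subspace`) are hypothetical. Complex-conjugate eigenvector pairs are represented by their real and imaginary parts so that the returned basis is real.

```python
import numpy as np

def autocorrelation_vectors(Phi, max_lag):
    """C_i(tau) = <phi_i(t) phi_i(t + tau)> for tau = 0, ..., max_lag (in time steps).

    Phi has shape (T, N). Only a time average over one run is used here, whereas
    Eq. (5) also averages over the ensemble of J. Returns shape (max_lag + 1, N).
    """
    T = Phi.shape[0]
    return np.stack([np.mean(Phi[: T - lag] * Phi[lag:], axis=0) for lag in range(max_lag + 1)])

def active_subspace(G2, threshold=1.0, tol=1e-10):
    """Orthonormal real basis for the right eigenvectors of G2 with Re(lambda) > threshold."""
    lam, V = np.linalg.eig(G2)
    A = V[:, lam.real > threshold]
    if A.shape[1] == 0:
        return np.zeros((G2.shape[0], 0))
    A = np.hstack([A.real, A.imag])                  # real vectors spanning conjugate pairs
    U, s, _ = np.linalg.svd(A, full_matrices=False)
    return U[:, s > tol * s.max()]                   # drop numerically zero directions

def fraction_in_subspace(c, Q):
    """Fraction of the squared norm of c captured by the orthonormal columns of Q."""
    p = Q.T @ c
    return float(p @ p / (c @ c))
```

Passing `np.tanh(X)` from a simulated trajectory X of Eq. (4) to `autocorrelation_vectors`, and projecting each lag onto `active_subspace(gain**2 / N)`, gives the fraction of the autocorrelation vector captured by the predicted modes.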

B. Separate excitation and inhibition

There are some limitations to the interpretation of the dynamics in Eq. (4) with connectivity described by Eq. (1) as a neuronal network. Most importantly, every column of J has both positive and negative elements corresponding to the unrealistic assumption that every neuron is both excitatory and inhibitory, and the hyperbolic tangent nonlinearity implies that firing rates can be both positive and negative. The usual justification of these assumptions is that every degree of freedom xi is in fact an average over a small number of neurons some of which are inhibitory and some are excitatory, and that ϕi represents the deviation of the firing rate from the steady state.

A more satisfying treatment of this problem is the recent work of Kadmon and Sompolinsky [23] extending our previous work [13]. They studied a network with block structure, where the distribution of elements in each block has a nonzero mean such that, if appropriately defined, the elements in each column can have the same sign. They also considered non-negative transfer functions in addition to the hyperbolic tangent. Their analysis showed that in addition to the stable fixed point and chaotic regimes, there is an additional regime of saturated firing rates. For the general case it was not possible to determine whether the transition to the chaotic or the saturated regime is seen first upon variation of the connectivity parameters, or what the dynamics look like if conditions for both instabilities hold (i.e., what the saturated and chaotic dynamics look like). We anticipate that for cases where the first transition is to the chaotic regime, the analysis presented here for synaptic weight distributions with mean 0 will apply (with the appropriate modifications similar to those in [23]) when the mean is nonzero.

Here we also treat networks with separate excitatory and inhibitory populations (Sec. V), by deriving the support of the bulk spectrum and outliers of connectivity matrices with heterogeneous degree distributions.

II. DERIVATION OF THE CRITICAL POINT

A. Finite number of partitions

We begin by recalling our recent results for a function g that has block structure. We defined a D × D matrix with elements gcd and partitioned the indices 1, . . . , N into D groups, where the cth partition contains a fraction αc of the neurons. The synaptic gain function was then defined by g(zi, zj) = gcicj, where ci is the partition index of the ith neuron (c = 1, . . . , D).

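Defining $n_c = N\sum_{d=1}^{c}\alpha_d$ (with $n_0 = 0$) allows us to write formally $c_i = \{c : n_{c-1} < i \le n_c\}$. With these definitions, we rewrite Eq. (4):

$$\dot x_i(t) = -x_i(t) + \sum_{d=1}^{D} g_{c_i d}\sum_{j=n_{d-1}+1}^{n_d} J^0_{ij}\,\phi_j(t). \tag{6}$$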

In [13] we used the dynamic mean field approach [17, 24, 25] to study the network behavior in the N → ∞ limit. Averaging Eq. (6) over the ensemble from which J is drawn implies that neurons that belong to the same group are statistically identical. Thus, the behavior of the full network can be summarized by D representative neurons ξd(t) and their inputs ηd(t), provided that (a) they satisfy

$$\dot\xi_d(t) = -\xi_d(t) + \eta_d(t), \tag{7}$$

and (b) that ηd(t) is drawn from a Gaussian distribution with moments satisfying

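$$\langle\eta_d(t)\rangle = 0, \tag{8}$$

$$\langle\eta_c(t)\,\eta_d(t+\tau)\rangle = \delta_{cd}\sum_{b=1}^{D}\alpha_b\,g_{cb}^2\,C_b(\tau). \tag{9}$$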

Here 〈·〉 denotes averages over $i = n_{c-1}+1, \dots, n_c$ and $k = n_{d-1}+1, \dots, n_d$, in addition to the average over realizations of J. The average firing rate correlation vector is denoted by C(τ). Its components, expressed using the mean field variables, are

$$C_d(\tau) = \langle\phi[\xi_d(t)]\,\phi[\xi_d(t+\tau)]\rangle. \tag{10}$$

The cross-covariance matrix 〈ηc(t) ηd(t + τ)〉 is diagonal, so we define the vector H(τ) to be its diagonal. Now we can rewrite Eq. (9) as

$$H(\tau) = M\,C(\tau), \tag{11}$$

where $M \in \mathbb{R}^{D\times D}$ is a constant matrix reflecting the network connectivity structure: $M_{cd} = \alpha_d\,g_{cd}^2$.

A trivial solution to this equation is H(τ) = C(τ) = 0, which corresponds to the silent network state xi(t) = 0. Recall that in the network with a Girko matrix as its connectivity matrix (D = 1), the matrix $M = g^2$ is a scalar and Eq. (11) reduces to $H(\tau) = g^2 C(\tau)$. In this case the silent solution is stable only when g < 1. For g > 1 the autocorrelations of η are nonzero, which leads to chaotic dynamics in the N-dimensional system [17].

When D > 1, Eq. (11) can be projected on the eigenvectors of M, leading to D consistency conditions, each equivalent to the single-group case. Each projection has an effective scalar, given by the corresponding eigenvalue, in place of g² in the D = 1 case. Hence, the trivial solution will be stable if all eigenvalues of M have real part < 1. This is guaranteed if Λ1, the largest eigenvalue of M, is < 1. If Λ1 > 1, the projection of Eq. (11) on the leading eigenvector of M gives a scalar self-consistency equation analogous to the D = 1 case for which the trivial solution is unstable. As we know from the analysis of the D = 1 case, this leads to chaotic dynamics in the full network. Therefore Λ1 = 1 is the critical point of the D > 1 network. Furthermore, the fact that in the D = 1 case the presence of the destabilized fixed point at x = 0 corresponds to a finite mass of the spectral density of J with real part > 1 [17, 26] allowed us to read off the radius of the support of the connectivity matrix with D > 1 and identify it as $r = \sqrt{\Lambda_1}$ [13].
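For the block model, the critical point follows from the D × D matrix M alone. The sketch below uses a hypothetical two-partition gain matrix; it also builds the corresponding N × N matrix with elements $g_{c_i c_j}^2/N$ to illustrate that its nonzero eigenvalues coincide with those of M (a vector that is constant within partitions is mapped by it exactly as M maps the D-dimensional vector of partition values). The helper names are hypothetical.

```python
import numpy as np

def block_M(gcd, alpha):
    """M_cd = alpha_d * g_cd**2, the D x D structure matrix of Eq. (11)."""
    return gcd**2 * alpha[None, :]

def block_G2(gcd, alpha, N):
    """N x N matrix with elements g_{c_i c_j}**2 / N for contiguous partitions of sizes alpha_c * N."""
    sizes = np.round(np.asarray(alpha) * N).astype(int)
    labels = np.repeat(np.arange(len(alpha)), sizes)     # partition index c_i of each neuron
    return gcd[np.ix_(labels, labels)] ** 2 / N

gcd = np.array([[2.0, 0.5], [0.5, 0.5]])                 # hypothetical D = 2 gain matrix
alpha = np.array([0.5, 0.5])
M = block_M(gcd, alpha)
Lambda1 = np.max(np.linalg.eigvals(M).real)
print(Lambda1 > 1)   # prints True: the silent state is unstable and r = sqrt(Lambda1) > 1
```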

B. Continuous case

The vector dynamic mean field theory we developed in [13] relies on having an infinite number of neurons in each partition with the same statistics. The natural choice is therefore to have the size of each group of neurons be linear in the system size: Nc = αcN.

This scaling imposes two limitations for comparing the results to the dynamics of more realistic networks. First, it requires knowledge of the cell-type identity of each neuron in the recording, which often is not available. Second, it limits the analysis of the dynamics to quantities that are averaged over neurons that belong to the same cell type.

To lift the requirement of block-structured variances [i.e., now g = g(zi, zj)], we can do the following. Let K(N) be a weakly monotonic function of N such that

$$\lim_{N\to\infty}\frac{N}{K(N)} = \infty, \qquad \lim_{N\to\infty}\frac{N^{\beta}}{K(N)} = 0. \tag{12}$$

Recall that we allow the Lipschitz constant of g to grow as N^β with 1 > β ≥ 0, implying that limN→∞ K(N) = ∞. A natural choice is a power law $K(N) \propto N^{\kappa}$ with $\beta < \kappa < 1$, but as long as Eq. (12) holds the specific scaling behavior will not matter in our analysis. For convenience we will suppress the N dependence when possible.

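Let μ = 1, . . . , K and let

$$\bar z_\mu = \frac{\mu - 1/2}{K}, \qquad \mu_i = \left\lceil \frac{iK}{N} \right\rceil \tag{13}$$

denote the center of the μth block and the block index of neuron i, respectively. Furthermore, define $\tilde g \in \mathbb{R}^{N\times N}$ with elements

$$\tilde g_{ij} = g\!\left(\bar z_{\mu_i}, \bar z_{\mu_j}\right). \tag{14}$$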

In other words, g̃ is an N × N matrix with K² equally sized square blocks. The value of the elements in each block is the value of the function g in the middle of that block. These definitions allow us to bridge the gap between the block and the continuous cases. Indeed, consider the random connectivity matrix J̃ with elements $\tilde J_{ij} = \tilde g_{ij}\,J^0_{ij}$ and the network that has J̃ as its connectivity.

First, since N/K → ∞ as N → ∞, the number of neurons in each group goes to infinity, and we may use the vector dynamic mean field theory as before, but in a K dimensional space [rather than D which was O(1)]. The critical point is now given in terms of the largest eigenvalue of an N × N matrix M̃ with elements

$$\tilde M_{ij} = \frac{1}{N}\,\tilde g_{ij}^{\,2}, \tag{15}$$

where $\mathrm{rank}\{\tilde M\} \le K$.
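The block approximation can be sketched by sampling g at block centres, as described in words above. Assuming K divides N (so that blocks are equally sized) and using a hypothetical smooth gain, the largest eigenvalue of the block-approximated matrix approaches that of the matrix built from g itself as K grows. The helpers `block_approximation` and `lambda1` are hypothetical.

```python
import numpy as np

def block_approximation(g, N, K):
    """g~_ij: g evaluated at the centres of the equally sized K x K blocks containing (i, j)."""
    assert N % K == 0, "this sketch assumes K divides N"
    mu = np.arange(N) // (N // K)           # 0-based block index of each neuron
    centres = (mu + 0.5) / K                # centre of that block in [0, 1]
    return g(centres[:, None], centres[None, :])

def lambda1(gain):
    """Largest real part of the eigenvalues of gain**2 / N."""
    return np.max(np.linalg.eigvals(gain**2 / gain.shape[0]).real)

g = lambda zi, zj: 0.5 + 1.5 * zi * zj      # hypothetical smooth gain function
N = 400
z = np.arange(1, N + 1) / N
exact = lambda1(g(z[:, None], z[None, :]))
errors = {K: abs(lambda1(block_approximation(g, N, K)) - exact) for K in (2, 4, 10, 40)}
print(errors)   # the discrepancy generally shrinks as the blocks get finer
```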

Second, recall that the function g is assumed to be smooth outside of a set with measure zero S0. These properties will allow us to show (see Appendix A) that as N → ∞ we have

| (16) |

meaning that by studying the system with connectivity structure g̃ in the limit N → ∞ we are in fact obtaining results for the generalized connectivity matrix with a smooth synaptic gain function g.

C. Circular symmetry of spectrum

In [11] we used random matrix theory techniques to derive, for the case of block-structured J, an implicit equation that the full spectral density of J satisfies. The circular symmetry of the spectrum for that case is obvious because the equations [see Eq. (3.6) in [11]] depend on the complex variable z only through |z|2. Similar implicit equations, with integrals instead of sums, can be written for the continuous case. Rigorous mathematical analysis of the spectral density implied by such equations is beyond the scope of this paper and will be presented elsewhere. Nevertheless, the integral equations still depend on |z|2, supporting the circular symmetry of the spectrum.

III. DYNAMICS ABOVE THE CRITICAL POINT

A. Finite number of partitions

To study the spontaneous dynamics above the critical point we recall again the analogous result for a matrix with block structure. The D dimensional average autocorrelation vectors C(τ), Δ(τ) (see definition below) are restricted to a D★ dimensional subspace, where D★ is the number of eigenvalues of M with real part > 1 (i.e., the algebraic multiplicity of these eigenvalues). This result is obtained by projecting Eq. (11) on the right eigenvectors of M [13].

The definitions of the d = 1, . . . , D component of these vectors are

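$$C_d(\tau) = \langle\phi[\xi_d(t)]\,\phi[\xi_d(t+\tau)]\rangle, \tag{17}$$

$$\Delta_d(\tau) = \langle\xi_d(t)\,\xi_d(t+\tau)\rangle, \tag{18}$$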

and the D★-dimensional subspace is

$$U_M = \mathrm{span}\{v_1, \dots, v_{D^\star}\}, \tag{19}$$

where $v_1, \dots, v_D$ are the right eigenvectors of M in descending order of the real part of their corresponding eigenvalue (see examples in Fig. 1). An equivalent statement is that, independent of the lag τ, projections of the vectors C(τ), Δ(τ) on any vector in the orthogonal complement subspace $U_M^{\perp}$ are approximately 0. Note that for asymmetric (but diagonalizable) M, $U_M^{\perp}$ is spanned by the left rather than the right eigenvectors of M:

$$U_M^{\perp} = \mathrm{span}\{u_{D^\star+1}, \dots, u_D\}, \tag{20}$$

where $u_d$ is the left eigenvector of M associated with the same eigenvalue as $v_d$.
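The statement about left eigenvectors can be checked numerically. For a diagonalizable M with right-eigenvector matrix V, the rows of $V^{-1}$ are left eigenvectors and satisfy $V^{-1}V = I$, so the left eigenvectors paired with inactive eigenvalues are orthogonal to every active right eigenvector. The 3 × 3 matrix below is a hypothetical asymmetric example with exactly one eigenvalue above 1, and `split_eigenspaces` is a hypothetical helper.

```python
import numpy as np

def split_eigenspaces(M, threshold=1.0):
    """Active right eigenvectors and inactive left eigenvectors of a diagonalizable M.

    Rows of inv(V) are left eigenvectors (inv(V) @ V = I), so those paired with
    eigenvalues of real part <= threshold are orthogonal to every active right eigenvector.
    """
    lam, V = np.linalg.eig(M)
    W = np.linalg.inv(V)                          # row d: left eigenvector for lam[d]
    active = lam.real > threshold
    return V[:, active], W[~active, :].conj().T   # inactive left eigenvectors as columns

M = np.array([[2.0, 0.3, 0.1],
              [0.5, 0.4, 0.2],
              [0.1, 0.6, 0.3]])                   # hypothetical asymmetric structure matrix
V_active, U_inactive = split_eigenspaces(M)
print(np.abs(U_inactive.conj().T @ V_active).max())   # close to machine precision
```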

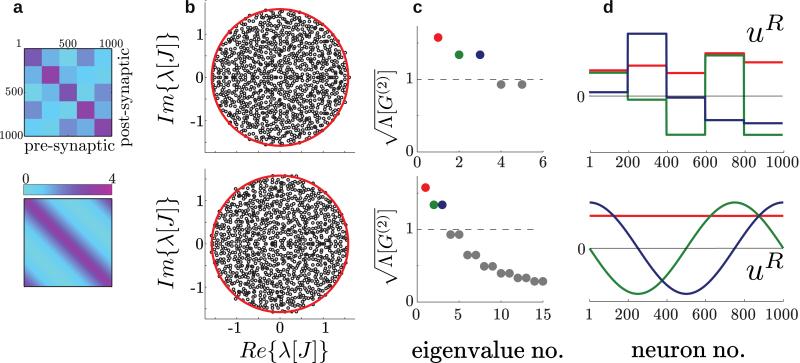

FIG. 1.

Eigenspaces of two example networks – one with block structured connectivity (top) and another with continuous gain modulation (bottom). (a) The synaptic gain matrix gij. (b) The spectrum of the random connectivity matrix J in the complex plane. The spectrum is supported by a disk with radius indicated in red. (c) The square root of the largest eigenvalues of $G^{(2)}$. When these are greater than 1, the corresponding eigenvectors [shown in (d)] are active autocorrelation modes. For the continuous function we chose the circulant parametrization (see Sec. IV A) with g0 = 0.3, g1 = 3.0 and γ = 2.0. For the block structured connectivity, g was chosen such that the first five eigenvalues exactly match those of the continuous network.

B. Autocorrelation modes in the generalized model

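We can repeat the analysis of [13] for a network with connectivity J̃ that has K² blocks and, for each N and K(N), obtain the subspace UM̃ to which the K-dimensional autocorrelation vectors C̃(τ), Δ̃(τ) are restricted. These vectors have components

$$\tilde C_\mu(\tau) = \langle\phi[\xi_\mu(t)]\,\phi[\xi_\mu(t+\tau)]\rangle, \tag{21}$$

$$\tilde\Delta_\mu(\tau) = \langle\xi_\mu(t)\,\xi_\mu(t+\tau)\rangle. \tag{22}$$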

Now, when we take the limit N → ∞, the dimensionality of the autocorrelation vectors C̃(τ), Δ̃(τ) becomes infinite as well, but the subspace UM̃ may be of finite dimension K★, where K★ is the algebraic multiplicity of eigenvalues of M̃ with real part greater than 1 (see Sec. IV for an example).

We have shown that for g that satisfies the smoothness conditions, studying the network with connectivity J is equivalent to studying the network with connectivity J̃ in the limit N → ∞. Therefore, in that limit, the individual neuron autocorrelation functions Ci(τ), Δi(τ) [Eq. (5)] are restricted to the subspace spanned by the right eigenvectors of $G^{(2)}$ corresponding to eigenvalues with real part > 1.

Given the network structure g, this is equivalent to analytically predicting the leading principal components in the N-dimensional space of individual neuron autocorrelation functions (see Fig. 2). Note that principal component analysis is traditionally performed in the N-dimensional space of neuron firing rates rather than of autocorrelation functions. Numerical analysis performed in [27] suggests that the system's trajectories, when considered in the space spanned by the vectors x or ϕ(x) (individual neuron activations or firing rates), occupy a space whose dimension is extensive in the system size N. However, when considered in the space of individual neuron autocorrelation functions, the dimension of trajectories is intensive in N and usually finite. In the subspace we derive here, information about the relative phases between neurons is lost, but amplitude and frequency information is preserved. Section VII includes further discussion of the consequences of our predictions and how they may be applied.

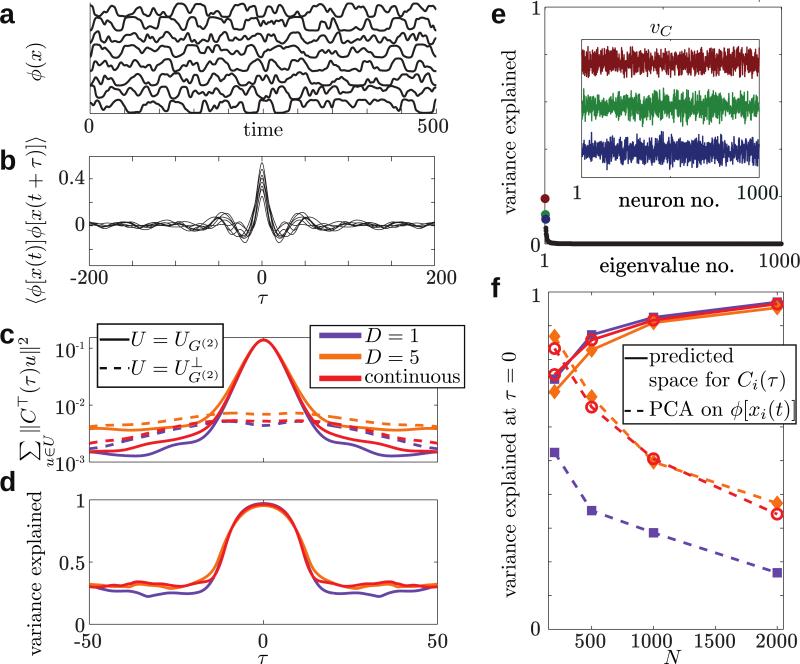

FIG. 2.

Low-dimensional structure of network dynamics. Traces of the firing rates ϕ[xi(t)] (a) and autocorrelations Ci(τ) (b) of eight example neurons chosen at random from the network with continuous gain modulation (shown in the bottom row of Fig. 1). (c) The sum of squared projections of the vector Ci(τ) on the vectors spanning $U_{G^{(2)}}$ (the active modes, solid lines) or $U^{\perp}_{G^{(2)}}$ (the inactive modes, dashed lines). The dimension of the subspace $U_{G^{(2)}}$ is K★ = 1 for the network with g = const and K★ = 3 for the block and continuous cases (orange and red, respectively), much smaller than N − K★ ≈ N, the dimension of the orthogonal complement space $U^{\perp}_{G^{(2)}}$. (d) Our analytically derived subspace accounts for almost 100 percent of the variance in the autocorrelation vector for the lags τ shown (in units of the synaptic time constant). (e) Reducing the dimensionality of the dynamics via principal component analysis on ϕ(x) leads to vectors (inset) that account for a much smaller portion of the variance (when using the same dimension K★ for the subspace) and lack structure that could be related to the connectivity. (f) Summary data from 50 simulated networks per parameter set (N, structure type) at τ = 0. As N grows, the leak into $U^{\perp}_{G^{(2)}}$ diminishes when the Ci(τ) data are projected onto $U_{G^{(2)}}$, whereas the fraction of variance explained by PCA on the ϕ[xi(t)] data becomes smaller, a signature of the extensiveness of the dimension of the chaotic attractor.

C. Finite N behavior

For a finite system it is evident from numerical simulations that the N-dimensional vector of autocorrelation functions has nonzero projections on inactive modes, i.e., eigenvectors of $G^{(2)}$ whose corresponding eigenvalues are < 1 (see Fig. 2). Here we study the magnitude of this effect, and specifically its dependence on N and on the model's structure function g. For simplicity, we will study the projections of the autocorrelation vector C(τ) at lag τ = 0. Let

| (23) |

where the projection matrix in Eq. (23) has as its columns the orthogonalized eigenvectors of $G^{(2)}$ with corresponding eigenvalues less than 1 [see Eqs. (19) and (20)]. Here 〈·〉 denotes averaging over an ensemble of connectivity matrices (with the same structure g and the same size N).
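A sketch of the leak at τ = 0 for the homogeneous case discussed next, assuming the leak is the squared norm of the projection onto the inactive subspace normalized by $\|C(0)\|^2$ (the exact normalization of Eq. (23) is not reproduced here). The inactive subspace is then everything orthogonal to the dc mode, and the leak reduces to the population variance of $C_i(0)$ divided by its mean square. The per-neuron values are synthetic and the helper `leak` is hypothetical.

```python
import numpy as np

def leak(C0, Q_inactive):
    """Fraction of ||C(0)||^2 lying in the span of the orthonormal columns of Q_inactive."""
    p = Q_inactive.T @ C0
    return float(p @ p / (C0 @ C0))

N = 200
dc = np.ones((N, 1)) / np.sqrt(N)
Q_full, _ = np.linalg.qr(dc, mode="complete")   # first column is +/- the dc mode
Q_inactive = Q_full[:, 1:]                       # orthonormal basis of everything perpendicular to it

rng = np.random.default_rng(4)
C0 = 0.5 + 0.05 * rng.standard_normal(N)         # synthetic per-neuron C_i(0) values
print(leak(C0, Q_inactive), C0.var() / np.mean(C0**2))   # the two numbers coincide
```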

Consider the homogeneous network [i.e., constant g(zi, zj) = g0 > 1]. Now $U^{\perp}_{G^{(2)}}$ contains all the vectors in $\mathbb{R}^N$ perpendicular to the dc mode, and the squared norm ∥C(0)∥² = O(N) because on average all neurons have the same autocorrelation function [Eq. (10)]. Thus, the quantity defined in Eq. (23) is simply the variance over the neural population of the individual neuron autocorrelation functions at lag τ = 0.

We can now use the mean-field approximation to determine the N dependence of this quantity. For N ≫ 1, the elements of the vector C(0) follow a scaled χ² distribution

| (24) |

where χ²(N) denotes the standard χ² distribution with N degrees of freedom. Thus, in this limit,

| (25) |

The autocorrelation function is in general a single-neuron property. Therefore, its variation about the mean is uncorrelated across neurons, independent of the network's structure: 〈Ci(0)Cj(0)〉 − 〈Ci(0)〉〈Cj(0)〉 ∝ δij. Thus, we can use the notation 〈(δCi(0))²〉 = 〈Ci(0)Ci(0)〉 − 〈Ci(0)〉〈Ci(0)〉.
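The 1/N scaling can be illustrated numerically under the scaled χ² description above. If $C_i(0)$ is proportional to $\chi^2(N)/N$, its population mean is 1 (times the scale), its variance is 2/N, and the variance-to-mean-square ratio is $(2/N)/(1 + 2/N) = 2/(N+2) \approx 2/N$; the scale factor cancels. The samples below are synthetic, and Eq. (25) may use a different normalization.

```python
import numpy as np

rng = np.random.default_rng(5)
for N in (50, 200, 800):
    # synthetic per-neuron C_i(0) values following a scaled chi^2 with N degrees of freedom
    C0 = rng.chisquare(N, size=100_000) / N
    ratio = C0.var() / np.mean(C0**2)          # population variance / mean square
    print(N, ratio, 2 / (N + 2))
```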

In the case with D partitions, the vectors that span $U_{G^{(2)}}$ are no longer parallel to the dc mode. We assume that the projections on $U^{\perp}_{G^{(2)}}$ can still be estimated using the χ² distribution, but here with αcN degrees of freedom for the cth partition [instead of N, see Eq. (24)]. Thus, for a network with D partitions,

| (26) |

Finally, for K(N) partitions,

| (27) |

At this stage, Eq. (27) remains ambiguous because the function K(N) is not a property of the neural network model. Rather, it is a construction we use to show that in the limit N → ∞ we are able to characterize the dynamics using the vector dynamic mean field approach. Therefore, for finite N we now wish to estimate an appropriate value of K = K[g].

This can be done by noting that the network with block structured connectivity is a special case of the one with a continuous structure function. For that special case we know that K[g] = D. Since g is smooth, for sufficiently large N, we can assume that in each block g is linear in both variables zi and zj:

| (28) |

Here $\partial_1 g$ denotes the first derivative of g with respect to its first variable, evaluated in the middle of the (μi, μj) block.

The only expression for K[g] that depends on first derivatives of g and agrees with the homogeneous and block cases is

| (29) |

We are unable to test this prediction quantitatively, because we do not know the dependence of the function q on the structure g. We are able to show, however, that the dependence on N is the same as for the block models, which is confirmed by numerical simulations [compare solid purple, orange and red lines in Fig. 2(f)]. In the cases where g depends on N, the value of K[g] will also depend on N, such that the scaling of the “leak” may no longer be ∝ $N^{-1}$.

IV. AN EXAMPLE WHERE g IS CIRCULANT

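When the matrix g(zi, zj) is circulant such that g(zi, zj) = g(zij) with

$$z_{ij} = \min\left(|z_i - z_j|,\ 1 - |z_i - z_j|\right), \tag{30}$$

the eigenvectors of $G^{(2)}$ are Fourier modes of increasing frequency, and the corresponding eigenvalues are given in closed form by integrals of the function g²(zij) against these modes. In particular, the largest eigenvalue of $G^{(2)}$ corresponds to the zero-frequency eigenvector ∝ [1, . . . , 1]. To show this, consider the (k+1)th eigenvalue of the circulant matrix $G^{(2)}$:

$$\lambda_k = \frac{1}{N}\sum_{j=1}^{N} g^2(z_{1j})\,e^{2\pi \mathrm{i}\,k(j-1)/N} \le \frac{1}{N}\sum_{j=1}^{N} g^2(z_{1j}) = \lambda_0, \tag{31}$$

where the inequality holds because $G^{(2)}$ is symmetric, so every λk is real. So in the limit N → ∞,

$$\Lambda_1 = \lambda_0 = \int_0^1 g^2\big(\min(z, 1-z)\big)\,dz = 2\int_0^{1/2} g^2(z)\,dz, \tag{32}$$

as desired.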
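The eigenvalues of a circulant $G^{(2)}$ are the discrete Fourier transform of its first row, which gives a fast way to evaluate Eq. (31) numerically. This sketch assumes that $z_{ij}$ is the distance between $z_i$ and $z_j$ on a ring of circumference 1, uses a hypothetical localized gain, and introduces the hypothetical helpers `ring_G2_first_row` and `circulant_eigenvalues`.

```python
import numpy as np

def ring_G2_first_row(g, N):
    """First row of G2 = g(z_ij)**2 / N, with z_ij taken as the ring distance (assumption)."""
    m = np.arange(N) / N
    d = np.minimum(m, 1.0 - m)            # ring distance between neuron 1 and neuron m + 1
    return g(d) ** 2 / N

def circulant_eigenvalues(first_row):
    """Eigenvalues of the circulant matrix with this first row (as a set, in FFT order)."""
    return np.fft.fft(first_row)

g = lambda d: 1.0 + 2.0 * np.exp(-d**2 / 0.01)   # hypothetical localized ring gain
row = ring_G2_first_row(g, 1000)
lam = circulant_eigenvalues(row).real            # real because the first row is symmetric
print(lam[0], lam.max(), row.sum())              # the zero-frequency eigenvalue is the largest
```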

A. A ring network

As an example we study a network with ring structure defined by $g(z_i, z_j) = g_0 + g_1(1 - 2z_{ij})$, such that neurons that are closer are more strongly connected.

This definition leads to the following form for the critical coordinate along which the network undergoes a transition to chaotic behavior

| (33) |

Interestingly, as g1 increases continuously, additional discrete modes with increasing frequency over the network's spatial coordinate become active by crossing the critical point Λk = 1. When modes with sufficiently high spatial frequency have been introduced, nearby neurons may have distinct firing properties.
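Counting how many Fourier modes cross $\Lambda_k = 1$ as $g_1$ grows illustrates this recruitment of modes. The sketch uses the ring gain $g_0 + g_1(1 - 2z_{ij})$ with $z_{ij}$ taken as the ring distance (an assumption), evaluates the circulant eigenvalues by FFT, and uses $g_0 = 0.3$ with a hypothetical range of $g_1$ values; `active_mode_count` is a hypothetical helper.

```python
import numpy as np

def active_mode_count(g0, g1, N=1000):
    """Number of eigenvalues of the circulant G2 exceeding 1 for g = g0 + g1 (1 - 2 z_ij).

    z_ij is taken as the distance on a ring of circumference 1 (assumption of this sketch).
    """
    m = np.arange(N) / N
    d = np.minimum(m, 1.0 - m)
    row = (g0 + g1 * (1.0 - 2.0 * d)) ** 2 / N
    lam = np.fft.fft(row).real               # circulant eigenvalues, one per Fourier mode
    return int(np.sum(lam > 1.0))

for g1 in (0.5, 1.0, 2.0, 3.0, 5.0, 8.0):
    print(g1, active_mode_count(0.3, g1))
```

Under this assumption every Fourier eigenvalue increases with $g_1$ for $g_0, g_1 > 0$, so the count is nondecreasing; for $g_0 = 0.3$, $g_1 = 3.0$ it gives three active modes (the uniform mode and the pair of lowest-frequency modes).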

B. A toroidal network

In contrast to the ring network discussed above, the connectivity in real networks often depends on multiple factors. These could be the spatial coordinates of the cell body or the location in a functional space (e.g., the frequency that each particular neuron is sensitive to). Therefore we would like to consider a network where the function g depends on the distance between neurons embedded in a multidimensional space.

This problem was recently addressed by Muir and Mrsic-Flogel [14] by studying the spectrum of a specific type of Euclidean random matrix. In their model, neurons were randomly and uniformly distributed in a space of arbitrary dimension, and the connectivity was a deterministic function of their distance. While their approach resolves the issue of the spectral properties of the random matrix when connectivity depends on distance in more than one dimension, the dynamics these matrices imply remain unknown.

To study the spectrum and the dynamics jointly, we define a network where the neurons’ positions form a square K × K grid (with $K = \sqrt{N}$) on the [0, 1] × [0, 1] torus [see Fig. 3(a)]:

| (34) |

The positions of the neurons on the torus are schematized in Fig. 3(a).

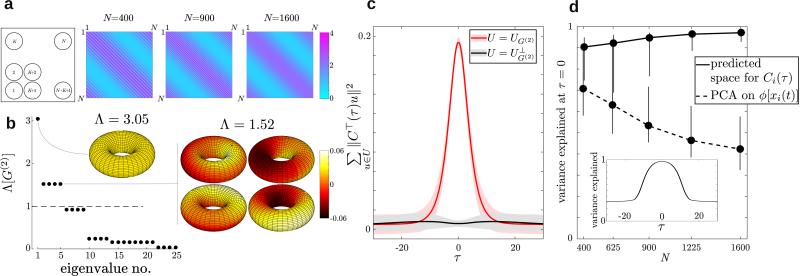

FIG. 3.

Results for a toroidal network. (a) A grid strategy with $K = \sqrt{N}$ for tiling the [0, 1] × [0, 1] torus with N neurons (left) and the resulting deterministic gain matrix with elements gij for three values of N as defined in Eq. (35) (right). Unlike the ring network, here g depends on N, and its derivative is unbounded, so as N increases the gain function “folds.” The parameters of the connectivity matrix are g0 = 0.7, g1 = 0.8. (b) The 25 nonzero eigenvalues of $G^{(2)}$ for N = 1600 and the eigenvectors corresponding to eigenvalues that are greater than 1, plotted on the torus. (c) The sum of squared projections of the vector Ci(τ) on the vectors spanning $U_{G^{(2)}}$ (the active modes, red line) or $U^{\perp}_{G^{(2)}}$ (the inactive modes, black line). Shades indicate the standard deviation computed from 50 realizations. (d) Comparison of the variance explained at τ = 0 by our predicted subspace (solid line) and by performing PCA on ϕ(x) (dashed line). Error bars represent 95% confidence intervals. Inset: the subspace we derived accounts for a large portion of the variance for the time lags shown (in units of the synaptic time constant).

An analogous parametrization for g to the one we used in the ring example which respects the toroidal geometry reads

| (35) |

Note that now g depends on N, but it is bounded and its Lipschitz constant scales as $\sqrt{N}$ (i.e., β = 1/2), so it satisfies the smoothness conditions.

Figure 3(b) shows the spectrum of G(2) and the corresponding eigenvectors, plotted on a torus. Because non-uniform modes (2 through 5) are active, each neuron has a different participation in the vector of autocorrelation functions. In Figs. 3(c) and 3(d) we show, for networks with a range of N values, that the vector of autocorrelation functions is indeed restricted to the predicted subspace, in contrast to the firing rate vector.

The gain function analyzed here depends on a Euclidean distance on the torus. Other metrics, for example a city-block norm, can be treated similarly.
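Pairwise distances on the [0, 1] × [0, 1] torus for a $\sqrt{N} \times \sqrt{N}$ grid can be computed by wrapping each coordinate difference before taking the norm. The row-major assignment of neuron index to grid position is an assumption of this sketch, no specific gain from Eq. (35) is reproduced, and `torus_grid_distances` is a hypothetical helper; a gain that depends on this distance can be evaluated elementwise on the returned matrix.

```python
import numpy as np

def torus_grid_distances(N):
    """Euclidean distances on the [0,1] x [0,1] torus between N neurons on a sqrt(N) x sqrt(N) grid."""
    K = int(round(np.sqrt(N)))
    assert K * K == N, "this sketch assumes N is a perfect square"
    idx = np.arange(N)
    pos = np.stack([(idx // K) / K, (idx % K) / K], axis=1)   # hypothetical row-major layout
    diff = np.abs(pos[:, None, :] - pos[None, :, :])
    diff = np.minimum(diff, 1.0 - diff)                         # shortest way around each circle
    return np.sqrt((diff**2).sum(axis=-1))

D = torus_grid_distances(400)                                   # a 20 x 20 grid
```

Returning `diff.sum(axis=-1)` instead gives the city-block metric mentioned above.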

Overall, these results provide a mechanism whereby continuous, non-fine-tuned connectivity that depends on a single factor or on multiple factors can lead to a few active dynamic modes in the network. Importantly, the modes maintained by the network inherit their structure from the deterministic part of the connectivity.

V. MATRICES WITH HETEROGENEOUS DEGREE DISTRIBUTIONS

Here we will use our general result to compute the spectrum of a random connectivity matrix with specified in- and out-degree distributions. Realistic connectivity matrices found in many biological systems have degree distributions which are far from the binomial distribution that would be expected for standard Erdős-Rényi networks [28]. Specifically, they often exhibit correlation between the in- and out-degrees, clustering, community structures and possibly heavy-tailed degree distributions [5, 29]. Some results exist for symmetric adjacency matrices with broad degree distributions [30, 31] that are useful for studying systems beyond neuroscience.

We consider a connectivity matrix appropriate for a neural network model. Since each element of this matrix will have a nonzero mean, our current theory cannot make statements about the dynamics. Nevertheless the spectrum of the connectivity matrix is important on its own as a step towards understanding the behavior of random networks with general and possibly correlated degree distributions.

Consider a network with NE excitatory and NI inhibitory neurons (N = NE + NI). The connectivity is defined through the in- and out-degree sequences, N-dimensional vectors whose ith element is the number of incoming or outgoing connections of neuron i. Each inhibitory neuron receives connections from, and sends connections to, every other neuron in the network with probability p0. Within the excitatory subnetwork, degree distributions are heterogeneous. Specifically, kin and kout are the average excitatory in- and out-degree sequences, drawn from a joint degree distribution that may be correlated. We assume that , where k̄ is the mean connectivity, and that the marginals of the degree distribution are equal. Define x, y to be the NE-dimensional vectors and .

The matrix P defines the probability of connections given the fixed normalized degree sequences and p0:

| (36) |

The random adjacency matrix is then Aij ~ Bernoulli (Pij). Note that because the adjacency matrix is random, kin and kout are the average in- and out-degree sequences.
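As a concrete, hypothetical instance of this prescription, the sketch below fills the excitatory block with a Chung-Lu-type rule Pij = kin_i kout_j/(NE k̄), uses p0 for every connection involving an inhibitory neuron, and then draws Aij ~ Bernoulli(Pij) (rows index the postsynaptic neuron). The product form is an assumption standing in for Eq. (36). When the two sequences have the same sum, it makes the row and column sums of the excitatory block of P equal to kin and kout, so the realized degrees fluctuate around the prescribed averages.

```python
import numpy as np

def connection_probabilities(k_in, k_out, n_inh, p0):
    """Hypothetical P (rows: postsynaptic, columns: presynaptic).

    Assumption, not the paper's Eq. (36): P_ij = k_in[i] * k_out[j] / (N_E * kbar)
    inside the excitatory block, and p0 for any pair involving an inhibitory neuron.
    """
    n_exc = len(k_in)
    kbar = np.mean(k_in)
    P = np.full((n_exc + n_inh, n_exc + n_inh), p0)
    P[:n_exc, :n_exc] = np.outer(k_in, k_out) / (n_exc * kbar)
    if P.max() > 1.0:
        raise ValueError("degrees too large for this rule: some P_ij exceed 1")
    return P

def sample_adjacency(P, rng):
    """Draw A_ij ~ Bernoulli(P_ij) independently for every pair."""
    return (rng.random(P.shape) < P).astype(np.int8)

rng = np.random.default_rng(1)
n_exc, n_inh, p0 = 500, 125, 0.05
k_in = rng.integers(10, 30, size=n_exc).astype(float)
k_out = rng.permutation(k_in)          # equal marginals, hence equal sums
P = connection_probabilities(k_in, k_out, n_inh, p0)
A = sample_adjacency(P, rng)
print("expected E->E in-degrees match k_in:", np.allclose(P[:n_exc, :n_exc].sum(axis=1), k_in))
print("realized E->E edges:", A[:n_exc, :n_exc].sum(), "expected:", k_in.sum())
```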

The connectivity matrix is then

| (37) |

with

| (38) |

where W0 is the ratio of the synaptic weight of inhibitory to excitatory synapses. It should be noted that the connections to and from inhibitory neurons are much less well characterized empirically, and the evidence for specific structure in these connections is weaker than for excitatory neurons. For this reason, outside of the excitatory subnetwork connectivity is assumed to be homogeneous.

To leading order, the distribution of eigenvalues of J depends only on the mean and variance of its elements, which are summarized in the deterministic matrices Q (means) and G(2) (variances) with elements

| (39) |

| (40) |

We will show that the rank of the deterministic matrix Q is ≤ 3 (generically, for large N and non-fine-tuned parameters, rank {Q} = 3). In [10], Tao considered a case similar to ours, studying the spectrum of the sum of a random matrix with independent and identically distributed elements and a low-rank perturbation. In Sec. 2 of that paper, it is shown that because the resolvent (J0 − z)⁻¹ (where z is a complex number) is close to −z⁻¹ outside the support of the spectrum, outlying eigenvalues fluctuate around the nonzero eigenvalues of the low-rank perturbation. Adapting the arguments of [32] in Sec. 5, as done in [11], it can be shown that the resolvent of random matrices with independent but non-identically distributed entries is also close to −z⁻¹ outside the support of the bulk spectrum. Hence, outlying eigenvalues will fluctuate around the nonzero eigenvalues of the deterministic low-rank part.

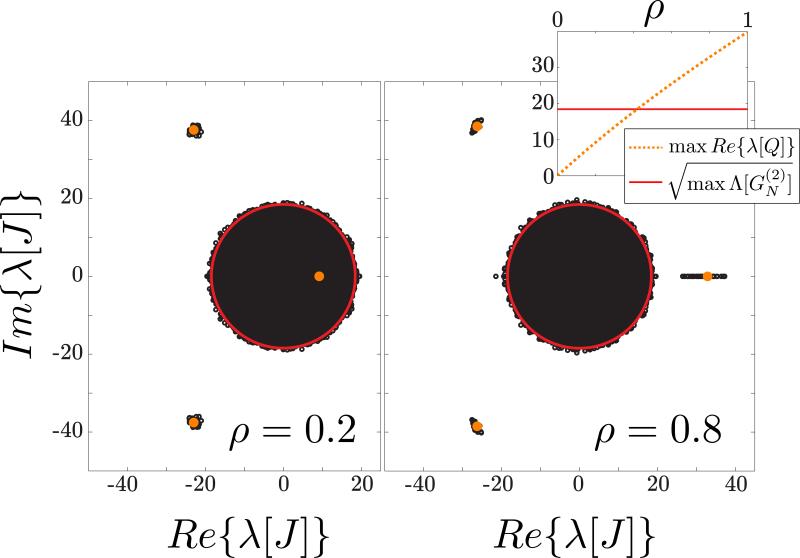

Combining these, we expect that if the nonzero eigenvalues of Q are outside of the bulk that originates from the random part of the matrix, the spectrum of the matrix J (with nonzero means) will be approximately a composition of the bulk and outliers that can be computed separately and that the approximation will become exact as N → ∞. This is verified through numerical calculations (Fig. 4).
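A small numerical illustration of this bulk-plus-outliers picture: a zero-mean random matrix with a hypothetical two-population variance profile, plus a rank-one deterministic part whose only nonzero eigenvalue is μ = 2. Assuming J0ij = gij zij/√N with standard normal zij, the bulk should fill a disk whose radius is the square root of the largest eigenvalue of G2 = g²/N, and a single outlier should sit near μ. The profile, μ, and N are illustrative choices, not the parameters of Fig. 4.

```python
import numpy as np

rng = np.random.default_rng(2)
N = 1000
# Hypothetical two-population profile: g_ij = 1.2 within a population, 0.6 across.
in_first = np.arange(N) < N // 2
g = np.where(in_first[:, None] == in_first[None, :], 1.2, 0.6)
J0 = g * rng.standard_normal((N, N)) / np.sqrt(N)     # zero-mean random part
G2 = g ** 2 / N                                       # matrix of variances
r_bulk = np.sqrt(np.linalg.eigvalsh(G2).max())        # predicted bulk radius

# Rank-one deterministic part whose only nonzero eigenvalue is mu.
mu = 2.0
u = np.ones(N) / np.sqrt(N)
Q = mu * np.outer(u, u)

eigs = np.linalg.eigvals(J0 + Q)
i_out = np.argmax(eigs.real)
outlier = eigs[i_out]
bulk = np.delete(eigs, i_out)
print(f"bulk: predicted {r_bulk:.3f}, observed {np.abs(bulk).max():.3f}")
print(f"outlier: {outlier.real:.3f} (expected near {mu})")
```

Here the bulk radius is √0.9 ≈ 0.95, so the eigenvalue of Q lies well outside the bulk, as required for the outlier to be visible.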

FIG. 4.

Spectrum of connectivity matrices with heterogeneous, correlated joint degree distribution. The network parameters were chosen to be κ = 0.7, θ = 28.57, NE = 1000, NI = 250, p0 = 0.05, W0 = 5, where κ and θ are the form and scale parameters respectively of the distribution from which the in- and out-degree sequences are randomly drawn. The average correlation ρ between the in- and out-degree sequences was varied between 0 and 1. For the values ρ = 0.2 (left) and ρ = 0.8 (right) we drew 25 degree sequences and based on them drew the connectivity matrix according to the prescription outlined in Sec. V. The eigenvalues of each matrix were computed numerically and are shown in black. For each value of ρ we computed the average functions , etc., and the roots of the characteristic polynomials and (see Appendixes B and C for derivation). The predictions for the support of the bulk (solid red line) and the outliers (orange points and dotted line) are in agreement with the numerical calculation. Inset: as a function of ρ, there is a positive outlier that exits the disk to the right.

Viewing the normalized degree sequences x, y as deterministic variables, we define

| (41) |

Given the parameters W0, p0, NE, NI, we show in Appendix B that (generically for large N and non-fine-tuned parameters ) and its characteristic polynomial is with

| (42) † |

and ak = 0 for k > 4. Therefore, using our results, the radius of the bulk spectrum of J is equal to the square root of the largest solution to .
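The reason only a few coefficients survive is that a matrix of rank r has at most r nonzero eigenvalues. A sketch, assuming a hypothetical nonnegative rank-4 matrix M = XYᵀ in place of the actual variance matrix: the nonzero eigenvalues of XYᵀ coincide with those of the 4 × 4 matrix YᵀX, so the characteristic polynomial factors as λ^(N−4) times a degree-4 polynomial, and the bulk radius is the square root of its largest root.

```python
import numpy as np

rng = np.random.default_rng(3)
N, rank = 300, 4
# Hypothetical nonnegative rank-4 stand-in for the variance matrix: M = X @ Y.T
X = rng.random((N, rank)) / N
Y = rng.random((N, rank))
M = X @ Y.T

# Nonzero eigenvalues of X @ Y.T equal those of the 4 x 4 matrix Y.T @ X, so
# det(lambda*I - M) = lambda**(N - 4) * p(lambda) with p of degree 4.
small = Y.T @ X
coeffs = np.poly(small)          # [1, -a1, a2, -a3, a4]
roots = np.roots(coeffs)
r_from_poly = np.sqrt(np.max(roots.real))     # largest root is the (real) Perron root

r_direct = np.sqrt(np.max(np.linalg.eigvals(M).real))
print(r_from_poly, r_direct)
```

Because M has positive entries, its largest eigenvalue is real (Perron-Frobenius), which is why taking the maximum real part is safe here.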

Furthermore we show that the nonzero eigenvalues of Q are equal to the roots of the polynomial , with

| (43) |

and bk = 0 for k > 3, such that the outlying eigenvalues of J are approximated by the roots of that lie outside of the bulk.

If the degree sequences are not specified, but only the joint in- and out-degree distribution they are drawn from, the random matrix J will be constructed in two steps: first kin and kout are drawn from their joint in- and out-degree distribution, and then the elements of J are drawn using the prescription outlined above. In such cases, one can in principle compute the averages , , etc., in terms of the moments of the joint degree distribution, and substitute these averages into the formulas we give assuming the degree sequences are fixed.

We have carried out that calculation (Appendix C) for Γ degree distributions with form parameter κ, scale parameter θ and arbitrary correlation ρ of the in- and out-degree sequences (see Fig. 4). We find that, for fixed marginals, the radius of the bulk spectrum depends extremely weakly on the correlation of the in- and out-degree sequences (see solid red line in inset to Fig. 4). The matrix Q, however, has a real, positive eigenvalue that for typical examples increases monotonically with the correlation, such that for some value it exits the bulk to the right (see Fig. 4). Work by Roxin [18], Schmeltzer et al. [19], and unpublished work by Landau and Sompolinsky [33] have shown that the broadness and correlation of the joint degree distribution can lead to qualitative changes in the behavior of a spiking network. Further work is required to investigate whether and why these changes can be explained by the spectrum of the connectivity matrix derived here. We anticipate that the outlier exiting the spectrum may be related to the transition to a saturated state observed in networks with block structure in [23].

VI. AN EXAMPLE FROM ECOLOGY

Random matrices have been used to study a wide variety of complex systems outside of neuroscience. Examples include metabolic networks, gene-regulatory networks, and communication networks. Here we analyze matrices with triangular structure that arise in the ecology of food webs. The past few years have seen a resurgence of interest in the use of methods from random matrix theory to study the stability of ecosystems [34–36]. While the original work by R. May assumed a random unstructured connectivity pattern between species [37], experimental data show marked departures from random connectivity [38]. These include hierarchical organization within ecosystems, where larger species have an asymmetric effect on smaller species, larger variance in the number of partners for a given species [39], and fewer cycles involving three or more interacting species than would be expected from an Erdős-Rényi graph [40]. A popular model for food web structure is the cascade model [41], where species are rank ordered and each species can prey only upon lower-ranked species. The differential effects between predators and prey in the cascade model can be described using connectivity matrices with different statistics for entries above and below the diagonal [42]:

| (44) |

with

| (45) |

| (46) |

where Θ is the Heaviside step function. We use the convention Θ(0) = 0. Here, J describes the interactions between different species in the ecosystem. For μa, μb > 0 and sufficiently large compared with ga, gb, the entries above (below) the diagonal are positive (negative), so the matrix describes a perfectly hierarchical food web, where the top-ranked species consumes all the other species, the second species consumes all the species but the first, and so on.

We will focus on the random part of the matrix (i.e., we set μa = μb = 0). The spectrum of the sum of the deterministic and random parts remains a problem for future study. Note that since the deterministic part has full rank, one cannot apply simple perturbation methods.

According to our analysis, the support of the spectrum of J is a disk whose radius r is the square root of the largest eigenvalue of the matrix G(2), with

| (47) |

Following the derivation in [42], we will show that the squared radius is r² = (ga² − gb²)/[2 ln(ga/gb)], the logarithmic mean of ga² and gb².

The characteristic polynomial is simplified by subtracting the (i + 1)th column from the ith column for i = 1, ..., N − 1, giving

| (48) |

where we have defined and . This simplifies to the recursion relation . Taking into account that , this recursion relation can be solved, giving:

| (49) |

Setting the characteristic polynomial to 0 leads to the equation,

| (50) |

which has N roots

| (51) |

We are interested in the largest among the N roots, which is real and positive. Taking into account the dependence of a and b on N, we find that:

| (52) |

as desired.
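For reference, a compact version of this calculation is given below under an explicit assumption about the normalization: the entries of G(2) are taken to be a = ga²/N above the diagonal, b = gb²/N below it, and 0 on it. This is a sketch rather than a transcription of Eqs. (48)-(52); the closed form for the determinant can be checked directly for N = 1 and N = 2.

```latex
% Assumed entries of G^{(2)}: a = g_a^2/N above the diagonal, b = g_b^2/N below it,
% 0 on the diagonal (convention \Theta(0) = 0), and a \neq b.
\[
  \det\!\left(\lambda I - G^{(2)}\right)
  = \frac{a(\lambda + b)^{N} - b(\lambda + a)^{N}}{a - b}
  \quad\Longrightarrow\quad
  \left(\frac{\lambda + a}{\lambda + b}\right)^{N} = \frac{a}{b}.
\]
\[
  \lambda_k = \frac{b\,c_k - a}{1 - c_k}, \qquad
  c_k = \left(\frac{a}{b}\right)^{1/N} e^{2\pi i k/N}, \qquad k = 0, \dots, N-1.
\]
% The largest root is the real one (k = 0); writing c_0 = e^{\varepsilon} with
% \varepsilon = \ln(g_a^2/g_b^2)/N and expanding in 1/N gives
\[
  \lambda_0 = \frac{g_a^2 - g_b^2}{\ln(g_a^2/g_b^2)} + O(1/N)
  \;\longrightarrow\; r^2 = \frac{g_a^2 - g_b^2}{2\ln(g_a/g_b)}.
\]
```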

Interestingly, for all ga ≠ gb the spectral radius of J is smaller than the radius the network would have if the predator-prey structure did not exist. The latter is equal to √[(ga² + gb²)/2]. This suggests that the hierarchical structure of the interaction network serves to stabilize the ecosystem regardless of how dominant the predators are over the prey.
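The comparison can be checked numerically. The sketch below assumes the same normalization as above (variance ga²/N above the diagonal, gb²/N below it, zero on it) and sets μa = μb = 0. It compares the Perron root of the variance matrix with the exact finite-N root and the large-N limit, and the spectral radius of one sampled matrix with the predicted radius and with the unstructured value √[(ga² + gb²)/2]. The values of ga, gb, and N are illustrative.

```python
import numpy as np

def cascade_g2(N, ga, gb):
    """Assumed variance matrix: ga**2/N above the diagonal, gb**2/N below it, 0 on it."""
    upper = np.triu(np.ones((N, N)), k=1)
    lower = np.tril(np.ones((N, N)), k=-1)
    return (ga ** 2 * upper + gb ** 2 * lower) / N

def largest_root_exact(N, ga, gb):
    """Real root lambda_0 = (b c - a) / (1 - c), c = (a/b)**(1/N), at finite N."""
    a, b = ga ** 2 / N, gb ** 2 / N
    c = (a / b) ** (1.0 / N)
    return (b * c - a) / (1.0 - c)

def radius_limit(ga, gb):
    """Large-N radius: r**2 = (ga**2 - gb**2) / (2 ln(ga/gb)), for ga != gb."""
    return np.sqrt((ga ** 2 - gb ** 2) / (2.0 * np.log(ga / gb)))

ga, gb, N = 1.5, 0.8, 800
G2 = cascade_g2(N, ga, gb)
lam_max = np.max(np.linalg.eigvals(G2).real)       # Perron root of G2
r_unstructured = np.sqrt((ga ** 2 + gb ** 2) / 2)  # same average variance, no hierarchy

rng = np.random.default_rng(4)
J = np.sqrt(G2) * rng.standard_normal((N, N))      # random part only (mu_a = mu_b = 0)
r_empirical = np.abs(np.linalg.eigvals(J)).max()

print(np.sqrt(lam_max), radius_limit(ga, gb), r_unstructured, r_empirical)
```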

Note, however, that in this model there are no correlations. In [42], it was shown numerically that correlations (i.e., a nonzero expectation value of JijJji) can dramatically change the stability of the network compared with one that has no correlations.

VII. DISCUSSION AND CONCLUSIONS

We studied jointly the spectrum of a random matrix model and the dynamics of the neural network model it implies. We found that, as a function of the deterministic structure of the network (given by g), the network becomes spontaneously active at a critical point.

Identifying a space where the dynamics of a neural network can be described efficiently and robustly is one of the challenges of modern neuroscience [43]. In our model, above the critical point, the deterministic dynamics of the entire network are well approximated by a potentially low-dimensional probability distribution, with dimension equal to the number of eigenvalues of a deterministic matrix that have real part greater than 1.

The two limitations of our previous studies [11, 13] for interpreting multi-unit recordings are (1) that the cell-type identity of each neuron in the network has to be known and (2) that predictions are averaged over all neurons of a specific type.

Here both limitations are remedied. First, while some information about the connectivity structure is still required, this could be in the form of global spatial symmetries (“rules”) present in the network, such as the connectivity rule we used in the ring model. Second, our analysis provides a prediction for single neuron quantities, namely the participation of every neuron in the network in the global active dynamic modes.

Discrete network modules with no apparent fine-tuned connectivity have been shown to exist in networks of grid cells in the mammalian medial entorhinal cortex [44]. These cells fire when the animal's position is on the vertices of a hexagonal lattice and are thought to be important for spatial navigation. Interestingly, when characterizing the firing properties of many such cells in a single animal, one finds that the lattice spacing of all cells belongs (approximately) to a discrete set that forms a geometric series [44]. Much work has been devoted to understanding how such a code could be used efficiently to represent the animal's location (see for example [45, 46]) and how such a code could be generated [47].

However, we are not aware of a model that explains how multiple modules (subnetworks with distinct grid spacing) could be generated without fine-tuned connectivity, which is not observed experimentally. In our model, continuous changes to a connectivity parameter can introduce additional discrete, spatially periodic modes into the network, represented by finer and finer lattices. We are not arguing that the random network studied here could serve as a model of grid-cell networks, as there are many missing details that our model cannot account for. Nevertheless, our analysis uncovers a mechanism by which low-dimensional, spatially structured dynamics could arise as a result of random connectivity.

More broadly, our results offer insight into the question of which random matrix model is appropriate for studying networks with structured connectivity. We focus our discussion on networks with an increased probability of bidirectional connections (see for example [5]). Most empirical datasets consist of connectivity measurements within a subnetwork of a few neurons, and thus cannot distinguish between the following two processes giving rise to the observed over-representation of bidirectional connections [48]. One possibility is that microscopic (e.g., molecular) signaling increases the probability that neuron i is connected to j, given that j is connected to i. An alternative possibility is that the in- and out-degree sequences are correlated macroscopically, so that if neuron j is connected to i, the in- and out-degrees of i are likely to be large, and the chance that i is connected to j is larger than the average connectivity in the network. These two possibilities correspond to different random matrix models that imply markedly different network dynamics: the first to an elliptic model where the elements Jij and Jji are correlated [7], and the second to a model with heterogeneous and correlated degree distributions, such as the one studied here, which has a circularly symmetric spectrum.
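The contrast between the two models can be seen in a few lines. The sketch below draws an elliptic matrix with correlation τ between Jij and Jji, whose spectrum fills an ellipse with semi-axes 1 + τ and 1 − τ [7]. It also draws a matrix with independent entries and a hypothetical rank-one variance profile xi yj/N built from positively correlated "in" and "out" factors, a simplified stand-in for correlated degrees with the means omitted. The second spectrum stays circular, with radius √⟨xy⟩.

```python
import numpy as np

def elliptic_matrix(N, tau, rng):
    """Gaussian matrix with Var(J_ij) = 1/N and corr(J_ij, J_ji) = tau for i != j."""
    A = rng.standard_normal((N, N))
    B = rng.standard_normal((N, N))
    S = (A + A.T) / np.sqrt(2.0)                  # symmetric, unit off-diagonal variance
    K = (B - B.T) / np.sqrt(2.0)                  # antisymmetric, unit off-diagonal variance
    J = np.sqrt((1 + tau) / 2) * S + np.sqrt((1 - tau) / 2) * K
    return J / np.sqrt(N)

def profile_matrix(x, y, rng):
    """Independent entries with variance x_i * y_j / N (hypothetical rank-1 profile)."""
    N = len(x)
    return np.sqrt(np.outer(x, y) / N) * rng.standard_normal((N, N))

rng = np.random.default_rng(7)
N, tau = 1000, 0.5
ev_elliptic = np.linalg.eigvals(elliptic_matrix(N, tau, rng))

x = rng.uniform(0.5, 1.5, N)
y = 0.5 * x + 0.5 * rng.uniform(0.5, 1.5, N)      # positively correlated with x
ev_profile = np.linalg.eigvals(profile_matrix(x, y, rng))
r_profile = np.sqrt(np.mean(x * y))               # sqrt of the nonzero eigenvalue of x y^T / N

for name, ev in (("elliptic", ev_elliptic), ("degree-like profile", ev_profile)):
    print(f"{name}: max Re = {ev.real.max():.2f}, max Im = {ev.imag.max():.2f}")
```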

ACKNOWLEDGMENTS

The authors would like to thank N. Brunel, S. Allesina, A. Roxin, and J. Heys for discussions. J.A. was supported by NSF CRCNS Grant No. IIS-1430296. M.V. acknowledges a doctoral grant by Fundació “la Caixa” and a travel grant by “Fundació Ferran Sunyer i Balaguer”. T.O.S. was supported by NIH Grants No. R01EY019493 and No. P30 EY019005, NSF Career Award No. IIS 1254123 and NSF Grant No. 1556388, and by the Salk Innovations program.

Appendix A: The limit K, N → ∞

Here we will show that the difference between the piecewise estimate g̃ and the continuous synaptic gain function g goes to 0 as N → ∞. We assumed that the unit square can be tiled by square subsets of area s0², in each of which g is bounded and differentiable and has a bounded first derivative. Note that the Lipschitz constant of g can depend on N, but s0 cannot.

For , recall our definitions for g̃ and μi [Eqs. (13) and (14)] and define kij = (K⁻¹(μi − 1), K⁻¹μi] × (K⁻¹(μj − 1), K⁻¹μj]. Also recall our assumption that each point is either inside a square with side s0 within which there are no discontinuities or on the border of such a subset. Thus, for we can assume that every constant region of g̃ is contained within a single square subset.

We would like to show that for all i, j

| (A1) |

Since s0 is independent of N, we only have to show that Eq. (A1) is true within a subset where g satisfies the smoothness conditions.

Using our definitions and the fact that g has Lipschitz constant ,

| (A2) |

So finally,

| (A3) |
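The convergence argument can be checked numerically for a hypothetical smooth g. The sketch assumes that g̃ takes, on each cell kij, the value of g at the cell's corner (K⁻¹μi, K⁻¹μj). Eqs. (13) and (14) may define g̃ differently, for example as a cell average, but any choice that agrees with g somewhere in the closed cell obeys the same Lipschitz bound: the Lipschitz constant times the cell diameter √2/K, which is π√2/K for the example g below.

```python
import numpy as np

def g(x, y):
    """Hypothetical smooth gain function on the unit square (Lipschitz constant pi)."""
    return 1.0 + 0.5 * np.sin(2 * np.pi * x) * np.cos(2 * np.pi * y)

def max_piecewise_error(K, samples=400):
    """max |g - g_tilde| on a fine grid, where g_tilde is constant on each cell
    k_ij = ((mu_i - 1)/K, mu_i/K] x ((mu_j - 1)/K, mu_j/K] and equals g at the
    cell's corner (mu_i/K, mu_j/K) (an assumed choice of g_tilde)."""
    t = (np.arange(samples) + 0.5) / samples
    X, Y = np.meshgrid(t, t, indexing="ij")
    mu_x, mu_y = np.ceil(X * K), np.ceil(Y * K)   # cell indices 1..K
    return np.abs(g(X, Y) - g(mu_x / K, mu_y / K)).max()

for K in (5, 10, 20, 40):
    err = max_piecewise_error(K)
    print(f"K={K:3d}  max error={err:.4f}  bound pi*sqrt(2)/K={np.pi * np.sqrt(2) / K:.4f}")
```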

Appendix B: The characteristic polynomials of G(2) and Q

Here we compute directly the characteristic polynomials of G(2) and Q [Eqs. (42) and (43)] using the minor expansion formula.

1. Calculation of spectrum of G(2)

Recall that N = NE + NI, and let be the k × k matrix with elements taken from the intersection of k specific rows and columns of . The notation will indicate that exactly kE and kI of these rows and columns correspond to excitatory and inhibitory neurons, respectively.

For convenience we will use ν = p0 (1 – p0) and . We would like to write an expression for the characteristic polynomial of using the sums over its diagonal minors

| (B1) |

where for k ≥ 1 and a0 = 1. The notation means a sum over all combinations of kE, kI such that kE + kI = k (i.e., the so-called k-row diagonal minors of ). We will compute a0, ..., a4 explicitly and show that ak = 0 for k > 4.

We begin by noting that the determinant of the 3 × 3 matrix

is 0 because the middle matrix is the sum of two rank 1 matrices.
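The vanishing of higher-order minors for a sum of rank-1 matrices can be checked by brute force on a small example. The sketch below sums all k × k principal (diagonal) minors of a hypothetical 7 × 7 matrix that is the sum of two rank-1 terms, confirms that ak vanishes numerically for k > 2, and checks the identity det(λI − M) = Σk (−1)^k ak λ^(N−k) against NumPy's characteristic-polynomial coefficients.

```python
import itertools

import numpy as np

def principal_minor_sums(M):
    """a_k = sum of all k x k principal (diagonal) minors of M, for k = 0..N."""
    N = M.shape[0]
    a = [1.0]
    for k in range(1, N + 1):
        a.append(sum(np.linalg.det(M[np.ix_(idx, idx)])
                     for idx in itertools.combinations(range(N), k)))
    return np.array(a)

rng = np.random.default_rng(5)
N = 7
# Hypothetical matrix that is the sum of two rank-1 terms.
x, y, u, v = rng.random((4, N))
M = np.outer(x, y) + np.outer(u, v)
a = principal_minor_sums(M)
print(np.round(a, 12))                 # a_k is numerically zero for k > 2

# det(lambda*I - M) = sum_k (-1)**k a_k lambda**(N - k)
signs = (-1.0) ** np.arange(N + 1)
print(np.allclose(signs * a, np.poly(M), atol=1e-10))
```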

The coefficient a0

By definition, a0 = 1.

The coefficient a1

The second coefficient, a1, is simply the trace

| (B2) |

where in the second row we used the functions of the degree sequences [Eq. (41)].

The coefficient a2

The third coefficient, a2, is the sum of all two-row diagonal minors. There are three types of diagonal minors, only two of which are nonzero:

| (B3) |

Carrying out the summation over possible combinations

| (B4) |

Putting these together we get

| (B5) |

The coefficient a3

The fourth coefficient, a3, is the sum of all three-row diagonal minors. Now there are four types of minors, only one of which is nonzero:

| (B6) |

Carrying out the sum,

| (B7) |

The coefficient a4

The last nonzero coefficient is a4, the sum of all four-row diagonal minors. Here there are five types, only one of which is nonzero:

| (B8) |

Carrying out the sum we get

| (B9) |

The coefficients ak with k > 4

Now we show that ak = 0 for k > 4. A diagonal minor representing a subnetwork of five neurons or more can have NI = 0, NI = 1, or NI ≥ 2. If NI ≥ 2 the diagonal minor is zero because of repeated columns. If NI = 1, then NE ≥ 4. Here, the determinant is a weighted sum of k = NE −1 = N −2 row diagonal minors of the form which is zero for NE ≥ 4. Last, if NI = 0 then again we have a sum of terms of the form which are zero as discussed above.

2. Calculation of spectrum of Q

Using a similar approach we will compute the characteristic polynomial of Q and show that generically rank {Q} = 3. Using the sums over diagonal minors of QNE+NI,

| (B10) |

where for k ≥ 1 and where is a k × k matrix with elements taken from the intersection of k rows and columns of Q. Again, will indicate that kE and kI rows and columns correspond to excitatory and inhibitory neurons, respectively.

The coefficient b0

By definition we have b0 = 1.

The coefficient b1

The second term is the trace

| (B11) |

The coefficient b2

The third coefficient is the sum over all two-row diagonal minors:

| (B12) |

Carrying out the summation, we get

| (B13) |

The coefficient b3

The fourth and last nonzero coefficient is the sum over all three-row diagonal minors.

Carrying out the sum,

| (B15) |

The coefficients bk with k > 3

Now we show that bk = 0 for k > 3. A minor representing a subnetwork of four neurons or more can have NI = 0, NI = 1, or NI ≥ 2. If NI ≥ 2 the minor is zero because of repeated columns. If NI = 1, then NE ≥ 3. Here, the determinant is a sum of k = NE − 1 = N − 2 row diagonal minors of the form which is zero for NE ≥ 3. Lastly, if NI = 0 then again we have a sum of terms of the form which is zero as discussed above.

Appendix C: Networks with Γ degree distributions

We choose a specific parametrization where the marginals of the joint in- and out-degree distribution are Γ with form parameter κ, scale parameter θ and have average correlation ρ. Owing to the properties of sums of random variables that follow a Γ distribution, we can write the random in- and out-degree sequences as

| (C1) |

where 1 ≤ i ≤ NE. In this Appendix 〈·〉 will denote averages over the joint in- and out-degree distribution.
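Sampling from this parametrization is straightforward. The sketch below assumes the standard additive construction suggested by the independent variables k1, k2, k3 used later in this Appendix: kin = k1 + k2 and kout = k1 + k3 with k1 ~ Γ(ρκ, θ) and k2, k3 ~ Γ((1 − ρ)κ, θ). This gives Γ(κ, θ) marginals and correlation ρ, but it is not necessarily identical to Eq. (C1). The usage line plugs in κ and θ from Fig. 4, for which the mean degree κθ is about 20.

```python
import numpy as np

def correlated_gamma_degrees(n, kappa, theta, rho, rng):
    """Degree sequences with Gamma(kappa, theta) marginals and correlation rho.

    Assumed additive construction: k_in = k1 + k2 and k_out = k1 + k3, with
    independent k1 ~ Gamma(rho*kappa, theta) and k2, k3 ~ Gamma((1 - rho)*kappa, theta).
    """
    if not 0.0 < rho < 1.0:
        raise ValueError("this sketch assumes 0 < rho < 1")
    k1 = rng.gamma(rho * kappa, theta, size=n)            # shared component
    k2 = rng.gamma((1.0 - rho) * kappa, theta, size=n)    # private to k_in
    k3 = rng.gamma((1.0 - rho) * kappa, theta, size=n)    # private to k_out
    return k1 + k2, k1 + k3

# Parameters of Fig. 4 with rho = 0.8.
rng = np.random.default_rng(0)
k_in, k_out = correlated_gamma_degrees(1000, kappa=0.7, theta=28.57, rho=0.8, rng=rng)
print(k_in.mean(), k_out.mean(), np.corrcoef(k_in, k_out)[0, 1])
```

The shared component k1 carries all of the covariance, Cov(kin, kout) = Var(k1) = ρκθ², which divided by the marginal variance κθ² gives the correlation ρ.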

The moments of the distribution imply that, for this parametrization,

| (C2) |

for all 1 ≤ i ≤ NE. Here, since the elements of kin and kout are (separately) independent and identically distributed, we will suppress the subscript i and the superscripts in, out when possible, and let 〈k²〉 = 〈kin⊤kin〉, 〈kinkout〉 = 〈kin⊤kout〉, etc.

One can verify that indeed the average correlation between the in- and out-degree sequences is

| (C3) |

Using this parametrization we compute the averages , , etc., and express them in terms of ρ, θ, κ, and NE.

The functional

| (C4) |

The functional

| (C5) |

The functional

| (C6) |

The functional

To compute we first derive an expression for 〈(kin)²(kout)²〉. Using the independence of k1, k2, k3,

| (C7) |

Now we can write

| (C8) |

The functional

To compute (and ) we first derive an expression for 〈kin(kout)²〉. Using the independence of k1, k2, k3,

| (C9) |

Now we can write

| (C10) |
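The mixed moments entering (C7) and (C9) follow from expanding powers of kin = k1 + k2 and kout = k1 + k3 binomially and using the Gamma raw moments 〈X^n〉 = θ^n α(α + 1)⋯(α + n − 1). Under the same assumed additive construction (not necessarily the paper's exact parametrization), the helper below evaluates 〈(kin)^p (kout)^q〉 exactly and compares it with Monte Carlo samples.

```python
from math import comb

import numpy as np

def gamma_raw_moment(shape, theta, n):
    """E[X**n] for X ~ Gamma(shape, scale=theta): theta**n * shape*(shape+1)*...*(shape+n-1)."""
    out = 1.0
    for m in range(n):
        out *= (shape + m) * theta
    return out

def mixed_moment(p, q, kappa, theta, rho):
    """<k_in**p * k_out**q> for k_in = k1 + k2, k_out = k1 + k3 with independent
    k1 ~ Gamma(rho*kappa, theta), k2, k3 ~ Gamma((1 - rho)*kappa, theta) (assumed)."""
    s1, s23 = rho * kappa, (1.0 - rho) * kappa
    total = 0.0
    for i in range(p + 1):          # power of k1 coming from k_in
        for j in range(q + 1):      # power of k1 coming from k_out
            total += (comb(p, i) * comb(q, j)
                      * gamma_raw_moment(s1, theta, i + j)
                      * gamma_raw_moment(s23, theta, p - i)
                      * gamma_raw_moment(s23, theta, q - j))
    return total

kappa, theta, rho = 0.7, 28.57, 0.4
rng = np.random.default_rng(6)
n = 1_000_000
k1 = rng.gamma(rho * kappa, theta, n)
k_in = k1 + rng.gamma((1 - rho) * kappa, theta, n)
k_out = k1 + rng.gamma((1 - rho) * kappa, theta, n)
for p, q in ((1, 1), (1, 2), (2, 2)):
    exact = mixed_moment(p, q, kappa, theta, rho)
    sampled = np.mean(k_in ** p * k_out ** q)
    print(p, q, exact, sampled)
```

For p = q = 1 this reduces to 〈kinkout〉 = κθ²(κ + ρ), consistent with a correlation ρ between Γ(κ, θ) marginals.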

The functional

| (C11) |

References

- 1. Kleinfeld D, Bharioke A, Blinder P, Bock DD, Briggman KL, Chklovskii DB, Denk W, Helmstaedter M, Kaufhold JP, Lee W-CA, Meyer HS, Micheva KD, Oberlaender M, Prohaska S, Reid RC, Smith SJ, Takemura S, Tsai PS, Sakmann B. The Journal of Neuroscience. 2011;31:16125. doi: 10.1523/JNEUROSCI.4077-11.2011.

- 2. Ko H, Hofer SB, Pichler B, Buchanan KA, Sjöström PJ, Mrsic-Flogel TD. Nature. 2011;473:87. doi: 10.1038/nature09880.

- 3. Karlebach G, Shamir R. Nature Reviews Molecular Cell Biology. 2008;9:770. doi: 10.1038/nrm2503.

- 4. Chung K, Deisseroth K. Nature Methods. 2013;10:508. doi: 10.1038/nmeth.2481.

- 5. Song S, Sjöström PJ, Reigl M, Nelson S, Chklovskii DB. PLoS Biology. 2005;3:e68. doi: 10.1371/journal.pbio.0030068.

- 6. Yoshimura Y, Callaway EM. Nature Neuroscience. 2005;8:1552. doi: 10.1038/nn1565.

- 7. Sommers HJ, Crisanti A, Sompolinsky H, Stein Y. Physical Review Letters. 1988;60:1895. doi: 10.1103/PhysRevLett.60.1895.

- 8. Rajan K, Abbott LF. Physical Review Letters. 2006;97:188104. doi: 10.1103/PhysRevLett.97.188104.

- 9. Wei Y. Physical Review E. 2012;85:066116. doi: 10.1103/PhysRevE.85.066116.

- 10. Tao T. Probability Theory and Related Fields. 2013;155:231.

- 11. Aljadeff J, Renfrew D, Stern M. Journal of Mathematical Physics. 2015;56:103502.

- 12. Ahmadian Y, Fumarola F, Miller KD. Physical Review E. 2015;91:012820. doi: 10.1103/PhysRevE.91.012820.

- 13. Aljadeff J, Stern M, Sharpee T. Physical Review Letters. 2015;114:088101. doi: 10.1103/PhysRevLett.114.088101.

- 14. Muir DR, Mrsic-Flogel T. Physical Review E. 2015;91:042808. doi: 10.1103/PhysRevE.91.042808.

- 15. Sussillo D, Abbott LF. Neuron. 2009;63:544. doi: 10.1016/j.neuron.2009.07.018.

- 16. Sussillo D, Churchland MM, Kaufman MT, Shenoy KV. Nature Neuroscience. 2015;18:1025. doi: 10.1038/nn.4042.

- 17. Sompolinsky H, Crisanti A, Sommers HJ. Physical Review Letters. 1988;61:259. doi: 10.1103/PhysRevLett.61.259.

- 18. Roxin A. Frontiers in Computational Neuroscience. 2011;5. doi: 10.3389/fncom.2011.00008.

- 19. Schmeltzer C, Kihara AH, Sokolov IM, Rüdiger S. PLoS ONE. 2015;10:e0121794. doi: 10.1371/journal.pone.0121794.

- 20. Rogers T, Castillo IP. Physical Review E. 2009;79:012101. doi: 10.1103/PhysRevE.79.012101.

- 21. Neri I, Metz FL. Physical Review Letters. 2012;109:030602. doi: 10.1103/PhysRevLett.109.030602.

- 22. Sussillo D, Barak O. Neural Computation. 2013;25:626. doi: 10.1162/NECO_a_00409.

- 23. Kadmon J, Sompolinsky H. Physical Review X. 2015;5:041030.

- 24. Amari S-I. IEEE Transactions on Systems, Man and Cybernetics. 1972;643.

- 25. Sompolinsky H, Zippelius A. Physical Review B. 1982;25:6860.

- 26. Molgedey L, Schuchhardt J, Schuster HG. Physical Review Letters. 1992;69:3717. doi: 10.1103/PhysRevLett.69.3717.

- 27. Rajan K, Abbott LF, Sompolinsky H. Advances in Neural Information Processing Systems. 2010.

- 28. Barabási A-L, Albert R. Science. 1999;286:509. doi: 10.1126/science.286.5439.509.

- 29. Boccaletti S, Latora V, Moreno Y, Chavez M, Hwang D-U. Physics Reports. 2006;424:175.

- 30. Farkas IJ, Derényi I, Barabási A-L, Vicsek T. Physical Review E. 2001;64:026704. doi: 10.1103/PhysRevE.64.026704.

- 31. Dorogovtsev SN, Goltsev AV, Mendes JFF, Samukhin AN. Physical Review E. 2003;68:046109. doi: 10.1103/PhysRevE.68.046109.

- 32. O'Rourke S, Renfrew D. Electronic Journal of Probability. 2014;19:1.

- 33. Landau I, Sompolinsky H. Cosyne Abstracts 2015, Salt Lake City USA. 2015.

- 34. Allesina S, Tang S. Population Ecology. 2015;57:63.

- 35. Namba T. Population Ecology. 2015;57:3.

- 36. Tokita K. Population Ecology. 2015;57:53.

- 37. May RM. Nature. 1972;238:413. doi: 10.1038/238413a0.

- 38. Cohen JE. Theoretical Population Biology. 1990;37:55.

- 39. Dunne JA, Williams RJ, Martinez ND. Proceedings of the National Academy of Sciences. 2002;99:12917. doi: 10.1073/pnas.192407699.

- 40. Allesina S, Alonso D, Pascual M. Science. 2008;320:658. doi: 10.1126/science.1156269.

- 41. Cohen J, Newman C. Proceedings of the Royal Society of London B: Biological Sciences. 1985;224:421.

- 42. Allesina S, Grilli J, Barabás G, Tang S, Aljadeff J, Maritan A. Nature Communications. 2015;6. doi: 10.1038/ncomms8842.

- 43. Gao P, Ganguli S. Current Opinion in Neurobiology. 2015;32:148. doi: 10.1016/j.conb.2015.04.003.

- 44. Stensola H, Stensola T, Solstad T, Frøland K, Moser M-B, Moser EI. Nature. 2012;492:72. doi: 10.1038/nature11649.

- 45. Stemmler M, Mathis A, Herz AV. Science Advances. 2015;1:e1500816. doi: 10.1126/sciadv.1500816.

- 46. Wei X-X, Prentice J, Balasubramanian V. eLife. 2015:e08362. doi: 10.7554/eLife.08362.

- 47. Burak Y, Fiete IR. PLoS Computational Biology. 2009;5:e1000291. doi: 10.1371/journal.pcbi.1000291.

- 48. Vegué M, Roxin A. Program No. 94.11. Neuroscience Meeting Planner. Society for Neuroscience; Chicago: 2015.